Abstract

We investigate blowup conditions for the Cauchy problem for a semilinear wave equation with scale-invariant damping, mass and a general nonlinear memory term (see Eq. (1.1) in the Introduction). We first establish a local (in time) existence result for this problem by Banach’s fixed point theorem, where Palmieri’s decay estimates for the solution to the corresponding linear homogeneous equation play an essential role in the proof. We then formulate a blowup result for energy solutions by applying an iteration argument together with the test function method.

1 Introduction

We consider the Cauchy problem for semilinear wave equations with scale-invariant dampings, mass and general nonlinear memory terms

where \(u=u(t,x)\) is the unknown function, t is the time variable and \(x \in {\mathbb {R}}^n\), the parameters \(\mu _1 >0,\mu _2\geqslant 0\), the index \(p>1\) and the convolution nonlinear term with respect to time variable is denoted by

where the time-dependent memory kernel (also called the relaxation function) satisfies \(g=g(t)>0\).

Equation (1.1) is scale-invariant, as the corresponding linear model is invariant under the hyperbolic scaling (see [18])

In physics, the equation

models the motion of a macroparticle in a solvent, where m is the mass, k is the memory kernel, and \(F_{R}(t)\) is a random force. A stationary solution V(t) can be regarded as a centered Gaussian process, whose autocorrelation function r(t) satisfies the deterministic delay differential equation

where the right-hand side is a special nonlinear memory term; more details can be found in [16].

The equation

where \(0<\gamma <1,\ p>1\), \(\Gamma \) is the Euler–Gamma function, can be regarded as an approximation of the classical semilinear wave equation

as it is easy to see that the limit

holds in the distribution sense. Equation (1.3) determines a critical index that separates small-data global existence from blowup; this problem has been studied extensively and is referred to as the Strauss conjecture. That is, if \(1<p\leqslant p_S(n)\), the solution with nonnegative initial values blows up in finite time; otherwise, if \(p>p_S(n)\), there exists a global solution for small initial data. The critical index \(p=p_S(n)\) is the Strauss exponent, which is the positive root of the quadratic equation

$$\begin{aligned} (n-1)p^2-(n+1)p-2=0. \end{aligned}$$

See [7,8,9, 11, 12, 15, 20,21,22, 24,25,26] for more details.
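
As a quick numerical companion to the definition of the Strauss exponent, the following minimal sketch computes \(p_S(n)\) as the positive root of the standard quadratic \((n-1)p^2-(n+1)p-2=0\). The function names are illustrative only, and the argument is allowed to be a real number larger than 1 so that shifted values can also be evaluated.

```python
import math


def strauss_exponent(n: float) -> float:
    """Positive root of (n - 1) p^2 - (n + 1) p - 2 = 0 (requires n > 1)."""
    if n <= 1:
        # For n = 1 the quadratic degenerates to a linear equation.
        raise ValueError("the Strauss quadratic needs n > 1")
    a, b, c = n - 1.0, -(n + 1.0), -2.0
    return (-b + math.sqrt(b * b - 4.0 * a * c)) / (2.0 * a)


def in_strauss_blowup_range(p: float, n: float) -> bool:
    """True if 1 < p <= p_S(n), the blowup range in the Strauss conjecture."""
    return 1.0 < p <= strauss_exponent(n)


if __name__ == "__main__":
    for dim in (2, 3, 4):
        print(dim, strauss_exponent(dim))
```

For \(n=3\) the quadratic reduces to \(p^2-2p-1=0\), so the sketch returns \(1+\sqrt{2}\).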

Let us review some known results on the semilinear wave equations. Wakasugi [23] studied the following scale-invariant damped wave equation

where \(p>1\), \(\mu _1>0\). A blowup result was formulated under the condition that either \(1<p\leqslant p_F(n)\) and \(\mu _1>1\), or \(1<p\leqslant p_F(n+\mu _1-1)\) and \(0<\mu _1\leqslant 1\), where \(p_F(n)=1+\frac{2}{n}\) is the Fujita exponent. In addition, the well-posedness and asymptotic behavior of solutions to (1.4) have been extensively studied in [4, 10, 14].
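
The two-branch blowup condition quoted from [23] can be written as a single upper bound on p. The sketch below only encodes the condition as stated in the text; the function names are hypothetical.

```python
def fujita_exponent(n: float) -> float:
    """Fujita exponent p_F(n) = 1 + 2 / n for n > 0."""
    if n <= 0:
        raise ValueError("n must be positive")
    return 1.0 + 2.0 / n


def wakasugi_blowup_bound(n: int, mu1: float) -> float:
    """Largest p in the blowup range quoted from [23].

    mu1 > 1      : 1 < p <= p_F(n)
    0 < mu1 <= 1 : 1 < p <= p_F(n + mu1 - 1)
    """
    if mu1 <= 0:
        raise ValueError("mu1 must be positive")
    if mu1 > 1:
        return fujita_exponent(n)
    return fujita_exponent(n + mu1 - 1.0)


if __name__ == "__main__":
    print(wakasugi_blowup_bound(3, 2.0))   # damping coefficient above 1
    print(wakasugi_blowup_bound(3, 0.5))   # damping coefficient at most 1
```

Note that for \(0<\mu _1\leqslant 1\) the shifted dimension \(n+\mu _1-1\) does not exceed n, so the blowup range is at least as large as in the case \(\mu _1>1\).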

If Eq. (1.4) involves a mass term, it becomes

where \(p>1\), \(\mu _1\), \(\mu _2\) are nonnegative constants. Set

$$\begin{aligned} \delta :=(\mu _1-1)^2-4\mu _2^2, \end{aligned}$$

which is useful for describing the interplay between damping and mass. On the one hand, for \(\delta \geqslant (n+1)^2\), the damping term is predominant and the shifted Fujita exponent \(p_F\big (n+\frac{\mu _1-1-\sqrt{\delta }}{2}\big )\) is shown to be the critical exponent [5], so in this case the equation behaves like a parabolic equation. On the other hand, if \(\delta \geqslant 0\) and \(1<p<p_S(n+\mu _1)\), or if \(0\leqslant \delta <n^2\) and \(p=p_S(n+\mu _1)\), then the energy solution to (1.5) blows up in finite time, and an upper lifespan estimate was established in [19]; thus Eq. (1.5) seems to be wave-like, at least concerning blowup.
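
The two regimes described above can be explored numerically. The following sketch assumes \(\delta =(\mu _1-1)^2-4\mu _2^2\), as used in the lemmas of Sect. 3, and the standard Strauss quadratic \((n-1)p^2-(n+1)p-2=0\); all function names are illustrative and not taken from the cited works.

```python
import math


def interplay_delta(mu1: float, mu2: float) -> float:
    """delta = (mu1 - 1)^2 - 4 mu2^2."""
    return (mu1 - 1.0) ** 2 - 4.0 * mu2 ** 2


def strauss_exponent(n: float) -> float:
    """Positive root of (n - 1) p^2 - (n + 1) p - 2 = 0 (requires n > 1)."""
    a, b, c = n - 1.0, -(n + 1.0), -2.0
    return (-b + math.sqrt(b * b - 4.0 * a * c)) / (2.0 * a)


def shifted_fujita_exponent(n: int, mu1: float, mu2: float) -> float:
    """p_F(n + (mu1 - 1 - sqrt(delta)) / 2), stated for delta >= (n + 1)^2."""
    delta = interplay_delta(mu1, mu2)
    if delta < (n + 1) ** 2:
        raise ValueError("the text states this exponent only for delta >= (n+1)^2")
    return 1.0 + 2.0 / (n + (mu1 - 1.0 - math.sqrt(delta)) / 2.0)


def blowup_by_strauss_type_condition(p: float, n: int, mu1: float, mu2: float) -> bool:
    """Condition quoted from [19]: delta >= 0 and 1 < p < p_S(n + mu1),
    or 0 <= delta < n^2 and p = p_S(n + mu1)."""
    delta = interplay_delta(mu1, mu2)
    if p <= 1.0 or delta < 0.0:
        return False
    p_s = strauss_exponent(n + mu1)
    if math.isclose(p, p_s):
        return delta < n ** 2
    return p < p_s


if __name__ == "__main__":
    print(interplay_delta(5.0, 0.0), shifted_fujita_exponent(3, 5.0, 0.0))
    print(blowup_by_strauss_type_condition(1.5, 3, 2.0, 0.0))
```

The critical case is detected with a floating-point tolerance (`math.isclose`), which is a design choice of this sketch rather than part of the mathematical statement.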

We now turn to semilinear wave equations and systems with nonlinear memory terms. The simplest case is the undamped equation

For the special memory term as in (1.2), Chen and Palmieri [2] found a generalized exponent \(p_{0,S}(n,\gamma )\) which is the positive root of the following equation

Moreover, they proved that in the subcritical case (i.e., \(1 < p< p_{0,S}(n,\gamma )\) for \(n\geqslant 2\) and \(p > 1\) for \(n=1\)) and in the critical case (i.e., \(p=p_{0,S}(n,\gamma )\) and \(n\geqslant 2\)), the energy solution to (1.7) blows up in finite time. Some blowup conditions for the simplest weakly coupled system with general nonlinear memory terms

with \(p,q>1\) were obtained by Chen in [1]. He showed that if the kernels \(g_k~(k=1,2)\) of the general memory terms satisfy two different growth conditions ([1], Theorem 2.2), then the energy solution (u, v) to Eq. (1.8) must blow up in finite time. It should be pointed out that the technique of constructing G(t) in [1] enables us to deduce the first lower bound estimate for U(t); see (4.22) in this paper.

To our knowledge, there are so far few results on blowup for semilinear wave equations with scale-invariant damping, mass and general nonlinear memory terms. We study this problem in this paper.

In order to investigate blowup, a local (in time) existence result (Theorem 2.1) is required as a prerequisite. We will employ Banach’s fixed point theorem to prove Theorem 2.1. In this context, Palmieri’s decay estimates for the solution to the corresponding linear homogeneous equation are needed to construct the working space (see Lemmas 3.2, 3.3). However, the sign of the interplay quantity \(\delta \) depends on the parameters \(\mu _1\) and \(\mu _2\); thus, we have to distinguish several cases according to the value of \(\delta \).

An efficient way to study blowup for semilinear wave equations is the test function method combined with Kato-type differential inequalities; see [21, 24]. However, due to the effect of the memory term, we cannot get sharp lower estimates on the blowup functional (cf. (2.7) in [24]). Inspired by [2] and [24], we will employ an iteration argument together with test functions (see Chen and Reissig [3], Palmieri and Tu [19] and references therein) to study blowup. As Eq. (1.1) is more complicated than (1.7), the test function used in [1] does not work here. Instead, we select

as the test function, where

with \( {\mathbb {S}}^{n-1}\) being the \((n-1)\)-dimensional unit sphere, and

with \(K_{\frac{\sqrt{\delta }}{2}}(t+1)\) being the modified Bessel function of the second kind (\(t\geqslant 0\)). Since the structure of the memory term is general, the classical Kato-type lemma ([13]) does not work well for our model; however, thanks to Chen’s idea of constructing the function G(t) ([1]), the iteration procedure can still be carried out.

This paper is organized as follows. In Sect. 2, we state our main results, including the local well-posedness result (Theorem 2.1) and the blowup result (Theorem 2.2). The local well-posedness result is proved in Sect. 3, after some preliminaries on the corresponding linear homogeneous equation are introduced. Finally, the main result, Theorem 2.2, is proved in Sect. 4 by combining the test function method with the iteration argument.

\(\textbf{Notation}\). \(f\lesssim g\) means there exists a constant \(C>0\) such that \(f\le Cg\), \(f\gtrsim g\) means there exists a constant \(C>0\) such that \(f\ge Cg\), and \(f\sim g\) when \(g\lesssim f \lesssim g \). \(N_0=N\cup \{0\}\) is the set of all nonnegative integers. All spaces of functions are assumed to be over \({\mathbb {R}}^n\), and \({\mathbb {R}}^n\) is dropped in function space notation if there is no ambiguity.

2 Main Results

In this section, we will give the main results of the paper. The local well-posedness result to (1.1) is

Theorem 2.1

(Local existence) Suppose that \((u_0,u_1) \in H^1 \times L^2\) are compactly supported in a ball \(B_R(0)=\{x:|x|\leqslant R\}\) with some radius \(R>0\). Suppose further that

\(\mu _1 >0\), \(\mu _2\geqslant 0\) such that

and the nonnegative relaxation function satisfies \(g(t)\in L^1_{loc}([0,\infty ))\). Then, there exist a maximal existence time \(T_m \in (0,\infty ]\) and a unique (mild) solution

to (1.1) satisfying \(\textrm{supp}\, u(t,\cdot )\subset B_{R+t}\) for all \(t \in [0,T_m).\)

Our blowup result is concerned with the so-called energy solution, which is defined by

Definition 2.1

Let \((u_0,u_1) \in H^1 \times L^2\). We say that u is an energy solution to (1.1) on [0, T) if

satisfies \(u(0,\cdot )=u_0\in H^1(R^n)\) and the integral relation

for any test function \(\phi \in C_0^{\infty }([0,\infty )\times R^n)\) and any \(t\in (0,T)\).

The main result of this paper concerns blowup and is stated as follows.

Theorem 2.2

(Blowup) Let p satisfy \(1<p<\infty \) for \(n=1,~2\) and \(1<p\leqslant \frac{n}{n-2}\) for \(n\geqslant 3\), and let \(\mu _1 >0\), \(\mu _2\geqslant 0\) be such that \(\delta > 0\). Suppose that the nonnegative relaxation function satisfies \(g(t)\in L^1_{loc}([0,\infty ))\cap C^1([0,\infty ))\). Suppose further that

and that \((u_0,u_1) \in H^1(R^n) \times L^2(R^n)\) are nonnegative and compactly supported in the ball \(B_R(0)\), with \(u_1\) not identically zero, and satisfy

Let u be the local (in time) energy solution to (1.1) on [0, T) according to Theorem 2.1, where T is the life span. Then,

and the solution u blows up in finite time.

Example 2.1

Let us consider Eq. (1.1) with nonlinear memory terms of polynomial decay, i.e., \(g(t)=(1+t)^{-\gamma }\), namely

Under the assumptions in Theorem 2.2, we know that the solution u to (2.6) blows up in finite time.
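
To make the memory term in Example 2.1 concrete, the sketch below approximates a time convolution numerically. It assumes that the memory term has the form \((g*f)(t)=\int _0^t g(t-\tau )f(\tau )\,\text {d}\tau \) with \(f=|u|^p\) at a fixed point x, which matches the notation \((g*|u|^p)(\tau ,x)\) used in Sect. 3; the helper names and the composite trapezoid rule are choices of this illustration only.

```python
import math
from typing import Callable


def polynomial_kernel(gamma: float) -> Callable[[float], float]:
    """Kernel g(t) = (1 + t)^(-gamma) from Example 2.1."""
    return lambda t: (1.0 + t) ** (-gamma)


def memory_term(g: Callable[[float], float],
                f: Callable[[float], float],
                t: float,
                steps: int = 2000) -> float:
    """Approximate (g * f)(t) = int_0^t g(t - tau) f(tau) d tau
    with the composite trapezoid rule on a uniform grid."""
    if t == 0.0:
        return 0.0
    h = t / steps
    total = 0.5 * (g(t) * f(0.0) + g(0.0) * f(t))  # endpoint contributions
    for i in range(1, steps):
        tau = i * h
        total += g(t - tau) * f(tau)
    return h * total


if __name__ == "__main__":
    g = polynomial_kernel(1.0)
    # With f = 1 and gamma = 1 the convolution equals ln(1 + t).
    print(memory_term(g, lambda tau: 1.0, 2.0), math.log(3.0))
```

With a constant input and \(\gamma =1\) the convolution reduces to the primitive of the kernel, \(\ln (1+t)\), which gives a simple consistency check.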

3 Proof of Theorem 2.1

3.1 Preliminaries

The solution of the linear homogeneous equation

is given by

where \(\mu _1 >0\), \(\mu _2\geqslant 0\), \(E_0(t,s,x)\) and \(E_1(t,s,x)\) are the distributional solutions with data \((w_0,~w_1)=(\delta _0,0)\) and \((0,\delta _0)\), respectively, \(\delta _0\) is the Dirac distribution in the x variable and \(*_{(x)}\) denotes the convolution with respect to the x variable. In addition, we require the initial data to belong to the classical energy space \(H^1\) with additional \(L^1\) regularity, namely

For any \(k \in [0,1]\), denote

The following decay results are useful for proving Theorem 2.1; see [17] for more details. If the data are taken at \(s=0\), one has

Lemma 3.1

( [17]) Let \(\mu _1 >0\), \(\mu _2\geqslant 0\) such that \(\delta =(\mu _1-1)^2-4\mu _2^2>0\). Let us consider \((w_0,w_1) \in D(R^n)\). Then for all \(k \in [0,1]\), the energy solution w to (3.1) satisfies

where \(\ell (t)=1+(\ln (1+t))^{\frac{1}{2}}\). Moreover, \(||w_t(t,\cdot )||_{L^2(R^n)}\) and \(||\nabla w(t,\cdot )||_{L^2(R^n)}\) satisfy the same estimates as (3.2) after taking \(k=1\).

If the data are taken at initial time \(s\geqslant 0\) and the first datum is zero, we have

Lemma 3.2

( [17]) Let \(\mu _1 >0\), \(\mu _2\geqslant 0\) such that \(\delta =(\mu _1-1)^2-4\mu _2^2>0\). Let us assume \(w_0=0\) and \(w_1\in L^2\cap L^1\). Then, for \(t\geqslant s\) and \(k \in [0,1]\), the energy solution w to (3.1) satisfies

where \({\tilde{\ell }}(t,s)=1+(\ln (\frac{1+t}{1+s}))^{\frac{1}{2}}\). Moreover, \(||w_t(t,\cdot )||_{L^2(R^n)}\) and \(||\nabla w(t,\cdot )||_{L^2(R^n)}\) satisfy the same estimates as (3.3) after taking \(k=1\).

Lemma 3.3

(Gagliardo–Nirenberg Inequality, [6]) Let \(p,q,r~(1\leqslant p,q,r\leqslant \infty )\) and \(\sigma \in [0,1] \) satisfy

except for \(p=\infty \) or \(r=n\) when \(n\geqslant 2.\) Then for some constant \(C=C(p,q,r,n)>0,\) the inequality

holds for any \(h \in C_0^1\).

We now prove Theorem 2.1. Define

with

where

It is easy to see that \(\big (X(T),||\cdot ||_{X(T)}\big )\) is a Banach space. For \(T,K>0\), define

By Duhamel’s principle, let us define

where

and \(Nu=u^l+u^n\) is the unique solution to

Our goal is to prove that, for suitable choices of \(T,\ K\), the mapping N is contractive from X(T, K) into itself such that the local (in time) solution of (1.1) is the fixed point of N. For this purpose, it suffices to prove

where \(C_{0,T}\) depends on the norm of the initial data \(u_0,u_1\) and is bounded as \(T\rightarrow 0\), and \(C_{T},\, C_{T}^{'}\rightarrow 0\) as \(T\rightarrow 0.\) In fact, by (3.9), for sufficiently large K, we can choose T small enough such that both terms on the right-hand side of (3.9) are less than \(\frac{K}{2}\), so N maps X(T, K) into itself. That N is contractive for an appropriately small T follows easily from (3.10), since \(C_T'\rightarrow 0\) as \(T\rightarrow 0\). Thus, the local (in time) existence and uniqueness of the solution in X(T) are guaranteed by Banach’s fixed point theorem.
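
The mechanism behind this fixed point argument can be illustrated on a much simpler model. The sketch below is a toy example and not the map N of this paper: it applies Picard iteration to the scalar integral equation \(u(t)=u_0+\int _0^t|u(s)|^p\,\text {d}s\) on a short interval [0, T], where the integral operator is a contraction in the sup norm once T is small. The function name, the grid and the trapezoid quadrature are assumptions of this illustration.

```python
def picard_iterates(u0: float, p: float, T: float,
                    grid: int = 200, iterations: int = 30):
    """Iterate the toy map (N u)(t) = u0 + int_0^t |u(s)|^p ds on [0, T].

    Returns the last iterate (values on a uniform grid) and the sup-norm
    distances between consecutive iterates.
    """
    h = T / grid
    u = [u0] * (grid + 1)            # initial guess: the constant function u0
    gaps = []
    for _ in range(iterations):
        f = [abs(v) ** p for v in u]
        new = [u0]
        acc = 0.0
        for i in range(1, grid + 1):
            acc += 0.5 * h * (f[i - 1] + f[i])   # cumulative trapezoid rule
            new.append(u0 + acc)
        gaps.append(max(abs(a - b) for a, b in zip(new, u)))
        u = new
    return u, gaps


if __name__ == "__main__":
    u, gaps = picard_iterates(u0=1.0, p=2.0, T=0.2)
    # For p = 2 and u0 = 1 the exact solution is 1 / (1 - t).
    print(u[-1], 1.0 / (1.0 - 0.2))
    print(gaps[:5])
```

The distances between consecutive iterates shrink rapidly for small T, which is the discrete counterpart of the contraction property; for larger T the same iteration need not converge, reflecting that the existence result is only local in time.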

Since \(Nu = u^l + u^n\), in order to prove (3.9) and (3.10), it suffices to estimate \(u^l\) and \(u^n\) separately.

3.2 Proof of (3.9)

Taking \(k=0,1\) in Lemma 3.1 for \(w=u^l\), we have

where \(\ell (t)=1+(\ln (1+t))^{\frac{1}{2}}\).

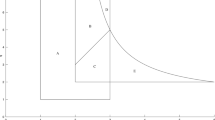

The remainder of the proof is divided into five cases according to the value of \(\delta \); see Fig. 1 at the end of the paper.

\(\mathbf {Case~1}\) \(\frac{1+\sqrt{\delta }-n}{2}>1>0\), namely \(\delta>(n+1)^2>(n-1)^2\).

By (3.11a) and (3.12a), we get

then

Thus,

where \(C_{0,T}\) is dependent on the norm of initial data and bounded as \(T\rightarrow 0\). Applying the same argument, we can deal with

Case 2: \(0<\frac{1+\sqrt{\delta }-n}{2}<1\), i.e., \((n-1)^2<\delta <(n+1)^2\) (by (3.11a) and (3.12c));

Case 3: \(\frac{1+\sqrt{\delta }-n}{2}<0<1\), i.e., \(\delta<(n-1)^2 <(n+1)^2\) (by (3.11c) and (3.12c));

Case 4: \(0=\frac{1+\sqrt{\delta }-n}{2}<1\), i.e., \((n-1)^2=\delta <(n+1)^2\) (by (3.11b) and (3.12c));

Case 5: \(0<\frac{1+\sqrt{\delta }-n}{2}=1\), i.e., \((n-1)^2<\delta =(n+1)^2\) (by (3.11a) and (3.12b)).
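
The five cases above are determined by comparing \(\delta \) with \((n-1)^2\) and \((n+1)^2\). A minimal sketch of this classification, with a hypothetical function name, reads:

```python
def decay_case(n: int, delta: float) -> int:
    """Return the case number (1 to 5) used in the proof for given n and delta > 0.

    The comparison of (1 + sqrt(delta) - n) / 2 with 0 and 1 is rewritten as a
    comparison of delta with (n - 1)^2 and (n + 1)^2, which avoids taking a
    square root. Equality is tested exactly, so delta should be given exactly
    in the borderline cases.
    """
    if delta <= 0:
        raise ValueError("the lemmas used in this proof assume delta > 0")
    low, high = (n - 1) ** 2, (n + 1) ** 2
    if delta > high:
        return 1
    if delta == high:
        return 5
    if delta > low:
        return 2
    if delta == low:
        return 4
    return 3


if __name__ == "__main__":
    for d in (25.0, 16.0, 9.0, 4.0, 1.0):
        print(d, decay_case(3, d))
```

For \(n=1\) one has \((n-1)^2=0\), so Cases 3 and 4 cannot occur when \(\delta >0\).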

Hence,

Next, we estimate \(u^n\). Using the classical Gagliardo–Nirenberg inequality (Lemma 3.3), one gets

for all \(\eta \in [0,T]\), where \(r>1\) if \(n=1,2\) and \(1<r\leqslant \frac{n}{n-2}\) if \(n\geqslant 3\).

Let’s take \(s=0\), \(k=0,1\) in Lemma 3.2, respectively, then

and

\(\mathbf {Case~1}\) \(\frac{1+\sqrt{\delta }-n}{2}>1>0\), namely \(\delta>(n+1)^2>(n-1)^2\).

By Hölder’s inequality and the property of compact support of u, we have

Applying the Gagliardo–Nirenberg inequality (G-N Ineq for short) yields

where \(1<p\leqslant \frac{n}{n-2}\) for \(n\geqslant 3\) and \(1<p<\infty \) for \(n=1,~2\). In view of (3.19a), by Duhamel’s principle, we get

where we used

due to \(g(t) \in L_{loc}^1([0,\infty ))\).

Denote \(\nabla ^j\partial _t^m u^n=\nabla ^j\partial _t^m\int _0^t E_1(t-\tau ,0,x)*(g *|u|^p)(\tau ,x) \text {d} \tau \), where \(j+m=1\) and \(j,~m\in N_0\). It is easy to see that for \(j=0,~m=1\), \(\nabla ^j\partial _t^m~u^n=u_t^n\) and \(j=1,~m=0\), \(\nabla ^j\partial _t^mu^n=\nabla u^n\).

By (3.20a), (3.21) and (3.23), one has

where

has been used, since \(\delta >(n+1)^2\) in \(\mathbf {Case~1}\).

Combining (3.22) and (3.24), we derive

Moreover, we can use the same argument to deal with \(u^n\) in the other Cases 2–5. Hence,

where \(C_{T}\rightarrow 0\) as \(T\rightarrow 0.\)

The desired inequality (3.9) can be derived from (3.17) and (3.26).

3.3 Proof of (3.10)

Suppose that \(u,\ {\overline{u}}\in X(T)\), then

where

Note

then

where \(p'\) is the conjugate index of p and we used the fact

and

for all \(\eta \in [0,T]\) with \(p>1\) if \(n=1,2\) and \(1<p\leqslant \frac{n}{n-2}\) if \(n\geqslant 3\). \(I_p\) defined by (3.29) can be estimated as

Therefore, from (3.27) it follows that

where \(C_{T}^{'}\rightarrow 0\) as \(T\rightarrow 0.\) \(\Vert \nabla ^j\partial _t^m(Nu-N{\overline{u}})\Vert _{L^2}\) can be estimated in the same way. Thus, we complete the proof of (3.10).

4 Proof of Theorem 2.2

4.1 Preliminaries for Test Function

Before starting with the construction of the test function, we recall the modified Bessel function of the second kind of order \(\zeta \),

$$\begin{aligned} K_{\zeta }(t)=\int _0^{\infty }\exp (-t\cosh z)\cosh (\zeta z)\,\text {d}z,~~~\zeta \in {\mathbb {R}}, \end{aligned}$$

which is a solution of the equation

$$\begin{aligned} \left( t^2\frac{\text {d}^2}{\text {d}t^2}+t\frac{\text {d}}{\text {d}t}-(t^2+\zeta ^2)\right) K_{\zeta }(t)=0,~~~t>0. \end{aligned}$$

The following are some useful properties about \(K_{\zeta }(t)\) with \(\zeta \) being a real parameter. More details can be found in [19].

- The limiting behavior

$$\begin{aligned} K_{\zeta }(t)=\sqrt{\frac{\pi }{2t}}e^{-t}\big [1+O(t^{-1})\big ]~~~\textrm{as}~ t\rightarrow \infty . \end{aligned}$$ (4.1)

- The derivative identity

$$\begin{aligned} \frac{\text {d}}{\text {d}t}K_{\zeta }(t)=-K_{\zeta +1}(t)+\frac{\zeta }{t}K_{\zeta }(t). \end{aligned}$$ (4.2)

Set

$$\begin{aligned} \lambda (t):=(1+t)^{\frac{\mu _1+1}{2}}K_{\frac{\sqrt{\delta }}{2}}(t+1),~~~\text {for}~t\geqslant 0, \end{aligned}$$

which satisfies

$$\begin{aligned} \left( \frac{\text {d}^2}{\text {d}t^2}-\frac{\mu _1}{1+t}\frac{\text {d}}{\text {d}t}+\frac{\mu _1+\mu _2^2}{(1+t)^2}-1 \right) \lambda (t)=0,~t>0. \end{aligned}$$

Following Yordanov and Zhang [24], we introduce the function

$$\begin{aligned} \varphi (x):= {\left\{ \begin{array}{ll} \int _{{\mathbb {S}}^{n-1}}e^{x\cdot \omega }\text {d} \omega ,&{}n\geqslant 2,\\ e^{x}+e^{-x},&{}n=1, \end{array}\right. } \end{aligned}$$ (4.3)

where \( {\mathbb {S}}^{n-1}\) is the \((n-1)\)-dimensional unit sphere. The function \(\varphi \) satisfies

$$\begin{aligned} \Delta \varphi (x)=\varphi (x),~~~x \in {\mathbb {R}}^n \end{aligned}$$

and

$$\begin{aligned} \varphi (x)\thicksim C_n|x|^{-\frac{n-1}{2}}e^{|x|}~~\text {as}~~|x|\rightarrow \infty . \end{aligned}$$

The function

$$\begin{aligned} \Phi (x,t):=\lambda (t)\varphi (x) \end{aligned}$$ (4.4)

constitutes a solution to

$$\begin{aligned} \begin{aligned}&\Phi _{tt}-\Delta \Phi -\partial _t\Big (\frac{\mu _1}{1+t}\Phi \Big )+\frac{{\mu _2}^2}{(1+t)^{2}}\Phi =0, ~~~~~~t>0,~x \in {\mathbb {R}}^n. \end{aligned} \end{aligned}$$ (4.5)

We choose \(\Phi (x,t)\) as the test function to prove Lemma 4.1. Indeed, since the nonlinear term in (1.1) is nonnegative, applying the same method used to prove ( [19], Lemma 2.1), one has

Lemma 4.1

Assume that \(u_0,u_1 \) are nonnegative and compactly supported in the ball \(B_R(0)\) and satisfy

then the local solution u to (1.1) fulfills

and there exists a large \(T_0\), which is independent of \(u_0,u_1\) such that for any \(t>T_0\) and \(p>1\), the following estimate holds
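
As a numerical sanity check on the time-dependent factor \(\lambda (t)=(1+t)^{\frac{\mu _1+1}{2}}K_{\frac{\sqrt{\delta }}{2}}(t+1)\) of the test function in this subsection, the sketch below evaluates the modified Bessel function through the standard integral representation \(K_{\zeta }(t)=\int _0^{\infty }e^{-t\cosh z}\cosh (\zeta z)\,\text {d}z\) and measures the residual of the ordinary differential equation for \(\lambda \) by central finite differences. The truncation point, the step sizes and all function names are assumptions of this illustration; it uses only the standard library.

```python
import math


def bessel_k(order: float, t: float, upper: float = 12.0, steps: int = 4000) -> float:
    """K_order(t) via int_0^upper exp(-t cosh z) cosh(order z) dz (Simpson rule).

    Intended for t >= 1 and moderate orders, where the integrand is
    negligible beyond z = upper.
    """
    h = upper / steps

    def integrand(z: float) -> float:
        return math.exp(-t * math.cosh(z)) * math.cosh(order * z)

    total = integrand(0.0) + integrand(upper)
    for i in range(1, steps):
        total += (4.0 if i % 2 else 2.0) * integrand(i * h)
    return total * h / 3.0


def lambda_weight(t: float, mu1: float, mu2: float) -> float:
    """lambda(t) = (1 + t)^((mu1 + 1) / 2) K_{sqrt(delta) / 2}(1 + t), delta > 0."""
    delta = (mu1 - 1.0) ** 2 - 4.0 * mu2 ** 2
    return (1.0 + t) ** ((mu1 + 1.0) / 2.0) * bessel_k(math.sqrt(delta) / 2.0, 1.0 + t)


def ode_residual(t: float, mu1: float, mu2: float, eps: float = 1e-3) -> float:
    """Residual of lam'' - mu1/(1+t) lam' + (mu1 + mu2^2)/(1+t)^2 lam - lam."""
    lam0 = lambda_weight(t, mu1, mu2)
    lam_p = lambda_weight(t + eps, mu1, mu2)
    lam_m = lambda_weight(t - eps, mu1, mu2)
    d1 = (lam_p - lam_m) / (2.0 * eps)          # central first difference
    d2 = (lam_p - 2.0 * lam0 + lam_m) / eps ** 2  # central second difference
    return d2 - mu1 / (1.0 + t) * d1 + (mu1 + mu2 ** 2) / (1.0 + t) ** 2 * lam0 - lam0


if __name__ == "__main__":
    print(lambda_weight(1.0, 3.0, 0.5), ode_residual(1.0, 3.0, 0.5))
```

The residual is small compared with \(\lambda (t)\) itself, up to finite-difference error. For order \(\frac{1}{2}\) the quadrature can also be compared with the closed form \(K_{1/2}(t)=\sqrt{\frac{\pi }{2t}}e^{-t}\).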

4.2 Iteration Argument

In order to investigate blowup, we track the evolution of the time-dependent functional

related to the solution. It can be seen that this functional becomes infinite as t approaches a finite time; therefore, the solution u must blow up in finite time. We divide the remainder of the proof into four steps.

Step 1 Deriving the iteration frame. Choosing a test function \(\phi \) which satisfies

in (2.3), we get

i.e.,

Differentiating with respect to t gives

The quadratic equation

has a pair of real roots

since \(\delta =(\mu _1-1)^2-4\mu _2^2> 0\). Clearly,

Rewrite (4.11) as

Multiplying by \((1+t)^{r_2+1}\), integrating over [0, t] and using (2.5) yield

Multiplying once more, now by \((1+t)^{r_1}\), and integrating over [0, t] gives

where \(U(0)=\int _{R^n}u(0,x)\text {d}x=\int _{R^n}u_0\text {d}x\) is nonnegative due to \(u_0(x)\geqslant 0\), thus

Noting that \(r_1-r_2-1<0\) and substituting

into (4.17) gives that

This is the iteration frame to be used in the sequel.

Step 2 Deducing the first lower bound estimate for U(t). For \(g\in C^1([0,\infty ))\), we introduce

$$\begin{aligned} G(t):=\int _0^t g(\tau )\,\text {d}\tau , \end{aligned}$$

which has the following properties

- \(G^{'}(t)=g(t)>0\), \(G(0)=0.\)

- G(t) is strictly increasing and \(G(t)\geqslant 0\).

- For all \(t\geqslant t_0>0\) and \(\alpha \geqslant 0\),

$$\begin{aligned} \int _0^tg(t-\eta )\eta ^{\alpha }\text {d}\eta&= \int _0^tg(\eta )(t-\eta )^{\alpha }\text {d}\eta \\&=[G (\eta )(t-\eta )^{\alpha }]\big |_{\eta =0}^{\eta =t}+\alpha \int _0^t G (\eta )(t-\eta )^{\alpha -1}\text {d} \eta \\&\geqslant {\left\{ \begin{array}{ll} G (t_0),&{} \alpha =0,\\ \alpha \int _{t_0}^{t} G (\eta )(t-\eta )^{\alpha -1}\text {d} \eta , &{}\alpha >0, \end{array}\right. } \\&\geqslant G (t_0)(t-t_0)^{\alpha }. \end{aligned}$$ (4.21)
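
The lower bound (4.21) can be checked numerically for a concrete kernel. The sketch below takes the polynomial kernel \(g(t)=(1+t)^{-\gamma }\) from Example 2.1, assumes that G is the primitive of g vanishing at 0 (so that \(G'=g\) and \(G(0)=0\) as listed above), and compares a midpoint-rule approximation of \(\int _0^tg(t-\eta )\eta ^{\alpha }\text {d}\eta \) with \(G(t_0)(t-t_0)^{\alpha }\). Function names and quadrature parameters are illustrative.

```python
import math


def kernel_primitive(gamma: float, t: float) -> float:
    """G(t) = int_0^t (1 + s)^(-gamma) ds in closed form."""
    if gamma == 1.0:
        return math.log1p(t)
    return ((1.0 + t) ** (1.0 - gamma) - 1.0) / (1.0 - gamma)


def weighted_kernel_integral(gamma: float, alpha: float, t: float,
                             steps: int = 4000) -> float:
    """Midpoint-rule approximation of int_0^t g(t - eta) eta^alpha d eta."""
    h = t / steps
    total = 0.0
    for i in range(steps):
        eta = (i + 0.5) * h
        total += (1.0 + t - eta) ** (-gamma) * eta ** alpha
    return h * total


def lower_bound(gamma: float, alpha: float, t: float, t0: float) -> float:
    """Right-hand side G(t0) (t - t0)^alpha of (4.21), for t >= t0 > 0."""
    return kernel_primitive(gamma, t0) * (t - t0) ** alpha


if __name__ == "__main__":
    for alpha in (0.0, 0.5, 1.5):
        lhs = weighted_kernel_integral(0.5, alpha, 3.0)
        rhs = lower_bound(0.5, alpha, 3.0, 1.0)
        print(alpha, lhs, rhs, lhs >= rhs)
```

For \(\alpha =0\) the left-hand side equals G(t), so the inequality reduces to the monotonicity of G.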

From Lemma 4.1, (4.17) and (4.21), for \(t\geqslant t_0\), it follows that

Step 3 Formulating the iteration procedure. Assume inductively that

where \(\{Q_j\}_{j\geqslant 1}, \{\theta _{j}\}_{j\geqslant 1}, \{\alpha _j\}_{j\geqslant 1}\) are sequences of nonnegative real numbers that will be determined inductively. When \(j=1\),

Inspired by Chen [1], we construct \(\{L_j\}_{j\geqslant 1}\) as follows

where \(L_j\) is monotonically increasing and \(\prod _{k=0}^{\infty }l_k\) is convergent by the ratio test and the fact that \(\lim \limits _{k\rightarrow \infty }(\ln l_{k+1})/(\ln l_k)=p^{-1} < 1\); then, by induction, we know that \(L\triangleq \prod _{k=0}^{\infty }l_k>1\) and \(L_j \in [1,L]\).

Substituting (4.23) into (4.19), one has

Making change of the variable and using integration by parts yield

for any \(s\geqslant L_{j+1}t_0\), where we used the fact

In view of \(g(t) \in C^1([0, \infty ))\), \(L>1\) and \(L_j \in [1,L]\) for all \(j\geqslant 1\), there exists a positive integer \(j_0\) such that, if \(j\geqslant j_0\), it holds that

Thus,

where \(\xi \in (0,L_jt_0p^{-j}),~\zeta \in (0,~\xi ) \), for any \(j\geqslant \max \{j_0,j_1\}\); here \(j_1\) is chosen to guarantee that \(p^{j} g(0)+g^{'}(\zeta )O(1)\geqslant C>0\), which is possible since \(g(0)>0\). Combining (4.27) and (4.28), we conclude that

for any \(t\geqslant L_{j+1}t_0~,~j\geqslant \max \{j_0,j_1\}.\) Therefore, the desired iteration procedure (4.23) is valid for

Step 4 Achieving blowup. For any number j such that \(j\geqslant \max \{j_0,j_1\}\), from (4.30) one has

and

Then for any number \(j\geqslant \max \{j_0,j_1\}\), it holds that

If we take \(j_2\) to be the minimum integer such that

then for any \(j\geqslant \max \{j_0,j_1,j_2\}\), one has

where \(E_3= Q_1 p^{-\frac{4p}{(p-1)^2}} E_2^{\frac{1}{p-1}}\) is a suitable positive constant independent of j.

It is easy to obtain that, for \(t\geqslant \max \{1,2Lt_0\}\),

Then, for any number \(j\geqslant \max \{j_0,j_1,j_2\}\), noting (4.31), (4.32), (4.35) and \(L_j \in [1,L]\), it follows from (4.23) that

where

For any number \(j\geqslant \max \{j_0,j_1,j_2\}\), the condition (2.4) guarantees that the power of t in J(t) is positive. So, there exists a \(t_1\) such that

If we take \(t\geqslant \max ~\{1,2Lt_0,t_1\}\), then the lower bound of U(t) in (4.37) must blow up as \(j\rightarrow \infty \), which completes the proof of Theorem 2.2.

References

1. Chen, W.: Interplay effects on blow-up of weakly coupled systems for semilinear wave equations with general nonlinear memory terms. Nonlinear Anal. 202, 23 (2021)

2. Chen, W., Palmieri, A.: Blow-up result for a semilinear wave equation with a nonlinear memory term, Anomalies in partial differential equations, Springer INdAM Ser 43 Springer, Cham (2021), pp. 77–97

3. Chen, W., Reissig, M.: Blow-up of solutions to Nakao’s problem via an iteration argument. J. Differ. Equ. 275, 733–756 (2021)

4. D’Abbicco, M.: The threshold of effective damping for semilinear wave equations. Math. Methods Appl. Sci. 38, 1032–1045 (2015)

5. do Nascimento, W.N., Palmieri, A., Reissig, M.: Semi-linear wave models with power non-linearity and scale-invariant time-dependent mass and dissipation. Math. Nachr. 108(11–12), 1779–1805 (2017)

6. Friedman, A.: Partial Differential Equations. Krieger, New York (1976)

7. Georgiev, V., Lindblad, H., Sogge, C.D.: Weighted Strichartz estimates and global existence for semilinear wave equations. Amer. J. Math. 119(6), 1291–1319 (1997)

8. Glassey, R.T.: Existence in the large for \(u_{tt}-\Delta u = F (u)\) in two space dimensions. Math. Z. 178(6), 233–261 (1981)

9. Glassey, R.T.: Finite-time blow-up for solutions of nonlinear wave equations. Math. Z. 177(3), 323–340 (1981)

10. Ikeda, M., Sobajima, M.: Life-span of solutions to semilinear wave equation with time-dependent critical damping for specially localized initial data. Math. Ann. 372(3–4), 1017–1040 (2018)

11. Jiao, H., Zhou, Z.: An elementary proof of the blow-up for semilinear wave equation in high space dimensions. J. Differ. Equ. 189(2), 355–365 (2003)

12. John, F.: Blow-up of solutions of nonlinear wave equations in three space dimensions. Manuscripta Math. 28(1–3), 235–268 (1979)

13. Kato, T.: Blow up solutions of some nonlinear hyperbolic equations. Commun. Pure Appl. Math. 33(4), 501–505 (1980)

14. Lai, N.-A., Takamura, H., Wakasa, K.: Blow-up for semilinear wave equations with the scale invariant damping and super-Fujita exponent. J. Differ. Equ. 263(9), 5377–5394 (2017)

15. Lindblad, H., Sogge, C.D.: Long-time existence for small amplitude semilinear wave equations. Amer. J. Math. 118(5), 1047–1135 (1996)

16. Martin, H.: Mathematical analysis of some iterative methods for the reconstruction of memory kernels. Electron. Trans. 54, 483–498 (2021)

17. Palmieri, A.: Global existence of solutions for semi-linear wave equation with scale-invariant damping and mass in exponentially weighted spaces. J. Math. Anal. Appl. 461(2), 1215–1240 (2018)

18. Palmieri, A., Reissig, M.: A competition between Fujita and Strauss type exponents for blow-up of semi-linear wave equations with scale-invariant damping and mass. J. Differ. Equ. 266(2–3), 1176–1220 (2019)

19. Palmieri, A., Tu, Z.: Lifespan of semilinear wave equation with scale invariant dissipation and mass and sub-Strauss power nonlinearity. J. Math. Anal. Appl. 470, 447–469 (2019)

20. Schaeffer, J.: The equation \(u_{tt}-\Delta u = |u|^p\) for the critical value of p. Proc. Roy. Soc. Edinburgh Sect. A 101(1–2), 31–44 (1985)

21. Sideris, T.C.: Nonexistence of global solutions to semilinear wave equations in high dimensions. J. Differ. Equ. 52(3), 378–406 (1984)

22. Strauss, W.A.: Nonlinear scattering theory at low energy. J. Funct. Anal. 41(1), 110–133 (1981)

23. Wakasugi, Y.: Critical exponent for the semilinear wave equation with scale invariant damping, Fourier analysis, Trends Math, Birkhäuser/Springer, Cham, pp. 375–390 (2014)

24. Yordanov, B.T., Zhang, Q.S.: Finite time blow up for critical wave equations in high dimensions. J. Funct. Anal. 231(2), 361–374 (2006)

25. Zhou, Y.: Blow up of solutions to semilinear wave equations with critical exponent in high dimensions. Chin. Ann. Math. Ser. B 28(2), 205–212 (2007)

26. Zhou, Y.: Cauchy problem for semilinear wave equations in four space dimensions with small initial data. J. Partial Differ. Equ. 8(2), 135–144 (1995)

Acknowledgements

This work is supported by the NSF of China (11731007), the Priority Academic Program Development of Jiangsu Higher Education Institutions, the NSF of Jiangsu Province (BK20221320) and the Postgraduate Research & Practice Innovation Program of Jiangsu Province.

Ethics declarations

Conflict of interest

The authors declared that they have no conflict of interest.

Additional information

Communicated by Hongjun Gao.

About this article

Cite this article

Feng, Z., Guo, F. & Li, Y. Blowup for a Damped Wave Equation with Mass and General Nonlinear Memory. Bull. Malays. Math. Sci. Soc. 47, 77 (2024). https://doi.org/10.1007/s40840-024-01673-9