Abstract

Sparse tensors play fundamental roles in hypergraph data, sensor node network data and remote sensing data. In this paper, we establish new H-eigenvalue inclusion sets for sparse tensors by their majorization matrix’s digraph and representation matrix’s digraph. Numerical examples are given to verify that our conclusions are more accurate and require fewer computations than existing results. As applications, we provide some checkable sufficient conditions for the positive definiteness of even-order sparse tensors, and propose lower and upper bounds for the H-spectral radius of nonnegative sparse tensors.


1 Introduction

Let \(\mathbb {C} (\mathbb {R})\) be the set of complex (real) numbers and \(\mathbb {C}^{n} (\mathbb {R}^{n})\) be the set of n-dimensional complex (real) vectors. An m-order n-dimensional tensor \(\mathcal {A}=(a_{i_{1}i_{2}\cdots i_{m}})\) is a multi-way array with entries

$$\begin{aligned} a_{i_{1}i_{2}\cdots i_{m}}\in \mathbb {R}, \quad i_{j}\in N=\{1,2,\ldots ,n\}, \quad j=1,2,\ldots ,m. \end{aligned}$$

Tensor \(\mathcal {A}\) is called nonnegative (positive) if \(a_{{i_1}{i_2}\ldots {i_m}}\ge 0 \,(a_{{i_1}{i_2}\ldots {i_m}}>0\)).

A tensor is a higher-order extension of a matrix, and hence many definitions and associated properties for matrices, such as eigenvalue theory, can be extended to higher-order tensors through multilinear algebra [12, 18].

Definition 1.1

Let \(\mathcal {A}\) be an m-order n-dimensional tensor. Then \((\lambda ,x)\) is called an eigenpair of tensor \(\mathcal {A}\) if

$$\begin{aligned} \mathcal {A}x^{m-1}=\lambda x^{[m-1]}, \end{aligned}$$

where \((\mathcal {A} x^{m-1})_i=\sum \limits _{i_2,\ldots , i_m\in N}a_{{i}{i_2}\ldots {i_m}}x_{i_2}\ldots x_{i_m}\) and \(x^{[m-1]}=(x^{m-1}_1,x^{m-1}_2,\ldots , x^{m-1}_n)^{\top }\). Further, \((\lambda ,x)\) is called an H-eigenpair if they are both real.
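
The quantities in Definition 1.1 can be evaluated numerically. The following is a minimal sketch, assuming the tensor is stored as a dense NumPy array with m axes of length n; the helper names `tensor_apply` and `eigen_residual` are hypothetical and not part of the paper.

```python
import numpy as np

def tensor_apply(A, x):
    """Return the vector A x^{m-1} for an m-order n-dimensional tensor A."""
    result = A
    # Contract the last axis with x, m-1 times, so only the first axis remains.
    for _ in range(A.ndim - 1):
        result = result @ x
    return result

def eigen_residual(A, lam, x):
    """Largest entry of |A x^{m-1} - lam * x^{[m-1]}| (zero for an eigenpair)."""
    m = A.ndim
    return float(np.max(np.abs(tensor_apply(A, x) - lam * x ** (m - 1))))

# Illustration (assumed data): a diagonal 3-order 3-dimensional tensor.
n = 3
A = np.zeros((n, n, n))
for i, d in enumerate([2.0, 5.0, 7.0]):
    A[i, i, i] = d

x = np.array([0.0, 1.0, 0.0])          # second unit vector
residual = eigen_residual(A, 5.0, x)   # (5, x) is an H-eigenpair here
```

For a diagonal tensor every unit vector is an H-eigenvector, so the residual vanishes for the matching diagonal entry and is nonzero for any other value of \(\lambda \).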

Due to their numerous applications in fields such as higher-order Markov chains [14], magnetic resonance imaging [1, 18, 19], hypergraphs [2, 16] and positive definiteness of multivariate forms in automatic control [15, 18, 20, 23], tensor eigenvalue problems have attracted a great deal of attention. For example, we can use the smallest H-eigenvalue to ascertain whether a multivariate form is positive definite [15]. However, it is NP-hard to locate all H-eigenvalues or the smallest H-eigenvalue [6, 18]. To check positive definiteness, researchers have constructed sets that contain all H-eigenvalues [4, 8, 9, 24, 25, 28, 29]. Recently, eigenvalue problems for sparse tensors, in which the number of nonzero elements is far smaller than the number of zero elements, have become crucial in data problems such as hypergraph data, sensor node network data and remote sensing data [2, 21, 26, 27]. Unfortunately, the computational effort is large if we use the aforementioned methods to build H-eigenvalue inclusion sets for sparse tensors of high dimension. Therefore, the sparsity of tensors encourages us to develop new H-eigenvalue inclusion sets. Very recently, Liu et al. [13] established bounds for the spectral radius of a nonnegative sparse tensor by its majorization matrix’s digraph. Two intriguing questions arise: Can the aforementioned method be used for general sparse tensors? Can a new matrix digraph be introduced to characterize the H-eigenvalues of both general and nonnegative sparse tensors?

Motivated and inspired by the above works, we explore the relations between general sparse tensors and the digraphs of their majorization matrices and representation matrices introduced in [5, 7, 16]. By drawing on the information of \(\Gamma _{\mathcal {G}(|\mathcal {A}|)}(i)\) and \(\Gamma _{|\mathring{\mathcal {A}}|}(i)\) from these digraphs, we establish tight H-eigenvalue inclusion sets with reduced calculations, which improve the results of [8, 9]. Based on the new H-eigenvalue inclusion sets, we propose several sufficient conditions to identify the positive definiteness of even-order real supersymmetric sparse tensors. Finally, we estimate sharp lower and upper bounds for the H-spectral radius of nonnegative sparse tensors with simple computations.

The remainder of the paper is organized as follows. In Sect. 2, important definitions and preliminary results are recalled. In Sect. 3, we establish the improved H-eigenvalue inclusion sets and show that they have their own advantages by Examples 3.1 and 3.2. In Sect. 4, we check the positive definiteness of even-order real supersymmetric sparse tensors and estimate the bounds for H-spectral radius of nonnegative sparse tensors using these H-eigenvalue inclusion sets.

2 Preliminaries

In this section, we introduce some definitions and related properties of the tensor analysis.

Definition 2.1

[3, 18] Let \(\mathcal {A}\) be an m-order n-dimensional tensor.

-

(i)

Tensor \(\mathcal {A}\) is called reducible if there exists a nonempty proper index subset \(I\subset \{1,2,\dots ,n\}\) such that

$$\begin{aligned} a_{{i_1}{i_2}\ldots {i_m}}=0, \quad \forall i_1\in I, i_2, \dots ,i_m\notin I. \end{aligned}$$

If \(\mathcal {A}\) is not reducible, then it is called irreducible.

-

(ii)

Tensor \(\mathcal {A}\) is called supersymmetric if its entries are invariant under any permutation of their indices.

-

(iii)

Let \(\sigma (\mathcal {A})\) be the set of all H-eigenvalues of \(\mathcal {A}\). Then, the H-spectral radius \(\rho (\mathcal {A})\) is defined by

$$\begin{aligned} \rho (\mathcal {A})=\max \{|\lambda |: \lambda \in \sigma (\mathcal {A})\}. \end{aligned}$$

It is well known that, for a nonnegative tensor \(\mathcal {A}\), the H-spectral radius \(\rho (\mathcal {A})\) coincides with its largest eigenvalue.
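
Supersymmetry, as in Definition 2.1 (ii), can be checked directly on a dense array by comparing the tensor with all of its axis permutations. The following is a minimal sketch under the assumption that the tensor is a NumPy array; the function name `is_supersymmetric` and the sample tensors are illustrative only. The check costs m! array comparisons, so it is meant for small orders.

```python
import itertools
import numpy as np

def is_supersymmetric(A, tol=0.0):
    """True if A is unchanged by every permutation of its m indices."""
    return all(
        np.allclose(A, np.transpose(A, perm), rtol=0.0, atol=tol)
        for perm in itertools.permutations(range(A.ndim))
    )

# Assumed sample data: a 3-order 2-dimensional tensor whose three entries
# with index multiset {0, 0, 1} are all equal, hence supersymmetric.
S = np.zeros((2, 2, 2))
for idx in set(itertools.permutations((0, 0, 1))):
    S[idx] = 1.0

# Only one of the three permuted positions is filled, hence not supersymmetric.
T = np.zeros((2, 2, 2))
T[0, 0, 1] = 1.0

sym_S = is_supersymmetric(S)
sym_T = is_supersymmetric(T)
```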

In what follows, we introduce the relations between directed graph and matrices (tensors).

The directed graph of a nonnegative matrix \(A =(a_{ij})\) has the indices \(\{1,\cdots ,n\}\) as vertices, and there is an arc from vertex i to vertex j if \(a_{ij}\ne 0.\) The matrix A is irreducible if and only if its directed graph is strongly connected, that is, one can get from any vertex to any other vertex (perhaps in several steps) [16].
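
The characterization of irreducibility through strong connectivity translates into a simple reachability test. The sketch below is one straightforward way to do it (a depth-first search from every vertex), not an optimized algorithm; the function names and the sample matrices are assumptions made for illustration.

```python
import numpy as np

def reachable_from(M, start):
    """Set of vertices reachable from `start` along arcs i -> j with M[i, j] != 0."""
    n = M.shape[0]
    seen = {start}
    stack = [start]
    while stack:
        i = stack.pop()
        for j in range(n):
            if M[i, j] != 0 and j not in seen:
                seen.add(j)
                stack.append(j)
    return seen

def is_irreducible(M):
    """True if the digraph of M is strongly connected."""
    n = M.shape[0]
    return all(len(reachable_from(M, s)) == n for s in range(n))

# A directed 3-cycle is strongly connected.
cycle = np.array([[0, 1, 0],
                  [0, 0, 1],
                  [1, 0, 0]])

# An upper triangular matrix is reducible: vertex 1 cannot reach vertex 0.
triangular = np.array([[1, 1],
                       [0, 1]])

cycle_ok = is_irreducible(cycle)
triangular_ok = is_irreducible(triangular)
```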

Definition 2.2

[5, 7, 16] Let \(\mathcal {A}\) be an m-order n-dimensional nonnegative tensor.

-

(i)

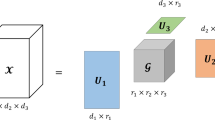

A nonnegative matrix \(\mathring{\mathcal {A}}=(a_{ij})_{n\times n}\) is called the majorization matrix associated with the tensor \(\mathcal {A},\) if the (i, j)-th element of \(\mathring{\mathcal {A}}\) is defined to be \(a_{ij\cdots j}\) for any \(i, j \in N \).

-

(ii)

A nonnegative matrix \(\mathcal {G}(\mathcal {A})=(a_{ij})_{n\times n}\) is called the representation matrix associated with the tensor \(\mathcal {A},\) if the (i, j)-th element of \(\mathcal {G}(\mathcal {A})\) is defined to be \(\sum \limits _{j\in \{i_{2},\cdots ,i_{m}\}}a_{i i_2\cdots i_m}.\)

-

(iii)

We associate with \(\mathring{\mathcal {A}}\) the digraph \(\Gamma _{\mathring{\mathcal {A}}}=\left( V(\mathring{\mathcal {A}}),E(\mathring{\mathcal {A}})\right) \), where \(V(\mathring{\mathcal {A}})=\{1,\cdots ,n\}\) is the vertex set of \(\Gamma _{\mathring{\mathcal {A}}},\) and \(E(\mathring{\mathcal {A}})=\{e_{ij}: e_{ij}=a_{ij\ldots j}\ne 0, i\ne j\}\) is the arc set of \(\Gamma _{\mathring{\mathcal {A}}},\) i.e., \(e_{ij}\) is a directed edge of \(\Gamma _{\mathring{\mathcal {A}}}\).

-

(iv)

We associate with \(\mathcal {G}(\mathcal {A})\) the digraph \(\Gamma _{\mathcal {G}(\mathcal {A})}=\left( V(\mathcal {G}(\mathcal {A})),E(\mathcal {G}(\mathcal {A}))\right) ,\) where \(V(\mathcal {G}(\mathcal {A}))=\{1,\cdots ,n\}\) is the vertex set of \(\Gamma _{\mathcal {G}(\mathcal {A})},\) and \(E(\mathcal {G}(\mathcal {A}))=\{g_{ij}: g_{ij}=\sum \limits _{j\in \{i_{2},\cdots ,i_{m}\}}a_{i i_2\cdots i_m}\ne 0, i\ne j\}\) is the arc set of \(\Gamma _{\mathcal {G}(\mathcal {A})},\) i.e., \(g_{ij}\) is a directed edge of \(\Gamma _{\mathcal {G}(\mathcal {A})}\).

-

(v)

Tensor \(\mathcal {A}\) is called weakly irreducible if \(\mathcal {G}(\mathcal {A})\) is irreducible.

From Theorem 2.3 of [16], if \(\mathring{\mathcal {A}}\) is irreducible, then \(\mathcal {A}\) is irreducible. Further, if \(\mathcal {A}\) is irreducible, then \(\mathcal {A}\) is weakly irreducible [7]. When \(\mathcal {A}\) is a general tensor, we use \(|\mathcal {A}|\) to denote the nonnegative tensor whose entries are the absolute values of the entries of \(\mathcal {A}.\) In this paper, \(|\mathring{\mathcal {A}}|\) and \(\mathcal {G}(|\mathcal {A}|)\) denote the majorization matrix and the representation matrix of a general tensor, respectively.
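
To make Definition 2.2 concrete, the following sketch builds \(|\mathring{\mathcal {A}}|\) and \(\mathcal {G}(|\mathcal {A}|)\) from a dense array. It assumes that each index tuple \((i, i_2, \ldots , i_m)\) contributes once to entry (i, j) for every distinct j appearing among \(i_2, \ldots , i_m\), which is how the sum in Definition 2.2 (ii) is read here. The function names and the sample tensor are hypothetical.

```python
import itertools
import numpy as np

def majorization_matrix(A):
    """Matrix with (i, j) entry |a_{i j ... j}|."""
    n, m = A.shape[0], A.ndim
    M = np.zeros((n, n))
    for i in range(n):
        for j in range(n):
            M[i, j] = abs(A[(i,) + (j,) * (m - 1)])
    return M

def representation_matrix(A):
    """Matrix with (i, j) entry: sum of |a_{i i2 ... im}| over tuples containing j."""
    n, m = A.shape[0], A.ndim
    G = np.zeros((n, n))
    for idx in itertools.product(range(n), repeat=m):
        # Each tuple is counted once per distinct trailing index j.
        for j in set(idx[1:]):
            G[idx[0], j] += abs(A[idx])
    return G

# Assumed sample: a sparse 3-order 3-dimensional tensor with four nonzeros.
A = np.zeros((3, 3, 3))
A[0, 1, 2] = 2.0
A[0, 0, 1] = -4.0
A[1, 0, 0] = 3.0
A[2, 2, 2] = 1.0

M = majorization_matrix(A)
G = representation_matrix(A)
```

In this sample the majorization matrix has a single off-diagonal nonzero, while the representation matrix has three, which illustrates that the two digraphs can carry different information about the same tensor.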

We end this section with important results of [8, 9, 25]. Given an m-order n-dimensional tensor \(\mathcal {A}=(a_{{i_1}{i_2}\cdots {i_m}}),\) denote

where

Lemma 2.1

Let \(\mathcal {A}\) be an m-order n-dimensional tensor. Then,

-

(I)

(Theorem 6 of [18])

$$\begin{aligned} \sigma (\mathcal {A})\subseteq \Upsilon (\mathcal {A})=\bigcup _{i\in N}\Upsilon _{i}(\mathcal {A}), \end{aligned}$$

where \(\Upsilon _{i}(\mathcal {A})=\{z\in \mathbb {R}:|z-a_{i\ldots i}| \le r_i(\mathcal {A})\}.\)

-

(II)

(Theorem 2.1 of [8])

$$\begin{aligned} \sigma (\mathcal {A})\subseteq \mathcal {K}(\mathcal {A})=\bigcup _{i,j\in N, i\ne j}\mathcal {K}_{i,j}(\mathcal {A}), \end{aligned}$$

where \(\mathcal {K}_{i,j}(\mathcal {A})=\{z\in \mathbb {R}:(|z-a_{i\ldots i}|-r^j_i(\mathcal {A}))|z-a_{j\ldots j}| \le |a_{i j\ldots j}|r_j(\mathcal {A})\}\) and \(r^j_i(\mathcal {A})= r_i(\mathcal {A})-|a_{ij\cdots j}|.\)

-

(III)

(Theorem 2.1 of [9])

$$\begin{aligned} \sigma (\mathcal {A})\subseteq \Theta (\mathcal {A})=\bigcup _{i,j\in N, i\ne j}\Theta _{i,j}(\mathcal {A}), \end{aligned}$$

where \(\Theta _{i,j}(\mathcal {A})=\{z\in \mathbb {R}:(|z-a_{i\cdots i}|-r_{i}^{\Delta _{i}}(\mathcal {A}))|z-a_{j\cdots j}|\le r_{i}^{\overline{\Delta _{i}}}(\mathcal {A})r_{j}(\mathcal {A})\}.\)
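
The first two sets of Lemma 2.1 are easy to evaluate numerically. The sketch below assumes that \(r_i(\mathcal {A})\) is the sum of \(|a_{i i_2\cdots i_m}|\) over all index tuples other than \((i,\ldots ,i)\), which is the usual convention and matches how \(r_{t_r}(\mathcal {A})\) is used in the proof of Theorem 4.3. The function names and the sample tensor are illustrative assumptions.

```python
import numpy as np

def diagonal(A):
    """Vector of diagonal entries a_{i...i}."""
    return np.array([A[(i,) * A.ndim] for i in range(A.shape[0])])

def off_diagonal_sums(A):
    """r_i(A): sum of |a_{i i2...im}| over all tuples except (i, ..., i)."""
    n = A.shape[0]
    row_sums = np.abs(A).reshape(n, -1).sum(axis=1)
    return row_sums - np.abs(diagonal(A))

def gershgorin_intervals(A):
    """Intervals Upsilon_i(A) = [a_ii - r_i, a_ii + r_i] of Lemma 2.1 (I)."""
    d, r = diagonal(A), off_diagonal_sums(A)
    return [(float(d[i] - r[i]), float(d[i] + r[i])) for i in range(len(d))]

def in_brauer_set(A, z):
    """True if z lies in some K_{i,j}(A) of Lemma 2.1 (II)."""
    n, m = A.shape[0], A.ndim
    d, r = diagonal(A), off_diagonal_sums(A)
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            a_ij = abs(A[(i,) + (j,) * (m - 1)])
            lhs = (abs(z - d[i]) - (r[i] - a_ij)) * abs(z - d[j])
            if lhs <= a_ij * r[j]:
                return True
    return False

# Assumed sample: a 3-order 2-dimensional tensor.
A = np.zeros((2, 2, 2))
A[0, 0, 0], A[1, 1, 1] = 4.0, 6.0
A[0, 1, 1], A[1, 0, 0] = 1.0, 1.0

intervals = gershgorin_intervals(A)
```

For this sample the point z = 3 lies in the first interval of Lemma 2.1 (I) but in no \(\mathcal {K}_{i,j}(\mathcal {A})\), so the set of Lemma 2.1 (II) is strictly smaller here.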

3 H-eigenvalue Inclusion Sets of Sparse Tensors

In this section, we establish two tight H-eigenvalue inclusion sets of a sparse tensor by its majorization matrix’s digraph and representation matrix’s digraph, which can reduce calculations and improve H-eigenvalue inclusion sets in [8, 11].

Theorem 3.1

Let \(\mathcal {A}\) be an m-order n-dimensional tensor with \(\Gamma _{\mathcal {G}(|\mathcal {A}|)}(i)=\{i:\exists ~j\in N~ \text{ such } \text{ that }~ g_{ij}\in E(\mathcal {G}(|\mathcal {A}|)) \}\ne \emptyset .\) Then,

$$\begin{aligned} \sigma (\mathcal {A})\subseteq \widetilde{\Theta }(\mathcal {A})=\bigcup _{g_{ij}\in E(\mathcal {G}(|\mathcal {A}|))}\Theta _{i,j}(\mathcal {A}), \end{aligned}$$

where \(\Theta _{i,j}(\mathcal {A})=\left\{ z\in \mathbb {R}:\left( |z-a_{i\cdots i}|-r_{i}^{\Delta _{i}}(\mathcal {A})\right) |z-a_{j\cdots j}|\le r_{i}^{\overline{\Delta }_{i}}(\mathcal {A})r_{j}(\mathcal {A})\right\} .\)

Proof

Let \((\lambda ,x)\) be an H-eigenpair of \(\mathcal {A},\) i.e.

$$\begin{aligned} \mathcal {A}x^{m-1}=\lambda x^{[m-1]}. \end{aligned} \tag{2}$$

Without loss of generality, we assume

$$\begin{aligned} |x_{t_{1}}|\ge |x_{t_{2}}|\ge \cdots \ge |x_{t_{n}}|. \end{aligned}$$

Since \(\Gamma _{\mathcal {G}(|\mathcal {A}|)}(t_{1})\ne \emptyset ,\) we set \(|x_{t_{s}}|= \max \{|x_{t_{i}}|: \sum \limits _{(i_{2},\cdots ,i_{m})\in \overline{\Delta }_{t_{1}}}\mid a_{t_{1}i_{2}\cdots i_{m}}\mid \ne 0, i\in N\},\) which means \(g_{t_{1}t_{s}}\in E(\mathcal {G}(|\mathcal {A}|)).\) In view of the \(t_1\)-th equation of (2), we deduce

equivalently,

We now break up the argument into two cases.

Case 1: \(|x_{t_{s}}|=0.\) Then, \(|\lambda -a_{t_{1}\cdots t_{1}}|-r_{t_{1}}^{\Delta _{t_{1}}}(\mathcal {A})\le 0\) and it is obvious that \(\lambda \in \Theta _{t_{1},t_{s}}(\mathcal {A})\subseteq \widetilde{\Theta }(\mathcal {A}).\)

Case 2: \(|x_{t_{s}}|>0.\) It follows from (2) and \(i=t_s\) that

Multiplying inequalities (3) and (4) gives

From \(|x_{t_{1}}|^{m-1}|x_{t_{s}}|^{m-1}>0,\) it holds that

which implies \(\lambda \in \Theta _{t_{1},t_{s}}(\mathcal {A})\subseteq \widetilde{\Theta }(\mathcal {A})\). \(\square \)

Corollary 3.1

Let \(\mathcal {A}\) be an m-order n-dimensional tensor. Then,

where \(\Theta _{i,j}(\mathcal {A})\) is defined in Theorem 3.1.

Proof

When \(\Gamma _{\mathcal {G}(|\mathcal {A}|)}(i)\ne \emptyset ,\) the results hold by Theorem 3.1. It remains to consider the case \(\Gamma _{\mathcal {G}(|\mathcal {A}|)}(i)=\emptyset .\) For any \(\epsilon >0\), set

where

Thus, \(\Gamma _{\mathcal {G}(|\mathcal {A}(\epsilon )|)}(i)\ne \emptyset .\) Following arguments similar to those in the proof of Theorem 3.1, we have

Letting \(\epsilon \rightarrow 0,\) we obtain

\(\square \)

Remark 3.1

Compared with Theorem 2.1 of [9], the result of Corollary 3.1 requires fewer computations and gives a tighter H-eigenvalue inclusion set, i.e., \(\widetilde{\widetilde{\Theta }}(\mathcal {A})\subseteq \Theta (\mathcal {A}).\) Indeed, \(\Gamma _{\mathcal {G}(|\mathcal {A}|)}(i)\ne \emptyset \) is a condition that is easy to verify and to meet.
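
The saving in computation comes from restricting attention to index pairs that are arcs of the digraph. As a rough illustration, and assuming that only pairs (i, j) with \(g_{ij}\in E(\mathcal {G}(|\mathcal {A}|))\) need to be examined (as the proof of Theorem 4.1 uses Theorem 3.1), the sketch below lists the arcs of a given matrix and checks that every vertex has an outgoing arc. The matrix `G` is made-up sample data and the function names are hypothetical.

```python
import numpy as np

def arcs(M):
    """Ordered pairs (i, j), i != j, with a nonzero entry M[i, j]."""
    n = M.shape[0]
    return [(i, j) for i in range(n) for j in range(n) if i != j and M[i, j] != 0]

def every_vertex_has_out_arc(M):
    """True if each vertex i is the tail of at least one arc."""
    return {i for i, _ in arcs(M)} == set(range(M.shape[0]))

# Assumed representation matrix of a sparse tensor with n = 4.
G = np.array([[2, 1, 0, 0],
              [0, 3, 1, 0],
              [0, 0, 1, 2],
              [4, 0, 0, 5]])

arc_list = arcs(G)
n = G.shape[0]
all_pairs = n * (n - 1)   # number of sets examined when all pairs i != j are used
```

Here only 4 of the 12 ordered pairs are arcs, so an arc-based union involves a third of the sets that a union over all pairs \(i\ne j\) would.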

Now, we arrive at the following H-eigenvalue inclusion sets for sparse tensors by their majorization matrix’s digraph.

Theorem 3.2

Let \(\mathcal {A}\) be an m-order n-dimensional tensor with \(\Gamma _{|\mathring{\mathcal {A}}|}(i)=\{i: \exists ~j\in N~\text{ such } \text{ that }~e_{ij}\in E(|\mathring{\mathcal {A}}|)\}\ne \emptyset .\) Then,

$$\begin{aligned} \sigma (\mathcal {A})\subseteq \varpi (\mathcal {A})=\bigcup _{e_{ij}\in E(|\mathring{\mathcal {A}}|)}\varpi _{i,j}(\mathcal {A}), \end{aligned}$$

where \(\varpi _{i,j}(\mathcal {A})=\left\{ z\in \mathbb {R}:\left( |z-a_{i\cdots i}|-r_{i}^{'}(\mathcal {A})\right) |z-a_{j\cdots j}|\le \widetilde{r}_{i}(\mathcal {A})r_{j}(\mathcal {A})\right\} , \widetilde{r}_{i}(\mathcal {A})=\sum \limits _{{\mathop {\delta _{i i{_2}\cdots i_{m}=0}}\limits ^{\delta _{i{_2}\cdots i_{m}=1}}}}|a_{i i{_2}\cdots i_{m}}|\) and \(r_{i}^{'}(\mathcal {A})=r_i(\mathcal {A})-\widetilde{r}_{i}(\mathcal {A}).\)

Proof

Let \((\lambda ,x)\) be an H-eigenpair of \(\mathcal {A}\). Without loss of generality, we assume that \(|x_{t_{1}}|\ge |x_{t_{2}}|\ge \cdots \ge |x_{t_{n}}|\). Since \(\Gamma _{|\mathring{\mathcal {A}}|}(i)\ne \emptyset ,\) there exists \(j\ne t_{1}\) with \(a_{t_{1}j\cdots j}\ne 0.\) Assume

which implies \(e_{t_{1}t_{s}}\in E(|\mathring{\mathcal {A}}|).\) Recalling the \(t_{1}\)-th equation of (2), we have

equivalently,

Next, we break up the argument into two cases.

Case 1: \(x_{t_{s}}=0.\) Then, \(|(\lambda -a_{t_{1}\cdots t_{1}})|-r_{t_{1}}^{'}(\mathcal {A})\le 0.\) Clearly, \(\lambda \in \varpi _{t_{1},t_{s}}(\mathcal {A}).\)

Case 2: \(x_{t_{s}}\ne 0.\) It follows from (2) and \(i=t_s\) that

Multiplying inequalities (5) and (6) gives

From \(|x_{t_{1}}|^{m-1}|x_{t_{s}}|^{m-1}>0\), it holds that

which implies \(\lambda \in \varpi _{t_{1},t_{s}}(\mathcal {A})\subseteq \varpi (\mathcal {A})\). \(\square \)

Following arguments similar to those in the proof of Corollary 3.1, we obtain the following conclusion.

Corollary 3.2

Let \(\mathcal {A}\) be an m-order n-dimensional tensor. Then,

Compared with Theorem 2.1 of [8], the result of Corollary 3.2 requires fewer calculations and gives more accurate results. A detailed investigation is given in Corollary 3.3.

Lemma 3.1

(Lemma 2.2 of [9])

-

(i)

Let \(a,b,c\ge 0\) and \(d>0.\) If \(\frac{a}{b+c+d}\le 1\), then

$$\begin{aligned} \frac{a-(b+c)}{d}\le \frac{a-b}{c+d} \le \frac{a}{b+c+d}. \end{aligned}$$

-

(ii)

Let \(a,b,c\ge 0\) and \(d>0.\) If \(\frac{a}{b+c+d}\ge 1,\) then

$$\begin{aligned} \frac{a-(b+c)}{d}\ge \frac{a-b}{c+d} \ge \frac{a}{b+c+d}. \end{aligned}$$
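
Lemma 3.1 can be sanity-checked with exact rational arithmetic. The sketch below is only a numerical spot check on randomly drawn integers, not a proof; the function names, the random seed and the sampling ranges are arbitrary choices made for illustration.

```python
from fractions import Fraction
import random

def lemma_chain(a, b, c, d):
    """The three quotients of Lemma 3.1, computed exactly (requires d > 0)."""
    left = Fraction(a - (b + c), d)
    middle = Fraction(a - b, c + d)
    right = Fraction(a, b + c + d)
    return left, middle, right

def check_lemma(a, b, c, d):
    """Check part (i) when a/(b+c+d) <= 1 and part (ii) when it is >= 1."""
    left, middle, right = lemma_chain(a, b, c, d)
    ok = True
    if right <= 1:
        ok = ok and (left <= middle <= right)
    if right >= 1:
        ok = ok and (left >= middle >= right)
    return ok

rng = random.Random(0)
all_ok = all(
    check_lemma(rng.randint(0, 20), rng.randint(0, 20),
                rng.randint(0, 20), rng.randint(1, 20))
    for _ in range(2000)
)
```

For instance, a = b = c = d = 1 gives the chain \(-1\le 0\le \frac{1}{3}\), an instance of part (i).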

Corollary 3.3

Let \(\mathcal {A}\) be an m-order n-dimensional tensor with \(n\ge 2.\) Then,

Proof

Let \(z\in \widehat{\varpi }(\mathcal {A})\). Then there exist \(p, q \in N\) with \(p\ne q\) such that \(z\in \varpi _{p,q}(\mathcal {A})\), i.e.

We now break up the argument into two cases.

Case 1: \(\widetilde{r}_{q}(\mathcal {A})r_{p}(\mathcal {A})=0,\) it holds that \(\widetilde{r}_{q}(\mathcal {A})=0\) or \(r_{p}(\mathcal {A})=0.\)

When \(\widetilde{r}_{q}(\mathcal {A})=0\), we have \(|a_{qp\cdots p}|=0\) and \(r_{q}^{p}(\mathcal {A})=r_{q}^{'}(\mathcal {A}),\)

which implies that \(z\in \mathcal {K}_{q,p}(\mathcal {A}).\) Consequently, \(\widehat{\varpi }(\mathcal {A})\subseteq \mathcal {K}(\mathcal {A}).\)

When \(r_{p}(\mathcal {A})=0,\) one has \(r_{q}^{p}(\mathcal {A})\ge r_{q}^{'}(\mathcal {A})\) and

which leads to \(z\in \mathcal {K}_{q,p}(\mathcal {A}).\) Certainly, \(\widehat{\varpi }(\mathcal {A})\subseteq \mathcal {K}(\mathcal {A}).\)

Case 2: \(\widetilde{r}_{q}(\mathcal {A})r_{p}(\mathcal {A})> 0,\) dividing both sides by \(\widetilde{r}_{q}(\mathcal {A})r_{p}(\mathcal {A})\) on (7), one has

which implies

or

Let \(a=|z-a_{q\cdots q}|,\) \(b=r_{q}^{'}(\mathcal {A}),\) \(c=\sum \limits _{{\mathop {\delta _{qi_{2}\cdots i_{m}=0}}\limits ^{i_{2}\cdots i_{m}=1}}}^{n}a_{qi_{2}\cdots i_{m}}-|a_{qp\cdots p}|\) and \(d=|a_{qp\cdots p}|.\)

If (9) holds with \(d=|a_{qp\cdots p}|> 0\), it follows from Lemma 3.1 and (8) that

equivalently,

This implies \(\widehat{\varpi }(\mathcal {A})\subseteq \mathcal {K}(\mathcal {A}).\)

If (9) holds with \(d=|a_{qp\cdots p}|=0,\) we obtain

Hence,

which shows \(\widehat{\varpi }(\mathcal {A})\subseteq \mathcal {K}(\mathcal {A}).\)

Otherwise, (10) holds. We only prove \(\widehat{\varpi }(\mathcal {A})\subseteq \mathcal {K}(\mathcal {A})\) under the case that

Owing to \(\widetilde{r}_{i}(\mathcal {A})=r_{i}(\mathcal {A})-r_{i}^{'}(\mathcal {A})\), from (11), we deduce

If \(d=|a_{pq\cdots q}|>0\), from Lemma 3.1 and (8), we have

equivalently,

This implies \(\widehat{\varpi }(\mathcal {A})\subseteq \mathcal {K}(\mathcal {A}).\)

If \(d=|a_{pq\cdots q}|=0,\) from Lemma 3.1 and (8), it holds that

Hence,

Consequently, \(\widehat{\varpi }(\mathcal {A})\subseteq \mathcal {K}(\mathcal {A}).\)

Based on the above two cases, we obtain the desired results. \(\square \)

To illustrate the validity of Theorems 3.1 and 3.2, we employ a running example.

Example 3.1

Let \(\mathcal {A}\) be a 3-order 4-dimensional tensor defined as follows:

Recalling Definition 2.2, we obtain

By virtue of Theorem 6 of [18], one has

From Theorem 2.1 of [8], we obtain Table 1 and

From Theorem 2.1 of [9], following the similar computations of \(\mathcal {K}_{i,j}(\mathcal {A}),\) we calculate 12 times \(\Theta _{i,j}(\mathcal {A})\) with \(i\ne j\in \{1,2,3,4\}\) and obtain

From representation matrix \(\mathcal {G}(|\mathcal {A}|)\), for any \(i\in \{1,2,3,4\},\) we verify \(\Gamma _{\mathcal {G}(|\mathcal {A}|)}(i)\ne \emptyset .\) In view of Theorem 3.1, we only compute Table 2 and

By majorization matrix \(|\mathring{\mathcal {A}}|,\) for any \(i\in \{1,2,3,4\},\) we observe \(\Gamma _{|\mathring{\mathcal {A}}|}(i)\ne \emptyset .\) It follows from Theorem 3.2 that

and

Tight bounds and simple computations are the advantages of the H-eigenvalue inclusion sets given by Theorems 3.1 and 3.2 over Theorem 2.1 of [8] and Theorem 2.1 of [9]. In general, the conclusions of Theorems 3.1 and 3.2 each have their own benefits. In Example 3.1, the conclusion of Theorem 3.1 is more precise than that of Theorem 3.2. The following example shows the converse.

Example 3.2

Let \(\mathcal {A}\) be a 3-order 4-dimensional tensor defined as follows:

We can obtain

From representation matrix \(\mathcal {G}(|\mathcal {A}|)\), for any \(i\in \{1,2,3,4\},\) we know \(\Gamma _{\mathcal {G}(|\mathcal {A}|)}(i)\ne \emptyset .\) In view of Theorem 3.1, we compute Table 4 and

By majorization matrix \(|\mathring{\mathcal {A}}|,\) for any \(i\in \{1,2,3,4\},\) we observe \(\Gamma _{|\mathring{\mathcal {A}}|}(i)\ne \emptyset .\) It follows from Theorem 3.2 that

and

4 Applications

4.1 Testing Positive Definiteness of Even-Order Real Supersymmetric Sparse Tensors

This subsection focuses on testing whether an even-order real supersymmetric sparse tensor is positive definite, based on the principle that \(\mathcal {A}\) is positive definite if and only if all of its H-eigenvalues are positive [19]. To this end, we provide the following sufficient conditions for the positive definiteness of sparse tensors via the H-eigenvalue inclusion sets of Sect. 3.
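
As a baseline for how an inclusion set yields a positive definiteness test, consider the simplest set, \(\Upsilon (\mathcal {A})\) of Lemma 2.1 (I): if \(a_{i\cdots i}-r_i(\mathcal {A})>0\) for every i, then every H-eigenvalue is positive and an even-order real supersymmetric tensor is positive definite. The sketch below implements only this elementary check, which is weaker than the conditions stated in this subsection. It assumes the usual definition of \(r_i(\mathcal {A})\) as the off-diagonal absolute row sum, and the function name and sample tensor are hypothetical.

```python
import itertools
import numpy as np

def gershgorin_lower_bound(A):
    """min_i (a_{i...i} - r_i(A)), a lower bound for all H-eigenvalues."""
    n, m = A.shape[0], A.ndim
    absA = np.abs(A)
    bounds = []
    for i in range(n):
        diag = A[(i,) * m]
        r_i = absA[i].sum() - abs(diag)   # off-diagonal absolute row sum
        bounds.append(diag - r_i)
    return float(min(bounds))

# Assumed sample: a supersymmetric 4-order 2-dimensional tensor.
A = np.zeros((2, 2, 2, 2))
A[0, 0, 0, 0] = 3.0
A[1, 1, 1, 1] = 3.0
for idx in set(itertools.permutations((0, 0, 1, 1))):
    A[idx] = 0.25

lower = gershgorin_lower_bound(A)
is_pd_by_gershgorin = lower > 0
```

A positive lower bound certifies positive definiteness; a nonpositive one is inconclusive, which is where sharper inclusion sets become useful.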

Theorem 4.1

Let \(\mathcal {A}\) be an even m-order n-dimensional supersymmetric tensor with \(\Gamma _{\mathcal {G}(|\mathcal {A}|)}(i)\ne \emptyset .\) If \(a_{i\cdots i}>0\) for all \(i\in N\) and every \((i,j)\in \{(k,l): g_{kl} \in E(\mathcal {G}(|\mathcal {A}|)), l\ne k\}\) satisfies

then \(\mathcal {A}\) is positive definite.

Proof

Let \(\lambda \) be an H-eigenvalue of \(\mathcal {A}\). Suppose on the contrary that \(\lambda \le 0.\) It follows from Theorem 3.1 that there is a \(g_{i_{0}j_{0}}\in E(\mathcal {G}(|\mathcal {A}|)) \) such that \(\lambda \in \Theta _{i_{0},j_{0}}(\mathcal {A}),\) that is,

From \(a_{i\cdots i}>0\) and \(a_{j\cdots j}>0,\) we have

This is a contradiction. Hence, \(\lambda > 0\) and \(\mathcal {A}\) is positive definite. \(\square \)

Corollary 4.1

Let \(\mathcal {A}\) be an even m-order n-dimensional supersymmetric tensor. If \(a_{i\cdots i}>0\) for all \(i\in N\) and every \((i,j) \in \{(k,l): {g_{kl}\in E(\mathcal {G}(|\mathcal {A}|))\bigcup {l-k=1~\text {or}~1-n}}, l\ne k\}\) satisfies

then \(\mathcal {A}\) is positive definite.

Proof

Following the proof of Theorem 4.1, we obtain the desired conclusions. \(\square \)

Theorem 4.2

Let \(\mathcal {A}\) be an even m-order n-dimensional supersymmetric tensor with \(\Gamma _{|\mathring{\mathcal {A}}|}(i)\ne \emptyset .\) If \(a_{i\cdots i}>0\) for all \(i\in N\) and every \((i,j) \in \{(k,l): e_{kl}\in E(|\mathring{\mathcal {A}}|), l\ne k\}\) satisfies

then \(\mathcal {A}\) is positive definite.

Proof

Let \(\lambda \) be an H-eigenvalue of \(\mathcal {A}\). Suppose on the contrary that \(\lambda \le 0.\) It follows from Theorem 3.2 that there is a \(e_{i_{0}j_{0}}\in E(|\mathring{\mathcal {A}}|) \) such that \(\lambda \in \varpi _{i_{0},j_{0}}(\mathcal {A}),\) that is

Since \(a_{i\cdots i}>0\) and \(a_{j\cdots j}>0,\) it holds that

which is a contradiction. Therefore, \(\lambda > 0\) and \(\mathcal {A}\) is positive definite. \(\square \)

By virtue of Theorem 4.2 and Corollary 3.2, we obtain the following conclusion.

Corollary 4.2

Let \(\mathcal {A}\) be an even m-order n-dimensional supersymmetric tensor. If \(a_{i\cdots i}>0\) for all \(i\in N\) and every \((i,j) \in \{(k,l): e_{kl}\in E(|\mathring{\mathcal {A}}|)\bigcup {l-k=1~\text {or}~1-n},~l\ne k\}\) satisfies

then \(\mathcal {A}\) is positive definite.

The following example shows that our results can correctly identify the positive definiteness of an even-order real supersymmetric sparse tensor.

Example 4.1

Let \(\mathcal {A}\) be a 4-order 4-dimensional symmetric tensor defined as follows:

The minimum H-eigenvalue is 1.000. Hence, \(\mathcal {A}\) is positive definite. We verify

According to Theorem 4.1, we compute Table 6 and

which shows that \(\mathcal {A}\) is positive definite.

In view of Corollary 4.2, we compute Table 7 and

Therefore, \(\mathcal {A}\) is positive definite.

However, from Theorem 4.1 of [9], we obtain

which implies that Theorem 4.1 of [9] cannot identify the positive definiteness of \(\mathcal {A}.\)

Similarly, from Theorem 4.2 of [8], we obtain

which implies that Theorem 4.2 of [8] is not suitable for testing the positive definiteness of \(\mathcal {A}.\)

4.2 Bounds for the H-Spectral Radius of Nonnegative Sparse Tensors

We give bounds for the H-spectral radius of a nonnegative sparse tensor via the H-eigenvalue inclusion theorems in Sect. 3. We start this subsection with some fundamental results on nonnegative tensors.

Lemma 4.1

(Lemma 3.2 of [8]) Let \(\mathcal {A}\) be a nonnegative tensor with order m and dimension \(n\ge 2\). Then,

Lemma 4.2

(Theorem 4.1 of [5]) Let \(\mathcal {A}\) be an m-order n-dimensional weakly irreducible nonnegative tensor. Then there exists a unique x such that \((\rho (\mathcal {A}), x)\) is a positive eigenpair.

Theorem 4.3

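Let \(\mathcal {A}\) be an m-order n-dimensional nonnegative tensor with \(\Gamma _{\mathcal {G}(\mathcal {A})}(i)\ne \emptyset \) and \(n\ge 2.\) Then

$$\begin{aligned} \min \limits _{{g_{ij}\in E(\mathcal {G}(\mathcal {A}))\bigcup {j-i=1,1-n}}}\Psi _{i,j}(\mathcal {A})\le \rho (\mathcal {A})\le \max \limits _{{g_{ij}\in E(\mathcal {G}(\mathcal {A}))}}\Psi _{i,j}(\mathcal {A}), \end{aligned}$$

where

$$\begin{aligned} \Psi _{i,j}(\mathcal {A})=\frac{1}{2}\left\{ a_{i\cdots i}+a_{j\cdots j}+r_{i}^{\Delta _{i}}(\mathcal {A})+\sqrt{\left( a_{i\cdots i}-a_{j\cdots j}+r_{i}^{\Delta _{i}}(\mathcal {A})\right) ^{2}+4r_{i}^{\overline{\Delta }_{i}}(\mathcal {A})r_{j}(\mathcal {A})}\right\} . \end{aligned}$$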

Proof

Let \(\rho (\mathcal {A})\) be the H-spectral radius of \(\mathcal {A}.\) Since \(\mathcal {A}\) is nonnegative, \(\rho (\mathcal {A})\) is an H-eigenvalue of \(\mathcal {A}\), and \(\rho (\mathcal {A})\in \widetilde{\Theta }(\mathcal {A})\) by Theorem 3.1, that is,

-

(i)

We show \(\rho (\mathcal {A})\le \max \limits _{{g_{ij}\in E(\mathcal {G}(\mathcal {A}))}}\Psi _{i,j}(\mathcal {A}).\) Referring to \(i=t_{1},\) \(j=t_{s}\) of (12), one has

$$\begin{aligned} \left( |\rho (\mathcal {A})-a_{t_{1}\cdots t_{1}}|-r_{t_{1}}^{\Delta _{t_{1}}}(\mathcal {A})\right) |\rho (\mathcal {A})-a_{t_{s}\cdots t_{s}}|\le r_{t_{1}}^{\overline{\Delta }_{t_{1}}}(\mathcal {A})r_{t_{s}}(\mathcal {A}). \end{aligned}$$

This together with \(\rho (\mathcal {A})\ge a_{i\cdots i}\) yields

$$\begin{aligned} \left( \rho (\mathcal {A})-a_{t_{1}\cdots t_{1}}-r_{t_{1}}^{\Delta _{t_{1}}}(\mathcal {A})\right) (\rho (\mathcal {A})-a_{t_{s}\cdots t_{s}})\le r_{t_{1}}^{\overline{\Delta }_{t_{1}}}(\mathcal {A})r_{t_{s}}(\mathcal {A}), \end{aligned}$$

equivalently,

$$\begin{aligned} \rho (\mathcal {A})^{2}&-\left( a_{t_{1}\cdots t_{1}}+a_{t_{s}\cdots t_{s}}+r_{t_{1}}^{\Delta _{t_{1}}}(\mathcal {A})\right) \rho (\mathcal {A})+a_{t_{s}\cdots t_{s}}\left( a_{t_{1}\cdots t_{1}}+r_{t_{1}}^{\Delta _{t_{1}}}(\mathcal {A})\right) \\&-r_{t_{1}}^{\overline{\Delta }_{t_{1}}}(\mathcal {A})r_{t_{s}}(\mathcal {A})\le 0. \end{aligned}$$

Solving for \(\rho (\mathcal {A})\) gives

$$\begin{aligned} \rho (\mathcal {A})\le {}&\frac{1}{2}\left\{ a_{t_{1}\cdots t_{1}}+a_{t_{s}\cdots t_{s}}+r_{t_{1}}^{\Delta _{t_{1}}}(\mathcal {A})\right. \\&+\left. \sqrt{\left( a_{t_{1}\cdots t_{1}}-a_{t_{s}\cdots t_{s}}+r_{t_{1}}^{\Delta _{t_{1}}}(\mathcal {A})\right) ^{2}+4r_{t_{1}}^{\overline{\Delta }_{t_{1}}}(\mathcal {A})r_{t_{s}}(\mathcal {A})}\right\} , \end{aligned}$$

which implies

$$\begin{aligned} \rho (\mathcal {A})\le \Psi _{t_{1},t_{s}}(\mathcal {A}) \le \max \limits _{{g_{ij}\in E(\mathcal {G}(\mathcal {A}))}}\Psi _{i,j}(\mathcal {A}). \end{aligned}$$

-

(ii)

We prove \(\min \limits _{{g_{ij}\in E(\mathcal {G}(\mathcal {A}))\bigcup {j-i=1,1-n}}}\Psi _{i,j}(\mathcal {A})\le \rho (\mathcal {A}).\) Let \(x=(x_{1},x_{2},\cdots ,x_{n})^{\top }\) be an H-eigenvector of \(\mathcal {A}\) corresponding to \(\rho (\mathcal {A}).\) We break up the argument into two cases.

Case 1: \(\mathcal {G}(\mathcal {A})\) is irreducible. Then, \(\mathcal {A}\) is weakly irreducible. Therefore, \(x=(x_{1},x_{2},\cdots ,x_{n})^{\top }\) is a positive vector by Lemma 4.2. Suppose

$$\begin{aligned} 0<x_{t_{n}}\le x_{t_{r}}=\min \{x_{t_{j}}: \sum \limits _{(i_{2},\cdots ,i_{m})\in \overline{\Delta }_{t_{n}}}a_{t_{n}i_{2}\cdots i_{m}}\ne 0, j\in N\}. \end{aligned}$$

In view of the \(t_n\)-th equation of (2), we deduce

$$\begin{aligned} (\rho (\mathcal {A})-a_{t_{n}\cdots t_{n}})x_{t_{n}}^{m-1}&=\sum \limits _{\substack{(i_{2},\cdots ,i_{m})\in \Delta _{t_{n}}\\ \delta _{t_{n}i_{2}\cdots i_{m}}=0}}a_{t_{n}i_{2}\cdots i_{m}}x_{i_{2}}\cdots x_{i_{m}}+\sum \limits _{(i_{2},\cdots ,i_{m})\in \overline{\Delta }_{t_{n}}}a_{t_{n}i_{2}\cdots i_{m}}x_{i_{2}}\cdots x_{i_{m}}\\&\ge \sum \limits _{\substack{(i_{2},\cdots ,i_{m})\in \Delta _{t_{n}}\\ \delta _{t_{n}i_{2}\cdots i_{m}}=0}}a_{t_{n}i_{2}\cdots i_{m}}x_{t_{n}}^{m-1}+\sum \limits _{(i_{2},\cdots ,i_{m})\in \overline{\Delta }_{t_{n}}}a_{t_{n}i_{2}\cdots i_{m}}x_{t_{r}}^{m-1}\\&= r_{t_{n}}^{\Delta _{t_{n}}}(\mathcal {A})x_{t_{n}}^{m-1}+r_{t_{n}}^{\overline{\Delta }_{t_{n}}}(\mathcal {A})x_{t_{r}}^{m-1}, \end{aligned}$$

equivalently,

$$\begin{aligned} \left( \rho (\mathcal {A})-a_{t_{n}\cdots t_{n}}-r_{t_{n}}^{\Delta _{t_{n}}}(\mathcal {A})\right) x_{t_{n}}^{m-1} \ge r_{t_{n}}^{\overline{\Delta }_{t_{n}}}(\mathcal {A})x_{t_{r}}^{m-1}\ge 0. \end{aligned} \tag{13}$$

Referring to the \(t_{r}\)-th equation of (2), we have

$$\begin{aligned} (\rho (\mathcal {A})-a_{t_{r}\cdots t_{r}})x_{t_{r}}^{m-1}=\sum \limits _{\delta _{t_{r} i_{2}\cdots i_{m}}=0}a_{t_{r}i_{2}\cdots i_{m}}x_{i_{2}}\cdots x_{i_{m}} \ge r_{t_{r}}(\mathcal {A})x_{t_{n}}^{m-1}\ge 0. \end{aligned} \tag{14}$$

Multiplying (13) and (14) yields

$$\begin{aligned} \left( \rho (\mathcal {A})-a_{t_{n}\cdots t_{n}}-r_{t_{n}}^{\Delta _{t_{n}}}(\mathcal {A})\right) (\rho (\mathcal {A})-a_{t_{r}\cdots t_{r}})x_{t_{n}}^{m-1}x_{t_{r}}^{m-1}\ge r_{t_{n}}^{\overline{\Delta }_{t_{n}}}(\mathcal {A})r_{t_{r}}(\mathcal {A})x_{t_{n}}^{m-1}x_{t_{r}}^{m-1}. \end{aligned}$$

By virtue of \(x_{t_{r}}\ge x_{t_{n}}>0,\) we obtain

$$\begin{aligned} \left( \rho (\mathcal {A})-a_{t_{n}\cdots t_{n}}-r_{t_{n}}^{\Delta _{t_{n}}}(\mathcal {A})\right) (\rho (\mathcal {A})-a_{t_{r}\cdots t_{r}})\ge r_{t_{n}}^{\overline{\Delta }_{t_{n}}}(\mathcal {A})r_{t_{r}}(\mathcal {A}). \end{aligned}$$

Solving for \(\rho (\mathcal {A})\) gives

$$\begin{aligned} \rho (\mathcal {A})\ge {}&\frac{1}{2}\left\{ a_{t_{n}\cdots t_{n}}+a_{t_{r}\cdots t_{r}}+r_{t_{n}}^{\Delta _{t_{n}}}(\mathcal {A})\right. \\&\left. +\sqrt{\left( a_{t_{n}\cdots t_{n}}-a_{t_{r}\cdots t_{r}}+r_{t_{n}}^{\Delta _{t_{n}}}(\mathcal {A})\right) ^{2}+4r_{t_{n}}^{\overline{\Delta }_{t_{n}}}(\mathcal {A})r_{t_{r}}(\mathcal {A})}\right\} , \end{aligned}$$

which shows

$$\begin{aligned} \rho (\mathcal {A})\ge \Psi _{t_{n},t_{r}}(\mathcal {A})\ge \min \limits _{{g_{ij}\in E(\mathcal {G}(\mathcal {A}))}}\Psi _{i,j}(\mathcal {A}). \end{aligned}$$

Case 2: \(\mathcal {G}(\mathcal {A})\) is reducible. For any \(\epsilon >0,\) set

$$\begin{aligned} \mathcal {A}(\epsilon )=\mathcal {A}+\Phi (\epsilon )\quad \text {and} \quad \Phi (\epsilon )=(\theta _{i_1 \cdots i_m}), \end{aligned}$$

where

$$\begin{aligned} \theta _{i_1 \cdots i_m}=\left\{ \begin{array}{ll} \theta _{i j\cdots j} = \epsilon , &\text {if } j-i=1 \text { or } 1-n,\\ 0, &\text {otherwise}. \end{array}\right. \end{aligned}$$

Thus, \(\mathcal {A}(\epsilon )\) is irreducible. Following an argument similar to that of Case 1, we have

$$\begin{aligned} \min \limits _{g_{ij}\in E(\mathcal {G}(\mathcal {A}))}\Psi _{i,j}(\mathcal {A}(\epsilon ))\le \rho (\mathcal {A}(\epsilon )). \end{aligned}$$

Letting \(\epsilon \rightarrow 0,\) we obtain

$$\begin{aligned} \min \limits _{{g_{ij}\in E(\mathcal {G}(\mathcal {A}))}\bigcup {j-i=1,1-n}}\Psi _{i,j}(\mathcal {A})\le \rho (\mathcal {A}). \end{aligned}$$

Combining Cases 1 and 2, we obtain the desired results.

\(\square \)
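
The step "solving for \(\rho (\mathcal {A})\)" in the proof amounts to taking the larger root of a quadratic. The sketch below evaluates that root from the closed form appearing in the proof and checks it against the quadratic itself. The five arguments stand for \(a_{i\cdots i}\), \(a_{j\cdots j}\), \(r_{i}^{\Delta _{i}}(\mathcal {A})\), \(r_{i}^{\overline{\Delta }_{i}}(\mathcal {A})\) and \(r_{j}(\mathcal {A})\), which are assumed to be precomputed; the function names and the numerical values are made up for illustration.

```python
import math

def psi(a_ii, a_jj, r_delta, r_bar, r_j):
    """Larger root of (t - a_ii - r_delta)(t - a_jj) = r_bar * r_j."""
    s = a_ii + a_jj + r_delta
    disc = (a_ii - a_jj + r_delta) ** 2 + 4.0 * r_bar * r_j
    return 0.5 * (s + math.sqrt(disc))

def quadratic(t, a_ii, a_jj, r_delta, r_bar, r_j):
    """Left side minus right side of the inequality that is solved for rho."""
    return (t - a_ii - r_delta) * (t - a_jj) - r_bar * r_j

# Assumed values chosen so that the discriminant is a perfect square.
params = (1.0, 1.0, 0.0, 2.0, 2.0)
root = psi(*params)
value_at_root = quadratic(root, *params)
```

Since the quadratic is nonpositive exactly between its two roots, any value satisfying the "less than or equal" inequality of part (i) cannot exceed `psi`, and any value at least \(a_{i\cdots i}+r_{i}^{\Delta _{i}}(\mathcal {A})\) and \(a_{j\cdots j}\) that satisfies the reversed inequality of part (ii) cannot be smaller than it.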

When the condition \(\Gamma _{\mathcal {G}(\mathcal {A})}(i)\ne \emptyset \) is replaced by weak irreducibility of \(\mathcal {A},\) we obtain a sharper conclusion.

Corollary 4.3

Let \(\mathcal {A}\) be an m-order n-dimensional weakly irreducible nonnegative tensor. Then,

When the condition \(\Gamma _{\mathcal {G}(\mathcal {A})}(i)\ne \emptyset \) is omitted, we obtain the following general result.

Corollary 4.4

Let \(\mathcal {A}\) be an m-order n-dimensional nonnegative tensor. Then,

Based on Theorem 3.2, we can establish the following conclusions.

Theorem 4.4

Let \(\mathcal {A}\) be an m-order n-dimensional nonnegative tensor with \(\Gamma _{\mathring{\mathcal {A}}}(i)\ne \emptyset \) and \(n\ge 2.\) Then,

where

Remark 4.1

Compared with Theorem 2.1 of [13], the result of Theorem 4.4 is sharp under the condition \(\Gamma _{\mathring{\mathcal {A}}}(i)\ne \emptyset .\) Whereas Corollary 4 of [13] assumes irreducibility of \(\mathcal {A},\) we deduce the following results under weak irreducibility of \(\mathcal {A}.\)

Corollary 4.5

Let \(\mathcal {A}\) be an m-order n-dimensional nonnegative tensor. Then,

In what follows, we test the efficiency of the obtained results.

Example 4.2

Let \(\mathcal {A}\) be a 3-order 4-dimensional nonnegative tensor defined as follows:

We compute \(\rho (\mathcal {A})=7.112\) and identify

By Lemma 5.2 of [25], one has

From Theorem 3.3 of [10], we obtain Table 8

and

From Theorem 5 of [11], following computations similar to those of \(\Omega _{i,j}(\mathcal {A}),\) we obtain

We observe \(\mathring{\mathcal {A}}\) is reducible. It follows from Theorem 2.1 of [13] that

i.e.,

From representation matrix \(\mathcal {G}(\mathcal {A})\), we know that \(\mathcal {A}\) is weakly irreducible. Based on Corollary 4.3, we obtain Table 10 and

By majorization matrix \(\mathring{\mathcal {A}}\), for any \(i\in \{1,2,3,4\},\) we observe \(\Gamma _{\mathring{\mathcal {A}}}(i)\ne \emptyset .\) Recalling Theorem 4.4, we calculate Table 11 and

It is easy to see that the bounds in Corollary 4.3 and Theorem 4.4 are sharper than those of Lemma 5.2 in [25], Theorem 3.3 in [10], Theorem 5 in [11] and Theorem 2.1 of [13].

5 Conclusion

In this paper, we established improved H-eigenvalue inclusion sets for a sparse tensor via the digraphs of its majorization matrix and representation matrix; these sets give tighter bounds at lower computational cost. In addition, two sufficient conditions were proposed for checking the positive definiteness of an even-order real supersymmetric sparse tensor. Future work may develop algorithms for image restoration from sparse tensor data based on the improved H-eigenvalue inclusion sets.

Data Availability

All relevant data are within the paper.

References

Bloy, L., Verma, R.: On computing the underlying fiber directions from the diffusion orientation distribution function. In: Medical Image Computing and Computer-Assisted Intervention, pp. 1–8. Springer, Berlin (2008)

Chang, J., Chen, Y., Qi, L.: Computing eigenvalues of large scale sparse tensors arising from a hypergraph. SIAM J. Sci. Comput. 38(6), 3618–3643 (2016)

Chang, K., Pearson, K., Zhang, T.: Perron-Frobenius theorem for nonnegative tensors. Commun. Math. Sci. 6(2), 507–520 (2008)

Cui, J., Peng, G., Lu, Q., Huang, Z.: Several new estimates of the minimum \(H\)-eigenvalue for nonsingular \(M\)-tensors. Bull. Malays. Math. Sci. Soc. 42(3), 1213–1236 (2019)

Friedland, S., Gaubert, S., Han, L.: Perron-Frobenius theorem for nonnegative multilinear forms and extensions. Linear Algebra Appl. 438(2), 738–749 (2013)

He, J., Liu, Y., Xu, G.: \(Z\)-eigenvalues-based sufficient conditions for the positive definiteness of fourth-order tensors. Bull. Malays. Math. Sci. Soc. 43(2), 1069–1093 (2020)

Hu, S., Huang, Z., Qi, L.: Strictly nonnegative tensors and nonnegative tensor partition. Sci. China Math. 57(1), 181–195 (2014)

Li, C., Li, Y., Kong, X.: New eigenvalue inclusion sets for tensors. Numer. Linear Algebra Appl. 21(1), 39–50 (2014)

Li, C., Li, Y.: An eigenvalue localization set for tensors with applications to determine the positive (semi-) definiteness of tensors. Linear Multilinear Algebra 64(4), 587–601 (2016)

Li, C., Wang, Y., Yi, J., Li, Y.: Bounds for the spectral radius of nonnegative tensors. J. Ind. Manag. Optim. 12(3), 975–990 (2016)

Li, L., Li, C.: New bounds for the spectral radius for nonnegative tensors. J. Inequal. Appl. 2015, 166 (2015)

Lim, L.H.: Singular values and eigenvalues of tensors: a variational approach. In: Proceedings of the IEEE International Workshop on Computational Advances in Multi-Sensor Adaptive Processing, pp. 129–132 (2005)

Liu, G., Lv, H.: Bounds for spectral radius of nonnegative tensors using matrix-digragh-based approach. J. Ind. Manag. Optim. 19(1), 105–116 (2023)

Ng, M., Qi, L., Zhou, G.: Finding the largest eigenvalue of a nonnegative tensor. SIAM J. Matrix Anal. Appl. 31(3), 1090–1099 (2009)

Ni, Q., Qi, L., Wang, F.: An eigenvalue method for testing the positive definiteness of a multivariate form. IEEE Trans. Automat. Contr. 53(5), 1096–1107 (2008)

Pearson, K.: Essentially positive tensors. Int. J. Algebra 4(9), 421–427 (2010)

Pearson, K., Zhang, T.: On spectral hypergraph theory of the adjacency tensor. Graphs Comb. 30(5), 1233–1248 (2014)

Qi, L.: Eigenvalues of a real supersymmetric tensor. J. Symb. Comput. 40(6), 1302–1324 (2005)

Qi, L., Yu, G., Wu, E.: Higher order positive semi-definite diffusion tensor imaging. SIAM J. Imaging Sci. 3(3), 416–433 (2010)

Qi, L., Luo, Z.: Tensor Analysis: Spectral Theory and Special Tensors. SIAM, Philadelphia (2017)

Sidiropoulos, N., Kyrillidis, A.: Multi-way compressed sensing for sparse low-rank tensors. IEEE Signal Proc. Lett. 11(2), 403–418 (2014)

Sun, L., Wang, G., Liu, L.: Further study on \(Z\)-eigenvalue localization set and positive definiteness of fourth-order tensors. Bull. Malays. Math. Sci. Soc. 44(1), 1685–1707 (2021)

Wang, G., Zhou, G., Caccetta, L.: \(Z\)-eigenvalue inclusion theorems for tensors. Discrete Contin. Dyn. Syst. Ser. B. 22(1), 187–198 (2017)

Wang, G., Wang, Y., Wang, Y.: Some Ostrowski-type bound estimations of spectral radius for weakly irreducible nonnegative tensors. Linear Multilinear Algebra 68(9), 1817–1834 (2020)

Yang, Y., Yang, Q.: Further results for Perron-Frobenius theorem for nonnegative tensors. SIAM J. Matrix Anal. Appl. 31(5), 2517–2530 (2010)

Zeng, J., Wang, C.: Sparse tensor model-based spectral angle detector for hyperspectral target detection. IEEE Trans. Geosci. Remote 60, 1–15 (2022)

Zhang, X., Ng, M.: Sparse nonnegative tensor factorization and completion with noisy observations. IEEE Trans. Inform. Theory 68(4), 2551–2572 (2022)

Zhao, J., Sang, C.: Two new eigenvalue localization sets for tensors and theirs applications. Open Math. 15, 1267–1276 (2017)

Zhao, J.: \(E\)-eigenvalue localization sets for fourth-order tensors. Bull. Malays. Math. Sci. Soc. 43(2), 1685–1707 (2020)

Acknowledgements

This work was supported by the Natural Science Foundation of China (12071250) and the Natural Science Foundation of Shandong Province (ZR2020MA025).

Ethics declarations

Conflict of interest

The authors declare that they have no competing interests.

Additional information

Communicated by Fuad Kittaneh.

About this article

Cite this article

Wang, G., Feng, X. H-eigenvalue Inclusion Sets for Sparse Tensors. Bull. Malays. Math. Sci. Soc. 46, 164 (2023). https://doi.org/10.1007/s40840-023-01560-9

DOI: https://doi.org/10.1007/s40840-023-01560-9