Abstract

In this paper, we consider two kinds of nonlinear matrix equations \(X+ \sum _{i=1}^{m}B_{i}^*X^{t_{i}}B_{i}=I\;(0<t_{i}<1)\) and \(X^{s}-\sum _{i=1}^{m}A_{i}^*X^{p_{i}}A_{i}=I\;(p_{i}>1,\;s\ge 1)\). By means of the integral representation of matrix functions, properties of the Kronecker product and the monotonic p-concave operator fixed point theorem, we derive necessary conditions and sufficient conditions for the existence and uniqueness of the Hermitian positive definite solution of these matrix equations. We also obtain some properties of the Hermitian positive definite solutions, as well as bounds for the sum of the determinants of \(A_{i}^{*}A_{i}\) and for the spectral radius of \(A_{i}\).


1 Introduction

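In this paper, we consider the Hermitian positive definite (HPD) solutions of the nonlinear matrix equations

$$\begin{aligned} X+\sum _{i=1}^{m}B_{i}^*X^{t_{i}}B_{i}=I\quad (0<t_{i}<1) \qquad (1.1) \end{aligned}$$

and

$$\begin{aligned} X^{s}-\sum _{i=1}^{m}A_{i}^*X^{p_{i}}A_{i}=I\quad (p_{i}>1,\;s\ge 1), \qquad (1.2) \end{aligned}$$

where \(A_{i}, B_{i} \;(i=1, 2, \ldots , m) \) are \(n\times n\) matrices, I is the \(n\times n\) identity matrix and m is a positive integer. Here, \(A_{i}^{*}\) denotes the conjugate transpose of the matrix \(A_{i}\).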

Nonlinear matrix equations of the forms (1.1) and (1.2) with \(m=s=1\) arise in many fields, such as nano research, ladder networks, dynamic programming, control theory, stochastic filtering and statistics; see [1,2,3,4,5,6,7,8] and the references therein.

In the last few years, (1.1) and (1.2) have been investigated in some special cases. For (1.1) with \(m=1\), Zhang et al. [9] considered the existence of HPD solutions and an iterative method. Gao and Zhang [10] studied HPD solutions of \(X-A^{*}X^{q}A=Q\;(q>0)\). For (1.2) with \(s=1\) and \(0<|p_{i}|<1\), there have been several contributions to the solvability, numerical solutions and perturbation analysis [11,12,13,14,15]. Duan et al. [11] obtained the existence of a unique HPD solution by fixed point theorems for monotone and mixed monotone operators in a normal cone. Lim [12] derived the existence of a unique HPD solution by using a strict contraction for the Thompson metric on the open convex cone of positive definite matrices. Duan et al. [13], Li [14] and Li and Zhang [15] discussed perturbation analysis for the HPD solution of this matrix equation.

In addition, the related matrix equations \(X^{s}\pm A^*\mathcal {F}(X)A=Q\) [16,17,18,19,20,21,22], \(X-\sum _{i=1}^{m}f(\Phi _{i}(X))=Q\) [23] and \(X^{s}\pm \sum _{i=1}^{m}A_{i}^{*}X^{-t_{i}}A_{i}=Q\;(t_{i}>0)\) [24,25,26,27,28,29,30,31,32,33] have been studied by several authors. However, (1.1) and (1.2) have not yet been thoroughly studied, either qualitatively or quantitatively. Motivated by this, this paper focuses on the solvability of (1.1) and (1.2), using the integral representation of matrix functions, the properties of the Kronecker product and the monotonic p-concave operator fixed point theorem.

The rest of this paper is organized as follows. In Sect. 2, we give some preliminary lemmas that will be needed later. In Sect. 3, we discuss the existence and uniqueness of the HPD solution of (1.1). In Sect. 4, some properties of the HPD solutions of (1.2) are presented: we obtain bounds for the trace and the determinant of the HPD solutions, and bounds for the eigenvalues and the determinant of \(A_{i}^{*}A_{i}\). Finally, in the case \(s>p_{i}>1\), we use the monotonic p-concave operator fixed point theorem (proposed in [41]) to obtain a sufficient condition for the existence of a unique HPD solution.

The following notation is used throughout this paper. We denote by \(\mathcal {C}^{n\times n}\), \(\mathcal {H}^{n\times n}\) and \(\mathcal {U}^{n\times n}\) the sets of all \(n\times n\) complex matrices, Hermitian matrices and unitary matrices, respectively. For \(A=(a_{1},\dots , a_{n})=(a_{ij})\in \mathcal {C}^{n\times n}\) and a matrix B, \(A\otimes B=(a_{ij}B)\) denotes the Kronecker product, and \(\mathrm {vec}A=(a_{1}^{T},\dots , a_{n}^{T})^{T}\) is the vector obtained by stacking the columns of A. The symbol \(\Vert \cdot \Vert \) stands for the spectral norm and \(\Vert \cdot \Vert _{F}\) for the Frobenius norm. We denote by \(\lambda _i(M)\) the eigenvalues of M, by \(\det (M)\) the determinant of M, by \(\rho (M)\) the spectral radius of M, by \(\mathrm {tr}(M)\) the trace of M, and, for Hermitian M, by \(\lambda _{1}(M)\) and \(\lambda _{n}(M)\) the maximal and minimal eigenvalues of M, respectively. For \(X, Y\in \mathcal {H}^{n\times n}\), we write \(X\ge Y\) (\(X>Y\)) if \(X-Y\) is positive semi-definite (positive definite). For \(A, B\in \mathcal {H}^{n\times n}\), the sets [A, B] and (A, B] are defined by \([A, B]=\{X\in \mathcal {H}^{n\times n}\,|\,A\le X\le B\}\) and \((A, B]=\{X\in \mathcal {H}^{n\times n}\,|\,A< X\le B\}.\)

2 Preliminaries

In this section, we present some lemmas that will be needed in the sequel.

Lemma 2.1

[34]. Let A and B be positive operators on a Hilbert space H such that \(M_{1} I\ge A \ge m_{1} I>0\), \(M_{2} I\ge B \ge m_{2} I>0\) and \(B\ge A>0\). Then \(A^{t}\le (\frac{M_{1}}{m_{1}})^{t-1}B^{t}\) and \(A^{t}\le (\frac{M_{2}}{m_{2}})^{t-1}B^{t}\) hold for any \(t\ge 1\).

Lemma 2.2

[35]. If \(A\ge B>0\) and \(0\le \gamma \le 1,\) then \(A^{\gamma }\ge B^{\gamma }.\)

Lemma 2.3

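[36]. For every Hermitian positive definite matrix X and \(0<p<1\), we have

$$\begin{aligned} X^{-p}=\frac{\sin p\pi }{\pi }\int _{0}^{\infty }(\lambda I+X)^{-1}\lambda ^{-p}\,d\lambda . \qquad (2.1) \end{aligned}$$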
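Because X is Hermitian, (2.1) holds eigenvalue by eigenvalue, so its scalar form \(x^{-p}=\frac{\sin p\pi }{\pi }\int _{0}^{\infty }\frac{\lambda ^{-p}}{\lambda +x}\,d\lambda \) (\(x>0\), \(0<p<1\)) gives a quick sanity check. The Python sketch below is our own illustration rather than anything taken from [36]. The substitution \(\lambda =e^{u}\), the truncation of the real line and the step size `h` are assumptions made only for this check.

```python
import math


def neg_power_via_integral(x, p, h=0.1):
    """Approximate x**(-p) for x > 0 and 0 < p < 1 from the scalar form of (2.1):
        x**(-p) = sin(p*pi)/pi * integral_0^inf lam**(-p) / (lam + x) d(lam).
    With lam = e**u we have d(lam) = lam du, and the integrand becomes
    e**((1-p)u) / (e**u + x) on the whole real line. It decays like
    e**((1-p)u) as u -> -inf and like e**(-p*u) as u -> +inf, so the
    truncated trapezoidal rule converges very quickly."""
    half_width = 40.0 / min(p, 1.0 - p)   # both tails are below about e**(-40)
    n = int(2 * half_width / h)
    total = 0.0
    for k in range(n + 1):
        u = -half_width + k * h
        total += math.exp((1.0 - p) * u) / (math.exp(u) + x)
    return math.sin(p * math.pi) / math.pi * h * total


for x, p in [(2.0, 0.5), (0.3, 0.25), (5.0, 0.9)]:
    print(f"x={x}, p={p}: integral {neg_power_via_integral(x, p):.12f}, exact {x ** (-p):.12f}")
```

The exponential change of variables is chosen because it turns both the singularity at \(\lambda =0\) and the slow algebraic decay at infinity into exponential decay, for which the plain trapezoidal rule is already very accurate.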

Lemma 2.4

[37, Theorem 1.9.1]. Let \(A\in \mathcal {C}^{m\times n}, B\in \mathcal {C}^{p\times q}, C\in \mathcal {C}^{n\times k}, D\in \mathcal {C}^{q\times r}.\) Then

- (i) \((A\otimes B)(C\otimes D)=(AC)\otimes (BD);\)

- (ii) \((A\otimes B)^{*}=A^{*}\otimes B^{*}.\)

Lemma 2.5

[37, Lemma 1.9.1]. Let \(A\in \mathcal {C}^{l\times m}, X\in \mathcal {C}^{m\times n}, B\in \mathcal {C}^{n\times k}.\) Then

$$\begin{aligned} \mathrm {vec}(AXB)=(B^{T}\otimes A)\,\mathrm {vec}X. \end{aligned}$$
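The Kronecker identities in Lemma 2.4 (i) and Lemma 2.5 are easy to spot-check. In the minimal pure-Python sketch below (all helper names are ours), matrices are stored as lists of rows, `vec` stacks columns as in the notation of Sect. 1, and the hypothetical test matrices are integer-valued so that both comparisons are exact; each `print` should show `True`.

```python
def matmul(P, Q):
    """Product of two matrices stored as lists of rows."""
    return [[sum(P[i][k] * Q[k][j] for k in range(len(Q))) for j in range(len(Q[0]))]
            for i in range(len(P))]


def transpose(P):
    return [list(row) for row in zip(*P)]


def kron(P, Q):
    """Kronecker product P ⊗ Q = (p_ij Q): row (i, k), column (j, l) holds p_ij * q_kl."""
    return [[P[i][j] * Q[k][l] for j in range(len(P[0])) for l in range(len(Q[0]))]
            for i in range(len(P)) for k in range(len(Q))]


def vec(X):
    """Stack the columns of X into a single column (a list of 1-element rows)."""
    return [[X[i][j]] for j in range(len(X[0])) for i in range(len(X))]


# Small integer matrices (hypothetical data), so every comparison below is exact.
A = [[1, 2, 0], [-1, 3, 4]]      # 2 x 3
X = [[2, 1], [0, -2], [5, 3]]    # 3 x 2
B = [[1, -1], [2, 4]]            # 2 x 2
C = [[0, 1], [1, 1], [2, -3]]    # 3 x 2
D = [[3, 0], [1, -1]]            # 2 x 2

# Lemma 2.5: vec(AXB) = (B^T ⊗ A) vec X
print(vec(matmul(matmul(A, X), B)) == matmul(kron(transpose(B), A), vec(X)))
# Lemma 2.4 (i): (A ⊗ B)(C ⊗ D) = (AC) ⊗ (BD)
print(matmul(kron(A, B), kron(C, D)) == kron(matmul(A, C), matmul(B, D)))
```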

Lemma 2.6

[38, Theorem 6.19]. Let \(A\in \mathcal {C}^{m\times m}\) and \(B\in \mathcal {C}^{n \times n}\) with eigenvalues \(\lambda _{i}\) and \(\mu _{j}\), \(i=1,2, \ldots , m\), \(j=1,2, \ldots , n\), respectively. Then the eigenvalues of \(A\otimes B\) are \(\lambda _{i}\mu _{j}\), \(i=1,2, \ldots , m\), \(j=1,2, \ldots , n.\)
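Lemma 2.6 can be seen directly when A and B are upper triangular: then \(A\otimes B\) is again upper triangular, with diagonal entries \(a_{ii}b_{jj}\). The sketch below checks only this special case, with hypothetical integer matrices; it illustrates the statement and is not a proof of the general lemma.

```python
def kron(P, Q):
    """Kronecker product P ⊗ Q = (p_ij Q) for matrices stored as lists of rows."""
    return [[P[i][j] * Q[k][l] for j in range(len(P[0])) for l in range(len(Q[0]))]
            for i in range(len(P)) for k in range(len(Q))]


# Upper-triangular examples (hypothetical data): eigenvalues are the diagonal entries.
A = [[2, 5], [0, -1]]                   # eigenvalues 2, -1
B = [[3, 1, 4], [0, 1, 7], [0, 0, -2]]  # eigenvalues 3, 1, -2
K = kron(A, B)

# A ⊗ B is again upper triangular, so its eigenvalues are its diagonal entries.
is_upper = all(K[r][c] == 0 for r in range(len(K)) for c in range(r))
diag_K = sorted(K[r][r] for r in range(len(K)))
products = sorted(A[i][i] * B[j][j] for i in range(len(A)) for j in range(len(B)))
print(is_upper, diag_K == products)
```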

Lemma 2.7

[39, Theorem 3.2.1]. Let \(A, B\in \mathcal {C}^{n\times n}\) with \(A\ge 0\) and \(B\ge 0\). Then \(\det (A+B)\ge \det (A)+\det (B).\)
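Lemma 2.7 can be spot-checked numerically. The sketch below is our own illustration, limited to real \(2\times 2\) matrices with randomly generated (hypothetical) data. It records the smallest observed value of \(\det (A+B)-\det (A)-\det (B)\) over many random positive semidefinite pairs, which, up to rounding, should never be negative. A finite random test is of course not a proof.

```python
import random


def det2(M):
    return M[0][0] * M[1][1] - M[0][1] * M[1][0]


def random_psd2(rng):
    """A random real 2x2 positive semidefinite matrix G G^T."""
    g = [[rng.uniform(-2.0, 2.0) for _ in range(2)] for _ in range(2)]
    return [[sum(g[i][k] * g[j][k] for k in range(2)) for j in range(2)] for i in range(2)]


rng = random.Random(0)
worst_gap = float("inf")   # smallest observed det(A+B) - det(A) - det(B)
for _ in range(10000):
    A, B = random_psd2(rng), random_psd2(rng)
    S = [[A[i][j] + B[i][j] for j in range(2)] for i in range(2)]
    worst_gap = min(worst_gap, det2(S) - det2(A) - det2(B))
print(worst_gap)
```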

3 Hermitian Positive Definite Solutions of \(X+\sum \nolimits _{i=1}^{m}B_{i}^*X^{t_{i}}B_{i}=I\)

In this section, some necessary conditions and sufficient conditions for the existence and uniqueness of HPD solutions of (1.1) are derived.

The next theorem gives a sufficient condition for the existence of HPD solutions of (1.1), together with bounds for these solutions.

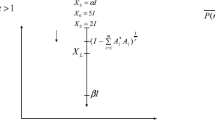

Theorem 3.1

If \(\sum \nolimits _{i=1}^{m}\lambda _{1}(B_{i}^{*}B_{i})<1\), then the equations

and

have real positive solutions. If \(\alpha \) and \(\beta \) are the solutions of the above equations, respectively, then Eq. (1.1) has an HPD solution in \([\alpha I, \beta I ]\).

Moreover,

Proof

Step 1. We prove that (3.1) and (3.2) have positive solutions. Define the sequences \(\{\beta _{n}\}\) and \(\{\alpha _{n}\}\) by

and

By the hypothesis of this theorem and the definition of sequences \(\{\beta _{n}\}\) and \(\{\alpha _{n}\}\), we have

Suppose that \(\beta _{k-1}\ge \beta _{k}\ge \alpha _{0}=1-\sum \limits _{i=1}^{m}\lambda _{1 }(B_{i}^{*}B_{i})\) and \(1=\beta _{0}\ge \alpha _{k}\ge \alpha _{k-1}\). Then

Hence, by induction, for each k we have \(\beta _{k}\ge \beta _{k+1}\ge 1-\sum \nolimits _{i=1}^{m}\lambda _{1 }(B_{i}^{*}B_{i})\) and \(1\ge \alpha _{k+1}\ge \alpha _{k}\), so the sequences \(\{\alpha _{n}\}\) and \(\{\beta _{n}\}\) are monotone and bounded. Therefore, they converge to positive limits. Let

Taking limits in (3.4) and (3.5) yields

which imply

Therefore, \(\alpha \) and \(\beta \) satisfy (3.1) and (3.2), respectively. Moreover,

Step 2. We prove that (1.1) has an HPD solution under the assumption \(\sum \nolimits _{i=1}^{m}\lambda _{1}(B_{i}^{*}B_{i})<1\).

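Let \(\Omega =\left[ \left( 1-\sum _{i=1}^{m}\lambda _{1}\left( B_{i}^{*}B_{i}\right) \right) I, \;I\right] \) and define

$$\begin{aligned} F(X)=I-\sum _{i=1}^{m}B_{i}^{*}X^{t_{i}}B_{i},\quad X\in \Omega . \end{aligned}$$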

Obviously, \(\Omega \) is a bounded convex closed set and F is continuous on \(\Omega .\)

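For any \(X\in \Omega \), we have \(0<X\le I\). Since \(0<t_{i}<1\), Lemma 2.2 gives \(0<X^{t_{i}}\le I\), and hence \(0\le B_{i}^{*}X^{t_{i}}B_{i}\le B_{i}^{*}B_{i}\le \lambda _{1}(B_{i}^{*}B_{i})I\). Therefore

$$\begin{aligned} \left( 1-\sum _{i=1}^{m}\lambda _{1}(B_{i}^{*}B_{i})\right) I\le F(X)\le I, \end{aligned}$$

that is, \(F(\Omega )\subseteq \Omega \). By Brouwer’s fixed point theorem, the map F has a fixed point \(X_{0}\in \Omega \), which is an HPD solution of (1.1).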

In what follows, we suppose that X is an HPD solution of (1.1).

Step 3. We prove that \(X\in [\alpha I,\;\beta I]\). According to Lemmas 2.1, 2.2 and the sequences defined by (3.4) and (3.5), we have \(\alpha _{0}I= (1-\sum _{i=1}^{m}\lambda _{1}(B_{i}^{*}B_{i}))I\le X\le I =\beta _{0}I\). It follows from \(X=I-\sum \nolimits _{j=1}^{m}B_{j}^{*}X^{t_{j}}B_{j}\) that \(X=I-\sum \nolimits _{i=1}^{m}B_{i}^{*}\big (I-\sum \nolimits _{j=1}^{m}B_{j}^{*}X^{t_{j}}B_{j}\big )^{t_{i}}B_{i}.\) Hence

Since \(\alpha _{0}I\le X\le \beta _{0}I\), it follows that \(\alpha _{0}\le \lambda _{n}(X)\) and \(\lambda _{1}(X)\le \beta _{0}\). Note that inequality (3.6) implies \(\alpha _{1}I\le X\le \beta _{1}I\). By induction, we obtain

Taking limits on both sides of inequality (3.7), we have \(\alpha I\le X\le \beta I.\)\(\square \)
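As a minimal numerical sketch (our own illustration, with hypothetical real \(2\times 2\) data), the code below applies the map \(F(X)=I-\sum _{i}B_{i}^{*}X^{t_{i}}B_{i}\) of Step 2 repeatedly, starting from \(X=I\), and checks that the computed solution lies in \([\alpha _{0}I, I]\) with \(\alpha _{0}=1-\sum _{i}\lambda _{1}(B_{i}^{*}B_{i})\). The helpers `eigs` and `sym_fun` (spectral calculus for \(2\times 2\) real symmetric matrices via a Jacobi rotation) are ours. Theorem 3.1 relies on Brouwer's theorem and makes no claim that this plain iteration converges in general; here it is only a convenient way to locate a fixed point, and we verify the residual explicitly.

```python
import math


def matmul(P, Q):
    return [[sum(P[i][k] * Q[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def transpose(P):
    return [[P[j][i] for j in range(2)] for i in range(2)]


def eigs(X):
    """(smallest, largest) eigenvalue of a real symmetric 2x2 matrix."""
    a, b, d = X[0][0], X[0][1], X[1][1]
    mid, rad = (a + d) / 2, math.hypot((a - d) / 2, b)
    return mid - rad, mid + rad


def sym_fun(X, f):
    """f(X) for a real symmetric 2x2 matrix X, via the Jacobi rotation that diagonalizes X."""
    a, b, d = X[0][0], X[0][1], X[1][1]
    th = 0.5 * math.atan2(2 * b, a - d)
    c, s = math.cos(th), math.sin(th)
    f1 = f(a * c * c + 2 * b * c * s + d * s * s)   # eigenvalue for eigenvector (c, s)
    f2 = f(a * s * s - 2 * b * c * s + d * c * c)   # eigenvalue for eigenvector (-s, c)
    off = (f1 - f2) * c * s
    return [[f1 * c * c + f2 * s * s, off], [off, f1 * s * s + f2 * c * c]]


def F(X, Bs, ts):
    """The map of Step 2: F(X) = I - sum_i B_i^T X^{t_i} B_i (real B_i, so B_i^* = B_i^T)."""
    Y = [[1.0, 0.0], [0.0, 1.0]]
    for B, t in zip(Bs, ts):
        T = matmul(transpose(B), matmul(sym_fun(X, lambda x: x ** t), B))
        Y = [[Y[i][j] - T[i][j] for j in range(2)] for i in range(2)]
    return Y


# Hypothetical data satisfying sum_i lambda_1(B_i^T B_i) < 1.
Bs = [[[0.3, 0.1], [0.0, 0.2]], [[0.1, 0.0], [0.2, 0.3]]]
ts = [0.5, 0.8]
alpha0 = 1 - sum(eigs(matmul(transpose(B), B))[1] for B in Bs)

X = [[1.0, 0.0], [0.0, 1.0]]     # start at the top of Omega = [alpha0 I, I]
for _ in range(200):             # plain fixed-point iteration X <- F(X)
    X = F(X, Bs, ts)

FX = F(X, Bs, ts)
residual = max(abs(X[i][j] - FX[i][j]) for i in range(2) for j in range(2))
lo, hi = eigs(X)
print(f"alpha0 = {alpha0:.4f}, eigenvalues of X in [{lo:.4f}, {hi:.4f}], residual = {residual:.1e}")
```

In this example both \(B_{i}\) are nonsingular, and with \(m=2\), \(t=\max t_{i}=0.8\) the threshold \(\frac{mt}{mt+1}\approx 0.615\) lies below \(\alpha _{0}\approx 0.76\), so the computed solution also lies in the interval required by Theorem 3.2.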

The following estimates for HPD solutions of (1.1) are sharper than those in Theorem 3.1.

Corollary 3.1

If \(\sum \nolimits _{i=1}^{m}\lambda _{1}(B_{i}^{*}B_{i})<1\), then every HPD solution of (1.1) is in \([I-\sum \nolimits _{i=1}^{m}\beta ^{t_{i}}B_{i}^{*}B_{i},\; I-\sum \nolimits _{i=1}^{m}\alpha ^{t_{i}}B_{i}^{*}B_{i}],\) where \(\alpha \) and \(\beta \) are defined as in Theorem 3.1.

Proof

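Suppose that X is an HPD solution of (1.1). By Theorem 3.1, \(\alpha I\le X\le \beta I\), that is,

$$\begin{aligned} \alpha \le \lambda _{n}(X)\le \lambda _{1}(X)\le \beta . \qquad (3.8) \end{aligned}$$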

Using \(X=I-\sum \nolimits _{i=1}^{m}B_{i}^{*}X^{t_{i}}B_{i}\), we obtain \(I-\sum \nolimits _{i=1}^{m}\lambda _{1}^{t_{i}}(X)B_{i}^{*}B_{i}\le X\le I-\sum \nolimits _{i=1}^{m}\lambda _{n}^{t_{i}}(X)B_{i}^{*}B_{i}\). Applying inequality (3.8) yields \(I-\sum \nolimits _{i=1}^{m}\beta ^{t_{i}}B_{i}^{*}B_{i}\le X\le I-\sum \nolimits _{i=1}^{m}\alpha ^{t_{i}}B_{i}^{*}B_{i}.\)\(\square \)

Next, we discuss the uniqueness of the HPD solution of (1.1).

The following lemma plays an important role in the uniqueness argument.

Lemma 3.1

If \(B_{1}, B_{2}, \ldots , B_{m}\) are \(n\times n\) complex nonsingular matrices, then (1.1) has an HPD solution if and only if there exist \(Q_{i}\in \mathcal {C}^{n\times n}, i=1, 2,\ldots , m\), \(P\in \mathcal {U}^{n\times n}\), and diagonal matrices \(\Gamma , \Lambda > 0\) such that

$$\begin{aligned} B_{i}=P^{*}\Gamma ^{-\frac{t_{i}}{2}}Q_{i}\Lambda P,\quad i=1, 2,\ldots , m, \end{aligned}$$

where \(\Lambda ^{2}+\Gamma =I\) and \(\sum _{i=1}^{m}Q_{i}^{*}Q_{i}=I\). In this case, \(Y=P^{*}\Gamma P\) is an HPD solution of (1.1).

Proof

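If (1.1) has an HPD solution Y, it follows from the spectral decomposition theorem that there exist \(P\in \mathcal {U}^{n\times n}\) and a diagonal matrix \(\Gamma >0\) such that \(Y=P^{*}\Gamma P\). Then (1.1) can be rewritten as

$$\begin{aligned} P^{*}\Gamma P+\sum _{i=1}^{m}B_{i}^{*}P^{*}\Gamma ^{t_{i}}PB_{i}=I. \qquad (3.9) \end{aligned}$$

Multiplying (3.9) on the left by P and on the right by \(P^{*}\), we have

$$\begin{aligned} \Gamma +\sum _{i=1}^{m}PB_{i}^{*}P^{*}\Gamma ^{t_{i}}PB_{i}P^{*}=I. \qquad (3.10) \end{aligned}$$

Since the \(B_{i}\;(i=1, 2,\ldots , m)\) are nonsingular, each \(B_{i}^{*}Y^{t_{i}}B_{i}\) is positive definite, so \(0<Y<I\), which implies

$$\begin{aligned} 0<\Gamma <I. \qquad (3.11) \end{aligned}$$

Hence (3.10) can be rewritten as

$$\begin{aligned} \sum _{i=1}^{m}(I-\Gamma )^{-\frac{1}{2}}PB_{i}^{*}P^{*}\Gamma ^{t_{i}}PB_{i}P^{*}(I-\Gamma )^{-\frac{1}{2}}=I. \qquad (3.12) \end{aligned}$$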

Let \(\Lambda =(I-\Gamma )^{\frac{1}{2}}\), \(Q_{i}=\Gamma ^{\frac{t_{i}}{2}}PB_{i}P^{*}\Lambda ^{-1}.\) It is easy to verify that \(\Gamma +\Lambda ^{2}=I\) and \(B_{i}=P^{*}\Gamma ^{-\frac{t_{i}}{2}}Q_{i}\Lambda P\). It follows from (3.12) that \(\sum _{i=1}^{m}Q_{i}^{*}Q_{i}=I.\)

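Conversely, assume that there exist \(P\in \mathcal {U}^{n\times n}\), \(Q_{i}\in \mathcal {C}^{n\times n}\) with \(\sum _{i=1}^{m}Q_{i}^{*}Q_{i}=I\), and diagonal matrices \(\Gamma , \Lambda > 0\) with \(\Lambda ^{2}+\Gamma =I\) such that

$$\begin{aligned} B_{i}=P^{*}\Gamma ^{-\frac{t_{i}}{2}}Q_{i}\Lambda P,\quad i=1, 2,\ldots , m. \end{aligned}$$

Let \(Y=P^{*}\Gamma P\). Then Y is an HPD matrix with \(Y^{t_{i}}=P^{*}\Gamma ^{t_{i}}P\), and

$$\begin{aligned} Y+\sum _{i=1}^{m}B_{i}^{*}Y^{t_{i}}B_{i}=P^{*}\Gamma P+\sum _{i=1}^{m}P^{*}\Lambda Q_{i}^{*}\Gamma ^{-\frac{t_{i}}{2}}\Gamma ^{t_{i}}\Gamma ^{-\frac{t_{i}}{2}}Q_{i}\Lambda P =P^{*}\left( \Gamma +\Lambda \Big (\sum _{i=1}^{m}Q_{i}^{*}Q_{i}\Big )\Lambda \right) P=P^{*}(\Gamma +\Lambda ^{2})P=I, \end{aligned}$$

which shows that Y is an HPD solution of (1.1). \(\square \)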
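The "if" direction of Lemma 3.1 is constructive: any unitary P, diagonal \(\Gamma \) with \(0<\Gamma <I\), \(\Lambda =(I-\Gamma )^{1/2}\) and \(Q_{i}\) with \(\sum _{i}Q_{i}^{*}Q_{i}=I\) produce coefficients \(B_{i}\) for which \(Y=P^{*}\Gamma P\) solves (1.1). The sketch below checks this numerically in the real \(2\times 2\) case (P a rotation, so \(P^{*}=P^{T}\)). All data are hypothetical, `sym_fun` is our own \(2\times 2\) spectral-calculus helper, and taking \(Q_{2}=(I-Q_{1}^{T}Q_{1})^{1/2}\) is just one convenient way to satisfy \(\sum _{i}Q_{i}^{*}Q_{i}=I\).

```python
import math


def matmul(P, Q):
    return [[sum(P[i][k] * Q[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def transpose(P):
    return [[P[j][i] for j in range(2)] for i in range(2)]


def diag(d1, d2):
    return [[d1, 0.0], [0.0, d2]]


def sym_fun(X, f):
    """f(X) for a real symmetric 2x2 matrix X, via the Jacobi rotation that diagonalizes X."""
    a, b, d = X[0][0], X[0][1], X[1][1]
    th = 0.5 * math.atan2(2 * b, a - d)
    c, s = math.cos(th), math.sin(th)
    f1 = f(a * c * c + 2 * b * c * s + d * s * s)   # eigenvalue for eigenvector (c, s)
    f2 = f(a * s * s - 2 * b * c * s + d * c * c)   # eigenvalue for eigenvector (-s, c)
    off = (f1 - f2) * c * s
    return [[f1 * c * c + f2 * s * s, off], [off, f1 * s * s + f2 * c * c]]


# Hypothetical ingredients: rotation P, Gamma = diag(g), Lambda = (I - Gamma)^{1/2}.
phi = 0.7
P = [[math.cos(phi), -math.sin(phi)], [math.sin(phi), math.cos(phi)]]
g = (0.6, 0.85)
Lam = diag(math.sqrt(1 - g[0]), math.sqrt(1 - g[1]))
ts = (0.4, 0.7)

# Q_1 with ||Q_1|| < 1 and Q_2 = (I - Q_1^T Q_1)^{1/2}, so that Q_1^T Q_1 + Q_2^T Q_2 = I.
Q1 = [[0.6, 0.2], [0.1, 0.5]]
QtQ = matmul(transpose(Q1), Q1)
Q2 = sym_fun([[1 - QtQ[0][0], -QtQ[0][1]], [-QtQ[1][0], 1 - QtQ[1][1]]], math.sqrt)

# B_i = P^T Gamma^{-t_i/2} Q_i Lambda P
Bs = []
for Q, t in zip((Q1, Q2), ts):
    G = diag(g[0] ** (-t / 2), g[1] ** (-t / 2))
    Bs.append(matmul(matmul(transpose(P), G), matmul(matmul(Q, Lam), P)))

# Y = P^T Gamma P should satisfy Y + sum_i B_i^T Y^{t_i} B_i = I.
Y = matmul(matmul(transpose(P), diag(*g)), P)
R = [[Y[i][j] - (1.0 if i == j else 0.0) for j in range(2)] for i in range(2)]
for B, t in zip(Bs, ts):
    T = matmul(transpose(B), matmul(sym_fun(Y, lambda y: y ** t), B))
    R = [[R[i][j] + T[i][j] for j in range(2)] for i in range(2)]
residual = max(abs(r) for row in R for r in row)
print(residual)
```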

To prove the next theorem, we first verify the following lemmas.

Lemma 3.2

Suppose that \(m\ge 1,\;0<t<1\) and \(\frac{mt}{mt+1}<x,y<1.\) Then

Proof

Let \(g_{1}(x)=\frac{(1-x)^{1/2}}{x^{t/2}},\;\frac{mt}{mt+1}<x<1,\;0<t<1.\) It is easy to verify that the function \(g_{1}(x)\) is monotonically decreasing on \((\frac{mt}{mt+1},1).\) It follows that

Let \(g_{2}(x)=x^{t},\;\frac{mt}{mt+1}<x<1,\;0<t<1.\) By the mean value theorem, there exists \(\xi \in (\frac{mt}{mt+1},1)\) such that

Combining (3.13) and (3.14), we have

\(\square \)

Lemma 3.3

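For every Hermitian positive definite matrix X and \(0<t<1\), we have

$$\begin{aligned} X^{t}=\frac{\sin t\pi }{\pi }\int _{0}^{\infty }\lambda ^{t-1}X(\lambda I+X)^{-1}\,d\lambda . \end{aligned}$$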

Proof

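Let \(p=1-t\in (0,1)\). Multiplying (2.1) in Lemma 2.3 on the left by X and using \(\sin p\pi =\sin (1-t)\pi =\sin t\pi \), we have

$$\begin{aligned} X^{t}=X\cdot X^{-p}=\frac{\sin p\pi }{\pi }\int _{0}^{\infty }X(\lambda I+X)^{-1}\lambda ^{-p}\,d\lambda =\frac{\sin t\pi }{\pi }\int _{0}^{\infty }\lambda ^{t-1}X(\lambda I+X)^{-1}\,d\lambda . \end{aligned}$$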

\(\square \)
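Lemma 3.3 can be checked numerically at the matrix level in the same way as the scalar form of (2.1). The sketch below (real \(2\times 2\) case, hypothetical data, helper names ours) approximates \(\frac{\sin t\pi }{\pi }\int _{0}^{\infty }\lambda ^{t-1}X(\lambda I+X)^{-1}\,d\lambda \) entrywise, using explicit \(2\times 2\) inverses, the substitution \(\lambda =e^{u}\) and the trapezoidal rule. It then compares the result with \(X^{t}\) computed from the eigendecomposition of X.

```python
import math


def matmul(P, Q):
    return [[sum(P[i][k] * Q[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def inv2(M):
    """Inverse of a nonsingular 2x2 matrix."""
    a, b, c, d = M[0][0], M[0][1], M[1][0], M[1][1]
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def sym_fun(X, f):
    """Reference value f(X) for real symmetric 2x2 X via a diagonalizing Jacobi rotation."""
    a, b, d = X[0][0], X[0][1], X[1][1]
    th = 0.5 * math.atan2(2 * b, a - d)
    c, s = math.cos(th), math.sin(th)
    f1 = f(a * c * c + 2 * b * c * s + d * s * s)
    f2 = f(a * s * s - 2 * b * c * s + d * c * c)
    off = (f1 - f2) * c * s
    return [[f1 * c * c + f2 * s * s, off], [off, f1 * s * s + f2 * c * c]]


def power_via_integral(X, t, h=0.1):
    """X**t = sin(t*pi)/pi * int_0^inf lam**(t-1) X (lam I + X)^{-1} d(lam), 0 < t < 1.
    With lam = e**u the weight lam**(t-1) d(lam) becomes e**(t*u) du; the integrand
    decays like e**(t*u) as u -> -inf and like e**(-(1-t)u) as u -> +inf."""
    half_width = 40.0 / min(t, 1.0 - t)
    n = int(2 * half_width / h)
    acc = [[0.0, 0.0], [0.0, 0.0]]
    for k in range(n + 1):
        u = -half_width + k * h
        lam = math.exp(u)
        M = matmul(X, inv2([[X[0][0] + lam, X[0][1]], [X[1][0], X[1][1] + lam]]))
        w = math.exp(t * u)
        acc = [[acc[i][j] + w * M[i][j] for j in range(2)] for i in range(2)]
    scale = math.sin(t * math.pi) / math.pi * h
    return [[scale * acc[i][j] for j in range(2)] for i in range(2)]


X = [[2.0, 0.5], [0.5, 1.0]]   # a hypothetical HPD (real symmetric) matrix
for t in (0.3, 0.5, 0.7):
    approx, exact = power_via_integral(X, t), sym_fun(X, lambda x: x ** t)
    print(t, max(abs(approx[i][j] - exact[i][j]) for i in range(2) for j in range(2)))
```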

Theorem 3.2

Assume that \(B_{1}, B_{2}, \ldots , B_{m}\) are \(n\times n\) complex nonsingular matrices and \(0<t_{i}<1\). If (1.1) has an HPD solution in \([\frac{mt}{mt+1}I, I]\), then the HPD solution of (1.1) is unique, where \(t=\max _{1\le i\le m}\{t_{i}\}.\)

Proof

If \(Y_{1}\) is an HPD solution of (1.1), then, according to Lemma 3.1, there exist \(P_{1}\in \mathcal {U}^{n\times n}\), \(Q_{i}\in \mathcal {C}^{n\times n},\;i=1, 2, \ldots , m\), and diagonal matrices \(\Gamma _{1}, \Lambda _{1}>0\) such that

where

In this case, \(Y_{1}=P_{1}^{*}\Gamma _{1}P_{1},\) where \(\Gamma _{1}=\mathrm{diag}(\lambda _{11}, \lambda _{12}, \ldots , \lambda _{1n})\) with \(\{\lambda _{1j}\}\) the eigenvalues of \(Y_{1}.\)

Similarly, if \(Y_{2}\) is an HPD solution of (1.1), there exist \(P_{2}\in \mathcal {U}^{n\times n}\), \(U_{i}\in \mathcal {C}^{n\times n},\;i=1, 2, \ldots , m\), and diagonal matrices \(\Gamma _{2}, \Lambda _{2}>0\) such that

where

In this case, \(Y_{2}=P_{2}^{*}\Gamma _{2}P_{2},\) where \(\Gamma _{2}=\mathrm{diag}(\lambda _{21}, \lambda _{22}, \ldots , \lambda _{2n})\) with \(\{\lambda _{2j}\}\) the eigenvalues of \(Y_{2}.\)

According to Lemma 3.3, we have

Note that

and

Combining (3.15), (3.17) and (3.19)–(3.21), we have

Let

Then (3.22) can be rewritten as

From (3.24) and Lemmas 2.4 and 2.5, it follows that

Assume that

According to (3.11), (3.16) and (3.18), we have

Let

Then (3.25) can be rewritten as

By Lemma 2.6, we have

It follows that

Note that B is nonsingular. Multiplying (3.28) on the left by \(B^{-1}\), we have

A combination of (3.27) and Lemma 2.4 gives

It follows from (3.16), (3.18) and Lemma 2.6 that \(0<||J_{i}||\le 1.\) Then

By the hypothesis of the theorem, we have \(\frac{mt}{mt+1}I<Y_{1}, Y_{2}<I\), which implies that \(\frac{mt}{mt+1}<\lambda _{1l}, \lambda _{2j}<1,\;l, j=1, 2, \ldots ,n.\) Since \(x\mapsto \frac{mx}{mx+1}\) is increasing and \(t_{i}\le t\), we have \(\frac{mt_{i}}{mt_{i}+1}\le \frac{mt}{mt+1},\;i=1, 2,\ldots , m.\) Then \(\frac{mt_{i}}{mt_{i}+1}<\lambda _{1l}, \lambda _{2j}<1,\;l, j=1, 2, \ldots ,n,\;i=1, 2,\ldots , m.\) Therefore, it follows from (3.29) and (3.30) that

where f(x, y, t) is defined in Lemma 3.2.

Combining Lemma 3.2 with (3.32) and (3.33), we obtain

which implies that \(I+\sum _{i=1}^{m}J_{i}(C_{i}-D_{i})B\) is nonsingular. It follows from (3.31) that \(\mathrm {vec}W=0\). By (3.23), we have \(Y_{1}=Y_{2}.\) \(\square \)

4 Hermitian Positive Definite Solutions of \(X^{s}-\sum \nolimits _{i=1}^{m}A_{i}^*X^{p_{i}}A_{i}=I\)

In this section, we derive properties of the HPD solutions and of the coefficient matrices of (1.2), and we give a sufficient condition for the existence of a unique HPD solution.

We first give the following elementary lemma, which is easy to verify.

Lemma 4.1

Let \(g(x)=x^{-p}(x^{s}-1),\;x>1,\;p>s\ge 1.\) Then

- (i) g is increasing on \([1, (\frac{p}{p-s})^{\frac{1}{s}}]\) and decreasing on \([(\frac{p}{p-s})^{\frac{1}{s}}, +\infty );\)

- (ii) the maximal value of g(x) is \(g((\frac{p}{p-s})^{\frac{1}{s}})=\frac{s(p-s)^{\frac{p}{s}-1}}{p^{\frac{p}{s}}}.\)
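Lemma 4.1 follows from \(g'(x)=x^{-p-1}\big ((s-p)x^{s}+p\big )\), which vanishes only at \(x^{s}=\frac{p}{p-s}\). The sketch below cross-checks the maximizer and the maximal value numerically for a few hypothetical pairs (p, s). Here `argmax_unimodal` is our own ternary-search helper, which is appropriate because part (i) makes g unimodal on \([1,+\infty )\).

```python
def g(x, p, s):
    """g(x) = x^{-p} (x^s - 1) from Lemma 4.1."""
    return x ** (-p) * (x ** s - 1)


def argmax_unimodal(f, lo, hi, iters=200):
    """Ternary search for the maximizer of a unimodal function f on [lo, hi]."""
    for _ in range(iters):
        m1, m2 = lo + (hi - lo) / 3, hi - (hi - lo) / 3
        if f(m1) < f(m2):
            lo = m1
        else:
            hi = m2
    return (lo + hi) / 2


results = []
for p, s in [(3.0, 1.5), (2.0, 1.0), (5.0, 2.0)]:
    x_num = argmax_unimodal(lambda x: g(x, p, s), 1.0, 50.0)
    x_star = (p / (p - s)) ** (1 / s)                   # maximizer from Lemma 4.1 (i)
    g_max = s * (p - s) ** (p / s - 1) / p ** (p / s)   # maximum from Lemma 4.1 (ii)
    results.append((p, s, x_num, x_star, g(x_num, p, s), g_max))
    print(results[-1])
```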

The next theorem gives an upper bound for the spectral radius of the coefficient matrices of (1.2).

Theorem 4.1

Assume that \(A_{1}, A_{2}, \ldots , A_{m}\) are nonsingular matrices. If (1.2) with \(p_{i}> s\ge 1\) has an HPD solution, then

where \(p=\min _{1\le i\le m}\{p_{i}\}.\)

Proof

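Suppose that X is an HPD solution of (1.2). Let \(Y=X^{s}\). Then (1.2) can be rewritten as

$$\begin{aligned} Y-\sum _{i=1}^{m}A_{i}^{*}Y^{\frac{p_{i}}{s}}A_{i}=I. \qquad (4.1) \end{aligned}$$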

Let \(\lambda _{j}\) be any eigenvalue of \(A_{j}\;(j=1, 2, \ldots , m)\) and \(e_{j}\) a corresponding unit eigenvector. Multiplying (4.1) on the left by \(e_{j}^{*}\) and on the right by \(e_{j}\), we have

which implies

Let \(\sigma _{1}, \sigma _{2}, \ldots , \sigma _{n}\) be eigenvalues of Y. Then

Let \(h(x)=x-|\lambda _{j}|^{2}x^{\frac{p}{s}},\;x>0,\;p>s\ge 1\), where \(\lambda _{j}\ne 0\) because \(A_{j}\) is nonsingular. Since \(h''(x)=-|\lambda _{j}|^{2}\frac{p}{s}(\frac{p}{s}-1)x^{\frac{p}{s}-2}<0\), h is concave, so its unique critical point \(x=(\frac{s}{p|\lambda _{j}|^{2}})^{\frac{s}{p-s}}\) is its global maximizer and \(\max h(x)=h((\frac{s}{p|\lambda _{j}|^{2}})^{\frac{s}{p-s}})= \frac{(p-s)s^{\frac{s}{p-s}}}{p^{\frac{s}{p-s}+1}|\lambda _{j}|^{\frac{2s}{p-s}}}.\) Since \(\sigma _{i}\ge 1\) (as \(Y\ge I\) by (4.1)) and \(p_{j}\ge p\), we have \(\sigma _{i}-|\lambda _{j}|^{2}\sigma _{i}^{\frac{p_{j}}{s}} \le \sigma _{i}-|\lambda _{j}|^{2}\sigma _{i}^{\frac{p}{s}}=h(\sigma _{i})\le \frac{(p-s)s^{\frac{s}{p-s}}}{p^{\frac{s}{p-s}+1}|\lambda _{j}|^{\frac{2s}{p-s}}}.\) It follows that

By inequality (4.2), we obtain

which implies

\(\square \)
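The maximization of h in the proof above is elementary calculus: h is concave, so its only critical point is the global maximizer. The sketch below compares the closed-form maximizer and maximum with a numerical search for a few hypothetical values of \(c=|\lambda _{j}|^{2}\), p and s, again using our own ternary-search helper.

```python
def h(x, c, p, s):
    """h(x) = x - c x^{p/s} with c = |lambda_j|^2, as in the proof above."""
    return x - c * x ** (p / s)


def argmax_unimodal(f, lo, hi, iters=200):
    """Ternary search for the maximizer of a unimodal function f on [lo, hi]."""
    for _ in range(iters):
        m1, m2 = lo + (hi - lo) / 3, hi - (hi - lo) / 3
        if f(m1) < f(m2):
            lo = m1
        else:
            hi = m2
    return (lo + hi) / 2


checks = []
for c, p, s in [(0.25, 3.0, 1.0), (0.1, 2.5, 1.5), (0.6, 4.0, 2.0)]:
    x_star = (s / (p * c)) ** (s / (p - s))
    h_max = (p - s) * s ** (s / (p - s)) / (p ** (s / (p - s) + 1) * c ** (s / (p - s)))
    x_num = argmax_unimodal(lambda x: h(x, c, p, s), 0.0, 100.0)
    checks.append((x_num, x_star, h(x_num, c, p, s), h_max))
    print(c, p, s, checks[-1])
```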

In the next theorem, we obtain bounds for \(\sum _{i=1}^{m}\lambda _{n}(A_{i}^{*}A_{i})\), \(\sum _{i=1}^{m}\det (A_{i}A_{i}^{*})\) and \(\det (X)\).

Theorem 4.2

Let \(t=\min _{1\le i\le m}\{p_{i}\}.\) If (1.2) with \(p_{i}> s\ge 1\) has an HPD solution X, then

- (1) \(\sum _{i=1}^{m}\lambda _{n}(A_{i}^{*}A_{i})\le \frac{{s(t-s)^{\frac{t}{s}-1}}}{{t^{\frac{t}{s}}}};\)

- (2) \(\sum _{i=1}^{m}\det (A_{i}A_{i}^{*})\le \frac{{s(t-s)^{\frac{t}{s}-1}}}{{t^{\frac{t}{s}}}}\) and \(\delta _{1}\le \det (X)\le \delta _{2},\) where \(\delta _{1}, \delta _{2}\;(\delta _{1}\le \delta _{2})\) are the positive solutions of the equation \(x^{-t}(x^{s}-1)-\sum _{i=1}^{m}\det (A_{i}A_{i}^{*})=0,\;x>1,\;t>s\ge 1.\)

Proof

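(1) Since X is an HPD solution of (1.2), we have \(X^{s}=I+\sum _{i=1}^{m}A_{i}^{*}X^{p_{i}}A_{i}\ge I\), and hence \(X\ge I\) by Lemma 2.2 (with \(\gamma =\frac{1}{s}\)). It follows that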

which implies \(\sum _{i=1}^{m}\lambda _{n}(A_{i}^{*}A_{i})\le \lambda _{n}^{-t}(X)(\lambda _{n}^{s}(X)-1)\). According to Lemma 4.1, we have

(2) Since X is an HPD solution of (1.2), we have \(X^{s}=I+\sum _{i=1}^{m}A_{i}^{*}X^{p_{i}}A_{i}.\) Since \(X\ge I\), we have \(\det (X)\ge 1\), which implies \((\det (X))^{p_{i}}\ge (\det (X))^{t}.\) It follows from Lemma 2.7 that

which implies

It follows from Lemma 4.1 that \(\sum _{i=1}^{m}\det (A_{i}A_{i}^{*})\le \frac{{s(t-s)^{\frac{t}{s}-1}}}{{t^{\frac{t}{s}}}}.\) On the other hand, it is easy to verify that if \(\sum _{i=1}^{m}\det (A_{i}A_{i}^{*})\le \frac{{s(t-s)^{\frac{t}{s}-1}}}{{t^{\frac{t}{s}}}}\), the equation \(x^{-t}(x^{s}-1)-\sum _{i=1}^{m}\det (A_{i}A_{i}^{*})=0\) has two solutions \(\delta _{1}, \delta _{2}\;(1<\delta _{1}\le \delta _{2})\). By inequality (4.3), we have

\(\square \)
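In practice, \(\delta _{1}\) and \(\delta _{2}\) in Theorem 4.2 (2) can be located by bisection, since by Lemma 4.1 the function \(x^{-t}(x^{s}-1)\) increases up to \(x=(\frac{t}{t-s})^{1/s}\) and decreases afterwards. The sketch below is one way to do this (the function name and data are hypothetical), applied to a scalar example in which the roots are known in closed form.

```python
import math


def solve_deltas(d, t, s, iters=200):
    """Return 1 < delta1 <= delta2 solving x^{-t}(x^s - 1) = d, assuming t > s >= 1
    and 0 < d <= s (t-s)^{t/s-1} / t^{t/s} (the maximum value from Lemma 4.1)."""
    def g(x):
        return x ** (-t) * (x ** s - 1) - d

    x_star = (t / (t - s)) ** (1 / s)   # maximizer from Lemma 4.1: g increases, then decreases
    lo, hi = 1.0, x_star                # g(1) = -d < 0 <= g(x_star)
    for _ in range(iters):
        mid = (lo + hi) / 2
        lo, hi = (mid, hi) if g(mid) < 0 else (lo, mid)
    delta1 = (lo + hi) / 2
    lo, hi = x_star, 2 * x_star         # beyond x_star, g decreases towards -d < 0
    while g(hi) > 0:
        hi *= 2
    for _ in range(iters):
        mid = (lo + hi) / 2
        lo, hi = (mid, hi) if g(mid) > 0 else (lo, mid)
    delta2 = (lo + hi) / 2
    return delta1, delta2


# Hypothetical scalar case n = m = 1, s = 1, p_1 = t = 2, A_1 = a with a^2 = 0.2.
d1, d2 = solve_deltas(0.2, t=2.0, s=1.0)
print(d1, d2, (1 - math.sqrt(0.2)) / 0.4, (1 + math.sqrt(0.2)) / 0.4)
print([x - 0.2 * x ** 2 for x in (d1, d2)])   # both values should be (close to) 1
```

In this scalar example \(\det (X)=x\), \(\det (A_{1}A_{1}^{*})=0.2\), and (1.2) reads \(x-0.2x^{2}=1\), which is exactly \(x^{-2}(x-1)=0.2\). Hence both positive solutions of (1.2) coincide with \(\delta _{1}\) and \(\delta _{2}\), so the determinant bounds of Theorem 4.2 (2) are attained here.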

To bound the trace of the HPD solutions of (1.2), we need the following trace inequalities.

Lemma 4.2

Let \(A\ge 0\) and \(B\ge 0\) be \(n \times n\) matrices. Then, for \(q\ge 1\), we have

- (1) \(\lambda _{n}(A)\mathrm {tr}{(B)}\le \mathrm {tr}{(AB)}\le \lambda _{1}(A)\mathrm {tr}{(B)},\)

- (2) \(\frac{(\mathrm {tr}{(A)})^{q}}{n^{q-1}}\le \mathrm {tr}(A^{q})\le (\mathrm {tr}(A))^{q}.\)

Proof

- (1) Since \(A\ge 0\), we have \(\lambda _{n}(A)I\le A \le \lambda _{1}(A)I\). Because \(A-\lambda _{n}(A)I\ge 0\) and \(B\ge 0\), we have \(\mathrm {tr}((A-\lambda _{n}(A)I)B)=\mathrm {tr}(B^{\frac{1}{2}}(A-\lambda _{n}(A)I)B^{\frac{1}{2}})\ge 0\), so

$$\begin{aligned} 0\le \mathrm {tr}((A-\lambda _{n}(A)I)B)=\mathrm {tr}(AB)-\lambda _{n}(A)\mathrm {tr}(B), \end{aligned}$$which implies \(\lambda _{n}(A)\mathrm {tr}{(B)}\le \mathrm {tr}{(AB)}\).

Applying the same argument to \(\lambda _{1}(A)I-A\ge 0\) gives \(\mathrm {tr}{(AB)}\le \lambda _{1}(A)\mathrm {tr}{(B)}.\)

- (2) Since \(A\ge 0\), we have \(\lambda _{i}(A)\ge 0,\;i=1, 2, \ldots , n.\) By Hölder’s inequality (see [40, Lemma 1.1.2]), we have

$$\begin{aligned} \mathrm {tr}(A)=\sum _{i=1}^{n}\lambda _{i}(A)\le n^{1-\frac{1}{q}}\left( \sum _{i=1}^{n}\lambda _{i}^{q}(A)\right) ^{\frac{1}{q}} =n^{1-\frac{1}{q}}(\mathrm {tr}(A^{q}))^{\frac{1}{q}}, \end{aligned}$$which implies \(\frac{(\mathrm {tr}{(A)})^{q}}{n^{q-1}}\le \mathrm {tr}(A^{q})\).

If \(\lambda _{1}(A)=\lambda _{2}(A)=\cdots =\lambda _{n}(A)=0\), then obviously \(\mathrm {tr}(A^{q})= (\mathrm {tr}(A))^{q}=0.\) Otherwise, \(\mathrm {tr}(A)>0\) and \(0\le \frac{\lambda _{i}(A)}{\mathrm {tr}(A)}\le 1\), so \(\left( \frac{\lambda _{i}(A)}{\mathrm {tr}(A)}\right) ^{q}\le \frac{\lambda _{i}(A)}{\mathrm {tr}(A)}\) for \(q\ge 1\). Hence

$$\begin{aligned} \frac{\mathrm {tr}(A^{q})}{(\mathrm {tr}(A))^{q}}=\sum _{i=1}^{n}\left( \frac{\lambda _{i}(A)}{\mathrm {tr}(A)}\right) ^{q}\le \sum _{i=1}^{n}\frac{\lambda _{i}(A)}{\mathrm {tr}(A)}=1, \end{aligned}$$which implies \(\mathrm {tr}(A^{q})=\sum _{i=1}^{n}\lambda _{i}^{q}(A)\le (\mathrm {tr}(A))^{q}.\)

\(\square \)
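The inequalities of Lemma 4.2 can also be spot-checked numerically. In the sketch below (random hypothetical data, helper names ours), part (1) is tested on random real \(2\times 2\) positive semidefinite pairs, and part (2) on random nonnegative spectra, since \(\mathrm {tr}(A)\) and \(\mathrm {tr}(A^{q})\) depend only on the eigenvalues of A. A finite random test is a sanity check, not a proof.

```python
import math
import random


def eigs(X):
    """(smallest, largest) eigenvalue of a real symmetric 2x2 matrix."""
    a, b, d = X[0][0], X[0][1], X[1][1]
    mid, rad = (a + d) / 2, math.hypot((a - d) / 2, b)
    return mid - rad, mid + rad


def random_psd2(rng):
    """A random real 2x2 positive semidefinite matrix G G^T."""
    g = [[rng.uniform(-1.0, 1.0) for _ in range(2)] for _ in range(2)]
    return [[sum(g[i][k] * g[j][k] for k in range(2)) for j in range(2)] for i in range(2)]


rng = random.Random(1)
ok_part1 = ok_part2 = True
for _ in range(5000):
    # Part (1): lambda_n(A) tr(B) <= tr(AB) <= lambda_1(A) tr(B) for 2x2 A, B >= 0.
    A, B = random_psd2(rng), random_psd2(rng)
    tr_AB = sum(A[i][j] * B[j][i] for i in range(2) for j in range(2))
    tr_B = B[0][0] + B[1][1]
    lo, hi = eigs(A)
    ok_part1 = ok_part1 and lo * tr_B - 1e-12 <= tr_AB <= hi * tr_B + 1e-12
    # Part (2): only the eigenvalues of A >= 0 matter, so test random nonnegative spectra.
    n, q = rng.randint(1, 6), rng.uniform(1.0, 4.0)
    lam = [rng.uniform(0.0, 3.0) for _ in range(n)]
    tr_A, tr_Aq = sum(lam), sum(x ** q for x in lam)
    left_ok = tr_A ** q / n ** (q - 1) <= tr_Aq * (1 + 1e-12)
    right_ok = tr_Aq <= tr_A ** q * (1 + 1e-12)
    ok_part2 = ok_part2 and left_ok and right_ok
print(ok_part1, ok_part2)
```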

Theorem 4.3

Let \(t=\min _{1\le i\le m}\{p_{i}\}.\) If (1.2) with \(p_{i}> s\ge 1\) has an HPD solution X, then

$$\begin{aligned} \gamma _{1}\le \mathrm {tr}(X)\le \gamma _{2}, \end{aligned}$$

where \(\gamma _{1},\;\gamma _{2}\;(\gamma _{1}<\gamma _{2})\) are the positive solutions of the equation \(n^{t-1}x^{-t}(x^{s}-n)-\sum _{i=1}^{m}\lambda _{n}(A_{i}^{*}A_{i})=0.\)

Proof

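Since X is an HPD solution of (1.2), we have

$$\begin{aligned} X^{s}=I+\sum _{i=1}^{m}A_{i}^{*}X^{p_{i}}A_{i}. \qquad (4.4) \end{aligned}$$

Note that \(s\ge 1\), \(p_{i}>1\) and \(\mathrm {tr}(X)\ge n\) (since \(X\ge I\)). Taking the trace on both sides of (4.4) and applying Lemma 4.2, we obtain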

which implies

Let \(h_{1}(x)=n^{t-1}x^{-t}(x^{s}-n),\;x\ge n^{1/s},\;t>s\ge 1.\) A direct calculation shows that \(h_{1}\) attains its maximum at \(x=(\frac{nt}{t-s})^{1/s}\), and \(\max h_{1}(x)=h_{1}((\frac{nt}{t-s})^{1/s})=\frac{n^{t(1-\frac{1}{s})}s(t-s)^{\frac{t}{s}-1}}{t^{\frac{t}{s}}}.\) Since \(n\ge 1\) and \(s\ge 1\), we have \(n^{t(1-\frac{1}{s})}\ge 1.\) It follows from Theorem 4.2 (1) that

which implies that the equation \(n^{t-1}x^{-t}(x^{s}-n)-\sum _{i=1}^{m}\lambda _{n}(A_{i}^{*}A_{i})=0\) has two positive solutions \(\gamma _{1},\;\gamma _{2}\;(\gamma _{1}<\gamma _{2}).\) By inequality (4.6), we obtain \(\gamma _{1}\le \mathrm {tr}(X)\le \gamma _{2}.\)

\(\square \)
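The maximum of \(h_{1}\) is again elementary calculus: \(h_{1}'(x)\) is a positive multiple of \(nt-(t-s)x^{s}\), so the maximizer satisfies \(x^{s}=\frac{nt}{t-s}\). Substituting gives the closed form \(n^{t(1-1/s)}s(t-s)^{t/s-1}/t^{t/s}\), which the sketch below compares with a numerical maximum for a few hypothetical triples (n, s, t), using our own ternary-search helper.

```python
def h1(x, n, s, t):
    """h_1(x) = n^{t-1} x^{-t} (x^s - n) from the proof of Theorem 4.3."""
    return n ** (t - 1) * x ** (-t) * (x ** s - n)


def argmax_unimodal(f, lo, hi, iters=200):
    """Ternary search for the maximizer of a unimodal function f on [lo, hi]."""
    for _ in range(iters):
        m1, m2 = lo + (hi - lo) / 3, hi - (hi - lo) / 3
        if f(m1) < f(m2):
            lo = m1
        else:
            hi = m2
    return (lo + hi) / 2


rows = []
for n, s, t in [(2, 1.0, 2.0), (2, 2.0, 3.0), (5, 1.5, 4.0)]:
    x_star = (n * t / (t - s)) ** (1 / s)
    closed_form = n ** (t * (1 - 1 / s)) * s * (t - s) ** (t / s - 1) / t ** (t / s)
    x_num = argmax_unimodal(lambda x: h1(x, n, s, t), n ** (1 / s), 1000.0)
    rows.append((x_num, x_star, h1(x_num, n, s, t), closed_form))
    print(n, s, t, rows[-1])
```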

To prove the existence and uniqueness of the positive definite solution of (1.2) with \(1<p_{i}<s\), we use the following definition and lemma from [41].

Let \(\mathcal {X}\) be a real Banach space, and let K be a closed cone in \(\mathcal {X}\), \(K^{+}=K\setminus \{0\}\).

Definition 1

[41, Definition 3.1]. Let \(T: K\rightarrow K,\) and let \(p\ge 0.\) We say that

- (a) T is increasing if \(0\le x\le y\) implies \(T x\le T y\);

- (b) T is p-concave if \(T(\lambda x)\ge \lambda ^{p} T x\) for all \(x\in K\) and \(0<\lambda <1.\)

Lemma 4.3

[41, Theorem 3.2]. Let the norm in \(\mathcal {X}\) be monotonic. Suppose that \(T : K \rightarrow K \) is an increasing p-concave mapping with \(0< p < 1\), and that \(T f \in K_{f}\) for some \(f \in K^{+}\) with \(\Vert f\Vert = 1\). Suppose in addition that T : \(K_{f} \rightarrow K_{f}\) is continuous in the norm topology. Then there exists a unique \(z \in K_{f}\) such that \(T z = z\).

Let \(\mathcal {X}\) be the space of \(n\times n\) real symmetric matrices. Then a closed cone in \(\mathcal {X}\) is given by \(\overline{P}(n)\), the set of \(n\times n\) real positive semi-definite matrices. The interior of this cone is the set of \(n\times n\) real positive definite matrices, which we denote by P(n).

Theorem 4.4

If \(A_{1}, A_{2}, \ldots , A_{m}\) are \(n\times n\) real nonsingular matrices and \(1<p_{i}<s,\) then (1.2) has a unique positive definite solution \(X_{0}\in P(n)\).

Proof

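Let

$$\begin{aligned} F(Y)=I+\sum _{i=1}^{m}A_{i}^{*}Y^{\frac{p_{i}}{s}}A_{i},\quad Y\in \overline{P}(n). \end{aligned}$$

Since \(1<p_{i}<s\), we have \(0<\frac{p_{i}}{s}<1\); hence, by Lemma 2.2, F is increasing, and F is continuous in the norm topology. Let \(q=\max _{1\le i\le m}\{p_{i}\}\). For any \(Y\in P(n)\) and \(0<\lambda <1\), we have \(\lambda ^{\frac{p_{i}}{s}}\ge \lambda ^{\frac{q}{s}}\) and \(1\ge \lambda ^{\frac{q}{s}}\), so

$$\begin{aligned} F(\lambda Y)=I+\sum _{i=1}^{m}\lambda ^{\frac{p_{i}}{s}}A_{i}^{*}Y^{\frac{p_{i}}{s}}A_{i}\ge \lambda ^{\frac{q}{s}}\left( I+\sum _{i=1}^{m}A_{i}^{*}Y^{\frac{p_{i}}{s}}A_{i}\right) =\lambda ^{\frac{q}{s}}F(Y), \end{aligned}$$

which implies that F is \(\frac{q}{s}\)-concave with \(0<\frac{q}{s}<1\). Since \(I\in P(n)\), \(F(I)\in P(n)\) and \(\Vert I\Vert =1\), Lemma 4.3 yields a unique \(Y_{0}\in P(n)\) such that \(F(Y_{0})=Y_{0}\). Let \(X_{0}=Y^{\frac{1}{s}}_{0}\). Then \(X_{0}\in P(n)\) is the unique positive definite solution of (1.2). \(\square \)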
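Theorem 4.4 is an existence and uniqueness result and does not itself prescribe an algorithm. As an illustration only, the sketch below iterates \(Y_{k+1}=F(Y_{k})\) with \(F(Y)=I+\sum _{i}A_{i}^{*}Y^{p_{i}/s}A_{i}\), starting from \(Y_{0}=I\), for hypothetical real \(2\times 2\) data with \(1<p_{i}<s\), and then recovers \(X_{0}=Y^{1/s}\). Because F is increasing and \(F(I)\ge I\), the iterates increase monotonically; we do not rely on any convergence rate and simply check the residual of (1.2). The \(2\times 2\) helpers are ours.

```python
import math


def matmul(P, Q):
    return [[sum(P[i][k] * Q[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def transpose(P):
    return [[P[j][i] for j in range(2)] for i in range(2)]


def sym_fun(X, f):
    """f(X) for a real symmetric 2x2 matrix X, via the Jacobi rotation that diagonalizes X."""
    a, b, d = X[0][0], X[0][1], X[1][1]
    th = 0.5 * math.atan2(2 * b, a - d)
    c, s = math.cos(th), math.sin(th)
    f1 = f(a * c * c + 2 * b * c * s + d * s * s)   # eigenvalue for eigenvector (c, s)
    f2 = f(a * s * s - 2 * b * c * s + d * c * c)   # eigenvalue for eigenvector (-s, c)
    off = (f1 - f2) * c * s
    return [[f1 * c * c + f2 * s * s, off], [off, f1 * s * s + f2 * c * c]]


def F(Y, As, ps, s):
    """F(Y) = I + sum_i A_i^T Y^{p_i/s} A_i (real A_i, so A_i^* = A_i^T)."""
    Z = [[1.0, 0.0], [0.0, 1.0]]
    for A, p in zip(As, ps):
        T = matmul(transpose(A), matmul(sym_fun(Y, lambda y: y ** (p / s)), A))
        Z = [[Z[i][j] + T[i][j] for j in range(2)] for i in range(2)]
    return Z


# Hypothetical real nonsingular data with 1 < p_i < s.
As = [[[0.5, 0.2], [0.1, 0.4]], [[0.3, 0.0], [0.2, 0.6]]]
ps, s = [1.5, 2.0], 3.0

Y = [[1.0, 0.0], [0.0, 1.0]]
for _ in range(300):          # Y_{k+1} = F(Y_k), starting from Y_0 = I
    Y = F(Y, As, ps, s)
X0 = sym_fun(Y, lambda y: y ** (1 / s))

# Residual of (1.2): X0^s - sum_i A_i^T X0^{p_i} A_i - I
R = sym_fun(X0, lambda x: x ** s)
R = [[R[i][j] - (1.0 if i == j else 0.0) for j in range(2)] for i in range(2)]
for A, p in zip(As, ps):
    T = matmul(transpose(A), matmul(sym_fun(X0, lambda x: x ** p), A))
    R = [[R[i][j] - T[i][j] for j in range(2)] for i in range(2)]
residual = max(abs(r) for row in R for r in row)
print(X0, residual)
```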

Remark 4.1

The method of this section does not apply when \( p_{i} = s\) for some i in (1.2); this case deserves further investigation.

References

Guo, C.H., Lin, W.W.: The matrix equation \(X+A^{T}X^{-1}A=Q\) and its application in nano research. SIAM J. Sci. Comput. 32, 3020–3038 (2010)

Zabczyk, J.: Remarks on the control of discrete time distributed parameter systems. SIAM J. Control 12, 721–735 (1974)

Anderson, W.N., Kleindorfer, G.B., Kleindorfer, M.B., Woodroofe, M.B.: Consistent estimates of the parameters of a linear system. Ann. Math. Stat. 40, 2064–2075 (1969)

Bucy, R.S.: A priori bounds for the Riccati equation. In: Proceedings of the Berkeley Symposium on Mathematical Statistics and Probability, Vol. III: Probability Theory, pp. 645–656. Univ. of California Press, Berkeley (1972)

Ouellette, D.V.: Schur complements and statistics. Linear Algebra Appl. 36, 187–295 (1981)

Anderson, W.N., Morley, T.D., Trapp, G.E.: The cascade limit, the shorted operator and quadratic optimal control. In: Byrnes, C.I., Martin, C.F., Saeks, R.E. (eds.) Linear Circuits, Systems and Signal Processing: Theory and Application, pp. 3–7. North-Holland, New York (1988)

Pusz, W., Woronowicz, S.L.: Functional calculus for sesquilinear forms and purification map. Rep. Math. Phys. 8, 159–170 (1975)

Buzbee, B.L., Golub, G.H., Nielson, C.W.: On direct methods for solving Poisson’s equations. SIAM J. Numer. Anal. 7, 627–656 (1970)

Zhang, G.F., Xie, W.W., Zhao, J.Y.: Positive definite solutions of the nonlinear matrix equation \(X+A^{*}X^{q}A=Q\;(q>0)\). Linear Algebra Appl. 217, 9182–9188 (2011)

Gao, D.J., Zhang, Y.H.: Hermitian positive definite solutions of matrix equation \(X-A^{*}X^{q}A=Q\;(q>0)\). Math. Numer. Sinica 29(1), 73–80 (2007)

Duan, X.F., Liao, A.P., Tang, B.: On the nonlinear matrix equation \(X-\sum \nolimits _{i=1}^{m}A_{i}^{*}X^{\delta _{i}}A_{i}=Q\). Linear Algebra Appl. 429, 110–121 (2008)

Lim, Y.: Solving the nonlinear matrix equation \(X=Q+\Sigma _{i=1}^{m}M_{i}X^{\delta _{i}}M_{i}^{*}\) via a contraction principle. Linear Algebra Appl. 430, 1380–1383 (2009)

Duan, X.F., Wang, Q.W., Li, C.M.: Perturbation analysis for the positive definite solution of the nonlinear matrix equation \(X-\sum \nolimits _{i=1}^{m}A_{i}^{*}X^{\delta _{i}}A_{i}=Q\). J. Appl. Math. Inform. 30, 655–663 (2012)

Li, J.: Perturbation analysis of the nonlinear matrix equation \(X-\Sigma _{i=1}^{m}A_{i}^{*}X^{p_{i}}A_{i}=Q\). Abstr. Appl. Anal. 2013, 979832 (2013)

Li, J., Zhang, Y.H.: Notes on the Hermitian positive definite solutions of a matrix equation. J. Appl. Math. (2014). https://doi.org/10.1155/2014/128249

El-Sayed, S.M., Ran, A.C.M.: On an iteration method for solving a class of nonlinear matrix equations. SIAM J. Matrix Anal. Appl. 23(3), 632–645 (2001)

Ran, A.C.M., Reurings, M.C.B.: On the nonlinear matrix equation \(X+A^{*}\mathcal {F}(X)A=Q\): solutions and perturbation theory. Linear Algebra Appl. 346, 15–26 (2002)

Ran, A.C.M., Reurings, M.C.B.: A fixed point theorem in partially ordered sets and some applications to matrix equations. Proc. Am. Math. Soc. 132(5), 1435–1443 (2004)

Ran, A.C.M., Reurings, M.C.B., Rodman, L.: A perturbation analysis for nonlinear selfadjoint operator equations. SIAM J. Matrix Anal. Appl. 28(1), 89–104 (2006)

Reurings, M.C.B.: Contractive maps on normed linear spaces and their applications to nonlinear matrix equations. Linear Algebra Appl. 418(1), 292–311 (2006)

Reurings, M.C.B.: Symmetric Matrix Equations. PhD thesis, VU University Amsterdam (2003)

Zhou, D.M., Chen, G.L., Wu, G.X., Zhang, X.Y.: On the nonlinear matrix equation \(X^{s}+A^{*}F(X)A=Q\) with \(s\ge 1\). J. Comput. Math. 31(2), 209–220 (2013)

Mousavi, Z., Mirzapour, F., Moslehian, M.S.: Positive definite solutions of certain nonlinear matrix equations. Oper. Matrices 10, 113–126 (2016)

Vaezzadeh, S., Vaezpour, S.M., Saadati, R., Park, C.: The iterative methods for solving nonlinear matrix equation \(X+A^{*}X^{-1}A+B^{*}X^{-1}B=Q\). Adv. Differ. Equ. 2013, 229 (2013)

Sarhan, A.M., El-Shazly, N.M., Shehata, E.M.: On the existence of extremal positive definite solutions of the nonlinear matrix equation \(X^{r}+\sum _{i=1}^{m}A_{i}^{*}X^{-\delta _{i}}A_{i}=I\). Math. Comput. Model. 51, 1107–1117 (2010)

Liu, A.J., Chen, G.L.: On the Hermitian positive definite solutions of nonlinear matrix equation \(X^{s}+\sum _{i=1}^{m}A_{i}^{*}X^{-t_{i}}A_{i}=Q\). Appl. Math. Comput. 243, 950–959 (2014)

Yin, X.Y., Wen, R.P., Fang, L.: On the nonlinear matrix equation \(X+\sum _{i=1}^{m}A_{i}^{*}X^{-q}A_{i}=Q\;(0<q\le 1)\). Bull. Korean Math. Soc. 51(3), 739–763 (2014)

Duan, X.F., Wang, Q.W., Liao, A.P.: On the matrix equation arising in an interpolation problem. Linear Multilinear Algebra 61(9), 1192–1205 (2013)

Yin, X.Y., Fang, L.: Perturbation analysis for the positive definite solution of the nonlinear matrix equation \(X-\Sigma _{i=1}^{m}A_{i}^{*}X^{-1}A_{i}=Q\). J. Appl. Math. Comput. (2013). https://doi.org/10.1007/s12190-013-0659-z

Li, J., Zhang, Y.H.: Sensitivity analysis of the matrix equation from interpolation problems. J. Appl. Math. (2013). https://doi.org/10.1155/2013/518269

Li, J., Zhang, Y.H.: On the existence of positive definite solutions of a nonlinear matrix equation. Taiwan. J. Math. 18(5), 1345–1364 (2014)

Wang, M.H., Wei, M.S., Hu, S.R.: The extremal solution of the matrix equation \(X^{s}+ A^*X^{-q}A=I\). Appl. Math. Comput. 220, 193–199 (2013)

Zhou, D.M., Chen, G.L., Zhang, X.Y.: Some inequalities for the nonlinear matrix equation \(X^{s}+A^{*}X^{-t}A=Q:\) Trace, determinant and eigenvalue. Appl. Math. Comput. 224, 21–28 (2013)

Furuta, T.: Operator inequalities associated with Hölder–McCarthy and Kantorovich inequalities. J. Inequal. Appl. 6, 137–148 (1998)

Bhatia, R.: Matrix Analysis. Springer, New York (1997)

Li, J., Zhang, Y.H.: Perturbation analysis of the matrix equation \(X-A^{*}X^{-p}A=Q\). Linear Algebra Appl. 431(9), 1489–1501 (2009)

Xu, S.F.: Matrix Computation from Control System. Higher Education Press, Beijing (2011)

Zhang, F.Z.: Matrix Theory: Basic Results and Techniques. Springer, Berlin (1999)

Wang, S.G., Wu, M.X., Jia, Z.Z.: Matrix Inequalities. Science Press, Beijing (2006)

Yan, Z.Z., Yu, R.Y., Xiong, Q.X.: Matrix Inequalities. Tongji University Press, Shanghai (2012)

Huang, M.J., Huang, C.Y., Tsai, T.M.: Applications of Hilbert’s projective metric to a class of positive nonlinear operators. Linear Algebra Appl. 413, 202–211 (2006)

Acknowledgements

The authors are grateful to the anonymous referees for their valuable comments and suggestions, which greatly improved the original manuscript of this paper. The work was supported in part by the National Natural Science Foundation of China (11601277).


Additional information

Communicated by V. Ravichandran.


About this article

Cite this article

Li, J., Zhang, Y. The Investigation on Two Kinds of Nonlinear Matrix Equations. Bull. Malays. Math. Sci. Soc. 42, 3323–3341 (2019). https://doi.org/10.1007/s40840-018-0674-1


DOI: https://doi.org/10.1007/s40840-018-0674-1

Keywords

- Nonlinear matrix equation

- Hermitian positive definite solution

- Integral representation

- Kronecker product

- Spectral radius