Abstract

This paper presents statistical methods for analyzing partially accelerated life tests under constant stress. The lifetime of items under use condition is assumed to follow the two-parameter exponentiated Weibull distribution. Based on progressively Type-II censored samples, the classical maximum likelihood method and a fully Bayesian method, the latter implemented through the Lindley (Trabajos de Estadistica 31:223–237, 1980) approximation and the Markov chain Monte Carlo technique, are developed for inference about the model parameters and the acceleration factor. Furthermore, approximate confidence intervals and credible intervals are presented. A Monte Carlo simulation is carried out to study the precision of the different estimators.

1 Introduction

Accelerated life testing (ALT) is a popular experimental strategy for obtaining information on the life distribution of highly reliable products. Test units under normal operating conditions are often extremely reliable, with large mean times to failure. To overcome this problem, ALT experiments are used to obtain reliability information on product components in a short period of time by subjecting them to higher than usual stress (pressure, temperature, voltage, etc.). In ALT, items are tested at stress levels well above those normally applied in practice, with the aim of inducing early failure. The test data obtained at the accelerated conditions are analyzed in terms of a mathematical model and then extrapolated to the normal stress to estimate the life distribution parameters. In some cases, the mathematical model relating the lifetime of the unit to the stress is not known, so the data obtained from ALT cannot be extrapolated to normal stress. In such cases, a partially accelerated life test (PALT) is a better choice. The objective of a PALT is to collect more failure data in a limited time without necessarily applying high stresses to all test units.

In ALT, all units are run only at accelerated conditions, while in PALT, they are run at both normal and accelerated conditions. The stress can be applied in various ways; the commonly used methods are constant-stress and step-stress. In constant-stress PALT, the test units are first divided into two groups. Items of group 1 are allocated to normal condition and items of group 2 are allocated to accelerated condition. Each unit is run at a constant level of stress until it fails or is censored.

Several authors have dealt with problems of constant-stress ALT. Based on progressively Type-II censored samples, [2] considered constant-stress PALT when the lifetime of units under use condition follows the Burr Type-XII distribution. Bayes and maximum likelihood (ML) methods of estimation are applied on constant-stress ALTs to finite mixtures of distributions by [3, 4].

In step-stress PALT, a test unit is first run at use condition and, if it does not fail within a specified time, it is then run at accelerated condition until it fails or the observation is censored; see, for example, [1]. Most of the current literature on the accelerated life testing problem is based on sample theoretic methods, as discussed in [17].

In Bayesian estimation, we consider several types of loss functions. The squared-error, LINEX, and entropy loss functions are widely used in literature. These loss functions were used by several authors, among them [9, 10, 18, 20].

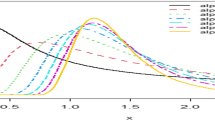

The exponentiated Weibull distribution with two parameters (\(\alpha ,\beta \)) (EW(\(\alpha ,\beta \))) was introduced by [14] as an extension of the Weibull family of distribution by adding an additional shape parameter. Jiang and Murthy [11] obtained the parameter estimation of the model by graphical approaches. New statistical measures on the EW were provided by [15].

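The cumulative distribution function (CDF), probability density function (PDF), and hazard rate function (HRF) of the EW distribution are given, respectively, by

$$\begin{aligned} F_{1}(t)= & {} (1-e^{-t^{\alpha }})^{\beta }, \qquad t>0,(\alpha ,\beta >0), \nonumber \\ f_{1}(t)= & {} \alpha \beta t^{\alpha -1}e^{-t^{\alpha }} (1-e^{-t^{\alpha }})^{\beta -1}, \nonumber \\ h_{1}(t)= & {} \frac{\alpha \beta t^{\alpha -1}e^{-t^{\alpha }} (1-e^{-t^{\alpha }})^{\beta -1}}{1-(1-e^{-t^{\alpha }})^{\beta }}. \end{aligned}$$(1)

Here, and in the rest of the paper, an equation number such as (1) refers to all of the displayed equations in its group.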
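As a quick numerical companion, the use-condition CDF, PDF, and HRF can be written as the \(\lambda =1\) case of the accelerated-condition forms in Eq. (2). The following is a minimal Python sketch; the function names `ew_cdf`, `ew_pdf`, and `ew_hazard` are illustrative and not taken from the paper.

```python
import math


def ew_cdf(t, alpha, beta):
    """CDF of EW(alpha, beta): (1 - exp(-t**alpha))**beta for t > 0."""
    return (1.0 - math.exp(-t ** alpha)) ** beta


def ew_pdf(t, alpha, beta):
    """PDF of EW(alpha, beta), the derivative of ew_cdf with respect to t."""
    base = 1.0 - math.exp(-t ** alpha)
    return (alpha * beta * t ** (alpha - 1.0)
            * math.exp(-t ** alpha) * base ** (beta - 1.0))


def ew_hazard(t, alpha, beta):
    """Hazard rate: PDF divided by the survival function 1 - CDF."""
    return ew_pdf(t, alpha, beta) / (1.0 - ew_cdf(t, alpha, beta))
```

With \(\beta =1\) the functions reduce to the Weibull case, whose hazard is \(\alpha t^{\alpha -1}\); this gives a simple sanity check.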

Bayes estimators for the two-shape parameters, reliability, and hazard rate functions of the EW distribution based on Type-II censored and complete samples were discussed by [16]. Choudhury [7] obtained a simple derivation for the moments of the EW distribution.

Several recent monographs provide more complete reviews of Markov chain Monte Carlo (MCMC) methods. The Metropolis–Hastings (MH) algorithm is one of the most important MCMC methods. It is a general method for constructing a Markov chain whose draws target an arbitrary posterior distribution. The Gibbs sampler is perhaps the best known MCMC sampling algorithm in the Bayesian computational literature. For more details, see [6, 8, 13, 19].

The most common censoring schemes are Type-I and Type-II censoring. The conventional Type-I and Type-II censoring schemes do not allow units to be removed at points other than the terminal point of the experiment. The progressive Type-II censoring scheme offers this flexibility; see [5] for details.

The rest of this article is organized as follows. Model description and assumptions, the ML estimates (MLEs) of the model parameters and acceleration factor, and the asymptotic variance–covariance matrix of the estimators are given in Sect. 2. Section 3 provides the Bayes estimates of the model parameters and acceleration factor using Lindley’s approximation [12] and the MCMC procedure. Simulation studies are given in Sect. 4. Finally, Sect. 5 is devoted to the concluding remarks.

2 Model Description and Maximum Likelihood Estimation

In a constant-stress PALT, the total sample size n of test units is divided into two groups: \(n_{1}\ \)items of group 1 are allocated to use condition and \(n_{2}=n-n_{1}\) items of group 2 are allocated to accelerated condition.

Let \(T_{ji},i=1,\ldots ,n_{j},j=1,2\), denote the lifetimes from which two progressively Type-II censored samples from the EW distribution are observed, where \(T_{1i}, i=1,\ldots ,n_{1}\), are the lifetimes of the items allocated to use condition and \(T_{2i},i=1,\ldots ,n_{2}\), those of the items allocated to accelerated condition. At the first failure time \(t_{j1}\), \(R_{j1}\) units are randomly removed from the remaining \(n_{j}-1\) surviving units. At the second failure time \(t_{j2}\), \(R_{j2}\) units are randomly removed from the remaining \(n_{j}-R_{j1}-2\) units. The test continues until the \(m_{j}\)th failure, at which time all remaining \(R_{jm_{j}}=n_{j}-m_{j}-R_{j1}-R_{j2}-\cdots -R_{j(m_{j}-1)}\) items are withdrawn. The \(R_{ji}\) are fixed prior to the study, and \(m_{j}\le n_{j}\).
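The bookkeeping of the removal scheme can be checked with a few lines of Python. This is only an illustrative sketch; the helper names `final_removal` and `units_at_risk` are hypothetical and do not appear in the paper.

```python
def final_removal(n, m, removals):
    """Return R_m = n - m - (R_1 + ... + R_{m-1}) for one test group."""
    if len(removals) != m - 1:
        raise ValueError("expected m - 1 removal counts before the last failure")
    last = n - m - sum(removals)
    if last < 0:
        raise ValueError("scheme removes more units than are available")
    return last


def units_at_risk(n, scheme):
    """Number of units on test just before each of the m failures."""
    at_risk, counts = n, []
    for r in scheme:
        counts.append(at_risk)
        at_risk -= 1 + r  # one failure plus r withdrawn survivors
    return counts
```

For example, with \(n_{j}=10\), \(m_{j}=4\) and \((R_{j1},R_{j2},R_{j3})=(2,0,1)\), the last removal is \(R_{j4}=3\) and the numbers of units on test before the four failures are 10, 7, 6, and 4.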

2.1 Assumptions

(1) The lifetime of a test unit follows the EW(\(\alpha ,\beta \)) distribution at use condition.

(2) At accelerated condition, the hazard rate of a test unit is increased to \(\lambda h_{1}(t),\) where \(\lambda \) is an acceleration factor. Therefore, the CDF, PDF, and HRF are given, respectively, by

$$\begin{aligned} F_{2}(t)= & {} 1-[1-(1-e^{-t^{\alpha }})^{\beta }]^{\lambda }, \qquad t>0,(\alpha ,\beta >0, \lambda >1), \nonumber \\ f_{2}(t)= & {} \alpha \beta \lambda t^{\alpha -1}e^{-t^{\alpha }} (1-e^{-t^{\alpha }})^{\beta -1}[1-(1-e^{-t^{\alpha }})^{\beta }]^{\lambda -1}, \nonumber \\ h_{2}(t)= & {} \frac{\alpha \beta \lambda t^{\alpha -1}e^{-t^{\alpha }} (1-e^{-t^{\alpha }})^{\beta -1}}{1-(1-e^{-t^{\alpha }})^{\beta }}. \end{aligned}$$(2)

(3) The lifetimes of test units are independent and identically distributed.
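Assumption (2) says that acceleration multiplies the hazard rate by \(\lambda \), which is equivalent to raising the use-condition survival function to the power \(\lambda \). The Python sketch below codes Eq. (2) directly so that this relationship can be checked numerically; the function names are illustrative only.

```python
import math


def base_survival(t, alpha, beta):
    """Use-condition survival function 1 - (1 - exp(-t**alpha))**beta."""
    return 1.0 - (1.0 - math.exp(-t ** alpha)) ** beta


def accel_cdf(t, alpha, beta, lam):
    """F_2(t) = 1 - [use-condition survival]**lam."""
    return 1.0 - base_survival(t, alpha, beta) ** lam


def accel_pdf(t, alpha, beta, lam):
    """f_2(t) = lam * f_1(t) * [use-condition survival]**(lam - 1)."""
    base = 1.0 - math.exp(-t ** alpha)
    f1 = (alpha * beta * t ** (alpha - 1.0)
          * math.exp(-t ** alpha) * base ** (beta - 1.0))
    return lam * f1 * base_survival(t, alpha, beta) ** (lam - 1.0)


def accel_hazard(t, alpha, beta, lam):
    """h_2(t) = f_2(t) / (1 - F_2(t)), which equals lam * h_1(t)."""
    return accel_pdf(t, alpha, beta, lam) / (1.0 - accel_cdf(t, alpha, beta, lam))
```

Setting `lam = 1.0` recovers the use-condition functions, so the ratio `accel_hazard(t, a, b, lam) / accel_hazard(t, a, b, 1.0)` should equal `lam` up to rounding.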

2.2 Maximum Likelihood Estimation

Let \(t_{ji,m_{j},n_{j}}\ \)be two progressively Type-II censored samples from EW \((\alpha ,\beta )\) distribution with censoring schemes \( R_{j}=(R_{j1},\ldots ,R_{jm_{j}}),~j=1,2\ .\ \)The likelihood function is given by

where \( A_{j}=n_{j}(n_{j}-1-R_{j1})(n_{j}-2-R_{j1}-R_{j2})\ldots (n_{j}-(m_{j}-1)-R_{j1}-\cdots -R_{j(m_{j}-1)})\ \)and \(r_{ji}=(R_{ji}+1).\)
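For readers who want to evaluate the likelihood numerically, the sketch below uses the standard progressive Type-II form \(\prod _{j}A_{j}\prod _{i}f_{j}(t_{ji})[1-F_{j}(t_{ji})]^{R_{ji}}\) with \(F_{2}\) and \(f_{2}\) from Eq. (2). It is an assumption that this matches the paper's displayed likelihood term by term; the function names and the `(n, times, removals)` tuple layout are hypothetical.

```python
import math


def log_A(n, removals):
    """ln A_j, where A_j = n (n-1-R_1) (n-2-R_1-R_2) ... has m factors."""
    at_risk, total = n, 0.0
    for r in removals:
        total += math.log(at_risk)
        at_risk -= 1 + r  # one failure plus r withdrawn survivors
    return total


def log_f1_S1(t, alpha, beta):
    """Log PDF and log survival function of EW(alpha, beta) at use condition."""
    x = t ** alpha
    base = 1.0 - math.exp(-x)
    log_f1 = (math.log(alpha * beta) + (alpha - 1.0) * math.log(t)
              - x + (beta - 1.0) * math.log(base))
    log_S1 = math.log1p(-base ** beta)
    return log_f1, log_S1


def log_likelihood(alpha, beta, lam, group1, group2):
    """Log-likelihood; each group is a tuple (n, times, removals)."""
    total = 0.0
    for (n, times, removals), k in ((group1, 1.0), (group2, lam)):
        total += log_A(n, removals)
        for t, r in zip(times, removals):
            log_f1, log_S1 = log_f1_S1(t, alpha, beta)
            # f_2 = lam * f_1 * S_1**(lam-1) and S_2 = S_1**lam; k = 1 gives group 1
            total += math.log(k) + log_f1 + (k - 1.0) * log_S1 + r * k * log_S1
    return total
```

Collecting the powers of the survival term in group 2 gives the exponent \(\lambda r_{2i}-1\), which is where \(r_{ji}=R_{ji}+1\) enters.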

The log-likelihood function may be written as

where, for simplicity, we use

For determining the MLEs of (\(\alpha \),\(\beta \),\(\lambda \)), we first have the partial derivatives of (4) with respect to \(\alpha ,\beta , ~\)and \( \lambda \) as

where

with

The MLEs of \(\alpha \), \(\beta \), and \(\lambda \) are obtained by equating the partial derivatives (6) to zero. Since the MLEs of \(\alpha \), \(\beta \), and \( \lambda \) cannot be expressed explicitly, we apply the Newton–Raphson method to obtain \(\hat{\alpha }\), \(\hat{\beta }\), and \(\hat{\lambda }\).
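To illustrate the Newton–Raphson iteration in the simplest setting, the sketch below updates \(\lambda \) alone while holding \(\alpha \) and \(\beta \) fixed; this is a deliberate simplification, not the joint three-parameter iteration used in the paper. Under the standard progressive Type-II likelihood built from Eq. (2), the score in \(\lambda \) is \(m_{2}/\lambda +\sum _{i}r_{2i}\ln [1-(1-e^{-t_{2i}^{\alpha }})^{\beta }]\), which has the closed-form root used here as a check. The function names are hypothetical.

```python
import math


def log_surv(t, alpha, beta):
    """ln[1 - (1 - exp(-t**alpha))**beta], the use-condition log survival."""
    return math.log1p(-(1.0 - math.exp(-t ** alpha)) ** beta)


def newton_lambda(times2, removals2, alpha, beta, lam0=1.0, tol=1e-10, max_iter=100):
    """Newton-Raphson for lambda with alpha and beta held fixed."""
    m2 = len(times2)
    s = sum((r + 1) * log_surv(t, alpha, beta) for t, r in zip(times2, removals2))
    lam = lam0
    for _ in range(max_iter):
        score = m2 / lam + s          # first derivative in lambda
        hessian = -m2 / lam ** 2      # second derivative in lambda
        new = lam - score / hessian
        if new <= 0.0:                # keep the iterate in the parameter space
            new = lam / 2.0
        if abs(new - lam) < tol:
            return new
        lam = new
    return lam


def closed_form_lambda(times2, removals2, alpha, beta):
    """Root of the lambda score for fixed alpha and beta."""
    s = sum((r + 1) * log_surv(t, alpha, beta) for t, r in zip(times2, removals2))
    return -len(times2) / s
```

In the joint problem the same update is applied to the vector \((\alpha ,\beta ,\lambda )\), with the score vector and the matrix of second derivatives in place of the scalar quantities.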

2.3 Observed Fisher’s Information Matrix

The observed Fisher’s information matrix is given by

where the second derivatives of \(L(\alpha ,\beta ,\lambda |\mathbf {t})\) are given in Appendix A and the caret indicates that the derivative is calculated at \((\hat{\alpha }, \hat{\beta },\hat{\lambda })\).

Consequently, the asymptotic variance–covariance matrix for the MLEs of the parameters \(\alpha , \beta \), and \(\lambda \) is defined by inverting the observed Fisher’s information matrix defined above.

Based on the asymptotic normality of the MLEs, we compute the approximate confidence intervals for \(\alpha ,\beta \), and \(\lambda \), respectively. The \( 100(1-\gamma )\%\) confidence intervals for \(\alpha ,\beta \), and \(\lambda \) are given by

where \(z_{1-\gamma /2}\) is the upper \((\gamma /2)\) percentile of the standard normal distribution.
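A normal-approximation interval of this kind is straightforward to compute once an estimate and its asymptotic variance (the corresponding diagonal entry of the inverted observed information matrix) are available. The sketch below is illustrative; `wald_interval` is a hypothetical helper name, and the variance is supplied by the caller.

```python
import math
from statistics import NormalDist


def wald_interval(estimate, variance, gamma=0.05):
    """Approximate 100(1 - gamma)% interval: estimate -/+ z * sqrt(variance)."""
    z = NormalDist().inv_cdf(1.0 - gamma / 2.0)
    half_width = z * math.sqrt(variance)
    return estimate - half_width, estimate + half_width
```

For instance, an estimate of 2.0 with variance 0.25 gives a 95 % interval of roughly (1.02, 2.98).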

3 Bayes Estimation

We suggest the joint prior density of \(\alpha ,\beta \), and \(\lambda \) as follows:

where \(\alpha \) and \(\beta \) are dependent with bivariate prior density suggested by [16], where \(\beta |\alpha \sim \hbox {Gamma}(\mu ,\alpha )\) and \(\alpha \sim \hbox {Exp}(\nu )\), and \(\lambda \) is independent of them with non-informative prior \(\pi _{3}(\lambda )\propto \frac{1}{ \lambda },~\lambda >0\). So, the joint prior of \(\alpha ,\beta \), and \(\lambda \) is written as

The joint posterior density function of \(\alpha ,\beta \), and \(\lambda \) can be written from (3) and (9) as

Based on squared-error loss function, the Bayes estimate of a function of the model parameters and acceleration factor is its posterior expectation. If \(U=U(\mathbf {\theta })\equiv U(\alpha ,\beta ,\lambda )\), then

Unfortunately, this integral cannot be computed explicitly in most cases. Therefore, we adopt two different procedures to approximate it: Lindley’s approximation and the MCMC procedure.

3.1 Lindley’s Approximation

Based on Lindley’s approximation, the approximate Bayes estimates of \(\alpha ,\beta \), and \(\lambda \) for the squared-error loss function are given, respectively, by

For the detailed derivations, see Appendix B.

3.2 Markov Chain Monte Carlo

To generate samples from the posterior density function, we consider a hybrid algorithm that combines the MH algorithm and the Gibbs sampler. The conditional posterior distributions of the parameters \(\alpha ,\beta \), and \( \lambda \) can be written, respectively, as

where \(\pi ^{*}(\lambda |\alpha ,\beta ,\mathbf {t})\) is a Gamma(\( m_{2},-\sum _{i=1}^{m_{2}}r_{2i}\ln (1-(1-e^{-t_{2i}^{\alpha }})^{\beta })\)) distribution, so we use the Gibbs sampler to generate random numbers of \( \lambda \). The conditional posterior distributions of \(\alpha \) and \(\beta \), however, cannot be reduced analytically to well-known distributions, so it is not possible to sample from them directly by standard methods. We therefore use the MH method with a normal proposal distribution to generate random numbers from these distributions.

The following algorithm can be used to generate samples of \(\alpha \), \(\beta \), and \(\lambda \).

Step 1. Choose the MLEs \(\hat{\alpha }\), \(\hat{\beta }\), and \(\hat{\lambda }\) as the starting values (\(\alpha ^{(0)},\beta ^{(0)},\lambda ^{(0)}\)) of \(\alpha \), \(\beta \), and \(\lambda \).

Step 2. Set \(i=1\).

Step 3. Generate \(\lambda ^{(i)}\) from \(\pi ^{*}(\lambda |\alpha ^{(i-1)},\beta ^{(i-1)},\mathbf {t})\).

Step 4. Generate \(\alpha ^{(i)}\) by the MH method with proposal distribution \(N(\alpha ^{(i-1)},\sigma _{11}^{2})\).

Step 5. Generate \(\beta ^{(i)}\) by the MH method with proposal distribution \(N(\beta ^{(i-1)},\sigma _{22}^{2})\).

Step 6. Set \(i=i+1\).

Step 7. Repeat steps 3–6 N times.

Step 8. The approximate posterior mean of \(U(\alpha ,\beta ,\lambda )\) is given by

$$\begin{aligned} E(U|\mathbf {t})=\frac{1}{N-M}\sum _{i=M+1}^{N}U(\alpha ^{(i)},\beta ^{(i)},\lambda ^{(i)}), \end{aligned}$$

where M is the burn-in period.

Step 9. The ordered values of \(U(\alpha ^{(i)},\beta ^{(i)},\lambda ^{(i)})\), \(i=M+1,\ldots ,N\), give the approximate \(100(1-\gamma )~\%\) credible interval \((U_{(N-M)\gamma /2},U_{(N-M)(1-\gamma /2)})\) for \(U(\alpha ,\beta ,\lambda )\).
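The steps above can be sketched in Python as follows. Several points are assumptions rather than details confirmed by the text: \(\beta |\alpha \) is taken as Gamma with shape \(\mu \) and rate \(\alpha \), \(\alpha \) as exponential with rate \(\nu \), the likelihood is the standard progressive Type-II form built from Eq. (2), and the MH steps use the usual accept/reject comparison of log posteriors, which the text names but does not spell out. All function names, the `data` tuple layout, and the proposal standard deviations are hypothetical.

```python
import math
import random


def log_surv(t, a, b):
    """ln[1 - (1 - exp(-t**a))**b]; returns -inf if the survival underflows."""
    g = (1.0 - math.exp(-t ** a)) ** b
    return math.log1p(-g) if g < 1.0 else -math.inf


def log_post(a, b, lam, data):
    """Log posterior up to a constant; data = (t1, R1, t2, R2, mu, nu)."""
    if a <= 0.0 or b <= 0.0 or lam <= 0.0:
        return -math.inf
    t1, R1, t2, R2, mu, nu = data
    # Assumed prior: beta|alpha ~ Gamma(shape mu, rate alpha), alpha ~ Exp(rate nu), 1/lam
    total = mu * math.log(a) + (mu - 1.0) * math.log(b) - a * b - nu * a - math.log(lam)
    for times, removals, k in ((t1, R1, 1.0), (t2, R2, lam)):
        for t, r in zip(times, removals):
            x = t ** a
            base = 1.0 - math.exp(-x)
            ls = log_surv(t, a, b)
            if base <= 0.0 or ls == -math.inf:
                return -math.inf
            # ln f_j + R ln S_j, with f_2 = lam f_1 S_1**(lam-1) and S_2 = S_1**lam
            total += (math.log(k * a * b) + (a - 1.0) * math.log(t) - x
                      + (b - 1.0) * math.log(base) + (k * (r + 1) - 1.0) * ls)
    return total


def hybrid_sampler(data, start, n_iter=5500, burn_in=500, sd=(0.1, 0.1), seed=0):
    """Gibbs step for lambda, random-walk MH steps for alpha and beta."""
    rng = random.Random(seed)
    _, _, t2, R2, _, _ = data
    a, b, lam = start
    draws = []
    for _ in range(n_iter):
        # Gibbs: lambda | alpha, beta ~ Gamma(shape m2, rate -sum r_2i ln S_1(t_2i))
        rate = -sum((r + 1) * log_surv(t, a, b) for t, r in zip(t2, R2))
        lam = rng.gammavariate(len(t2), 1.0 / rate)  # second argument is a scale
        cand = rng.gauss(a, sd[0])
        # -Exp(1) has the distribution of ln(U) for U ~ Uniform(0, 1)
        if -rng.expovariate(1.0) < log_post(cand, b, lam, data) - log_post(a, b, lam, data):
            a = cand
        cand = rng.gauss(b, sd[1])
        if -rng.expovariate(1.0) < log_post(a, cand, lam, data) - log_post(a, b, lam, data):
            b = cand
        draws.append((a, b, lam))
    return draws[burn_in:]


def summarize(draws, index, gamma=0.05):
    """Posterior mean and percentile-based credible interval for one parameter."""
    vals = sorted(d[index] for d in draws)
    k = len(vals)
    lo = vals[int(k * gamma / 2.0)]
    hi = vals[min(k - 1, int(k * (1.0 - gamma / 2.0)))]
    return sum(vals) / k, (lo, hi)
```

A call such as `summarize(hybrid_sampler(data, start), 2)` then returns the approximate posterior mean and 95 % credible interval for \(\lambda \). In practice the proposal standard deviations would need tuning to obtain a reasonable acceptance rate.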

4 Simulation Study

A Monte Carlo simulation study was conducted to assess the performance of the estimation methods (ML and the approximate Bayes estimation). The simulation study is carried out according to the following algorithm.

Step 1. Choose the values \(n_{1},n_{2},m_{1},m_{2},c,\lambda \) and the censoring scheme \(R_{ji},i=1,\ldots ,m_{j},~j=1,2.\)

Step 2. For given values of the prior parameters \(\mu \) and \(\nu \), generate \(\alpha \) and \(\beta \) from Exp(\(\nu \)) and Gamma(\(\mu ,\alpha \)), respectively.

Step 3. Generate two independent random samples \(V_{j1},\ldots ,V_{jm_{j}},~j=1,2,\) of sizes \(m_{1}\) and \(m_{2}\) from the Uniform(0, 1) distribution.

Step 4. Set

$$\begin{aligned} U_{ji}=V_{ji}^{1/(i+\sum _{q=m_{j}-i+1}^{m_{j}}R_{jq})},\ \ \ j=1,2,~i=1,2,\ldots ,m_{j}. \end{aligned}$$

Step 5. Then \(W_{j1},W_{j2},\ldots ,W_{jm_{j}},~j=1,2,\) are two progressive Type-II censored samples of sizes \(m_{j},j=1,2,\) from U(0, 1), where

$$\begin{aligned} W_{ji}=1-\prod \limits _{q=m_{j}-i+1}^{m_{j}}U_{jq},~i=1,\ldots ,m_{j}. \end{aligned}$$

Step 6. Generate two progressively censored samples \(t_{j1},t_{j2},\ldots ,t_{jm_{j}},~j=1,2,\) from the CDFs \(F_{1}(t)\) and \(F_{2}(t)\) by solving \(F_{j}(t_{ji})=W_{ji}\):

$$\begin{aligned} t_{1i}= & {} \left( -\ln \left( 1-W_{1i}^{\frac{1}{\beta }}\right) \right) ^{\frac{1}{\alpha }},~i=1,\ldots ,m_{1}, \\ t_{2i}= & {} \left( -\ln \left( 1-\left( 1-(1-W_{2i})^{\frac{1}{\lambda }}\right) ^{\frac{1}{\beta }}\right) \right) ^{\frac{1}{\alpha }},~i=1,\ldots ,m_{2}. \end{aligned}$$

Step 7. For each sample, the MLEs of the acceleration factor and the parameters of the EW distribution, (\(\lambda ,\alpha ,\beta \)), are obtained by solving the nonlinear Eq. (6) using the Newton–Raphson method.

Step 8. The approximate confidence intervals are constructed from the asymptotic variance–covariance matrix of the estimators.

Step 9. The Bayes estimates of \((\lambda ,\alpha ,\beta \)) are computed based on the squared-error loss function.

Step 10. Using the MH and Gibbs sampling algorithms, generate a chain of \(N=5500\) samples, of which the first \(M=500\) are discarded as burn-in.

Step 11. The squared deviations \((\theta ^{*}-\theta )^{2}\) are computed, where \(\theta ^{*}\) stands for an estimate (ML, Bayes) of the parameter \(\theta .\)

Step 12. Steps 2–11 are repeated 1000 times for different sample sizes and different censoring schemes, and the mean square errors (MSEs) of all the estimates are computed.
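Steps 3–6 of the simulation can be sketched in Python as follows. The uniform transformation follows Steps 4 and 5 as stated; the lifetimes are obtained here by solving \(F_{1}(t)=W\) and \(F_{2}(t)=W\) directly for \(t\). The function names are illustrative and not taken from the paper.

```python
import math
import random


def progressive_uniform(removals, rng):
    """Progressively Type-II censored sample of size m from Uniform(0, 1)."""
    m = len(removals)
    v = [1.0 - rng.random() for _ in range(m)]  # values in (0, 1]
    # U_i = V_i ** (1 / (i + R_{m-i+1} + ... + R_m)), with 1-based i
    u = [v[i - 1] ** (1.0 / (i + sum(removals[m - i:]))) for i in range(1, m + 1)]
    w, prod = [], 1.0
    for i in range(1, m + 1):
        prod *= u[m - i]        # running product U_m * U_{m-1} * ... * U_{m-i+1}
        w.append(1.0 - prod)
    return w


def ew_quantile(w, alpha, beta):
    """Solve (1 - exp(-t**alpha))**beta = w for t (use condition)."""
    return (-math.log1p(-w ** (1.0 / beta))) ** (1.0 / alpha)


def accel_quantile(w, alpha, beta, lam):
    """Solve 1 - [1 - (1 - exp(-t**alpha))**beta]**lam = w for t."""
    g = (1.0 - (1.0 - w) ** (1.0 / lam)) ** (1.0 / beta)
    return (-math.log1p(-g)) ** (1.0 / alpha)


def simulate_group(removals, alpha, beta, lam, rng):
    """One progressively censored sample; lam = 1.0 gives the use condition."""
    return [accel_quantile(w, alpha, beta, lam)
            for w in progressive_uniform(removals, rng)]
```

Because the \(W_{ji}\) are increasing in \(i\) and both quantile functions are monotone, the generated failure times come out already ordered.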

Tables 2 and 3 present the MSEs of the ML and Bayes estimates of the population parameters \(\alpha ,\beta \) and the acceleration factor \(\lambda \) in two different cases, while Tables 4 and 5 present the \(95~\%\) confidence intervals of the parameters \(\alpha ,\beta \), and \(\lambda \).

We consider several progressive Type-II censoring schemes on data as in Table 1 with notation that \((2*0,1)\) means (0, 0, 1).
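The shorthand for censoring schemes can be expanded mechanically. The small Python helper below is an illustrative sketch (the name `expand_scheme` is hypothetical) that reads `k*v` as the value `v` repeated `k` times, in line with the example \((2*0,1)=(0,0,1)\).

```python
def expand_scheme(text):
    """Expand shorthand such as "(2*0,1)" into the full tuple (0, 0, 1)."""
    items = []
    for part in text.strip().strip("()").split(","):
        part = part.strip()
        if "*" in part:
            count, value = part.split("*")
            items.extend([int(value)] * int(count))  # value repeated count times
        else:
            items.append(int(part))
    return tuple(items)
```

The length of the expanded tuple is the number of observed failures \(m_{j}\), and its sum plus \(m_{j}\) should equal \(n_{j}\).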

5 Conclusion

In this article, we have considered the constant-stress PALT when the observed data come from the EW distribution under progressive Type-II censoring. We have obtained the MSEs of the ML and Bayes estimates, together with confidence intervals for the parameters. For the Bayesian analysis, we use Lindley’s approximation and the MCMC algorithm. For different censoring schemes, the MSEs of the ML and Bayes estimates and the 95 % confidence intervals are listed in Tables 2, 3, 4, and 5.

It may be noticed, from Tables 2 and 3 that

1. The MSEs of the ML and Bayes estimates and the lengths of the confidence intervals decrease as the sample size increases.

2. The Bayes estimates have smaller MSEs than the corresponding ML estimates in all the considered cases.

3. For the Bayesian estimates, the MSEs of the estimates calculated by Lindley’s approximation are quite close to the corresponding MSEs of the estimates calculated by MCMC.

4. The credible intervals are shorter than the corresponding approximate confidence intervals.

References

Abd-Elfattah, A.M., Hassan, A.S., Nassr, S.G.: Estimation in step-stress partially accelerated life tests for the Burr Type-XII distribution using Type-I censoring. Stat. Methodol. 5, 502–514 (2008)

Abdel-Hamid, A.H.: Constant-partially accelerated life tests for Burr Type-XII distribution with progressive Type-II censoring. Comput. Stat. Data Anal. 53, 2511–2523 (2009)

AL-Hussaini, E.K., Abdel-Hamid, A.H.: Bayesian estimation of the parameters, reliability and hazard rate functions of mixtures under accelerated life tests. Commun. Stat. Simul. Comput. 33(4), 963–982 (2004)

AL-Hussaini, E.K., Abdel-Hamid, A.H.: Accelerated life tests under finite mixture models. J. Stat. Comput. Simul. 76(8), 673–690 (2006)

Balakrishnan, N., Aggarwala, R.: Progressive Censoring: Theory, Methods, and Applications. Birkhäuser, Boston (2000)

Chib, S., Greenberg, E.: Understanding the Metropolis–Hastings algorithm. Am. Stat. 49, 327–335 (1995)

Choudhury, A.: A simple derivation of moments of the exponentiated Weibull distribution. Metrika 62, 17–22 (2005)

Glasserman, P.: Monte Carlo Methods in Financial Engineering. Springer, New York (2003)

Guure, C.B., Ibrahim, N.A., Adam, M.B., Bosomprah, S., Mohammed, A.: Bayesian parameter and reliability estimate of Weibull failure time distribution. Bull. Malays. Math. Sci. Soc. (2) 37(3), 611–632 (2014)

Jaheen, Z.F.: Estimation based on generalized order statistics from the Burr model. Commun. Stat. Theory Methods 34, 785–794 (2005)

Jiang, R., Murthy, D.N.P.: The exponentiated Weibull family: a graphical approach. IEEE Trans. Reliab. 48(1), 68–72 (1999)

Lindley, D.V.: Approximate Bayesian methods. Trabajos de Estadistica 31, 223–237 (1980)

Liu, J.: Monte Carlo Strategies in Scientific Computing. Springer, New York (2001)

Mudholkar, G.S., Srivastava, D.K.: Exponentiated Weibull family for analyzing bathtub failure-rate data. IEEE Trans. Reliab. 42, 299–302 (1993)

Nassar, M.M., Eissa, F.H.: On the exponentiated Weibull distribution. Commun. Stat. Theory Methods 32, 1317–1336 (2003)

Nassar, M.M., Eissa, F.H.: Bayesian estimation for the exponentiated Weibull model. Commun. Stat. Theory Methods 33, 2343–2362 (2004)

Nelson, W.: Accelerated Testing: Statistical Models, Test Plans and Data Analysis. Wiley, New York (1990)

Pandey, B.N.: Estimator of the scale parameter of the exponential distribution using LINEX loss function. Commun. Stat. Theory Methods 26(9), 2191–2202 (1997)

Robert, C., Casella, G.: Monte Carlo Statistical Methods. Springer, New York (1999)

Soliman, A.A.: LINEX and quadratic approximate Bayes estimators applied to the Pareto model. Commun. Stat. Simul. Comput. 30(1), 63–77 (2001)

Acknowledgments

The authors thank the referees for their helpful remarks and suggestions that improved the original manuscript.

Additional information

Communicated by Anton Abdulbasah Kamil.

Appendices

Appendix A

The second and mixed partial derivatives of the log-likelihood with respect to \(\alpha \), \(\beta \), and \(\lambda \) are obtained from (6) as follows:

where

and \(\omega _{1}(\alpha ;t_{ji}),\omega _{3}(\alpha ;t_{ji}),\omega _{4}(\alpha ;t_{ji}),\omega _{5}(\alpha ,\beta ;t_{ji}),\frac{\partial \omega _{1}(\alpha ;t_{ji})}{\partial \alpha },\frac{\partial \omega _{2}(\alpha ,\beta ;t_{ji})}{\partial \alpha }\) and \(\frac{\partial \omega _{2}(\alpha ,\beta ;t_{ji})}{\partial \beta }~\)are as given in (5), (7), and (8).

Appendix B

In a three-parameter case \(U=U(\alpha ,\beta ,\lambda )\), Lindley’s approximation form reduces to

where \(U_{i}=\frac{\partial U}{\partial \xi _{i}}\) with \((\xi _{1},\xi _{2},\xi _{3})=(\alpha ,\beta ,\lambda )\), \(\sigma _{ij}\) is the \((i,j)\)th element of the variance–covariance matrix \((-L_{ij})^{-1}\), \( i,j=1,2,3\), and

To apply Lindley’s approximation form (B.1), we first observe

therefore,

and

where

Furthermore,

About this article

Cite this article

Ahmad, A.EB.A., Soliman, A.A. & Yousef, M.M. Bayesian Estimation of Exponentiated Weibull Distribution Under Partially Acceleration Life Tests. Bull. Malays. Math. Sci. Soc. 39, 227–244 (2016). https://doi.org/10.1007/s40840-015-0170-9

DOI: https://doi.org/10.1007/s40840-015-0170-9