Abstract

This paper presents a reliable approach for solving linear systems of ordinary and fractional differential equations. First, the fractional differential equations (FDEs) or ordinary differential equations (ODEs) of a system, together with their initial conditions, are transformed to Volterra integral equations. Then a Taylor expansion of the unknown function and an integration method are employed to reduce the resulting integral equations to a new system of linear equations for the unknown and its derivatives. The fractional derivatives are considered in the Riemann–Liouville sense. Some numerical illustrations are given to demonstrate the effectiveness of the proposed method.

1 Introduction

Fractional differential equations have gained considerable importance due to their varied applications. Many problems in Physics, Chemistry, Biology, Applied Sciences and Engineering are modelled mathematically by systems of ordinary and fractional differential equations. For example, series circuits and mechanical systems with several springs attached in series lead to systems of differential equations, while the motion of an elastic column fixed at one end and loaded at the other can be formulated in terms of a system of fractional differential equations [1, 2, 4, 8, 10, 11, 16–22, 24–26, 29]. Most realistic systems of fractional differential equations do not have exact analytic solutions, so approximation and numerical techniques must be used. Recently, Atanackovic and Stankovic [1] introduced a system of fractional differential equations into the analysis of the lateral motion of an elastic column fixed at one end and loaded at the other. Daftardar-Gejji and Babakhani [5] studied systems of linear fractional differential equations with constant coefficients using methods of linear algebra and proved existence and uniqueness theorems for the initial value problem. Furthermore, a large body of literature has developed concerning the application of fractional systems of differential equations in nonlinear dynamics [7, 9, 13, 14].

Xian-Fang Li [12] has proposed an approximate method for solving linear ordinary differential equations, which can also be applied to linear fractional differential equations with Riemann–Liouville fractional derivatives. In this paper, based on the method in [12], we propose a novel approach for solving systems of ordinary and fractional differential equations. In this method, the FDEs or ODEs of a system are transformed to Volterra integral equations, and then a Taylor expansion of the unknown function and an integration method are employed to reduce the resulting integral equations to a new system of linear equations for the unknown and its derivatives. The accuracy of the approximate solutions depends on the order of the Taylor expansion: high accuracy is not expected for small orders, whereas a larger order is expected to give more accurate results.

This paper is arranged as follows. In Sect. 2, we recall some necessary definitions and mathematical preliminaries of the fractional calculus theory used throughout the paper. This is particularly important for fractional derivatives because several definitions are available and they differ in fundamental ways. Section 3 deals with the analysis of systems of linear ordinary differential equations. In Sect. 4, we analyse systems of linear fractional differential equations. In Sect. 5, we discuss the convergence of the method. In Sect. 6, we investigate several numerical examples, which demonstrate the effectiveness of our new approach. In Sect. 7, we summarize our findings.

2 Preliminaries and Basic Definitions

We give some basic definitions and properties of the fractional calculus theory, which are used further in this paper.

Definition 2.1

A real function \(f(x),\ \ x>0\), is said to be in the space \(C_{\mu },\ \ \mu \in {R}\) if there exists a real number \(p(>\mu )\), such that \(f(x)=x^{p}f_{1}(x)\), where \(f_{1}(x)\in {C[0,\infty )}\), and it is said to be in the space \(C_{\mu }^{m}\) iff \(f^{(m)}\in {C_{\mu }}, \ \ m\in {N}\).

Definition 2.2

The Riemann–Liouville fractional integral operator of order \(\alpha \ge 0\), of a function \(f\in {C_{\mu }}, \ \ \mu \ge -1\), is defined as
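For reference, the definition in its standard form is sketched below. The operator symbol \(J^{\alpha }\) follows the text; the integration variable \(t\) is a notational assumption.

```latex
J^{\alpha} f(x) = \frac{1}{\Gamma(\alpha)} \int_{0}^{x} (x-t)^{\alpha-1} f(t)\, dt,
\qquad \alpha > 0,\ x > 0,
\qquad
J^{0} f(x) = f(x).
```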

Properties of the operator \(J^\alpha \) can be found in [15, 22, 23]; we mention only the following:

For \(f\in {C_{\mu }}, \ \ \mu \ge -1\), \(\alpha \), \(\beta \ge 0\) and \(\gamma >-1\):
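One standard property of this kind is the power rule \(J^{\alpha }x^{\gamma }=\frac{\Gamma (\gamma +1)}{\Gamma (\alpha +\gamma +1)}x^{\alpha +\gamma }\), which can be checked numerically. The following is a minimal Python sketch and not part of the original computations. The function name `rl_integral`, the substitution \(u=(x-t)^{\alpha }\) used to remove the endpoint singularity, and the midpoint rule are illustrative assumptions.

```python
import math

def rl_integral(f, x, alpha, n=2000):
    """Approximate the Riemann-Liouville integral J^alpha f(x) for alpha > 0.

    The substitution u = (x - t)**alpha turns the weakly singular integral
    into (1/alpha) * integral_0^{x**alpha} f(x - u**(1/alpha)) du,
    which is evaluated here with the composite midpoint rule.
    """
    upper = x ** alpha
    h = upper / n
    total = 0.0
    for i in range(n):
        u = (i + 0.5) * h                  # midpoint of the i-th subinterval
        total += f(x - u ** (1.0 / alpha))
    return total * h / (alpha * math.gamma(alpha))

# Compare the numerical value with the power rule for alpha = 1/2, gamma = 1.
alpha, gamma_, x = 0.5, 1.0, 2.0
numeric = rl_integral(lambda t: t ** gamma_, x, alpha)
exact = (math.gamma(gamma_ + 1) / math.gamma(alpha + gamma_ + 1)
         * x ** (alpha + gamma_))
print(numeric, exact)
```

The two printed values should agree to several digits; for \(\alpha =1\) the routine reduces to an ordinary integral from 0 to x.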

Definition 2.3

The fractional derivative of f(x) in the Caputo sense is defined as

for \(m-1<\alpha \le m, \ \ m\in {N}, \ \ x>0, \ \ f\in {C_{-1}^{m}}\).

Definition 2.4

The fractional derivative of f(x) in the Riemann–Liouville sense is defined as

for \(m-1<\alpha \le m, \ \ m\in {N}, \ \ x>0, \ \ f\in {C_{-1}^{m}}\).
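For reference, Definitions 2.3 and 2.4 are usually written as follows. This is a sketch in standard notation; the symbol \(D_{*}^{\alpha }\) for the Caputo operator is a labelling assumption made here only to distinguish it from the Riemann–Liouville operator \(D^{\alpha }\). The integral forms hold for \(m-1<\alpha <m\).

```latex
% Caputo: differentiate m times first, then integrate fractionally
D_{*}^{\alpha} f(x) = J^{m-\alpha} D^{m} f(x)
  = \frac{1}{\Gamma(m-\alpha)} \int_{0}^{x} (x-t)^{m-\alpha-1} f^{(m)}(t)\, dt,

% Riemann-Liouville: integrate fractionally first, then differentiate m times
D^{\alpha} f(x) = D^{m} J^{m-\alpha} f(x)
  = \frac{d^{m}}{dx^{m}} \left[ \frac{1}{\Gamma(m-\alpha)}
    \int_{0}^{x} (x-t)^{m-\alpha-1} f(t)\, dt \right].
```

The two operators differ in the order of differentiation and fractional integration. For a constant \(f(x)=c\) and \(0<\alpha <1\), the Caputo derivative vanishes, whereas the Riemann–Liouville derivative equals \(c\,x^{-\alpha }/\Gamma (1-\alpha )\).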

3 System of Linear Ordinary Differential Equations

Consider the following system of ordinary differential equations:

where \(b_{ij}(x)\) and \(f_{i}(x)\) are known functions satisfying \(b_{ij}(x), \ f_{i}(x)\in {C(I)}\), and I is the interval of interest. We focus our attention on first-order linear ODEs; for higher-order linear ODEs, the method is completely similar and is omitted here.

Integrating both sides of Eq. (8) from 0 to x and using the initial conditions, we obtain

Hence, we have transformed the system of ODEs (8) with its initial conditions to a system of Volterra integral equations with continuous kernels. To solve this system approximately, we reduce the Volterra integral equations to a new system of linear equations in the unknown functions and their derivatives.

To achieve this end, we employ the Taylor expansion for the unknown function \(y_{j}(t)\) at x

where \(R_{j,m}(t,x)\) denotes the Lagrange remainder

for some point \(\delta _{j}\) between x and t. In general, the Lagrange remainder \(R_{j,m}(t,x)\) becomes sufficiently small when m is large enough. In particular, if the desired solution \(y_{j}(t)\) is a polynomial of degree at most m, then \(R_{j,m}(t,x)=0\). In other words, the obtained approximate solution of Eq. (9) is then the exact solution.
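For reference, the Taylor expansion with Lagrange remainder used here has the following standard form, written in the symbols of the text:

```latex
y_{j}(t) = \sum_{k=0}^{m} \frac{y_{j}^{(k)}(x)}{k!}\,(t-x)^{k} + R_{j,m}(t,x),
\qquad
R_{j,m}(t,x) = \frac{y_{j}^{(m+1)}(\delta_{j})}{(m+1)!}\,(t-x)^{m+1}.
```

For instance, with \(m=1\) the unknown is approximated inside the integral by \(y_{j}(t)\approx y_{j}(x)+y_{j}'(x)(t-x)\).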

Substituting (10) for \(y_{j}(t)\) in the integrand into (9) leads to

where

and in the above derivation, the Lagrange remainder has been dropped because the truncation error is sufficiently small. Moreover, the notation \(y_{j}^{(0)}(x)=y_{j}(x)\) is adopted. In Eq. (12), \(y_{j}^{(k)}(x)\), for \(k=0,\ldots ,m\), are unknown functions. To determine them, we regard the above equation as a linear equation for \(y_{j}(x)\) and its derivatives up to order m. Consequently, m further independent linear equations for \(y_{j}(x)\) and its derivatives up to order m are needed. These equations can be obtained by integrating both sides of Eq. (9) m times as follows:

where

Substituting (10) for \(y_{j}(t)\) in the integrand into (14), we have

for \(l=1,\ldots ,m .\)

Hence, Eqs. (12) and (16) form a system of linear equations for the unknowns \(y_{j}(x)\) and their derivatives up to order m. This system can be written in compact form as

where

where in (18), the first n rows contain the coefficients of \(y_{j}^{(k)}(x)\) in Eq. (12) for \(j=1,\ldots ,n\), \(k=0,\ldots ,m\) and the remaining rows contain the coefficients of \(y_{j}^{(k)}(x)\) in Eq. (16) for \(j=1,\ldots ,n\), \(k=0,\ldots ,m\). Applying Cramer’s rule to the resulting system yields an approximate solution of Eq. (8). We note that all \(y_{j}^{(k)}(x)\), for \(j=1,\ldots ,n\), \(k=0,\ldots ,m\), are determined by solving this system, although only \(y_{j}(x)\) is actually sought.
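The procedure of this section can be illustrated with a short Python sketch. It is not the authors' MATHEMATICA implementation and rests on several assumptions: the system is taken in the form \(y'=By\), \(y(0)=y_{0}\), with a constant matrix \(B\) and \(f_{i}=0\), so that all integrals of the Taylor terms have closed forms. The linear system is solved by Gaussian elimination with partial pivoting instead of Cramer's rule, and the names `solve_linear` and `taylor_volterra_solve` are hypothetical.

```python
import math

def solve_linear(A, rhs):
    """Solve A z = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    M = [row[:] + [rhs[i]] for i, row in enumerate(A)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            factor = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= factor * M[c][k]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][k] * z[k] for k in range(r + 1, n))
        z[r] = (M[r][n] - s) / M[r][r]
    return z

def taylor_volterra_solve(B, y0, x, m):
    """Approximate y(x), x > 0, for y' = B y, y(0) = y0 (assumed form).

    Unknowns are y_j^{(k)}(x), k = 0..m. Equation block l = 0 is the
    Volterra equation with the Taylor polynomial substituted; blocks
    l = 1..m come from integrating it l times from 0 to x.
    """
    n = len(y0)
    size = n * (m + 1)

    def idx(j, k):
        return j * (m + 1) + k          # column of y_j^{(k)}(x)

    A = [[0.0] * size for _ in range(size)]
    rhs = [0.0] * size
    for l in range(m + 1):
        for i in range(n):
            row = l * n + i
            rhs[row] = y0[i] * x ** l / math.factorial(l)
            for k in range(m + 1):
                sign = (-1) ** k
                if l == 0:
                    # left-hand side is y_i(x) itself
                    A[row][idx(i, k)] += 1.0 if k == 0 else 0.0
                else:
                    # l-fold integral of (t - x)^k / k!
                    A[row][idx(i, k)] += sign * x ** (l + k) / (
                        math.factorial(l - 1) * math.factorial(k) * (l + k))
                for j in range(n):
                    # (l+1)-fold integral of b_ij (t - x)^k / k!
                    A[row][idx(j, k)] -= B[i][j] * sign * x ** (l + k + 1) / (
                        math.factorial(l) * math.factorial(k) * (l + k + 1))
    z = solve_linear(A, rhs)
    return [z[idx(j, 0)] for j in range(n)]

# Test system y1' = y2, y2' = -y1, y1(0) = 0, y2(0) = 1 (solution sin x, cos x).
approx = taylor_volterra_solve([[0.0, 1.0], [-1.0, 0.0]], [0.0, 1.0], x=1.0, m=4)
print(approx, [math.sin(1.0), math.cos(1.0)])
```

The system is assembled and solved separately for each evaluation point \(x>0\); at \(x=0\) the integrated equations degenerate and the initial condition gives the value directly. For variable coefficients \(b_{ij}(x)\) or nonzero \(f_{i}(x)\), the closed-form integrals would have to be replaced by symbolic or numerical integration.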

4 System of Fractional Differential Equations

Consider the following system of fractional differential equations:

where \(b_{ij}(x)\) and \(f_{i}(x)\) are known functions satisfying \(b_{ij}(x), \ f_{i}(x)\in {C(I)}\), and I is the interval of interest. In Eq. (21), \(y_{i}^{(\alpha _{i})}(x)=D^{\alpha _{i}}y_{i}(x)\) denotes the Riemann–Liouville fractional derivative of order \(\alpha _{i}\), and here we assume \(0<\alpha _{i}\le 1\), \(i=1,\ldots ,n\).

Based on Definition 2.4, Eq. (21) can be rewritten as

or equivalently, using Definition 2.2, we have

By integrating both sides of Eq. (23) from 0 to x, we obtain

To solve the above equation in the same way as in Sect. 3, we substitute (10) for \(y_{i}(t)\) in the integrand of (24), which leads to

where

for \(i=1,\ldots ,n\).

In Eq. (25), \(y_{i}^{(k)}(x)\), for \(k=0,\ldots ,m\), are unknown functions. To determine them, we regard the above equation as a linear equation for \(y_{i}(x)\) and its derivatives up to order m. Consequently, m further independent linear equations for \(y_{i}(x)\) and its derivatives up to order m are needed.
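The substitution of the Taylor terms into the fractional integral can be carried out in closed form. As a sketch, assuming that the left-hand side of (24) contains the Riemann–Liouville integral of order \(1-\alpha _{i}\) from Definition 2.2 applied to \(y_{i}\) (the exact layout of that equation is an assumption here), each Taylor term contributes:

```latex
\frac{1}{\Gamma(1-\alpha_{i})} \int_{0}^{x} (x-t)^{-\alpha_{i}}\, \frac{(t-x)^{k}}{k!}\, dt
  = \frac{(-1)^{k}}{k!\,\Gamma(1-\alpha_{i})} \int_{0}^{x} (x-t)^{k-\alpha_{i}}\, dt
  = \frac{(-1)^{k}\, x^{k+1-\alpha_{i}}}{k!\,(k+1-\alpha_{i})\,\Gamma(1-\alpha_{i})},
\qquad 0 < \alpha_{i} < 1 .
```

For \(k=0\) this gives \(x^{1-\alpha _{i}}/\Gamma (2-\alpha _{i})\), in agreement with the power rule for \(J^{1-\alpha _{i}}\) applied to a constant.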

To achieve this end, we continue to integrate both sides of Eq. (24) m times from 0 to x, and get

where

for \(i=1,\ldots ,n\), \(l=1,\ldots ,m\).

Substituting (10) for \(y_{i}(t)\) in the integrand into (27), we obtain

for \(i=1,\ldots ,n\), \(l=1,\ldots ,m\).

Hence, Eqs. (25) and (29) form a system of linear equations for the unknowns \(y_{i}(x)\) and their derivatives up to order m. This system can be written in compact form as

where

where in (31), the first n rows contain the coefficients of \(y_{i}^{(k)}(x)\) in Eq. (25) for \(i=1,\ldots ,n\), \(k=0,\ldots ,m\) and the remaining rows contain the coefficients of \(y_{i}^{(k)}(x)\) in Eq. (29) for \(i=1,\ldots ,n\), \(k=0,\ldots ,m\). Provided that the system is solvable, applying Cramer’s rule to it yields an approximate solution of Eq. (21). We note that all \(y_{i}^{(k)}(x)\), for \(i=1,\ldots ,n\), \(k=0,\ldots ,m\), are determined by solving this system, although only \(y_{i}(x)\) is actually sought.

5 Error Analysis

The proposed method reduces systems of both linear ordinary and fractional differential equations to integral equations. Hence, the error analysis for the approximate solutions obtained by the integration method is entirely analogous to the error analysis given in [27].

6 Numerical Examples

Several authors, such as Daftardar-Gejji and Babakhani [5], Bonilla et al. [3], Duan et al. [6] and Wang et al. [28], have investigated various approaches for solving systems of linear fractional differential equations and mentioned some of their applications, which shows the importance of these kinds of problems. Also, differential equations involving the Riemann–Liouville differential operators of fractional order \(0<\alpha <1\) appear to be more important in modelling several physical phenomena [28]. Therefore, in this work, we studied a method for solving systems of linear ordinary and fractional differential equations of order \(0<\alpha <1\). Since our purpose is to demonstrate the effectiveness of the proposed method for both systems of ODEs and FDEs, in this section we have chosen three specific examples of order \(0<\alpha <1\) for which the exact solution is known when \(\alpha =1\). All calculations were performed using the MATHEMATICA software package.

Example 1

Consider the following linear fractional system

subject to the initial conditions

The exact solution, when \(\alpha _{1}=\alpha _{2}=1\), is

The case \(\alpha _{1}=\alpha _{2}=1\) is the only one for which we know the exact solution. Using the method proposed in Sect. 3, we can evaluate the approximate solutions of \(y_{1}(x)\) and \(y_{2}(x)\) when \(\alpha _{1}=\alpha _{2}=1\). Figures 1, 2 and 3 show these approximate solutions for \(m=1\), 2 and 3, respectively, in the Taylor expansion, in comparison with the exact solutions. Our approximate solutions are in good agreement with the exact values, and the accuracy improves as m is raised in the Taylor expansion.

We also calculated the approximate solutions for \(m=4\) and 5 in the Taylor expansion. Since their graphs coincide with the graph of the exact solution, the graphs cannot be used to compare the approximate solutions with the exact solution. Consequently, we use tables to compare the errors as m is increased. The absolute errors at thirty equidistant points in the interval [0, 3] are shown in Tables 1 and 2 for \(m=4\) and \(m=5\), respectively. In Tables 1 and 2, E1 and E2 denote the absolute errors of \(y_{1}\) and \(y_{2}\), respectively.

It is worth noting that the accuracy of the approximate solutions improves remarkably for larger values of m, while the computation time increases accordingly. In this test case, the calculation times for \(m=1,\ldots ,5\), measured in seconds, are 1, 4, 14, 32 and 48, respectively, on a computer with the following configuration: Intel Core i5 CPU 1.33 GHz, 4 GB of RAM and a 64-bit operating system (Windows 7).

Setting \(\alpha _{1}=\alpha _{2}=\frac{1}{2}\) and applying our proposed method in Sect. 4, we can evaluate the solutions of \(y_{1}(x)\) and \(y_{2}(x)\). Figure 4 shows the solutions of \(y_{1}(x)\) and \(y_{2}(x)\) when \(\alpha _{1}=\alpha _{2}=\frac{1}{2}\) for \(m=2\) in Taylor expansion.

Example 2

Consider the following linear fractional system

subject to the initial conditions

The exact solution, when \(\alpha _{1}=\alpha _{2}=1\), is

Using our proposed method in Sect. 3, we can evaluate the approximate solutions of \(y_{1}(x)\) and \(y_{2}(x)\) when \(\alpha _{1}=\alpha _{2}=1\). Figures 5 and 6 show these approximate solutions for \(m=3\) and \(m=4\), respectively, in the Taylor expansion, in comparison with the exact solutions. Our approximate solutions are in good agreement with the exact values. The accuracy improves as m is raised in the Taylor expansion, and in this test case, for \(m=4,\ldots \), the graphs of the approximate solutions coincide with the graphs of the exact solutions with no visible deviation.

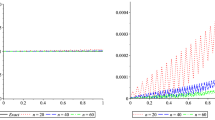

To show how the accuracy of the approximations varies, the errors between several approximate solutions and the exact values are plotted in Figs. 7 and 8 for \(y_{1}(x)\) and \(y_{2}(x)\), respectively.

Setting \(\alpha _{1}=\alpha _{2}=\frac{1}{2}\) and applying our proposed method in Sect. 4, we can evaluate the solutions of \(y_{1}(x)\) and \(y_{2}(x)\). Figure 9 shows the solutions of \(y_{1}(x)\) and \(y_{2}(x)\) when \(\alpha _{1}=\alpha _{2}=\frac{1}{2}\) for \(m=3\) in Taylor expansion.

Example 3

Consider the following linear fractional system

subject to the initial conditions

The exact solution, when \(\alpha _{1}=\alpha _{2}=1\), is

Using our proposed method in Sect. 3, we can evaluate the approximate solutions of \(y_{1}(x)\) and \(y_{2}(x)\) when \(\alpha _{1}=\alpha _{2}=1\). Figures 10 and 11 show these approximate solutions for \(m=3\) and \(m=4\), respectively, in the Taylor expansion, in comparison with the exact solutions. Our approximate solutions are in good agreement with the exact values. The accuracy improves as m is raised in the Taylor expansion, and in this example, for \(m=4,\ldots \), the graphs of the approximate solutions coincide with the graphs of the exact solutions.

To show how the accuracy of the approximations varies, the errors between several approximate solutions and the exact values are plotted in Figs. 12 and 13 for \(y_{1}(x)\) and \(y_{2}(x)\), respectively.

Setting \(\alpha _{1}=\alpha _{2}=\frac{1}{2}\) and applying our proposed method in Sect. 4, we can evaluate the solutions of \(y_{1}(x)\) and \(y_{2}(x)\). Figure 14 shows the solutions of \(y_{1}(x)\) and \(y_{2}(x)\) when \(\alpha _{1}=\alpha _{2}=\frac{1}{2}\) for \(m=3\) in Taylor expansion.

7 Conclusion

In this paper, we have proposed an approximate method suitable for systems of both linear ordinary and fractional differential equations. In the method, the FDEs or ODEs of a system, together with their initial conditions, are first transformed to Volterra integral equations. Then a Taylor expansion of the unknown function and an integration method are employed to reduce the resulting integral equations to a new system of linear equations for the unknown and its derivatives. The resulting system is solved by applying Cramer’s rule. We must emphasize that the method can only be used to solve linear systems of ODEs or FDEs.

References

Atanackovic, T.M., Stankovic, B.: On a system of differential equations with fractional derivatives arising in rod theory. J. Phys. A. 37, 1241–1250 (2004)

Bagley, R.L., Torvik, P.L.: On the fractional calculus models of viscoelastic behaviour. J. Rheol. 30, 133–155 (1986)

Bonilla, B., Rivero, M., Trujillo, J.J.: On systems of linear fractional differential equations with constant coefficients. Appl. Math. Comput. 187, 68–78 (2007)

Bonilla, B., Rivero, M., Rodríguez-Germá, L., Trujillo, J.J.: Fractional differential equations as alternative models to nonlinear differential equations. Appl. Math. Comput. 187(1), 79–88 (2007)

Daftardar-Gejji, V., Babakhani, A.: Analysis of a system of fractional differential equations. J. Math. Anal. Appl. 293, 511–522 (2004)

Duan, J.-S., Temuer, C.-L., Sun, J.: Solution for system of linear fractional differential equations with constant coefficients. J. Math. 29, 509–603 (2009)

Gao, X., Yu, J.: Synchronization of two coupled fractional-order chaotic oscillators. Chaos Solitons Fractals 26(1), 141–145 (2005)

Gaul, L., Klein, P., Kempfle, S.: Damping description involving fractional operators. Mech. Syst. Signal Process. 5, 81–88 (1991)

Grigorenko, I., Grigorenko, E.: Chaotic dynamics of the fractional Lorenz system. Phys. Rev. Lett. 91(3), 034101–034104 (2003)

Hilfer, R.: Applications of Fractional Calculus in Physics. World Scientific Publishing Company, Singapore (2000)

Kilbas, A.A., Srivastava, H.M., Trujillo, J.J.: Theory and Applications of Fractional Differential Equations. Elsevier, Amsterdam (2006)

Li, X.-F.: Approximate solution of linear ordinary differential equations with variable coefficients. Math. Comput. Simul. 75, 113–125 (2007)

Lu, J.G.: Chaotic dynamics and synchronization of fractional-order Arneodo’s systems. Chaos Solitons Fractals 26(4), 1125–1133 (2005)

Lu, J.G., Chen, G.: A note on the fractional-order Chen system. Chaos Solitons Fractals 27(3), 685–688 (2006)

Luchko, Y., Gorenflo, R.: The initial value problem for some fractional differential equations with the Caputo derivative. Preprint series A08-98, Fachbereich Mathematik und Informatik. Freie Universität Berlin, Berlin (1998)

Magin, R.L.: Fractional calculus in bioengineering. Crit. Rev. Biomed. Eng. 32, 1–104 (2004)

Mainardi, F.: On the initial value problem for the fractional diffusion-wave equation. In: Rionero, S., Ruggeri, T. (eds.) Waves and Stability in Continuous Media (Bologna 1993). World Scientific Publishing Company, Singapore (1994)

Mainardi, F.: Fractional calculus: some basic problems in continuum and statistical mechanics. In: Carpinteri, A., Mainardi, F. (eds.) Fractals and Fractional Calculus in Continuum Mechanics. Springer, Wien (1997)

Matsuzaki, T., Nakagawa, M.: A chaos neuron model with fractional differential equation. J. Phys. Soc. Jpn. 72, 2678–2684 (2003)

Metzler, R., Schick, W., Kilian, H.G., Nonnenmacher, T.F.: Relaxation in filled polymers: a fractional calculus approach. J. Chem. Phys. 103, 7180–7186 (1995)

Metzler, R., Klafter, J.: The random walk's guide to anomalous diffusion: a fractional dynamics approach. Phys. Rep. 339(1), 1–77 (2000)

Miller, K.S., Ross, B.: An Introduction to the Fractional Calculus and Fractional Differential Equations. Wiley, New York (1993)

Oldham, K.B., Spanier, J.: The Fractional Calculus. Academic Press, New York (1974)

Podlubny, I.: Fractional Differential Equations. Academic Press, New York (1999)

Rossikhin, Y., Shitikova, M.: Applications of fractional calculus to dynamic problems of linear and nonlinear hereditary mechanics of solids. Appl. Mech. Rev. 50, 15–67 (1997)

Samko, S.G., Kilbas, A.A., Marichev, O.I.: Fractional Integrals and Derivatives: Theory and Applications. Gordon and Breach, Amsterdam (1993)

Tang, B.-Q., Li, X.-F.: A new method for determining the solution of Riccati differential equations. Appl. Math. Comput. 194, 431–440 (2007)

Wang, F., Liu, Z.-H., Wang, P.: Analysis of a system for linear fractional differential equations. J. Appl. Math. (2012). doi:10.1155/2012/193061

Zaslavsky, G.M.: Hamiltonian Chaos and Fractional Dynamics. Oxford University Press, Oxford (2005)

Additional information

Communicated by Mohsen Didgar.

Cite this article

Didgar, M., Ahmadi, N. An Efficient Method for Solving Systems of Linear Ordinary and Fractional Differential Equations. Bull. Malays. Math. Sci. Soc. 38, 1723–1740 (2015). https://doi.org/10.1007/s40840-014-0060-6

DOI: https://doi.org/10.1007/s40840-014-0060-6

Keywords

- System of ordinary differential equations

- System of fractional differential equations

- Riemann–Liouville fractional derivative

- Taylor expansion