Abstract

This paper establishes a numerical algorithm for solving multi-term fractional differential equations (FDEs). We derive and validate an operational matrix of fractional derivatives of the generalized Fibonacci polynomials (GFPs). The proposed algorithm applies the collocation method to reduce an FDE, together with its initial conditions, to a system of algebraic equations in the unknown expansion coefficients. The numerical results indicate that the developed algorithm is applicable, efficient, and accurate.

Introduction

Many methods exist for solving differential equations, and spectral methods are among the most important of them. They have many applications in applied mathematics and scientific computing; for some of these applications, see [1,2,3]. The main idea of spectral methods is to approximate the solution of a differential equation by a finite sum of certain basis functions and then to determine the expansion coefficients so that the differential equation and its conditions are satisfied. Three versions are commonly used to determine the expansion coefficients: the collocation, Galerkin, and tau methods. The collocation method forces the residual of the given differential equation to vanish at a given set of points. For example, Doha et al. [4] used the collocation method to solve nonlinear FDEs subject to initial/boundary conditions. The Galerkin method requires choosing orthogonal polynomials as basis functions that satisfy the initial/boundary conditions of the given differential equation, and then forcing the residual to be orthogonal to the basis functions. For example, Youssri and Abd-Elhameed [5] used the Galerkin method to solve the time fractional telegraph equation. The tau method is based on minimizing the residual and then applying the initial/boundary conditions of the differential equation. For example, Abd-Elhameed and Youssri [6] used the tau method to solve a coupled system of FDEs, and in [7] they used a modified tau method to solve some types of linear and nonlinear FDEs.

It is well known that second-order recurrence relations generate many polynomial sequences. One of the most important of these sequences is the Fibonacci polynomials, which can be regarded as a generalization of the Fibonacci numbers. They are used in many fields, such as biology, statistics, physics, and computer science [8]. Several studies have discussed Fibonacci polynomials and GFPs from a practical point of view; see, for example, [6, 9].

Fractional calculus generalizes ordinary differentiation and integration to an arbitrary (non-integer) order. It is a branch of mathematical analysis that studies the possibility of defining real, or even complex, powers of the differentiation and integration operators. Fractional calculus has recently been used in many fields of engineering, science, finance, and applied mathematics [10,11,12]. Analytical solutions of FDEs are generally hard to obtain, so numerical methods are an important means of obtaining efficient and appropriate solutions to these equations. Many studies have treated FDEs using different methods, for example, the finite difference method [13], the Adomian decomposition method [14], and the ultraspherical wavelets method [15].

Motivated by the above, this paper focuses on the following two aspects:

(i) Deriving operational matrices for integer and fractional derivatives of the GFPs.

(ii) Presenting an algorithm for solving multi-term FDEs by using the spectral collocation method.

This paper is organized as follows. In the “Preliminaries and Essential Relations” section, some necessary definitions and mathematical preliminaries of fractional calculus are introduced. In the “Generalized Fibonacci Operational Matrix of Fractional Derivatives” section, a new operational matrix of fractional derivatives of the GFPs is presented. The “A New Matrix Algorithm for Solving Multi-term FDE” section is devoted to solving the one-dimensional multi-term FDE. In the “Illustrative Examples” section, we apply the suggested method to several examples. Finally, conclusions are presented in the “Concluding Remarks” section.

Preliminaries and Essential Relations

This section is devoted to presenting some important definitions of the fractional calculus.

Some Definitions and Properties of Fractional Calculus

Definition 1

As shown in Podlubny [16], the Riemann–Liouville fractional integral operator \(I^{\rho }\) of order \(\rho \) on the usual Lebesgue space \(L_1[0,1]\) is defined as
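For reference, the standard form of this operator in the literature (see, e.g., Podlubny [16]) can be written as follows. The function symbol \(f\) and the integration variable \(t\) are notation assumed here and may differ from the original display:

\[
I^{\rho } f(y)=\frac{1}{\Gamma (\rho )}\int _0^y (y-t)^{\rho -1}\,f(t)\,dt,\quad \rho >0,\qquad I^{0}f(y)=f(y).
\]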

Definition 2

As shown in Podlubny [16], the Caputo fractional derivative of order \(\rho \) is defined as:

where \(m-1\leqslant \rho <m,\; m\in {\mathbb {N}}\).
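For reference, the standard Caputo definition, with \(m\) as above, can be written as follows. The function symbol \(f\) and the integration variable \(t\) are notation assumed here and may differ from the original display:

\[
D^{\rho } f(y)=\frac{1}{\Gamma (m-\rho )}\int _0^y (y-t)^{m-\rho -1}\,f^{(m)}(t)\,dt,\quad y>0.
\]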

The operator \(D^{\rho }\) satisfies the following properties for \(\,m-1\leqslant \rho <m,\; m\in {\mathbb {N}},\)

where \(\lceil \rho \rceil \) denotes the smallest integer greater than or equal to \(\rho \).
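A property of the Caputo operator that is commonly used in operational-matrix constructions is the power rule \(D^{\rho }y^{k}=\frac{\Gamma (k+1)}{\Gamma (k+1-\rho )}\,y^{k-\rho }\) for integers \(k\ge \lceil \rho \rceil \), with \(D^{\rho }y^{k}=0\) for integers \(k<\lceil \rho \rceil \). The following minimal Python sketch evaluates this rule; the function name `caputo_monomial` is hypothetical and chosen only for illustration.

```python
import math


def caputo_monomial(k, rho):
    """Return (coefficient, exponent) of the Caputo derivative D^rho y^k.

    k is a nonnegative integer and rho > 0. Monomials of degree below
    ceil(rho) are annihilated, so (0.0, 0.0) is returned for them.
    """
    if k < math.ceil(rho):
        return 0.0, 0.0
    coeff = math.gamma(k + 1) / math.gamma(k + 1 - rho)
    return coeff, k - rho


# D^{1/2} y = (2 / sqrt(pi)) * y^{1/2}
coeff, exponent = caputo_monomial(1, 0.5)
```

For integer orders the rule reduces to ordinary differentiation, for example `caputo_monomial(2, 1.0)` gives the coefficient and exponent of \(2y\).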

Some Properties and Relations of the GFPs

The following recurrence relation

generates the sequence of Fibonacci polynomials with the initial values: \(F_0(y)=0\) and \(F_1(y)=1\).
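For reference, the standard recurrence for the Fibonacci polynomials, which is consistent with the initial values above, reads:

\[
F_{j+2}(y)=y\,F_{j+1}(y)+F_{j}(y),\quad j\ge 0.
\]

Its first few members are \(F_2(y)=y\), \(F_3(y)=y^2+1\), and \(F_4(y)=y^3+2y\).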

Let a and b be any two real constants. The GFPs are generated by the following recurrence relation:

with the initial values: \({\varphi _{0}^{a,b}}(y)=0\) and \({\varphi _{1}^{a,b}}(y)=1\). The analytic form of \({\varphi _{j}^{a,b}}(y)\) is

where \(\lfloor {j}\rfloor \) denotes the largest integer that is less than or equal to j. Note that \({\varphi _{j}^{a,b}}(y)\) is a polynomial of degree \((j-1)\).

Now, let \({P_{j}^{a,b}}(y)\) be the polynomial of degree j obtained from the following formula:

This means that the sequence of polynomials \(\{{P_{j}^{a,b}}(y)\}\) is generated by the following recurrence relation:

with the initial values: \({P_{0}^{a,b}}(y)=1\) and \({P_{1}^{a,b}}(y)=a\,y\).

The analytic form of \({P_{j}^{a,b}}(y)\) is

which can be expressed alternatively as:

where

For more properties about GFPs, see [17, 18].
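The relations of this subsection can be checked numerically. The sketch below assumes that the polynomials \({P_{j}^{a,b}}(y)\) satisfy the three-term recurrence \(P_j=a\,y\,P_{j-1}+b\,P_{j-2}\), which is consistent with the stated initial values \(P_0=1\) and \(P_1=a\,y\), and compares it with the closed form \(\sum _{m=0}^{\lfloor j/2\rfloor }\left( {\begin{array}{c}j-m\\ m\end{array}}\right) b^{m}a^{j-2m}y^{j-2m}\) that follows from that recurrence. The function names are hypothetical, and the closed form is derived from the assumed recurrence rather than copied from the original display.

```python
from math import comb


def gfp_recurrence(j, a, b):
    """Coefficients of P_j^{a,b} (ascending powers of y) from the assumed
    recurrence P_j = a*y*P_{j-1} + b*P_{j-2}, with P_0 = 1 and P_1 = a*y."""
    prev, cur = [1], [0, a]
    if j == 0:
        return prev
    for _ in range(j - 1):
        nxt = [0] + [a * c for c in cur]   # multiply P_{j-1} by a*y
        for k, c in enumerate(prev):       # add b*P_{j-2}
            nxt[k] += b * c
        prev, cur = cur, nxt
    return cur


def gfp_closed_form(j, a, b):
    """Coefficients of P_j^{a,b} from the binomial closed form."""
    coeffs = [0] * (j + 1)
    for m in range(j // 2 + 1):
        coeffs[j - 2 * m] = comb(j - m, m) * b**m * a ** (j - 2 * m)
    return coeffs


# With a = b = 1 the values P_j(1) are Fibonacci numbers.
fib_values = [sum(gfp_recurrence(j, 1, 1)) for j in range(6)]
```

Integer inputs keep the arithmetic exact, so the two constructions can be compared with `==` for small degrees.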

Theorem 1

As shown by Abd-Elhameed and Youssri [6], for every nonnegative integer m, the following inversion formula holds:

Remark 1

The inversion formula (11) can be written alternatively as:

Generalized Fibonacci Operational Matrix of Fractional Derivatives

This section is devoted to establishing an operational matrix of fractional derivatives of the GFPs.

Operational Matrix of Integer Derivatives

Let u(y) be a square Lebesgue integrable function on (0,1) that satisfies the following homogeneous initial conditions:

Assume that it can be written as a combination of linearly independent GFPs:

where

Suppose that u(y) can be approximated as:

where

and

To this end, the first derivative of the vector \(\varvec{\psi (y)}\) can be expressed in matrix form as

where

and \(L=(l_{ij})_{0\le i,j\le M}\) is the \((M+1)\times (M+1)\) operational matrix of derivative given by:

For example, for \(M=5\), one gets

Operational Matrix of Fractional Derivatives

We now state and prove a theorem that gives the fractional derivatives of the vector \(\varvec{\psi (y)}\).

Theorem 2

Let \(\varvec{\psi (y)}\) be the GFP vector defined in Eq. (17). Then for all \(\alpha >0\) the following formula holds:

where \(L^{(\alpha )}=(l_{ij}^\alpha )_{0\le i,j\le M}\) is an \((M+1)\times (M+1)\) matrix and the elements can be written explicitly in the form

where

\(\delta _r\) is defined in (10). The operational matrix \(L^{(\alpha )}\) can be expressed explicitly as:

Proof

Equation (9) enables us to write Eq. (14) as

The application of the fractional differential operator \(D^\alpha \) to Eq. (27) yields

Using the formula given in (12) and performing some algebraic calculations, we have

where \(\beta _\alpha (i,j)\) is given in (25). \(\square \)
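The three steps of the proof (power form, termwise fractional differentiation, inversion back to the basis) can be mimicked numerically. The sketch below is not the closed-form expression of the theorem. It assumes that the basis functions are the polynomials \({P_{j}^{a,b}}(y)\) themselves, generated by the recurrence \(P_j=a\,y\,P_{j-1}+b\,P_{j-2}\), and that the relation has the form \(D^{\alpha }\varvec{\psi }(y)=y^{-\alpha }L^{(\alpha )}\varvec{\psi }(y)\). The matrix is then obtained by a change of basis, \(L^{(\alpha )}=C\,G\,C^{-1}\), where \(C\) holds the monomial coefficients and \(G\) is the diagonal matrix of the Caputo power rule. All function names are hypothetical, and \(a\ne 0\) is required.

```python
import math


def gfp_matrix(M, a, b):
    """Row j holds the coefficients of P_j^{a,b} in ascending powers of y."""
    C = [[0.0] * (M + 1) for _ in range(M + 1)]
    C[0][0] = 1.0
    if M >= 1:
        C[1][1] = a
    for j in range(2, M + 1):
        for k in range(M + 1):
            shifted = a * C[j - 1][k - 1] if k > 0 else 0.0
            C[j][k] = shifted + b * C[j - 2][k]
    return C


def lower_inverse(C):
    """Invert a lower-triangular matrix by forward substitution."""
    n = len(C)
    X = [[0.0] * n for _ in range(n)]
    for col in range(n):
        for row in range(col, n):
            acc = 1.0 if row == col else 0.0
            acc -= sum(C[row][k] * X[k][col] for k in range(col, row))
            X[row][col] = acc / C[row][row]
    return X


def fractional_matrix(M, alpha, a=1.0, b=1.0):
    """Numerical L with D^alpha psi(y) = y^(-alpha) * L * psi(y)."""
    C = gfp_matrix(M, a, b)
    Cinv = lower_inverse(C)
    m = math.ceil(alpha)
    # Caputo power rule on monomials; degrees below ceil(alpha) vanish.
    g = [math.gamma(k + 1) / math.gamma(k + 1 - alpha) if k >= m else 0.0
         for k in range(M + 1)]
    n = M + 1
    return [[sum(C[i][k] * g[k] * Cinv[k][j] for k in range(n))
             for j in range(n)] for i in range(n)]


L_half = fractional_matrix(4, 0.5)
```

Setting `alpha=1.0` gives a quick sanity check, since \(y^{-1}L^{(1)}\varvec{\psi }(y)\) should then reproduce the ordinary first derivatives of the basis polynomials.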

A New Matrix Algorithm for Solving Multi-term FDE

In this section, we are interested in solving the one-dimensional multi-term FDE

which is governed by the nonhomogeneous initial conditions:

where

and \(\epsilon _i:[0,1]\rightarrow {\mathbb {R}},\quad i=2,3,\ldots ,N,\quad f:[0,1]\times {\mathbb {R}}\rightarrow {\mathbb {R}}\) is a given continuous function.

Nonhomogeneous Initial Conditions

In the following, we illustrate how problems with nonhomogeneous initial conditions can be converted to problems with homogeneous initial conditions.

Let

then Eq. (30) becomes

where

The transformation (32) converts the nonhomogeneous initial conditions (31) to homogeneous initial conditions

With the aid of the approximations in Eqs. (15) and (23), the residual of Eq. (33) takes the form

We choose the equidistant collocation points \(y_i=\frac{i}{M+2} ,\quad i=1,2,\ldots ,M+1\). Applying the collocation method then yields the following system of equations:

The equations in (36) form a nonlinear system in the expansion coefficients \(e_i\), which may be solved with the aid of the well-known Newton’s iterative method, using the initial guess \(\{e_i=10^{-i},\quad i=0,1,\ldots ,M\}.\)
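The collocation and Newton steps described above can be sketched in Python on a small test problem. Everything specific in this sketch is an assumption made for illustration and is not taken from the paper: the model problem \(D^{1/2}u+u^{2}=f\), \(u(0)=0\), with \(f\) chosen so that \(u(y)=y^{2}\); the basis \(\psi _j(y)=y\,P_j^{a,b}(y)\), which satisfies the homogeneous initial condition; the recurrence \(P_j=a\,y\,P_{j-1}+b\,P_{j-2}\); and all function names. Only the collocation points \(y_i=\frac{i}{M+2}\) and the initial guess \(e_i=10^{-i}\) follow the text.

```python
import math


def gfp(j, a, b):
    """Coefficients of P_j^{a,b} (ascending powers), assumed recurrence."""
    prev, cur = [1.0], [0.0, a]
    if j == 0:
        return prev
    for _ in range(j - 1):
        nxt = [0.0] + [a * c for c in cur]
        for k, c in enumerate(prev):
            nxt[k] += b * c
        prev, cur = cur, nxt
    return cur


def poly_eval(c, y):
    return sum(ck * y**k for k, ck in enumerate(c))


def caputo_eval(c, y, alpha):
    """Caputo derivative of a polynomial at y > 0 via the power rule."""
    m = math.ceil(alpha)
    return sum(ck * math.gamma(k + 1) / math.gamma(k + 1 - alpha)
               * y ** (k - alpha) for k, ck in enumerate(c) if k >= m)


def solve_linear(A, rhs):
    """Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [r] for row, r in zip(A, rhs)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - s) / aug[r][r]
    return x


def collocate(M, alpha=0.5, a=1.0, b=1.0, iters=30):
    """Newton iteration on the collocation residual of D^alpha u + u^2 = f."""
    basis = [[0.0] + gfp(j, a, b) for j in range(M + 1)]   # psi_j = y * P_j
    pts = [i / (M + 2) for i in range(1, M + 2)]           # y_i = i / (M + 2)
    def f(y):   # right-hand side chosen so that u(y) = y^2 is exact
        return math.gamma(3) / math.gamma(3 - alpha) * y ** (2 - alpha) + y**4
    e = [10.0 ** (-i) for i in range(M + 1)]               # initial guess
    for _ in range(iters):
        R, J = [], []
        for y in pts:
            psi = [poly_eval(c, y) for c in basis]
            dpsi = [caputo_eval(c, y, alpha) for c in basis]
            u = sum(ei * p for ei, p in zip(e, psi))
            du = sum(ei * d for ei, d in zip(e, dpsi))
            R.append(du + u * u - f(y))
            J.append([d + 2.0 * u * p for d, p in zip(dpsi, psi)])
        step = solve_linear(J, [-r for r in R])
        e = [ei + s for ei, s in zip(e, step)]
        if max(abs(s) for s in step) < 1e-13:
            break
    return e


coeffs = collocate(1)
```

With \(a=b=1\) and \(M=1\), the exact solution \(y^{2}\) coincides with \(\psi _1\), so the iteration should recover coefficients close to \((0,1)\). For other problems, the residual and its Jacobian would need to be adapted to the equation at hand.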

Illustrative Examples

In this section, we apply the generalized Fibonacci collocation method (GFCM) presented in the “A New Matrix Algorithm for Solving Multi-term FDE” section. The numerical results show that GFCM is applicable and effective.

Example 1

As given by Abd-Elhameed and Youssri [7], consider the nonlinear fractional initial value problem:

where the exact solution of Eq. (37) is \(u(y)=\ln (9+y)\).

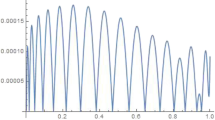

We apply GFCM to Eq. (37). The maximum absolute errors (MAEs) for different values of M are shown in Table 1, and Table 2 compares our results with those obtained in [7]. Moreover, Fig. 1 shows the absolute error for the case \(M=6\) and \(a=b=1\).

Fig. 1 The error of Example 1 when \(M=6\)

Example 2

As given by Doha et al. [19], consider the initial value problem:

whose exact solution is \(u(y)=e^{\gamma {y}}\), with \(f(y)=\frac{e^{\gamma \,y}\,\gamma ^{\frac{2}{3}} \left[ \left( -3+\gamma ^{\frac{11}{6}}\,\text {erf}(\sqrt{\gamma \,y})\right) \,\Gamma (\frac{1}{3})+3\,\Gamma (\frac{1}{3},\gamma \,y)\right] }{\Gamma (\frac{1}{3})}\).

Here, \(\Gamma (.)\) and \(\Gamma (.,.)\) denote the gamma and incomplete gamma functions, respectively [20], and \(\text {erf}(y)\) is the error function, defined as:

\[
\text {erf}(y)=\frac{2}{\sqrt{\pi }}\int _0^y e^{-t^2}\,dt.
\]

We apply GFCM. In Table 3 we list the MAE of Eq. (38) for the case \(a=b=1\). Table 4 compares our results with those obtained in [19]. Figure 2 shows the absolute error for the case \(M=20\) at \(\gamma =1\) and \(a=b=1\).

Fig. 2 The error of Example 2 when \(M=20\) at \(\gamma =1\)

Example 3

As given by Doha et al. [4], consider the initial value problem:

where g(y) is chosen such that the exact solution is \(u(y)=\cos (\alpha y)\).

In Table 5, we list the MAE resulting from the application of GFCM for the case \(a=b=1\) and \(M=4,8,12\).

Example 4

As given by Keshavarz et al. [21], in this example we consider the following Riccati fractional differential equation:

For \(\beta =1\), the exact solution is \(u(y)=\frac{e^{2y}-1}{e^{2y}+1}\).

In Table 6 we compare our results with those obtained in [21]. Figure 3 shows that the approximate solutions have smaller variations for values of \(\beta \) near the value 1.

Fig. 3 Different solutions of Example 4

Concluding Remarks

This work derived a general operational matrix of fractional derivatives of the GFPs and employed it together with the spectral collocation method. The results given in the “Illustrative Examples” section demonstrate the good accuracy of this algorithm. The proposed algorithm can be applied to treat different kinds of FDEs, which makes it a powerful and promising tool.

References

1. Hesthaven, J., Gottlieb, S., Gottlieb, D.: Spectral Methods for Time-Dependent Problems. Cambridge University Press, Cambridge (2007)

2. Boyd, J.P.: Chebyshev and Fourier Spectral Methods. Courier Corporation, North Chelmsford (2001)

3. Kopriva, D.A.: Implementing Spectral Methods for Partial Differential Equations, Algorithms for Engineers and Scientists. Springer, Berlin (2009)

4. Doha, E.H., Bhrawy, A.H., Ezz-Eldien, S.S.: A Chebyshev spectral method based on operational matrix for initial and boundary value problems of fractional order. Comput. Math. Appl. 62(5), 2364–2373 (2011)

5. Youssri, Y.H., Abd-Elhameed, W.M.: Numerical spectral Legendre–Galerkin algorithm for solving time fractional Telegraph equation. Rom. J. Phys. 63, 107 (2018)

6. Abd-Elhameed, W.M., Youssri, Y.H.: Spectral tau algorithm for certain coupled system of fractional differential equations via generalized Fibonacci polynomial sequence. Iran. J. Sci. Technol. 1–12 (2017)

7. Abd-Elhameed, W.M., Youssri, Y.H.: Fifth-kind orthonormal Chebyshev polynomial solutions for fractional differential equations. Comput. Appl. Math. 1–25 (2017)

8. Koshy, T.: Fibonacci and Lucas Numbers with Applications. Wiley, New York (2017)

9. Abd-Elhameed, W.M., Youssri, Y.H.: A novel operational matrix of Caputo fractional derivatives of Fibonacci polynomials. Entropy 18(10), 345 (2016)

10. Shen, S., Liu, F., Anh, V., Turner, I.: The fundamental solution and numerical solution of the Riesz fractional advection dispersion equation. IMA J. Appl. Math. 73(6), 850–872 (2008)

11. Su, L., Wang, W., Xu, Q.: Finite difference methods for fractional dispersion equations. Appl. Math. Comput. 216(11), 3329–3334 (2010)

12. Celik, C., Duman, M.: Crank-Nicolson method for the fractional diffusion equation with the Riesz fractional derivative. J. Comput. Phys. 231(4), 1743–1750 (2012)

13. Sweilam, N.H., Khader, M.M., Nagy, A.M.: Numerical solution of two-sided space-fractional wave equation using finite difference method. J. Comput. Appl. Math. 235(8), 2832–2841 (2011)

14. Daftardar-Gejji, V., Jafari, H.: Solving a multi-order fractional differential equation using Adomian decomposition. Appl. Math. Comput. 189(1), 541–548 (2007)

15. Abd-Elhameed, W.M., Youssri, Y.H.: New ultraspherical wavelets spectral solutions for fractional Riccati differential equations. Abstract Appl. Anal. (2014), 626275 (2014)

16. Podlubny, I.: Fractional Differential Equations: An Introduction to Fractional Derivatives, Fractional Differential Equations, to Methods of their Solution and Some of their Applications. Academic, New York (1998)

17. Abd-Elhameed, W.M., Zeyada, N.A.: A generalization of generalized Fibonacci and generalized Pell numbers. Int. J. Math. Educ. Sci. Technol. 48(1), 102–107 (2017)

18. Abd-Elhameed, W.M., Zeyada, N.A.: New identities involving generalized Fibonacci and generalized Lucas numbers. Indian J. Pure Appl. Math. 49(3), 527–537 (2018)

19. Doha, E.H., Bhrawy, A.H., Ezz-Eldien, S.S.: Efficient Chebyshev spectral methods for solving multi-term fractional orders differential equations. Appl. Math. Model. 35(12), 5662–5672 (2011)

20. Jameson, G.J.O.: The incomplete gamma functions. Math. Gaz. 100, 298–306 (2016)

21. Keshavarz, E., Ordokhani, Y., Razzaghi, M.: Bernoulli wavelet operational matrix of fractional order integration and its applications in solving the fractional order differential equations. Appl. Math. Model. 38(24), 6038–6051 (2014)

Cite this article

Atta, A.G., Moatimid, G.M. & Youssri, Y.H. Generalized Fibonacci Operational Collocation Approach for Fractional Initial Value Problems. Int. J. Appl. Comput. Math 5, 9 (2019). https://doi.org/10.1007/s40819-018-0597-4
