Abstract

Fuzzy regression is a generalized regression model to represent the relationship between dependent and independent variables in a fuzzy environment. The fuzzy linear regression analysis seeks for regression models fitting well all the data based on a specific criterion. In this paper, an adaptive neuro-fuzzy inference system (ANFIS) is employed for the analysis and prediction of a nonparametric fuzzy regression function with non-fuzzy inputs and symmetric trapezoidal fuzzy outputs. To this end, two new hybrid algorithms are proposed in which the fuzzy least squares and linear programming have been used to optimize the secondary weights. The algorithms are applied to a multi-layered validation method to confirm the models’ reliability. In addition, three methods of nonparametric fuzzy regression with crisp inputs and asymmetric trapezoidal fuzzy outputs, are compared. Three nonparametric techniques in statistics, namely local linear smoothing (L-L-S), K-nearest neighbor smoothing (K-NN) and kernel smoothing (K-S) with trapezoidal fuzzy data have been analyzed to obtain the best smoothing parameters. The performance of the models is illustrated through numerical examples and simulations. More specifically, the accuracy of the algorithms is confirmed by exhaustive simulations.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Regression is a very powerful method to understand the relationship between the dependent and independent variables. The theory of fuzzy sets was introduced in 1965 by Zadeh [1], who generalized the theory of formal and conventional collections. Fuzzy sets are potentially a wider field of operation, especially in the area of information processing and classification patterns. Fuzzy theory is able to infer, control, and make decisions against uncertainties, especially, for concepts of inaccurate and vague nature. Fuzzy regression, which firstly introduced by Tanaka et al. [2], is a simple modification of regression that it demonstrates the relationship among variables in a fuzzy environment. The expression “linear regression model” points out to situations where fuzzy membership functions are used to describe behaviors of the parameters instead of the probability distribution functions. The concept became more known by other researchers afterwards, for example, fuzzy regression methods by Ishobishi [3], and the least squares method by Diamond [4], chang and Lee [5] and Yang et al. [6].

In 1996, Karacapulos [7] showed that fuzzy neural systems, i.e., the integration of neural networks and fuzzy logic, have capabilities far beyond those of either system individually. This advantage has been mirrored in studies such as Ishobishi and Tanaka [8], Cheng and Lee [9]. Arnold [10] combined the neural network, fuzzy logic and genetic algorithm. Mosleh. et al. [11] used the fuzzy Neural Networks to evaluate fuzzy linear regression, fuzzy polynomial regression, fuzzy matrix equations, simulation and evaluation of fuzzy derivative equations. Statistical nonparametric smoothing techniques have achieved significant development in recent years. Danesh. et al. [12] used ANFIS in fuzzy nonparametric regression with triangular numbers in order to predict and estimate the data based on the least squares regression method. A regression analysis of fuzzy trapezoidal numbers with both fuzzy inputs and fuzzy outputs was performed by Sun and Lu [13].

Cheng and Lee [14] have extended the K-nearest neighbor (K-NN) and kernel smoothing (K-S) methods for the context of fuzzy nonparametric regression. Razzaghnia et al. [15, 16] used hybrid methods in nonparametric regression with triangular fuzzy data. Also, Razzaghnia [17] upgraded the neural network in fuzzy linear regression to avoid the effect of outliers. Skrjanc [18] focused on evolving fuzzy rule-based models and neuro-fuzzy networks for clustering, classification and regression and system identification, in online and real-time environments. Junhong et al. [19] proposed a new fuzzy regression model based on trapezoidal fuzzy number and least absolute deviation method. Deng and Zhao [20] used The adaptive control parameters to make the relatively uniform distribution, and solved the contradiction between expanding search and finding optimal solution. Khosravi and shahabi [21] introduced three Multi-Criteria Decision-Making (MCDM) analysis techniques, along with two machine learning methods. Liu et al. [22] proposed a new algorithm and as compared with some commonly used learning algorithms, such as support vector machine, the k-nearest neighbors and other combination algorithms.

In this paper, a fuzzy regression algorithm is proposed based on ANFIS whose aim is to reduce errors compared to other algorithms. The input data are assumed to be of fuzzy nature. The output estimated parameters must be better or at least overlap with the rival algorithms. The choice of trapezoidal membership function (MF) is due to their lower ambiguities against to the other MFs.

The advantages of the present study with respect to many of previous works can be summarized as follows:

One of the advantage of this work is to use trapezoidal data. In this paper, we extended the triangular fuzzy numbers to trapezoidal fuzzy numbers for avoiding unnecessary fuzziness of the linear fuzzy model in ANFIS. When a large membership grades are given to many observations, the linear fuzzy model with triangular fuzzy coefficients tends to have large fuzziness. In some cases, such large fuzziness numbers can be avoiding by using trapezoidal fuzzy numbers as fuzzy coefficients. The other advantage is to use V-fold Cross Validation technique for training ANFIS network. The proposed method and the smoothing methods are compared with the CV scale. The conducted simulation of experiments are shown that the performance of the proposed method is better than that of the smoothing methods, which reduces the CV. In the proposed approach when the observation numbers are increased, compared to the existing smoothing methods, the accuracy is increased. Generally, the proposed method (V-fold cross validation technique with trapezoidal data) reduces the fuzziness of the system and it has faster adaptation.

The rest of the paper is as follows. Section 2 presents the preliminary math of nonparametric fuzzy regression and trapezoidal MF needed for the sections afterwards. The validation method, smoothing methods for trapezoidal fuzzy numbers, ANFIS and the projected hybrid algorithm will be presented, respectively in Sects. 3 and 4 and 5. Section 6 gives some numerical examples receptively. Finally, Sect. 7 concludes the paper.

2 Definitions

In this section, the basic notations used in the paper are defined. We start by trapezoidal fuzzy number.

A fuzzy number \(\tilde{A}\) is a convex normalized fuzzy subset of the real line R with an upper semi-continuous membership function of bounded support [23].

Definition 1

A symmetric fuzzy number \(\tilde{A}\), denoted by \(\tilde{A}={(\alpha . c)}_{L}\) is defined as.

where α and c are the center and spread of \(\tilde{A}\) and \(L\left(x\right),\) a shape function of fuzzy numbers such that:

-

i.

\(L\left(x\right)=L\left(-x\right);\)

-

ii.

\(L\left(0\right)=1.\,\, L\left(1\right)=0;\)

-

iii

\(L\) is strictly decreasing on [0, \(\infty )\);

-

iv.

\(L\) is invertible on [0, 1].

The set of all symmetric fuzzy numbers is denoted by \({F}_{L}\left(R\right)\). Specifically, If \(L\left(x\right)=1-\left|x\right|\) the fuzzy number is a symmetric triangular fuzzy number [23].

Definition 2

An asymmetric trapezoidal fuzzy number \(\tilde{A}\), denoted by \(\tilde{A} = (a^{(1)} ,a^{(2)} ,a^{(3)} ,a^{(4)} )\), is defined as [24]:

where \(a^{(1)} ,a^{(2)} a^{(3)} ,a^{(4)}\) are four parameters of the asymmetric trapezoidal fuzzy number. (See Fig. 1).

Definition 3

For two trapezoidal fuzzy numbers \(\tilde{A}=({a}^{\left(1\right)}.{a}^{\left(2\right)}.{a}^{\left(3\right)}.{a}^{(4)})\) and \(\tilde{A}=({b}^{\left(1\right)}.{b}^{\left(2\right)}.{b}^{\left(3\right)}.{b}^{(4)})\), the Diamond distance [4] between \(\tilde{A}\) and \(\tilde{B}\) can be expressed as:

The Diamond distance measures the closeness of the MFs of the two trapezoidal fuzzy numbers. The definition three satisfies the distance properties, i.e.

-

\({d}^{2}\left(\tilde{A}.\tilde{B}\right)\ge 0\);

-

\({d}^{2}\left(\tilde{A}.\tilde{B}\right)=0\) \(\Leftrightarrow\) \(\tilde{A}=\tilde{B}.\) (i.e.\({a}^{(1)}={b}^{(1)}.{a}^{(2)}={b}^{(2)}.{a}^{(3)}={b}^{(3)}.{a}^{(4)}={b}^{(4)})\));

-

\({d}^{2}\left(\tilde{A}.\tilde{B}\right)\le {d}^{2}\left(\tilde{A}.\tilde{C}\right)+{d}^{2}\left(\tilde{C}.\tilde{B}\right).\)

Definition 4

Suppose that \(F=\{Y:Y=({Y}^{\left(1\right)}.{Y}^{\left(2\right)}.{Y}^{\left(3\right)}.{Y}^{\left(4\right)})\}\) be a set of all trapezoidal fuzzy numbers. The following univariate fuzzy regression model is considered by,

In which, \(\tilde{Y}\) is the dependent trapezoidal fuzzy number (output), variable \(X\) is an independent variable (input),\(\varepsilon\) is the error and \(\{+\}\) is an operator whose definition depends on the applied fuzzy ranking method. X’s domain is assumed to be \(D\). Thus, \(F(x)\) is a mapping \(D\to F\).

Definition 5

The CV is a measure for the difference between a fuzzy regression function \({\widehat{Y}}_{\mathrm{i}}=\left({Y}_{i}^{(1)}.{Y}_{i}^{(2)}.{Y}_{i}^{(3)}.{Y}_{i}^{(4)}\right)\) and its estimation \({\widehat{\stackrel{\sim }{\mathrm{Y}}}}_{\mathrm{i}}=\left({\widehat{Y}}_{i}^{(1)}.{\widehat{Y}}_{i}^{(2)}.{\widehat{Y}}_{i}^{(3)}.{\widehat{Y}}_{i}^{(4)}\right)\). Based on the Diamond distance, the CV is defined as,

Remark 1

In k-fold cross-validation (CV), the original samples are randomly partitioned into k equal size subsamples. Of these, one subsample is retained as the validation data for testing the model, and the remaining k − 1 subsamples are used as training data. The CV process is then repeated k times, with each of the k subsamples used exactly once as the validation data. The k results can then be averaged to produce a single estimation. In total, k fit models and k validation statistics are derived. The model giving the best validation statistic is chosen as the final model. This method is specifically useful for small data sets, because it makes efficient use of the limited data samples. In this paper we assume \(k=5\).

3 Smoothing Methods for Trapezoidal Fuzzy Numbers

The basic idea of smoothing is that if a function f is fairly smooth, then the observations made at and near \(x\) should contain information about value of \(x\). Thus, it should be possible to use local averaging of the data \(x\) to construct an estimator for \(F(x)\) which is called the smoother. There are several smoothing techniques. We proposed K-NNK-S and (L-L-S) methods for trapezoidal variable in this section.

In the following discussion, asymmetric trapezoidal fuzzy numbers are applied as asymmetric trapezoidal membership functions for deriving nonparametric regression model based on the smoothing parameters.

These models are considered as univariate fuzzy nonparametric regression model:

where Y is a trapezoidal fuzzy dependent variable as output and \(x\) is a crisp independent variable as the input whose domain and range are assumed to be D and \({\mathbb{R}}\). Also \(F\left(x\right)\) is the mapping \(D\to F\). The definition of the three smoothing methods for trapezoidal fuzzy variables is as follows:

3.1 Local Linear Smoothing Method (L-L-S)

Razzaghnia et al. [25] proposed the first linear regression analysis with trapezoidal coefficients. The asymmetric trapezoidal fuzzy numbers are applied as asymmetric trapezoidal membership functions for deriving bivariate regression model. A univariate regression model can be expressed as

This model can be rewritten as

where \(i=1. 2. \dots .n\), is the sample size and \({\stackrel{\sim }{Y}}_{i}=\left({Y}_{i}^{(1)}.{Y}_{i}^{(2)}.{Y}_{i}^{(3)}.{Y}_{i}^{(4)}\right)\) is the observed value for \(i=1. 2. \dots .n\). So \({\widehat{\stackrel{\sim }{Y}}}_{i.L}\) and \({\widehat{\stackrel{\sim }{Y}}}_{i.R}\) are the left bound and right bound of the predicted \({\widehat{\stackrel{\sim }{Y}}}_{i}\) at membership level h. Also \({\stackrel{\sim }{Y}}_{i.L}\) and \({\stackrel{\sim }{Y}}_{i.R}\) are left bound and right bounds of observed \({\stackrel{\sim }{Y}}_{i}\) at membership level h. Thereupon,

Let \(\left({X}_{i}.{\stackrel{\sim }{Y}}_{i}\right)\) be a sample of the observed crisp inputs and trapezoidal fuzzy outputs with underlying fuzzy regression function of model (6).

\(F(x)\) is estimated at any \(x\in D\) based on \((x_{i} .\tilde{Y}_{i} )\) for \(i=1. 2. \dots .n\). When the local linear smoothing technique is used, we shall estimate \({Y}^{\left(1\right)}\left(x\right). {Y}^{\left(2\right)}\left(x\right). {Y}^{\left(3\right)}\left(x\right)\) and \({Y}^{(4)}(x)\) for each \(x\in D\) by using the distance proposed by Diamond [4] as a measure of the fit. This distance is used to fit the fuzzy nonparametric model (5).

Let \({Y}^{\left(1\right)}\left(x\right).{Y}^{\left(2\right)}\left(x\right).{Y}^{\left(3\right)}\left(x\right)\) and \({Y}^{(4)}(x)\) have continuous derivatives in the domain \(x\in D\). Then for a given \({x}_{0}\in D\), The Taylor expansions of \({Y}^{\left(1\right)}\left(x\right).{Y}^{\left(2\right)}\left(x\right).{Y}^{\left(3\right)}\left(x\right)\) and \({Y}^{(4)}(x)\) can be locally approximated in neighborhood of \({x}_{0}\), respectively by the following linear functions:

where \({Y{^{\prime}}}^{\left(1\right)}\left({x}_{0}\right).{Y{^{\prime}}}^{\left(2\right)}\left({x}_{0}\right). {Y{^{\prime}}}^{(3)}({x}_{0})\) and \({{Y}{^{\prime}}}^{(4)}({x}_{0})\) are respectively, the derivatives of \({Y}^{\left(1\right)}\left(x\right).{Y}^{\left(2\right)}\left(x\right).{Y}^{\left(3\right)}\left(x\right)\) and \({Y}^{\left(4\right)}\left(x\right)\) based on Diamond distance. The local linear smoothing method estimated at \(x_{0}\) is:

by minimizing,

with respect to \({Y}_{i}^{\left(1\right)}.{Y}_{i}^{\left(2\right)}.{Y}_{i}^{\left(3\right)}.{Y}_{i}^{\left(4\right)}\) and \({\widehat{Y}}_{i}^{\left(1\right)}.{\widehat{Y}}_{i}^{\left(2\right)}.{\widehat{Y}}_{i}^{\left(3\right)}.{\widehat{Y}}_{i}^{\left(4\right)}\) for the given kernel k (\(\cdot\)) and the smoothing parameter h, where \({K}_{h}\left(\left|{x}_{i}-{x}_{0}\right|\right)=k\left(\frac{\frac{\left|{x}_{i}-{x}_{0}\right|}{h}}{h}\right)\) for \(i=1. 2. \dots .n\), are a sequence of weights at \({x}_{0}\). The two commonly use.

d kernel functions are parabolic shape functions:

and Gaussian function:

Also, by substituting (12) into (14), the following can be obtained

By solving this weighted least-squares problem, the following can be obtained for \({Y}^{\left(1\right)}\left(x\right)\text{,}{Y}^{\left(2\right)}\left(x\right)\text{,}{Y}^{\left(3\right)}\left(x\right)\text{,}{Y}^{\left(4\right)}\left(x\right)\) and \({{Y}^{^{\prime}}}^{\left(1\right)}\left(x\right)\text{,}\;{{Y}^{^{\prime}}}^{\left(2\right)}\left(x\right)\text{,}\;{{Y}^{^{\prime}}}^{\left(3\right)}\left(x\right)\text{,}\;{{Y}^{^{\prime}}}^{\left(4\right)}\left(x\right)\) at \({x}_{0}\). So the estimation \(F(x)\) at \({x}_{0}\) is: \(\widehat{\stackrel{\sim }{Y}}\left({x}_{0}\right)=({\widehat{Y}}^{\left(1\right)}\left({x}_{0}\right).{\widehat{Y}}^{\left(2\right)}\left({x}_{0}\right).{\widehat{Y}}^{\left(3\right)}\left({x}_{0}\right).{\widehat{Y}}^{\left(4\right)}\left({x}_{0}\right))\). Equation (17) has eight unknown parameters \({Y}^{\left(1\right)}\left(x\right).{Y}^{\left(2\right)}\left(x\right).{Y}^{\left(3\right)}\left(x\right).{Y}^{\left(4\right)}\left(x\right)\) and \({{Y}^{^{\prime}}}^{\left(1\right)}\left({x}_{0}\right).{{Y}^{^{\prime}}}^{\left(2\right)}\left({x}_{0}\right).{{Y}^{^{\prime}}}^{\left(3\right)}\left({x}_{0}\right).{{Y}^{^{\prime}}}^{\left(4\right)}\left({x}_{0}\right)\). In order to derive a formula for the unknown parameters of nonparametric regression based on minimizing this distance, the derivatives of Eq. (8) with respect to the eight unknown parameters need to be derived, set to zero and solve for the eight unknown parameters.

According to the principle of the weighted least-squares (and utilizing matrix notations), we can obtain

where \(X\left({x}_{0}\right)=\left(\begin{array}{cc}1& {x}_{1}-{x}_{0}\\ 1& {x}_{2}-{x}_{0}\\ \begin{array}{c}\vdots \\ 1\end{array}& \begin{array}{c}\vdots \\ {x}_{n}-{x}_{0}\end{array}\end{array}\right). \, {\stackrel{\sim }{Y}}^{(k)}=\left(\begin{array}{c}\begin{array}{c}{{Y}_{1}}^{(k)}\\ {{Y}_{2}}^{(k)}\end{array}\\ \begin{array}{c}\vdots \\ {{Y}_{n}}^{(k)}\end{array}\end{array}\right). \, \, \, \, {k }\text{=1, 2, 3, 4,} \,\) and \(W\left({x}_{0};h\right)={\text{Diag}}\left({K}_{h}\left(\left|{x}_{1}-{x}_{0}\right|\right).{K}_{h}\left(\left|{x}_{2}-{x}_{0}\right|\right).\dots .{K}_{h}\left(\left|{x}_{n}-{x}_{0}\right|\right)\right).\) is a \(n\times n\) diagonal matrix with its diagonal elements being \({K}_{h}(\left|{x}_{i}-{x}_{o}\right|)\) for \(i=1. 2. \dots .n\). If we suppose \({e}_{1}={(1. 0)}^{T}\) and \(H\left({x}_{0};h\right)={({X}^{T}\left({x}_{0}\right)W\left({x}_{0};h\right)X\left({x}_{0}\right))}^{-1}{X}^{T}\left({x}_{0}\right)W\left({x}_{0};h\right)\), then the estimate of \(F(x)\) at \({x}_{0}\) would be:

3.2 K-Nearest Neighbor Smoothing (K-NN)

The K-NN weight sequence was introduced by Loftsgaarden and Quesenberry [26] in the related field of density estimation and has been used by Cover and Hart [27] for classification purposes. The K-NN smother is defined as:

where\({\omega }_{j}\left(x\right)\begin{array}{c}for\end{array} j=1. 2.\dots .n\), is a the weight sequence at \(x\) and is defined as \(:\)

where \({J}_{x}\) is one of the K-nearest observations to \(x\) and \(\widehat{\stackrel{\sim }{{Y}_{i}}}\) is the estimate of the observations \(\stackrel{\sim }{{Y}_{i}}\)for \(i=1. 2. \dots . n\) and \({\stackrel{\sim }{Y}}_{i}=({Y}_{i}^{\left(1\right)}.{Y}_{i}^{\left(2\right)}.{Y}_{i}^{\left(3\right)}.{Y}_{i}^{\left(4\right)})\), asymmetric trapezoidal fuzzy numbers. Based on (20) and (21) we have

The K-NN smoothing parameter is the neighborhood size k. So if a relatively small neighborhood size is used, this will increase the regression noise; on the other hand, a relatively large neighborhood size will increase the regression error. So Remark 1 describes leave-one-out cross-validation for finding k optimal value which can be obtained by minimizing cross-validation criterion.

3.3 Kernel Smoothing (K-S)

K-NN smoothing is a weighted averaging neighborhood in which weights in neighborhood are treated equally. In kernel smoothing S(x) is defined by a fixed neighborhood around x. It is determined by kernel function and band of h. The fuzzy regression equations for kernel smoothing and K-NN smoothing are the same and represented by Eq. (20). In kernel smoothing method, \({\omega }_{j}\left({x}_{o}\right)\) for \(j=1.\dots .n\), is defined as

in which weight sequence is defined by \({K}_{h}\left(x\right)=\frac{1}{h}K\left(\frac{x}{h}\right)\), which is the kernel with scale factor. So the kernel smoothing parameter is band h and weight depends on smoothing parameter h.

3.4 Selection of Smoothing Parameters

The most important aspect for averaging techniques and local linear smoothing method is selecting the size of neighborhood to average k and parameter h. There are different methods for selecting parameter h such as the cross-validation method, and generalized cross validation which are used to obtain parameter h. Let \(\widehat{\stackrel{\sim }{Y}}\left({x}_{i}.h\right)=\left({\widehat{Y}}^{\left(1\right)}\left({x}_{i}.h\right).{\widehat{Y}}^{\left(2\right)}\left({x}_{i}.h\right).{\widehat{Y}}^{\left(3\right)}\left({x}_{i}.h\right).{\widehat{Y}}^{\left(4\right)}\left({x}_{i}.h\right)\right).\)

The fuzzified cross-validation procedure (\(CV\)) for selecting parameter h in local linear smoothing method based on Diamond distance is defined as:

where minimization gives the optimal value h, \(CV\left({h}_{0}\right)={\mathrm{min}}_{h>o}CV\left(h\right)\).

Indeed, \(h\) can be obtained by \(\mathrm{minimization}\) of \(CV\left(h\right)\). The selected optimal value of h by the \(CV\left(h\right)\) depends on the degree of smoothness of \({Y}_{iL}\) and \({Y}_{iR}\). Large value of h leads to lack-of-fit and small value of h to an over-fit.

Also the cross validation leave-one-out technique is used for selecting values k and h in K-NN and KS methods that are obtained by minimization of the cross-validation criterion. According to Stone [28], the \(CV\) criterion is defined as

where \({\widehat{\stackrel{\sim }{Y}}}_{b}\left({x}_{i}.{o}_{i}\right)=\sum_{j=1j\ne i}^{n}{\omega }_{j}({x}_{i}){\stackrel{\sim }{Y}}_{j}\) and \({D}_{i}\left(b\right)\) is the measure of difference and \({C}_{i}\left(b\right)\) is the measure of inclusion as in:

where P and Q are penalty terms defined as:

\(P=\left\{\begin{array}{c}1; \quad if {\left[{\stackrel{\sim }{Y}}_{i}\right]}_{\alpha }^{L}\le {\left[{\widehat{\stackrel{\sim }{Y}}}_{b}\left({x}_{i}.{o}_{i}\right)\right]}_{\alpha }^{L}.\\ \begin{array}{ccc}\begin{array}{cc}\begin{array}{cc}\begin{array}{cc}0;& \end{array}& \end{array}& \end{array}& & \end{array}Otherwise.\end{array}\right.\) and \(Q=\left\{\begin{array}{c}1; \quad if {\left[{\stackrel{\sim }{Y}}_{i}\right]}_{\alpha }^{R}\le {\left[{\widehat{\stackrel{\sim }{Y}}}_{b}\left({x}_{i}.{o}_{i}\right)\right]}_{\alpha }^{R}.\\ \begin{array}{ccc}\begin{array}{cc}\begin{array}{cc}\begin{array}{cc}0;& \end{array}& \end{array}& \end{array}& & \end{array}Otherwise.\end{array}\right.\)

To obtain\({D}_{i}\left(b\right)\), we will use difference measure of a trapezoidal fuzzy number A, by using the method of Chang and Lee [5], which is defined as: \(OM\left(A\right)={\int }_{0}^{1}\stackrel{\sim }{\omega }{[\upchi }_{1}\left(\nu \right){A}_{L}\left(\nu \right)+{\upchi }_{2}\left(\nu \right){A}_{R}\left(\nu \right)]d\nu .\) Thus \({D}_{i}\left(b\right)\) is calculated using Eq. (26). For the calculation of parameter b, we minimize the CV criterion Eq. (25) such that \({b}^{*}=argmin\left\{CV(b)\right\}\). So \({b}^{*}\) is the neighborhood size k in K-NN smoothing method and band h in kernel smoothing method.

4 Extension of the Proposed Method to Multivariate Input

It is straightforward to extent the proposed methods to the case of multivariate input. In fact, let \(X=\left({x}_{1}. {x}_{2}.\dots .{x}_{p}\right)\) be a p-dimensional crisp input and Y be a trapezoidal fuzzy output. The fuzzy nonparametric regression model can be written as follows:

4.1 K-Nearest Neighbor Smoothing (K-NN)

The K-NN smother is defined as:

where \({\omega }_{j}\left(x\right)\) for\(j=1. 2. \dots . n\), is a the weight sequence at \(x\) and is defined as \(:\)

where \({J}_{x}\) is one of the K-nearest observations to \(x\) and \(\widehat{{\stackrel{\sim }{Y}}_{i}}\), the estimate of the observations \({\stackrel{\sim }{Y}}_{i}\) for \(i=1. 2. \dots .n\) and \({\stackrel{\sim }{Y}}_{i}=\left({Y}_{i}^{\left(1\right)}.{Y}_{i}^{\left(2\right)}.{Y}_{i}^{\left(3\right)}.{Y}_{i}^{\left(4\right)}\right)\) be asymmetric trapezoidal fuzzy numbers. Based on (29) and (30) we have

The K-NN smoothing parameter is the neighborhood size k. \({\widehat {\tilde Y}_i}\left(l=1. 2. \dots .p\right)\) are computed for \(X=\left({x}_{1}. {x}_{2}. \dots . {x}_{p}\right)\); then \(\widehat{{\stackrel{\sim }{Y}}_{i}}=\frac{1}{n}{\widehat{\stackrel{\sim }{Y}}}_{il}\).

4.2 Kernel Smoothing Method

This is the same as K-Nearest neighbor smoothing method in which \({\omega }_{j}({X}_{o})\) for \(j=1. 2. \dots .n\), at \({X}_{0}\) are defined as:

4.3 Local Linear Smoothing

Suppose that \({Y}^{\left(1\right)}\left(X\right).{Y}^{\left(2\right)}\left(X\right).{Y}^{\left(3\right)}\left(X\right)\) and \({Y}^{\left(4\right)}\left(X\right)\) have continuous derivatives in the domain\(x\in D\). Then for a given \({x}_{0}\in D\) and Taylor's expansion, \({Y}^{\left(1\right)}\left(X\right).{Y}^{\left(2\right)}\left(X\right).{Y}^{\left(3\right)}\left(X\right)\) and \({Y}^{\left(4\right)}\left(X\right)\) can be locally approximated in neighborhood of \({x}_{0}\), respectively by the following linear functions:

where \({Y}^{\left(1\right)\left({x}_{j}\right)}\left({x}_{0}\right).{Y}^{\left(2\right)\left({x}_{j}\right)}\left({x}_{0}\right).{Y}^{(3)({x}_{j})}({x}_{0})\) and \({Y}^{(4)({x}_{j})}({x}_{0})\) are, respectively, the derivatives of \({Y}^{\left(1\right)}\left(X\right).{Y}^{\left(2\right)}\left(X\right).{Y}^{\left(3\right)}\left(X\right)\) and \({Y}^{\left(4\right)}\left(X\right)\) with respect to \(\left({x}_{j}\right)\) based on Diamond distance and the local linear smoothing method is estimated at \({x}_{0}\),

by minimizing:

with respect to \({Y}_{i}^{\left(1\right)}.{Y}_{i}^{\left(2\right)}.{Y}_{i}^{\left(3\right)},.{Y}_{i}^{\left(4\right)}\) and \({\widehat{Y}}_{i}^{\left(1\right)}.{\widehat{Y}}_{i}^{\left(2\right)}.{\widehat{Y}}_{i}^{\left(3\right)}.{\widehat{Y}}_{i}^{\left(4\right)}\) for the given kernel k(.) and smoothing parameter h, where \({K}_{h}\left(\Vert {X}_{i}-{X}_{0}\Vert \right)=k\left(\frac{\frac{\Vert {X}_{i}-{X}_{0}\Vert }{h}}{h}\right)\) for \(i=1. 2. \dots .n\) are a sequence of weights at\({X}_{0}\). Also, by substituting (33) into (35), the following can be obtained \(:\)

where \(\Vert {X}_{i}-{X}_{0}\Vert\) is Euclidean distance between \({X}_{i}\) and \({X}_{0}\) and

where \(X\left({x}_{0}\right)=\left(\begin{array}{cc}1& {x}_{11}-{x}_{01} ... {x}_{1p}-{x}_{0p}\\ 1& {x}_{21}-{x}_{01} ... {x}_{2p}-{x}_{0p}\\ \begin{array}{c}\vdots \\ 1\end{array}& \begin{array}{c}\vdots \\ {x}_{n1}-{x}_{01} ...{ x}_{np}-{x}_{0p}\end{array}\end{array}\right).\) \({\stackrel{\sim }{Y}}^{(k)}=\left(\begin{array}{c}\begin{array}{c}{{Y}_{1}}^{(k)}\\ {{Y}_{2}}^{(k)}\end{array}\\ \begin{array}{c}\vdots \\ {{Y}_{n}}^{(k)}\end{array}\end{array}\right). \, \, {\text{k}}=1, 2, 3, 4,\) and \(W\left({X}_{0};h\right)={\text{Diag}}({K}_{h}\left(\Vert {X}_{1}-{X}_{0}\Vert \right).{K}_{h}\left(\Vert {X}_{2}-{X}_{0}\Vert \right).\dots .{K}_{h}(\Vert {X}_{p}-{X}_{0}\Vert ))\)

is a \(n\times n\) diagonal matrix with its diagonal elements being \({K}_{h}(\Vert {x}_{i}-{x}_{o}\Vert )\) for \(i=1. 2.\dots .n\). If we suppose \({e}_{1}={(1. 0)}^{T}\) and

then the estimate of \(F(x)\) at \({x}_{0}\)is

5 Adaptive Neural Fuzzy Inference System (ANFIS)

ANFIS borrows its name from fuzzy deduction system which are based on open adaptive networks or adaptive neural fuzzy detection systems. It is a kind of artificial neural network based on Takagi–Sugeno fuzzy inference system. The network is a famous hybrid method, in which combinations of nodes \({o}_{k,i}\), are placed as layers for performing specific functions (Fig. 1).

Referring to Fig. 2, the first and second layers are called, the input and fuzzification layers. The third layer expresses the rules and number of Sugeno fuzzy logic deduction system. The output of each rule node \({\mu }_{{\stackrel{\sim }{A}}_{i}}\left({x}_{j}\right)\) is the multiplication of membership degrees coming from the second layer. The outputs of layer three, then drives the layer forth whose task is calculation of the normalized ignition level for each rule. The fifth layer is called the debugging layer, which produces the weighted outputs from the previous layers. The output of rules is calculated for each node and the last layer takes all the outputs then gives an algebraic summation. Table 1 presents layers of ANFIS.

5.1 The Proposed Hybrid Algorithm

The proposed hybrid algorithm benefits from both post-propagation and propagation techniques. First, the fuzzy least squares method is used to optimize the secondary parameters. In the second stage, when secondary parameters are obtained, the initial parameters are optimized using the slope slider algorithm. Here, we have used the MATLAB software package to implement the algorithms. Figure 3 shows the proposed hybrid algorithm.

Report network with the lowest error in the data output of the test along with the corresponding results.

6 Examples

In this section, a simulation examples and a numerical one are presented, in which the input is a crisp number and the output, a trapezoidal fuzzy number. We estimate the values of the parameters using ANFIS methods; then these results are compared with each other based on CV and the charts.

Example 1

The grinding is a process in which using an abrasive material, along with a grinding wheel. The aim is providing surfaces with precise tolerances, create optimal surface, creating accurate surface form and machining of hard and brittle materials (see Fig. 4).

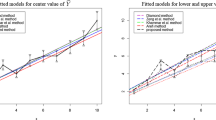

The trapezoidal fuzzy numbers have been constructed using Table 3 in Ref. [29]. The \({\stackrel{\sim }{Y}}_{i}\) is the roughness of a workpiece surface and input \(\mathrm{x}\) is the feed speed of a grinding wheel. The output \({\stackrel{\sim }{Y}}_{i}\) is measured by symmetric trapezoidal fuzzy numbers as \({\stackrel{\sim }{Y}}_{i}=\left({Y}_{i}^{(1)}.{Y}_{i}^{(2)}.{Y}_{i}^{(3)}.{Y}_{i}^{(4)}\right)\). The estimation is performed using ANFIS method. Thus, regarding this dataset, the nonparametric regression model is an appropriate one. The proposed method is used to fit the regression model while as before, CV is computed for validation purposes. In the proposed method, we use 5-layer validation technique. The results of the parameters estimation and those of the corresponding errors are listed in Tables 2 and 3, respectively. In this example, the network was convergent and, as a justification, the error value reaches a constant value with acceptable level after the training. The observed values and the predicted values of proposed method is shown in Fig. 5. The center lines, the upper and lower boundary lines of the real function and their estimates are plotted in the figure. The observed and estimated values of different smoothing methods (K- S, L-L-S and K-NN) are shown in Fig. 6, 7 and 8, respectively. In these figures, the center lines, the upper and lower boundary lines of the real function and their estimates are plotted. Moreover, the low CV value, as shown in Table 2, indicates that the proposed method tends to produce predicted values close to the observed values and in turn, has a remarkable accuracy in prediction. For different fitting methods, the different smoothing methods are applied such as K-NN, K- S and L-L-S technique. The value of parameter smoothing method in K-NN and K- S method is determined by cross validation method. Table 3 shows the values of the primary and secondary parameters of proposed method.

Example 2

In this example the function is considered to be\(f\left(x\right)=\frac{{x}^{2}}{5}+2{e}^\frac{x}{10}\). Suppose that \({x}_{i}s\) are uniformly random variables,within the interval [0,1] and i = 1, 2, …,100,

So that \({y}_{i}=f\left({X}_{i}\right)+rand[-0.5. 0.5]\) and \({e}_{i}=1/4f\left({X}_{i}\right)+rand[0. 1].\)

In this example we estimate the values by using the proposed method (ANFIS). So in this dataset, the nonparametric regression model is a convenient model. The proposed method is used to fit the regression model and, like the example above, we compute the amount of CV to evaluate the model. In the proposed method, we use 5-layer Validation technique. The low CV value, as shown in Table 4, indicates that the proposed method tends to produce predicted values close to the observed values and in turn, has a remarkable accuracy in prediction. The behavior of the proposed method is shown in Fig. 9, 10, 11 and 12 (like the above example). The values of the parameters obtained in the proposed algorithm are shown in Table 5, respectively. In this example, this network is convergent and, as can be seen, after the training, the error value reaches a constant value. Linear smoothing method, K-NN and kernel smoothing are applied to the fitting model. Gauss and Parabolic shape kernels are used to produce the weight sequence for local linear smoothing and kernel smoothing methods.

7 Conclusions

The method of adaptive fuzzy inference system for fitting non-parametric fuzzy regression model with crisp inputs and fuzzy outputs was investigated. Then two hybrid fuzzy regression algorithms have been proposed. Within the algorithm, a reduction slope method was used to optimize the initial parameters (fuzzy weights). The secondary parameters have been estimated by least squares Diamond method. It has been considered non-fuzzy inputs and trapezoidal fuzzy outputs. Simulation and numerical examples have been used to the evaluate performance of method. Using the results, the conducted simulation experiments are shown that the performance of the proposed method is better than that of the smoothing methods, which reduces the CV. In the proposed approach, when the observations numbers are increased, the accuracy is increased, in comparison with the existing smoothing methods. These advantages would make our algorithm an acceptable one to generate nonparametric regression functions. Thus current proposed method reduced the fuzziness of the system and it has faster adaptation.

Abbreviations

- \({MF}_{i}\) :

-

Membership function

- \(\stackrel{\sim }{{Y}_{i}}\) :

-

A trapezoidal fizzy number

- \({o}_{k.i}\) :

-

Placed as layers

- \(d^{2} \left( {\tilde{Y}_{i} \cdot \widehat{{Y_{i} }}} \right)\) :

-

Diamond distance measures

- \(\left( \widehat{{\widetilde{{Y_{i} }}}} \right)\) :

-

A predicted of a trapezoidal fuzzy number

- \({\omega }_{j}\left(x\right)\) :

-

Weight sequence at \(x\)

- \(CV\) :

-

Cross-validation

- k(.):

-

Kernel smoothing

References

Zadeh, A.: Fuzzy sets. Inform Control. 8, 338–353 (1965)

Tanaka, H., Uejima, S., Asia, K.: Linear regression analysis with fuzzy model. IEEE Trans. Syst. Man Cybernet. 12, 903–907 (1982)

Ishibuchi, H., Tanaka, H.: Fuzzy regression analysis using neural networks. Fuzzy Sets Syst. 50, 257–265 (1992)

Diamond, P.: Fuzzy least squares. Inf. Sci. 46, 141–157 (1988)

Chang, P.T., Lee, E.S.: A generalized fuzzy weighted least-squares regression. Fuzzy Sets Syst. 82, 289–298 (1996)

Yang, M.S., Lin, T.S.: Fuzzy least-squares linear regression analysis for fuzzy input-output data. Fuzzy Sets Syst. 126, 389–399 (2002)

Kartalopous, S.: Underestanding neural networks and fuzzy logic. IEEE Press, New York (1996)

Tanaka, H., Hayashi, I., Watada, J.: Possibilistic linear regression analysis for fuzzy data. Eur. J. Oper. Res. 40, 389–396 (1989)

Cheng, C.B., Lee, E.S.: Fuzzy regression with radial basis function networks. Fuzzy Sets Syst. 119, 291–301 (2001)

Arnold, S.: The merging of neural networks, fuzzy logic, and genetic algorithms. Insur. Math. Econ. 31(1), 115–131 (2002)

Mosleh, M., Otadi, M., Abbasbandy, S.: Evaluation of fuzzy regression model by fuzzy neural networks. J. Comput. Appl. Math. 234, 825–834 (2010)

Danesh, S., Farnoosh, R., Razzaghnia, T.: Fuzzy nonparametric regression based on an adaptive neuro-fuzzy inference system. Neuro comput. 173, 1450–1460 (2016)

Sun, J., Lu, Q.: Regression analysis of a kind of trapezoidal fuzzy numbers based on a shape preserving operator. Data Anal. Inf. Process. 5, 96–114 (2017)

Cheng, C.B., Lee, E.S.: Nonparametric fuzzy regression k-NN and kernel smoothing techniques. Comput. Math. Appl. 38, 239–251 (1999)

Razzaghnia, T., Danesh, S., Maleki, A.: Hybrid fuzzy regression with trapezoidal fuzzy data. In: Proceedings of the SPIE. 8349, 834921–1–6 (2011).

Razzaghnia, T., Danesh, S.: Nonparametric regression with trapezoidal fuzzy data. Int. J. Recent Innov. Trends Comput. Commun. (IJRITCC) 3, 3826–3831 (2015)

Razzaghnia, T.: Regression parameters prediction in data set with outliers using neural network. Hacettepe J. Math. Stat. 48(4), 1170–1184 (2019)

Škrjanc, I., Antonio Iglesias, J., Sanchis, A., Leite, D., Lughofer, E., Gomide, F.: Evolving fuzzy and neuro-fuzzy approaches in clustering, regression, identification, and classification: a survey. Inf. Sci. 490, 344–369 (2019)

Junhong, L., Zeng, W.X., Yin, Q.: A new fuzzy regression model based on least absolute deviation. Eng. Appl. Artif. Intell. 52, 54–64 (2016)

Deng, W., Zhao, A.: A novel collaborative optimization algorithm in solving complex optimization problems. Soft Comput. 21(15), 4387–4398 (2017)

Khosravia, K., Shahabib, H.: A comparative assessment of flood susceptibility modeling using multi-criteria decision-making analysis and machine learning methods. J. Hydrol. 573, 311–323 (2019)

Liu, T., Zhang, W., Mc Lean, P.: Electronic nose-based odor classification using genetic algorithms and fuzzy support vector machines. Int. J. Fuzzy Syst. 20, 1309–1320 (2018)

Dubois, D., Prade, H.: Fuzzy Sets and Systems: Theory and Application. Academic Press, New York (1980)

Ishibuchi, H., Nii, M.: Fuzzy regression using asymmetric fuzzy coefficients and fuzzified neural networks. Fuzzy Sets Syst. 119, 273–290 (2001)

Razzaghnia, T., Pasha, E., Khorram, E., Razzaghnia, A.: Fuzzy linear regression analysis with trapezoidal coefficients. First Joint Congress On Fuzzy and Intelligent Systems. Aug. 29–31 (2007).

Loftsgaarden, D.O., Quesenberry, G.P.: A nonparametric estimate of a multivariate density function. Ann. Math. Stat. 36, 1049–1051 (1965)

Hart, J.D.: Nonparametric Smoothing and Lack-of-Fit Tests. Springer, New York (1997)

Stone, M.: Cross validation choice and assessment of statistical predictions. J. Roy. Stat. Soc. 36, 111–147 (1974)

Lee, H., Tanaka, H.: Fuzzy approximations with non-symmetric fuzzy parameters in fuzzy regression analysis. J. Oper. Res. Soc. Jpn. 42, 98–112 (1999)

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical Approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Rights and permissions

About this article

Cite this article

Naderkhani, R., Behzad, M.H., Razzaghnia, T. et al. Fuzzy Regression Analysis Based on Fuzzy Neural Networks Using Trapezoidal Data. Int. J. Fuzzy Syst. 23, 1267–1280 (2021). https://doi.org/10.1007/s40815-020-01033-2

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40815-020-01033-2