Abstract

This paper constructs Wolfe and Mond–Weir dual models for interval-valued pseudoconvex optimization problems with equilibrium constraints and provides weak and strong duality theorems for them, using the notion of contingent epiderivatives with pseudoconvex functions in real Banach spaces. First, we introduce a Mangasarian–Fromovitz type regularity condition and the Wolfe and Mond–Weir dual models for such problems. Second, under suitable pseudoconvexity assumptions on the objective and constraint functions, we derive weak and strong duality theorems for the interval-valued pseudoconvex optimization problem with equilibrium constraints and its Mond–Weir and Wolfe dual problems. As an application, sufficient optimality conditions at a GA-stationary vector of such a problem are presented. We also give several examples that illustrate our results.

1 Introduction

Optimality conditions and duality for interval-valued optimization problems with equilibrium constraints play a crucial role in nonlinear analysis and optimization theory because of their many fields of application. Such problems arise frequently in robotics, fuzzy sets, robust optimization, engineering design, optimal control, image restoration, power unit problems, optimal shape design, and molecular distance geometry; see, e.g., Bot et al. (2009), Bonnel et al. (2005), Iusem and Mohebbi (2019), López and Still (2007), Luo et al. (1996), Mangasarian (1965, 1969), Mond and Weir (1981), Movahedian and Nabakhtian (2010), Pandey and Mishra (2016, 2018), Suneja and Kohli (2011), Ye (2005), Wolfe (1961) and the references therein. The interval-valued optimization problem with equilibrium constraints extends the constrained interval-valued optimization problem involving set, generalized inequality and equality constraints, which belongs to the class of constrained vector optimization problems in which the coefficients of the objective and constraint functions are closed intervals. These problems provide an alternative way of handling uncertainty in vector optimization. Extending the concept of lower–upper (in short, LU) optimal solution in Wu (2008) and in Jayswal et al. (2011), we obtain the notion of LU-optimal solution for interval-valued optimization problems with constraints, and even for vector optimization problems with equilibrium constraints. Optimality and duality for interval-valued nonlinear programming problems have been studied extensively in recent years, e.g., in Bhurjee and Panda (2015, 2016), Bot and Grad (2010), Jayswal et al. (2011, 2016), Luu and Mai (2018), More (1983) and Wu (2008), while the construction of Wolfe and Mond–Weir dual models for them has not yet been established. We therefore continue to develop these results on optimality and duality for the interval-valued optimization problem with equilibrium constraints, and we present an application of the results obtained for these dual models.

Many tools involving generalized derivatives have been used to establish optimality conditions as well as strong and weak duality theorems for constrained interval-valued optimization problems. For example, under suitable assumptions on convexificators, Jayswal et al. (2016) obtained duality and optimality conditions for interval-valued nonlinear programming problems without constraints in finite-dimensional spaces. More recently, Luu and Mai (2018) gave Fritz John and Karush–Kuhn–Tucker necessary and sufficient optimality conditions for local lower–upper optimal solutions of interval-valued optimization problems with set, inequality and equality constraints, for locally Lipschitz functions and functions regular in the sense of Clarke. They also used these results to establish a Mond–Weir type dual model for such problems. As far as we know, there are no results on strong and weak duality theorems or on optimality conditions for the interval-valued optimization problem with equilibrium constraints with pseudo-convex functions in terms of contingent epiderivatives. The notion of contingent epiderivatives with pseudoconvex functions was first introduced in the book of Aubin and Frankowska (1990) and has since been used by many other authors; it is one of the effective calculus tools for establishing optimality conditions in vector optimization (see, e.g., Aubin 1981; Aubin and Frankowska 1990; Jahn and Khan 2003, 2013; Jahn and Rauh 1997; Jiménez and Novo 2008; Jiménez et al. 2009; Luc 1989, 1991; Luu and Hang 2015; Luu and Su 2018; Rodríguez-Marín and Sama 2007a, b; Su 2016, 2018; Su and Hien 2019 and the references therein). This notion plays a key role in constructing Mond–Weir and Wolfe dual models for interval-valued optimization problems with equilibrium constraints, as well as in establishing weak and strong duality theorems for them. Therefore, it is of interest to construct Wolfe and Mond–Weir dual models for interval-valued optimization problems with equilibrium constraints and to investigate weak and strong duality theorems for them. An application of these results to the GA-stationary vector is also given.

Motivated by these observations, our main purpose in this paper is to construct Mond–Weir and Wolfe dual models for the interval-valued optimization problem with equilibrium constraints in real Banach spaces in terms of contingent epiderivatives with pseudoconvex functions (called the interval-valued pseudoconvex optimization problem with equilibrium constraints in this paper). Using these epiderivatives together with pseudoconvexity, we derive weak and strong duality theorems. As an application, sufficient optimality conditions for lower–upper optimal solutions (called LU-optimal solutions in this paper) of the interval-valued pseudoconvex optimization problem with equilibrium constraints are also provided. The paper is organized as follows. In Sect. 2, we give some preliminaries, recall the notions of contingent derivative, epiderivative and hypoderivative for extended real-valued functions, and then give two concepts of pseudoconvexity in Banach spaces. Section 3 formulates the Wolfe and Mond–Weir dual models for the interval-valued pseudoconvex optimization problem with equilibrium constraints. Section 4 presents weak and strong duality theorems for the interval-valued pseudoconvex optimization problem with equilibrium constraints and its Mond–Weir and Wolfe dual models. Section 5 deals with sufficient optimality conditions for the generalized alternatively stationary vector of the interval-valued pseudoconvex optimization problem with equilibrium constraints. Some examples are also provided to illustrate the results obtained.

2 Preliminaries

In this section, we recall some basic concepts and results, which will be needed in what follows. The set of real numbers (resp., natural numbers) is denoted by \(\mathbb {R}\) (resp., \(\mathbb {N}\)), and a sequence of positive real numbers \((t_n)_{n\ge 1}\) with limit 0 is written as \(t_n\rightarrow 0^+.\) We use the symbol \(\mathbb {R}^m_+\) to denote the nonnegative orthant of the \(m\)-dimensional Euclidean space \(\mathbb {R}^m,\) and the symbol \(\text {int}\mathbb {R}^m_+\) to denote the interior of \(\mathbb {R}^m_+.\) Let X be a real Banach space and \(X^*\) the topological dual space of X. We also use the symbol \(B({\overline{x}}, \delta ):=\{x\in X\,:\,\Vert x-{\overline{x}}\Vert <\delta \}\) to denote the open ball of radius \(\delta \) around \({\overline{x}}\in X.\) For each \(C\subset X,\) as usual we write \(\text {cl}C\) and \(\text {int}C\) for the closure and the interior of C, respectively. Let \(\emptyset \ne C\subset X\) and \({\overline{x}}\in \text {cl}C.\) The contingent cone to the set C at the point \({\overline{x}}\) is defined by

$$T(C, {\overline{x}}):=\big \{v\in X\,:\,\exists \,t_n\rightarrow 0^+,\,\exists \,v_n\rightarrow v\,\,\text {such that}\,\,{\overline{x}}+t_nv_n\in C\,\,\,\forall \,n\in \mathbb {N}\big \}.$$

The normal cone to the set C at the point \({\overline{x}},\) is defined by

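Let \({\mathscr {I}}\) denote the set of all closed and bounded intervals in \(\mathbb {R}.\) For \(A=[a_1, a_2]\in {\mathscr {I}}\) and \(B=[b_1, b_2]\in {\mathscr {I}},\) a partial ordering for intervals can be formulated as follows (see More 1983, for instance):

$$A\le _I B\,\,\Longleftrightarrow \,\,a_1\le b_1\,\,\text {and}\,\,a_2\le b_2;\qquad A<_I B\,\,\Longleftrightarrow \,\,A\le _I B\,\,\text {and}\,\,A\ne B.$$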

Let F be a mapping from X into \({\mathscr {I}},\) defined by

$$F(x)=[F_1(x),\,F_2(x)]\quad (\forall \,x\in X),$$

where \(F_1\) and \(F_2\) are two functions defined on X with \(F_1(x)\le F_2(x)\,\,\,(\forall \,x\in X). \) Let \(g: X\rightarrow \mathbb {R}^m,\) \(h: X\rightarrow \mathbb {R}^n,\) \(G: X\rightarrow \mathbb {R}^p\) and \(H: X\rightarrow \mathbb {R}^p\) be mappings defined on X and let C be a closed subset of X. Then \(g=(g_1,\ldots , g_m),\) \(h=(h_1, \ldots , h_n),\) \(G=(G_1,\ldots , G_p)\) and \(H=(H_1, \ldots , H_p).\) For convenience, we write \(g_{I_g({\overline{x}})}\) instead of \((g_i)_{i\in I_g({\overline{x}})};\) \(g\le 0\) stands for \(g_i\le 0\) for all \(i\in I_m;\) \(h=0\) for \(h_i=0\) for all \(i\in I_n;\) and \(G\ge 0\) (resp. \(H\ge 0\)) for \(G_i\ge 0\) (resp. \(H_i\ge 0\)) for every \(i\in I_p.\)

We consider an interval-valued pseudoconvex optimization problem with equilibrium constraints (shortly, IOPEC) of the following form:

where \(K:=\big \{x\in C: g(x)\le 0,\,h(x)=0,\,G(x)\ge 0,\, H(x)\ge 0,\,G(x)^TH(x)=0\big \}\) indicates the feasible set of problem (IOPEC), and \(^T\) signifies the transpose.
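As a concrete reading of the feasible set K, the following minimal Python sketch tests membership numerically. The function name `is_feasible`, the tolerance `tol`, and the one-dimensional toy data are illustrative assumptions and are not taken from the paper.

```python
from typing import Callable, Sequence

Values = Sequence[float]


def is_feasible(
    x: float,
    in_C: Callable[[float], bool],
    g: Callable[[float], Values],
    h: Callable[[float], Values],
    G: Callable[[float], Values],
    H: Callable[[float], Values],
    tol: float = 1e-9,
) -> bool:
    """Check x in K = {x in C : g(x) <= 0, h(x) = 0, G(x) >= 0, H(x) >= 0, G(x)^T H(x) = 0}."""
    gx, hx, Gx, Hx = g(x), h(x), G(x), H(x)
    if len(Gx) != len(Hx):
        raise ValueError("G and H must have the same number of components")
    # Inner product G(x)^T H(x); it must vanish on the feasible set.
    complementarity = sum(a * b for a, b in zip(Gx, Hx))
    return (
        in_C(x)
        and all(v <= tol for v in gx)
        and all(abs(v) <= tol for v in hx)
        and all(v >= -tol for v in Gx)
        and all(v >= -tol for v in Hx)
        and abs(complementarity) <= tol
    )


# Hypothetical toy data on X = R with C = [0, 1] (not the data of the paper's examples).
in_C = lambda x: 0.0 <= x <= 1.0
g = lambda x: [x - 1.0]
h = lambda x: [0.0]
G = lambda x: [x]
H = lambda x: [1.0 - x]
```

For this toy data the complementarity condition \(x(1-x)=0\) leaves only the two points 0 and 1 feasible, which shows how the equilibrium constraint can make K nonconvex even when C is an interval.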

We now introduce the notion of local LU-optimal solution to the (IOPEC).

Definition 1

A vector \({\overline{x}}\in K\) is said to be a local LU-optimal solution of the problem (IOPEC) if there exists a real number \(\delta >0\) such that there is no \(x\in K\cap B({\overline{x}}, \delta )\) satisfying

$$F(x)<_I F({\overline{x}}),$$

which means that \(F(x)\le _I F({\overline{x}})\) and \(F(x)\ne F({\overline{x}}).\)
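The ordering and Definition 1 can be illustrated with a small Python sketch. It assumes the usual LU ordering, \(A\le _I B\) if and only if \(a_1\le b_1\) and \(a_2\le b_2\), and it tests local LU-optimality only over a finite sample of feasible points on the real line, so it is a sanity check rather than a proof. The names `Interval`, `le_I`, `lt_I`, `is_local_lu_optimal` and the mapping `F_toy` are hypothetical.

```python
from typing import Callable, Iterable, NamedTuple


class Interval(NamedTuple):
    """Closed bounded interval [lo, hi] with lo <= hi."""
    lo: float
    hi: float


def le_I(a: Interval, b: Interval) -> bool:
    """A <=_I B: both endpoints of A are no larger than those of B."""
    return a.lo <= b.lo and a.hi <= b.hi


def lt_I(a: Interval, b: Interval) -> bool:
    """A <_I B: A <=_I B and A differs from B."""
    return le_I(a, b) and a != b


def is_local_lu_optimal(
    x_bar: float,
    feasible_sample: Iterable[float],
    F: Callable[[float], Interval],
    delta: float,
) -> bool:
    """True if no sampled feasible x in the open ball B(x_bar, delta) has F(x) <_I F(x_bar)."""
    f_bar = F(x_bar)
    return not any(
        abs(x - x_bar) < delta and lt_I(F(x), f_bar) for x in feasible_sample
    )


def F_toy(x: float) -> Interval:
    """Hypothetical interval-valued objective F(x) = [x, x + 1]."""
    return Interval(x, x + 1.0)
```

With the sample `[0.0, 1.0]`, the point 1.0 passes the test for `delta = 0.5` but fails for `delta = 2.0`, because the ball then contains 0.0 and \(F(0)<_I F(1)\); this is exactly the local character of Definition 1.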

We next describe the relationship between interval-valued optimization problems and vector optimization problems in some detail. If the mapping \({\widetilde{F}}: X\rightarrow \mathbb {R}^2\) is defined by \({\widetilde{F}}(x)=(F_1(x), F_2(x))\) for all \(x\in X,\) then a local LU-optimal solution of problem (IOPEC) is a local weak minimum of the following vector optimization problem with equilibrium constraints:

In fact, by the definition one finds \(\delta >0\) such that there is no \(x\in K\cap B({\overline{x}}, \delta )\) satisfying either \(F_i(x)<F_i({\overline{x}})\)\((i=1,2),\) or \(F_i(x)<F_i({\overline{x}})\) and \(F_j(x)\le F_j({\overline{x}})\) for each \(i, j\in \{1, 2\},\,\,i\ne j,\) and so, \({\widetilde{F}}(x)-{\widetilde{F}}({\overline{x}})\not \in -\text {int}\,\mathbb {R}^2_+\) for any \(x\in K\cap B({\overline{x}}, \delta ).\)

We mention that this problem has been studied in depth, and the literature on duality results is very rich. Many results regarding pseudoconvexity may be found in the book of Bot, Grad and Wanka (see Bot et al. 2009, for instance). The only difference between a local LU-optimal solution and a local efficient solution is the order relation.

Regarding vector equilibrium problems, consider the bifunction \({\hat{F}}: X\times X\rightarrow \mathbb {R}^2\) defined by \( {\hat{F}}(x, y)={\widetilde{F}}(y)-{\widetilde{F}}(x)\) for any \(x, y\in X.\) Then any local LU-optimal solution of the problem (IOPEC) is a local weakly efficient solution of the following vector equilibrium problem with equilibrium constraints (VEPEC): find \({\overline{x}}\in K\) such that

for some \(\delta >0.\) We mention that if the vector \({\overline{x}}\) solves (2.1), then \({\overline{x}}\) is said to be a local weakly efficient solution for the problem (VEPEC).

Let S be a set-valued mapping from X into a real Banach space Y, and let Q be a partial ordering cone in Y. For the sake of brevity, we recall the notions of domain, graph, epigraph and hypograph of S, which are defined, respectively, by

In the sequel, we provide the concepts of contingent derivative, epiderivative and hypoderivative, which were first introduced in the books of Aubin and Frankowska and Luc (see Aubin and Frankowska 1990; Luc 1989 for more details) and later used by many authors, such as Luc (1991), Rodríguez-Marín and Sama (2007a, b), Su (2016, 2018), and Su and Hien (2019).

Definition 2

(Aubin 1981; Aubin and Frankowska 1990; Luc 1989, 1991) The contingent derivative of S at \((x, y)\in \text {graph}S\) is the set-valued mapping \(D_c\,S(x, y)\) from X into Y defined by

When \(S:=s\) is single valued, we set \(D_cs(x):=D_cs(x, s(x)).\) In this case, it is well known that Definition 2 takes the following form (see Jiménez and Novo 2008, for instance):

Definition 3

(Aubin and Frankowska 1990) The contingent epiderivative of S at \((x, y)\in \text {graph}S\) is the single-valued mapping \({\underline{D}}\,S(x, y)\) from X into Y which is defined by

When \(S:=s\) is single valued, \(Y:=\mathbb {R},\)\(Q:=\mathbb {R}_+,\) the contingent epiderivative of s at \({\overline{x}}\in X\) is denoted as \(D_{\uparrow } s({\overline{x}})\) and given by (see Aubin and Frankowska 1990; Rodríguez-Marín and Sama 2007a, b, for instance)

The concept of contingent hypoderivatives \({\overline{D}}\,S(x, y)\) and \(D^{\downarrow }s({\overline{x}})u\) is defined similarly. It is not hard to check that \( D^{\downarrow }s({\overline{x}})=-D_{\uparrow }(-s)({\overline{x}}),\) where

Definition 4

(Aubin and Frankowska 1990) (Subdifferential) The contingent generalized gradient (or subdifferential) of an extended real-valued function s defined on X at \(x\in \text {dom}s\) is a closed convex subset \(\partial _{\uparrow }s(x)\) given by

Hereafter, we provide the concept of pseudo-convexity of the set-valued mapping S at the point \(({\overline{x}}, {\overline{y}})\in \text {graph}S,\) which plays a crucial role in the paper. We note that this notion of pseudo-convexity differs from the classical one. Classically, pseudoconvex functions are differentiable functions that form a subclass of the quasi-convex functions. In this paper, we define pseudoconvexity using a weaker differentiability notion, namely the contingent derivative.
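Before turning to pseudo-convexity, a short worked example may help to fix the notions of contingent epiderivative and contingent generalized gradient. It assumes the standard formulas of Aubin and Frankowska (1990) for a single-valued function, which are recalled in the comments below; the choice \(s(x)=|x|\) on \(X=\mathbb {R}\) with \({\overline{x}}=0\) is ours and serves only as an illustration.

```latex
% Assumed standard formulas (Aubin and Frankowska 1990):
%   D_{\uparrow}s(\bar{x})(u) = \liminf_{t\to 0^+,\ v\to u} \frac{s(\bar{x}+tv)-s(\bar{x})}{t},
%   \partial_{\uparrow}s(\bar{x}) = \{\xi\in X^* : \langle \xi, u\rangle \le D_{\uparrow}s(\bar{x})(u)\ \ \forall u\in X\}.
% Illustrative choice: s(x) = |x| on X = \mathbb{R}, \bar{x} = 0.
\begin{align*}
D_{\uparrow}s(0)(u)
  &= \liminf_{t\to 0^+,\ v\to u}\frac{|0+tv|-|0|}{t}
   = \liminf_{v\to u}|v| = |u|,\\
\partial_{\uparrow}s(0)
  &= \{\xi\in\mathbb{R} : \xi u\le |u|\ \ \forall u\in\mathbb{R}\} = [-1,1].
\end{align*}
```

In this example the contingent generalized gradient is a closed convex set, in line with Definition 4, even though s is not differentiable at 0.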

Definition 5

(Aubin and Frankowska 1990) We say that S is pseudo-convex at the point \(({\overline{x}}, {\overline{y}})\in \text {graph}S,\) iff

(i.e. if its graph is pseudo-convex at \(({\overline{x}}, {\overline{y}})\)).

Definition 6

(Aubin and Frankowska 1990) We say that an extended real-valued function s defined on X is pseudo-convex at the point \({\overline{x}}\in \text {dom}s\) if its graph is pseudo-convex at the point \(({\overline{x}}, s({\overline{x}}))\). In addition, \(\pm s\) are said to be pseudo-convex at \({\overline{x}}\) if both s and \(-s\) are pseudo-convex at that point.

It is well known that an important property of pseudoconvex functions is that every local minimum is a global minimum; see, e.g., Mangasarian (1965). This property remains true in the sense of Definition 6. In fact, if \({\overline{x}}\in \text {dom}s\) is a local minimizer of s on X, then, in view of Theorem 6.1.9 in the book of Aubin and Frankowska (1990), \({\overline{x}}\) is a solution to the following variational inequality:

Consequently,

By observing (2.5), one has on the one side

On the other hand, it is well known that (see Rodríguez-Marín and Sama 2007a, b, for instance)

Combining this with (2.6) and (2.7), we deduce that

By definition, \({\overline{x}}\) achieves the minimum of s, as claimed.
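The argument can be summarized in one chain of inequalities. The sketch below assumes that pseudo-convexity of s at \({\overline{x}}\) in the sense of Definition 6 yields \(D_{\uparrow }s({\overline{x}})(y-{\overline{x}})\le s(y)-s({\overline{x}})\) for all \(y\in X\); it is a condensed restatement under that assumption and not a verbatim copy of the numbered formulas of this section.

```latex
% Sketch for a local minimizer \bar{x} of s and an arbitrary y in X.
\begin{align*}
0 &\le D_{\uparrow}s(\bar{x})(y-\bar{x})
    && \text{(necessary condition at a local minimizer)}\\
  &\le s(y)-s(\bar{x})
    && \text{(assumed consequence of pseudo-convexity at } \bar{x}\text{)}.
\end{align*}
% Hence s(\bar{x}) \le s(y) for every y in X, so \bar{x} is a global minimizer.
```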

3 The Wolfe and Mond–Weir dual models

Necessary and sufficient optimality conditions for local LU-optimal solutions of constrained interval-valued optimization problems are well known in the literature, e.g., in Bhurjee and Panda (2015, 2016), Jayswal et al. (2011, 2016), Wu (2008), Luu and Mai (2018), as are results for local efficient solutions of vector equilibrium problems, e.g., in Gong (2010), Luu and Hang (2015), Luu and Su (2018). Building on these, our main aim here is to construct the Wolfe and Mond–Weir dual models for the interval-valued pseudoconvex optimization problem with equilibrium constraints (IOPEC) in terms of contingent epiderivatives.

Given a feasible vector \({\overline{x}}\in K,\) we denote the following index sets:

The set \(\beta \) is called the degenerate set. If the set \(\beta \) is empty, then the vector \({\overline{x}}\) is said to satisfy the strict complementarity condition. From now on, for simplicity, we use the notation \(\lambda _{\gamma }^G=(\lambda _i^G)_{i\in \gamma },\,\, \lambda _{\alpha }^H=(\lambda _i^H)_{i\in \alpha },\,\, \mu _{\gamma }^G=(\mu _i^G)_{i\in \gamma },\,\, \mu _{\alpha }^H=(\mu _i^H)_{i\in \alpha },\,\,\mu =(\mu ^h, \mu ^G, \mu ^H)\in \mathbb {R}^{n+2p}\) and \(\lambda =(\lambda ^g, \lambda ^h, \lambda ^G, \lambda ^H)\in \mathbb {R}^{m+n+2p}.\)
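The index sets can be computed mechanically from the constraint values at a feasible point. The Python sketch below assumes the usual convention for problems with equilibrium constraints, namely \(\alpha =\{i: G_i({\overline{x}})=0,\,H_i({\overline{x}})>0\},\) \(\beta =\{i: G_i({\overline{x}})=0,\,H_i({\overline{x}})=0\},\) \(\gamma =\{i: G_i({\overline{x}})>0,\,H_i({\overline{x}})=0\},\) together with the active set \(I_g({\overline{x}})=\{i: g_i({\overline{x}})=0\}.\) The function name `index_sets`, the tolerance and the 1-based indexing are illustrative choices.

```python
from typing import Dict, Sequence, Set


def index_sets(
    g_val: Sequence[float],
    G_val: Sequence[float],
    H_val: Sequence[float],
    tol: float = 1e-9,
) -> Dict[str, Set[int]]:
    """Return I_g, alpha, beta, gamma (1-based) from g, G, H evaluated at a feasible point."""
    if len(G_val) != len(H_val):
        raise ValueError("G and H must have the same number of components")
    # Active inequality constraints: g_i = 0 up to the tolerance.
    I_g = {i for i, v in enumerate(g_val, start=1) if abs(v) <= tol}
    alpha: Set[int] = set()
    beta: Set[int] = set()
    gamma: Set[int] = set()
    for i, (Gi, Hi) in enumerate(zip(G_val, H_val), start=1):
        G_zero, H_zero = abs(Gi) <= tol, abs(Hi) <= tol
        if G_zero and H_zero:
            beta.add(i)    # degenerate index
        elif G_zero and Hi > tol:
            alpha.add(i)
        elif H_zero and Gi > tol:
            gamma.add(i)
        else:
            # Either G_i * H_i != 0 or a sign constraint fails: the point is infeasible.
            raise ValueError(f"complementarity or sign constraint violated at index {i}")
    return {"I_g": I_g, "alpha": alpha, "beta": beta, "gamma": gamma}
```

For example, `index_sets([0.0], [0.0], [0.0])` returns \(I_g=\beta =\{1\}\) and \(\alpha =\gamma =\emptyset ,\) the same pattern of index sets that is reported in Example 1.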

Definition 7

(Wolfe dual) The Wolfe dual (WIOPEC) for the interval-valued pseudoconvex optimization problem with equilibrium constraints (IOPEC) is defined by

An example is provided as follows.

Example 1

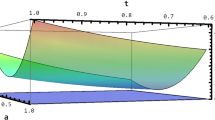

Let \(X=\mathbb {R},\) \(C=[0, 1]\) and \(m=n=p=1.\) Consider the interval-valued mapping \(F: \mathbb {R}\rightarrow {\mathscr {I}}\) defined by \(F(x)=[F_1(x), \,\,F_2(x)]\) (\(\forall \,\, x\in \mathbb {R}\)), where

We have the following interval-valued pseudoconvex optimization problem (IOPEC) in \(\mathbb {R}:\)

It is evident that \(F(x)\in {\mathscr {I}}\) for all \(x\in C.\) By direct calculation, \(I_g({\overline{x}})=\beta =\{1\},\) \(\alpha =\gamma =\emptyset ,\) and for all \(u\in C,\) one obtains, on the one hand, \(\partial _{\uparrow }F_1(u)=\{-1\},\,\,\,\, \partial _{\uparrow }F_2(u)=\{2u+1\},\) \(\partial _{\uparrow }g(u)=\{2u-1\},\,\,\,\, \partial _{\uparrow }(-G)(u)=\{-1\}\) and \( \partial _{\uparrow }(-H)(u)=\{0\}.\) On the other hand, the normal cone to the set C at the point u is given by

Thus, the Wolfe dual problem (WIOPEC) for the interval-valued pseudoconvex optimization problem with equilibrium constraints (IOPEC) is rewritten as follows:

Definition 8

(Mond–Weir dual)

The Mond–Weir dual (MWIOPEC) for the interval-valued pseudoconvex optimization problem with equilibrium constraints (IOPEC) is defined by

The following example illustrates the Mond–Weir dual (MWIOPEC) for the problem (IOPEC).

Example 2

Consider the interval-valued pseudoconvex optimization problem with equilibrium constraints (IOPEC) defined in Example 1. Similarly, we have the following Mond–Weir dual (MWIOPEC) for the problem (IOPEC):

4 Duality theorems

This section presents strong and weak duality theorems for the interval-valued pseudoconvex optimization problem with equilibrium constraints (IOPEC) and its Wolfe and Mond–Weir dual models, say (WIOPEC) and (MWIOPEC), in terms of contingent epiderivatives with pseudoconvex functions in Banach spaces.

For the sake of convenience in the statements, for each feasible vector \({\overline{x}}\in K,\) we define the following index sets:

A weak duality theorem for the interval-valued pseudoconvex optimization problem with equilibrium constraints (IOPEC) and its Wolfe dual (WIOPEC) will be derived.

Theorem 1

(Weak duality) Let \({\overline{x}}\) and \((u, s, \lambda )\) be the feasible vectors to the primal problem (IOPEC) and the dual problem (WIOPEC), respectively. Suppose that

- (i) C is convex and \(L_{\mu }=\emptyset ;\)

- (ii) The functions \(F_1, F_2\), \(g_i\) (\(i\in I_g({\overline{x}})\)), \(\pm h_j\) (\(j\in I_n\)), \(-G_i\) (\(i\in \nu _1\)) and \(-H_i\) (\(i\in \nu _2\)) are pseudoconvex at u.

Then, for any feasible vector x of the primal problem (IOPEC), we have

Proof

By the initial assumptions, one has the following system:

It follows from the convexity of C that \(x-u\in T(C, u).\) Since \((u, s, \lambda )\) is feasible for (WIOPEC), there exist \(\xi _i\in \partial _{\uparrow }F_i(u)\) \((i=1, 2),\) \(\xi _i^g\in \partial _{\uparrow }g_i(u)\) \((i\in I_g),\) \(\xi ^h_j\in \partial _{\uparrow }h_j(u)\) \((j\in I_n),\) \(\eta ^h_j\in \partial _{\uparrow }(-h_j)(u)\) \((j\in I_n),\) \(\xi _i^G\in \partial _{\uparrow }(-G_i)(u)\) (\(i\in I_p\)) and \(\xi _i^H\in \partial _{\uparrow }(-H_i)(u)\) (\(i\in I_p\)) such that, for \(\xi _s:=s\xi _1+(1-s)\xi _2,\) one obtains

Since \(F_i\) \((i=1,2)\) is pseudoconvex at u, its graph is pseudoconvex at \((u, F_i(u)),\) i.e.

By taking \(y=x\) in (4.6), we deduce that

Therefore,

In the same way as above, we also obtain the following results:

If \(L_\mu =\emptyset ,\) then multiplying (4.9)–(4.15) by \(s>0,\) \(1-s>0,\) \(\lambda _i^g\ge 0\) (\(i\in I_g\)), \(\lambda _j^h>0\) \((j\in I_n),\) \(\mu _j^h>0\) \((j\in I_n),\) \(\lambda _i^G>0\) (\(i\in \nu _1\)), \(\lambda _i^H>0\) (\(i\in \nu _2\)), respectively, and adding the resulting inequalities, we obtain

In fact, the left-hand side of the inequality (4.16) is greater than or equal to

while the right-hand side of the resulting inequality equals the right-hand side of (4.16); hence (4.16) is valid. Combining this with (4.5) yields

Combining (4.1)–(4.4), we have

This along with (4.17) leads to

Set \(\theta _j^h=\lambda _j^h-\mu _j^h\) for \(j\in I_n.\) We claim that

Indeed, if (4.19) were not true, then we would have

By the definition, we have

or

or

Since \(s, 1-s \in ]0, 1[\) and \(s+(1-s)=1,\) the following strict inequality holds:

which contradicts (4.18). Hence (4.19) holds, as claimed. \(\square \)

To obtain a strong duality theorem for the Wolfe dual (WIOPEC) and the interval-valued pseudoconvex optimization problem with equilibrium constraints (IOPEC), we next introduce the Mangasarian–Fromovitz type regularity condition \((RC^{t}):\) there exist \(t\in \{1, 2\},\) \(v_0\in T(C, {\overline{x}})\) and positive real numbers \(a^g_i\) (\(i\in I_g({\overline{x}})\)), \(a_i^G\) (\(i\in \nu _1\)), \(a_i^H\) (\(i\in \nu _2\)), \(b_k\) (\(k\in \{1, 2\},\,\,k\ne t\)) such that

- (i) \(\left\langle \xi _i^g, v_0\right\rangle \le -a^g_i\) \((\forall \,\xi _i^g\in \partial _{\uparrow }g_i({\overline{x}}),\,\,\forall \,i\in I_g({\overline{x}}));\)

\(\left\langle \xi _i^G, v_0\right\rangle \le -a^G_i\) \((\forall \,\xi _i^G\in \partial _{\uparrow }(-G_i)({\overline{x}}),\) \(\forall \,i\in \nu _1);\)

\(\left\langle \xi _i^H, v_0\right\rangle \le -a^H_i\) \((\forall \,\xi _i^H\in \partial _{\uparrow }(-H_i)({\overline{x}}),\,\,\forall \,i\in \nu _2);\)

\(\left\langle \xi _k, v_0\right\rangle \le - b_k\) \((\forall \,\xi _k\in \partial _{\uparrow }F_k({\overline{x}}),\, k\in \{1, 2\},\,\,k\ne t)\);

- (ii) \(\left\langle \xi _j^h, v_0\right\rangle =0\) \((\forall \,\xi _j^h\in \partial _{\uparrow }h_j({\overline{x}}),\,\,\forall \,j\in I_n);\)

\(\left\langle \eta _j^h, v_0\right\rangle =0\) \((\forall \,\eta _j^h\in \partial _{\uparrow }(-h_j)({\overline{x}}),\,\,\forall \,j\in I_n).\)
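For a candidate direction \(v_0,\) conditions (i) and (ii) can be tested directly when each contingent generalized gradient is represented by finitely many vectors in \(\mathbb {R}^d\) (for instance, when it is a singleton). Under that assumption, the existence of the positive constants \(a^g_i, a^G_i, a^H_i, b_k\) is equivalent to every inner product in (i) being strictly negative. The Python sketch below uses this reformulation; the names `dot` and `satisfies_rc`, the tolerance and the sample data in the usage note are hypothetical, and membership \(v_0\in T(C, {\overline{x}})\) is not checked and must be verified separately.

```python
from typing import Iterable, Sequence

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    """Euclidean inner product of two vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum(x * y for x, y in zip(a, b))


def satisfies_rc(
    v0: Vector,
    strict_sets: Iterable[Iterable[Vector]],
    equality_sets: Iterable[Iterable[Vector]],
    tol: float = 1e-9,
) -> bool:
    """Check conditions (i) and (ii) for finite lists of sample subgradients.

    strict_sets:   one list per function in (i), i.e. g_i (i in I_g), -G_i (i in nu_1),
                   -H_i (i in nu_2) and F_k (k != t); every <xi, v0> must be negative.
    equality_sets: one list per function in (ii), i.e. h_j and -h_j; every <xi, v0> must vanish.
    """
    strict_ok = all(dot(xi, v0) < -tol for S in strict_sets for xi in S)
    equal_ok = all(abs(dot(xi, v0)) <= tol for S in equality_sets for xi in S)
    return strict_ok and equal_ok
```

As a usage example with made-up one-dimensional data, `satisfies_rc([1.0], [[[-1.0]], [[-2.0], [-0.5]]], [[[0.0]]])` holds, whereas the opposite direction `[-1.0]` fails condition (i).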

Hereafter, we give a strong duality theorem for the problems (IOPEC) and (WIOPEC).

Theorem 2

(Strong duality) Let \({\overline{x}}\in K\) be a local LU-optimal solution to the interval-valued pseudoconvex optimization problem (IOPEC). Suppose that the functions \(F_1, F_2,\) \(g_i\) (\(i\in I_g({\overline{x}})\)), \(\pm h_j\) (\(j\in I_n\)), \(-G_i\) (\(i\in \nu _1\)) and \(-H_i\) (\(i\in \nu _2\)) are pseudo-convex at \({\overline{x}}.\) If dim\(X\,<\,+\infty ,\) C is convex, \(L_{\mu }=\emptyset \) and the regularity condition \((RC^t)\) holds (for \(t=1, 2\)), then there exist \({\overline{s}}\in \mathbb {R}\) and \({\overline{\lambda }}=\Big ({\overline{\lambda }}^g, {\overline{\lambda }}^h, {\overline{\lambda }}^G, {\overline{\lambda }}^H\Big )\in \mathbb {R}^{m+n+2p}\) such that \(({\overline{x}}, {\overline{s}}, {\overline{\lambda }})\) is an LU-optimal solution of the dual (WIOPEC) and the respective objective values are equal.

Proof

Since \({\overline{x}}\) is a local LU-optimal solution of (IOPEC), there exists a real number \(\delta >0\) such that there is no \(x\in K\cap B({\overline{x}}, \delta )\) satisfying

We consider the mappings \({\widetilde{F}}(x)=(F_1(x), F_2(x))\) and \({\hat{F}}_{{\overline{x}}}(x)={\hat{F}}({\overline{x}}, x)={\widetilde{F}}(x)-{\widetilde{F}}({\overline{x}})\) for all \(x\in X.\) It is evident that

On the one hand, the vector \({\overline{x}}\) is a local weak minimum of the following problem (MPEC):

Following the result of Gong (2010), one can find a continuous positively homogeneous subadditive function f on \(\mathbb {R}^2\) such that

It can be easily seen that

On the other hand, using the continuity and subadditivity of f, it holds that

Notice that the contingent generalized gradients of the objective and constraint functions in (IOPEC) at \({\overline{x}}\) are closed convex subsets and, further, X is a finite-dimensional space. Arguing as in the proofs of Theorem 4.1 and Corollary 3.2 of Luu and Mai (2018), invoking the set \(\partial {\hat{F}}_{i, {\overline{x}}}({\overline{x}})\) instead of \(\partial _{\uparrow }{\hat{F}}_{i, {\overline{x}}}({\overline{x}})\) \((i=1,2),\) and with the set \(\partial ^*g_i({\overline{x}})\,\,\,(i\in I({\overline{x}}))\) standing for the sets \(\partial _{\uparrow }g_i({\overline{x}})\) (\(i\in I_g({\overline{x}})\)), \(\partial _{\uparrow }(-G_i)({\overline{x}})\) \((i\in \nu _1)\) and \(\partial _{\uparrow }(-H_i)({\overline{x}})\) \((i\in \nu _2),\) there exists a feasible vector \((s, \lambda , \mu )\in \mathbb {R}^{1+m+2n+4p},\) where \(s\in [0, 1],\) \(\lambda =(\lambda ^g, \lambda ^h, \lambda ^G, \lambda ^H)\in \mathbb {R}^{m+n+2p}\) and \(\mu =(\mu ^h, \mu ^G, \mu ^H)\in \mathbb {R}^{n+2p},\) satisfying

Since the arguments are similar, we prove only the case \(s<1\) under the regularity condition \((RC^t)\) (for \(t=1, 2\)). Indeed, assume to the contrary that \(s=1,\) or equivalently,

For \(t=2,\) there exists \(v_0\in T(C, {\overline{x}})\) and there exist positive real numbers \(b_1,\)\(a^g_i\) (\(i\in I_g({\overline{x}})\)), \(a_i^G\) (\(i\in \nu _1\)), \(a_i^H\) (\(i\in \nu _2\)) satisfying

In other words, we can find linear functionals on X, namely \(\xi _1\in \partial _{\uparrow }F_1({\overline{x}}),\) \(\xi _i^g\in \partial _{\uparrow }g_i({\overline{x}})\) \((i\in I_g({\overline{x}})),\) \(\xi ^h_j\in \partial _{\uparrow }h_j({\overline{x}})\) \((j\in I_n),\) \(\eta ^h_j\in \partial _{\uparrow }(-h_j)({\overline{x}})\) \((j\in I_n),\) \(\xi _i^G\in \partial _{\uparrow }(-G_i)({\overline{x}})\) (\(i\in I_p\)), and \(\xi _i^H\in \partial _{\uparrow }(-H_i)({\overline{x}})\) (\(i\in I_p\)), satisfying

Furthermore, we also have

which conflicts with (4.20). So the inequality \(0<s<1\) holds true. To finish the proof of the theorem, we set \({\overline{s}}=s,\) \({\overline{\lambda }}={\lambda },\) \({\overline{\mu }}={\mu };\) it follows that

Taking into account the definition of (WIOPEC), we conclude that \(({\overline{x}}, {\overline{s}}, {\overline{\lambda }})\) is a feasible vector of that problem. By virtue of Theorem 1, for \(\theta _j^h=\lambda _j^h-\mu _j^h\) (\(j\in I_n\)), the following result holds:

for any feasible solution \((u, s, \lambda )\) of the Wolfe dual (WIOPEC). Since \(g_i({\overline{x}})=0\) for \(i\in I_g({\overline{x}}),\) \(h_j({\overline{x}})=0\) for \(j\in I_n,\) \(G_i({\overline{x}})=0\) for \(i\in \nu _1\) and \(H_i({\overline{x}})=0\) for \(i\in \nu _2,\) it holds that

This along with (4.21)–(4.22) yields that

Thus, the vector \(({\overline{x}}, {\overline{s}}, {\overline{\lambda }})\) is an optimal solution to the Wolfe dual (WIOPEC) and, moreover, the respective objective values are equal, which completes the proof. \(\square \)

When the objective and constraint functions are affine, we obtain the following strong duality theorem for the problem (IOPEC) and the Wolfe dual (WIOPEC).

Corollary 1

(Strong duality) Let \({\overline{x}}\in K\) be a local LU-optimal solution to the interval-valued pseudoconvex optimization problem (IOPEC). Assume that the functions \(F_1, F_2,\) \(g_i\) (\(i\in I_g({\overline{x}})\)), \(\pm h_j\) (\(j\in I_n\)), \(-G_i\) (\(i\in \nu _1\)) and \(-H_i\) (\(i\in \nu _2\)) are affine. If dim\(X\,<\,+\infty ,\) C is convex, \(L_{\mu }=\emptyset \) and the regularity condition \((RC^t)\) holds (for \(t=1, 2\)), then there exist \({\overline{s}}\in \mathbb {R}\) and \({\overline{\lambda }}=\Big ({\overline{\lambda }}^g, {\overline{\lambda }}^h, {\overline{\lambda }}^G, {\overline{\lambda }}^H\Big )\in \mathbb {R}^{m+n+2p}\) such that \(({\overline{x}}, {\overline{s}}, {\overline{\lambda }})\) is an LU-optimal solution of the dual (WIOPEC) and the respective objective values are equal.

Proof

Since the objective and constraint functions defined on X are affine, they are all pseudo-convex at \({\overline{x}}.\) Applying Theorem 2 directly, we get the desired conclusion. \(\square \)

Remark 1

If \(({\overline{x}}, {\overline{s}}, {\overline{\lambda }})\) is an LU-optimal solution to the problem (WIOPEC), then there is no feasible solution \((u, s, \lambda )\) of the problem (WIOPEC) such that

or

or

We next give an example to illustrate the strong duality theorem for the problem (IOPEC) and its Wolfe dual problem (WIOPEC).

Example 3

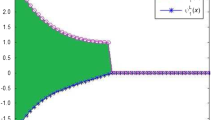

Let \(X=\mathbb {R},\)\(C=[0, 1]\) and \(m=n=p=1.\) Let \(F: \mathbb {R}\rightarrow {\mathscr {I}}\) be defined as \(F(x)=[F_1(x), \,\,F_2(x)]\) (\(\forall \,\, x\in \mathbb {R}\)), where

Consider the following interval-valued optimization problem (IOPEC) in \(\mathbb {R}:\)

It can be easily seen that \(F_1(x)\le F_2(x),\) and so \(F(x)\in {\mathscr {I}}\) for all \(x\in C.\) By direct computation, the feasible set of problem (IOPEC) is \(K=\{0, 1\}.\) Obviously, for any real number \(0<\delta <1,\) there is no \(x\in K\cap B(0, \delta )\) such that \(F(x)<_I F({\overline{x}}),\) where \({\overline{x}}=0;\) this means that \({\overline{x}}=0\) is a local LU-optimal solution of the problem (IOPEC). Invoking the concept of contingent cone T(C, u) with \(u\in C,\) it holds that

Moreover, for arbitrary \(u\in C,\) it is not difficult to see that \(\partial _{\uparrow }F_1(u)=\{-1\},\) \(\partial _{\uparrow }F_2(u)=\{2u-1\},\) \( \partial _{\uparrow }g(u)=\{2(u-1)\}, \) \(\partial _{\uparrow }(-G)(u)=\{-3\}\) and \(\partial _{\uparrow }(-H)(u)=\{2u-1\}.\) We thus conclude that the functions \(F_1, F_2,\) \(g_1,\) \(\pm h_1,\) \(-G_1\) and \(-H_1\) are pseudo-convex at \({\overline{x}}\) in the sense of Definition 6. Further, one gets \(I_g({\overline{x}})=\beta =\{1\},\) \(\alpha =\gamma =\emptyset ,\) C is convex, dim\((X)=1<+\infty ,\) \(L_{\mu }=\emptyset \) and the regularity condition \((RC^t)\) holds for \(t=1, 2.\) Then the Wolfe dual problem (WIOPEC) for the interval-valued optimization problem with equilibrium constraints (IOPEC) is given as

Applying Theorem 2, we conclude that there exist \({\overline{s}}\in \mathbb {R}\) and \({\overline{\lambda }}=\Big ({\overline{\lambda }}_1^g, {\overline{\lambda }}_1^h, {\overline{\lambda }}_1^G, {\overline{\lambda }}_1^H\Big )\in \mathbb {R}^{4}\) such that \(({\overline{x}}, {\overline{s}}, {\overline{\lambda }})\) is an LU-optimal solution of the Wolfe dual problem (WIOPEC) and the respective objective values are equal.

Next, we state a weak duality theorem for the Mond–Weir dual problem (MWIOPEC) and the interval-valued pseudoconvex optimization problem with equilibrium constraints (IOPEC).

Theorem 3

(Weak duality) Let \({\overline{x}}\) and \((u, s, \lambda )\) be feasible vectors of the interval-valued pseudoconvex optimization problem (IOPEC) and the dual problem (MWIOPEC), respectively. Assume that

- (i)

C is convex and \(L_{\mu }=\emptyset ;\)

- (ii)

The functions \(F_1, F_2, g_i\) (\(i\in I_g({\overline{x}})\)), \(\pm h_j\) (\(j\in I_n\)), \(-G_i\) (\(i\in \nu _1\)) and \(-H_i\) (\(i\in \nu _2\)) are pseudo-convex at u.

Then, for any feasible vector \(x\) of the primal problem (IOPEC), we have \(F(x)\not <_I F(u).\)

Proof

Let x be a feasible vector to the interval-valued pseudoconvex optimization problem with equilibrium constraints (IOPEC). By an argument analogous to that used for the proof of Theorem 1, it holds that

Since \(g_i(u)\ge 0\,\,\,(i\in {I_g({\overline{x}})}),\,\,h_j(u)=0\,\,\,(j\in I_n),\,\,\, G_i(u)\le 0\,\,\,(i\in {\nu _1}),\,\,H_i(u)\le 0\) \((i\in {\nu _2}), \) \(\lambda ^g_i\ge 0\,\,\,(i\in {I_g({\overline{x}})}),\,\,\) \(\lambda _i^G\ge 0,\,\,\lambda _i^H\ge 0,\,\,\mu _i^G\ge 0,\,\,\mu _i^H\ge 0,\,\,i\in I_p,\) \(\lambda _{\gamma }^G=\lambda _{\alpha }^H= \mu _{\gamma }^G=\mu _{\alpha }^H=0,\,\,\,\) \(\forall \,i\in \beta ,\,\mu _i^G=0,\,\,\mu _i^H=0,\) it follows that

Combining (4.23)–(4.24), we obtain that

Note that (4.25) is equivalent to

Therefore, the following systems are impossible:

This means that \(F(x)\not <_I F(u),\) which completes the proof. \(\square \)

Hereafter, we shall derive a strong duality theorem for the problem (IOPEC) and its Mond–Weir dual problem (MWIOPEC).

Theorem 4

(Strong duality) Let \({\overline{x}}\in K\) be a local LU-optimal solution to the interval-valued pseudoconvex optimization problem (IOPEC). Assume that the functions \(F_1, F_2, g_i\) (\(i\in I_g({\overline{x}})\)), \(\pm h_j\) (\(j\in I_n\)), \(-G_i\) (\(i\in \nu _1\)) and \(-H_i\) (\(i\in \nu _2\)) are pseudo-convex at \({\overline{x}}.\) If \(\dim X\,<\,+\infty ,\) \(C\) is convex, \(L_{\mu }=\emptyset \) and the regularity condition of the \((RC^t)\) type holds (for \(t=1, 2\)), then there exist \({\overline{s}}\in \mathbb {R}\) and \({\overline{\lambda }}=\Big ({\overline{\lambda }}^g, {\overline{\lambda }}^h, {\overline{\lambda }}^G, {\overline{\lambda }}^H\Big )\in \mathbb {R}^{m+n+2p}\) such that \(({\overline{x}}, {\overline{s}}, {\overline{\lambda }})\) is an LU-optimal solution of the dual problem (MWIOPEC) and the respective objective values are equal.

Proof

By an argument similar to the proof of Theorem 2, combined with Theorem 3, we obtain the desired conclusion. \(\square \)

Remark 2

According to Corollary 1, the result of Theorem 4 remains true when the functions \(F_1, F_2, g_i\) (\(i\in I_g({\overline{x}})\)), \(\pm h_j\) (\(j\in I_n\)), \(-G_i\) (\(i\in \nu _1\)) and \(-H_i\) (\(i\in \nu _2\)) are affine.

We next give some examples to illustrate the result obtained above.

Example 4

Consider the interval-valued pseudoconvex optimization problem with equilibrium constraints (IOPEC) given in Example 3. Then the Mond–Weir dual (MWIOPEC) for the problem (IOPEC) can be written as follows:

By Theorem 4, there exist \({\overline{s}}\in \mathbb {R}\) and \({\overline{\lambda }}=\Big ({\overline{\lambda }}_1^g, {\overline{\lambda }}_1^h, {\overline{\lambda }}_1^G, {\overline{\lambda }}_1^H\Big )\in \mathbb {R}^{4}\) such that \(({\overline{x}}, {\overline{s}}, {\overline{\lambda }})\) is an LU-optimal solution of problem (MWIOPEC) and the respective objective values are equal. In fact, in this setting, we can verify directly that \(u=0,\) i.e., \(u\equiv {\overline{x}},\) and that the normal cone to \(C\) at \(u\) has the form \(N(C, 0)=-\mathbb {R}_+,\) which proves that

This means that there is no feasible vector \(({\hat{u}}, {\hat{s}}, {\hat{\lambda }})\) of problem (MWIOPEC) such that \(F(u)<_I F({\hat{u}}), \) and Theorem 4 is therefore verified.

Example 5

Let \(X=\mathbb {R}^2,\) \(C=[0, 1]\times [0, 1],\) \({\overline{x}}=(0, 0),\) \(m=n=p=1,\) and let the interval-valued mapping \(F: \mathbb {R}^2\rightarrow {\mathscr {I}}\) be defined by \(F(x)=[F_1(x), \,\,F_2(x)]\) for all \(x=(x_1, x_2)\in \mathbb {R}^2,\) where

Consider the following interval-valued pseudoconvex optimization problem (IOPEC) in \(\mathbb {R}^2:\)

It is obvious that \(F_1(x)\le F_2(x),\) and so \(F(x)\in {\mathscr {I}}\) for all \(x\in C.\) By direct computation, the feasible set of problem (IOPEC) is of the form \(K=\{(a, a)\in \mathbb {R}^2: 0\le a\le 1\}.\) Taking \(\delta =\frac{1}{2},\) there is no \(x\in K\cap B(0, \delta )\) such that \(F(x)<_I F({\overline{x}}),\) which means that the vector \({\overline{x}}=(0, 0)\) is a local LU-optimal solution of problem (IOPEC). By making use of the concept of the contingent cone \(T(C, u)\) for any \(u=(u_1, u_2)\in C,\) we have

By the definition of the normal cone \(N(C, u),\) one obtains, on the one hand,

On the other hand, by direct computation for any \(u=(u_1, u_2)\in C,\) \(\partial _{\uparrow }F_1(u_1, u_2)=\{(-1, -1)\}\) and \(\partial _{\uparrow }F_1(0, u_2)=\{(1, -1)\},\) \(\partial _{\uparrow }F_2(u)=\{(0, -1)\}, \) \(\partial _{\uparrow }g(u)=\{(0, 2u_2-1)\},\) \(\partial _{\uparrow }(-G_1)(u)=\{(-1, -1)\}\) and \(\partial _{\uparrow }(-H_1)(u)=\{(1, -1)\}.\) It is not hard to verify that the mappings \(F_1, F_2,\) \(g_1,\) \(-G_1\) and \(-H_1\) are pseudo-convex at \({\overline{x}}\) in the sense of Definition 6. Further, one also gets \(I_g({\overline{x}})=\beta =\{1\},\) \(\alpha =\gamma =\emptyset ,\) \(C\) is convex, \(\dim (X)=2<+\infty ,\) \(L_{\mu }=\emptyset \) and the regularity condition of the \((RC^t)\) type holds for \(t=1, 2.\) Thus, the Mond–Weir dual problem (MWIOPEC) for the interval-valued pseudoconvex optimization problem (IOPEC) is expressed as

By Theorem 4, we conclude that there exist \({\overline{s}}\in \mathbb {R}\) and \({\overline{\lambda }}=\Big ({\overline{\lambda }}_1^g, {\overline{\lambda }}_1^h, {\overline{\lambda }}_1^G, {\overline{\lambda }}_1^H\Big )\in \mathbb {R}^{4}\) such that \(({\overline{x}}, {\overline{s}}, {\overline{\lambda }})\) is an LU-optimal solution of the Mond–Weir dual (MWIOPEC) and the respective objective values are equal.

In fact, in this setting, we can verify directly that \(u=(0, 0),\) i.e., \(u\equiv {\overline{x}},\) and moreover, \(N(C, 0)=\mathbb {R}_-\times \mathbb {R}_-.\) It is plain that \(1+\lambda _1^g+\lambda _1^G+\lambda _1^H\le 0\) and \(s-\lambda _1^G+\lambda _1^H\ge 0,\) which means that there is no feasible vector \(({\hat{u}}, {\hat{s}}, {\hat{\lambda }})\) of (MWIOPEC) such that \(F(u)<_I F({\hat{u}}). \) Hence Theorem 4 is verified.
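The normal cone appearing in Example 5 can be checked mechanically for a box-shaped set. The following is a minimal sketch, assuming the standard componentwise description of the normal cone to a box \([lo, hi]^n\) (a coordinate of \(\xi \) must be nonpositive where \(u\) sits at the lower bound, nonnegative where it sits at the upper bound, and zero otherwise). The function name `in_normal_cone_box` and its parameters are hypothetical and do not appear in the paper.

```python
from typing import Sequence


def in_normal_cone_box(xi: Sequence[float], u: Sequence[float],
                       lo: float = 0.0, hi: float = 1.0,
                       tol: float = 1e-12) -> bool:
    """Return True if xi lies in N(C, u) for the box C = [lo, hi]^n.

    Assumption (not from the paper): u belongs to C and lo < hi, so the
    normal cone can be tested one coordinate at a time.
    """
    for xi_k, u_k in zip(xi, u):
        at_lower = abs(u_k - lo) <= tol
        at_upper = abs(u_k - hi) <= tol
        if at_lower and xi_k > tol:
            return False  # active lower bound: need xi_k <= 0
        if at_upper and xi_k < -tol:
            return False  # active upper bound: need xi_k >= 0
        if not at_lower and not at_upper and abs(xi_k) > tol:
            return False  # inactive coordinate: need xi_k == 0
    return True


# Example 5 setting: C = [0, 1] x [0, 1] and u = (0, 0).
print(in_normal_cone_box((-1.0, -2.0), (0.0, 0.0)))
print(in_normal_cone_box((1.0, -1.0), (0.0, 0.0)))
```

With \(C=[0, 1]\times [0, 1]\) and \(u=(0, 0),\) the first call tests a vector with both coordinates nonpositive and the second a vector with a positive first coordinate, which agrees with \(N(C, 0)=\mathbb {R}_-\times \mathbb {R}_-\) as stated in the example.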

5 Applications

In this section, we derive sufficient optimality conditions for a generalized alternatively stationary (GA-stationary) vector of the interval-valued pseudoconvex optimization problem with equilibrium constraints (IOPEC) under pseudo-convexity of the objective and constraint functions. First of all, we introduce the concept of a GA-stationary vector of problem (IOPEC).

Definition 9

(GA-stationary vector) A feasible vector \({\overline{x}}\) of problem (IOPEC) is said to be a GA-stationary vector if and only if there exist \(s\in \mathbb {R},\) \(\lambda =(\lambda ^g, \lambda ^h, \lambda ^G, \lambda ^H)\in \mathbb {R}^{m+n+2p}\) and \(\mu =(\mu ^h, \mu ^G, \mu ^H)\in \mathbb {R}^{n+2p}\) satisfying

In what follows, sufficient optimality conditions for the GA-stationary vector of the problem (IOPEC) will be derived.

Theorem 5

Let \({\overline{x}}\) be a feasible GA-stationary vector to the interval-valued pseudoconvex optimization problem (IOPEC). Suppose that

- (i)

C is convex and \(L_{\mu }=\emptyset ;\)

- (ii)

the functions \(F_1, F_2, g_i\) (\(i\in I_g({\overline{x}})\)), \(\pm h_j\) (\(j\in I_n\)), \(-G_i\) (\(i\in \nu _1\)) and \(-H_i\) (\(i\in \nu _2\)) are pseudo-convex at \({\overline{x}}\) with respect to C.

Then \({\overline{x}}\) is an LU-optimal solution of (IOPEC).

Proof

By Definition 1, \({\overline{x}}\) is an LU-optimal solution of (IOPEC) if and only if there is no \(x\in K\) satisfying

which means that \(F_1(x)\le F_1({\overline{x}}),\) \(F_2(x)\le F_2({\overline{x}})\) and \(F(x)\ne F({\overline{x}}).\) Let \(x\) be an arbitrary feasible vector of the problem (IOPEC). The convexity of \(C\) ensures that \(x-{\overline{x}}\in T(C, {\overline{x}})\) because \(x\in C.\) Hence, \(\left\langle \xi , x-{\overline{x}}\right\rangle \ge 0\) for every \(\xi \in -N(C, {\overline{x}}).\) Since \({\overline{x}}\) is a feasible GA-stationary vector of the interval-valued pseudoconvex optimization problem (IOPEC), one finds \(\xi _i\in \partial _{\uparrow }F_i({\overline{x}})\) \((i=1, 2),\) \(\xi _i^g\in \partial _{\uparrow }g_i({\overline{x}})\) \((i\in I_g),\) \(\xi ^h_j\in \partial _{\uparrow }h_j({\overline{x}})\) \((j\in I_n),\) \(\eta ^h_j\in \partial _{\uparrow }(-h_j)({\overline{x}})\) \((j\in I_n),\) \(\xi _i^G\in \partial _{\uparrow }(-G_i)({\overline{x}})\) (\(i\in I_p\)) and \(\xi _i^H\in \partial _{\uparrow }(-H_i)({\overline{x}})\) (\(i\in I_p\)) satisfying

where \(\xi _s=s\xi _1+(1-s)\xi _2.\) Since \(F_i\) (\(i=1, 2\)) is a pseudo-convex function at \({\overline{x}},\) it follows that its graph is pseudo-convex at \(({\overline{x}}, F_i({\overline{x}})),\) i.e.,

By taking \(y=x\) in (5.3), we deduce that

Therefore,

In the same way as above, we also obtain the following inequalities:

If \(L_\mu =\emptyset ,\) then multiplying (5.6)–(5.12) by \(s>0,\) \(1-s>0,\) \(\lambda _i^g\ge 0\) (\(i\in I_g\)), \(\lambda _j^h>0\) \((j\in I_n),\) \(\mu _j^h>0\) \((j\in I_n),\) \(\lambda _i^G>0\) (\(i\in \nu _1\)), \(\lambda _i^H>0\) (\(i\in \nu _2\)), respectively, and adding the resulting inequalities, we obtain

This together with (5.2) yields that

From the fact \(x\in K,\) we obtain

which, combined with (5.13), gives the following inequality:

Combining \(M({\overline{x}})=0\) with (5.13), we deduce that

We now show that

Indeed, if this were not true, then we would have

By the definition, we obtain

Since \(s, 1-s \in ]0, 1[,\) the following strict inequality is valid:

which contradicts inequality (5.15), and the conclusion follows. \(\square \)
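The LU-order used throughout the proof above can be illustrated on a finite set of interval values. The following is a minimal sketch, assuming intervals are stored as pairs of endpoints and that a finite collection of objective values stands in for the images of the feasible set; the names `Interval`, `lu_less` and `is_lu_optimal` are hypothetical and not taken from the paper. The comparison encodes the characterization recalled in the proof: \(F(x)<_I F({\overline{x}})\) means \(F_1(x)\le F_1({\overline{x}}),\) \(F_2(x)\le F_2({\overline{x}})\) and \(F(x)\ne F({\overline{x}}).\)

```python
from typing import Iterable, NamedTuple


class Interval(NamedTuple):
    """Closed interval [lower, upper] with lower <= upper (illustrative type)."""
    lower: float
    upper: float


def lu_less(a: Interval, b: Interval) -> bool:
    """Return True if a <_I b in the LU-order described in the proof."""
    return a.lower <= b.lower and a.upper <= b.upper and a != b


def is_lu_optimal(candidate: Interval, values: Iterable[Interval]) -> bool:
    """Return True if no interval in `values` is LU-smaller than `candidate`.

    Assumption: `values` is a finite sample of objective values, so this is
    only a finite check and not a proof of optimality on the whole set K.
    """
    return not any(lu_less(v, candidate) for v in values)


# Made-up sample data for illustration only.
sample = [Interval(0.0, 1.0), Interval(-1.0, 2.0), Interval(0.0, 0.5)]
print(lu_less(Interval(0.0, 0.5), Interval(0.0, 1.0)))
print(is_lu_optimal(Interval(0.0, 1.0), sample))
print(is_lu_optimal(Interval(0.0, 0.5), sample))
```

Note that the LU-order is only a partial order: in the sample, \([-1, 2]\) and \([0, 1]\) are not comparable, so neither excludes the other from being LU-optimal.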

The following corollary is inspired by Theorem 5.

Corollary 2

Let \({\overline{x}}\) be a feasible GA-stationary vector to the interval-valued pseudoconvex optimization problem (IOPEC). Suppose that

- (i)

C is convex and \(L_{\mu }=\emptyset ;\)

- (ii)

the functions \(F_1, F_2,\) \(g_i\) (\(i\in I_g({\overline{x}})\)), \(\pm h_j\) (\(j\in I_n\)), \(-G_i\) (\(i\in \nu _1\)) and \(-H_i\) (\(i\in \nu _2\)) are affine.

Then \({\overline{x}}\) is an LU-optimal solution of problem (IOPEC).

Proof

Since the functions \(F_1, F_2,\) \(g_i\) (\(i\in I_g({\overline{x}})\)), \(\pm h_j\) (\(j\in I_n\)), \(-G_i\) (\(i\in \nu _1\)) and \(-H_i\) (\(i\in \nu _2\)) defined on \(X\) are affine, they are pseudo-convex at \({\overline{x}}.\) By Theorem 5, the vector \({\overline{x}}\) is an LU-optimal solution of problem (IOPEC), which proves the claim. \(\square \)

Remark 3

If \(C\) is taken to be the whole space \(X,\) then the normal cone \(N(C, u)\) for any \(u\in C\) in the Wolfe and Mond–Weir dual problems and in Definition 9 can be removed because

which ensures that \(N(C, u)=\{0\},\) as required.
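The claim in Remark 3 can be spelled out in two short steps. The sketch below assumes that the normal cone is the negative polar of the contingent cone, \(N(C, u)=\{\xi \in X^{*}: \langle \xi , v\rangle \le 0\ \forall \, v\in T(C, u)\},\) which is consistent with how \(N(C, {\overline{x}})\) is used in the proof of Theorem 5; the notation \(X^{*}\) for the dual space is introduced here for illustration.

```latex
\[
C = X
\;\Longrightarrow\;
T(X, u) = X
\;\Longrightarrow\;
N(X, u) = \{\xi \in X^{*} : \langle \xi , v\rangle \le 0 \ \ \forall\, v \in X\}.
\]
\[
\xi \in N(X, u)
\;\Longrightarrow\;
\langle \xi , v\rangle \le 0 \ \text{and}\ \langle \xi , -v\rangle \le 0 \ \ \forall\, v \in X
\;\Longrightarrow\;
\langle \xi , v\rangle = 0 \ \ \forall\, v \in X
\;\Longrightarrow\;
\xi = 0 .
\]
```

Under this assumption, the term involving \(N(C, u)\) contributes only the zero functional, so dropping it changes neither the dual constraints nor the stationarity condition.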

6 Conclusion

We have formulated the Wolfe and Mond–Weir dual problems for the interval-valued pseudoconvex optimization problem with equilibrium constraints (IOPEC) in terms of contingent epiderivatives in real Banach spaces. We established weak and strong duality theorems for the problem (IOPEC) and its Wolfe and Mond–Weir dual problems (WIOPEC) and (MWIOPEC) in terms of contingent epiderivatives and subdifferentials with pseudoconvex functions. An application of the results to the GA-stationary vector of the problem (IOPEC) is presented. The results in this article are new and do not coincide with existing ones in the literature.

References

Aubin J-P (1981) Contingent derivatives of set-valued maps and existence of solutions to nonlinear inclusions and differential inclusions. In: L. Nachbin (ed) Advances in mathematics supplementary studies 7A. Academic Press, New York, pp 159–229

Aubin J-P, Frankowska H (1990) Set-valued analysis. Birkhäuser, Boston

Bhurjee AK, Panda G (2015) Multi-objective interval fractional programming problems: an approach for obtaining efficient solutions. Opsearch 52:156–167

Bhurjee AK, Panda G (2016) Sufficient optimality conditions and duality theory for interval optimization problem. Ann Oper Res 243:335–348

Bot RI, Grad S-M (2010) Wolfe duality and Mond-Weir duality via perturbations. Nonlinear Anal Theory Methods Appl 73(2):374–384

Bot RI, Grad S-M, Wanka G (2009) Duality in vector optimization. Springer, Berlin

Bonnel H, Iusem AN, Svaiter BF (2005) Proximal methods in vector optimization. SIAM J Optim 15(4):953–970

Gong XH (2010) Scalarization and optimality conditions for vector equilibrium problems. Nonlinear Anal 73:3598–3612

Iusem AN, Mohebbi V (2019) Extragradient methods for vector equilibrium problems in Banach spaces. Numer Funct Anal Optim 40(9):993–1022

Jahn J, Khan AA (2003) Some calculus rules for contingent epiderivatives. Optimization 52(2):113–125

Jahn J, Khan AA (2013) The existence of contingent epiderivatives for set-valued maps. Appl Math Lett 16:1179–1185

Jahn J, Rauh R (1997) Contingent epiderivatives and set-valued optimization. Math Methods Oper Res 46:193–211

Jayswal A, Stancu-Minasian I, Ahmad I (2011) On sufficiency and duality for a class of interval-valued programming problems. Appl Math Comput 218:4119–4127

Jayswal A, Stancu-Minasian I, Banerjee J (2016) Optimality conditions and duality for interval-valued optimization problems using convexificators. Rend Circ Mat Palermo 65:17–32

Jiménez B, Novo V (2008) First order optimality conditions in vector optimization involving stable functions. Optimization 57(3):449–471

Jiménez B, Novo V, Sama M (2009) Scalarization and optimality conditions for strict minimizers in multiobjective optimization via contingent epiderivatives. J Math Anal Appl 352:788–798

López MA, Still G (2007) Semi-infinite programming. Eur J Oper Res 180:491–518

Luc DT (1989) Theory of vector optimization. In: Lecture notes in economics and mathematical systems, vol 319. Springer, Berlin

Luc DT (1991) Contingent derivatives of set-valued maps and applications to vector optimization. Math Program 50:99–111

Luo ZQ, Pang JS, Ralph D (1996) Mathematical programs with equilibrium constraints. Cambridge University Press, Cambridge

Luu DV, Mai TT (2018) Optimality and duality in constrained interval-valued optimization. 4OR-Q J Oper Res 16:311–327

Luu DV, Hang DD (2015) On efficiency conditions for nonsmooth vector equilibrium problems with equilibrium constraints. Numer Funct Anal Optim 36:1622–1642

Luu DV, Su TV (2018) Contingent derivatives and necessary efficiency conditions for vector equilibrium problems with constraints. RAIRO Oper Res 52:543–559

Mangasarian OL (1969) Nonlinear Programming. McGraw-Hill, New York

Mangasarian OL (1965) Pseudo-convex functions. J SIAM Control Ser A 3:281–290

Mond B, Weir T (1981) Generalized concavity and duality. In: Generalized concavity in optimization and economics. Academic Press, New York

Movahedian N, Nabakhtian S (2010) Necessary and sufficient conditions for nonsmooth mathematical programs with equilibrium constraints. Nonlinear Anal 72:2694–2705

Moore RE (1983) Methods and applications of interval analysis. SIAM, Philadelphia

Pandey Y, Mishra SK (2016) Duality for nonsmooth optimization problems with equilibrium constraints, using convexificators. J Optim Theory Appl 171:694–707

Pandey Y, Mishra SK (2018) Optimality conditions and duality for semi-infinite mathematical programming problems with equilibrium constraints, using convexificators. Ann Oper Res 269:549–564

Rodríguez-Marín L, Sama M (2007) About contingent epiderivatives. J Math Anal Appl 327:745–762

Rodríguez-Marín L, Sama M (2007) Variational characterization of the contingent epiderivative. J Math Anal Appl 335:1374–1382

Su TV (2016) Optimality conditions for vector equilibrium problems in terms of contingent epiderivatives. Numer Funct Anal Optim 37:640–665

Su TV (2018) New optimality conditions for unconstrained vector equilibrium problem in terms of contingent derivatives in Banach spaces. 4OR-Q J Oper Res 16:173–198

Su TV, Hien ND (2019) Necessary and sufficient optimality conditions for constrained vector equilibrium problems using contingent hypoderivatives. Optim Eng. https://doi.org/10.1007/s11081-019-09464-z (to appear 2019)

Suneja SK, Kohli B (2011) Optimality and duality results for bilevel programming problem using convexificators. J Optim Theory Appl 150:1–19

Ye JJ (2005) Necessary and sufficient optimality conditions for mathematical programs with equilibrium constraints. J Math Anal Appl 307:350–369

Wolfe P (1961) A duality theorem for nonlinear programming. Q J Appl Math 19:239–244

Wu H-C (2008) On interval-valued nonlinear programming problems. J Math Anal Appl 338:299–316

Acknowledgements

The authors would like to express their sincere gratitude to the two anonymous reviewers for their thorough and helpful reviews, which significantly improved the quality of the paper. The authors also thank the editors for sending the manuscript to the reviewers.

Ethics declarations

Conflict of interest

All authors declare that they have no conflict of interest.

Ethical approval

This manuscript does not contain any studies with human participants or animals performed by any of the authors.

Additional information

Communicated by Gabriel Haeser.

Cite this article

Van Su, T., Dinh, D.H. Duality results for interval-valued pseudoconvex optimization problem with equilibrium constraints with applications. Comp. Appl. Math. 39, 127 (2020). https://doi.org/10.1007/s40314-020-01153-3

DOI: https://doi.org/10.1007/s40314-020-01153-3

Keywords

- Interval-valued pseudoconvex optimization problem with equilibrium constraints

- Wolfe type dual

- Mond–Weir type dual

- Optimality conditions

- Subdifferentials

- Pseudoconvex functions