Abstract

In this paper, we propose a primal–dual interior-point method for semidefinite optimization problems. The algorithm is based on a new class of search directions and the Ai-Zhang’s wide neighborhood for monotone linear complementarity problems. The theoretical complexity of the new algorithm is calculated. It is investigated that the proposed algorithm has polynomial iteration complexity \(O(\sqrt{n}L)\) and coincides with the best known iteration bound for semidefinite optimization problems.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Semidefinite optimization (SDO) problems are a special class of convex programming, which have been recently intensively studied because of their applicability to various areas, such as combinatorial optimization (Alizadeh 1995), system and control theory (Boyd et al. 1994) or mechanical and electrical engineering. Due to importance of this class of optimization problems, various algorithms have been proposed for solving and finding their optimal solutions. Among these algorithms, interior-point methods (IPMs) are one of the most efficient and applicable class of iterative algorithms which solve SDO problems in polynomial time complexity.

The first IPMs for SDO problems were developed by Alizadeh (1991) and Nesterov and Nemirovskii (1994). Primal–dual IPMs for SDO problems have been widely studied by Wolkowicz et al. (1999) and Klerk (2002). Several authors such as Helmberg et al. (1996), Vandenberghe and Boyd (1995), Wang and Bai (2009), Mansouri and Roos (2009) and Mansouri (2012) have proposed some interior-point algorithms for solving SDO problems. Wang et al. (2014) proposed an interior-point algorithm and improved the complexity analysis of IPMs for SDO problems using the Nesterov–Todd (NT) direction as the search directions. However, among iterative algorithms, Mehrotra’s predictor–corrector (MPC) algorithm (Mehrotra 1992) is the most computationally successful iterative algorithm for SDO problems and because of its practical efficiency, it is the base of the most IPMs software package such as SeDuMi (Sturm 1999).

Ai (2004), introducing a new wide neighborhood of the central path, proposed an interior-point algorithm for linear complementarity problems (LCPs) and show that their algorithm enjoys the low iteration bound \(O\left(\sqrt{n}L\right)\) where \(n\) is the number of variables and \(L\) is the input data length. Later, several authors, based on this wide neighborhood, proposed some interior-point algorithms for various class of optimization problems. For instance, Li and Terlaky (2010) generalized the Ai et al.’s (2005) idea for LCP to SDO problems and proved that the iteration complexity of their algorithm is the same as that of Ai and Zhang (2005). Liu and Liu (2012) proposed the first wide neighborhood second-order corrector algorithm with the same complexity as small neighborhood IPMs for SDO problems.

Yang et al. (2013) suggested a second-order MPC algorithm for SDO problems and proved the convergence and polynomial complexity of their algorithm based on using an important inequality. Liu et al. (2013) presented a new wide neighborhood infeasible interior-point algorithm for symmetric cone optimization and proved that their algorithm has the same theoretical complexity bound as the best short step path-following algorithms. Feng and Fang (2014) proposed a predictor–corrector path-following interior-point algorithm for SDO problems. Their algorithm enjoys the low iteration bound \(O(\sqrt{n}L)\) which is better than that of usual wide neighborhood algorithm \(O(nL)\). Some wide neighborhood interior-point algorithms for sufficient LCPs have been proposed by Potra (2014) in which the proposed algorithms produce a sequence of iterates in the wide neighborhood of the central path given by Ai and Zhang (2005).

Recently, Feng et al. (2014) proposed a new primal–dual path-following interior-point algorithm for second-order cone programming using the Ai and Zhang (2005) wide neighborhood instead of classical wide neighborhood. Motivated by Feng et al. (2014), the main aim of this paper is to present a wide neighborhood feasible interior-point algorithm for SDO problems. The algorithm uses a new class of search directions and the wide neighborhood given by Ai and Zhang (2005). Furthermore, replacing the right-hand side of the complementarity equation in the system of KKT condition for SDO problems by a new term leads to a new class of search directions in our algorithm. We prove that by starting from an initial feasible point \(\left(X^0,\,y^0,\,S^0\right)\) in wide neighborhood of the central path, all generated iterations also belong to this wide neighborhood. Although, the algorithm belongs to the class of large-step algorithms we prove that the proposed algorithm enjoys the low complexity bound \(O(\sqrt{n}L)\) and it coincides with the best complexity bound in the context of wide neighborhood IPMs for SDO problems.

The rest of the paper is organized as follows. In Sect. 2, we introduce the SDO problem and review some basic concepts of IPMs such as central path and NT-search direction. We also introduce a wide neighborhood of the central path for SDO problem which plays an important role in convergence analysis of the algorithm. In Sect. 3, we present a new class of search directions and describe a general description of the proposed algorithm for SDO problem. Some technical lemmas and important results will be stated in Sect. 3.1. The convergence analysis and the proof of the polynomial complexity of the proposed algorithm are established in Sect. 4. Finally, the paper ends with some concluding remarks in Sect. 5.

We will use the following notations in the paper. \(\mathbb {R}^{n}\) denotes the space of vectors with \(n\) components. Moreover, \({S}^{n}\) denotes the set of \(n\times n\) real symmetric matrices. \(S_{++}^{n}\left(S_{+}^{n}\right)\) denotes the set of all matrices in \({S}^{n}\) which are positive definite (positive semidefinite). For \(Q\in {S}^{n}\), we write \(Q\succ 0\left(Q\succeq 0\right)\) if \(Q\) is positive definite (positive semidefinite). The Frobenius and the spectral norms are denoted respectively by \(\left\Vert \cdot \right\Vert _{F}\) and \(\left\Vert \cdot \right\Vert \). For any matrix \(A\), \(\lambda _{i}(A)\) denotes the \(i\)th eigenvalue of \(A\), \(\lambda _{\min }(A)\) the smallest eigenvalue of \(A\) and \(\mathbf {det}(A)\) its determinant whereas \(\mathbf {Tr}(A)=\sum _{i=1}^{n}\lambda _{i}(A)\) denotes its trace.

The symmetric positive definite square root matrix of any symmetric positive definite matrix \(X\) is denoted by \(X^\frac{1}{2}\). The notation \(A\sim B \Longleftrightarrow A=SBS^{-1}\) for some invertible matrix \(S\) means the similarity between \(A\) and \(B\), and the identity matrix is denoted by \(I\). For a given matrix \(Q\in S^{n},\) we show its eigenvalue decomposition as \(Q=U\Lambda U^{T}\). For any \(p\times q\) matrix \(A\), vec \(A\) denotes the \(pq\)-vector obtained by stacking the columns of \(A\) one by one from the first to the last column. The Kronecker product of two matrices \(A\) and \(B\) is denoted by \(A\otimes B\) (see Helmberg et al. 1996 for the more details of the Kronecker product). Finally, assuming the matrix \(Q\in S^{n}\), \(\lambda (Q)\) indicates the vector of eigenvalues of the matrix \(Q\) while \(Q^+\) and \(Q^-\) denote the positive and negative parts of \(Q\) as follows

where \((\lambda _{i})^{+}=\max \{\lambda _{i}, 0\}\) and \((\lambda _{i})^{-}=\min \{\lambda _{i}, 0\}\).

2 Interior-point methods for SDO problems

We consider the standard form of the SDO problem:

and its dual

where \(C, X, A_{i}\in S^{n}\) for \(i=1,2,\ldots ,m\) and \( y\in \mathbb {R}^{m}\). We assume that the relative interior set

is nonempty. Under this assumption, both problems (1) and (2) are solvable and the optimality conditions for them can be written as follows:

where the last equality is called the complementarity equation. The basic idea of primal–dual IPMs is to replace the complementarity equation \(XS=0\) by the parameterized perturbed equation \(XS=\tau \mu I\) with \(\mu > 0\) and \(\tau \in (0,1)\). By this substitution, we have the following parameterized system

If \(\mathcal {F}^0\ne \emptyset \), system (4) has a unique solution denoted by \((X(\mu ),y(\mu ), S(\mu ))\) for any positive parameter \(\mu \). The set of all such solutions constructs a guide curve, so called the central path, which converges to optimal solution pair of problems (1) and (2) as \(\mu \) reduces to zero (Kojima et al. 1997; Halicka et al. 2002). Since the left-hand side of (4) is a map from \(S^{n}\times \mathbb {R}^{m}\times S^{n}\) to \(\mathbb {R}^{n\times n}\times \mathbb {R}^{m}\times S^{n}\), it follows that system (4) is not a square system when \(X\) and \(S\) are restricted to \(S^{n}\). To remedy, assuming \(P\in \mathbb {R}^{n\times n}\) as a nonsingular matrix and using the symmetrization scheme

proposed by Zhang (1998), we replace the perturbed equation \(XS = \tau \mu I\) by \(H_P(XS) = \tau \mu I\) where the matrix \(P\) belongs to the specific class

Thus, system (4) can be rewritten in equivalent form as follows:

As it is well known, Newton’s method is a perfect procedure to solve a system of nonlinear equations. Replacing \((X,y,S)\) by \((X+\Delta X,y+\Delta y,S+\Delta S)\) in (6) and deleting the nonlinear term \(H_{P}(\Delta X\Delta S)\) from the third equation of (6), we can derive a new linearized Newton search direction system as follows

Different choices have been proposed for the nonsingular matrix \(P\). However, in our analysis, we use the matrix \(P=W^{\frac{1}{2}}\) proposed by Nesterov and Todd (1998) where

Let us further define

Then, the third equation in system (7) can be rewritten as

or equivalently as in Monteiro and Zhang (1998), in terms of the Kronecker product, it becomes

where

As in (Monteiro and Zhang 1998), for the choice \(P=W^{\frac{1}{2}}\), it is easy to check that \(PXSP^{-1}\in S^n\) and \(\hat{X}=\hat{S}\) and therefore \(\hat{E}=\hat{F}\).

As it is well known, the classical path-following interior-point algorithms for SDO problems are based on the following usual central path neighborhoods

and

where \(\tau \in (0,1)\) is a constant and \(\mu =\frac{\mathbf {Tr}(XS)}{n}\) is the normalized duality gap corresponding to \((X, y, S)\). In this paper, we define a new wide neighborhood as follows:

where \(\beta ,\tau \in (0,1)\) are given constants. The new neighborhood is similar to the proposed neighborhood by Ai and Zhang (2005). The following lemma indicates that the neighborhood \(\mathcal {N}(\tau ,\beta )\) is indeed a scaling invariant wide neighborhood. For the proof and more details see Sect. 2 in Feng and Fang (2014).

Lemma 2.1

If \(\beta ,\tau \in (0,1)\) are given constants, then \(\mathcal {N}_{\infty }^{-}(\tau )\subseteq \mathcal {N}(\tau ,\beta )\) and \(\mathcal {N}(\tau ,\beta )\) is scaling invariant. That is, \(\left(X,y,S\right)\) is in the neighborhood if and only if \(\left(\hat{X},y,\hat{S}\right)\) is.

3 Search directions and algorithm

In this section, motivated by Feng et al. (2014), we replace the right-hand side of the third equation in system (7) by a new term and obtain a class of new search directions for solving SDO problems. We also present a general description of feasible wide neighborhood interior-point algorithm for SDO problems.

Let \(V\) be a matrix related to the current iterate \((X,y,S)\) as follows:

where

According to (13) and (14), it is clear that

Introducing the new matrix \(tV-H_{P}(XS)\) with \(t\in [0,1]\) and replacing the right-hand side of the third equation in (7) by \(tV-H_{P}(XS)\), this system can be rewritten as follows:

or equivalently, in terms of the scaled search directions and Kronecker product

where \(\hat{E}\) and \(\hat{F}\) are defined as in (12) and \(\hat{A_{i}}=P^{-1}A_{i}P^{-1}\). After taking a full Newton step along \((\Delta X,\Delta y,\Delta S)\), the new iterate is given by

Below, we describe more precisely the wide neighborhood feasible interior-point algorithm for SDO problems.

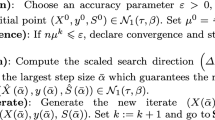

The wide neighborhood feasible algorithm for SDO problems

-

Input parameters: Required precision \(\varepsilon >0\), neighborhood parameters \(\beta ,\tau \in (0,\frac{1}{3}]\) and initial solution \(\left(X^{0},y^{0},S^{0}\right)\in \mathcal {N}(\tau ,\beta )\).

-

Output: A sequence of iterates \(\left({\hat{X}}^{k},y^{k},{\hat{S}}^{k}\right)\) for \(k=1,2,\ldots \)

-

step 0: Set \(k:=0\).

-

step 1: If \(n\mu ^{k}\le \varepsilon \), then stop. Otherwise go to step 2.

-

step 2: Let \(\left(\hat{X},y,\hat{S}\right)=({\hat{X}}^{k},y^{k},{\hat{S}}^{k})\) and \(\mu =\mu ^{k}\). Compute \(V=V^{k}\) from (13) and \(\left(\Delta \hat{X},\Delta y,\Delta \hat{S}\right)(t)\) from (17). Let \(\left(\hat{X}(t),y(t),\hat{S}(t)\right)=\left(\hat{X},y,\hat{S}\right)+\left(\Delta \hat{X},\Delta y,\Delta \hat{S}\right)(t)\) and find the smallest \(\bar{t}\) such that \(\left(\hat{X}(t),y(t),\hat{S}(t)\right)\in \mathcal {N}(\tau ,\beta )\), for any \(t\in [\bar{t},1]\).

-

step 3: Let \(t^{k}=\bar{t}\) and set \(({\hat{X}}^{k+1},y^{k+1},{\hat{S}}^{k+1})=(\hat{X}(t^{k}),y(t^{k}),\hat{S}(t^{k}))\) and \(\mu ^{k+1}=t^{k}\mu ^{k}\). Then, go to \(\mathbf {step 1}\).

Remark 1

In practice, we do not need to obtain an exact value for the parameter \(\bar{t}\). Similar to Ai (2004) and Feng et al. (2014) we only find an approximate value \(\hat{t}\) of \(\bar{t}\) such that \(\left(\hat{X}(t),y(t),\hat{S}(t)\right)\in \mathcal {N}(\tau ,\beta )\), \(\hat{t}\le 1-\frac{\alpha }{\sqrt{n}}\) and \(\hat{t}\le \gamma \bar{t}\), where \(\gamma \ge 1\) is a constant. This approximate value ensures the \(O(\sqrt{n}L)\)-iteration complexity as we prove in Theorem 4.1. An efficient method to find such an approximate value \(\hat{t}\) is the bisection method on the interval \([0,1-\frac{\alpha }{\sqrt{n}}]\), in which case we have \(\hat{t}\le 2\bar{t}\) and we can choose \(\gamma =2\).

3.1 Technical results

In this subsection, we present some technical lemmas which will be used in proof of convergence analysis of the proposed algorithm. According to (13) and (14), we have the following lemma.

Lemma 3.1

Let \(V\) and \(\eta \) be defined as (13) and (14). Then, \(\eta \in [0,1)\) and

Proof

The first part of the lemma can be easily concluded as follows:

On the other hand, using the definition of \(V\), we have

which concludes that \( V\succeq \tau \mu I\). This follows the second claim and completes the proof. \(\square \)

Lemma 3.2

After a full Newton-step one has

-

(i)

\(H_{P}\left(X(t)S(t)\right)=tV+H_{P}\left(\Delta X\Delta S\right),\)

-

(ii)

\(\mu (t)=t\mu .\)

Proof

To prove the first claim \(\mathbf {(i)}\), due to (18) and the third equation of (16), we have

On the other hand, due to (19), the orthogonality of the search directions \(\Delta X\) and \(\Delta S\) and (15) we have

which proves the second part of the lemma and ends the proof. \(\square \)

Lemma 3.3

Let \(\left(X,y,S\right)\in \mathcal {N}\left(\tau ,\beta \right)\). Then

Proof

Due to similarity of the matrices \(XS\) and \(S^{\frac{1}{2}}XS^{\frac{1}{2}}\), we have

which results (20). To prove (21), noticing the facts \(\mathbf {Tr}(Q^{+})=\left\Vert \lambda \left(Q^{+}\right) \right\Vert _{1}\) and \(\left\Vert x \right\Vert _{1}\le \sqrt{n}\left\Vert x \right\Vert _{\infty }\) for \(x\in {\mathbb {R}}^n\), we obtain

where the last inequality follows from \(\left(X,y,S\right)\in \mathcal {N}\left(\tau ,\beta \right)\). This follows the inequality (21) and completes the proof. \(\square \)

Corollary 1

Let \((X,y,S)\in \mathcal {N}(\tau ,\beta )\) and \(\beta ,\tau \in (0,\frac{1}{3}]\). Then, \(\eta \le \frac{1}{2}\sqrt{\frac{\beta \tau }{n}}\).

Proof

Considering the definition of \(\eta \), similarity of matrices \(\hat{X}\hat{S}\) and \(X^{\frac{1}{2}}SX^{\frac{1}{2}}\), and using Lemma 3.3 we immediately derive

where the last inequality follows from \(\beta ,\tau \in (0,\frac{1}{3}]\). This follows the result. \(\square \)

Now, we recall the following technical lemma from (Li and Terlaky 2010) which directly uses in Proof of Lemma 3.5.

Lemma 3.4

(Lemma 5.10 in Li and Terlaky 2010) Let \(X,S\in S_{++}^{n}\), \(P\in \mathcal {C}(X,S)\), \(\hat{X}\) and \(\hat{S}\) be defined by (9) and \(\hat{E}, \hat{F}\) be defined as in (12). Then

Lemma 3.5

Let \((X,y,S)\in \mathcal {N}(\tau ,\beta )\). Then

Proof

Using Lemma 3.4 with \(\hat{E}=\hat{F}\) and Corollary 1, we have

where the last equality is obtained because of \(\mathbf {Tr}\,\left(\hat{X}\hat{S}\right)=n\mu \). This follows the desired result. \(\square \)

Lemma 3.6

Let \((X,y,S)\in \mathcal {N}(\tau ,\beta )\). Then we have

Proof

Since \(\lambda _{\min }(\hat{E}^{2})=\lambda _{\min }(\hat{X}\hat{S})\ge (1-\beta )\tau \mu \), it follows that

where the first inequality is obtained because of the properties of norms and the last inequality follows because of \(\beta \le \frac{1}{3}\). This completes the proof. \(\square \)

Corollary 2

Let \((X,y,S)\in \mathcal {N}(\tau ,\beta )\). Then

Proof

According to the definition of the matrix \(V\), we have

Therefore, due to the orthogonality of the vectors \(\mathbf {vec}\,\left(\cdot \right)^{+}\) and \(\mathbf {vec}\,\left(\cdot \right)^{-}\) and Lemmas 3.5 and 3.6, we have

This completes the proof. \(\square \)

Lemma 3.7

Let \((X,y,S)\in \mathcal {N}(\tau ,\beta )\), \(0<\tau \le \beta \le \frac{1}{3}\) and \(1-\frac{\alpha }{\sqrt{n}}\le t\le 1\) such that \(\alpha \le \frac{1}{4}\sqrt{\beta \tau }\). Then, we have

Proof

We may write

where the last inequality follows from the fact \(\left(t+\frac{1}{4}\right)^{2}\le 2t\) for \(1-\frac{\alpha }{\sqrt{n}}\le t\le 1\) that \(\alpha \le \frac{1}{4}\sqrt{\beta \tau }\). This follows the desired result. \(\square \)

4 Convergence analysis

In this section, we investigate the proposed feasible algorithm is well defined and its complexity is \(O\left(\sqrt{n}\right)\). To this end, let us to state the following lemma which is useful for obtaining the iteration bound of the algorithm.

Lemma 4.1

Let \(P(t)\equiv \left(W(t)\right)^{\frac{1}{2}}\) where

Moreover, suppose that \(\left(\Delta X,\Delta y,\Delta S\right)\) is the solution of system (16). Then

Proof

Using the third equation of system (17) and the fact \(\hat{E}=\hat{F}\), we have

Taking Euclidean-norm squared of both side of the above equation, applying the orthogonality property of \(\mathbf {vec}{\Delta \hat{X}}\) and \(\mathbf {vec}{\Delta \hat{S}}\) and using Lemma 3.7 we get

which completes the proof. \(\square \)

We are ready to present the main result of the paper as follows.

Lemma 4.2

Assume that the current iterate \((X,y,S)\in \mathcal {N}(\tau ,\beta )\). Let \(0<\tau \le \beta \le \frac{1}{3}\) and \(1-\frac{\alpha }{\sqrt{n}}\le t\le 1\) such that \(\alpha \le \frac{1}{4}\sqrt{\beta \tau }\). Then, the new iterate \((X(t),y(t),S(t))\) generated by the feasible wide neighborhood algorithm belongs to \(\mathcal {N}(\tau ,\beta )\).

Proof

Using (19) and the facts \(\left\Vert \left(M+N\right)^{+} \right\Vert _{F}\le \left\Vert M^{+} \right\Vert _{F}+\left\Vert N^{+} \right\Vert _{F}\) and \(\left\Vert M^+ \right\Vert _{F}\le \left\Vert \left(H_{P}\left(M\right)\right)^+ \right\Vert _{F}\) (Lemmas 3.1 and 3.3 in Li and Terlaky 2010), we have

where the third equality follows because of the fact \(\left\Vert \left(\tau \mu I-V\right)^{+} \right\Vert _{F}=0\) and the last inequality is due to Lemma 4.1. On the other hand, due to (28) and similarity of matrices \(X(t)S(t)\) and \(X(t)^{\frac{1}{2}}S(t)X(t)^{\frac{1}{2}}\) we conclude

which reveals \({X}(t){S}(t)\) is non-singular matrix and further implies that \({X}(t)\) and \({S}(t)\) are non-singular as well. Using continuity of the eigenvalues of a symmetric matrix, it follows that \({X}(t)\) and \({S}(t)\) are positive definite matrices for all \(t\in [0,{1}]\), since \({X}, {S}\) are positive definite matrices. This proves that \(\left(X(t),y(t),S(t)\right)\in \mathcal {N}(\tau ,\beta )\) and completes the proof. \(\square \)

Theorem 4.1

The proposed feasible wide neighborhood algorithm terminates in at most \(O\left(\sqrt{n}{L}\right)\) iterations where \(L=\frac{1}{\alpha }\log \frac{\mathbf {Tr}\,\left(X^0S^0\right)}{\varepsilon }\) and \(\alpha =\frac{1}{4}\sqrt{\beta \tau }\).

Proof

According to Remark 1, we have \(t_{k}\simeq \hat{t}_{k}\le 1-\frac{\alpha }{\sqrt{n}}\). Since \(\mu (t)=t\mu \), it follows that

which implies \(n\mu ^{k}\le \varepsilon \) for \(k\ge \sqrt{n}L\). This follows the desired result and completes the proof. \(\square \)

5 Concluding remarks

In this paper, we proposed a new path-following wide neighborhood feasible interior-point algorithm for SDO problems. The algorithm is based on using a wide neighborhood and a new class of search directions. Although, the proposed algorithm belongs to the class of large-step algorithms, its complexity is coincide with the best iteration bound obtained by the short-step path-following algorithms for SDO problems.

References

Ai W (2004) Neighborhood-following algorithm for linear programming. Sci China Ser A 47:812–820

Ai W, Zhang SZ (2005) An \(O(\sqrt{n}L)\) iteration primal–dual path-following method, based on wide neighborhoods and large updates for monotone LCP. SIAM J Optim 16:400–417

Alizadeh F (1991) Combinatorial optimization with interior-point methods and semi-definite matrices. Ph.D. thesis, Computer Science Department, University of Minnesota, Minneapolis

Alizadeh F (1995) Interior point methods in semidefinite programming with applications to combinatorial optimization. SIAM J Optim 5:13–51

Boyd S, El Ghaoui L, Feron E, Balakrishnan V (1994) Linear matrix inequalities in system and control theory. SIAM, Philadelphia

Feng ZZ, Fang L, Guoping H (2014) An \(O(\sqrt{n}L)\) iteration primal–dual path-following method, based on wide neighbourhood and large update, for second-order cone programming. Optimization 63(5):679–691

Feng ZZ, Fang L (2014) A new \(O(\sqrt{n}L)\)-iteration predictor–corrector algorithm with wide neighborhood for semidefinite programming. J Comput Appl Math 256:65–76

Halicka M, Klerk E, Roos C (2002) On the convergence of the central path in semidefinite optimization. SIAM J Optim 12(40):1090–1099

Helmberg C, Rendl F, Vanderbei RJ, Wolkowicz H (1996) An interior-point method for semidefinite programming. SIAM J Optim 6:342–361

Klerk E (2002) Aspects of semidefinite programming: interior point methods and selected applications. Kluwer, Dordrecht

Kojima M, Shindoh M, Hara S (1997) Interior point methods for the monotone linear complementarity problem in symmetric matrices. SIAM J Optim 7:86–125

Li Y, Terlaky T (2010) A new class of large neighborhood path-following interior point algorithms for semidefinite optimization with \(O(\sqrt{n}\log \frac{Tr(X^{0}S^{0})}{\epsilon })\) iteration complexity. SIAM J Optim 20:2853–2875

Liu C, Liu H (2012) A new second-order corrector interior-point algorithm for semi-definite programming. J Math Oper Res 75:165–183

Liu H, Yang X, Liu C (2013) A new wide neighborhood primal–dual infeasible interior-point method for symetric cone programming. J Optim Theory Appl 158:796–815

Mansouri H, Roos C (2009) A new full-Newton step \(O(nL)\) infeasible interior-point algorithm for semidefinite optimization. Numer Algorithm 52(2):225–255

Mansouri H (2012) Full-Newton step infeasible interior-point algorithm for SDO problems. J Kybern 48(5):907–923

Mehrotra S (1992) On the implementation of a primal–dual interior point method. SIAM J Optim 2:575–601

Monteiro RDC, Zhang Y (1998) A unified analysis for a class of long-step primal–dual path-following interior-point algorithms for semidefinie programming. Math Program 81:281–299

Nesterov YE, Nemirovskii AS (1994) Interior-point polynomial algorithms in convex programming. SIAM, Philadelphia

Nesterov YE, Todd MJ (1998) Primal–dual interior-point methods for self-scaled cones. SIAM J Optim 8:324–364

Potra FA (2014) Interior point methods for sufficient horizontal LCP in a wide neighborhood of the central path with best known iteration complexity. SIAM J Optim 24(1):1–28

Sturm JF (1999) Using SeDuMi 1.02, a MATLAB toolbox for optimization over symmetric cones. Optim Method Soft 11:625–653

Vandenberghe L, Boyd S (1995) A primal–dual potential reduction method for problems involving matrix inequalities. Math Program 69:205–236

Wang GQ, Bai YQ, Gao XY, Wang DZ (2014) Improved complexity analysis of full Nesterove–Todd step interior-point methods for semidefinite optimization. J Optim Theory Appl. doi:10.1007/s10957-014-0619-2

Wang GQ, Bai YQ (2009) A new primal–dual path-following interior-point algorithm for semidefinite optimization. J Math Anal Appl 353(1):339–349

Wolkowicz H, Saigal R, Vandenberghe L (1999) Handbook of semidefinite programming: theory, algorithms and applications. Kluwer, Norwell

Yang X, Liu H, Zhang Y (2013) A second-order Mehrotra-type predictor–corrector algorithm with a new wide neighborhood for semidefinte programming. Int J Comput Math 91(5):1082–1096

Zhang Y (1998) On extending some primal–dual algorithms from linear programming to semidefinite programming. SIAM J Optim 8:365–386

Acknowledgments

The authors would like to thank the anonymous referees for their useful comments and suggestions, which helped to improve the presentation of this paper. The authors also wish to thank Shahrekord University for financial support. The authors were also partially supported by the Center of Excellence for Mathematics, University of Shahrekord, Shahrekord, Iran.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Andreas Fischer.

Rights and permissions

About this article

Cite this article

Pirhaji, M., Mansouri, H. & Zangiabadi, M. An \(O\left(\sqrt{n}L\right)\) wide neighborhood interior-point algorithm for semidefinite optimization. Comp. Appl. Math. 36, 145–157 (2017). https://doi.org/10.1007/s40314-015-0220-9

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40314-015-0220-9