Abstract

This work is motivated by the need to perform the appropriate “robust” analysis on right-censored survival data. As in other domains of application, modelling and analysis of data generated by medical and biological studies are often unstable due to the presence of outliers and model misspecification. Use of robust techniques is helpful in this respect, and has often been the default in such situations. However, a large contaminating set of observations can often mean that the group is generated systematically by a model which is different from the one to which the majority of the data are attributed, rather than being stray outliers. The method of weighted likelihood estimating equations might provide a solution to this problem, where the different roots obtained can indicate the presence of distinct parametric clusters, rather than providing a single robust fit which ignores the observations incompatible with the major fitted component. Efron’s (J. Am. Stat. Assoc. 83, 402, 414–425, 1988) head-and-neck cancer data provide an ideal scenario for the application of such a method. A recently developed variant of the weighted likelihood method provides a nice illustration of the presence of different clusters in Efron’s data, and highlights the benefits of the weighted likelihood method in relation to classical robust techniques.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Analyzing survival data is a problem of immense practical interest. Survival data naturally occur in diverse medical research areas such as epidemiology, cancer research, biomedical experiments, etc., as well as in various fields such as industrial life testing, criminology, engineering, sociology, marketing and insurance. Motivated by the need to provide appropriate analysis of right-censored survival data involving a large proportion of outliers, the present paper develops a robust method of analysis for such right-censored survival data. In particular, we have attempted to provide a neat analysis of Efron’s head-and-neck cancer data set, Arm A (Efron, 1988) which exhibits several right-censored observations as well as an appreciable proportion of large outlying observations.

The application of robust statistics comes into play in such situations where one or more standard assumptions of parametric inference fail to hold. Robust procedures protect the analysis from degenerating into useless numbers under the presence of a group of observations which are incompatible with the rest of the data under the assumed model. Often robust procedures provide a nice fit to the majority of the observations in the sample while completely sacrificing a smaller but discordant minority. The application of robust techniques is increasingly becoming more popular as the need for stability under model misspecification and data contamination is becoming more and more real in the age of big data.

A robust method, however, generally tends to provide a single meaningful fit to the given data on the basis of the assumed model. Observations which stand out subsequent to the model fit may be termed as outliers. Yet it is possible that the group of outliers that are discarded by the robust model fit may themselves form a separate homogeneous cluster which may be appropriately described by a different model and is, sometimes, also of interest to us. Thus, apart from simply looking at a single robust fit to the entire data set, our aim, at times, is to uncover the different segments of the data set in question which clearly appears to be generated by different models. The primary aim of this paper is to demonstrate that our method can be useful when there are different groups in the data.

Efron’s head-and-neck cancer (Arm A) data set (Efron, 1988) is an obvious and prominent example of the type of situations we want to handle. It has a small but non-negligible proportion of moderately extreme outliers as well as a small proportion of censored observations. In addition, the largest observation in this case is an actual failure (and not a censored value). Thus we have a well defined and complete empirical estimate of the theoretical survival curve through the Kaplan-Meier product-limit estimator (Kaplan and Meier, 1958).

The rest of the paper is organized as follows. In Section 2 we describe the Arm A data set for the head-and-neck cancer study considered by Efron (1988). Section 3 is devoted to the description of the concept of the weighted likelihood estimation procedure developed in Biswas et al. (2015) and Majumder et al. (2016). Necessary adjustments via the Kaplan-Meier product-limit estimator have been done in Section 4 in order to accommodate censored observations in the analysis. Section 5 contains necessary theoretical results for the weighted likelihood estimator under the right-censored survival set up. The statistical analysis of Efron’s Arm A data set has been presented in Section 6. Some simulation results have been provided for exponential and Weibull distributions in Section 7. A tuning parameter selection technique is described in Section 8. Finally, Section 9 has some concluding remarks. All the numerical computations have been performed using the R programming language.

It is also pertinent to mention here that what we have demonstrated in this paper is an application of the robust and efficient weighted likelihood methodology that is general and not specific to this particular variant (Biswas et al. 2015; Majumder et al. 2016) of the weighted likelihood method alone. This inference procedure would work equally well with the weighted likelihood approach of Markatou et al. (1997) and Markatou et al. (1998). In addition, our method will work perfectly well as the robust estimator of choice when there is a single and prominent majority component in the data with the other observations being stray outliers, and a single robust fit is desired. We primarily emphasize the property of identifying discordant model clusters, rather than the robustness issue per se, because we want to highlight its novelty with relation to the former.

2 Efron’s Head-and-Neck Cancer Data

Efron (1988) considered two batches of head-and-neck cancer patients for the experiment conducted by the Northern California Oncology Group. The two batches, denoted Arm A and Arm B, differ in the medication administered to them. The first batch of patients was treated only with radiation therapy whereas the second batch received both radiation therapy and chemotherapy. Both batches have some large outlying observations as well as a few censored observations. We wish to study such data, particularly that in Arm A, in the present exposition. The survival times (in days) recorded for the patients in Arm A are provided in Table 1 (“+” indicates a censored observation).

The data in Arm B can also be studied by the method presented here. However, in case of Arm B, the last observation is censored (and is not an actual failure), so we do not have a complete estimate of the survival curve through the Kaplan-Meier approach, and some artificial method of completing it has to be adopted. We plan to tackle such cases in a sequel paper.

A quick look at the data, as well as the kernel density plots of Figs. 1 and 2 appear to indicate there are two distinct clusters in the data, one constituted by the first forty four observations, the other by the last seven. We will make use of this fact later in this paper.

3 Weighted Likelihood Estimation

The likelihood is normally defined as the product of the density values at the data points under the assumed model. All the observations in the sample get uniform treatment in the construction of the likelihood. Maximizing the likelihood with respect to the parameter, therefore, leads to an estimator in which contributions from all the sample points get the same, uniform weight. Similarly, in the maximum likelihood score equation, the score function is the ordinary sum of the scores of each observation. In case observations incompatible with respect to the model are present in the sample, there may be a considerable amount of influence of these points on the estimation process which may often lead to inaccurate inference. Consequently, the usefulness of the maximum likelihood estimator (MLE) is often questionable in such cases.

One of the objectives of robust statistical procedures in these situations is to develop an estimator on which the impact of the outliers should not be as high as their impact on the MLE. At the same time, the asymptotic efficiency of the estimator under the true model should not be unduly low in comparison with the MLE. Weighting the likelihood so that the observations incompatible with the model get lower weights and do not significantly affect the estimation process is one such strategy.

A variety of weighted likelihood estimation methods have been developed in the literature. In the weighted likelihood strategy proposed by Biswas et al. (2015), we obtain an estimator which is simultaneously robust and asymptotically fully efficient. The idea and form of this method closely resembles that of Markatou et al. (1998), which is another approach which could have produced similar results in this context. It has been shown in Majumder et al. (2016) that the Biswas et al. estimator enjoys many important theoretical properties (as also does the Markatou et al., 1998, method), e.g., full asymptotic efficiency at the model and location-scale equivariance. Instead of the density function, the sample (Fn(⋅) or Sn(⋅)) and model (F𝜃(⋅) or S𝜃(⋅)) cumulative distribution functions (CDF) and survival functions have been employed in Biswas et al. (2015). The idea is to construct a residual function τn,𝜃(⋅), similar to the Pearson residual (Lindsay, 1994), which is defined by

where p ∈ (0,0.5] is a tuning parameter determining the proportion of observations to be downweighted. So, essentially we are keeping the contribution of the sample points coming from the central 100 × (1 − 2p)% area of the model distribution intact. Observations representing the tails of the distribution are possible candidates for downweighting. But we will downweight them only if they appear to be incompatible with the model in relation to the rest of the data. Based on the residual in Eq. (1), the observations are weighted by a suitable function H(⋅). The function H(⋅) is chosen to be a nonnegative unimodal function, with the maximum value 1 when the residual τn,𝜃(x) equals zero; the tails decrease smoothly on both sides to downweight the effect of discordant observations. In particular, the function H(⋅) drops to zero as its argument runs away to (positive) infinity. Detailed construction of the weight function H(⋅) along with some examples has been provided in Majumder et al. (2016). One such example of H-function obtained as suitable ratios of the gamma density gα,β is given by

where β > 1 is the shape parameter and α = 1/(β − 1) is the scale parameter necessary for the function HGamma(⋅) to have the right properties. Another choice for H may be on the basis of the Weibull density hα,β, with shape parameter β and scale parameter α = (1 − 1/β)− 1/β, β > 1, which leads to the weight function

Based on the above formulation, the proposed weight function is then defined by

We now introduce the basic mathematical set up for the subsequent weighted likelihood analyses. Let X1,X2,⋯ ,Xn be independent and identically distributed (i.i.d.) observations from an unknown distribution, which we model by the family \( \mathcal {F}_{\Theta } = \{F_{\theta }: \theta \in {\Theta } \subset \mathbb {R}^{d} \} \). The aim is to estimate the unknown parameter 𝜃 that best fits the data at hand. The maximum likelihood estimator of 𝜃 is obtained by solving the estimating equation

where \( u_{\theta }(x) = \frac {\partial }{\partial \theta }\log f_{\theta }(x) \) is the likelihood score function. In weighted likelihood estimation, the estimator is the solution to

where the weight w𝜃(⋅) is as in Eq. (4). This strategy downweights outlying observations, but does so adaptively, so that it does not necessarily downweight a proportion of the observations. This provides an intuitive explanation of why the estimators are simultaneously robust and asymptotically fully efficient.

4 Weighted Likelihood Estimation for Right-Censored Data

In the earlier section, we described the weighted likelihood strategy developed for i.i.d. data. In this section we are going to describe the appropriate extension under which it may be used in the survival analysis situation to accommodate the censored observations. The precise mathematical set up is as follows.

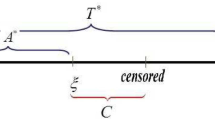

Let T1,T2,…,Tn represent the lifetimes of a group of individuals (or the failure times of a certain brand of products) obeying the law F𝜃(⋅) (with density f𝜃(⋅) and survival function S𝜃(⋅)). Letting T denote the lifetime variable, F𝜃 and S𝜃 are defined as

It may not always be possible to monitor all the items till their actual failure times; as a result, some censoring is often introduced in the sampling scheme. Here we assume that there is an independent censoring mechanism and the censoring time for each item is randomly governed by the distribution FC(⋅) (with density fC(⋅) and survival function SC(⋅)). Let C1,C2,…,Cn be the corresponding censoring times for the items under consideration. In practice, we have information about whether a particular item is censored and the corresponding time. In other words, what we know about the data set are the variables

The latter variable is the indicator of the event \( \lbrace T_{i} \leqslant C_{i}\rbrace \). For a particular realization, we shall consider the lower case versions, i.e., the pair (xi,δi) will denote the i th observation.

Next we propose the weighted likelihood strategy by defining the residual function. In the survival analysis scenario, we consider the Kaplan-Meier estimate of the survival function, defined by

where X(i), i ∈{1,2,…,n}, are the order statistics of (X1,X2,…,Xn) and δ[i] is the i th concomitant corresponding to X(i), i ∈{1,2,…,n} (i.e., δ[i] is the indicator corresponding to Xj, where Xj is the i-th order statistic). In case the largest value is a censored observation (rather than being an actual failure), the survival curve remains incomplete, and artificial methods are required to complete them. We will not bring that situation within the ambit of the present discussion. However, in case of the Arm A data set of Efron (1988), the final observation is indeed an uncensored observation. So the product-limit estimator does not need any modification in this case.

Notice that in this definition \( \hat {S}_{KM}(x) \) is the estimated probability \( \mathbb {P}(T > x) \). For example, if the first observation is a failure at x, then \( \hat {S}_{KM}(x) = (n-1)/n \). On the other hand, \( \hat {F}_{KM}(x) = 1 - \hat {S}_{KM}(x) \) is an estimate of the probability \( \mathbb {P}(T \leqslant x) \). In order to get the estimated probabilities corresponding to \( \mathbb {P}(T \geqslant x) \) we actually use \( \hat {S}_{KM}^{\ast }(x) \) in our subsequent analysis, obtained by adding the Kaplan-Meier mass, equal to the jump of the original Kaplan-Meier curve at that point, to each failure point x. This leads us to the definition of the modified residual function as

Having set up the above concepts, we now proceed to define the estimating equation in the right-censored, independent censoring scenario. The likelihood function is given by

We further consider the log-likelihood function in order to define the score equation and this leads to the expression

We obtain the maximum likelihood estimator of 𝜃 by maximizing this log-likelihood with respect to 𝜃 ∈Θ or, equivalently, solving the estimating equation given by

where ∇ represents derivative with respect to 𝜃, \( u_{1,\theta }(x) = \nabla \log f_{\theta }(x) \), \( u_{2,\theta }(x) = \nabla \log S_{\theta }(x) \) and

Hence, the proposed weighted likelihood estimating equation turns out to be

The weighted likelihood estimator (WLE) of 𝜃 is a suitable root of the estimating Eq. (10).

5 Theoretical Properties of the Proposed Weighted Likelihood Estimator

In this paper, we have primarily focused on the methodology and application of the weighted likelihood method in the right-censoring scenario. The theoretical properties, viz., the influence function and the asymptotic distribution of the corresponding weighted likelihood estimators have been dealt with elsewhere by Biswas et al. (2018). Here, we briefly mention the results for the sake of completion and understanding. We first begin with the following important lemma.

Lemma 1.

The proposed weighted likelihood estimator is Fisher-consistent.

Using the above lemma, we write down the expression of the influence function under the right-censored set up as below.

Theorem 1 (Influence Function of the Proposed Estimator).

The influence function of the proposed weighted likelihood estimator is given by

where

and

where \( \mathcal {X}_{1} = \lbrace x: F_{\theta }(x) \leqslant 1/2 \rbrace \), \( \mathcal {X}_{2} = \lbrace x: F_{\theta }(x) > 1/2 \rbrace \), \( \mathcal {X} = \mathcal {X}_{1} \cup \mathcal {X}_{2} \), \( \bar {G}(x) = \mathbb {P}(T > x) \) under the true distribution G, and 𝜃g is the solution of the weighted likelihood estimating Eq. (10) under the true distribution G.

If the true distribution G belongs to the model, i.e., \( G = F_{\theta _{0}} \) for some 𝜃0 ∈Θ, then the influence function turns out to have the simpler form

which is nothing but the influence function of the maximum likelihood estimator.

To prove the asymptotic normality of the proposed weighted likelihood estimator under the right-censored scenario, we need the following assumptions. For simplicity the results are presented with a scalar parameter in mind; however they may be extended to the vector parameter case with appropriate generalizations in the notation.

-

The weight function H(τ) is non-negative, bounded and twice continuously differentiable such that H(0) = 1 and \( H^{\prime }(0) = 0 \).

-

The functions \( H^{\prime }(\tau )(1+\tau ) \) and \( H^{\prime \prime }(\tau )(1+\tau )^{2} \) are bounded. Further, \( H^{\prime \prime }(\tau ) \) is continuous in τ.

-

For every 𝜃0 ∈Θ, there is a neighbourhood N(𝜃0) such that for every 𝜃 ∈ N(𝜃0), the quantities \( \left |{\tilde {U}_{\theta }(x)\nabla U_{\theta }(x,\delta )}\right | \), \( \left |{\tilde {U}_{\theta }^{2}(x) U_{\theta }(x,\delta )}\right | \), \( \left |{\nabla \tilde {U}_{\theta }(x)U_{\theta }(x,\!\delta )}\right | \) and \( \left |{\nabla _{2} U_{\theta }(x,\!\delta )}\right | \) are bounded by M1(x),M2(x),M3(x) and M4(x) respectively, with \( \mathbb {E}_{\theta _{0}}[M_{i}(X)] < \infty \) for all i ∈{1,2,3,4}.

-

\( \mathbb {E}_{\theta _{0}}[\tilde {U}_{\theta }^{2}(X)U_{\theta }^{2}(X,{\Delta })] < \infty \) and \( \mathbb {E}_{\theta _{0}}[\nabla U^{2}_{\theta _{0}}(X,{\Delta })] < \infty \).

-

The analogue of Fisher information in the survival context, \( J(\theta ) = \mathbb {E}_{\theta }[U_{\theta }^{2}(X,{\Delta })] \) is non-zero and finite for all 𝜃 ∈Θ.

-

\( S_{C}(x) \geqslant S^{\epsilon }_{\theta _{0}}(x) \), for some 𝜖 > 0.

Here, the function \( \tilde {U}_{\theta } \) is defined as

We now present the main result in the following theorem.

Theorem 2.

Let the true distribution G of the failure time data belong to the model, with 𝜃0 being the true parameter value. Let \( \hat {\theta }_{n,\text {WLE}} \) be the proposed weighted likelihood estimator. Define

where ∇2 represents the second derivative with respect to 𝜃. Then, under Assumptions (A1)–(A6) listed above, the following results hold.

-

(a)

\( \sqrt {n}\left |{A_{n}(\theta _{0}) - \frac {1}{n}{\sum }_{i=1}^{n} U_{\theta _{0}}(X_{i},{\Delta }_{i})}\right | = o_{P}(1). \)

-

(b)

\( \left |{B_{n}(\theta _{0}) - \frac {1}{n}{\sum }_{i=1}^{n} \nabla U_{\theta _{0}}(X_{i},{\Delta }_{i})}\right | = o_{P}(1). \)

-

(c)

\( C_{n}(\theta ^{\prime }) = O_{P}(1), \) where \( \theta ^{\prime } \) lies on the line segment joining 𝜃0 and \( \hat {\theta }_{n,\text {WLE}} \).

Based on the above theorem, we present two very important corollaries in the following.

Corollary 1.

There exists a sequence \( \lbrace \hat {\theta }_{n, \text {WLE}}\rbrace _{n \in \mathbb {N}} \) of roots of the weighted likelihood estimating Eq. (10) such that

Corollary 2.

\( \sqrt {n}(\hat {\theta }_{n,\text {WLE}} - \theta _{0}) \overset {\mathcal {D}}{\longrightarrow } N(0, J^{-1}(\theta _{0})) \).

6 Analysis of Efron’s Data

This section is devoted to the statistical analyses of the Arm A data set considered by Efron (1988). While monitoring 51 of the patients from Arm A, it was found that 9 (about 18%) of the values were censored. In order to analyze these data, we will apply the proposed weighted likelihood method under the Weibull distribution model.

The Weibull density, with shape parameter b and scale parameter a, is given by

We have also considered the Weibull model (and some other models) for a brief simulation study in Section 7. The Eqs. (16) and (17) in Section 7.2 have to be iteratively solved to obtain the WLEs of a and b. In the present section, the weight function in Eq. (4) is chosen based on the gamma density function as defined by HGamma in Eq. (2), with the shape parameter β of the gamma density being the tuning constant. Throughout the present analysis and in the subsequent simulation studies as well, the tuning parameter p of the weighting scheme is fixed at 0.5. The resulting estimates of a and b (rounded up to 4th decimal places), for various choice of the initial values, are presented in Table 2.

The MLE of the parameter vector (b,a) for the fifty one observations of the Arm A data under the Weibull model is (0.9301,426.9934). A closer look at the WLEs in Arm A for varying tuning parameters reveals that there is an increasing pattern in the values of the shape parameter as the tuning constant β increases. This is true for all the initial values, viz., the MLE, the pair (1.44,257.33) and the pair (9.30,1427.04). The last two pairs are the MLEs of the first forty four observations and the last seven observations, respectively. Also, in general the roots are fairly close to the initial values in each case.

The final fitted weights, corresponding to all the observations for each of the three initial values are provided in Table 3 corresponding to the tuning parameter β = 1.05. The column ‘status’ indicates the censoring status, with ‘0’ indicating a censored observation. It may be observed that when the MLEs represent the set of initial values all the final fitted weights are close to 1 and the root is an MLE-like root. With the second set of initial values, the final fitted weights for the last seven observations are all equal to zero (rounded up to the fourth decimal place) although the first forty four observations get weights close to 1. The situation reverses for the third set of initial values, where all the first forty four observations are now sacrificed.

In Fig. 1, we plot the densities based on the MLE and the WLEs (1.4651, 251.4444) and (10.5922,1451.7040). We also report a kernel density estimate for the Kaplan-Meier estimator (Wand and Jones, 1994) in the same plot which uses the Kaplan-Meier masses of the forty two fully observed failure times in its construction. For the kernel density estimate, we use the bandwidth

where sd(⋅) and IQR(⋅) refer to the standard deviation and interquartile range of the data respectively and n is the sample size.

While Fig. 1 is useful in demonstrating that the two components WLE1 = (1.4651,251.4444) and WLE2 = (10.5922,1451.7040) represent two different segments of the data, these densities have to be appropriately scaled to highlight the degree of their match with the different patterns in the data. To facilitate this we consider the scaled densities corresponding to WLE1 and WLE2 in Fig. 2. To do this we multiply these two densities with the sum of the Kaplan-Meier masses for the observations getting non-zero weights in the corresponding fit. Thus we multiply the WLE1 density with the sum of the masses of the thirty nine failure times among the first forty four observations (the other five observations are censored); similarly the WLE2 density is scaled with the sum of the masses of the three failure times among the last seven observations. For future reference, we refer to these two scaling constants as γ and 1 − γ. In Fig. 2 we plot the kernel density estimate of the full data, as well as the two scaled densities. It is clearly seen that the scaled densities provide nice and close fits to the kernel density estimate in the two segments of the data. The peaks of both the components are very closely matched.

Since the fitted curves (or the scaled curves) corresponding to WLE1 and WLE2 are associated with Weibull densities, they are technically supported over the entire range \([0, \infty )\). For all practical purposes, however, the densities f1 and f2 corresponding to WLE1 and WLE2, respectively, are disjoint, as is observed in Figs. 1 and 2. In Fig. 3 we plot the mixture fmix = γf1 + (1 − γ)f2 of the two Weibull densities (with γ being the sum of the masses of the first component as defined earlier) and the two component Weibull mixture fit for the full data as reported in Basu et al. (2006). It is obvious that the two curves are extremely close to each other. Thus the mixture of the fits obtained by the two non MLE-like roots is entirely successful in replicating the fit of the mixture without getting into the (quite substantial) complications of Weibull mixture modelling in this case.

Mixture of the two densities corresponding to WLE1 = (1.4651,251.4444) and WLE2 = (10.5922,1451.7040) for Efron’s Arm A data, indicated as “Proposed” in the legend together with an estimated mixture density as reported in Basu et al. (2006)

7 Simulation Results

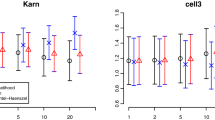

To study the performance of the estimators in a little more detail, we take up a simulation study. In the following we compare the empirical efficiency, i.e., the ratio of the mean squared error (MSE) of the MLE to the MSE of the WLE for different levels of contamination and censoring. Various values of the tuning parameters are also explored in this investigation.

We consider the following two distributions prevalent in modelling survival data.

-

(i)

The exponential distribution with rate λ, whose density is given by

$$ f_{\lambda}(x) = \lambda e^{-\lambda x}, ~~ \lambda > 0, x > 0. $$ -

(ii)

The Weibull distribution as described in Eq. (15).

We want to study the performance of the method when the data are both contaminated and censored. We take up the two distributions separately. In order to evaluate the empirical MSE for both the estimators (MLE and WLE), we draw 5000 samples with different levels (10% and 20%) of censoring and different proportions (0%, 10%, 15% and 20%) of contamination. It is to be noted that we have employed two different weighting functions for the definition of H(⋅) as discussed in Section 3 in the following analyses, viz., one based on the gamma density given by Eq. (2), and the other on the basis of the Weibull density as illustrated in Eq. (3). All the estimators corresponding to every sample are calculated for different values of the tuning constants.

7.1 Exponential Distribution

For ease of description, let us denote the exponential distribution with rate parameter λ by Exp(λ). The weighted likelihood estimating equation in this case boils down to

where wλ(xi) is as described in Eq. (10) with λ = 𝜃, and this leads to the solution

First we simulate from the Exp(5) distribution, with, say, 𝜖 proportion of contamination from Exp(1.5). We also introduce censoring at the appropriate proportion in our system. Let the failure time distribution T be Exp(λ); if the censoring variable C, independently of the failure time distribution, has distribution Exp(κ), and if the censoring proportion is ϱ, then, an evaluation of the expression

gives,

So, in case of 10 % censoring, we choose the rate parameter of the censoring distribution to be 5/9, and to be 5/4 for 20% censoring.

Tables 4 and 5 represent the empirical efficiencies of the WLE with respect to the MLE. As is evident from the tables and our theoretical intuitions, the efficiency is less than 1 at the 0% contamination, i.e., under pure data. The efficiency increases as the contamination increases, although the pattern with respect to increase in the tuning constant is not quite uniform. Under contamination, the efficiency decreases as we increase the censoring proportion. For each contamination level and weight function combination, the highest empirical efficiency is highlighted.

7.2 Weibull Distribution

For streamlining the following discussion, the Weibull density is denoted by W(b,a), where b is the shape parameter and a is the scale parameter. Here also, as before, we obtain the estimating equation for applying an algorithm similar to the Iteratively Reweighted Estimating Equation (IREE) algorithm (Basu and Lindsay, 2004). The equations turn out to be

We simulate pure data from W(5,2) distribution, in which we impose 𝜖 proportion of contamination from Exp(1.5) distribution. We choose Exp(λ(ϱ)) for censoring at proportion ϱ. As in the case of exponential distribution, we solve for λ(ϱ) and, in respect of the original W(5,2) data generating distribution, it turns out to be the solution of

which we solve numerically in order to obtain

Tables 6 and 7 display the empirical efficiencies. The increasing pattern of efficiency with increasing contamination remains the same. The other observations also are, in general, similar. The pure data efficiencies of the shape parameter appear to be lower than the scale parameter.

7.3 Half Cauchy Contamination

The simulation study presented in the previous subsections involves the exponential distribution as the contaminating distribution in both exponential and Weibull models. As advised by a referee, we have further investigated the performance of the weighted likelihood estimators of the parameters in these two models when the contaminating distribution is the one-sided Cauchy distribution with parameters (μ,σ) with the probability density function given by

We undergo the same simulation study under this modified contamination. To make the distribution comparable with the exponential contamination, we choose μ = 0 and σ = 1/π. The results are summarized in Tables 8, 9, 10 and 11. The patterns are, on the whole, similar to the exponential contamination patterns.

8 Selection of the Tuning Parameter

In the analysis of the Efron data set in Section 6 and the simulation studies in Section 7 we employ both the Gamma and Weibull type weights with a variety of tuning parameters for the evaluation of the weighted likelihood estimates. While it is our experience that the behaviour of the estimator only differs marginally over the choice of the weight function type (Gamma, Weibull or other) with comparable tuning parameter, the tuning parameter itself has a much more pronounced impact on the estimate. In general, the finite sample efficiency of the estimator appears to decrease with increase in the value of the tuning parameter β. On the other hand, larger values of β appear to give greater stability under data contamination. It is, therefore, extremely important to choose the tuning parameter judiciously. One would want to choose a larger tuning parameter for stability in estimation only if it is necessary.

In connection with choosing the optimal tuning parameter for the minimum divergence estimator based on the density power divergence (DPD; Basu et al., 1998), an algorithm was presented by Warwick and Jones (2005) which minimizes an empirical measure of mean squared error (MSE); also see Basak et al. (2019). In calculating this empirical measure of the MSE for any given real data set, the Warwick-Jones algorithm exploits the theoretical form of the asymptotic variance of the estimator, the empirical distribution, and a robust pilot estimator for the computation of the bias. Then the empirical mean squared error is the estimated, summed, asymptotic variance plus the estimated squared bias computed against an initial robust pilot estimator.

In this paper, we propose to extend this idea to make it suitable for our situation. Notice that unlike the case of the DPD, where the asymptotic variance was a function of the tuning parameter α, our estimators are all first-order efficient, and hence there is no asymptotic discrimination between the estimators in terms of the variance. The finite sample variance varies quite a bit, however, and we try to make use of this fact in the development of our algorithm, which proceeds along the following steps for any of our weight functions which depends on a single parameter β, when a real data set of size n is available.

-

1.

We choose a fine sequence of values of the tuning parameter β over the appropriate range.

-

2.

We select B bootstrap samples of the same size n from the given data set.

-

3.

We compute the weighted likelihood estimators of the parameters for each bootstrap sample and each value of β in our sequence.

-

4.

For each value of β, the variance of the estimator is calculated as the observed variance of the parameter estimates over the bootstrap samples. This is following the idea of Léger and Romano (1990) to consider the bootstrap estimate of the variance.

-

5.

We compute the empirical mean squared error at any given value of β as the sum of the estimated bootstrap variances at that β and the squared bias estimated as the squared deviation of the estimator for that β against a suitable pilot estimator.

-

6.

The optimal value of the tuning parameter is then chosen as the value of β which minimizes the above criterion.

In case of the Efron data (Arm A), we illustrate our tuning parameter selection technique with Gamma weights. Here we have used a fine sequence of β values between 1.00 and 1.05. We restrict the upper limit of the tuning parameter at 1.05 because for larger values of β the pure data efficiency of the WLEs appears to be unacceptably low, particularly for the shape parameter estimate. Note that the sample size (51) for Efron data (Arm A) is approximately equal to the sample size (50) used in our simulations as reported in Tables 4 to 11. The optimal value of β through the above exercise is found to be 1.05 for the Efron (Arm A) data, indicating a strongly robust estimator is needed in this case. Interestingly, if we were to exclude the seven large observations of this data set (i.e., the observations belonging to the second cluster), the optimal value of the tuning parameter turns out to be 1.00 which generates the MLE of this truncated group. In both cases, the pilot estimator was the WLE at β = 1.05 with Gamma weights. Thus, at least in this example, the algorithm suggested by us appears to be successful in detecting where a robust estimator is necessary and where it is not. A further refinement of the above procedure is possible which selects the tuning parameter adaptively by letting the optimal solution at any stage be the pilot for the next iteration; see Basak et al. (2019). We hope to pursue such a procedure in the future.

9 Concluding Remarks

This article has been devoted to analyzing right-censored survival data by adapting a weighted likelihood approach. Studies of various theoretical properties of this newly devised estimator have been provided in Biswas et al. (2018). While obtaining the numerical findings, it has been noticed that the convergence of the IREE type algorithm (Basu and Lindsay, 2004) heavily depends upon the choice of the starting values, and, to a lesser extent, on the value of the tuning parameter. A suitable robust estimator should be employed for the quick convergence of the IREE type algorithm.

The scope of this method has been illustrated, apart from simulation, through Efron’s (Efron, 1988) head-and-neck cancer data. In Efron’s data (Arm A), the two clusters in the data were easily separable, and, consequently, the roots representing these clusters could be easily identified. In general, we need some algorithm to uncover all the roots of the system. For this we recommend the bootstrap root search algorithm as described in Markatou et al. (1998).

The problem of early failure in reliability theory is a common one and seems an area worth exploring for the purpose of applying robust statistical methods. In this situation, failure of some items may occur at a very primary period of the experiment. These observations, we suspect, are exceptional when considering the appropriate statistical model. We wish to take up the problem of analyzing data with early failures in a subsequent work.

Another possible approach of study, which could be useful for the situation considered in this paper, was suggested by a referee. This would involve performing an initial cluster analysis, followed by an application of the weighted likelihood method of estimation for each of the identified clusters. This would, however, require a robust clustering technique appropriate for right-censored data. We hope to take it up in a sequel paper.

References

Basak, S, Basu, A and Jones, MC (2019). On the “optimal” density power divergence tuning parameter. Tech. Rep. ISRU/2019/1, Interdisciplinary Statistical Research Unit, Indian Statistical Institute. https://www.isical.ac.in/isru/tr19a.pdf.

Basu, A. and Lindsay, B.G. (2004). The iteratively reweighted estimating equation in minimum distance problems. Computational Statistics & Data Analysis 45, 2, 105–124.

Basu, A., Harris, I.R., Hjort, N.L. and Jones, M.C. (1998). Robust and efficient estimation by minimising a density power divergence. Biometrika 85, 3, 549–559.

Basu, S., Basu, A. and Jones, M.C. (2006). Robust and efficient parametric estimation for censored survival data. Ann. Inst. Stat. Math. 58, 2, 341–355.

Biswas, A., Roy, T., Majumder, S. and Basu, A. (2015). A new weighted likelihood approach. Stat 4, 1, 97–107.

Biswas, A., Guha Niyogi, P., Majumder, S., Bhandari, S.K., Ghosh, A. and Basu, A. (2018). Theoretical properties of a new weighted likelihood estimator for right censored data. Tech. Rep. ISRU/2018/2, Interdisciplinary Statistical Research Unit, Indian Statistical Institute. https://www.isical.ac.in/isru/tr18a.pdf.

Efron, B. (1988). Logistic regression, survival analysis, and the kaplan-Meier curve. J. Am. Stat. Assoc. 83, 402, 414–425.

Kaplan, E.L. and Meier, P. (1958). Nonparametric estimation from incomplete observations. J. Am. Stat. Assoc. 53, 282, 457–481.

Léger, C. and Romano, J.P. (1990). Bootstrap choice of tuning parameters. Ann. Inst. Stat. Math. 42, 4, 709–735.

Lindsay, B.G. (1994). Efficiency versus robustness: The case for minimum Hellinger distance and related methods. Ann. Stat. 22, 2, 1081–1114.

Majumder, S, Biswas, A, Roy, T, Bhandari, S and Basu, A (2016). Statistical inference based on a new weighted likelihood approach. arXiv:161007949.

Markatou, M., Basu, A. and Lindsay, B.G. (1997). Weighted likelihood estimating equations: the discrete case with applications to logistic regression. Journal of Statistical Planning and Inference 57, 2, 215–232.

Markatou, M., Basu, A. and Lindsay, B.G. (1998). Weighted likelihood equations with bootstrap root search. J. Am. Stat. Assoc. 93, 442, 740–750.

Wand, M.P. and Jones, M.C. (1994). Kernel Smoothing. CRC Press.

Warwick, J. and Jones, M.C. (2005). Choosing a robustness tuning parameter. J. Stat. Comput. Simul. 75, 7, 581–588.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Biswas, A., Majumder, S., Niyogi, P.G. et al. A Weighted Likelihood Approach to Problems in Survival Data. Sankhya B 83, 466–492 (2021). https://doi.org/10.1007/s13571-019-00214-w

Received:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s13571-019-00214-w

Keywords

- Censored survival data

- Kaplan-Meier estimator

- Root selection

- Weibull distribution

- Weighted likelihood estimation