Abstract

Automated recognition of daily human activities is an emerging approach to the continuous health monitoring of elderly people. Mobile devices such as smartphones and smartwatches now carry a variety of sensors, so activity classification algorithms implemented as mobile software have become a useful, low-cost, and non-invasive diagnostic modality. This article introduces a new deep learning structure for recognizing challenging (i.e. similar) human activities from signals recorded by sensors mounted on mobile devices. In the proposed structure, the residual network concept is used as a substructure inside the main structure. This substructure addresses the problem of accuracy saturation in convolutional neural networks: its ability to jump over some layers reduces the vanishing-gradient effect. As a result, the proposed structure increases the classification accuracy for several activities. The performance of the proposed method is evaluated on real-life recorded signals and compared with existing techniques in two different scenarios. The proposed structure is applied to two well-known human activity datasets prepared at Fordham University. The first dataset contains signals recorded from six activities: walking, jogging, upstairs, downstairs, sitting, and standing. The second dataset contains walking, jogging, stairs, sitting, standing, eating soup, eating sandwich, and eating chips. In the first scenario, the performance of the proposed structure is compared with deep learning schemes. The results show that, on the first dataset, the proposed method may improve the recognition rate of challenging activities (i.e. downstairs and upstairs) by about 5% over alternatives from its own family.
For the second dataset, similar improvements are obtained for some challenging activities (i.e. eating sandwich and eating chips). The advantage reaches at least 28% when the proposed method's recognition of downstairs and upstairs on the first dataset is compared with methods outside its family. The higher recognition rate of the proposed method for challenging activities (i.e. downstairs and upstairs, eating sandwich and eating chips), together with its acceptable performance for the other, non-challenging activities, shows its effectiveness in mobile sensor-based health monitoring systems.


1 Introduction

Monitoring the activities of elderly people who live alone is one of the important issues in modern electronic healthcare [1]. Recognizing and distinguishing these activities supports several applications, including assistive living, rehabilitation, and surveillance. Although video-based monitoring is the simplest approach to human activity recognition, it has received less attention because of its privacy-invasive nature. Recent advances in Micro Electro Mechanical Systems (MEMS) have produced low-cost, small sensors that are widely used in smartphones, smartwatches, and healthcare devices. Some of these sensors (for example, accelerometers and gyroscopes) enable smartphones and smartwatches to serve as equipment for monitoring human activities [2]. Because the signals captured from different activities of a person can be very similar (for example, upstairs and downstairs, or different eating activities), discriminating between several human activities remains a challenging classification problem. In addition, the large amount of recorded data increases the computational cost of recognition algorithms.

Various linear and nonlinear classification schemes have been proposed to address these limitations. Their main objective is to obtain acceptable accuracy, especially as the similarity between activities increases. Some early approaches distinguish activities based on simple features such as the mean [3,4,5] or variance [6]. Although such methods give acceptable results for several simple activities (e.g. standing, sitting, and running), their results for some complicated activities (e.g. stretching and riding an elevator) are not satisfactory.
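The simple statistical features mentioned above can be illustrated with a short Python sketch that computes the per-axis mean and variance of one window of tri-axial accelerometer samples. The function name `window_features`, the list-of-tuples input, and the use of population variance are assumptions for illustration; the cited studies [3–6] may define their features differently.

```python
from statistics import fmean, pvariance


def window_features(window):
    """Return [mean_x, mean_y, mean_z, var_x, var_y, var_z] for one window.

    `window` is a hypothetical list of (x, y, z) accelerometer samples.
    """
    axes = list(zip(*window))                       # three per-axis sequences
    means = [fmean(axis) for axis in axes]
    variances = [pvariance(axis) for axis in axes]  # population variance
    return means + variances


# Toy window: constant x, alternating y, linearly increasing z.
toy = [(1.0, 0.0, 0.0), (1.0, 2.0, 1.0), (1.0, 0.0, 2.0), (1.0, 2.0, 3.0)]
print(window_features(toy))  # [1.0, 1.0, 1.5, 0.0, 1.0, 1.25]
```

A nearly constant window (as in sitting) yields near-zero variance, whereas windows from similar activities such as upstairs and downstairs can share close means and variances, which is why such features struggle on similar activities.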

Some studies use the Fourier transform domain to extract features that may distinguish between similar human activities. These features reflect the frequency-domain properties of activities, so they can differentiate activities by their frequency content. Unfortunately, this family of methods does not achieve sufficient accuracy and has a high computational cost.
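A minimal sketch of one frequency-domain feature, assuming a naive discrete Fourier transform and using the dominant (strongest non-DC) frequency as the feature. The 20 Hz sampling rate and the 2 Hz test oscillation are hypothetical values chosen for the example, and the helper names are illustrative rather than taken from any cited method. The naive transform costs O(N²) per window; practical code would use an FFT.

```python
import cmath
import math


def dft_magnitudes(signal):
    """Magnitudes of the one-sided discrete Fourier transform (naive O(N^2))."""
    n = len(signal)
    return [
        abs(sum(signal[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
        for k in range(n // 2 + 1)
    ]


def dominant_frequency(signal, sampling_rate_hz):
    """Frequency in Hz of the strongest component, ignoring bin 0 (the mean)."""
    mags = dft_magnitudes(signal)
    k = max(range(1, len(mags)), key=lambda i: mags[i])
    return k * sampling_rate_hz / len(signal)


# Hypothetical 2 Hz oscillation sampled at 20 Hz for 90 samples.
fs = 20.0
sig = [math.sin(2 * math.pi * 2.0 * t / fs) for t in range(90)]
print(dominant_frequency(sig, fs))  # 2.0
```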

In some studies [7], a support vector machine (SVM) with Gaussian kernels is used as the classifier. This type of kernel gives more flexible decision boundaries and ultimately increases the recognition accuracy for several activities. However, selecting the SVM parameters is very challenging, because the resulting accuracy depends strongly on them.
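To show why the kernel parameter matters, here is a sketch of the Gaussian (RBF) kernel k(a, b) = exp(-γ‖a - b‖²). The values of γ (`gamma`) are chosen only for illustration: a larger γ makes the similarity between the same two points drop much faster, which reshapes the SVM decision boundary and is one reason parameter selection is delicate.

```python
import math


def gaussian_kernel(a, b, gamma=0.5):
    """Gaussian (RBF) kernel: exp(-gamma * squared Euclidean distance)."""
    sq_dist = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return math.exp(-gamma * sq_dist)


print(gaussian_kernel([0.0, 0.0], [0.0, 0.0]))             # 1.0 for identical points
print(gaussian_kernel([0.0, 0.0], [1.0, 1.0]))             # exp(-1), about 0.368
print(gaussian_kernel([0.0, 0.0], [1.0, 1.0], gamma=5.0))  # exp(-10), about 4.5e-05
```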

Some more sophisticated methods classify activities by constructing a Hidden Markov Model (HMM) [8]. Although this technique has shown better results than many older alternatives, its performance depends strongly on the quantity and quality of the features extracted from the recorded signals.

With recent advances in processors with high computational power, deep neural networks have attracted much attention as an effective paradigm for overcoming the challenges of human activity recognition. In this approach, a deep neural network learns features directly from its raw input data instead of relying on hand-crafted features [9]. Furthermore, deep neural networks learn multiple levels of representation of the data. This multi-level representation, together with their deep architecture (several processing layers), enables them to obtain more accurate results [9]. Deep convolutional neural networks (CNNs) come from deep learning theory, which relies on large-scale data and different types of layers. One part of this structure extracts discriminative features from the input data, while the other part classifies the data based on the extracted features. Owing to these abilities, deep convolutional networks have been widely used in recent years to separate human activities, in standard, partial, and full weight-sharing versions (e.g. partial weight sharing in the first convolutional layer and full weight sharing in the second convolutional layer) [10]. Unfortunately, temporal dependency, the main characteristic of human activity signals, is not addressed in the classic CNN, which hampers its performance in human activity recognition. Complementary studies have therefore incorporated the temporal dependency of the data into the solution. Some studies used recurrent neural networks (RNNs) to account for the time dependence of human activities when constructing deep neural networks [11]. More complex solutions introduced several combinations of CNN and long short-term memory (LSTM) in order to extract temporal and local features simultaneously [12].
Although these methods have improved the results of human activity recognition systems, accuracy saturation remains an important limiting factor in this application.

In this paper, a new method is introduced to better distinguish different human activities. The proposed algorithm aims to minimize the accuracy saturation phenomenon while improving the optimization ability of LSTM-CNN. To this end, the temporal deep learning scheme is modified using the concept of the residual network. The resulting architecture uses shortcuts to jump over some layers, thanks to the residual blocks in its body. The vanishing gradient problem is therefore addressed by reusing activations from a previous layer until the layer next to the current one has learned its weights. Consequently, the problem of accuracy saturation is greatly reduced. The paper is organized as follows. Sect. 2 describes the proposed approach, including the deep neural network structure and the classification of activities. In Sect. 3, the datasets and pre-processing are described, and the performance of the proposed method is evaluated by comparing its results with those of deep learning methods. In Sect. 4, the results of the proposed scheme are compared with those of non-deep techniques. Finally, conclusions are presented in the last section of the paper.

2 Methods

This section describes the details of the proposed method. First, the LSTM-CNN deep structure is introduced; then the residual network is applied to make the deep structure more robust against the vanishing gradient problem and to improve the optimization performance of the network through identity mapping. Finally, classification is performed to distinguish the human activities.

2.1 Convolutional neural network

A convolutional neural network (CNN) is a kind of deep neural network with high potential for extracting high-level features. Feature extraction is performed in the so-called convolutional layers of the CNN [12] by convolution kernels followed by nonlinear activation functions. Because the same kernels are applied regardless of feature position, CNNs are largely insensitive to where a feature occurs (translation invariance). Suppose the input activity signal is denoted by \(x_{i}^{0}\) (Eq. 1).

Here, \(x_{i}^{0}\) may be represented by a matrix of size \(3 \times N\), where N refers to the number of incorporated accelerometers. In the same manner, the input of the k-th convolutional layer consists of the feature maps \(Z_{i,j}\), as demonstrated in Fig. 1. The component at location (i, j) in the k-th layer and l-th feature map may then be computed by Eq. (2),

where σ is the activation function and \(k^{\prime }\) is the number of feature maps in the \((l - 1){\text{th}}\) layer, with kernel size \(X \times Y\). Furthermore, w represents the weight matrix and b the bias.
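The convolution described above can be sketched in plain Python. The sketch computes one output feature map as \(z_{i,j} = \sigma\big(b + \sum_{m=1}^{k'} \sum_{x=0}^{X-1} \sum_{y=0}^{Y-1} w_{m,x,y}\, z^{(l-1)}_{m,\,i+x,\,j+y}\big)\), the standard form implied by the symbols defined in the text. The article's own Eq. (2) is not reproduced here, so the exact indexing, the "valid" (no padding) boundary handling, and the choice of ReLU for σ are assumptions.

```python
def relu(v):
    """Rectified linear unit, used here as the activation function sigma."""
    return max(0.0, v)


def conv_feature_map(prev_maps, kernels, bias, activation=relu):
    """Compute one output feature map from k' input maps ("valid" convolution).

    prev_maps: list of k' input feature maps, each a list of H rows of W values.
    kernels:   list of k' kernels (one per input map), each X rows of Y weights.
    """
    H, W = len(prev_maps[0]), len(prev_maps[0][0])
    X, Y = len(kernels[0]), len(kernels[0][0])
    out = []
    for i in range(H - X + 1):
        row = []
        for j in range(W - Y + 1):
            total = bias
            # Sum over input maps m, kernel rows x, and kernel columns y.
            for fmap, w in zip(prev_maps, kernels):
                for x in range(X):
                    for y in range(Y):
                        total += w[x][y] * fmap[i + x][j + y]
            row.append(activation(total))
        out.append(row)
    return out


# One input map: 3 accelerometer axes (rows) by 4 time steps (columns).
signal = [[1.0, 2.0, 3.0, 4.0],
          [0.0, 0.0, 0.0, 0.0],
          [1.0, 1.0, 1.0, 1.0]]
kernel = [[1.0, 1.0], [1.0, 1.0], [1.0, 1.0]]  # spans all axes, 2 time steps
print(conv_feature_map([signal], [kernel], bias=0.0))   # [[5.0, 7.0, 9.0]]
print(conv_feature_map([signal], [kernel], bias=-6.0))  # [[0.0, 1.0, 3.0]]
```

Because this kernel spans all three axes, it slides only along time and produces a 1 × 3 map; this layout is one common choice for accelerometer data, not necessarily the configuration listed in Table 2.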

Finally, a fully connected (dense) layer maps all feature maps to the activity classes. This dense layer has as many nodes as there are activity classes and uses the so-called softmax function (Eq. 3).
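A numerically stable softmax, \(p_c = e^{z_c} / \sum_{k} e^{z_k}\), can be sketched as follows; subtracting the largest logit before exponentiating leaves the result unchanged but avoids overflow. The logits below are made-up values for the six classes of the first dataset, not outputs of the trained network.

```python
import math


def softmax(logits):
    """Numerically stable softmax: exp(z_c - max z) / sum_k exp(z_k - max z)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical dense-layer outputs for the six classes of the first dataset.
classes = ["walking", "jogging", "upstairs", "downstairs", "sitting", "standing"]
probs = softmax([2.0, 0.5, 1.0, 0.9, -1.0, -1.2])
print(classes[probs.index(max(probs))])  # walking
```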

2.2 Long short-term memory

Feedforward networks treat all inputs and outputs as independent elements, which is not a valid assumption for time-sequence phenomena such as human activity signals. To overcome this limitation, recurrent neural networks (RNNs) are used; they have great potential for modelling temporal dependencies thanks to their recurrent unit, which serves as a memory. The main challenges of the classic RNN are the vanishing gradient [13] and its limited memory [14], which restrict its ability to capture long-term temporal dependencies. This weakness may seriously reduce the effectiveness of RNNs in modelling long time series of human activity signals.

Long short-term memory (LSTM) is a type of recurrent network designed to solve the vanishing gradient problem mentioned above [15]. In this network, a memory cell stores the information instead of the simple recurrent unit. Memory cells are constructed and updated through three main gates: write (controlling input information), read (controlling output information), and reset (forgetting useless information) [15], as demonstrated in Fig. 2.

The functionality of the LSTM shown in Fig. 2 may be described in detail by Eqs. (4–8).
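For reference, the five equations of the widely used LSTM cell with a forget gate are given below. The article's own Eqs. (4–8) are not reproduced in this text, so the weight names \(W\), the biases \(b\), and the peephole-free form are assumptions that may differ from the original notation. Here σ is the logistic sigmoid, ⊙ is element-wise multiplication, \(x_t\) is the input at time t, and \(h_t\) is the hidden state.

\[
\begin{aligned}
i_t &= \sigma\left(W_{xi} x_t + W_{hi} h_{t-1} + b_i\right)\\
f_t &= \sigma\left(W_{xf} x_t + W_{hf} h_{t-1} + b_f\right)\\
c_t &= f_t \odot c_{t-1} + i_t \odot \tanh\left(W_{xc} x_t + W_{hc} h_{t-1} + b_c\right)\\
o_t &= \sigma\left(W_{xo} x_t + W_{ho} h_{t-1} + b_o\right)\\
h_t &= o_t \odot \tanh\left(c_t\right)
\end{aligned}
\]

In this form, the forget gate \(f_t\) plays the role of the reset gate, \(i_t\) the write gate, and \(o_t\) the read gate.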

In these equations, i, f, o, and c represent the input gate, forget gate, output gate, and cell activation, respectively. The combination of CNN and LSTM may be used to model local and temporal dependencies in long time-series signals [12].
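The gate logic can be sketched as one time step of a scalar LSTM cell in Python, assuming the widely used formulation with input, forget, and output gates, a tanh candidate, and no peephole connections. The parameter names (`w_*`, `u_*`, `b_*`) are hypothetical, and a real layer uses weight matrices and vectors rather than scalars. The example saturates the forget gate open and the input gate shut, showing how the cell can carry a value unchanged across time steps.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def lstm_step(x_t, h_prev, c_prev, p):
    """One time step of a scalar LSTM cell; returns (h_t, c_t).

    p holds hypothetical scalar weights: w_* multiply the input, u_* the
    previous hidden state, and b_* are biases, for gates i, f, o and candidate g.
    """
    i = sigmoid(p["w_i"] * x_t + p["u_i"] * h_prev + p["b_i"])    # input (write) gate
    f = sigmoid(p["w_f"] * x_t + p["u_f"] * h_prev + p["b_f"])    # forget (reset) gate
    o = sigmoid(p["w_o"] * x_t + p["u_o"] * h_prev + p["b_o"])    # output (read) gate
    g = math.tanh(p["w_g"] * x_t + p["u_g"] * h_prev + p["b_g"])  # candidate content
    c_t = f * c_prev + i * g      # keep part of the old memory, write new content
    h_t = o * math.tanh(c_t)      # expose a gated view of the memory
    return h_t, c_t


names = ("w_i", "u_i", "b_i", "w_f", "u_f", "b_f",
         "w_o", "u_o", "b_o", "w_g", "u_g", "b_g")
params = {name: 0.0 for name in names}
params.update(b_f=50.0, b_i=-50.0)  # forget gate ~1 (keep), input gate ~0 (no write)

h, c = 0.0, 0.8
for x in [1.0, -2.0, 0.5]:
    h, c = lstm_step(x, h, c, params)
print(round(c, 6))  # 0.8: the stored value survives three steps
```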

2.3 Batch normalization

In general, changes in the input distribution may cause several problems in the learning process of deep neural networks [16]. Moreover, because of the nonlinear, deep structure of a CNN, any variation in the input of a layer appears as more intense changes in the next layer [17]. To reduce the impact of this unfavourable phenomenon, normalization is widely used between successive layers [9, 18, 19]. In this research, Batch Normalization (BN) is applied to reduce the internal covariate shift between layers while also increasing learning speed [20], as illustrated in Eq. (9) and Fig. 3.

In Eq. (9), \(\gamma\) and \(\beta\) are learnable parameters that scale and shift the normalized values.
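A minimal sketch of the standard training-time batch-normalization transform for a single feature, \(\hat{x}_k = (x_k - \mu_B)/\sqrt{\sigma_B^2 + \epsilon}\) followed by \(y_k = \gamma \hat{x}_k + \beta\), where \(\mu_B\) and \(\sigma_B^2\) are the mini-batch mean and variance. The value of \(\epsilon\) is an assumed small constant for numerical stability, and inference-time behaviour (running averages) is not shown.

```python
import math


def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature across a mini-batch, then scale by gamma and shift by beta."""
    n = len(batch)
    mean = sum(batch) / n
    var = sum((x - mean) ** 2 for x in batch) / n  # biased (population) variance
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in batch]


out = batch_norm([2.0, 4.0, 6.0, 8.0])
print([round(v, 3) for v in out])  # [-1.342, -0.447, 0.447, 1.342]
```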

2.4 Residual network

Although increasing depth allows a CNN to build a better-fitted model between input and output [20], such deep structures face some limitations in training. One important problem is the vanishing/exploding gradient [19, 21], which becomes more severe as more layers are stacked [22]. A related problem is degradation: as network depth increases, accuracy saturates and then degrades rapidly. This suggests that a stack of nonlinear layers may have difficulty approximating the identity mapping [22].

In this research, the residual network [22] is incorporated into the combination of CNN and LSTM structures (i.e. ConvLSTM) to overcome the above-mentioned problem. This solution uses parameter-free connections (identity shortcuts) that connect the input of a group of layers to its output, as shown in Fig. 4.

These shortcut connections make the network easier to optimize. Because such direct input–output connections let the signal skip some layers, they enable the deep network to overcome accuracy saturation and overfitting problems. As shown in Fig. 4, the shortcut connections make it easier for the solver to approximate the identity function: if the identity mapping is optimal, the stacked layers only need to drive their residual toward zero.
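The benefit of the identity shortcut can be made explicit with the residual formulation of [22], written here for a block whose input \(\mathbf{x}\) and output \(\mathbf{y}\) have the same shape (an assumption; a projection is needed otherwise), with \(\mathcal{F}\) the mapping learned by the skipped layers and \(\mathcal{L}\) the training loss:

\[
\begin{aligned}
\mathbf{y} &= \mathcal{F}\left(\mathbf{x}, \{W_i\}\right) + \mathbf{x},\\
\frac{\partial \mathcal{L}}{\partial \mathbf{x}} &= \frac{\partial \mathcal{L}}{\partial \mathbf{y}} \left( \frac{\partial \mathcal{F}}{\partial \mathbf{x}} + I \right).
\end{aligned}
\]

Because of the identity term \(I\), the gradient reaching \(\mathbf{x}\) need not vanish even when \(\partial \mathcal{F}/\partial \mathbf{x}\) is small, and an identity mapping is obtained simply by driving \(\mathcal{F}\) toward zero.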

Fig. 5 shows the final structure of the proposed network. The convolutional layers extract local features, as feature maps, from the 3-axis accelerometer of the mobile device, and the LSTM layers model the temporal dependency in these feature maps. In parallel with feature extraction, BN layers are applied between layers to reduce the variance of each layer's input. Finally, the fully connected layer maps the output of the ConvLSTM to the activity classes (six for the first dataset), as shown at the right end of Fig. 5.

The final structure of the proposed method: three CNN structures, each including two convolution layers and two BN layers. Residual shortcuts connect the first CNN output to the output of the last BN layer in each CNN section. Two LSTM layers model the temporal dependencies. Finally, the fully connected layer with a softmax function maps the features to the desired activity classes.
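A toy Python sketch of an identity-shortcut block, output = F(x) + x. The branch F stands in for the convolution and BN layers of one CNN section; `scaled_layer` and `compose` are hypothetical helpers that replace real layers with simple scalings, and matching input and output shapes are assumed. The example illustrates the identity-mapping argument: with all branch weights at zero, a plain stack of layers outputs zeros, whereas the residual block passes its input through unchanged.

```python
def residual_block(x, branch):
    """Return branch(x) + x element-wise (identity shortcut, same shape assumed)."""
    fx = branch(x)
    if len(fx) != len(x):
        raise ValueError("identity shortcut needs matching shapes")
    return [a + b for a, b in zip(fx, x)]


def scaled_layer(scale):
    """Hypothetical stand-in for a conv + BN sub-layer: multiplies every value."""
    return lambda v: [scale * e for e in v]


def compose(*layers):
    """Chain layers so that the output of one feeds the next."""
    def run(v):
        for layer in layers:
            v = layer(v)
        return v
    return run


x = [1.0, -2.0, 3.0]
zero_branch = compose(scaled_layer(0.0), scaled_layer(0.0))
print(zero_branch(x))                  # plain stack with zero weights: all zeros
print(residual_block(x, zero_branch))  # residual block: [1.0, -2.0, 3.0]
```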

The complete procedure of the proposed method is given as pseudocode in Fig. 6.

3 Results

The proposed method was applied to two datasets from the Wireless Sensor Data Mining (WISDM) Lab [23, WISDM2], which contain over one million and 15 million raw time-series samples, respectively. For the first dataset, the signals were captured by the smartphone 3-axis accelerometer of 36 volunteers; the second dataset contains accelerometer data from a smartphone and a smartwatch, recorded while 51 subjects performed 18 activities. The smartphone was placed in the right pocket of each volunteer's pants to maximize the robustness of the recorded signals.

The first dataset includes six activities: walking, jogging, upstairs, downstairs, standing, and sitting, each captured over 10 min. From the second dataset, we chose eight activities recorded by the smartwatch accelerometer: walking, jogging, stairs, standing, sitting, eating soup, eating sandwich, and eating chips. In the first dataset, the amount of data for the different activities is not equal, because some users did not perform some of the activities due to physical restrictions. Furthermore, some activities (i.e. sitting and standing) were limited to only a few minutes, because the data were expected to remain almost constant over time. More information about this dataset may be found in Table 1.

Figs. 7 and 8 show sample signals from both datasets: upstairs and downstairs activities from the first dataset and two different eating activities from the second dataset, respectively. These figures show no clear difference between the signals recorded for downstairs and upstairs in the first dataset, or between eating sandwich and eating chips in the second. This illustrates why recognizing these activities is considered a challenge in the domain of human activity recognition.

By contrast, the eating soup signal is more distinguishable from the other two eating activities, as shown in Fig. 9.

The proposed method was implemented in the TensorFlow framework and run on tensor processing unit (TPU) hardware provided through Google Colaboratory. For comparison, three deep learning-based structures were implemented: (a) a basic CNN, (b) a combination of CNN and LSTM, called ConvLSTM for brevity in the rest of the article, and (c) ConvLSTM modified by the residual network (ResNet) concept, i.e. the proposed method, called ConvLSTM + ResNet for brevity in the rest of the article. The accuracy of each method was obtained on the same dataset and reported to evaluate the performance of the examined algorithms.
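The per-activity accuracies reported in the following tables can be computed from window-level predictions as sketched below, assuming that an activity's accuracy is the percentage of its windows that were classified correctly (i.e. per-class recall). The text does not state the exact definition, and the labels are hypothetical.

```python
from collections import Counter


def per_class_accuracy(y_true, y_pred):
    """Percentage of windows of each true class that were predicted correctly."""
    totals = Counter(y_true)
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {c: 100.0 * correct[c] / totals[c] for c in totals}


# Hypothetical labels for eight windows.
y_true = ["upstairs", "upstairs", "upstairs", "upstairs",
          "downstairs", "downstairs", "walking", "walking"]
y_pred = ["upstairs", "downstairs", "upstairs", "upstairs",
          "downstairs", "upstairs", "walking", "walking"]
print(per_class_accuracy(y_true, y_pred))
# {'upstairs': 75.0, 'downstairs': 50.0, 'walking': 100.0}
```

In this toy example, one upstairs window is labelled downstairs and one downstairs window is labelled upstairs; such confusion between similar activities lowers the accuracy of both classes.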

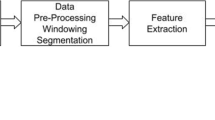

The first step of human activity recognition is data segmentation, and the most common approach is a sliding window. In this paper, a window size of 90 samples with 50% overlap was used.
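The segmentation step can be sketched as follows: with a window of 90 samples and 50% overlap, consecutive windows start 45 samples apart. Dropping a trailing partial window and the helper name `sliding_windows` are assumptions; the text does not say how incomplete windows or window labels are handled.

```python
def sliding_windows(samples, size=90, overlap=0.5):
    """Split a sequence into fixed-size windows with fractional overlap.

    The step is size * (1 - overlap), i.e. 45 samples for the defaults;
    a trailing partial window is dropped.
    """
    step = int(size * (1 - overlap))
    return [samples[s:s + size] for s in range(0, len(samples) - size + 1, step)]


signal = list(range(300))            # hypothetical 300-sample recording
windows = sliding_windows(signal)
print(len(windows), windows[1][0])   # 5 45
```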

Three different structures were examined in this contribution, and for each of them the best configuration (number of layers and hyperparameters) was determined; Table 2 shows the main parameters of the examined structures. For each training run, the weights were initialized randomly, and training used the stochastic gradient descent (SGD) optimizer [24] with a momentum of 0.9, an initial learning rate of 0.01, and a decay of 50% every 10 epochs.
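The optimizer settings can be sketched as a step-decay schedule plus the classical momentum update, assuming the 50% decay is applied as a discrete step every 10 epochs (epochs counted from 0) rather than continuously. The quadratic loss \(L(w) = w^2\) in the example is a hypothetical stand-in used only to show the update converging.

```python
def learning_rate(epoch, initial=0.01, drop=0.5, every=10):
    """Step decay: multiply the rate by `drop` once every `every` epochs."""
    return initial * drop ** (epoch // every)


def sgd_momentum_step(w, grad, velocity, lr, momentum=0.9):
    """Classical (non-Nesterov) momentum update; returns (new_w, new_velocity)."""
    velocity = momentum * velocity - lr * grad
    return w + velocity, velocity


print([learning_rate(e) for e in (0, 9, 10, 25)])  # [0.01, 0.01, 0.005, 0.0025]

# Minimize the toy loss L(w) = w**2, whose gradient is 2 * w.
w, v = 1.0, 0.0
for step in range(200):
    w, v = sgd_momentum_step(w, 2 * w, v, lr=learning_rate(0))
print(abs(w) < 1e-2)  # True: w has moved close to the minimum at 0
```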

First, the performance of the CNN was evaluated in distinguishing the activities of the two datasets. As shown in Tables 3 and 4, this approach obtained fully acceptable results for the non-challenging activities, whose recorded signals are not very similar to each other.

However, the performance of this network dropped when it was applied to challenging activities that produce similar signals (i.e. downstairs and upstairs in the first dataset, and eating sandwich and eating chips in the second dataset). For the first dataset, the accuracies were 85.53% and 86.99% for upstairs and downstairs, respectively; for the second dataset, they were 58.58% and 50.22% for eating sandwich and eating chips, respectively.

Tables 5 and 6 show the results obtained with the ConvLSTM scheme. These results demonstrate that modifying the CNN with long short-term memory may only marginally improve the accuracies for the similar activities mentioned above. For the first dataset, improvements of 4.03% and 2.02% were obtained for upstairs and downstairs; similarly, for the second dataset, the improvements were 2.36% and 8.06% for eating sandwich and eating chips, respectively. Note that these improvements were not enough to make the results acceptable for these challenging activities. Furthermore, comparison with Tables 3 and 4 shows that the accuracies for the other activities did not differ meaningfully from those obtained with the basic CNN (the differences were about 1%).

Finally, Tables 7 and 8 demonstrate that the proposed method substantially increased the accuracies for the two challenging activities compared with the CNN. The results show that the residual network concept may improve the recognition accuracies of the basic CNN on the first dataset by 9.16% and 6.96% for upstairs and downstairs, respectively. Compared with the ConvLSTM scheme, the improvements for the same activities were 5.13% and 4.94%. However, these results show a slight decrease in accuracy for walking compared with the basic CNN. For the second dataset, the accuracies for eating sandwich and eating chips improved by about 7.41% and 9.71% compared with the CNN, and by 5.05% and 1.65% compared with ConvLSTM.
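The reported figures are mutually consistent if the improvements are read as absolute differences in percentage points. The sketch below derives the ConvLSTM and proposed-method accuracies implied by the CNN accuracies and the improvements quoted in this section, and checks that the two routes to the proposed method's accuracy (via CNN and via ConvLSTM) agree. The derived values are arithmetic on the quoted numbers, not values read from Tables 5–8.

```python
# CNN accuracies (%) and improvements (percentage points) quoted in the text.
cnn = {"upstairs": 85.53, "downstairs": 86.99,
       "eating sandwich": 58.58, "eating chips": 50.22}
convlstm_vs_cnn = {"upstairs": 4.03, "downstairs": 2.02,
                   "eating sandwich": 2.36, "eating chips": 8.06}
proposed_vs_cnn = {"upstairs": 9.16, "downstairs": 6.96,
                   "eating sandwich": 7.41, "eating chips": 9.71}
proposed_vs_convlstm = {"upstairs": 5.13, "downstairs": 4.94,
                        "eating sandwich": 5.05, "eating chips": 1.65}


def implied_accuracies(activity):
    """Return (ConvLSTM, proposed via CNN, proposed via ConvLSTM) accuracies."""
    convlstm = cnn[activity] + convlstm_vs_cnn[activity]
    proposed = cnn[activity] + proposed_vs_cnn[activity]
    via_convlstm = convlstm + proposed_vs_convlstm[activity]
    return convlstm, proposed, via_convlstm


for act in cnn:
    c, p, q = implied_accuracies(act)
    print(f"{act}: ConvLSTM {c:.2f}%, proposed {p:.2f}% (via ConvLSTM {q:.2f}%)")
# upstairs: ConvLSTM 89.56%, proposed 94.69% (via ConvLSTM 94.69%)
# downstairs: ConvLSTM 89.01%, proposed 93.95% (via ConvLSTM 93.95%)
# eating sandwich: ConvLSTM 60.94%, proposed 65.99% (via ConvLSTM 65.99%)
# eating chips: ConvLSTM 58.28%, proposed 59.93% (via ConvLSTM 59.93%)
```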

4 Discussion

The previous section investigated the superiority of the proposed algorithm over CNN-based schemes. All of those algorithms belong to the deep neural network family, so all of them extract features from raw data with their convolutional layers. In this section, the performance of the proposed algorithm is compared with feature-based classifiers as an alternative family to deep methods. For this comparison, five feature-based activity recognition methods were applied to the first WISDM dataset. The alternative algorithms are (a) a combination of hand-crafted features and a Random Forest classifier, called Basic features + RF for brevity in this article [23, 25], (b) principal component analysis (PCA) based on the empirical cumulative distribution function, called PCA + ECDF for brevity in this article [26, 27], (c) logistic regression [23, 28], (d) the J48 decision tree algorithm [23, 28], and (e) a multilayer perceptron [23]. The classification accuracy was calculated for the proposed method and all the above alternatives to compare their effectiveness. Table 9 shows the accuracies of all examined methods. For the upstairs activity, the recognition accuracy of the proposed algorithm was 33.23%, 35.43%, 67.17%, 35.14%, and 28.02% higher than that of the multilayer perceptron, J48, logistic regression, PCA + ECDF, and basic features + RF methods, respectively. For the downstairs activity, the recognition accuracy of the proposed algorithm was 49.65%, 38.47%, 81.69%, 54.35%, and 44.13% higher than that of the same alternatives.

For the other three non-challenging activities (i.e. sitting, standing, and jogging), the proposed algorithm recognized activities better overall than its alternatives, but for most test items the performances of all examined methods were in an acceptable range, consistent with those of the deep learning methods (see Tables 3, 5, 7). It is important to note, however, that even for these non-challenging activities the advantage of the proposed scheme over the alternative methods reached up to 16.03% (proposed method vs Basic features + RF for sitting). For walking, the results of the proposed scheme were 13.89%, 3.87%, 7.55%, and 5.77% higher than those obtained by the basic features + RF, logistic regression, J48, and multilayer perceptron methods, respectively. For this activity, however, the result of the proposed method was 1.09% lower than that of PCA + ECDF. These results confirm the findings obtained with the deep learning-based methods: the proposed algorithm greatly improves accuracy in recognizing challenging activities (i.e. downstairs and upstairs). For the other, non-challenging activities, although the proposed method showed a slight increase or decrease in accuracy compared with existing methods, its recognition accuracies remained within the acceptable range.

5 Conclusion

In recent years, deep neural networks have been widely used for human activity detection, the best known of which is the convolutional neural network (CNN). Despite the considerable potential of CNNs in recognizing human activities, such networks suffer from the accuracy saturation phenomenon, which hampers their performance in real-world applications. In this paper, a new structure based on a combination of long short-term memory (LSTM) and residual network structures was introduced to address this problem. The performance of the proposed structure was evaluated on two real datasets. The first contains signals recorded from six human activities: walking, jogging, upstairs, downstairs, sitting, and standing. The second contains walking, jogging, stairs, sitting, standing, eating soup, eating sandwich, and eating chips. Two scenarios were adopted to compare the performance of the proposed method with two main categories of existing techniques. In the first scenario, the proposed method was compared with methods of its own family, all based on deep learning. The results showed that, for the first dataset, the proposed scheme distinguished both downstairs and upstairs (the most challenging activities) almost 5% better than its closest deep alternative. For the second dataset, the improvements were 5.05% and 1.65% for eating sandwich and eating chips, respectively. On the other hand, the performances of the proposed method and its deep alternatives did not differ meaningfully for the other (i.e. non-challenging) activities of either dataset. The second scenario compared the proposed structure with non-deep techniques.
The results of this scenario also indicated the superiority of the proposed method over five well-known non-deep techniques in recognizing challenging activities on the first dataset: the proposed scheme distinguished downstairs and upstairs (the most challenging activities) almost 38% and 28% better, respectively, than its closest feature-based alternative. As in the previous scenario, the proposed method and its alternatives did not differ meaningfully, and all performed in an acceptable range, on the other four non-challenging activities. Based on these analyses, the proposed structure has considerable potential as a low-cost, non-invasive diagnostic modality implemented as mobile software.

References

Tahavori F, Stack E, Agarwal V, Burnett M, Ashburn A, Hoseini tabatabaei SA, Harwin W. Physical activity recognition of elderly people and people with parkinson’s (PwP) during standard mobility tests using wearable sensors. In: 2017 international smart cities conference (ISC2), Wuxi, China, 14–17 September 2017; 2017. p. 403–407.

Dernbach S, Das B, Krishnan NC, Thomas BL, Cook DJ. Simple and complex activity recognition through smart phones. In: 2012 eighth international conference on intelligent environments, Guanajuato, Mexico, 26–28 June 2012; 2012. p. 214–221.

Foerster F, Smeja M, Fahrenberg J. Detection of posture and motion by accelerometry: a validation study in ambulatory monitoring. Comput Hum Behav. 1999;15(5):571–83.

Aminian K, Robert Ph, Buchser EE, Rutschmann B, Hayoz D, Depairon M. Physical activity monitoring based on accelerometry: validation and comparison with video observation. Med Biol Eng Comput. 1999;37(3):304–8.

Bao L, Intille S. Activity recognition from user-annotated acceleration data. In: Pervasive computing (Pervasive 2004), Linz and Vienna, Austria, 21–23 April 2004; 2004. p. 1–17.

Plotz T, Hammerla NY, Olivier P. Feature learning for activity recognition in ubiquitous computing. In: IJCAI’11 Proceedings of the twenty-second international joint conference on artificial intelligence, vol. 2, Barcelona, Spain, 16–22 July 2011; 2011. p. 1729–1734.

He Z, Jin L. Activity recognition from acceleration data based on discrete cosine transform and SVM. In: 2009 IEEE international conference on systems, man and cybernetics, San Antonio, TX, USA, 11–14 October 2009; 2009. p. 5041–5044.

Kim Y-J, Kang B-N, Kim D. Hidden Markov model ensemble for activity recognition using tri-axis accelerometer. In: 2015 IEEE international conference on systems, man, and cybernetics, Kowloon, China, 9–12 October 2015; 2015. p. 3036–3041.

Lee S-M, Yoon SM, Cho H. Human activity recognition from accelerometer data using convolutional neural network. In: 2017 IEEE international conference on big data and smart computing (BigComp), Jeju, South Korea, 13–16 February 2017; 2017. p. 131–134.

Ha S, Choi S. Convolutional neural networks for human activity recognition using multiple accelerometer and gyroscope sensors. In: 2016 international joint conference on neural networks (IJCNN), Vancouver, BC, Canada, 24–29 July 2016; 2016. p. 381–388.

Singh D, Merdivan E, Psychoula E, Kropf J, Hanke S, Geist M, Holzinger A. Human activity recognition using recurrent neural networks. In: International cross-domain conference for machine learning and knowledge extraction, Reggio, Italy, 29 August 2017; 2017. p. 267–274.

Ordóñez FJ, Roggen D. Deep convolutional and LSTM recurrent neural networks for multimodal wearable activity recognition. Sensors. 2016;16:115.

Cho K, van Merrienboer B, Bahdanau D, Bengio Y. On the properties of neural machine translation: encoder–decoder approaches. In: Proceedings of SSST-8, eighth workshop on syntax, semantics and structure in statistical translation, Doha, Qatar, October 2014. Association for Computational Linguistics; 2014. p. 103–111.

Arifoglu D, Bouchachia A. Activity recognition and abnormal behaviour detection with recurrent neural networks. Procedia Comput Sci. 2017;110:86–93.

Hochreiter S, Schmidhuber J. Long short-term memory. Neural Comput. 1997;9(8):1735–80.

Shimodaira H. Improving predictive inference under covariate shift by weighting the log-likelihood function. J Stat Plan Inference. 2000;90(2):227–44.

Nadeem Hashmi S, Gupta H, Mittal D, Kumar K. A lip reading model using CNN with batch normalization. In: 2018 eleventh international conference on contemporary computing (IC3), New Delhi, India, August 2018; 2018. p. 1–8.

LeCun Y, Bottou L, Orr GB, Müller K-R. Efficient backprop. In: Neural networks: tricks of the trade. Lecture notes in computer science, vol. 1524. Berlin: Springer; 2002.

Glorot X, Bengio Y. Understanding the difficulty of training deep feedforward neural networks. J Mach Learn Res. 2010;9:249–56.

Zhang Y, Chan W, Jaitly N. Very deep convolutional networks for end-to-end speech recognition. In: 2017 IEEE international conference on acoustics, speech and signal processing (ICASSP). New Orleans, LA, USA, 5–9 March 2017; 2017. p. 4845–4849.

Bengio Y, Simard P, Frasconi P. Learning long-term dependencies with gradient descent is difficult. IEEE Trans Neural Netw. 1994;5(2):157–66.

He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. In: IEEE conference on computer vision and pattern recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; 2016. p. 770–778.

Kwapisz JR, Weiss GM, Moore SA. Activity recognition using cell phone accelerometers. ACM SIGKDD Explor Newslett. 2010;12(2):74–82.

Bottou L. Large-scale machine learning with stochastic gradient descent. In: International conference on computational statistics (COMPSTAT); 2010. p. 177–186.

Ignatov A. Real-time human activity recognition from accelerometer data using convolutional neural networks. Appl Soft Comput. 2017;62:915–22.

Zeng M, Nguyen LT, Yu B, Mengshoel OJ, Zhu J, Wu P, Zhang J. Convolutional neural networks for human activity recognition using mobile sensors. In: 6th international conference on mobile computing, applications and services, Austin, TX, USA, 6–7 November 2014; 2014. p. 197–205.

Plotz T, Hammerla NY, Olivier P. Feature learning for activity recognition in ubiquitous computing. In: Proceedings of the twenty-second international joint conference on artificial intelligence, Barcelona, Catalonia, Spain, 16–22 July 2011; 2011. p. 1729–1735.

Witten IH, Frank E. Data mining: practical machine learning tools and techniques. Morgan Kaufmann series in data management systems; 2011.

Acknowledgements

Both co-authors testify that our article entitled “Mobile Sensor Based Human Activity Recognition: Distinguishing of Challenging Activities by Applying Long Short-Term Memory Deep Learning Modified by Residual Network Concept” has not been published in whole or in part elsewhere, is not currently being considered for publication in another journal, and that both authors have been personally and actively involved in the substantive work leading to the manuscript and will hold themselves jointly and individually responsible for its content.

Funding

This work was not funded.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.



Cite this article

Shojaedini, S.V., Beirami, M.J. Mobile sensor based human activity recognition: distinguishing of challenging activities by applying long short-term memory deep learning modified by residual network concept. Biomed. Eng. Lett. 10, 419–430 (2020). https://doi.org/10.1007/s13534-020-00160-x


DOI: https://doi.org/10.1007/s13534-020-00160-x