Abstract

In this paper, we deal with an extra-gradient iterative method for finding a common solution to a generalized mixed equilibrium problem and fixed point problems for a nonexpansive mapping and for a finite family of k-strict pseudo-contraction mappings in Hilbert space. We prove a strong convergence theorem for the extra-gradient iterative method under some mild conditions. Further, we give a numerical example to illustrate the main result.


1 Introduction

Let C be a nonempty, closed and convex subset of a real Hilbert space H. Let \(G:C\times C\rightarrow {\mathbb {R}}\) and \(\phi :C\times C\rightarrow {\mathbb {R}}\) be nonlinear bifunctions, where \({\mathbb {R}}\) is the set of all real numbers, and let \(A:C\rightarrow H\) be a nonlinear mapping. In 1994, Blum and Oettli [2] introduced and studied the following equilibrium problem (in short, EP): Find \(x\in C\) such that

$$\begin{aligned} G(x,y)\ge 0,~~~\forall y\in C. \end{aligned}\tag{1.1}$$

The solution set of EP(1.1) is denoted by Sol(EP(1.1)). An important generalization of EP(1.1) is the mixed equilibrium problem (in short, MEP), introduced and studied by Moudafi and Thera [15], which is to find \(x\in C\) such that

$$\begin{aligned} G(x,y)+\langle Ax,y-x\rangle \ge 0,~~~\forall y\in C. \end{aligned}\tag{1.2}$$

For applications of MEP(1.2), see Moudafi and Thera [15].

It is well known that equilibrium problems have a great impact on the development of several topics in science and engineering. It turned out that many well-known problems can be formulated as equilibrium problems. It has been shown that the theory of equilibrium problems provides a natural, novel and unified framework for several problems arising in nonlinear analysis, optimization, economics, finance, game theory and engineering. The equilibrium problem includes many mathematical problems as particular cases, for example, the mathematical programming problem, variational inclusion problem, variational inequality problem, complementarity problem, saddle point problem, Nash equilibrium problem in noncooperative games, minimax inequality problem, minimization problem and fixed point problem; see [2, 5, 14].

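Now we consider the following generalized mixed equilibrium problem (in short, GMEP): Find \(x\in C\) such that

$$\begin{aligned} G(x,y)+\langle Ax,y-x\rangle +\phi (y,x)-\phi (x,x)\ge 0,~~~\forall y\in C. \end{aligned}\tag{1.3}$$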

The solution set of GMEP(1.3) is denoted by Sol(GMEP(1.3)).

If we set \(G(x,y)=0, ~\forall x,y\in C\), GMEP(1.3) reduces to the following important class of variational inequalities, which represents the boundary value problem arising in the formulation of the Signorini problem: Find \( x \in C\) such that

$$\begin{aligned} \langle Ax,y-x\rangle +\phi (y,x)-\phi (x,x)\ge 0,~~~\forall y\in C. \end{aligned}\tag{1.4}$$

Problem (1.4) was discussed in Duvaut and Lions [8] and Kikuchi and Oden [11]. For physical and mathematical formulation of the inequality (1.4), see for example Oden and Pires [19]. For related work, see also Baiocchi and Capelo [1].

If we set \(G(x,y)=0 ~\mathrm{and}~\phi (x,y)=0, ~\forall x,y\in C\), GMEP(1.3) reduces to the classical variational inequality problem (in short, VIP): Find \(x\in C\) such that

$$\begin{aligned} \langle Ax,y-x\rangle \ge 0,~~~\forall y\in C, \end{aligned}\tag{1.5}$$

which was introduced and studied by Hartman and Stampacchia [9]. The solution set of VIP(1.5) is denoted by Sol(VIP(1.5)).

Let S be a nonlinear mapping defined on C. The fixed point problem (in short, FPP) for the mapping S is to find \(x\in C\) such that

$$\begin{aligned} x=Sx. \end{aligned}\tag{1.6}$$

F(S) denotes the fixed point set of S and is given by \(\{x\in C | x=Sx\}.\)

In 1976, Korpelevich [12] introduced the following iterative algorithm, known as the extra-gradient iterative method, for VIP(1.5):

$$\begin{aligned} y_{n}&=P_{C}(x_{n}-\lambda Ax_{n}),\\ x_{n+1}&=P_{C}(x_{n}-\lambda Ay_{n}), \end{aligned}\tag{1.7}$$

where \(\lambda >0\) and \(n\ge 0\), A is a monotone and Lipschitz continuous mapping and \(P_C\) is the metric projection of H onto C.
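To make the two-step structure of (1.7) concrete, here is a minimal Python/NumPy sketch. It assumes C is a box, so that \(P_C\) is componentwise clipping, and uses a hypothetical monotone, 1-Lipschitz operator A (a plane rotation) whose unique solution of VIP(1.5) on \([-1,1]^2\) is 0; for a rotation, the single projection step \(x_{n+1}=P_C(x_n-\lambda Ax_n)\) is repelled from 0, which is exactly the situation the extra step is designed for. The function names `project_box` and `extragradient` are illustrative, not from the literature, and the step \(\lambda =0.5\) is taken below the reciprocal of the Lipschitz constant.

```python
import numpy as np


def project_box(x, lo, hi):
    """Metric projection P_C onto the box C = [lo, hi]^d (componentwise clipping)."""
    return np.clip(x, lo, hi)


def extragradient(A, x0, lam, lo, hi, n_iter=500):
    """Korpelevich-type iteration (1.7) for VIP(1.5) on a box C."""
    x = project_box(np.asarray(x0, dtype=float), lo, hi)
    for _ in range(n_iter):
        y = project_box(x - lam * A(x), lo, hi)   # predictor: y_n = P_C(x_n - lam A x_n)
        x = project_box(x - lam * A(y), lo, hi)   # corrector: x_{n+1} = P_C(x_n - lam A y_n)
    return x


# Hypothetical operator: rotation by 90 degrees, monotone (<Jx, x> = 0) and 1-Lipschitz.
# The unique solution of VIP(1.5) on C = [-1, 1]^2 is x* = 0.
J = np.array([[0.0, 1.0], [-1.0, 0.0]])


def A(x):
    return J @ x


x_star = extragradient(A, x0=[1.0, 1.0], lam=0.5, lo=-1.0, hi=1.0)
print(x_star)   # both components are numerically zero
```

The only change with respect to a projected-gradient step is that the corrector evaluates A at the predictor \(y_n\). For this rotation, when no projection is active, one extra-gradient step multiplies the norm by \(\sqrt{1-\lambda ^2+\lambda ^4}\approx 0.90\) for \(\lambda =0.5\), whereas the plain step multiplies it by \(\sqrt{1+\lambda ^2}>1\).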

In 2006, Nadezhkina and Takahashi [16] proved that the sequences \(\{x_{n}\}\) and \(\{y_{n}\}\) generated by the following modified version of extra-gradient iterative method (1.7):

where \(\lambda _{n}, \alpha _{n}\in (0,1)\) for \( n\ge 0\), converge weakly to a common solution to VIP(1.5) and FPP(1.6) for a nonexpansive mapping S.

In 2006, by combining a hybrid iterative method [18] with an extra-gradient iterative method (1.8), Nadezhkina and Takahashi [17] introduced the following hybrid extra-gradient iterative method for approximating a common solution of FPP(1.6) for a nonexpansive mapping S and VIP(1.5) for a monotone and Lipschitz continuous mapping A:

for \(n\ge 0\), and proved a strong convergence theorem.

In 2013, Djafari-Rouhani et al. [6] initiated the study of the following system of unrelated mixed equilibrium problems (in short, SUMEP); more precisely, for each \(i=1,2, \ldots ,N\), let \(C_i\) be a nonempty, closed and convex subset of a real Hilbert space H with \(\bigcap \nolimits _{i=1}^{N}C_i\ne \emptyset \); let \(G_i:C_i\times C_i\rightarrow {\mathbb {R}}\) be a bifunction such that \(G_i(x_i,x_i)=0,~~\forall x_i \in C_i\) and let \(A_i:H\rightarrow H\) be a monotone and Lipschitz continuous mapping, then SUMEP is to find \(x\in \bigcap \nolimits _{i=1}^{N}C_i\) such that

We note that for each \(i=1,2,\ldots ,N,\) the mixed equilibrium problem (MEP) is to find \(x_i\in C_i\) such that

We denote by \(\mathrm{Sol}(\mathrm{MEP}(1.11))\) the solution set of MEP(1.11) corresponding to the mappings \(G_i,A_i\) and the set \(C_i\). Then the solution set of SUMEP(1.10) is given by \(\bigcap \nolimits _{i=1}^{N}\mathrm{Sol}(\mathrm{MEP}(1.11))\). If \(N=1\), then SUMEP(1.10) reduces to the mixed equilibrium problem MEP(1.2). Djafari-Rouhani et al. [6] proved, under some mild conditions, a strong convergence theorem for the following new hybrid extra-gradient iterative method for solving SUMEP(1.10), which can be seen as an important extension of the iterative method (1.9) given by Nadezhkina and Takahashi [17]: let the iterative sequences \(\{x^{n}\}\), \(\{y_{i}^n\}\) and \(\{z_{i}^n\}\) be generated by the iterative schemes

for \(n\ge 0\) and for each \(i=1,2,\ldots ,N\), where \(\{r_{i}^{n}\},~\{\alpha _{i}^{n}\}\) are control sequences. For further related work, see [10].

It is worth mentioning that, apart from the hybrid extra-gradient iterative method (1.12), no strong convergence theorem has been established so far for extra-gradient iterative methods approximating a common solution to MEP(1.2), where A is a monotone and Lipschitz continuous mapping, and fixed point problems for nonlinear mappings. Therefore, our main focus is to propose an extra-gradient iterative method which is not of hybrid type for solving MEP(1.2), where A is a monotone and Lipschitz continuous mapping, and fixed point problems for nonlinear mappings, and to establish a strong convergence theorem for it.

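Recall that a nonself mapping \(T:C\rightarrow H\) is called a k-strict pseudo-contraction if there exists a constant \(k\in [0,1)\) such that

$$\begin{aligned} \Vert Tx-Ty\Vert ^{2}\le \Vert x-y\Vert ^{2}+k\Vert (I-T)x-(I-T)y\Vert ^{2},~~~\forall x,y\in C. \end{aligned}\tag{1.13}$$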

If we set \(k=0\) in (1.13), T is nonexpansive, and if we set \(k=1\) in (1.13), T is said to be pseudo-contractive. T is said to be strongly pseudo-contractive if there exists a constant \(\lambda \in (0,1)\) such that \(T+\lambda I\) is pseudo-contractive. Clearly, the class of k-strict pseudo-contractions lies between the class of nonexpansive mappings and the class of pseudo-contraction mappings. We note that the class of strongly pseudo-contractive mappings is independent of the class of k-strict pseudo-contraction mappings (see, e.g., [3, 4]). In a real Hilbert space H, (1.13) is equivalent to

$$\begin{aligned} \langle Tx-Ty,x-y\rangle \le \Vert x-y\Vert ^{2}-\frac{1-k}{2}\Vert (I-T)x-(I-T)y\Vert ^{2},~~~\forall x,y\in C. \end{aligned}$$

T is pseudo-contractive if and only if

$$\begin{aligned} \langle Tx-Ty,x-y\rangle \le \Vert x-y\Vert ^{2},~~~\forall x,y\in C. \end{aligned}$$

T is strongly pseudo-contractive if and only if there exists a positive constant \(\lambda \in (0,1)\) such that

$$\begin{aligned} \langle Tx-Ty,x-y\rangle \le (1-\lambda )\Vert x-y\Vert ^{2},~~~\forall x,y\in C. \end{aligned}$$

Further, we note that the iterative methods for strict pseudo-contractions are far less developed than those for nonexpansive mappings though Browder and Petryshyn [4] initiated their work in 1967; the reason is probably that the second term appearing in the right-hand side of (1.13) impedes the convergence analysis for iterative algorithms used to find a fixed point of the strict pseudo-contraction T. However, on the other hand, strict pseudo-contractions have more powerful applications than nonexpansive mappings do in solving inverse problems (see, Scherzer [21]). Therefore it is interesting to develop the iterative methods for finding a common solution to GMEP(1.3) and fixed point problems for a nonexpansive mapping and for a finite family of k-strict pseudo-contraction mappings. For further work, see for example [13, 22, 25] and the references therein.

Motivated by the recent work [6, 10, 24], in this paper we propose an extra-gradient iterative method for approximating a common solution to GMEP(1.3) and fixed point problems for a nonexpansive mapping and for a finite family of k-strict pseudo-contraction mappings in Hilbert space. We prove that the sequences generated by the proposed iterative method converge strongly to a common solution of these problems. Finally, we give a numerical example to illustrate the strong convergence theorem.

2 Preliminaries

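We recall some concepts and results which are required for the presentation of this work. The symbols \(\rightarrow \) and \(\rightharpoonup \) denote strong and weak convergence, respectively. It is well known that every Hilbert space satisfies the Opial condition, i.e., for any sequence \(\{x_n\}\) with \(x_{n}\rightharpoonup x\), the inequality

$$\begin{aligned} \liminf _{n\rightarrow \infty }\Vert x_{n}-x\Vert <\liminf _{n\rightarrow \infty }\Vert x_{n}-y\Vert \end{aligned}$$

holds for every \(y \in H\) with \(y \not = x.\)

For every point \(x \in H\), there exists a unique nearest point in C, denoted by \(P_ {C} x\), such that

$$\begin{aligned} \Vert x-P_{C}x\Vert \le \Vert x-y\Vert ,~~~\forall y\in C. \end{aligned}$$

The mapping \(P_{C}\) is called the metric projection of H onto C. It is well known that \(P_{C}\) is nonexpansive and satisfies

$$\begin{aligned} \langle x-y,P_{C}x-P_{C}y\rangle \ge \Vert P_{C}x-P_{C}y\Vert ^{2},~~~\forall x,y\in H. \end{aligned}$$

Moreover, \(P_{C}x\) is characterized by the fact that \(P_{C}x\in C\) and

$$\begin{aligned} \langle x-P_{C}x,y-P_{C}x\rangle \le 0,~~~\forall y\in C, \end{aligned}$$

which implies

$$\begin{aligned} \Vert x-y\Vert ^{2}\ge \Vert x-P_{C}x\Vert ^{2}+\Vert y-P_{C}x\Vert ^{2},~~~\forall x\in H,~y\in C. \end{aligned}$$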
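The two standard projection properties used repeatedly in the proofs, \(\langle x-P_Cx,y-P_Cx\rangle \le 0\) for \(y\in C\) and \(\langle x-z,P_Cx-P_Cz\rangle \ge \Vert P_Cx-P_Cz\Vert ^2\), can be spot-checked numerically for a set where \(P_C\) has a closed form. The sketch below assumes \(C=[-1,1]^3\subset H={\mathbb {R}}^3\), where \(P_C\) is componentwise clipping, samples random points, and records the worst value of each quantity; it is a sanity check, not a proof.

```python
import numpy as np

rng = np.random.default_rng(0)
LO, HI = -1.0, 1.0          # assumed set C = [-1, 1]^3 in H = R^3


def P_C(x):
    """Metric projection onto the box C: componentwise clipping."""
    return np.clip(x, LO, HI)


worst_char = -np.inf   # largest <x - P_C x, y - P_C x> seen (should stay <= 0)
worst_firm = np.inf    # smallest <x - z, P_C x - P_C z> - ||P_C x - P_C z||^2 (should stay >= 0)
for _ in range(10_000):
    x = rng.normal(scale=3.0, size=3)    # arbitrary point of H
    z = rng.normal(scale=3.0, size=3)    # second arbitrary point of H
    y = rng.uniform(LO, HI, size=3)      # arbitrary point of C
    px, pz = P_C(x), P_C(z)
    worst_char = max(worst_char, float(np.dot(x - px, y - px)))
    worst_firm = min(worst_firm, float(np.dot(x - z, px - pz) - np.dot(px - pz, px - pz)))

print(worst_char, worst_firm)
```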

Definition 2.1

A mapping \(A:H\rightarrow H\) is said to be:

- (i)

Monotone if

$$\begin{aligned} \langle Ax-Ay,x-y\rangle \ge 0, ~~~\forall x,y\in H; \end{aligned}$$

- (ii)

\(\lambda \)-Lipschitz continuous if there exists a constant \(\lambda >0\) such that

$$\begin{aligned} \Vert Ax-Ay\Vert \le \lambda \Vert x-y\Vert , ~~~\forall x,y\in H. \end{aligned}$$

Lemma 2.1

[25] If \(T:C\rightarrow H\) is a k-strict pseudo-contraction, then T is Lipschitz continuous with Lipschitz constant \(\frac{3-k}{1-k}.\)

Lemma 2.2

[25] If \(T:C\rightarrow H\) is a k-strict pseudo-contraction, then the fixed point set F(T) is closed and convex, so that the projection \(P_{F(T)}\) is well defined.

Lemma 2.3

[25] Let \(T:C\rightarrow H\) be a k-strict pseudo-contraction with \(F(T)\ne \emptyset .\) Then \(F(P_{C}T)= F(T).\)

Lemma 2.4

[25] Let \(T:C\rightarrow H\) be a k-strict pseudo-contraction. For \(\lambda \in [k,1)\), define a mapping \( S:C\rightarrow H\) by \(Sx = \lambda x+ (1-\lambda )Tx\) for all \(x\in C.\) Then S is a nonexpansive mapping such that \(F(S)=F(T).\)

Lemma 2.5

[23] Given an integer \(N\ge 1\), for each \(i=1,2,\ldots ,N\), let \( T_{i}:C\rightarrow H\) be a \(k_{i}\)-strict pseudo-contraction for some \( 0 \le k_{i}< 1\) with \(\max \nolimits _{1\le i \le N}k_{i}<1\) such that \(\bigcap \nolimits _{i=1}^{N}F(T_{i})\ne \emptyset \). Assume that \(\{\eta _{i}\}_{i=1}^{N}\) is a positive sequence such that \(\sum \nolimits _{i=1}^{N}\eta _{i}=1\). Then \(\sum \nolimits _{i=1}^{N}\eta _{i}T_{i}:C\rightarrow H\) is a k-strict pseudo-contraction with coefficient \( k=\max \nolimits _{1\le i \le N}k_{i}\) and \( F(\sum \nolimits _{i=1}^{N}\eta _{i}T_{i})=\bigcap \nolimits _{i=1}^{N}F(T_{i}).\)
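Lemmas 2.1, 2.4 and 2.5 can be seen at work on scalar linear maps \(T(x)=-cx\) with \(c\ge 1\), taking \(H=C={\mathbb {R}}\) purely as an illustrative assumption. For such a map, (1.13) reads \(c^2\le 1+k(1+c)^2\), so the smallest admissible constant is \(k=\frac{c-1}{c+1}\); the averaged map \(S=\lambda I+(1-\lambda )T\) has slope \(\lambda -(1-\lambda )c\), and it is nonexpansive exactly for \(\lambda \in [\frac{c-1}{c+1},1]\), which matches the threshold \(\lambda \ge k\) of Lemma 2.4. The helper names are hypothetical, and exact rational arithmetic avoids rounding.

```python
from fractions import Fraction as Fr


def min_strict_k(c):
    """Smallest k for which T(x) = -c*x on R (c >= 1) satisfies (1.13).

    (1.13) reads c^2 (x-y)^2 <= (x-y)^2 + k (1+c)^2 (x-y)^2, i.e. k >= (c-1)/(c+1).
    """
    c = Fr(c)
    return (c - 1) / (c + 1)


def slope_S(lam, c):
    """Slope of S = lam*I + (1-lam)*T for T(x) = -c*x (Lemma 2.4)."""
    return lam - (1 - lam) * c


c = 3                                  # hypothetical map T(x) = -3x
k = min_strict_k(c)                    # 1/2
lipschitz_bound = (3 - k) / (1 - k)    # Lemma 2.1 bound: 5, and indeed |slope of T| = 3 <= 5

# Lemma 2.4: S is nonexpansive (|slope| <= 1) exactly when lam >= k here.
lams = [Fr(3, 10), k, Fr(3, 4), Fr(99, 100)]
for lam in lams:
    print(lam, slope_S(lam, c), abs(slope_S(lam, c)) <= 1)

# Lemma 2.5 with T_i x = -(1+i)x (i = 1, 2, 3) and eta_i = 1/3.
cs = [2, 3, 4]
eta = [Fr(1, 3)] * 3
c_bar = sum(e * ci for e, ci in zip(eta, cs))      # W x = -c_bar x with c_bar = 3
k_W = min_strict_k(c_bar)                            # 1/2
k_max = max(min_strict_k(ci) for ci in cs)           # 3/5
print(k_W, k_max)
```

The combination \(W=\sum \eta _iT_i\) turns out to be \(\frac{1}{2}\)-strict, which is consistent with (and here sharper than) the coefficient \(\max _ik_i=\frac{3}{5}\) guaranteed by Lemma 2.5.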

Lemma 2.6

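[20] For any \(x,y,z\in H\) and \(\alpha ,\beta ,\gamma \in [0,1]\) with \(\alpha +\beta +\gamma =1\), we have

$$\begin{aligned} \Vert \alpha x+\beta y+\gamma z\Vert ^{2}=\alpha \Vert x\Vert ^{2}+\beta \Vert y\Vert ^{2}+\gamma \Vert z\Vert ^{2}-\alpha \beta \Vert x-y\Vert ^{2}-\alpha \gamma \Vert x-z\Vert ^{2}-\beta \gamma \Vert y-z\Vert ^{2}. \end{aligned}$$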
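The three-point identity \(\Vert \alpha x+\beta y+\gamma z\Vert ^{2}=\alpha \Vert x\Vert ^{2}+\beta \Vert y\Vert ^{2}+\gamma \Vert z\Vert ^{2}-\alpha \beta \Vert x-y\Vert ^{2}-\alpha \gamma \Vert x-z\Vert ^{2}-\beta \gamma \Vert y-z\Vert ^{2}\) (the standard form of this lemma) is easy to spot-check in \(H={\mathbb {R}}^5\) with random weights drawn from the simplex \(\alpha +\beta +\gamma =1\). This is a numerical sanity check under these assumed choices, not a proof.

```python
import numpy as np

rng = np.random.default_rng(1)


def sq(v):
    """Squared norm in H = R^d."""
    return float(np.dot(v, v))


def lemma_2_6_gap(a, b, g, x, y, z):
    """|lhs - rhs| of the three-point identity for weights a + b + g = 1."""
    lhs = sq(a * x + b * y + g * z)
    rhs = (a * sq(x) + b * sq(y) + g * sq(z)
           - a * b * sq(x - y) - a * g * sq(x - z) - b * g * sq(y - z))
    return abs(lhs - rhs)


max_gap = 0.0
for _ in range(1000):
    a, b, g = rng.dirichlet([1.0, 1.0, 1.0])   # weights in [0, 1] summing to 1
    x, y, z = rng.normal(size=(3, 5))          # three random points of R^5
    max_gap = max(max_gap, lemma_2_6_gap(a, b, g, x, y, z))

print(max_gap)   # rounding-level only
```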

Lemma 2.7

[24] Let \(\{s_{n}\}\) be a sequence of non-negative real numbers satisfying

$$\begin{aligned} s_{n+1}\le (1-a_{n})s_{n}+a_{n}b_{n}+c_{n},~~~\forall n\ge 0, \end{aligned}$$

where the sequences \(\{a_n\}, \{b_n\}, \{c_n\}\) satisfy the conditions: (i) \(\{a_n\}\subset [0,1]\) with \(\sum \nolimits _{n=0}^{\infty }a_{n}=\infty ,\) (ii) \(c_{n}\ge 0\) for all \(n\ge 0\) with \(\sum \nolimits _{n=0}^{\infty }c_{n} < \infty ,\) and (iii) \(\limsup \nolimits _{n\rightarrow \infty }b_{n}\le 0\). Then \(\lim \nolimits _{n\rightarrow \infty }s_{n}=0.\)
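A quick simulation shows Lemma 2.7 in action, with the illustrative (assumed) choices \(a_n=\frac{1}{n+2}\), \(b_n=\frac{1}{n+1}\), \(c_n=\frac{1}{(n+1)^2}\) and equality in the recursion. These satisfy (i)–(iii), and multiplying the recursion by \(n+2\) gives the closed form \((N+1)s_N=s_0+2\sum _{m=1}^{N}\frac{1}{m}+\sum _{m=1}^{N}\frac{1}{m^2}\), so \(s_N\rightarrow 0\) only at the slow rate of roughly \(2\ln N/N\). The divergence of \(\sum a_n\) is what drives \(s_n\) to zero; the rate is governed by \(a_n\) and \(b_n\).

```python
def run_recursion(n_steps, s0=1.0):
    """Iterate s_{n+1} = (1 - a_n) s_n + a_n b_n + c_n with illustrative choices."""
    s = s0
    history = [s]
    for n in range(n_steps):
        a = 1.0 / (n + 2)         # a_n in [0, 1], sum of a_n diverges
        b = 1.0 / (n + 1)         # b_n -> 0, so limsup b_n <= 0
        c = 1.0 / (n + 1) ** 2    # c_n >= 0 and summable
        s = (1.0 - a) * s + a * b + c
        history.append(s)
    return history


hist = run_recursion(100_000)
print(hist[1_000], hist[100_000])   # about 1.8e-2 and 2.7e-4: slow, but s_n -> 0
```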

Lemma 2.8

[24] Let \(\{s_{k}\}\) be a sequence of real numbers that does not decrease at infinity in the sense that there exists a subsequence \(\{s_{k_{j}}\}\) of \(\{s_{k}\}\) such that \(s_{k_{j}}< s_{k_{j}+1}\) for all \(j \ge 0\). Define an integer sequence \(\{m_{k}\}_{k\ge k_{0}}\) as

$$\begin{aligned} m_{k}:=\max \{j\le k: s_{j}<s_{j+1}\}; \end{aligned}$$

then \(m_{k}\rightarrow \infty \) as \(k\rightarrow \infty \) and for all \(k\ge k_{0}\) we have \( \max \{s_{m_{k}},s_{k}\}\le s_{m_{k}+1}.\)
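The index sequence of Lemma 2.8 is easy to compute: \(m_k\) is the last position up to k where the sequence goes up. Between \(m_k+1\) and k the sequence is nonincreasing, which is why \(s_k\le s_{m_k+1}\). The sketch below computes \(m_k=\max \{j\le k: s_j<s_{j+1}\}\) for a hypothetical finite sequence and checks the conclusion \(\max \{s_{m_k},s_k\}\le s_{m_k+1}\) for every admissible k; on a finite list, \(m_k\rightarrow \infty \) can of course not be observed.

```python
def mainge_indices(s):
    """For each admissible k, return m_k = max{ j <= k : s[j] < s[j+1] } (Lemma 2.8)."""
    k0 = next(j for j in range(len(s) - 1) if s[j] < s[j + 1])   # first increase
    m = {}
    last_up = k0
    for k in range(k0, len(s) - 1):   # s[k+1] must exist
        if s[k] < s[k + 1]:
            last_up = k
        m[k] = last_up
    return m


# Hypothetical sequence that keeps "bouncing up", so it does not decrease at infinity.
s = [5, 3, 4, 2, 1, 3, 2, 2, 6, 1, 0, 7, 3]
m = mainge_indices(s)
for k, mk in m.items():
    assert max(s[mk], s[k]) <= s[mk + 1]   # conclusion of Lemma 2.8
print(m)
```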

Assumption 2.1

The bifunctions \(G: C\times C\longrightarrow {\mathbb {R}}\) and \(\phi :C\times C\rightarrow {\mathbb {R}}\) satisfy the following assumptions:

- (i)

\(G(x,x)=0,~~\forall x \in C;\)

- (ii)

G is monotone, i.e., \(G(x,y)+G(y,x)\le 0,~~\forall x,y \in C;\)

- (iii)

For each \(y \in C\), \(x\rightarrow G(x,y)\) is weakly upper-semicontinuous;

- (iv)

For each \(x \in C\), \(y\rightarrow G(x,y)\) is convex and lower semicontinuous;

- (v)

\(\phi (\cdot ,\cdot )\) is weakly continuous and \(\phi (\cdot ,y)\) is convex;

- (vi)

\(\phi \) is skew-symmetric, i.e.,

\(\phi (x,x)-\phi (x,y)+\phi (y,y)-\phi (y,x)\ge 0,~~\forall x,y\in C;\)

- (vii)

for each \(z\in H\) and for each \(x\in C\), there exists a bounded subset \(D_{x}\subseteq C\) and \(z_{x}\in C\) such that for any \(y\in C\setminus D_{x}\),

\(G(y, z_{x})+ \phi (z_{x}, y)- \phi (y,y) + \frac{1}{r}\langle z_{x}-y, y-z\rangle < 0.\)

Assumption 2.2

The bifunction \(G: C\times C\longrightarrow {\mathbb {R}}\) is 2-monotone, i.e.,

$$\begin{aligned} G(x,y)+G(y,z)+G(z,x)\le 0,~~~\forall x,y,z\in C. \end{aligned}$$

By taking \(y=z\), it is clear that 2-monotone bifunction is a monotone bifunction. For example, if \( G(x,y)= x(y-x)\), then G is a 2-monotone bifunction.
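Reading 2-monotonicity as \(G(x,y)+G(y,z)+G(z,x)\le 0\) for all \(x,y,z\in C\) (the form that reduces to monotonicity when \(y=z\)), the example \(G(x,y)=x(y-x)\) qualifies because its cyclic sum equals \(-\frac{1}{2}[(x-y)^2+(y-z)^2+(z-x)^2]\). The sketch below checks this identity exactly on a rational grid in \(C=[-1,1]\), together with skew-symmetry (Assumption 2.1(vi)) of \(\phi (x,y)=y-x\), the bifunction used in Example 4.1. A grid check illustrates, but does not replace, the algebraic argument.

```python
from fractions import Fraction as Fr
from itertools import product


def G(x, y):
    return x * (y - x)        # example bifunction after Assumption 2.2


def phi(x, y):
    return y - x              # bifunction phi of Example 4.1


grid = [Fr(i, 4) for i in range(-4, 5)]   # exact rational points of C = [-1, 1]
triples = list(product(grid, repeat=3))

two_monotone = all(G(x, y) + G(y, z) + G(z, x) <= 0 for x, y, z in triples)
# Closed form of the cyclic sum: -((x-y)^2 + (y-z)^2 + (z-x)^2) / 2
identity = all(
    G(x, y) + G(y, z) + G(z, x) == -((x - y) ** 2 + (y - z) ** 2 + (z - x) ** 2) / 2
    for x, y, z in triples
)
skew = all(phi(x, x) - phi(x, y) + phi(y, y) - phi(y, x) >= 0
           for x, y in product(grid, repeat=2))
print(two_monotone, identity, skew)   # True True True
```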

Now, we give the concept of 2-skew-symmetric bifunction.

Definition 2.2

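The bifunction \(\phi :C\times C\rightarrow {\mathbb {R}}\) is said to be 2-skew-symmetric if

$$\begin{aligned} \phi (x,x)-\phi (x,y)+\phi (y,y)-\phi (y,z)+\phi (z,z)-\phi (z,x)\ge 0,~~~\forall x,y,z\in C. \end{aligned}\tag{2.6}$$

We remark that if we set \(z=x\), \(x=y\) or \(y=z\) in (2.6), then a 2-skew-symmetric bifunction becomes a skew-symmetric bifunction.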

Theorem 2.1

[7] Let C be a nonempty closed convex subset of a real Hilbert space H. Let the bifunctions \(G: C\times C\longrightarrow {\mathbb {R}}\) and \(\phi :C\times C\rightarrow {\mathbb {R}}\) satisfy Assumption 2.1. For \(r>0\) and \(z\in H\), define a mapping \(T_{r}: H\rightarrow C\) as follows:

for all \(z\in H\). Then the following conclusions hold:

- (a)

\( T_{r}(z)\) is nonempty for each \(z\in H;\)

- (b)

\( T_{r}\) is single valued;

- (c)

\( T_{r}\) is a firmly nonexpansive mapping, i.e., for all \(z_{1},z_{2}\in H,\)

$$\begin{aligned} \Vert T_{r}z_{1}- T_{r}z_{2}\Vert ^{2}\le \langle T_{r}z_{1}- T_{r}z_{2},z_{1}-z_{2}\rangle ; \end{aligned}$$

- (d)

\( F( T_{r})=\mathrm{Sol(GMEP}\)(1.3));

- (e)

\(\mathrm{Sol(GMEP}\)(1.3)) is closed and convex.

Remark 2.1

It follows from Theorem 2.1(a)–(b) that

Further Theorem 2.1(c) implies the nonexpansivity of \(T_r\), i.e.,

Furthermore (2.7) implies the following inequality

3 Main result

We prove a strong convergence theorem for finding a common solution to GMEP(1.3) and fixed point problems for a nonexpansive mapping and for a finite family of k-strict pseudo-contraction mappings.

Theorem 3.1

Let C be a nonempty closed convex subset of a real Hilbert space H. Let the bifunction \(G: C\times C\longrightarrow {\mathbb {R}}\) satisfy Assumption 2.1(i), (iii), (v), (vii) and Assumption 2.2; let the bifunction \(\phi :C\times C\rightarrow {\mathbb {R}}\) be 2-skew-symmetric and satisfy Assumption 2.1 (v), (vii) and let \(f :C\rightarrow C\) be a \(\rho \)-contraction mapping. Let \(S: C\rightarrow H\) be a nonexpansive mapping and let \(A: C\rightarrow H\) be a monotone and Lipschitz continuous mapping with Lipschitz constant \(\lambda \). For each \(i=1,2,\ldots ,N\), let \(T_{i}:C\rightarrow H\) be a \(k_{i}\)-strict pseudo-contraction mapping and let \(\{\eta _{i}^{n}\}_{i=1}^{N}\) be a finite sequence of positive numbers such that \(\sum \nolimits _{i=1}^{N}\eta _{i}^{n}=1\) for all \(n\ge 0\). Assume that \(\Gamma =\mathrm{Sol}(\mathrm{GMEP}(1.3))\bigcap F(S)\bigcap (\bigcap \nolimits _{i=1}^{N}F(T_{i}))\ne \emptyset .\) Let the sequence \(\{x_n\}\) be generated by the iterative scheme:

for \(n\ge 0\), where \(\{r_n\} \subset [a,b] \subset (0,\lambda ^{-1})\) and \( \{\sigma _n\},\{\alpha _n\},\{\beta _n\},\{\gamma _n\}\) are sequences in (0, 1) satisfying the following conditions:

- (i)

\(\alpha _{n} +\beta _{n} +\gamma _{n}=1\), \(\liminf \nolimits _{n\rightarrow \infty }\beta _{n}> 0\) and \(\liminf \nolimits _{n\rightarrow \infty }\gamma _{n}> 0\);

- (ii)

\(0\le k_{i}\le \alpha _{n}\le l< 1\), \(\lim \nolimits _{n\rightarrow \infty }\alpha _{n}=l;\)

- (iii)

\(\lim \nolimits _{n\rightarrow \infty }\sigma _{n}=0\) and \(\sum \nolimits _{n=0}^{\infty }\sigma _{n}=\infty ;\)

- (iv)

\(\sum \nolimits _{n=1}^{\infty }\sum \nolimits _{i=1}^{N}|\eta _{i}^{n}-\eta _{i}^{n-1}| < \infty .\)

Then \(\{x_{n}\}\) converges strongly to a point \({\hat{x}}\in \Gamma ,\) where \({\hat{x}}= P_{\Gamma }f({\hat{x}}).\)

Proof

Set \(u_{n} := T_{r_{n}}(x_{n}-r_{n}Ay_{n})\) and \(z_{n} :=\alpha _{n}x_{n} +\beta _{n}ST_{r_{n}}(x_{n}-r_{n}Ay_{n})+ \gamma _{n}\sum _{i=1}^{N}\eta _{i}^{n}T_{i}x_{n}\), so that \(z_{n} =\alpha _{n}x_{n} +\beta _{n}Su_{n}+ \gamma _{n}\sum _{i=1}^{N}\eta _{i}^{n}T_{i}x_{n}\). Let \(p\in \Gamma \). Then we have

Further, using Remark 2.1, we have

Since A is monotone and Lipschitz continuous, and since \(p\in \mathrm{Sol}\)(GMEP(1.3)) and \(y_{n}\in C\), we have

Hence, using the above inequality and the monotonicity of A in (3.3), we obtain

Since G is 2-monotone and \(\phi \) is 2-skew-symmetric, (3.4) implies that

Next, using Lemma 2.6, we estimate

Now,

Denote \(W_{n}:= \sum \nolimits _{i=1}^{N}\eta _{i}^{n}T_{i}\). It follows from Lemma 2.5 that the mapping \(W_{n}: C\rightarrow H\) is a k-strict pseudo-contraction with \( k=\max \nolimits _{1\le i \le N}k_{i}\) and \(F(W_{n}) = \bigcap \nolimits _{i=1}^{N}F(T_{i})\); hence, using Lemma 2.6 and (3.5), we have

which implies

Hence, it follows from (3.6), (3.7) and (3.9) that

Since \( 1-\rho >0\) for \(\rho \in (0,1)\), it follows by mathematical induction that

for all \(n\ge 0\). Further, it follows from (3.11), (3.9) and (3.5) that the sequences \(\{x_{n}\}\), \(\{z_{n}\}\) and \(\{u_{n}\}\) are bounded. Again, we estimate \(\Vert x_{n+1}-{\hat{x}}\Vert ^{2}\) with \({\hat{x}}= {P_{\Gamma }}f({\hat{x}}).\) Since \({\hat{x}}\in \Gamma \subset C\), we have

Now,

where \(K := \sup _{n}2\Vert f(x_{n})-{\hat{x}}\Vert .\) It follows from (3.12), (3.13) and (3.8), with \({\hat{x}}\) in place of p, that

Now, we consider two cases on \(s_{n} := \Vert x_{n}-{\hat{x}}\Vert ^{2}\).

Case 1. Suppose that the sequence \(\{s_{n}\}\) is nonincreasing for all \( n\ge n_{0} ~(n_{0}\in {\mathbb {N}})\). Then, being bounded below by 0, it is convergent. Since \(\{r_n\} \subset [a,b] \subset (0,\lambda ^{-1})\), \(\lim \nolimits _{n\rightarrow \infty }\sigma _{n}=0\), \( \{\alpha _n\},\{\beta _n\},\{\gamma _n\}\) are sequences in (0, 1) such that \(\liminf \nolimits _{n\rightarrow \infty }\beta _{n}> 0\) and \(\liminf \nolimits _{n\rightarrow \infty }\gamma _{n}> 0\), and \(k\le \alpha _{n}\) for all n, (3.14) implies

This implies that

It follows from (3.16), (3.17), inequality

and

that

and

Since \(\{x_{n}\} \subset C\) is bounded, there exists a subsequence \(\{x_{n_{k}}\}\) of \(\{x_{n}\}\) such that \( x_{n_{k}}\rightharpoonup q\in C\) (C being closed and convex, hence weakly closed) and

Now, for each n, define the mapping \(V_{n}x:= \alpha _{n}x + (1-\alpha _{n})W_{n}x\) for all \(x\in C\). Since \(\alpha _{n}\in [k,1)\), Lemma 2.4 implies that \(V_{n}:C \rightarrow H\) is nonexpansive with \(F(V_{n})=F(W_{n})\). Further, we have

Taking limit \(n\rightarrow \infty \) and using (3.18), we get

Now, by Condition (iv), we may assume that \(\eta _{i}^{n}\rightarrow \eta _{i}\) as \(n\rightarrow \infty \) for every \(1\le i\le N\). It is easy to observe that each \(\eta _{i}>0\) and \(\sum \nolimits _{i=1}^{N}\eta _{i}=1.\) It follows from Lemma 2.5 that the mapping \(W:C\rightarrow H\) defined by \( Wx=(\sum \nolimits _{i=1}^{N}\eta _{i}T_{i})x\), \( \forall x\in C\) is a k-strict pseudo-contraction and \(F(W)=\bigcap \nolimits _{i=1}^{N}F(T_{i})\). Since \(\{x_{n}\}\) is bounded, it follows from Lemma 2.2, condition (iv) and

that

Since

it follows from (3.18) and (3.24) that

Again, since \(l\in [k,1)\) by condition (ii), Lemma 2.4 shows that the mapping \(V: C \rightarrow H\) defined by \( Vx:= lx+ (1-l)Wx\) for all \(x\in C\) is nonexpansive and \(F(V)=F(W).\) Hence, we have

It follows from (3.22), (3.24) and (3.26) that

Now, we prove \( q\in F(V)= F(W) = F(W_{n}) = \bigcap \nolimits _{i=1}^{N}F(T_{i})\). Assume that \( q\not \in F(V)\). Since \(x_{n_{k}}\rightharpoonup q\) and \(q\ne Vq\), from Opial condition, we have

which is a contradiction. Thus, we get \(q\in F(V) = F(W) = F(W_{n}) = \bigcap \nolimits _{i=1}^{N}F(T_{i}).\) It follows from (3.17) that the sequences \(\{x_{n}\}\) and \(\{u_{n}\}\) have the same asymptotic behaviour, and hence there is a subsequence \(\{u_{n_{k}}\}\) of \(\{u_{n}\}\) such that \(u_{n_{k}}\rightharpoonup q.\) Further, it follows from (3.17) and the Opial condition that \(q\in F(S)\). Next, we show that \(q\in \mathrm{Sol}\)(GMEP(1.3)).

It follows from (3.16) that sequences \(\{x_{n}\}\) and \(\{y_{n}\}\) both have the same asymptotic behaviour. Therefore, there exists a subsequence \(\{y_{n_{k}}\}\) of \(\{y_{n}\}\) such that \(y_{n_{k}}\rightharpoonup q.\) Now, the relation \( y_{n}= T_{r_{n}}(x_{n}-r_{n}Ax_{n})\) implies

which implies that

Hence,

For \(t\in (0,1]\) and \(y\in C\), let \( y_{t}:= ty+ (1-t)q\in C\). Since \(r_{n}\ge a\) for all n, we have

which implies, on taking limit \(k\rightarrow \infty \), that

Now,

Letting \(t\rightarrow 0^{+}\), we obtain, for each \(y\in C\),

which implies \(q\in \mathrm{Sol}\)(GMEP(1.3)). Thus \(q\in \Gamma .\) Now, it follows from (2.8) and (3.20) that

Since \(x_{n}\in C\), we have

and hence using \(\lim \nolimits _{n\rightarrow \infty }\sigma _{n}=0\), (3.16), (3.18), we have

Now, it follows from \(\sum \nolimits _{n=0}^{\infty }\sigma _{n}=\infty \), (3.15), (3.31), (3.32) and Lemma 2.7 that \(\lim \nolimits _{n\rightarrow \infty }s_{n}=0\). Thus \(\{x_{n}\}\) converges strongly to \({\hat{x}}= P_{\Gamma }f({\hat{x}}).\)

Case 2. Suppose that there exists a subsequence \(\{s_{k_{i}}\}\) of \(\{s_{k}\}\) such that \(s_{k_{i}}< s_{k_{i}+1}\) for all \(i \ge 0\). Then, according to Lemma 2.8, we can define a nondecreasing sequence \(\{m_{k}\} \subset {\mathbb {N}}\) such that \(m_{k}\rightarrow \infty \) as \( k\rightarrow \infty \) and \(\max \{s_{m_{k}},s_{k}\}\le s_{m_{k}+1}\) for all k. Since \(\{r_{k}\}\subset [a,b]\subset (0,\lambda ^{-1})\) for all \(k\ge 0\) and \( \{\alpha _k\},\{\beta _k\},\{\gamma _k\}\) are sequences in (0, 1) satisfying conditions (i)–(ii), it follows from (3.14) that

Further, following similar steps as in Case 1, we obtain

Since \(\{x_{k}\}\) is bounded and \(\lim \nolimits _{k\rightarrow \infty }\sigma _{k}=0\), it follows from (3.17), (3.18) and inequality

that

Since \(s_{m_{k}}\le s_{m_{k}+1}\) for all k, it follows from (3.15) that

Now, taking limits as \(k\rightarrow \infty \), we obtain \(s_{m_{k}+1}\rightarrow 0\) as \(k\rightarrow \infty \). Since \(s_{k}\le s_{m_{k}+1}\) for all k, it follows that \(s_{k}\rightarrow 0\) as \( k\rightarrow \infty .\) Hence \(x_{k}\rightarrow {\hat{x}}\) as \( k\rightarrow \infty .\) Thus, we have shown that the sequence \(\{x_{n}\}\) generated by the iterative algorithm (3.1) converges strongly to \({\hat{x}}= P_{\Gamma }f({\hat{x}}).\)\(\square \)

We give the following corollary which is an immediate consequence of Theorem 3.1.

Corollary 3.1

Let C be a nonempty closed convex subset of a real Hilbert space H. Let the bifunction \(G: C\times C\longrightarrow {\mathbb {R}}\) satisfy Assumption 2.1 (i), (iii), (v), (vii) and Assumption 2.2; let the bifunction \(\phi :C\times C\rightarrow {\mathbb {R}}\) be 2-skew-symmetric and satisfy Assumption 2.1 (v), (vii) and let \(f :C\rightarrow C\) be a \(\rho \)-contraction mapping. Let \(A: C\rightarrow H\) be a monotone and Lipschitz continuous mapping with Lipschitz constant \(\lambda \). For each \(i=1,2,\ldots ,N\), let \(T_{i}:C\rightarrow H\) be a nonexpansive mapping and let \(\{\eta _{i}^{n}\}_{i=1}^{N}\) be a finite sequence of positive numbers such that \(\sum \nolimits _{i=1}^{N}\eta _{i}^{n}=1\) for all \(n\ge 0\). Assume that \(\Gamma _{1}=\mathrm{Sol}(\mathrm{GMEP}(1.3))\bigcap (\bigcap \nolimits _{i=1}^{N}F(T_{i}))\ne \emptyset .\) Let the sequence \(\{x_n\}\) be generated by the iterative scheme:

for \(n\ge 0\), where \(\{r_n\} \subset [a,b] \subset (0,\lambda ^{-1})\) and \( \{\sigma _n\},\{\alpha _n\},\{\beta _n\},\{\gamma _n\}\) are sequences in (0, 1) satisfying the following conditions:

- (i)

\(\alpha _{n} +\beta _{n} +\gamma _{n}=1\), \(\liminf \nolimits _{n\rightarrow \infty }\beta _{n}> 0\) and \(\liminf \nolimits _{n\rightarrow \infty }\gamma _{n}> 0;\)

- (ii)

\(\lim \nolimits _{n\rightarrow \infty }\sigma _{n}=0\) and \(\sum \nolimits _{n=0}^{\infty }\sigma _{n}=\infty ;\)

- (iii)

\(\sum \nolimits _{n=1}^{\infty }\sum \nolimits _{i=1}^{N}|\eta _{i}^{n}-\eta _{i}^{n-1}| < \infty .\)

Then \(\{x_{n}\}\) converges strongly to a point \({\hat{x}}\in \Gamma _{1},\) where \({\hat{x}}= P_{\Gamma _{1}}f({\hat{x}}).\)

Proof

Setting \(S=I\), the identity mapping on C, and \(k_{i}=0\) for \(i=1,2,\ldots ,N\) in Theorem 3.1, we obtain the desired result. \(\square \)

4 Numerical example

We give a numerical example which illustrates Theorem 3.1.

Example 4.1

Let \(H={\mathbb {R}}\), \(C= [-1,1]\) and \(N=3\). Define \(G: C\times C\longrightarrow {\mathbb {R}}\) and \(\phi :C\times C\rightarrow {\mathbb {R}}\) by \(G(x,y)= x(y-x)\) and \(\phi (x,y)= y-x\); let the mapping \(f:C\rightarrow C\) be defined by \(f(x)=\frac{x}{5}, \forall x \in C\); let the mapping \(A:C\rightarrow H\) be defined by \(A(x)=3x+ 1, \forall x\in C\); let the mapping \(T_{i}: C\rightarrow H\) be defined by \(T_{i}x=-(1+i)x\) for each \(i= 1,2,3\); and let the mapping \(S: C\rightarrow H\) be defined by \(Sx=\frac{x}{4}, \forall x \in C\). Set \(\sigma _n=\frac{1}{10n}\) and \(r_{n}=\frac{1}{5},~\forall n\ge 0\), and \(\eta _{1}=\eta _{2}=\eta _{3}=\frac{1}{3}\). Then the sequence \(\{x_n\}\) in C generated by the iterative schemes:

converges to the point \({\hat{x}}=0\in \Gamma .\)

Proof

It is easy to prove that the bifunctions G and \(\phi \) satisfy Assumption 2.1 (i), (iii), (v), (vii) and Assumption 2.2, and Assumption 2.1 (v), (vii), respectively. Choose \(\alpha _{n}=0.7+\frac{0.1}{n^2}\), \(\beta _{n}= 0.2-\frac{0.2}{n^2}\) and \(\gamma _{n}=0.1+\frac{0.1}{n^2}\) for all \(n\ge 0\); then it is easy to observe that the sequences \(\{\alpha _n\},\{\beta _n\},\{\gamma _n\}\) are in (0, 1) with \(\alpha _{n} +\beta _{n} +\gamma _{n}=1\) and satisfy the conditions \(\liminf _{n\rightarrow \infty }\beta _{n}> 0\) and \(\liminf _{n\rightarrow \infty }\gamma _{n}> 0\). Further, it is easy to prove that each \(T_{i}\) is a \(k_{i}\)-strict pseudo-contraction with \(k_{1}=\frac{1}{3}\), \(k_{2}=\frac{1}{2}\) and \(k_{3}=\frac{3}{5}\), and \(F(T_{i})=\{0\}\). Therefore \(k=\max \{k_{1},k_{2},k_{3}\}=\frac{3}{5}\). Also, S is a nonexpansive mapping with \(F(S)=\{0\}\). Moreover, for \(x\in C\) we have \(G(x,y)+\langle Ax,y-x\rangle +\phi (y,x)-\phi (x,x)=4x(y-x)\), which is nonnegative for all \(y\in C\) only when \(x=0\); hence Sol(GMEP(1.3))\( =\{0\}\). Thus \(\Gamma = \) Sol(GMEP(1.3))\(\bigcap F(S)\bigcap (\bigcap \nolimits _{i=1}^{N}F(T_{i}))=\{0\} \ne \emptyset .\) After simplification, the iterative schemes (4.1) reduce to the following:

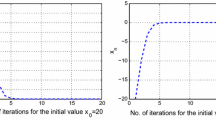

Next, using the software Matlab 7.8, we obtain the following figure and table, which show that \(\{x_n\}\) converges to \({\hat{x}}=0\).

Convergence of \(\{x_n\}\)

| No. of iterations | \(x_n\) (\(x_1=-1\)) | No. of iterations | \(x_n\) (\(x_1=-1\)) | No. of iterations | \(x_n\) (\(x_1=1\)) | No. of iterations | \(x_n\) (\(x_1=1\)) |
|---|---|---|---|---|---|---|---|
| 1 | −0.600000 | 14 | −0.000784 | 1 | 0.600000 | 14 | 0.000784 |
| 2 | −0.360000 | 15 | −0.000470 | 2 | 0.360000 | 15 | 0.000470 |
| 3 | −0.216000 | 16 | −0.000282 | 3 | 0.216000 | 16 | 0.000282 |
| 4 | −0.129600 | 17 | −0.000169 | 4 | 0.129600 | 17 | 0.000169 |
| 5 | −0.077760 | 18 | −0.000102 | 5 | 0.077760 | 18 | 0.000102 |
| 6 | −0.046656 | 19 | −0.000061 | 6 | 0.046656 | 19 | 0.000061 |
| 7 | −0.027994 | 20 | −0.000037 | 7 | 0.027994 | 20 | 0.000037 |
| 8 | −0.016796 | 21 | −0.000022 | 8 | 0.016796 | 21 | 0.000022 |
| 9 | −0.010078 | 22 | −0.000013 | 9 | 0.010078 | 22 | 0.000013 |
| 10 | −0.006047 | 23 | −0.000008 | 10 | 0.006047 | 23 | 0.000008 |
| 11 | −0.003628 | 24 | −0.000005 | 11 | 0.003628 | 24 | 0.000005 |
| 12 | −0.002177 | 25 | −0.000003 | 12 | 0.002177 | 25 | 0.000003 |
| 13 | −0.001306 | 26 | −0.000002 | 13 | 0.001306 | 26 | 0.000002 |
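The tabulated values decay geometrically: to the six printed decimals, the entry at iteration n equals \(\pm 0.6^{n}\), i.e., the distance to \({\hat{x}}=0\) shrinks by a factor of 0.6 per iteration. The short check below only re-reads the table (the \(x_1=1\) column; the other column is its negative). It does not re-run the reduced scheme, which appears above only as an image.

```python
# x_n for the starting point x_1 = 1, copied from the table above.
table = {1: 0.600000, 2: 0.360000, 3: 0.216000, 4: 0.129600, 5: 0.077760,
         6: 0.046656, 7: 0.027994, 8: 0.016796, 9: 0.010078, 10: 0.006047,
         11: 0.003628, 12: 0.002177, 13: 0.001306, 14: 0.000784, 15: 0.000470,
         16: 0.000282, 17: 0.000169, 18: 0.000102, 19: 0.000061, 20: 0.000037,
         21: 0.000022, 22: 0.000013, 23: 0.000008, 24: 0.000005, 25: 0.000003,
         26: 0.000002}

# Each entry agrees with 0.6**n once rounded to the six printed decimals.
matches = all(abs(round(0.6 ** n, 6) - v) < 1e-12 for n, v in table.items())
print(matches)
```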

This completes the proof. \(\square \)
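Several parameter requirements of Theorem 3.1 can be checked mechanically for Example 4.1. The sketch below assumes that the sequence \(\frac{1}{10n}\) in the statement plays the role of \(\sigma _n\) (since \(\alpha _n\) is chosen separately in the proof), evaluates the control sequences for \(n\ge 1\), where \(\frac{1}{n^2}\) is defined, and uses \(k=\frac{3}{5}\) as the largest \(k_i\). It confirms that \(A(x)=3x+1\) has Lipschitz constant 3, so \(r_n=\frac{1}{5}\) lies in \((0,\frac{1}{3})\); that \(\alpha _n+\beta _n+\gamma _n=1\) and \(k\le \alpha _n<1\); and that the partial sums of \(\sigma _n\) keep growing, like \(\frac{\ln n}{10}\), although \(\sigma _n\rightarrow 0\).

```python
from fractions import Fraction as Fr
from itertools import combinations


def A(x):
    return 3 * x + 1                         # mapping A of Example 4.1


grid = [Fr(i, 10) for i in range(-10, 11)]   # rational points of C = [-1, 1]
lip_A = max(abs(A(x) - A(y)) / abs(x - y) for x, y in combinations(grid, 2))
r = Fr(1, 5)                                 # r_n = 1/5 for all n
k_max = Fr(3, 5)                             # largest k_i in Example 4.1


def alpha(n):
    return Fr(7, 10) + Fr(1, 10 * n * n)


def beta(n):
    return Fr(2, 10) - Fr(2, 10 * n * n)


def gamma(n):
    return Fr(1, 10) + Fr(1, 10 * n * n)


sums_to_one = all(alpha(n) + beta(n) + gamma(n) == 1 for n in range(1, 1001))
alpha_ok = all(k_max <= alpha(n) < 1 for n in range(1, 1001))

# Assumed sigma_n = 1/(10 n): tends to 0, but its partial sums grow like (ln n)/10.
partial = sum(1.0 / (10 * n) for n in range(1, 100_001))
print(lip_A, 0 < r < 1 / lip_A, sums_to_one, alpha_ok, partial)
```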

5 Conclusion

We introduced an extra-gradient iterative method for finding a common solution to a generalized mixed equilibrium problem and fixed point problems for a nonexpansive mapping and for a finite family of k-strict pseudo-contraction mappings in Hilbert space, and proved the strong convergence of the sequences generated by the iterative method. A numerical example is given to illustrate Theorem 3.1. A natural direction for further research is to extend the iterative method presented in this paper to these problems in Banach spaces, and to the case when A is a multi-valued mapping.

References

[1] Baiocchi, C., Capelo, A.: Variational and Quasi-Variational Inequalities. Wiley, New York (1984)

[2] Blum, E., Oettli, W.: From optimization and variational inequalities to equilibrium problems. Math. Stud. 63, 123–145 (1994)

[3] Browder, F.E.: Convergence of approximants to fixed points of nonexpansive nonlinear mappings in Banach spaces. Arch. Ration. Mech. Anal. 24, 82–90 (1967)

[4] Browder, F.E., Petryshyn, W.V.: Construction of fixed points of nonlinear mappings in Hilbert spaces. J. Math. Anal. Appl. 20, 197–228 (1967)

[5] Daniele, P., Giannessi, F., Maugeri, A. (eds.): Equilibrium Problems and Variational Models. Nonconvex Optimization and Its Applications, vol. 68. Kluwer Academic Publishers, Norwell (2003)

[6] Djafari-Rouhani, B., Kazmi, K.R., Rizvi, S.H.: A hybrid-extragradient-convex approximation method for a system of unrelated mixed equilibrium problems. Trans. Math. Program. Appl. 1(8), 82–95 (2013)

[7] Djafari-Rouhani, B., Farid, M., Kazmi, K.R.: Common solution to generalized mixed equilibrium problem and fixed point problem for a nonexpansive semigroup in Hilbert space. J. Korean Math. Soc. 53(1), 89–114 (2016)

[8] Duvaut, G., Lions, J.L.: Inequalities in Mechanics and Physics. Springer, Berlin (1976)

[9] Hartman, P., Stampacchia, G.: On some non-linear elliptic differential–functional equations. Acta Math. 115, 271–310 (1966)

[10] Kazmi, K.R., Rizvi, S.H., Ali, R.: A hybrid iterative method without extrapolating step for solving mixed equilibrium problem. Creat. Math. Inform. 24(2), 163–170 (2015)

[11] Kikuchi, N., Oden, J.T.: Contact Problems in Elasticity. SIAM, Philadelphia (1988)

[12] Korpelevich, G.M.: The extragradient method for finding saddle points and other problems. Matecon 12, 747–756 (1976)

[13] Marino, G., Xu, H.K.: Weak and strong convergence theorems for \(k\)-strict pseudo-contractions in Hilbert spaces. J. Math. Anal. Appl. 329, 336–349 (2007)

[14] Moudafi, A.: Second order differential proximal methods for equilibrium problems. J. Inequal. Pure Appl. Math. 4(1), 18 (2003)

[15] Moudafi, A., Thera, M.: Proximal and Dynamical Approaches to Equilibrium Problems. Lecture Notes in Economics and Mathematical Systems, vol. 477, pp. 187–201. Springer, New York (1999)

[16] Nadezhkina, N., Takahashi, W.: Weak convergence theorem by an extragradient method for nonexpansive mappings and monotone mappings. J. Optim. Theory Appl. 128, 191–201 (2006)

[17] Nadezhkina, N., Takahashi, W.: Strong convergence theorem by a hybrid method for nonexpansive mappings and Lipschitz continuous monotone mappings. SIAM J. Optim. 16(4), 1230–1241 (2006)

[18] Nakajo, K., Takahashi, W.: Strong convergence theorems for nonexpansive mappings and nonexpansive semigroups. J. Math. Anal. Appl. 279, 372–379 (2003)

[19] Oden, J.T., Pires, E.B.: Contact problems in elastostatics with nonlocal friction laws, TICOM, Report 81–12. University of Texas, Austin (1981)

[20] Osilike, M.O., Igbokwe, D.I.: Weak and strong convergence theorems for fixed points of pseudo-contractions and solutions of monotone type operator equations. Comput. Math. Appl. 40, 559–567 (2000)

[21] Scherzer, O.: Convergence criteria of iterative methods based on Landweber iteration for solving nonlinear problems. J. Math. Anal. Appl. 194, 911–933 (1995)

[22] Xu, H.K.: Iterative methods for strict pseudo-contractions in Hilbert spaces. Nonlinear Anal. 67, 2258–2271 (2007)

[23] Xu, W., Wang, Y.: Strong convergence of the iterative methods for hierarchical fixed point problems of an infinite family of strictly nonself pseudocontractions. Abstr. Appl. Anal. 2012, 457024 (2012). https://doi.org/10.1155/2012/457024

[24] Zegeye, H., Boikanyo, A.: Approximating solutions of variational inequalities and fixed points of nonexpansive semigroups. Adv. Nonlinear Var. Inequal. 20, 26–40 (2017)

[25] Zhou, H.Y.: Convergence theorems of fixed points for \(k\)-strict pseudo-contractions in Hilbert space. Nonlinear Anal. 69, 456–462 (2008)

Acknowledgements

The authors are very grateful to the anonymous referees for their critical comments, which led to substantial improvements in the original version of the manuscript.


Cite this article

Kazmi, K.R., Yousuf, S. Common solution to generalized mixed equilibrium problem and fixed point problems in Hilbert space. RACSAM 113, 3699–3715 (2019). https://doi.org/10.1007/s13398-019-00725-1


DOI: https://doi.org/10.1007/s13398-019-00725-1

Keywords

- Generalized mixed equilibrium problem

- Monotone mapping

- Lipschitz continuous mapping

- k-strict pseudo-contraction mapping

- Extra-gradient iterative method