Abstract

Microarchitectural timing channels expose hidden hardware states though timing. We survey recent attacks that exploit microarchitectural features in shared hardware, especially as they are relevant for cloud computing. We classify types of attacks according to a taxonomy of the shared resources leveraged for such attacks. Moreover, we take a detailed look at attacks used against shared caches. We survey existing countermeasures. We finally discuss trends in attacks, challenges to combating them, and future directions, especially with respect to hardware support.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Computers are increasingly handling sensitive data (banking, voting), while at the same time we consolidate more services, sensitive or not, on a single hardware platform. This trend is driven by both cost savings and convenience. The most visible example is the cloud computing—infrastructure-as-a-service (IaaS) cloud computing supports multiple virtual machines (VMs) on a hardware platform managed by a virtual machine monitor (VMM) or hypervisor. These co-resident VMs are mutually distrusting: high-performance computing software may run alongside a data centre for financial markets, requiring the platform to ensure confidentiality and integrity of VMs. The cloud computing platform also has availability requirements: service providers have service-level agreements (SLAs) which specify availability targets, and malicious VMs being able to deny service to a co-resident VM could be costly to the service provider.

The last decade has seen significant progress in achieving such isolation. Specifically, the seL4 microkernel [93] has a proof of binary-level functional correctness, as well as proofs of (spatial) confidentiality, availability and integrity enforcement [94]. Thus, it seems that the goals of strong isolation are now achievable, although this has not yet made its way into mainstream hypervisors.

However, even the strong assurance provided by the formal proofs about information flow in seL4 remain restricted to spatial isolation and limited forms of temporal isolation via coarse-grained deterministic scheduling. While they, in principle, include covert storage channels [118], there exist other kinds of channels that they do not cover that must be addressed informally.

Stealing secrets solely through hardware means (for example, through differential power analysis [95, 135]) has an extensive treatment in the literature [15, 96]. While some systems, such as smartcard processors, are extensively hardened against physical attacks, some degree of trust in the physical environment will probably always be required [109, 114].

One area that has, until recently, received comparatively little attention is leakage at the interface between software and hardware. While hardware manufacturers have generally succeeded in hiding the internal CPU architecture from programmers, at least for functional behaviour, its timing behaviour is highly visible. Over the past decade, such microarchitectural timing channels have received more and more attention, from the recognition that they can be used to attack cryptographic algorithms [25, 124, 129], to demonstrated attacks between virtual machines [107, 133, 169, 180, 181]. Some recommendations from the literature have already been applied by cloud providers, e.g. Microsoft’s Azure disables Hyperthreading in their cloud offerings due to the threat of introducing unpredictability and side-channel attacks [110].

We argue that microarchitectural timing channels are a sufficiently distinct, and important, phenomenon to warrant investigation in their own right. We therefore summarise all microarchitectural attacks known to date, alongside existing mitigation strategies, and develop a taxonomy based on both the degree of hardware sharing and concurrency involved. From this, we draw insights regarding our current state of knowledge, predict likely future avenues of attack and suggest fruitful directions for mitigation development.

Acıiçmez and Koç [3] summarised the microarchitectural attacks between threads on a single core known at the time. This field is moving quickly, and many more attacks have since been published, also exploiting resources shared between cores and across packages. Given the prevalence of cloud computing, and the more recently demonstrated cross-VM attacks [83, 107, 169], we include in the scope of this survey all levels of the system sharing hierarchy, including the system interconnect, and other resources shared between cores (and even packages).

We also include denial-of-service (DoS) attacks, as this is an active concern in co-tenanted systems, and mechanisms introduced to ensure quality of service (QoS) for clients by partitioning hardware between VMs will likely also be useful in eliminating side and covert channels due to sharing. While we include some theoretical work of obvious applicability, we focus principally on practical and above all demonstrated attacks and countermeasures.

1.1 Structure of this paper

Section 2 presents a brief introduction to timing channels, and the architectural features that lead to information leakage. Section 3 presents the criteria we use to arrange the attacks and countermeasures discussed in the remainder of the paper. Section 4 covers all attacks published to date, while Sect. 5 does the same for countermeasures. In Sect. 6, we apply our taxonomy to summarise progress to date in the field and to suggest likely new avenues for both attack and defence.

2 Background

2.1 Types of channels

Interest in information leakage and secure data processing was historically centred on sensitive, and particularly cryptographic, military and government systems [44], although many, including Lampson [100], recognised the problems faced by the tenant of an untrusted commercial computing platform, of the sort that is now commonplace. The US government’s “Orange Book” standard [45], for example, collected requirements for systems operating at various security classification levels. This document introduced standards for information leakage, in the form of limits on channel bandwidth and, while these were seldom if ever actually achieved, this approach strongly influenced the direction of later work.

Leakage channels are often classified according to the threat model: side channels refer to the accidental leakage of sensitive data (for example an encryption key) by a trusted party, while covert channels are those exploited by a Trojan to deliberately leak information. Covert channels are only of interest for systems that do not (or cannot) trust internal components, and thus have highly restrictive information-flow policies (such as multilevel secure systems). In general-purpose systems, we are therefore mostly concerned with side channels.

Channels are also typically classified as either storage or timing channels. In contrast to storage channels, timing channels are exploited through timing variation [134]. While Wray [163] argued convincingly that the distinction is fundamentally arbitrary, it nonetheless remains useful in practice. In current usage, storage channels are those that exploit some functional aspect of the system—something that is directly visible in software, such as a register value or the return value of a system call. Timing channels, in contrast, can occur even when the functional behaviour of the system is completely understood, and even, as in the case of seL4, with a formal proof of the absence of storage channels [118]. The precise temporal behaviour of a system is rarely, if ever, formally specified.

In this work, we are concerned with microarchitectural timing channels.

Some previous publications classify cryptanalytic cache-based side-channel attacks as time driven, trace driven or access driven, based on the type of information the attacker learns about a victim cipher [3, 121]. In trace-driven attacks, the attacker learns the outcome of each of the victim’s memory accesses in terms of cache hits and misses [2, 56, 126]. Due to the difficulty of extracting the trace of cache hits and misses in software, trace-driven attacks are mostly applied in hardware and are thus outside the scope of this work.

Time-driven attacks, like trace-driven attacks, observe cache hits and misses. However, rather than generating a trace, the attacks observe the aggregate number of cache hits and misses, typically through an indirect measurement of the total execution time of the victim. Examples of such attacks include the Bernstein attack on AES [25] and the Evict+Time attack of Osvik et al. [125].

Access-driven attacks, such as Prime+Probe [125, 129], observe partial information on the addresses the victim accesses.

This taxonomy is very useful for analysing the power of an attacker with respect to a specific cipher implementation. However, it provides little insight on the attack mechanisms and their applicability in various system configurations. As such, this taxonomy is mostly orthogonal to ours.

2.2 Relevant microarchitecture

The (instruction set) architecture (ISA) of a processor is a functional specification of its programming interface. It abstracts over functionally irrelevant implementation details, such as pipelines, issue slots and caches, which constitute the microarchitecture. A particular ISA, such as the ubiquitous x86 architecture, can have multiple, successive or concurrent, microarchitectural implementations.

While functionally transparent, the microarchitecture contains hidden state, which can be observed in the timing of program execution, typically as a result of contention for resources in the hidden state. Such contention can be between processes (external contention) or within a process (internal contention).

2.2.1 Caches

Caches are an essential feature of a modern architecture, as the clock rate of processors and the latency of memory have diverged dramatically in the course of the last three decades. A small quantity of fast (but expensive) memory effectively hides the latency of large and slow (but cheap) main memory. Cache effectiveness relies critically on the hit rate, the fraction of requests that are satisfied from the cache. Due to the large difference in latency, a small decrease in hit rate leads to a much larger decrease in performance. Cache-based side-channel attacks exploit this variation to detect contention for space in the cache, both between processes (as in a Prime+Probe attack, see Sect. 4.1.1) and within a process (Sect. 4.3.2).

A few internal details are needed to understand such attacks:

Cache lines In order to exploit spatial locality, to cluster memory operations and to limit implementation complexity, caches are divided into lines. A cache line holds one aligned, power-of-two-sized block of adjacent bytes loaded from memory. If any byte needs to be replaced (evicted to make room for another), the entire line is reloaded.

Associativity Cache design is a trade-off between complexity (and hence speed) and the rate of conflict misses. Ideally, any memory location could be placed in any cache line, and an n-line cache could thus hold any n lines from memory. Such a fully associative cache is ideal in theory, as it can always be used to its full capacity—misses occur only when there is no free space in the cache, i.e. all misses are capacity misses. However, this cache architecture requires that all lines are matched in parallel to check for a hit, which increases complexity and energy consumption and limits speed. Fully associative designs are therefore limited to small, local caches, such as translation look-aside buffers (TLBs).

The other extreme is the direct-mapped cache, where each memory location can be held by exactly one cache line, determined by the cache index function, typically a consecutive string of bits taken from the address, although more recent systems employ a more complex hash function [74, 112, 170]. Two memory locations that map to the same line cannot be cached at the same time—loading one will evict the other, resulting in a conflict miss even though the cache may have unused lines.

In practice, a compromised design—the set-associative cache, is usually employed. Here, the cache is divided into small sets (usually of between 2 and 24 lines), within which addresses are matched in parallel, as for a fully associative cache. Which set an address maps to is calculated as for a direct-mapped cache—as a function of its address (again, usually just a string of consecutive bits). A cache with n-line sets is called n-way associative. Note that the direct-mapped and fully associative caches are special cases, with 1-way and N-way associativity, respectively (where N is the number of lines in the cache).

For both the direct-mapped and set-associative caches, the predictable map from address to line is exploited. In attacks, it is used to infer cache sets accessed an algorithm under attack (Sect. 4.1.1). In cache colouring (Sect. 5.5.4) it is exploited to ensure that an attacker and its potential victim never share sets, thus cannot conflict.

Cache hierarchy As CPU cycle times and RAM latencies have continued to diverge, architects have used a growing number of hierarchical caches—each lower in the hierarchy being larger and slower than the one above. The size of each level is carefully chosen to balance service time to the next highest (faster) level, with the need to maintain hit rate.

Flushing caches is a simple software method for mitigating cache-based channels, measured by direct and indirect cost. The direct cost contains writing back dirty cache lines and invalidating all cache lines. The indirect cost represents the performance degradation of a program due to starting with cold caches after every context switch. Therefore, the cache level that an attack targets is important when considering countermeasures: as the L1 is relatively small, it can be flushed on infrequent events, such as timesliced VM switches, with minimal performance penalty. Flushing the larger L2 or L3 caches tends to be expensive (in terms of indirect cost), but they might be protected by other means (e.g. colouring, Sect. 5.5.4). This hierarchy becomes more prominent on multiprocessor and multicore machines—see Sect. 2.2.2.

Virtual versus physical addressing Caches may be indexed by either the virtual or the physical addresses. Virtual address indexing is generally only used in L1 caches, where the virtual-to-physical translation is not yet available, while physically indexed caches are used at the outer levels. This distinction is important for cache colouring (Sect. 5.5.4), as virtual address assignment is not under operating system (OS) control, and thus, virtually indexed caches cannot be coloured.

Architects employ more than just data and instruction caches. The TLB, for example, caches virtual-to-physical address translations, avoiding an expensive table walk on every memory access. The branch target buffer (BTB) holds the targets of recently taken conditional branches, to improve the accuracy of branch prediction. The trace cache on the Intel Netburst (Pentium 4) microarchitecture was used to hold the micro-ops corresponding to previously decoded instructions. All these hardware features are forms of shared caches indexed by virtual addresses. All are vulnerable to exploitation—by exploiting either internal or external contention.

2.2.2 Multiprocessor and multicore

Traditional symmetric multiprocessing (SMP) and modern multicore systems complicate the pattern of resource sharing and allow both new attacks and new defensive strategies. Resources are shared hierarchically between cores in the same package (which may be further partitioned into either groups or pseudo-cores, as in the AMD Bulldozer architecture) and between packages. This sharing allows real-time probing of a shared resource (e.g. a cache) and also makes it possible to isolate mutually distrusting applications by using pairs of cores with minimal shared hardware (e.g. in separate packages).

The cache hierarchy is matched to the layout of cores. In a contemporary, server-style multiprocessor system with, for example, 4 packages (processors) and 8 cores per package, there would usually be 3 levels of cache: private (per-core) L1 caches (split into data and instruction caches), unified L2 that is core private (e.g. in the Intel Core i7 2600) or shared between a small cluster of cores (e.g. 2 in the AMD FX-8150, Bulldozer architecture), and one large L3 per package, which may be non-uniformly partitioned between cores, as in the Intel Sandy Bridge and later microarchitectures.

2.2.3 Memory controllers

Contemporary server-class multiprocessor systems almost universally feature non-uniform memory access (NUMA). There are typically between one and four memory controllers per package, with those in remote packages accessible over the system interconnect. Memory bandwidth is always under-provisioned: the total load/store bandwidth that can be generated by all cores is greater than that available. Thus, a subset of the cores, working together, can saturate the system memory controllers, filling internal buses, therefore dramatically increasing the latency of memory loads and stores for all processes [117].

Besides potential use as a covert channel, this allows a DoS attack between untrusting processes (or VMs) and needs to be addressed to ensure QoS. The same effect is visible at other levels: the cores in a package can easily saturate that package’s last-level cache (LLC); although, in this case, the effect is localised per package.

2.2.4 Buses and interconnects

Historically, systems used a single bus to connect CPUs, memory and device buses (e.g. PCI). This bus could easily be saturated by a single bus agent, effecting a DoS for other users (Sect. 4.6.1). Contemporary interconnects are more sophisticated, generally forming a network among components on a chip, called networks-on-chip. These network components are packet buffers, crossbar switches, and individual ports and channels. The interconnect networks are still vulnerable to saturation [159], being under-provisioned, and to new attacks, such as routing-table hijacking [138]. Contention for memory controllers has so far only been exploited for DoS (Sect. 4.5), but exploiting them as covert channels would not be hard.

2.2.5 Hardware multithreading

The advent of simultaneous multithreading (SMT), such as Intel’s Hyperthreading, exposed resource contention at a finer level than ever before. In SMT, multiple execution contexts (threads) are active at once, with their own private state, such as register sets. This can increase utilisation, by allowing a second thread to use whatever execution resources are left idle by the first. The number of concurrent contexts ranges from 2 (in Intel’s Hyperthreading) to 8 (in IBM’s POWER8). In SMT, instructions from multiple threads are issued together and compete for access to the CPU’s functional units.

A closely related technique, temporal multithreading (TMT), instead executes only one thread at a time, but rapidly switches between them, either on a fixed schedule (e.g. Sun/Oracle’s T1 and later) or on a high-latency event (an L3 cache miss on Intel’s Montecito Itanium); later Itanium processors switched from TMT to SMT.

Both hardware thread systems (SMT and TMT) expose contention within the execution core. In SMT, the threads effectively compete in real time for access to functional units, the L1 cache, and speculation resources (such as the BTB). This is similar to the real-time sharing that occurs between separate cores, but includes all levels of the architecture. In contrast, TMT is effectively a very fast version of the OS’s context switch, and attackers rely on persistent state effects (such as cache pollution). The fine-grained nature though should allow events with a much shorter lifetime, such as pipeline bubbles, to be observed. SMT has been exploited in known attacks (Sects. 4.2.1, 4.3.1), while TMT, so far, has not. (Presumably because it is not widespread in contemporary processors.)

2.2.6 Pipelines

Instructions are rarely completed in a single CPU cycle. Instead, they are broken into a series of operations, which may be handled by a number of different functional units (e.g. the arithmetic logic unit, or the load/store unit). The very fine-grained sharing imposed by hardware multithreading (Sect. 2.2.5) makes pipeline effects (such as stalls) and contention on non-uniformly distributed resources (such as floating-point-capable pipelines) visible. This has been exploited (Sect. 4.2.1).

2.2.7 The sharing hierarchy

Figure 1 gathers various of the contended resources we have described, grouped by the degree of sharing, for a representative contemporary system (e.g. Intel Nehalem). The lowest layer, labelled system shared, contains the interconnect (Sect. 2.2.4), which is shared between all processes in the system, while the highest layer contains the BTB (Sect. 2.2.1), and the pipelines and functional units (Sect. 2.2.6); these are thread shared—only shared between hardware threads (Sect. 2.2.5) on the same core. The intermediate layers are more broadly shared, the lower they are: core-shared resources, such as the L1/L2 caches and TLB; package-shared resources, the L3 cache; and the NUMA- shared memory controllers (per package, but globally accessed, Sect. 2.2.3).

In Table 1, we survey published attacks at each level. Those at higher levels tend to achieve higher severity by exploiting the higher-precision information available, while those at lower levels (e.g. the interconnect) are cruder, mostly being DoS. Simultaneously, thanks to the smaller degree of sharing, the thread-shared resources are the easiest to protect [by disabling Hyperthreading (Sect. 5.5.1), for example].

2.3 Time and exploitation

Exploiting a timing channel naturally requires some way of measuring the passage of time. This can be achieved with access to real wall-clock time, but any monotonically increasing counter can be used. A timing channel can be viewed, abstractly, as a pair of clocks [163]. If the relative rate of these two clocks varies in some way that depends on supposedly hidden state, a timing channel exists. These clocks need not be actual time sources; they are simply sequences of events, e.g. real clock ticks, network packets, CPU instructions being retired.

In theory, it should be possible to prevent the exploitation of any timing channel, without actually eliminating the underlying contention, by ensuring that all clocks visible to a process are either synchronised (as in deterministic execution or instruction-based scheduling, see Sect. 5.3.1), or sufficiently coarse (or noisy) that they are of no use for precise measurements (as in fuzzy time, see Sect. 5.2). In practice, this approach is very restrictive and makes it very difficult to incorporate real-world interactions, including networking.

3 Taxonomy

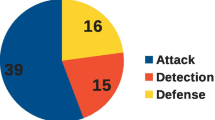

We arrange the attacks and countermeasures that we will explore in the next two sections along two axes: Firstly, according to the sharing levels indicated in Fig. 1, and secondly by the degree of concurrency used to exploit the vulnerability.

As we shall see, the types of attack possible vary with the level of sharing. At the top level, the attacker has very fine-grained access to the victim’s state, while at the level of the bus, nothing more than detecting throughput variation is possible. The degree of concurrency, either full concurrency (i.e. multicore), timesliced execution on a single core, or hardware threading (e.g. SMT, which is intermediate), also varies predictably with level: the lower-level attacks require true concurrency, as the bus has no real persistent state. Likewise, the top-level (fine-grained) attacks also require a high degree of concurrency and are usually more effective under SMT than time slicing, as the components involved (L1, TLB, BTB) have relatively small states that are rapidly overwritten. The intermediate levels (those targeting the L3 cache, for example) are exploitable at a relatively coarse granularity, thanks to the much larger persistent state. The attacks are presented in Table 1. Table 2 lists cache-based side-channel attacks due to resource contention between processes or VMs in more detail, as they form the largest class of known attacks.

Table 3 presents the known countermeasures, arranged in the same taxonomy. The shaded cells indicate where known attacks exist, giving a visual impression of the coverage of known countermeasures against known attacks. The type of countermeasure possible naturally varies with the physical and temporal sharing level. Tables 1 and 3 link to the sections in the text where the relevant attacks or countermeasures are discussed in detail. In Table 4, we list the known countermeasures that are deployed on current systems, based on published information. However, our list may not be complete, as product details may not be fully disclosed. We note that developers of cryptographic packages usually provide patches for published attacks.

4 Attacks

This section presents all known microarchitectural timing attacks. Section 4.1 summarises the common strategies used, while Sect. 4.2 through Sect. 4.6 list attacks, arranged first by sharing level (thread shared through system shared) and then by degree of temporal concurrency used in the reported exploit, from truly concurrent attacks exploiting multicores, through hardware-thread-based attacks, to those only requiring coarse interleaving (e.g. pre-emptive multitasking). Refer to Table 1 for an overview.

4.1 Attack types

4.1.1 Exploiting caches

Prime and probe (Prime+Probe) This technique detects the eviction of the attacker’s working set by the victim: The attacker first primes the cache by filling one or more sets with its own lines. Once the victim has executed, the attacker probes by timing accesses to its previously loaded lines, to see if any were evicted. If so, the victim must have touched an address that maps to the same set.

Flush and reload (Flush+Reload ) This is the inverse of prime and probe and relies on the existence of shared virtual memory (such as shared libraries or page de-duplication) and the ability to flush by virtual address. The attacker first flushes a shared line of interest (by using dedicated instructions or by eviction through contention). Once the victim has executed, the attacker then reloads the evicted line by touching it, measuring the time taken. A fast reload indicates that the victim touched this line (reloading it), while a slow reload indicates that it did not. On x86, the two steps of the attack can be combined by measuring timing variations of the clflush instruction. The advantage of Flush+Reload over Prime+Probe is that the attacker can target a specific line, rather than just a cache set.

Evict and time (Evict+Time ) This approach uses the targeted eviction of lines, together with overall execution time measurement. The attacker first causes the victim to run, preloading its working set and establishing a baseline execution time. The attacker then evicts a line of interest and runs the victim again. A variation in execution time indicates that the line of interest was accessed.

Covert channel techniques To use the cache as a covert channel, the parties employ a mutual Prime+Probe attack. The sender encodes a bit by accessing some number of cache sets. The receiver decodes the message by measuring the time to access its own colliding lines. Covert channels have been demonstrated at all cache levels.

Denial of service When a lower-level cache is shared by multiple cores, a malicious thread can compete for space in real time with those on other cores. Doing so also saturates the lower-level cache’s shared bus. The performance degradation suffered by victim threads is worse than that in a time-multiplexed attack [162]. If the cache architecture is inclusive, evicting contents from a lower-level cache also invalidates higher-level caches on other cores.

Unmasking the victim’s layout In order to perform a cache-contention-based attack, the attacker needs to be able to collide in the cache with the victim. In order to know which parts of its own address spaces will do so, it needs to have some knowledge about the virtual and/or physical layout of the victim. This was historically a simple task for the virtual address space, as this was generally set at compile time, and highly predictable. The introduction of address space layout randomisation (ASLR, initially to make code-injection attacks harder to achieve) made this much harder. Hund et al. [74] demonstrated that contention in the TLB can be exploited to expose both the kernel’s and the victim’s address space layout.

4.1.2 Exploiting real-time contention

Real-time attacks exploit resource contention by maliciously increasing their consumption of a shared resource. For instance, adversaries can exhaust shared bus bandwidth, with a large number of memory requests [155]. Other currently running entities suffer serious performance effects: a DoS attack.

4.2 Thread-shared state

4.2.1 Hardware threads

Contention between hardware threads on the multiplier unit is detectable and exploitable. Wang and Lee [157] demonstrated its use to implement a covert channel, and Acıiçmez and Seifert [9] further showed that it in fact forms a side channel, allowing a malicious thread to distinguish multiplications from squarings in OpenSSL’s RSA implementation. These attacks both measure the delay caused by competing threads being forced to wait for access to functional units (here the multiplier).

Acıiçmez et al. [5] presented two attacks that exploit contention on the BTB (Sect. 2.2.1). The first is an Evict+Time attack (Sect. 4.1.1). that selectively evicts entries from the BTB by executing branches at appropriate addresses and then observes the effect on the execution time of an RSA encryption (in OpenSSL 0.9.7e). The second attack improves on the first by also measuring the time required to perform the initial eviction (thus inferring whether OpenSSL had previously executed the branch), thereby reducing the number of observations required from \(10^7\) to \(10^4\). Shortly thereafter, the same authors presented a further improved attack, simple branch prediction analysis (SBPA), that retrieved most key bits in a single RSA operation [6]. All three attacks rely on the fine-grained sharing involved in SMT.

Yarom et al. [171] presented CacheBleed, a side-channel attack that exploits cache-bank collisions [54, 77] to create measurable timing variations. The attack is able to identify the cache bank that stores each of the multipliers used during the exponentiation in the OpenSSL “constant time” RSA implementation [34, 68], allowing a complete private key recovery after observing 16,000 decryptions.

Handling exceptions on behalf of one thread can also cause performance degradation for another SMT thread, as the pipeline is flushed during handling. Further, on the Intel Pentium 4, self-modifying code flushes the trace cache (see Sect. 2.2.1), reducing performance by 95%. Both of these DoS attacks were demonstrated by Grunwald and Ghiasi [62].

4.2.2 Time slicing

Saving and restoring CPU state on a context switch is not free. A common optimisation is to note that most processes do not use the floating-point unit (FPU), which has a large internal state, and save and restore it only when necessary. When switching, the OS simply disables access to the FPU and performs the costly context switch if and when the process causes an exception by attempting a floating-point operation. The latency of the first such operation after a context switch thus varies depending on whether any other process is using the FPU (if it was never saved, it need not be restored). Hu [73] demonstrated that this constitutes a covert channel.

Andrysco et al. [16] demonstrated that the timings of floating-point operations vary wildly, by benchmarking each combination of instructions and operands. In particular, multiplying or dividing with subnormal values causes slowdown on all tested Intel and AMD processors, whether using single instruction multiple data (SIMD) or x87 instructions [79]. With such measurable effects, they implemented a timing attack on a scalable vector graphics (SVG) filter, which reads arbitrary pixels from any victim web page though the Firefox browser (from version 23 though 27).

Acıiçmez et al. [4] argued that the previously published SBPA attack [6] should work without SMT, instead using only pre-emptive multitasking. This has not been demonstrated in practice, however.

Cock et al. [41] discovered that the reported cycle counter value on the ARM Cortex A8 (AM3358) varies with branch mis-predictions in a separate process. This forms a covert channel and might be exploitable as a side-channel by using SBPA attack.

Evtyushkin et al. [52] implemented a side-channel attack on BTB collisions, which can find both kernel-level and user-level virtual address space layout on ASLR enabled Linux platforms. Firstly, they found that different virtual addresses in the same address space can create a BTB collision, because Haswell platforms use only part of the virtual address bit as hash tags. Secondly, they found that the same virtual address from two different address spaces can also create a BTB collision.

Bulygin [37] demonstrated a side-channel attack on the return stack buffer (RSB). The RSB is a small rolling cache of function-call addresses used to predict the return address of return statements. By counting the number of RSB entries, a victim replaces the attack can detect the case of an end reduction in the Montgomery modular multiplication [115] which leads to breaking RSA [36]. Bulygin [37] also showed how to use the RSB side channel to detect whether software executes on an hypervisor.

4.3 Core-shared state

4.3.1 Hardware threads

A number of attacks have exploited hardware threading to probe a competing thread’s L1 cache usage in real time. Percival [129] demonstrated that contention on both the L1 (data) and L2 caches of a Pentium 4 employing Hyperthreading can be exploited to construct a covert channel, and also as a side channel, to distinguish between square and multiply steps and identify the multipliers used in OpenSSL 0.9.7c’s implementation of RSA, thus leaking the key. Similarly, Osvik et al. [125] and Tromer et al. [143] attacked AES in OpenSSL 0.9.8 with Prime+Probe on the L1 data-cache (D-cache). Acıiçmez [1] used L1 instruction-cache (I-cache) contention to determine the victim’s control flow, distinguishing squares and multiplies in OpenSSL 0.9.8d RSA. Brumley and Hakala [35] used a simulation (based on empirical channel measurements) to show that an attack against elliptic-curve cryptography (ECC) in OpenSSL 0.9.8k was likely to be practical. Aciiçmez et al. [7] extended their previous attack [1] to digital signature algorithm (DSA) in OpenSSL 0.9.8l, again exploiting I-cache contention, this time on Intel’s Atom processor employing Hyperthreading.

4.3.2 Time slicing

As part of the evaluation of the VAX/VMM security operating system, Hu [73] noted that sharing caches would lead to channels. Tsunoo et al. [144] demonstrated that timing variations due to self-contention can be used to cryptanalyse MISTY1. The same attack was later applied to DES [145].

Bernstein [25] showed that timing variations due to self-contention can be exploited for mounting a practical remote attack against AES. The attack was conducted on Intel Pentium III. Bonneau and Mironov [30] and Aciiçmez et al. [10] both further explored these self-contention channels in AES in OpenSSL (versions 0.9.8a and 0.9.7e). Weiß et al. [160] demonstrated that it still works on the ARM Cortex A8. Irazoqui et al. [82] demonstrated Bernstein’s correlation attack on Xen and VMware, lifting the native attack to across VMs. More recently, Weiß et al. [161] extended their previous attack [160] on the microkernel virtualisation framework, using the PikeOS microkernel [88] on ARM platform.

Neve and Seifert [120] demonstrated that SMT was not necessary to exploit these cache side channels, again successfully attacking AES’s S-boxes. Similarly, Prime+Probe and Evict+Time techniques can also detect the secret key of AES through L1 D-cache, with knowledge of the virtual and physical addresses of AES’s S-boxes [125, 143]. Aciiçmez [1] presented an RSA attack using the Prime+Probe technique on the L1 I-cache, assuming frequent pre-emptions on RSA processes. Aciiçmez and Schindler [8] demonstrated an RSA attack based on L1 I-cache contention. In order to facilitate the experiment, their attack embeds a spy process into the RSA process and frequently calling the spy routine. According to Neve [119], embedded spy process attains similar empirical results with standalone spy process, therefore Acıiçmez and Schindler [8] argued that their simplified attack model would be applicable in practice. Zhang et al. [180] took the SMT-free approach and showed that key-recovery attacks using L1 I-cache contention were practical between virtual machines. Using inter-processor interrupts (IPIs) to frequently displace the victim, they successfully employed a Prime+Probe attack to recover ElGamal keys [51].

Vateva-Gurova et al. [150] measured the signal of a cross-VM L1 cache covert channel on Xen with different scheduling parameters, including load balancing, weight, cap, timeslice and rate limiting.

Hund et al. [74] showed that TLB contention allows an attacker to defeat ASLR [29], with a variant of the Flush+Reload technique (Sect. 4.1.1): they exploited the fact that invalid mappings are not loaded into the TLB, and so a subsequent access to an invalid address will trigger another table walk, while a valid address will produce a much more rapid segmentation fault. Hund et al. [74] evaluated this technique on Windows 7 Enterprise and Ubuntu Desktop 11.10 on three different Intel architectures, with a worst-case accuracy of 95% on the Intel i7-2600 (Sandy Bridge).

4.4 Package-shared state

4.4.1 Multicore

Programs concurrently executing on multicores can generate contention on the shared LLC, creating covert channels, side channels or DoS attacks. Xu et al. [167] evaluated the covert channel bandwidth based on LLC contention with Prime+Probe technique, improving the transmission protocol of the initial demonstration by [133] to the multicore setting. Xu et al. [167] not only improved the protocol, but also improved the bandwidth from 0.2 b/s to 3.2 b/s on Amazon EC2. Wu et al. [165] stated the challenges of establishing a reliable covert channel based on LLC contention, such as scheduling and addressing uncertainties. Despite demonstrating how these can be overcome, their cache-based covert channel is unreliable as the hypervisor frequently migrates virtual CPUs across physical cores. They invented a more reliable protocol based on bus contention (Sect. 4.6.1).

Yarom and Falkner [169] demonstrated a Flush+Reload attack on the LLC (Intel Core i5-3470 and Intel Xeon E5-2430) for attacking RSA, requiring read access to the in-memory RSA implementation, through either memory mapping or page de-duplication. Yarom and Benger [168], Benger et al. [24] and Van de Pol et al. [147] used similar attacks to steal ECDSA keys. Moreover, Zhang et al. [181] demonstrated that the same type of attack can steal granular information (e.g. the number of items in a user’s shopping cart) from a co-resident VM on DotCloud [47], a commercial platform-as-a-service (PaaS) cloud platform. Gruss et al. [63] further generalised the Flush+Reload technique with a pre-computed cache template matrix, a technique that profiles and exploits cache-based side channels automatically. The cache template attack involves a profile phase and a exploitation phase. In the profiling phase, the attack generates a cache template matrix for the cache-hit ratio on a target address during processing of a secret information. In the exploitation phase, the attack conducts Flush+Reload or Prime+Probe attack for constantly monitoring cache hits and computes the similarity between collected traces and the corresponding profile from the cache template matrix. The cache template matrix attack is able to perform attacks on data and instructions accesses online, attacking both keystrokes and the T-table-based AES implementation of OpenSSL.

Because the LLC is physically indexed and tagged, constructing a prime buffer for a Prime+Probe attack requires knowledge of virtual-to-physical address mappings. Liu et al. [107] presented a solution that creates an eviction buffer with large (2 MiB) pages, as these occupy contiguous memory. By probing on one cache set, they demonstrated a covert channel attack with a peak throughput of 1.2 Mb/s and an error rate of 22%. Based on the same Prime+Probe technique, they conducted side-channel attacks on both the square-and-multiply exponentiation algorithm in ElGamal (GnuPG 1.4.13) and the sliding-window exponentiation in ElGamal (GnuPG 1.4.18). Zhang et al. [76] demonstrated that the same Prime+Probe technique can be used in a real cloud environment. They used the attack on the Amazon EC2 cloud to leak both cloud co-location information and ElGamal private keys (GnuPG 1.4.18). Maurice et al. [113] also demonstrated a Prime+Probe attack on a shared LLC, achieving covert channel throughput of 1291 b/s and 751 b/s for a virtualised set-up. The attack assumes an inclusive LLC: a sender primes the entire LLC with writes, and a receiver probes on a single set in its L1 D-cache.

Gruss et al. [63] suggested a variant of Flush+Reload that combines the eviction process of Prime+Probe with the reload step of Flush+Reload . This technique, called Evict+Reload , is much slower and less accurate than Flush+Reload ; however, it obviates the need for dedicated instructions for flushing cache lines. Lipp et al. [105] demonstrated the use of the Evict+Reload attack to leak keystrokes and touch actions on Android platforms running on ARM processors.

Flush+Flush [65] is another variant of Flush+Reload , which measures variations in the execution time of the x86 clflush instruction to determine whether the entry was cached prior to being flushed. The advantages of the technique over Flush+Reload are that it is faster, allowing for a higher resolution, and that it generates less LLC activity which can be used for detecting cache timing attacks. Flush+Flush is, however, more noisy than Flush+Reload, resulting in a slightly higher error rate. Gruss et al. [65] demonstrated that the technique can be used to implement high-capacity covert channels, achieving a bandwidth of 496 KiB/s.

Both LLCs and internal buses are vulnerable to DoS attacks. Woo and Lee [162] showed that by causing a high rate of L1 misses, an attacker can monopolise LLC bandwidth (L2 in this case), to the exclusion of other cores. Also, by sweeping the L2 cache space, threads on other cores suffer a large number of L2 cache misses. Cardenas and Boppana [38] demonstrated the brute-force approach—aggressively polluting the LLC dramatically slows all other cores. These DoS attacks are much more effective when launched from a separate core, as in the pre-emptively scheduled case, the greatest penalty that the attacker can enforce is one cache refill per timeslice. Allan et al. [12] showed that repeatedly evicting cache lines in a tight loop of a victim program slows the victim by a factor of up to 160. They also demonstrated how slowing a victim down can improve the signal of side-channel attacks. Zhang et al. [177] conducted the cache cleansing DoS attack that generated up to 5.5x slowdown for program with poor memory locality and up to 4.4x slowdown to cryptographic operations. Because x86 platform implements the inclusive LLC, replacing a cache line from LLC can also evicts its copy from upper-level caches (Sect. 4.1.1). To build this attack, the attacker massively fetched cache lines into LLC sets, causing the victim suffering from a large number of cache misses due to cache conflicts.

4.4.2 Time slicing

The LLC maintains the footprint left by previously running threads or VMs, which is exploitable as a covert or a side channel. Hu [73] demonstrated that two processes can transmit information by interleaving on accessing the same portion of a shared cache. Although Hu did not demonstrate the attack on a hardware platform, the attack can be used on the shared LLC. Similarly, Percival [129] explored covert channels based on LLC collisions. Ristenpart et al. [133] explored the same technique on a public cloud (Amazon EC2), achieving a throughput of 0.2 b/s. Their attack demonstrated that two VMs can transmit information through a shared LLC.

The LLC contention can be used for detecting co-residency, estimating traffic rates and conducting keystroke timing attacks on the Amazon EC2 platform [133].

Gullasch et al. [71] attacked AES in OpenSSL 0.98n with a Flush+Reload attack, leveraging the design of Linux’ completely fair scheduler to frequently pre-empt the AES thread. With the Flush+Reload technique, Irazoqui et al. [81] broke AES in OpenSSL 1.0.1f with page sharing enabled on an Intel i5-3320M running VMware ESXi5.5.0. More recently, García et al. [57] demonstrated the Flush+Reload attack on the DSA implementation in OpenSSL, the first key-recovery cache timing attack on transport layer security (TLS) and secure shell (SSH) protocols.

Hund et al. [74] detected the kernel address space layout with the Evict+Time technique, measuring the effect of evicting a cache set on system-call latencies on Linux.

Gruss et al. [64] discovered that the prefetch instructions not only expose the virtual address space layout via timing but also allow prefetching kernel memory from user-space because of lack of privilege checking. Their discoveries were applicable on both x86 and ARM architectures. They demonstrated two attacks: the translation-level oracle and address-translation oracle. Firstly, the translation-level oracle measures execution time for prefetching on a randomly selected virtual address and then compares against the calibrated execution time. To translate the virtual address into a physical address, the prefetch instruction traverses multiple levels of page directories (4 levels for PML4E on x86), and terminates as soon as the correct entry is found. Therefore, the timing reveals at which level does address lookup terminates, revealing the virtual address space layout even though ASLR is enabled. Secondly, the address-translation oracle verifies if two virtual addresses p and q are mapped to the same physical address by flushing p, prefetching q, and then reloading p again. If the two addresses are mapped to the same physical address, reloading p encounters a cache hit. Also, the prefech instructions can be conducted on any virtual address, including kernel addresses, and thus can be used to locate the kernel window that contains direct physical mappings to further implement return-oriented programming attack [90].

To conduct a Prime+Probe attack on the LLC, Irazoqui et al. [83] additionally took advantages of large pages for probing on cache sets from the LLC without solving virtual-to-physical address mappings (Sect. 2.2.1). The attack monitors 4 cache sets from the LLC and recovers an AES key in less than 3 min in Xen 4.1 and less than 2 min in VMware ESXI 5.5. Oren et al. [123] implemented a Prime+Probe attack in JavaScript by profiling on cache conflicts with given virtual addresses. Rather than relying on large pages, they created a priming buffer with 4 KiB pages. Furthermore, the attack collects cache activity signatures of target actions by scanning cache traces, achieving covert channel bandwidth of 320 kb/s on their host machine and 8 kb/s on a virtual machine (Firefox 34 running on Ubuntu 14.01 inside VMWare Fusion 7.1.0). A side channel version of this attack associates user behaviour (mouse and network activities) with cache access patterns. Kayaalp et al. [89] also demonstrated a probing technique that identifies LLC cache sets without relying on large pages. They used the technique in conjunction with the Gullasch et al. [71] attack on the completely fair scheduler for attacking AES.

Targeting the AES T-tables, Lipp et al. [105] showed that the ARM architecture is also vulnerable to the Prime+Probe attack.

4.5 NUMA-shared state

4.5.1 Multicore

Shared memory controllers in multicore systems allow DoS attacks. Moscibroda and Mutlu [117] simulated an exploit of the first-come first-served scheduling policy in memory controllers, which prioritises streaming access to impose a 2.9-fold performance hit on a program with a random access pattern. Zhang et al. [177] demonstrated a DoS attack by generating contentions on either bank or channel schedulers in memory controllers on the testing x86 platform (Dell PowerEdge R720). The attacker issued vast amount of memory accesses from a DRAM bank, causing up to 1.54x slowdown for a victim that accesses from either the same bank or a different bank in the same DRAM channel.

Modern DRAM comprises a hierarchical structure of channels, DIMMs, ranks and banks. Inside each bank, the DRAM cells are arranged as a two-dimensional array, located by rows and columns. Also, each bank has a row buffer that caches the currently active row. For any memory access, the DRAM firstly activates its row in the bank and then accesses the cell within that row. If the row is currently active, the request is served directly from the row buffer (a row hit). Otherwise, the DRAM closes the open row and fetches the selected row to the row buffer for access (a row miss). According to experiments conducted on both x86 and ARM platforms, a row miss leads to a higher memory latency than a row hit, which can be easily identified [130].

Pessl et al. [130] demonstrated how to use timing variances due to row buffer conflicts to reverse-engineer the DRAM addressing schemes on both x86 and ARM platforms. Based on that knowledge, they implemented a covert channel based on row buffer conflicts, which achieved a transfer rate of up to 2.1 Mb/s on the Haswell desktop platform (i7-4760) and 1.6 Mb/s on the Haswell-EP server platform (2x Xeon E5-2630 v3).

Furthermore, Pessl et al. [130] conducted a cross-CPU side-channel attack by detecting row conflicts triggered by keystrokes in the Firefox address bar. In the preparation stage, a spy process allocates a physical address s that shares the same row of the target address v in a victim process. Also, she allocates another physical address q that maps to a different row in the same bank. To run the side-channel attack, the spy firstly accesses q and then waits for the victim to execute before measuring the latency of accessing s. If the victim accessed v, the latency of accessing s is much less than a row conflict. Therefore, the spy can infer when the non-shared memory location is accessed by the victim.

4.5.2 Time slicing

Jang et al. [87] discovered that Intel transactional synchronisation extension (TSX) contains a security loophole that aborts a user-level transaction without informing kernel. In addition, the timing of TSX aborts on page faults reveals the attributes of corresponding translation entires (i.e. mapped, unmapped, executable or non-executable). They successfully demonstrated a timing channel attack on kernel ASLR of mainstream OSes, including Windows, Linux and OSx.

4.6 System-shared state

4.6.1 Multicore

Hu [72] noted that the system bus (systems of the time had a single, shared bus) could be used as a covert channel, by modulating the level of traffic (and hence contention). Wu et al. [165] demonstrated that this type of channel is still exploitable in modern systems, achieving a rate of 340 b/s between Amazon EC2 instances.

Bus contention is clearly also exploitable for DoS. On a machine with a single frontside bus, Woo and Lee [162] generated a DoS attack with L2 cache misses in a simulated environment. Zhang et al. [177] shown that an attacker can use atomic operations on either unaligned or non-cacheable memory blocks to lock the shared system bus on the testing x86 platform (Dell PowerEdge R720), therefore slow down the victim (up to 7.9x for low locality programs).

While many-core platforms increase performance, programs running on separated cores may suffer interference from competing on on-chip interconnections. Because networks-on-chip (Sect. 2.2.4) share internal resources, a program may inject network traffic by rapidly generating memory fetching requests, unbalancing the share of internal bandwidth. Wang and Suh [155] simulated both covert channels and side channels though network interference. A Trojan encodes a “1” bit through high and a “0” bit through low traffic load. A spy measures the throughput of its own memory requests. For the side channel, their simulation replaces the Trojan program with an RSA server. This side-channel attack simulated an RSA execution where every exponentiation execution suffers cache misses, resulting in memory fetches for “1 ” bits in the secret key. By monitoring the network traffic, the spy program observes that the network throughput is highly related to the fraction of bits “1” in the RSA key.

Song et al. [138] discovered a security vulnerability contained in the programmable routing table in a Hypertranport-based processor-interconnect router on AMD processors [14]. With malicious modifications to the routing tables, Song et al. demonstrated degradation of both latency and bandwidth of the processor interconnect on an 8-node AMD server (the Dell PowerEdge R815 system).

Irazoqui et al. [86] demonstrated that due to the cache coherency maintained between multiple packages, variants of the Flush+Reload attack can be applied between packages. They used the technique to attack both the AES T-Tables and a square-and-multiply implementation of ElGamal.

Lower-level buses, including PCI Express, can also be exploited for DoS attacks. Richter et al. [132] showed that by saturating hardware buffers, the latency to access a Gigabit Ethernet network interface controller, from a separate VM, increases more than sixfold.

Zhang et al. [180] demonstrated that the slow handling of inter-processor interrupts (IPIs), together with their elevated priority, allows for a cross-processor DoS attack.

5 Countermeasures

5.1 Constant-time techniques

A common approach to protecting cryptographic code is to ensure that its behaviour is never data dependent: that the sequence of cache accesses or branches, for example, does not depend on either the key or the plaintext. This approach is widespread in dealing with overall execution time, as exploited in remote attacks, but is also being applied to local contention-based channels. Bernstein [25] noted the high degree of difficulty involved.

We look at the meaning of “sequence of cache acceses” as an example of the complexities involved. The question is at what resolution the sequence of accesses needs to be independent of secret data. Clearly, if the sequence of cache lines accessed depends on secret data, the program can leak information through the cache. However, some code, e.g. the “constant-time” implementation of modular exponentiation in OpenSSL, can access different memory addresses within a cache line, depending on the secret exponent. Brickell [32] suggested not having secret-dependent memory access at coarser than cache line granularity, hinting that such an implementation would not leak secret data. However, Osvik et al. [124] warned that processors may leak low-address-bit information, i.e. the offset within a cache line. Bernstein and Schwabe [26] demonstrated that under some circumstances this can indeed happen on Intel processors. This question has been recently resolved when Yarom et al. [171] demonstrated that the OpenSSL implementation is vulnerable to the CacheBleed attack.

Even a stronger form, where the sequence of memory accesses does not depend on secret information, may not be sufficient to prevent leaks. Coppens et al. [43] listed several possible leaks, including instructions with data-dependent execution times, register dependencies and data dependencies through memory. No implementation is yet known to be vulnerable to side-channel attacks through these leaks. Coppens et al. instead developed a compiler that automatically eliminates control-flow dependencies on secret keys of cryptographic algorithms (on x86).

To guide the design of constant-time code, several authors have presented analysis tools and formal frameworks: Langley [101] modified the Valgrind [146] program analyser to trace the flow of secret information and warn if it is used in branches or as a memory index. Köpf et al. [99] described a method for measuring an upper bound on the amount of secret information that leaks from an implementation of a cryptographic algorithm through timing variations. CacheAudit [48] extended their work to provide better abstractions and a higher precision. FlowTracker [137] modified the LLVM compiler [102] to use information-flow analysis for side channel leak detection.

Constant-time techniques are used extensively in the design of the NaCl library [27], which is intended to avoid many of the vulnerabilities discovered in OpenSSL.

For addressing the timing channel on floating-point operations, Andrysco et al. [16] designed a fix-point constant-time math library, libfixedtimefixpoint. On the testing platform (Intel Core i7 2635QM at 2.00GHz), the library performs constant-time operations, which take longer than optimised hardware instructions. Later, Rane et al. [131] presented a compiler-based approach, by utilising SIMD lanes in x86 SSE and SSE2 instruction sets to provide fixed-time floating-point operations. Their key insight is that the latency of a SIMD instruction is determined by the longest running path in the SIMD lanes. Their compiler pairs original floating-point operations with dummy slow-running subnormal inputs. After the SIMD operations is executed, the compiler only preserves the results of the authentic inputs. Their evaluation shown a 0–1.5% timing variation of floating-point operations with different types of inputs on the testing machine (i7-2600). This compiler-based approach introduced 32.6x overhead on SPECfp2006 benchmarks.

The main drawback of the constant-time approach is that a constant-time implementation on one hardware platform may not perform constantly on another hardware platform. For example, Cock et al. [41] demonstrated that the constant-time fix for mitigating Lucky 13 attack (a remote side-channel attack, [11]) in OpenSSL 1.0.1e still contains a side channel on the ARM AM3358 platform.

5.1.1 Hardware support

One approach to avoiding vulnerable table lookups is to provide constant-time hardware operations for important cryptographic primitives, as suggested by Page [127]. The x86 instruction set has now been extended in exactly this fashion [66, 67], which provides instruction support for AES encryption, decryption, key expansion and all modes of operations. At current stage, Intel (from Westmere) [166], AMD (from Bulldozer) [13], ARM (from V8-A) [19], SPARC (from T4) [122] and IBM (from Power7+) [172] processor architectures all support AES with instruction extensions. Furthermore, Intel introduced PCLMULQDQ instruction that is available from microarchitecture Westmere [69, 70]. With the help from the PCLMULQDQ instruction, the AES in Galois Counter Mode can be efficiently implemented using the AES instruction extension.

5.1.2 Language-based approaches

In order to control the externally observable timing channels, language-based approaches invent specialised language semantics with non-variant execution length, which require a corresponding hardware design [175]. The solutions in this category propose that timing channels should be handled at both hardware level and software level.

5.2 Injecting noise

In theory, it should be possible to prevent the exploitation of a timing channel without eliminating contention, by ensuring that the attacker’s measurements contain so much noise as to be essentially useless. This is the idea behind fuzzy time [72], which injects noise into all events visible to a process (such as pre-emptions and interrupt delivery), as a covert channel countermeasure.

In a similar vein, Brickell et al. [33] suggested an alternative AES implementation, including compacting, randomising and preloading lookup tables. This introduced noise to the cache footprint left by AES executions, thus defending against cache-based timing attacks. As a result, the distribution of AES execution times follows a Gaussian distribution over random plaintexts. These techniques incurred 100–120% performance overhead.

Wang and Lee [158] suggested the random permutation cache (RPcache), which provides a randomised cache indexing scheme and protection attributes in every cache line. Each process has a permutation table to store memory-to-cache mappings, and each cache line contains an ID representing its owner process. Cache lines with different IDs cannot evict each other. Rather, the RPcache randomly selects a cache set and evicts a cache line in that set. Simulation results suggest a small (1%) performance hit in the SPEC2000 benchmark. Kong et al. [97] suggested an explicitly requested RPCache implementation, just for sensitive data such as AES tables. Simulations again suggest the potential for low overhead.

Vattikonda et al. [151] modified the Xen hypervisor to insert noise into the high-resolution time measurements in VMs by modifying the values returned by the rdtsc instruction. Martin et al. [111] argued that timing channels can be prevented by making internal time sources inaccurate. Consequently, they modified the implementation of the x86 rdtsc instruction so it stalls execution until the end of a predefined epoch and then adds a random number between zero and the size of the epoch, thus fuzzing the time counter. In addition, they proposed a hardware monitoring mechanism for detecting software clocks, for example inter-thread communication through shared variables. The paper presents statistical analysis that demonstrates timing channel mitigation. However, the effectiveness of this solution is based on two system assumptions: that external events do not provide sufficient resolution to efficiently measure microarchitecture events and that their monitoring makes software clocks prohibitive.

Liu and Lee [106] suggested that the demand-fetch policy of a cache is a security vulnerability, which can be exploited by reuse-based attacks that leverage previously accessed data in a shared cache. To address these attacks, they designed a cache with random replacement. On a cache miss, the requested data are directly sent to processor without allocating a cache line. Instead, the mechanism allocates lines with randomised fetches within a configurable neighbourhood window of the missing memory line.

Zhang et al. [176] introduced a bystander VM for injecting noise on the cross-VM L2-cache covert channel with a configurable workload. With a continuous-time Markov process to model the Prime+Probe -based cache covert channel, they analysed the impact of bystander VMs on the error rate of cache-based covert channels under the scheduling algorithm used by Xen. They found that as long as the bystander VMs only adjust their CPU time consumption, they do not significantly impact cross-VM covert channel bandwidth. For effectively introducing noise into the Prime+Probe channel, the bystander VMs must also modulate their working sets and memory access rates.

However, noise injection is inefficient for obtaining high security [41]. Although anti-correlated “noise” can in principle completely close the channel, producing such compensation is not possible in many circumstances. The amount of actual (uncorrelated) noise required increases dramatically with decreasing channel capacity. This significantly degrades system performance and makes it infeasible to reduce channel bandwidth by more than about two orders of magnitude [41].

5.3 Enforcing determinism

As discussed in Sect. 2.3, it should be possible to eliminate timing channels by completely eliminating visible timing variation. There are two existing solutions that attempt this, virtual time (Sect. 5.3.1) and black-box mitigation (Sect. 5.3.2).

5.3.1 Virtual time

The virtual time approach tries to completely eliminate access to real time, providing only virtual clocks, whose progress is completely deterministic, and independent of the actions of vulnerable components. For example, Determinator [23] provides a deterministic execution framework for debugging concurrent programs. Aviram et al. [22] repurposed Determinator to provide a virtual time cloud computing environment. Ford [55] extended this model with queues, to allow carefully scheduled IO with the outside world, without importing real-time clocks. Wu et al. [164] further extended the model with both internal and external event sequences, producing a hypervisor-enforced deterministic execution system. It introduces the mitigation interval; if this is 1 ms, the information leakage rate is theoretically bounded at 1 Kb/s. They demonstrated that for the 1 ms setting, CPU-bound applications were barely impacted compared to executing on QEMU/KVM, while network-bound applications experienced throughput degradation of up to 50%.

StopWatch [103] instead runs three replicas of a system, and attempts to release externally visible events at the median of the times determined by the replicas, virtualising the x86 time-stamp counter.

Because it virtualises both internally and externally visible events, complete system-time virtualisation is effective against all types of timing channels, in principle. However, it cannot prevents cross-VM DoS attacks, as generating resource contentions will still be possible on a shared platform. The first downside is that these systems pay a heavy performance penalty. For StopWatch, latency increases 2.8 times for network-intensive benchmarks and 2.3 times for computate-intensive benchmarks [103]. Secondly, systems such as Determinator [23] rely on custom-written software and cannot easily support legacy applications.

Instruction-based scheduling (IBS) is a more limited form of deterministic execution that simply attempts to prevent the OS’s pre-emption tick providing a clock source to an attacker. The idea is to use the CPU’s performance counters to generate the pre-emption interrupt after a fixed number of instructions, ensuring that the attacker always completes the same amount of work in every interval. Dunlap et al. [49] proposed this as a debugging technique, and it was later incorporated into Determinator [22]. Stefan et al. [139] suggested it as an approach to eliminating timing channels. Cock et al. [41] evaluated IBS on a range of platforms and reported that the imprecise delivery of exceptions on modern CPUs negatively affects the effectiveness of this technique. In order to schedule threads after executing a precise number of instructions, the system has to configure the performance counters to fire earlier than the target instruction count, then single-steps the processor until the target is reached [50]. In Deterland [164], single-stepping adds 30% overhead to CPU-bound benchmarks for a 1-ms mitigation interval, and 5% for a 100-ms interval.

5.3.2 Black-box mitigation

Instead of trying to synchronise all observable clocks with the execution of individual processes, this approach attempts to achieve determinism for the system as a whole, by controlling the timing of externally visible events. It is thus only applicable to remotely exploitable attacks that depend on variation in system response time. This avoids much of the performance cost of virtual time, and the thorny issue of trying to track down all possible clocks available inside a system.

Köpf and Dürmuth [98] suggested bucketing, or quantising, response times, to allow an upper bound on information-theoretic leakage to be calculated. Askarov et al. [21] presented a practical approach to such a system, using exponential back-off to provide a hard upper bound on the amount that can ever be leaked. This work was later revised and extended [174]. Cock et al. [41] showed that such a policy could be efficiently implemented, using real-time scheduling on seL4 to provide the required delays, in an approach termed scheduled delivery (SD). SD was applied to mitigate the Lucky 13 attack on OpenSSL TLS [11], with better performance than the upstream constant-time implementation.

Braun et al. [31] suggested compiler directives that annotate fixed-time functions. For such functions, the compiler automatically generates code for temporal padding. The padding includes a timing randomisation step that masks timing leaks due to the limited temporal resolution of the padding.

5.4 Partitioning time

Attacks which rely on either concurrent or consecutive access to shared hardware can be approached by either providing timesliced exclusive access (in the first case) or carefully managing the transition between timeslices (in the second).

5.4.1 Cache flushing

The obvious solution to attacks based on persistent state effects (e.g. in caches) is to flush on switches. Zhang and Reiter [179] suggested flushing all local state, including BTB and TLB. Godfrey and Zulkernine [60] suggested flushing all levels of caches during VM switches in cloud computing, when CPU switches security domains. There is obviously a cost to be paid, however. Varadarajan et al. [149] benchmarked the effect of flushing the L1 cache: They measured an 8.4 \(\mu \)s direct cost, and a substantial overall cost, resulting in a 17% latency increase in the ping benchmark that issues ping command at 1-ms interval. The experimental hardware is a 6 core Intel Xeon E5645 processor.

Flushing the top-level cache is not unreasonable on a VM switch. The sizes of L1 caches are relatively small (32 KiB on x86 platforms), and the typical VM switch rates are low, e.g. the credit scheduler in Xen normally makes scheduling decisions every 30 ms [180]. Therefore, there is a low likelihood of a newly scheduled VM finding any data or instructions hot in the cache, which implies that the indirect cost (Sect. 2.2.1) of flushing the L1 caches on a VM switch is negligible. For the much larger lower-level caches, flushing is likely to lead to significant performance degradation.

5.4.2 Lattice scheduling

Proposed by Denning [44], and implemented in the VAX/VMM security kernel [73], lattice scheduling attempts to amortise the cost of cache flushes on a context switch, by limiting them to switches from sensitive partitions to untrusted ones. In practice, this approach is limited to systems with a hierarchical trust model, unlike the mutually distrusting world of cloud computing. Cock [40] presented a verified lattice scheduler for the domain-switched version of seL4.

5.4.3 Minimum timeslice

Some attacks, such as the Prime+Probe approach of Zhang et al. [180], relied on the ability to frequently inspect the victim’s state by triggering pre-emptions. Enforcing a minimum timeslice for the vulnerable component, as Varadarajan et al. [149] suggested, prevents the attacker inspecting the state in the middle of a sensitive operation, at the price of increased latency. As this is a defence specific to one attack, it is likely that it can be circumvented by more sophisticated attacks.

5.4.4 Networks-on-chip partitioning

To prevent building a covert channel by competing on on-chip interconnects (Sect. 4.6.1), network capacity can be dynamically allocated to domains by temporally partitioning arbitrators. Wang and Suh [155] provided a priority-based solution for one-way information-flow systems, where information is only allowed to flow from lower to higher security levels. Their design assigns strict priority bounds to low-security traffic, thus ensuring non-interference [61] from high-security traffic.

Furthermore, time multiplexing shared network components constitute a straightforward scheme for preventing interference on network throughput, wherein the packets of each security domain are only propagated during time periods allocated to that domain. Therefore, the latency and throughput of each domain are independent of other domains. The main disadvantage of this scheme is that latency scales with the number of available domains, \(D\), as each packet waits for \(D-1\) cycles per hop [159]. An improved policy transfers alternate packets from different domains, such that packets are pipelined in each dimension on on-chip networks [159].

5.4.5 Memory controller partitioning

In order to prevent attacks that exploit contention in the memory controller [117], Wang et al. [156] suggested a static time-division multiplexing of the controller, to reserve bandwidth to each domain. Their hardware simulation results suggest a 1.5% overhead on SPEC2006, but up to 150% increase in latency, and a necessary drop in peak throughput.

5.4.6 Execution leases

Tiwari et al. [141] suggested a hardware-level approach to sharing execution resources between threads, leasing execution resources. A new hardware mechanism would guarantee bounds on resource usage and side effects. After one lease expires, a trusted entity obtains control, and any remaining untrusted operations are expelled. Importantly, the prototype CPU is an in-order, un-pipelined processor, containing hardware for maintaining lease contexts. Further, the model does not permit performance optimisations that introduce timing variations, such as TLBs, branch predictors, making the system inherently slow. Tiwari et al. [142] later proposed a system based on this work with top-to-bottom information-flow guarantees. The prototype system includes a Star-CPU, a microkernel, and an I/O protocol. The microkernel contains a simple scheduler for avionic systems with context switching cost of 37 cycles.

5.4.7 Kernel address space isolation

Gruss et al. [64] proposed isolating kernel form user address space by using separated page directories for each, so switching context between user and kernel spaces includes switching the page directory. This technique is designed to mitigate the timing attack on prefetch instructions (Sect. 4.4.2).

5.5 Partitioning hardware

Truly concurrent attacks can only be prevented by partitioning hardware resources among competing threads or cores. The most visible target is the cache, and it has received the bulk of the attention to date.

5.5.1 Disable hardware threading

Percival [129] advocated that Hyperthreading (Sect. 2.2.5) should be disabled to prevent the attack he described. This has since become common practice on public cloud services, including Microsoft’s Azure [110]. This eliminates all attacks that rely on hardware threading (Sects. 4.2.1, 4.3.1), at some cost to throughput.

5.5.2 Disable page sharing

Because the Flush+Reload attack and its variations depend on shared memory pages, preventing sharing mitigates the attack. VMware Inc. [152] recommends disabling the transparent page sharing feature [154] to protect against cross-VM Flush+Reload attacks. CacheBar [182] prevents concurrent access to a shared pages by automatically detecting such access and creating multiple copies. The scheme they invented is called copy-on-access, which creates a copy of a page while another security domain is trying to access on that page.

5.5.3 Hardware cache partitions

Hardware-enforced cache partitions would provide a powerful mechanism against cache-based attacks. Percival [129] suggested partitioning the L1 cache between threads to eliminate the cache contention (Sect. 4.3.1). While some, including Page [128], have proposed hardware designs, no commercially available hardware provides this option.

Wang and Lee [158] suggested an alternative approach, described as a partition-locked cache (PLcache). They aim to provide hardware mechanisms to assign locking attributes to every cache line, allowing sensitive data such as AES tables to be selectively and temporarily locked into the cache.

A series of ARM processors support cache lockdown through the L2 cache controller, such as L2C-310 cache controller [20] on ARM Coretex A9 platforms.

Domnister et al. [46] suggested reserving for each hyperthread several cache lines in each cache set of the L1 cache.

Intel’s Cache Allocation Technology (CAT) provides an implementation of a similar technique which prevents processes from replacing the contents of some of the ways in the LLC [80]. To defeat LLC cache attacks that conduct Prime+Probe and Evict+Time techniques (Sects. 4.4.1 and 4.4.2), CATalyst [108] partitions the LLC into a hybrid hardware and software managed cache. It uses CAT to create two partitions in the LLC, a secure partition and a non-secure partition. The secure partition only stores cache-pinned secure pages, whereas the non-secure partition acts as normal cache lines managed by hardware replacement algorithms. To store secure data, a user-level program allocates secure pages and preloads the data into the secure cache partition. As the secure data are locked in the LLC, the LLC timing attacks caused by cache line conflicts are mitigated. Liu et al. [108] demonstrated that the CATalyst is effective in protecting square-and-multiply algorithm in GnuPG 1.4.13. Furthermore, the system performance impact is insignificant, an average slowdown of 0.7% for SPEC and 0.5% for PARSEC.

The trend towards QoS features in hardware is encouraging—it may well be possible to repurpose these for security, especially if the community is able to provide early feedback to manufacturers, in order to influence the design of these mechanisms. We return to this point in Sect. 6.

Colp et al. [42] used the support for locking specific cache ways on ARM processors to protect encryption keys from hardware probes, and this approach will also protect against timing attacks.

5.5.4 Cache colouring

The cache colouring approach exploits set-associativity to partition caches in software. Originally proposed for improving overall system performance [28, 91] or performance of real-time tasks [104] by reducing cache conflicts, cache colouring has since been repurposed to protect against cache timing channels.