Abstract

We prove a verification theorem for a class of singular control problems which model optimal harvesting with density-dependent prices or optimal dividend policy with capital-dependent utilities. The result is applied to solve explicitly some examples of such optimal harvesting/optimal dividend problems. In particular, we show that if the unit price decreases with population density, then the optimal harvesting policy may not exist in the ordinary sense, but can be expressed as a “chattering policy”, i.e. the limit as \(\Delta x\) and \(\Delta t\) go to \(0\) of taking out a sequence of small quantities of size \(\Delta x\) within small time periods of size \(\Delta t\).

1 Introduction

The determination of an optimal harvesting policy for a stochastically fluctuating renewable resource is typically subject to at least three key factors affecting either the intertemporal evolution of the resource stock or the incentives of a rational risk neutral harvester. First, the exact size of the harvested stock evolves stochastically due to environmental or demographic randomness. Second, the interaction between different populations obviously has a direct effect on the density of the harvested stocks. Third, most harvesting decisions are subject to density-dependent costs and prices. The price of the harvested resource is typically a decreasing function of the prevailing stock due to the decreasing marginal utility of consumption. The more abundant a resource gets, the less consumers are prepared to pay for an extra unit of that particular resource, and vice versa. In a completely analogous fashion, the costs associated with harvesting typically depend on the abundance of the harvested resource. The scarcer a resource becomes, the higher the costs associated with harvesting become, due to costly search or other similar factors. Our objective in this study is to investigate the optimal harvesting policy of a risk neutral decision maker facing all three key factors mentioned above.

The problem of determining an optimal harvesting policy of a risk neutral decision maker can be viewed as a singular stochastic control problem. In an unstructured one-dimensional setting where the marginal profitability of a unit of the harvested stock is constant, the existing literature usually delineates circumstances under which the optimal harvesting policy is to deplete the entire resource stock immediately or to maintain it at all times below a critical threshold at which the expected present value of the cumulative yield is maximized [1, 3, 4, 6–8]. As is intuitively clear, the optimal policy is altered as soon as the marginal profitability becomes state-dependent (cf. [2]) or population interaction (cf. [9]) is incorporated into the analysis. In [2] it is shown within a one-dimensional setting that the state dependence of the instantaneous yield from harvesting gives rise to circumstances under which the policy attaining the maximal value is a chattering policy which does not belong to the original class of admissible càdlàg harvesting policies. On the other hand, in [9] it is shown that the presence of interaction between the harvested resource stocks leads to a harvesting strategy where the decision maker generically harvests only a single resource at a time.

In this paper we combine the approaches developed in [2, 9] and consider the problem of determining the optimal harvesting policy from a collection of interacting populations, described by a coupled system of stochastic differential equations, when the price per unit for each population is allowed to depend on the densities of the populations. In Sect. 2 we give a general verification theorem for such optimal harvesting problems (Theorem 2.1), and in Sect. 3 we study in detail some examples where the price is a decreasing function of the density and we show, perhaps surprisingly, that in such cases the optimal harvesting strategy may not exist in the ordinary sense, but can be described as a “chattering policy”. See Theorems 3.2 and 3.4.

2 The main result

We now describe our model in detail. This presentation follows [9] closely. Consider \(n\) populations whose sizes or densities \(X_1(t),\ldots ,X_n(t)\) at time \(t\) are described by a system of \(n\) stochastic differential equations of the form

where \(B(t)=(B_1(t),\ldots ,B_m(t))\); \(t\ge 0\), \(\omega \in \Omega \) is \(m\)-dimensional Brownian motion on a filtered probability space \((\Omega , \mathcal {F}, \mathbb {F} := \{ \mathcal {F}_t \}_{t \ge 0} , P) \) and the differentials (i.e. the corresponding integrals) are interpreted in the Itô sense. We assume that \(b=(b_1,\ldots ,b_n):{\mathbb R}^{1+n}\rightarrow {\mathbb R}^n\) and \(\sigma =(\sigma _{ij})_{\begin{array}{c} 1\le i\le n \\ 1\le j\le m \end{array}}:{\mathbb R}^{1+n}\rightarrow {\mathbb R}^{n\times m}\) are given continuous functions. We also assume that the terminal time \(T=T(\omega )\) has the form

where \(S\subset {\mathbb R}^{1+n}\) is a given set. For simplicity we will assume in this paper that

where \(U\) is an open, connected set in \({\mathbb R}^n\). We may interpret \(U\) as the survival set and \(T\) as the time of extinction or simply the closing/terminal time.

We now introduce a harvesting strategy for this family of populations:

A harvesting strategy \(\gamma \) is a stochastic process \(\gamma (t)=\gamma (t,\omega )=(\gamma _1(t,\omega ),\ldots ,\gamma _n(t,\omega ))\) \( \in {\mathbb R}^n\) with the following properties:

Component number \(i\) of \(\gamma (t,\omega ),\gamma _i(t,\omega )\), represents the total amount harvested from population number \(i\) up to time \(t\).

If we apply a harvesting strategy \(\gamma \) to our family \(X(t)=(X_1(t),\ldots ,X_n(t))\) of populations the harvested family \(X^{(\gamma )}(t)\) will satisfy the \(n\)-dimensional stochastic differential equation

We let \(\Gamma \) denote the set of all harvesting strategies \(\gamma \) such that the corresponding system (2.7) has a unique strong solution \(X^{(\gamma )}(t)\) which does not explode in the time interval \([s,T]\) and such that \(X^{(\gamma )}(t)\in U\) for all \(t \in [s,T]\).

Since we do not exclude immediate harvesting at time \(t=s\), it is necessary to distinguish between \(X^{(\gamma )}(s)\) and \(X^{(\gamma )}(s^-)\). Here \(X^{(\gamma )}(s^-)\) is the state right before harvesting starts at time \(t=s\), while

is the state immediately after, if \(\gamma \) consists of an immediate harvest of size \(\Delta \gamma \) at \(t=s\).

Suppose that the price per unit of population number \(i\), when harvested at time \(t\) and when the current size/density of the vector \(X^{(\gamma )}(t)\) of populations is \(\xi =(\xi _1,\ldots ,\xi _n)\in {\mathbb R}^n\), is given by

where the \(\pi _i:S\rightarrow {\mathbb R}\); \(1\le i\le n\), are lower bounded continuous functions. We call such prices density-dependent since they depend on \(\xi \). The total expected discounted utility harvested from time \(s\) to time \(T\) is given by

where \(\pi =(\pi _1,\ldots ,\pi _n)\), \(\pi \cdot \mathrm{d}\gamma =\sum _{i=1}^n \pi _i \mathrm{d}\gamma _i\) and \(E^{s,x}\) denotes the expectation with respect to the probability law \(Q^{s,x}\) of the time-state process

assuming that \(Y^{s,x}(s^-)=x\).

The optimal harvesting problem is to find the value function \(\Phi (s,x)\) and an optimal harvesting strategy \(\gamma ^*\in \Gamma \) such that

This problem differs from the problems considered in [1, 3, 4, 8, 9] in that the prices \(\pi _i(t,\xi )\) are allowed to be density-dependent. This allows for more realistic models. For example, it is usually the case that if a type of fish, say population number \(i\), becomes more scarce, the price per unit of this fish increases. Conversely, if a type of fish becomes abundant then the price per unit goes down. Thus in this case the price \(\pi _i(t,\xi )=\pi _i(t,\xi _1,\ldots ,\xi _n)\) is a nonincreasing function of \(\xi _i\). One can also have situations where \(\pi _i(t,\xi )\) depends on all the other population densities \(\xi _1,\ldots ,\xi _n\) in a similar way.

It turns out that if we allow the prices to be density-dependent, a number of new, and perhaps surprising, phenomena occur. The purpose of this paper is not to give a complete discussion of the situation, but to consider some illustrative examples.

Remark

Note that we can also give the problem (2.12) an economic interpretation: We can regard \(X_i(t)\) as the value at time \(t\) of an economic quantity or asset and we can let \(\gamma _i(t)\) represent the total amount paid in dividends from asset number \(i\) up to time \(t\). Then \(S\) can be interpreted as the solvency set, \(T\) as the time of bankruptcy and \(\pi _i(t,\xi )\) as the utility rate of dividends from asset number \(i\) at the state \((t,\xi )\). Then (2.12) becomes the problem of finding the optimal stream of dividends. This interpretation is used in [5] (in the density-independent utility case). See also [9].

In the following \(H^0\) denotes the interior of a set \(H\), \(\bar{H}\) denotes its closure.

If \(G\subset {\mathbb R}^k\) is an open set we let \(C^2(G)\) denote the set of real valued twice continuously differentiable functions on \(G\). We let \(C_0^2(G)\) denote the set of functions in \(C^2(G)\) with compact support in \(G\).

If we do not apply any harvesting, then the corresponding time-state population process \(Y(t)=(t,X(t))\), with \(X(t)\) given by (2.1)–(2.2), is an Itô diffusion whose generator coincides on \(C_0^2({\mathbb R}^{1+n})\) with the partial differential operator \(L\) given by

for all functions \(g\in C^2(S)\).

The following result is a generalization to the multi-dimensional case of Theorem 1 in [2] and a generalization to density-dependent prices of Theorem 2.1 in [9]. For completeness we give the proof.

Theorem 2.1

Assume that

(a) Suppose \(\varphi \ge 0\) is a function in \(C^2(S)\) satisfying the following conditions

(i) \(\frac{\partial \varphi }{\partial x_i}(t,x)\ge \pi _i(t,x)\) for all \((t,x)\in S\)

(ii) \(L\varphi (t,x)\le 0\) for all \((t,x)\in S\).

Then

(b) Define the non intervention region \(D\) by

Suppose that, in addition to (i) and (ii) above,

(iii) \(L\varphi (t,x)=0\) for all \((t,x)\in D\)

and that there exists a harvesting strategy \(\hat{\gamma }\in \Gamma \) such that the following, (iv)–(vii), hold:

(iv) \(X^{(\hat{\gamma })}(t)\in \bar{D}\) for all \(t\in [s,T]\)

(v) \(\big (\frac{\partial \varphi }{\partial x_i}(t,X^{(\hat{\gamma })}(t)) -\pi _i(t,X^{(\hat{\gamma })}(t))\big )\cdot d\hat{\gamma }_i^{(c)}(t)=0\); \(1\le i\le n\) (i.e. \(\hat{\gamma }_i^{(c)}\) increases only when \(\frac{\partial \varphi }{\partial x_i}=\pi _i\)) and

(vi) \(\varphi (t_k,X^{(\hat{\gamma })}(t_k))-\varphi (t_k, X^{(\hat{\gamma })}(t_k^-)) =-\pi _i(t_k,X^{(\hat{\gamma })}(t_k^-))\cdot \Delta \hat{\gamma }(t_k)\) at all jumping times \(t_k\in [s,T)\) of \(\hat{\gamma }(t)\), where

$$\begin{aligned} \Delta \hat{\gamma }(t_k)=\hat{\gamma }(t_k)-\hat{\gamma }(t_k^-) \end{aligned}$$

and

(vii) \(E^{s,x}\big [ \varphi (T_R,X^{(\hat{\gamma })}(T_R))\big ]\rightarrow 0\) as \(R\rightarrow \infty \) where

$$\begin{aligned} T_R=T\wedge R\wedge \inf \big \{ t>s; |X^{(\hat{\gamma })}(t)|\ge R\big \};\qquad R>0. \end{aligned}$$

Then

$$\begin{aligned} \varphi (s,x)=\Phi (s,x)\qquad \hbox {for all } (s,x)\in S \end{aligned}$$ (2.17)

and

$$\begin{aligned} \gamma ^*:=\hat{\gamma }\quad \hbox {is an optimal harvesting strategy}. \end{aligned}$$

Proof

(a) Choose \(\gamma \in \Gamma \) and \((s,x)\in S\). Then by Itô’s formula for semimartingales (the Doléans-Dade-Meyer formula) [10, Th. II.7.33] we have

where the sum is taken over all jumping times \(t_k\in (s,T_R)\) of \(\gamma (t)\) and

Let \(\gamma ^{(c)}(t)\) denote the continuous part of \(\gamma (t)\), i.e.

Then, since \(\Delta X_i^{(\gamma )}(t_k)=-\Delta \gamma _i(t_k)\) we see that (2.18) can be written

where

Therefore

Let \(y=y(r)\); \(0\le r\le 1\) be a smooth curve in \(U\) from \(X^{(\gamma )}(t_k)\) to \(X^{(\gamma )}(t_k^-)=X^{(\gamma )}(t_k)+\Delta \gamma (t_k)\). Then

We may assume that

Now suppose that (i) and (ii) hold. Then by (2.20) and (2.21) we have

Since we have assumed that \(\pi _i(t,\xi )\) is nonincreasing with respect to \(\xi _1,\ldots ,\xi _n\) we have

for all \(i,k\) and \(r\in [0,1]\). Hence

Combined with (2.22) this gives

Letting \(R\rightarrow \infty \) we obtain \(\varphi (s,x)\ge J^{(\gamma )}(s,x)\). Since \(\gamma \in \Gamma \) was arbitrary we conclude that (2.15) holds. Hence (a) is proved.

(b) Next, suppose that (iii)–(vii) also hold. Then if we apply the argument above to \(\gamma =\hat{\gamma }\) we get in (2.20) the following:

Hence \(\varphi (s,x)=J^{(\hat{\gamma })}(s,x)\le \Phi (s,x)\). Combining this with (2.15) from (a) we get the conclusion (2.17) of part (b). This completes the proof of Theorem 2.1. \(\square \)

If we specialize to the 1-dimensional case with just one population \(X^{(\gamma )}(t)\) given by

then Theorem 2.1 (a) takes the form (see also [2, Lemma 1])

Corollary 2.2

Assume that

and

Then

3 Examples

In this section we apply Theorem 2.1 or Corollary 2.2 to some special cases.

Example 3.1

Suppose \(X^{(\gamma )}(t)= (X_1^{(\gamma )}(t),X_2^{(\gamma )}(t))\) is given by

where \(\mu _i>0\) and \(\sigma _i\not =0\) are constants; \(i = 1,2,\) and \(\gamma =(\gamma _1,\gamma _2)\).

We want to maximize the total discounted value of the harvest, given by

where \(g_i:{\mathbb R}\rightarrow {\mathbb R}\) are given nonincreasing functions (the density-dependent prices) and

is the time of extinction, i.e. \(S=\{(t,x);x_i>0; i=1,2\}\). The corresponding 1-dimensional case with \(g\) constant was solved in [5]. In that case it is optimal to do nothing if the population is below a certain threshold \(x^*>0\) and then harvest according to the local time of the downward reflected process \(\bar{X}(t)\) at \(\bar{X}(t)=x^*\).
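The downward reflection at a threshold described above can be illustrated with a discretized Skorokhod-type construction. The following is a minimal sketch, not the construction used in [5]: it assumes a Brownian motion with drift, an Euler time step, and a threshold `x_star` that is simply given (finding the optimal threshold is outside the sketch). The function name and its parameters are hypothetical.

```python
import math
import random


def reflected_path(x0, mu, sigma, x_star, dt, n_steps, seed=0):
    """Simulate X = x0 + mu*t + sigma*B(t) - gamma(t), reflected downward at x_star.

    gamma is the cumulative harvest: it increases only when the unharvested
    step would carry the stock above x_star, and then by exactly the excess.
    The path is stopped at extinction (stock <= 0).
    """
    if not 0.0 < x0 <= x_star:
        raise ValueError("this sketch starts at or below the threshold")
    rng = random.Random(seed)
    x, gamma = x0, 0.0
    xs, gammas = [x], [gamma]
    for _ in range(n_steps):
        # Euler step of the unharvested dynamics (assumed: constant mu, sigma).
        x_free = x + mu * dt + sigma * math.sqrt(dt) * rng.gauss(0.0, 1.0)
        gamma += max(x_free - x_star, 0.0)  # harvest only the overshoot
        x = min(x_free, x_star)             # stock never exceeds x_star
        xs.append(x)
        gammas.append(gamma)
        if x <= 0.0:                        # extinction: stop the path
            break
    return xs, gammas
```

With a finite time step the recorded harvest `gammas` is only an approximation of the local-time-type process; it is nondecreasing and grows only on steps where the stock is pushed back to `x_star`.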

Now consider the case when

where \(\theta _i >0\) are given constants; \(i=1,2\). Then the prices increase as the population sizes \(x_i\) go to 0, so (2.24) holds. Suppose we apply the “take the money and run”-strategy \(\;\overset{\circ }{\!\gamma }\). This strategy empties the whole population immediately. It can be described by

Such a strategy gives the harvest value

However, it is unlikely that this is the best strategy because it does not take into account that the prices increase as the population sizes go down. So for the two populations \(X_i(t); i=1,2,\) we try the following “chattering policy”, denoted by \(\widetilde{\gamma }_i=\widetilde{\gamma }_i^{(m,\eta )}\), where \(m\) is a fixed natural number and \(\eta >0\):

At the times

we harvest an amount \(\Delta \widetilde{\gamma }_i(t_k)\) which is the fraction \(\frac{1}{m}\) of the current population. This gives the expected harvest value

where we have used the notation

Now let \(\eta \rightarrow 0,m\rightarrow \infty \). Then all the \(t_k\)’s converge to \(s\) and we get

We conclude that

We call this policy of applying \(\widetilde{\gamma }^{(m,\eta )}\) in the limit as \(\eta \rightarrow 0\) and \(m \rightarrow \infty \) the policy of immediate chattering down to 0. (This limit does not exist as a strategy in \(\Gamma \).) From (3.10) we conclude that

On the other hand, let us check if the function

satisfies the conditions of Theorem 2.1: Condition (2.14) holds trivially, and (i) of Part (a) holds, since

Now

and therefore

So (ii) of Theorem 2.1 (a) holds if \(\mu _i^2\le 2\rho \sigma _i^2\) for \(i=1,2\). By Theorem 2.1 we conclude that \(\varphi =\Phi \) in this case.
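The computation behind this condition can be sketched as follows. The sketch assumes that each population follows \(dX_i(t)=\mu _i\,dt+\sigma _i\,dB_i(t)-d\gamma _i(t)\), that the price is \(e^{-\rho t}\theta _i x_i^{-1/2}\), and that the candidate is \(\varphi (s,x)=2e^{-\rho s}(\theta _1\sqrt{x_1}+\theta _2\sqrt{x_2})\). These forms are assumptions chosen to be consistent with the constants \(\mu _i,\sigma _i,\theta _i,\rho \) and with the stated condition; they are not copied from the displayed formulas. Under them,

$$\begin{aligned} L\varphi (s,x)&=\frac{\partial \varphi }{\partial s}+\sum _{i=1}^2\Big (\mu _i\frac{\partial \varphi }{\partial x_i}+\tfrac{1}{2}\sigma _i^2\frac{\partial ^2\varphi }{\partial x_i^2}\Big )\\ &=e^{-\rho s}\sum _{i=1}^2\theta _i x_i^{-3/2}\Big (-2\rho x_i^2+\mu _i x_i-\tfrac{1}{4}\sigma _i^2\Big ). \end{aligned}$$

Each quadratic \(-2\rho x_i^2+\mu _i x_i-\frac{1}{4}\sigma _i^2\) has a negative leading coefficient and discriminant \(\mu _i^2-2\rho \sigma _i^2\). If the discriminant is positive, both roots are positive (their sum is \(\mu _i/(2\rho )\) and their product is \(\sigma _i^2/(8\rho )\)), so the quadratic is positive somewhere on \(x_i>0\). Hence, under these assumptions, \(L\varphi \le 0\) on all of \(S\) exactly when \(\mu _i^2\le 2\rho \sigma _i^2\) for \(i=1,2\).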

We have proved part (a) of the following result:

Theorem 3.2

Let \(X^{(\gamma )}(t)\) and \(T\) be given by (3.1) and (3.3), respectively.

(a) Assume that

Then

This value is achieved in the limit if we apply the strategy \(\widetilde{\gamma }^{(m,\eta )}\) above with \(\eta \rightarrow 0\) and \(m\rightarrow \infty \), i.e. by applying the policy of immediate chattering down to 0.

(b) Assume that

Then the value function has the form

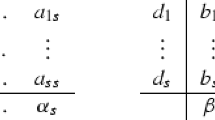

for constants \(C_i>0\), \(A_i>0\) and \(x_i^*>0; i=1,2\) satisfying the following system of 6 equations (see Remark below):

where

The corresponding optimal policy is the following, for \(i=1,2\):

(c) Assume that

Then the value function has the form

for constants \(C_1>0\), \(A_1>0\) and \(x_1^*>0\) specified by the 3 equations

where

The corresponding optimal policy \(\gamma ^*= (\gamma _1^{*},\gamma _2^*)\) is described as follows:

The optimal policy \(\gamma _2^{*}\) is to apply immediate chattering from \(x_2\) down to 0.

Proof

(b). First note that if we apply the policy of immediate chattering from \(x_i\) down to \(x_i^*\), where \(0<x_i^*<x_i\), then the value of the harvested quantity is

This follows by the argument (3.7)–(3.12) above.
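To see numerically why chattering from \(x_i\) down to \(x_i^*\) is worth more than a single lump harvest, the following sketch sums the revenue of \(n\) equal small harvests, each sold at the price prevailing just before it is taken out. It is an illustration under stated assumptions, not the paper's derivation: the unit price is taken to be \(\theta /\sqrt{x}\), all harvests happen at time \(s=0\) (no discounting), and the stock does not fluctuate between harvests. The function names are hypothetical.

```python
import math


def chattering_value(x, x_star, theta, n):
    """Revenue from n equal harvests taking the stock from x down to x_star.

    Assumes the unit price theta / sqrt(stock), evaluated at the stock level
    just before each harvest, with no discounting or noise between harvests.
    """
    if not (0.0 <= x_star < x) or n < 1:
        raise ValueError("need 0 <= x_star < x and n >= 1")
    dx = (x - x_star) / n
    total = 0.0
    for k in range(n):
        stock_before = x - k * dx  # always > x_star >= 0, so the price is finite
        total += theta / math.sqrt(stock_before) * dx
    return total


def chattering_limit(x, x_star, theta):
    """Limit of chattering_value as n -> infinity: integral of theta/sqrt(y) dy."""
    return 2.0 * theta * (math.sqrt(x) - math.sqrt(x_star))
```

For example, with `x=4`, `x_star=1`, `theta=1`, a single harvest (`n=1`) yields 1.5, while the limit is 2.0. Because the price is nonincreasing in the stock, each finite sum stays below the limit and approaches it as `n` grows.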

To verify (3.16)–(3.18), first note that \(\lambda _1^{(i)},\lambda _2^{(i)}\) are the roots of the quadratic equation

Hence, with \(\varphi (s,x)\) defined to be the right hand side of (3.16) we have

and

Note that equations (3.17) imply that \(\varphi \) is \(C^2\) at \(x_1=x_1^*\) and at \(x_2 = x_2^*\).

We conclude that with this choice of \(C_i,A_i,x_i^*; i=1,2\) the function \(\varphi (s,x)\) becomes a \(C^2\) function and the nonintervention region \(D\) given by (2.16) is seen to be

Thus we obtain that \(\varphi \) satisfies conditions (i), (ii) of Theorem 2.1 and hence

Also, by (3.31) we know that (iii) holds.

Moreover, if \(x_i\le x_i^*\) it is well-known that the local time \(\hat{\gamma }_i\) at \(x_i^*\) of the downward reflected process \(\bar{X}_i(t)\) at \(x_i^*\) satisfies (iv)–(vi) (see e.g. [8] for more details). And (vii) follows from (3.16). By Theorem 2.1 (b) we conclude that if \(x_i\le x_i^*\) then \(\gamma _i^*:=\hat{\gamma }_i\) is optimal for \(i=1,2\) and \(\varphi (s,x)=\Phi (s,x)\). Finally, as seen above, if \(x_i>x_i^*\) then immediate chattering from \(x_i\) down to \(x_i^*\) gives the value \(2e^{-\rho s}\theta _i \big ( \sqrt{x_i}-\sqrt{x_i^*}\,\big )+\Phi (s,x^*)\). Hence

Combined with (3.33) this shows that

and the proof of (b) is complete.

The proof of the mixed case (c) is left to the reader. \(\square \)

Remark

Dividing the second equation of (3.17) by the third, we get the equation

Since the left hand side of (3.34) goes to \((\lambda _1^{(i)}+\lambda _2^{(i)})^{-1}< 0\) as \(x_i^*\rightarrow 0^{+}\), and goes to \((\lambda _1^{(i)})^{-1}>0\) as \(x_i^*\rightarrow \infty \), we see by the intermediate value theorem that there exist \(x_i^*>0; i=1,2\) satisfying this equation. With these values of \(x_i^*; i=1,2\) we see that there exists a unique solution \(C_i, A_i; i=1,2\) of the system (3.17).

Example 3.3

The Brownian motion example is perhaps not so good as a model of a biological stock, since Brownian motion is a poor model for population growth. Instead, let us consider a standard population growth model (in the sense that it can be generated from a classic birth-death-process), like the logistic diffusion considered in [4]. That is, let us consider the problem

subject to

where \(\mu > 0\), \(K^{-1} > 0\), and \(\sigma > 0\) are known constants, \(B(t)\) denotes a Brownian motion in \({\mathbb R}\), and \(T = \inf \{t\ge 0: X(t) \le 0\}\) denotes the extinction time. We define the mapping \(H:\mathbb {R}_{+}\mapsto \mathbb {R}_{+}\) as

The generator \(A\) of \(X(t)\) is given by

and we find that

Thus, if \(\mu \le 2\rho + \sigma ^{2}/4\) then by the same argument as in Example 3.1 we see that the optimal policy is immediate chattering down to 0. We then have \(T=0\), and the value reads as

However, if \(\mu > 2\rho + \sigma ^{2}/4\), then we see that the mapping \(G(x)\) satisfies the conditions of Theorem 2 in [2], and therefore there is a unique threshold \(x^{*}\) satisfying the condition

where \(\psi (x)\) denotes the increasing fundamental solution of the ordinary differential equation \(((A - \rho )u)(x) = 0\), that is, \(\psi (x) = x^{\theta }M(\theta , 2\theta + \frac{2\mu }{\sigma ^2}, \frac{2\mu K^{-1}}{\sigma ^2}x)\), where \(\theta = \frac{1}{2} - \frac{\mu }{\sigma ^2} + \sqrt{(\frac{1}{2} - \frac{\mu }{\sigma ^2})^{2} + \frac{2\rho }{\sigma ^2}}\), and \(M\) denotes the confluent hypergeometric function. In this case, the value reads as

In particular, the value is a solution of the variational inequality

We summarize this as follows:

Theorem 3.4

(a) Assume that

Then the value function \(V(x)\) of problem (3.29) is

This value is obtained by immediate chattering down to 0.

(b) Assume that

Then \(V(x)\) is given by (3.35). The corresponding optimal policy is immediate chattering from \(x\) down to \(x^*\) if \(x>x^*\), and local time at \(x^*\) of the downward reflected process \(\bar{X}(t)\) at \(x^*\) if \(x<x^*\), where \(x^*\) is given by (3.34).
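The threshold \(\mu =2\rho +\sigma ^2/4\) separating the two cases can be traced to a short computation. As a sketch, assume the logistic dynamics \(dX(t)=\mu X(t)(1-K^{-1}X(t))\,dt+\sigma X(t)\,dB(t)-d\gamma (t)\), the unit price \(x^{-1/2}\), and \(H(x)=2\sqrt{x}\), the value of chattering from \(x\) down to 0. These forms are assumptions consistent with the constants \(\mu ,K,\sigma \) and with the stated condition, and are not copied from the displayed formulas. Then

$$\begin{aligned} ((A-\rho )H)(x)&=\tfrac{1}{2}\sigma ^2x^2H''(x)+\mu x(1-K^{-1}x)H'(x)-\rho H(x)\\ &=\sqrt{x}\,\Big (\mu -2\rho -\tfrac{1}{4}\sigma ^2-\mu K^{-1}x\Big ). \end{aligned}$$

Under these assumptions the right-hand side is nonpositive for every \(x>0\) exactly when \(\mu \le 2\rho +\sigma ^2/4\). If \(\mu >2\rho +\sigma ^2/4\), it is positive for small \(x\) and negative for large \(x\), which is consistent with waiting at low stock levels and harvesting at high ones.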

4 Discussion on a special case

Our verification Theorem 2.1 covers a large class of state-dependent singular stochastic control problems arising in the literature on the rational management of renewable resources. It is worth emphasizing that there is an interesting subclass of problems (including the case of Example 3.1) where we can use our results to provide both a lower and an upper bound for the maximal attainable expected cumulative harvesting yield. To describe this case briefly, assume that the underlying dynamics are time homogeneous and independent of each other and, accordingly, that the drift coefficient satisfies \(b_i(t,x)=b_i(x_i)\) and that the volatility coefficient, in turn, satisfies \(\sigma _i(t,x)=\sigma _i(x_i)\). Assume also that the price \(\pi _i(t,x)=\pi _i(x_i)\) per unit of harvested stock \(x_i\in \mathbb {R}_+\) is nonnegative, nonincreasing, and continuously differentiable as a function of the prevailing stock. Given these assumptions, define the nondecreasing and concave function

It is now a straightforward exercise in basic analysis to show, by relying on the chattering policy described in Example 3.1, that in the present case we have

Consequently, under the assumed time homogeneity we observe that the maximal attainable expected cumulative harvesting yield satisfies the inequality

On the other hand, applying the generalized Itô-Döblin formula to the mapping \(\Pi _i\), invoking the nonnegativity of \(\Pi _i\), and reordering terms yields

where \(T^{*}_N\) is an increasing sequence of almost surely finite stopping times converging to \(T\) and

The concavity of the mapping \(\Pi _i\) then implies that

Hence, we find that for any admissible harvesting strategy \(\gamma _i\) we have

Summing up the individual values then finally yields

Letting \(N\uparrow \infty \) and invoking monotone convergence then shows that in the present setting

Consequently, in the time homogeneous and independent setting the value which can be attained by a chattering policy can be used to derive both a lower and an upper bound for the value of the optimal harvesting policy. Moreover, in case the generators \((\mathcal {G}_\rho ^i\Pi _{i})(X_i(s))\) are bounded above by \(M_i\) we observe that

For example, if the underlying process evolves as in the two-dimensional Brownian motion case of Example 3.1, we observe that

Hence, \((\mathcal {G}_\rho ^i\Pi _i)(x) \le (\mathcal {G}_\rho ^i\Pi _i)(\tilde{x}_i)\), where

Consequently, we have that

References

[1] Alvarez, L.H.R.: Optimal harvesting under stochastic fluctuations and critical depensation. Math. Biosci. 152, 63–85 (1998)

[2] Alvarez, L.H.R.: Singular stochastic control in the presence of a state-dependent yield structure. Stoch. Process. Appl. 86, 323–343 (2000)

[3] Alvarez, L.H.R.: On the option interpretation of rational harvesting planning. J. Math. Biol. 40, 383–405 (2000)

[4] Alvarez, L.H.R., Shepp, L.A.: Optimal harvesting of stochastically fluctuating populations. J. Math. Biol. 37, 155–177 (1998)

[5] Jeanblanc-Picqué, M., Shiryaev, A.: Optimization of the flow of dividends. Russ. Math. Surveys 50, 257–277 (1995)

[6] Lande, R., Engen, S., Sæther, B.-E.: Optimal harvesting, economic discounting and extinction risk in fluctuating populations. Nature 372, 88–90 (1994)

[7] Lande, R., Engen, S., Sæther, B.-E.: Optimal harvesting of fluctuating populations with a risk of extinction. Am. Nat. 145, 728–745 (1995)

[8] Lungu, E.M., Øksendal, B.: Optimal harvesting from a population in a stochastic crowded environment. Math. Biosci. 145, 47–75 (1996)

[9] Lungu, E.M., Øksendal, B.: Optimal harvesting from interacting populations in a stochastic environment. Bernoulli 7, 527–539 (2001)

[10] Protter, P.: Stochastic integration and differential equations. Springer, New York (2004)

Additional information

The research leading to these results has received funding from the European Research Council under the European Community’s Seventh Framework Program (FP7/2007–2013)/ERC Grant agreement no [228087]. This research was carried out with support of CAS—Centre for Advanced Study, at the Norwegian Academy of Science and Letters, within the research program SEFE.

Cite this article

Alvarez, L.H.R., Lungu, E. & Øksendal, B. Optimal multi-dimensional stochastic harvesting with density-dependent prices. Afr. Mat. 27, 427–442 (2016). https://doi.org/10.1007/s13370-015-0357-0

Keywords

- Optimal harvesting

- Interacting populations

- Itô diffusions

- Singular stochastic control

- Verification theorem

- Density-dependent prices

- Chattering policies