Abstract

This paper presents a statistical analysis and modeling of the thermophysical properties of ZnO-MWCNT/EG-water hybrid nanofluid using three artificial intelligence models, including multilayer perceptron neural network, radial basis function neural networks, and least square support vector machine (LSSVM). The thermal conductivity of the nanofluid was modeled using experimental data, and statistical parameters such as R-squared (R2), average absolute relative deviation (AARD %), root mean squared error, and standard deviation were employed to investigate the accuracy of the proposed models. The R2 values of 0.9926, 0.9951, and 0.9866 and AARD% values of 0.4996%, 0.3532%, and 0.6013% show the accuracy of the models for respective MLP, RBF, and LSSVM models. Among these models, the RBF model shows the best accuracy. The study demonstrates the potential of artificial intelligence methods in predicting the thermophysical properties of nanofluids, which can help minimize experimental time and cost for future work.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The enhancement of thermal properties of commercial oils is of paramount importance, given the significance of heat transfer in various industries such as power cycles, automotive, and refrigeration systems [1]. Extensive research has been conducted to improve the thermal properties of conventional fluids, with a particular focus on the use of nanomaterials (with an average particle size of 1 to 100 nm) to intensify heat transfer when uniformly dispersed in base fluids like oil, water, or ethylene glycol (EG) [2,3,4,5].

The remarkable properties of nanofluids are high stability, high thermal conductivity, and small size [6, 7]. The viscosity of nanofluids is improved by increasing the concentration and size of nanoparticles. Moreover, the thermal conductivity of nanofluids, which directly influences heat transfer, is improved by increasing temperature and concentration. These unique properties make nanofluids suitable for many applications, including thermal systems, fuel cells, heat exchangers, and car radiators [8,9,10,11,12].

Carbon nanotubes (CNTs) have been the subject of increased study due to their unique structural, mechanical, and electrical properties [13, 14]. Recent research has highlighted the significant potential of carbon nanotubes (CNTs) in enhancing the thermophysical properties of nanofluids, particularly in applications such as solar technologies and heat transfer. CNTs possess exceptional thermal conductivity, mechanical strength, and chemical stability, making them promising candidates for improving the thermal and optical properties of nanofluids [15]. In the context of solar technologies, nanofluids containing CNTs have been shown to enhance the efficiency of solar energy systems by improving heat transfer and optical properties, thereby contributing to the overall performance of these systems [16]. Although CNTs are expensive, they can enhance the thermal properties of fluids when used in combination with nanoparticles, resulting in a powerful and effective fluid. The most well-known cases are single-wall carbon nanotubes (SWCNTs), graphene oxide, double-wall carbon nanotubes (DWCNTs) and multi-wall carbon nanotubes (MWCNTs) [17,18,19,20,21].

The use of nanofluids, particularly hybrid nanofluids, has gained significant attention due to their potential to enhance heat transfer properties. Hybrid nanofluids have been the focus of recent research aimed at accurately predicting their thermophysical properties. For instance, a study by Bhanuteja et al. [1] developed machine learning algorithms to predict the thermophysical properties of hybrid nanofluids, demonstrating the potential for accurate estimation of these properties. Rashidi et al. [22] provided an updated and comprehensive review of the thermophysical properties of hybrid nanofluids, shedding light on the factors influencing these properties and proposing models for their accurate prediction. Rostami et al. [23] focused on the thermal conductivity modeling of nanofluids with ZnO particles, highlighting the use of artificial neural network approaches for accurate prediction of thermal conductivity. These recent studies underscore the growing interest in accurately predicting the thermophysical properties of hybrid nanofluids, particularly through the use of artificial intelligence methods. The development of accurate prediction models for hybrid nanofluids is essential for their effective utilization in various industrial applications, where improved heat transfer properties are of critical importance.

A computing method like artificial neural network (ANN) is an apt way for estimating the thermo-physical properties of nanofluids at less time and lower cost in comparison with the experiments [24] at low and high temperature conditions [25, 26]. For example, Alfaleh et al. [27] focused on the prediction of thermal conductivity and dynamic viscosity of nanofluids using support vector machines (SVMs). The study reviewed various research works on the forecasting and modeling of these properties of nanofluids with SVMs and their outcomes.

Onyiriuka [28] proposed a unique method for modeling the thermal conductivity of nanofluids. He utilized experimental data, modeling correlations from previous studies, and theoretical data streams to estimate the thermal conductivity of nanofluids. The study demonstrated the efficiency and effectiveness of this approach in predicting the enhancement in thermal conductivity, with the robust linear model emerging as the most efficient algorithm, exhibiting high accuracy on validation and test data. A statistical method named principal components analysis and back propagation network (BPN) model were employed by Yousefi et al. [29] to calculate the effective thermal conductivity of various nanofluids contains different nanoparticles, such as ZnO, CuO, Fe3O4, TiO2, and Al2O3 nanoparticles. The thermal conductivity of the aforementioned fluids was dependent on the thermal conductivity of nanoparticle, temperature, the volume fraction of nanoparticle, the thermal conductivity of base fluids, and the diameter of the nanoparticle. The net with one hidden layer whose performance was assessed by mean square error leads the best result. Absolute average deviation and correlation coefficient, which were used to compare the results with experiments and other models, were 1.47% and 0.9942, respectively.

The thermal conductivity of ZnO-MWCNT/EG-water nanofluid as a function of temperature and solid volume fraction was assessed by Hemmat Esfe et al. [30]. They presented a new correlation and a neural network model. The ANN model with two hidden layers and four neurons predicted experimental data accurately. They could validate their results with data published in the literature [31, 32]. Rostamian et al. [18] experimentally studied the thermal conductivity of CuO-SWCNTs-EG water nanofluid in terms of temperature and concentration. A precise correlation based on the experiments was developed by nonlinear regression to estimate the thermal conductivity. In addition, a feed-forward multilayer perceptron neural network was used to calculate the thermal conductivity and it was identified that based on the margin of deviation, ANN modeling can potentially have a high accuracy in predicting the physical properties of the nanofluids, especially for high-temperature applications and microfluidics and passive systems [33].

Shahsavar and Bahiraei [34] and other investigators [19, 26, 35] evaluated the thermal performance of single and two-phase systems, including the measurement of thermal conductivity and viscosity of the working fluid. For example, Shahsavar and Bahiraei [36] measured the thermal properties of a non-Newtonian hybrid nanofluid consisting of Fe3O4 and CNTs in terms of temperature and concentration. Moreover, multilayer perceptron ANN models with backpropagation rule were applied to predict the viscosity and thermal conductivity. Three approaches referred to as “resilient backpropagation,” “Quasi-Newton,” and “Bayesian regularization-based Levenberg–Marquardt” were used to obtain a suitable ANN model. Mean squared error, maximum absolute error, and coefficient of determination were utilized to estimate the accuracy of the model.

In this paper, MLP, RBF, and LSSVM methods are used to calculate the thermal conductivity of ZnO-MWCNT/EG-water nanofluid. To choose an appropriate network, root mean square error, correlation coefficient, and average absolute relative deviation are utilized. The results of modeling are compared with the experimental data and empirical correlation. It is worth saying that the novelty of this work is to develop a new ANN model for predicting the physical properties of a hybrid nanofluid. This adds to the economic viability of the projects and saves the ages of time. This is because one can estimate the physical properties of the nanofluid to ensure that it can have a plausible effect based on the target application. The developed model prevents additional experiments that are expensive and time-consuming though.

2 Details of the Intelligent Models

2.1 Multilayer Perceptron Neural Network (MLP)

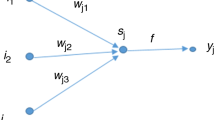

Multilayer perceptron (MLP) is a type of artificial neural network that consists of neurons with massively parallel interconnections, arranged in layers and receiving weights [37]. MLP has more than two layers, including an input layer, an output layer, and one or more hidden layers in between. Information always flows in one direction, from inputs to outputs, in a feed-forward manner. Each neuron (except input layer neurons) receives values from adjacent neurons through weighted connections. At each neuron, the weighted inputs are combined with bias values and used as the input argument of an activation function. [38]. In MLP, the number of neurons in the input and output layers depends on the dimension of inputs and outputs of the main problem. The number of hidden layers and their neurons are determined by the trial and error method to minimize the error values [39]. In this work, the MLP was trained using the back-propagation algorithm, the most widely used ANN method. The number of neurons in the input and output layers is obtained based on the conditions of the problem, as mentioned in Sect. 2.1. In contrast, determining the number of hidden layers and hidden neurons is debatable. It was mathematically proved by Cybenko [40] that the MLP using back-propagation could predict every nonlinear function accurately with only one hidden layer. Thus, in our designed neural network structure, there is only one hidden layer. The number of hidden neurons is typically determined by the trial and error method [41]. The structure and topology of the MLP model is presented in Fig. 1.

2.2 Radial Basis Function Neural Networks

Radial basis function (RBF) neural networks were proposed by many researchers [19, 42,43,44] and can be used for a wide range of applications such as function approximation, classification patterns, spline interpolations, clustering, and mixed methods [45,46,47]. An RBF neural network consists of three layers: input, output, and a hidden layer. The nodes within each layer are connected to the previous layer, as shown in Fig. 2. In this figure, n is the dimension of the input layer.

The hidden layer consists of k neurons and one bias neuron. This network is implemented according to Eq. (1) [48]:

where x is the input vector, \({c}_{i}\) is the center of the ith neuron in the hidden layer,\(\Vert x-{c}_{\text{i}}\Vert\) denotes the Euclidean distance, Q is the Gaussian function, \({\text{w}}_{i}\) is a weight from ith hidden unit to the output node and k is the number of hidden nodes. The Gaussian function Q with the spread \(\sigma\) is defined as follows [49]:

The parameters of the RBF model, such as the maximum number of neurons (MNN) and the spread of Gaussian function, are determined using the genetic algorithm (GA). Although the trial and error approach also can be used to determine these parameters, it is not recommended due to its time consuming nature. GA is one of the best optimization algorithms inspired by Darwinian evolutionary models. In this algorithm, a population of potential solutions is refined iteratively by the natural selection strategy. In this paper, a primary population size of 50 is considered to start the algorithm. The main principle of GA is "survival of the fittest," so the fitness function should be defined and calculated for each member. In this paper, RMSE is used as a fitness function to calculate the error between the model predictions and the target data. Following this process, the members who represent better solutions have more chances for reproduction than those representing poorer solutions. These better solutions are selected as parents. After that, some genetic operators, including crossover and mutation operators, are applied to parents for producing new offspring. Then, the fitness function is reused to evaluate and rank this new generation. These iterative operations are applied until the termination criteria are met (maximum iteration number of 30). Further details about this algorithm are available in published works [50]. The optimum values of spread and MNN were 1.2 and 17, respectively.

2.3 Least Square Support Vector Machine (LSSVM)

Support vector machine (SVM) has been introduced by Vapnik [51] and has been used for many classification and function estimation problems [52]. Using the concept of SVM, Suykens proposed least squared SVM (LSSVM) [53]. LSSVM is a powerful machine learning technique that has demonstrated its ability to learn complex nonlinear problems [54]. The most crucial difference between LSSVM and SVM is that SVM uses a quadratic optimization problem for training, while LSSVM utilizes linear equations [55]. Considering a given training set of N data points, where \({x}_{k}\in {R}^{M}\) is the multi-dimensional input data and \({y}_{k}\) is the dimensional output data, LSSVM can be obtained by formulating the problem as the following equation:

where \(\varphi \left(x\right)\) and \({w}^{T}\) are kernel function and the transposed output layer vector, respectively. b is the bias value [56].

In LSSVM, for calculation of w and b, Eq. (4) is minimized as cost function [56]:

Eventually, the resulting LSSVM model for the function prediction can be obtained by solving the following optimization problem (Eq. 5) [56]:

where K(x,xk) is a kernel function, and αk is the Lagrange multiplier. There are many kernel functions such as linear, polynomial, spline, and Gaussian. In this paper, Gaussian function has been used:

where σ is the width of the Gaussian function.

In the LSSVM method, two parameters, including γ and \({\upsigma }^{2}\), used in the mentioned equations, affect the accuracy of the method. These parameters should be specified by users. To determine the optimum values of the model’s parameters, the coupled simulated annealing (CSA) method is applied [57]. The values of γ and \({\sigma }^{2}\) were determined, respectively, to be 3,155,127.70 and 54.76.

3 Material and Methods

3.1 Data Gathering

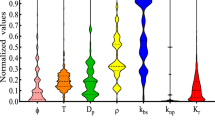

One of the steps that should be taken to present a reliable model is to utilize valid data with wide ranges [58, 59]. Data used in this study are derived from references [18]. This study computes the thermal conductivity ratio considering the following factors as the input variables: Volume concentration (φ (%)) and Temperature (°C). The statistical values of the mentioned parameters are listed in Table 1.

3.2 Model Development

In order to develop the model, the following formula is used for normalization, which produces a value between − 1 and 1. It has the same range of values for each input to the intelligent models. This can guarantee a stable convergence of weight and biases.

After normalizing the data, the data set is grouped into two sub-sets of testing and training data. This process is repeated several times for two following reasons:

-

find a homogeneous distribution in each sub-set, and

-

prevent local aggregation of data points.

In this paper, 80% of the data points belongs to the subset of the training, and the rest 20% are members of the subset of the testing.

To evaluate the precision of the proposed models, several statistical parameters such as average absolute relative deviation (AARD%), R-squared (R2), standard deviation (SD), and root mean squared error (RMSE) are utilized (Eqs. 8–11) [60, 61]. In these formulas \(\lambda\) denotes the thermal conductivity ratio.

4 Results and Discussion

Figure 3 compares the RMSE of the networks with a different number of hidden neurons. As can be seen in Fig. 3, the MLP with four hidden neurons has the least cost, so it has the best performance. Also, Fig. 4 shows the convergence process of GA during the optimization process.

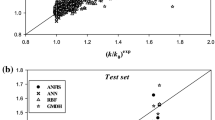

Table 2 shows the results of the aforementioned statistical parameters for all the developed models. As it is shown in this table, the RBF model is better than the other two methods as the value of the R2 is the highest, and the values of AARD%, RMSE, and SD are the lowest.

Figure 5 shows the schematic representation of these evaluation parameters. As it is evident in Fig. 5a, the R2 value for the RBF model is higher than those for the other two methods. In addition, the lowest value of RMSE for the RBF method shown in Fig. 5b indicates the superiority of this method to other ones. It should be noted that the mentioned comparison includes the total dataset.

Figure 6 represents the error distribution for these methods. In the analysis of the error distribution diagrams, two factors are essential: the distribution spread (σ) and the location of the distribution’s peak (µ). According to Fig. 6b, the amount of σ in the RBF method is the lowest, which indicates that the standard deviation in the error values is the lowest. Moreover, the location of the distribution’s peak (µ) is sufficiently close to zero.

As it can be seen in Table 2, the R2 was obtained greater than 0.98 for all the developed methods, which indicates these models are valid and acceptable. Since the RBF method owns the best performance, Fig. 7 shows just the cross-plot for the RBF method. In using the correlation coefficient to evaluate the performance of the method, it is enough to consider two things: firstly, the approach of the R2 to value 1 and secondly the closeness of accumulation of data points to the 45° line. In the RBF model, the R2 value is obtained 0.995, and the location of most of the data points is close to the 45° line. This indicates the RBF is the best predictor for the thermal conductivity ratio. Figure 8 is drawn to indicate the relative deviations of predicted values of the RBF model versus the measured thermal resistance data points. Moreover, Fig. 9 shows a very close match between the RBF model prediction and target values versus the index of the data points. As it is shown in this figure, there is a great agreement between the predicted and measured data for the RBF model. Notably, Fig. 8 compares the accuracy of the model against the target values, which not only validated the model but also shows that the model is not noisy. As can be seen, the target values overlap the model prediction line showing that the model has sufficient accuracy.

5 Conclusion

The objective of this study was to predict the thermal conductivity ratio of ZnO-MWCNT/EG-water hybrid nanofluid. In this regard, three intelligent models, including MLP, RBF, and LSSVM, were utilized. In all the developed models, the same dataset, which included wide ranges of volume concentration, temperature, and thermal conductivity ratio values, was used for training and testing the developed networks. The aim of using these three intelligent models was to access a model with the best quality for predicting the thermal conductivity ratio. In this study, the graphical graphs and statistical parameters were utilized to evaluate the accuracy of the mentioned methods. Comparing the MLP, RBF, and LSSVM models, it was revealed that the RBF model was the most accurate one.

References

Bhanuteja, S.; Srinivas, V.; Moorthy, C.V.; Kumar, S.J.; Raju, B.L.L.: Prediction of thermophysical properties of hybrid nanofluids using machine learning algorithms. Int. J. Interact. Design Manuf. (IJIDeM) (2023). https://doi.org/10.1007/s12008-023-01293-w

Wohld, J.; Beck, J.; Inman, K.; Palmer, M.; Cummings, M.; Fulmer, R.; Vafaei, S.: Hybrid nanofluid thermal conductivity and optimization: original approach and background. Nanomaterials 12, 2847 (2022)

Esfe, M.H.; Toghraie, D.; Alidoust, S.; Amoozadkhalili, F.; Ardeshiri, E.M.: Investigating the rheological behavior of a hybrid nanofluid (HNF) to present to the industry. Heliyon 8(12), e11561 (2022)

Esfe, M.H.; Esfandeh, S.; Amiri, M.K.; Afrand, M.: A novel applicable experimental study on the thermal behavior of SWCNTs (60%)-MgO (40%)/EG hybrid nanofluid by focusing on the thermal conductivity. Powder Technol. 342, 998–1007 (2019)

Sohrabi, N.; Haddadvand, R.; Nabi, H.: Numerical investigation of the effect of fluid nanohybrid type and volume concentration of fluid on heat transfer and pressure drop in spiral double tube heat exchanger equipped with innovative conical turbulator. Case Stud. Therm. Eng. 60, 104751 (2024)

Peyghambarzadeh, S.M.; Sarafraz, M.M.; Vaeli, N.; Ameri, E.; Vatani, A.; Jamialahmadi, M.: Forced convective and subcooled flow boiling heat transfer to pure water and n-heptane in an annular heat exchanger. Ann. Nucl. Energy 53, 401–410 (2013)

Salari, E.; Peyghambarzadeh, M.; Sarafraz, M.M.; Hormozi, F.: Boiling heat transfer of alumina nano-fluids: role of nanoparticle deposition on the boiling heat transfer coefficient, periodica polytechnica. Chem. Eng. 60, 252–258 (2016)

Karimipour, A.; Bagherzadeh, S.A.; Taghipour, A.; Abdollahi, A.; Safaei, M.R.: A novel nonlinear regression model of SVR as a substitute for ANN to predict conductivity of MWCNT-CuO/water hybrid nanofluid based on empirical data. Physica A 521, 89–97 (2019)

Esfe, M.H.; Hajmohammad, H.; Toghraie, D.; Rostamian, H.; Mahian, O.; Wongwises, S.: Multi-objective optimization of nanofluid flow in double tube heat exchangers for applications in energy systems. Energy 137, 160–171 (2017)

Kumar, A.; Hassan, M.; Chand, P.: Heat transport in nanofluid coolant car radiator with louvered fins. Powder Technol. 376, 631–642 (2020)

Sayed, E.T.; Abdelkareem, M.A.; Mahmoud, M.S.; Baroutaji, A.; Elsaid, K.; Wilberforce, T.; Maghrabie, H.M.; Olabi, A.: Augmenting performance of fuel cells using nanofluids. Therm. Sci. Eng. Prog. 25, 101012 (2021)

Sahin, F.; Acar, M.C.; Genc, O.: Experimental determination of NiFe2O4-water nanofluid thermophysical properties and evaluation of its potential as a coolant in polymer electrolyte membrane fuel cells. Int. J. Hydrog. Energy 50, 1572–1583 (2024)

Rudyak, V.Y.; Pryazhnikov, M.I.; Minakov, A.V.; Shupik, A.A.: Comparison of thermal conductivity of nanofluids with single-walled and multi-walled carbon nanotubes. Diam. Relat. Mater. 139, 110376 (2023)

Pabst, W.; Hříbalová, S.: Modeling the thermal conductivity of carbon nanotube (CNT) nanofluids and nanocomposites–a fresh restart. Int. J. Heat Mass Transf. 206, 123941 (2023)

Wang, J.; Yang, X.; Klemeš, J.J.; Tian, K.; Ma, T.; Sunden, B.: A review on nanofluid stability: preparation and application. Renew. Sustain. Energy Rev. 188, 113854 (2023)

Ghalandari, M.; Maleki, A.; Haghighi, A.; Shadloo, M.S.; Nazari, M.A.; Tlili, I.: Applications of nanofluids containing carbon nanotubes in solar energy systems: a review. J. Mol. Liq. 313, 113476 (2020)

Sarafraz, M.M.; Peyghambarzadeh, S.M.: Experimental study on subcooled flow boiling heat transfer to water–diethylene glycol mixtures as a coolant inside a vertical annulus. Exp. Therm. Fluid Sci. 50, 154–162 (2013)

Esfe, M.H.; Esfandeh, S.; Saedodin, S.; Rostamian, H.: Experimental evaluation, sensitivity analyzation and ANN modeling of thermal conductivity of ZnO-MWCNT/EG-water hybrid nanofluid for engineering applications. Appl. Therm. Eng. 125, 673–685 (2017)

Shahsavar, A.; Bahiraei, M.: Experimental investigation and modeling of thermal conductivity and viscosity for non-Newtonian hybrid nanofluid containing coated CNT/Fe3O4 nanoparticles. Powder Technol. 318, 441–450 (2017)

Esfe, M.H.; Rostamian, H.; Sarlak, M.R.; Rejvani, M.; Alirezaie, A.: Rheological behavior characteristics of TiO2-MWCNT/10w40 hybrid nano-oil affected by temperature, concentration and shear rate: an experimental study and a neural network simulating. Physica E Low-Dimens. Syst. Nanostruct. 94, 231–240 (2017)

Esfe, M.H.; Afrand, M.: Mathematical and artificial brain structure-based modeling of heat conductivity of water based nanofluid enriched by double wall carbon nanotubes. Physica A 540, 120766 (2020)

Rashidi, M.M.; Nazari, M.A.; Mahariq, I.; Assad, M.E.H.; Ali, M.E.; Almuzaiqer, R.; Nuhait, A.; Murshid, N.: Thermophysical properties of hybrid nanofluids and the proposed models: an updated comprehensive study. Nanomaterials 11, 3084 (2021)

Rostami, S.; Aghakhani, S.; Pordanjani, A.H.; Afrand, M.; Cheraghian, G.; Oztop, H.F.; Shadloo, M.S.: A review on the control parameters of natural convection in different shaped cavities with and without nanofluid. Processes 8, 1011 (2020)

Esfe, M.H.; Zabihi, F.; Rostamian, H.; Esfandeh, S.: Experimental investigation and model development of the non-Newtonian behavior of CuO-MWCNT-10w40 hybrid nano-lubricant for lubrication purposes. J. Mol. Liq. 249, 677–687 (2018)

Esfe, M.H.; Rostamian, H.; Esfandeh, S.; Afrand, M.: Modeling and prediction of rheological behavior of Al2O3-MWCNT/5W50 hybrid nano-lubricant by artificial neural network using experimental data. Physica A 510, 625–634 (2018)

Rostamian, S.H.; Biglari, M.; Saedodin, S.; Esfe, M.H.: An inspection of thermal conductivity of CuO-SWCNTs hybrid nanofluid versus temperature and concentration using experimental data, ANN modeling and new correlation. J. Mol. Liq. 231, 364–369 (2017)

Alfaleh, A.; Khedher, N.B.; Eldin, S.M.; Alturki, M.; Elbadawi, I.; Kumar, R.: Predicting thermal conductivity and dynamic viscosity of nanofluid by employment of Support Vector Machines: a review. Energy Rep. 10, 1259–1267 (2023)

Onyiriuka, E.: Predictive modelling of thermal conductivity in single-material nanofluids: a novel approach. Bull. Natl. Res. Cent. 47, 140 (2023)

Sarafraz, M.M.; Arjomandi, M.: Demonstration of plausible application of gallium nano-suspension in microchannel solar thermal receiver: experimental assessment of thermo-hydraulic performance of microchannel. Int. Commun. Heat Mass Transfer 94, 39–46 (2018)

Sarafraz, M.M.; Arjomandi, M.: Thermal performance analysis of a microchannel heat sink cooling with Copper Oxide-Indium (CuO/In) nano-suspensions at high-temperatures. Appl. Therm. Eng. 137, 700–709 (2018)

Esfe, M.H.; Saedodin, S.; Naderi, A.; Alirezaie, A.; Karimipour, A.; Wongwises, S.; Goodarzi, M.; Dahari, M.B.: Modeling of thermal conductivity of ZnO-EG using experimental data and ANN methods. Int. Commun. Heat Mass Transf. 63, 35–40 (2015)

Yousefi, F.; Mohammadiyan, S.; Karimi, H.: Application of artificial neural network and PCA to predict the thermal conductivities of nanofluids. Heat Mass Transf. 52, 2141–2154 (2016)

Sarafraz, M.M.; Hormozi, F.: Convective boiling and particulate fouling of stabilized CuO-ethylene glycol nanofluids inside the annular heat exchanger. Int. Commun. Heat Mass Transf. 53, 116–123 (2014)

Sarafraz, M.M.; Hormozi, F.: Experimental study on the thermal performance and efficiency of a copper made thermosyphon heat pipe charged with alumina–glycol based nanofluids. Powder Technol. 266, 378–387 (2014)

Sarafraz, M.M.; Hormozi, F.: Intensification of forced convection heat transfer using biological nanofluid in a double-pipe heat exchanger. Exp. Therm. Fluid Sci. 66, 279–289 (2015)

Sarafraz, M.M.; Hormozi, F.; Kamalgharibi, M.: Sedimentation and convective boiling heat transfer of CuO-water/ethylene glycol nanofluids. Heat Mass Transf. 50, 1237–1249 (2014)

Javed, Y.; Rajabi, N.: Multi-layer perceptron artificial neural network based IoT botnet traffic classification. In: Arai, K., Bhatia, R., Kapoor S. (eds.) Proceedings of the Future Technologies Conference (FTC), Vol. 1, pp. 973–984. Springer, New York (2020)

Xavier-de-Souza, S.; Suykens, J.A.; Vandewalle, J.; Bollé, D.: Coupled simulated annealing. IEEE Trans. Syst. Man Cyber. B 40, 320–335 (2010)

Choldun, R.M.I.; Santoso, J.; Surendro, K.: Determining the number of hidden layers in neural network by using principal component analysis. In: Bi, Y., Bhatia, R., Kapoor, S. (eds.) Intelligent Systems and Applications: Proceedings of the 2019 Intelligent Systems Conference (IntelliSys), Vol. 2, pp. 490–500. Springer, New York (2020)

Tatar, A.; Najafi-Marghmaleki, A.; Barati-Harooni, A.; Gholami, A.; Ansari, H.; Bahadori, M.; Kashiwao, T.; Lee, M.; Bahadori, A.: Implementing radial basis function neural networks for prediction of saturation pressure of crude oils. Pet. Sci. Technol. 34, 454–463 (2016)

Najafi-Marghmaleki, A.; Tatar, A.; Barati-Harooni, A.; Mohammadi, A.H.: A GEP based model for prediction of densities of ionic liquids. J. Mol. Liq. 227, 373–385 (2017)

Sarafraz, M.M.; Peyghambarzadeh, S.M.: Influence of thermodynamic models on the prediction of pool boiling heat transfer coefficient of dilute binary mixtures. Int. Commun. Heat Mass Transf. 39, 1303–1310 (2012)

Park, J.; Sandberg, I.W.: Approximation and radial-basis-function networks. Neural Comput. 5, 305–316 (1993)

Park, J.; Sandberg, I.W.: Universal approximation using radial-basis-function networks. Neural Comput. 3, 246–257 (1991)

Leonard, J.A.; Kramer, M.A.; Ungar, L.H.: Using radial basis functions to approximate a function and its error bounds. IEEE Trans. Neural Netw. 3, 624–627 (1992)

Nahas, J.: A Survey of Artificial Neural Networks and Semantic Segmentation. Int. J. Adv. Res. Comput. Sci. 8, 2590–2596 (2017)

Orr, M.J.: Introduction to radial basis function networks, Technical Report, Center for Cognitive Science, University of Edinburgh, (1996)

Jang, J.-S.; Sun, C.-T.: Functional equivalence between radial basis function networks and fuzzy inference systems. IEEE Trans. Neural Netw. 4, 156–159 (1993)

Rouhani, M.; Javan, D.S.: Two fast and accurate heuristic RBF learning rules for data classification. Neural Netw. 75, 150–161 (2016)

Svozil, D.; Kvasnicka, V.; Pospichal, J.: Introduction to multi-layer feed-forward neural networks. Chemom. Intell. Lab. Syst. 39, 43–62 (1997)

Chen, S.; Mulgrew, B.; Grant, P.M.: A clustering technique for digital communications channel equalization using radial basis function networks. IEEE Trans. Neural Netw. 4, 570–590 (1993)

Buchtala, O.; Klimek, M.; Sick, B.: Evolutionary optimization of radial basis function classifiers for data mining applications. IEEE Trans. Syst. Man Cybern. B 35, 928–947 (2005)

Yingwei, L.; Sundararajan, N.; Saratchandran, P.: A sequential learning scheme for function approximation using minimal radial basis function neural networks. Neural Comput. 9, 461–478 (1997)

Yasin, Z.M.; Salim, N.A.; Aziz, N.; Mohamad, H.; Wahab, N.: Prediction of solar irradiance using grey Wolf optimizer least square support vector machine. Indones. J. Electr. Eng. Comput. Sci. 17, 10–17 (2020)

Vapnik, V.: The nature of statistical learning theory, Springer science & business media (2013)

Samui, P.; Kothari, D.: Utilization of a least square support vector machine (LSSVM) for slope stability analysis. Sci. Iranica 18, 53–58 (2011)

Cybenko, G.: Approximation by superpositions of a sigmoidal function. Mathe. Control Signal Syst. 2, 303–314 (1989)

Suykens, J.A.; Vandewalle, J.: Least squares support vector machine classifiers. Neural. Process. Lett. 9, 293–300 (1999)

Parkin, D.M.; Bray, F.; Ferlay, J.; Pisani, P.: Global cancer statistics. CA: A Cancer J. Clin. 55, 74–108 (2005)

Rostamian, H.; Lotfollahi, M.N.: A novel statistical approach for prediction of thermal conductivity of CO2 by Response Surface Methodology. Physica A: Stat. Mech. Appl. 527, 121175 (2019)

Sharma, C.; Ojha, C.: Statistical parameters of hydrometeorological variables: standard deviation, SNR, skewness and kurtosis, Advances in water resources engineering and management: select proceedings of TRACE 2018, pp. 59-70. Springer, (2020)

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

There is no conflict of interest in this paper.

Appendix A

Appendix A

Thermal conductivity ratio data that used in this work are listed in Table 1. The thermal conductivity of ZnO-MWCNT/EG-water hybrid nanofluid was measured in the 0.02%, 0.05%, 0.1%, 0.25%, 0.5%, 0.75%, and 1% solid volume fractions. As can be seen from Table 1, the temperature ranges were 30–50 °C.

See Table

3.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zamany, M.S., Taghavi Khalil Abad, A. Statistical Analysis and Accurate Prediction of Thermophysical Properties of ZnO-MWCNT/EG-Water Hybrid Nanofluid Using Several Artificial Intelligence Methods. Arab J Sci Eng (2024). https://doi.org/10.1007/s13369-024-09565-7

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s13369-024-09565-7