Abstract

A Wireless Sensor Network (WSN) is a wireless system of uniformly distributed, autonomous smart sensors used for physical or environmental surveillance. Because such networks are extremely resource-constrained, their major concern is efficient energy consumption: network sustainability depends on transmission, processing rate, and the acquisition and dissemination of sensed data. Conserving energy entails reducing transmission overheads, which can be achieved by incorporating energy-efficient routing and clustering techniques. Minimizing energy dissipation, and thereby extending the network's lifespan, can be framed as an optimization problem, and nature-inspired meta-heuristic algorithms are now widely used to solve such problems. In this context, this paper presents an optimal clustered routing protocol inspired by the luminescence behavior of glowworms. The prime purpose of Glowworm Swarm Optimization (GSO) combined with an efficient routing algorithm is to enhance coverage and connectivity across the network and ensure seamless transmission of messages. The objective function considers residual energy, compactness (intra-cluster distance), and separation (inter-cluster distance) to provide a complete routing solution for multi-hop communication between the Cluster Head and the Sink. The solution efficiency of the proposed technique is compared with that of Particle Swarm Optimization, the Firefly Algorithm, the Grey Wolf Optimizer, the Genetic Algorithm, and the Bat Algorithm, and the findings indicate that our technique outperforms the others, as the convergence behavior of glowworm optimization makes it highly likely to provide a globally optimized solution for multi-objective optimization problems.



1 Introduction

Wireless sensor networks (WSNs) consist of self-organizing sensing units, randomly distributed to collect, evaluate, and transmit data from a target region on an ad hoc basis to the location required by the underlying application. WSNs arose from the need to gather information about physical environmental factors through spatially distributed sensing motes that capture data and relay it to the Base Station. The main objectives of a WSN are to increase the network lifetime and to maintain a high network-wide connectivity ratio, which improves utilization and minimizes network-wide latency. Because energy reserves are limited, developing and maintaining WSNs is demanding. Since a sensing mote spends most of its energy on data reception and transmission, conventional routing relies on the shortest path to deliver data to the destination efficiently and effectively. However, when large volumes of data flow from source to sink, the network suffers from the "energy hole" problem: nodes near the sink or along the shortest path consume more energy than others, which causes an energy imbalance and shortens the network lifespan. Efficient routing algorithms are therefore needed to attain a higher quality of service. Significant work has been reported on prolonging network life, and studies have proposed a range of practices to make optimal use of the constrained resources of a WSN. Meta-heuristic optimization plays a pivotal role in WSNs, both in addressing the challenges of coverage and connectivity and in extending network lifespan. Combined with a data aggregation strategy, meta-heuristic optimization aims to reduce network-wide energy consumption.
Because of their efficiency in reaching optimal solutions, meta-heuristics are influential and are designed to avoid becoming trapped in local optima. GSO is a nature-inspired meta-heuristic that emulates glowworms acting according to simple rules. GSO has been adapted for wireless sensor networks with random routes: uneven clustering is established with an improved GSO, and the ideal cluster centroid is designated to relay information from cluster members to the base station, extending system life and reducing energy consumption. GSO readily accommodates both local and global adaptation, which makes it suitable for a structured search over several solutions when optimizing multiple objective functions with similar or distinct characteristics. A routing protocol controls and manages the data stream: a datagram leaving its origin could take any of several distinct routes to its destination, and the routing protocol determines the optimal, shortest path. Since sensor nodes have limited batteries, and data distribution and networking are the main resource-consuming tasks in a WSN, efficient clustered-routing mechanisms are essential.

1.1 Major contributions

This paper makes the following contributions to address the above-mentioned problems of insufficient coverage range and optimization inefficiencies that reduce computational accuracy:

1. This paper introduces a GSO-based solution that accounts for energy-intensive sensor activity. Because sensor mobility contributes substantially to energy dissipation, GSO helps reduce energy consumption by minimizing the number of moving sensors and the distances they travel.

2. Clustered routing is a probabilistic technique in which self-assembly and re-clustering occur at random, so that energy consumption is continuously distributed across the nodes of the network.

3. An efficient routing scheme ensures data gathering together with consistent communication between the clusters and the base station, i.e. the sink node. After data dissemination, clustered routing performs data accumulation and fusion to limit the number of messages relayed to the base station.

4. The scheme maintains the coverage of battery-limited target sensors and is evaluated on factors such as the number of operational nodes per iteration, the energy expended per transmission, and the mean energy consumed by one node per data transfer.

The aforesaid contributions and the related deliberations are analyzed and discussed in Sects. 3 and 4, where the proposed protocols are differentiated from existing protocols. Section 2 presents research outcomes related to sensor coverage in wireless networks.

2 Related work

Network targets are often modeled as a set of symmetric points in free space within the sensing zone. Although sensors with a stochastic sensing model usually have no hard tracking limit, computing the detection probability accurately requires considering all functional sensing units, which is computationally demanding. Because sensing capability and detection probability decrease as the distance between a sensing mote and its target increases, the influence of distant sensors can largely be neglected. To address this, studies have focused on energy-efficient target coverage in WSNs, where sensors are grouped into successively activated sets and several sensors may cover a particular target redundantly. This leads to the Connected Set Covers (CSC) problem, which seeks the maximum number of cover sets connected to the base station (Raton 2008). A multi-swarm cooperative particle swarm optimizer (MCPSO), motivated by the concept of symbiosis in nature, has been introduced as a fuzzy modeling technique for identifying and controlling nonlinear dynamic processes (Niu et al. 2008). A substantial amount of work has been reported on a coverage control scheme for heterogeneous sensor networks based on the elitist non-dominated sorting genetic algorithm (NSGA-II), compared with the conventional uniform sensing model. The algorithm is implemented in a distributed manner using a cluster-based layout, and an improved probabilistic encoding captures both sensing-radius adjustment and sensor availability (Jia et al. 2009). A systematic approach to scheduling jobs on computational grids based on fuzzy Particle Swarm Optimization (PSO) has also been proposed (Liu et al. 2010). Another approach integrates the main features of GAs and PSO and uses a fuzzy decision-making system to interpret the results generated during the optimization process (Valdez et al. 2011).
Another study proposes an efficient routing optimization technique that modifies the onlooker-bee and scout-bee equations of the original artificial bee colony (ABC) algorithm, and incorporates further control parameters, such as factors that speed up convergence and a mutation probability, to extend network lifetime (Yu et al. 2013). The Distributed Lifetime Coverage Optimization (DiLCO) protocol maintains coverage and extends the lifetime of a wireless sensor network. It first partitions the network into sub-regions using a divide-and-conquer approach and then applies two energy-efficient strategies: a leader election in each sub-region and a schedule of node activities computed through optimization by each elected leader (Idrees et al. 2015). An Adaptive Genetic Algorithm (AGA) combined with an enhanced binary ant colony algorithm has been used to achieve optimal network coverage. The AGA adjusts its crossover and mutation probabilities according to the state of each individual during the search for optimal parameters, maintaining population diversity and preventing premature convergence. The binary ant colony algorithm produces the initial population of the genetic algorithm, from which a better solution is then found; this improves the convergence rate and solution efficiency and helps avoid local optima (Tian et al. 2016). A Socratic algorithm jointly considers cluster-head selection, the transmission route from individual nodes to the cluster head, and the optimization of the mobile sink's path; its mobile-sink data-gathering scheme is based on a swarm of artificial bees. The algorithm saves resources, improves the performance and reliability of network data gathering, and prolongs network life (Yue et al. 2016).
A novel Artificial Fish Swarm Algorithm (AFSA) simulates fish behavior to search the solution space: the global search ability of the artificial fish swarm locates a promising region, after which a particle swarm algorithm rapidly performs a local search, adjusting the coordinates of WSN nodes to eliminate coverage overlaps and blind areas (Xia 2016). A distributed shortest-path data-gathering method optimizes network lifetime for connected target coverage in both stationary and remote multi-hop WSNs; data packets from the source nodes are routed to a sink along energy-efficient shortest paths (in terms of hop count), and each target is assumed to be monitored by at least one sensing unit (Biswas et al. 2018). Another study notes that the main way to save energy in a WSN is to activate sets of sensors in turn, with the objective of ensuring that the remaining sensors can still carry out target tracking. The sensed information is first analyzed to design an empirical formulation; a column-generation algorithm combined with the GRASP meta-heuristic then determines the activation times of the sensors (Lersteau et al. 2018). An Enhanced Whale Group Optimization has been used to boost node search capability and speed up the global search; it initializes the positions of the whale population with backward learning, which effectively prevents poorly positioned individuals (Wang et al. 2019). Finally, a novel enhanced clustering scheme with Multiple Mobile Sinks (MMS) based on Ant Colony Optimization (ACO) improves the data-collection efficiency and network life of WSNs. With the ACO-based mobile-sink approach, routing becomes more appropriate and more resilient to changes across the network.
MMS reduced the time required to collect data from all clusters; the shorter data-transmission interval enhanced network lifespan and reduced the data loss rate (Krishnan et al. 2019). To increase network capacity and lifespan, backup nodes are incorporated using a probabilistic greedy technique whose primary objective is to select a minimal number of K-means clusters (Das et al. 2019). To address sensor node concentration and coverage overlap, CMEST organizes the network into clusters, designating Cluster Heads and normal sensing nodes according to their residual energy. Intra-cluster routing is achieved with a self-stabilizing algorithm, whereas inter-cluster routes are constructed with Borůvka's minimum spanning tree (MST) algorithm. Whenever a CH's energy is depleted or links within a cluster are broken because of mote failures, a reconstruction process is triggered; it relinks the nodes and replaces dead CHs without reorganizing the clusters, which saves energy across the network (Chen et al. 2019). The issues of sink and sensor placement, feasible coverage, connectivity, and data routing are addressed jointly by a single SPRC (Sensor Placement, Scheduling and Routing with Connectivity) protocol, which identifies the optimal sensor and sink positions, the active/backup durations of the sensing devices, and the data transmission routes from each active sensor to the base station (Kabakulak 2019). The impact of the sensing range on sweep coverage has been investigated with the aim of shortening mobile sensor route lengths (Gao et al. 2019). Another study focuses on cover-set-oriented target coverage, aiming to provide energy-optimized minimum paths from the sink to the sensor nodes and from each cover set to the sink, thereby improving network lifespan and lowering the average energy consumption rate (Katti 2019).

3 Improved GSO-based “coverage and connectivity”

"Connectivity & Coverage" are essential to a stable wireless network (Jia et al. 2009). Target coverage based on Glowworm Swarm Optimization (GSO) aims primarily to track targets with the available sensing units. It is a deterministic approach that decides whether all targets in the sensing network are covered by at least "n predefined sensors"; during the movement phase, each glowworm selects a neighbor with a higher luciferin concentration than its own (Chen et al. 2019).

For global search, the location-update model of the standard Glowworm Optimization method retains only local information, which results in slow convergence across the network, and optimality degrades when a glowworm updates its position in all directions simultaneously. To let the glowworms update their positions using local and global information simultaneously, the update rule is modified as:

where \(y_{i}(t)\) is the position of glowworm \(i\) at instant \(t\), \(y_{j}(t)\) is the position of a neighbor in its neighborhood, \(a_{1}\) and \(a_{2}\) are acceleration constants, \(r\) is a random value, and \(y_{g}(t)\) is the global best position found by the glowworms.
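The modified rule combines a local pull toward a neighbor \(y_{j}(t)\) with a global pull toward \(y_{g}(t)\). As an illustration only, the sketch below assumes a PSO-style form built from the symbols just defined, \(y_{i}(t+1) = y_{i}(t) + a_{1} r (y_{j}(t) - y_{i}(t)) + a_{2} r (y_{g}(t) - y_{i}(t))\); the exact form used in this work may differ, and the function name `hybrid_update` and its default constants are hypothetical.

```python
import numpy as np


def hybrid_update(y_i, y_j, y_g, a1=1.5, a2=1.5, rng=None):
    """Assumed hybrid GSO position update (PSO-style form, not confirmed).

    y_i: current position of glowworm i
    y_j: position of the selected neighbour (local information)
    y_g: global best position (global information)
    a1, a2: acceleration constants (hypothetical default values)
    """
    rng = np.random.default_rng() if rng is None else rng
    r = rng.random()  # a single random value in [0, 1), as in the text
    local_term = a1 * r * (y_j - y_i)    # pull toward the neighbour
    global_term = a2 * r * (y_g - y_i)   # pull toward the global best
    return y_i + local_term + global_term


# Usage: a glowworm at the origin, a neighbour on the x-axis and the
# global best on the y-axis.
rng = np.random.default_rng(42)
new_pos = hybrid_update(np.array([0.0, 0.0]), np.array([1.0, 0.0]),
                        np.array([0.0, 1.0]), rng=rng)
print(new_pos)
```

Using one value of \(r\) couples the local and global pulls; drawing an independent random value for each term, as standard PSO does, is a common alternative.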

The GSO algorithm has three phases: luciferin update, movement (optimization), and neighborhood-range update. The luciferin level is updated according to the rule:

$$Lu_{g}(t) = (1 - \delta)\,Lu_{g}(t-1) + \eta\,O(y_{g}(t))$$

where \(Lu_{g}(t)\) is the luciferin level of glowworm \(g_{w}\) at time \(t\), \(\delta\) is the luciferin decay constant \((0 < \delta < 1)\), \(\eta\) is the luciferin enhancement constant, and \(O(y_{g}(t))\) is the objective function value of the \(g^{th}\) glowworm at time \(t\). The probability that glowworm \(g_{w}\) moves toward a neighbor \(p\) is given by

$$M_{gp}(t) = \frac{Lu_{p}(t) - Lu_{g}(t)}{\sum_{k \in N_{g}(t)} \left(Lu_{k}(t) - Lu_{g}(t)\right)}$$

where

$$N_{g}(t) = \{\, p : dis_{gp}(t) < dis_{d}^{g}(t),\; Lu_{g}(t) < Lu_{p}(t) \,\}$$

is the neighborhood set of glowworm \(g_{w}\) at time \(t\), \(dis_{gp}(t)\) is the Euclidean distance between glowworms \(g_{w}\) and \(p\) at time \(t\), and \(dis_{d}^{g}(t)\) is the variable neighborhood range of glowworm \(g_{w}\) at time \(t\). Glowworm \(g_{w}\) selects a glowworm \(p \in N_{g}(t)\) with probability \(M_{gp}(t)\) and moves toward it (Valdez et al. 2011):

$$y_{g}(t+1) = y_{g}(t) + \alpha\,\frac{y_{p}(t) - y_{g}(t)}{\lVert y_{p}(t) - y_{g}(t) \rVert}$$

where \(y_{g}(t) \in Z^{m}\) is the position of glowworm \(g_{w}\) at time \(t\) in the \(m\)-dimensional space \(Z^{m}\), \(\lVert \cdot \rVert\) is the Euclidean norm, and \(\alpha > 0\) is the step size. The neighborhood range of each glowworm is then updated by the rule:

$$dis_{d}^{g}(t+1) = \min\left\{ dis_{s},\; \max\left\{ 0,\; dis_{d}^{g}(t) + \gamma\left(h_{t} - |N_{g}(t)|\right) \right\} \right\}$$

where \(dis_{s}\) is the maximum sensing range, \(\gamma\) is a scalar constant, and \(h_{t}\) is a parameter that sets the desired number of neighbors.
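The three GSO phases can be sketched as one synchronous iteration in NumPy: luciferin update with decay \(\delta\) and enhancement \(\eta\), probabilistic choice of a brighter neighbor within range \(dis_{d}^{g}\), a step of length \(\alpha\) toward it, and the range update toward \(h_{t}\) neighbors clipped to \([0, dis_{s}]\). The function name and the parameter values are illustrative assumptions, not the settings used in the experiments.

```python
import numpy as np


def gso_step(pos, luc, objective, r_d, rng, delta=0.4, eta=0.6,
             gamma=0.08, h_t=5, alpha=0.03, dis_s=5.0):
    """One synchronous GSO iteration (illustrative parameter values).

    pos: (n, m) positions y_g(t); luc: (n,) luciferin Lu_g(t-1);
    r_d: (n,) neighbourhood ranges dis_d^g(t).
    """
    n = len(pos)
    # Phase 1: Lu_g(t) = (1 - delta) * Lu_g(t-1) + eta * O(y_g(t))
    luc = (1.0 - delta) * luc + eta * np.array([objective(y) for y in pos])
    new_pos, new_r_d = pos.copy(), r_d.copy()
    for g in range(n):
        dist = np.linalg.norm(pos - pos[g], axis=1)
        # N_g(t): glowworms inside the range and brighter than g
        nbrs = np.flatnonzero((dist < r_d[g]) & (luc > luc[g]))
        if nbrs.size > 0:
            # Phase 2a: M_gp(t) proportional to the luciferin difference
            diff = luc[nbrs] - luc[g]
            p = nbrs[rng.choice(nbrs.size, p=diff / diff.sum())]
            # Phase 2b: step of length alpha toward the chosen neighbour
            step = pos[p] - pos[g]
            norm = np.linalg.norm(step)
            if norm > 0:
                new_pos[g] = pos[g] + alpha * step / norm
        # Phase 3: adapt the range toward h_t neighbours, clipped to [0, dis_s]
        new_r_d[g] = min(dis_s, max(0.0, r_d[g] + gamma * (h_t - nbrs.size)))
    return new_pos, luc, new_r_d


# Usage: 30 glowworms maximising an objective that peaks at the origin.
rng = np.random.default_rng(1)
pos = rng.uniform(-1.0, 1.0, size=(30, 2))
luc = np.full(30, 5.0)
r_d = np.full(30, 1.0)
objective = lambda y: -float(np.sum(y ** 2))
for _ in range(100):
    pos, luc, r_d = gso_step(pos, luc, objective, r_d, rng)
```

The brightest glowworm has no brighter neighbor and therefore stays put in that iteration, while its range grows until it finds neighbors or reaches \(dis_{s}\).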

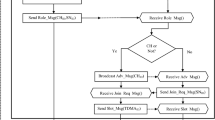

3.1 Essential force clustered routing

Clustered routing treats connectivity as a basic requirement, since sensing motes cannot deliver their sensed parameters to their respective Cluster Head without it. Its fundamental objective is to choose a cover set that ensures at least one target per iteration is covered by a sensing unit (Figs. 1 and 2).

- Initialization: all particles \(P^{t}\) (for \(t = 0\)) are initialized randomly across the population as follows (Krishnan et al. 2019):

$$P_{ij,d}^{r} = R(l_{b}, u_{b}) \quad \text{for } i,j = 1,2,\ldots,F \text{ and } d = 1,2,\ldots,n$$(7)

where \(i\) and \(j\) give the particle's position in the lattice, \(r\) is the iteration round of essential force clustered routing, \(F\) is the dimension of the lattice-like environment, \(n\) is the dimension of the search space, \(R(l_{b}, u_{b})\) is a random value drawn between \(l_{b}\) and \(u_{b}\), and \(l_{b}\) and \(u_{b}\) are the lower and upper bounds of the \(d^{th}\) dimension of the search space.

- Termination: the loop terminates when the termination condition, here the maximum number of iterations, is reached.

- Evaluation: this phase computes the objective value of each particle \(S_{xy}^{t}\) in \(S^{t}\) and stores it in the magnetic field \(m\), where \(r\) is the iteration round and \(i,j = 1,2,\ldots,F\) give the particle's position in the population.

- Normalization: the values \(M_{F}^{r}\) are then normalized as:

$$M_{F_{ij}} = \frac{M_{F_{ij}} - L}{H - L}, \qquad L = \min_{i,j = 1,\ldots,F} M_{F_{ij}}, \qquad H = \max_{i,j = 1,\ldots,F} M_{F_{ij}}$$(8)

- Neighborhood: each particle interacts only with its neighbors in the lattice-like structure, i.e. each particle applies its force only to its neighbors. In this phase, the neighbors of \(P_{ij}^{r}\) are located.

- Routing: Essential Force Clustered Routing optimizes how sensed data are transferred to the base station. The node holding the information to be transmitted is called the source node (Sampathkumar et al. 2020). It searches for the next possible hop to forward the sensed packets to the destination, i.e. the base station, by sending a route-path message directly to all its neighbors to find the next best hop. This message contains information such as the node's position, velocity, and energy. Each subsequent node makes the same request, substituting its own position, velocity, and energy values for those it received from its neighbors. The cycle repeats until the base station is reached. The proposed method uses clustered routing to find the next best hops.

- Essential force: the method is based on the concept of gravity, which states that "any particle attracts any other particle with a force F that is directly proportional to the product of their masses and inversely proportional to the square of the distance between them".

$$E_{F} = G_{c}\,\frac{W_{1} W_{2}}{d_{P}^{2}}$$(9)

where \(E_{F}\) is the essential force of a particle, \(G_{c}\) is the gravitational constant, \(W_{1}\) and \(W_{2}\) are the weights of particle 1 and particle 2, and \(d_{P}\) is the distance between particle 1 and particle 2.
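Equations (7), (8), and (9) and the next-hop search can be illustrated with the sketch below. The next-hop rule is a hypothetical reading of the text, not the authors' exact procedure: the particle weights \(W_{1}\) and \(W_{2}\) are taken to be residual energies, \(G_{c}\) defaults to 1, and only neighbors within radio range that are closer to the base station than the current node are considered, so that every hop makes progress. All function names are illustrative.

```python
import numpy as np


def init_lattice(F, n, lb, ub, rng):
    """Eq. (7): P[i, j, d] drawn uniformly from [lb[d], ub[d]) on an F x F lattice."""
    return rng.uniform(lb, ub, size=(F, F, n))


def min_max_normalize(values):
    """Eq. (8)-style min-max normalisation of field values to [0, 1]."""
    lo, hi = values.min(), values.max()
    if hi == lo:
        return np.zeros_like(values)
    return (values - lo) / (hi - lo)


def essential_force(w1, w2, d_p, g_c=1.0):
    """Eq. (9): E_F = G_c * W1 * W2 / d_P**2."""
    return g_c * w1 * w2 / d_p ** 2


def next_hop(src, nodes, energy, sink, radio_range, g_c=1.0):
    """Hypothetical rule: among neighbours within radio_range that are closer
    to the sink than src, return the index exerting the largest force
    (weights = residual energies), or None if no such neighbour exists."""
    d_src_sink = np.linalg.norm(nodes[src] - sink)
    best, best_force = None, 0.0
    for k in range(len(nodes)):
        d = np.linalg.norm(nodes[k] - nodes[src])
        if k == src or d == 0 or d > radio_range:
            continue  # not a usable neighbour
        if np.linalg.norm(nodes[k] - sink) >= d_src_sink:
            continue  # no forward progress toward the sink
        force = essential_force(energy[src], energy[k], d, g_c)
        if force > best_force:
            best, best_force = k, force
    return best


# Usage: a source at the origin and the sink 20 m away on the x-axis.
nodes = np.array([[0.0, 0.0], [10.0, 0.0], [5.0, 0.0], [-5.0, 0.0]])
energy = np.array([2.0, 2.0, 1.0, 2.0])   # residual energies (J)
print(next_hop(0, nodes, energy, sink=np.array([20.0, 0.0]), radio_range=15.0))  # 2
```

Because \(E_{F}\) falls off with \(d_{P}^{2}\), this rule favors short hops to energy-rich neighbors; nodes with zero residual energy exert no force and are never chosen.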

4 Pseudo-code of GSO based target coverage with EFP routing

1. Initialize the swarm of sensing glowworms \(x_{i}\) (\(i = 1, 2, \ldots, n\)).

2. Initialize the GSO parameters: number of source nodes, number of destination nodes, threshold decision range, Ell values, luciferin enhancement and decay constants, axis limits, etc.

3. Initially, the luciferin concentration is the same for every glowworm \(i\).

4. For every glowworm \(i = 1, \ldots, n\) (population), update its luciferin concentration:

4.1 \(Lu_{g}(t) = (1 - \delta)\,Lu_{g}(t-1) + \eta\,O(y_{g}(t))\)

4.2 Evaluate the objective function:

4.2.1 \(f(x_{i}, y_{i})\), where \(x_{i}\) and \(y_{i}\) are the coordinates of the glowworm's position in free space.

4.3 Update the movement probability of each glowworm:

4.3.1 \(M_{gp}(t) = \dfrac{Lu_{p}(t) - Lu_{g}(t)}{\sum_{k \in N_{g}(t)} \left(Lu_{k}(t) - Lu_{g}(t)\right)}\)

4.3.2 \(p \in N_{g}(t),\; N_{g}(t) = \{\, p : dis_{gp}(t) < dis_{d}^{g}(t),\; Lu_{g}(t) < Lu_{p}(t) \,\}\)

4.4 Move the glowworm toward the selected neighbor:

4.4.1 \(y_{g}(t+1) = y_{g}(t) + \alpha\,\dfrac{y_{p}(t) - y_{g}(t)}{\lVert y_{p}(t) - y_{g}(t) \rVert}\)

4.5 Update the neighborhood (decision) range of each sensing unit:

4.5.1 \(dis_{d}^{g}(t+1) = \min\left\{ dis_{s},\; \max\left\{ 0,\; dis_{d}^{g}(t) + \gamma\left(h_{t} - |N_{g}(t)|\right) \right\} \right\}\), where \(dis_{s}\) is the maximum sensing range.

4.6 Repeat while \(iter \le max\).

4.7 End for.

5. Update the positions of the sensing agents:

5.1 for \(k = 1, \ldots, No_{drones}\)

5.2 \(agentx(k, j, :) = Xcoor(k)\); \(agenty(k, j, :) = Ycoor(k)\)

6. Apply Essential Force Clustered Routing to optimize the transmission of sensed data to the base station.

7. For each iteration \(iter\):

7.1 Compute the cluster list using the threshold value:

7.1.1 \(t = \dfrac{p}{1 - p \cdot \bmod\left(rnd, \frac{1}{p}\right)}\), where \(p\) is the probability of a node becoming Cluster Head and \(rnd\) is the current round.

7.2 Elect the CHs and their members based on Euclidean distance:

7.2.1 \(d_{ij} = \dfrac{\sum_{i \ne j} \sqrt{(x_{i} - x_{j})^{2} + (y_{i} - y_{j})^{2}}}{\sum_{i \ne j} I_{j}}\), where \((x_{i}, y_{i})\) and \((x_{j}, y_{j})\) are the coordinates of source \(i\) and destination \(j\) of the transmission, and \(I_{j}\) is the intermediate hop count of the transmission.

8. End for.

9. Each source node builds the uplink chain by sending route-path messages to its neighbors.

10. Every node attracts its neighbors with the essential force of Eq. (9):

10.1 \(E_{F} = G_{c}\,\dfrac{W_{1} W_{2}}{d_{P}^{2}}\)

11. In each iteration, calculate \(E_{Tx}\) and \(E_{Rx}\):

11.1 \(E_{Tx} = E_{elec}\,k + E_{amp}\,s\_d\,d_{ch}^{2}\), where \(E_{Tx}\) is the transmission energy, \(E_{elec}\) is the energy needed to run the electronic circuitry (joules/bit), \(E_{amp}\) is the amplification energy required for data communication (joules/bit/\(m^{2}\)), \(k\) is the number of bits sent, \(s\_d\) is the size of the data packet, and \(d_{ch}\) (SensorNodes(i):dtch) is the distance from a normal node to its cluster head.

11.2 \(E_{Rx} = (E_{elec} + E_{da})\,k\), where \(E_{Rx}\) is the reception energy and \(E_{da}\) (joules/bit) is the energy required for data aggregation at the cluster head.

12. Define the covering matrix for efficient target coverage:

12.1 \(C_{m \times n} = \begin{cases} 1, & \text{if sensor } S_{m} \text{ monitors target } T_{n} \\ 0, & \text{otherwise} \end{cases}\)

13. If \(iter > max\), the network is dead.

14. End.
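The covering matrix of step 12 can be built as in the sketch below, assuming a binary disk sensing model in which sensor \(S_{m}\) monitors target \(T_{n}\) whenever their Euclidean distance is at most a sensing radius `r_sense` (the text does not specify the sensing model, so this is an assumption). The helper `covers_all_targets`, also hypothetical, checks whether a chosen set of active sensors covers every target.

```python
import numpy as np


def covering_matrix(sensors, targets, r_sense):
    """C[m, n] = 1 if sensor m monitors target n (binary disk model)."""
    # Pairwise sensor-target distances, shape (num_sensors, num_targets)
    d = np.linalg.norm(sensors[:, None, :] - targets[None, :, :], axis=2)
    return (d <= r_sense).astype(int)


def covers_all_targets(C, active):
    """True if every target is monitored by at least one active sensor."""
    return bool(np.all(C[active].sum(axis=0) >= 1))


sensors = np.array([[0.0, 0.0], [10.0, 0.0]])
targets = np.array([[1.0, 0.0], [9.0, 0.0], [5.0, 5.0]])
C = covering_matrix(sensors, targets, r_sense=8.0)
print(C)                                # [[1 0 1]
                                        #  [0 1 1]]
print(covers_all_targets(C, [0, 1]))    # True
print(covers_all_targets(C, [0]))       # False
```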

5 Results and discussions

The network is randomly deployed in a 200 × 200 workspace with 500 sensing glowworms, each with an initial energy of 2 J. It is hypothesized that selecting 5% of the network's nodes, as per the probability distribution (Table 2), gives better results.
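The cluster-head threshold of step 7.1.1 has the form of the LEACH election threshold. The sketch below evaluates it for \(p = 0.05\), reading the 5% figure above as the cluster-head probability (our assumption). Following standard LEACH, only nodes that have not yet served as cluster head in the current epoch of \(1/p\) rounds are eligible; the caller passes that eligibility mask in, and the function names are hypothetical.

```python
import numpy as np


def ch_threshold(p, rnd):
    """T = p / (1 - p * (rnd mod 1/p)) for the current round rnd."""
    epoch = int(round(1 / p))          # rounds per epoch, 20 for p = 0.05
    return p / (1 - p * (rnd % epoch))


def elect_cluster_heads(eligible, p, rnd, rng):
    """Each eligible node becomes CH if its uniform draw falls below T."""
    draws = rng.random(eligible.size)
    return eligible & (draws < ch_threshold(p, rnd))


rng = np.random.default_rng(7)
eligible = np.ones(500, dtype=bool)    # 500 nodes, none has served yet
heads = elect_cluster_heads(eligible, p=0.05, rnd=0, rng=rng)
# The expected count is 500 * 0.05 = 25; the actual draw varies.
print(heads.sum())
```

The threshold rises from \(p\) in the first round of an epoch to about 1 in its last round, so every node that has not yet served is elected before the epoch ends.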

Figure 3 shows the energy consumed (in joules) per data packet transmission, the energy spent on transmission and reception, and the impact of transmission on overall energy consumption.
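The per-packet energies of step 11 follow the first-order radio model. The sketch below computes them for a single hop; the constants `E_ELEC`, `E_AMP`, and `E_DA` are typical textbook values assumed for illustration (the Table 2 settings are not reproduced here), and the packet size \(s\_d\) is taken equal to the message length \(k\). The `aggregate` flag is a hypothetical addition that applies \(E_{da}\) only at a cluster head.

```python
E_ELEC = 50e-9    # J/bit, electronics energy (assumed value)
E_AMP = 100e-12   # J/bit/m^2, amplifier energy (assumed value)
E_DA = 5e-9       # J/bit, data-aggregation energy at the CH (assumed value)


def tx_energy(k_bits, d_ch):
    """E_Tx = E_elec*k + E_amp*k*d_ch^2 (free-space d^2 path loss)."""
    return E_ELEC * k_bits + E_AMP * k_bits * d_ch ** 2


def rx_energy(k_bits, aggregate=False):
    """E_Rx = (E_elec + E_da)*k at an aggregating CH, else E_elec*k."""
    return (E_ELEC + (E_DA if aggregate else 0.0)) * k_bits


# A 4000-bit packet sent 50 m from a member node to its cluster head,
# then received and aggregated at the cluster head.
print(tx_energy(4000, 50.0))             # about 1.2e-3 J
print(rx_energy(4000, aggregate=True))   # about 2.2e-4 J
```

With these constants the amplifier term dominates at 50 m (1.0e-3 J versus 2.0e-4 J for the electronics), which is why shorter member-to-CH distances save energy.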

A sensing mote whose energy is above the threshold is called an operational node, while one whose energy falls below the threshold is called a dead node. Figure 4 depicts the number of operational nodes per data transmission; keeping more nodes operational prolongs network lifetime.

5.1 Comparative analysis

The objective of this research is to ascertain which optimization technique among GWO (Grey Wolf Optimization), PSO (Particle Swarm Optimization), BA (Bat Algorithm), GA (Genetic Algorithm), FA (Firefly Algorithm), and an improved variant of GSO (Glowworm Swarm Optimization) provides the optimum solution and the lowest energy consumption within a restricted number of iterations. Performance was simulated with 500 randomly distributed nodes and evaluated on the energy dissipated, the number of alive nodes, the number of dead nodes, and the network's throughput.

- Network lifetime: the network's expected lifespan is the proportion of time during which the network can accomplish its desired functionality.

Figure 5 indicates that the improved GSO algorithm maintains the highest network lifetime, approximately 4700, compared with existing techniques such as Firefly (3500), GWO (3350), GA (3300), BAT (3200), and PSO (3050).

- Energy consumption per transmission: energy is the primary resource of WSN nodes and determines the longevity of the network.

Figure 6 shows that the improved GSO algorithm has the lowest approximate energy dissipation rate, 0.52%, compared with existing techniques such as Firefly (0.57), GWO (0.72), GA (0.82), BAT (0.70), and PSO (0.88).

- Throughput: the fraction of data packets successfully transmitted across the network, defined as:

$$Throughput = \frac{\text{No. of data packets successfully transferred}}{\text{Total number of packets}}$$
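As a worked instance of this ratio, a run in which 880 of 1,000 packets (hypothetical counts) reach the base station has a throughput of 880/1000 = 0.88. A small helper, named here for illustration only, computes it from per-packet delivery flags:

```python
def throughput(delivered_flags):
    """Fraction of packets successfully transferred (True = delivered)."""
    total = len(delivered_flags)
    if total == 0:
        raise ValueError("no packets were sent")
    return sum(bool(f) for f in delivered_flags) / total


print(throughput([True] * 880 + [False] * 120))   # 0.88
```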

Figure 7 shows that the improved GSO achieves successful transmission of data packets with a throughput of 0.88, higher than existing techniques such as Firefly (0.80), GWO (0.69), GA (0.55), BAT (0.60), and PSO (0.50).

- Number of alive nodes: the number of alive nodes was calculated in each round to assess the energy efficiency of the network. Figure 8 shows that GSO gives the best results in terms of the number of alive nodes per iteration, thereby prolonging network lifetime.

- Number of dead nodes: Fig. 9 compares GSO with the GA, GWO, PSO, BAT, and Firefly algorithms in terms of the number of dead nodes per iteration. The number of dead nodes per iteration is comparatively lower for GSO, which prolongs network life because more nodes remain operational. The network dies faster under the PSO, BAT, and GWO algorithms, whereas for the modified GSO the network becomes more stable as the number of iterations increases, keeping the number of dead nodes across the network low.

6 Conclusion

In this research work, we adopted a node distribution strategy, applied before target coverage, to resolve the issues of overlapping nodes and propagation latency that arise when sensor nodes are unevenly spread across many clusters. Balancing the number of sensors in each cluster significantly reduced the energy consumption of each cluster, as well as the propagation latency and the energy consumption of heavily loaded nodes. Target coverage is accomplished by GSO, which effectively tracks a range of targets with deterministic sensors, and GSO-based clustered target coverage keeps the search time for the optimum bounded. The primary objective of this research is to ensure the coverage of all targets. We used Essential Force Clustered Routing to select routes based on the minimum proximity distance, the average power, and the position of the sensors, so that they interface efficiently. The meta-heuristic-inspired self-organizing scheme for creating and managing clusters aims to improve network connectivity; it concentrates on the neighboring spectrum, residual energy, and sensor positioning for efficient communication. It yields significant outcomes, as the network stabilizes after a certain number of transmissions with a certain number of active nodes. A benefit of this technique is that it does not need to be centralized and is therefore easy to adapt to large sensor networks. The technique is intended to find a "local best solution"; in future work, we may enhance the method to identify the potential solution and to handle dynamic changes in the decision-making domain for the movement of glowworms.

References

Baskaran M, Sadagopan C (2015) Synchronous firefly algorithm for cluster head selection in WSN. Sci World J. https://doi.org/10.1155/2015/780879

Binh HTT, Hanh NT, Van Quan L, Dey N (2018) Improved cuckoo search and chaotic flower pollination optimization algorithm for maximizing area coverage in wireless sensor networks. Neural Comput Appl 30:2305–2317

Biswas S, Das R, Chatterjee P (2018) Energy-efficient connected target coverage in multi-hop wireless sensor networks. Ind Interact Innov Sci Eng Technol 11:411–421

Chen DR, Chen LC, Chen MY, Hsu MY (2019) A coverage-aware and energy-efficient protocol for the distributed wireless sensor networks. Comput Commun 137:15–31

Das S, Sahana S, Das I (2019) Energy efficient area coverage mechanisms for mobile Ad Hoc networks. Wirel Pers Commun 107:973–986

Gao X, Chen Z, Pan J, Wu F, Chen G (2019) Energy efficient scheduling algorithms for sweep coverage in mobile sensor networks. IEEE Trans Mob Comput 19(6):1332–1345

He Y, Tang X, Zhang R, Du X, Zhou D, Guizani M (2019) A course-aware opportunistic routing protocol for FANETs. IEEE Access 7:144303–144312

Hussen HR, Choi SC, Park JH, Kim J (2019) Predictive geographic multicast routing protocol in flying ad hoc networks. Int J Distrib Sens Netw. https://doi.org/10.1177/1550147719843879

Idrees AK, Deschinkel K, Salomon M, Couturier R (2015) Distributed lifetime coverage optimization protocol in wireless sensor networks. J Supercomput 71:4578–4593

Jia J, Chen J, Chang G, Wen Y, Song J (2009) Multi-objective optimization for coverage control in wireless sensor network with adjustable sensing radius. Comput Math Appl 57:1767–1775

Kabakulak B (2019) Sensor and sink placement, scheduling and routing algorithms for connected coverage of wireless sensor networks. Ad Hoc Netw 86:83–102

Katti A (2019) Target coverage in random wireless sensor networks using cover sets. J King Saud Univ Comput Inf Sci. https://doi.org/10.1016/j.jksuci.2019.05.006

Kaur K, Kapoor R (2019) MCPCN: multi-hop clustering protocol using cache nodes in WSN. Wirel Pers Commun 109:1727–1745

Keshmiri H, Bakhshi H (2020) A new 2-phase optimization-based guaranteed connected target coverage for wireless sensor networks. IEEE Sens J 20:7472–7486

Krishnan M, Yun S, Jung YM (2019) Enhanced clustering and ACO-based multiple mobile sinks for efficiency improvement of wireless sensor networks. Comput Netw 160:33–40

Lersteau C, Rossi A, Sevaux M (2018) Minimum energy target tracking with coverage guarantee in wireless sensor networks. Eur J Oper Res 265:882–894

Liao WH, Kao Y, Li YS (2011) A sensor deployment approach using glowworm swarm optimization algorithm in wireless sensor networks. Expert Syst Appl 38:12180–12188

Liu D, Li J (2019) Safety monitoring data classification method based on wireless rough network of neighborhood rough sets. Saf Sci 118:103–108

Liu H, Abraham A, Hassanien AE (2010) Scheduling jobs on computational grids using a fuzzy particle swarm optimization algorithm. Futur Gener Comput Syst 26:1336–1343

Niu B, Zhu Y, He X, Shen H (2008) A multi-swarm optimizer based fuzzy modeling approach for dynamic systems processing. Neurocomputing 71:1436–1448

Oramus P (2010) Improvements to glowworm swarm optimization algorithm. Comput Sci 11:7–7

Panag TS, Dhillon JS (2019) Maximal coverage hybrid search algorithm for deployment in wireless sensor networks. Wirel Netw 25:637–652

Pitchaimanickam B, Murugaboopathi G (2020) A hybrid firefly algorithm with particle swarm optimization for energy efficient optimal cluster head selection in wireless sensor networks. Neural Comput Appl 32:7709–7723

Raton B (2008) Energy-efficient connected-coverage in wireless sensor networks. IJSNET. https://doi.org/10.1504/IJSNET.2008.018484

Rejinaparvin J, Vasanthanayaki C (2015) Particle swarm optimization-based clustering by preventing residual nodes in wireless sensor networks. IEEE Sens J 15:4264–4274

Sampathkumar A, Mulerikkal J, Sivaram M (2020) Glowworm swarm optimization for effectual load balancing and routing strategies in wireless sensor networks. Wirel Netw 26:4227–4238

Selvaraj S (2017) Performance analysis of routing in wireless sensor network using optimization. Techniques 7:146–155

Singh S, Kumar P (2019) MH-CACA: multi-objective harmony search-based coverage aware clustering algorithm in WSNs. Enterp Inf Syst 00:1–29

Sun W, Tang M, Zhang L, Huo Z, Shu L (2020) A survey of using swarm intelligence algorithms in IoT. Sensors. https://doi.org/10.3390/s20051420

Tian J, Gao M, Ge G (2016) Wireless sensor network node optimal coverage based on improved genetic algorithm and binary ant colony algorithm. Eurasip J Wirel Commun Netw. https://doi.org/10.1186/s13638-016-0605-5

Valdez F, Melin P, Castillo O (2011) An improved evolutionary method with fuzzy logic for combining particle swarm optimization and genetic algorithms. Appl Soft Comput J 11:2625–2632

Vijayalakshmi K, Anandan P (2019) A multi-objective Tabu particle swarm optimization for effective cluster head selection in WSN. Cluster Comput 22:12275–12282

Wang L, Wu W, Qi J, Jia Z (2018) Wireless sensor network coverage optimization based on whale group algorithm. Comput Sci Inf Syst 15:569–583

Wang X, Zhou Q, Qu C, Chen G, Xia J (2019) Location updating scheme of sink node based on topology balance and reinforcement learning in WSN. IEEE Access 7:100066–100080

Wang H, Li K, Pedrycz W (2020) An Elite Hybrid Metaheuristic Optimization Algorithm for maximizing wireless sensor networks lifetime with a sink node. IEEE Sens J 20:5634–5649

Xia J (2017) Coverage optimization strategy of wireless sensor network based on swarm intelligence algorithm. In: Proc 2016 Int Conf Smart City Syst Eng (ICSCSE 2016), pp 179–182

Yu X, Zhang J, Fan J, Zhang T (2013) A faster convergence artificial bee colony algorithm in sensor deployment for wireless sensor networks. Int J Distrib Sens Netw. https://doi.org/10.1155/2013/497264

Yue Y, Li J, Fan H, Qin Q (2016) Optimization-based artificial bee colony algorithm for data collection in large-scale mobile wireless sensor networks. J Sensors. https://doi.org/10.1155/2016/7057490

ZainEldin H, Badawy M, Elhosseini M, Arafat H, Abraham A (2020) An improved dynamic deployment technique based-on genetic algorithm (IDDT-GA) for maximizing coverage in wireless sensor networks. J Ambient Intell Humaniz Comput

Funding

None.

Author information

Contributions

The authors are responsible for the correctness of the statements provided in the manuscript.

Ethics declarations

Conflict of interest

Ridhi Kapoor and Sandeep Sharma declare that they have no conflict of interest. All authors certify that they have no affiliations with or involvement in any organization or entity with any financial interest or non-financial interest in the subject matter or materials discussed in this manuscript.

Informed consent

Informed consent was obtained from all individual participants included in the study.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Kapoor, R., Sharma, S. Glowworm Swarm Optimization (GSO) based energy efficient clustered target coverage routing in Wireless Sensor Networks (WSNs). Int J Syst Assur Eng Manag 14 (Suppl 2), 622–634 (2023). https://doi.org/10.1007/s13198-021-01398-z

DOI: https://doi.org/10.1007/s13198-021-01398-z