Abstract

Under a constant-stress accelerated life test with general progressive type-II censored samples, where both parameters of the two-parameter log-normal distribution follow the log-linear Arrhenius model, point and interval estimation of the parameters are discussed. The unknown parameters of the model, as well as the reliability and hazard rate functions, are estimated by the maximum likelihood (ML) and Bayesian methods. The maximum-likelihood estimates are computed by the Newton–Raphson method, and their asymptotic variances are obtained from the observed Fisher information matrix. Since the Bayesian estimates (BEs) of the unknown parameters cannot be expressed explicitly, approximate BEs are obtained by a hybrid Markov chain Monte Carlo method that combines adaptive rejection sampling with the Metropolis–Hastings algorithm, and approximate highest posterior density credible intervals are calculated. The practicality of the proposed method is illustrated by a simulation study and a real data analysis.

1 Introduction

Product reliability is a common concern of manufacturers. To track product performance, product life data must be collected, so life tests are indispensable for analyzing and assessing product reliability. However, with scientific and technological advances, highly reliable, long-life products keep emerging, and testing them under normal conditions takes too long. In practice, accelerated life tests (ALTs) are therefore widely used to save time and expense. In an ALT, products are tested at several accelerated stress levels, and the life distribution under normal stress is then estimated through a suitable physical-statistical model; common choices are the Arrhenius model, the power law model and the Eyring model. The accelerating stress can be temperature, voltage, etc. In recent years, many scholars have studied ALTs based on different types of data and life distribution models. Ismail (2015) discussed the maximum-likelihood estimates (MLEs) and Bayesian estimates (BEs) of the Pareto distribution parameters based on Type-I censored samples in a constant-stress partially accelerated life test. Xu et al. (2016) obtained BEs for the Weibull distribution under constant-stress ALT. Yan et al. (2017) obtained the MLEs and BEs of a Weibull regression model based on general progressive type-II censored (GPT-IIC) samples in a multi-level stress test. More studies on ALTs can be found in Shi et al. (2013), Anwar (2014), Mahmoud et al. (2017), Zheng and Shi (2013) and the references therein. Traditional ALT studies assume that only one parameter of the lifetime distribution changes with stress, while the other parameters remain constant throughout the test. However, because failure mechanisms can be complicated, this assumption is unsuitable in many cases. For example, Hiergeist et al. (1989) found that in capacitor tests the Weibull shape parameter depends on temperature.
Assuming that the log-life of a product follows a location-scale distribution, studies by Nelson (1984) and Boyko and Gerlach (1989) showed that both the location and scale parameters of dielectric breakdown data depend on stress. Life models with nonconstant parameters have therefore attracted the attention of many scholars. For example, Seo et al. (2009) designed ALT sampling plans with a nonconstant shape parameter. Lv et al. (2015) discussed ALT reliability modeling with random effects and nonconstant shape parameters. Meeter and Meeker (1994) extended existing ML theory for test planning to models with a nonconstant scale parameter and gave test plans for a large number of practical cases.

The studies above mainly address complete or right-censored samples under constant-stress ALT, in which one of the two parameters (shape or scale) of the life model varies with the stress level while the other stays constant. The case in which both the shape and scale parameters change with stress has received comparatively little attention. Wang (2018a, 2018b) studied the MLEs of the exponential and Weibull distributions with nonconstant parameters under constant-stress ALT. Meanwhile, the GPT-IIC scheme is increasingly used to collect product failure-time data. To motivate our research, we analyze a real data set from a life test of steel specimens.

The log-normal distribution is a common choice of life distribution in practice, and it fits the failure data of some products, such as the steel specimen data, flexibly. In this paper, we carry out reliability analysis of the log-normal distribution with a nonconstant shape parameter and a nonconstant scale parameter by the maximum likelihood and Bayesian methods.

The rest of this paper is organized as follows. In Sect. 2, the basic assumptions and the life test procedure are described. In Sect. 3, the MLEs and BEs of the model parameters and of the reliability and hazard rate functions are derived. In Sects. 4 and 5, the effectiveness and practicability of the methods are illustrated by a simulation study and a real data set, respectively. Some concluding remarks are presented in Sect. 6.

2 Basic assumptions and life test procedure

2.1 Basic assumptions

This study adopts the following assumptions.

Assumption 1

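At the normal stress level \(S_{0}\) and the accelerated stress levels \(S_{i},i=1,\ldots ,k\), the product life X follows a log-normal distribution with parameters \((\mu _i,\sigma ^2_i)\), \(\mu _i\in (-\infty ,+\infty )\), \(\sigma _i\in (0,+\infty )\). Its probability density function (PDF), cumulative distribution function (CDF), reliability function and hazard rate function are

$$\begin{aligned} f_i(x)&=\frac{1}{\sqrt{2\pi }\,\sigma _i x}\exp \Big \{-\frac{(\ln x-\mu _i)^2}{2\sigma _i^2}\Big \},\quad x>0,\\ F_i(x)&=\Phi \Big (\frac{\ln x-\mu _i}{\sigma _i}\Big ),\\ s_i(x)&=1-\Phi \Big (\frac{\ln x-\mu _i}{\sigma _i}\Big ),\\ h_i(x)&=\frac{f_i(x)}{s_i(x)},\quad i=0,1,\ldots ,k, \end{aligned}$$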

where \(\Phi (x)=\int _{-\infty }^x\frac{1}{\sqrt{2\pi }}\exp \{-\frac{t^2}{2}\}\mathrm{d}t\), and \((\mu _0,\sigma ^2_0)\), the log-normal parameters at the normal stress level \(S_{0}\), are denoted by \((\mu ,\sigma ^2)\).
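As an illustration, the four functions of Assumption 1 can be evaluated with the Python standard library alone. This is a minimal sketch, not code from the paper: the function names are hypothetical, and \(\Phi\) is computed through `math.erfc` so that the reliability \(1-\Phi (z)=\Phi (-z)\) stays accurate far into the upper tail, where evaluating \(1-\Phi (z)\) directly would round to zero.

```python
import math

SQRT2 = math.sqrt(2.0)


def std_normal_cdf(z):
    """Phi(z) via erfc, which stays accurate far into either tail."""
    return 0.5 * math.erfc(-z / SQRT2)


def std_normal_pdf(z):
    """varphi(z), the standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def lognormal_pdf(x, mu, sigma):
    """f(x) = varphi(z) / (sigma * x), with z = (ln x - mu) / sigma, x > 0."""
    z = (math.log(x) - mu) / sigma
    return std_normal_pdf(z) / (sigma * x)


def lognormal_cdf(x, mu, sigma):
    """F(x) = Phi((ln x - mu) / sigma)."""
    return std_normal_cdf((math.log(x) - mu) / sigma)


def reliability(x, mu, sigma):
    """s(x) = 1 - F(x), computed as Phi(-z) to avoid cancellation."""
    return std_normal_cdf(-(math.log(x) - mu) / sigma)


def hazard(x, mu, sigma):
    """h(x) = f(x) / s(x)."""
    return lognormal_pdf(x, mu, sigma) / reliability(x, mu, sigma)


if __name__ == "__main__":
    # Hypothetical parameter values (mu = 2.0, sigma = 0.5), for illustration only
    for x in (2.0, 7.0, 20.0):
        print(x, round(reliability(x, 2.0, 0.5), 4), round(hazard(x, 2.0, 0.5), 4))
```

The same functions apply at every stress level once \((\mu _i,\sigma _i)\) are known.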

Assumption 2

The acceleration model is assumed to be log-linear; that is, both the location and scale parameters are related to the accelerating stress \(S_i\) by

$$\begin{aligned} \ln \mu _i=a_1+b_1\phi (S_i), \end{aligned}\tag{5}$$

$$\begin{aligned} \ln \sigma _i=a_2+b_2\phi (S_i), \end{aligned}\tag{6}$$

where \(\phi (S)\) is a known function of the accelerating stress S, and \(a_t,b_t\) \((t=1,2)\) are constants. Typically, \(\phi (S)=\frac{1}{S}\) when the stress S is temperature, and \(\phi (S)=\log {S}\) when S is voltage. From Eqs. (5) and (6), \((\mu _i,\sigma _i)\) can be written in terms of \((\mu ,\sigma )\) as

$$\begin{aligned} \mu _i=\mu \exp \{b_1[\phi (S_i)-\phi (S_0)]\},\quad \sigma _i=\sigma \exp \{b_2[\phi (S_i)-\phi (S_0)]\}. \end{aligned}$$

Letting \(\theta _1=\mu _1/\mu\) and \(\theta _2=\sigma _1/\sigma\), we get

$$\begin{aligned} \mu _i=\mu \theta _1^{\phi _i},\quad \sigma _i=\sigma \theta _2^{\phi _i},\quad i=1,\ldots ,k, \end{aligned}$$

where \({\phi _i} = \frac{{\phi ({S_i}) - \phi ({S_0})}}{{\phi ({S_1}) - \phi ({S_0})}}, i=1,\ldots ,k\), and \(\theta _1,\theta _2\in (0,+\infty )\) are called the acceleration coefficients.
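The reparameterization \(\mu _i=\mu \theta _1^{\phi _i}\), \(\sigma _i=\sigma \theta _2^{\phi _i}\) can be checked numerically. The sketch below assumes the temperature case \(\phi (S)=1/S\) and borrows the constants of the simulation study in Sect. 4; the helper names are hypothetical. Up to rounding, it should reproduce the values \(\mu =13.2255\), \(\sigma =2.6750\), \(\theta _1=0.9\) and \(\theta _2=0.8333\) quoted there.

```python
import math


def phi_temperature(s):
    """Arrhenius-type stress function phi(S) = 1/S (S an absolute temperature)."""
    return 1.0 / s


def standardized_stress(s_i, s0, s1, phi=phi_temperature):
    """phi_i = (phi(S_i) - phi(S_0)) / (phi(S_1) - phi(S_0)), so phi_0 = 0 and phi_1 = 1."""
    return (phi(s_i) - phi(s0)) / (phi(s1) - phi(s0))


def level_parameters(mu, sigma, theta1, theta2, phi_i):
    """mu_i = mu * theta1**phi_i and sigma_i = sigma * theta2**phi_i."""
    return mu * theta1 ** phi_i, sigma * theta2 ** phi_i


# Constants of the simulation setup in Sect. 4:
# ln mu_i = 1.95 + 126.43 / S_i, ln sigma_i = -0.11 + 218.79 / S_i, S_0 = 200
a1, b1, a2, b2 = 1.95, 126.43, -0.11, 218.79
S0, levels = 200.0, (240.0, 260.0, 280.0)

mu = math.exp(a1 + b1 / S0)                       # location at normal stress
sigma = math.exp(a2 + b2 / S0)                    # scale at normal stress
theta1 = math.exp(a1 + b1 / levels[0]) / mu       # theta1 = mu_1 / mu
theta2 = math.exp(a2 + b2 / levels[0]) / sigma    # theta2 = sigma_1 / sigma

for s in levels:
    phi_i = standardized_stress(s, S0, levels[0])
    mu_i, sigma_i = level_parameters(mu, sigma, theta1, theta2, phi_i)
    # The reparameterized values agree with the direct log-linear model
    assert math.isclose(mu_i, math.exp(a1 + b1 / s))
    assert math.isclose(sigma_i, math.exp(a2 + b2 / s))
    print(f"S={s:.0f}: phi_i={phi_i:.4f}, mu_i={mu_i:.4f}, sigma_i={sigma_i:.4f}")

print(f"mu={mu:.4f}, sigma={sigma:.4f}, theta1={theta1:.4f}, theta2={theta2:.4f}")
```

Because \(\phi _1=1\) by construction, \(\theta _1\) and \(\theta _2\) are simply the parameter ratios at the lowest accelerated level.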

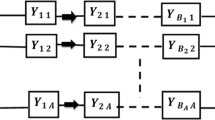

2.2 Life test procedure

Let the k accelerated stress levels be \(S_{1}<S_{2}<\cdots <S_{k}\). At stress level \(S_{i}\), \(n_i\) units are put on a GPT-IIC test, which is carried out as follows.

Suppose that the failure times of the first \(r_{i}\) units are not observed. At the \((r_{i}+1)\)th failure, the failure time \(x_{i:r_{i}+1}\) is recorded and \(R_{i:r_{i}+1}\) surviving units are randomly withdrawn from the test. At the \((r_{i}+2)\)th failure time \(x_{i:r_{i}+2}\), \(R_{i:r_{i}+2}\) surviving units are randomly withdrawn, and so on. At the \(m_{i}\)th failure time \(x_{i:m_{i}}\), all the remaining \(R_{i:m_{i}}=n_{i}-m_{i}-R_{i:r_{i}+1}-\cdots -R_{i:m_{i}-1}\) units are removed and the test terminates, where the numbers of failures \(m_{i},i=1,\ldots ,k\), are fixed in advance. The \(m_{i}-r_{i}\) failure times \((x_{i:r_{i}+1},x_{i:r_{i}+2},\ldots ,x_{i:m_{i}})\) form a general progressively type-II censored sample under the censoring scheme \((R_{i:r_{i}+1},R_{i:r_{i}+2},\ldots ,R_{i:m_{i}})\).

Clearly, \(x_{i:r_{i}+1}\le x_{i:r_{i}+2}\le \cdots \le x_{i:m_{i}}\). In this paper, the tests at different stress levels are assumed to be independent of each other, and \(m_{i}-r_{i}>0\) ensures that at least one failure time is observed at each stress level.
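The bookkeeping of a GPT-IIC scheme follows directly from the relation \(R_{i:m_{i}}=n_{i}-m_{i}-R_{i:r_{i}+1}-\cdots -R_{i:m_{i}-1}\). The hypothetical helpers below are a sketch, not code from the paper: one completes a scheme from its early removals, and the other reports how many units are still on test just before each observed failure, using the fact that at each observed failure time one failed unit plus \(R_{i:j}\) survivors leave the test.

```python
def complete_scheme(n, m, r, early_removals=None):
    """Return [R_{r+1}, ..., R_m] for a GPT-IIC test on n units.

    early_removals maps a failure index j (r+1 <= j <= m-1) to R_{i:j};
    the last entry is R_{i:m} = n - m - (R_{i:r+1} + ... + R_{i:m-1}).
    """
    early_removals = early_removals or {}
    if not 0 <= r < m <= n:
        raise ValueError("need 0 <= r < m <= n, so that m - r > 0 failures are observed")
    for j, count in early_removals.items():
        if not (r + 1 <= j <= m - 1) or count < 0:
            raise ValueError(f"invalid removal R_{j} = {count}")
    scheme = [early_removals.get(j, 0) for j in range(r + 1, m)]
    last = n - m - sum(scheme)
    if last < 0:
        raise ValueError("removals exceed the number of units on test")
    return scheme + [last]


def units_at_risk(n, r, scheme):
    """Units still on test just before each observed failure x_{i:r+1}, ..., x_{i:m}."""
    at_risk, remaining = [], n - r          # r failures happened before x_{i:r+1}
    for removed in scheme:
        at_risk.append(remaining)
        remaining -= 1 + removed            # the failed unit plus the withdrawn survivors
    return at_risk


if __name__ == "__main__":
    # Scheme [2] of Sect. 4: n = 30, m = 25, r = 0, R_{i:1} = 2
    scheme2 = complete_scheme(30, 25, 0, {1: 2})
    print(scheme2[0], scheme2[-1], units_at_risk(30, 0, scheme2)[:3])
```

For scheme [2] of Sect. 4 the helper returns \(R_{i:25}=3\), matching the value listed there.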

3 MLEs and BEs for the unknown parameters

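For the stress level \(S_{i}\), the likelihood function is

$$\begin{aligned} L_i\propto \big [F_i(x_{i:r_i+1})\big ]^{r_i}\prod _{j=r_i+1}^{m_i}f_i(x_{i:j})\big [1-F_i(x_{i:j})\big ]^{R_{i:j}}, \end{aligned}$$

where \(f_i\) and \(F_i\) are the log-normal PDF and CDF with parameters \((\mu _i,\sigma ^2_i)\).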

Then, combined with assumptions 1 and 2 in Sect. 2.1, the likelihood and log-likelihood functions based on the GPT-IIC sample \(\varvec{x}=(x_{1},x_{2},\ldots ,x_{k})\) are given by

where \(\omega _{ij}=\frac{\ln x_{i:j}-\mu \theta _{1}^{\phi _{i}}}{\sigma {\theta _{2}}^{\phi _{i}}}\).
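For reference, the log-likelihood can be coded directly. The sketch below assumes the standard GPT-IIC likelihood (Balakrishnan and Aggarwala 2000): the \(r_i\) unobserved failures contribute \([\Phi (\omega _{i,r_i+1})]^{r_i}\), and each observed failure contributes its log-normal density times \([1-\Phi (\omega _{ij})]^{R_{i:j}}\); these are the same terms that appear in Appendix A. The data layout (a list of dicts with hypothetical keys) is an illustrative choice, and additive constants are dropped.

```python
import math

LOG_SQRT_2PI = 0.5 * math.log(2.0 * math.pi)


def log_std_normal_cdf(z):
    """ln Phi(z), with Phi evaluated through erfc for accuracy in the tails."""
    return math.log(0.5 * math.erfc(-z / math.sqrt(2.0)))


def log_likelihood(params, levels):
    """GPT-IIC log-likelihood l(mu, sigma, theta1, theta2 | x), up to a constant.

    levels: one dict per stress level with (hypothetical) keys
        "phi": standardized stress phi_i,
        "r":   number r_i of unobserved early failures,
        "x":   observed failure times x_{i:r_i+1} <= ... <= x_{i:m_i},
        "R":   removals R_{i:r_i+1}, ..., R_{i:m_i}.
    """
    mu, sigma, theta1, theta2 = params
    if sigma <= 0 or theta1 <= 0 or theta2 <= 0:
        return -math.inf
    total = 0.0
    for lev in levels:
        loc = mu * theta1 ** lev["phi"]        # mu_i = mu * theta1^phi_i
        scale = sigma * theta2 ** lev["phi"]   # sigma_i = sigma * theta2^phi_i
        omegas = [(math.log(x) - loc) / scale for x in lev["x"]]
        if lev["r"] > 0:
            # the r_i unobserved failures all occurred before x_{i:r_i+1}
            total += lev["r"] * log_std_normal_cdf(omegas[0])
        for x, w, removed in zip(lev["x"], omegas, lev["R"]):
            total += -math.log(scale * x) - 0.5 * w * w - LOG_SQRT_2PI  # ln f_i(x_{i:j})
            if removed:
                total += removed * log_std_normal_cdf(-w)  # R_{i:j} ln[1 - Phi(omega_ij)]
    return total
```

With \(\phi _i=0\), \(r_i=0\) and no removals, this reduces to the ordinary log-normal log-likelihood of a complete sample, which is a convenient check.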

3.1 MLEs

Let \(({\hat{\mu }} ,{\hat{\sigma }},{{\hat{\theta }} _1},{{\hat{\theta }} _2} )\) denote the MLEs of \((\mu ,\sigma ,\theta _1,\theta _2)\), which are obtained by solving the following likelihood equations.

where \(l=l(\mu ,\sigma ,\theta _{1},\theta _{2}|{\varvec{x}})\), \(\varphi (\cdot )\) is the standard normal density function, and \(\omega _{ij1}=\frac{\partial \omega _{ij}}{\partial \mu }=-\frac{\theta _{1}^{\phi _{i}}}{\sigma \theta _{2}^{\phi _{i}}}\), \(\omega _{ij2}=\frac{\partial \omega _{ij}}{\partial \sigma }=-\frac{1}{\sigma }\omega _{ij}\), \(\omega _{ij3}=\frac{\partial \omega _{ij}}{\partial \theta _{1}}=-\frac{\mu \phi _{i}\theta _{1}^{\phi _{i}-1}}{\sigma \theta _{2}^{\phi _{i}}}\), \(\omega _{ij4}=\frac{\partial \omega _{ij}}{\partial \theta _{2}}=-\frac{\phi _{i}}{\theta _{2}}\omega _{ij}\). Because Eqs. (14)–(17) are nonlinear in the parameters, explicit solutions are not available, and the Newton–Raphson method is used to calculate the MLEs \(({\hat{\mu }} ,{\hat{\sigma }},{{\hat{\theta }} _1},{{\hat{\theta }} _2} )\). The standard errors of the estimators are obtained from the variance–covariance matrix, which is the inverse of the observed Fisher information matrix \(\mathrm{I}(\mu ,\sigma ,\theta _{1},\theta _{2} )\) evaluated at \(({\hat{\mu }} ,{\hat{\sigma }},{{\hat{\theta }} _1},{{\hat{\theta }} _2} )\), as follows:

here \(\omega _{ij11}=\frac{\partial \omega _{ij1}}{\partial \mu }=0\), \(\omega _{ij12}=\frac{\partial \omega _{ij1}}{\partial \sigma }=\frac{{\theta _{1}}^{\phi _{i}}}{\sigma ^2{\theta _{2}}^{\phi _{i}}}\), \(\omega _{ij13}=\frac{\partial \omega _{ij1}}{\partial \theta _{1}}=-\frac{\phi _{i}{\theta _{1}}^{\phi _{i}-1}}{\sigma {\theta _{2}}^{\phi _{i}}}\), \(\omega _{ij14}=\frac{\partial \omega _{ij1}}{\partial \theta _{2}}=\frac{\phi _{i}{\theta _{1}}^{\phi _{i}}}{\sigma {\theta _{2}}^{\phi _{i}+1}}\), \(\omega _{ij22}=\frac{1}{\sigma ^2}\omega _{ij}-\frac{1}{\sigma }\omega _{ij2}\), \(\omega _{ij23}=-\frac{1}{\sigma }\omega _{ij3}\), \(\omega _{ij24}=-\frac{1}{\sigma }\omega _{ij4}\), \(\omega _{ij33}=-\frac{\mu \phi _{i}(\phi _{i}-1){\theta _{1}}^{\phi _{i}-2}}{\sigma {\theta _{2}}^{\phi _{i}}}\), \(\omega _{ij34}=\frac{\mu \phi _{i}^2{\theta _{1}}^{\phi _{i}-1}}{\sigma {\theta _{2}}^{\phi _{i}+1}}\), \(\omega _{ij44}=\frac{\phi _{i}}{{\theta _{2}}^2}\omega _{ij}-\frac{\phi _{i}}{{\theta _{2}}}\omega _{ij4}\).
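The analytic partial derivatives of \(\omega _{ij}\) are easy to get wrong, so a finite-difference check is a cheap safeguard. The sketch below (hypothetical helper names and evaluation point) compares central differences with the closed forms \(\omega _{ij13}=-\phi _i\theta _1^{\phi _i-1}/(\sigma \theta _2^{\phi _i})\), \(\omega _{ij14}=\phi _i\theta _1^{\phi _i}/(\sigma \theta _2^{\phi _i+1})\), \(\omega _{ij23}=-\omega _{ij3}/\sigma\) and \(\omega _{ij24}=-\omega _{ij4}/\sigma\), which follow from differentiating \(\omega _{ij1}\) and \(\omega _{ij2}\) directly.

```python
import math


def omega(mu, sigma, t1, t2, x, phi):
    """omega_ij = (ln x_{i:j} - mu * theta1**phi_i) / (sigma * theta2**phi_i)."""
    return (math.log(x) - mu * t1 ** phi) / (sigma * t2 ** phi)


def first_partials(mu, sigma, t1, t2, x, phi):
    """(omega_ij1, ..., omega_ij4): derivatives w.r.t. mu, sigma, theta1, theta2."""
    w = omega(mu, sigma, t1, t2, x, phi)
    d = sigma * t2 ** phi
    return [-t1 ** phi / d, -w / sigma, -mu * phi * t1 ** (phi - 1) / d, -phi * w / t2]


def mixed_partials(mu, sigma, t1, t2, x, phi):
    """omega_ij13, omega_ij14, omega_ij23 and omega_ij24 in closed form."""
    _, _, w3, w4 = first_partials(mu, sigma, t1, t2, x, phi)
    return {
        "13": -phi * t1 ** (phi - 1) / (sigma * t2 ** phi),
        "14": phi * t1 ** phi / (sigma * t2 ** (phi + 1)),
        "23": -w3 / sigma,
        "24": -w4 / sigma,
    }


def central_diff(f, args, k, h=1e-6):
    """Central finite difference of f(*args) with respect to args[k]."""
    up, dn = list(args), list(args)
    up[k] += h
    dn[k] -= h
    return (f(*up) - f(*dn)) / (2.0 * h)


# Hypothetical evaluation point (mu, sigma, theta1, theta2, x, phi_i)
point = (2.0, 0.7, 0.9, 0.8, 5.0, 1.3)
closed_form = mixed_partials(*point)
# key -> (which first partial is differentiated, index of the parameter)
targets = {"13": (0, 2), "14": (0, 3), "23": (1, 2), "24": (1, 3)}
for key, (which, wrt) in targets.items():
    numeric = central_diff(lambda *a, k=which: first_partials(*a)[k], point, wrt)
    print(f"omega_ij{key}: closed form {closed_form[key]:.8f}, finite difference {numeric:.8f}")
```

The symmetry \(\omega _{ij13}=\omega _{ij31}\) gives a second, purely analytic check: differentiating \(\omega _{ij3}\) with respect to \(\mu\) yields the same expression.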

The \(100(1-\tau )\)% approximate confidence interval (CI) for parameter \(P_{M}\) is given by

where \({\hat{P}}_{M}\) denotes \({\hat{\mu }}\), \(\hat{\sigma }\), \({{\hat{\theta }} _1}\) or \({{\hat{\theta }} _2}\), and \(z_{\tau /2}\) is the upper \(\tau /2\) quantile of the standard normal distribution. Under the normal stress level, the estimates of s(x) and h(x) are

The approximate variances of \({\hat{s}}(x)\) and \({\hat{h}}(x)\) are obtained by the delta method:

where

The \(100(1-\tau )\%\) approximate CIs for s(x) and h(x) are

and

respectively.
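The computations of this subsection can be prototyped without the analytic score and information expressions. The sketch below is a generic stand-in, not the authors' implementation: it replaces Eqs. (14)–(17) and the analytic observed information with central finite differences, runs Newton–Raphson, inverts the negative Hessian to get the variance–covariance matrix, and then forms intervals assuming the usual Wald form \(\hat{P}_M\pm z_{\tau /2}\sqrt{\widehat{\mathrm{Var}}(\hat{P}_M)}\), including delta-method intervals for a function such as s(x). In practice `loglik` would be the GPT-IIC log-likelihood; all names are hypothetical.

```python
import math
from statistics import NormalDist


def grad_hess(f, x, h=1e-4):
    """Central finite-difference gradient and Hessian of a scalar function f at x."""
    n = len(x)

    def at(shifts):
        y = list(x)
        for i, s in shifts:
            y[i] += s
        return f(y)

    fx = f(list(x))
    g = [(at([(i, h)]) - at([(i, -h)])) / (2 * h) for i in range(n)]
    H = [[0.0] * n for _ in range(n)]
    for i in range(n):
        H[i][i] = (at([(i, h)]) - 2 * fx + at([(i, -h)])) / h ** 2
        for j in range(i):
            H[i][j] = H[j][i] = (at([(i, h), (j, h)]) - at([(i, h), (j, -h)])
                                 - at([(i, -h), (j, h)]) + at([(i, -h), (j, -h)])) / (4 * h * h)
    return g, H


def solve(A, b):
    """Solve A y = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            factor = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= factor * M[c][k]
    y = [0.0] * n
    for r in reversed(range(n)):
        y[r] = (M[r][n] - sum(M[r][k] * y[k] for k in range(r + 1, n))) / M[r][r]
    return y


def newton_raphson(loglik, start, tol=1e-8, max_iter=100):
    """Maximize loglik by Newton-Raphson; return (MLE, inverse observed information)."""
    x = list(start)
    for _ in range(max_iter):
        g, H = grad_hess(loglik, x)
        step = solve(H, g)                       # x_new = x - H^{-1} g
        x = [xi - si for xi, si in zip(x, step)]
        if max(abs(s) for s in step) < tol:
            break
    _, H = grad_hess(loglik, x)
    n = len(x)
    neg_H = [[-v for v in row] for row in H]     # observed Fisher information
    cols = [solve(neg_H, [float(i == j) for i in range(n)]) for j in range(n)]
    return x, [[cols[j][i] for j in range(n)] for i in range(n)]


def wald_ci(estimate, variance, tau=0.05):
    """estimate -/+ z_{tau/2} * sqrt(variance)."""
    z = NormalDist().inv_cdf(1 - tau / 2)
    half = z * math.sqrt(variance)
    return estimate - half, estimate + half


def delta_method(g, est, cov, tau=0.05, h=1e-6):
    """Var[g(est)] ~ grad' Cov grad (delta method), plus the Wald interval."""
    n = len(est)
    grad = []
    for i in range(n):
        up, dn = list(est), list(est)
        up[i] += h
        dn[i] -= h
        grad.append((g(up) - g(dn)) / (2 * h))
    var = sum(grad[i] * cov[i][j] * grad[j] for i in range(n) for j in range(n))
    value = g(list(est))
    return value, var, wald_ci(value, var, tau)
```

With a complete normal sample of log-lifetimes, the routine should recover the closed-form MLEs \(\hat{\mu }=\bar{y}\) and \(\hat{\sigma }^2=\frac{1}{n}\sum (y_j-\bar{y})^2\), which makes a convenient test case before plugging in the censored log-likelihood.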

3.2 Bayesian estimation

In this section the parameters are estimated by the Bayesian method. First, suppose that \(\mu ,\sigma\), \(\theta _{1}\) and \(\theta _{2}\) are mutually independent, and that \(\sigma\) follows a Gamma prior with PDF \(g(\sigma ;a,b)\):

The prior on \(\mu\) is assumed to have a log-concave PDF; here \(\mu\) is given a normal prior with PDF \(\pi (\mu ;c,d)\), abbreviated to \(\pi (\mu )\):

\(\theta _{1}\) and \(\theta _{2}\) are given uniform priors with \(u(\theta _i)\equiv 1\), \(i=1,2\). Then the joint posterior PDF of \((\mu ,\sigma ,\theta _{1},\theta _{2})\) given \(\varvec{x}\) is

Under the squared error loss (SEL) function, the BE of any function \(P(\mu ,\sigma ,\theta _{1},\theta _{2})\) of the parameters, denoted by \(\hat{P}(\mu ,\sigma ,\theta _{1},\theta _{2})\), is its posterior mean:

Because Eq. (43) cannot be reduced to closed form, the MCMC method is used. Substituting (12) and (40) into (42), the conditional PDF of \(\mu\) given \(\sigma\), \(\theta _1\), \(\theta _2\) and \(\varvec{x}\) is proportional to

Given \(\mu\), \(\theta _{1}\),\(\theta _{2}\) and \(\varvec{x}\), the conditional PDF of \(\sigma\) is proportional to

Given \(\mu\), \(\sigma\), \(\theta _{2}\), and \(\varvec{x}\), the conditional PDF of \(\theta _{1}\) is proportional to

Given \(\mu\), \(\sigma\), \(\theta _{1}\), and \(\varvec{x}\), the conditional PDF of \(\theta _{2}\) is proportional to

Property 1

The posterior PDF \(\pi (\mu |\sigma ,\theta _{1},\theta _{2} ,\varvec{x})\) of the Eq. (44) is log-concave.

Proof

See “Appendix A”. \(\hfill\square\)

The marginal posterior distributions of \(\mu ,\sigma ,\theta _1\) and \(\theta _2\) do not have closed forms. By Eq. (44) and Property 1, the adaptive rejection sampling (ARS) method of Gilks and Wild (1992), which requires a log-concave density, is used to draw \(\mu\) from its full conditional distribution. The Metropolis–Hastings (M–H) algorithm is used to draw \(\sigma ,\theta _1\) and \(\theta _2\) from their full conditional distributions in Eqs. (45)–(47). Combining the two gives a hybrid Markov chain. The posterior samples are simulated and the BEs are obtained by the following M–H and ARS procedure:

Step 1. Set initial value \({\mu }^{(0)},\sigma ^{(0)},\theta _{1}^{(0)},\theta _{2}^{(0)}\) and the iteration counter \(j=1\);

Step 2. By Property 1, the full conditional density of \(\mu\) is log-concave, so generate \(\mu ^{(j)}\) from \(\pi (\mu |\sigma ^{(j-1)},\theta _{1}^{(j-1)},\theta _{2}^{(j-1)},\varvec{x})\) with the adaptive rejection algorithm of Gilks and Wild (1992).

Step 3. Generate a random variable \(\sigma ^{(j)}\) using M–H algorithm, the process is:

(3.1) Generate a random number \(\sigma ^{(j)}_1\) from \(\pi (\sigma |\mu ^{(j)},\theta _{1}^{(j-1)},\theta _{2}^{(j-1)},\varvec{x})\);

(3.2) Generate a random number \(\sigma _*^{(j)}\) from the proposal Gamma distribution \(g(\sigma ;a,b)\) with known, nonnegative hyper-parameters a, b;

(3.3) Compute the acceptance probability

$$\begin{aligned} h(\sigma ^{(j)}_1,\sigma ^{(j)}_*)=\min \left( 1,\frac{\pi (\sigma ^{(j)}_*|\mu ^{(j)},\theta _{1}^{(j-1)},\theta _{2}^{(j-1)},\varvec{x})g(\sigma _1^{(j)};a,b)}{\pi (\sigma ^{(j)}_1|\mu ^{(j)},\theta _{1}^{(j-1)},\theta _{2}^{(j-1)},\varvec{x})g(\sigma _*^{(j)};a,b)}\right) \end{aligned}$$

(3.4) Generate a random number \(u_j\) from U(0, 1);

(3.5) If \(h(\sigma ^{(j)}_1,\sigma ^{(j)}_*)>u_j\), set \(\sigma ^{(j)}=\sigma _*^{(j)}\); otherwise set \(\sigma ^{(j)}=\sigma ^{(j)}_1\);

Step 4. Generate \(\theta _{1}^{(j)}\) from \(\pi (\theta _{1} |\mu ^{(j)},\sigma ^{(j)},\theta _{2}^{(j-1)},\varvec{x})\) by the M–H algorithm, in the same way as Step 3;

Step 5. Generate \(\theta _{2}^{(j)}\) from \(\pi (\theta _{2} |\mu ^{(j)},\sigma ^{(j)},\theta _{1}^{(j)},\varvec{x})\) by the M–H algorithm, in the same way as Step 3;

Step 6. For a given point \(x\in (0,+\infty )\), compute

Step 7. Set \(j=j+1\);

Step 8. Repeat Steps 2 to 7 \(N\) times to obtain

Discarding the first \(N_{0}\) samples as burn-in, the remaining \(N-N_{0}\) samples are used to obtain the BEs

Under the SEL function, the posterior mean square errors (PMSEs) of \((\mu ,\sigma ,\theta _{1},\theta _{2},s(x),h(x))\) are

where P can be \(\mu ,\sigma ,\theta _{1},\theta _2, s(x)\), or h(x).

Step 9. Order \(\hat{P}^{(N_{0}+1)},\ldots ,\hat{P}^{(N)}\) as \(\hat{P}_{(1)},\ldots ,\hat{P}_{(N-N_{0})}\).

Then, the \(100(1-\gamma )\%\) highest posterior density (HPD) credible intervals of P, namely \(\big (\hat{P}_{([(N-N_0)\gamma /2])}, \hat{P}_{([(N-N_0)(1-\gamma /2)])}\big )\), are given by using the method suggested by Chen and Shao (1999), where [a] denotes the integer part of a.
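Steps 3, 8 and 9 can be sketched with the Python standard library. Two assumptions are made and labeled in the code: \(g(\sigma ;a,b)\) is read as a Gamma density with shape a and rate b, which needs \(a,b>0\) to serve as a proposal (with a = b = 0, as in Sects. 4 and 5, another proposal would be needed); and \(\sigma _1^{(j)}\) in Step 3 is read as the value carried over from the previous iteration, as in a standard independence sampler. The PMSE is assumed to be the average squared deviation of the retained draws from their mean. The interval written above is built from the \(\gamma /2\) and \(1-\gamma /2\) order statistics, i.e. it is equal-tailed, whereas the Chen and Shao (1999) HPD interval is the shortest of the intervals \((\hat{P}_{(j)},\hat{P}_{(j+[(N-N_0)(1-\gamma )])})\); the sketch therefore returns both. All names are hypothetical.

```python
import math
import random


def gamma_logpdf(s, a, b):
    """log g(s; a, b) for a Gamma density with shape a > 0 and rate b > 0 (assumed form)."""
    return a * math.log(b) - math.lgamma(a) + (a - 1) * math.log(s) - b * s


def independence_mh_step(current, log_target, a, b, rng=random):
    """Step 3: one independence M-H update with a Gamma(a, b) proposal.

    current    -- the value carried over from the previous iteration (assumed sigma_1)
    log_target -- log of the unnormalized full conditional (hypothetical callable)
    Returns (new_value, accepted).
    """
    proposal = rng.gammavariate(a, 1.0 / b)     # gammavariate takes (shape, scale)
    log_ratio = (log_target(proposal) + gamma_logpdf(current, a, b)
                 - log_target(current) - gamma_logpdf(proposal, a, b))
    accept_prob = 1.0 if log_ratio >= 0 else math.exp(log_ratio)
    if rng.random() < accept_prob:               # u_j ~ U(0, 1)
        return proposal, True
    return current, False


def posterior_summary(chain, burn_in, gamma=0.05):
    """Steps 8-9: BE (posterior mean), PMSE, equal-tailed and Chen-Shao HPD intervals."""
    kept = sorted(chain[burn_in:])
    n = len(kept)
    mean = sum(kept) / n                              # BE under squared error loss
    pmse = sum((p - mean) ** 2 for p in kept) / n      # assumed PMSE definition
    # Equal-tailed interval from order statistics ([a] = integer part, 1-based)
    lo = max(int(n * gamma / 2), 1)
    hi = max(int(n * (1 - gamma / 2)), lo)
    equal_tailed = (kept[lo - 1], kept[hi - 1])
    # Chen-Shao HPD: shortest interval (P_(j), P_(j + [n(1 - gamma)])) over all j
    width = int(n * (1 - gamma))
    j = min(range(n - width), key=lambda i: kept[i + width] - kept[i])
    return mean, pmse, equal_tailed, (kept[j], kept[j + width])


if __name__ == "__main__":
    # Toy run with a hypothetical Gamma(3, rate 2) target (mean 1.5), not the
    # paper's full conditional; the proposal is Gamma(2, rate 1).
    def log_target(s):
        return gamma_logpdf(s, 3.0, 2.0)

    rng = random.Random(1)
    s, draws = 1.0, []
    for _ in range(20000):
        s, _ = independence_mh_step(s, log_target, 2.0, 1.0, rng)
        draws.append(s)
    print(posterior_summary(draws, burn_in=2000))
```

For a right-skewed posterior, such as the toy Gamma target, the HPD interval is typically shorter than the equal-tailed one.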

4 Simulation study

In this section, a simulation study of the proposed method is conducted. First, the prior for \(\sigma\) in Eq. (40) is taken as the Gamma distribution with hyper-parameters \(a=0, b=0\), which is equivalent to the non-informative prior \(\frac{1}{\sigma }\), and \(\mu ,\theta _1,\theta _2\) have uniform priors. A three-level constant-stress ALT under GPT-IIC is then considered: the life distribution is log-normal, the three accelerated temperature levels are \(S_{1}=240\), \(S_{2}=260\), \(S_{3}=280\), and the normal operating temperature is \(S_{0}=200\). The acceleration functions are \(\ln \mu _{i}=1.95+126.43/S_{i}\) and \(\ln \sigma _{i}=-0.11+218.79/S_{i},i=1,2,3\), so that \(\mu =13.2255\), \(\sigma =2.6750\), \(\theta _{1}=0.9\) and \(\theta _{2}=0.8333\). For simplicity, we take \(n_{1}=n_{2}=n_{3}=n\) and \(m_{1}=m_{2}=m_{3}=m\), and the following sampling schemes are used at the three stress levels:

- [1]: \(n=30,m=20,r=5,R_{i:6}=\cdots =R_{i:{m-1}}=0,R_{i:m}=5\).
- [2]: \(n=30,m=25,r=0,R_{i:1}=2,R_{i:2}=\cdots =R_{i:{m-1}}=0,R_{i:m}=3\).
- [3]: \(n=40,m=30,r=0,R_{i:1}=5,R_{i:2}=\cdots =R_{i:{m-1}}=0,R_{i:m}=5\).
- [4]: \(n=40,m=35,r=3,R_{i:4}=\cdots =R_{i:{m-1}}=0,R_{i:m}=2\).
- [5]: \(n=50,m=40,r=1,R_{i:2}=5,R_{i:3}=\cdots =R_{i:{m-1}}=0,R_{i:m}=4\).
- [6]: \(n=50,m=45,r=2,R_{i:3}=\cdots =R_{i:{m-1}}=0,R_{i:m}=3\).

In all schemes, \(i=1,2,3\).

Let the GPT-IIC scheme at stress level \(S_h\) be given by \(n_h,m_h,r_h\) and \(R_h=(R_{h:r_h+1},R_{h:r_h+2},\ldots , R_{h:m_h})\). The GPT-IIC samples from the log-normal distribution at \(S_h\) are generated by the method given in Balakrishnan and Aggarwala (2000), whose steps are as follows:

Step 1. Generate a random variable \(V_{h:m_h}\) from \(Beta(n_h-r_h,r_h+1)\).

Step 2. Generate \(m_h-r_h-1\) independent random variables \(W_{h:r_h+1},\ldots ,W_{h:m_h-1}\) from U(0, 1).

Step 3. Set \(V_{h:r_h+l}=W_{h:r_h+l}^{1/a_{h:{r_h+l}}}\), \(l=1,\ldots , m_h-r_h-1\), where \(a_{h:{r_h+l}}=l+\sum \limits _{j=m_h-l+1}^{m_h}R_{h:j}\).

Step 4. Set \(_{r_h}U_{r_h+l:m_h:n_h}=1-V_{h:m_h-l+1}V_{h:m_h-l+2}\cdots V_{h:m_h},l=1,\ldots ,m_h-r_h\).

Step 5. Finally, set \(_{r_h}X_{r_h+l:m_h:n_h}=F_h^{-1}(_{r_h}U_{r_h+l:m_h:n_h};\mu _h,\sigma _h)\), \(l=1,\ldots ,m_h-r_h\), where \(F_h^{-1}(x;\mu _h,\sigma _h)\) is the inverse of the log-normal CDF \(F_h(x;\mu _h,\sigma _h)=\Phi (\frac{\ln x-\mu _h}{\sigma _h})\). Then \(\big (_{r_h}X_{r_h+1:m_h:n_h},\, _{r_h}X_{r_h+2:m_h:n_h},\,\ldots , \, _{r_h}X_{m_h:m_h:n_h}\big )\), abbreviated as \(\big (X_{h:r_h+1},\, X_{h:r_h+2},\,\ldots , \,X_{h:m_h}\big )\), is the required GPT-IIC sample of size \(m_h-r_h\) from the log-normal distribution with parameters \((\mu _h,\sigma _h^2)\).
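Steps 1–5 translate almost line by line into code. This is a sketch assuming the index conventions above, with hypothetical function names: the removals \(R_{h:r_h+1},\ldots ,R_{h:m_h}\) are stored in a Python list, and the scheme is checked against \(n_h=m_h+\sum _j R_{h:j}\) before sampling. Only the standard library is used (`random.betavariate` for Step 1 and `statistics.NormalDist.inv_cdf` for \(\Phi ^{-1}\) in Step 5).

```python
import math
import random
from statistics import NormalDist


def gpt2c_sample(n, m, r, R, mu, sigma, rng=random):
    """GPT-IIC sample x_{r+1} <= ... <= x_m from LN(mu, sigma^2).

    R = [R_{r+1}, ..., R_m] must satisfy n = m + sum(R).
    Follows Steps 1-5 (Balakrishnan and Aggarwala 2000).
    """
    if len(R) != m - r or n != m + sum(R):
        raise ValueError("inconsistent censoring scheme")
    # Step 1: V_m ~ Beta(n - r, r + 1)
    V = {m: rng.betavariate(n - r, r + 1)}
    # Steps 2-3: V_{r+l} = W^(1/a_{r+l}), a_{r+l} = l + R_{m-l+1} + ... + R_m
    for l in range(1, m - r):
        a = l + sum(R[m - l - r:])            # R_j is stored at R[j - r - 1]
        W = 1.0 - rng.random()                # uniform on (0, 1]
        V[r + l] = W ** (1.0 / a)
    # Step 4: U_{r+l} = 1 - V_{m-l+1} V_{m-l+2} ... V_m
    U, prod = [], 1.0
    for l in range(1, m - r + 1):
        prod *= V[m - l + 1]
        U.append(1.0 - prod)
    # Step 5: invert the log-normal CDF, x = exp(mu + sigma * Phi^{-1}(u))
    z = NormalDist()
    return [math.exp(mu + sigma * z.inv_cdf(u)) for u in U]


if __name__ == "__main__":
    # Scheme [2] of Sect. 4 at S_1 = 240, with mu_1 = mu * theta1, sigma_1 = sigma * theta2
    rng = random.Random(2021)
    R = [2] + [0] * 23 + [3]
    sample = gpt2c_sample(30, 25, 0, R, 13.2255 * 0.9, 2.6750 * 0.8333, rng)
    print([round(x, 1) for x in sample[:5]])
```

A quick sanity check: with no intermediate removals, the observed values are ordinary order statistics, so \(\Phi (\ln x_{h:k})\) should average about \(k/(n_h+1)\) over many replications.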

We obtain the BEs from 4000 MCMC samples, discarding the first 1000 as burn-in. The whole process is repeated 2000 times for each scheme, and the MLEs and BEs of the parameters are obtained by the methods described in Sects. 3.1 and 3.2. The means (MEANs), mean square errors (MSEs), average lengths (ACLs) of the 95% confidence intervals and HPD credible intervals, and the coverage percentages (CPs) of the parameters are listed in Table 1. The MLEs and BEs of the parameters are very close to the true values, so both estimation methods perform satisfactorily. The BE is nevertheless preferable, because it is not affected by the initial value and its MSEs are generally smaller than those of the MLE. For interval estimation, the CPs of the confidence and credible intervals are close to 95%, and the HPD credible intervals outperform the CIs in terms of ACLs and CPs. As (n, m) increases, the MSEs of the MLEs and BEs of the model parameters decrease.
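The summary measures in Table 1 are plain averages over the simulation replications. The sketch below (hypothetical names) computes MEAN, MSE, ACL and CP for one parameter and exercises them on a toy problem: complete log-normal samples, the MLE of \(\mu\) and its 95% Wald interval. The toy setting is an illustration only and is not the paper's GPT-IIC design.

```python
import math
import random
from statistics import NormalDist, fmean


def simulation_metrics(estimates, intervals, true_value):
    """MEAN, MSE, average interval length (ACL) and coverage percentage (CP)."""
    k = len(estimates)
    mean = fmean(estimates)
    mse = sum((e - true_value) ** 2 for e in estimates) / k
    acl = sum(hi - lo for lo, hi in intervals) / k
    cp = sum(lo <= true_value <= hi for lo, hi in intervals) / k
    return mean, mse, acl, cp


def toy_study(reps=2000, n=30, true_mu=2.0, true_sigma=0.5, seed=42):
    """Hypothetical toy setting: complete log-normal samples, MLE of mu, 95% Wald CI."""
    rng = random.Random(seed)
    z = NormalDist().inv_cdf(0.975)
    estimates, intervals = [], []
    for _ in range(reps):
        logs = [rng.gauss(true_mu, true_sigma) for _ in range(n)]  # ln X ~ N(mu, sigma^2)
        mu_hat = fmean(logs)
        sigma_hat = math.sqrt(sum((y - mu_hat) ** 2 for y in logs) / n)  # MLE of sigma
        half = z * sigma_hat / math.sqrt(n)
        estimates.append(mu_hat)
        intervals.append((mu_hat - half, mu_hat + half))
    return simulation_metrics(estimates, intervals, true_mu)


if __name__ == "__main__":
    print(toy_study())
```

In the toy study the CP lands slightly below 95%, since the Wald interval uses the normal quantile and the MLE of \(\sigma\) with a small n.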

5 Real example

Table 2 lists the lifetimes of steel specimens in six randomly assigned batches of 20 observations each; each batch was subjected to a different stress amplitude (Kimber 1990; Lawless 2003). First, the Kolmogorov–Smirnov (K–S) test is used to check whether the log-normal distribution fits each data set. The K–S distances (p-values) at the six levels are 0.0987 (0.9791), 0.0692 (0.9999), 0.0994 (0.9775), 0.1330 (0.8264), 0.1270 (0.8642) and 0.0920 (0.9898), respectively, which indicates that the log-normal distribution is an appropriate choice for these data. Next, the homogeneity of the variances is checked with Bartlett's test. The Bartlett test statistic is given by

$$\begin{aligned} \chi ^{2}=\frac{\sum _{i=1}^{6}(n_{i}-1)\ln s^2-\sum _{i=1}^{6}(n_{i}-1)\ln s^2_{i}}{C}, \end{aligned}$$

where \(s^2=\frac{\sum _{i=1}^{6}(n_{i}-1)s^2_{i}}{\sum _{i=1}^{6}(n_{i}-1)}\), \(s^2_{i} =\frac{1}{n_{i}-1}\sum _{j=1}^{n_{i}}(x_{ij}-{\bar{x}}_{i})^2\), and \(C=1+\frac{1}{3(6-1)}\Big [\sum _{i=1}^{6}\frac{1}{n_{i}-1}-\frac{1}{\sum _{i=1}^{6}(n_{i}-1)}\Big ]\).

For these data, the Bartlett test statistic is \(\chi ^{2} = 31.1890\), which exceeds the critical value \(\chi ^2_{0.05}(5)=11.070\) at \(\alpha =0.05\) (it also exceeds \(\chi ^2_{0.025}(5)=12.833\)). Hence the hypothesis of equal variances at the six stress levels is rejected, which shows that a model whose scale parameter changes with stress, as discussed in this paper, is needed in practical applications.
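Both diagnostics are short to code. In the sketch below (hypothetical names), the K–S distance compares the empirical CDF with a fitted log-normal CDF, and Bartlett's statistic is computed for k groups as \(\chi ^2=[(N-k)\ln s^2-\sum _i (n_i-1)\ln s_i^2]/C\) with \(N=\sum _i n_i\) and \(C=1+[\sum _i 1/(n_i-1)-1/(N-k)]/[3(k-1)]\). The Table 2 data are not reproduced here; to compare the \(\sigma _i\), the groups would naturally be the log-lifetimes of the six batches. p-values and critical values require a chi-square or K–S distribution routine, which the standard library does not provide.

```python
import math


def ks_distance_lognormal(data, mu, sigma):
    """K-S distance sup |F_n(x) - F(x)| against a log-normal CDF with parameters (mu, sigma)."""
    xs = sorted(data)
    n = len(xs)
    d = 0.0
    for i, x in enumerate(xs, start=1):
        F = 0.5 * math.erfc(-(math.log(x) - mu) / (sigma * math.sqrt(2.0)))
        d = max(d, i / n - F, F - (i - 1) / n)   # gaps just after and just before x_(i)
    return d


def bartlett_statistic(groups):
    """Bartlett's chi-square statistic for equal variances across k groups."""
    k = len(groups)
    dfs = [len(g) - 1 for g in groups]
    variances = []
    for g in groups:
        avg = sum(g) / len(g)
        variances.append(sum((v - avg) ** 2 for v in g) / (len(g) - 1))  # s_i^2
    total_df = sum(dfs)                                                   # N - k
    pooled = sum(df * s2 for df, s2 in zip(dfs, variances)) / total_df    # s^2
    numerator = total_df * math.log(pooled) - sum(df * math.log(s2) for df, s2 in zip(dfs, variances))
    c = 1 + (sum(1 / df for df in dfs) - 1 / total_df) / (3 * (k - 1))
    return numerator / c
```

Identical groups give a statistic of zero, and the statistic grows as the group variances spread apart.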

We take the normal stress level to be \(S_{0}=32\), a non-informative prior for \(\sigma\) (that is, Gamma hyper-parameters \(a=0, b=0\) in Eq. (40)) and uniform priors for \(\mu ,\theta _1,\theta _2\). Two GPT-IIC samples are then drawn from the original data in Table 2 under the following two censoring schemes:

- Scheme [1]: \(n=20,m=17,r=0,R_{i:1}=1,R_{i:2}=\cdots =R_{i:{m-1}}=0,R_{i:m}=2\).
- Scheme [2]: \(n=20,m=15,r=2,R_{i:3}=1,R_{i:4}=\cdots =R_{i:{m-1}}=0,R_{i:m}=2\).

In both schemes, \(i=1,2,3,4,5,6\).

The samples are listed in Tables 3 and 4. Using the MCMC algorithm of Sect. 3.2 under the GPT-IIC scheme, chains of size 4000 are generated for \(\mu , \sigma , \theta _{1}, \theta _{2}, s(x)\) and h(x) (\(x=800\)), and the first 500 samples are discarded. Figure 1 shows the sample paths of the remaining 3500 MCMC samples under scheme [1]. The sample paths in Fig. 1 indicate that the MCMC algorithm has converged.

Fig. 1 Sample paths of the MCMC samples under scheme [1]

Using the MCMC samples, the BEs of the unknown parameters of the life distribution and of the corresponding reliability s(x) and hazard rate h(x) (\(x=800\)), together with the lower limits (LL), upper limits (UL) and interval lengths (IL) of the HPD credible intervals, are calculated; these results and the MLEs are shown in Table 5. Although the BEs and MLEs are similar, the HPD intervals are shorter than the corresponding ML-based intervals. Moreover, computing the MLE is complicated, the result is often influenced by the initial value, and the uniqueness of the MLE is very difficult to prove. The Bayesian method does not require the uniqueness of a solution, and its convergence is not affected by the initial value.

Figure 2 shows the reliability and hazard rate functions at different stress levels under scheme [1]. The reliability function drops more steeply as the stress level increases, which indicates that the life of the steel specimens decreases with increasing stress. The hazard rate of the steel specimens increases with the stress level and, over the plotted range, it is increasing at every stress level, which indicates that the monotonicity of the hazard rate function does not change with the stress level.

Fig. 2 The reliability (left) and hazard rate (right) functions under different stress levels, scheme [1]

6 Conclusion

For a constant-stress accelerated life test, reliability analysis has been carried out for general progressively type-II censored data from the log-normal distribution, where both parameters of the log-normal model are affected by stress, a situation often encountered in practice. Point and interval estimates of the unknown parameters of the life distribution and of the corresponding reliability and hazard rate functions are obtained by the Bayesian and maximum likelihood methods. A hybrid Markov chain Monte Carlo algorithm that combines ARS and M–H steps within Gibbs sampling is implemented to obtain the Bayes estimates. The simulation results show that both the maximum likelihood and Bayes estimators perform well, so this paper provides an alternative and effective method for reliability test analysis. The analysis of a real data set shows that the proposed method is applicable in practice.

Data availability

The data used in this study are available from the corresponding author upon reasonable request.

References

Anwar S (2014) Estimation of constant-stress partially accelerated life test plans for Rayleigh distribution using type-II censoring. Int J Eng Sci Res Technol 3(9):327–332

Balakrishnan N, Aggarwala R (2000) Progressive censoring: theory, methods, and applications. Birkhäuser, Boston

Boyko KC, Gerlach DL (1989) Time dependent dielectric breakdown of 210A oxides. In: 27th International reliability physics symposium, pp 1–8

Chen M, Shao Q (1999) Monte Carlo estimation of Bayesian credible and HPD intervals. J Comput Graph Stat 8(1):69–92

Gilks WR, Wild P (1992) Adaptive rejection sampling for Gibbs sampling. Appl Stat 41(2):337–348

Hiergeist P, Spizer A, Rohl S (1989) Lifetime of oxide and oxide-nitro-oxide dielectrics within trench capacitors for DRAMs. IEEE Trans Electron Devices 36(5):913–919

Ismail AA (2015) Bayesian estimation under constant-stress Partially accelerated life test for Pareto distribution with type-I censoring. Strength Mater 47(4):633–641

Kimber AC (1990) Exploratory data analysis for possibly censored data from skewed distribution. J R Stat Soc Appl Stat 39(1):21–30

Lawless JF (2003) Statistical models and methods for lifetime data. Wiley, Hoboken

Lv SS, Niu Z, Qu L, He S (2015) Reliability modeling of accelerated life tests with both random effects and nonconstant shape parameters. Qual Eng 27(3):329–340

Mahmoud MAW, El-Sagheer RM, Nagaty H (2017) Inference for constant-stress partially accelerated life test model with progressive type-II censoring scheme. J Stat Appl Probab 6(2):373–383

Meeter CA, Meeker WQ (1994) Optimum accelerated life test with a nonconstant scale parameter. Technometrics 36:71–83

Nelson W (1984) Fitting of fatigue curves with constant standard deviation to data with runouts. J Test Eval 12(2):69–77

Seo JH, Jung M, Kim CM (2009) Design of accelerated life test sampling plans with a nonconstant shape parameters. Eur J Oper Res 197(2):659–666

Shi YM, Jin L, Wei C, Yue H (2013) Constant-stress accelerated life test with competing risks under progressive type-II hybrid censoring. J Adv Mater Res 712–715(2):2080–2083

Wang L (2018a) Estimation of exponential population with nonconstant parameters under constant-stress model. J Comput Appl Math 342:478–494

Wang L (2018b) Estimation of constant-stress accelerated life test for Weibull distribution with nonconstant shape parameter. J Comput Appl Math 343:539–555

Xu A, Fu J, Tang Y, Guan Q (2016) Bayesian analysis of constant-stress accelerated life test for the Weibull distribution using noninformative priors. Appl Math Model 12(2):119–126

Yan Z, Zhu T, Peng X, Li X (2017) Reliability analysis for multi-level stress testing with Weibull regression model under the general progressively type-II censored data. J Comput Appl Math 330:28–40

Zheng G, Shi Y (2013) Statistical analysis in constant-stress accelerated life tests for generalized exponential distribution based on adaptive type-II progressive hybrid censored data. Chin J Appl Probab Stat 29(4):363–380

Acknowledgements

The authors would like to thank the editors and reviewers for their constructive suggestions to improve the paper.

Funding

This research is supported by National Natural Science Foundation of China (No. 11861049) and Natural Science Foundation of Inner Mongolia (No. 2020LH01002).

Ethics declarations

Conflict of interest

The authors declare that they have no competing interests.

Appendix A

Let \(\xi _i(\mu )=\frac{\ln x_{i:r_{i}+1}-\mu \theta _{1}^{\phi _{i}}}{\sigma {\theta _{2}}^{\phi _{i}}},\) \(\xi _{i:j}(\mu )=\frac{\ln x_{i:j}-\mu \theta _{1}^{\phi _{i}}}{\sigma {\theta _{2}}^{\phi _{i}}}, i=1,\ldots ,k,~j=r_{i}+1,\ldots ,m_i,\) we have that

First, in Eq. (31), \(\frac{\mathrm{d}^2\log \pi (\mu )}{\mathrm{d}\mu ^2}\le 0\) because the normal prior \(\pi (\mu )\) is log-concave. Next, we show that \(\frac{\partial \Big (\frac{\varphi [\xi _i(\mu )]\cdot \xi _i'(\mu )}{\Phi [\xi _i(\mu )]}\Big )}{\partial \mu }\le 0\) and \(\frac{\partial \Big (\frac{\varphi [\xi _{i:j}(\mu )]\cdot \xi _{i:j}'(\mu )}{1-\Phi [\xi _{i:j}(\mu )]}\Big )}{\partial \mu } \ge 0.\)

Without loss of generality, let \(A(\mu )=\frac{\ln x-\mu \theta _{1}^{\phi }}{\sigma {\theta _{2}}^{\phi }}\), we have that

And

where \(g_1(\mu )=A(\mu )\cdot \Phi [A(\mu )]+\varphi [A(\mu )]\). Then

Hence, \(g_1(\mu )\) is decreasing in \(\mu\). Moreover,

Taking the limit, we get

Therefore, \(g_1(\mu )\ge 0\). Thus, \(\frac{\partial \Big (\frac{\varphi [A(\mu )]\cdot A'(\mu )}{\Phi [A(\mu )]}\Big )}{\partial \mu }\le 0\).

where \(g_2(\mu )=A(\mu )\cdot \{1-\Phi [A(\mu )]\}-\varphi [A(\mu )]\), then

Therefore, \(g_2(\mu )\) is decreasing in \(\mu\). Moreover, we have

It indicates that

Further, we have

Taking the limit again,

We obtain that \(g_2(\mu )\le 0\), further \(\frac{\partial \Big (\frac{\varphi [A(\mu )]\cdot A'(\mu )}{1-\Phi [A(\mu )]}\Big )}{\partial \mu }\ge 0\). Hence, \(\frac{\partial ^2\log \pi (\mu |\sigma ,\theta _{1},\theta _{2},{\varvec{x}})}{\partial \mu ^2}\le 0\), namely, \(\pi (\mu |\sigma ,\theta _{1},\theta _{2},{\varvec{x}})\) is log-concave.
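The two inequalities at the heart of the proof, \(g_1\ge 0\) and \(g_2\le 0\), and the resulting concavity of \(\ln \Phi (A)\) and \(\ln [1-\Phi (A)]\) in A, can be spot-checked numerically. Because \(A(\mu )\) is affine in \(\mu\), concavity in A carries over to \(\mu\). This is a sanity check on a grid over [-6, 6], not a proof; the helper names are hypothetical, and \(\Phi\) is evaluated with `math.erfc` to keep the tails accurate.

```python
import math


def std_normal_cdf(a):
    """Phi(a) via erfc, accurate in the lower tail."""
    return 0.5 * math.erfc(-a / math.sqrt(2.0))


def std_normal_pdf(a):
    """varphi(a), the standard normal density."""
    return math.exp(-0.5 * a * a) / math.sqrt(2.0 * math.pi)


def g1(a):
    """g_1 = A * Phi(A) + varphi(A), written as a function of A."""
    return a * std_normal_cdf(a) + std_normal_pdf(a)


def g2(a):
    """g_2 = A * [1 - Phi(A)] - varphi(A), with 1 - Phi(A) = Phi(-A)."""
    return a * std_normal_cdf(-a) - std_normal_pdf(a)


def second_diff(f, a, h=1e-3):
    """Central second difference approximating f''(a)."""
    return (f(a + h) - 2.0 * f(a) + f(a - h)) / (h * h)


def log_cdf(a):
    return math.log(std_normal_cdf(a))


def log_survival(a):
    return math.log(std_normal_cdf(-a))


grid = [-6.0 + 0.05 * i for i in range(241)]
print("min g1:", min(g1(a) for a in grid))
print("max g2:", max(g2(a) for a in grid))
print("max d2 log Phi:", max(second_diff(log_cdf, a) for a in grid))
print("max d2 log(1 - Phi):", max(second_diff(log_survival, a) for a in grid))
```

Note that \(g_2(A)=-g_1(-A)\), so the two inequalities are mirror images of each other.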

About this article

Cite this article

Cui, W., Yan, Zz., Peng, Xy. et al. Reliability analysis of log-normal distribution with nonconstant parameters under constant-stress model. Int J Syst Assur Eng Manag 13, 818–831 (2022). https://doi.org/10.1007/s13198-021-01343-0
