Abstract

This paper develops a philosophical investigation of the merits and faults of a theorem by Lanford (1975), Lanford (Asterisque 40, 117–137, 1976), Lanford (Physica 106A, 70–76, 1981) for the problem of the approach towards equilibrium in statistical mechanics. Lanford’s result shows that, under precise initial conditions, the Boltzmann equation can be rigorously derived from the Hamiltonian equations of motion for a hard spheres gas in the Boltzmann-Grad limit, thereby proving the existence of a unique solution of the Boltzmann equation, at least for a very short amount of time. We argue that, by establishing a statistical H-theorem, it offers a prospect to complete Boltzmann’s combinatorial argument without running into the objections which plague other typicality-based approaches. However, we submit that, while recovering the irreversible approach towards equilibrium for positive times, it fails to predict a monotonic increase of entropy for negative times, and hence it yields the wrong retrodictions about the past evolution of a gas.

1 Introduction

The famous Boltzmann equation is an irreversible equation describing the macroscopic time-evolution of low-density gases. Despite its successful applications, its technical and conceptual status has remained problematic since Boltzmann’s (1872) heuristic derivation. A theorem by Lanford (1975), Lanford (1976), Lanford (1981) shows that, under precise initial conditions, the Boltzmann equation can be rigorously derived from the Hamiltonian equations of motion for a hard spheres gas in the Boltzmann-Grad limit, thereby proving the existence of a unique solution of the equation, at least for a very short amount of time. As such, Lanford’s theorem is a serious candidate for solving the outstanding problem of establishing the spontaneous approach towards equilibrium in statistical mechanics. Yet, the result is subject to quite severe limitations, and its assessment in the philosophical literature is accordingly rather ambivalent. Let us illustrate this with two quotes from some of the most acute commentators. Uffink (2007) emphasized the foundational importance of Lanford’s work in light of its close connections with Boltzmann’s original formulation of the kinetic theory of gases and statistical mechanics:

the approach developed by Lanford... deserves special attention because it stays conceptually closer to Boltzmann’s (1872) work on the Boltzmann equation and the H-theorem than any other modern approach to statistical physics... Furthermore, the results obtained are the best efforts so far to show that a statistical reading of the Boltzmann equation or the H-theorem might hold for the hard spheres gas. [Uffink (2007), p.111]

On the other hand, Sklar (2009) stressed that the idealized regime introduced by the Boltzmann-Grad limit dramatically constrains the domain of applicability of the theorem, and that its short time-interval of validity would not even justify the usual realistic applications of the Boltzmann equation at the macroscopic level:

[Lanford’s] derivation has the virtue of rigorously generating the Boltzmann equation, but at the cost of applying only to one severely idealized system and then only for a very short time (although the result may be true, if unproven, for longer time scales). Once again an initial probability distribution is still necessary for time asymmetry. [Sklar (2009)]

Admittedly, appealing to the Boltzmann-Grad limit, which is essential to obtain a rigorous derivation of the Boltzmann equation, restricts one to highly diluted gas systems. Moreover, the time-scale of the theorem is extremely short: it is of the order of one-fifth of the mean free time, that is, the average time between two successive collisions of a particle in the gas. However, even though these limitations may not be overcome, they ought not to obscure the conceptual importance of Lanford’s result for the approach towards equilibrium: indeed, the time-bound is still sufficiently long for one to observe a monotonic increase of entropy for very rarefied gases out of equilibrium. In fact, a statistical H-theorem can be derived as a corollary, which predicts that, for the vast majority of non-equilibrium states satisfying the initial conditions, the negative of the H-function will increase monotonically through time until the system reaches equilibrium. As we wish to argue, this offers a prospect to complete the combinatorial argument, which is the basis of Boltzmann’s (1877) formulation of statistical mechanics, without running into the objections which plague other typicality-based approaches. Instead, in our opinion, the major concern about Lanford’s theorem arises when one applies it to negative times, in that, once the initial conditions are posited, it fails to yield the right retrodictions about the past evolution of the gas. With reference to the last sentence of the above quote by Sklar, this raises doubts as to whether time-asymmetry is really introduced by the initial probability distribution.

In this paper we wish to evaluate the merits and faults of Lanford’s theorem for the irreversible approach towards equilibrium. We begin by reviewing Boltzmann’s original work, specifically his derivation of the Boltzmann equation and the H-theorem in the kinetic theory of gases and his combinatorial argument in statistical mechanics, and point out some open problems (Section 2). We present Lanford’s theorem together with its limitations in Section 3. We then address whether it can solve the problems left open by Boltzmann. In particular, we explain how it can be used to complete the combinatorial argument, thereby recovering the spontaneous approach to equilibrium for positive times (Section 4). In the last section, we conclude by tackling the question whether the theorem also holds for negative times. Since a detailed analysis of the theorem would require one to engage in some rather technical subtleties, which may distract one from its philosophical significance, we defer it to the Appendix, where we also discuss its extendibility to arbitrarily long times.

2 Boltzmann’s legacy

At the macroscopic level, thermodynamic systems display a tendency to evolve from some initial non-equilibrium state into an equilibrium state and to remain there for the rest of time. For example, consider a gas contained in a closed box: if the initial state is such that at time t = 0 the gas is compressed in the right corner of the box, the gas tends to distribute uniformly over the entire volume available within the box until it reaches an equilibrium state at a later time t > 0. We never observe the reversed process, in which the gas uniformly distributed in the box would spontaneously leave the state of equilibrium and compress itself into the corner of the box. Irreversible processes taking place in agreement with our macroscopic observations are accompanied by a monotonic increase of entropy. One of the goals of statistical physics is to recover the spontaneous approach towards equilibrium from a microscopic point of view. For this purpose, one makes some hypotheses about the microscopic constitution of the system under investigation and appeals to probability theory.

In the hard spheres model, a gas system is assumed to consist of N molecules, idealized as rigid and impenetrable spheres of diameter a and mass m, which interact only through elastic binary collisions. Let us suppose that the gas is enclosed in a container, e.g. a box, occupying the finite-volume region Λ with perfectly elastic, reflecting and smooth walls. For most of the time the molecules move freely within the box according to the Hamiltonian equations of motion (H.E.M.), but occasionally they undergo collisions which change their momenta. The microscopic state is represented by a point \(x = (\vec q_1, \vec p_1, \ldots, \vec q_N, \vec p_N)\) in the 6N-dimensional phase space \(\Gamma \equiv (\Lambda \times \mathbb{R}^3)^N\). The motion and mutual collisions of the particles are governed by the laws of classical mechanics, which are time-reversal invariant. The time-evolution of a microstate x is given by \(x_t = T_t x\), where \(T_t\) is the Hamiltonian flow defined on phase space at any time t.

The crucial question then is: given that the microscopic dynamics is time-symmetric, how can one account for the irreversible approach towards equilibrium observed at the macroscopic level? In his original work, Boltzmann developed at least two main proposals: the 1872 derivation of the Boltzmann equation and the H-theorem in the kinetic theory of gases, and the 1877 combinatorial argument in statistical mechanics. Yet, both accounts failed to establish the approach towards equilibrium. Below, we discuss them in order.

2.1 The Boltzmann equation and the H-theorem

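In the kinetic theory of gases, the macroscopic state of the system is represented by a continuous (normalized) distribution function \(f(\vec q, \vec p)\) on the 6-dimensional one-particle phase space Λ × ℝ³, also called μ-space. Boltzmann (1872) constructed the Boltzmann equation (B.E.) for a gas in the hard spheres model as an evolution equation describing how this state-distribution changes in the course of time due to the free rectilinear motion of the particles and their mutual collisions. The crucial assumption in his derivation is the Stoßzahlansatz (or “assumption about the number of collisions”), which requires that, for any pair of particles, say 1 and 2, the factorization condition

\[ f_2(\vec q_1, \vec p_1, \vec q_2, \vec p_2, t) = f(\vec q_1, \vec p_1, t)\, f(\vec q_2, \vec p_2, t) \qquad (1) \]

of the two-particle distribution function into the one-particle distribution functions of each particle holds only before collisions. He also imposed a special condition on the initial state-distribution \(f_0\), according to which each direction of momentum is equally probable. The Boltzmann equation for the time-dependent state-distribution \(f(\vec q, \vec p, t)\) takes the following form:

\[ \frac{\partial f}{\partial t}(\vec q_1, \vec p_1, t) = -\frac{\vec p_1}{m} \cdot \nabla_{\vec q_1} f(\vec q_1, \vec p_1, t) + N a^2 \int_{\mathbb{R}^3} d\vec p_2 \int_{\vec\omega_{12} \cdot (\vec p_1 - \vec p_2) \geq 0} d\vec\omega_{12}\, \frac{\vec\omega_{12} \cdot (\vec p_1 - \vec p_2)}{m} \Big[ f(\vec q_1, \vec p_1', t)\, f(\vec q_1, \vec p_2', t) - f(\vec q_1, \vec p_1, t)\, f(\vec q_1, \vec p_2, t) \Big], \quad \vec p_{1}' = \vec p_{1} - \vec\omega_{12}\big[\vec\omega_{12} \cdot (\vec p_1 - \vec p_2)\big], \quad \vec p_{2}' = \vec p_{2} + \vec\omega_{12}\big[\vec\omega_{12} \cdot (\vec p_1 - \vec p_2)\big] \qquad (2) \]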

where \(\vec\omega_{12}\) is a unit vector pointing from the center of particle 1 to the center of particle 2. In particular, the constraint \(\vec\omega_{12} \cdot (\vec p_1 - \vec p_2) \geq 0\) means that the particles are approaching each other. The first term on the right-hand side of the equation is the free-flow operator and the second term is the collision operator (notice the coefficient \(Na^2\) in front of the integral in the latter).

Boltzmann then associated the entropy of the gas with the negative of the H-function, defined as \(-H[f(\vec q, \vec p)] := -\int f(\vec q, \vec p) \ln f(\vec q, \vec p)\, d\vec q\, d\vec p\), and demonstrated the H-theorem as a corollary that strictly follows from the validity of the Boltzmann equation: if for all times t there exists a solution \(f_t\) of B.E. with initial value \(f_0\), then the H-function cannot increase in the course of time, i.e. \( - \frac {d H[f_{t}]}{d t} \geq 0 \), where equality obtains just for the stationary Maxwell-Boltzmann distribution \(f_{MB}\), which describes the system at equilibrium. The H-theorem thus predicts that, for all initial non-equilibrium microstates, entropy increases monotonically until the system reaches equilibrium, and then remains constant for the rest of time. However, one can identify three major limitations in this attempt to establish the approach towards equilibrium.
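Before turning to these limitations, the content of the H-theorem can be illustrated numerically. The following Python sketch is only a toy: it works with a one-dimensional, spatially homogeneous momentum density, takes a unit-variance Gaussian as a stand-in for \(f_{MB}\), and replaces the Boltzmann collision operator with a hypothetical exponential-mixing path \(f_t = e^{-t} f_0 + (1 - e^{-t}) f_{MB}\), which conserves mass, mean momentum and energy but is not a solution of the Boltzmann equation. It evaluates \(-H[f_t]\) by a Riemann sum.

```python
import math

def entropy(f, xs, dx):
    """Riemann-sum approximation of -H[f] = -∫ f ln f dp, with 0 ln 0 := 0."""
    return -sum(f(x) * math.log(f(x)) for x in xs if f(x) > 0.0) * dx

def f_mb(p):
    # Stand-in for the Maxwell-Boltzmann density: Gaussian with unit variance
    return math.exp(-p * p / 2.0) / math.sqrt(2.0 * math.pi)

HALF_WIDTH = math.sqrt(3.0)  # a uniform density on [-sqrt(3), sqrt(3)] also has unit variance

def f_0(p):
    # Non-equilibrium initial density with the same mass, mean and energy as f_mb
    return 1.0 / (2.0 * HALF_WIDTH) if abs(p) <= HALF_WIDTH else 0.0

def f_t(t):
    # Hypothetical relaxation path (NOT the Boltzmann equation): mix f_0 into f_mb
    w = math.exp(-t)
    return lambda p: w * f_0(p) + (1.0 - w) * f_mb(p)

dx = 1e-3
xs = [-8.0 + i * dx for i in range(int(16.0 / dx) + 1)]
times = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0]
entropies = [entropy(f_t(t), xs, dx) for t in times]

for t, s in zip(times, entropies):
    print(f"t = {t:3.1f}   -H[f_t] = {s:.4f}")
print(f"-H[f_MB] = {entropy(f_mb, xs, dx):.4f}   (exact: {0.5 * math.log(2 * math.pi * math.e):.4f})")
```

Because \(-H\) is concave and, at fixed mass, mean and energy, is maximized by the Gaussian, it must increase along any such mixing path; this says nothing by itself about the actual dynamics, for which equation (2) would have to be solved.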

First of all, Boltzmann’s original derivation of B.E. was merely heuristic. His argument rests on an alleged relation between the microstate x of the system and its macroscopic state-distribution f. Yet, as it stands, such a relation is not exact: the former is a point in the 6N-dimensional phase space Γ, while the latter is a continuous function on the 6-dimensional one-particle phase space. Each microstate x gives rise to a (normalized) exact distribution function on μ-space, which in modern terminology can be expressed as a sum of Dirac δ-functions

\[ F[x](\vec q, \vec p) = \frac{1}{N} \sum_{i=1}^{N} \delta^{3}(\vec q - \vec q_i)\, \delta^{3}(\vec p - \vec p_i). \qquad (3) \]

Since real gases are composed of a finite number N of molecules, \(F[x](\vec q, \vec p)\) can only be discrete, and hence it cannot be identified with the continuous distribution function \(f(\vec q, \vec p)\). So, in order to provide a rigorous derivation of the Boltzmann equation from the Hamiltonian equations of motion, one needs to establish an exact relation x ∼ f and show that it holds in the course of time, once some suitable initial conditions are posited.

Furthermore, Boltzmann could not prove the existence of a unique solution of equation (2). The problem of showing that, given the function \(f_0\) at the initial time t = 0, its time-evolution \(f_t\) is a unique solution of the Boltzmann equation with initial value \(f_0\) for all times t ≥ 0 is still an outstanding issue in non-equilibrium statistical physics, which has been settled only in special cases. In particular, without a proof that such solutions exist, the antecedent in the above formulation of the H-theorem cannot be guaranteed to hold. Cercignani (1972) observed that the problem of the existence of a unique solution of the equation is closely related to the problem of providing a rigorous derivation of B.E. from H.E.M. Lanford’s theorem establishes precise conditions under which the sought-after result can be achieved, at least for a short interval of time.

Finally, even on the assumption that an exact relation x ∼ f is established at all times and that there exists a unique solution of equation (2), the H-theorem cannot be true in general. Famously, Loschmidt (1876) argued that, if one lets a system out of equilibrium evolve for some time and then suddenly reverses the momenta of all particles, the system will retrace its evolution and, after the same amount of time, return to its initial state with all momenta reversed. In fact, the reversibility objection shows that for every solution of H.E.M. for which the H-function decreases one can construct another solution for which the H-function increases, thus providing a counter-example to Boltzmann’s H-theorem. Moreover, Poincaré’s recurrence theorem entails that almost any initial microstate of the system will eventually evolve, in accordance with the Hamiltonian flow, into a microstate arbitrarily close to itself. Drawing on this, Zermelo (1896) observed that to any monotonic decrease of the H-function in the course of time there must correspond a monotonic increase too. The reversibility and the recurrence objections thus indicate that the approach towards equilibrium asserted by the H-theorem cannot obtain for all initial microstates. One may still hope, though, that a monotonic increase of −H can be demonstrated for almost all initial non-equilibrium microstates. A proof of this fact would constitute a statistical H-theorem.
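Loschmidt’s reversal can be reproduced in a few lines. The Python sketch below rests on a deliberately simplified assumption: non-interacting point particles in a one-dimensional box [0, L] with reflecting walls (collisions are omitted), evolved exactly by unfolding the motion onto a circle of length 2L. A coarse-grained entropy \(-\sum_j (n_j/N) \ln(n_j/N)\) over ten spatial cells, a hypothetical stand-in for \(-H\), rises as a gas initially squeezed against the left wall spreads out, and falls back once all velocities are reversed and the system is evolved for the same time.

```python
import math
import random

L = 1.0  # box length (hypothetical units)

def evolve(qs, vs, t):
    """Exact free motion in [0, L] with reflecting walls, via unfolding onto a circle of length 2L."""
    new_q, new_v = [], []
    for q, v in zip(qs, vs):
        y = (q + v * t) % (2.0 * L)
        if y <= L:                      # even number of wall reflections
            new_q.append(y)
            new_v.append(v)
        else:                           # odd number of reflections: mirror position, flip velocity
            new_q.append(2.0 * L - y)
            new_v.append(-v)
    return new_q, new_v

def coarse_entropy(qs, n_cells=10):
    """-sum_j (n_j/N) ln(n_j/N) over equal spatial cells of the box."""
    counts = [0] * n_cells
    for q in qs:
        counts[min(int(q / L * n_cells), n_cells - 1)] += 1
    n = len(qs)
    return -sum(c / n * math.log(c / n) for c in counts if c > 0)

rng = random.Random(1)
n = 1000
q0 = [0.01 + 0.08 * rng.random() for _ in range(n)]  # gas compressed near the left wall
v0 = [rng.uniform(-1.0, 1.0) for _ in range(n)]
t = 5.0

q1, v1 = evolve(q0, v0, t)                            # forward evolution
q_back, v_back = evolve(q1, [-v for v in v1], t)      # reverse all momenta, evolve for the same time

S_initial, S_spread, S_back = coarse_entropy(q0), coarse_entropy(q1), coarse_entropy(q_back)
print(f"entropy: initial {S_initial:.3f}, after t {S_spread:.3f}, after reversal {S_back:.3f}")
print("max position error after reversal:", max(abs(a - b) for a, b in zip(q_back, q0)))
```

The final positions agree with the initial ones up to rounding error, with every velocity reversed: the microscopic dynamics is indifferent to the direction in which the coarse-grained entropy runs.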

2.2 The combinatorial argument

In his 1877 work, Boltzmann conceded that there are exceptions to the H-theorem, and claimed that the approach towards equilibrium should be justified on statistical grounds. This is what the combinatorial argument purports to establish within the formalism of statistical mechanics. Let \(\mathcal {P} = \{ A_{1}, \ldots, A_{J} \}\) be a partition of the one-particle μ-space Λ × ℝ³ into disjoint cells \(A_j\) of equal volume δA, which are taken to be rectangular in position and momentum. With any microstate x there is associated a macrostate \(Z(x) := \{n_1(x), \ldots, n_J(x)\}\), where \(n_j\) is the occupation number of \(A_j\), i.e. the number of particles whose position and momentum lie within that cell. The occupation number is related to the state-distribution f by the following stipulation:

\[ n_j = N f(\vec q_j, \vec p_j)\, \delta A. \qquad (4) \]

Here, the value \(f(\vec q_j, \vec p_j)\) is assumed to be constant over the whole cell \(A_j\). Since the molecules are all taken to be identical, macrostates do not change under permutations of the particles, and hence different microstates can realize the same macrostate. Boltzmann made the further crucial assumption that all possible microstates are equally probable, which is tantamount to introducing the uniform Lebesgue measure μ on the phase space Γ. Let \(\Delta_Z = \{x \in \Gamma : Z(x) = Z\}\) be the set of phase points realizing a given macrostate Z: the probability of Z is then obtained by computing the value of μ over \(\Delta_Z\). Since the measure μ is uniform, this probability is proportional to the volume \(|\Delta_Z|\) of the corresponding region, which is fixed by the number of microstates realizing Z.
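For a toy version of this counting, suppose (hypothetically) that N = 6 particles are distributed over J = 3 cells of equal volume, with the energy constraint ignored. Under the uniform measure each particle is then equally likely to lie in any cell, so the probability of a macrostate Z is its multiplicity \(N!/(n_1! \cdots n_J!)\) times \(J^{-N}\). The Python sketch below enumerates all macrostates with exact rational arithmetic.

```python
from fractions import Fraction
from itertools import product
from math import factorial, prod

def multiplicity(occupation):
    """Number of ways to assign N labelled particles to cells with the given occupation numbers."""
    n = sum(occupation)
    return factorial(n) // prod(factorial(k) for k in occupation)

def probability(occupation):
    """Probability of a macrostate when each particle is equally likely to be in any of the J cells."""
    n, j = sum(occupation), len(occupation)
    return Fraction(multiplicity(occupation), j ** n)

def macrostates(n, j):
    """All occupation-number vectors (n_1, ..., n_J) with n_1 + ... + n_J = N."""
    return [occ for occ in product(range(n + 1), repeat=j) if sum(occ) == n]

states = macrostates(6, 3)
most_probable = max(states, key=probability)
print(most_probable, probability(most_probable))      # the evenly occupied macrostate
print(sum(probability(z) for z in states))            # probabilities sum to one
```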

Next, Boltzmann associated the entropy of a macrostate Z with the quantity \(k_B \ln |\Delta_Z|\), which is often referred to as the Boltzmann entropy, and showed that, up to an additive constant, it is given by \(-k_B N H[f]\), where \(k_B\) is the Boltzmann constant, a universal constant. It follows that the volume of a macrostate Z is such that \(|\Delta_Z| \approx e^{-N H[f]}\). Finally, he demonstrated that, under certain assumptions, the equilibrium macrostate \(Z_{eq}\), which is specified by the occupation numbers obtained by means of formula (4) for the Maxwell-Boltzmann distribution \(f_{MB}\), corresponds to the region of phase space Γ with the largest volume among all possible macrostates. Accordingly, equilibrium microstates are more numerous than the non-equilibrium microstates realizing any other macrostate. Indeed, one can show that, for a huge number N of particles, \(\Delta_{Z_{eq}}\) is overwhelmingly larger than any other region in Γ, so that \(|\Delta_{Z_{eq}}| \gg |\Delta_{Z}|\) for all \(Z \neq Z_{eq}\): under the requirement that the total energy E of the system remains constant, this region occupies the vast majority of the available phase space. Let us refer to this fact as the dominance of the equilibrium macrostate. It means that the equilibrium macrostate is the most probable macrostate, as well as the one associated with the highest Boltzmann entropy. Arguably, this would guarantee that any initial non-equilibrium microstate is very likely to evolve into an equilibrium microstate at a later time.
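Both the relation \(\ln|\Delta_Z| \approx -N H[f]\) (up to an additive constant) and the dominance of the equilibrium macrostate can be checked for large N using log-factorials. In this Python sketch the “equilibrium” macrostate is simply the uniform occupation of J = 10 cells (again ignoring the energy constraint), and the competing macrostate, with 110 000 particles in each of the first five cells and 90 000 in each of the last five, is a hypothetical example.

```python
import math

def ln_multiplicity(occupation):
    """ln(N! / prod_j n_j!), computed with lgamma to avoid overflow."""
    n = sum(occupation)
    return math.lgamma(n + 1) - sum(math.lgamma(k + 1) for k in occupation)

def stirling_entropy(occupation):
    """Leading-order Stirling estimate -N sum_j (n_j/N) ln(n_j/N), the discrete analogue of -N H[f]."""
    n = sum(occupation)
    return -n * sum(k / n * math.log(k / n) for k in occupation if k > 0)

N, J = 10**6, 10
z_eq = [N // J] * J                          # uniform occupation of the cells
z_other = [110_000] * 5 + [90_000] * 5       # hypothetical non-equilibrium macrostate

for name, z in [("uniform", z_eq), ("other", z_other)]:
    print(f"{name:8s} ln|Delta_Z| = {ln_multiplicity(z):.1f}   Stirling estimate = {stirling_entropy(z):.1f}")
print("ln(|Delta_other| / |Delta_eq|) =", ln_multiplicity(z_other) - ln_multiplicity(z_eq))
```

Even this mild departure from uniform occupation is suppressed by a factor of roughly \(e^{-5000}\) for \(N = 10^6\).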

However, it should be stressed that one of the assumptions adopted to prove the dominance of the equilibrium macrostate restricts the applicability of the combinatorial argument to ideal gases, where there are no interactions between the molecules. Specifically, Boltzmann assumed that the total energy E of the system is additive, that is \(E = \sum_j n_j E_j\), where \(E_j\) is the energy of an individual particle whose mechanical state lies in the cell \(A_j\), taken to be equal to the average energy in the cell. This requires that the energy of each particle does not depend on the mechanical states of the other particles in the system, and hence it follows that \(E_j\) cannot contain contributions from any interaction potential. Clearly, if one wishes to dispense with this assumption, one needs to provide an alternative proof that the region \(\Delta _{Z_{eq}}\) has maximal volume in phase space.

More importantly, as the Ehrenfests (1912) first recognized, the combinatorial argument is incomplete, in that it provides no proof about the time-evolution of the system. It is a merely static argument and, as such, it cannot establish the spontaneous approach towards equilibrium it purports to demonstrate, even within its narrow conditions of applicability. All it proves is that, for ideal gases, if the initial microstate \(x_0\) is a non-equilibrium microstate, then \(\ln | \Delta _{Z(x_{0})}| < \ln | \Delta _{Z_{eq}} |\), and hence \(x_0\) is associated with a lower Boltzmann entropy than an equilibrium microstate. Yet, this does not entail that \(x_0\) is very likely to evolve into a microstate \(x_t = T_t x_0\) contained in \(\Delta _{Z_{eq}}\) at some later time t > 0. In fact, one is not even assured that \(x_0\) is very likely to evolve into any microstate realizing a macrostate \(Z(x_t)\) of higher Boltzmann entropy than \(Z(x_0)\). In particular, even if one concedes that \(x_0\) would eventually evolve into an equilibrium microstate, nothing would prevent the Boltzmann entropy from decreasing during some time-interval: the system could in principle evolve into a microstate \(x_t\) realizing a macrostate \(Z(x_t)\) of lower Boltzmann entropy than the macrostate \(Z(x_{t'})\) at an earlier instant t′ < t. The incompleteness of the argument lies in the fact that it contains no information about how the macrostate of the system changes in the course of time. In order to complete the combinatorial argument, one needs to add a dynamical ingredient which would induce a monotonic increase of the Boltzmann entropy \(\ln | \Delta _{Z(x_{t})}|\) through time. Unfortunately, the solutions proposed in the literature are rather unsatisfactory (cf. Uffink (2007) and Frigg (2008)), so that the problem of establishing the approach towards equilibrium in Boltzmann’s formulation of statistical mechanics remains open.

Since Lanford’s approach purports to develop Boltzmann’s legacy, as suggested in the quote by Uffink in the Introduction, we now wish to evaluate whether it can settle the problems in Boltzmann’s original work.

3 Lanford’s theorem

In this section we explain in what sense Lanford’s theorem provides a rigorous derivation of the Boltzmann equation from the Hamiltonian equations of motion, thereby proving the existence of a unique solution of B.E., at least for a short interval of time. We also discuss the limitations of the applicability of the result to realistic gases.

The first step towards a rigorous derivation of B.E. is to establish an exact relation between the microstate of the gas and its macroscopic state-distribution. This requires one to work in a suitable limiting regime for the hard spheres model: indeed, as the number of particles increases, the discrete exact distribution F[x] generated by the microstate x should converge to some continuous, differentiable distribution function f. In the Boltzmann-Grad limit (B-G limit), one lets N grow to infinity while simultaneously letting the diameter a of the particles go to zero, in such a way that the quantity \(Na^2\) remains finite and non-zero. Here, we make the dependence of the terms on both parameters N and a explicit by using the superscript (a) as a shorthand, and we denote the B-G limit by a → 0, where it is understood that one also takes N → ∞. Given a partition \(\mathcal {P}\) of μ-space into cells of equal size, rectangular in position and momentum, and given some positive real number 𝜖, one establishes an exact relation \(x \sim _{(\mathcal {P}, \epsilon )} f\) if \(F^{(a)}[x]\) approximates f cell by cell, in the sense that

\[ \left| \int_{A_j} F^{(a)}[x](\vec q, \vec p)\, d\vec q\, d\vec p - \int_{A_j} f(\vec q, \vec p)\, d\vec q\, d\vec p \right| < \epsilon \quad \text{for all } A_j \in \mathcal{P}. \qquad (5) \]

Equivalently, we say that x represents f within the tolerance \((\mathcal {P},\epsilon )\).
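The tolerance relation can be made concrete in a one-dimensional stand-in for μ-space, where the cells are intervals and f is specified through its cumulative distribution function. The helper `represents` below (a hypothetical name, not taken from Lanford’s work) counts the particles in each cell and checks \(|n_j/N - \int_{A_j} f| < \epsilon\) cell by cell, using the fact that \(\int_{A_j} F[x] = n_j/N\).

```python
import bisect

def represents(points, cdf, edges, eps):
    """True iff |n_j / N - ∫_{A_j} f| < eps for every cell A_j = [edges[j], edges[j+1])."""
    pts = sorted(points)
    n = len(pts)
    for left, right in zip(edges, edges[1:]):
        n_j = bisect.bisect_left(pts, right) - bisect.bisect_left(pts, left)
        if abs(n_j / n - (cdf(right) - cdf(left))) >= eps:
            return False
    return True

def uniform_cdf(x):
    # Cumulative distribution of f = uniform density on [0, 1]
    return min(max(x, 0.0), 1.0)

cells = [k / 10 for k in range(11)]                     # partition P into 10 equal intervals
spread_out = [(i + 0.5) / 1000 for i in range(1000)]    # microstate spread over [0, 1)
clustered = [(i + 0.5) / 2000 for i in range(1000)]     # microstate squeezed into [0, 0.5)

print(represents(spread_out, uniform_cdf, cells, eps=0.01))
print(represents(clustered, uniform_cdf, cells, eps=0.01))
```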

Next, one would like to show that, for any initial non-equilibrium microstate \(x_0\) which represents the continuous function \(f_0\) within a certain tolerance, its time-evolution \(x_t = T_t x_0\) represents the solution \(f_t\) of B.E. with initial value \(f_0\) at any later time t. However, due to the time-reversal invariance of H.E.M., there are initial microstates which do not evolve in accordance with the irreversible Boltzmann equation. Lanford thus set out to prove that, under precise assumptions, an initial \(x_0\) representing \(f_0\) is very likely to evolve into a microstate \(x_t\) representing \(f_t\). For this purpose, one needs to introduce a probability measure. Actually, for technical reasons, Lanford’s theorem is expressed in terms of sequences of probability measures \(\{ \mu _{k}^{(a_{k})} \}_{k= 1, 2, \ldots}\), where the index k labels the number of molecules. To avoid overburdening the notation, we omit any reference to k and collectively denote the sequence by \(\mu^{(a)}\). In particular, Lanford assumed that \(\mu^{(a)}\) is absolutely continuous with respect to the Lebesgue measure and symmetric under permutations of the particles. Given the set \(\Delta ^{(a)}_{\mathcal {P},\epsilon }[f] = \{x \in \Gamma : \mbox { } x \sim _{(\mathcal {P}, \epsilon )} f \}\) of phase points which represent f within the tolerance \((\mathcal {P}, \epsilon )\), the sequence of probability measures \(\mu^{(a)}\) is said to be an approximating sequence for f just in case, for every tolerance \((\mathcal {P}, \epsilon )\), in the B-G limit it assigns probability one to the set \(\Delta ^{(a)}_{\mathcal {P},\epsilon }[f]\), that is

\[ \lim_{a \to 0} \mu^{(a)}\big(\Delta^{(a)}_{\mathcal{P},\epsilon}[f]\big) = 1. \qquad (6) \]
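To see how a sequence of measures can concentrate on the representing set as the number of particles grows, consider the simplest candidate, the N-fold product of f itself, in one dimension and with the hard-core exclusion ignored. Both are simplifying assumptions, and this is not Lanford’s construction. By the law of large numbers the occupation fractions approach the cell weights of f, so a Monte Carlo estimate of the measure of the representing set should rise towards one as N grows; the helper names are hypothetical.

```python
import bisect
import random

def represents(points, cdf, edges, eps):
    # Tolerance check |n_j/N - ∫_{A_j} f| < eps on every cell [edges[j], edges[j+1])
    pts = sorted(points)
    n = len(pts)
    return all(
        abs((bisect.bisect_left(pts, b) - bisect.bisect_left(pts, a)) / n - (cdf(b) - cdf(a))) < eps
        for a, b in zip(edges, edges[1:])
    )

def uniform_cdf(x):
    return min(max(x, 0.0), 1.0)

def mass_of_representing_set(n, trials, rng, eps=0.05, n_cells=10):
    """Monte Carlo estimate of the product measure of {x : x represents f}, f uniform on [0, 1]."""
    edges = [k / n_cells for k in range(n_cells + 1)]
    hits = sum(
        represents([rng.random() for _ in range(n)], uniform_cdf, edges, eps)
        for _ in range(trials)
    )
    return hits / trials

rng = random.Random(42)
estimates = {n: mass_of_representing_set(n, trials=400, rng=rng) for n in (10, 100, 1000)}
print(estimates)
```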

Since the time-evolution of \(\mu^{(a)}\) is governed by the Hamiltonian flow \(T_t\), what one needs to demonstrate in order to complete the derivation of B.E. from H.E.M. is that, if \(\mu^{(a)}\) is an approximating sequence for a continuous function \(f_0\) at the initial time, then \(\mu^{(a)} \circ T_{-t}\) is an approximating sequence for the solution \(f_t\) of B.E. with initial value \(f_0\) at any later time t.

Lanford determined sufficient conditions for the sought-after sequence of probability measures to exist, which are captured by assumptions (1) and (2) in his theorem. Since the precise statement of these assumptions requires one to introduce some extra technical background, we defer it to the Appendix. Here, we just give an informal characterization. Assumption (1) is a regularity condition, which rules out initial probability measures that do not fall off exponentially, as the Maxwell-Boltzmann distribution does. Assumption (2) contains a generalization of the factorization condition in Boltzmann’s Stoßzahlansatz, with the crucial difference that it does not include the additional provision that it applies only to particles which are about to collide. For a (diluted) gas with density z and inverse temperature β, the combination of assumptions (1) and (2) implies the following bound:

So, one restricts oneself to a special class of well-behaved initial probability distributions, which are sufficiently regular in the sense that they do not develop intractable singularities and which satisfy a factorization condition, just as solutions of B.E. ought to do. From a conceptual point of view, this is analogous to Boltzmann’s derivation imposing special initial conditions on \(f_0\). We are now in a position to spell out the theorem as formulated by Lanford (1976), Lanford (1981). In what follows, t̄ denotes the mean free time, namely the average time between two successive collisions of a particle.

LANFORD’S THEOREM

Suppose \(f_0(\vec q, \vec p)\) is a continuous function, and \(\mu^{(a)}\) is an approximating sequence for \(f_0\). If assumptions (1) and (2) hold at the initial time t = 0, then \(\mu^{(a)} \circ T_{-t}\) is an approximating sequence for the solution \(f_t\) of B.E. with initial value \(f_0\) for all t ∈ [0, τ], where τ = 0.2 t̄.

Lanford then proceeded to construct a sequence of probability measures which verifies the conditions of his theorem. Given a sufficiently fine tolerance \((\mathcal {P}_{0}, \epsilon _{0})\), one can define the sequence of conditional probability measures

concentrated on the set \(\Delta^{(a)}_{\mathcal {P}_{0}, \epsilon _{0}}[f_{0}]\) comprising all the microstates x such that \(x \sim _{(\mathcal {P}_{0}, \epsilon _{0})} f_{0}\). By construction, \(\mu ^{(a)}_{0}\) is an approximating sequence for \(f_0\). If assumptions (1) and (2) are satisfied, it thus follows that \(\mu ^{(a)}_{0} \circ T_{-t}\) is an approximating sequence for the solution \(f_t\) of B.E. with initial value \(f_0\) for all t up to the time-bound τ, which corresponds to one-fifth of the mean free time. In fact, for any tolerance \((\mathcal {P}, \epsilon )\), in the B-G limit the sequence \(\mu ^{(a)}_{0}\) assigns probability one to the set of initial microstates x which evolve into a microstate \(x_t = T_t x\) representing \(f_t\) within the tolerance \((\mathcal {P}, \epsilon )\), that is

\[ \lim_{a \to 0} \mu_0^{(a)} \circ T_{-t}\big(\Delta^{(a)}_{\mathcal{P},\epsilon}[f_t]\big) = \lim_{a \to 0} \mu_0^{(a)}\big(\{x \in \Gamma : T_t x \sim_{(\mathcal{P},\epsilon)} f_t\}\big) = 1 \quad \text{for all } t \in [0, \tau]. \]

The existence of the thus-constructed sequence \(\mu ^{(a)}_{0}\) guarantees that there exists a unique solution f t of B.E. with initial value f 0, at least for the time-interval of validity of the theorem.

It is important to stress that this does not mean that the Boltzmann equation holds on average, but rather that it holds for almost all initial microstates. That is, the theorem assures that, for any arbitrary tolerance \((\mathcal {P}, \epsilon )\), there is a tolerance \((\mathcal {P}_{0}, \epsilon _{0})\) such that, for the vast majority of phase-points x 0 at t = 0, if \(x_{0} \sim _{(\mathcal {P}_{0}, \epsilon _{0})} f_{0}\) then \(x_{t} \sim _{(\mathcal {P}, \epsilon )} f_{t}\) for any positive time t up to τ. As Lanford put it,

It is simply that, in the Boltzmann-Grad limit, among those microscopic states which represent a given f 0 within some small tolerance, most will have classical trajectories such that the state at time t > 0 approximately represents f t provided that t is not too large. [Lanford (1976), p. 73]

The qualification “most”, as synonymous with “almost all” or “the vast majority of”, is to be given a measure-theoretic interpretation, in that it is understood with respect to the measure \(\mu _{0}^{(a)}\). In fact, the set of microstates satisfying the initial conditions which, due to the time-reversal invariance of H.E.M., will not evolve so as to agree with B.E. is assigned probability zero by \(\mu _{0}^{(a)} \). In the spirit of the theorem, such exceptional microstates can then be neglected. So, measure-theoretic considerations play a crucial role in Lanford’s argument. In the next section, we relate this idea to the notion of typicality.

A statistical H-theorem can be derived from Lanford’s result as a corollary. For, once an exact relation between the microstate x and the macroscopic state-distribution f is established, it follows from Boltzmann’s derivation of his original H-theorem that, for any solution of the Boltzmann equation satisfying assumptions (1) and (2) at the initial time, the negative of the H-function will increase monotonically until the system reaches equilibrium and will then remain constant for the rest of time. In fact, one can argue as follows. Let a microstate \(x_0\) and the macroscopic state-distribution \(f_0\) be fixed at time t = 0, for which the initial entropy of the system is \(-H[f_0]\): as the macroscopic state-distribution evolves in the course of time as a solution \(f_t\) of B.E. with initial value \(f_0\), the entropy \(-H[f_t]\) increases until it attains its maximal value \(-H[f_{MB}]\). This is assured to be the case for almost all microstates \(x_0\) satisfying the initial conditions of Lanford’s theorem, at least during an interval of time [0, τ]. Before turning to the issue whether this offers a prospect to establish the irreversible approach towards equilibrium, we address some of the limitations of Lanford’s result.

3.1 Limitations of the result

As we pointed out in the Introduction, the two main limitations for the applicability of Lanford’s theorem to realistic gas situations are the idealized regime introduced by the Boltzmann-Grad limit and the short time of validity of the result. Let us take them up in the reverse order.

For a (diluted) gas with density z and inverse temperature β, the mean free path l̄, namely the average distance that a particle travels between two successive collisions, is approximately given by \(\frac {1}{\pi a^{2} N z}\), with \(\pi a^2\) being the collision cross-section of a hard sphere. One can then compute the mean free time t̄ as the ratio between l̄ and the root-mean-square velocity \(\sqrt {\frac {3}{m \beta }}\) of the particles. During a time-interval of length t̄, each particle undergoes on average one collision. Clearly, the time-bound τ of validity of Lanford’s result, being of the order of one-fifth of t̄, corresponds to an extremely short interval: for realistic gases in standard conditions, where t̄ is of the order of \(10^{-10}\) s, it amounts to a few hundredths of a nanosecond. Of course, such a short time-scale does not even justify the usual applications of the Boltzmann equation at the macroscopic level.
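The order of magnitude of τ can be estimated directly from these formulas. The Python sketch below assumes nitrogen at 0 °C and 1 atm with a hard-sphere diameter of \(3.7 \times 10^{-10}\) m (an illustrative value), and identifies the product Nz with the ideal-gas number density \(p/(k_B T)\); like the formula above, it omits the √2 correction for relative motion. It also reports the fraction of particles expected to collide within τ if free-flight times are taken to be exponentially distributed, a further simplifying assumption.

```python
import math

K_B = 1.380649e-23        # Boltzmann constant in J/K (exact SI value)
AMU = 1.66053906660e-27   # atomic mass constant in kg

def lanford_time_scale(diameter, mass, temperature, pressure):
    """Return (mean free path, mean free time, tau = 0.2 * mean free time) in SI units."""
    beta = 1.0 / (K_B * temperature)          # inverse temperature
    number_density = pressure * beta          # ideal gas n = p / (k_B T), standing in for N z
    mean_free_path = 1.0 / (math.pi * diameter**2 * number_density)
    v_rms = math.sqrt(3.0 / (mass * beta))    # root-mean-square speed
    mean_free_time = mean_free_path / v_rms
    return mean_free_path, mean_free_time, 0.2 * mean_free_time

# Illustrative inputs: N2 at 0 degrees C and 1 atm, hard-sphere diameter 3.7e-10 m
mfp, mft, tau = lanford_time_scale(3.7e-10, 28.0134 * AMU, 273.15, 101325.0)
frac_collided = 1.0 - math.exp(-tau / mft)  # assumes exponentially distributed free-flight times

print(f"mean free path  ~ {mfp:.2e} m")
print(f"mean free time  ~ {mft:.2e} s")
print(f"tau = 0.2 t_bar ~ {tau:.2e} s")
print(f"fraction of particles colliding within tau ~ {frac_collided:.2f}")
```

With these inputs the mean free time comes out at roughly \(1.8 \times 10^{-10}\) s and τ at a few times \(10^{-11}\) s.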

Unfortunately, no fully satisfactory proof of a theorem extending Lanford’s result to arbitrary positive times has been given. The best effort so far is that by Illner and Shinbrot (1984), Illner and Pulvirenti (1986), Illner and Pulvirenti (1989), who obtained a result which holds for all times for two-dimensional and three-dimensional diluted gases expanding in the vacuum. However, the relevant physical models are rather unrealistic. For, they assume that the mean free path is very large in comparison with the initial data: in this regime, the density of the gas would become so low that virtually no collisions take place. Furthermore, since the gas is depicted as freely expanding in the vacuum, Illner and Pulvirenti’s result does not apply to the case of a gas confined in a finite volume, as in Lanford’s theorem. Proving that, under mild assumptions, Lanford’s result can be extended in time for hard spheres gases contained in a box still remains an open challenge in the foundations of non-equilibrium statistical mechanics. On the positive side, no no-go result is known which prevents Lanford’s conclusion from holding for arbitrarily long times. In fact, there are concrete indications that the sought-after global theorem can be obtained. As we explain in the Appendix, the time-bound τ arises merely as a consequence of the proof technique adopted by Lanford. Moreover, even with the same technique, one could extend the result to longer times by strengthening the regularity assumption (1).

Be that as it may, from a conceptual point of view the limitation in time does not diminish the importance of Lanford’s theorem for the problem of establishing the approach towards equilibrium. Indeed, despite being ridiculously short, the time-bound τ is already long enough to observe irreversibility, in that the ensuing statistical H-theorem guarantees the monotonic increase of the entropy \(-H[f_t]\) during the interval \([0, \tau]\) if the system is not at equilibrium at the initial time t = 0.

A more serious limitation comes from the appeal to the Boltzmann-Grad limit. Recall that one requires the number of hard spheres N to tend to infinity while their diameter a goes to zero in such a way that \(N a^{2}\) remains finite and non-zero. This limit makes precise the idea, implicit in the hard spheres model, that collisions are neither too frequent nor too rare, in the sense that the mean free time remains of order one, so that each particle typically experiences one collision per unit time. However, \(a \to 0\) implies that the quantity \(N a^{3}\), which is proportional to the proper volume (namely the actual volume occupied by the particles), approaches zero in the limit. By contrast, the volume V(Λ) occupied by the entire gas, e.g. the volume of the box, may be kept fixed, and hence the density of the gas tends to vanish. In other words, although the number of its particles grows to infinity, the gas becomes infinitely rarefied. This restricts the domain of applicability of Lanford’s result dramatically, in that it may only apply to very dilute gases.
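The scaling can be made concrete with a short numerical sketch. It assumes a unit box (V(Λ) = 1) and the arbitrary choice \(N a^{2} = 1\) in the same units: for increasing N, the diameter a is chosen so that \(N a^{2}\) stays fixed, and the sketch reports the packing fraction \(N (\pi/6) a^{3} / V\), i.e. the fraction of the box actually occupied by the spheres, which is proportional to \(N a^{3}\). The function name is hypothetical.

```python
import math

def boltzmann_grad_sequence(c, volume, sizes):
    """For each particle number N, pick the diameter a with N a^2 = c
    (Boltzmann-Grad scaling) and report N a^2 and the packing fraction
    N * (pi/6) a^3 / volume occupied by the hard spheres."""
    rows = []
    for n in sizes:
        a = math.sqrt(c / n)
        packing = n * (math.pi / 6.0) * a ** 3 / volume
        rows.append((n, a, n * a * a, packing))
    return rows

rows = boltzmann_grad_sequence(c=1.0, volume=1.0, sizes=[10**k for k in range(2, 9, 2)])
for n, a, na2, packing in rows:
    print(f"N={n:>9d}  a={a:.2e}  N a^2={na2:.3f}  packing fraction={packing:.2e}")
```

Since the packing fraction equals \((\pi/6)\,c^{3/2} N^{-1/2}/V\), it vanishes like \(N^{-1/2}\) while the collision rate, governed by \(N a^{2}\), stays fixed.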

A related issue arises within the recent philosophical debate on the use of limits and idealizations in statistical physics. The question is whether, and to what extent, the limit system, namely the system of infinitely many particles constructed by taking N → ∞, provides a description of a target system, namely a real system with certain physical properties in which the number N of particles is very large but necessarily finite. In the B-G limit the limit system appears as a (countable) infinity of extensionless material points in an otherwise empty volume of space, whereas the target system is any real gas comprising a huge number of material particles of very small size, interacting by binary collisions and contained in a closed box. Clearly, the limit system can only give an inexact description of its purported target system, in that the latter ought to have very low density but cannot have null density. Norton (2011) maintained that the infinite system constructed in the B-G limit fails to be an idealization. He stipulated that an idealization is a system “some of whose properties provide an inexact description of some aspects of the target system” (p. 209). This definition ought to be contrasted with the notion of approximation: approximations are defined merely as inexact descriptions of the target system, and as such they do not require one to regard the construction of the limit system as essential. According to Norton, a limit system fails to be an idealization if it does not retain some crucial properties of the target system, such as determinism. He then observed that in the B-G limit single collisions between particles become indeterministic, contrary to what happens for finite gas systems in the hard spheres model. For, in order to compute the outcome of an individual collision between particles 1 and 2, one ought to uniquely determine six unknowns corresponding to the spatial components of the post-collision momenta of the two particles.
Yet, one has only four conservation equations at one’s disposal, i.e. one for energy and three for momentum (one for each spatial component), and so one needs to add some extra constraint. In the case of hard spheres of non-zero size, the required condition comes from the geometry of the collision, whereby the momentum transfer is perpendicular to the plane of contact of the surfaces of the two spheres. By contrast, when a → 0 hard spheres reduce to points, and colliding points do not have a definite plane of contact. The extra constraint is therefore missing. The fact that one cannot fix a unique resolution of the post-collision momenta means that binary collisions are no longer deterministic for the limit system, from which Norton concluded that the infinite system in the B-G limit fails to be an idealization.
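The counting in Norton’s argument can be made explicit. For equal masses (set to 1), the hard-sphere collision law transfers momentum along the unit vector ω joining the two centers: \(\vec{p}_1' = \vec{p}_1 - [\omega \cdot (\vec{p}_1 - \vec{p}_2)]\,\omega\) and \(\vec{p}_2' = \vec{p}_2 + [\omega \cdot (\vec{p}_1 - \vec{p}_2)]\,\omega\). The sketch below is our own illustration (the function names are hypothetical): every choice of ω conserves energy and total momentum, so the four conservation equations leave the six post-collision components undetermined up to the two angles fixing ω. Once the spheres shrink to points and ω is no longer defined, nothing selects among these outcomes.

```python
import math

def collide(p1, p2, omega):
    """Elastic hard-sphere collision for equal masses: the momentum
    transfer is directed along the unit vector omega between the centers."""
    g = [a - b for a, b in zip(p1, p2)]            # relative momentum
    s = sum(w * gi for w, gi in zip(omega, g))     # its component along omega
    p1_out = [a - s * w for a, w in zip(p1, omega)]
    p2_out = [b + s * w for b, w in zip(p2, omega)]
    return p1_out, p2_out

def unit(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def energy(*ps):
    return sum(sum(x * x for x in p) for p in ps) / 2.0   # unit mass

def momentum(*ps):
    return [sum(c) for c in zip(*ps)]

p1, p2 = [1.0, 0.0, 0.0], [-1.0, 0.0, 0.0]   # head-on pre-collision momenta
outcomes = [collide(p1, p2, unit(w)) for w in ([1, 0, 0], [1, 1, 0], [1, 0, 1])]
for q1, q2 in outcomes:
    # every admissible omega conserves energy and total momentum ...
    assert math.isclose(energy(q1, q2), energy(p1, p2))
    assert all(math.isclose(x, y, abs_tol=1e-12)
               for x, y in zip(momentum(q1, q2), momentum(p1, p2)))
    print(q1, q2)   # ... yet the post-collision momenta differ
```

Here the three choices of ω send the two particles off along the x, y and z axes respectively, all with the same energy and zero total momentum.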

Norton’s reasoning has the merit of revealing that the treatment of collisions in the B-G limit is somewhat problematic. To reinforce this point, we would like to remark further that the unit vector \(\omega_{12}\) connecting the centers of particles 1 and 2, which appears in the integral in the collision term of the Boltzmann equation (2), allows one to transform pre-collision momenta into post-collision momenta according to the classical laws of collisions (see eq. 2 in Lanford (1975), p. 75). Yet, when a → 0, during a collision the positions \(\vec{q}_1\) and \(\vec{q}_2\) of the centers of the two particles coincide, and hence the vector \(\omega_{12}\) is no longer defined. Therefore, the laws of collisions cannot apply in their standard form in the B-G limit. In fact, in the proof of Lanford’s theorem, one removes collision points from the allowed portion of phase space, by arguing that they form a set of measure zero with respect to the Lebesgue measure (see the Appendix for more details). However that may be, Norton’s conclusion rests on his stipulated distinction between idealizations and approximations: arguably, rather than being an idealization, the infinite system in the B-G limit ought to be regarded as an approximation of its target system. Indeed, the limit system yields a “good” approximation of a real gas in the hard spheres model when the number of particles in the latter is very high and their diameter is very small, so that the quantity \(N a^{3}\), which is proportional to the density, remains non-null. Under this interpretation, the justification for appealing to the B-G limit is merely pragmatic (see Footnote 2).

That said, we would like to stress that the B-G limit is essential for obtaining a rigorous derivation of the Boltzmann equation, from which one derives a statistical H-theorem predicting a monotonic increase of entropy towards equilibrium. On the one hand, it allows one to establish an exact relation between the microstate of the system and its macroscopic state-distribution; on the other hand, the fact that the quantity \(N a^{2}\) remains finite and non-zero means that the collision term in equation (2) does not vanish when a → 0, thereby ensuring the irreversible behavior of the negative of the H-function. Furthermore, the B-G limit puts one in a position to avoid the recurrence objection which was raised against the original H-theorem. Boltzmann himself already estimated that the recurrence time tends to infinity as the number of particles N goes to infinity. More to the point, Poincaré’s recurrence theorem rests on the assumption that the accessible phase-space volume is finite, which is obviously not true for N → ∞. As a consequence, in the B-G limit the recurrence theorem does not hold, and hence one cannot run Zermelo’s objection against any possible extension of Lanford’s theorem to arbitrarily long times.

So, in spite of the above-mentioned limitations, the striking point about Lanford’s theorem remains: for extremely dilute gases contained in a box, under suitable initial conditions one can derive the irreversible Boltzmann equation from the time-reversal invariant Hamiltonian equations of motion, thereby proving the monotonic increase of the negative of the H-function for some non-trivial amount of time. We now address the question whether the theorem can be used to recover the approach towards equilibrium in Boltzmann’s formulation of statistical mechanics, at least within its domain of applicability.

4 The approach towards equilibrium

Let us recall why the combinatorial argument is incomplete. If one wishes to show that any initial microstate \(x_0\) is very likely to evolve into an equilibrium microstate, one needs to introduce a probability measure. However, the argument is highly sensitive to how such a measure is applied. For instance, one cannot argue that this happens simply because equilibrium microstates are more probable. Indeed, strictly speaking, due to Boltzmann’s equiprobability assumption any equilibrium microstate has the same Lebesgue measure μ as any non-equilibrium microstate. Furthermore, since the Hamiltonian flow \(T_t\) is measure-preserving, the probability of the evolved microstate \(x_t\) remains the same as that of \(x_0\) for all times t. Granted, by the dominance of the equilibrium macrostate, \(Z_{eq}\) is overwhelmingly the most likely macrostate. Yet, one still needs to prove that, as time goes on, the initial non-equilibrium microstate evolves into microstates \(x_t\) which realize more and more probable macrostates \(Z(x_t)\) until the system reaches equilibrium. In fact, the spontaneous approach towards equilibrium can only be demonstrated by showing that the microscopic dynamics ensures the monotonic increase of the Boltzmann entropy for positive times. The time-evolution of the system is determined by its Hamiltonian, plus some initial conditions. We submit that, by entailing a statistical H-theorem, Lanford’s result provides the missing dynamical ingredient required to complete the combinatorial argument. The key is to recognize the crucial link established in Boltzmann’s argument between the negative of the H-function and the Boltzmann entropy \(S_B := k_B \ln|\Delta_Z|\), which, by Stirling’s approximation, is approximately equal to \(-k_B N H[f]\). Clearly, the existence of a unique solution \(f_t\) of B.E. with initial value \(f_0\), for which \(-H[f_t]\) increases monotonically in the course of time, yields sufficient conditions to set the Boltzmann entropy in motion. We now show this explicitly.
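The link between \(\ln|\Delta_Z|\) and \(-N H[f]\) can be checked numerically. The sketch below assumes a piecewise-constant f with \(f_j = n_j/(N\,\delta A)\) on cells of equal volume δA, so that \(|\Delta_Z| = \frac{N!}{\prod_j n_j!}\,\delta A^{N}\) and \(H[f] = \sum_j f_j \ln f_j\,\delta A\); the occupation numbers, δA and the function names are hypothetical. The exact logarithm is computed with `math.lgamma`, and it differs from \(-N H[f]\) only by the lower-order remainder of Stirling’s formula.

```python
import math

def log_phase_volume(occupations, cell_volume):
    """ln |Delta_Z| for the macrostate Z = (n_1, ..., n_m): the number of
    microstates N! / prod n_j! times the volume cell_volume**N of each."""
    n_total = sum(occupations)
    log_count = math.lgamma(n_total + 1) - sum(math.lgamma(n + 1) for n in occupations)
    return log_count + n_total * math.log(cell_volume)

def minus_n_h(occupations, cell_volume):
    """-N H[f] for the piecewise-constant f_j = n_j / (N * cell_volume),
    with H[f] = sum_j f_j ln f_j * cell_volume (empty cells contribute 0)."""
    n_total = sum(occupations)
    h = 0.0
    for n in occupations:
        if n > 0:
            f = n / (n_total * cell_volume)
            h += f * math.log(f) * cell_volume
    return -n_total * h

occupations = [400_000, 300_000, 200_000, 100_000]   # hypothetical macrostate
dA = 0.5                                             # hypothetical cell volume
exact = log_phase_volume(occupations, dA)
approx = minus_n_h(occupations, dA)
print(exact, approx, abs(exact - approx) / sum(occupations))   # last: per particle
```

The discrepancy grows only logarithmically with the occupation numbers, so per particle it becomes negligible for large N; multiplying by \(k_B\) gives \(S_B \approx -k_B N H[f]\).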

To begin with, let us spell out the relation between the region \(\Delta_Z\) associated with the macrostate Z realized by a given microstate x and the set \(\Delta_{\mathcal{P},\epsilon}[f]\) of phase points such that \(x \sim_{\mathcal{P},\epsilon} f\) for the macroscopic state-distribution f. Consider a partition \(\mathcal{P}\) of μ-space into a large number of cells. For a given microstate \(x \in \Delta_{Z(x)}\), the occupation number of any cell \(A_j\) is specified by formula (4), that is \(n_{j}(x) = N f(\vec{q}_{j}, \vec{p}_{j}) \delta A\). Since the value \(f(\vec{q}_{j}, \vec{p}_{j})\) is assumed to be constant within each cell, one can write \(n_{j}(x) = N \int_{A_{j}} f(\vec{q},\vec{p})\, d^{3}\vec{q}\, d^{3}\vec{p}\). The requirement that there exists an \(\epsilon > 0\) for which condition (5) is satisfied is thus trivially fulfilled, and hence \(x \in \Delta_{\mathcal{P},\epsilon}[f]\). On the other hand, strictly speaking, the converse is not true: the fact that a microstate x represents f within the tolerance \((\mathcal{P},\epsilon)\) only implies that the occupation numbers \(n_j(x)\) are approximately equal to \(N \int_{A_{j}} f(\vec{q},\vec{p})\, d^{3}\vec{q}\, d^{3}\vec{p}\) for every cell \(A_j\). Yet, the positive real number ε in formula (5) can be chosen to be arbitrarily small. Thus, whenever the relation \(x \sim_{(\mathcal{P},\epsilon)} f\) holds for a sufficiently small ε, one can regard the sets \(\Delta_{Z(x)}\) and \(\Delta_{\mathcal{P},\epsilon}[f]\) as equivalent to a good approximation. By looking at the way in which the occupation numbers evolve according to Lanford’s theorem, one can then determine how the macrostate of the system changes in the course of time.
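The representation relation can be illustrated with a one-dimensional stand-in for μ-space. In the sketch below, which rests on several assumptions, the “microstate” is a list of N sampled momenta, f is the standard normal density, the partition consists of cells of width 0.5 plus two unbounded tail cells, and condition (5) is taken to have the form \(|n_j/N - \int_{A_j} f| < \epsilon\) for every cell. The actual form of (5) may differ, and all function names are hypothetical.

```python
import bisect
import math
import random

def gaussian_cell_mass(lo, hi):
    """Integral of the standard normal density f over the cell [lo, hi)."""
    def cdf(x):
        return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))
    return cdf(hi) - cdf(lo)

def occupation_numbers(samples, edges):
    """n_j = number of sample points falling in cell [edges[j], edges[j+1])."""
    counts = [0] * (len(edges) - 1)
    for v in samples:
        counts[bisect.bisect_right(edges, v) - 1] += 1
    return counts

def represents(samples, edges, eps):
    """Assumed tolerance condition: |n_j / N - integral of f over A_j| < eps
    for every cell A_j of the partition."""
    n_total = len(samples)
    counts = occupation_numbers(samples, edges)
    deviations = [abs(c / n_total - gaussian_cell_mass(edges[j], edges[j + 1]))
                  for j, c in enumerate(counts)]
    return max(deviations) < eps, counts, deviations

random.seed(0)
N = 100_000
samples = [random.gauss(0.0, 1.0) for _ in range(N)]     # one "microstate"
edges = [-math.inf] + [-3.0 + 0.5 * k for k in range(13)] + [math.inf]
ok, counts, devs = represents(samples, edges, eps=0.01)
print(ok, max(devs))
```

Shrinking ε or refining the partition makes the condition more demanding; for fixed ε, the typical deviations shrink roughly like \(1/\sqrt{N}\).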

For an initial non-equilibrium microstate \(x_0\) representing the continuous function \(f_0\) within a certain tolerance, the occupation numbers in the corresponding macrostate \(Z(x_0) = \{n_1(x_0), \ldots, n_j(x_0), \ldots\}\) are approximately equal to \(N \int_{A_{j}} f_{0}(\vec{q},\vec{p})\, d^{3}\vec{q}\, d^{3}\vec{p}\) for every cell \(A_j\). The existence of a unique solution \(f_t\) of B.E. with initial value \(f_0\) ensures that the time-evolved occupation numbers \(n_j(x_t)\) are approximately equal to \(N \int_{A_{j}} f_{t}(\vec{q},\vec{p})\, d^{3}\vec{q}\, d^{3}\vec{p}\) at all t > 0, thereby establishing the time-evolution of the macrostate \(Z(x_t) = \{n_1(x_t), \ldots, n_j(x_t), \ldots\}\), whose Boltzmann entropy is determined by \(\ln|\Delta_{Z(x_t)}|\). Lanford’s result then implies that, for almost all initial non-equilibrium microstates \(x_0\), the time-evolved microstate \(x_t\) is such that
\[ \ln|\Delta_{Z(x_{t_2})}| \geq \ln|\Delta_{Z(x_{t_1})}| \quad \text{for all } 0 \leq t_1 \leq t_2, \]

at least for the time-interval of validity of the theorem, which means that the Boltzmann entropy \(S_B(x_t)\) cannot decrease for positive times. Moreover, it follows that \(\ln|\Delta_{Z_{eq}}| \geq \ln|\Delta_{Z(x_t)}|\), where equality holds just in case \(x_t \in \Delta_{Z_{eq}}\), that is, when \(x_t \sim_{(\mathcal{P},\epsilon)} f_{MB}\). In other words, the Boltzmann entropy \(S_B\) reaches its maximum when the state-distribution is the Maxwell-Boltzmann equilibrium distribution, at which the negative of the H-function attains its maximum. This means that, for the vast majority of non-equilibrium microstates satisfying the initial conditions, the Boltzmann entropy increases monotonically through time and attains its maximal value at equilibrium.
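The qualitative behavior asserted here, a monotonic growth of \(-H[f_t]\) bounded by its value at the Maxwellian, can be illustrated numerically. The sketch does not use the hard-sphere Boltzmann equation of Lanford’s theorem: it swaps in the much simpler BGK relaxation model \(\partial_t f = (f_{MB} - f)/\theta\), spatially homogeneous and in one velocity dimension, where \(f_{MB}\) is the Maxwellian with the same density, mean velocity and temperature as the initial distribution. The velocity grid, the two-beam initial distribution, the relaxation time θ (`relax_time`) and all names are hypothetical choices.

```python
import math

def maxwellian(v, density, mean, var):
    """One-dimensional Maxwell-Boltzmann distribution."""
    return density / math.sqrt(2.0 * math.pi * var) * math.exp(-(v - mean) ** 2 / (2.0 * var))

DV = 0.01
GRID = [-8.0 + DV * k for k in range(1601)]

# Hypothetical initial non-equilibrium distribution: two counter-streaming beams.
F0 = [0.5 * maxwellian(v, 1.0, -1.0, 0.25) + 0.5 * maxwellian(v, 1.0, 1.0, 0.25) for v in GRID]

# Maxwellian with the same density, mean velocity and temperature (variance) as F0.
RHO = sum(F0) * DV
U = sum(v * f for v, f in zip(GRID, F0)) * DV / RHO
VAR = sum((v - U) ** 2 * f for v, f in zip(GRID, F0)) * DV / RHO
F_MB = [maxwellian(v, RHO, U, VAR) for v in GRID]

def H(f):
    """Discretized H-function: sum of f ln f over the grid, times DV."""
    return sum(x * math.log(x) for x in f if x > 0.0) * DV

def bgk_solution(t, relax_time=1.0):
    """Exact solution of the BGK model df/dt = (F_MB - f) / relax_time with f(0) = F0."""
    w = math.exp(-t / relax_time)
    return [fmb + w * (f0 - fmb) for f0, fmb in zip(F0, F_MB)]

times = [0.1 * k for k in range(51)]
entropy = [-H(bgk_solution(t)) for t in times]     # -H[f_t] along the solution
print(entropy[0], entropy[-1], -H(F_MB))
```

Because each \(f_t\) is a convex combination of \(f_0\) and the Maxwellian sharing its moments, and H is convex with its minimum over such distributions at the Maxwellian, \(-H[f_t]\) cannot decrease in this model. This is a property of the toy model, not a substitute for Lanford’s proof.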

Notice that probability considerations are employed here in two distinct ways. On the one hand, in the combinatorial argument one assumes the Lebesgue measure μ on phase space, and then computes the probability of the macrostate realized by a given microstate x by evaluating the size of the corresponding region. On the other hand, in Lanford’s approach the sequence of probability measures \(\mu_{0}^{(a)}\) is assumed to be absolutely continuous with respect to the Lebesgue measure, and it is shown that an initial microstate has probability close to one of evolving through time in accordance with the Boltzmann equation. The above procedure for completing the combinatorial argument by means of Lanford’s result draws a connection between these two meanings of probability. Accordingly, given the region \(\Delta_{Z(x_0)}\) containing all the phase points representing the initial value \(f_0\) of a solution \(f_t\) of B.E. within the tolerance \((\mathcal{P}_0, \epsilon_0)\), the conclusion expressed by formula (7), namely \( \lim_{a \rightarrow 0}\mu^{(a)}_{0} \circ T_{-t} (\{\Delta^{(a)}_{\mathcal{P}, \epsilon}[f_{t}] \}) = 1 \), just means that in the Boltzmann-Grad limit any initial microstate \(x_0\) is assigned probability one of evolving into a microstate \(x_t\) which realizes the macrostate \(Z(x_t)\) corresponding to a region \(\Delta_{Z(x_t)}\) of larger volume (it is important to recognize, though, that not all the phase points contained in the latter region are the time-evolution of some microstate in \(\Delta_{Z(x_0)}\)). In other words, Lanford’s theorem implies that any initial non-equilibrium microstate satisfying assumptions (1) and (2) is very likely (with respect to \(\mu_{0}^{(a)}\)) to evolve into microstates realizing macrostates which are more and more probable (with respect to μ) as time goes on, until the system reaches equilibrium.
We wish to emphasize that the procedure for completing the combinatorial argument we just outlined does not restrict one to ideal gases. In fact, it relies on a method different from Boltzmann’s original one for proving the dominance of the equilibrium macrostate. In particular, one does not need to invoke the assumption that the total energy is additive. To be sure, the condition \(E = \sum_{j} n_{j} E_{j}\) still holds for \(E_j\) taken as the average energy in the cell \(A_j\). However, one can no longer associate \(E_j\) with the energy of any individual particle whose mechanical state lies within the cell. In fact, the energy of a single particle is not independent of the other particles: when a binary collision occurs, energy is exchanged between the colliding molecules, whose mechanical states are located in distinct cells of μ-space since their momenta are in general quite different from each other. The above procedure thus seems to offer a scheme for extending the combinatorial argument to physical systems in which the molecules interact with each other. In this respect, it is worth noting that King (1975) managed to generalize Lanford’s result to gases in which the molecules interact by a short-range non-negative potential.

4.1 A typicality-based account

Measure-theoretic arguments to the effect that the vast majority of non-equilibrium microstates will eventually evolve into equilibrium microstates are sometimes cast in the literature in terms of the notion of typicality. The basic idea is that a microstate is “typical” with respect to a given measure on phase space if it belongs to a set of measure one. Conversely, it is “a-typical” if it belongs to a set of measure zero. Clearly, such a definition is sensitive to the specific measure one adopts. So, one can identify two distinct senses of typicality corresponding to the two probability measures described above. On the one hand, in the combinatorial argument typical microstates are those which realize the equilibrium macrostate \(Z_{eq}\), since the set \(\Delta_{Z_{eq}}\) has measure (close to) one with respect to the Lebesgue measure μ. On the other hand, in Lanford’s theorem typical microstates are those initial microstates representing \(f_0\) within a certain tolerance which evolve through time in agreement with the Boltzmann equation, since they form a set of measure one with respect to the sequence \(\mu_{0}^{(a)}\) of probability measures absolutely continuous with respect to the Lebesgue measure.
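The first sense of typicality, the dominance of the equilibrium macrostate under a counting (Lebesgue-type) measure, can be illustrated with a standard toy model that the text does not itself use: N labelled particles, each in the left or right half of a box, with all \(2^{N}\) configurations counted equally. As an assumption for the illustration, “equilibrium” means that the left-half occupation lies within 5% of N from N/2; the function name is hypothetical.

```python
import math

def equilibrium_fraction(n_particles, percent):
    """Fraction of the 2**N left/right configurations of N labelled particles
    whose left-half occupation k satisfies |k - N/2| <= percent% of N.
    Assumes N even; exact integer arithmetic, one float division at the end."""
    half = n_particles // 2
    width = n_particles * percent // 100
    lo, hi = half - width, half + width
    c = math.comb(n_particles, lo)       # C(N, lo)
    count = 0
    for k in range(lo, hi + 1):
        count += c
        c = c * (n_particles - k) // (k + 1)   # C(N, k+1) from C(N, k), exact
    return count / 2 ** n_particles

fractions = [equilibrium_fraction(n, 5) for n in (100, 1_000, 10_000)]
print(fractions)
```

The fraction climbs towards one as N grows. Note that this is a purely static count: it says nothing about how any given microstate evolves in time.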

Frigg (2011) criticized various attempts to establish the approach towards equilibrium within Boltzmann’s formulation of statistical mechanics by means of typicality arguments. He contended that they all fail to explain why an initial non-equilibrium microstate is very likely to evolve into an equilibrium microstate in such a way that the entropy of the system monotonically increases in the course of time. Such accounts are based on the notion of typicality induced by the dominance of the equilibrium macrostate. Although they differ on how they attempt to complete the combinatorial argument, the common underlying intuition is well exemplified by the following passage.

For a non-equilibrium phase point x of energy E, the Hamiltonian dynamics governing the motion x t would have to be ridiculously special to avoid reasonably quickly carrying x t into \(\Delta _{Z_{eq}}\) and keeping it there for an extremely long time - unless, of course, x itself were ridiculously special. [Goldstein (2001), p.43-44, where the notation has been suitably modified]

Goldstein’s quote lends itself to two possible readings. On the first reading, one argues that a non-equilibrium microstate is very likely to evolve into an equilibrium microstate simply because the region associated with \(Z_{eq}\) occupies the overwhelming majority of the available phase space. Yet, all one can infer from the dominance of the equilibrium macrostate is that equilibrium microstates are typical with respect to μ; there is no proof that an initial non-equilibrium microstate would evolve into a typical one at some later time t > 0. In fact, it is even possible that the trajectory of \(x_t\) in phase space never intersects the region \(\Delta_{Z_{eq}}\). Once again, the argument remains static, just like Boltzmann’s original combinatorial argument. The upshot is that measure theory alone is not sufficient to explain the approach towards equilibrium. On the second reading, one recognizes the need to add some further constraint to the dynamics, which should be general enough to prevent it from being “ridiculously special”. For instance, the Ehrenfests (1912) proposed to appeal to the ergodic hypothesis. However, such a condition is known to hold only in very rare circumstances. In particular, it fails in the case of ideal gases, and hence it does not apply within the domain of validity of the combinatorial argument. Other random properties in the ergodic hierarchy, such as mixing, have been proposed in the literature. Yet, if one wishes to invoke any of them, one is bound to face similar difficulties, in that such properties turn out to be false in general for realistic systems. Furthermore, one ought to justify the putative dynamical assumption on both physical and conceptual grounds.

In the last analysis, as Frigg argued, the existing typicality-based accounts which rely on the dominance of the equilibrium macrostate fail to explain the approach towards equilibrium because they lack any proven statement that the time-evolution of a system out of equilibrium behaves in the expected way (see Footnote 3). In addition, the relevant notion of typicality has the drawback that non-equilibrium microstates are regarded as a-typical, and hence the initial microstate, having a negligible probability, should itself be dismissed as “ridiculously special”. The account of the approach towards equilibrium based on the notion of typicality relevant to Lanford’s theorem clearly avoids these objections. For one, it provides a proof of the monotonic increase of entropy observed at the macroscopic level from the details of the microscopic dynamics. Moreover, it offers a consistent treatment of the initial non-equilibrium microstates: in fact, typical microstates are exactly those which evolve in accordance with the Boltzmann equation.

However, even within this framework, some problems remain unsolved. Indeed, one of the major limitations of Lanford’s account is that, just as in the other typicality-based accounts, the Lebesgue measure is granted a privileged role. And, while it is true that the result extends to any measure which is absolutely continuous with respect to the Lebesgue measure, one still ought to give an independent justification for adopting the latter as the appropriate a priori microscopic probability, as Lanford himself explicitly recognized (cf. Lanford (1976)).

On this issue, one should note that, according to some recent literature (cf. Goldstein (2012), Pitowsky (2012), Hemmo and Shenker (2012)), the notion of typicality ought to be kept distinct from that of probability: in fact, although a probability measure can well be used to define typical sets (exactly as was done in our previous discussion), one does not need to appeal to probability in order to introduce a measure of typicality. In particular, Pitowsky carefully distinguishes between the size of a given set, specifically the set of outcomes of an experiment, which is given by a measure function, and the probability function assigning values to the elements of such a set. Moreover, he argues that the Lebesgue measure arises as the natural a priori measure, for it extends to the continuous case the counting measure, which would represent the obvious choice in the discrete case in that the size of the relevant set is determined by counting the number of possible experimental outcomes. Yet, he also observes that such a measure is not rich enough to fix the probabilities of the outcomes of the experiment, and hence one still lacks a justification for the probabilistic statements of statistical mechanics. Hemmo and Shenker go even further and reject the claim that the privileged role of the Lebesgue measure can be justified a priori, since for them only experience can guide the choice of the appropriate measure in physics as well as the assignment of probabilities. Let us survey their argument, which they apply to Lanford’s theorem as a case study.

Drawing on Pitowsky’s distinction, Hemmo and Shenker identify two different characterizations of the status of the Lebesgue measure in Lanford’s account and of its relevance for the discussion of typicality. On the one hand, they concede that the theorem establishes that a large subset of the initial microstates share the property of evolving in such a way as to approach equilibrium. In fact, if one supposes that the result can be extended to arbitrarily long times, the overlap between the set of phase points evolving from the microstates in \(\Delta_{Z(x_0)}\) according to H.E.M. and the set \(\Delta_{Z_{eq}}\) of phase points realizing the equilibrium macrostate will eventually tend to have Lebesgue measure one. This is a statement about the size of sets and it is indeed uncontroversial, provided that one posits the appropriate initial conditions. On the other hand, Hemmo and Shenker deny that the theorem can support the probabilistic statements of statistical mechanics, in that they maintain that the agreement between its predictions at a given time and the actual experimental outcomes is merely contingent. As they put it, “assuming that we already know from experience that the Lebesgue measure of the overlap regions (...) matches with the relative frequencies of the macrostates, Lanford’s theorem provides possible mechanical conditions, which underwrite these observations” (p. 97). To reinforce their claim, they stress that, if one adopts a measure which is not absolutely continuous with respect to the Lebesgue measure, one may not obtain the sought-after result, since the initial microstates \(x_0\) evolving in agreement with B.E. may then fail to form a set of measure one, in which case the theorem would become empirically insignificant. So, while Lanford’s result is about the structure of trajectories through time, it does not really provide one with a dynamical underpinning of the Lebesgue measure.
We actually endorse such a conclusion. However, we also wish to point out that the fact that one does not have any empirical ground for the preference of the Lebesgue measure does not in itself undermine the possibility that the latter could be independently justified as the natural a priori measure along the lines of Pitowsky’s argument.

Be that as it may, in our view the crucial problem concerning the approach towards equilibrium in Lanford’s theorem is that it does not give the right account of the behavior of a gas for negative times. We take this up in the last section.

5 Is Lanford’s theorem true for the past?

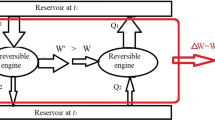

The irreversible behavior of the negative of the H-function for positive times is a consequence of the fact that, contrary to the Hamiltonian equations of motion, the Boltzmann equation is not invariant under time-reversal transformations, just as Loschmidt’s reversibility objection indicated. In order to obtain an irreversible result from a set of time-reversal invariant equations, one ought to add a time-asymmetric condition. The time-reversal non-invariant ingredient in Boltzmann’s original derivation of B.E. is the Stoßzahlansatz, in particular the provision that the factorization condition (9) holds only for pre-collision particles. In fact, if one instead assumes factorization for post-collision particles, one derives an equation having the same form as (2) but with a minus sign in front of the collision term, namely the Anti-Boltzmann equation (Anti-B.E.), which is the time-reversal transformation of B.E. If \(f_t\) is a solution of Anti-B.E., then entropy monotonically decreases through time, in that \( -\frac{dH[f_{t}]}{dt} \leq 0 \) for all t. Therefore, B.E. and Anti-B.E. can hold together just in case the system is at equilibrium. Although deriving a (unique) solution of B.E. ensures that the predictions of the theory agree with our macroscopic observations about the approach towards equilibrium, one ought to provide a justification for the choice of pre-collision over post-collision particles in the Stoßzahlansatz. However, neither Hamiltonian dynamics nor probability theory, both being neutral with respect to the direction of time, can ground such a preference. As Price (1996) pointed out, in the absence of an independent justification for the putative time-asymmetric ingredient one runs the risk of falling into some kind of double standard.
On the other hand, a statistical H-theorem would allow one to disregard those exceptional phase points for which a monotonic increase of the negative of the H-function is not guaranteed, such as the microstate constructed in the reversibility objection. Yet, one still ought to determine whether, and how, time asymmetry is introduced in Lanford’s theorem.
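The effect of the sign flip can be seen in closed form with a deliberately simplified stand-in, which is an assumption and not the hard-sphere collision operator of B.E.: the spatially homogeneous BGK model, in which the collision term is replaced by \((f_{MB} - f)/\theta\), where θ > 0 is a hypothetical relaxation time and \(f_{MB}\) is the Maxwellian with the same density, mean velocity and temperature as f. Both the model equation and its sign-flipped counterpart conserve these moments, so \(f_{MB}\) stays fixed. Evolving a given initial value \(f_0\) with the first equation towards the future and with the second towards the past gives

\[
\begin{aligned}
\text{B.E. stand-in},\ t \geq 0:&\quad \partial_t f_t = \tfrac{1}{\theta}\,(f_{MB} - f_t) &&\Longrightarrow\quad f_t = f_{MB} + e^{-t/\theta}\,(f_0 - f_{MB}),\\
\text{Anti-B.E. stand-in},\ t \leq 0:&\quad \partial_t f_t = -\tfrac{1}{\theta}\,(f_{MB} - f_t) &&\Longrightarrow\quad f_t = f_{MB} + e^{t/\theta}\,(f_0 - f_{MB}),
\end{aligned}
\]

that is, \(f_t = f_{MB} + e^{-|t|/\theta}\,(f_0 - f_{MB})\) for all t, so that \(f_{-t} = f_t\) and \(H[f_{-t}] = H[f_t]\). Since H is convex and, among distributions with the same moments, minimized at \(f_{MB}\), the quantity \(-H[f_t]\) grows monotonically as |t| increases: within this toy model the entropy profile is symmetric about t = 0, with entropy higher on both sides of the initial time, and the two equations agree only when \(f_0 = f_{MB}\).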

It is instructive to relate the statistical H-theorem following from Lanford’s result to an argument by Boltzmann (1895, 1897) based on the so-called H-curve. With any microstate x one can associate a curve depicting the evolution of H[f] in the course of time. Boltzmann claimed that, with the exception of certain microstates, the H-curve exhibits the following properties: (i) for most of the time, the value of the H-function is very close to its minimum \(H_{min}\), that is, the system is close to equilibrium; (ii) occasionally the H-curve rises to a peak well above the minimum value, that is, the system fluctuates out of equilibrium; (iii) higher peaks are far less probable than lower ones, that is, states of lower entropy are less probable than states of higher entropy. If at time t = 0 the curve takes on a value \(H[f_0]\) much greater than \(H_{min}\), so that the system is very far from equilibrium, the function may evolve in only two alternative ways. Either \(H[f_0]\) lies in the neighborhood of a peak, and hence \(H[f_t]\) decreases in both directions of time; or it lies on an ascending or descending slope of the curve, and hence \(H[f_t]\) correspondingly increases or decreases. Now, statement (iii) entails that the first case is much more probable than the second. One would thus conclude that there is a very high probability that the entropy of the system at t = 0, associated with the negative of the H-function, increases for positive times; likewise, there is a very high probability that the entropy increases as one goes towards negative times, i.e. into the past. This conclusion is sometimes regarded as yielding a statistical H-theorem. The Ehrenfests (1912) actually refined Boltzmann’s argument by outlining different versions of what the time-evolution of the H-function may look like, such as the “concentration curve” and the “bundles of H-curves”.
Nevertheless, no proof of these claims was provided (unless one assumes the controversial ergodic hypothesis), and hence one does not really have a theorem.
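Claims (i) to (iii) can be illustrated, though not proved, with the Ehrenfests’ urn model, a toy stochastic model that the text above does not discuss; using it here is our assumption. N balls are distributed between two urns, and at each step a ball chosen uniformly at random changes urn. The deviation |n − N/2| of the occupation n of one urn plays the role of \(H[f] - H_{min}\). The sketch counts, among all instants at which the deviation is large, how often the instant is a local peak (lower deviation one step earlier and one step later) rather than a point on a slope. The parameter values and the function name are hypothetical.

```python
import random

def ehrenfest_peaks(n_balls, steps, threshold, seed=1):
    """Simulate the Ehrenfest urn model and classify each instant at which the
    deviation |n - N/2| is at least `threshold`: 'peak' if the deviation is
    lower both one step before and one step after, 'slope' if lower on one side only."""
    rng = random.Random(seed)
    n = n_balls // 2
    path = [n]
    for _ in range(steps):
        # pick a ball uniformly at random and move it to the other urn
        n += -1 if rng.random() < n / n_balls else 1
        path.append(n)
    dev = [abs(2 * k - n_balls) for k in path]     # 2 |n - N/2|, an integer
    peaks = slopes = 0
    for t in range(1, len(path) - 1):
        if dev[t] >= 2 * threshold:
            lower_before = dev[t - 1] < dev[t]
            lower_after = dev[t + 1] < dev[t]
            if lower_before and lower_after:
                peaks += 1
            elif lower_before or lower_after:
                slopes += 1
    return peaks, slopes

peaks, slopes = ehrenfest_peaks(n_balls=20, steps=500_000, threshold=6)
print(peaks, slopes)
```

Because the stationary urn dynamics is statistically time-symmetric, large deviations sit at local peaks clearly more often than on slopes, and the margin grows with the threshold. A simulation of a toy model is, of course, no proof of Boltzmann’s claims for a real gas.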

Lanford’s result fills this gap. In fact, there exists a version of the theorem for negative times which provides a rigorous derivation of the Anti-Boltzmann equation (cf. Lanford 1975 and Lebowitz 1981): that is, under assumptions (1) and (2), if \(\mu_{0}^{(a)}\) is an approximating sequence for the continuous function \(f_0\), then \(\mu_{0}^{(a)} \circ T_{-t}\) is an approximating sequence for the solution \(f_t\) of Anti-B.E. with initial value \(f_0\) for all \(t \in [-\tau, 0]\). In other words, the conclusion expressed by formula (7), namely \( \lim_{a \rightarrow 0}\mu^{(a)}_{0} \circ T_{-t} (\{\Delta^{(a)}_{\mathcal{P}, \epsilon}[f_{t}] \}) = 1 \), now means that in the Boltzmann-Grad limit the vast majority of microstates \(x_0\) satisfying the initial conditions evolve in agreement with Anti-B.E., from which it follows that the negative of the H-function decreases monotonically over t < 0. Thus, by putting together the versions of Lanford’s theorem for positive and negative times, one finally has a proof of Boltzmann’s claim that, at any initial time t = 0, there is a very high probability that the entropy of the system increases in the future as well as a very high probability that it decreases over the past, that is, that the entropy was higher at earlier times than at t = 0.

Although his comment is not made in reference to Boltzmann’s H-curve, Uffink fully recognized this remarkable aspect of Lanford’s result.

The theorem equally holds for −τ < t < 0, with the proviso that f t is now a solution of the anti-Boltzmann equation. This means that the theorem is, in fact, invariant under time-reversal. [Uffink (2007), p.116]

Under such an interpretation, though, one has to face two cumbersome issues concerning the problem of establishing the approach towards equilibrium in statistical mechanics. First, the fact that the theorem is time-reversal invariant, in the sense that it predicts a symmetric behavior in the two directions of time for a fixed initial distribution, raises the question whether there is any time asymmetry at all. Second, the fact that entropy decreases for negative times entails that the theorem gives the wrong retrodictions.

Regarding the first issue, one would expect that, since the microscopic dynamics is time-reversal invariant, the time asymmetry comes from the initial conditions, as the quote by Sklar in the Introduction emphasizes. However, the regularity assumption (1) is manifestly time-symmetric. Likewise, assumption (2) does not contain any irreversible ingredient either: as we already noted, contrary to Boltzmann’s Stoßzahlansatz, it does not express any preference for pre-collision over post-collision phase points. Different derivations of the theorem by King (1975) and Spohn (1991) replace assumption (2) with a weaker condition (see Note 4) which is not invariant under time reversal. Yet one still obtains the same kind of time-symmetric behaviour with respect to t = 0 as in Lanford’s original theorem, so the issue remains unsettled. Incidentally, this seems to indicate that, although initial conditions are necessary for time asymmetry, they may not be sufficient. So one still lacks an explanation of why, for irreversible processes, if the system is out of equilibrium at the initial time, entropy should increase towards the future whereas it should decrease as one goes back into the past.
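The time-symmetric behaviour at issue does not hinge on the details of hard-sphere collisions, and a deliberately crude toy model, which is our own illustration and not Lanford’s system, already exhibits it. Non-interacting particles move in the unit interval with reflecting walls, so the microdynamics is time-reversal invariant; at t = 0 they are confined to the right quarter of the box, with velocities drawn from a distribution symmetric under v → −v (an assumption we add here). The coarse-grained entropy over eight equal cells is then computed at positive and negative times. The function names (`evolve`, `coarse_entropy`, `entropy_profile`) and all parameter values are hypothetical.

```python
import math
import random


def evolve(x, v, t):
    """Free flight in the unit box [0, 1] with elastic (reflecting) walls.

    Reflections are unfolded: move on a circle of length 2, then fold back.
    Negative t works too, since Python's % returns a value in [0, 2).
    """
    y = (x + v * t) % 2.0
    return 2.0 - y if y > 1.0 else y


def coarse_entropy(positions, cells=8):
    """Coarse-grained entropy -sum p_i ln p_i over `cells` equal spatial cells."""
    counts = [0] * cells
    for y in positions:
        counts[min(int(y * cells), cells - 1)] += 1
    n = len(positions)
    return -sum(c / n * math.log(c / n) for c in counts if c > 0)


def entropy_profile(times, n=2000, seed=0):
    """Entropy at each time for a gas confined to the right quarter at t = 0."""
    rng = random.Random(seed)
    xs = [rng.uniform(0.75, 1.0) for _ in range(n)]  # right corner at t = 0
    vs = [rng.uniform(-1.0, 1.0) for _ in range(n)]  # symmetric under v -> -v
    return {t: coarse_entropy([evolve(x, v, t) for x, v in zip(xs, vs)])
            for t in times}


if __name__ == "__main__":
    for t, s in sorted(entropy_profile([-0.5, -0.25, 0.0, 0.25, 0.5]).items()):
        print(f"t = {t:+.2f}   S = {s:.3f}")
```

Run as a script, the sketch should show S lowest at t = 0 and roughly equal, larger values at t = ±0.5: conditioning on a low-entropy macrostate at t = 0, and on nothing else, yields higher entropy at negative times as well as at positive times. Since the particles never collide, the model says nothing about the Boltzmann equation as such; it only isolates the role played by time-reversal invariance together with a symmetric initial distribution.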

This leads to the second issue, which can be illustrated with our example of a dilute gas expanding from the right corner of a box at t = 0. One would expect that before the initial time the gas was even more compressed in the corner, so that its entropy was lower. Nevertheless, the theorem predicts that the gas evolves away from equilibrium for t < 0. As Spohn (1997) put it,

This is the real puzzle because it contradicts everyday experience... So why does the method which works so well for the future fail so badly for the past? [Spohn (1997), p.157]

The answer to such a question, in our opinion, rests on the fact that the theorem is invariant under time reversal: without a time-asymmetric condition holding in both directions of time, for any increase of the minus H-function in the future there must indeed be a symmetrical decrease of the minus H-function in the past. But how can one solve the puzzle of the wrong retrodictions? The solution proposed by Spohn is to invoke an extra assumption asserting that the system starts off in the distant past from a state of very low entropy. This is known as the Past Hypothesis. Yet the status of such an assumption is not entirely perspicuous. There are two possible formulations: either one considers the entire universe as the relevant system, so that its early state of very low entropy corresponds to the Big Bang; or, in the spirit of laboratory physics, one refers just to the gas system under investigation and posits that its past state at some time t < 0 has much lower entropy than its state at t = 0.

Spohn understands the Past Hypothesis in the first sense, that is, as a cosmological assumption. Although it has other eminent advocates, such as Boltzmann himself, Feynman, Penrose and Lebowitz, the view that the low entropy of the early state of the universe grounds the thermodynamic arrow of time seems untenable. In particular, Earman (2006) argued that the Past Hypothesis is “not even false”, since the cosmological models of general relativity do not fit well with the idea that the Boltzmann entropy takes on a low value, nor do they support the claim that time evolution is accompanied by a global monotonic increase of entropy. Furthermore, even granting that the entropy of the entire universe is low, it does not follow that the entropy of some small subsystem is low too, much less that the latter ought to increase in the course of time. What one needs to establish in order to secure the predictions of Lanford’s theorem is that a gas contained in a box tends to evolve towards equilibrium, and considerations about the universe as a whole are of no help in describing the behavior of an isolated system.

So one could instead understand the Past Hypothesis in the second sense, that is, as referring to isolated systems in the laboratory context. In this case, as Spohn himself emphasized, the puzzle about the wrong retrodictions does not seem to arise. In fact, one can simply manufacture a low-entropy state of the gas at the initial time: the behavior of the system before t = 0 is therefore irrelevant, since it does not undergo any unconstrained evolution for t < 0. However, in Lanford’s theorem the choice of the initial time is arbitrary, so if one applies the theorem for negative times at any later instant t > 0, one still gets the wrong retrodictions. Even worse, on this basis one can construct an argument with rather dramatic consequences for the theorem. Let the system be prepared in some initial state at t = 0, and let it evolve during the time interval \([0, \frac {\tau }{2}]\). According to Lanford’s theorem, the overwhelming majority of initial microstates \(x_{0}\) in \(\Delta _{\mathcal {P}_{0}, \epsilon _{0}}^{(a)}[f_{0}]\) are such that their time-evolved microstates \(x_{\frac {\tau }{2}}\) represent a solution of B.E. taking on the value \(f_{\frac {\tau }{2}}\) at \(t = \frac {\tau }{2}\). These initial microstates \(x_{0}\) are typical with respect to the measure \(\mu _{0}^{(a)}\). Nevertheless, if one now runs the theorem backwards for the same amount of time, it appears that the behavior of such microstates is far from typical. In fact, in order to apply the theorem again at \(t = \frac {\tau }{2}\) one has to introduce a sequence of probability measures different from \(\mu _{0}^{(a)}\), namely the sequence \(\mu _{\frac {\tau }{2}}^{(a)}(\cdot ) := \mu (\cdot | \Delta _{\mathcal {P}_{0}, \epsilon _{0}}^{(a)}[f_{\frac {\tau }{2}}])\) concentrated on the set of phase points representing the continuous function \(f_{\frac {\tau }{2}}\) within the tolerance \((\mathcal {P}_{0}, \epsilon _{0})\). According to the version of Lanford’s theorem for negative times, the overwhelming majority of the microstates in \(\Delta _{\mathcal {P}_{0}, \epsilon _{0}}^{(a)}[f_{\frac {\tau }{2}}]\) must have evolved in agreement with Anti-B.E. from some microstates \(x_{0}^{\prime }\) at time t = 0. Of course, \(x_{0}^{\prime } \not \in \Delta _{\mathcal {P}_{0}, \epsilon _{0}}^{(a)}[f_{0}]\) and hence \(x_{0}^{\prime } \neq x_{0}\), unless the initial \(x_{0}\) was an equilibrium microstate to begin with (an option which would clearly make the result trivial). Since by the combinatorial argument the volume of \(\Delta _{\mathcal {P}_{0}, \epsilon _{0}}^{(a)}[f_{0}]\) is less than the volume of \(\Delta _{\mathcal {P}_{0}, \epsilon _{0}}^{(a)}[f_{\frac {\tau }{2}}]\), the fact that most microstates in the latter region must have evolved in agreement with Anti-B.E. means that, in the Boltzmann-Grad limit, \(\mu _{\frac {\tau }{2}}^{(a)}\) assigns probability zero to those microstates at time \(t = \frac {\tau }{2}\) which evolved from \(x_{0}\) in agreement with B.E. In the spirit of the theorem, such phase points of measure zero should therefore be neglected, despite being precisely the microstates for which one obtains the expected monotonic increase of entropy during the time interval \([0, \frac {\tau }{2}]\). If this analysis is correct, Lanford’s result would actually lose much of its strength.
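The step from volumes to probabilities in this argument can be spelled out under an assumption we add here: that \(\mu\) is the Liouville measure, invariant under the flow, so that \(\mu(T_{t}A) = \mu(A)\). Writing \(\Delta_{0}\) and \(\Delta_{\tau/2}\) for the two tolerance regions above, the microstates at \(t = \frac{\tau}{2}\) that came from \(\Delta_{0}\) form a subset of \(T_{\tau/2}\Delta_{0}\), hence \(\mu^{(a)}_{\tau/2}(T_{\tau/2}\Delta_{0}) \le \mu(\Delta_{0}) / \mu(\Delta_{\tau/2})\). The sketch below evaluates the logarithm of this ratio in a hypothetical cell model in the spirit of Boltzmann’s (1877) counting: particles are distributed over eight equal spatial cells, velocities are ignored, and the volume of a macrostate is taken proportional to its multiplicity \(W = N!/\prod_i n_i!\). The “corner” macrostate fills two cells, the “spread” macrostate all eight; the names and numbers are ours.

```python
import math


def log_multiplicity(occupation):
    """ln W for occupation numbers n_i, where W = N! / prod_i n_i!."""
    n_total = sum(occupation)
    # lgamma(n + 1) = ln(n!), exact up to floating-point rounding
    return math.lgamma(n_total + 1) - sum(math.lgamma(n + 1) for n in occupation)


def log_volume_ratio(n_particles, cells=8, occupied=2):
    """ln[vol(corner) / vol(spread)] in the toy cell model.

    corner: particles split evenly over `occupied` cells, the others empty;
    spread: particles split evenly over all `cells` cells.
    Cells have equal size, so the ratio of volumes is the ratio of multiplicities.
    Assumes n_particles is divisible by both `cells` and `occupied`.
    """
    corner = [n_particles // occupied] * occupied + [0] * (cells - occupied)
    spread = [n_particles // cells] * cells
    return log_multiplicity(corner) - log_multiplicity(spread)


if __name__ == "__main__":
    for n in (8, 80, 800, 8000):
        r = log_volume_ratio(n)
        print(f"N = {n:5d}   ln(ratio) = {r:10.2f}   per particle = {r / n:+.3f}")
```

Per particle, the logarithm approaches −ln 4 ≈ −1.386, the difference between the entropies ln 2 and ln 8 of the two occupation profiles, so the ratio, and with it the bound on \(\mu^{(a)}_{\tau/2}\), vanishes exponentially as N grows. Exact log-factorials via `math.lgamma` are used instead of Stirling’s formula so that small N are handled correctly.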

6 Conclusion

We discussed whether Lanford’s theorem can be used to recover the approach towards equilibrium in Boltzmann’s formulation of statistical mechanics. After addressing its limitations, we showed that it offers a prospect of completing the combinatorial argument. However, we then argued that, being time-reversal invariant, the theorem fails to yield the right retrodictions about the past evolution of a gas.

Notes

It should be emphasized, though, that this amount of time is not too short for collisions to take place. As Cercignani (1998) and Uffink (2007) pointed out, during one-fifth of the mean free time about 20% of the particles in the gas undergo a collision. Actually, under certain circumstances which depend on suitable choices of the initial distribution \(f_{0}\), the time bound may be extended up to \(\frac {1}{2}\bar {t}\) (cf. Lanford 1981), and in this case about half of the particles would undergo a collision.

To make the notion of good approximations more precise, one could perhaps follow the general recipe outlined by Butterfield (2011) for dissolving the mystery of singular limits such as the B-G limit. One should keep in mind, though, that such a recipe may not apply to all cases of limits employed in statistical physics (see Batterman (2013) for a detailed analysis of this issue).

For completeness, let us mention that Frigg also discussed a third account that cannot be traced back to the spirit of Goldstein’s quote, in that it focuses on the structure of the region \(\Delta_{Z}\) of phase space associated with a macrostate Z at a given time, but he dismissed it for analogous reasons.

Specifically, such a weaker assumption requires that the domain of convergence of each initial correlation function \(f_{k}^{(a)}\) be a subset of the phase space \(\Gamma_{k}\) smaller than \(\Gamma_{k, \neq}(0)\) (see the Appendix for a definition of these terms).

References

Batterman, R.W. (2013). The tyranny of scales. In The Oxford Handbook of Philosophy of Physics (forthcoming). London: Oxford University Press.

Boltzmann, L. (1872). Weitere Studien über das Wärmegleichgewicht unter Gasmolekülen. Wiener Berichte, 66, 275–370; in Boltzmann (1909), vol. I, pp. 316–402.

Boltzmann, L. (1877). Über die Beziehung zwischen dem zweiten Hauptsatze der mechanischen Wärmetheorie und der Wahrscheinlichkeitsrechnung respektive den Sätzen über das Wärmegleichgewicht. Wiener Berichte, 76, 373–435.

Butterfield, J. (2011). Less is Different: Emergence and Reduction Reconciled. Foundations of Physics, 41, 1065–1135.

Cercignani, C. (1972). On the Boltzmann equation for rigid spheres. Transport Theory and Statistical Physics, 2, 211.

Earman, J. (2006). The “past hypothesis”: not even false. Studies in History and Philosophy of Modern Physics, 37, 399–430.

Frigg, R. (2008). A Field Guide to Recent Work on the Foundations of Statistical Mechanics. In Rickles, D. (Ed.), The Ashgate Companion to Contemporary Philosophy of Physics (pp. 99–196). London: Ashgate.

Frigg, R. (2011). Why Typicality Does Not Explain the Approach to Equilibrium. In Suarez, M. (Ed.), Probabilities, Causes and Propensities in Physics, (pp. 77–93). Dordrecht: Springer. Synthese Library.

Goldstein, S. (2001). Boltzmann’s approach to statistical mechanics. In Bricmont, J. et al. (Eds.), Chance in Physics: Foundations and Perspectives, Lecture Notes in Physics 574 (pp. 39–54). Berlin: Springer.

Goldstein, S. (2012). Typicality and Notions of Probability in Physics. In Probability in Physics, The Frontiers Collection (pp. 59–71).

Grad, H. (1949). On the kinetic theory of rarefied gases. Communications on Pure and Applied Mathematics, 2, 331–407.

Hemmo, M., & Shenker, O. (2012). Measure over Initial Conditions. In Probability in Physics, The Frontiers Collection (pp. 87–98).

Illner, R., & Shinbrot, M. (1984). The Boltzmann equation: Global existence for a rare gas in an infinite vacuum. Communications in Mathematical Physics, 95, 217–226.

Illner, R., & Pulvirenti, M. (1986). Global validity of the Boltzmann equation for a two-dimensional rare gas in a vacuum. Communications in Mathematical Physics, 105, 189–203.

Illner, R., & Pulvirenti, M. (1989). Global validity of the Boltzmann equation for two- and three-dimensional rare gas in vacuum: Erratum and improved result. Communications in Mathematical Physics, 121, 143–146.

King, F.G. (1975). Ph.D. Dissertation, Department of Mathematics, University of California at Berkeley.

Lanford, O.E. (1975). Time evolution of large classical systems. In Moser, J. (Ed.), Dynamical Systems, Theory and Applications, Lecture Notes in Physics Vol. 38 (pp. 1–111). Berlin: Springer.

Lanford, O.E. (1976). On the derivation of the Boltzmann equation. Astérisque, 40, 117–137.

Lanford, O.E. (1981). The hard sphere gas in the Boltzmann-Grad limit. Physica, 106A, 70–76.

Lebowitz, J.L. (1981). Microscopic Dynamics and Macroscopic Laws. In Horton, C.W. Jr., Reichl, L.E., & Szebehely, V.G. (Eds.), Long-Time Prediction in Dynamics, presentations from the workshop held in March 1981 in Lakeway, TX (pp. 220–233). New York: John Wiley.

Loschmidt, J. (1876). Über den Zustand des Wärmegleichgewichtes eines Systems von Körpern mit Rücksicht auf die Schwerkraft. Wiener Berichte, 73, 128, 366.

Norton, J. (2012). Approximation and Idealization: Why the Difference Matters. Philosophy of Science, 79, 207–232.

Pitowsky, I. (2012). Typicality and the Role of the Lebesgue Measure in Statistical Mechanics. In Probability in Physics, The Frontiers Collection (pp. 41–58).

Price, H. (1996). Time’s Arrow and Archimedes’ Point. New York: Oxford University Press.

Spohn, H. (1991). Large Scale Dynamics of Interacting Particles. Heidelberg: Springer.

Spohn, H. (1997). Loschmidt’s reversibility argument and the H-theorem. In Fleischhacker, W., & Schönfeld, T. (Eds.), Pioneering Ideas for the Physical and Chemical Sciences: Loschmidt’s Contributions and Modern Developments in Structural Organic Chemistry, Atomistics and Statistical Mechanics (pp. 153–158). New York: Plenum Press.

Uffink, J. (2007). Compendium of the foundations of classical statistical physics. In Butterfield, J., & Earman, J. (Eds.), Handbook for the Philosophy of Physics. Amsterdam: Elsevier.

Zermelo, E. (1896). Über einen Satz der Dynamik und die mechanische Wärmetheorie. Annalen der Physik, 57, 485–494.

Acknowledgments