Abstract

With the advent of structured data in the form of social networks, genetic circuits and protein interaction networks, statistical analysis of networks has gained popularity over recent years. The stochastic block model constitutes a classical cluster-exhibiting random graph model for networks. There is a substantial amount of literature devoted to proposing strategies for estimating and inferring parameters of the model, both from classical and Bayesian viewpoints. Unlike the classical counterpart, there is a dearth of theoretical results on the accuracy of estimation in the Bayesian setting. In this article, we undertake a theoretical investigation of the posterior distribution of the parameters in a stochastic block model. In particular, we show that one obtains near-optimal rates of posterior contraction with routinely used multinomial-Dirichlet priors on cluster indicators and uniform or general Beta priors on the probabilities of the random edge indicators. Our theoretical results are corroborated through a small scale simulation study.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Data available in the form of networks are increasingly becoming common in applications ranging from brain connectivity, protein interactions, web applications and social networks to name a few, motivating an explosion of activity in the statistical analysis of networks in recent years (Goldenberg et al. 2010). Estimating large networks offers unique challenges in terms of structured dimension reduction and estimation in stylized domains, necessitating new tools for inference. A rich variety of probabilistic models have been studied for network estimation, ranging from the classical Erdos and Renyi graphs (Erdős and Rényi, 1961), exponential random graph models (Holland and Leinhardt, 1981), stochastic block models (Holland et al. 1983), Markov Graphs (Frank and Strauss, 1986) and latent space models (Hoff et al. 2002) to name a few.

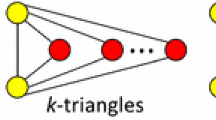

In a network with n nodes, there are O(n2) possible connections between pairs of nodes, the exact number depending on whether the network is directed/undirected and whether self-loops are permitted. A common goal of the parametric models mentioned previously is to parsimoniously represent the O(n2) probabilities of connections between pairs of nodes in terms of fewer parameters. The stochastic block model achieves this by clustering the nodes into k ≪ n groups, with the probability of an edge between two nodes solely dependent on their cluster memberships. The block model originated in the mathematical sociology literature (Holland et al. 1983), with subsequent widespread applications in statistics (Wang and Wong, 1987; Snijders and Nowicki, 1997; Nowicki and Snijders, 2001). In particular, the clustering property of block models offers a natural way to find communities within networks, inspiring a large literature on community detection (Bickel and Chen, 2009; Newman, 2012; Zhao et al. 2012; Karrer and Newman, 2011; Zhao et al. 2011; Amini et al. 2013). Various modifications of the stochastic block model have also been proposed, including the mixed membership stochastic block model (Airoldi et al. 2009) and degree-corrected stochastic block model (Dasgupta et al. 2004; Karrer and Newman, 2011).

Statistical accuracy of parameter estimates for inference in stochastic block models is of growing interest, with one of the objects of interest being the n × n matrix of probabilities of edges between pairs of nodes, which we shall denote by 𝜃 = (𝜃ij). Using a singular-value thresholding approach, Chatterjee (2014) obtained a \(\sqrt {k/n}\) rate for estimating 𝜃 with respect to the squared ℓ2 distance in a k-component stochastic block model. In a recent technical report, Gao et al. (2015) obtained an improved \(k^{2}/n^{2} + \log k/n\) rate by considering a least-squares type estimator. They also showed that the resulting rate is minimax-optimal; interestingly the minimax rate comprises of two parts which (Gao et al. 2015) refer to as the nonparametric and clustering rates respectively. Among other related work, Bickel et al. (2013) provided conditions for asymptotic normality of maximum likelihood estimators in stochastic block models.

In this article, we consider a Bayesian formulation of a stochastic block model where 𝜃 is equipped with a hierarchical prior and study the contraction of the posterior distribution assuming the data to be generated from a stochastic block model. We show that one obtains the minimax rate of posterior contraction with essentially automatic prior choices, such as multinomial-Dirichlet priors on cluster indicators and uniformFootnote 1 on the probability of the random edge indicators. Such priors are commonly used and there is a sizable literature (Snijders and Nowicki, 1997; Nowicki and Snijders, 2001; Golightly and Wilkinson, 2005; McDaid et al. 2013) on posterior sampling and inference in the stochastic block model. The theoretical development of our present work assumes the knowledge of the number of clusters a priori. In a different perspective, when such prior knowledge is unavailable, Geng et al. (2018) proposed an efficient Markov Chain Monte Carlo (MCMC) algorithm to simultaneously estimate the number of unknown clusters and clustering structure. While preparing this manuscript, we also came to know about some recent studies relating to various theoretical properties of such stochastic block models. For instance, Gao et al. (2018) considers a general unified framework of structured linear models that covers many complex statistical problems such as stochastic block models, bi-clustering, sparse linear regression, regression under group sparsity, multi-task learning and dictionary learning. The authors of this paper study the posterior contraction rate of their newly proposed elliptical Laplace distribution under this general set up. Refer also to Channarond et al. (2012), Suwan et al. (2016), van der Pas and van der Vaart (2018), and Hayashi et al. (2016), among others for recent works on the theoretical investigation of various aspects of the SBM.

Theoretical investigation of the posterior distribution in block models offers some unique challenges relative to the small but growing literature on posterior contraction in high-dimensional sparse problems (Castillo and van der Vaart 2012, 2015; Pati et al. 2014; Banerjee and Ghosal, 2014). When a large subset of the parameters are exactly or approximately zero, the sparsity assumption can be exploited to reduce the complexity of the model space to derive tests for the true parameter versus the complement of a neighborhood of the true parameter (Castillo and van der Vaart, 2012; Pati et al. 2014). It is now well appreciated that constructing such tests plays a crucial role in posterior asymptotics (Schwartz, 1965; Barron, 1988, 1999; Ghosal et al., 2000). In the present setting, we exploit the parsimonious structure of the parameter space as a result of clustering of n nodes into k << n communities to derive such tests. This also enables us to reduce the “effective” number of parameters (the edge probabilities) to be estimated from O(n2) to O(k2 + n). This dimension reduction is enabled by exploiting the structure of the model unlike the traditional notion of sparsity in typical sparse high-dimensional studies where a subset of the parameters are zero or negligible in magnitude.

The remainder of the paper is organized as follows. Some notations are introduced in Section 2. We provide an overview of the stochastic block models in Section 3. Our main theoretical results on posterior contraction are stated in Section 4. While the proof of Theorem 4.1 is given at the end of Section 4.2, proof of other main theoretical results are deferred to the Appendix. A small-scale simulation study is presented in Section 5 and some additional simulation results are also given in the Appendix. We conclude the paper with some discussions in Section 6.

2 Preliminaries

For \(\mathcal S \subset \mathbb {R}\), we shall denote the set of all d × d matrices with entries in \(\mathcal S\) by \(\mathcal S^{d \times d}\). For any \(B = (B_{ll^{\prime }}) \in \mathbb {R}^{d \times d}\), we denote the Euclidean (equivalently Frobenius) norm of B by \(\|B\| = \sqrt {{\sum }_{l=1}^{d} {\sum }_{l^{\prime }=1}^{d} B_{ll^{\prime }}^{2}}\). Given \(X^{*} \in \mathbb {R}^{d \times d}, W \in \mathbb {R}_{+}^{d \times d}\), let \(\xi _{d^{2}}(X^{*}; W)\) denote the unit ellipsoid with center X∗ and weight W given by

Viewed as a subset of \(\mathbb {R}^{d^{2}}\), the Euclidean volume of \(\xi _{d^{2}}(X^{*}; W)\), denoted by \(| \xi _{d^{2}}(X^{*}; W) |\), is

Given sequences {an},{bn}, \(a_{n} \lesssim b_{n}\) indicates there exists a constant K > 0 such that an ≤ Kbn for all large n. We say an ≍ bn when \(a_{n} \lesssim b_{n}\) and \(b_{n} \lesssim a_{n}\). Given any function f and some subset A in its domain, we denote by f(A) the image of A under f. Throughout, \(C, C^{\prime }\) denote positive constants whose values might change from one line to the next.

3 Stochastic Block Models

Let \(A = (A_{ij}) \in \{0, 1\}^{n \times n}\) denote the adjacency matrix of a network with n nodes, with Aij = 1 indicating the presence of an edge from node i to node j and Aij = 0 indicating a lack thereof. To keep the subsequent notation clean, we shall consider directed networks with self-loops so that Aij and Aji need not be the same and Aii can be both 0 and 1. Our theoretical results can be modified to undirected networks with or without self-loops in a straightforward fashion; refer to Section 4.1 for further discussion.

Let 𝜃ij denote the probability of an edge from node i to j, with \(A_{ij} \sim \text {{Bernoulli}}(\theta _{ij})\) independently for 1 ≤ i, j ≤ n. A stochastic block model postulates that the nodes are clustered into communities, with the probability of an edge between two nodes solely dependent on their community memberships. Specifically, let zi ∈{1,…, k} denote the cluster membership of the i th node and Q = (Qrs) ∈ [0,1]k×k be a matrix of probabilities, with Qrs indicating the probability of an edge from any node i in cluster r to any node j in cluster s. With these notations, a k-component stochastic block model is given by

We use \(\mathbb {E}_{\theta } \slash \mathbb {P}_{\theta }\) to denote an expectation/probability under the sampling mechanism (3.1).

The stochastic block model clearly imposes a parsimonious structure on the node probabilities 𝜃 = (𝜃ij) when k ≪ n, reducing the effective number of parameters from O(n2) to O(k2 + n). To describe the parameter space for 𝜃, we need to introduce some notations. For k ≤ n, let \(\mathcal Z_{n, k} = \left \{z = (z_{1}, \ldots , z_{n}) : z_{i} \in \{1, \ldots , k\}, 1 \le i \le n \right \}\) denote all possible clusterings of n nodes into k clusters.

For any 1 ≤ r ≤ k, z− 1(r) is used as a shorthand for {1 ≤ i ≤ n : zi = r}; the nodes belonging to cluster r. When z is clear from the context, we shall use nr = |z− 1(r)| to denote the number of nodes in cluster r; clearly \({\sum }_{r=1}^{k} n_{r} = n\). For the theoretical development in this paper, it is assumed that nr ≥ 1 for all \(r=1,\dots ,k\), that is, each cluster is assumed to be non-empty containing at least one observation.

With these notations, the parameter space Θk for 𝜃 is given by

For any \(z \in \mathcal Z_{n, k}\) and Q ∈ [0,1]k×k, we denote the corresponding 𝜃 ∈Θk by 𝜃z, Q, so that \(\theta ^{z, Q}_{ij} = Q_{z_{i} z_{j}}\). In fact, (z, Q)↦𝜃z, Q is a surjective map from \(\mathcal Z_{n, k} \times [0, 1]^{k \times k} \to {\varTheta }_{k}\), though it is clearly not injective.

Given \(z \in \mathcal Z_{n, k}\), let A[rs] denote the nr × ns sub matrix of A consisting of entries Aij with zi = r and zj = s. The joint likelihood of A under model (3.1) can be expressed as

A Bayesian specification of the stochastic block model can be completed by assigning independent priors to z and Q, which in turn induces a prior on Θk via the mapping (z, Q)↦𝜃z, Q. We generically use p(z, Q) = p(z)p(Q) to denote the joint prior on z and Q. The induced prior on Θk will be denoted by π(𝜃) and the corresponding posterior given data A = (Aij) will be denoted by πn(𝜃∣A). The following fact is useful and heavily used in the sequel: for any U ⊂Θk,

where the second equality uses the independence of z and Q. Specific choices of p(z) and p(Q) are discussed below.

We assume independent U(0,1) prior on the Qrs’s. We consider a hierarchical prior on z where each node has probability πr of being allocated to the r th cluster independently of the other nodes, and the vector of probabilities π = (π1,…, πk) follows a Dirichlet(α1,…, αk) prior. Here α1,…, αk are fixed hyper-parameters that do not depend on k or n; a default choice is αr = 1/2 for all r = 1,…, k. We further assume the number of clusters k to be known. Model (3.1) along with the prior specified above can be expressed hierarchically as follows:

A hierarchical specification as in (or very similar to) (3.5)–(3.8) has been commonly used in the literature; see for example, Snijders and Nowicki (1997), Nowicki and Snijders (2001), Golightly and Wilkinson (2005), and McDaid et al. (2013). Analytic marginalizations can be carried out due to the conjugate nature of the prior, facilitating posterior sampling (McDaid et al. 2013). In particular, using standard multinomial-Dirichlet conjugacy, the marginal prior of z can be written as

where we recall that  . The following lemma provides an upper bound to the prior ratio {p(z)/p(z0)} which is used subsequently in the proof of our main theorem.

. The following lemma provides an upper bound to the prior ratio {p(z)/p(z0)} which is used subsequently in the proof of our main theorem.

Lemma 3.1.

Assume \(z_{0} \in \mathcal Z_{n,k}\)with  for all r = 1,…k. Then, \(\max \limits _{z \in \mathcal Z_{n, k}} p(z)/p(z_{0}) \leq e^{C n \log k}\), where C is a positive constant.

for all r = 1,…k. Then, \(\max \limits _{z \in \mathcal Z_{n, k}} p(z)/p(z_{0}) \leq e^{C n \log k}\), where C is a positive constant.

Proof

Fix \(z \in \mathcal Z_{n,k}\). From Eq. 3.9, \(p(z)/p(z_{0}) = {\prod }_{r=1}^{k} {\varGamma }(n_{r} + \alpha _{r})/{\varGamma }\\(n_{0r} + \alpha _{r})\). Then

The first term is maximized over \(z \in \mathcal Z_{n,k}\) when nr = n for some r and ns = 0 for all s≠r. Further, replacing n0r by n/k for all r = 1,…, k only decreases the second term in the above display. Hence, letting \(\alpha _{(k)} = \max \limits \{\alpha _{1}, \ldots , \alpha _{k}\}\) and \(\alpha _{(1)} = \min \limits \{\alpha _{1}, \ldots , \alpha _{k}\}\),

Using the standard two sided bound (Abramowitz and Stegun, 1964), we obtain \(\log {\varGamma }(z) = \log (2 \pi )/2 + (z - 1/2) \log (z) - z + R(z)\) with 0 < R(z) < (12z)− 1 for z > 0, the dominating term in the right hand side of the above display being \(n \log \{ (n + \alpha _{(k)}) / (n/k + \alpha _{(1)}) \} \lesssim C n\log k\), concluding the proof. □

4 Posterior Contraction Rates in Stochastic Block Models

We are interested in contraction properties of the posterior πn(⋅∣A) assuming the true data-generating parameter \(\theta ^{0} \in {\varTheta }_{k}\). To measure the discrepancy in the estimation of \(\theta ^{0} \in {\varTheta }_{k}\), the mean squared error has been used in the frequentist literature,

where \(\hat {\theta }\) is an estimator of 𝜃0. Chatterjee (2014) proposed estimating 𝜃0 using a low rank decomposition of the adjacency matrix A followed by a singular value decomposition to obtain a convergence rate of \(\sqrt {k/n}\). More recently, Gao et al. (2015) considered a least squares type approach which can be related to maximum likelihood estimation where the Bernoulli likelihood is replaced by a Gaussian likelihood. They obtained a rate of \(k^{2}/n^{2} + \log k/n\), which they additionally showed to be the minimax rate over Θk, i.e.,

Interestingly, the minimax rate has two components, k2/n2 and \(\log k/n\). Gao et al. (2015) refer to the k2/n2 term in the minimax rate as the nonparametric rate, since it arises from the need to estimate k2 unknown elements in Q from n2 observations. The second part, \(\log k/n\), is termed as the clustering rate, which appears since the clustering configuration z is unknown and needs to be estimated from the data. Observe that the clustering rate grows logarithmically in k. Parameterizing k = nζ with ζ ∈ [0,1], the interplay between the two components becomes clearer (refer to equation 2.6 of Gao et al. 2015); in particular, the clustering rate dominates when k is small and the nonparametric rate dominates when k is large.

To evaluate Bayesian procedures from a frequentist standpoint, one seeks for the minimum possible sequence 𝜖n → 0 such that the posterior probability assigned to the complement of an 𝜖n-neighborhood (blown up by a constant factor) of 𝜃0 receives vanishingly small probabilities. The smallest such 𝜖n is called the posterior contraction rate (Ghosal et al. 2000). There is now a growing body of literature showing that Bayesian procedures achieve the frequentist minimax rate of posterior contraction (up to a logarithmic term) in models where the parameter dimension grows with the sample size; see Bontemps (2011), Castillo and van der Vaart (2012), Pati et al. (2014), Banerjee and Ghosal (2014), van der Pas et al. (2014), and Castillo et al. (2015) for some flavor of the recent literature.

We now state the main result of this article where we derive the contraction rate of the posterior arising from the hierarchical formulation (3.5)–(3.8).

Theorem 4.1.

Assume A = (Aij) is generated from a k-component stochastic block model (3.1) with the true data-generating parameter \(\theta ^{0} = (\theta ^{0}_{ij}) \in {\varTheta }_{k}\), where Θk is as in (3.2). Further assume that there exists a small constant δ ∈ (0,1/2) such that \( \theta ^{0}_{ij} \in (\delta , 1 - \delta )\)for all i, j = 1,…, n. Suppose the hierarchical Bayesian model (3.5)–(3.8) is fitted. Then, with \({\epsilon _{n}^{2}} = k^{2}\{ \log n + \log (\delta ^{-1})\}/ n^{2} + \log k/n\), and a sufficiently large constant M > 0,

for some C > 0 and for all n ≥ 1.

Remark 4.2.

Since \(\theta ^{0} \in {\varTheta }_{k}\), following the discussion after (3.2), there exists \(z^{0} \in \mathcal Z_{n, k}\)and Q0 ∈ [0,1]k×k such that \(\theta ^{0} = \theta ^{z^{0}, Q^{0}}\). The condition of the theorem posits that all entries of Q0 lie in (δ, 1 − δ). As long as δ ≥ n−a for any a > 0, the posterior contraction rate is the same (up to a constant) as in the case of fixed δ. The assumption \(\theta ^{0} \in {\varTheta }_{k}\)also implicitly implies that all the clusters have at least one observation, i.e.,  for all r = 1,…, k; otherwise there exists l < k such that \(\theta ^{0} \in {\varTheta }_{l}\).

for all r = 1,…, k; otherwise there exists l < k such that \(\theta ^{0} \in {\varTheta }_{l}\).

A proof of Theorem 4.1 can be found towards the end of Section 4.2 after some important auxiliary results which are instrumental in deriving the main theoretical results of this paper. Theorem 4.1 shows that as long as δ ≥ n−a for any a > 0, the posterior contracts at a (near) minimax rate of \(k^{2}\log n /n^{2} + \log k/n\). The nonparametric component of the rate is slightly hurt by a logarithmic term; appearance of such an additional logarithmic term is common in Bayesian nonparametrics.

It would be noteworthy that in Theorem 4.1 a uniform U(0,1) prior is assigned to the edge probabilities Qrs’s, while in a similar independent work, van der Pas and van der Vaart (2018) considered a more general Beta(β1, β2) distribution for Q’s that includes the uniform prior as a special case. While our main goal of inference is the recovery of the edge probabilities, van der Pas and van der Vaart (2018) focused on detection of the community memberships. A pertinent question that would be natural to ask in this context is whether our posterior contraction results can be extended further for a more general Beta prior. The following result, namely, Corollary 4.3 provides an affirmative answer to the aforesaid question. In particular, it says that, for recovery of the edge probabilities Qrs’, the posterior obtained from a more general Beta(β1, β2) prior contracts at the same rate as obtained for the uniform prior as in Theorem 4.1. As a matter of fact, it turns out that our general scheme of arguments for deriving the contraction rates works equally well even for this Beta prior.

Corollary 4.3.

Consider the set up of Theorem 4.1, where \(Q_{rs}\stackrel {ind}{\sim }\text {Beta}\\(\beta _{1},\beta _{2})\), for \(r,\ s=1,\dots ,k\), in Eq. 3.5instead of an U(0,1) prior. Then, with \({\epsilon _{n}^{2}} = k^{2}\{ \log n + \log (\delta ^{-1})\}/ n^{2} + \log k/n\), and a sufficiently large constant M > 0 (depending on (β1, β2))

for some C > 0 and for all n ≥ 1.

Proof of Corollary 4.3 above follows along exactly the same line of arguments as that of Theorem 4.1 and is given in the Appendix. An inspection of the proof of Theorem 4.1 reveals that the only technical difference between the proofs of the aforesaid results lies in a careful exploitation of a volume argument used in the proof of Theorem 4.1 under the more general Beta(β1, β2) prior for every possible choice of (β1, β2), while rest of the arguments remain unaltered.

4.1 Undirected Networks

Theorem 4.1 can be extended to the case of undirected networks with or without self-loops. For technical simplification, we consider the case when there are no self-loops. We highlight the key differences in the data generation and the prior specification below. Let zi ∈{1,…, k} denote the cluster membership of the i th node and Q = (Qrs) ∈ [0,1]k×k be a symmetric matrix of probabilities, with Qrs = Qsr indicating the probability of an edge between node i in cluster r and any node j in cluster s. Then an undirected version of Eq. 3.1 can be obtained by letting

and Aii = 𝜃ii = 0 for i = 1,…, n. The prior distributions are appropriately modified as:

We modify the discrepancy measure as

where 𝜃, 𝜃0 are in the parameter space

Then the following version of Theorem 4.1 is true for undirected networks:

Theorem 4.4.

Assume A = (Aij) is generated as in Eq. 4.4with \(\theta ^{0} = (\theta ^{0}_{ij}) \in {{\varTheta }_{k}^{u}}\), where \({{\varTheta }_{k}^{u}}\)is as in Eq. 4.9. Further assume that there exists a small constant δ ∈ (0,1/2) such that \( \theta ^{0}_{ij} \in (\delta , 1 - \delta )\)for all 1 ≤ i ≤ j ≤ n. Suppose the hierarchical Bayesian model (4.5)–(4.7) is fitted. Then, with \({\epsilon _{n}^{2}} = k^{2}\{ \log n + \log (\delta ^{-1})\}/ n^{2} + \log k/n\), and a sufficiently large constant M > 0, the conclusion (4.3) is true.

A sketch of the proof of Theorem 4.4 is given in the Appendix.

4.2 Geometry of Θk

In this section, we derive a number of auxiliary results aimed at understanding the geometry of the parameter space Θk. These results are useful in proving our main concentration result presented in Theorem 4.1.

We first state a testing lemma which harnesses the ability of the likelihood to separate points in the parameter space.

Lemma 4.5.

Assume \(\theta ^{0} \ne \theta ^{1} \in {\varTheta }_{k}\)and let \(E = \{\theta \in [0, 1]^{n \times n} : \left \Vert \theta - \theta ^{1}\right \Vert \le \left \Vert \theta ^{1} - \theta ^{0}\right \Vert /2\}\)be an Euclidean ball of radius ∥𝜃1 − 𝜃0∥/2 around 𝜃1 inside [0,1]n×n. Based on \(A_{ij} \stackrel {\text {ind}}\sim \text {{Bernoulli}}(\theta _{ij})\)for i, j = 1,…, n, consider testing

H0 : 𝜃 = 𝜃0 versus H1 : 𝜃 ∈ E. There exists a test function Φ such that

for constants C1, C2 > 0 independent of n, 𝜃1 and x 𝜃0.

Proof

Define the test function Φ as

where  denotes the indicator of a set. We show below that this test has the desired error rates (4.10).

denotes the indicator of a set. We show below that this test has the desired error rates (4.10).

We first bound the type-I error \(\mathbb {E}_{\theta ^{0}} ({\varPhi })\). Noting that under \(\mathbb {P}_{\theta ^{0}}\), \((A_{ij} - \theta ^{0}_{ij})\) are independent zero mean random variables with \(|A_{ij} - \theta ^{0}_{ij}| < 1\), we use a version of Hoeffding’s inequality (refer to Proposition 5.10 of Vershynin 2012) to conclude that,

for a constant C1 > 0 independent of n, 𝜃1 and 𝜃0.

We next bound the type-II error \(\sup _{\theta \in E} \mathbb {E}_{\theta }(1 - {\varPhi })\). Fix 𝜃 ∈ E. We have,

where we abbreviate \(\left \langle \theta ^{\prime }, \theta ^{\prime \prime }\right \rangle = {\sum }_{i=1}^{n} {\sum }_{j=1}^{n} \theta ^{\prime }_{ij} \theta ^{\prime \prime }_{ij}\). Bound

where the penultimate step used the Cauchy–Schwarz inequality along with the fact that \(\left \Vert \theta - \theta ^{1}\right \Vert \le \left \Vert \theta ^{1} - \theta ^{0}\right \Vert /2\). Substituting in Eq. 4.11 and noting that under \(\mathbb {P}_{\theta }\), (Aij − 𝜃ij) are independent zero mean bounded random variables, another application of Hoeffding’s inequality yields

for some constant C2 > 0 independent of n and 𝜃. Taking a supremum over 𝜃 ∈ E yields the desired result. □

Our next result is concerned with the structure of a specific type of Euclidean balls inside Θk. Recall that 𝜃z, Q denotes the element of Θk with \(\theta ^{z, Q}_{ij} = Q_{z_{i} z_{j}}\). For \(z \in \mathcal Z_{n, k}\), let

denote a slice of Θk along z. In other words, given z, Θk(z) is the image of the map Q↦𝜃z, Q in Θk. Suppose \(\theta ^{*} = \theta ^{z^{*}, Q^{*}} \in {\varTheta }_{k}\), and consider a ball B(z) in Θk(z) centered at 𝜃∗ of the form \(B(z) = \left \{\theta \in {\varTheta }_{k}(z) : \|\theta - \theta ^{*}\| < t \right \}\) for some t > 0. If z∗ = z, then it is straightforward to observe that

wherewe recall that  for r = 1,…, k. Therefore, although a subset of [0,1]n×n, B(z) can be identified with a k2-dimensional ellipsoid in [0,1]k×k. When z∗≠z, one no longer has a nice identity as above and the geometry of B(z) is more difficult to describe. However, we show below in Lemma 4.6 that B(z) is always contained inside a set \(\widetilde {B}(z)\) in Θk(z) which can be identified with a k2-dimensional ellipsoid in [0,1]k×k. Recall our convention for describing ellipsoids from Eq. 2.1.

for r = 1,…, k. Therefore, although a subset of [0,1]n×n, B(z) can be identified with a k2-dimensional ellipsoid in [0,1]k×k. When z∗≠z, one no longer has a nice identity as above and the geometry of B(z) is more difficult to describe. However, we show below in Lemma 4.6 that B(z) is always contained inside a set \(\widetilde {B}(z)\) in Θk(z) which can be identified with a k2-dimensional ellipsoid in [0,1]k×k. Recall our convention for describing ellipsoids from Eq. 2.1.

Lemma 4.6.

Fix \(z^{*} \in \mathcal Z_{n, k}, Q^{*} \in [0, 1]^{k \times k}\), and let \(\theta ^{*} = \theta ^{z^{*}, Q^{*}}\). For \(z \in \mathcal Z_{n, k}\)and t > 0, let \(B(z) = \left \{\theta \in {\varTheta }_{k}(z) : \|\theta - \theta ^{*}\| < t \right \}\). Set Wrs = nrns/t2 and W = (Wrs), where  for r = 1,…, k. Then, \(B(z) \subseteq \widetilde {B}(z)\), where

for r = 1,…, k. Then, \(B(z) \subseteq \widetilde {B}(z)\), where

for some \(\bar {Q}^{*} \in [0, 1]^{k \times k}\) depending on Q∗, z∗ and z. In particular, if z∗ = z, then \(\bar {Q}^{*} = Q^{*}\)and the containment becomes equality, i.e., \(B(z) = \widetilde {B}(z)\).

Proof

Define \(\bar {\theta } = \text {arg min}_{\theta \in {\varTheta }_{k}(z)} \left \Vert \theta - \theta ^{*}\right \Vert ^{2}\). According to the definition of \(\bar {\theta }\), we have from the Pythagorean identity

for 𝜃 ∈Θk(z). Therefore, \(\left \Vert \theta - \bar {\theta }\right \Vert \leq \left \Vert \theta - \theta ^{*}\right \Vert \), which implies \(\{\theta \in {\varTheta }_{k}(z): \left \Vert \theta - \theta ^{*}\right \Vert \leq t \} \subset \{\theta \in {\varTheta }_{k}(z): \left \Vert \theta - \bar {\theta }\right \Vert \leq t\}\). Since \(\bar {\theta } \in {\varTheta }_{k}(z)\), there exists \(\bar {Q}^{*} \in [0, 1]^{k \times k}\) such that \(\bar {\theta }_{ij} =\bar {Q}^{*}_{z_{i} z_{j}}\). This completes the proof of the first part. When z = z∗, the proof of the second part is completed by noting that

□

Remark 4.7.

From Eq. 2.1, \(\xi _{k^{2}}(\bar {Q}^{*}, W)\)in Lemma 4.6 is the collection of all Q satisfying \({\sum }_{r=1}^{k} {\sum }_{s=1}^{k} n_{r} n_{s}(Q_{rs} - \bar {Q}_{rs}^{*})^{2} < t^{2}\). The last part of Lemma 4.6 is consistent with the discussion preceding (4.13). When z∗ = z, Eq. 4.13implies that B(z) consists of all 𝜃z, Q with Q ∈ [0,1]k×k satisfying \({\sum }_{r=1}^{k} {\sum }_{s=1}^{k} n_{r} n_{s} (Q_{rs} - Q_{rs}^{*})^{2} < t^{2}\).

Corollary 4.8.

Inspecting the proof of Lemma 4.6, the condition Q ∈ [0,1]k×k is only used to show that \(\bar {Q}^{*} \in [0, 1]^{k \times k}\). If we let Q to be unrestricted, then the containment relation continues to hold as subsets of \(\mathbb {R}^{k \times k}\), i.e.,

with equality when z∗ = z.

Lemma 4.6 crucially exploits the lower dimensional structure underlying the parameter space Θk and is used subsequently multiple times. First, recall from Eq. 3.4 that one needs a handle on p(Q : 𝜃z, Q ∈ U) to bound the prior probability of U ⊂Θk. In particular, if U = {∥𝜃 − 𝜃0∥ < t}, then p(Q : 𝜃z, Q ∈ U) equals the volume of U ∩Θk(z), which can be suitably bounded by the volume of the bounding k2 dimensional ellipsoid. Second, a handle on the size of balls in Θk facilitates calculating the complexity of the model space (in terms of metric entropy) which is pivotal in proving the posterior contraction; in particular, to extend the test function in Lemma 4.5 to construct test functions against more complex alternatives in Lemma 4.9 below. Once again, the dimensionality reduction is key to preventing the metric entropy from growing too fast.

Lemma 4.9.

Recall 𝜖n from Theorem 4.1. Assume \(\theta ^{0} \in {\varTheta }_{k}\)and for l ≥ 1, let \(U_{l, n} = \left \{ \theta \in {\varTheta }_{k} : l n \epsilon _{n} \le \|\theta - \theta ^{0}\| < (l+1) n \epsilon _{n} \right \}\). Based on \(A_{ij} \stackrel {\text {ind}}\sim \text {{Bernoulli}}(\theta _{ij})\)for i, j = 1,…, n, consider testing H0 : 𝜃 = 𝜃0 versus H1 : 𝜃 ∈ Ul, n. There exists a test function Φl, n such that

for constants C1, C2 > 0 independent of n.

Proof

Since \(\theta ^{0} \in {\varTheta }_{k}\), there exists \(z^{0} \in \mathcal Z_{n,k}\) and Q0 ∈ [0,1]k×k with \(\theta ^{0} = \theta ^{z^{0}, Q^{0}}\). For \(z \in \mathcal Z_{n, k}\), define Ul, n(z) = Ul, n ∩Θk(z), where Θk(z) is as in Eq. 4.12. Clearly,

and \(U_{l, n} \subset \cup _{z \in \mathcal Z_{n, k}} U_{l,n}(z)\). We first use Lemma 4.5 to construct tests against Ul, n(z) for fixed z. Our desired test is obtained by taking the maximum of all such test functions.

Fix \(z \in \mathcal {Z}_{n, k}\). Let \(\mathcal {N}_{l,n}(z) = \{\theta _{l, n, h} \in U_{l, n}(z): h \in I_{l,n}(z) \}\) be a maximalln𝜖n/2-separated set inside Ul, n(z) for some index set Il, n(z); i.e., \(\mathcal N_{l,n}(z)\) is such that ∥𝜃1 − 𝜃2∥≥ ln𝜖n/2 for all \(\theta ^{1} \ne \theta ^{2} \in \mathcal {N}_{l,n}(z)\), and no subset of Ul, n(z) containing \(\mathcal N_{l,n}(z)\) has this property. We provide a volume argument to determine an upper bound for |Il, n(z)|, the cardinality of \(\mathcal N_{l, n}(z)\). The separation property implies that Euclidean balls of radius ln𝜖n/4 centered at the points in \(\mathcal N_{l, n}(z)\) are disjoint. Since \(B_{h}^{+} := \left \{\theta ^{z, Q} : Q \in \mathbb {R}^{k \times k}, |\theta ^{z, Q} - \theta _{l, n, h}| < l n \epsilon _{n}/4 \right \}\) is contained inside an Euclidean ball of radius ln𝜖n/4 centered at 𝜃l, n, h, the sets \(B_{h}^{+}\) are disjoint as h varies over Il, n(z). By the triangle inequality, all \(B_{h}^{+}\)s lie inside \(B^{+} = \left \{\theta ^{z, Q} : Q \in \mathbb {R}^{k \times k}, \|\right .\)\(\left .\theta ^{z,Q} - \theta ^{0}\| \le (5l/4+1) n \epsilon _{n} \right \}\), since ∥𝜃z, Q − 𝜃0∥≤∥𝜃z, Q − 𝜃l, n, h∥ + ∥𝜃l, n, h − 𝜃0∥≤ (l + 1)n𝜖n + ln𝜖n/4.

It should be noted that the sets \(B_{h}^{+}\)s and B+ are constructed in a way that Q is not restricted to be inside [0,1]k×k. This allows us to invoke Corollary 4.8 to identify \(B_{h}^{+}\) and B+ with appropriate ellipsoids in \(\mathbb {R}^{k^{2}}\) and simplify volume calculations. First, since 𝜃l, n, h ∈Θk(z) for each h, it follows from (the equality part of) Corollary 4.8 that \(B_{h}^{+} = \{\theta ^{z,Q} : Q \in \xi _{k^{2}}(\bar {Q}_{h}, \widetilde {W})\}\) with \(\bar {Q}_{h}\) constructed as in the proof of Lemma 4.6 and \(\widetilde {W}_{rs} = n_{r} n_{s}/\{(l n \epsilon _{n})^{2}\}\). The equality is crucially used below; also note that \(\widetilde {W}\) does not depend on h. Invoking Corollary 4.8 one more time, we obtain \(B^{+} \subset \{\theta ^{z,Q} : Q \in \xi _{k^{2}}(\bar {Q}^{0}, W)\}\), with \(W_{rs} = n_{r} n_{s}/[\{ (5l/4+1) n \epsilon _{n} \}\text {} ^{2}]\). We conclude that the Euclidean ellipsoids \(\xi _{k^{2}}(\bar {Q}_{h}, \widetilde {W})\) are disjoint as h varies over Il, n(z) and all of them are contained in \(\xi _{k^{2}}(\bar {Q}^{0}, W)\). Comparing volumes,

Using the volume formula in Eq. 2.2 and canceling out common terms, we finally have

We are now in a position to construct the test. The maximality of \(\mathcal N_{l,n}(z)\) implies that \(\mathcal N_{l,n}(z)\) is an ln𝜖n/2-net of Ul, n(z), i.e., the sets El, n, z, h = {𝜃 ∈ [0,1]n×n : ∥𝜃 − 𝜃l, n, h∥ < ln𝜖n/2} cover Ul, n(z) as h varies. For each \(\theta _{l,n,h} \in \mathcal N_{l,n}(z)\), consider testing H0 : 𝜃 = 𝜃0 versus H1 : 𝜃 ∈ El, n, z, h using the test function from Lemma 4.5. Lemma 4.5 is applicable since ∥𝜃0 − 𝜃l, n, h∥≥ ln𝜖n; let Φl, n, z, h denote the corresponding test with type-I and II errors bounded above by \(e^{-C l^{2} n^{2} {\epsilon _{n}^{2}}}\). Define \({\varPhi }_{l,n} = \max \limits _{z \in \mathcal Z_{n,k}} \max \limits _{h \in I_{l,n}(z)} {\varPhi }_{l,n,z,h}\). For any 𝜃 ∈ Ul, n, there exists \(z \in \mathcal Z_{n,k}\) and h ∈ Il, n(z) such that 𝜃 ∈ El, n, z, h, so that \(\mathbb {E}_{\theta } (1 - {\varPhi }_{l,n}) \le \mathbb {E}_{\theta }(1 - {\varPhi }_{l,n,z,h}) \le e^{-C l^{2} n^{2} {\epsilon _{n}^{2}}}\). Taking supremum over 𝜃 ∈ Ul, n delivers the desired type-II error. Further, the type-I error of Φl, n can be bounded as

since \(|\mathcal Z_{n,k}| = k^{n}\) and by Eq. 4.18, \(|I_{l,n}(z)| \le 9^{k^{2}}\) for all z. The conclusion then follows since \(n^{2} {\epsilon _{n}^{2}} = k^{2}\{\log n + \log (\delta ^{-1})\} + n \log k \gtrsim k^{2} + n \log k\). □

As commented earlier, below we present the proof of Theorem 4.1 already stated in Section 4 of this paper.

Proof 5 of Theorem 4.1.

Let \(\mathbb {E}_{0} \slash \mathbb {P}_{0}\) denote an abbreviation of \(\mathbb {E}_{\theta ^{0}} \slash \mathbb {P}_{\theta ^{0}}\). Since \(\theta ^{0} \in {\varTheta }_{k}\), there exists some \(z^{0} \in \mathcal Z_{n,k}\) and Q0 ∈ [0,1]k×k with \(\theta ^{0} = \theta ^{z^{0}, Q^{0}}\). Recall \({\epsilon _{n}^{2}} = k^{2} \{\log n + \log (\delta ^{-1}) \}/n^{2} +\)\( \log k/n\) and define \(U_{n} = \left \{\theta \in {\varTheta }_{k}: \|\theta - \theta ^{0}\|^{2} > M^{2} n^{2} {\epsilon _{n}^{2}}\right \}\) for some large constant M > 0 to be chosen later. Letting \(f_{\theta _{ij}}(A_{ij}) = \theta _{ij}^{A_{ij}} (1 - \theta _{ij})^{1-A_{ij}}\) denote the Bernoulli(𝜃ij) likelihood evaluated at Aij, the posterior probability assigned to Un can be written as

where \(\mathcal N_{n}\) and \(\mathcal D_{n}\) respectively denote the numerator and denominator of the fraction in Eq. 4.20. Let \(\mathcal F_{n}\) denote the σ-field generated by \(\tilde {A} = (\tilde {A}_{ij})\), with \(\tilde {A}_{ij}\) independently distributed as \(\text {{Bernoulli}}(\theta _{ij}^{0})\); the true data generating distribution. We first claim that there exists a set \(\mathcal A_{n} \in \mathcal F_{n}\) where we can bound \(\mathcal D_{n}\) from below with large probability under \(\mathbb {P}_{0}\) in Lemma 4.10. The proof is adapted from Lemma 10 of Ghosal and van der Vaart (2007).

Lemma 4.10.

Assume 𝜃0 satisfies the conditions of Theorem 4.1. Then, there exists a set \(\mathcal A_{n}\) in the σ-field \(\mathcal F_{n}\)with \( \mathbb {P}_{0}(\mathcal A_{n}) \geq 1 - C/ (n^{2} {\epsilon _{n}^{2}})\)for some C > 0, such that within \(\mathcal A_{n}\),

Proof

Let \(f_{\theta _{ij}}(A_{ij})\) denote the likelihood for Bernoulli(𝜃ij) evaluated at Aij. Letting \(B_{l, n}= \{\theta \in {\varTheta }_{k}: l^{2} {\epsilon _{n}^{2}} \leq (1/n^{2})\|\theta - \theta ^{0}\|^{2} \leq (l+1)^{2} {\epsilon _{n}^{2}} \}\). Define

and \(\mathcal {A}_{n} = \{ A:\! {\int \limits } {\prod }_{1\leq i,j \leq n } f_{\theta _{ij}}(A_{ij}) / f_{\theta ^{0}_{ij}}(A_{ij}) p(dz, dQ) \!\geq \! e^{- n^{2} {\epsilon _{n}^{2}}} {\varPi }(B_{n}(\theta ^{0}; \epsilon _{n}) \}\). The following fact is a straightforward modification of Lemma 5 of Ghosal and Roy (2006). Let 0 < δ < 1/2, δ < α, β < 1 − δ. Then there exists a constant L such that

Since \(\delta < \theta _{ij}^{0} \leq 1- \delta \) for 1 ≤ i, j ≤ n, it follows from the above fact that \(B_{n}(\theta ^{0}; \epsilon _{n}) \supset \{\theta : \left \Vert \theta - \theta ^{0}\right \Vert ^{2} \leq n^{2} \delta ^{2} {\epsilon _{n}^{2}} \}\). It now follows from Lemma 10 of Ghosal and van der Vaart (2007) that \( \mathbb {P}_{0}(\mathcal A_{n}) \geq 1 - C/ (n^{2} {\epsilon _{n}^{2}})\) for some C > 0. □

In view of Lemma 4.10, it is sufficient to provide an upper bound to

For l ≥ M, let \(U_{l, n} = \left \{ \theta \in {\varTheta }_{k} : l^{2} n^{2} {\epsilon _{n}^{2}} \le \left \Vert \theta - \theta ^{0}\right \Vert ^{2} < (l+1)^{2} n^{2} {\epsilon _{n}^{2}} \right \}\) denote an annulus in Θk centered at 𝜃0 with inner and outer Euclidean radii ln𝜖n and (l + 1)n𝜖n respectively. Using a standard testing argument (see, for example, the proof of Proposition 5.1 in Castillo and van der Vaart (2012) 2012) in conjunction with Lemma 4.10, one arrives at

where Φl, n is the test function constructed in Lemma 4.9 for testing H0 : 𝜃 = 𝜃0 versus H1 : 𝜃 ∈ Ul, n with error rates as in Eq. 4.16. Recall Ul, n(z) = Ul, n ∩Θk(z) and its equivalent representation in Eq. 4.17 from the proof of Lemma 4.9. Since \(U_{l,n} \subseteq \cup _{z \in \mathcal Z_{n,k}} U_{l,n}(z)\), from Eq. 3.4,

where p(z) is the prior probability (3.9) of z under the Dirichlet-multinomial prior.

Next, consider the term \({\varPi }(\left \Vert \theta - \theta ^{0}\right \Vert ^{2} < n^{2} \delta ^{2} {\epsilon _{n}^{2}})\) in the denominator of the expression for βl, n. Bound \({\varPi }(\left \Vert \theta - \theta ^{0}\right \Vert ^{2} < n^{2} \delta ^{2} {\epsilon _{n}^{2}}) \ge {\varPi }(\left \Vert \theta - \theta ^{0}\right \Vert ^{2} < n^{2} \delta ^{2} {\epsilon _{n}^{2}} \mid z = z^{0}) p(z^{0})\) and using Lemma 4.6 once again,

The probability in the right hand side of the above display is the volume of the intersection of an ellipsoid with [0,1]k×k, and therefore we cannot simply replace the probability by the volume of the ellipsoid. Instead, we embed an appropriate rectangle inside the intersection of the ellipsoid and [0,1]k×k. We claim that

First, based on our assumption that all entries of Q0 are bounded away from 0 and 1 and the fact that 𝜖n → 0, it is immediate that the rectangle is contained in [0,1]k×k for sufficiently large n. Second, for any Q with \(|Q_{rs} - Q^{0}_{rs}| \le \delta \epsilon _{n}/2\) for all 1 ≤ r, s ≤ k, we have

thereby proving the claim in Eq. 4.24. Now we can bound \({\varPi }(\left \Vert \theta - \theta ^{0}\right \Vert ^{2} < n^{2} \delta ^{2} {\epsilon _{n}^{2}} \mid z = z^{0})\) from below by the volume of the rectangle, which equals \((\epsilon _{n} \delta )^{k^{2}}\). Since n0r ≥ 1 for all r = 1,…, k, invoke Lemma 3.1 to bound \(\max \limits _{z \in \mathcal Z_{n, k}}p(z) / p(z_{0}) \leq e^{C n \log k}\). Combining this with error rates (4.16) in (4.21) we obtain,

For \(n^{2}{\epsilon _{n}^{2}} = k^{2} \{\log n + \log (\delta ^{-1})\} + n \log k\), the right hand size of Eq. 4.25 converges to zero for all M larger than a suitable constant. □

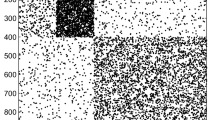

5 Simulation Studies

In this section, we consider a small-scale simulation study to examine the accuracy in estimating 𝜃 as the number of nodes n in the network increases. We simulate 100 replicates of an SBM network using k = 3,4,5 equi-sized communities, with n = 120, 150, and 200. The off-diagonal entries of Q are set to 0.1 and all the diagonal entries are set to a constant ρ > 0.1. The smaller the value of ρ is, the more vague the block structure is in the network.

For each n, we consider ρ = 0.3,0.5,0.8. The true community assignment z0 is set to {(1)n/3,(2)n/3,(3)n/3}, where (x)k denotes the vector obtained by replicated x, k times. We consider (i) an SBM with k = 3, (ii) an SBM with k = 4 and (iii) an SBM with k = 5; note the number of communities is mis-specified in (ii). The following Gibbs sampler is employed to sample from the posterior distribution of the parameters.

5.1 Gibbs sampling for fixed k (directed networks)

Define

Then the full-conditional distributions of π and Q can be obtained as

Observe that

Keeping the terms involving zi,

Hence,

The Gibbs sampler proceeds by cycling through π∣−, Qrs∣ − and zi∣z−i, A, π, Q. We set αj = 1, j = 1,…, k and ran the MCMC for 3000 iterations with a burn-in of 1000. The posterior mean \(\hat {\theta }\) of 𝜃 and the posterior mode of z post burn-in are obtained as the Bayes estimates. As a measure of discrepancy between 𝜃 and 𝜃0, we compute mean squared error (MSE): (1/n2)∥𝜃 − 𝜃0∥2 and for z and z0, we obtained the Rand-index (RI) where \(\text {RI}=\# \text {mismatched pairs} / {n \choose 2}\). The results are summarized in Tables 1 & 2. It is evident that for a fixed ρ, MSE decreases and RI increases as n increases. On the other hand, for a fixed n, as ρ increases, the clustering pattern is more evident in the network leading to improved accuracy in estimating z0.

Interestingly, the results for known k are very similar with that of the mis-specified k indicating the robustness of the Bayesian approach. It is possible that a phenomenon similar to overfitted Gaussian mixtures (Rousseau and Mengersen, 2011) is at work here.

6 Discussion

In this article, we presented a theoretical investigation of posterior contraction in stochastic block models. One crucial assumption in our current results is that the true number of clusters k is known. Geng et al. (2018) studied inference in a stochastic block model with an unknown number of clusters within a Bayesian non-parametric framework. Their objective was two-fold : (i) simultaneous estimation of the number of clusters and the cluster structure and (ii) consistent cluster detection. Towards that, they employed a mixture of finite mixtures (MFM) as a prior distribution for k. A natural and interesting extension of our present work would be to theoretically explore the situation when k remains unknown and an MFM or a mixture of Dirichlet processes prior is used to adaptively learn about k. We leave this as an important research problem in future. In a very recent technical report, Gao et al. (2018) provided general conditions for optimal posterior contraction rates in stochastic block models adaptively for all values of k ∈{1,2,…, n} using Laplace-type priors on Q and a complexity prior on k. Their proposed elliptical Laplace prior distribution is theoretically interesting and accommodates many statistical problems in a unified way. Contrary to that, we worked with a more natural and easily implementable uniform prior specification which is widely used in network analysis problems. An interesting direction is to develop a fully Bayesian approach with the more commonly used uniform prior on Q and a complexity prior on k and to show that the corresponding procedure yields optimal rates of posterior contraction adaptively for all values of k ∈{1,2,…, n}. Such an approach can be connected to nonparametric estimation of networks (Bickel and Chen, 2009) where one typically assumes a more flexible way of data generation; \(A_{ij} \mid \xi _{i}, \xi _{j} \sim \text {{Bernoulli}}\{f(\xi _{i}, \xi _{j})\}\), where f is a function from [0,1]2 → [0,1], called a graphon and ξis are i.i.d. random variables on [0,1]. It is well known (refer, for example, to Szemerédi 1975; Lovász and Szegedy 2006; Airoldi et al. 2013; Gao et al. 2015) that one can approximate a sufficiently smooth graphon using elements of Θk. When the smoothness of the graphon is unknown, the prior on k should facilitate the posterior to concentrate in the appropriate region. Using such approximation results and modifying our Theorem 4.1, it may be possible to derive posterior contraction rates for estimating a graphon.

Notes

Our result continues to hold for general Beta priors on the edge-inclusion probabilities.

References

Abramowitz, M. and Stegun, I. (1964). Handbook of mathematical functions: with formulas, graphs, and mathematical tables. No. 55. Courier Corporation.

Airoldi, E. M., Blei, D. M., Fienberg, S. E. and Xing, E. P. (2009). Mixed membership stochastic blockmodels. In: Advances in Neural Information Processing Systems. pp. 33–40.

Airoldi, E., Costa, T. and Chan, S. (2013). Stochastic blockmodel approximation of a graphon: Theory and consistent estimation. In: Advances in Neural Information Processing Systems. pp. 692–700.

Amini, A. A., Chen, A., Bickel, P. J. and Levina, E. (2013). Pseudo-likelihood methods for community detection in large sparse networks. The Annals of Statistics 41, 4, 2097–2122.

Banerjee, S. and Ghosal, S. (2014). Posterior convergence rates for estimating large precision matrices using graphical models. Electronic Journal of Statistics 8, 2, 2111–2137.

Barron, A. R. (1988). The exponential convergence of posterior probabilities with implications for Bayes estimators of density functions. Univ.

Barron, A., Schervish, M. J. and Wasserman, L. (1999). The consistency of posterior distributions in nonparametric problems. The Annals of Statistics 27, 2, 536–561.

Bickel, P. and Chen, A. (2009). A nonparametric view of network models and Newman–Girvan and other modularities. Proceedings of the National Academy of Sciences106, 50, 21068–21073.

Bickel, P. J., Choi, D., Chang, X. and Zhang, H. (2013). Asymptotic normality of maximum likelihood and its variational approximation for stochastic blockmodels. The Annals of Statistics 41, 4, 1922–1943.

Bontemps, D. (2011). Bernstein–von mises theorems for gaussian regression with increasing number of regressors. The Annals of Statistics 39, 5, 2557–2584.

Castillo, I. and van der Vaart, A. W. (2012). Needles and straw in a haystack: Posterior concentration for possibly sparse sequences. The Annals of Statistics 40, 4, 2069–2101.

Castillo, I., Schmidt-Hieber, J. and van der Vaart, A. (2015). Bayesian linear regression with sparse priors. Ann. Statist. 43, 5, 1986–2018. https://doi.org/10.1214/15-AOS1334.

Channarond, A., Daudin, J. -J. and Robin, S. (2012). Classification and estimation in the stochastic block model based on the empirical degrees. Electronic Journal of Statistics 6, 2574–2601.

Chatterjee, S. (2014). Matrix estimation by universal singular value thresholding. The Annals of Statistics 43, 1, 177–214.

Dasgupta, A., Hopcroft, J. E. and McSherry, F. (2004). Spectral analysis of random graphs with skewed degree distributions. IEEE, p. 602–610.

Erdős, P. and Rényi, A. (1961). On the evolution of random graphs. Bull. Inst. Internat. Statist 38, 4, 343–347.

Frank, O. and Strauss, D. (1986). Markov graphs. Journal of the American Statistical association 81, 395, 832–842.

Gao, C., Lu, Y. and Zhou, H. H. (2015). Rate-optimal graphon estimation. The Annals of Statistics 43, 6, 2624–2652.

Gao, C., van der Vaart, A. W. and Zhou, H. H. (2018). A general framework for bayes structured linear models. arXiv:1506.02174.

Geng, J., Bhattacharya, A. and Pati, D. (2018). Probabilistic community detection with unknown number of communities. Journal of American Statistical Association (to appear).

Ghosal, S., Ghosh, J. K. and van der Vaart, A. W. (2000). Convergence rates of posterior distributions. Annals of Statistics 28, 2, 500–531.

Ghosal, S. and Roy, A. (2006). Posterior consistency of gaussian process prior for nonparametric binary regression. The Annals of Statistics, 2413–2429.

Ghosal, S. and van der Vaart, A. W. (2007). Convergence rates of posterior distributions for noniid observations. The Annals of Statistics 35, 1, 192–223.

Goldenberg, A., Zheng, A., Fienberg, S. and Airoldi, E. (2010). A survey of statistical network models. Foundations and Trends®;, in Machine Learning 2, 2, 129–233.

Golightly, A. and Wilkinson, D. J. (2005). Bayesian inference for stochastic kinetic models using a diffusion approximation. Biometrics 61, 3, 781–788.

Hayashi, K., Konishi, T. and Kawamoto, T. (2016). A tractable fully bayesian method for the stochastic block model. arXiv:1602.02256.

Hoff, P. D., Raftery, A. E. and Handcock, M. S. (2002). Latent space approaches to social network analysis. Journal of the American Statistical association 97, 460, 1090–1098.

Holland, P. W. and Leinhardt, S. (1981). An exponential family of probability distributions for directed graphs. Journal of the American Statistical association 76, 373, 33–50.

Holland, P. W., Laskey, K. B. and Leinhardt, S. (1983). Stochastic blockmodels: First steps. Social Networks 5, 2, 109–137.

Karrer, B. and Newman, M. E. J. (2011). Stochastic blockmodels and community structure in networks. Physical Review E 83, 1, 016107.

Lovász, L. and Szegedy, B. (2006). Limits of dense graph sequences. Journal of Combinatorial Theory, Series B 96, 6, 933–957.

McDaid, A., Murphy, T. B., Friel, N. and Hurley, N. (2013). Improved bayesian inference for the stochastic block model with application to large networks. Computational Statistics & Data Analysis 60, 12–31.

Newman, M. E. J. (2012). Communities, modules and large-scale structure in networks. Nature Physics 8, 1, 25–31.

Nowicki, K. and Snijders, T. A. B. (2001). Estimation and prediction for stochastic blockstructures. Journal of the American Statistical Association 96, 455, 1077–1087.

Pati, D., Bhattacharya, A., Pillai, N. S. and Dunson, D. (2014). Posterior contraction in sparse bayesian factor models for massive covariance matrices. The Annals of Statistics 42, 3, 1102–1130.

Rousseau, J. and Mengersen, K. (2011). Asymptotic behaviour of the posterior distribution in overfitted mixture models. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 73, 5, 689–710.

Schwartz, L. (1965). On bayes procedures. Probability Theory and Related Fiel 4, 1, 10–26.

Snijders, T. A. B. and Nowicki, K. (1997). Estimation and prediction for stochastic blockmodels for graphs with latent block structure. Journal of Classification 14, 1, 75–100.

Suwan, S., Lee, D. S., Tang, R., Sussman, D. L., Tang, M. and Priebe, C. E. (2016). Empirical bayes estimation for the stochastic block model. Electronic Journal of Statistics 10, 1, 761–782.

Szemerédi, E. (1975). On sets of integers containing no k elements in arithmetic progression. Acta Arith 27, 199-245, 2.

van der Pas, S., Kleijn, B. and van der Vaart, A. (2014). The horseshoe estimator: Posterior concentration around nearly black vectors. Electronic Journal of Statistics 8, 2, 2585–2618.

van der Pas, S. L. and van der Vaart, A. W. (2018). Bayesian community detection. Bayesian Analysis 13, 3, 767–796.

Vershynin, R. (2012). Introduction to the non-asymptotic analysis of random matrices. Compressed Sensing, 210–268.

Wang, Y. J. and Wong, G. Y. (1987). Stochastic blockmodels for directed graphs. Journal of the American Statistical Association 82, 397, 8–19.

Zhao, Y., Levina, E. and Zhu, J. (2011). Community extraction for social networks. Proceedings of the National Academy of Sciences 108, 18, 7321–7326.

Zhao, Y., Levina, E. and Zhu, J. (2012). Consistency of community detection in networks under degree-corrected stochastic block models. The Annals of Statistics 40, 4, 2266–2292.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix

A.1 Proof of Corollary 4.3

Following exactly the same set of arguments as in the proof of Theorem 4.1, we have for all sufficiently large n,

Since for each (r, s), \(Q_{rs}^{0}\in (\delta ,1-\delta )\), the prior probability of the embedded rectangle \({\prod }_{r=1}^{k}{\prod }_{s=1}^{k}[Q_{rs}^{0}-\delta \epsilon _{n}/2,Q_{rs}^{0}+\delta \epsilon _{n}/2]\) can be bounded below as follows:

where Beta(β1, β2) denotes the standard Beta function with parameters (β1, β2). Next we observe that

where for each fixed (δ, 𝜖n), the function \(\psi _{\delta ,\epsilon _{n}}\colon (0,\infty )^{2} \rightarrow (0,\infty )\) is defined as

Using Eqs. 4.26–4.28, and following exactly the same line of arguments as in the proof of Theorem 4.1, we obtain

for some constant \(C(\beta _{1},\beta _{2},\delta ,\epsilon _{n})=\left (\text {Beta}(\beta _{1},\beta _{2})\right )^{-k^{2}}\left (\psi _{\delta ,\epsilon _{n}}(\beta _{1},\beta _{2})\right )^{k^{2}}>0\). Now, for every possible choice of the pair (β1, β2), we note that \(\log C(\beta _{1},\beta _{2},\)\(\delta ,\epsilon _{n})\sim k^{2}\). For instance, suppose β1 ≥ 1, β2 ≥ 1. Then, as δ ∈ (0,1/2) is fixed and \(\epsilon _{n}\rightarrow 0\) as \(n\rightarrow \infty \), \(\log C(\beta _{1},\beta _{2},\delta ,\epsilon _{n})=-k^{2} \log \text {Beta}(\beta _{1},\beta _{2})+(\beta _{1}+\beta _{2}-2)k^{2}\log (\delta (1-\epsilon _{n}/2))\sim k^{2}\). Therefore, for \(n^{2}{\epsilon _{n}^{2}} = k^{2} \{\log n + \log (\delta ^{-1})\} + n \log k\), \(\log C(\beta _{1},\beta _{2},\delta ,\epsilon _{n})=o(n^{2}{\epsilon _{n}^{2}})\) as \(n\rightarrow \infty \). Thus, choosing a large enough constant M > 0 (depending on (β1, β2)), it follows that the above sum in Eq. 4.29 converges to zero for all large values of M which concludes the argument.

A.2 Proof of Theorem 4.4

Observe that the posterior distribution in the case of directed networks can be written as

Observe that the discrepancy measure in Eq. 4.8 can also be written as

for \(\theta , \theta ^{0} \in {{\varTheta }_{k}^{u}}\), defined in Eq. 4.9. Hence, it is straightforward to obtain versions of Lemmata 4.5, 4.6, 4.9 and 4.10 as well as Corollary 4.8 for parameters \(\theta \in {{\varTheta }_{k}^{u}}\). The conclusion then follows by replicating arguments (4.21)-(4.25).

A.3 Additional Simulations Results

Below we present an additional small scale simulation study where we simulate 100 replicates of an SBM network using k = 3 and 5 equi-sized communities with n = 30, 60, and 90 and ρ = 0.3,0.5. We summarize these additional results into Tables 3 and 4 below.

Rights and permissions

About this article

Cite this article

Ghosh, P., Pati, D. & Bhattacharya, A. Posterior Contraction Rates for Stochastic Block Models. Sankhya A 82, 448–476 (2020). https://doi.org/10.1007/s13171-019-00180-5

Received:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s13171-019-00180-5

Keywords and phrases

- Bayesian asymptotics

- Stochastic block models

- Clustering

- Multinomial-Dirichlet

- Networks

- Posterior contraction

- Random graphs