Abstract

We interpret uncertainty in a model for seismic wave propagation by treating the model parameters as random variables, and apply the Multilevel Monte Carlo method to reduce the cost of approximating expected values of selected, physically relevant, quantities of interest (QoI) with respect to the random variables. Targeting source inversion problems, where the source of an earthquake is inferred from ground motion recordings on the Earth’s surface, we consider two QoIs that measure the discrepancies between computed seismic signals and given reference signals: one QoI, \({\mathscr {Q}}_E\), is defined in terms of the \(L^2\)-misfit, which is directly related to maximum likelihood estimates of the source parameters; the other, \({\mathscr {Q}}_W\), is based on the quadratic Wasserstein distance between probability distributions, and represents one possible choice in a class of such misfit functions that have become increasingly popular for solving seismic inversion problems in recent years. We simulate seismic wave propagation, including seismic attenuation, using a publicly available code in widespread use, based on the spectral element method. Using random coefficients and deterministic initial and boundary data, we present benchmark numerical experiments with synthetic data in a two-dimensional physical domain and a one-dimensional velocity model where the assumed parameter uncertainty is motivated by realistic Earth models. Here, the computational cost of the standard Monte Carlo method was reduced by up to \(97\%\) for \({\mathscr {Q}}_E\), and up to \(78\%\) for \({\mathscr {Q}}_W\), for a relevant range of tolerances. Shifting to three-dimensional domains is straightforward and will further increase the relative computational work reduction.


1 Introduction

Recent large earthquakes and their devastating effects on society and infrastructure (e.g., New Zealand, 2011; Japan, 2011; Nepal, 2015) emphasize the urgent need for reliable and robust estimates of earthquake parameters for subsequent risk assessment and mitigation. Seismic source inversion is a key component of seismic hazard assessments, in which the probabilities of future earthquake events in the region are of interest.

From ground motion recordings at the surface of the Earth, i.e., seismograms, we are interested in efficiently computing the likelihood of postulated parameters describing the unknown source of an earthquake. A sub-problem of seismic source inversion is to infer the location of the source (hypocenter) and the origin time. We take the expected value of the quantity of interest, which, in our case, is the misfit between the observed and predicted ground displacements for a given seismic source location and origin time, to find the location and time of highest likelihood from observed seismogram data. Several mathematical and computational approaches can be used to calculate the predicted ground motions in the source inversion problem. These techniques range from approximately calculating only some of the waveform attributes (e.g., peak ground acceleration or seismic phase arrival times), often using simple one-dimensional velocity models, to simulating the full wave propagation in a three-dimensionally varying structure.

In this work, the mathematical model and its output are random to account for the lack of precise knowledge about some of its parameters. In particular, to account for uncertainties in the material properties of the Earth, these are modeled by random variables. The most common approach used to compute expected values of random variables is to employ Monte Carlo (MC) sampling. MC is non-intrusive, in the sense that it does not require the underlying deterministic computational codes to be modified, but only called with randomly sampled parameters. Another striking advantage of MC is that no regularity assumptions are needed on the quantity of interest with respect to the uncertain parameters, other than that the variance has to be bounded to use the Central Limit Theorem to predict the convergence rate. However, when generating individual samples from the computational model is highly expensive, often due to the need for a fine, high-resolution discretization of the physical system, MC can be too costly to use. To avoid a large number of evaluations of the computer model with high resolution, but still preserve the advantages of using MC, we apply Multilevel Monte Carlo (MLMC) sampling (Giles 2008; Heinrich 2001; Giles 2015) to substantially reduce the computational cost by distributing the sampling over computations with different discretization sizes. Polynomial chaos surrogate models have been used to exploit the regularity of the waveform solution (Cruz-Jiménez et al. 2018); when the waveform is analytic with respect to the random parameters, the asymptotic convergence can be super-algebraic. However, if the waveform is not analytic, one typically only achieves algebraic convergence in \(L^2\) asymptotically, as was shown in Motamed et al. (2013) to be the case with stochastic collocation for the second order wave equation with discontinuous random wave speed. The setup in Motamed et al. (2013) with a stratified medium is analogous to the situation we will treat in the numerical experiments for a viscoelastic seismic wave propagation problem in the present paper. The efficiency of polynomial chaos, or stochastic collocation, methods deteriorates as the number of “effective” random variables increases, whereas MC depends only weakly on this number. This gives MC-type methods an advantage for the problems we are interested in.
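To make the idea of distributing samples over discretization levels concrete, the following Python sketch applies the standard telescoping MLMC estimator, \({\text {E}\left[ Q_L\right] } = {\text {E}\left[ Q_0\right] } + \sum _{\ell =1}^{L}{\text {E}\left[ Q_\ell - Q_{\ell -1}\right] }\), to a toy problem. The QoI \(Q(\theta )=\int _0^1 e^{\theta x}\,\mathrm dx\) with \(\theta \sim U(0,1)\), its trapezoidal-rule "levels", and the sample sizes are hypothetical stand-ins. In the seismic setting, each level would instead call the wave propagation solver on a mesh of size \(\varDelta x_\ell \). The key point is that each correction uses the same random sample on two consecutive levels. As a result, its variance decreases with the level, and so does the number of expensive fine-level samples needed.

```python
import numpy as np


def q_level(theta, level):
    """Level-`level` approximation of the toy QoI Q(theta) = int_0^1 exp(theta * x) dx.

    Uses the composite trapezoidal rule with 2**(level + 1) intervals, so the
    discretization error decays like h**2 while the cost grows like 1/h.
    """
    n = 2 ** (level + 1)
    x = np.linspace(0.0, 1.0, n + 1)
    f = np.exp(np.outer(theta, x))  # one row per random sample
    h = 1.0 / n
    return h * (0.5 * f[:, 0] + f[:, 1:-1].sum(axis=1) + 0.5 * f[:, -1])


def mlmc_estimate(samples_per_level, rng):
    """Telescoping MLMC estimator mean(Q_0) + sum_{l >= 1} mean(Q_l - Q_{l-1}).

    Each correction evaluates the SAME theta on the fine and the coarse level,
    which is what makes its variance small. Returns the estimate and the sample
    variance of each level's term.
    """
    estimate, variances = 0.0, []
    for level, n_samples in enumerate(samples_per_level):
        theta = rng.uniform(0.0, 1.0, size=n_samples)  # independent across levels
        term = q_level(theta, level)
        if level > 0:
            term = term - q_level(theta, level - 1)
        estimate += term.mean()
        variances.append(term.var(ddof=1))
    return estimate, variances


est, var = mlmc_estimate([4000, 1000, 250, 60], np.random.default_rng(0))
print(est)  # close to the exact value sum_k 1 / (k * k!) = 1.3179...
print(var)  # the correction variances decay roughly by a factor 16 per level
```

Because the per-level variances drop much faster than the cost per sample grows, most samples can be taken on the cheap coarse levels. The sample sizes above were chosen by hand, not by the optimal allocation of Giles (2008).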

Since we are ultimately motivated by solving the inverse problem, the Quantities of Interest (QoIs) considered in this paper are misfit functions quantifying the distance between observed and predicted displacement time series at a given set of seismographs on the Earth’s surface. One QoI is based on the \(L^2\)-norm of the differences; the other makes use of the Wasserstein distance between probability densities, which requires a transformation of the displacement time series to be applicable. The advantages of the Wasserstein distance over the \(L^2\) difference for full-waveform inversion, for instance that the former circumvents the issue of cycle skipping, have been shown in Engquist and Hamfeldt (2014) and further studied in Engquist et al. (2016), Yang and Engquist (2018), and Yang et al. (2018). A recent preprint (Motamed and Appelö 2018) combines this type of QoI with a Bayesian framework for inverse problems.

In our demonstration of MLMC for the two QoIs, we consider seismic wave propagation in a semi-infinite two-dimensional domain with free surface boundary conditions on the Earth’s surface, heterogeneous viscoelastic media, and a time-varying point body force. We use SPECFEM2D (Komatitsch et al. 2012; Tromp et al. 2008) for the numerical computation, where we consider an isotropic viscoelastic Earth model. The heterogeneous medium is divided into homogeneous horizontal layers with uncertain densities and velocities. The densities and shear wave velocities are treated as random, independent between the subdomains, and uniformly distributed over the assumed intervals of uncertainty in the respective layers. The compressional wave velocities of the subdomains follow a multivariate uniform distribution conditional on the shear wave speeds. Our choice of probability distributions to describe the uncertainties is motivated by results given in Albaric et al. (2010) and Roecker et al. (2017).

The paper is outlined as follows. In Sect. 2, the seismic wave propagation problem is described for a viscoelastic medium with random Earth material properties. The two QoIs are described in Sect. 3. The computational techniques are described in Sect. 4. They include (i) the numerical approximation of the viscoelastic Earth material model and of the resulting initial boundary value problem, both of which are handled by the widely used seismological software package SPECFEM2D (Komatitsch et al. 2012), used as a “black-box” solver, and (ii) the MLMC approximation of QoIs depending on the random Earth material properties. The configuration of the numerical tests is described in Sect. 5, together with the results. These show a considerable decrease in computational cost compared to the standard Monte Carlo approximation for the same accuracy.

2 Seismic wave propagation model with random parameters

Here we describe the model we use for seismic wave propagation in a heterogeneous Earth medium, given by an initial boundary value problem (IBVP). We interpret the inherent uncertainty in the Earth material properties through random parameters which define the compressional and shear wave speed fields and the mass density.

First, we state the strong form of the IBVP in the case of a deterministic elastic Earth model, later to be extended to a particular anelastic model in the context of a weak form of the IBVP, suitable for the numerical approximation methods used in Sects. 4 and 5. Finally, we state assumptions on the random material parameter fields.

2.1 Strong form of initial boundary value problem

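We consider a heterogeneous medium occupying a domain \(D\subset {\mathbb {R}}^3\) modeling the Earth. We denote by \(\mathbf s :D\times (0,{\mathscr {T}}]\rightarrow {\mathbb {R}}^3\) the space-time displacement field induced by a seismic event in \(D\). In the deterministic setting \(\mathbf s \) is assumed to satisfy

\[ \rho \,\partial _{tt}\mathbf s = \varvec{\nabla \cdot }\mathbf T + \mathbf f \quad \text {in } D\times (0,{\mathscr {T}}], \tag{1a} \]

for some finite time horizon, given by \({\mathscr {T}}>0\), with the initial conditions

\[ \mathbf s (\cdot ,0) = \mathbf g _{1}, \qquad \partial _t\mathbf s (\cdot ,0) = \mathbf g _{2} \quad \text {in } D, \tag{1b} \]

and the free surface boundary condition on Earth’s surface \(\partial D_S\)

\[ \mathbf T \cdot \hat{\mathbf{n }} = \mathbf 0 \quad \text {on } \partial D_S\times (0,{\mathscr {T}}], \tag{1c} \]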

where \(\mathbf T \) denotes the stress tensor, and \(\hat{\mathbf{n }}\) denotes the unit outward normal to \(\partial D_S\). Together with a constitutive relation between stress and strain, (1a)–(1c) form an IBVP for seismic wave propagation; two different constitutive relations will be considered below. In this paper, \(\partial _{t}\) denotes the time derivative, and \(\varvec{\nabla }\) and \(\varvec{\nabla \cdot }\) denote the spatial gradient and divergence operators, respectively.

Here, \(\rho \) denotes the density and \(\mathbf f \) is a body force that includes the force causing the seismic event. In this study, we consider a simple point body force acting with time-varying magnitude at a fixed point, as described in Aki and Richards (2002) and Dahlen and Tromp (1998).

For an isotropic elastic Earth medium undergoing infinitesimal deformations, the constitutive stress–strain relation can be described by

\[ \mathbf T = \lambda \,{{\,\mathrm{Tr}\,}}(\varvec{\epsilon })\,\mathbf I + 2\mu \,\varvec{\epsilon }, \tag{2} \]

with \({{\,\mathrm{Tr}\,}}(\varvec{\epsilon })\) the trace of the symmetric infinitesimal strain tensor, \(\varvec{\epsilon }(\varvec{\nabla }\mathbf s ) = \frac{1}{2} \left[ \varvec{\nabla }\mathbf s + \left( \varvec{\nabla }\mathbf s \right) ^\intercal \right] \), and \(\mathbf I \) the identity tensor; see Aki and Richards (2002), Dahlen and Tromp (1998), Carcione (2014). In the case of isotropic heterogeneous elastic media undergoing infinitesimal deformations, the first and second Lamé parameters, denoted \(\lambda \) and \(\mu \), respectively, are functions of the spatial position, but for notational simplicity we often omit the dependence on \(\mathbf x \). Together, these parameters constitute a parametrization of the elastic moduli for homogeneous isotropic media and, together with \(\rho \), also determine the compressional wave speed, \(\alpha \), and shear wave speed, \(\beta \), by

\[ \alpha = \sqrt{\frac{\lambda + 2\mu }{\rho }}, \qquad \beta = \sqrt{\frac{\mu }{\rho }}. \]

Either one of the triplets \((\rho ,\lambda ,\mu )\) and \((\rho , \alpha , \beta )\) defines the Earth’s material properties with varying spatial position for a general velocity model (Virieux 1986).
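The relations between the two parametrizations, and the isotropic stress–strain relation \(\mathbf T = \lambda \,{{\,\mathrm{Tr}\,}}(\varvec{\epsilon })\mathbf I + 2\mu \varvec{\epsilon }\), can be checked numerically with a few lines of Python. This is a minimal sketch that assumes SI units and the standard isotropic relations \(\alpha =\sqrt{(\lambda +2\mu )/\rho }\) and \(\beta =\sqrt{\mu /\rho }\). The function names are illustrative and are not part of SPECFEM2D.

```python
import numpy as np


def wave_speeds(rho, lam, mu):
    """(rho, lambda, mu) -> (alpha, beta) for an isotropic elastic medium."""
    alpha = np.sqrt((lam + 2.0 * mu) / rho)  # compressional (P) wave speed
    beta = np.sqrt(mu / rho)                 # shear (S) wave speed
    return alpha, beta


def lame_parameters(rho, alpha, beta):
    """Inverse map (rho, alpha, beta) -> (lambda, mu)."""
    mu = rho * beta**2
    lam = rho * alpha**2 - 2.0 * mu
    return lam, mu


def elastic_stress(grad_s, lam, mu):
    """Isotropic stress T = lam * Tr(eps) * I + 2 * mu * eps with eps = sym(grad s)."""
    eps = 0.5 * (grad_s + grad_s.T)
    return lam * np.trace(eps) * np.eye(grad_s.shape[0]) + 2.0 * mu * eps


lam, mu = lame_parameters(2000.0, 3000.0, 1500.0)  # rho in kg/m^3, speeds in m/s
print(lam, mu)                                      # 9e9 Pa and 4.5e9 Pa
print(wave_speeds(2000.0, lam, mu))                 # recovers alpha = 3000, beta = 1500
```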

Simplification of the full Earth model For the purpose of the numerical computations and the well-posedness of the underlying wave propagation models, we will later replace the whole Earth domain by a semi-infinite domain, which we will truncate with absorbing boundary conditions at the artificial boundaries introduced by the truncation. The domain boundary is \(\partial D=\partial D_S\cup \partial D_A\) with \(\partial D_A\) denoting the artificial boundary. From now on, we will consider \(D\) to be an open bounded subset of \({\mathbb {R}}^d\), where \(d=2~\text {or}~3\) denotes the dimension of the physical domain. We will consider numerical examples with \(D\subset {\mathbb {R}}^2\).

2.2 Weak form of initial boundary value problem

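The numerical methods for simulating the seismic wave propagation used in this paper are based on an alternative formulation of IBVP (1) that uses the weak form in space. To obtain such a form, one multiplies (1a) at time \(t\) by a sufficiently regular test function \(\mathbf w \) and integrates over the physical domain \(D\). Using integration by parts and imposing the traction-free boundary condition (1c), one derivative is shifted from the unknown displacement, \(\mathbf s \), to the test function \(\mathbf w \), i.e.,

\[ \int _D \rho \,\mathbf w \cdot \partial _{tt}\mathbf s \,\mathrm d\mathbf x = -\int _D \varvec{\nabla }\mathbf w : \mathbf T \,\mathrm d\mathbf x + \int _D \mathbf w \cdot \mathbf f \,\mathrm d\mathbf x , \]

where \(:\) denotes the double contraction. In this context, “sufficiently regular test function” means that \(\mathbf w \in \mathbf H ^1(D)\), where \(\mathbf H ^1(D)\) denotes the Sobolev space \(\mathbf W ^{1,2}(D)\),

\[ \mathbf H ^1(D) = \left\{ \mathbf w : D\rightarrow {\mathbb {R}}^d \;:\; \mathbf w \in \mathbf L ^2(D),\ \varvec{\nabla }\mathbf w \in \mathbf L ^2(D) \right\} , \]

equipped with the usual inner product

\[ \left( \mathbf v ,\mathbf w \right) _{\mathbf H ^1(D)} = \int _D \left( \mathbf v \cdot \mathbf w + l^2\,\varvec{\nabla }\mathbf v : \varvec{\nabla }\mathbf w \right) \mathrm d\mathbf x , \]

where \(l\) is a characteristic length scale, and the corresponding induced norm

\[ \left\| \mathbf w \right\| _{\mathbf H ^1(D)} = \sqrt{\left( \mathbf w ,\mathbf w \right) _{\mathbf H ^1(D)}}. \]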

The weak form of the IBVP then becomes:

Problem 1

(Weak form of isotropic elastic IBVP) Find \(\mathbf s \in \mathbf V _\mathbf s \), which both satisfies the initial conditions (1b), and for \(\mathbf T \) in (2) satisfies

almost everywhere in the time interval \(\left[ 0,{\mathscr {T}}\right] \), where the trial space

using the spaces defined in (6).

Above, the time dependent functions, \(\mathbf s (\mathbf x ,t)\), \(\partial _t\mathbf s (\mathbf x ,t)\), \(\partial _{tt}\mathbf s (\mathbf x ,t)\), belong to Bochner spaces

where the appropriate choice of \({\mathscr {X}}\) depends on the number of spatial derivatives needed: \(\mathbf H ^1(D)\), \(\mathbf L ^2(D)\), and \(\mathbf H ^{-1}(D)\), respectively, with the latter space being the dual space of \(\mathbf H ^1(D)\).

According to Lions and Magenes (1972a, b), Problem 1 is well-posed under certain regularity assumptions and an appropriate boundary condition on \(\partial D_A\); see page 32, equation (5.29), in Lions and Magenes (1972b). More precisely, assuming that the density \(\rho \) is bounded away from zero, \(\rho \ge \rho _{min}>0\), the problem fits into the setting of Section 1, Chapter 5, of Lions and Magenes (1972b) after dividing through by \(\rho \). If, in addition, \(\lambda ,\mu \) in (2) are sufficiently regular, Theorem 2.1 in that chapter states the following: if the force \(\mathbf f /\rho \in \mathbf L ^{2}(0, {\mathscr {T}}; \mathbf L ^{2}(D))\), the initial data \(\mathbf g _{1} \in \mathbf H ^{1}(D)\) and \(\mathbf g _{2} \in \mathbf L ^{2}(D)\), and the boundary, \(\partial D_S\), is infinitely differentiable, then there exists a unique solution to Problem 1,

which depends continuously on the initial data.

In our numerical experiments, however, we instead use a problem with piecewise constant material parameters, violating the smoothness assumption, but only at material interfaces in the interior of the domain. Furthermore, a singular source term, \(\mathbf f \), common in seismic modeling, will be used.

2.3 Weak form including seismic attenuation

For a more realistic Earth model, we include seismic attenuation in the wave propagation. The main cause of seismic attenuation is the relatively small but not negligible anelasticity of the Earth. In the literature, e.g., Chapter 6 of Dahlen and Tromp (1998) or Chapter 1 of Carcione (2014), anelasticity of the Earth is modeled by combining the mechanical properties of elastic solids and viscous fluids. In a heterogeneous linear isotropic viscoelastic medium, the displacement field \(\mathbf s (\mathbf x ,t)\) follows the IBVP (1), but the stress tensor \(\mathbf T \) depends linearly upon the entire history of the infinitesimal strain, and the constitutive relation (2) will be replaced by

where \(\mathbf c \) is the fourth-order anelastic tensor that accounts for the Earth’s material properties; it is discussed further in Sect. 4.2.

With the constitutive relation (7) replacing (2), the IBVP becomes:

Problem 2

(Weak form of isotropic viscoelastic IBVP) Find \(\mathbf s \in \mathbf V _\mathbf s \), defined in (5), which both satisfies the initial conditions (1b), and for \(\mathbf T \) in (7) satisfies

almost everywhere in the time interval \(\left[ 0,{\mathscr {T}}\right] \).

In the case of a bounded domain, \(D\), with homogeneous initial conditions, theoretical well-posedness results for the viscoelastic model considered in Problem 2, as well as for a wide range of other viscoelastic models, are given in Brown et al. (2018). More precisely, the following conditions are required. The viscoelastic material tensor \(\mathbf c (\mathbf x , t - t^{\prime })\) should satisfy the hypotheses of symmetry, positivity, and boundedness given on page 60 in Brown et al. (2018). The boundary, \(\partial D\), can be a combination of nonoverlapping Dirichlet and Neumann parts. Finally, one must be able to define a bounded and surjective trace operator from \(\mathbf H ^1(D)\) to \(\mathbf H ^{1/2}(\partial D)\). For a detailed description of \(H^{1/2}(\partial D)\) in the case of mixed boundaries, see page 58 in Brown et al. (2018). Note that a \(d-1\) times differentiable \(\partial D\) with locally Lipschitz \(D\) will always have a trace operator.

2.4 Statement in stochastic setting

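Here we model the uncertain Earth material properties, \((\rho ,\lambda ,\mu )\), as time-independent random fields \((\rho ,\lambda ,\mu ):D\times \varOmega \rightarrow {\mathbb {R}}^3\), where \(\varOmega \) is the sample space of a complete probability space. We assume that the random fields are bounded from above and below, uniformly both in physical space and in sample space, and with the lower bounds strictly positive,

\[ 0<\rho _{min}\le \rho (\mathbf x ,\omega )\le \rho _{max}<\infty ,\qquad 0<\lambda _{min}\le \lambda (\mathbf x ,\omega )\le \lambda _{max}<\infty ,\qquad 0<\mu _{min}\le \mu (\mathbf x ,\omega )\le \mu _{max}<\infty , \tag{9} \]

for all \(\mathbf x \in D\) and \(\omega \in \varOmega \).
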
For any given sample \(\omega \) the displacement field \(\mathbf s (\cdot ,\cdot ,\omega )\) solves Problem 1 or Problem 2, for the respective case.
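As a concrete, purely illustrative example of random fields that satisfy such bounds, the Python sketch below samples a piecewise-constant two-layer model in the spirit of the layered setup outlined in the introduction. Within each layer, \(\rho \) and \(\beta \) are independent and uniform, and \(\alpha \) is uniform conditional on \(\beta \). The interface depth, all interval bounds, and the assumed range of \(\alpha /\beta \) are hypothetical placeholders, not the values used in Sect. 5. Keeping \(\alpha /\beta >\sqrt{2}\) guarantees \(\lambda =\rho (\alpha ^2-2\beta ^2)>0\), so every realization has strictly positive lower bounds.

```python
import numpy as np

# Hypothetical two-layer model; these numbers are illustrative only and are
# NOT the intervals used in the paper's experiments.
INTERFACE_DEPTHS = np.array([1000.0])               # m, top of the second layer
RHO_BOUNDS = [(2000.0, 2400.0), (2500.0, 2800.0)]   # kg/m^3, per layer
BETA_BOUNDS = [(1500.0, 2000.0), (3000.0, 3500.0)]  # m/s, per layer
VP_VS_BOUNDS = (1.6, 1.9)                           # assumed range of alpha / beta


def sample_layers(rng):
    """Draw one realization of the per-layer parameters (rho, alpha, beta).

    rho and beta are independent and uniform in each layer; given beta, alpha is
    uniform on [1.6 * beta, 1.9 * beta] (a hypothetical conditional model).
    """
    rho = np.array([rng.uniform(lo, hi) for lo, hi in RHO_BOUNDS])
    beta = np.array([rng.uniform(lo, hi) for lo, hi in BETA_BOUNDS])
    alpha = rng.uniform(*VP_VS_BOUNDS, size=beta.size) * beta
    return rho, alpha, beta


def field_at_depth(values, z):
    """Evaluate a piecewise-constant layered field at depths z (m, positive downward)."""
    layer = np.searchsorted(INTERFACE_DEPTHS, z, side="right")
    return values[layer]


rng = np.random.default_rng(1)
rho, alpha, beta = sample_layers(rng)
lam = rho * (alpha**2 - 2.0 * beta**2)  # alpha / beta > sqrt(2) keeps lambda > 0
mu = rho * beta**2
print(field_at_depth(beta, np.array([10.0, 999.0, 1000.0, 2500.0])))
print(lam.min() > 0.0, mu.min() > 0.0)
```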

Any known well-posedness properties of the deterministic Problems 1 and 2 are directly inherited in their stochastic form, assuming the same regularity of realizations of the random fields as of their deterministic counterparts.

3 Quantities of interest

Two QoIs suitable for different approaches to seismic inversion will be described. Their common feature is that they quantify the misfit between two quantities. The first is data, consisting of ground motion measured at the Earth’s surface at fixed equidistant observation times \(\{t_k^d\}_{k=0}^K\), \(t_k^d=k\varDelta t_{}^d\). The second is model predictions, consisting of the corresponding model-predicted ground motion. The displacement data, \(\mathbf d \), and the model-predicted displacement, \(\mathbf s \), are given for a finite number of receivers, \(N_{rec}\), at locations \(\{\mathbf x _n\}_{n=1}^{N_{rec}}\). Let us ignore model errors and assume that the measured data is given by the model, \(\mathbf s \), depending on two parameters, denoted by \(\mathbf x _{\mathbf{s }}^{~}\) and \(\varvec{\theta }\). Here \(\mathbf x _{\mathbf{s }}^{~}\) corresponds to the unknown source location, which can be modeled as deterministic or stochastic depending on the approach to the source inversion problem, and \(\varvec{\theta }\) is a random nuisance parameter, corresponding to the uncertain Earth material parameters. We assume that \(\mathbf d \) is given by the model up to some additive noise:

\[ \mathbf d (t_k^d,\mathbf x _n) = \mathbf s (t_k^d,\mathbf x _n;\mathbf x _{\mathbf{s }}^{*},\varvec{\theta }^*) + \varvec{\varepsilon }_{k,n},\qquad k=0,\dots ,K,\quad n=1,\dots ,N_{rec}, \tag{10} \]

where the \(\varvec{\varepsilon }_{k,n}\sim {\mathscr {N}}(\mathbf 0 ,\sigma ^{2}\mathbf I )\) are independent and identically distributed (i.i.d.), and \(\mathbf x _{\mathbf{s }}^{*}\) and \(\varvec{\theta }^*\) denote some fixed values of \(\mathbf x _{\mathbf{s }}^{~}\) and \(\varvec{\theta }\), respectively. We take the random parameter, \(\varvec{\theta }\), to consist of the material triplet, \(\left( \rho ,\alpha ,\beta \right) \), as a random variable or field, and regard all parameters other than \(\varvec{\theta }\) and \(\mathbf x _{\mathbf{s }}^{~}\) as given.

The assumption of additive, independent noise, while naive, can easily be replaced by more complex, correlated noise models without affecting the usefulness or implementation of the MLMC approach described in Sect. 4.4.

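Let us denote a QoI by \({\mathscr {Q}}(\mathbf x _{\mathbf{s }}^{~},\varvec{\theta })\). An example of a seismic source inversion approach is finding the location, \(\mathbf x _{\mathbf{s }}^{~}\), that yields the lowest expected value of \({\mathscr {Q}}\), i.e., the solution of

\[ \mathop {\mathrm {arg\,min}}_{\mathbf x _{\mathbf{s }}^{~}} {\text {E}\left[ {\mathscr {Q}}(\mathbf x _{\mathbf{s }}^{~},\varvec{\theta })\vert \mathbf x _{\mathbf{s }}^{~}\right] }, \tag{11} \]

where \({\text {E}\left[ \cdot \vert \cdot \right] }\) denotes conditional expectation of the first argument with respect to the second argument. Both QoIs investigated in this work can be used to construct likelihood functions for statistical inversion; see, e.g., Bissiri et al. (2016). For instance, one can find the source location, \(\mathbf x _{\mathbf{s }}^{~}\), by maximizing the marginal likelihood, i.e., by solving

\[ \mathop {\mathrm {arg\,max}}_{\mathbf x _{\mathbf{s }}^{~}} {\text {E}\left[ {\mathscr {L}}(\mathbf x _{\mathbf{s }}^{~},\varvec{\theta })\vert \mathbf x _{\mathbf{s }}^{~}\right] }, \tag{12} \]

where \({\mathscr {L}}\) is the likelihood function.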
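The minimization of the expected QoI over candidate source locations can be illustrated with a deliberately simple, hypothetical forward model. In a one-dimensional medium, each receiver records a Ricker wavelet delayed by the travel time \(\vert x_r - x_{\mathbf s}\vert /c\), and an uncertain wave speed \(c\) plays the role of \(\varvec{\theta }\). The sketch below estimates \({\text {E}\left[ {\mathscr {Q}}_E\vert \mathbf x _{\mathbf{s }}^{~}\right] }\) by plain Monte Carlo, using the same speed samples for every candidate location, and selects the grid point with the smallest estimate. The model, the parameter values, and the grid search are assumptions for illustration. In the paper, the forward model is the SPECFEM2D solution and the expectation would be estimated with MLMC.

```python
import numpy as np


def ricker(t, f0=1.0):
    """Ricker wavelet with peak frequency f0 (Hz), centered at t = 0."""
    a = (np.pi * f0 * t) ** 2
    return (1.0 - 2.0 * a) * np.exp(-a)


def toy_seismograms(t, x_src, speed, receivers):
    """Hypothetical stand-in for the wave solver in a 1D homogeneous medium.

    Each receiver records a Ricker wavelet delayed by 1 s plus the travel time
    |x_r - x_src| / speed. Returns an array of shape (n_speeds, n_receivers, n_times).
    """
    speed = np.atleast_1d(speed)
    delays = np.abs(receivers[None, :] - x_src) / speed[:, None]
    return ricker(t[None, None, :] - 1.0 - delays[:, :, None])


def expected_misfit(x_src, speeds, t, receivers, data):
    """Plain MC estimate of E[Q_E | x_src], Q_E = (1/T) sum_n int |s - d|^2 dt (trapezoid rule)."""
    r = (toy_seismograms(t, x_src, speeds, receivers) - data) ** 2
    dt, T = t[1] - t[0], t[-1] - t[0]
    integrals = dt * (r.sum(axis=-1) - 0.5 * (r[..., 0] + r[..., -1]))
    return integrals.sum(axis=-1).mean() / T


rng = np.random.default_rng(0)
t = np.linspace(0.0, 10.0, 1001)                 # time (s)
receivers = np.array([0.0, 10.0, 20.0])          # receiver positions (km)
x_true, speed_true, sigma = 8.0, 3.0, 0.05       # "true" source (km), speed (km/s), noise level
data = toy_seismograms(t, x_true, speed_true, receivers)[0]
data = data + sigma * rng.standard_normal(data.shape)  # additive Gaussian noise

speeds = rng.uniform(2.8, 3.2, size=64)          # samples of the uncertain speed
candidates = np.linspace(4.0, 12.0, 81)          # candidate source locations (km)
misfits = [expected_misfit(x, speeds, t, receivers, data) for x in candidates]
x_hat = candidates[int(np.argmin(misfits))]
print(x_hat)  # should lie close to x_true
```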

The two QoIs below represent two different classes, each with many variations. In the present work we aim neither to compare the two QoIs with each other nor to choose between different representatives of the two classes. Instead, we want to show the efficiency of MLMC applied to QoIs from both classes. Furthermore, in the two QoIs below, we assume that the discrete-time observations have been extended to a continuous function, e.g., by linear interpolation between data points; we could also have expressed the QoIs in terms of discrete-time observations.

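\(L^2\)-based QoI The first QoI studied in this work, denoted by \({\mathscr {Q}}_{E}\), is based on the commonly used \(L^2\) misfit between predicted data \(\mathbf s \) and measured data \(\mathbf d \):

\[ {\mathscr {Q}}_{E}(\mathbf x _{\mathbf{s }}^{~},\varvec{\theta }) = \frac{1}{{\mathscr {T}}}\sum _{n=1}^{N_{rec}}\int _0^{{\mathscr {T}}}\left| \mathbf s (t,\mathbf x _n;\mathbf x _{\mathbf{s }}^{~},\varvec{\theta })-\mathbf d (t,\mathbf x _n)\right| ^2\,\mathrm dt, \tag{13} \]

where \(\mathbf x _n\) is the location of the \(n\)th receiver, \(\vert \cdot \vert \) is the Euclidean norm in \({\mathbb {R}}^d\), and \({\mathscr {T}}\) is the total simulation time. This quantity of interest is directly related to the seismic inversion problem through its connection to the likelihood for normally distributed variables:

\[ {\mathscr {L}}(\mathbf x _{\mathbf{s }}^{~},\varvec{\theta }) \propto \exp \left( -\varTheta (\mathbf x _{\mathbf{s }}^{~},\varvec{\theta })\right) , \tag{14} \]

where \(\varTheta (\mathbf x _{\mathbf{s }}^{~},\varvec{\theta }) :=\dfrac{1}{2\sigma ^{2}}\left||\mathbf s (\mathbf x _{\mathbf{s }}^{~},\varvec{\theta })-\mathbf d \right||_{L^2(0,{\mathscr {T}}]}^{2} =\dfrac{{\mathscr {T}}}{2\sigma ^{2}}{\mathscr {Q}}_{E}(\mathbf x _{\mathbf{s }}^{~},\varvec{\theta })\).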
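Since the data are extended to continuous functions by linear interpolation, the integral in \({\mathscr {Q}}_E\) can be evaluated exactly from the samples. On each interval of length \(\varDelta t\) with end values \(a\) and \(b\), \(\int _0^{\varDelta t}\vert a + (b-a)\tau /\varDelta t\vert ^2\,\mathrm d\tau = \tfrac{\varDelta t}{3}(\vert a\vert ^2 + a\cdot b + \vert b\vert ^2)\). The Python sketch below assumes that predictions and data are stored as arrays of shape (receivers, components, time samples) on a uniform time grid starting at \(t=0\). It returns \({\mathscr {Q}}_E\) and \(\varTheta = {\mathscr {T}}{\mathscr {Q}}_E/(2\sigma ^2)\); the function names are hypothetical.

```python
import numpy as np


def l2_squared_piecewise_linear(e, dt):
    """Exact integral of |e(t)|^2 for the piecewise-linear interpolant of the samples e.

    Time is the last axis of e; all other axes (receivers, components) are summed.
    On one interval: int_0^dt (a + (b - a) tau / dt)^2 dtau = dt / 3 * (a^2 + a b + b^2).
    """
    a, b = e[..., :-1], e[..., 1:]
    return dt / 3.0 * np.sum(a * a + a * b + b * b)


def q_e(s, d, dt):
    """Q_E = (1/T) sum_n int_0^T |s(t, x_n) - d(t, x_n)|^2 dt for arrays of shape (N_rec, dim, K + 1)."""
    T = dt * (s.shape[-1] - 1)
    return l2_squared_piecewise_linear(s - d, dt) / T


def misfit_theta(s, d, dt, sigma):
    """Theta = ||s - d||^2 / (2 sigma^2) = T / (2 sigma^2) * Q_E."""
    T = dt * (s.shape[-1] - 1)
    return T / (2.0 * sigma**2) * q_e(s, d, dt)


t = np.linspace(0.0, 2.0, 201)
s = np.stack([np.sin(np.pi * t), np.cos(np.pi * t)])[None]  # one receiver, two components
print(q_e(s, np.zeros_like(s), t[1] - t[0]))  # |s| = 1 at every sample, so close to 1
```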

A drawback of the \(L^2\) misfit function for full-waveform seismic inversion (see, e.g., Yang and Engquist 2018) is the well-known cycle-skipping issue, which typically leads to many local optima and poses a substantial challenge to subsequent tasks such as optimization and Bayesian inference.

\(W_2^2\)-based QoI An alternative QoI was introduced in the setting of seismic inversion and analyzed in Engquist and Hamfeldt (2014); Engquist et al. (2016); Yang and Engquist (2018); Yang et al. (2018), where it is shown to have several desirable properties that \({\mathscr {Q}}_E\) lacks. In particular, in an idealized case, if one of the two waveforms is shifted in time, this QoI is a convex function of the shift; see Theorem 2.1 in Engquist and Hamfeldt (2014) and the discussion in Engquist et al. (2016). This QoI is based on the quadratic Wasserstein distance between two probability density functions (PDFs), \(\psi :X\rightarrow {\mathbb {R}}^{+}\) and \(\phi :X\rightarrow {\mathbb {R}}^{+}\), which is defined as

\[ W_2^2(\psi ,\phi ) := \inf _{M\in {\mathscr {M}}}\int _X \vert x-M(x)\vert ^2\,\psi (x)\,\mathrm dx, \tag{15} \]

where \({\mathscr {M}}\) is the set of all maps that rearrange the PDF \(\psi \) into \(\phi \). When \(X\) is an interval in \({\mathbb {R}}\), the explicit form

\[ W_2^2(\psi ,\phi ) = \int _X \left| x-\varPhi ^{-1}\left( \varPsi (x)\right) \right| ^2\psi (x)\,\mathrm dx \tag{16} \]

exists, where \(\varPsi (\cdot )\) and \(\varPhi (\cdot )\) are the cumulative distribution functions (CDFs) of \(\psi \) and \(\phi \), respectively.
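In one dimension, the Wasserstein distance reduces to comparing quantile functions. Substituting \(y=\varPsi (x)\) in the transport-map form \(\int _X \vert x-\varPhi ^{-1}(\varPsi (x))\vert ^2\psi (x)\,\mathrm dx\) gives the equivalent expression \(W_2^2(\psi ,\phi )=\int _0^1\vert \varPsi ^{-1}(y)-\varPhi ^{-1}(y)\vert ^2\,\mathrm dy\). The following Python sketch evaluates this expression on a uniform grid. It assumes that the CDFs are increasing at the quantile levels queried. The cumulative trapezoidal rule for the CDFs, their inversion by linear interpolation, and the midpoint rule in \(y\) are discretization choices of this sketch, not of the paper.

```python
import numpy as np


def cdf_on_grid(pdf, x):
    """Normalized CDF of a non-negative density sampled on the grid x (cumulative trapezoid)."""
    increments = 0.5 * (pdf[1:] + pdf[:-1]) * np.diff(x)
    cdf = np.concatenate(([0.0], np.cumsum(increments)))
    return cdf / cdf[-1]  # dividing by the total mass normalizes the density


def w2_squared_1d(psi, phi, x, n_quantiles=4000):
    """W_2^2(psi, phi) = int_0^1 |Psi^{-1}(y) - Phi^{-1}(y)|^2 dy on an interval.

    Quantile functions are obtained by inverting the piecewise-linear CDFs with
    np.interp (this assumes the CDFs increase at the queried levels y); the
    outer integral uses the midpoint rule in y.
    """
    y = (np.arange(n_quantiles) + 0.5) / n_quantiles
    inv_psi = np.interp(y, cdf_on_grid(psi, x), x)
    inv_phi = np.interp(y, cdf_on_grid(phi, x), x)
    return np.mean((inv_psi - inv_phi) ** 2)


def gaussian_bump(x, center, width=0.05):
    """Unnormalized Gaussian density, used only for the example below."""
    return np.exp(-0.5 * ((x - center) / width) ** 2)


x = np.linspace(0.0, 1.0, 2001)
print(w2_squared_1d(gaussian_bump(x, 0.4), gaussian_bump(x, 0.6), x))  # close to 0.2**2 = 0.04
```

For two densities that are translates of each other, \(W_2^2\) equals the squared shift, which is the property that underlies the convexity with respect to time shifts mentioned above.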

How to optimally construct a QoI for seismic source-inversion based on the \(W_2^2\)-distance, or similar distances, is an active research topic, and several recent papers discuss advantages and disadvantages of various approaches; see e.g. Yang and Engquist (2018); Métivier et al. (2018); Motamed and Appelö (2018). Here, we use one of the earliest suggestions, proposed in Engquist and Hamfeldt (2014).

To eliminate the scaling due to the length of the time interval, we make a change of variable \(\tau =t/{\mathscr {T}}\) so that \(X=[0,1]\) below. Typically, the waveforms will not be PDFs, even in their component parts. If we assume that \(\psi \) and \(\phi \) are two more general one-dimensional functions, taking both positive and negative values in the interval, then the non-negative parts \(\psi ^{+}\) and \(\phi ^{+}\) and non-positive parts \(\psi ^{-}\) and \(\phi ^{-}\) can be considered separately, and one can define

\[ {\mathscr {W}}(\phi ,\psi ) := W_2^2\left( \frac{\phi ^{+}}{\int _0^1\phi ^{+}},\frac{\psi ^{+}}{\int _0^1\psi ^{+}}\right) + W_2^2\left( \frac{\phi ^{-}}{\int _0^1\phi ^{-}},\frac{\psi ^{-}}{\int _0^1\psi ^{-}}\right) . \tag{17} \]

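To define the QoI, we sum \({\mathscr {W}}(\cdot ,\cdot )\) applied to all spatial components of the vector-valued \(\mathbf s \) and \(\mathbf d \) in all receiver locations, i.e.,

\[ {\mathscr {Q}}_{W}(\mathbf x _{\mathbf{s }}^{~},\varvec{\theta }) = \sum _{n=1}^{N_{rec}}\sum _{i=1}^{d}{\mathscr {W}}\left( \left[ \mathbf s (\cdot ,\mathbf x _n;\mathbf x _{\mathbf{s }}^{~},\varvec{\theta })\right] _i,\,\left[ \mathbf d (\cdot ,\mathbf x _n)\right] _i\right) , \tag{18} \]

where \(\mathbf x _n\) is the location of the \(n\)th receiver and \([\cdot ]_i\) denotes the \(i\)th spatial component.
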
Remark 1

(Assumption on alternating signs of \(\mathbf s \) and \(\mathbf d \)) Note that the definition of \({\mathscr {Q}}_W\) above requires all components of \(\mathbf s \) and \(\mathbf d \) in all receivers to take both positive and negative values in the time interval \([0,{\mathscr {T}}]\) for (17) to be well-defined. With Gaussian noise in (10), the probability of violating this assumption is always positive, though typically too small to observe in practice if the observation interval and the receivers are properly set up. To complete the definition of \({\mathscr {Q}}_W\), we may extend (17) by replacing \(W_2^2\left( \phi ^*/\int _0^1\phi ^*,\psi ^*/\int _0^1\psi ^*\right) \), \(*\in \{+,-\}\), by its maximal possible value, 1, whenever at least one of \(\phi ^*\) and \(\psi ^*\) is identically 0. Note that this extension may further reduce the regularity of \({\mathscr {Q}}_W\), beyond the loss of differentiability already caused by the splitting into positive and negative parts.
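Putting the pieces together, the sketch below evaluates \({\mathscr {W}}\) and \({\mathscr {Q}}_W\) for sampled signals. It includes the extension described in this remark: a term is set to 1 when a positive or negative part vanishes identically. To stay self-contained, it repeats a compact quantile-based \(W_2^2\) evaluation. This Python sketch makes two assumptions. First, signals are stored as arrays of shape (receivers, components, time samples) on a uniform grid in the rescaled time \(\tau = t/{\mathscr {T}}\in [0,1]\). Second, the negative part is represented by its magnitude, \(\max (-\psi ,0)\), which gives the same normalized density as \(\psi ^{-}/\int _0^1\psi ^{-}\).

```python
import numpy as np


def w2_squared_1d(p, q, x, n_quantiles=4000):
    """W_2^2 between the normalized versions of non-negative p and q on a grid x in [0, 1]."""
    y = (np.arange(n_quantiles) + 0.5) / n_quantiles  # midpoint rule in y

    def quantiles(f):
        cdf = np.concatenate(([0.0], np.cumsum(0.5 * (f[1:] + f[:-1]) * np.diff(x))))
        return np.interp(y, cdf / cdf[-1], x)  # division by cdf[-1] normalizes f

    return np.mean((quantiles(p) - quantiles(q)) ** 2)


def signed_w(phi, psi, x):
    """W(phi, psi): W_2^2 of the normalized positive parts plus that of the negative parts.

    If either part is identically zero, its term is replaced by 1, the maximal
    value of W_2^2 on [0, 1] (the extension described in Remark 1).
    """
    total = 0.0
    for sign in (1.0, -1.0):  # +1: positive parts, -1: magnitudes of negative parts
        p, q = np.maximum(sign * phi, 0.0), np.maximum(sign * psi, 0.0)
        total += w2_squared_1d(p, q, x) if (p.any() and q.any()) else 1.0
    return total


def q_w(s, d):
    """Q_W: sum of W over receivers and components; s and d have shape (N_rec, dim, K + 1)."""
    tau = np.linspace(0.0, 1.0, s.shape[-1])  # rescaled time tau = t / T on [0, 1]
    return sum(signed_w(s[n, i], d[n, i], tau)
               for n in range(s.shape[0]) for i in range(s.shape[1]))


tau = np.linspace(0.0, 1.0, 1001)
s = np.sin(6 * np.pi * tau)[None, None]           # one receiver, one component
d = np.sin(6 * np.pi * (tau - 0.02))[None, None]  # the same signal, delayed
print(q_w(s, s), q_w(s, d))                       # 0.0 and a small positive value
```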

Fig. 1 The expected value, \({\text {E}\left[ {\mathscr {Q}}(\mathbf x _{\mathbf{s }}^{~},\varvec{\theta })\right] }\), as a function of the shift between the source location in the MLMC simulation and that in the simulation used to generate the synthetic data; see Remark 2. The top row shows \({\mathscr {Q}}_E\), computed with synthetic data with additive noise; the middle row, \({\mathscr {Q}}_W\) with the same synthetic data; and the bottom row, \({\mathscr {Q}}_W\) without noise added to the synthetic data. The effect of adding or removing noise of this level is not visible in \({\mathscr {Q}}_E\), and therefore the corresponding figures of \({\mathscr {Q}}_E\) without added noise are omitted.

Remark 2

(Use of \({\mathscr {Q}}_W\) in source inversion) The convexity of \({\mathscr {Q}}_W\) with respect to time shifts in signals is directly related to source inversion problems (Engquist et al. 2016), since perturbations of the source location approximately result in shifts in the arrival times at the receivers. However, convexity with respect to the source location is not guaranteed. As an illustration, consider the case where the data \(\mathbf d \) is synthetic data obtained from a computed approximation of \(\mathbf s (\cdot ,\cdot ;\mathbf x _{\mathbf{s }}^{*},\varvec{\theta }^*)\). Figure 1 shows results from a numerical example very similar to the one in Sect. 5. In Fig. 1, \({\text {E}\left[ {\mathscr {Q}}_E(\mathbf x _{\mathbf{s }}^{~},\varvec{\theta })\right] }\) and \({\text {E}\left[ {\mathscr {Q}}_W(\mathbf x _{\mathbf{s }}^{~},\varvec{\theta })\right] }\) are approximated for 41 different synthetic data sets, obtained by shifting the source location, \(\mathbf x _{\mathbf{s }}^{*}\), while keeping the Earth material parameters fixed, as described in Sect. 5.1.4. In the figure, \((\varDelta x,\varDelta z)=\varDelta \mathbf x =\mathbf x _{\mathbf{s }}^{*}-\mathbf x _{\mathbf{s }}^{~}\) is the shift of the source location in the synthetic data relative to the source location encoded in \(\mathbf f \) when approximating Problem 2. The left column shows a larger region around the point \(\varDelta \mathbf x =\mathbf 0 \), marked with a red circle, and the right column gives a detailed view around \(\varDelta \mathbf x =\mathbf 0 \). The left column clearly illustrates a situation where the large-scale behavior of \({\text {E}\left[ {\mathscr {Q}}_W(\mathbf x _{\mathbf{s }}^{~},\varvec{\theta })\right] }\) is convex with respect to \(\varDelta \mathbf x \), while that of \({\text {E}\left[ {\mathscr {Q}}_E(\mathbf x _{\mathbf{s }}^{~},\varvec{\theta })\right] }\) is not.
In the absence of noise, both QoIs are convex in a small neighborhood around the point \(\varDelta \mathbf x =\mathbf 0 \). Avoiding the non-convex behavior on the larger scale significantly simplifies the source inversion problem.

4 Computational techniques

In this section, we start with a concise description of how Problems 1 and 2 are approximated numerically. The domain, \(D\), is modified in two steps: first, the finite Earth model is replaced by a half-plane in two dimensions or a half-space in three dimensions, and second, this semi-infinite domain is truncated, introducing absorbing boundary conditions on the artificial boundaries. We also describe a simplification of the stress tensor model (7) that results in a viscoelastic stress tensor suitable for numerical implementation. Then, we describe computational approximations of the QoIs given in Sect. 3. The section ends with a summary of the MLMC algorithm for computing the expected value of the QoI.

4.1 Numerical approximation of initial boundary value problem

A numerical approximation of Problem 1 or 2 can be achieved either by (i) approximating the seismic wave propagation produced by a seismic event on the whole Earth, or by (ii) restricting the computational domain, \(D\), to a local region around the source and the receivers. In either case, there are purpose-built software packages based on the Spectral Element Method (SEM) (Komatitsch and Tromp 1999; Komatitsch and Vilotte 1998), which will be used in this paper. The MLMC method does not fundamentally depend on which of the alternatives, (i) or (ii), is used, or on the choice of SEM over other approximation methods. Indeed, an important advantage of MLMC, or more generally MC, methods is that they are non-intrusive in the sense that they can straightforwardly be applied by randomly sampling the Earth material parameters and then executing any such publicly available simulation code to compute the corresponding sample of the QoI.

In our numerical example, we choose alternative (ii), and proceed in two steps. First, we approximate the Earth locally by a half-plane, in a two-dimensional test case, or by a half-space, in the full three-dimensional problem. Second, the half-plane or half-space is truncated to a finite domain, where absorbing boundary conditions (ABC) are introduced on the artificial boundaries to mimic the absorption of seismic energy as the waves leave the region around the receivers. The variational equations (4) and (8) now contain a non-vanishing boundary term, corresponding to the part of the boundary, \(\partial D_A\), where absorbing boundary conditions apply. We use a perfectly matched layer (PML) approximation of the ABC, introduced in Berenger (1994) and used in many fields; see, e.g., Xie et al. (2014) and Komatitsch and Tromp (2003) in the context of seismic wave propagation. However, a true PML is not available for Problem 2, which has attenuating Earth material properties (see Xie et al. 2016). In practice, we therefore choose the truncation of \(D\) such that \(\partial D_A\) is far enough from all receivers to guarantee that no reflected waves reach the receivers in the time interval \([0,{\mathscr {T}}]\), given the maximal wave speeds allowed by the range of uncertainties (9).

To apply SEM, a semi-discrete version of the variational equation is first obtained by discretizing space, that is, by introducing a finite-dimensional solution space in which the solution at time t is represented by a finite vector, \(\mathbf S (t)\). The time evolution of the SEM approximation, \(\mathbf S (t)\), of the seismic wavefield then solves an initial value problem for the second-order ordinary differential equation (ODE) in time

\[ M\ddot{\mathbf S }(t) + C\dot{\mathbf S }(t) + K\mathbf S (t) = F(t), \tag{19} \]

where M is the mass matrix, C is the global absorbing boundary matrix, K is the global stiffness matrix, and F is the source term.

To obtain the semi-discrete form, \(D\) is divided into non-overlapping elements of maximal size \(\varDelta x_{}\), as in a standard finite element method. Quadrilateral elements are used in two space dimensions and hexahedral elements in three. Each element is defined in terms of a number, \(n_{c}\), of control points and an equal number of shape functions, which describe the isomorphic mapping between the element and the reference square or cube. The shape functions are products of low-degree Lagrange polynomials. In the remainder, we assume that no error is introduced by the representation of the element geometry, which is justified by the very simple geometry of the test problem in Sect. 5. The displacement field on every element is approximated by Lagrange polynomials of higher degree, \(N_{l}\), and the approximation of the variational form (4) or (8), including the artificial boundary term, over an element is based on the Gauss–Lobatto–Legendre quadrature rule on the same \(N_{l} + 1\) points per coordinate direction that are used for Lagrange interpolation; this choice leads to a diagonal mass matrix, M, which is beneficial in the explicit time-stepping scheme. More details on the construction of these matrices and the source term can be found in Komatitsch and Vilotte (1998).

The initial value problem for the ODE (19) is solved approximately by discretizing the time interval and applying a time-stepping method. Among the multiple available choices, this work uses the second-order accurate explicit Newmark-type scheme; see for example Chapter 9 in Hughes (2000). The scheme is conditionally stable, and for uniform spatial discretizations the associated stability condition on the time step takes the form \(\varDelta t_{}\le c\,\varDelta x_{}N_{l}^{-2}\). Thanks to the diagonal structure of M and the sparsity of C and K, the cost per time step of the Newmark scheme is proportional to the number of unknowns in \(\mathbf S (t_j)\), and thus, for fixed \(N_l\), to \(\varDelta x_{}^{-d}\). Since the number of time steps is proportional to \(\varDelta t_{}^{-1}\) and \(\varDelta t_{}\propto \varDelta x_{}\), the total work is proportional to \(\varDelta x_{}^{-(d+1)}\).

With \(\varDelta t_{}\) determined by the stability constraint, so that \(\varDelta t_{}\propto \varDelta x_{}\), we expect the second-order error of the Newmark scheme to be the leading-order error term asymptotically as \(\varDelta x_{}\rightarrow 0\), assuming sufficient regularity of the true solution.
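To make the update structure concrete, the following is a minimal sketch of the explicit (central-difference) member of the Newmark family applied to a system of the form (19). It is written in plain Python for a toy system and is not SPECFEM2D code: the function names, the diagonal damping matrix, the small dense stiffness matrix and the harmonic-oscillator check are assumptions made for illustration. Because M is diagonal, computing the new acceleration reduces to a componentwise division, which is what keeps the cost per step proportional to the number of unknowns when K is applied sparsely.

```python
import math


def newmark_explicit(m_diag, c_diag, k_mat, force, u0, v0, dt, n_steps):
    """Explicit Newmark scheme (Newmark parameters 1/2 and 0) for
    M u'' + C u' + K u = F(t), with diagonal (lumped) M and diagonal C.

    k_mat is a small dense matrix purely for illustration; a spectral
    element code applies K element by element instead.
    """
    n = len(u0)
    u, v = list(u0), list(v0)

    def acceleration(u, v, t):
        # Diagonal M: "solving" M a = F - K u - C v is a componentwise division.
        f = force(t)
        return [
            (f[i] - sum(k_mat[i][j] * u[j] for j in range(n)) - c_diag[i] * v[i])
            / m_diag[i]
            for i in range(n)
        ]

    a = acceleration(u, v, 0.0)
    for step in range(n_steps):
        t_next = (step + 1) * dt
        # Predictor: new displacement and half-step velocity.
        u = [u[i] + dt * v[i] + 0.5 * dt * dt * a[i] for i in range(n)]
        v_half = [v[i] + 0.5 * dt * a[i] for i in range(n)]
        # New acceleration from the predicted displacement and velocity.
        a = acceleration(u, v_half, t_next)
        # Corrector: complete the velocity update.
        v = [v_half[i] + 0.5 * dt * a[i] for i in range(n)]
    return u, v


def oscillator_error(n_steps, t_end=10.0):
    """Error at t_end for u'' + u = 0, u(0) = 1, u'(0) = 0 (exact solution cos t)."""
    u, _ = newmark_explicit([1.0], [0.0], [[1.0]], lambda t: [0.0],
                            [1.0], [0.0], t_end / n_steps, n_steps)
    return abs(u[0] - math.cos(t_end))


# Halving the time step should reduce the error by roughly a factor of 4.
ratio = oscillator_error(100) / oscillator_error(200)
```

On this toy problem the error ratio is close to 4, consistent with the second-order convergence of the scheme discussed above.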

4.2 Computational model of seismic attenuation

Approximately solving Problem 2, as stated in Sect. 2.3, is very difficult, since the stress \(\mathbf T \) in (7) at time t depends on the entire solution history \(\mathbf s (\cdot ,t')\) for \(-\infty <t'\le t\), or in practice for \(0\le t'\le t\), since the displacement is assumed to be constant up to time 0. Even in discretized form with an explicit time-stepping scheme, updating the stress according to (7) would require storing the strain history of all previous time steps at every discretization point where \(\mathbf T \) must be approximated, which requires infeasible amounts of computer memory and computational time. Therefore, the viscoelastic model of the medium is often simplified to a generalized Zener model using a series of “standard linear solid” (SLS) mechanisms. The present work uses the implementation of the generalized Zener model in SPECFEM2D. Below, we briefly sketch the simplification of (7); for a more detailed description, we refer readers to Liu et al. (1976), Zhang et al. (2016), Komatitsch and Tromp (1999), Moczo et al. (2007) and Carcione (2014).

The integral in the stress–strain relation (7) can be expressed as a convolution in time by defining the relaxation tensor \(\mathbf c \) to be zero in \(D\times {\mathbb {R}}^-\), i.e. \(\mathbf c (\cdot ,t) = {\tilde{\mathbf{c }}}(\cdot ,t)H(t)\), where H(t) is the Heaviside function. That is,

which, as discussed in Emmerich and Korn (1987), can be formulated in the frequency domain as

where \(\mathbf M (\cdot ,t)=\partial _t\mathbf c (\cdot ,t)\). It has been observed in seismology (Carcione et al. 1988) that the so-called quality factor

is approximately constant over a wide range of frequencies. This Q is an intrinsic property of the Earth material that describes the decay of amplitude in seismic waves due to the loss of energy to heat, and its impact on \(\mathbf M \) is given explicitly in equation (5) of Emmerich and Korn (1987). This observation allows \(\widehat{\mathbf{M }}(\cdot ,\omega )\) to be modeled in the frequency domain by a series of B SLS mechanisms. The stress–strain relation (20) can then be approximated as

where \(\mathbf c ^{U}\) is the unrelaxed fourth-order viscoelastic tensor, which for an isotropic Earth model is defined by \(\lambda \) and \(\mu \), or equivalently by \(\alpha \), \(\beta \) and \(\rho \). The relaxation functions \(\mathbf R ^b\) of the individual SLS satisfy initial value problems for damping ODEs. With the non-linear optimization approach of Blanc et al. (2016), which is implemented in SPECFEM2D and used in this work, the ODE for each \(\mathbf R ^b\) is determined by two parameters: the quality factor, Q, and the number of SLS, B.
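Why exponentially decaying relaxation mechanisms remove the need to store the strain history can be seen in a scalar toy setting. In the sketch below, which is an illustration under simplifying assumptions and not the scheme used in SPECFEM2D, a discrete convolution of strain increments with the kernel \(e^{-t/\tau }\) for a single hypothetical relaxation time \(\tau \) is computed once by a direct sum over the whole history and once by the equivalent one-term recursion; the strain history and parameter values are placeholders.

```python
import math


def memory_direct(strain, dt, tau):
    """r_n = sum_{k=1}^{n} exp(-(n - k) dt / tau) * (strain_k - strain_{k-1}).

    Needs the whole strain history: O(n) work at step n and O(n) memory.
    """
    out = []
    for n in range(1, len(strain)):
        out.append(sum(math.exp(-(n - k) * dt / tau) * (strain[k] - strain[k - 1])
                       for k in range(1, n + 1)))
    return out


def memory_recursive(strain, dt, tau):
    """Same quantity via r_n = exp(-dt / tau) * r_{n-1} + (strain_n - strain_{n-1}).

    O(1) work and memory per step, independent of the length of the history.
    """
    decay = math.exp(-dt / tau)
    r, out = 0.0, []
    for n in range(1, len(strain)):
        r = decay * r + (strain[n] - strain[n - 1])
        out.append(r)
    return out


# Hypothetical strain history and relaxation time, for illustration only.
dt, tau = 0.01, 0.05
strain = [math.sin(3.0 * j * dt) for j in range(400)]
direct = memory_direct(strain, dt, tau)
recursive = memory_recursive(strain, dt, tau)
max_diff = max(abs(a - b) for a, b in zip(direct, recursive))
```

The two results agree up to rounding errors, while only the recursive form has a cost per step that does not grow with the simulated time.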

4.3 Quantities of interest

The two QoI, \({\mathscr {Q}}_E\) and \({\mathscr {Q}}_W\), defined in (13) and (18), respectively, are approximated from the discrete time series of the simulated displacement, \(\mathbf s \), and of the data, \(\mathbf d \), in the receivers. The data observation times \(0=t_0^d<\cdots <t_{K}^d={\mathscr {T}}\) are considered given and fixed. With realistic measurement frequencies of \(100\text{--}200\,\text {Hz}\), it is natural to take smaller time steps in the time discretization \(0=t_0^s<\dots <t_J^s={\mathscr {T}}\) of the numerical approximation of \(\mathbf s \), and we assume that \(\left\{ t_k^d\right\} _{k=0}^K\subseteq \left\{ t_j^s\right\} _{j=0}^J\). Furthermore, the time discretization is assumed to be characterized by one parameter, \(\varDelta t_{}\), e.g., the constant time step size of a uniform discretization. Since both QoI are defined as sums over all receivers and all components of the vector-valued functions \(\mathbf s \) and \(\mathbf d \) in the receivers, it suffices to describe the approximation for two scalar functions: \(\phi ^s\), representing the simulated displacement, and \(\phi ^d\), representing the observed data. Here, we define \(\phi ^d\) as the piecewise linear interpolation in time of the data points.

Approximation of \(\varvec{{{\mathscr {Q}}}_{{E}}}\) The time integral in (13) is approximated by the trapezoidal rule on the time discretization of the numerical simulation. Since \(\{t_k^d\}_{k=0}^K\subseteq \{t_j^s\}_{j=0}^J\) and \(\phi ^d\) is the piecewise linear interpolation in time of the data points, only the discretization of \(\phi ^s\) contributes to the error. The numerical approximation of \({\mathscr {Q}}_E\) then has an \({\mathscr {O}}(\varDelta t_{}^2)\) asymptotic error, provided that \(\phi ^s\) is sufficiently smooth.
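As an illustration, the sketch below evaluates the time integral of the squared difference between a simulated signal and the piecewise linear interpolant of the data, using the trapezoidal rule on the simulation grid, for one receiver and one component. It is a hedged sketch: any normalization constants and the sums over receivers and components in (13) are omitted, and the function names and test signals are hypothetical.

```python
from bisect import bisect_right


def interp_linear(t, t_data, y_data):
    """Piecewise linear interpolant of the points (t_data, y_data), evaluated at t."""
    if t <= t_data[0]:
        return y_data[0]
    if t >= t_data[-1]:
        return y_data[-1]
    k = bisect_right(t_data, t) - 1
    w = (t - t_data[k]) / (t_data[k + 1] - t_data[k])
    return (1.0 - w) * y_data[k] + w * y_data[k + 1]


def l2_misfit(t_sim, phi_s, t_data, phi_d):
    """Trapezoidal approximation of int (phi_s - phi_d)^2 dt on the simulation grid."""
    sq = [(s - interp_linear(t, t_data, phi_d)) ** 2 for t, s in zip(t_sim, phi_s)]
    return sum(0.5 * (t_sim[j + 1] - t_sim[j]) * (sq[j] + sq[j + 1])
               for j in range(len(t_sim) - 1))


# Check: phi_s(t) = t and phi_d = 0 on [0, 1]. The exact integral is 1/3, and the
# trapezoidal error for this quadratic integrand is exactly dt^2 / 6 (dt = 0.1).
t_sim = [j / 10 for j in range(11)]
value = l2_misfit(t_sim, t_sim, [0.0, 1.0], [0.0, 0.0])
```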

Approximation of \(\varvec{{{\mathscr {Q}}}_{{W}}}\) The approximation of (18), through (17), requires both positive and negative values to be attained in all components of \(\mathbf s \) and \(\mathbf d \) in all receivers; see Remark 1. Assuming that this holds, we approximate the \(W_2^2\)-distance between the normalized non-negative parts of \(\phi ^s\) and \(\phi ^d\); the non-positive parts are treated analogously. To this end, the zeros of \(\phi ^s\) and \(\phi ^d\), denoted \(\{z_j^s\}_{j=0}^{{\hat{J}}}\) and \(\{z_k^d\}_{k=0}^{{\hat{K}}}\), respectively, are approximated by linear interpolation and included in the respective time discretizations, generating \(\{t_j^s\}_{j=0}^{J^*}=\{t_j^s\}_{j=0}^J\bigcup \{z_j^s\}_{j=0}^{{\hat{J}}}\) and \(\{t_k^d\}_{k=0}^{K^*}=\{t_k^d\}_{k=0}^K\bigcup \{z_k^d\}_{k=0}^{{\hat{K}}}\), from which \(\{\phi _j^{s,+}\}_{j=0}^{J^*}\) and \(\{\phi _k^{d,+}\}_{k=0}^{K^*}\) are obtained. The corresponding values of the CDFs, \(\{\varPhi _j^{s,+}\}_{j=0}^{J^*}\) and \(\{\varPhi _k^{d,+}\}_{k=0}^{K^*}\), are then approximated by the trapezoidal rule, followed by normalization. Finally, the inverse of \(\varPhi ^{d,+}\) at \(\{\varPhi _j^{s,+}\}_{j=0}^{J^*}\), i.e. \(\left\{ \left[ \varPhi ^{d,+}\right] ^{-1}\left( \varPhi _j^{s,+}\right) \right\} _{j=0}^{J^*}\), is approximated by linear interpolation, and analogously for the inverse of \(\varPhi ^{s,+}\) at \(\{\varPhi _k^{d,+}\}_{k=0}^{K^*}\), before the integral in (16) is approximated by the trapezoidal rule on the discretization \(\{\varPhi _j^{s,+}\}_{j=0}^{J^*}\bigcup \{\varPhi _k^{d,+}\}_{k=0}^{K^*}\) of [0, 1]. Combined, these steps lead to an \({\mathscr {O}}(\varDelta t_{}^2)\) asymptotic error, provided that \(\phi ^s\) is sufficiently smooth.
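The core of these steps can be sketched as follows for two non-negative signals, such as the non-negative parts after the zeros have been inserted into the time grids: trapezoidal CDFs, normalization, linear interpolation of the inverse CDFs on the union of the CDF levels, and a final trapezoidal rule on [0, 1]. This is a hedged illustration in plain Python, not the code used in this study; the zero-insertion step and the non-positive parts are omitted, and all names and test signals are hypothetical.

```python
from bisect import bisect_left


def normalized_cdf(t, f):
    """Trapezoidal cumulative integral of f >= 0 on the grid t, normalized to end at 1."""
    cum = [0.0]
    for j in range(len(t) - 1):
        cum.append(cum[-1] + 0.5 * (t[j + 1] - t[j]) * (f[j] + f[j + 1]))
    if cum[-1] <= 0.0:
        raise ValueError("signal has no positive mass")
    return [c / cum[-1] for c in cum]


def inverse_cdf(y, t, cdf):
    """Approximate inf{t : F(t) >= y} by linear interpolation of the discrete CDF."""
    j = bisect_left(cdf, y)  # first index with cdf[j] >= y
    if j == 0:
        return t[0]
    if j == len(cdf):
        return t[-1]
    w = (y - cdf[j - 1]) / (cdf[j] - cdf[j - 1])  # here cdf[j-1] < y <= cdf[j]
    return t[j - 1] + w * (t[j] - t[j - 1])


def w2_squared(t_s, f_s, t_d, f_d):
    """Squared quadratic Wasserstein distance between the normalized f_s and f_d."""
    F_s, F_d = normalized_cdf(t_s, f_s), normalized_cdf(t_d, f_d)
    levels = sorted(set(F_s) | set(F_d))  # union discretization of [0, 1]
    diff2 = [(inverse_cdf(y, t_s, F_s) - inverse_cdf(y, t_d, F_d)) ** 2 for y in levels]
    return sum(0.5 * (levels[k + 1] - levels[k]) * (diff2[k] + diff2[k + 1])
               for k in range(len(levels) - 1))


# Check: a hat-shaped density and a copy shifted by 0.5 give W2^2 close to 0.25.
t = [0.01 * j for j in range(1001)]
hat = [max(0.0, 1.0 - abs(x - 3.0)) for x in t]
hat_shifted = [max(0.0, 1.0 - abs(x - 3.5)) for x in t]
shift_value = w2_squared(t, hat, t, hat_shifted)
self_value = w2_squared(t, hat, t, hat)
```

For a pure time shift, \(W_2^2\) equals the squared shift, which is one reason this misfit is considered better behaved than the \(L^2\)-misfit with respect to time shifts of the signals.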

Remark 3

(Cost of approximating \({\mathscr {Q}}_W\)) In the present context of passive source inversion with a small number of receivers, on the order of 10, the cost of approximating \({\mathscr {Q}}_W\) from \(\mathbf s \) and \(\mathbf d \) is negligible compared to that of computing the approximation of \(\mathbf s \) itself. This might not be the case in other contexts with data from a large number of receivers, as could be the case, e.g., in seismic imaging for oil and gas exploration.

Remark 4

(On expected weak and strong convergence rates) The deterministic rate of convergence of both \({\mathscr {Q}}\) approximations, as \(\varDelta t_{}^s\rightarrow 0\), is two. Here, \(\varDelta t_{}^s\) is identical to the time step of the underlying approximation method for Problem 1 or 2. In the numerical approximation of \(\mathbf s \) with the second-order Newmark scheme in time, the asymptotic convergence rate is also at best two, which holds if the solution is sufficiently regular. Since we assume that the random fields have the same regularity as in the deterministic case and are uniformly bounded from above and away from zero from below, both in the physical domain and with respect to random outcomes, we expect both the weak and the strong rates of convergence to equal the deterministic convergence rate of the numerical approximation, i.e. at best two, asymptotically as \(\varDelta t_{}\propto \varDelta x_{}\) goes to zero.
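In practice, such rates are checked numerically from per-level statistics. A minimal sketch, assuming per-level estimates of \(|{\text {E}\left[ \varDelta {\mathscr {Q}}_\ell \right] }|\) or of \({\text {Var}\left[ \varDelta {\mathscr {Q}}_\ell \right] }\) on levels with \(h_{\ell }=2^{-\ell }\), fits a straight line to their base-2 logarithms by least squares; the negated slope then estimates the weak rate \({q_{w}}\) or the variance rate \({q_{s}}\), respectively. The function name is hypothetical and the values in the usage lines are synthetic placeholders, not results of this study.

```python
import math


def observed_rate(values):
    """Least-squares estimate of q in values[lev] ~ K * 2**(-q * lev), i.e. h = 2**-lev."""
    levels = list(range(len(values)))
    logs = [math.log2(v) for v in values]
    lev_mean = sum(levels) / len(levels)
    log_mean = sum(logs) / len(logs)
    slope = (sum((lev - lev_mean) * (y - log_mean) for lev, y in zip(levels, logs))
             / sum((lev - lev_mean) ** 2 for lev in levels))
    return -slope


# Synthetic placeholders: a bias decaying like h^2 and a variance decaying like h^4.
weak = [3.0 * 4.0 ** -lev for lev in range(4)]
variances = [0.5 * 16.0 ** -lev for lev in range(4)]
q_w_est, q_s_est = observed_rate(weak), observed_rate(variances)
```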

4.4 MLMC algorithm

Here, we summarize the MLMC algorithm introduced by Giles (2008), and independently by Heinrich (2001) in a setting further from the one used here, which has since become widely used (Giles 2015).

MLMC is a way of reducing the computational cost of standard MC for achieving a given accuracy in the estimation of the expected value of some QoI, in situations where the MC samples are obtained by numerical approximation methods characterized by a refinement parameter, \(h_{\ell }\), that controls both the accuracy and the cost.

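Goal We aim to approximate the expected value of some QoI, \({\text {E}\left[ {\mathscr {Q}}\right] }\), by an estimator \({\mathscr {A}}\), with the accuracy requirement that

\[ \text {P}\left( \left| {\mathscr {A}} - {\text {E}\left[ {\mathscr {Q}}\right] }\right| \le \text {TOL}\right) \ge 1-\xi , \tag{24} \]

where \(\text {TOL}>0\) is a user-prescribed error tolerance and \(1-\xi \) is the prescribed confidence level. To this end we require

\[ \left| {\text {E}\left[ {\mathscr {Q}} - {\mathscr {A}}\right] }\right| \le (1-\varphi )\,\text {TOL} \tag{25a} \]

and

\[ \text {P}\left( \left| {\mathscr {A}} - {\text {E}\left[ {\mathscr {A}}\right] }\right| \le \varphi \,\text {TOL}\right) \ge 1-\xi , \tag{25b} \]

for some \(0<\varphi <1\), which we are free to choose.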

Assumptions on the Numerical Approximation Model Consider a sequence of discretization-based approximations of \({\mathscr {Q}}\) characterized by a refinement parameter, \(\{h_{\ell }\}_{\ell =0}^\infty \). Let \({\mathscr {Q}}_\ell (\varvec{\theta })\) denote the resulting approximation of \({\mathscr {Q}}\) for an outcome of the random variable \(\varvec{\theta }\), using the refinement parameter \(h_{\ell }\). In this work, we consider successive halvings of the refinement parameter, \(h_{\ell }=2^{-\ell }\), which for the method of Sect. 4.1 correspond to the spatial mesh sizes \(\varDelta x_{\ell }=\varDelta x_{0}h_{\ell }\) and the time step sizes \(\varDelta t_{\ell }=\varDelta t_{0}h_{\ell }\). We then make the following assumptions on how the cost and accuracy of the numerical approximations depend on \(h_{\ell }\). We assume that the work per sample of \({\mathscr {Q}}_\ell \), denoted \(W_\ell \), depends on \(h_{\ell }\) as

\[ W_\ell \propto h_{\ell }^{-\gamma } \tag{26a} \]

for some \(\gamma >0\), that the weak order of convergence is \({q_{w}}\), so that we can model

\[ \left| {\text {E}\left[ {\mathscr {Q}} - {\mathscr {Q}}_\ell \right] }\right| \approx {K_{w}}h_{\ell }^{q_{w}} \tag{26b} \]

for some constant \({K_{w}}>0\), and that the variance is independent of the refinement level,

\[ {\text {Var}\left[ {\mathscr {Q}}_\ell \right] }\approx {\text {Var}\left[ {\mathscr {Q}}\right] }. \tag{26c} \]

Standard MC estimator For \(N\) i.i.d. realizations of the parameter, \(\{\varvec{\theta }_n\}_{n=1}^N\), the unbiased MC estimator of \({\text {E}\left[ {\mathscr {Q}}_\ell (\varvec{\theta })\right] }\) is given by

\[ {\mathscr {A}}_{MC} = \frac{1}{N}\sum _{n=1}^{N} {\mathscr {Q}}_\ell (\varvec{\theta }_n). \tag{27} \]

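For \({\mathscr {A}}_{MC}\) to satisfy (25a), we require

\[ \left| {\text {E}\left[ {\mathscr {Q}} - {\mathscr {A}}_{MC}\right] }\right| = \left| {\text {E}\left[ {\mathscr {Q}} - {\mathscr {Q}}_\ell \right] }\right| \le (1-\varphi )\,\text {TOL}, \tag{28} \]

which, according to the model (26b), becomes

\[ {K_{w}}h_{\ell }^{q_{w}} \le (1-\varphi )\,\text {TOL}. \tag{29} \]

For any fixed \(h_{\ell }\) such that \({K_{w}}h_{\ell }^{q_{w}}<\text {TOL}\), the value of the splitting parameter, \(\varphi \), is obtained by replacing the inequality in (29) by an equality and solving for \(\varphi \), giving

\[ \varphi = 1 - \frac{{K_{w}}h_{\ell }^{q_{w}}}{\text {TOL}}. \tag{30} \]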

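Thus, the model for the bias tells us how large a statistical error we can afford for the desired tolerance, \(\text {TOL}\). By the Central Limit Theorem, \({\mathscr {A}}_{MC}\), properly rescaled, converges in distribution,

\[ \sqrt{N}\,\frac{{\mathscr {A}}_{MC} - {\text {E}\left[ {\mathscr {Q}}_\ell \right] }}{\sqrt{{\text {Var}\left[ {\mathscr {Q}}_\ell \right] }}} \xrightarrow {d} {\mathscr {N}}(0,1), \qquad N\rightarrow \infty , \tag{31} \]

where \({\mathscr {N}}(0,1)\) is a standard normal random variable with CDF \(\varPhi _{{\mathscr {N}}(0,1)}\). Hence, to satisfy the statistical error constraint (25b), asymptotically as \(\text {TOL}\rightarrow 0\), we require

\[ N \ge \frac{C_\xi ^2\,{\text {Var}\left[ {\mathscr {Q}}_\ell \right] }}{\left( \varphi \,\text {TOL}\right) ^2}, \tag{32} \]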

where \(C_\xi \) is the confidence parameter corresponding to a \(1-\xi \) confidence interval, i.e. \(\varPhi _{{\mathscr {N}}(0,1)}(C_\xi )=1-\xi /2\).
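The bias and statistical constraints translate directly into concrete MC parameters. The sketch below is a hypothetical helper, not code from this study: for a given \(\varphi \) it picks the coarsest level with \(h_{\ell }=2^{-\ell }\) that satisfies (29), recomputes the implied \(\varphi \) as in (30), and sets \(N\) from (32), with \(C_\xi \) obtained from the standard normal quantile. The constants \({K_{w}}\), \({q_{w}}\) and the variance are placeholders that would in practice be estimated, and `optimal_phi` anticipates the minimization with respect to \(\varphi \) discussed in the following paragraph.

```python
import math
from statistics import NormalDist


def mc_parameters(tol, k_w, q_w, variance, phi=0.5, xi=0.05, max_level=30):
    """Choose (level, phi, N) for standard MC under the bias and variance models."""
    c_xi = NormalDist().inv_cdf(1.0 - xi / 2.0)  # Phi(C_xi) = 1 - xi / 2
    for level in range(max_level + 1):
        bias = k_w * 2.0 ** (-q_w * level)  # model of |E[Q - Q_l]| with h_l = 2^-l
        if bias <= (1.0 - phi) * tol:  # bias constraint (29)
            break
    else:
        raise ValueError("no level up to max_level meets the bias constraint")
    phi_implied = 1.0 - bias / tol  # equality in (29), as in (30)
    n = math.ceil(c_xi ** 2 * variance / (phi_implied * tol) ** 2)  # sample size (32)
    return level, phi_implied, n


def optimal_phi(q_w, gamma):
    """Minimizer of phi^-2 (1 - phi)^(-gamma / q_w), the phi-dependence of the MC work."""
    return 2.0 * q_w / (2.0 * q_w + gamma)


# Placeholder constants, not values from the study.
level, phi_used, n_samples = mc_parameters(tol=1e-2, k_w=1.0, q_w=2.0, variance=1.0)
```

Choosing the level first and then enlarging \(\varphi \) to the implied value gives the statistical error the part of the tolerance that the bias does not use, which lowers \(N\).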

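The computational work of generating \({\mathscr {A}}_{MC}\) is

\[ W_{MC} = N\,W_\ell . \]

For asymptotic analysis, assume that we can choose \(h_{\ell }\) by taking equality in (29) and \(N\) by taking equality in (32); then (26a) gives the asymptotic work estimate

\[ W_{MC} \propto \frac{C_\xi ^2\,{\text {Var}\left[ {\mathscr {Q}}\right] }\,{K_{w}}^{\gamma /{q_{w}}}}{\varphi ^{2}\,(1-\varphi )^{\gamma /{q_{w}}}}\,\text {TOL}^{-\left( 2+\gamma /{q_{w}}\right) }. \tag{33} \]

For any fixed choice of \(\varphi \), the computational complexity of the MC method is therefore \(\text {TOL}^{-\left( 2+\gamma /{q_{w}}\right) }\). Minimizing the right-hand side of (33) with respect to \(\varphi \) gives the asymptotically optimal choice

\[ \varphi ^{*} = \frac{2{q_{w}}}{2{q_{w}}+\gamma }. \]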

MLMC estimator The work required to meet a given accuracy by standard MC can be significantly reduced by systematically generating control variates from approximations corresponding to different mesh sizes. In the standard MLMC approach, we use a whole hierarchy of \(L+1\) meshes defined by decreasing mesh sizes \(\{h_{\ell }\}_{\ell =0}^L\) and the telescoping representation of the expected value of the finest approximation, \({\mathscr {Q}}_L\),

\[ {\text {E}\left[ {\mathscr {Q}}_L\right] } = {\text {E}\left[ {\mathscr {Q}}_0\right] } + \sum _{\ell =1}^{L} {\text {E}\left[ {\mathscr {Q}}_\ell - {\mathscr {Q}}_{\ell -1}\right] }, \]

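from which the MLMC estimator is obtained by approximating the expected values in the telescoping sum by sample averages as

\[ {\mathscr {A}}_{MLMC} = \sum _{\ell =0}^{L} \frac{1}{N_\ell }\sum _{n=1}^{N_\ell } \left( {\mathscr {Q}}_\ell (\varvec{\theta }_{\ell ,n}) - {\mathscr {Q}}_{\ell -1}(\varvec{\theta }_{\ell ,n})\right) , \qquad {\mathscr {Q}}_{-1} :\equiv 0, \]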

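where \(\{\varvec{\theta }_{\ell ,n}\}_{n=1,\dots ,N_\ell }^{\ell =0,\dots ,L}\) denote i.i.d. realizations of the mesh-independent random variables. Note that the correction terms

\[ \varDelta {\mathscr {Q}}_{\ell }(\varvec{\theta }_{\ell ,n}) = {\mathscr {Q}}_\ell (\varvec{\theta }_{\ell ,n}) - {\mathscr {Q}}_{\ell -1}(\varvec{\theta }_{\ell ,n}), \quad \ell \ge 1, \qquad \varDelta {\mathscr {Q}}_{0} = {\mathscr {Q}}_0, \]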

are evaluated with the same outcome of \(\varvec{\theta }_{\ell ,n}\) in both the coarse and the fine mesh approximation. This means that \({\text {Var}\left[ \varDelta {\mathscr {Q}}_{\ell }\right] }\rightarrow 0\), as \(\ell \rightarrow \infty \), provided that the numerical approximation \({\mathscr {Q}}_{\ell }\) converges strongly. Introducing the notation

and assuming a strong convergence rate \({q_{s}}/2\) we model

Note that while this model holds asymptotically as \(\ell \rightarrow \infty \) by the definition of strong convergence, it may be inaccurate for small \(\ell \), corresponding to coarse discretizations. However, it suffices for an asymptotic work estimate.
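A toy version of the estimator makes the coupling explicit: in each correction term, the fine and the coarse approximation are evaluated with the same outcome of the random variable. In the sketch below, the hypothetical \({\mathscr {Q}}_\ell (\theta )=\theta ^2+h_{\ell }^2\theta \) with \(\theta \sim U(0,1)\) stands in for a SPECFEM2D run; its exact mean on the finest level \(L=4\) is \(1/3+h_L^2/2\), and the sample sizes per level are arbitrary placeholders rather than optimized values.

```python
import random


def q_level(theta, level):
    """Hypothetical stand-in for Q_l(theta), with bias of order h_l^2 and h_l = 2^-l."""
    h = 2.0 ** -level
    return theta ** 2 + h ** 2 * theta


def mlmc_estimate(n_per_level, rng):
    """Sum over levels of sample means of Q_l - Q_{l-1}, with Q_{-1} = 0."""
    total = 0.0
    for level, n in enumerate(n_per_level):
        acc = 0.0
        for _ in range(n):
            theta = rng.random()  # one outcome shared by the fine and coarse levels
            fine = q_level(theta, level)
            coarse = q_level(theta, level - 1) if level > 0 else 0.0
            acc += fine - coarse
        total += acc / n
    return total


rng = random.Random(2024)
estimate = mlmc_estimate([20000, 5000, 1200, 300, 80], rng)
exact_finest = 1.0 / 3.0 + 0.5 * 2.0 ** -8  # E[Q_L] for L = 4
```

Because the coarse and fine values share \(\theta \), the variance of each correction term decays with the level, so far fewer samples are needed on the expensive fine levels than on level 0.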

The computational work needed to generate \({\mathscr {A}}_{MLMC}\) is

where we now assume that (26a) also holds for the cost of generating \(\varDelta {\mathscr {Q}}_\ell \). In order for \({\mathscr {A}}_{MLMC}\) to satisfy (24), we fix \(\varphi \in (0,1)\) and require \({\mathscr {A}}_{MLMC}\) to satisfy the bias constraint (28) and, consequently, (29) on the finest discretization, \(\ell =L\), leading to

and we also require it to satisfy the statistical error constraint (25b). In the MLMC context, (25b) is approximated by the bound

on the variance of \({\mathscr {A}}_{MLMC}\). Enforcing (25b) through this bound is justified asymptotically, as \(\text {TOL}\) converges to 0, by a Central Limit Theorem for MLMC estimators if for example \({q_{s}}>\gamma \); see Theorem 1.1 in Hoel and Krumscheid (2018). Given L and \(\varphi \), minimizing the work (39) subject to the constraint (41) leads to the optimal number of samples per level in \({\mathscr {A}}_{MLMC}\),

Substituting this optimal \(N_\ell \) in the total work (39) yields:

Finally, using the mesh parameter given by (40), work per sample (26a), and for simplicity assuming that (38) also holds for \(\ell =0\), this computational work has the asymptotic behavior

as \(\text {TOL}\rightarrow 0\), assuming \({q_{w}}\ge \min {\left( {q_{s}},\gamma \right) }/2\); see e.g. Theorem 3.1 in Giles (2008), or Corollaries 2.1 and 2.2 in Haji-Ali et al. (2016). As in the standard MC case, it is possible to optimize the choice of \(\varphi \) in (25) for MLMC. In particular, if \({q_{s}}>\gamma \), an asymptotic analysis gives \(\varphi \rightarrow 1\) as \(\text {TOL}\rightarrow 0\), indicating an aggressive refinement of the numerical discretization to reduce the bias. Again, the choice of \(\varphi \) does not change the rate of the complexity, but an optimal choice may reduce the work by a constant factor.
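For reference, the classical solution of the constrained minimization above (Giles 2008) allocates samples as \(N_\ell \propto \sqrt{V_\ell /W_\ell }\), where \(V_\ell \) here denotes the variance of the level-\(\ell \) correction. The sketch below assumes that (41) has the standard form \(\sum _\ell V_\ell /N_\ell \le (\varphi \,\text {TOL}/C_\xi )^2\), consistent with (32), and rounds up to integers; the function name is hypothetical, and the per-level variances and costs in the usage lines are placeholders following \(V_\ell \propto h_{\ell }^4\) and \(W_\ell \propto h_{\ell }^{-3}\), not measurements.

```python
import math


def optimal_samples(variances, works, tol, phi, c_xi):
    """N_l = (C_xi / (phi TOL))^2 sqrt(V_l / W_l) sum_k sqrt(V_k W_k), rounded up.

    Minimizes sum_l N_l W_l subject to sum_l V_l / N_l <= (phi TOL / C_xi)^2.
    """
    eps2 = (phi * tol / c_xi) ** 2
    s = sum(math.sqrt(v * w) for v, w in zip(variances, works))
    return [math.ceil(math.sqrt(v / w) * s / eps2) for v, w in zip(variances, works)]


# Hypothetical per-level models with h_l = 2^-l: V_l ~ h_l^4 and W_l ~ h_l^-3.
L = 4
variances = [2.0 ** (-4 * lev) for lev in range(L + 1)]
works = [2.0 ** (3 * lev) for lev in range(L + 1)]
tol, phi, c_xi = 1e-2, 0.5, 1.96
n_opt = optimal_samples(variances, works, tol, phi, c_xi)
stat_var = sum(v / n for v, n in zip(variances, n_opt))  # variance of the estimator
```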

In all three cases in (44), the complexity is lower than the corresponding complexity, \(\text {TOL}^{-\left( 2+\gamma /{q_{w}}\right) }\), of standard MC simulation of the same problem (33). This leads to very significant computational savings in complex models, and as a result some problems that are infeasible with the standard MC method become computationally tractable with MLMC.

MLMC applied to \({\text {E}\left[ {\mathscr {Q}}_\ell \right] }\) of Sects. 4.1–4.3 The assumption on the work per sample (26a) holds for \(\gamma =d+1\), since the degrees of freedom in the uniform spatial discretization are proportional to \(h_{\ell }^{-d}\), and the number of time steps is proportional to \(h_{\ell }^{-1}\), where work per time step of the explicit time stepping scheme is proportional to the degrees of freedom. In the setting described in Sects. 2–3, the weak convergence rate, \({q_{w}}\), is identical to the rate of convergence in the approximation of the deterministic problem, and the strong convergence rate, \({q_{s}}/2\), equals the weak rate. The explicit Newmark time stepping scheme and the numerical approximation of \({\mathscr {Q}}_E\) and \({\mathscr {Q}}_W\) are both of order 2, so that asymptotically as \(h_{\ell }\rightarrow 0\) we expect \({q_{w}}=2\) and \({q_{s}}=4\) assuming sufficiently regular exact solution. Based on these observations, summarized in Table 1, and the complexity estimates (33) and (44), we expect the asymptotic complexity to improve from \(\text {TOL}^{-3.5}\) to \(\text {TOL}^{-2}\), for \(d=2\), and from \(\text {TOL}^{-4}\) to \(\text {TOL}^{-2}(\log {(\text {TOL}^{-1})})^2\), for \(d=3\), as \(\text {TOL}\rightarrow 0\) and standard MC is replaced by MLMC.
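The exponents quoted above follow from simple arithmetic. The hypothetical helpers below return the MC exponent \(2+\gamma /{q_{w}}\) from (33) and, for MLMC, the exponent together with the power of \(\log (\text {TOL}^{-1})\) in the standard three-case result of Giles (2008), which is assumed here to be the form of (44) and requires \({q_{w}}\ge \min ({q_{s}},\gamma )/2\).

```python
def mc_exponent(gamma, q_w):
    """Standard MC work grows like TOL^-(2 + gamma / q_w)."""
    return 2.0 + gamma / q_w


def mlmc_exponent(gamma, q_w, q_s):
    """(exponent, log power) with MLMC work ~ TOL^-exponent * log(1/TOL)^power."""
    if q_s > gamma:
        return 2.0, 0
    if q_s == gamma:
        return 2.0, 2
    return 2.0 + (gamma - q_s) / q_w, 0


for d in (2, 3):
    gamma, q_w, q_s = d + 1, 2, 4  # explicit Newmark and second-order QoI approximation
    print(d, mc_exponent(gamma, q_w), mlmc_exponent(gamma, q_w, q_s))
# prints:
# 2 3.5 (2.0, 0)
# 3 4.0 (2.0, 2)
```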

5 Numerical tests

These numerical experiments constitute an initial study of the validity of MLMC techniques as a means of accelerating the approximation of expected values of source inversion misfit functions, where the expectation is taken with respect to random parameters modeling uncertainties in the Earth model. In this initial study, the source is approximated by a point source and only synthetic data are used; our ultimate goal is to integrate MLMC into the full source inversion problem, where the finite fault solution is to be inferred from real seismological data. While the final source inversion must be based on numerical simulations on a three-dimensional Earth model, these initial tests were made on the two-dimensional model described in the following. Furthermore, the misfit functions were chosen with the aim of identifying the source location, with the source moment tensor considered fixed.

We first describe the problem setup, including the source model, computational geometry, discretization, random Earth material parameters, and the synthetic data replacing actual measurements in the two-dimensional test. We then describe the execution and results of the MLMC computations for this setup.

5.1 Problem setup

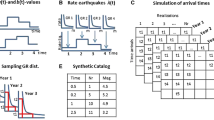

For the numerical tests with \(d=2\), we create a geometry consistent with an actual receiver network, a small seismic network in the Ngorongoro Conservation Area on the East Rift, in Tanzania. We do this by selecting three receivers that are approximately aligned with the estimated epicenter of a recorded seismic event. Figure 2 illustrates the physical configuration. The rough alignment of the source and the receiver locations in the actual seismic network makes this event a good opportunity to run the tests in a two-dimensional domain. We describe the two-dimensional computational domain below, together with the source and Earth parameters.

5.1.1 Source model

We consider a point source with a symmetric moment tensor, modeled as a body force in the variational equation (8) of Problem 2,

with the moment tensor

measured in \(\text {Nm}\), and a Gaussian source-time function with corner frequency \(f_{0}=2\,\text {Hz}\),

The source-time function is centered at time \(t_{c}=0\,\text {s}\); the solution time interval starts at \(t_{0}=-0.6\,\text {s}\) and ends at \({\mathscr {T}}=25\,\text {s}\), and the QoI is based on \(0\le t\le {\mathscr {T}}\).

5.1.2 Computational domain

The heterogeneous Earth is initially modeled with six homogeneous layers of variable thickness, as stated in Table 2, and terminated by an infinite half-space. The layers are separated by horizontal interfaces; topography is not included here. The source-receiver geometry is defined by the depth (vertical distance from the free surface) of the point source and the horizontal distances between the point source and the three receivers, as given in Table 3 and shown in Fig. 3.

As described in Sect. 4.1, the half-plane domain is approximated by a finite domain with absorbing boundary conditions on the three artificial boundaries. In the numerical approximation of Problem 2 with seismic attenuation, the PML boundary conditions are not perfectly absorbing, and reflections are created at the boundary; see e.g. Section 3.4 in Komatitsch et al. (2012). The finite domain is defined by three additional parameters, \(x_s\), \(\varDelta D_x\), and \(\varDelta D_z\), which are chosen large enough that no reflections reach any of the three receivers during the simulation time interval, \([-0.6,{\mathscr {T}}]\,\text {s}\), given the maximal velocities in the ranges of uncertainties.

Domain of the two-dimensional model. Four parameters define the configuration: the depth of the source, \(d_s>0\), and the signed horizontal distances between the source and the three receivers, \(\varDelta r_1,\varDelta r_2,\,\text {and}\,\varDelta r_3\). The horizontal coordinate direction is denoted x and the vertical z (color figure online)

Discretization of the computational domain For the numerical computations using SPECFEM2D version 7.0 (Komatitsch et al. 2012) in double precision, the computational domain is discretized uniformly into squares of side \(\varDelta x_{\ell }\), with the coarsest mesh using \(\varDelta x_{0}=2500\,\text {m}\), so that the interfaces always coincide with element boundaries. These computations are based on spectral elements in two dimensions, using \(n_c=9\) control points to define the isomorphism between the computational element and the reference element, a basis of Lagrange polynomials of degree \(N_l=4\), and \(5\times 5\) Gauss–Lobatto–Legendre quadrature points. The second-order explicit Newmark time-stepping scheme was used with step size \(\varDelta t_{0}=6.25\times 10^{-3}\,\text {s}\) on the coarsest discretization, and the step size was refined at the same rate as \(\varDelta x_{\ell }\) so that the approximate CFL condition remains satisfied. A PML consisting of three elements was used on the artificial boundaries.
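The level hierarchy implied by these choices is easy to tabulate. The sketch below lists \(\varDelta x_{\ell }=\varDelta x_{0}2^{-\ell }\) and \(\varDelta t_{\ell }=\varDelta t_{0}2^{-\ell }\) with the values above, together with the relative work per sample \(2^{(d+1)\ell }=8^\ell \) predicted by (26a) for \(d=2\); measured timings may deviate from this model, in particular on coarse levels. Level \(\ell =5\) reproduces the discretization \(\varDelta x_{}=78.125\,\text {m}\), \(\varDelta t_{}=1.953125\times 10^{-4}\,\text {s}\) used for the synthetic data in Sect. 5.1.4. The names are illustrative.

```python
DX0 = 2500.0    # coarsest element size (m)
DT0 = 6.25e-3   # coarsest time step (s)
D = 2           # spatial dimension


def level_table(max_level):
    """(level, dx, dt, relative work per sample) with h_l = 2^-l and gamma = d + 1."""
    return [(lev, DX0 * 2.0 ** -lev, DT0 * 2.0 ** -lev, 2 ** ((D + 1) * lev))
            for lev in range(max_level + 1)]


for lev, dx, dt, work in level_table(5):
    print(f"level {lev}: dx = {dx:g} m, dt = {dt:g} s, relative work = {work}")
```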

5.1.3 Earth material properties

The viscoelastic properties of the Earth material, as described in Sect. 4.2, are approximated by the generalized Zener model implemented in SPECFEM2D, with \(B=3\) SLS and a quality factor, Q, that is constant in each layer (Table 4). The quality factor is kept fixed throughout the simulations.

As described in Sect. 2, the triplet \((\rho , \alpha , \beta )\), denoting the density, compressional wave speed, and shear wave speed, respectively, defines the Earth’s material properties as functions of spatial position. In the particular seven-layer domain introduced above, a one-dimensional, piecewise constant velocity model is used. These three fields are then completely described by three seven-dimensional random variables, \(\varvec{\alpha }\), \(\varvec{\beta }\), and \(\varvec{\rho }\). Here, we detail the probability distributions assigned to these parameters. To prepare the inversion of real data, \(\alpha \), \(\beta \), and \(\rho \) are adapted from the results of Albaric et al. (2010) and Roecker et al. (2017), obtained from previous seismological experiments in adjacent areas. Among the unperturbed values listed in Table 4, denoted by a bar over the symbols, \(\bar{\varvec{\beta }}\) and \(\bar{\varvec{\rho }}\) are treated as primary parameters, while \(\varvec{\bar{\alpha }}\) is scaled from \(\bar{\varvec{\beta }}\). The relation

is chosen because it is commonly used for crustal structure and agrees with previous seismological studies in the area (Roecker et al. 2017).

We model the uncertain shear wave speed, \(\varvec{\beta }\), as a uniformly distributed random variable

with independent components, and where the range is a plus-minus 10% interval around the unperturbed value, i.e.

where \(q=0.1\). In keeping with (45), but assuming some variability in the ratio, the compressional wave speed, \(\varvec{\alpha }\), is modeled by a random variable which, conditioned on \(\varvec{\beta }\), is uniformly distributed with independent components,

where \(\nu ^{lb}=1.64\) and \(\nu ^{ub}=1.78\), corresponding to a range of variability of about ± 4%. Finally, the density, \(\varvec{\rho }\), is again uniformly distributed with independent components

where

with \(r=0.1\).
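The sampling procedure can be summarized in a few lines. The sketch assumes, since the corresponding displays are not reproduced here, that the componentwise intervals are \([(1-q)\bar{\beta }_i,(1+q)\bar{\beta }_i]\) for \(\varvec{\beta }\), \([\nu ^{lb}\beta _i,\nu ^{ub}\beta _i]\) for \(\varvec{\alpha }\) conditioned on \(\varvec{\beta }\), and \([(1-r)\bar{\rho }_i,(1+r)\bar{\rho }_i]\) for \(\varvec{\rho }\). The unperturbed values below are hypothetical placeholders, not the entries of Table 4.

```python
import random

Q_BETA = 0.1                 # q in the text: relative half-width for beta
R_RHO = 0.1                  # r in the text: relative half-width for rho
NU_LB, NU_UB = 1.64, 1.78    # bounds on the ratio alpha / beta


def sample_earth(beta_bar, rho_bar, rng):
    """One outcome of (alpha, beta, rho) for a layered model, layer by layer."""
    beta = [rng.uniform((1 - Q_BETA) * b, (1 + Q_BETA) * b) for b in beta_bar]
    alpha = [rng.uniform(NU_LB * b, NU_UB * b) for b in beta]  # conditioned on beta
    rho = [rng.uniform((1 - R_RHO) * p, (1 + R_RHO) * p) for p in rho_bar]
    return alpha, beta, rho


# Hypothetical unperturbed values for seven layers (m/s and kg/m^3), not Table 4.
beta_bar = [2000.0, 2500.0, 3000.0, 3300.0, 3500.0, 3700.0, 4000.0]
rho_bar = [2300.0, 2500.0, 2600.0, 2700.0, 2800.0, 2900.0, 3100.0]
rng = random.Random(7)
samples = [sample_earth(beta_bar, rho_bar, rng) for _ in range(320)]
```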

Sample average, marked with the thick black graph, and equidistributed quantiles, color-coded thinner graphs, of the Earth material parameters based on the 320 samples from the verification run. (Top Left) Shear wave speed, \(\beta \), (Top Right) Compressional wave speed, \(\alpha \), (Bottom Left) Density, \(\rho \), and (Bottom Right) \(\nu =\alpha /\beta \) (color figure online)

Figure 4 shows the sample mean and contour lines of the sample CDF for these random parameters, based on samples used in the verification run of the problem setup.

5.1.4 Synthetic data

Instead of actual measured data from seismic activity, the misfit functions for the QoI in the two-dimensional computations use synthetic data computed with the same underlying code on a finer discretization than that of any sample in the MLMC run, namely \(\varDelta x_{}=78.125\,\text {m}\) and \(\varDelta t_{}=1.953125\times 10^{-4}\,\text {s}\). The source location relative to the receivers, listed in Table 3, agrees with the independently estimated epicenter in Fig. 2, and the fixed Earth material parameters, listed in Table 5, correspond to one outcome of the sampling procedure in Sect. 5.1.3. The computed displacements are illustrated in the left column of Fig. 5.

The resulting time series of the displacement in the three receivers are then restricted to a much coarser time discretization, corresponding to a sampling frequency of \(160\,\text {Hz}\), which is in the realistic range for recorded seismograms, and i.i.d. noise \(\varvec{\varepsilon }_{k,n}\sim {\mathscr {N}}(\mathbf 0 ,\sigma ^{2}\mathbf I )\) with \(\sigma =2.5\times 10^{-3}\approx 1\%\) of \(\max \mathbf{s }\) is added as in (10); see the right column of Fig. 5.
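A sketch of this data-generation step, under stated assumptions: since \(1/160\,\text {s}=6.25\times 10^{-3}\,\text {s}\) is exactly 32 times the fine time step \(1.953125\times 10^{-4}\,\text {s}\), restricting to the observation times amounts to keeping every 32nd sample; as \(6.25\times 10^{-3}\,\text {s}\) also equals \(\varDelta t_{0}\), the observation times then lie on the time grid of every MLMC level, provided the grids are aligned. The fine-grid trace below is a hypothetical placeholder with peak amplitude 0.25, so that \(\sigma \) is about 1% of its maximum, and the noise is drawn with Python's standard `random` module.

```python
import math
import random

DT_FINE = 1.953125e-4              # time step of the fine reference simulation (s)
DT_DATA = 1.0 / 160.0              # observation interval for 160 Hz sampling (s)
SIGMA = 2.5e-3                     # noise standard deviation
STRIDE = round(DT_DATA / DT_FINE)  # number of fine steps per observation (32)


def make_synthetic_data(fine_signal, rng):
    """Keep every STRIDE-th fine-grid sample and add i.i.d. N(0, SIGMA^2) noise."""
    return [s + rng.gauss(0.0, SIGMA) for s in fine_signal[::STRIDE]]


# Hypothetical fine-grid trace on [0, 25] s standing in for one displacement component.
n_fine = round(25.0 / DT_FINE) + 1
fine = [0.25 * math.sin(2.0 * math.pi * 2.0 * j * DT_FINE) * math.exp(-0.2 * j * DT_FINE)
        for j in range(n_fine)]
data = make_synthetic_data(fine, random.Random(1))
```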

Synthetic data \(\mathbf d (\mathbf x _{n},t)=\mathbf s (\mathbf x _{n},t;\mathbf x _{\mathbf{s }}^{*},\varvec{\theta }^*)\), where the displacement \(\mathbf s (\mathbf x _{n},t;\mathbf x _{\mathbf{s }}^{*},\varvec{\theta }^*)\) is obtained from a computation with the source location, \(\mathbf x _{\mathbf{s }}^{*}\), and the geometry parameters of Fig. 3 given in Table 3, and with a fixed outcome of the random Earth parameters, \(\varvec{\theta }^*\), within their ranges of uncertainty, as given in Table 5. To the left are the x-component (top) and z-component (bottom) of the synthetic data without added noise. To the right, the x-component in receiver location 1 is shown both with and without added noise, with the bottom being a detailed view of the top

5.1.5 The impact of attenuation on the QoIs

To assess the effect of attenuation in the problem described above, we compute the QoIs with and without attenuation in the model for one outcome of the Earth material parameters, given in Table 6, with the geometry of the MLMC runs in Table 3, using discretization levels 1 and 2 in Table 8. Including attenuation changed both QoIs by several percent; see Table 7.

The significantly reduced computing time of MLMC, compared to standard MC with corresponding accuracy, allows for simulation with an error tolerance small enough to evaluate the usefulness of including attenuation in the model in the presence of uncertainty in the Earth material parameters.

An alternative variant of the MLMC approach in this paper would be to use QoIs sampled with the elastic model as control variates for QoIs sampled with the model including attenuation. Since the work associated with the elastic model is smaller, we expect an MLMC method whose coarse-grid samples are based on the elastic model to reduce the computational cost of achieving a desired accuracy in the expected value of the QoI even further.
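A minimal sketch of such a two-model control variate, assuming independent sample sets for the two terms of \(\text {E}[{\mathscr Q}_{\text {att}}]=\text {E}[{\mathscr Q}_{\text {el}}]+\text {E}[{\mathscr Q}_{\text {att}}-{\mathscr Q}_{\text {el}}]\): many cheap elastic samples estimate the first term, and a few coupled pairs, evaluated with the same outcome of the random parameters, estimate the correction. The functions `q_el` and `q_att` are hypothetical toy stand-ins for the elastic and attenuating solvers, chosen so that the exact mean is known; this is an illustration of the idea, not the method used in the paper.

```python
import random


def cv_two_model_mean(q_cheap, q_fine, sample_theta, n_cheap, n_pairs, seed=0):
    """Estimate E[q_fine] = E[q_cheap] + E[q_fine - q_cheap].

    The first term uses n_cheap independent cheap samples; the correction
    uses n_pairs coupled evaluations that share the same outcome theta.
    """
    rng = random.Random(seed)
    cheap = sum(q_cheap(sample_theta(rng)) for _ in range(n_cheap)) / n_cheap
    correction = 0.0
    for _ in range(n_pairs):
        theta = sample_theta(rng)        # one outcome, used by both models
        correction += q_fine(theta) - q_cheap(theta)
    return cheap + correction / n_pairs


# Hypothetical toy models: theta ~ U(0, 1), exact E[q_att] = 1/3 + 0.025.
def q_el(theta):
    return theta ** 2


def q_att(theta):
    return theta ** 2 + 0.05 * theta


estimate = cv_two_model_mean(q_el, q_att, lambda r: r.random(), 20000, 200)
print(estimate)
```

Because the correction \(q_{\text {att}}-q_{\text {el}}\) has a much smaller variance than \(q_{\text {att}}\) itself, few expensive pairs are needed, which is the same mechanism that MLMC exploits across discretization levels.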

5.2 MLMC tests

In this section, we describe how we apply the MLMC algorithm to the test problem introduced above and present results showing a significant decrease, compared to standard MC estimates, in the cost of achieving a given accuracy.

5.2.1 Verification and parameter estimation

In a verification step, we compute a small number of samples on four discretization levels, obtained by repeatedly halving \(\varDelta x\) and \(\varDelta t\), as specified in Table 8. Statistics of the underlying Earth material samples on the coarsest discretization are illustrated in Fig. 4.

This verification step is needed to confirm that our problem configuration works with the underlying code as expected. At the same time, it tests the assumptions (26) and (38) through experimental observation of the computation time per sample on the different levels, as well as of the sample averages (27) and sample variances of either QoI from Sect. 4.3, \({\mathscr {Q}}_{*,\ell }\), \(\ell =0,1,2,3\), and of the corresponding two-grid correction terms \(\varDelta {\mathscr {Q}}_{*,\ell }\), \(\ell =1,2,3\).

Work estimates. The cost per sample is taken to be the cost of generating one sample of the displacement time series using SPECFEM2D. It is measured as the elapsed time reported by the job scheduler on the supercomputer, multiplied by the number of cores used, as given in Table 8. The post-processing of the time series to approximate the QoI and compute the MLMC estimators, as outlined in Sects. 4.3 and 4.4, without any additional filtering, is performed on laptops and workstations at a cost that is negligible compared to the reported time.


The time per sample on a given level varied very little, and its average over the samples in the verification run, shown in Fig. 6, verifies the expectation from Sect. 4.1 that \(W_\ell \propto h_{\ell }^{-3}\), corresponding to \(\gamma =3\) in (26a).
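The rate \(\gamma \) can be read off as the slope of \(\log W_\ell \) against \(\log h_\ell ^{-1}\). The sketch below computes the core time as elapsed time multiplied by the number of cores, as in the work estimates above, and fits the slope by least squares. The timings in the usage lines are hypothetical, generated from \(W_\ell \propto h_{\ell }^{-3}\), and are not the measurements behind Fig. 6.

```python
import math


def fit_work_rate(elapsed_s, cores, h):
    """Least-squares slope gamma in W_l ~ h_l^(-gamma), where W_l is the
    core time per sample (elapsed wall time multiplied by cores)."""
    work = [t * c for t, c in zip(elapsed_s, cores)]
    x = [math.log(1.0 / hl) for hl in h]
    y = [math.log(w) for w in work]
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    return sxy / sxx


# Hypothetical timings for four levels, generated from core time = 10 * 8**l.
h = [1.0, 0.5, 0.25, 0.125]
cores = [4, 8, 16, 32]
elapsed = [2.5, 10.0, 40.0, 160.0]
gamma = fit_work_rate(elapsed, cores, h)
print(round(gamma, 6))
```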

Fig. 6 Work per sample from the verification run, measured as the core time, i.e., the elapsed time reported by the supercomputer’s job scheduler multiplied by the number of cores per job, given in Table 8.

Fig. 7 Sample averages (left) and sample variances (right) of \({\mathscr {Q}}_{E,\ell }\) and \(\varDelta {\mathscr {Q}}_{E,\ell }\) based on the verification run with the number of samples, \(N_\ell \), given in Table 8. Error bars show bootstrapped \(95\%\) confidence intervals.

Fig. 8 Sample averages (left) and sample variances (right) of \({\mathscr {Q}}_{W,\ell }\) and \(\varDelta {\mathscr {Q}}_{W,\ell }\) based on the verification run with the number of samples, \(N_\ell \), given in Table 8. Error bars show bootstrapped \(95\%\) confidence intervals.

QoI based on the \({L}^{{2}}\)-misfit. For \({\mathscr {Q}}_{E,\ell }\) defined in (13), both \({\text {E}\left[ \varDelta {\mathscr {Q}}_{E,\ell }\right] }\) and \({\text {Var}\left[ \varDelta {\mathscr {Q}}_{E,\ell }\right] }\) appear to decrease faster in this range of discretizations than the asymptotically optimal rates, \({q_{w}}=2\) and \({q_{s}}=4\), expected from the underlying numerical approximation methods; see Fig. 7. The increased convergence rates indicate that we are in a pre-asymptotic regime where, because of the stability constraint, the time step is so small that the time discretization error does not yet dominate the error in \({\mathscr {Q}}_E\), as it eventually will when \(\text {TOL}\rightarrow 0\). In the intended applications to inverse problems, it can be justified to solve the forward problem to a higher accuracy than one would demand of a stand-alone forward solution, but it may still be unrealistic to use smaller relative tolerances than those used in this study. We can therefore expect to remain in the pre-asymptotic regime, where simple extrapolation-based estimates of the bias are less reliable.
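Since \(h_\ell \) is halved from one level to the next, an observed rate between consecutive levels is \(\log _2\) of the ratio of successive magnitudes. A sketch of this check, which could be applied to the sample averages of \(\varDelta {\mathscr {Q}}_{E,\ell }\) (to compare with \({q_{w}}=2\)) and to their sample variances (to compare with \({q_{s}}=4\)); the numbers in the usage lines are illustrative only, not values from Fig. 7.

```python
import math


def observed_rates(magnitudes):
    """Rates q with |m_l| ~ h_l^q, assuming h_{l+1} = h_l / 2; one rate is
    returned for each pair of consecutive levels."""
    return [math.log2(abs(a) / abs(b)) for a, b in zip(magnitudes, magnitudes[1:])]


# Illustrative magnitudes only (not values from Fig. 7).
means_dq = [1.0e-3, 1.25e-4, 1.5625e-5]   # sample averages of corrections
vars_dq = [1.0e-6, 6.25e-8, 3.90625e-9]   # sample variances of corrections
print(observed_rates(means_dq), observed_rates(vars_dq))
```

With few samples per level, such pairwise rates are noisy, which is one reason to attach bootstrapped confidence intervals to the underlying averages and variances.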

We note from the sample variances, \({\mathscr {V}}({\mathscr {Q}}_{E,\ell })\), that the standard deviation of \({\mathscr {Q}}_E\) is of the order \(5\times 10^{-4}\), whereas \({\text {E}\left[ {\mathscr {Q}}_E\right] }\) itself is of the order \(4\times 10^{-3}\) (see the reference value in Table 13).

Given that \({\mathscr {V}}\left( \varDelta {\mathscr {Q}}_{E,1}\right) \) is significantly smaller than \({\mathscr {V}}\left( {\mathscr {Q}}_{E,0}\right) \), and that the cost per sample of \({\mathscr {Q}}_{E,0}\) is significantly smaller than the corresponding cost of \({\mathscr {Q}}_{E,1}\), it is intuitively clear that the optimal MLMC approximation should include samples starting at the discretization level labeled \(\ell =0\) in Table 8, and that the finest level, L, will depend on the tolerance, \(\text {TOL}\).

QoI based on \({W}_{{2}}^{{2}}\)-distances. For \({\mathscr {Q}}_{W,\ell }\) defined in (18), we again appear to be in the pre-asymptotic regime; see Fig. 8. Here, unlike for \({\mathscr {Q}}_E\), it is clear that samples on level \(\ell =0\) are of no use, since \({\mathscr {V}}\left( \varDelta {\mathscr {Q}}_{W,\ell }\right) \) only becomes smaller than \({\mathscr {V}}\left( {\mathscr {Q}}_{W,\ell }\right) \) for \(\ell \ge 2\). This indicates that the optimal MLMC approximation should typically start with the discretization labeled \(\ell =1\) in Table 8.
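The reasoning about the coarsest level can be phrased as a cost comparison. Under the standard optimal sample allocation, the MLMC work for a fixed statistical tolerance is proportional to \(\big (\sum _\ell \sqrt{V_\ell C_\ell }\big )^2\), where \(V_\ell \) and \(C_\ell \) are the variance and cost of the \(\ell \)th term. The sketch below checks whether adding a coarser base level lowers this factor. It assumes that a correction sample costs the sum of the fine and coarse work, and it ignores the bias, which does not depend on the coarsest level; the function name and values are hypothetical. In particular, the coarser level never pays off when \({\mathscr {V}}(\varDelta {\mathscr {Q}}_{\ell })\ge {\mathscr {V}}({\mathscr {Q}}_{\ell })\).

```python
import math


def coarse_level_pays_off(var_q_coarse, work_coarse, var_dq_fine, var_q_fine, work_fine):
    """Compare the MLMC cost factor (sum_l sqrt(V_l * C_l))**2 with and
    without a coarser base level below the current one.

    Assumption: a correction sample costs work_fine + work_coarse, since both
    levels are solved for the same outcome of the random parameters.
    """
    with_coarse = (math.sqrt(var_q_coarse * work_coarse)
                   + math.sqrt(var_dq_fine * (work_fine + work_coarse)))
    without_coarse = math.sqrt(var_q_fine * work_fine)
    return with_coarse < without_coarse


# Hypothetical values, with the fine level 8 times as expensive (gamma = 3).
print(coarse_level_pays_off(1.0, 1.0, 0.01, 1.0, 8.0))  # → True
print(coarse_level_pays_off(1.0, 1.0, 2.0, 1.0, 8.0))   # → False
```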

We note from the sample variances, \({\mathscr {V}}({\mathscr {Q}}_{W,\ell })\), that the standard deviation of \({\mathscr {Q}}_W\) is of the order \(3\times 10^{-2}\), while \({\text {E}\left[ {\mathscr {Q}}_W\right] }\) is of the order \(1\times 10^{-1}\); see the reference value in Table 13. Thus, the uncertainty in the Earth material parameters contributes significantly to \({\mathscr {Q}}_W\) when, as in this case, the source location, \(\mathbf x _{\mathbf{s }}\), in the MLMC simulation is not too far from the source location, \(\mathbf x _{\mathbf{s }}^{*}\), used when generating the synthetic data.

5.2.2 Generation of MLMC and MC runs

To test the computational complexity of generating MC and MLMC estimators with a given tolerance, \(\text {TOL}\), in \({\text {E}\left[ {\mathscr {Q}}_E\right] }\) and \({\text {E}\left[ {\mathscr {Q}}_W\right] }\), we take a sequence of tolerances, \(\{\text {TOL}_i\}_{i=1}^I\), and, for each tolerance, predict which refinement levels to use and how many samples to take on each level to achieve an error within that tolerance, as follows:

Parameters in models of work and convergence. We take the cost per sample, cf. (26a),

where \(\overline{W_\ell }\) denotes the average core time in the verification run and \(\gamma =3\). For the bias estimate, cf. (26b), we make the assumption that the asymptotic weak convergence rate holds for \(\ell \ge 3\) and we approximate the bias on level \(\ell <3\) using the correction to level \(\ell +1\). More precisely,

where \({\mathscr {A}}_{MC}^{95\%}(\varDelta {\mathscr {Q}}_{*,\ell })\) denotes the maximum absolute value in the bootstrapped 95% confidence interval of \({\mathscr {A}}_{MC}(\varDelta {\mathscr {Q}}_{*,\ell })\). Similarly, for the estimate of the variances, cf. (26c) and (38), we assume

and

where \({\mathscr {V}}^{95\%}({\mathscr {Q}}_{*,\ell })\) and \({\mathscr {V}}^{95\%}(\varDelta {\mathscr {Q}}_{*,\ell })\) denote the maximum value in the bootstrapped 95% confidence interval of \({\mathscr {V}}({\mathscr {Q}}_{*,\ell })\) and \({\mathscr {V}}(\varDelta {\mathscr {Q}}_{*,\ell })\) respectively.
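The conservative estimates \({\mathscr {A}}_{MC}^{95\%}\) and \({\mathscr {V}}^{95\%}\) can be sketched with a percentile bootstrap: resample the \(N_\ell \) verification samples with replacement, recompute the statistic, and report the endpoint of the 95% interval with the largest absolute value. The percentile variant, the number of resamples, the function name, and the sample values are assumptions for illustration; the type of bootstrap interval is not specified here.

```python
import random
import statistics


def bootstrap_max_abs(samples, stat, n_boot=2000, level=0.95, seed=0):
    """Percentile-bootstrap confidence interval of stat(samples); returns the
    endpoint with the largest absolute value (a conservative estimate)."""
    rng = random.Random(seed)
    n = len(samples)
    reps = sorted(stat([samples[rng.randrange(n)] for _ in range(n)])
                  for _ in range(n_boot))
    alpha = (1.0 - level) / 2.0
    lower = reps[round(alpha * (n_boot - 1))]
    upper = reps[round((1.0 - alpha) * (n_boot - 1))]
    return max(abs(lower), abs(upper))


# Hypothetical correction samples dQ_l from a verification run.
dq = [0.1 * i - 0.8 for i in range(1, 21)]
a95 = bootstrap_max_abs(dq, statistics.fmean)     # plays the role of A_MC^95%
v95 = bootstrap_max_abs(dq, statistics.variance)  # plays the role of V^95%
print(a95, v95)
```

Since variances are nonnegative, the largest absolute endpoint of their interval is simply the upper endpoint, so one function covers both estimates.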

Tolerances used and corresponding estimators. For the convergence tests, we estimate the scale of \({\text {E}\left[ {\mathscr {Q}}_*\right] }\) from the verification run and choose sequences of decreasing tolerances

where, for \({\text {E}\left[ {\mathscr {Q}}_E\right] }\), \(\text {TOL}_1 = 4.650\times 10^{-4}\approx 12.5\%\) of \({\text {E}\left[ {\mathscr {Q}}_E\right] }\) and, for \({\text {E}\left[ {\mathscr {Q}}_W\right] }\), \(\text {TOL}_1 = 1.920\times 10^{-2}\approx 21\%\) of \({\text {E}\left[ {\mathscr {Q}}_W\right] }\).

To determine which levels to include in the MLMC estimator and how many samples to use on each level, we proceed as follows. Given \(\text {TOL}\), \(C_\xi =2\), \(\ell _{max}\), and the models (47)–(50), we use the brute force optimization described in Algorithm 1 to determine the optimal choices \(\mathbf H = \left( \ell _0,L,\{N_\ell \}_{\ell =\ell _0}^L\right) \). Here, \(\ell _{max}\) is a relatively small positive integer, since the number of levels grows at most logarithmically in \(\text {TOL}^{-1}\). The resulting choices are shown in Tables 9 and 11 for \({\mathscr {Q}}_E\) and \({\mathscr {Q}}_W\), respectively.

For standard MC estimators, we make the analogous brute force optimization to determine on which level to sample and how many samples to use; see Tables 10 and 12.
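Algorithm 1 is not reproduced in this section; the following is a minimal sketch of a brute-force search of the same kind, under the assumptions stated in its comments. For every pair \((\ell _0, L)\) whose model bias is below \(\text {TOL}\), the remaining tolerance is given to the statistical error, \(C_\xi \sqrt{\sum _\ell V_\ell /N_\ell }\le \varphi \,\text {TOL}\), the \(N_\ell \) follow the standard optimal allocation, and the cheapest hierarchy is kept. Restricting the search to \(\ell _0=L\) gives the analogous standard MC optimization, so the MLMC optimum can never be more expensive than the MC one. All names and the toy model values are hypothetical and do not reproduce Tables 9–12.

```python
import math


def optimize_hierarchy(tol, work, bias, var_q, var_dq, c_xi=2.0, mc_only=False):
    """Brute-force choice of (l0, L, N_l) minimizing the predicted total work.

    work[l]   : model cost of one sample on level l
    bias[l]   : model bias |E[Q - Q_l]|
    var_q[l]  : model Var[Q_l]
    var_dq[l] : model Var[Q_l - Q_{l-1}]  (var_dq[0] is unused)
    Returns (total_work, l0, L, N, phi), or None if no level meets the bias.
    """
    best = None
    for L in range(len(work)):
        if bias[L] >= tol:
            continue                             # bias alone exceeds TOL
        phi = 1.0 - bias[L] / tol                # share left for statistics
        scale = (c_xi / (phi * tol)) ** 2
        for l0 in ([L] if mc_only else range(L + 1)):
            # (variance, cost) per term; a correction sample is assumed to
            # cost the work of both levels it involves
            terms = [(var_q[l0], work[l0])]
            terms += [(var_dq[l], work[l] + work[l - 1]) for l in range(l0 + 1, L + 1)]
            s = sum(math.sqrt(v * c) for v, c in terms)
            n = [max(1, math.ceil(scale * math.sqrt(v / c) * s)) for v, c in terms]
            total = sum(nl * c for nl, (_, c) in zip(n, terms))
            if best is None or total < best[0]:
                best = (total, l0, L, n, phi)
    return best


# Hypothetical models with gamma = 3, q_w = 2, q_s = 4.
W = [1.0, 8.0, 64.0, 512.0]
B = [1.0e-1, 2.5e-2, 6.25e-3, 1.5625e-3]
VQ = [1.0, 1.0, 1.0, 1.0]
VD = [0.0, 1.0 / 16, 1.0 / 256, 1.0 / 4096]
mlmc = optimize_hierarchy(0.05, W, B, VQ, VD)
mc = optimize_hierarchy(0.05, W, B, VQ, VD, mc_only=True)
print("MLMC:", mlmc)
print("MC:  ", mc)
```

The splitting parameter \(\varphi =1-B_L/\text {TOL}\) is not chosen explicitly but falls out of the selected finest level, which is what "implicitly obtained" refers to in the discussion of \(\varphi \).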

Observations regarding the suggested MLMC and MC parameters. Recalling that we expect \(\gamma =3\), \({q_{w}}=2\), and \({q_{s}}=4\) asymptotically for both \({\mathscr {Q}}_E\) and \({\mathscr {Q}}_W\), and that this should lead to the asymptotic complexities \(W_{\text {MC}}\propto \text {TOL}^{-3.5}\) and \(W_{\text {MLMC}}\propto \text {TOL}^{-2}\) as \(\text {TOL}\rightarrow 0\), we show the predicted work for both MC and MLMC together with these asymptotic rates in Fig. 9. In both cases, the predicted MLMC work clearly grows at the asymptotically expected rate. This is the optimal rate for Monte Carlo type methods, since it is the rate obtained for MC sampling when samples can be generated at unit cost, independently of \(\text {TOL}\).
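The two asymptotic rates quoted above follow from the standard MLMC complexity result, with the bias decaying as \(h^{q_w}\), the variance of the corrections as \(h^{q_s}\), and the cost per sample growing as \(h^{-\gamma }\). A small sketch that evaluates the exponents, ignoring logarithmic factors; the function name is hypothetical.

```python
def complexity_exponents(gamma, q_w, q_s):
    """Exponents p_MC, p_MLMC with work ~ TOL**(-p) as TOL -> 0, for a bias
    ~ h**q_w, correction variance ~ h**q_s and cost per sample ~ h**(-gamma).
    Logarithmic factors (present when q_s == gamma) are ignored."""
    p_mc = 2.0 + gamma / q_w
    if q_s >= gamma:
        p_mlmc = 2.0
    else:
        p_mlmc = 2.0 + (gamma - q_s) / q_w
    return p_mc, p_mlmc


print(complexity_exponents(3, 2, 4))  # → (3.5, 2.0)
```

Since \({q_{s}}=4>\gamma =3\) here, the variance of the corrections decays faster than the cost grows, and the MLMC work is dominated by the coarsest levels.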

Comparing Fig. 10 (showing the refinement level) with Fig. 9 shows that there are ranges of values of \(\text {TOL}\) in which the predicted work for MC first grows approximately as \(\text {TOL}^{-2}\), and then faster towards the end of each range. These ranges correspond to values of \(\text {TOL}\) resulting in the same refinement level, so that the cost per sample and the bias estimate are independent of \(\text {TOL}\) within each range. As noted above, in this pre-asymptotic regime, the apparent convergence of \({\text {E}\left[ {\mathscr {Q}}_{*,\ell }\right] }\) with respect to \(\ell \) is faster than the asymptotically expected rate, \({q_{w}}=2\). Consequently, \(\varDelta x\) and \(\varDelta t\) need to be decreased more slowly as \(\text {TOL}\) decreases, and the MC work grows at a slower rate than the asymptotic estimate.
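A short calculation explains the pattern within each range, assuming that the bias estimate \(B\) of the fixed level uses the share \((1-\varphi )\text {TOL}\) and the remaining \(\text {TOL}-B\) goes to the statistical error. The number of samples, and hence the MC work, then behaves like \((\text {TOL}-B)^{-2}\), with local growth exponent \(-\text {d}\log W/\text {d}\log \text {TOL}=2\,\text {TOL}/(\text {TOL}-B)\). This is close to 2 when \(\text {TOL}\gg B\) and grows without bound as \(\text {TOL}\) approaches \(B\). The sketch checks the formula against a finite difference; the names and numbers are hypothetical.

```python
import math


def mc_work_within_level(tol, bias, work_per_sample=1.0, c_xi=2.0, var=1.0):
    """MC work on a fixed level whose bias uses up part of TOL; the rest,
    TOL - bias, is left for the statistical error C_xi * sqrt(var / N)."""
    n = (c_xi / (tol - bias)) ** 2 * var   # continuous sample count
    return n * work_per_sample


def local_exponent(tol, bias):
    """-d log W / d log TOL for W ~ (TOL - bias)**(-2)."""
    return 2.0 * tol / (tol - bias)


# Finite-difference check at a hypothetical point with TOL close to the bias.
tol, bias, eps = 1.2e-3, 1.0e-3, 1e-7
fd = -(math.log(mc_work_within_level(tol * (1 + eps), bias))
       - math.log(mc_work_within_level(tol * (1 - eps), bias))) / (
           math.log(1 + eps) - math.log(1 - eps))
print(local_exponent(tol, bias), fd)
```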

Fig. 11 Splitting parameter, \(\varphi \), in (25), implicit in the MLMC and MC estimators listed in Table 9 for \({\mathscr {Q}}_E\) (left) and Table 11 for \({\mathscr {Q}}_W\) (right). The dashed line denotes the asymptotically optimal value \(\varphi =4/7\) for MC as \(\text {TOL}\rightarrow 0\), given the asymptotic work and convergence rates.

This faster apparent weak convergence rate is also reflected in the value of the splitting parameter, \(\varphi \) in (25), implicitly obtained through the brute force optimization in Algorithm 1; see Fig. 11. Given the asymptotic rates of work per sample, \(\gamma =3\), and of weak convergence, \({q_{w}}=2\), the optimal splitting for MC is \(\varphi _\text {MC}=4/7\) according to (34), whereas for \(\text {TOL}\) in the given range the observed \(\varphi \) is typically closer to 1 due to the fast decay of the bias estimate. For MLMC, in contrast, we expect \(\varphi \rightarrow 1\) as \(\text {TOL}\rightarrow 0\), given \(\gamma =3\), \({q_{w}}=2\), and \({q_{s}}=4\).
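The value \(\varphi _\text {MC}=4/7\) can be checked numerically. Assuming that the bias of the single MC level satisfies \(h^{q_w}\propto (1-\varphi )\text {TOL}\), that the number of samples scales as \((\varphi \,\text {TOL})^{-2}\), and that the cost per sample scales as \(h^{-\gamma }\), the MC work is proportional to \(\varphi ^{-2}(1-\varphi )^{-\gamma /q_w}\,\text {TOL}^{-2-\gamma /q_w}\). Minimizing over \(\varphi \) gives \(\varphi _\text {MC}=2q_w/(2q_w+\gamma )\), which equals 4/7 for \(\gamma =3\), \({q_{w}}=2\), consistent with (34). The sketch compares a golden-section search with this closed form; the function names are hypothetical.

```python
import math


def mc_log_work(phi, gamma, q_w):
    """log of phi**-2 * (1 - phi)**(-gamma / q_w), the phi-dependent factor of
    the MC work when the bias uses (1 - phi)*TOL and statistics phi*TOL."""
    return -2.0 * math.log(phi) - (gamma / q_w) * math.log(1.0 - phi)


def optimal_phi_mc(gamma, q_w, iters=200):
    """Golden-section search on (0, 1); the objective is convex there."""
    a, b = 1e-9, 1.0 - 1e-9
    g = (math.sqrt(5.0) - 1.0) / 2.0
    for _ in range(iters):
        c, d = b - g * (b - a), a + g * (b - a)
        if mc_log_work(c, gamma, q_w) < mc_log_work(d, gamma, q_w):
            b = d
        else:
            a = c
    return 0.5 * (a + b)


def closed_form_phi_mc(gamma, q_w):
    return 2.0 * q_w / (2.0 * q_w + gamma)


print(optimal_phi_mc(3, 2), closed_form_phi_mc(3, 2), 4 / 7)
```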

Predicted savings of MLMC compared to MC. We recall from Fig. 9 that MLMC provides significant savings compared to MC even in the range of tolerances where the work of MC grows more slowly than its predicted asymptotic rate as \(\text {TOL}\rightarrow 0\). For example, for the finest tolerance in Table 9, MLMC is predicted to reduce the work of MC by about \(97\%\), and for the finest tolerance in Table 11, by about \(78\%\). This is also illustrated in Fig. 12.

5.2.3 MLMC and MC runs

Here, we present computational results based on the actual MLMC and MC runs performed with the parameters listed in Table 9, for \({\mathscr {Q}}_E\), and Table 11, for \({\mathscr {Q}}_W\).

On the use of parameters estimated in the verification run. Note that we use information from the verification run when we set up the convergence tests. This is in line with the intended use of MLMC in the inverse problem setting, which involves repeatedly computing approximate solutions to the underlying forward problem with different parameter values in the course of solving the inverse problem, so that prior information about the parameters from earlier runs becomes available. Additionally, a continuation-type algorithm (Collier et al. 2015) can be used in the inverse problem setting.