Abstract

This research proposes a method for optimizing process performance that uses multiple quality characteristics, fuzzy logic, and radial basis function neural networks (RBFNNs). In the method, each quality characteristic is transformed into a signal-to-noise ratio, and all the ratios are then provided as inputs to a fuzzy model to obtain a single comprehensive output measure (COM). An RBFNN is then used to predict the COM values for all runs of a full factorial design. Finally, the average COM values are calculated for each factor level, and for each factor, the level that maximizes the average COM value is identified as the optimal level. Three case studies are presented to illustrate the method; in all of them, the proposed method affords the largest total anticipated improvement in multiple quality responses compared with previously used methods, including the fuzzy, grey-Taguchi, Taguchi, and principal component analysis methods. The main advantages of the proposed method are its effectiveness in obtaining globally optimal factor levels, its ease of application with little computational effort, and its efficiency in improving performance. In conclusion, the proposed method may enable practitioners to optimize process performance by using multiple quality characteristics.

1 Introduction

Over the past few years, the optimization of multiple responses of a product or process has received considerable attention as a technique that can help companies survive today’s intense competition (Çakıroğlu and Acır 2013; Otebolaku and Andrade 2015). The traditionally widely used Taguchi method is applicable only to the optimization of a single quality characteristic of a product or process (Li et al. 2008; Dasgupta et al. 2014). Consequently, several methods have been proposed for optimizing process performance with multiple quality responses, including data envelopment analysis (Al-Refaie et al. 2009), fuzzy regression (Al-Refaie 2013), artificial neural networks (Zăvoianu et al. 2013; Chen 2015; Wu and Chen 2015), fuzzy methods (Bose et al. 2013), the utility method (Sivasakthivel et al. 2014), and goal programming (Al-Refaie and Li 2011; Al-Refaie et al. 2014). Nevertheless, these methods have several limitations, such as relying on nonparametric evaluation, handling only a limited number of experiments, achieving only local optimality, and requiring good mathematical skills. These limitations continue to motivate researchers to develop more efficient methods for solving the multiresponse problem in robust design.

In the Taguchi method, finding the combination of optimal factor settings that optimizes process performance is critical. Although several methods have been proposed in the literature, they have limitations (Al-Refaie 2014a, b, c, d, 2015; Al-Refaie et al. 2012), including (1) providing only local optima, as in fuzzy logic and regression methods; (2) being nonparametric, as in the utility concept and grey relational analysis; (3) ignoring the relationship between process settings and responses; (4) failing to achieve sufficient concurrent improvements in multiple responses; and (5) requiring mathematical skills such as knowledge of regression techniques. Accordingly, the present research extends ongoing research by proposing an approach for optimizing process performance by using multiple quality responses, fuzzy logic, and artificial neural network (ANN) techniques. The remainder of this paper is organized as follows. “Fuzzy logic and ANN techniques in the optimization procedure” introduces fuzzy logic, ANN techniques, and the optimization procedure. “Illustrations” provides illustrative case studies. “Research results” summarizes the research results. Finally, the conclusions are presented in “Conclusions”.

2 Fuzzy logic and ANN techniques in the optimization procedure

2.1 Fuzzy logic

The fuzzy logic principle is widely used to handle vague and uncertain information. Two types of fuzzy systems are commonly used: Takagi–Sugeno (T–S) and Mamdani fuzzy systems. Mamdani fuzzy systems are special cases of T–S fuzzy systems, whose rules involve mathematical expressions containing a linear function (Lilly 2010). The architecture of a fuzzy system is shown in Fig. 1 (Lilly 2010). The functions of a fuzzy system include fuzzification, which depends on the membership function (MF); rule evaluation; definition of the output MF; setting of the fuzzy rules; and defuzzification, which transforms the fuzzy value into a crisp, comprehensible output measure (Sun and Hsueh 2011; de Pontes et al. 2012).

In the proposed method, the center of gravity (COG) defuzzification method is used. Suppose two input variables are to be converted into a single comprehensive output measure (COM) by using Mamdani’s fuzzy inference method, which sets two MFs, low and high, for each input. Let the range of input 1 be [A, D] and the range of input 2 be [B, C]. The fuzzification of the input variables is then as shown in Fig. 2. Generally, the rules that relate the two inputs to the output are set as follows:

If input 1 is Low and input 2 is Low then the output is Low.

If input 1 is Low and input 2 is High then the output is Normal.

If input 1 is High and input 2 is Low then the output is Normal.

If input 1 is High and input 2 is High then the output is High.

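Each rule is evaluated by combining the membership grades of its antecedents with the AND operator: for two membership grades a and b, a ∧ b denotes min(a, b). For example, the firing strength of the first rule is

$$\mu_{\text{low}}(\text{input 1}) \wedge \mu_{\text{low}}(\text{input 2}) = \min\left(\mu_{\text{low}}(\text{input 1}), \mu_{\text{low}}(\text{input 2})\right).$$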

The MFs for the output are then set as shown in Fig. 3, where S and Q are in the range (0, 1). Finally, output defuzzification is performed by using the COG method to compute the COM value, as shown in Fig. 4.
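To make the two-input example concrete, the following Python sketch runs the four rules above through Mamdani inference: linear Low/High input MFs over the ranges [A, D] and [B, C], min for AND, clipping of each rule's output MF at its firing strength, max aggregation, and a discrete COG. The triangular Low, Normal, and High output MFs and the 101-point output grid are assumptions standing in for Fig. 3, whose break points S and Q are not given numerically; the function names are illustrative.

```python
# Hypothetical output MFs on the COM universe [0, 1] (feet, peak, feet);
# they stand in for Fig. 3, whose break points S and Q are not given.
OUTPUT_SETS = {
    "Low": (0.0, 0.0, 0.5),
    "Normal": (0.0, 0.5, 1.0),
    "High": (0.5, 1.0, 1.0),
}

# The four rules listed above: (input 1 label, input 2 label, output label).
RULES = [
    ("Low", "Low", "Low"),
    ("Low", "High", "Normal"),
    ("High", "Low", "Normal"),
    ("High", "High", "High"),
]


def low_mf(x, lo, hi):
    """Linear 'Low' MF: 1 at the range minimum, 0 at the range maximum."""
    return min(1.0, max(0.0, (hi - x) / (hi - lo)))


def high_mf(x, lo, hi):
    """Linear 'High' MF: 0 at the range minimum, 1 at the range maximum."""
    return min(1.0, max(0.0, (x - lo) / (hi - lo)))


def tri_mf(f, a, b, c):
    """Triangular MF with feet a and c and peak b (a == b gives a shoulder)."""
    if f < a or f > c:
        return 0.0
    if f <= b:
        return 1.0 if b == a else (f - a) / (b - a)
    return (c - f) / (c - b)


def mamdani_com(x1, x2, range1, range2, steps=101):
    """Two-input Mamdani inference with min-AND, max aggregation, and COG."""
    mu1 = {"Low": low_mf(x1, *range1), "High": high_mf(x1, *range1)}
    mu2 = {"Low": low_mf(x2, *range2), "High": high_mf(x2, *range2)}
    # Firing strength of each rule: AND = min of the antecedent memberships.
    fired = [(min(mu1[a], mu2[b]), out) for a, b, out in RULES]
    num = den = 0.0
    for k in range(steps):
        f = k / (steps - 1)
        # Clip each rule's output MF at its firing strength, aggregate with max.
        mu = max(min(w, tri_mf(f, *OUTPUT_SETS[out])) for w, out in fired)
        num += f * mu
        den += mu
    return num / den


# Example with hypothetical ranges [A, D] = [10, 30] and [B, C] = [0, 10].
print(mamdani_com(20.0, 5.0, (10.0, 30.0), (0.0, 10.0)))
```

With both inputs at the midpoints of their ranges, all four rules fire at 0.5 and the aggregated output MF is flat, so the COM is 0.5; with both inputs at their maxima, only the High rule fires and the COM moves to about 0.84.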

Several studies have used the fuzzy logic approach for optimizing performance by considering multiple quality characteristics (Azadeh et al. 2011; Sun et al. 2012; Mandic et al. 2014).

2.2 Artificial neural networks

ANNs are soft computing techniques inspired by certain aspects of human behavior; they consist of a finite number of layers of neurons, which serve as the computing elements. The capabilities of an ANN are stored in the inter-unit connection strengths, or weights, which are tuned during the learning process (Asiltürk and Çunkaş 2011; Moosavi and Soltani 2013). The most widespread type of ANN consists of input, hidden, and output layers. The input and output layers represent the variables, and the hidden layer represents the relationship between the input and output variables. The weights are initialized randomly and are then updated, for each input pattern, so that the predicted responses have smaller errors (Almonacid et al. 2011). In many types of ANNs, such as the back-propagation neural network (BPNN), the weights used in the approximation can be adjusted by gradient descent, the Levenberg–Marquardt algorithm, the Broyden–Fletcher–Goldfarb–Shanno algorithm, or the resilient back-propagation algorithm. The multilayer perceptron model is mainly based on the BPNN. The drawbacks of the BPNN are overfitting and premature decisions that result from convergence to local rather than global minima; moreover, a BPNN model converges slowly. In the BPNN, the number of neurons must be fixed before training, and a wide range of inputs can be covered because sigmoid neurons are used in the hidden layer (Xia et al. 2010). By contrast, the radial basis function neural network (RBFNN) can approximate the desired outputs without requiring a mathematical expression of the relationship between the outputs and the inputs; hence, RBFNNs are called model-free estimators. Because an RBFNN has only one hidden layer, it can also achieve a high convergence speed. The RBFNN architecture is shown in Fig. 5 (Peng et al. 2014).
In Fig. 5, \(U_{d}\) and \(y_{i}\) are the inputs and outputs of the RBFNN for the training data, where d = 1, …, D indexes the controllable factors and i = 1, …, n indexes the experiments; \(G_{l}\) represents the Gaussian function of hidden node l, where l = 1, …, L; and \(w_{lz}\) is the weight. Unlike the BPNN, the RBFNN does not suffer from the problem of local minima. Because of these advantages and powerful features, the RBFNN is widely used for nonlinear function approximation and pattern classification. The RBFNN has further advantages: local and optimal approximations can be obtained; it has a high convergence rate, high precision, and an adaptive structure; and its output is independent of the initial weight values (Javan et al. 2013). In the RBFNN, the neurons in the hidden layer use a radial basis function as the activation function, and the network output vector is a linear combination of the outputs of the basis functions. If a Gaussian function is chosen as the radial basis function, the outputs can have the following form:

where \(\hat{U}(s) = [\hat{U}_{1}(s), \ldots, \hat{U}_{m}(s)]^{T}\) is the input vector, \(\hat{y}_{z}(s + 1)\) is the zth output, l indexes the hidden neurons, and \(w_{lz}\) is the weight linking hidden neuron l to the zth output neuron. Furthermore, L denotes the number of Gaussian functions, which is equal to the number of hidden-layer nodes; \(\varphi_{l}\) represents the Gaussian function of hidden node l; \(m_{l}\) is the width of the Gaussian function; and \(\sigma_{l}\) represents the standard deviation of \(u_{l}\). In the training algorithm applied to the initial RBFNN, many iterations are performed to find the optimal values of \(w_{lz}\) and \(m_{l}\); therefore, the initial structure of the network must be known. The input vectors are used to determine the basis functions, and then both input and output data are used to set the weights connected to the output layer. This two-stage training methodology avoids the problem of local minima, and the RBFNN has a high convergence rate (Tsai 2014).
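The output computation described above can be sketched in Python as follows: each hidden node applies a Gaussian to the distance between the input vector and its center, and each output is the weighted sum of the hidden-node activations through \(w_{lz}\). The sketch assumes the common parameterization exp(−‖u − c_l‖²/(2σ_l²)) with an explicit center c_l and width σ_l per node; these symbols and the function names are illustrative rather than the paper's notation.

```python
import math


def gaussian(u, center, sigma):
    """Gaussian basis function exp(-||u - center||^2 / (2 sigma^2))."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(u, center))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))


def rbf_forward(u, centers, sigmas, weights):
    """Outputs y_z = sum_l w[l][z] * phi_l(u) of a one-hidden-layer RBFNN.

    weights[l][z] plays the role of w_lz, linking hidden node l to output z.
    """
    phi = [gaussian(u, c, s) for c, s in zip(centers, sigmas)]
    n_outputs = len(weights[0])
    return [sum(p * w[z] for p, w in zip(phi, weights)) for z in range(n_outputs)]


# Two hidden nodes, two outputs; the input sits on the first node's center,
# far from the second, so the outputs are essentially that node's weights.
print(rbf_forward((0.0, 0.0), [(0.0, 0.0), (10.0, 10.0)], [1.0, 1.0],
                  [[2.0, -1.0], [5.0, 3.0]]))
```

Because the output layer is linear in the weights, fixing the centers and widths first turns the second training stage into a linear problem, which is the two-stage idea mentioned in the text.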

RBFNNs have been widely used in many applications (Tatar et al. 2013; Chen et al. 2013). Furthermore, several studies have employed the ANN approach for optimizing performance by using multiple quality characteristics. Furtuna et al. (2011) used the ANN method along with a genetic algorithm to optimize the polysiloxane synthesis process. Lin et al. (2012) conducted parameter optimization of a solar energy selective absorption film continuous sputtering process by using Taguchi methods, neural networks, a desirability function, and genetic algorithms. Marvuglia et al. (2014) proposed a new approach combining a fuzzy logic controller and ANNs for the dynamic and automatic regulation of indoor temperature.

2.3 The optimization procedure

In the Taguchi method, the columns of the orthogonal array (OA) represent the controllable factors to be studied, and the rows represent combinations of factor levels used in experiments. Typically, there are three types of quality characteristics: smaller-the-better (STB), larger-the-better (LTB), and nominal-the-best (NTB) responses. The proposed approach for optimizing process performance by using multiple quality characteristics is illustrated in Fig. 6 and is outlined as follows:

Step 1: Assume that in an OA, L controllable factors are studied by conducting n experiments to improve J quality characteristics, as shown in Table 1; \(y_{ijk}\) is the kth replicate of the jth response in the ith experiment, where i = 1, …, n; j = 1, …, J; and k = 1, …, K. Compute the signal-to-noise ratio (SNR) \(\eta_{ij}\) for the jth response in experiment i by using the appropriate formula as follows. For an STB-type quality characteristic, \(\eta_{ij}\) is calculated as (Al-Refaie 2010, 2011)

$$\eta_{ij} = -10\log_{10}\left(\frac{1}{K}\sum_{k=1}^{K} y_{ijk}^{2}\right)$$(2)

For an NTB-type quality characteristic, \(\eta_{ij}\) is calculated as

$$\eta_{ij} = 10\log_{10}\left(\frac{\bar{y}_{ij}^{2}}{s_{ij}^{2}}\right)$$(3)

where \(\bar{y}_{ij}\) and \(s_{ij}\) are the estimated average and standard deviation of response j in experiment i, respectively. For an LTB-type quality characteristic, \(\eta_{ij}\) is calculated as

$$\eta_{ij} = -10\log_{10}\left(\frac{1}{K}\sum_{k=1}^{K} \frac{1}{y_{ijk}^{2}}\right)$$(4)

The value of \(\eta_{ij}\) is computed for each response in experiment i, as shown in Table 2.
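The three SNR formulas can be sketched in Python as follows. The functions assume that all K replicates of a response are passed as a list, that LTB responses are nonzero, and that NTB responses have at least two unequal replicates; using `statistics.stdev` (the sample standard deviation) for s is an assumption, and `eta_table` is a hypothetical helper that builds a Table 2-style matrix.

```python
import math
import statistics


def snr_stb(ys):
    """Smaller-the-better: -10 log10((1/K) * sum of y^2)."""
    return -10.0 * math.log10(sum(y * y for y in ys) / len(ys))


def snr_ltb(ys):
    """Larger-the-better: -10 log10((1/K) * sum of 1/y^2); y must be nonzero."""
    return -10.0 * math.log10(sum(1.0 / (y * y) for y in ys) / len(ys))


def snr_ntb(ys):
    """Nominal-the-best: 10 log10(ybar^2 / s^2); needs >= 2 unequal replicates."""
    ybar = statistics.mean(ys)
    s = statistics.stdev(ys)  # sample standard deviation
    return 10.0 * math.log10(ybar * ybar / (s * s))


SNR = {"STB": snr_stb, "LTB": snr_ltb, "NTB": snr_ntb}


def eta_table(y, kinds):
    """y[i][j] holds the K replicates of response j in experiment i.

    kinds[j] is "STB", "LTB", or "NTB"; returns eta[i][j].
    """
    return [[SNR[kind](reps) for reps, kind in zip(row, kinds)] for row in y]


# One experiment, two hypothetical single-replicate responses (STB, LTB).
print(eta_table([[[10.0], [10.0]]], ["STB", "LTB"]))
```

For the classification used in Case study 3.1, the call would be `eta_table(y, ["LTB", "STB", "STB"])` for the MRR, EWR, and SR columns.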

Step 2: Convert the multiple quality characteristics into a single response by using fuzzy logic (specifically, Mamdani-style fuzzy inference) with linear input and output MFs. The inputs are the \(\eta_{ij}\) values, and the outputs are the \(COM_{i}\) values. The Mamdani-style fuzzy inference process is performed in four stages:

(1) Fuzzification of the inputs

Define the MF for each quality characteristic using the corresponding \(\eta_{ij}\) values. Let \(G_{i1}, G_{i2}, \ldots, G_{iJ}\) represent the fuzzy subsets defined by the MFs \(\mu_{G_{i1}}, \mu_{G_{i2}}, \ldots, \mu_{G_{iJ}}\). Use the minimum and maximum values of \(\eta_{ij}\) to generate the MF for each quality characteristic, as shown in Fig. 7.

(2) Rule evaluation

Generate the fuzzy rules that relate the inputs to the output. The fuzzy rule base consists of a set of J inputs, one output measure F, and T rules; for example, for the ith experiment, the rules may be formulated as follows:

Rule 1: If \(\eta_{i1}\) is \(G_{11}\) and \(\eta_{i2}\) is \(G_{12}\) … and \(\eta_{iJ}\) is \(G_{1J}\), then \(F_{1}\) is \(M_{1}\); else

Rule 2: If \(\eta_{i1}\) is \(G_{21}\) and \(\eta_{i2}\) is \(G_{22}\) … and \(\eta_{iJ}\) is \(G_{2J}\), then \(F_{2}\) is \(M_{2}\); else

⋮

Rule T: If \(\eta_{i1}\) is \(G_{T1}\) and \(\eta_{i2}\) is \(G_{T2}\) … and \(\eta_{iJ}\) is \(G_{TJ}\), then \(F_{T}\) is \(M_{T}\).

(3) Aggregation of the rule outputs

From the fuzzy rules, the MFs of the output are identified. For example, \(M_{t}\), obtained from rule t, represents the fuzzy subset defined by the MF \(\mu_{M_{t}}\). To compute \(M_{t}\) for each rule t, the \(\eta_{ij}\) value of each quality characteristic is used as an input variable of the rules. The fuzzy reasoning of the rules yields the output by using the max–min composition operation. The MF of the output of fuzzy reasoning can be expressed as

$$\mu_{C_{0}}(F) = \left( \mu_{G_{11}}(\eta_{i1}) \wedge \mu_{G_{12}}(\eta_{i2}) \wedge \cdots \wedge \mu_{G_{1J}}(\eta_{iJ}) \wedge \mu_{M_{1}}(F_{1}) \right) \vee \cdots \vee \left( \mu_{G_{T1}}(\eta_{i1}) \wedge \mu_{G_{T2}}(\eta_{i2}) \wedge \cdots \wedge \mu_{G_{TJ}}(\eta_{iJ}) \wedge \mu_{M_{T}}(F_{T}) \right)$$(5)

where ∧ is the minimum operation used for the fuzzy AND and ∨ is the maximum operation used for the fuzzy OR. Figure 8 shows the fuzzy value of each quality characteristic in experiment i. For example, if two quality characteristics are studied and the first rule requires both to be low, then the output of that rule is low, and its firing strength is \(\min(\mu_{\text{low}}(\eta_{i1}), \mu_{\text{low}}(\eta_{i2}))\).

(4) Defuzzification

A defuzzification method is used to convert the fuzzy inference output \(\mu_{C_{0}}\) into a nonfuzzy value \(COM_{i}\). The conversion is performed using the COG method; the larger the COM value, the better the performance. For each experiment i, the \(COM_{i}\) value is calculated using the following equation, and it is displayed in Fig. 9.

$$COM_{i} = \frac{\sum_{F} F\,\mu_{C_{0}}(F)}{\sum_{F} \mu_{C_{0}}(F)}$$(6)
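Stages (1) to (4) can be chained into one computation per experiment. The Python sketch below assumes linear low/high input MFs spanning the minimum and maximum \(\eta_{ij}\) of each characteristic (one reading of Fig. 7), evaluates each rule with min, aggregates with max as in Eq. (5), and applies Eq. (6) on a uniform 1001-point grid over an assumed COM universe of [0, 1]. The two-input rule base mirrors the example rules of Section 2.1, while the three triangular output MFs and the `ETA` values are hypothetical; the case studies use their own rule tables and four or five output subsets.

```python
def fuzzify_column(etas):
    """Low/high memberships of one characteristic's eta values.

    Assumes linear MFs over [min, max]: 'high' rises from 0 to 1 and
    'low' is its complement.
    """
    lo, hi = min(etas), max(etas)
    if hi == lo:
        raise ValueError("identical eta values give a degenerate MF range")
    out = []
    for x in etas:
        high = (x - lo) / (hi - lo)
        out.append({"low": 1.0 - high, "high": high})
    return out


def firing_strength(antecedents, memberships):
    """AND (minimum) over the J antecedents of one rule."""
    return min(memberships[j][label] for j, label in enumerate(antecedents))


def aggregated_mf(f, memberships, rules, output_mfs):
    """Eq. (5): OR (maximum) over rules of min(firing strength, mu_Mt(f))."""
    return max(
        min(firing_strength(antecedents, memberships), output_mfs[out](f))
        for antecedents, out in rules
    )


def cog(mu, steps=1001):
    """Eq. (6) on a uniform grid over the assumed COM universe [0, 1]."""
    grid = [k / (steps - 1) for k in range(steps)]
    weights = [mu(f) for f in grid]
    return sum(f * w for f, w in zip(grid, weights)) / sum(weights)


def com_values(eta, rules, output_mfs):
    """eta[i][j] -> COM_i for every experiment i."""
    columns = [fuzzify_column(col) for col in zip(*eta)]
    result = []
    for i in range(len(eta)):
        memberships = [col[i] for col in columns]
        result.append(cog(lambda f: aggregated_mf(f, memberships, rules, output_mfs)))
    return result


# Hypothetical output MFs and the Section 2.1 rule base for J = 2.
OUTPUT_MFS = {
    "low": lambda f: max(0.0, 1.0 - 2.0 * f),
    "normal": lambda f: max(0.0, 1.0 - abs(2.0 * f - 1.0)),
    "high": lambda f: max(0.0, 2.0 * f - 1.0),
}
RULES = [
    (("low", "low"), "low"),
    (("low", "high"), "normal"),
    (("high", "low"), "normal"),
    (("high", "high"), "high"),
]
ETA = [[10.0, 1.0], [20.0, 3.0], [10.0, 3.0]]  # hypothetical eta_ij values

print(com_values(ETA, RULES, OUTPUT_MFS))
```

In this toy input, the first experiment sits at the low end of both characteristics, the second at the high end of both, and the third is low on the first and high on the second, so their COM values come out near 0.17, 0.83, and 0.5.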

Step 3: To obtain the global optimal solution, the RBFNN technique is used to generate the full data set of COM values from the input and output matrices: the OA is used as the input matrix, and the COM values are used as the output matrix. The trained network then predicts the COM value of every run of the full factorial design. Table 3 shows the layout of the two matrices used as the training data and the predicted data for the full factorial design, where n and H are the number of experiments in the OA and the number of full factorial runs, respectively. In this approach, the RBFNN is designed with the newrbe function, which builds a two-layer network; the spread constant is set to one. The number of neurons equals the number of input vectors, that is, the number of experiments. The first layer applies a nonlinear function (a Gaussian activation function), and the second layer applies a linear function.
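Step 3 can be approximated in plain Python as follows. The sketch mimics the documented behavior of MATLAB's newrbe (one Gaussian neuron per training run, centered on that run, with an output of 0.5 at a distance equal to the spread constant) but, unlike newrbe, solves for the output weights without an output-layer bias. The L9 array is the standard L9(3^4) orthogonal array; its COM values are hypothetical placeholders for Step 2 results, and the function names are illustrative.

```python
import itertools
import math


def solve_linear(a, b):
    """Solve a x = b with Gaussian elimination and partial pivoting."""
    n = len(a)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def fit_exact_rbf(X, y, spread=1.0):
    """One Gaussian neuron per training run; weights solved for an exact fit."""
    # exp(-(scale * d)^2) equals 0.5 when the distance d equals `spread`.
    scale = math.sqrt(math.log(2.0)) / spread

    def phi(u, center):
        return math.exp(-(scale * math.dist(u, center)) ** 2)

    gram = [[phi(xi, xj) for xj in X] for xi in X]
    w = solve_linear(gram, y)
    return lambda u: sum(wj * phi(u, xj) for wj, xj in zip(w, X))


# Standard L9(3^4) orthogonal array; the COM values are hypothetical.
L9 = [
    (1, 1, 1, 1), (1, 2, 2, 2), (1, 3, 3, 3),
    (2, 1, 2, 3), (2, 2, 3, 1), (2, 3, 1, 2),
    (3, 1, 3, 2), (3, 2, 1, 3), (3, 3, 2, 1),
]
COM = [0.42, 0.55, 0.61, 0.38, 0.47, 0.66, 0.51, 0.59, 0.44]

predict = fit_exact_rbf(L9, COM, spread=1.0)
full_factorial = list(itertools.product([1, 2, 3], repeat=4))  # H = 81 runs
full_com = [predict(run) for run in full_factorial]
```

Because the network interpolates the training runs exactly, the predicted COM values at the nine OA rows equal the inputs; the remaining 72 runs are the model's predictions.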

Step 4: Calculate the average COM values for each factor level, as shown in Table 4. The level that has the largest average COM value is identified as the optimal level for the factor.
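Step 4 reduces to grouping runs by level and averaging. A minimal Python sketch follows, with hypothetical function names, where `runs` would be the full factorial combinations and `com` their predicted COM values; ties are resolved in favor of the lower level.

```python
from collections import defaultdict


def level_averages(runs, com):
    """Average COM per level of every factor: result[f][level] -> mean COM."""
    averages = []
    for f in range(len(runs[0])):
        groups = defaultdict(list)
        for run, value in zip(runs, com):
            groups[run[f]].append(value)
        averages.append({lvl: sum(v) / len(v) for lvl, v in sorted(groups.items())})
    return averages


def optimal_levels(runs, com):
    """For each factor, pick the level with the largest average COM."""
    return [max(avg, key=avg.get) for avg in level_averages(runs, com)]


# Hypothetical 2 x 2 full factorial in which COM rises with factor 1
# and falls with factor 2.
runs = [(1, 1), (1, 2), (2, 1), (2, 2)]
com = [0.0, -1.0, 1.0, 0.0]
print(optimal_levels(runs, com))  # [2, 1]
```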

Step 5: Compare the total anticipated improvement in the quality responses obtained using the proposed approach with those obtained using previously used approaches. The anticipated improvement in each quality response is calculated by subtracting the sum of average SNRs for the combination of initial factor levels from that for the combination of optimal factor levels. Furthermore, calculate the total anticipated improvement in multiple responses and then compare the results.
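The anticipated improvement can be sketched in Python as the sum of level-average SNRs at the optimal combination minus the same sum at the initial combination, computed per response and then summed. The function names and the toy data are hypothetical; the level averages are taken over whatever runs are passed in.

```python
def level_means(runs, eta):
    """Mean SNR per level for each factor, computed over the given runs."""
    means = []
    for f in range(len(runs[0])):
        sums, counts = {}, {}
        for run, value in zip(runs, eta):
            sums[run[f]] = sums.get(run[f], 0.0) + value
            counts[run[f]] = counts.get(run[f], 0) + 1
        means.append({lvl: sums[lvl] / counts[lvl] for lvl in sums})
    return means


def sum_of_level_means(means, combo):
    """Sum of the average SNRs of the levels in one factor-level combination."""
    return sum(means[f][lvl] for f, lvl in enumerate(combo))


def anticipated_improvement(runs, eta, initial, optimal):
    """Optimal-combination sum minus initial-combination sum (dB)."""
    means = level_means(runs, eta)
    return sum_of_level_means(means, optimal) - sum_of_level_means(means, initial)


def improvements(runs, eta_columns, initial, optimal):
    """Per-response improvements and their sum over all responses."""
    per_response = [anticipated_improvement(runs, col, initial, optimal)
                    for col in eta_columns]
    return per_response, sum(per_response)


# Hypothetical 2 x 2 design with two responses.
runs = [(1, 1), (1, 2), (2, 1), (2, 2)]
print(improvements(runs, [[10.0, 12.0, 14.0, 18.0], [5.0, 5.0, 5.0, 5.0]],
                   (1, 1), (2, 2)))  # ([8.0, 0.0], 8.0)
```

Applied to the sums reported in Section 4.1 (49.458, −39.041, and 25.680 dB at the initial setting; 33.396, −17.464, and 62.124 dB for V1C1DF1T3), the same optimal-minus-initial subtraction gives −16.062, 21.577, and 36.444 dB, the improvements reported there.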

3 Illustrations

Three real case studies from previous studies are used to illustrate the proposed method.

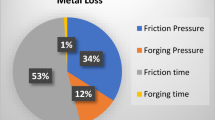

3.1 Optimization of process parameters in electro-erosion

Muthuramalingam and Mohan (2014) optimized the parameters of an electro-erosion process by using three quality characteristics: material removal rate (MRR, mm³/min), electrode wear rate (EWR, mm³/min), and surface roughness (SR, μm). The parameters are shown in Table 5, and the experimental results of the L27 array are shown in Table 6. In this case study, the EWR and SR are STB-type and the MRR is an LTB-type quality characteristic. The proposed method is then employed as follows:

Step 1: The \(\eta_{ij}\) values are calculated for each of the three quality responses for the 27 experiments by using the appropriate formula from Eqs. (2)–(4). Table 6 lists the obtained results.

Step 2: Mamdani-style fuzzy inference is adopted to convert the three quality characteristics into a single response. First, the \(\eta_{ij}\) values of the MRR, EWR, and SR are fuzzified by assigning two fuzzy subsets, low and high, to each of them. Table 7 displays the high and low representations for \(\eta_{i2}\) of the SR response as an example, and Fig. 10 displays the MFs for the three quality responses. The rules that relate the MFs of the responses to the output are shown in Table 8. Next, the rule outputs are aggregated, and four fuzzy subsets are assigned to the output COM value, as shown in Fig. 11. Finally, the fuzzy output value is defuzzified into a crisp COM value for each experiment by using the COG method. Table 9 shows the calculated \(COM_{i}\) value for each experiment.

Table 7 High and low representations of \(\eta_{i2}\) for the SR response (μm)

Table 8 Generated fuzzy rules for Case study 3.1

Table 9 Calculated COM values for Case study 3.1

Step 3: The RBFNN technique shown in Fig. 12 is used to generate the full data set for the COM values. Table 10 displays the full data set obtained.

Table 10 Full data set for the COM value for Case study 3.1

Step 4: The average COM values are calculated for each factor level, as shown in Table 11. The combination of optimal factor levels is V1C1DF1T3, whereas the grey-Taguchi approach identifies V3C3DF2T2.

Table 11 Average COM values for the full factorial design for Case study 3.1

Step 5: For each quality characteristic, the anticipated improvements obtained using the neural–fuzzy approach are compared with those determined using the grey-Taguchi concept.

3.2 Optimization of binary mixture removal

Zolgharnein et al. (2014) employed the Taguchi method and principal component analysis for the optimization of the removal of a binary mixture of alizarin red and alizarin yellow by using multiple LTB quality characteristics: the sorbent capacity of alizarin red (Qr), sorbent capacity of alizarin yellow (Qy), removal percentage of alizarin red (Rr), and removal percentage of alizarin yellow (Ry). The L27 array was used to investigate the process parameters shown in Table 12. The \(\eta_{ij}\) values are calculated and displayed in Table 13.

The Mamdani-style fuzzy inference process is performed, in which the two fuzzy subsets low and high are assigned to the \(\eta_{ij}\) values of each of the four quality characteristics (Qr, Qy, Rr, and Ry). The MFs for the COM values are displayed in Fig. 13.

To convert the fuzzy value of the output to a nonfuzzy value (the COM value), the COG defuzzification method is used. Finally, the RBFNN shown in Fig. 14 is used to complete the full data set for the COM value, shown in Table 14.

The average COM value for each factor level is calculated and displayed in Table 15. The combination of optimal factor levels is identified as M2Cr2Cy2Ph1S2Ti2T2 by selecting the levels having the maximum COM value for each factor.

The anticipated improvement in each of the quality characteristics (Qr, Qy, Rr, and Ry) is calculated using the neural–fuzzy approach and compared with that obtained using the Taguchi method and principal component analysis.

3.3 Optimization of high-speed CNC turning parameters

Gupta et al. (2011) optimized high-speed CNC machining of AISI P-20 tool steel by using the Taguchi-fuzzy approach and four LTB-type quality characteristics: SR (µm), tool life (TL, min), cutting force (CF, N), and power consumption (PC, W). The selected process parameters presented in Table 16 are studied using the L27 array. The \(\eta_{ij}\) values are calculated for each quality characteristic and are shown in Table 17. The two fuzzy subsets low and high are assigned to the four inputs by using the \(\eta_{ij}\) values of SR, TL, CF, and PC, as shown in Fig. 15. Five fuzzy subsets are then assigned to the output COM value, as shown in Fig. 16. The COG method is used to convert the fuzzy value into a nonfuzzy value, the COM value.

The RBFNN technique is used to generate the full factorial data. The COM averages are calculated for each factor level, as shown in Table 18, where the combination of optimal factor levels is identified as S2F1D1N2E3. The anticipated improvement for the optimal combination is obtained using the neural–fuzzy approach, and it is compared with that obtained using the Taguchi-fuzzy approach.

4 Research results

4.1 Optimization results for electro-erosion

The anticipated improvements for electro-erosion are shown in Table 19. For the initial factor settings (V1C1DF1T1), the sums of the \(\eta_{ij}\) averages for the MRR (LTB), EWR (STB), and SR (STB) are 49.458, −39.041, and 25.680 dB, respectively. For the grey-Taguchi concept (V3C3DF2T2), they are 38.452, −24.495, and 19.809 dB, respectively, and for the fuzzy-RBFNN approach (V1C1DF1T3), they are 33.396, −17.464, and 62.124 dB, respectively. The anticipated improvements in the MRR, EWR, and SR determined using the grey-Taguchi concept (fuzzy-RBFNN) are −11.006 (−16.062), 14.546 (21.577), and −5.870 (36.444) dB, respectively. The grey-Taguchi concept thus provides a larger anticipated improvement in the MRR (LTB type) than the fuzzy-RBFNN approach, whereas the fuzzy-RBFNN approach outperforms the grey-Taguchi concept in improving the EWR and SR. Finally, the total anticipated improvement for the fuzzy-RBFNN approach (13.987 dB) is considerably larger than that obtained using the grey-Taguchi concept (−7.988 dB).

4.2 Results for optimization of binary mixture removal

The anticipated improvements in Ry, Rr, Qy, and Qr using the Taguchi method and principal component analysis (fuzzy-RBFNN) are 7.4 (1.6), 6.0 (6.4), −10.7 (4.6), and −12.1 (9.5) dB, respectively, as shown in Table 20. The Taguchi method and principal component analysis (M2Cr1Cy1Ph1S1Ti1T1) thus provide a larger anticipated improvement in Ry than the fuzzy-RBFNN approach (M2Cr2Cy2Ph1S2Ti2T2), whereas the fuzzy-RBFNN approach outperforms the Taguchi method and principal component analysis in improving Rr, Qy, and Qr. Finally, the total anticipated improvement for the fuzzy-RBFNN approach (5.5 dB) is considerably larger than that for the Taguchi method and principal component analysis (−2.4 dB).

4.3 Results for optimization of high-speed CNC turning

The anticipated improvements in the SR, TL, CF, and PC using the Taguchi-fuzzy approach (fuzzy-RBFNN) are 9.51 (12.83), 7.26 (5.39), 2.75 (3.45), and −0.08 (−0.68) dB, respectively, as shown in Table 21. The Taguchi-fuzzy approach (S1F1D1N2E3) thus provides larger anticipated improvements in the TL and PC than the fuzzy-RBFNN approach (S2F1D1N2E3), whereas the fuzzy-RBFNN approach outperforms the Taguchi-fuzzy approach in improving the SR and CF. Finally, the total anticipated improvement obtained using the fuzzy-RBFNN approach (5.5 dB) is larger than that obtained using the Taguchi-fuzzy approach (4.86 dB).

5 Conclusions

This study integrated fuzzy logic and the RBFNN to optimize process performance using multiple quality responses. The \(\eta_{ij}\) values are calculated for each quality response and used as inputs to the fuzzy model to obtain a single response, the COM. The RBFNN, trained on the orthogonal array (the fractional design) and its COM values, is then used to predict the COM values for all runs of the full factorial design. The average COM values are calculated for each factor level and used to identify the combination of optimal factor levels. Illustrative case studies from past studies are provided. The proposed approach shows appreciable improvements compared with previously used techniques, such as the grey-Taguchi concept, the Taguchi method with principal component analysis, and the Taguchi-fuzzy approach. Moreover, the proposed technique (1) provides a global optimal solution, (2) provides the largest overall improvement, and (3) is flexible in dealing with different data sets and with increasing numbers of factors, levels, and factor weights. Future work should consider using the loss function instead of the SNR and/or using genetic algorithms instead of the RBFNN.

References

Almonacid F, Rus C, Pérez-Higueras P, Hontoria L (2011) Calculation of the energy provided by a PV generator. comparative study: conventional methods vs. artificial neural networks. Energy 36(1):375–384

Al-Refaie A (2010) A grey-DEA approach for solving the multi-response problem in Taguchi method. J Eng Manuf 224(1):147–158

Al-Refaie A (2011) Optimizing correlated QCHs using principal components analysis and DEA techniques. Prod Plan Control 22(07):676–689

Al-Refaie A (2013) Optimization of multiple responses in the Taguchi method using fuzzy regression. Artif Intell Eng Des Anal Manuf 28:99–107

Al-Refaie A (2014a) A proposed satisfaction model to optimize process performance with multiple quality responses in the Taguchi method. J Eng Manuf 228(2):291–301

Al-Refaie A (2014b) Optimizing the performance of the tapping and stringing process for photovoltaic panel production. Int J Manag Sci Eng Manag. doi:10.1080/17509653.2014.974887

Al-Refaie A (2014c) Optimizing performance of low-voltage cables’ process with three quality responses using fuzzy goal programming. HKIE Trans 21(3):1–21

Al-Refaie A (2014d) Applying process analytical technology framework to optimize multiple responses in wastewater treatment process. J Zhejiang Univ Sci A 15(5):374–384

Al-Refaie A (2015) Optimizing multiple quality responses in the Taguchi method using fuzzy goal programming: modeling and applications. Int J Intell Syst 30(6):651–675

Al-Refaie A, Li M-H (2011) Optimizing the performance of plastic injection molding using weighted additive model in goal programming. Int J Fuzzy Syst Appl 22(07):676–689

Al-Refaie A, Wu T-H, Li M-H (2009) Data envelopment analysis approaches for solving the multi response problem in the Taguchi method. Artif Intell Eng Des Anal Manuf 23:159–173

Al-Refaie A, Rawabdeh I, Alhajj R, Jalham I (2012) A fuzzy multiple regression approach for optimizing multiple responses in the Taguchi method. Int J Fuzzy Syst Appl 3(2):14–35

Al-Refaie A, Diabat A, Li MH (2014) Optimizing tablets’ quality with multiple responses using fuzzy goal programming. J Process Mech Eng 228(2):115–126

Asiltürk İ, Çunkaş M (2011) Modeling and prediction of surface roughness in turning operations using artificial neural network and multiple regression method. Expert Syst Appl 38(5):5826–5832

Azadeh A, Sheikhalishahi M, Asadzadeh S (2011) A flexible neural network-fuzzy data envelopment analysis approach for location optimization of solar plants with uncertainty and complexity. Renew Energy 36:3394–3401

Bose PK, Deb M, Banerjee R, Majumder A (2013) Multi objective optimization of performance parameters of a single cylinder diesel engine running with hydrogen using a Taguchi-fuzzy based approach. Energy 63(15):375–386

Çakıroğlu R, Acır A (2013) Optimization of cutting parameters on drill bit temperature in drilling by Taguchi method. Measurement 46(9):3525–3531

Chen T (2015) An efficient and effective fuzzy collaborative intelligence approach for cycle time estimation in wafer fabrication. Int J Intell Syst 30:620–650

Chen SX, Gooi HB, Wang MQ (2013) Solar radiation forecast based on fuzzy logic and neural networks. Renew Energy 60:195–201

Dasgupta K, Singh DK, Sahoo DK, Anitha M, Awasthi A, Singh H (2014) Application of Taguchi method for optimization of process parameters in decalcification of samarium–cobalt intermetallic powder. Sep Purif Technol 124(18):74–80

de Pontes FJ, Paiva AP, Balestrassi PP, da Ferreira JR, Silva MB (2012) Optimization of radial basis function neural network employed for prediction of surface roughness in hard turning process using Taguchi’s orthogonal arrays. Expert Syst Appl 39(9):7776–7787

Furtuna R, Curteanu S, Leon F (2011) An elitist non-dominated sorting genetic algorithm enhanced with a neural network applied to the multi-objective optimization of a polysiloxane synthesis process. Eng Appl Artif Intell 24:772–785

Gupta A, Singh H, Aggarwal A (2011) Taguchi-fuzzy multi output optimization (MOO) in high speed CNC turning of AISI P-20 tool steel. Expert Syst Appl 38:6822–6828

Javan DS, Mashhadi HR, Rouhani M (2013) A fast static security assessment method based on radial basis function neural networks using enhanced clustering. Electr Power Energy Syst 44(1):988–996

Li M-H, Al-Refaie A, Yang CY (2008) DMAIC approach to improve the capability of SMT solder printing process. IEEE Trans Electron Packag Manuf 31(2):126–133

Lilly JH (2010) Fuzzy control and identification, 3rd edn. Wiley, Hoboken

Lin H-C, Su C-T, Wang C-C, Chang B-H, Juang R-C (2012) Parameter optimization of continuous sputtering process based on Taguchi methods, neural networks, desirability function, and genetic algorithms. Expert Syst Appl 39(17):12918–12925

Mandic K, Delibasic B, Knezevic S, Benkovic S (2014) Analysis of the financial parameters of Serbian banks through the application of the fuzzy AHP and TOPSIS methods. Econ Model 43:30–37

Marvuglia A, Messineo A, Nicolosi G (2014) Coupling a neural network temperature predictor and a fuzzy logic controller to perform thermal comfort regulation in an office building. Build Environ 72:287–299

Moosavi M, Soltani N (2013) Prediction of the specific volume of polymeric systems using the artificial neural network-group contribution method. Fluid Phase Equilib 356:176–184

Muthuramalingam T, Mohan B (2014) Application of Taguchi-grey multi responses optimization on process parameters in electro erosion. Measurement 58:495–502

Otebolaku AM, Andrade MT (2015) Context-aware media recommendations for smart devices. J Ambient Intell Humaniz Comput 6(1):13–36

Peng X, Li Q, Wang K (2014) Core axial power shape reconstruction based on radial basis function neural network. Ann Nucl Energy 73:339–344

Sivasakthivel T, Murugesan K, Thomas HR (2014) Optimization of operating parameters of ground source heat pump system for space heating and cooling by Taguchi method and utility concept. Appl Energy 116:76–85

Sun J-H, Hsueh B-R (2011) Optical design and multi-objective optimization with fuzzy method for miniature zoom optics. Opt Lasers Eng 49:962–971

Sun J-H, Fang Y-C, Hsueh B-R (2012) Combining Taguchi with fuzzy method on extended optimal design of miniature zoom optics with liquid lens. Optik 123(19):1768–1774

Tatar A, Shokrollahi A, Mesbah M, Rashid S, Arabloo M, Bahadori A (2013) Implementing radial basis function networks for modeling CO2-reservoir oil minimum miscibility pressure. J Nat Gas Sci Eng 15:82–92

Tsai T (2014) Improving the fine-pitch stencil printing capability using the Taguchi method and Taguchi fuzzy-based model. Robot Comput Integr Manuf 27:808–817

Wu H-C, Chen T (2015) CART–BPN approach for estimating cycle time in wafer fabrication. J Ambient Intell Humaniz Comput 6:57–67

Xia C, Wang J, McMenemy K (2010) Short, medium and long term load forecasting model and virtual load forecaster based on radial basis function neural networks. Electr Power Energy Syst 32(7):743–750

Zăvoianu A-C, Bramerdorfer G, Lughofer E, Silber S, Amrhein W, Klement EP (2013) Hybridization of multi-objective evolutionary algorithms and artificial neural networks for optimizing the performance of electrical drives. Eng Appl Artif Intell 26:1781–1794

Zolgharnein J, Asanjrani N, Bagtash M, Azimi G (2014) Multi-response optimization using Taguchi design and principle component analysis for removing binary mixture of alizarin red and alizarin yellow from aqueous solution by nano c-alumina. Spectrochim Acta Part A Mol Biomol Spectrosc 126:291–300

Cite this article

Al-Refaie, A., Chen, T., Al-Athamneh, R. et al. Fuzzy neural network approach to optimizing process performance by using multiple responses. J Ambient Intell Human Comput 7, 801–816 (2016). https://doi.org/10.1007/s12652-015-0340-5