Abstract

In this paper, we present a linear programming model where the parameter space contains some multi-choice parameters. Alternative choices of multi-choice parameter are considered as random variables. Using interpolating polynomial for each multi-choice parameter, the model has been transformed into a non-linear mixed integer probabilistic programming problem. Then chance constrained programming technique is used to obtain an equivalent deterministic model of the transformed problem. To find the deterministic form of the objective function four different models namely, E-model, V-model, probability maximization model and fractile criterion model are used. Assuming the values of the multi-choice parameters as independent normal random variables, the methodology is presented. A numerical example is also presented to illustrate the methodology.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In real life, there are several decision making situations where decision makers have to choose one value from a set of values of a parameter. This type of situation can be transformed into a mathematical programming model called multi-choice programming problem (MCPP). In MCPP, a multiple number of choices are assigned by the decision maker for any multi-choice parameter. Chang [1] proposed a new idea to solve multi-objective programming problem called multi-choice goal programming where for each objective a multiple number of aspiration levels or goals are set by the decision maker. In addition, to tackle these multi-choice aspiration levels a binary variable for each goal is introduced. In his other paper, Chang [2] used some continuous variable to tackle the situation instead of binary variable. Liao [3] proposed a model called multi-segment goal programming problem, in which the coefficients of the objective function take multiple aspiration level. Chang’s [1] binary variable method has been used to tackle the multi-choice parameters. Biswal and Acharya [4] proposed multi-choice linear programming problems (MCLPP) in which the right hand side parameters of the constraints take multiple aspiration level. They proposed a transformation technique to obtain an equivalent mathematical model. In their other paper [5], they introduced interpolating polynomial for each multi-choice type parameter to transform the model.

However, multi-choice programming problem can be considered as a combinatorial optimization problem. Since in actual decision making situations, we often make a decision on the basis of uncertain data, it makes proper sense to take the alternative choices of a multi-choice parameter as random variable in MCLPP. Stochastic programming is used for such decision making problems where randomness is involved.

Stochastic programming has been developed in various fields, a bibliographic review of stochastic programming can be found in [6, 7]. Among these approaches for stochastic programming, there are two very popular approaches namely,

-

(i) Chance-constrained programming or probabilistic programming proposed by Charnes and Cooper [8];

-

(ii) Two stage programming or Recourse programming proposed by Dantzig [9].

In optimization problems, to handle some random parameters we use both these methods. In chance constrained programming (CCP) technique, constraints of the problem can be violated up to a given level of probability. These satisfactory levels of probability are fixed by the decision maker. The two-stage programming technique also converts the stochastic problem into a deterministic problem. But the two stage programming does not allow any constraint to be violated. Several literature are found in the field of stochastic programming [10–16]. Typical areas of application of stochastic programming are Finance and Industrial Engineering, Water resource management, Management of stochastic farm resources, Mineral blending, Human resource management, Energy production, Circuit manufacturing, Chemical engineering, Telecommunications etc.

Under these situations, in this paper, we concentrate to find a suitable methodology to solve the multi-choice linear programming problem with random variables as the alternative choices of a multi-choice parameter. Since most common distribution in nature is the normal distribution, we discuss the methodology by assuming all the alternatives of a multi-choice random parameter as independent normal random variable with known mean and variance. In some stochastic programming problems some of the parameters follows non-normal distribution namely, uniform, exponential, gamma and log-normal distributions. In the stochastic programming literature the deterministic models have been established for stochastic programming problem with such random variables [17, 18].

The organization of the paper is as follows: after giving a brief introduction about the problem in Sect. 1, we present the mathematical Model of Multi-choice random Linear Programming Problem in Sect. 2. The deterministic form of the proposed model is established in Sect. 3. In Sect. 4, a case study is presented and the proposed method is illustrated with the results. In Sect. 5, some conclusions are presented.

2 Mathematical model of multi-choice random linear programming problem

The mathematical model of a multi-choice random linear programming problem can be presented as:

subject to

where \(X=(x_1,x_2,\ldots ,x_n)\) is n-dimensional decision vector. We consider the decision variables as deterministic. Each alternative value \(c_j^{(l)}\) \((l=1,2, \ldots , k_j)\) of the multi-choice parameter \(c_j\), \(j = 1,2, \ldots , n\); \(a_{ij}^{(s)}\) \((s=1,2, \ldots , p_{ij})\) of multi-choice parameter \(a_{ij}, i = 1,2, \ldots , m; j = 1,2, \ldots , n\) and \(b_i^{(t)}\) \((t=1,2, \ldots , r_i)\) of multi-choice parameter \(b_i, i = 1,2, \ldots , m\) are considered as independent random variable. Since each of the alternative choices of multi-choice parameter of the problem (1–3) are random variable, we are unable to apply the usual solution procedure for mathematical programming problem directly to solve the problem. So, we need to develop some suitable methodology to solve these problems.

Considering that the constraints \(\sum _{j=1}^{n}\{a_{ij}^{(1)},a_{ij}^{(2)},\ldots ,a_{ij}^{(p_{ij})}\}x_j \le \{b_i^{(1)},b_i^{(2)},\ldots ,b_i^{(r_i)}\},\, i = 1,2, \ldots ,m\) of the problem (1–3) need not hold almost surely, but they can instead hold with some known probabilities. To be more precise, the original m constraints are interpreted as:

where \(\Pr\) means probability. \(\gamma _i\) is the given probability of the extents to which the i-th constraint violations are admitted. The inequalities (4) are called chance constraints. These inequalities means that the i-th constraint may be violated, but at most \(\gamma _i\) proportion of the time. Hence problem (1–3) can be restated as:

subject to

3 Deterministic model formulation

The model established in the previous section is basically a stochastic programming model. Since random variables are there in the model, we need to establish the deterministic form of the model to solve the problem. We establish the equivalent deterministic model of the problem (5–7) by using chance-constrained programming technique.

3.1 Interpolating polynomials for the multi-choice parameters

The model (5–7) contains multi-choice parameters. Multi-choice parameters are found in the objective function as well as in the constraints. Also, each alternative value of the multi-choice parameters found to be random variables. We are unable to apply any stochastic programming approach directly to the model as multiple choices are present for each parameter. At first, we transform these multi-choice parameters. To do so, interpolating polynomials are formulated. Interpolating polynomials are formed by introducing an integer variable corresponding to each multi-choice parameter. Each integer variable takes exactly k number of nodal points if the corresponding parameter has k number of choices. Each node corresponds to exactly one functional value of a multi-choice parameter. Here the functional value of each node is a random variable. Interpolating polynomial, which are formulated intersects the affine function exactly once at these nodes. We replace a multi-choice parameter by an interpolating polynomial using Lagrange’s formula.

For the multi-choice parameter \(c_j \, (j=1,2,\ldots ,n)\), we introduce an integer variable \(u_{j}\) which takes \(k_{j}\) number of values. We formulate a Lagrange interpolating polynomial \(f_{c_{j}}(u_{j})\) which passes through all the \(k_{j}\) number of points given by Table 1.

Following Lagrange’s formula [20] we obtain the interpolating polynomial for the multi-choice parameter \(c_{j}\,(j=1,2,\ldots ,n)\) as:

Similarly, we introduce an integer variable \(w_{ij}\) to tackle the multi-choice parameter \(a_{ij}\,(i=1,2,...,m;\,j=1,2,...,n)\). The integer variable \(w_{ij}\) takes \(p_{ij}\) number of different values. Following the Lagrange’s formula, we construct an interpolating polynomial \(f_{a_{ij}}(w_{ij})\). The interpolating polynomial \(f_{a_{ij}}(w_{ij})\) passes through all the \(p_{ij}\) number of points which are given by Table 2. The interpolating polynomial can be written as:

Similarly, we introduce a new integer variable \(v_i\) for the multi-choice parameter \(b_i\,(i=1,2,...,m)\). We formulate the corresponding interpolating polynomial \(f_{b_i}(v_i)\) which passes through the data points given by Table 3. The interpolating polynomial can be formulated as:

Note that, in each of the above interpolating polynomials random variables are present. After replacing the multi-choice parameters by interpolating polynomials, we obtain a transformed stochastic model which can be stated as:

subject to

3.2 Equivalent deterministic form of the chance constraints

The alternative choices of the multi-choice random parameters can follow any continuous distribution namely uniform, normal, exponential etc. Also these random variables are independent. The interpolating polynomial corresponding to each multi-choice random parameter is a linear combination of independent random variables. So, the polynomial function itself is a random variable. We can find the distribution function of the random variable by using the following theorem:

Theorem 1

Let X and Y be two independent random variables with distribution function \(F_X(x)\) and \(F_Y(y)\) respectively, defined for all real numbers. Then the sum \(Z=X+Y\) is a random variable with distribution function \(F_Z(z)\), where \(F_Z(z)\) is given by:

where \(f_X(x)\) and \(f_Y(y)\) represent the probability density function of the random variables X and Y respectively.

In real life situations, the alternative choices of the multi-choice random parameter are considered from the same distribution. However, if the alternative choices of the multi-choice random parameter are from different distribution then the equivalent deterministic form of the i-th constraint of the problem can be establish in the following way:

Let us define \(S_i=\sum _{j=1}^{n}f_{a_{ij}}(w_{ij};a_{ij}^{(1)},a_{ij}^{(2)},\ldots ,a_{ij}^{(p_{ij})})x_j- f_{b_i}(v_{i};b_i^{(1)},b_i^{(2)},\ldots ,b_i^{(r_i)}).\) Then \(S_i\) is a random variable with distribution function \(F_{S_i}\) which can be found by using Theorem 1. Hence from the Eq. 16, we have the following:

which leads us to the deterministic form of the i-th chance constraint of the problem.

3.2.1 Chance constraints with normal random variable

Let us consider the case when all the random variables present in the problem are independent normal random variables. Let \(c_j^{(l)}\), \(a_{ij}^{(s)}\) and \(b_i^{(t)},\) \(l=1,2, \ldots , k_j;\,s=1,2, \ldots , p_{ij};\,t=1,2, \ldots , r_i;\,i = 1,2, \ldots , m; j = 1,2, \ldots , n\) be distributed normally with known mean and variance. Let us consider

where the mean and variance of the parameters are given by Table 4.

Now, the interpolating polynomial \(f_{a_{ij}}(w_{ij};a_{ij}^{(1)},a_{ij}^{(2)},\ldots ,a_{ij}^{(p_{ij})})\) is a linear function of \(p_{ij}\) number of independent normal random variables \(a_{ij}^{(s)}\) with known mean and variance. Also, interpolating polynomial \(f_{b_i}(v_{i};b_i^{(1)},b_i^{(2)},\ldots ,b_i^{(r_i)})\) is a linear function of \(r_{i}\) number of independent normal random variables \(b_{i}^{(t)}\) with known mean and variance. Let

Then \(M_{ij}\) is a random variable with mean and variance \(E(M_{ij})\) and \(V(M_{ij})\) respectively, where \(E(M_{ij})\) and \(V(M_{ij})\) are defined as,

Similarly, \(N_i\) is also treated as a random variable with mean and variance \(E(N_{i})\) and \(V(N_{i})\). \(E(N_{i})\) and \(V(N_{i})\) are given by:

Let us define \(A_i=(\sum _{j=1}^{n}M_{ij}-N_{i}),\,i=1,2,\ldots ,m\), then \(A_i\) are also normally distributed random variables. Hence the constraint (16) can be written as:

where \(\mu _{A_i}=\sum _{j=1}^{n}E(M_{ij})-E(N_{i})\), \(\sigma _{A_i}=\sqrt{\sum _{j=1}^{n}V(M_{ij})+V(N_{i})}\) and \(\eta _i\) is the standardized normally distributed random variable. Therefore the chance constraint (20) holds if and only if

where \(\varPhi (z)=\frac{1}{\sqrt{2\pi }}\int _{-\infty }^{z}e^{-\frac{\eta ^2}{2}}d\eta\) is the cumulative density function (C.D.F.) of the standard normal random variable. The above inequality can be simplified as:

Hence we establish the deterministic form of the i-th \((i=1,2,\ldots ,m)\) constraint as a non-linear constraint. The constraint can be stated as:

We obtain the deterministic form of the chance constraints which form the feasible region (say S) of the model (1–3). To establish the deterministic model of (1–3), we find the deterministic form of the objective function. Depending on the aim of the decision maker Charnes and Cooper [8] considered three types of decision rules to optimize objective functions with random variables:

-

(i) the minimum or maximum expected value model,

-

(ii) the minimum variance model and

-

(iii) the maximum probability model also called as dependent-chance model.

Moreover, Kataoka [21] and Geoffrion [22] individually proposed the fractile criterion model. We establish the deterministic models by assuming the random variables as independent normal random variable.

3.3 Expectation maximization model (E-model)

In order to deal with the situations where the decision maker wants to maximize the expected value of the objective function in (11–12), we consider ’E’-model for the objective function [8]. Substituting the random variables by their expected values, we obtain the model as:

subject to

Hence we obtain an equivalent deterministic model of the problem (1–3). We apply non-linear programming approach to solve the problem.

3.4 Variance minimization model (V-model)

In order to deal with the situations where the decision maker minimizes the variance of the objective function with random variable in (11–12) subject to the fact that the expected value of the objective function achieved a certain target fixed by the decision maker, we consider ‘V’-model for the objective function. Let the target value for the expected value of the objective function fixed by decision maker be T. Here we minimize the variance of the objective function. The equivalent deterministic model presented as:

subject to

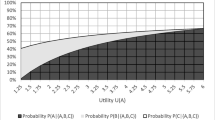

3.5 Probability maximization model (P-model)

In order to deal with the situations where the decision maker wants to maximize the probability that the objective function with random variable is greater than or equal to a certain permissible level in (11–12), we consider probability maximization model [23]. Considering the minimum permissible level of the objective function as z, we establish the objective function of the model as:

We assume \(c_j^{(l)}\, (l=1,2,\ldots ,k_j;\,j=1,2,\ldots ,n)\) are independent normal random variable with known mean and variance, so \(\sum _{j=1}^{n}f_{c_{j}}(u_{j};c_j^{(1)},c_j^{(2)},\ldots ,c_j^{(k_j)})x_j\) is a normal random variable. Let \(M=\sum _{j=1}^{n}f_{c_{j}}(u_{j};c_j^{(1)},c_j^{(2)},\ldots ,c_j^{(k_j)})x_j\) then expected value variance of M is given by,

Therefore, we have

Hence we transformed the problem as:

subject to

3.6 Fractile criterion optimization model

In order to deal with the situations where the decision maker is risk-averse and wants to guarantee that the probability of obtaining maximum value of the objective function is greater than or equal to some given threshold, we adopt the fractile criterion model [22]. Basically, the fractile criterion model is considered as complementary to the probability maximization model or P-model. In this model, the target variable to the objective function is maximized, provided that the probability that the objective function value is greater than the target variable is guaranteed to be larger than a given threshold. Let us denote the target variable to the objective function as \(\lambda\) and the given threshold be \(\beta\). Then fractile criterion model is given by:

subject to

In the above problem, we have an additional probabilistic constraint. Using chance constrained programming approach we obtain an equivalent deterministic model which is given by:

subject to

Hence, we establish the equivalent deterministic model of the proposed problem. We obtain the solution for the proposed problem by solving one of the four model described above (depending on the aim of the decision maker). We use standard optimization softwares to solve the deterministic model.

4 Numerical example and discussion

In this Section we present a hypothetical case study (see [24]) to illustrate the problem and methodology presented in this paper. We modeled the problem and solved it by using the proposed solution procedure.

A cattle feed manufacturing company wants to produce one type of cattle feed mix which is produced by mixing four type of food material. The food materials are Barley, Maize, Sesame Flakes and Groundnut. There are four markets where sufficient amount of Barley available. In all these four markets the price per unit of barley are different. Also there is some differences in the quality of the Barley. Similarly, there are five markets where Groundnut meal is available. In all these four markets the price per unit of Groundnut are different. Also there is some differences in the quality of the Groundnut. There are three type of maize available in three different markets. Cost, protein and fat for different type of maize is different. There two type of Sesame Flakes made from two different type of Sesame. The amount of Protein and fat available in these different Sesame Flakes are different. The cost of these available Sesame Flakes are different. The details of purchase cost of these raw material, amount of protein and fat in those materials are presented in the Tables 5, 6, 7 and 8. We assume that the cost and protein content of each material are random variable. Also, fat content of each type of maize are random variable. The source of the data for cost of different materials is http://164.100.222.56/amb/1/weeklymandiselect.asp. Protein and fat to vary greatly because of the local food systems, food security and interventions. Therefore either 20.7 or 21.0 or 22 or 22.7 % are the possible requirement of protein. Also, the required fat content may be either 4.8 or 5.0 or 5.3 or 5.9 or 6.2 %. The significant level of a probabilistic constraint corresponding to protein is 0.95. We consider confidence levels for fat constraint is 0.98 respectively.

Let \(x_1\), \(x_2\), \(x_3\), \(x_4\) be the quantities of Barley, Groundnut meal, Maize and Sesame Flakes mixed in the cattle feed respectively. Let the sum total of the raw materials is equal to one kg. Also, it is assumed that, the quantities of Barley and Maize i.e., the value of \(x_1\) and \(x_3\) must be at least 0.5 and 0.01 kg respectively. So, we have an minimization problem with two chance constraints and two linear constraints.

The above situation can be modeled into a mathematical model which is a multi-choice probabilistic linear programming problem. The mathematical model is expressed as:

subject to

where

Using ‘E’-model we obtain the deterministic equivalent of the model (59–65) as:

subject to

The mathematical model (66–73) is a mixed integer nonlinear programming problem. Using nonlinear programming technique [25] we can solve the problem. Using MATHEMATICA [26] software we obtain the optimal solution. Mathematica use “Differential Evolution” algorithm to find the numerical global optimal solution of the nonlinear programming problem. In this method the tolerance for accepting constraint violations is ’0.001’ (See [27]). So, the constraints are violated slightly (See Table 9). In order to compare the solution obtained by Mathematica, the transformed model is also solved in GAMS [28] solver. The same optimal solution is obtained. Optimal solutions of the different models are given by the Table 9.

For the ‘V-model’ and ‘P-model’ the target costs are set as 30 and 35 respectively. The maximum probability with the production cost as 35 is \(88.59\,\%\). From the Table 9 we can see that, for the different models the markets for Barley or the market for Groundnut Meal or types of Maize and Sesame flakes chosen are different in most of the cases. Hence from these models and the results obtained we conclude that the consideration of the multi-choice random parameters in the parametric space is very logical and helpful for the decision makers to take proper decision.

5 Conclusions

In this paper, a suitable methodology is established to solve a multi-choice linear programming problem where the decision maker set a number of random aspiration level for any parameter presents in the problem. By considering the alternative aspiration level as normal random variable, we establish the methodology. At first, we tackle the multi-choice parameter by using some interpolating polynomial. Interpolating polynomials are linear functions of normal random variables. So, the transformed problem is treated as a probabilistic programming problem. By using chance constraint programming approach, we establish an equivalent deterministic model. We present four different models to find the deterministic form of the objective function, depending on the desire of the decision maker. The proposed methodology provides a novel way for solving the multi-choice linear programming problems involving random variables as the alternative choices of the parameters. The present method serves as a useful decision making tool for a decision maker to find optimal solution with best alternative for a multi-choice parameter. A further study is essential for this problem in the presence of other random variables, namely uniform, exponential and log-normal random variable. There are several real life problems such as Production planning problem, Inventory control problem, Scheduling problems in management science where this multi-choice probabilistic programming technique can be applied.

References

Chang, C.-T.: Multi-choice goal programming. Omega: Int. J. Manag. Sci. 35, 389–396 (2007)

Chang, C.-T.: Revised multi-choice goal programming. Appl. Math. Model. 32, 2587–2595 (2008)

Liao, C.-N.: Formulating the multi-segment goal programming. Comput. Ind. Eng. 56, 138–141 (2009)

Biswal, M.P., Acharya, S.: Transformation of multi-choice linear programming problem. Appl. Math. Comput. 210, 182–188 (2009)

Biswal, M.P., Acharya, S.: Solving multi-choice linear programming problems by interpolating polynomials. Math. Comput. Model. 54, 1405–1412 (2011)

Stancu-Minasian, I.M., Wets, M.J.: A research bibliography in stochastic programming. Oper. Res. 24, 1078–1119 (1976)

Stancu-Minasian, I.M.: Overview of different approaches for solving stochastic programming problems with multiple objective functions. In: Slowinski, R., Teghem, J. (eds.) Stochastic Versus Fuzzy Approaches to Multiobjective Mathematical Programming under Uncertainty, pp. 71–101. Kluwer Academic Publishers, Dordrecht/Boston/London (1990)

Charnes, A., Cooper, W.W.: Deterministic equivalents for optimizing and satisficing under chance constraints. Oper. Res. 11(1), 18–39 (1963)

Dantzig, G.B.: Linear programming under uncertainty. Manag. Sci. 1(3–4), 197–206 (1995)

Hulsurkar, S., Biswal, M.P., Sinha, S.B.: Fuzzy programming approach to multi-objective stochastic linear programming problems. Fuzzy Sets Syst. 88, 173–181 (1997)

Sahoo, N.P., Biswal, M.P.: Computation of some probabilistic linear programming problems involving normal and log-normal random variables with a joint constraints. Int. J. Comput. Math. 82(11), 1123–1138 (2005)

Sahoo, N.P., Biswal, M.P.: Computation of a multi-objective production planning model with probabilistic constraints. Int. J. Comput. Math. 86, 185–198 (2009)

Acharya, S., Biswal, M.P.: Solving probabilistic programming problems involving multi-choice parameters. OPSEARCH 48(3), 217–235 (2011)

Abdelaziz, F.B.: Solution approaches for the multiobjective stochastic programming. Eur. J. Oper. Res. 216, 1–16 (2012)

Sakawa, M., Kato, K.: An interactive fuzzy satisfying method for multiobjective stochastic linear programming problems using chance constrained conditions. J. Multi-Criteria Decis. Anal. 11(3), 125–137 (2002)

Sakawa, Masatoshi, Yano, Hitoshi, Nishizaki, Ichiro: Linear and Multiobjective Programming with Fuzzy Stochastic Extensions. Springer, New York (2013)

Biswal, M.P., Biswal, N.P., Li, D.: Probabilistic linear programming problems with exponential random variables: a technical note. Eur. J. Oper. Res. 111(3), 589–597 (1998)

Biswal, M.P., Sahoo, N.P., Li, D.: Probabilistic linearly constrained programming problems with log-normal random variables. Opsearch 42, 70–76 (2005)

Charnes, A., Cooper, W.W.: Chance constrained programming. Manag. Sci. 6(1), 73–79 (1959)

Atkinson, K.E.: An Introduction to Numerical Analysis. Wiley, New York (2009)

Kataoka, S.: A stochastic programming model. Econometrica 31, 181–196 (1963)

Geoffrion, A.M.: Stochastic programming with aspiration or fractile criteria. Manag. Sci. 13, 672–679 (1967)

Sakawa, Masatoshi, Kato, Kosuke, Katagiri, Hideki: An interactive fuzzy satisficing method for multiobjective linear programming problems with random variable coefficients through a probability maximization model. Fuzzy Sets Syst. 146(2), 205–220 (2004)

Van de Panne, C., Popp, W.: Minimum cost cattle feed under probabilistic protein constraints. Manag. Sci. 9, 405–430 (1963)

Li, D., Sun, X.: Nonlinear Integer Programming, vol. 84. Springer US, New York (2006)

Wolfram, S.: The Mathematica Book, 5th edn. Wolfram Media, Inc., Cambridge (2003)

http://reference.wolfram.com/language/tutorial/ConstrainedOptimizationGlobalNumerical.html#252245038

Brooke, A., Kendrick, D., Meeraus, A., Raman, R.: GAMS: A User’s Guide. GAMS Development Corporation, Online, retrieved 30, (2009)

Acknowledgments

The authors are grateful to the anonymous referees for their valuable comments and suggestions to improve the quality and clarity of the paper.

Author information

Authors and Affiliations

Corresponding author

Appendix

Appendix

In this section we include the description of MATHEMATICA commands for solving the mixed integer programming problem. To solve an integer programming problem using MATHEMATICA software we use ‘NMinimize’ or ‘NMaximize’ function to find the optimal value of the problem.

The detail of the MATHEMATICA command to solve mixed integer nonlinear programming problem of Sect. 4 is given below:

The obtained solution of the problem is as follows:

Rights and permissions

About this article

Cite this article

Pradhan, A., Biswal, M.P. Multi-choice probabilistic linear programming problem. OPSEARCH 54, 122–142 (2017). https://doi.org/10.1007/s12597-016-0272-7

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12597-016-0272-7