Abstract

Modelling, analysis and synthesis of behaviour are the subject of major efforts in computing science, especially when it comes to technologies that make sense of human–human and human–machine interactions. This article outlines some of the most important issues that still need to be addressed to ensure substantial progress in the field, namely (1) development and adoption of virtuous data collection and sharing practices, (2) shift in the focus of interest from individuals to dyads and groups, (3) endowment of artificial agents with internal representations of users and context, (4) modelling of cognitive and semantic processes underlying social behaviour and (5) identification of application domains and strategies for moving from the laboratory to real-world products.

Introduction

Modelling, analysis and synthesis of human behaviour are the subject of major efforts in computing science [138, 140]. In principle, the problem can be addressed in purely technological terms, i.e. by applying the same methodologies and approaches that are used for any other type of data accessible to machines. For example, speech has been analysed with methodologies that apply to any other signal, and computer vision has addressed the problem of tracking people with the same methodologies used to track any other moving object. Similarly, robotics and computer graphics have addressed the synthesis of human motion by simply reproducing its observable aspects.

However, human behaviour is governed by cognitive, social and psychological phenomena that, while not observable, must be taken into account to build more robust, effective and human-centred technologies. The first attempts in this direction were made in the early 1990s, when automatic analysis and synthesis of facial expressions were addressed for the first time not only in terms of observable facial muscle activity, but also in terms of emotion expression [47]. Interdisciplinary collaboration between computing on the one hand and psychology and cognitive sciences on the other proved to be a crucial and fruitful milestone.

Nowadays, domains like affective computing [100], social signal processing (SSP) [138], social robotics [23], intelligent virtual agents [32], human communication dynamics [87] and sentic computing [27, 103] are well established and have a well-delimited and well-recognized scope in the computing community. Recent technological achievements include social robots that interact with autistic children [119], computers that make sense of human personality in various contexts [137], artificial agents that sustain emotionally rich conversations with their users [123], intelligent frameworks for multimodal affective interaction [63, 105] and approaches that detect phenomena as subtle as mimicry [43], and the list could continue.

However, modelling, analysis and synthesis of human behaviour are far from being solved problems. This article outlines a few major issues that need to be addressed to substantially improve the current state of the art:

- Virtuous practices for design, collection and distribution of data. Without data it is difficult, if not impossible, to develop technologies revolving around human behaviour. However, widely shared practices for making data an asset for the entire community are still missing (see Section “The Data”) [19, 73].

- From individuals to interaction. A group of interacting people is more than the mere sum of its members. However, most current analysis approaches still focus on individuals. Furthermore, methodologies addressing groups as a whole, especially when it comes to mutual influence processes, are still at an early stage of development (see Section “Behaviour Analysis”) [33, 60].

- From shallow to deep interactions. Human–human interactions take place in highly specific contexts where people typically have a long history of previous relations. However, current artificial agents typically lack an internal representation of both context and others, resulting in shallow interactions with their users. Attempts to go beyond this state of affairs are still limited (see Section “Multimodal Embodiment”) [7, 118].

- Integration of semantic and cognitive aspects. Social life is determined to a large extent by unconscious cognitive processes. However, most current approaches for analysis and synthesis of human behaviour do not try to model how people make sense of others and give meaning to their experiences (see Section “Computational Models of Interaction”) [38, 106].

- Applications. Real-world applications are the ultimate test bed for any technology expected to interact with humans. However, only relatively few domains are seriously planning the adoption of technologies dealing with human behaviour (see Section “Applications”) [69, 109, 110, 134].

The rest of the article describes each of the above issues in detail.

The Data

Corpora and data collections are a necessary prerequisite for modelling, analysis and synthesis of human behaviour. In fact, analysis and synthesis are not possible without learning from data showing contexts and phenomena of interest. Furthermore, efficient experimentation and replicable results are difficult to achieve without a framework for comprehensive and easily interoperable data annotation and analysis. In other words, the multimodal research community cannot progress without virtuous data collection, annotation and sharing practices that make high-quality data accessible and easy to process. This section outlines the challenges arising at various stages of corpus design, collection, annotation, curation and distribution, and proposes strategies that should underpin best practices.

Data Design and Collection

Collections of data portraying multimodal interaction behaviours cover a wide spectrum of verbal, non-verbal, social and communicative phenomena. However, most current resources do not address all aspects of social interaction, but focus instead on specific contexts and settings. The probable reason is that the range of spoken interactions, or “speech-exchange systems” [116], that humans engage in is enormous. It is an open question whether basic mechanisms such as turn-taking, or the temporal distribution of cues such as back-channels, gestures or disfluencies, vary with the type of interaction. In other words, it is not certain whether observations made on certain data generalize to other data and, if so, to what extent. The genre of the corpus therefore has to be considered carefully at the design stage.

Corpora may consist of audio-visual material gathered from conventional media (radio and television) and the web, or of recordings made during laboratory experiments, possibly using advanced sensors (e.g. high-definition cameras, gaze trackers, microphone arrays, RGB depth cameras like the Kinect, physiological sensors). Overall, the large number of settings and data acquisition approaches reflects the wide variety of design and research goals that data collections serve [138].

When interactions are recorded in a laboratory setting, the most common way to ensure that people actually engage in social exchanges is to use tasks aimed at eliciting conversation. Typical cases include describing routes on a map (e.g. the HCRC Map Task Corpus [5]), spotting differences between similar pictures (e.g. the London UCL Clear Speech in Interaction Database [12] and the Wildcat Corpus [135]) and participating in real or simulated professional meetings (e.g. the ICSI Meeting Corpus [68] and the AMI Corpus [84]). This way of collecting data produces useful corpora of non-scripted dialogues. However, it is unclear whether the motivation of subjects involved in an artificial task can be considered genuine. Therefore, it is not certain that the resulting corpora can support reliable generalizations about natural conversations [76, 92].

Attempts to collect data in more naturalistic settings initially focused on real-world phone calls, e.g. the suicide hotline and emergency-line conversations described in [116]. A broader range of topics is available in corpora of real phone conversations (e.g. Switchboard [53] and ESP-C [31]). The main drawbacks of these resources are that they are unimodal and that it is not clear whether phone-mediated and face-to-face conversations can be considered equivalent. The effort to capture face-to-face, real-life spoken interactions has led to the collection of corpora like Santa Barbara [45], ICE [55], BNC [16] and the Gothenburg Corpus [1]. However, the usefulness of these collections is still limited by unimodality (the only exception being the Gothenburg Corpus) and by the relatively low quality of the recordings.

What emerges from the above is that collecting data suitable for multimodal research entails a trade-off between the pursuit of real-life, naturalistic resources and the need for high-quality material suitable for automatic processing. This typically leads to the choice of laboratory settings, where the sensing apparatus is made as unobtrusive as possible and the scenario is carefully designed to avoid biases. The result has been hybrid multimodal corpora showing encounters recorded in the laboratory, but without a prescribed task or discussion topic imposed on participants. These include collections of free-talk meetings or first encounters between strangers (e.g. the Swedish Spontal [46], the NOMCO and MOMCO Danish and Maltese corpora [95], casual conversations between acquaintances and strangers [93]). Some of the latest corpora also include physiological signals, motion capture information (e.g. DANS and Spontal [46]) and breathing data.

The availability of new sensors, capable of capturing information not accessible in previous corpora, makes old data less useful, both because of the limited range of signals collected (many corpora are audio only) and because such data cannot support investigation of the range of interconnected signals and cues of interest to current researchers. This should serve as a caution to current data collectors: it would be very useful if researchers future-proofed corpora by gathering as wide a range of signals as possible at the data collection stage, thereby slowing the onset of data obsolescence.

Data Annotation, Curation and Distribution

Creating recordings is becoming increasingly cheap and easy, but annotating them for modelling, analysis and synthesis of social behaviour remains a time-consuming and labour-intensive task. In fact, enriching data with descriptive and semantic information is usually done manually. Recent advances in sensing technologies have made it easier to collect features of interest automatically, enabling the creation of data sets rich in information on multimodal behaviour that can be further augmented with manual encodings. However, analysis and modelling of multimodal interaction are hampered by the lack of a comprehensive annotation scheme or taxonomy incorporating speech, gestures and other multimodal interaction features.

Many spoken dialogue annotation schemes are based on speech/dialogue acts and their function in updating the dialogue state [24, 37, 75]. The ISO 24617-2 standard for functional dialogue annotation [65] comprehensively covers information transfer and dialogue control/interaction management functions of utterances, but its coverage of social or interactional functions is restricted to “social obligation management” (salutations, self-introduction, apologizing, thanking and valedictions). There is also a need to annotate multimodal cues. The MUMIN scheme [2] allows coding of multimodal aspects of dialogue, particularly in terms of their contribution to interaction management and turn-taking, but has not yet been integrated into larger dialogue taxonomies. An important advantage of the ISO standard, and indeed of the information state update paradigm [20], is their multidimensionality, whereby a “markable” or “area of interest” can be tagged in several orthogonal ways. These schemes may thus be extensible to many interactional and multimodal aspects of interaction. A more extensive taxonomy of communicative acts encompassing various modalities is highly desirable.
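A small data structure can illustrate the multidimensional principle. The sketch below is hypothetical Python and does not implement ISO 24617-2 or MUMIN. The class `Markable`, the function `select` and the dimension names (`task`, `turn_management`, `gesture`) are assumptions made for the example. Each dimension carries its own label, so adding a new dimension, for instance a multimodal one, leaves the existing ones untouched.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Markable:
    """A time span ("area of interest") in a recording, annotated along
    several independent dimensions. Dimension names are illustrative."""
    start: float  # seconds from the start of the recording
    end: float
    speaker: str
    tags: dict[str, str] = field(default_factory=dict)

    def __post_init__(self) -> None:
        if self.end <= self.start:
            raise ValueError("end must be after start")

    def tag(self, dimension: str, label: str) -> None:
        # Each dimension stores its own label: tagging one dimension never
        # touches the others, which is what keeps the scheme extensible.
        self.tags[dimension] = label


def select(markables: list[Markable], dimension: str, label: str) -> list[Markable]:
    """Return the markables that carry `label` in `dimension`."""
    return [m for m in markables if m.tags.get(dimension) == label]
```

For example, a single utterance can be tagged `inform` in `task`, `turn_keep` in `turn_management` and `head_nod` in `gesture`. Each label can then be queried independently.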

While many databases are publicly available, many others are still not shared. The shortage of desirable annotated data is also due to the lack of standardization, intellectual property rights (IPR) restrictions and privacy issues arising from research ethics. Data sets involved in tasks related to human behaviour analysis come with strict terms of use. Providers of data should thus ensure that data reuse is permitted through a set of appropriate licensing conditions. More importantly, data sets should be indexed so that all interested parties are able to identify different types of resources they wish to access and/or acquire. The multidisciplinarity of the field also calls for true and continuous cooperation among disciplines to make the most of complementary expertise in resource development and processing [126].

Open Issues and Challenges

Methodologies for creating and disseminating data should be fostered by both users and providers to maximize availability and usability. The goal should be the creation of data ecosystems that support the whole multimodal value chain, from design to distribution, through the definition of best practices (such as those available in natural language processing) and the set-up of infrastructures for resource use and sharing [102]. These infrastructures will address the following needs:

- A framework for managing and sharing data collections;
- Legal and technical solutions for privacy protection in a number of use scenarios;
- Data visibility and encouragement of data sharing, reuse and repurposing for new research questions;
- Identification of gaps and missing resources.

Establishing such an ecosystem in the area of multimodal interaction is necessary to support the increasingly demanding requirements of real-world applications. In particular, the creation of an effective data ecosystem promises to have the following advantages:

- Integration of social and multimodal annotation into standard dialogue annotation schemes;
- Building of knowledge bases informing the design of real-world and impact-oriented applications;
- Coverage of a wide, possibly exhaustive spectrum of contexts and situations;
- Better analysis of context and genre in social interactions.

Overall, a solid shared data ecosystem would greatly streamline the acquisition of relevant scientific understanding of multimodal interaction, and thus expedite the use of this knowledge in the research and development of a range of novel real-world applications (see Section “Applications”). The remaining challenge lies in defining, labelling and annotating the high-level behaviours associated with human–human interaction. For this purpose, experts in multimodal signal processing and machine learning work hand in hand with psychologists, clinicians and other domain experts to transfer knowledge gained over years of labelling human behaviours into machine-readable code that is amenable to computational manipulation.

Behaviour Analysis

Previous research on social behaviour analysis has focused on individuals, whether in the detection of specific actions and cues (e.g. facial expressions, gestures and prosody) or in the measurement of social and psychological phenomena (e.g. valence, arousal and personality traits). With advances in methodology, there is increasing interest in moving beyond action detection in individuals to the detection and understanding of interpersonal influence. Recent work includes comparing patterns of interpersonal influence under different conditions (e.g. with or without visual feedback, during high versus low conflict, and during negative and positive affect [58, 60, 85, 127, 136]) and studying the relation between interpersonal influence and outcome variables (e.g. friendship or relationship quality [3, 107]). Key issues are feature extraction and representation, time-series methodologies and outcomes. Unless otherwise noted, the rest of this section focuses on dyads (i.e. two interacting individuals) rather than larger social groups.

Detection of Behavioural Cues

The first step in computing interpersonal influence is to extract and represent relevant behavioural features from one or more modalities. Methodologies include motion tracking [9] for body motion, computer vision [42, 60, 85, 136] for facial expression, head motion and other visual displays, signal processing [67, 136] for voice quality, timing and speech, and manual measurements by human observers [36, 78, 81]. Because of their objectivity, quantitative nature, efficiency and reproducibility, automatic measures are desirable. Their limitations and challenges are discussed in the “Open Issues and Challenges” subsection below.

Modelling Interpersonal Influence

Independent of specific methods of feature extraction, two main approaches have been used to analyse interpersonal influence. The first includes analytic and descriptive models that seek to quantify the extent to which behaviour of an individual accounts for the behaviour of another. The second includes prediction and classification models that seek to measure behavioural matching between interactive partners.

Analytic/Descriptive Models

Windowed cross-correlation is one of the most commonly used measures of similarity between two time series [3, 107]. It uses a temporally defined window to measure successive lead–lag relationships over relatively brief timescales [17, 59, 60, 85]. With small windows, the assumption of stationarity is less likely to be violated. When time series are highly correlated at zero lag, they are said to be synchronous; when they are highly correlated at positive or negative lags, reciprocity is indicated. Patterns of cross-correlation may change across successive windows, consistent with descriptions of mismatch and repair processes (e.g. in mother–infant dyads [35]).
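The following is a minimal sketch of windowed cross-correlation in Python with NumPy. The function name `windowed_xcorr` and its parameters (`window`, `max_lag` and `step`, all in samples) are illustrative assumptions. Published implementations differ in how they handle window overlap, peak picking and missing data. A peak at lag 0 corresponds to synchrony. A peak at a positive or negative lag indicates which partner tends to lead.

```python
import numpy as np


def windowed_xcorr(x, y, window, max_lag, step):
    """Windowed cross-correlation of two equally long series.

    Returns (lags, starts, r), where r[i, j] is the Pearson correlation
    between x[s:s+window] and y[s+lag:s+lag+window] for the i-th window
    start s and the j-th lag. Positive lag: y follows x (x leads)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    lags = np.arange(-max_lag, max_lag + 1)
    # Start windows far enough from the edges that every lag fits.
    starts = np.arange(max_lag, len(x) - window - max_lag + 1, step)
    r = np.full((len(starts), len(lags)), np.nan)
    for i, s in enumerate(starts):
        xw = x[s:s + window]
        for j, lag in enumerate(lags):
            yw = y[s + lag:s + lag + window]
            # Correlation is undefined for a constant segment; leave NaN.
            if xw.std() > 0 and yw.std() > 0:
                r[i, j] = np.corrcoef(xw, yw)[0, 1]
    return lags, starts, r
```

For example, with `y = np.roll(x, 3)` the correlation in every window peaks at lag +3. This indicates that `y` follows `x` by three samples. Picking the peak lag per window, e.g. with `np.nanargmax(r, axis=1)`, gives the time course of leading and following.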

Other approaches are recurrence analysis, accommodation and spectral analysis. Recurrence analysis [114] seeks to detect similar patterns of change or movement in time series, which are referred to as “recurrence points”. Accommodation [122], also referred to as convergence, entrainment or mimicry [98, 99], refers to the tendency of dyadic partners to adapt their communicative behaviour to each other. Accommodation is based on a time-aligned moving average between time series. Spectral methods are particularly suitable for rhythmic processes. Spectral analysis measures phase shifts [94, 114], coherence [42, 113, 114] or power spectrum overlap [94].
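Recurrence analysis is usually applied to time-delay embedded series and quantified with several measures. The sketch below keeps only the core idea and assumes no embedding. It builds a binary cross-recurrence matrix, whose non-zero entries are the recurrence points. Here `radius` is a hypothetical similarity threshold. The sketch then reads the recurrence rate along each diagonal as a function of lag. The function names are illustrative.

```python
import numpy as np


def cross_recurrence(x, y, radius):
    """Binary cross-recurrence matrix: cr[i, j] = 1 when x[i] and y[j]
    are within `radius` of each other (no phase-space embedding)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    return (np.abs(x[:, None] - y[None, :]) <= radius).astype(int)


def diagonal_recurrence_profile(cr, max_lag):
    """Recurrence rate along each diagonal of cr.

    Diagonal k pairs x[i] with y[i + k], so a peak at positive k suggests
    that y revisits x's states k samples later."""
    lags = np.arange(-max_lag, max_lag + 1)
    rates = np.array([np.diagonal(cr, offset=int(k)).mean() for k in lags])
    return lags, rates
```

With discrete codes (e.g. coded behaviour categories), `radius=0` counts exact matches. For continuous signals, the threshold becomes a modelling choice that strongly affects the results.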

These methods may suggest that one of the interaction participants influences the other (e.g. infant smile in response to the mother’s smile), but it is more rigorous to say that they detect co-occurrence patterns that do not necessarily correspond to causal or influence relationships. Correlation or co-occurrence across multiple time series might be due to chance.

A critical issue when attempting to detect dependence between time series is to rule out cross-correlation or cross-phase coherence that arises by chance. Two types of approaches may be considered. One of the most common is to apply surrogate statistical tests [9, 42, 78, 113]. For instance, the time series may be randomized, and statistics that summarize the relation between time series (e.g. correlations) can then be compared between the original and randomized series. If the statistic for the original series is more extreme than most of those obtained from the randomized series, a non-random explanation is suggested. Alternatively, time- and frequency-domain time-series approaches have been proposed to address this problem [54, 78].
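The surrogate logic can be sketched as a permutation-style test. In this hypothetical `surrogate_test`, the statistic is the absolute Pearson correlation. Setting `method="shuffle"` randomizes the order of one series. Setting `method="shift"` circularly shifts it, which, unlike shuffling, preserves each series' autocorrelation. These choices, the number of surrogates and the p-value formula with its +1 correction are assumptions for illustration. They are not the procedure used in the cited works.

```python
import numpy as np


def surrogate_test(x, y, n_surrogates=999, method="shift", seed=0):
    """Compare |r(x, y)| with its distribution over randomized copies of y.

    Returns (observed statistic, p-value). A small p-value means the observed
    correlation is rarely matched by surrogates, suggesting a non-random
    relation between the series."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    if method not in ("shift", "shuffle"):
        raise ValueError("method must be 'shift' or 'shuffle'")
    rng = np.random.default_rng(seed)
    observed = abs(np.corrcoef(x, y)[0, 1])
    null = np.empty(n_surrogates)
    for k in range(n_surrogates):
        if method == "shuffle":
            # Destroys all temporal structure in y, including autocorrelation.
            ys = rng.permutation(y)
        else:
            # Circular shift by a random non-zero offset: keeps y's
            # autocorrelation but breaks its alignment with x.
            ys = np.roll(y, rng.integers(1, len(y)))
        null[k] = abs(np.corrcoef(x, ys)[0, 1])
    # The +1 terms count the observed pairing as one of the randomizations.
    p_value = (1 + np.sum(null >= observed)) / (n_surrogates + 1)
    return float(observed), float(p_value)
```

Shuffling is the simplest randomization. However, for smooth behavioural signals it tends to produce an overly narrow null distribution, which is why shift-based or other structure-preserving surrogates are often preferred.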

Surrogate and time-series approaches both involve analysis of observational measures. Yet another approach is to introduce experimental perturbations into naturally occurring behaviour. In a videoconference, the output of one person’s behaviour may be processed using an active appearance model and modified in real time without their knowledge. Using this approach, it has been found that attenuated head nods in an avatar resulted in increased head nods and lateral head turns in the other person [18]. Recent advances in image processing make possible real-time experimental paradigms to investigate the direction of effects in interpersonal influence.

Prediction/Classification Models

In many applications, it is of interest to detect moments of similar behaviour between partners. For example, smiles in interactions between mothers and infants could be learned, and their joint occurrence then detected automatically. Mutual or synchronous head nodding, as in back-channelling, is another example. A method to detect joint states using semi-supervised learning was proposed in [141]. Similarly, one could use supervised or unsupervised methods to learn phase relations between partners, such as coordinated increases or decreases in the intensity of positive affect, or mimicry. In [117], Hidden Markov Models (bi-grams) are employed to learn parent–infant interaction dynamics. This modelling is coupled with non-negative matrix factorization to extract a social signature of typical and autistic children. In [44], a set of one-class SVM-based models is used to recognize the gestures of task partners during EEG hyper-scanning. A measure of “imitation” is then derived from the likelihood ratio between the models.
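The following is a rough illustration of the bigram idea and is explicitly not the model of [117]. The sketch estimates a first-order Markov chain over observed joint dyadic states, with add-`alpha` smoothing, and scores new sequences by log-likelihood. A full HMM would also model hidden states behind the observations. The state labels, given as (mother state, infant state) tuples, and the function names are hypothetical.

```python
import math
from collections import Counter, defaultdict


def fit_bigram(sequences, alpha=1.0):
    """Estimate P(next joint state | current joint state) with add-alpha smoothing.

    Each sequence is a list of hashable, sortable joint states, e.g.
    (mother_state, infant_state) tuples sampled at regular intervals."""
    states = sorted({s for seq in sequences for s in seq})
    counts = defaultdict(Counter)
    for seq in sequences:
        for current, nxt in zip(seq, seq[1:]):
            counts[current][nxt] += 1
    probs = {}
    for a in states:
        total = sum(counts[a].values()) + alpha * len(states)
        probs[a] = {b: (counts[a][b] + alpha) / total for b in states}
    return probs


def log_likelihood(seq, probs):
    """Log-probability of the transitions in seq (states must occur in training)."""
    return sum(math.log(probs[a][b]) for a, b in zip(seq, seq[1:]))
```

Suppose the model is trained on dyads in which a mother's smile is usually followed by a joint smile. A sequence that follows this pattern then scores higher than one that reverses it. Comparing such likelihoods across models trained on different groups is one simple way to obtain group-specific interaction signatures.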

To reveal bidirectional feedback effects, parametric approaches such as actor-partner analysis have been proposed [72]. In actor-partner analysis, data are analysed while taking into account both participants in the dyad simultaneously. As an example, Hammal et al. [60] used actor-partner analysis to measure the reciprocal relationship between head movements of intimate partners in conflict and non-conflict interaction. Each participant’s head movement was used as both predictor and outcome variable in the analyses. The pattern of mutual influence varied markedly depending on conflict.
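Actor-partner models are usually estimated with multilevel or structural equation models. The sketch below is a deliberately simplified, cross-lagged variant fitted by ordinary least squares with NumPy. It assumes two equally sampled series `a` and `b`, e.g. the head pitch of two partners. Each partner's value at time t is regressed on their own value (actor effect) and on the partner's value (partner effect) at time t - lag. The function name and the lag structure are illustrative assumptions.

```python
import numpy as np


def lagged_actor_partner(a, b, lag=1):
    """Cross-lagged actor-partner regression for a dyad (simplified sketch).

    For each partner P with co-partner Q, fits by ordinary least squares
        P[t] = c + actor * P[t - lag] + partner * Q[t - lag] + error
    and returns the actor and partner coefficients for both partners."""
    if lag < 1:
        raise ValueError("lag must be >= 1")
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    results = {}
    # Each participant serves once as outcome and once as predictor.
    for name, own, other in (("A", a, b), ("B", b, a)):
        y = own[lag:]
        X = np.column_stack([np.ones(len(y)), own[:-lag], other[:-lag]])
        coef, *_ = np.linalg.lstsq(X, y, rcond=None)
        results[name] = {"actor": float(coef[1]), "partner": float(coef[2])}
    return results
```

In simulated data where `b` depends on the previous value of `a` but not vice versa, the estimated partner effect is large for B and near zero for A. A real analysis would also need standard errors and would model the dependence between the two equations more carefully.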

Open Issues and Challenges

Critical challenges are access to well-annotated data from dyads or other social groups (see Section “The Data”), further advances in automated measurement and improved analysis methodologies (see Section “Computational Models of Interaction”). Because the distribution of spontaneous social interaction data has been constrained by confidentiality restrictions, investigators have been unable to train on and analyse each other’s data, which limits methodological progress. Often, however, participants would agree to share their audio–video data if only asked: when given the opportunity to consent to such use by the research community, they have often consented. This has encouraged efforts to open access to data sources that would have been unavailable in the past (see Section “The Data”). The US National Institutes of Health [85], among others, supports data-sharing efforts.

The current state of automated measurement has several limitations. First, automatic feature extraction typically results in moderate rates of missing data, for example when head rotation exceeds the operational range of the system or when the face is occluded. This is particularly germane when algorithms are applied to participants who differ markedly from those on whom they were trained [58]. Second, while communication is multimodal, automated feature extraction is typically limited to one or a few modalities. Despite advances in natural language processing (NLP) [125], sampling speech and integrating it with non-verbal measures remains a challenge. Third, the optimal approach to multimodal fusion is an open research question and may depend on the specific application. In manual measurement, coders often use multimodal descriptors [35]; comparable descriptors for automated feature extraction have yet to appear. Partly for these reasons, some investigators have combined automatic and manual measurement [58, 60] or used a combination of overlapping feature-extraction algorithms [97].
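Weighted late fusion is one simple way to combine modalities while tolerating missing data. The sketch below shows it as one option among many, since the optimal fusion strategy is an open question. The modality names, the weights and the `late_fusion` function are hypothetical. Each per-modality score is assumed to be a probability produced by a separate classifier. Modalities whose features could not be extracted are passed as `None` or NaN and skipped.

```python
import math
from typing import Mapping, Optional


def late_fusion(scores: Mapping[str, Optional[float]],
                weights: Mapping[str, float]) -> Optional[float]:
    """Weighted average of per-modality probabilities, skipping missing ones.

    Modalities absent from `weights` get weight 1.0. Returns None when
    every modality is missing."""
    num = 0.0
    den = 0.0
    for modality, p in scores.items():
        # Missing data (e.g. face occluded) is signalled by None or NaN.
        if p is None or math.isnan(p):
            continue
        w = weights.get(modality, 1.0)
        num += w * p
        den += w
    return num / den if den > 0 else None
```

Because the weights are renormalized over the available modalities, a frame with an occluded face still yields an estimate from voice and head motion. Whether this is preferable to imputation or to early fusion depends on the application.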

A further key challenge is to develop statistical and computational approaches suited to the content and temporal structure of dyadic interactions. Sequential learning models, such as hidden Markov models (HMMs) or conditional random fields (CRFs), are typically used to characterize the temporal structure of social interactions. Further approaches of this type will be of great benefit for the automatic analysis and understanding of interpersonal communication in social interaction.

Multimodal Embodiment

In the past ten years, a significant amount of effort has been devoted to exploring the potential of social signal processing in human interaction with embodied conversational agents and social robots. The social interaction capability of an artificial agent may be defined as the ability of a system to interact seamlessly with humans. This definition implies the following:

- The human produces and expects responses to social signals in the communication with the agent;
- The agent not only perceives the social signals emitted by the human, but also uses social signals to further its own purposes.

Particularly, the latter point implies a rich internal representation of humans and human–human interactions for the agent.

Needless to say, specific aspects of embodied social interaction cannot be studied under laboratory conditions alone; naturalistic social settings and people’s daily environments are needed to situate user–agent communication (see Section “The Data” for issues related to the collection of data in naturalistic settings). In the recent literature, current goals in multimodal embodied interaction focus on implementing sets of social communication skills in the agent, including detection of humour, empathy, compassion and affect [140]. Basic skills like facial emotion recognition, gaze detection, dialogue management and non-verbal signal processing are still far from fully effective. The synthesis and timing of non-verbal signals, and appropriate ways of signalling apparent social cues, are also being studied.

This section identifies two major challenges in this area. The first is that these studies typically hold the cultural context fixed. One may argue that even humans have trouble selecting appropriate responses in an unfamiliar cultural setting, but studies on artificial agents typically take place in very restricted domains, and naturalistic contexts are absent. The second problem is that the social behaviour of the agent is often not grounded in a rich internal representation and therefore lacks depth [7, 118]. When an agent shows signs of enjoying humour, it does so because an internal rule is triggered to display amusement as the appropriate response to a limited set of interactional situations. This way of modelling social exchanges is very rudimentary: while it can be an initial step towards implementing a social agent, it is very far from capturing the complexity and richness of social communication in real life. The two issues are strongly connected; without a proper internal representation, shallow models cannot be expected to adapt to different social contexts.

Approaches to Multimodal Embodiment

Social signals are strongly contextualized. For example, in a situation of bereavement, a gesture performed close to the interacting party can easily be interpreted as showing sympathy, whereas the same gesture could be entirely inappropriate in a different context. The interpretation of social signals depends not only on the correct perception and categorization of the signal, but also on evaluation and active interpretation by the interacting parties. While humans are adept at this, artificial agents lack the semantic background knowledge to deal with such subtleties. Consequently, the design of human–agent interaction needs to assume that the technology is limited and to compensate for its shortcomings by structuring the exchange around signals that are clear and simple, tailored to the capabilities of the agent, yet still rich enough to convey the internal states of the agent to the human and vice versa.

Technologies for realizing individual components of a social agent have reached an advanced level. International benchmarking campaigns, such as the series of Audio-Visual Emotion Challenges (AVEC; see Footnote 1), have considerably fostered progress in social signal interpretation. This is important for artificial agents that need to understand their users in naturalistic settings, but human–agent interactions clearly do not need to use exactly the same signals as human–human interaction. The archetypal example is a domestic cat, which produces a different set of social signals than a human, yet communicates seamlessly over this set. The contribution of benchmarking campaigns is essential to the development of new solutions. Realistic data, naturalistic behaviours and real-time processing are key aspects of these campaigns. The last aspect is particularly important: most challenges focus on offline processing, but the online mode, which is essential for real, situated social interactions, is a much more difficult setting [120]. Candace Sidner and Charles Rich [112] coined the term always-on relational agents to describe the vision of a robotic or virtual character that lives as a permanent member of a human household, a vision that remains a grand challenge. In a related perspective, Barbara Grosz [56] asked: “Is it imaginable that a computer (agent) team member could behave, over the long term and in uncertain, dynamic environments, in such a way that people on the team will not notice it is not human?” The perception, negotiation and generation of social cues in context are necessary to achieve this condition.

Open Issues and Challenges

As tools become more diversified and layered, it becomes possible to create agents with more depth. Work done in the SEMAINE project [123] has shown that simple backchannel signals, such as “I see”, may suffice to create the illusion of a sensitive listener. However, engaging humans over a longer period of time would require a deeper understanding of the dialogue. While a significant amount of work has been done on semantic/pragmatic processing in NLP, work that accounts for the close interaction between the communication streams required for semantic/pragmatic processing and social signal processing is rare (see Section “Computational Models of Interaction”). Integrating social signal processing with semantic and pragmatic analysis may help to resolve ambiguities. In particular, short utterances tend to be highly ambiguous when only the linguistic data are considered. An utterance like “right” may be interpreted as a confirmation or, if intended ironically, as a rejection, and so may the absence of an utterance. Preliminary studies have shown that taking social cues into account may help to improve the robustness of semantic and pragmatic analysis [21].

Finding the right level of sensitivity is very important for seamless interaction, and this requires strong adaptation skills on the part of the agent. Mike Mozer’s [89] early experiments on the adaptive neural network house established that people tolerate the mistakes of an “intelligent” system only to a limited extent. The same holds for social signals: agents that act and react inappropriately will most likely irritate users [6]. Treating every user behaviour as possible input to the agent (the so-called “Midas Touch Problem” [61]) results in poor interactions and confused users.

Recent work in the framework of the “Natural Interaction with Social Robots Topic Group” (NISR-TG, see Footnote 2) proposes several levels to describe the social ability of an agent:

- Level 0: The agent does not interact with the human;
- Level 1: The agent perceives the human as an object (useful for orienting and navigating);
- Level 2: The agent perceives the human as another agent that is represented explicitly and can be reidentified time and again;
- Level 3: A two-way interaction is possible, provided that the interacting human knows and obeys some conventions and behaviours required by the agent’s system;
- Level 3a: A two-way interaction is possible, including the ability to interact through spoken language;
- Level 4: The agent adapts its behaviour to the interaction partners during the interaction;
- Level 5: The agent recognizes different users and adjusts its behaviour accordingly;
- Level 6: The agent is capable of interacting with more than one user;
- Level 7: The agent is endowed with personality traits that users can recognize as such and that result in different behaviours being displayed in the same situations;
- Level 8: The agent is capable of learning and accumulating experience over multiple interactions;
- Level 9: The agent is capable of building and sustaining relationships with its users.

Progressing through the levels, the agent is expected to gain one-way and two-way interaction capabilities, followed by a more advanced set of skills including adaptation, multiparty interaction management and the incorporation of social constructs like personality.

Humans adapt their social behaviours during interactions based on explicit or implicit cues they receive from the interlocutor. In order to establish longer-lasting relationships between artificial companions and human users, artificial companions need to be able to adjust their behaviour on the basis of previous interactions. That is, they should remember previous interactions and learn from them [10]. To this end, sophisticated mechanisms for the simulation of self-regulatory social behaviours will be required. Furthermore, social interactions will have to be personalized to individuals of different gender, personality and cultural background. For example, cultural norms and values determine whether it is appropriate to show emotions in a particular situation [83] and how they are interpreted by others [82]. While offline learning is prevalent in current systems exploiting SSP techniques, future work should explore the potential of online learning in order to enable continuous social adaptation processes. For the integration of context, novel sensor technologies can be used by the agent in ways that are not available to humans in an ordinary interaction [41, 139]. Multimodality can also be harnessed in expressing social signals in novel ways, for instance, by adding haptic cues to visual displays [15, 49].
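As an illustration of the online learning mentioned above, the following sketch keeps a per-user logistic model that is updated by one stochastic gradient step after each interaction, so that the agent's expectations shift as the user's reactions accumulate. The feature encoding, the learning rate and the idea of predicting whether a behaviour will be well received are assumptions for the example, not an established design.

```python
import math


class OnlineUserModel:
    """Per-user logistic model updated after every interaction (online SGD).

    Predicts the probability that a given agent behaviour (e.g. initiating
    small talk) will be well received, from hypothetical context features.
    """

    def __init__(self, n_features: int, learning_rate: float = 0.1):
        self.w = [0.0] * n_features
        self.b = 0.0
        self.lr = learning_rate

    def predict(self, x: list[float]) -> float:
        z = self.b + sum(wi * xi for wi, xi in zip(self.w, x))
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, x: list[float], reaction: int) -> None:
        """One SGD step on the log-loss; reaction is 1 (positive) or 0 (negative)."""
        error = self.predict(x) - reaction   # gradient of log-loss w.r.t. the logit
        self.w = [wi - self.lr * error * xi for wi, xi in zip(self.w, x)]
        self.b -= self.lr * error


# Hypothetical features: x[0] = user seems busy, x[1] = user seems relaxed.
model = OnlineUserModel(n_features=2, learning_rate=0.5)
for _ in range(50):
    model.update([1.0, 0.0], 0)   # small talk was unwelcome while busy
    model.update([0.0, 1.0], 1)   # small talk was welcome while relaxed
```

Because each update costs only one pass over the features, such a model can run continuously during deployment, which is what distinguishes it from the offline training prevalent in current systems.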

At a finer level, a single interaction between two agents also involves interactive alignment (see also Section “Behaviour Analysis”), whereby the interacting parties converge on similar representations at different levels of linguistic processing [51, 101]. Alignment at higher levels (e.g. common goals) relies on alignment at lower levels (e.g. objects of joint attention). This requires that the agents model their interaction partners, anticipate the direction of the interaction, and align both their communicative acts and their actions [115]. Research in cognitive science and linguistics can be expected to be essential in achieving these goals (see Section “Computational Models of Interaction”).
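Alignment is often operationalized through the repetition of linguistic material across turns. The sketch below computes a crude lexical proxy: for each turn, the fraction of its word types already used in the preceding turn. It assumes strictly alternating speakers and captures only the lexical level, not the higher levels of alignment discussed above.

```python
import re


def tokens(turn: str) -> set[str]:
    """Lower-cased word types of a turn (punctuation ignored)."""
    return set(re.findall(r"[a-z']+", turn.lower()))


def lexical_alignment(turns: list[str]) -> list[float]:
    """For each turn after the first, the fraction of its word types that also
    appear in the immediately preceding turn (assumed to be the other speaker's)."""
    scores = []
    for prev, cur in zip(turns, turns[1:]):
        cur_types = tokens(cur)
        if not cur_types:
            scores.append(0.0)
            continue
        scores.append(len(cur_types & tokens(prev)) / len(cur_types))
    return scores


turns = ["go past the old mill", "past the old mill, then what?", "then turn left at the mill"]
scores = lexical_alignment(turns)
```

Higher values indicate that a speaker reuses the partner's wording; tracking how such scores evolve over a dialogue is one simple way of looking at convergence.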

Computational Models of Interaction

Broadly based on the work of Tomasello [130, 131] and others, human–human interaction can be represented as a three-step process: sharing attention, establishing common ground and forming shared goals (a.k.a. joint intentionality). Two prerequisites for successful human–human communication via joint intentionality are:

- The ability to form a successful model of the cognitive state of the people around us, i.e. to decode not only overt but also covert communication signals, also referred to as “recursive mind-reading”;
- Establishing and building trust, a truly human trait.

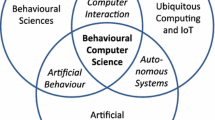

Affective computing, SSP and behavioural signal processing (BSP) address the first prerequisite by building machines that can understand the emotional, social and cognitive state of an individual. A layered view of human–machine interaction from the cognitive and computational perspectives is shown in Fig. 1. This section reviews computational models and associated challenges for each layer.

Joint Attention and Saliency

Unlike computers, humans process only the most salient parts of an image, a sound or a brochure, effectively ignoring the rest. Being able to model and predict what a human sees and hears in an audio-visual scene is the first step towards forming a cognitive representation of that scene, as well as towards establishing common ground in interaction scenarios.

Saliency- and attention-based models have played a significant role in multimedia processing in the past decade [22, 39, 48, 66, 71, 77, 90, 108, 128], exploiting low-level cues from the (mostly) visual, audio and spoken language (transcription) modalities: they have proved very successful in identifying salient events in multimedia for a variety of applications. However, attention-based algorithms typically use only perceptually motivated low-level (frame-based) features and employ no high-level semantic information, with few exceptions in very specific cases (mostly in the visual domain) [90].
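For illustration, a minimal frame-based, purely bottom-up audio saliency cue can be computed from short-time energy. The sketch assumes mono audio sampled at 16 kHz (so the defaults correspond to 25 ms frames with a 10 ms hop) and flags frames whose log-energy lies well above the clip average; the threshold `z_thresh` is a hypothetical choice, and real systems combine many more low-level features.

```python
import numpy as np


def energy_saliency(signal: np.ndarray, frame_len: int = 400, hop: int = 160,
                    z_thresh: float = 1.5) -> np.ndarray:
    """Boolean mask of 'salient' frames: short-time log-energy more than
    z_thresh standard deviations above the clip mean (a purely bottom-up cue).
    Assumes len(signal) >= frame_len."""
    n_frames = 1 + (len(signal) - frame_len) // hop
    frames = np.stack([signal[i * hop: i * hop + frame_len] for i in range(n_frames)])
    log_energy = np.log(np.mean(frames ** 2, axis=1) + 1e-10)
    # Standardize within the clip so the threshold is relative, not absolute.
    z = (log_energy - log_energy.mean()) / (log_energy.std() + 1e-10)
    return z > z_thresh


# One second of quiet noise with a loud 50 ms burst in the middle.
rng = np.random.default_rng(0)
x = 0.01 * rng.standard_normal(16000)
x[8000:8800] += rng.standard_normal(800)
mask = energy_saliency(x)
```

Such a cue carries no semantic information at all: it marks the burst as salient whatever it contains, which is precisely the limitation of low-level attention models noted above.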

Challenges still remain in (1) mid- and high-level feature extraction, including the incorporation of semantics (scenes, objects, actions), and (2) computational models for the multimodal fusion of bottom-up (gestalt-based) and top-down (semantic-based) attentional mechanisms. Applying these multimodal saliency models to realistic human–human (especially) and human–computer interaction scenarios also remains a challenge. The most promising research direction for these challenges seems to be deep learning, whose core strength is the integration of knowledge across levels of different granularity [14]. Finally, identifying the dynamics of attention, i.e. constructing joint (interactional) attention models, remains an open problem in this area.
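A simple baseline for the fusion problem in (2) is a weighted linear combination of normalized saliency curves. The sketch below, an assumption for illustration rather than a recommended model, averages per-modality bottom-up curves and mixes the result with a top-down curve (for example, the frame-wise score of a hypothetical object or keyword detector).

```python
import numpy as np


def minmax(x: np.ndarray) -> np.ndarray:
    """Rescale a curve to [0, 1]; a flat curve maps to all zeros."""
    span = x.max() - x.min()
    return np.zeros_like(x, dtype=float) if span == 0 else (x - x.min()) / span


def fuse_saliency(bottom_up: dict[str, np.ndarray], top_down: np.ndarray,
                  weights: dict[str, float], w_top_down: float = 0.5) -> np.ndarray:
    """Per-frame fused saliency in [0, 1]: weighted mean of normalized
    bottom-up curves (one per modality), then mixed with a top-down curve."""
    total = sum(weights[m] for m in bottom_up)
    bu = sum(weights[m] * minmax(curve) for m, curve in bottom_up.items()) / total
    return (1 - w_top_down) * bu + w_top_down * minmax(top_down)


audio = np.array([0.0, 1.0, 0.0, 0.0])
visual = np.array([0.0, 0.0, 2.0, 0.0])
semantic = np.array([0.0, 0.0, 1.0, 0.0])   # e.g. an object of interest is detected
s = fuse_saliency({"audio": audio, "visual": visual}, semantic,
                  {"audio": 1.0, "visual": 1.0})
```

Here the top-down cue breaks the tie between an auditory and a visual event. Fixed linear weights cannot capture how attention shifts with task and context, which is one reason why the fusion problem remains open.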

Common Ground and Concept Representations

While interacting, humans process and disambiguate multimodal cues, integrating low-, mid- and high-level cognitive functions, specifically using the low-level machinery of cue selection (as discussed above) via (joint) attention, the mid-level machinery of semantic disambiguation via common ground and shared conceptual representations and the high-level machinery of intention awareness [62].

Since establishing common ground is a prerequisite for successful communication, an essential module of an ideal interacting machine is an extensive cognitive semantic/pragmatic representation, that is, a network of concepts and their relations that forms the very essence of common ground. In this sense, formal ontologies may help.
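A toy version of such a network of concepts and relations can be written as a set of triples, with inference reduced to following “is-a” links transitively and inheriting properties from superclasses. The concepts and relation names below are hypothetical, and this is far simpler than description-logic reasoning over formal (e.g. OWL) ontologies.

```python
from collections import defaultdict

# A toy concept network as (subject, relation, object) triples.
# All concepts and relations are hypothetical examples.
TRIPLES = [
    ("espresso", "is_a", "coffee"),
    ("coffee", "is_a", "hot_drink"),
    ("hot_drink", "is_a", "drink"),
    ("hot_drink", "served_in", "cup"),
    ("tea", "is_a", "hot_drink"),
]


def build_graph(triples):
    graph = defaultdict(list)
    for s, r, o in triples:
        graph[s].append((r, o))
    return graph


def ancestors(graph, concept: str) -> set[str]:
    """All superclasses of a concept via the transitive closure of is_a."""
    found, stack = set(), [concept]
    while stack:
        for rel, obj in graph[stack.pop()]:
            if rel == "is_a" and obj not in found:
                found.add(obj)
                stack.append(obj)
    return found


def inherited(graph, concept: str, relation: str) -> set[str]:
    """Values of `relation` stated for the concept or for any of its superclasses."""
    return {obj for c in {concept} | ancestors(graph, concept)
            for rel, obj in graph[c] if rel == relation}


g = build_graph(TRIPLES)
```

For instance, nothing states directly that an espresso is served in a cup, but the fact follows from the network; shared inferences of this kind are part of what an interacting machine would need in order to negotiate common ground.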

Formal ontologies [57] are a top-down (knowledge-based) semantic representation that has been used for interaction modelling mainly by the research community, e.g. [142]. The main advantages of ontologies are description clarity (via mathematical logic) and inference power. However, the following challenges must still be addressed to make ontologies a viable representation for practical interactional systems:

- Mapping between the semantic and lexical/surface representations, i.e. the “lexicalization” of ontologies, which is necessary both for natural language (NL) understanding [34] and for NL generation [8]; the same problem also holds in the visual domain, where a proper “visual ontology” is missing or available only in very restricted domains [132];
- Representing ambiguous semantics [4];
- Representing complex semantics, e.g. time relationships [13];
- Combining ontology-driven semantics with bottom-up (data-driven) approaches, e.g. for grammar induction [52] and, more generally, for computer vision.

Grounding exists only in the context of our semantic, affective and interactional cognitive representations and should be addressed as such. This poses the grand challenge of using “big data” to construct such cognitive representations, as well as of defining the “topology” (unified vs. distributed) and processing logic (parallel vs. serial) of these representations. Cognitively motivated conceptual representations and learning approaches, e.g. common-sense reasoning [104] and transfer learning [96], as well as novel machine learning algorithms, e.g. extreme learning machines [25], can help to (1) achieve rapid learning of, and adaptation to, new concepts and situations from very few examples (situational learning and understanding) and (2) provide grounding in interaction and problem-solving settings (negotiating common ground).
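To show why extreme learning machines are associated with fast learning, the sketch below implements a single-hidden-layer ELM in NumPy: the input weights are random and never trained, and only the output weights are fitted in closed form. It uses a ridge-regularized least-squares solution (the `reg` parameter), a common variant of the original pseudo-inverse formulation; the layer size and seeds are arbitrary.

```python
import numpy as np


class ELM:
    """Single-hidden-layer extreme learning machine: random, fixed input weights;
    output weights solved in closed form by (ridge-regularized) least squares."""

    def __init__(self, n_hidden: int = 50, reg: float = 1e-3, seed: int = 0):
        self.n_hidden, self.reg = n_hidden, reg
        self.rng = np.random.default_rng(seed)

    def _hidden(self, X: np.ndarray) -> np.ndarray:
        return np.tanh(X @ self.W + self.b)

    def fit(self, X: np.ndarray, y: np.ndarray) -> "ELM":
        self.classes = np.unique(y)
        T = (y[:, None] == self.classes[None, :]).astype(float)   # one-hot targets
        # Random projection: drawn once, never trained.
        self.W = self.rng.standard_normal((X.shape[1], self.n_hidden))
        self.b = self.rng.standard_normal(self.n_hidden)
        H = self._hidden(X)
        # beta = (H^T H + reg * I)^-1 H^T T, the only learned parameters.
        A = H.T @ H + self.reg * np.eye(self.n_hidden)
        self.beta = np.linalg.solve(A, H.T @ T)
        return self

    def predict(self, X: np.ndarray) -> np.ndarray:
        return self.classes[np.argmax(self._hidden(X) @ self.beta, axis=1)]


# Ten labelled examples from two well-separated clusters.
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(-2.0, 0.3, size=(5, 2)), rng.normal(2.0, 0.3, size=(5, 2))])
y = np.array([0] * 5 + [1] * 5)
model = ELM(n_hidden=20).fit(X, y)
```

Training reduces to one linear solve, with no iterative optimization, which is what makes such models attractive for fast adaptation; how well they generalize from very few examples still depends on the data.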

From Semantics to Behaviour and Interaction

Even if it were possible to solve the multimodal understanding problem by mapping from signal(s) to semantics (a monumental task in itself), this would still be only half of the way. Assuming that a conceptual representation is in place (see above), this section discusses how to jointly model semantics and affect.

Given that the cognitive semantic space is both distributed and fragmented into subspaces, the mapping from semantics to affective labels should also be distributed and fragmented. Semantic-affective models (SAM) [30, 79, 133] are based on the assumption that semantic similarity implies affective similarity. Thus, affective models can be simply constructed as mappings from semantic neighbourhoods to affective scores. In the SAM model proposed in [79, 80], the affective label of a token can be expressed as a map (trainable linear combination) of its semantic similarities to a set of seed words and the affective ratings of these words. The model can be extended to also handle many-to-many mappings between multiple layers of cognitive representations. The model is consistent with (and implementable via) the multilayered cognitive view of representation and deep learning models.
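Written out, the linear mapping described above can be sketched as $\hat{v}(t) = a_0 + \sum_{i=1}^{N} a_i\, v(w_i)\, d(w_i, t)$, where $w_1, \dots, w_N$ are the seed words, $v(w_i)$ their affective ratings, $d(w_i, t)$ the semantic similarity between seed $w_i$ and token $t$, and $a_0, \dots, a_N$ the trainable weights (the bias $a_0$ is included here as an assumption). The following sketch fits the weights by least squares on tokens with known ratings; how the similarities are computed is left out, and the similarity matrices are assumed to be given.

```python
import numpy as np


def fit_sam(sim_train: np.ndarray, seed_ratings: np.ndarray,
            train_ratings: np.ndarray) -> np.ndarray:
    """Fit a_0..a_N by least squares.

    sim_train[j, i]  = semantic similarity d(w_i, t_j) of seed word i and training token j
    seed_ratings[i]  = affective rating v(w_i) of seed word i
    train_ratings[j] = known affective rating of training token j
    """
    features = sim_train * seed_ratings[None, :]           # v(w_i) * d(w_i, t_j)
    design = np.hstack([np.ones((features.shape[0], 1)), features])  # ones column for a_0
    coeffs, *_ = np.linalg.lstsq(design, train_ratings, rcond=None)
    return coeffs


def predict_sam(coeffs: np.ndarray, sim: np.ndarray, seed_ratings: np.ndarray) -> np.ndarray:
    """Affective score of new tokens from their similarities to the seed words."""
    return coeffs[0] + (sim * seed_ratings[None, :]) @ coeffs[1:]


# Synthetic check: ratings generated from known weights are recovered exactly.
rng = np.random.default_rng(0)
seeds = np.array([1.0, -1.0, 0.5])           # ratings of three hypothetical seed words
true = np.array([0.1, 0.8, 0.6, 0.4])        # a_0, a_1, a_2, a_3
S = rng.uniform(0, 1, size=(20, 3))          # similarities of 20 training tokens
y = true[0] + (S * seeds) @ true[1:]
a = fit_sam(S, seeds, y)
```

Once fitted, the model scores any token for which similarities to the seed words can be computed, which is why the quality of the underlying semantic space matters so much.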

Although good performance can be obtained for language- and image-processing applications, the challenge remains of how to apply this model to audio and video, where segmenting the stream into tokens is not straightforward. Also, while the model works very well at estimating the affective content of single tokens (words, images), going from a single token to a sequence of tokens (e.g. from words to sentences) is a hard open problem. Last but not least, generalizing this model to other behavioural labels remains a grand challenge.

Open Issues and Challenges

The previous sections have identified major challenges that lie ahead in the fields of affective, social and behavioural signal processing as they pertain to interaction modelling. They have also argued that these challenges are very unlikely to be addressed successfully without taking into account the peculiarities of human cognition.

The position of this paper is that solutions to these problems should be grounded in human cognition, including models of the errors (cognitive biases) and nonlinear logic of humans [111]. Although “pure” machine learning algorithms often achieve good performance when classifying low- and mid-level labels, they are less successful at higher-level behavioural classification tasks. This can be partially attributed to the ambiguity, abstraction, subjectivity and representation depth inherent in high-level cognitive tasks. Cognitively inspired models can represent the very errors, biases, subjective beliefs and attitudes of a human. Thus, adopting a human-centred approach becomes increasingly important as we move from signals to behaviours and interaction. The recent achievements of cognitively motivated machine learning paradigms, such as representation, transfer and deep learning, lend further support to this view. Interaction modelling poses new challenges and opens up fruitful research directions for the years to come.

Applications

Effectiveness in real-world applications is the ultimate test for any technology-oriented research effort. Besides offering an opportunity for methodological progress and for acquiring key insights into human psychology and cognition, research on modelling, analysis and synthesis of human behaviour aims at impact in terms of both commercial exploitation, i.e. the development of products that reach the market and result in job creation, and solutions to societal problems, i.e. the development of systems that improve the quality of life, especially of disadvantaged groups.

Addressing the issues and challenges presented in this work will not only advance the state of the art but also increase the chances of success for a wide spectrum of real-world technologies, including the following (the list is not exhaustive):

- Analysis of agent–customer interactions at call centres (see Footnote 3) with the goal of improving the quality of services [50];
- Improvement of tutoring systems aimed at supporting students in individual and collective learning processes [121];
- Creation of speech synthesizers (see Footnote 4) that convey both verbal and non-verbal aspects of a text [124];
- Enrichment of multimedia indexing systems with social and affective information [6, 26];
- Recommendation systems that take into account stable individual characteristics (e.g. personality traits) and transient states (e.g. emotions) [28, 129];
- Socially intelligent surveillance and monitoring systems [40].

The rest of this section focuses on three application domains that address crucial issues and aspects of everyday life, namely health care, human–machine interactions and human–human conversations. The three cases account for three major steps in the process that leads from the laboratory to the real world:

- The development of a vision based on the current state of the art and major technological trends, in the case of healthcare personal agents (see Section “The Healthcare Personal Agent: A Vision for the Future of Medicine”);
- The realization of a prototype that addresses one specific application (intelligent control centres) but results in the definition of principles that can be transferred to other areas (see Section “Building a Working Prototype: The Example of Intelligent Control Centres”);
- The definition of concrete steps bridging the gap between research, industry and society, in the case of conversational technologies (see Section “Roadmapping Research and Innovation in Conversational Interaction Technologies”).

The case studies described in the following provide insights into the interdependency between the challenges outlined so far and application-driven needs.

The Healthcare Personal Agent: A Vision for the Future of Medicine

Advances in mobile technologies, such as voice, video, touch screens, web 2.0 capabilities and the integration of various on-board sensors and wearable computers, have made mobile devices ideal units for the delivery of healthcare services [11]. At the same time, the dawn of the data-driven economy has stirred the innovation of processes and products. Unfortunately, innovation has been slow in the healthcare sector, where it is much needed to improve the quality of service at various end-points (hospitals, healthcare professionals, patients) and to reduce costs.

The 2012 survey in [91] reports that in Europe there were more than one hundred health apps in a variety of languages (Turkish, Italian, Swedish, etc.) and domains (mental problems, self-diagnosis, heart monitoring, etc.). This growing number of smartphone applications can track user activity, sleeping and eating habits, and covert and overt signals such as blood pressure, heart rate, skin temperature, speech, location and movement, either by using the on-board sensors of the smartphone or by interacting with various wearable and healthcare monitoring devices.

In recent years, there has been a growing research interest in creating applications that can interact with people through context-aware multimodal interfaces. Such applications have been used for various healthcare services, ranging from monitoring and accompanying the elderly [11, 86] to real-time measurement of healthcare quality [29] and healthcare interventions for long-term behaviour change [88].

Such agents can be useful for keeping track of patient activity between visits, for ensuring that patients take their medicines on time or for checking that they follow their recommended health routine (see Section “Multimodal Embodiment” for challenges related to “always-on” agents).

Future research and development on healthcare personal agents should follow an agenda in which current limitations are addressed and new avenues are explored. Such an agenda can directly impact the quality of life and health of people by disrupting current models of healthcare service delivery. Agents will have different physical and virtual appearances (see Section “Multimodal Embodiment” for challenges in embodiment), ranging from avatars to robots (e.g. [86]). Covert signal streams from wearable and mobile sensors may be used effectively to model user state in terms of his/her physiological responses to external stimuli, events and the medical protocol he/she is following (see Section “Behaviour Analysis” for challenges related to behaviour analysis).
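As a hedged illustration of modelling a physiological response to an event, the sketch below expresses the mean heart rate in a window after an event as a z-score relative to a baseline window just before it. The window lengths, the use of heart rate alone and the function name are assumptions for the example, not a clinically validated measure.

```python
import numpy as np


def event_response(hr: np.ndarray, fs: float, event_s: float,
                   baseline_s: float = 30.0, window_s: float = 30.0) -> float:
    """z-score of the mean heart rate in a window after an event, relative to the
    mean and standard deviation of a baseline window just before it."""
    e = int(event_s * fs)                       # sample index of the event
    baseline = hr[max(0, e - int(baseline_s * fs)):e]
    response = hr[e:e + int(window_s * fs)]
    return float((response.mean() - baseline.mean()) / (baseline.std() + 1e-6))


# Heart rate sampled at 1 Hz: resting around 70 bpm, rising to about 85 bpm
# after a (hypothetical) stimulus at t = 60 s.
rng = np.random.default_rng(0)
hr = np.concatenate([70 + rng.normal(0, 2, 60), 85 + rng.normal(0, 2, 60)])
score = event_response(hr, fs=1.0, event_s=60.0)
```

Normalizing by each user's own baseline rather than by population norms is one simple way of accounting for individual differences in physiology.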

Personal agents need to be able to handle basic and complex emotions, such as empathy. In the healthcare domain, the ability to handle emotions is critical to manage and support, for instance, daily healthcare routines. Affective signals and communication need to be adapted to target patient groups such as children and elderly people. By far one of the most important social and cognitive skills of a conversational agent is the ability to carry out a dialogue with a human (see Section “Roadmapping Research and Innovation in Conversational Interaction Technologies”). Different models of user interaction might be needed for different users or user groups and different application domains (e.g. robotic surgery vs. bank fund transfer vs. information seeking). An application tracking children’s tooth-brushing habits might achieve better results with gamification, whereas an obesity monitoring agent should use motivational feedback to improve user compliance.

Fig. 2 Left: A comfortable sensor-equipped chair; micro gestures allow for natural interaction during lengthy passive monitoring periods. Middle: Operators at workstations are tracked, and an acoustic interface targets sound at a particular operator without disturbing others. Right: Collaboration and distribution of urgent tasks via hand gestures and shared screens.

Building a Working Prototype: The Example of Intelligent Control Centres

Human–computer interaction is one of the domains that benefit directly from multimodal technologies for human behaviour understanding. This applies in particular to applications where machines must adapt as intelligently as possible to the natural and spontaneous behaviour of their users, because the users need to concentrate their attention and cognitive efforts on difficult and demanding tasks.

Reducing the cognitive load and enabling immediate reaction to alarms during idle times are key requirements that drove the development of the innovative control centre described in [69]. Comparable efforts on concrete applications have addressed ship bridges [74] and crisis response control rooms [64].

In control centres, teams of human operators collaborate to monitor and manipulate external processes, such as industrial production, IT and telecommunication infrastructure, or public infrastructure such as transportation networks and tunnels. In this domain, innovation in user interfaces has been taken up slowly, since it is limited by governmental regulation or short-term return-on-investment considerations. Surprisingly, many of the systems in use today were first built decades ago and have been extended iteratively without any proper redesign of their user interfaces. Recent generations of operators, however, are digital natives and hence familiar with, for example, mobile devices, gesture interfaces and touch screens. While considerable business opportunities can be expected in the next decade for redesigning the interfaces of such control rooms, many research challenges remain to be addressed.

Most current systems feature redundant input devices and little context awareness, and they expose operators to information overload. Support for task distribution and for collaboration in general leaves much to be desired. One key enabling factor in the redesign of such complex systems is the dynamic interpretation of the operators’ actions and interactions as a team, taking into account the current situation (goal, alarm and stress level, etc.) (see Section “Behaviour Analysis” for the challenges related to understanding the behaviour of groups). Inspired by a human-centred design approach, the concept recently proposed in [69] experiments with combining visual cues, micro (i.e. fingers and hands only) and macro gestural interaction, an acoustic interface with individualized sound radiation, and intelligent data processing (semantic lifting, see [70]) into a single, universal interface. The concept takes into account the specific needs of the operators and the length of work shifts, which, for example, led to the omission of wearable devices such as headsets. Figure 2 illustrates several components of this multimodal interaction concept [69]. The work made clear that, while research has addressed the combination of input and output devices of multiple modalities, much more applied research is required on their interplay in specific tasks in real industrial settings.

In a safety-critical environment, user interaction requires different levels of robustness and precision depending on the task. Control centre operators perform very specific tasks that call for different interaction devices and concepts, and their integration and dynamic adaptation are a challenge. An underlying aim is to actively manage the cognitive load of the operators, mainly to ensure quick reaction in alarm situations. Operator work alternates between idle times, in which operators essentially take a break without leaving their workplace, lengthy passive monitoring tasks and very urgent alarm-handling situations. A significant impact can be expected in this domain from improved user behaviour analysis.

Roadmapping Research and Innovation in Conversational Interaction Technologies

The research community in multimodal conversational interaction has advanced significantly in recent years. However, despite the fast growth of, for example, multimodal smartphone technologies, innovation and commercial exploitation are not always closely connected to research advances. To develop and integrate research and innovation in this area, it is thus important to identify the key innovation drivers and the most promising elements across science, technology, products and services on which to focus in the future.

Technology roadmapping is a process that lays out a path from science and technology development, through integrated demonstration, to products and services that address business opportunities and societal needs. Although often performed by individual businesses, it can also be used to bring together all of the different viewpoints and information sources available in a large stakeholder community, as a way of helping its members work together and achieve more. The EU ROCKIT project was driven by a broad vision for conversational interaction technologies (http://www.citia.eu).

In consultation with researchers and companies of every size (including several workshops involving about 100 researchers and technologists), the ROCKIT support action constructed a technology roadmap for conversational interaction technologies. Since research and business environments can change rapidly, the resulting roadmap is structured to enable stakeholders to steer through change and understand how they can achieve their goals in a changing context. For this reason, the roadmap is not just a series of steps leading from current science and technology outcomes to future profitable products and services, but conveys the relationships among societal drivers of change, products and services, their use cases and research results.

The ROCKIT roadmap connects the strong research base with commercial and industrial activity and with policy makers. To develop the roadmap, and to make tangible links between research and innovation, a small number of target scenarios have been developed. Each scenario includes its societal and technological drivers, research aspects, market and business drivers and potential test beds. We identified a number of common themes emerging from ROCKIT’s consultations with stakeholders, in particular accessibility, multilinguality, the importance of design, privacy by design, systems covering human–human, human–machine and human–environment interactions, robustness, security, potentially ephemeral interactions and the use of technology to enable fun.

Building on these themes, together with the different social, commercial and technological drivers, we have identified five possible target application scenarios:

- Adaptable interfaces for all: Interfaces that recognize who you are, where you are and eventually what you want, by drawing on a profiled knowledge base about your habits and preferences. They will therefore be able to adapt to your disability, language, visual competency and specific need for speech or typed input, depending on whether you are driving or working with both hands on a repair job, seated in front of a keyboard (physical or virtual) or lying in bed (see Section “Multimodal Embodiment” for challenges related to agents with internal representations of users and the ability to adapt to context and interactions).

- Smart personal assistants: Multisensory agents able to integrate heterogeneous sources of knowledge, display social awareness and behave naturally in multiuser situations (see Section “Multimodal Embodiment” for challenges related to synthesis of social behaviour).

- Active access to complex unstructured information: Linking knowledge to rich interaction will enable the development of agents that can search proactively and make inferences from their (possibly limited) knowledge, so that people can be notified of relevant things faster and helped to understand complex situations involving many streams of information (see Section “Computational Models of Interaction” for challenges in representing knowledge and cognitive processes).

- Communicative robots: Embodied agents able to display personality and to generate and interpret social signals (see Sections “Behaviour Analysis” and “Multimodal Embodiment” for related challenges).

- Shared collaboration and creativity: Empowering and augmenting communication between people. This will include new approaches to social sharing (across languages), design platforms that enable people to build their own tools, and scalable systems that enable groups to collaborate with shared goals, facilitate problem solving and provide powerful mechanisms for engagement.
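The decision logic implied by the “Adaptable interfaces for all” scenario can be sketched as a small rule set that combines the current context with a stored user profile. All field names, rules and return values below are hypothetical and serve only to show the kind of reasoning involved.

```python
from dataclasses import dataclass


@dataclass
class Context:
    hands_busy: bool        # e.g. driving or a two-handed repair job
    at_keyboard: bool       # seated in front of a physical or virtual keyboard
    noisy: bool = False     # speech recognition likely to be unreliable


@dataclass
class Profile:
    can_speak: bool = True
    low_vision: bool = False


def choose_input(ctx: Context, profile: Profile) -> str:
    """Pick an input modality from the current context and a stored user profile."""
    speech_ok = profile.can_speak and not ctx.noisy
    if ctx.hands_busy:
        # Hands unavailable: speak, or defer non-urgent interaction if speech fails.
        return "speech" if speech_ok else "defer"
    if ctx.at_keyboard and not profile.low_vision:
        return "typed"
    # Otherwise (e.g. lying in bed), prefer speech and fall back to touch.
    return "speech" if speech_ok else "touch"
```

In a real system each of these flags would itself have to be inferred from sensors and interaction history, which is where the behaviour analysis and user modelling challenges discussed earlier come in.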

Conclusions

This article has described some of the most important challenges and issues that need to be addressed in order to achieve substantial progress in technologies for the modelling, analysis and synthesis of human behaviour, especially as far as social interactions are concerned. Section “The Data” has shown that data, while being a crucial resource, cannot become an asset for the community without widely accepted practices for design, collection and distribution. Section “Behaviour Analysis” has proposed moving the focus of analysis approaches from the individuals involved in an interaction to the phenomena that shape groups of interacting people (e.g. interpersonal influence and social contagion). Section “Multimodal Embodiment” has highlighted the need to endow machines, in particular embodied conversational agents, with an internal representation of their users. Section “Computational Models of Interaction” has focused on the possibility of integrating models of human cognitive processes and semantics into technologies dealing with human behaviour. Finally, Section “Applications” has surveyed application domains that can benefit, or are already benefiting, from technologies aimed at the modelling, analysis and synthesis of behaviour.

While addressing relatively distinct problems, the challenges above have a few aspects in common that might guide at least the first steps required to address them. The first is that human behaviour is always situated and context dependent. Therefore, technologies dealing with human behaviour should try to address highly specific aspects of the contexts in which they are used rather than trying to be generic. Correspondingly, it should always be kept in mind that an approach that is effective in a given situation or context might not work in others. The second is the need to consider both verbal and non-verbal aspects of human–human and human–machine interactions. So far, verbal content and semantics have tended to be neglected, the reasons being that non-verbal aspects are considered more honest and that taking into account what people say can violate their privacy. The third is the need to model explicitly the processes that drive interactive behaviour in humans, e.g. the development of internal representations of others.

The last part of the article has considered three application case studies that account for different steps of the process leading from the laboratory to real-world applications. Healthcare personal agents have been proposed as an example of a research vision that builds upon current technology trends (in particular the diffusion of mobile devices and the availability of large amounts of data) to design new applications of technologies for behaviour analysis. The case of intelligent control centres has shown that implementing an application-driven prototype provides insights into how technologies revolving around behaviour should progress. Finally, the case of conversational technologies has given an example of how a roadmapping process can help bridge the gap between research and application.

Needless to say, the issues proposed in this article do not necessarily cover the entire spectrum of problems currently facing the community. Furthermore, new challenges and issues are likely to emerge as the community addresses those described in this work. However, dealing with the problems proposed in this article will certainly lead to substantial improvements over the current state of the art.

Notes

See http://www.cogitocorp.com for a company working on the analysis of call centre conversations.

See https://www.cereproc.com for a company active in the field.

References

Allwood J, Björnberg M, Grönqvist L, Ahlsén E, Ottesjö C. The spoken language corpus at the department of linguistics, Göteborg university. Forum Qual Soc Res. 2000;1.

Allwood J, Cerrato L, Jokinen K, Navarretta C, Paggio P. The MUMIN coding scheme for the annotation of feedback, turn management and sequencing phenomena. Lang Resour Eval. 2007;41(3–4):273–87.

Altmann U. Studying movement synchrony using time series and regression models. In: Esposito A, Hoffmann R, Hübler S, Wrann B, editors. Program and Abstracts of the Proceedings of COST 2102 International Training School on Cognitive Behavioural Systems, 2011.

Ammicht E, Fosler-Lussier E, Potamianos A. Information seeking spoken dialogue systems–part I: semantics and pragmatics. IEEE Trans Multimed. 2007;9(3):532–49.

Anderson A, Bader M, Bard E, Boyle E, Doherty G, Garrod S, Isard S, Kowtko J, McAllister J, Miller J, et al. The HCRC map task corpus. Lang Speech. 1991;34(4):351–66.

André E. Exploiting unconscious user signals in multimodal human–computer interaction. ACM Trans Multimed Comput Commun Appl. 2013;9(1s):48.

André E. Challenges for social embodiment. In: Proceedings of the Workshop on Roadmapping the Future of Multimodal Interaction Research Including Business Opportunities and Challenges, 2014. p. 35–7.

Androutsopoulos I, Lampouras G, Galanis D. Generating natural language descriptions from OWL ontologies: the NaturalOWL system. 2014. arXiv preprint arXiv:1405.6164.

Ashenfelter KT, Boker SM, Waddell JR, Vitanov N. Spatiotemporal symmetry and multifractal structure of head movements during dyadic conversation. J Exp Psychol Human Percept Perform. 2009;35(4):1072.

Aylett R, Castellano G, Raducanu B, Paiva A, Hanheide M. Long-term socially perceptive and interactive robot companions: challenges and future perspectives. In: Bourlard H, Huang TS, Vidal E, Gatica-Perez D, Morency LP, Sebe N, editors. Proceedings of the 13th International Conference on Multimodal Interfaces, ICMI 2011, Alicante, Spain, 14–18 November 2011. ACM; 2011. p. 323–6.

Baig MM, Gholamhosseini H. Smart health monitoring systems: an overview of design and modeling. J Med Syst. 2013;37(2):9898.

Baker R, Hazan V. LUCID: a corpus of spontaneous and read clear speech in British English. In: Proceedings of the DiSS-LPSS Joint Workshop, 2010.

Batsakis S, Petrakis EG. SOWL: a framework for handling spatio-temporal information in OWL 2.0. Rule-based reasoning, programming, and applications. New York: Springer; 2011.

Bengio Y, Courville A, Vincent P. Representation learning: a review and new perspectives. IEEE Trans Pattern Anal Mach Intell. 2013;35(8):1798–828.

Bickmore TW, Fernando R, Ring L, Schulman D. Empathic touch by relational agents. IEEE Trans Affect Comput. 2010;1(1):60–71.

BNC Consortium. The British National Corpus. 2000. http://www.hcu.ox.ac.uk/BNC

Boker SM, Xu M, Rotondo JL, King K. Windowed cross-correlation and peak picking for the analysis of variability in the association between behavioral time series. Psychol Methods. 2002;7(3):338–55.

Boker SM, Cohn JF, Theobald BJ, Matthews I, Spies J, Brick T. Effects of damping head movement and facial expression in dyadic conversation using real-time facial expression tracking and synthesized avatars. Philos Trans R Soc B. 2009;364:3485–95.

Bonin F, Gilmartin E, Vogel C, Campbell N. Topics for the future: genre differentiation, annotation, and linguistic content integration in interaction analysis. In: Proceedings of the Workshop on Roadmapping the Future of Multimodal Interaction Research Including Business Opportunities and Challenges, 2014. p. 5–8.

Bos J, Klein E, Lemon O, Oka T. DIPPER: description and formalisation of an information-state update dialogue system architecture. In: Proceedings of SIGdial Workshop on Discourse and Dialogue, 2003. p. 115–24.

Bosma W, André E. Exploiting emotions to disambiguate dialogue acts. In: Proceedings of the International Conference on Intelligent User Interfaces, 2004. p. 85–92.

Boujut H, Benois-Pineau J, Ahmed T, Hadar O, Bonnet P. A metric for no-reference video quality assessment for HD TV delivery based on saliency maps. In: Proceedings of IEEE International Conference on Multimedia and Expo, 2011. p. 1–5.

Breazeal CL. Designing sociable robots. Cambridge: MIT Press; 2004.

Bunt H. Dialogue control functions and interaction design. NATO ASI Series F Comput Syst Sci. 1995;142:197.

Cambria E, Huang GB. Extreme learning machines. IEEE Intell Syst. 2013;28(6):30–1.

Cambria E, Hussain A. Sentic album: content-, concept-, and context-based online personal photo management system. Cognit Comput. 2012;4(4):477–96.

Cambria E, Hussain A. Sentic computing: a common-sense-based framework for concept-level sentiment analysis. New York: Springer; 2015.

Cambria E, Mazzocco T, Hussain A, Eckl C. Sentic medoids: organizing affective common sense knowledge in a multi-dimensional vector space. In: Advances in Neural Networks. LNCS, vol. 6677. Springer; 2011. p. 601–10.

Cambria E, Benson T, Eckl C, Hussain A. Sentic PROMs: application of sentic computing to the development of a novel unified framework for measuring health-care quality. Expert Syst Appl. 2012;39(12):10533–43.

Cambria E, Fu J, Bisio F, Poria S. Affective space 2: enabling affective intuition for concept-level sentiment analysis. In: Proceedings of the AAAI Conference on Artificial Intelligence, 2015.

Campbell N. Approaches to conversational speech rhythm: speech activity in two-person telephone dialogues. In: Proceedings of the International Congress of the Phonetic Sciences, 2007. p. 343–8.

Cassell J. Embodied conversational agents. Cambridge: MIT Press; 2000.

Chetouani M. Role of inter-personal synchrony in extracting social signatures: some case studies. In: Proceedings of the Workshop on Roadmapping the Future of Multimodal Interaction Research Including Business Opportunities and Challenges, 2014. p. 9–12.

Cimiano P, Buitelaar P, McCrae J, Sintek M. LexInfo: a declarative model for the lexicon-ontology interface. Web Semant Sci Serv Agents World Wide Web. 2011;9(1):29–51.

Cohn J, Tronick E. Mother–infant face-to-face interaction: influence is bidirectional and unrelated to periodic cycles in either partner’s behavior. Dev Psychol. 1988;24(3):386–92.

Cohn JF, Ekman P. Measuring facial action by manual coding, facial EMG, and automatic facial image analysis. In: Harrigan J, Rosenthal R, Scherer K, editors. Handbook of nonverbal behavior research methods in the affective sciences. Oxford: Oxford University Press; 2005. p. 9–64.

Core M, Allen J. Coding dialogs with the DAMSL annotation scheme. In: AAAI Fall Symposium on Communicative Action in Humans and Machines, 1997. p. 28–35.

Cristani M, Ferrario R. Statistical pattern recognition meets formal ontologies: towards a semantic visual understanding. In: Proceedings of the Workshop on Roadmapping the Future of Multimodal Interaction Research Including Business Opportunities and Challenges, 2014. p. 23–25.

Cristani M, Bicego M, Murino V. On-line adaptive background modelling for audio surveillance. In: Proceedings of the International Conference on Pattern Recognition, 2004. vol. 2, p. 399–402.

Cristani M, Raghavendra R, Del Bue A, Murino V. Human behavior analysis in video surveillance: a social signal processing perspective. Neurocomputing. 2013;100:86–97.

Damian I, Tan CSS, Baur T, Schöning J, Luyten K, André E. Exploring social augmentation concepts for public speaking using peripheral feedback and real-time behavior analysis. In: Proceedings of the International Symposium on Mixed and Augmented Reality, 2014.

Delaherche E, Chetouani M. Multimodal coordination: exploring relevant features and measures. In: Proceedings of the International Workshop on Social Signal Processing, 2010. p. 47–52.

Delaherche E, Chetouani M, Mahdhaoui M, Saint-Georges C, Viaux S, Cohen D. Interpersonal synchrony: a survey of evaluation methods across disciplines. IEEE Trans Affect Comput. 2012;3(3):349–65.

Delaherche E, Dumas G, Nadel J, Chetouani M. Automatic measure of imitation during social interaction: a behavioral and hyperscanning-EEG benchmark. Pattern Recognit Lett. 2015 (to appear).

DuBois JW, Chafe WL, Meyer C, Thompson SA. Santa Barbara corpus of spoken American English. CD-ROM. Philadelphia: Linguistic Data Consortium; 2000.

Edlund J, Beskow J, Elenius K, Hellmer K, Strömbergsson S, House D. Spontal: a Swedish spontaneous dialogue corpus of audio, video and motion capture. In: Proceedings of Language Resources and Evaluation Conference, 2010.

Ekman P, Huang T, Sejnowski T, Hager J. Final report to NSF of the planning workshop on facial expression understanding. http://face-and-emotion.com/dataface/nsfrept/nsf_contents.htm. 1992.

Evangelopoulos G, Zlatintsi A, Potamianos A, Maragos P, Rapantzikos K, Skoumas G, Avrithis Y. Multimodal saliency and fusion for movie summarization based on aural, visual, textual attention. IEEE Trans Multimed. 2013;15(7):1553–68.

Gaffary Y, Martin JC, Ammi M. Perception of congruent facial and haptic expressions of emotions. In: Proceedings of the ACM Symposium on Applied Perception, 2014. p. 135.

Galanis D, Karabetsos S, Koutsombogera M, Papageorgiou H, Esposito A, Riviello MT. Classification of emotional speech units in call centre interactions. In: Proceedings of IEEE International Conference on Cognitive Infocommunications, 2013. p. 403–6.

Garrod S, Pickering MJ. Joint action, interactive alignment, and dialog. Topics Cognit Sci. 2009;1(2):292–304.

Georgiladakis S, Unger C, Iosif E, Walter S, Cimiano P, Petrakis E, Potamianos A. Fusion of knowledge-based and data-driven approaches to grammar induction. In: Proceedings of Interspeech, 2014.

Godfrey JJ, Holliman EC, McDaniel J. SWITCHBOARD: telephone speech corpus for research and development. Proc IEEE Int Conf Acoust Speech Signal Process. 1992;1:517–20.

Gottman J. Time series analysis: a comprehensive introduction for social scientists. Cambridge: Cambridge University Press; 1981.

Greenbaum S. ICE: the international corpus of English. Engl Today. 1991;7(4):3–7.

Grosz BJ. What question would Turing pose today? AI Mag. 2012;33(4):73–81.

Guarino N, editor. Proceedings of the international conference on formal ontology in information systems. Amsterdam: IOS Press; 1998.

Hammal Z, Cohn J. Intra- and interpersonal functions of head motion in emotion communication. In: Proceedings of the Workshop on Roadmapping the Future of Multimodal Interaction Research Including Business Opportunities and Challenges, in conjunction with the 16th ACM International Conference on Multimodal Interaction ICMI 2014. 12–16 November 2014. p. 19–22.

Hammal Z, Cohn JF, Messinger DS, Masson W, Mahoor M. Head movement dynamics during normal and perturbed parent-infant interaction. In: Proceedings of the biannual Humaine Association Conference on Affective Computing and Intelligent Interaction, 2013.

Hammal Z, Cohn JF, George DT. Interpersonal coordination of head motion in distressed couples. IEEE Trans Affect Comput. 2014;5(2):155–67.

Hoekstra A, Prendinger H, Bee N, Heylen D, Ishizuka M. Highly realistic 3D presentation agents with visual attention capability. In: Proceedings of International Symposium on Smart Graphics, 2007. p. 73–84.

Howard N, Cambria E. Intention awareness: improving upon situation awareness in human-centric environments. Human-Centric Comput Inform Sci. 2013;3(1):1–17.

Hussain A, Cambria E, Schuller B, Howard N. Affective neural networks and cognitive learning systems for big data analysis. Neural Netw. 2014;58:1–3.

Ijsselmuiden J, Grosselfinger AK, Münch D, Arens M, Stiefelhagen R. Automatic behavior understanding in crisis response control rooms. In: Ambient Intelligence. Lecture Notes in Computer Science, vol. 7683. Springer; 2012. p. 97–112.

ISO. Language resource management: semantic annotation framework (SemAF), part 2: dialogue acts. 2010.

Itti L, Koch C. A saliency-based search mechanism for overt and covert shifts of visual attention. Vision Res. 2000;40(10):1489–506.

Jaffe J, Beebe B, Feldstein S, Crown CL, Jasnow M. Rhythms of dialogue in early infancy. Monogr Soc Res Child. 2001;66(2):1–149.

Janin A, Baron D, Edwards J, Ellis D, Gelbart D, Morgan N, Peskin B, Pfau T, Shriberg E, Stolcke A. The ICSI meeting corpus. Proc IEEE Int Conf Acoust Speech Signal Process. 2003;1:I-364.

Kaiser R, Fuhrmann F. Multimodal interaction for future control centers: interaction concept and implementation. In: Proceedings of the Workshop on Roadmapping the Future of Multimodal Interaction Research Including Business Opportunities and Challenges, 2014.

Kaiser R, Weiss W. Virtual director. In: Media production, delivery and interaction for platform independent systems. New York: Wiley; 2014. p. 209–59.

Kalinli O. Biologically inspired auditory attention models with applications in speech and audio processing. PhD thesis, University of Southern California, 2009.

Kenny D, Mannetti L, Pierro A, Livi S, Kashy D. The statistical analysis of data from small groups. J Pers Soc Psychol. 2002;83(1):126.

Koutsombogera M, Papageorgiou H. Multimodal analytics and its data ecosystem. In: Proceedings of the Workshop on Roadmapping the Future of Multimodal Interaction Research Including Business Opportunities and Challenges, 2014. p. 1–4.

Kristiansen H. Conceptual design as a driver for innovation in offshore ship bridge development. In: Maritime Transport VI, 2014. p. 386–98.

Larsson S, Traum DR. Information state and dialogue management in the TRINDI dialogue move engine toolkit. Nat Lang Eng. 2000;6(3&4):323–40.

Lemke JL. Analyzing verbal data: principles, methods, and problems. In: Second international handbook of science education. New York: Springer; 2012. p. 1471–84.

Liu T, Feng X, Reibman A, Wang Y. Saliency inspired modeling of packet-loss visibility in decoded videos. In: International Workshop on Video Processing and Quality Metrics for Consumer Electronics, 2009. p. 1–4.

Madhyastha TM, Hamaker EL, Gottman JM. Investigating spousal influence using moment-to-moment affect data from marital conflict. J Fam Psychol. 2011;25(2):292–300.

Malandrakis N, Potamianos A, Iosif E, Narayanan S. Distributional semantic models for affective text analysis. IEEE Trans Audio Speech Lang Process. 2013;21(11):2379–92.

Malandrakis N, Potamianos A, Hsu KJ, Babeva KN, Feng MC, Davison GC, Narayanan S. Affective language model adaptation via corpus selection. In: Proceedings of IEEE International Conference on Acoustics, Speech, and Signal Processing, 2014.

Martin P, Bateson P. Measuring behavior: an introductory guide. 3rd ed. Cambridge: Cambridge University Press; 2007.

Matsumoto D. Cultural influences on the perception of emotion. J Cross-Cultural Psychol. 1989;20(1):92–105.

Matsumoto D. Cultural similarities and differences in display rules. Motiv Emot. 1990;14(3):195–214.

McCowan I, Carletta J, Kraaij W, Ashby S, Bourban S, Flynn M, Guillemot M, Hain T, Kadlec J, Karaiskos V. The AMI meeting corpus. In: Proceedings of the International Conference on Methods and Techniques in Behavioral Research, 2005. p. 88.