Abstract

Land cover (LC) changes play a major role in global as well as regional patterns of the climate and biogeochemistry of the Earth system. LC information provides critical insights into Earth surface phenomena and is particularly useful when obtained synoptically from remote sensing data. However, for developing countries and those with large geographical extent, regular LC mapping is prohibitive with data from commercial sensors, which are costly and have limited spatial coverage (narrow swath and low temporal resolution). In this context, freely available MODIS data with good spectro-temporal resolution serve the purpose. LC mapping from these data has continuously evolved with advances in classification algorithms. This paper presents a comparative study of two robust data mining techniques, the multilayer perceptron (MLP) and the decision tree (DT), on different products of MODIS data for Kolar district, Karnataka, India. Comparison of the MODIS classified images at three spatial scales (district, taluk and pixel level) shows that MLP based classification of minimum noise fraction components of the MODIS 36 bands provides the most accurate LC mapping, with 86% accuracy, while DT on principal components of the MODIS 36 bands leads to a less accurate classification (69%).

Introduction

Land cover (LC) changes induced by human and natural processes are linked to climate and weather in many complex ways. These linkages between LC dynamics and climate include the exchange of greenhouse gases (water vapor, carbon dioxide, methane, etc.) between the land surface and the atmosphere, the radiation balance of the land surface, the exchange of sensible heat in the atmosphere, and the roughness of the land surface. Because of these linkages, changes in LC are important for studies of climate and its variability. This has fuelled research in LC mapping, with more recent technical developments in object-oriented analysis and ontology (Camara et al. 2000; Benz et al. 2004; Sun et al. 2005). Recently, LC mapping has been attempted in many parts of the world, including China, the European Union and India (EEA & ETC/LC, Corine LC Technical Guide 1999; Torma and Harma 2004; Natural Resources Census 2005), based on monotemporal remote sensing (RS) data, with the analysis done on an annual basis. However, monitoring LC dynamics with time series of commercial data would not be economical for regional or national level mapping. RS data such as ASTER are inexpensive and have a better spatial resolution, but are not regularly available for all geographical regions. In comparison, temporal MODIS data with more spectral bands (the 7-band composite product available every 8 days with Level 3 processing, and the 36-band product available every 1–2 days with Level 1B processing) and spatial resolutions ranging from 250 m to 1 km can be downloaded freely and are suitable for many applications, especially for countries covering a large area. Their frequent availability helps to account for seasonal variations and changes in LC pattern.

To obtain LC types, remotely sensed data are classified into user-specified categories by allocating each pixel to the spectrally most similar class, which is expected to be the class of maximum occupancy within the pixel. LC mapping can be performed with various algorithms that process the RS data into different themes or classes. The principle and purpose behind each technique may differ, and each algorithm may produce a different output map.

Many methods have been proposed to obtain the classified image. This study comparatively analyses the performance of the neural network (NN) based multilayer perceptron (MLP) classifier and the decision tree (DT), both of which have been proposed for classification of superspectral MODIS data. The motivation for using MLP and DT came from the poor results obtained with conventional classification techniques such as the Maximum Likelihood Classifier (MLC) and Spectral Angle Mapper (SAM), which gave lower overall accuracies (76%, 30%, 42% with MLC and 69%, 35%, 49% with SAM) for three different types of MODIS based inputs (see “Classification of MODIS” in the Results section). In contrast, the neural based classifier and DT (both non-parametric classifiers) gave encouraging results for regional LC mapping using coarse spatial resolution data.

Data and Study Area

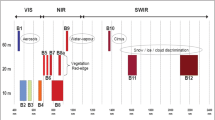

The MOD 09 Surface Reflectance 8-day (19–26 December 2002) L3G product, with bands 1 and 2 at 250 m and bands 3 to 7 at 500 m spatial resolution (http://edcimswww.cr.usgs.gov/pub/imswelcome/), and MOD 02 Level-1B Calibrated Geolocation (georeferenced) data at 1 km (bands 1 to 36, acquired on 21 December 2002, described at http://modis.gsfc.nasa.gov/data/dataprod/) were used. They are atmospherically and radiometrically corrected, fully calibrated and geolocated at-aperture radiances for all spectral bands, processed to Level 3G (http://modis.gsfc.nasa.gov/data/dataprod/dataproducts.php?MOD_NUMBER=09) and Level 1B (http://daac.gsfc.nasa.gov/MODIS/Aqua/product_descriptions_modis.shtml#rad_geo) respectively. IRS (Indian Remote Sensing Satellite) LISS (Linear Imaging Self Scanner)-III MSS data in the Green, Red and NIR bands with a spatial resolution of 23.5 m (acquired on 22 and 25 December 2002) were purchased from the National Remote Sensing Agency, Hyderabad, India.

The Kolar district in Karnataka State, India, extending over an area of 8,238 km² between 77°21′ to 78°21′ E and 12°46′ to 13°58′ N, was chosen for this study. Kolar (Fig. 1) is divided into 11 sub-regions for administrative purposes (Ramachandra and Rao 2005). The study area is dominated by six broad LC classes: agricultural land, built up, forest, plantations, waste land and water bodies. A few other LC classes (barren/rock/stone/others) that cover very small proportions of the ground area and are unevenly scattered among the six major classes were grouped under the waste land category.

Methodology

LISS-III data were geo-corrected, mosaicked, cropped to the study area boundary and resampled to 250 m (for pixel level comparison with MODIS classified data). Supervised classification was performed using a Maximum Likelihood classifier, followed by accuracy assessment. The same technique did not perform well on coarse resolution data (MODIS), and therefore NN and DT were evaluated for MODIS data classification. The MODIS data were geo-corrected with an error of 7 m with respect to the LISS-III images. The 500 m resolution bands 3 to 7 and the 1 km MODIS 36 bands were resampled to 250 m using nearest neighbour resampling (with Polyconic projection and Everest 1956 as the datum). Principal components (PC) and minimum noise fraction (MNF) components were derived from the 36 bands to reduce noise and computational requirements for subsequent processing. The methodology is depicted in Fig. 2. The spectral characteristics of the training data were analysed using spectral plots and a Transformed Divergence matrix. MODIS data were classified using MLP and DT. The MLP based NN classifier and DT are briefly discussed below.
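As an illustration of the nearest neighbour resampling step, the sketch below replicates each coarse cell into a block of finer cells (factor 2 for 500 m to 250 m, factor 4 for 1 km to 250 m). It assumes the coarse and fine grids are already aligned and share a projection; the function name `upsample_nearest` and the sample values are hypothetical, and the reprojection performed in the study is not reproduced here.

```python
def upsample_nearest(grid, factor):
    """Nearest neighbour upsampling of a 2-D grid by an integer factor.

    Each input cell is copied into a factor x factor block, so no new
    reflectance values are created (unlike bilinear or cubic resampling).
    """
    return [
        [value for value in row for _ in range(factor)]
        for row in grid
        for _ in range(factor)
    ]


# Hypothetical 2 x 2 tile of 500 m scaled reflectance values.
band_500m = [[0.12, 0.30],
             [0.08, 0.25]]
band_250m = upsample_nearest(band_500m, 2)   # 4 x 4 grid at 250 m
```

Because nearest neighbour resampling only copies values, the resampled bands keep the original radiometry, which matters when the values are later used as classifier inputs.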

MLP Based NN Classifier

NN classification overcomes the difficulties in conventional digital classification algorithms that use the spectral characteristics of the pixel in deciding to which class a pixel belongs. The bulk of MLP based classification in RS has used multiple layer feed-forward networks that are trained using the back-propagation algorithm, based on a recursive learning procedure with a gradient descent search. A detailed introduction can be found in the literature (Atkinson and Tatnall 1997; Haykin 1999; Kavzoglu and Mather 1999; Duda et al. 2000; Kavzoglu and Mather 2003; Mas 2003) and in case studies (Bischof et al. 1992; Heermann and Khazenie 1992; Chang and Islam 2000; Venkatesh and KumarRaja 2003).

The MLP in this work is trained using the error back-propagation algorithm (Rumelhart et al. 1986). The main aspects here are: (1) the order of presentation of training samples should be randomised from epoch to epoch; and (2) the momentum and learning rate parameters are typically adjusted (and usually decreased) as the number of training iterations increases. The back-propagation algorithm for training the MLP is briefly stated below:

1.) Initialize network parameters: Set all the weights and biases of the network to small random values.

2.) Present input and desired outputs: Present a continuous-valued input vector \( x_0, x_1, \ldots, x_{n-1} \) and specify the desired outputs \( d_0, d_1, \ldots, d_{n-1} \). If the network is used as a classifier, all the desired outputs are typically set to zero except the one corresponding to the class of the input, which is set to 1.

3.) Forward computation: Let a training example in the epoch be denoted by [x(n), d(n)], with the input vector x(n) applied to the input layer of sensory nodes and the desired response vector d(n) presented to the output layer of computation nodes. The net internal activity \( v_j^{(l)}(n) \) for neuron j in layer l is given by Eq. 1:

\[ v_j^{(l)}(n) = \sum_i w_{ji}^{(l)}(n)\, y_i^{(l-1)}(n) \qquad (1) \]

where \( y_i^{(l-1)}(n) \) is the function signal of neuron i in the previous layer (l-1) at iteration n, and \( w_{ji}^{(l)}(n) \) is the synaptic weight of neuron j in layer l that is fed from neuron i in layer (l-1). Assuming the use of a sigmoid function as the nonlinearity, the function (output) signal of neuron j in layer l is given by Eq. 2:

\[ y_j^{(l)}(n) = \frac{1}{1 + \exp\left(-v_j^{(l)}(n)\right)} \qquad (2) \]

If neuron j is in the first hidden layer (i.e., l = 1), set \( y_j^{(0)}(n) = x_j(n) \), where \( x_j(n) \) is the j-th element of the input vector x(n). If neuron j is in the output layer (i.e., l = L), set \( y_j^{(L)}(n) = o_j(n) \). Hence, compute the error signal \( e_j(n) = d_j(n) - o_j(n) \), where \( d_j(n) \) is the j-th element of the desired response vector d(n).

4.) Backward computation: Compute the δ’s (i.e., the local gradients) of the network by proceeding backward, layer by layer:

\( \delta_j^{(L)}(n) = e_j^{(L)}(n)\, o_j(n)\,[1 - o_j(n)] \), for neuron j in output layer L,

\( \delta_j^{(l)}(n) = y_j^{(l)}(n)\,[1 - y_j^{(l)}(n)] \sum\limits_k \delta_k^{(l+1)}(n)\, w_{kj}^{(l+1)}(n) \), for neuron j in hidden layer l.

Hence adjust the synaptic weights of the network in layer l according to the generalised delta rule (Eq. 3):

\[ w_{ji}^{(l)}(n+1) = w_{ji}^{(l)}(n) + \alpha\,[w_{ji}^{(l)}(n) - w_{ji}^{(l)}(n-1)] + \eta\, \delta_j^{(l)}(n)\, y_i^{(l-1)}(n) \qquad (3) \]

where η is the learning-rate parameter and α is the momentum constant.

5.) Iteration: Iterate the forward and backward computations under steps 3 and 4 by presenting new epochs of training examples to the network until the stopping criterion is met.
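The five steps can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the software used in the study: the function names, the layout of the weights (one row per neuron, with the bias stored in the last column), the default values of η and α, and the toy two-band samples are all hypothetical. Samples are reshuffled every epoch and the weight update combines the learning-rate term with a momentum term, as described above.

```python
import math
import random


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def init_network(sizes, rng, scale=0.1):
    """Step 1: small random weights; each neuron row ends with its bias."""
    return [[[rng.uniform(-scale, scale) for _ in range(n_in + 1)]
             for _ in range(n_out)]
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]


def forward(weights, x):
    """Step 3: return the function signals of every layer (input first)."""
    ys = [list(x)]
    for layer in weights:
        prev = ys[-1] + [1.0]                      # constant input for the bias
        ys.append([sigmoid(sum(w * y for w, y in zip(row, prev)))
                   for row in layer])
    return ys


def backward(weights, ys, d):
    """Step 4: local gradients, output layer first, then hidden layers."""
    out = ys[-1]
    deltas = [[(dj - oj) * oj * (1.0 - oj) for dj, oj in zip(d, out)]]
    for l in range(len(weights) - 1, 0, -1):
        nxt, nxt_w, y = deltas[0], weights[l], ys[l]
        deltas.insert(0, [
            y[j] * (1.0 - y[j]) * sum(nxt[k] * nxt_w[k][j] for k in range(len(nxt)))
            for j in range(len(y))])
    return deltas


def update(weights, prev_dw, ys, deltas, eta, alpha):
    """Weight change = momentum * previous change + eta * delta * input."""
    for l, layer in enumerate(weights):
        inputs = ys[l] + [1.0]
        for j, row in enumerate(layer):
            for i in range(len(row)):
                dw = alpha * prev_dw[l][j][i] + eta * deltas[l][j] * inputs[i]
                row[i] += dw
                prev_dw[l][j][i] = dw


def train(weights, samples, epochs, eta=0.2, alpha=0.5, seed=0):
    """Step 5: iterate over epochs, reshuffling the samples each time."""
    rng = random.Random(seed)
    prev_dw = [[[0.0] * len(row) for row in layer] for layer in weights]
    samples = list(samples)
    for _ in range(epochs):
        rng.shuffle(samples)
        for x, d in samples:                       # step 2: present x and d
            ys = forward(weights, x)
            update(weights, prev_dw, ys, backward(weights, ys, d), eta, alpha)
    return weights


# Hypothetical two-band samples with one-hot targets for two classes.
toy = [([0.05, 0.40], [1.0, 0.0]), ([0.20, 0.25], [0.0, 1.0])]
net = train(init_network([2, 3, 2], random.Random(1)), toy, epochs=200)
```

A fixed epoch count is used here as the stopping criterion for brevity; stopping on a training RMSE threshold would be an equally valid choice.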

Decision Tree

Decision tree (DT) is a machine learning algorithm and a non-parametric classifier involving a recursive partitioning of the feature space, based on a set of rules learned from an analysis of the training set. A tree structure is developed, and a specific decision rule is implemented at each branch, which may involve one or more combinations of the attribute inputs. A new input vector then travels from the root node down through successive branches until it is placed in a specific class (Piramuthu 2006), as shown in Fig. 3. The thresholds used for each class decision are chosen using minimum entropy or minimum error measures. The entropy measure is based on the minimum number of bits needed to describe each decision at a node in the tree, given the frequency of each class at the node. With minimum entropy, the stopping criterion is based on the amount of information gained by a rule (the gain ratio). The DT algorithm is stated briefly:

1.) Let there be k classes denoted \( \{C_1, C_2, \ldots, C_k\} \) and a training set T. One of the following three cases then applies.

2.) If T contains one or more objects which all belong to a single class \( C_j \), then the decision tree is a leaf identifying class \( C_j \).

3.) If T contains no objects, the decision tree is a leaf determined from information other than T.

4.) If T contains objects that belong to a mixture of classes, then a test is chosen, based on a single attribute, that has one or more mutually exclusive outcomes \( \{O_1, O_2, \ldots, O_n\} \). T is partitioned into subsets \( T_1, T_2, \ldots, T_n \), where \( T_i \) contains all the objects in T that have outcome \( O_i \) of the chosen test.

The same method is applied recursively to each subset of training objects to build the DT. Successful applications of DT using MODIS data have been reported in Chang et al. (2007) and Wardlow and Egbert (2008). Accuracy assessment of the classified maps was done with ground truth data. LC percentages were compared at sub-regional level (taluk level) and at pixel level with a LISS-III classified map.
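The recursive partitioning described above can be sketched as follows. This is a simplified illustration, not See5: it assumes numeric attributes, uses binary threshold tests, and selects each test by plain information gain rather than the gain ratio. The function names and the two-band reflectance samples are hypothetical.

```python
import math
from collections import Counter


def entropy(labels):
    """Bits needed to describe the class of an object drawn from labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def best_split(rows, labels):
    """Return (gain, attribute index, threshold) of the best test, or None."""
    base, best = entropy(labels), None
    for f in range(len(rows[0])):
        values = sorted(set(r[f] for r in rows))
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2.0
            left = [y for r, y in zip(rows, labels) if r[f] <= t]
            right = [y for r, y in zip(rows, labels) if r[f] > t]
            split = (len(left) * entropy(left) + len(right) * entropy(right)) / len(labels)
            if best is None or base - split > best[0]:
                best = (base - split, f, t)
    return best


def build_tree(rows, labels, default=None):
    if not labels:                       # T is empty: leaf from outside information
        return default
    if len(set(labels)) == 1:            # T is pure: leaf identifying that class
        return labels[0]
    majority = Counter(labels).most_common(1)[0][0]
    split = best_split(rows, labels)
    if split is None or split[0] <= 0:   # no useful test: fall back to majority
        return majority
    _, f, t = split                      # mixed T: partition and recurse
    left = [(r, y) for r, y in zip(rows, labels) if r[f] <= t]
    right = [(r, y) for r, y in zip(rows, labels) if r[f] > t]
    return (f, t,
            build_tree([r for r, _ in left], [y for _, y in left], majority),
            build_tree([r for r, _ in right], [y for _, y in right], majority))


def classify(tree, row):
    """Send a new input vector from the root down to a leaf."""
    while isinstance(tree, tuple):
        f, t, left, right = tree
        tree = left if row[f] <= t else right
    return tree


# Hypothetical (red, NIR) scaled reflectance samples.
X = [(0.05, 0.40), (0.06, 0.45), (0.20, 0.25), (0.22, 0.24), (0.02, 0.01), (0.03, 0.02)]
y = ["forest", "forest", "built up", "built up", "water bodies", "water bodies"]
tree = build_tree(X, y)
```

Leaves are stored as class labels and internal nodes as `(attribute, threshold, left, right)` tuples, which keeps the traversal in `classify` a simple loop.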

Results

Classification of LISS-III

The class spectral characteristics for the six LC categories in LISS-III MSS bands 2, 3 and 4 were obtained from the spectra of the training pixels to assess their inter-class separability, and the images were classified as shown in Fig. 4a using training data collected with a pre-calibrated GPS and uniformly distributed over the study area. The result was validated with representative field data; the LC statistics are given in Table 1, and the Producer’s, User’s and Overall accuracies are listed in Table 2. A kappa (k) value of 0.95 was obtained, indicating that the classified outputs are in good agreement with the ground conditions to the extent of 95%. The main likely source of classification error was the temporal difference between training data collection (December 2005) and image acquisition (December 2002).

The same data were also classified in 1,000 iterations using NN, with the training threshold contribution set to 0.1, a training rate of 0.2, a training RMSE of 0.09, one hidden layer and an output activation threshold of 0.001. The overall accuracy was 95.1%, with producer’s and user’s accuracies in the range of 85–96% and 85–95% respectively, and a kappa (k) value of 0.94.

Although the outputs obtained from MLC and NN were very close in terms of overall accuracy (95.63% for MLC and 95.1% for NN), the MLC output was marginally superior and was used as the reference to validate the outputs of the MODIS products. The choice of MLC was further justified because the time needed to train the neurons in NN was very high compared to MLC, and NN classification became more complex with the large image size of LISS-III MSS (5,997 rows × 6,142 columns). This conventional per-pixel spectral-based classifier (MLC) is a historically dominant approach to RS-based automated land-use/land-cover (LULC) derivation (Gao et al. 2004; Hester et al. 2008) and in fact serves as a “benchmark” for evaluating the performance of novel classification algorithms (Song et al. 2005). The MODIS data were much smaller (532 rows × 546 columns), so it was feasible to apply several classification algorithms rigorously and choose the most appropriate one. In this context, we applied MLC, NN and DT to different products of MODIS, but used the MLC based LISS-III MSS classified map for evaluating the MODIS outputs.
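The overall, producer’s and user’s accuracies and the kappa statistic quoted above are all derived from a confusion matrix. The sketch below shows one common way to compute them; it assumes rows hold the classified (map) classes and columns the reference classes, and the function name and the 2 × 2 matrix are hypothetical rather than taken from Table 2.

```python
def accuracy_report(matrix):
    """Accuracy measures from a square confusion matrix.

    matrix[i][j] = number of samples classified as class i whose
    reference (ground truth) class is j.
    """
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[i][j] for i in range(n)) for j in range(n)]
    diagonal = [matrix[i][i] for i in range(n)]

    overall = sum(diagonal) / total
    # Agreement expected by chance from the row and column marginals.
    chance = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    return {
        "overall": overall,
        "users": [d / r if r else 0.0 for d, r in zip(diagonal, row_totals)],
        "producers": [d / c if c else 0.0 for d, c in zip(diagonal, col_totals)],
        "kappa": (overall - chance) / (1.0 - chance),
    }


# Hypothetical two-class example (not values from this study).
report = accuracy_report([[45, 5],
                          [10, 40]])
```

User’s accuracy is read along the rows (how reliable a mapped class is) and producer’s accuracy along the columns (how well a reference class was captured); kappa discounts the agreement that the marginals alone would produce.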

Classification of MODIS

The MODIS data (bands 1 to 7), the first five PCs and the first five MNF components of the MODIS 36 bands (selected for their higher eigenvalues) were classified using MLP (Fig. 4b, c, d). The order of presentation of training samples was randomized from epoch to epoch, and the momentum and learning rate parameters were adjusted as the number of training iterations increased. The training process converged at 1,000 iterations. The number of hidden layers was kept at 1; the output activation function and momentum were initially kept low and increased in steps to observe the variations in the classification result. The RMS error at the completion of the process was 0.09, 0.39 and 0.29 for the three different inputs, respectively. Figure 5 shows the training iterations on the three different data sets. DT-See5 was used to classify the same three datasets. For each training site, a level of confidence in characterization of the site was recorded as described below:

(1) The scaled reflectance values for the LC classes were extracted for all the ground points.

(2) All the data were converted into See5 compatible format and submitted for extraction of rules.

(3) Since the boosting option changes the exact confidence of the rule, the rules were extracted without the boosting option. A 10% cut-off was allowed for pruning the wrong observations.

(4) Once the rules were framed by See5, they were ported into Knowledge Engineer to build a knowledge based classification schema. It was ensured that a 90% confidence level was maintained for all the classes defined.

(5) Subsequently, the decision tree schema was applied to the data set to produce the colour coded land cover output (Fig. 4e, f, g).

Table 3 shows the total number of rules generated, the maximum and minimum confidence level for each class, and the number of rules used for classification at a confidence level of 0.600 for MODIS 7 band data. For the MODIS derived PCs, the rules were generated at a confidence level of 0.920 (Table 4). Here the image was first classified into a larger number of classes (16), which were ultimately merged into six categories. For the MNF bands, the threshold factor was maintained at 0.7 at a confidence level of 0.700 (Table 5). Table 1 shows the LC statistics for the classification results.
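Applying extracted rules at a chosen confidence level can be illustrated with the sketch below. The rule representation (a list of band, comparison and threshold conditions with a class label and a confidence) is hypothetical and is neither the See5 output format nor the Knowledge Engineer schema; the band names, thresholds and confidences are invented for illustration.

```python
import operator


def classify_with_rules(pixel, rules, min_confidence=0.9, default="unclassified"):
    """Return the class of the most confident applicable rule.

    A rule applies when its confidence is at least min_confidence and all
    of its (band, comparison, threshold) conditions hold for the pixel.
    """
    best = None
    for rule in rules:
        if rule["confidence"] < min_confidence:
            continue
        if all(test(pixel[band], threshold)
               for band, test, threshold in rule["conditions"]):
            if best is None or rule["confidence"] > best["confidence"]:
                best = rule
    return best["label"] if best else default


# Hypothetical rules on scaled reflectance in two bands.
RULES = [
    {"conditions": [("b2", operator.le, 0.05)],
     "label": "water bodies", "confidence": 0.95},
    {"conditions": [("b2", operator.gt, 0.30), ("b1", operator.le, 0.08)],
     "label": "forest", "confidence": 0.92},
    {"conditions": [("b1", operator.gt, 0.15)],
     "label": "built up", "confidence": 0.60},
]

label = classify_with_rules({"b1": 0.05, "b2": 0.40}, RULES)
```

Lowering `min_confidence` admits more rules and leaves fewer pixels unclassified, at the cost of using less reliable rules, which is the trade-off behind the different confidence levels reported for the three inputs.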

Accuracy Assessment

Ground Truth/Field Data

User’s, Producer’s and Overall accuracy assessment of the MODIS classified maps was performed with ground truth data representing the entire study area, collected using a handheld GPS in the same calendar month (December 2005) as the RS data acquisition (December 2002). Thirty test samples each were used for validating the agriculture, built up, forest, plantation and waste land classes, and 10 test samples were used for validating water bodies. Survey of India topographical sheets (1:50,000) were also used to validate the results (Table 2). The table shows that some LC classes were classified with higher accuracy using NN, while DT performed better for the remaining classes. This is explained in detail in the Discussion section.

Sub-regional Level

At the sub-regional (taluk) level, MODIS based LC class percentages were compared with LISS-III MSS based LC class percentages. The assessment showed that NN analysis of MODIS bands 1 to 7 is best for mapping agriculture (−5% difference from LISS-III mapped agriculture), while it fails for plantation and water bodies. DT on MODIS 7 bands is superior for mapping built up (−2.4%) and waste land (+1%), and DT on MNF components of the 36 bands is also good for mapping plantation (+2%). NN on MNF mapped forest with a −3.5% difference and water bodies with a +1% difference in the sub-region wise distribution. Further analysis was therefore carried out at pixel level to understand the sources of these differences.
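The sub-regional comparison amounts to differencing class percentages between the two maps within each taluk. A minimal sketch is given below; it assumes the difference is taken as MODIS minus LISS-III (the sign convention is an assumption), and the function names and label lists are hypothetical.

```python
from collections import Counter


def class_percentages(labels):
    """Percentage of pixels in each LC class within one sub-region."""
    counts = Counter(labels)
    return {c: 100.0 * k / len(labels) for c, k in counts.items()}


def percentage_differences(modis_labels, liss_labels):
    """MODIS minus LISS-III class percentage, per class."""
    modis = class_percentages(modis_labels)
    liss = class_percentages(liss_labels)
    return {c: modis.get(c, 0.0) - liss.get(c, 0.0)
            for c in sorted(set(modis) | set(liss))}


# Hypothetical pixel labels for one taluk at a common 250 m grid.
modis_map = ["agriculture"] * 45 + ["forest"] * 55
liss_map = ["agriculture"] * 50 + ["forest"] * 50
diff = percentage_differences(modis_map, liss_map)
```

A negative value means the MODIS map under-represents the class relative to the LISS-III reference for that sub-region.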

Pixel Level

A MODIS pixel (250 m) corresponds spatially to a kernel of 10 × 10 LISS-III pixels (Fig. 6). Classified maps from MODIS data were validated pixel by pixel against the classified LISS-III image. A 10 × 10 kernel in LISS-III was considered homogeneous for a particular category when the presence of that class was ≥90%. User’s, Producer’s and Overall accuracies are listed in Table 6.
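The homogeneity test on 10 × 10 kernels can be sketched as follows. The code assumes the fine and coarse grids are perfectly aligned and that the fine grid dimensions are multiples of the kernel size; the function names are hypothetical, and the small 4 × 4 example uses a 2 × 2 kernel and a 0.75 threshold only to keep it readable.

```python
from collections import Counter


def homogeneous_blocks(fine, block=10, threshold=0.9):
    """Reference label per coarse pixel, or None if the kernel is mixed.

    A kernel is homogeneous when its dominant class covers at least
    `threshold` of the block x block fine-resolution pixels.
    """
    rows, cols = len(fine) // block, len(fine[0]) // block
    reference = []
    for r in range(rows):
        ref_row = []
        for c in range(cols):
            cells = [fine[r * block + i][c * block + j]
                     for i in range(block) for j in range(block)]
            label, count = Counter(cells).most_common(1)[0]
            ref_row.append(label if count / len(cells) >= threshold else None)
        reference.append(ref_row)
    return reference


def pixel_agreement(coarse, reference):
    """Fraction of homogeneous kernels whose label matches the coarse map."""
    pairs = [(m, ref) for m_row, ref_row in zip(coarse, reference)
             for m, ref in zip(m_row, ref_row) if ref is not None]
    matches = sum(m == ref for m, ref in pairs)
    return (matches / len(pairs) if pairs else 0.0), len(pairs)


# Hypothetical fine-resolution map (A = agriculture, B = built up, F = forest, W = water).
fine_map = [["A", "A", "B", "B"],
            ["A", "A", "B", "A"],
            ["F", "W", "W", "W"],
            ["W", "F", "W", "W"]]
reference = homogeneous_blocks(fine_map, block=2, threshold=0.75)
agreement, n_compared = pixel_agreement([["A", "A"], ["F", "W"]], reference)
```

Mixed kernels are excluded from the comparison rather than counted as errors, which is why only the homogeneous share of the study area contributes to the pixel level accuracies.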

Discussion

Two data mining techniques, MLP and DT, were compared on three different input data sets obtained from MODIS. The first input was the MODIS 7 bands product, the second was the PCs derived from the MODIS 36 bands and the third was the MNF components derived from the same MODIS 36 bands. The six outputs at 250 m spatial resolution (three from the MLP implementation and three from the DT algorithm) were compared with the high spatial resolution LISS-III classified image.

In general, hard classification techniques such as MLP, DT and MLC perform better with high spatial resolution data (such as IRS LISS-III or Landsat ETM+) than with moderate or low spatial resolution data (such as MODIS). Many pixels in the MODIS classified images were misclassified, as is clear from the accuracy assessment (Tables 2 and 6). Some errors may have occurred because the signal of a pixel is ambiguous, perhaps as a result of spectral mixing. As an additional argument, as the pixel size increases (in this case to 250 m), the chance that high accuracies are the product of random assignment of values also declines.

Classification accuracy assessed with ground truth and pixel-to-pixel mapping revealed that MLP on MODIS MNF components is overall superior to all other techniques, followed by DT on MNF, and the same technique performed worst on PCs. Lower accuracies were reported at the pixel level because the LC is very fragmented: only 10 × 10 kernels with ≥90% homogeneity in LISS-III were considered for comparison (65% of the pixels in the study area were homogeneous). However, at the sub-regional level the algorithms performed differently for various classes, revealing that an algorithm may be good for mapping a particular class without being equally good for mapping all other classes.

Pre-processing techniques such as PCA and MNF had varied effects on the accuracy. Both techniques performed better on MNF components and worst on PCs compared to MODIS 7 bands data. Figure 5 illustrates that the training of the neurons was smooth in the case of MNF components; a likely reason is that both noise and redundancy are removed from the data, whereas in PCs only redundancy is removed. The result also gives an insight as to which technique is better for mapping heterogeneous LC classes. The pixels in the PCs were not very distinct and were clustered into subgroups of two or three pixels, leading to inaccurate results. However, classifying each pixel based on its signature was difficult for both PCs and MNF components, since the image was slightly pixelated, although the class separability was very good. The same trend was observed at the pixel level; the two techniques performed moderately better on MNF but relatively poorly on PCs, and maintained the same position in the rankings for MODIS 7 bands data, in the range of 62% (see Tables 2 and 3). This suggests that the highly preprocessed MOD 09 data (Level 3) account for atmospheric disturbances, whereas the 36 band MOD 02 data at Level 1B require further preprocessing to represent a good estimate of the surface spectral reflectance as it would have been measured at ground level without atmospheric scattering or absorption. In other words, MOD 09 is an 8-day composite product built from 8 consecutive days of acquisitions, while the MOD 02 product was derived from a single day's image and was therefore possibly still affected by atmospheric and angular effects. MLP classifiers have proven superior to conventional classifiers, often recording Overall accuracy improvements in the range of 10%. Similar results have also been reported by Kim (2006) in an empirical comparison of the performance of NN and DT. It was observed that the performance of NN improved faster than that of DT as the number of classes of the categorical variable increased, while varying the number of independent variables, the types of independent variables, the number of classes of the independent variables, and the sample size. As the number of successful applications of MLP increases, it is increasingly clear that the technique can produce more accurate results for RS applications.

However, the back-propagation NN is not guaranteed to find the ideal solution to a particular problem, since the network may get caught in a local minimum of the output error surface rather than reaching the absolute minimum error. Alternatively, the network may begin to oscillate between two slightly different states, each of which results in approximately equal error. DTs are less appropriate for estimation tasks, where the goal is to predict the value of a continuous variable, unless considerable effort is put into presenting the data in such a way that trends and sequential patterns are made visible. Growing a DT is also computationally expensive, since at each node each candidate splitting field must be sorted before its best split can be found. Pruning algorithms can also be computationally expensive, since many candidate sub-trees must be formed and compared.

Conclusions

This communication compared the utility of MLP and DT in classifying MODIS data. The results showed that MLP on MNF components was best for LC mapping (86% accuracy) at regional scale. This technique was able to map agriculture, forest and water bodies properly, whereas DT was good at classifying built up areas, plantation and waste land. The results from this study can lead to better mapping of various LC features from MODIS data with the help of ancillary (secondary) data in the absence of high resolution imagery. One limitation of the study is that the MODIS pixels could instead have been softly classified using unmixing techniques (such as linear unmixing or NN based unmixing) rather than hard classification, which assigns a single class to each pixel. The utility of these unmixing techniques could then be studied on different MODIS products. Unmixing techniques give a better estimate of the percentage area of different LC classes, since most landscapes are heterogeneous in nature and are a mixture of various LC classes. This could form the future work of the current study.

References

Atkinson, P. M., & Tatnall, A. (1997). Introduction: neural networks in remote sensing. International Journal of Remote Sensing, 18(4), 699–709.

Benz, U. C., Hofmann, P., Willhauck, G., Lingenfelder, I., & Heynen, M. (2004). Multi-resolution, object-oriented fuzzy analysis of remote sensing data for GIS-ready information. Photogrammetry and Remote Sensing, 58, 239–258.

Bischof, H., Schneider, W., & Pinz, A. J. (1992). Multispectral classification of Landsat images using neural networks. IEEE Transactions on Geoscience and Remote Sensing, 30(3), 482–490.

Camara, G., Monteiro, A. M. V., Paiva, J., & Souza, R. C. M. (2000). Action-driven ontologies of the geographical space. In M. J. Egenhofer & D. M. Mark (Eds.), GIScience. Savannah: AAG.

Chang, D., & Islam, S. (2000). Estimation of soil physical properties using remote sensing and artificial neural network. Remote Sensing of Environment, 74(3), 534–544.

Chang, J., Hansen, M. C., Pittman, K., Carroll, M., & DiMiceli, C. (2007). Corn and soybean mapping in the United States using MODIS time-series data sets. Agronomy Journal, 99, 1654–1664.

Duda, R. O., Hart, P. E., & Stork, D. G. (2000). Pattern classification (pp. 517–598). Indianapolis: Wiley-Interscience.

EEA & ETC/LC, Corine LC Technical Guide (1999). http://etc.satellus.se/the_data/Technical_Guide/index.htm (Accessed July 20, 2006).

Gao, J., Chen, H. F., Zhang, Y., & Zha, Y. (2004). Knowledge-based approaches to accurate mapping of mangroves from satellite data. Photogrammetric Engineering & Remote Sensing, 70(12), 1241–1248.

Haykin, S. (1999). Neural networks: A comprehensive foundation. Englewood Cliffs: Prentice-Hall International.

Heermann, P. D., & Khazenie, N. (1992). Classification of multispectral remote sensing data using back-propagation neural network. IEEE Transactions on Geoscience and Remote Sensing, 30(1), 81–88.

Hester, D. B., Cakir, H. I., Nelson, S. A. C., & Khorram, S. (2008). Per-pixel classification of high spatial resolution satellite imagery for urban land-cover mapping. Photogrammetric Engineering & Remote Sensing, 74(4), 463–471.

Kavzoglu, T., & Mather, P. M. (1999). Pruning artificial neural networks: an example using land cover classification of multi-sensor images. International Journal of Remote Sensing, 20(14), 2787–2803.

Kavzoglu, T., & Mather, P. M. (2003). The use of backpropagating artificial neural networks in land cover classification. International Journal of Remote Sensing, 24(23), 4907–4938.

Kim, Y. S. (2006). Comparison of the decision tree, artificial neural network, and linear regression methods based on the number and types of independent variables and sample size. Expert Systems with Applications, 24(2008), 1227–1234.

Lee, C., & Bethel, J. S. (2004). Extraction, modelling, and use of linear features for restitution of airborne hyperspectral imagery. ISPRS Journal of Photogrammetry and Remote Sensing, 58(5–6), 289–300.

Mas, J. F. (2003). Mapping land use/cover in a tropical coastal area using satellite sensor data, GIS and artificial neural networks. Estuarine, Coastal and Shelf Science, 59(2004), 219–230.

Natural Resources Census, National Landuse and LC Mapping Using Multitemporal AWiFS Data (LULC–AWiFS) (2005). Project Manual, Remote Sensing & GIS Applications Area, National Remote Sensing Agency, Department of Space, Government of India, Hyderabad, India

Piramuthu, S. (2006). Input data for decision trees. Expert Systems with Applications. doi:10.1016/j.eswa.2006.12.030.

Ramachandra, T. V., & Rao, G. R. (2005). Inventorying, mapping and monitoring of bioresources using GIS and remote sensing. Geospatial Technology for Developmental Planning (pp. 49–76). New Delhi: Allied Publishers Pvt. Ltd.

Rumelhart, D. E., Hinton, G. E., & Williams, R. J. (1986). Learning representations by back-propagating errors. Nature, 323, 533–535.

Song, M., Civco, D. L., & Hurd, J. D. (2005). A competitive pixel-object approach for land cover classification. International Journal of Remote Sensing, 26, 4981–4997.

Sun, H., Li, S., Li, W., Ming, Z., & Cai, S. (2005). Semantic-based retrieval of remote sensing images in a grid environment. IEEE Geoscience and Remote Sensing Letters, 2(4), 440–444.

Torma, M., & Harma, P. (2004). Accuracy of CORINE LC classification in Northern Finland. Geoscience and Remote Sensing Symposium, IGARSS ’04, Proceedings, IEEE International 1, pp. 227–230

Venkatesh, Y. V., & KumarRaja, S. (2003). On the classification of multispectral satellite images using the multilayer perceptron. Pattern Recognition, 36(2003), 2161–2175.

Wardlow, B. D., & Egbert, S. L. (2008). Large-area crop mapping using time-series MODIS 250 m NDVI data: an assessment for the U.S. Central Great Plains. Remote Sensing of Environment, 112, 1096–1116.

Cite this article

Kumar, U., Kerle, N., Punia, M. et al. Mining Land Cover Information Using Multilayer Perceptron and Decision Tree from MODIS Data. J Indian Soc Remote Sens 38, 592–603 (2010). https://doi.org/10.1007/s12524-011-0061-y
