Abstract

In this paper, we study limit cycle bifurcations for a differential system with two switching lines by using the Picard–Fuchs equation method. We obtain an explicit expression of the corresponding first order Melnikov function, which yields an upper bound for the number of limit cycles. Notably, this approach greatly simplifies the computation. Our results also show that the number of switching lines has an essential impact on the number of limit cycles bifurcating from a period annulus.



1 Introduction and Main Results

Piecewise smooth differential systems have been studied extensively because they frequently arise in modeling real phenomena, for instance in control engineering [5], nonlinear oscillations [23] and biology [14]. Moreover, these systems can exhibit complicated dynamical phenomena. Thus, in recent years, the mathematical community has shown great interest in understanding their dynamical richness, especially the number of limit cycles.

There are many excellent papers studying limit cycle bifurcations of piecewise smooth differential systems with one switching line; see, for example, [3, 7, 15,16,17,18,19, 27,28,29,30,31] and the references therein. There are also papers dedicated to studying limit cycle bifurcations of piecewise smooth differential systems with multiple switching lines; see [2, 4, 6, 11, 13, 20, 21, 25, 26]. The main tools used in these papers are the Melnikov function method established in [10, 17] and the averaging method developed in [1, 9, 19, 22]. A drawback of both methods is that the computations involved are complicated. Yang and Zhao [29] developed the Picard–Fuchs equation method to study the number of limit cycles of piecewise smooth differential systems with one switching line. Recently, the averaging method for upper bounds was extended to the multi-dimensional case in [12].

In this paper, we aim to study limit cycle bifurcations of differential systems with two switching lines by using the Picard–Fuchs equation method. More precisely, we study the following integrable differential system under perturbations by piecewise polynomials of degree n

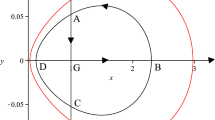

where \(\eta \) is a positive real constant. System (1.1) has a unique center \(G(0,\eta )\); see Fig. 1.

Fig. 1 The phase portrait of system (1.1)

The perturbed system of (1.1) with the two switching lines \(x=0\) and \(y=\eta \), which intersect at the point \((0,\eta )\), is

where \(0<|\varepsilon |\ll 1\),

When \(\varepsilon =0\), system (1.2) has first integrals

with integrating factors \(\mu ^k(x,y)=y^{-3}\), \(k=1,2,3,4\), and a family of periodic orbits given by

Clearly, \(L_h\) shrinks to the center \(G(0,\eta )\) as \(h\rightarrow -\frac{1}{2\eta }\) and approaches the invariant curve \(y=x^2+\frac{\eta }{2}\) as \(h\rightarrow 0\). The points A, B, C and D are the intersections of the periodic orbit \(L_h\) with the switching lines \(x=0\) and \(y=\eta \); see Fig. 1.
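The coordinates of A, B, C and D stated in Sect. 2 and in the proof of Lemma 2.4 are all consistent with the first integral \(H(x,y)=\frac{x^2+\eta /2}{y^2}-\frac{1}{y}\). This closed form is our inference from those coordinates, assumed here rather than quoted from the paper. The minimal sketch below only checks numerically that it reproduces the value \(-\frac{1}{2\eta }\) at the center, vanishes on the invariant curve \(y=x^2+\frac{\eta }{2}\), and passes through the four intersection points for \(h\in (-\frac{1}{2\eta },0)\); the helper names are hypothetical.

```python
import math

def H(x, y, eta):
    # Inferred first integral (an assumption, not quoted from the paper)
    return (x * x + eta / 2) / (y * y) - 1 / y

def intersection_points(h, eta):
    # A, C on x = 0 and B, D on y = eta, with the coordinates stated in the paper
    r = math.sqrt(2 * eta * h + 1)
    A = (0.0, (-r - 1) / (2 * h))
    C = (0.0, (r - 1) / (2 * h))
    xb = math.sqrt(eta ** 2 * h + eta / 2)
    return A, (xb, eta), C, (-xb, eta)

def check_first_integral(eta, h, tol=1e-12):
    assert eta > 0 and -1 / (2 * eta) < h < 0, "h must lie in Sigma"
    # Value at the center G(0, eta) is the left endpoint of Sigma
    assert abs(H(0.0, eta, eta) + 1 / (2 * eta)) < tol
    # H vanishes on the invariant curve y = x^2 + eta/2 (the limit h -> 0)
    for x in (0.0, 0.3, 1.7):
        assert abs(H(x, x * x + eta / 2, eta)) < tol
    # A, B, C, D all lie on the level curve H = h
    for x, y in intersection_points(h, eta):
        assert abs(H(x, y, eta) - h) < tol
    return True
```

For instance, `check_first_integral(1.0, -0.25)` returns `True`; in that case A and C lie at \(y=2\pm \sqrt{2}\) and B, D at \(x=\pm \frac{1}{2}\).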

Our main results are the following theorems.

Theorem 1.1

Let \(0<|\varepsilon |\ll 1\) and suppose that the first order Melnikov function M(h) of system (1.2) is not identically zero. Then the number of limit cycles bifurcating from the period annulus around the center \((0,\eta )\) is at most \(41n-23\) (counting multiplicity) for \(n=1,2,3,\ldots \).

Theorem 1.2

Let \(0<|\varepsilon |\ll 1\) and suppose that the first order Melnikov function M(h) of system (1.2) is not identically zero. If \(f^1(x,y)=f^2(x,y)\), \(g^1(x,y)=g^2(x,y)\), \(f^3(x,y)=f^4(x,y)\) and \(g^3(x,y)=g^4(x,y)\), then the number of limit cycles of system (1.2) bifurcating from the period annulus around the center \((0,\eta )\) is at most \(9n-4\) (counting multiplicity) for \(n=1,2,3,\ldots \).

Theorem 1.3

Let \(0<|\varepsilon |\ll 1\) and suppose that the first order Melnikov function M(h) of system (1.2) is not identically zero. If \(f^1(x,y)=f^4(x,y)\), \(g^1(x,y)=g^4(x,y)\), \(f^2(x,y)=f^3(x,y)\) and \(g^2(x,y)=g^3(x,y)\), then the number of limit cycles of system (1.2) bifurcating from the period annulus around the center \((0,\eta )\) is at most \(9n-6\) (counting multiplicity) for \(n=1,2,3,\ldots \).

Remark 1.1

When \(f^1(x,y)=f^2(x,y)=f^3(x,y)=f^4(x,y)\) and \(g^1(x,y)=g^2(x,y)=g^3(x,y)=g^4(x,y)\), Gentes [8] studied the case \(n=2\) and proved that M(h) has at most 2 zeros, and Xiong and Han [27] proved that M(h) has at most n zeros under perturbations by polynomials of degree n.

Remark 1.2

Comparing Lemmas 2.2, 4.1 and 5.1, we see that the first order Melnikov function of system (1.2) with two switching lines is more complicated than those of systems (4.1) and (5.1), each of which has one switching line; accordingly, the bound \(41n-23\) in Theorem 1.1 is much larger than the bounds \(9n-4\) and \(9n-6\) in Theorems 1.2 and 1.3. Thus, the number of switching lines has an essential impact on the number of limit cycles bifurcating from the quadratic center.

The rest of the paper is organized as follows. In Sect. 2, we give an explicit expression of the first order Melnikov function M(h) by using Picard–Fuchs equations. Theorems 1.1–1.3 are proved in Sects. 3–5, respectively.

2 The Algebraic Structure of M(h) and Picard–Fuchs Equation

By Theorem 2.2 in [11] and Lemma 2.1 in [24], we know that the first order Melnikov function M(h) of system (1.2) is

and, if \(M(h)\not \equiv 0\), the number of zeros of M(h) in the corresponding period annulus controls the number of limit cycles of system (1.2) [9, 10].

For \(h\in \Sigma \) and \(i=0,1,2,\ldots ,j=0,1,2,\ldots \), we denote

Let \(\Omega \) be the region bounded by the closed curve \(L^1_{h}\cup \overrightarrow{BG}\cup \overrightarrow{GA}\) (the black curve in Fig. 1). Using Green’s formula, we have for \(i\ge 0\) and \(j\ge -1\)

Hence,

In a similar way, we have for \(i\ge 0\) and \(j\ge -1\)

Therefore, we obtain from (2.1)–(2.3)

where \({\tilde{a}}_{i,j}\), \({\tilde{b}}_{i,j}\), \({\tilde{c}}_{i,j}\), \({\tilde{d}}_{i,j}\), \(\sigma _{i,j}\), \(\tau _{i,j}\), \({\tilde{a}}_{i}\), \({\tilde{b}}_{i}\), \({\tilde{c}}_{i}\) and \({\tilde{d}}_{i}\) are arbitrary real constants and in the last equality we have used

The coordinates of B and D are \((\sqrt{\eta ^2h+\frac{\eta }{2}},\eta )\) and \((-\sqrt{\eta ^2h+\frac{\eta }{2}},\eta )\) respectively. Thus,

where \(\nu _i\) is a real constant.

Lemma 2.1

If \(i+j=n\ge 3\) and i is an even number, then

If \(i+j=n\ge 3\) and i is an odd number, then

where \({\tilde{\varphi }}_{l}(h)\), \({\tilde{\psi }}_{l}(h)\), \({\bar{\varphi }}_{l}(h)\) and \({\bar{\psi }}_{l}(h)\) are polynomials in h of degrees at most l, and \({\tilde{\alpha }}_k(h)\), \({\tilde{\beta }}_k(h)\), \({\tilde{\gamma }}_k(h)\) and \({\tilde{\delta }}_k(h)\) are polynomials of h with

Proof

Without loss of generality, we only prove the first equality in (2.5). The others can be shown in a similar way. It follows from (1.3) that

Multiplying (2.6) by \(x^{i-2}y^{j}dy\), integrating over \(L^1_h\) and using (2.2), we have

Similarly, multiplying the first equality in (1.3) by \(x^{i}y^{j-3}dx\) and integrating over \(L^1_h\) yields

Taking \((i,j)=(2,0),(3,-1)\) in (2.7), we obtain

From (2.8) we obtain

Taking \((i,j)=(2,-1)\) in (2.7) and \((i,j)=(0,1)\) in (2.8), we have

Eliminating \(I_{0,-1}(h)\) in (2.11) and using (2.9), we get

In view of (2.7) and (2.8), we obtain

Now we prove the conclusion by induction on n. By (2.13), the conclusion holds for \(n=3\). Suppose that the first equality in (2.5) holds for \(i+j\le n-1\) \((n\ge 4)\). If n is an even number, then, by (2.7) and (2.8), we have

where

Hence, the first equality in (2.5) holds.

Next we will discuss the degrees of \({\tilde{\alpha }}_1(h)\), \({\tilde{\beta }}_1(h)\) and \({\tilde{\varphi }}_l(h)\) in (2.5). If \((i,j)=(2,n-2),(4,n-4),\ldots ,(n-2,2),(n,0)\), then, in view of (2.14) and noting that n is an even number, we obtain

where \(\alpha ^{(n-s)}(h)\) and \(\beta ^{(n-s)}(h)\ (s=1,2)\) are polynomials in h satisfying

\(\varphi ^{(n-1)}(h)\) is a polynomial of h satisfying \(\deg \varphi ^{(n-1)}(h)\le \frac{3}{2}n-3\), \(\varphi ^{(n-2)}(h)\) is a polynomial of h satisfying \(\deg \varphi ^{(n-2)}(h)\le \frac{3}{2}n-5\) and \(\xi _{\frac{n}{2}}(h)\) is a polynomial of h with degree at most \(\frac{n}{2}\). Therefore,

Similarly, we can prove that the conclusion holds for \((i,j)=(0,n)\).

If n is an odd number, we can prove the conclusion in a similar way. This ends the proof. \(\square \)

From (2.4) and Lemma 2.1, we immediately obtain the algebraic structure of the first order Melnikov function M(h).

Lemma 2.2

If \(i+j=n>3\), then

where \({\varphi }_{l}(h)\) and \({\psi }_{l}(h)\) are polynomials of h of degrees at most l, and \({\alpha }_k(h)\), \({\beta }_k(h)\), \({\gamma }_k(h)\) and \({\delta }_k(h)\) are polynomials of h with

If \(n=1,2,3\), then

where \({\varphi }_{l}(h)\) and \({\psi }_{l}(h)\) are polynomials of h of degrees at most l, and \({\alpha }_k(h)\), \({\beta }_k(h)\), \({\gamma }_k(h)\) and \({\delta }_k(h)\) are polynomials of h with

The following lemma gives the Picard–Fuchs equations satisfied by the generators of M(h).

Lemma 2.3

(i) The vector functions \(\big (I_{0,1}(h),I_{2,0}(h)\big )^T\) and \(\big (I_{1,0}(h),I_{1,1}(h)\big )^T\) respectively satisfy the Picard–Fuchs equations

and

(ii) The vector functions \(\big (J_{0,1}(h),J_{2,0}(h)\big )^T\) and \(\big (J_{1,0}(h),J_{1,1}(h)\big )^T\) respectively satisfy the Picard–Fuchs equations

and

Proof

We only prove the conclusion (i). Conclusion (ii) can be proved similarly. Since x can be regarded as a function of y and h, differentiating the first equation in (1.3) with respect to h, we get

which implies

Hence,

Multiplying both sides of (2.21) by h and integrating over \(L^1_h\), we have

On the other hand, by (2.2), we get for \(i\ge 1\) and \(j\ge -1\)

Taking \((i,j)=(0,1)\) in (2.22) and using (2.12), we obtain

From (2.24) we have

In view of (2.9) and (2.12), we obtain conclusion (i). This completes the proof. \(\square \)
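The proof of Lemma 2.3 starts by differentiating the first equation in (1.3) with respect to h. The sketch below is a numerical sanity check of the resulting derivative rule under two assumptions of ours (not quoted definitions): the level curve is \(x^2=hy^2+y-\frac{\eta }{2}\), which is consistent with the coordinates of A, B, C and D given in the paper, and \(I_{i,j}(h)=\int _{L^1_h}x^iy^j\,dy\) with \(L^1_h\) the arc from A to B. Then \(\partial x/\partial h=y^2/(2x)\), the endpoint term at A vanishes because \(x=0\) there, and \(I'_{i,j}(h)=\frac{i}{2}I_{i-2,j+2}(h)\) for \(i\ge 1\). The direction of integration does not affect this identity, since both sides change sign together. The functions `arc_integral` and `pf_residual` are hypothetical helpers.

```python
import math

def arc_integral(i, j, h, eta, n=4000):
    """Hypothetical I_{i,j}(h): integral of x^i y^j dy along x = sqrt(h*y^2 + y - eta/2) > 0,
    with y running from eta (point B) to yA (point A)."""
    r = math.sqrt(2 * eta * h + 1)
    yA = (-r - 1) / (2 * h)  # upper intersection of L_h with x = 0
    yC = (r - 1) / (2 * h)   # lower intersection of L_h with x = 0
    smax = math.sqrt(yA - eta)

    def f(s):
        # Substitute y = yA - s^2; then x^2 = h(y - yA)(y - yC) = s^2 * w with w > 0
        y = yA - s * s
        w = -h * (yA - yC - s * s)
        # x^i * y^j * |dy/ds| = (s*sqrt(w))^i * y^j * 2s, written to stay finite at s = 0
        return 2.0 * s ** (i + 1) * w ** (i / 2) * y ** j

    step = smax / n  # composite Simpson's rule (n even) on a smooth integrand
    total = f(0.0) + f(smax)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(k * step)
    return total * step / 3

def pf_residual(i, j, h, eta, delta=1e-5):
    """Relative gap between dI_{i,j}/dh (central difference) and (i/2) I_{i-2,j+2}; needs i >= 1."""
    lhs = (arc_integral(i, j, h + delta, eta) - arc_integral(i, j, h - delta, eta)) / (2 * delta)
    rhs = 0.5 * i * arc_integral(i - 2, j + 2, h, eta)
    return abs(lhs - rhs) / abs(rhs)
```

The substitution \(y=y_A-s^2\) is chosen because it turns the inverse square-root singularity of \(x^{-1}\) at A (which appears on the right-hand side when \(i=1\)) into a smooth integrand, so Simpson's rule converges quickly; for \(\eta =1\), \(h=-\frac{1}{4}\) the relative residual should be far below \(10^{-5}\).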

Lemma 2.4

For \(h\in \Sigma =(-\frac{1}{2\eta },0)\), we have

and

where \(c_i\) and \(d_i\) (\(i=1,2\)) are real constants.

Proof

We only prove (2.25); the proof of (2.26) is similar. Since the coordinates of A and C are \((0,\frac{-\sqrt{2\eta h+1}-1}{2h})\) and \((0,\frac{\sqrt{2\eta h+1}-1}{2h})\), we have

Inserting the above equality into the second equation in (2.17) gives the following first order linear differential equation

Then, solving (2.27) gives \(I_{2,0}(h)\) in (2.25).

It follows from the first equation in (2.18) that

where \(c_1\) is a real constant. Similar to solving \(I_{2,0}(h)\), we obtain

where c and \(c_2\) are real constants. Noting that \(\lim \nolimits _{h\rightarrow -\frac{1}{2\eta }}I_{1,1}(h)=0\), we have \(c=\frac{\pi }{4}-c_1\sqrt{2\eta }\). Then, substituting c into (2.28) yields \(I_{1,1}(h)\) in (2.25). This completes the proof. \(\square \)
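The coordinates of A and C above, and those of B and D in Sect. 2, involve the radicals \(\sqrt{2\eta h+1}\) and \(\sqrt{\eta ^2h+\frac{\eta }{2}}\). The elementary identities below show that the substitution \(t=\sqrt{h+\frac{1}{2\eta }}\), \(t\in (0,\frac{1}{\sqrt{2\eta }})\), used in Sect. 3 makes all four points rational in t, and that \(-2\eta h=1-2\eta t^2\) relates the radical \(\sqrt{1-2\eta t^2}\) appearing in \(M_4(t)\) to h. That this is the motivation for the choice of t is our reading, not a statement of the paper.

```latex
h = t^2 - \frac{1}{2\eta}, \qquad
2\eta h + 1 = 2\eta t^2, \qquad
\eta^2 h + \frac{\eta}{2} = \eta^2 t^2, \qquad
-2\eta h = 1 - 2\eta t^2 = \bigl(1-\sqrt{2\eta}\,t\bigr)\bigl(1+\sqrt{2\eta}\,t\bigr),

y_A = \frac{-\sqrt{2\eta}\,t - 1}{2h} = \frac{\eta}{1-\sqrt{2\eta}\,t}, \qquad
y_C = \frac{\sqrt{2\eta}\,t - 1}{2h} = \frac{\eta}{1+\sqrt{2\eta}\,t}, \qquad
x_B = -x_D = \eta\, t .
```

As a consistency check, \(t\rightarrow 0\) gives \(y_A=y_C=\eta \) (the center), while \(t\rightarrow \frac{1}{\sqrt{2\eta }}\) gives \(y_A\rightarrow \infty \) and \(y_C\rightarrow \frac{\eta }{2}\), the point where the invariant curve \(y=x^2+\frac{\eta }{2}\) meets \(x=0\).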

3 Proof of Theorem 1.1

In the following, we denote by \(P_k(u)\), \(Q_k(u)\), \(R_k(u)\), \(S_k(u)\) and \(T_k(u)\) the polynomials of u with degree at most k and denote by \(\#\{\phi (h)=0, h\in (\lambda _1,\lambda _2)\}\) the number of isolated zeros of \(\phi (h)\) on \((\lambda _1,\lambda _2)\) taking into account the multiplicity.

Proof of Theorem 1.1

If \(n>3\) is an even number, let \({\overline{M}}(h)=h^{n-2}M(h)\) for \(h\in (-\frac{1}{2\eta },0)\). Since \(h\ne 0\) on this interval, \({\overline{M}}(h)\) and M(h) have the same number of zeros (counting multiplicity) on \((-\frac{1}{2\eta },0)\). By Lemmas 2.2 and 2.4, we have

Let \(t=\sqrt{h+\frac{1}{2\eta }}\), \(t\in (0,\frac{1}{\sqrt{2\eta }})\), then \({\overline{M}}(h)\) can be written as

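Since \(h\mapsto t=\sqrt{h+\frac{1}{2\eta }}\) is a smooth, strictly increasing bijection from \((-\frac{1}{2\eta },0)\) onto \((0,\frac{1}{\sqrt{2\eta }})\), \({\overline{M}}(h)\) and \(M_1(t)\) have the same number of zeros (counting multiplicity) for \(h\in (-\frac{1}{2\eta },0)\) and \(t\in (0,\frac{1}{\sqrt{2\eta }})\), respectively. Let \(\Sigma _1=(0,\frac{1}{\sqrt{2\eta }})\backslash \{t\in (0,\frac{1}{\sqrt{2\eta }})|P_{n-1}(t^2)=0\}\). Then, for \(t\in \Sigma _1\), we get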

Let \(\Sigma _2=(0,\frac{1}{\sqrt{2\eta }})\backslash \{t\in (0,\frac{1}{\sqrt{2\eta }})|P_{2n-2}(t^2)=0\}\), then we have for \(t\in \Sigma _2\)

Similarly, let \(\Sigma _3=(0,\frac{1}{\sqrt{2\eta }})\backslash \{t\in (0,\frac{1}{\sqrt{2\eta }})|P_{4n-3}(t^2)=0\}\), then we have for \(t\in \Sigma _3\)

Let \(M_4(t)=P_{17n-10}(t)+Q_{8n-5}(t^2)t\sqrt{1-2\eta t^2}\). The equation \(M_4(t)=0\) is equivalent to

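Squaring both sides of the above equation gives the polynomial equation \(P_{17n-10}^2(t)=Q_{8n-5}^2(t^2)\,t^2(1-2\eta t^2)\), whose degree is at most \(\max \{34n-20,32n-16\}=34n-20\). Hence \(M_4(t)\) has at most \(34n-20\) zeros on \((0,\frac{1}{\sqrt{2\eta }})\), and therefore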

If \(n=1,2,3\), it is easy to check that (3.1) also holds.

If n is an odd number, we can prove Theorem 1.1 similarly. This ends the proof of Theorem 1.1. \(\square \)
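The proof does not spell out how the intermediate counts combine into \(41n-23\). One reading that reproduces the bound exactly, assuming the usual Rolle-type estimate at each of the three division steps (our reconstruction, not the author's text), goes as follows. Removing the zeros of \(P_k(t^2)\) splits \((0,\frac{1}{\sqrt{2\eta }})\) into at most \(k+1\) subintervals, because a polynomial of degree k in \(u=t^2\) has at most k zeros with \(t>0\); on each subinterval a function has at most one more zero than the derivative of its quotient by \(P_k(t^2)\). The last function \(M_4\) is then bounded by the degree of the squared equation. The hypothetical helper `theorem_1_1_count` just adds these numbers, using the degrees stated for even \(n>3\).

```python
def theorem_1_1_count(n):
    """Sketch: add up the zero counts used in the proof of Theorem 1.1 for even n > 3."""
    if n <= 3 or n % 2 == 1:
        raise ValueError("the degrees below are those stated for even n > 3")
    # Degrees (in u = t^2) of P_{n-1}, P_{2n-2}, P_{4n-3}, whose zeros define Sigma_1..Sigma_3.
    removed = [n - 1, 2 * n - 2, 4 * n - 3]
    # Each step: #zeros(F) <= #zeros(d/dt(F/P)) + #zeros(P) + 1 (Rolle on each subinterval),
    # and P_k(t^2) has at most k zeros with t > 0.
    rolle_extra = sum(k + 1 for k in removed)
    # Squaring P_{17n-10}(t) = -Q_{8n-5}(t^2) t sqrt(1 - 2*eta*t^2): the two sides have
    # degrees 2(17n-10) and 2*2(8n-5) + 2 + 2 in t.
    m4_zeros = max(2 * (17 * n - 10), 4 * (8 * n - 5) + 4)
    return m4_zeros + rolle_extra
```

For example, for \(n=4\) the squared equation has degree 116, the three steps contribute \(4+7+14=25\), and the total is \(141=41\cdot 4-23\). In general the count is \((34n-20)+(7n-3)=41n-23\).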

4 Proof of Theorem 1.2

If \(f^1(x,y)=f^2(x,y)\), \(g^1(x,y)=g^2(x,y)\), \(f^3(x,y)=f^4(x,y)\) and \(g^3(x,y)=g^4(x,y)\), then system (1.2) can be written as

From Theorem 1.1 in [10, 17], we know that the first order Melnikov function M(h) of system (4.1) has the following form

and, if \(M(h)\not \equiv 0\), the number of zeros of M(h) in the corresponding period annulus controls the number of limit cycles of system (4.1).

For \(h\in \Sigma \) and \(i=0,1,2,\ldots ,j=0,1,2,\ldots \), we denote

where \(\Gamma _h=L^1_h\cup L^2_h\) and \({\tilde{\Gamma }}_h=L^3_h\cup L^4_h\). Since \(L_h\) is symmetric with respect to \(x=0\), it is easy to see that \({\tilde{U}}_{i,j}(h)=(-1)^{i+1}{U}_{i,j}(h)\). Similar to (2.2), we get

Therefore,

where \({\bar{\sigma }}_{i,j}\) are real constants.
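The relation \({\tilde{U}}_{i,j}(h)=(-1)^{i+1}U_{i,j}(h)\) above follows from the reflection \(R(x,y)=(-x,y)\), under which \(L_h\) is symmetric (B and D are mirror images, and A, C lie on \(x=0\)). The short derivation assumes, by analogy with \(I_{i,j}\), that \(U_{i,j}(h)=\int _{\Gamma _h}x^iy^j\,dy\) and \({\tilde{U}}_{i,j}(h)=\int _{{\tilde{\Gamma }}_h}x^iy^j\,dy\), both taken along the orbit; R maps \({\tilde{\Gamma }}_h\) onto \(\Gamma _h\) but reverses the direction of travel, and leaves dy unchanged.

```latex
\tilde U_{i,j}(h) = \int_{\tilde\Gamma_h} x^i y^j\,dy
  = -\int_{\Gamma_h} (-x)^i y^j\,dy
  = (-1)^{i+1}\, U_{i,j}(h).
```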

Following the proofs of Lemmas 2.1 and 2.2, we obtain the following lemma.

Lemma 4.1

If \(i+j=n>3\), then

where \({\alpha _1}(h)\), \({\beta _1}(h)\), \({\gamma _1}(h)\) and \({\delta _1}(h)\) are polynomials of h with

If \(n=1,2,3\), then

where \({\alpha _1}(h)\), \({\beta _1}(h)\), \({\gamma _1}(h)\) and \({\delta _1}(h)\) are polynomials of h with

The following lemma gives the Picard–Fuchs equations satisfied by the generators of M(h) in (4.2); it can be proved by the method used for Lemma 2.3.

Lemma 4.2

The vector functions \(\big (U_{0,1}(h),U_{2,0}(h)\big )^T\) and \(\big (U_{1,0}(h),U_{1,1}(h)\big )^T\) respectively satisfy the Picard–Fuchs equations

and

From (4.5) and (4.6), we have for \(h\in \Sigma =(-\frac{1}{2\eta },0)\)

where \(e_1\) and \(e_2\) are real constants. Hence,

where \({\hat{P}}_{k}(h)\), \({\hat{Q}}_{k}(h)\), \({\hat{R}}_{k}(h)\) and \({\hat{S}}_{k}(h)\) are polynomials of h of degree at most k. Following the lines of the proof of Theorem 1.1, we can prove that M(h) has at most \(9n-4\) zeros on \((-\frac{1}{2\eta },0)\). This proves Theorem 1.2.

5 Proof of Theorem 1.3

If \(f^1(x,y)=f^4(x,y)\), \(g^1(x,y)=g^4(x,y)\), \(f^2(x,y)=f^3(x,y)\) and \(g^2(x,y)=g^3(x,y)\), then system (1.2) can be written as

From Theorem 1.1 in [10, 17], we know that the first order Melnikov function M(h) of system (5.1) has the following form

where \(\Upsilon _h=L^1_h\cup L^4_h\) and \({\tilde{\Upsilon }}_h=L^2_h\cup L^3_h\). Furthermore, if \(M(h)\not \equiv 0\), the number of zeros of M(h) in the corresponding period annulus controls the number of limit cycles of system (5.1).

For \(h\in \Sigma \) and \(i=0,1,2,\ldots ,j=0,1,2,\ldots \), we denote

Noting that \(\Upsilon _h\) and \({\tilde{\Upsilon }}_h\) are symmetric with respect to \(x=0\), we get \({V}_{2l,j}(h)={\tilde{V}}_{2l,j}(h)=0\) for \(l=0,1,2,\ldots \). Similar to (2.2), we have

Therefore,

where \({\tilde{\sigma }}_{i,j}\), \({\tilde{\tau }}_{i,j}\) and \({\tilde{\nu }}_i\) are real constants.
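As for the relation \({\tilde{U}}_{i,j}=(-1)^{i+1}U_{i,j}\) in Sect. 4, the vanishing of \(V_{2l,j}(h)\) and \({\tilde{V}}_{2l,j}(h)\) can be traced to the reflection \(R(x,y)=(-x,y)\), which now maps \(\Upsilon _h\) onto itself with the direction of travel reversed. The derivation assumes, since the defining formulas are not reproduced here, that \(V_{i,j}(h)=\int _{\Upsilon _h}x^iy^j\,dy\); the same computation applies to \({\tilde{V}}_{i,j}\) on \({\tilde{\Upsilon }}_h\).

```latex
V_{i,j}(h) = \int_{\Upsilon_h} x^i y^j\,dy
  = -\int_{\Upsilon_h} (-x)^i y^j\,dy
  = (-1)^{i+1}\, V_{i,j}(h)
\quad\Longrightarrow\quad
V_{2l,j}(h) = 0, \qquad l = 0,1,2,\ldots
```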

Lemma 5.1

If \(i+j=n>3\), then

where \({\gamma _k}(h)\) and \({\delta _k}(h)\) \((k=1,2)\) are polynomials of h with

If \(n=1,2,3\), then

where \({\gamma _k}(h)\) and \({\delta _k}(h)\) \((k=1,2)\) are polynomials of h with

Lemma 5.2

The vector functions \(\big (V_{1,0}(h),V_{1,1}(h)\big )^T\) and \(\big ({\tilde{V}}_{1,0}(h),{\tilde{V}}_{1,1}(h)\big )^T\) respectively satisfy the Picard–Fuchs equations

and

From (5.5) and (5.6), we have for \(h\in \Sigma =(-\frac{1}{2\eta },0)\)

where \({\hat{c}}_1\) and \({\hat{d}}_2\) are real constants. Hence,

where \({\check{P}}_{n-1}(h)\), \({\check{Q}}_{n-1}(h)\), \({\check{R}}_{n-1}(h)\) and \({\check{S}}_{n-1}(h)\) are polynomials of h of degree at most \(n-1\). Following the lines of the proof of Theorem 1.1, we get that M(h) has at most \(9n-6\) zeros on \((-\frac{1}{2\eta },0)\). This proves Theorem 1.3.

References

Buica, A., Llibre, J.: Averaging methods for finding periodic orbits via Brouwer degree. Bull. Sci. Math. 128, 7–22 (2004)

Bujac, C., Llibre, J., Vulpe, N.: First integrals and phase portraits of planar polynomial differential cubic systems with the maximum number of invariant straight lines. Qual. Theory Dyn. Syst. 15, 327–348 (2016)

Cen, X., Li, S., Zhao, Y.: On the number of limit cycles for a class of discontinuous quadratic differential systems. J. Math. Anal. Appl. 449, 314–342 (2017)

Chen, H., Li, D., Xie, J., Yue, Y.: Limit cycles in planar continuous piecewise linear systems. Commun. Nonlinear Sci. Numer. Simul. 47, 438–454 (2017)

di Bernardo, M., Budd, C., Champneys, A., Kowalczyk, P.: Piecewise-Smooth Dynamical Systems, Theory and Applications. Springer, London (2008)

Dong, G., Liu, C.: Note on limit cycles for \(m\)-piecewise discontinuous polynomial Liénard differential equations. Z. Angew. Math. Phys. 68, 97 (2017)

Gao, Y., Peng, L., Liu, C.: Bifurcation of limit cycles from a class of piecewise smooth systems with two vertical straight lines of singularity. Int. J. Bifurc. Chaos 27, 1750157 (2017)

Gentes, M.: Center conditions and limit cycles for the perturbation of an elliptic sector. Bull. Sci. Math. 133, 597–643 (2009)

Han, M.: On the maximum number of periodic solutions of piecewise smooth periodic equations by average method. J. Appl. Anal. Comput. 7, 788–794 (2017)

Han, M., Sheng, L.: Bifurcation of limit cycles in piecewise smooth systems via Melnikov function. J. Appl. Anal. Comput. 5, 809–815 (2015)

Hu, N., Du, Z.: Bifurcation of periodic orbits emanated from a vertex in discontinuous planar systems. Commun. Nonlinear Sci. Numer. Simul. 18, 3436–3448 (2013)

Han, M., Sun, H., Balanov, Z.: Upper estimates for the number of periodic solutions to multi-dimensional systems. J. Differ. Equ. 266, 8281–8293 (2019)

Itikawa, J., Llibre, J., Mereu, A.C., Oliveira, R.: Limit cycles in uniform isochronous centers of discontinuous differential systems with four zones. Discrete Contin. Dyn. Syst. Ser. B 22, 3259–3272 (2017)

Krivan, V.: On the Gause predator-prey model with a refuge: a fresh look at the history. J. Theor. Biol. 274, 67–73 (2011)

Li, S., Liu, C.: A linear estimate of the number of limit cycles for some planar piecewise smooth quadratic differential system. J. Math. Anal. Appl. 428, 1354–1367 (2015)

Liang, F., Han, M., Romanovski, V.: Bifurcation of limit cycles by perturbing a piecewise linear Hamiltonian system with a homoclinic loop. Nonlinear Anal. 75, 4355–4374 (2012)

Liu, X., Han, M.: Bifurcation of limit cycles by perturbing piecewise Hamiltonian systems. Int. J. Bifurc. Chaos Appl. Sci. Eng. 20, 1379–1390 (2010)

Llibre, J., Mereu, A.: Limit cycles for discontinuous quadratic differential systems. J. Math. Anal. Appl. 413, 763–775 (2014)

Llibre, J., Mereu, A., Novaes, D.: Averaging theory for discontinuous piecewise differential systems. J. Differ. Equ. 258, 4007–4032 (2015)

Li, Y., Yuan, L., Du, Z.: Bifurcation of nonhyperbolic limit cycles in piecewise smooth planar systems with finitely many zones. Int. J. Bifurc. Chaos 27, 1750162 (2017)

Shen, J., Du, Z.: Heteroclinic bifurcation in a class of planar piecewise smooth systems with multiple zones. Z. Angew. Math. Phys. 67, 42 (2016)

Sanders, J., Verhulst, F.: Averaging Methods in Nonlinear Dynamical Systems, Applied Mathematical Sciences, vol. 59. Springer, Berlin (1985)

Teixeira, M.: Perturbation theory for non-smooth systems. In: Encyclopedia of Complexity and Systems Science. Springer, New York (2009)

Wang, Y., Han, M., Constantinescu, D.: On the limit cycles of perturbed discontinuous planar systems with 4 switching lines. Chaos Solitons Fractals 83, 158–177 (2016)

Xiong, Y.: Limit cycle bifurcations by perturbing non-smooth Hamiltonian systems with 4 switching lines via multiple parameters. Nonlinear Anal. Real World Appl. 41, 384–400 (2018)

Xiong, Y., Hu, J.: Limit cycle bifurcations in perturbations of planar piecewise smooth systems with multiply lines of critical points. J. Math. Anal. Appl. 474, 194–218 (2019)

Xiong, Y., Han, M.: On the limit cycle bifurcation of a polynomial system from a global center. Anal. Appl. 12, 251–268 (2014)

Yang, J., Zhao, L.: Limit cycle bifurcations for piecewise smooth Hamiltonian systems with a generalized eye-figure loop. Int. J. Bifurc. Chaos 26, 1650204 (2016)

Yang, J., Zhao, L.: Bounding the number of limit cycles of discontinuous differential systems by using Picard–Fuchs equations. J. Differ. Equ. 264, 5734–5757 (2018)

Yang, J., Zhao, L.: Limit cycle bifurcations for piecewise smooth integrable differential systems. Discrete Contin. Dyn. Syst. Ser. B 22, 2417–2425 (2017)

Zou, C., Yang, J.: Piecewise linear differential system with a center-saddle type singularity. J. Math. Anal. Appl. 459, 453–463 (2018)

Acknowledgements

Supported by National Natural Science Foundation of China (11701306, 11671040, 11601250), Construction of First-class Disciplines of Higher Education of Ningxia (Pedagogy)(NXYLXK2017B11), Young Top-notch Talent of Ningxia, Ningxia Natural Science Foundation of China (2019AAC03247) and Key Program of Ningxia Normal University (NXSFZDA1901).


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.


About this article

Cite this article

Yang, J. Limit Cycle Bifurcations from a Quadratic Center with Two Switching Lines. Qual. Theory Dyn. Syst. 19, 21 (2020). https://doi.org/10.1007/s12346-020-00374-5
