Abstract

Light is scattered and absorbed as it travels through water, which results in color shift, poor contrast, uneven illumination, and blurred details. This hinders the exploration of marine life, the protection of marine ecology, and the development of marine engineering. To address the visual unnaturalness and blurred details that remain in enhanced underwater images, we propose a multi-weight and multi-granularity underwater image enhancement algorithm. The algorithm is built on the fusion of two images, which are color-corrected and contrast-adjusted versions of an original degraded image. Their associated weight maps, i.e., Laplace contrast weight, local contrast weight, saliency weight, exposure weight, and saturation weight, are normalized and then fused at multiple granularities to reduce the visual unnaturalness caused by uneven illumination. Further, we use dual-scale decomposition to obtain two high-frequency components and add them to the fused image to enhance image contrast and highlight image details. We evaluate the proposed algorithm subjectively and objectively on several datasets. The subjective results demonstrate that our algorithm not only improves contrast, color naturalness, and brightness but also enhances the details of underwater images. The objective evaluation shows that the average UCIQE and PCQI values of our algorithm exceed those of six classical algorithms.

Introduction

The theoretical technologies of marine information acquisition, transmission, and processing are crucial to the rational development, utilization, and protection of marine resources. Underwater images are an important carrier of marine information. However, unlike normal images, underwater images have poor visibility because propagating light is attenuated, mainly by absorption and scattering. Absorption of light by the water medium leads to color degradation of the acquired images, while scattering leads to blurring and low contrast. Therefore, clear underwater images are crucial for accessing marine resources, and obtaining high-quality underwater images is an important issue. To solve this problem, many researchers have proposed methods to enhance underwater images based on their imaging characteristics. These methods are mainly classified into three categories.

The first category is underwater image recovery methods. These methods rely on specific physical models: they consider the root cause of image quality degradation and restore the image to its state before degradation. For instance, Pan et al. (2019) proposed a multi-scale iterative framework to remove scatter, which uses a white balance algorithm to remove color bias and then transforms the image to the nonsubsampled contourlet transform (NSCT) domain to remove noise and improve the visual effect of the image. However, it cannot effectively remove the blue background in the deep ocean region. He et al. (2011) suggested a single image defogging algorithm that finds the brightest pixel in the dark channel as the background light intensity, thus improving atmospheric fogged images. However, it does not apply to underwater image processing. Galdran et al. (2015) presented a red channel-based method for underwater image restoration, which reduces the attenuation of the red channel and the effect of artificial light sources on the transmittance estimation. However, the red channel of the resulting image does not reflect the image details well, and some image regions show red shading.

The second category is underwater image enhancement methods. These methods do not rely on specific models but purposefully enhance the most important and useful information and suppress information from irrelevant regions. For example, Wen et al. (2013) put forward an algorithm combining the dark channel with the blue-green channel to estimate the red channel transmittance, which enhances the image details effectively. However, the resulting image still has a color bias. Ghani and Isa (2014) proposed Rayleigh stretching to improve the quality of underwater images, which enhances visibility and contrast. However, the image colors are over-enhanced. Iqbal et al. (2007) presented an unsupervised color correction method for underwater image enhancement, effectively removing the bluish colors and improving red channel information. However, the processed image has obvious red shadows.

The third category is underwater image fusion methods. These methods have shown great improvement in dealing with color shift, low contrast, and low definition. For instance, Ancuti et al. (2011) used a fusion-based method. Firstly, two different versions are derived from the original image and used as the fusion components. Secondly, four fusion weight maps are obtained from each of these two images. Finally, a multi-scale fusion technique combines the weight maps and fusion components to obtain the enhanced underwater image (Ancuti et al. 2017). However, the details of the processed underwater images are not clear enough.

We conducted a comprehensive study of several state-of-the-art single underwater image enhancement algorithms above. Based on the previous work, we propose an underwater image enhancement algorithm based on multi-weight and multi-granularity fusion from the perspective of fusion. It has low complexity and high stability and can correct image chromaticity shift as well as improve image sharpness.

Overview of the main work: firstly, a color balance algorithm and contrast limited adaptive histogram equalization (CLAHE) are applied to the degraded underwater image to obtain two images with color correction and brightness uniformity. Secondly, multi-weight and multi-granularity normalized fusion of the two images drives their edge transfer, and the fused image has a natural appearance. Then, the fused image F is subjected to dual-scale image decomposition to obtain the first-layer large-scale high-frequency component \({F_{H_{1}}}\) and small-scale low-frequency component \({F_{L_{1}}}\). Finally, \({F_{L_{1}}}\) is subjected to dual-scale image decomposition again to obtain the second-layer large-scale high-frequency component \({F_{H_{2}}}\) and small-scale low-frequency component \({F_{L_{2}}}\). The fused image F, \({F_{H_{1}}}\) and \({F_{H_{2}}}\) are then combined to obtain a high-quality image. The algorithm can effectively enhance the global contrast, sharpness, and dark-region brightness of the image, as well as significantly improve the edge detail texture.

The main contributions of this paper are summarized as follows.

- We propose a multi-weight and multi-granularity underwater image enhancement algorithm, which can directly enhance individual underwater images without additional information. The proposed algorithm demonstrates the advantages of fusion and also drives the development of fusion-based underwater image enhancement.

- Our algorithm analyzes and processes the five weights and optimally assigns each weight proportion to make the image visually natural. The method can be applied to various image enhancement fields, such as marine image enhancement and image defogging.

- We conducted experiments on subjective results and objective evaluations, and the results show that the algorithm achieves better structure recovery, more natural color correction, more remarkable details, and higher stability.

Proposed algorithm

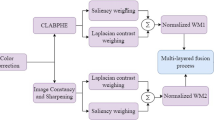

The main challenge of underwater image enhancement is to correct color while recovering image details. Therefore, we propose a fusion-based underwater image enhancement algorithm. The framework of our algorithm is shown in Fig. 1. Firstly, the color is corrected by color balance and CLAHE, which yields \(I_{CB}\) and \(I_{CL}\). Secondly, \(\overline {W_{CB}}\) and \(\overline {W_{CL}}\) are obtained by applying the multi-weights to \(I_{CB}\) and \(I_{CL}\), and image F is then obtained by multi-granularity fusion. Thirdly, the first dual-scale decomposition of F is performed to obtain \({F_{L_{1}}}\) and \({F_{H_{1}}}\), and \({F_{L_{1}}}\) is decomposed again to obtain \({F_{L_{2}}}\) and \({F_{H_{2}}}\). Finally, \({F_{H_{1}}}\) and \({F_{H_{2}}}\) are added to F to produce a clear resultant image.

Color correction

Different wavelengths of light attenuate at different rates underwater, causing a large reduction in light energy and resulting in color deviations between underwater images and real scenes. Currently, the classical methods of color correction include the Gray World and White Patch algorithms (Provenzi et al. 2008), Perfect Reflection (Zumofen et al. 2008), and multi-scale retinex with color restoration (MSRCR) (Qiu et al. 2014). The results of each color correction algorithm are shown in Fig. 2. However, the images processed by these methods suffer from reduced global contrast, high color saturation, and overexposure. To solve these problems, we use the color balance algorithm to remove the color bias and obtain image \(I_{CB}\) (Limare et al. 2011). The principle is intuitive: each of the R, G, and B channels is contrast stretched. A certain percentage of the smallest and largest pixel values is clipped, and the remaining middle range is then re-quantized linearly to between 0 and 255. The result of this algorithm is shown in Fig. 2(f). It eliminates color distortion more effectively, recovers the color saturation of the image more properly, and restores colors that are more natural and closer to the visual perception of human eyes.
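The clip-and-stretch principle described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `simplest_color_balance` and the default `clip_percent` of 1% are hypothetical, and the input is assumed to be an 8-bit RGB array.

```python
import numpy as np

def simplest_color_balance(img, clip_percent=1.0):
    """Clip clip_percent % of the pixels at each end of every channel,
    then stretch the remaining range linearly to [0, 255]."""
    out = np.empty_like(img, dtype=np.uint8)
    for c in range(img.shape[2]):
        channel = img[..., c].astype(np.float64)
        # Lower and upper clipping levels for this channel.
        lo, hi = np.percentile(channel, [clip_percent, 100.0 - clip_percent])
        if hi <= lo:
            # Flat channel: nothing to stretch, copy it unchanged.
            out[..., c] = np.clip(channel, 0, 255).astype(np.uint8)
            continue
        stretched = (np.clip(channel, lo, hi) - lo) / (hi - lo) * 255.0
        out[..., c] = np.round(stretched).astype(np.uint8)
    return out
```

Because each channel is stretched independently, a channel that is strongly attenuated (typically red in underwater scenes) is expanded to the same output range as the others, which is what removes the global color cast.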

Since some areas in the image \(I_{CB}\) processed by the color balance algorithm are too bright or too dark, we use CLAHE to solve this problem (Li 2010). Building on Adaptive Histogram Equalization (AHE) (Zimmerman et al. 1988), CLAHE uses a threshold to limit the contrast, which weakens noise amplification and effectively addresses the deficiencies of Histogram Equalization (HE) (Cheng and Shi 2004) and AHE. As a result, the visual effect of image \(I_{CL}\) obtained after processing by the CLAHE algorithm is more harmonious.
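The contrast-limiting idea can be illustrated with a deliberately simplified sketch. It applies the histogram clipping step to a single tile only; full CLAHE additionally splits the image into tiles and interpolates between their mappings, which is omitted here. The function name and the `clip_limit` default are assumptions for illustration.

```python
import numpy as np

def clipped_hist_equalize(gray, clip_limit=2.0, bins=256):
    """Contrast-limited histogram equalization on ONE tile.
    `gray` is a 2-D uint8 array; tiling and interpolation are not implemented."""
    hist = np.bincount(gray.ravel(), minlength=bins).astype(np.float64)
    # Clip threshold expressed relative to the mean bin count (assumed convention).
    limit = clip_limit * hist.mean()
    excess = np.sum(np.maximum(hist - limit, 0.0))
    # Redistribute the clipped counts uniformly over all bins.
    hist = np.minimum(hist, limit) + excess / bins
    cdf = np.cumsum(hist)
    cdf = (cdf - cdf[0]) / (cdf[-1] - cdf[0])
    lut = np.round(cdf * (bins - 1)).astype(np.uint8)
    return lut[gray]
```

Clipping the histogram bounds the slope of the mapping, so a narrow peak of similar gray levels is spread out less aggressively than with plain histogram equalization, which is how noise amplification is limited.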

The edges and details of the scene are affected by scattering, and the color balance and CLAHE algorithms alone are insufficient to address the unnaturalness of local areas. Based on this, we propose an effective fusion-based approach. The multi-weight and multi-granularity fusion strategy described in the next section is in charge of minimizing the transfer of these unnatural artifacts to the final blended image.

Multi-weight and multi-granularity fusion

Image fusion has shown utility in several applications such as image compositing (Grundland et al. 2010), multispectral video enhancement (Bennett et al. 2007; Ancuti and Bekaert 2010), and HDR imaging (Mertens et al. 2010). Here, our goal is to suppress excessive contrast enhancement in local areas and make the overall visual effect of the image more natural. In this work, we establish a multi-scale fusion principle and analyze and process five weights: Laplace contrast weight (\(W_{lap,k}\)), local contrast weight (\(W_{loc,k}\)), saliency weight (\(W_{sal,k}\)), saturation weight (\(W_{sat,k}\)), and exposure weight (\(W_{exp,k}\)), with k ∈ {CB, CL}. After finding the relationship among the five weights, we propose a multi-weight and multi-granularity fusion method to reduce image unnaturalness, in which \(I_{CB}\) and \(I_{CL}\) are used as the two input images for image fusion.

Multi-weight fusion

A. Laplace contrast weight (\(W_{lap,k}\))

\(W_{lap,k}\) is the global contrast weight map, obtained by applying the Laplacian filter to the luminance channel of each input and taking the absolute value. First, the RGB image is converted into an HSV image. Then, the luminance layer is extracted so that the Laplacian operator can effectively handle the image edge information. Finally, the Laplacian filter is applied to the brightness channel of each input image, and the absolute value of the response gives the global contrast weight (Ancuti et al. 2012).
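A minimal sketch of this weight is given below. It assumes an RGB image with values in [0, 1], takes the HSV value channel as the per-pixel maximum of R, G, and B, and uses a 4-neighbour discrete Laplacian with replicated borders; the kernel choice and the function name are assumptions, since the text does not specify them.

```python
import numpy as np

def laplacian_contrast_weight(rgb):
    """Absolute Laplacian response of the HSV value (brightness) channel."""
    value = rgb.max(axis=2)                   # HSV V channel = max(R, G, B)
    p = np.pad(value, 1, mode="edge")         # replicate the image borders
    # 4-neighbour discrete Laplacian: up + down + left + right - 4 * centre.
    lap = (p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:]
           - 4.0 * p[1:-1, 1:-1])
    return np.abs(lap)
```

The weight is zero in flat regions and large along edges, so edge and texture pixels receive more influence in the fusion.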

B. Local contrast weight (\(W_{loc,k}\))

\(W_{loc,k}\) measures the deviation between the brightness of each pixel in the input image and the average brightness of its neighboring pixels. We convert the input image \(I_{k}\) from RGB space to LAB space to calculate the local contrast weight of the image. It is defined as follows:

$$ W_{loc,k}=\lvert I_{k}-I_{\omega_{hc},k} \rvert, \tag{1} $$

where \(I_{k}\) is the brightness channel of the input and \(I_{\omega _{hc},k}\) is the same brightness channel after Gaussian low-pass filtering. The cut-off frequency \(\omega_{hc}\) of this filter is usually taken as π/2.75. The value of the local contrast reveals the overly bright and overly dark areas in the image. Therefore, the local contrast map is used to enhance the local contrast of the fused image.
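Equation (1) can be sketched as below. The low-pass filter is approximated by a separable 5-tap binomial kernel, which is an assumption made for illustration: the text specifies only the cut-off frequency, not the kernel. The input is assumed to be a 2-D brightness channel, and the function names are hypothetical.

```python
import numpy as np

def binomial_blur(channel):
    """Separable 5-tap binomial low-pass filter (assumed stand-in for the Gaussian)."""
    k = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0
    h, w = channel.shape
    p = np.pad(channel, 2, mode="reflect")
    rows = sum(k[i] * p[:, i:i + w] for i in range(5))      # horizontal pass
    return sum(k[i] * rows[i:i + h, :] for i in range(5))   # vertical pass

def local_contrast_weight(luminance):
    """Eq. (1): absolute difference between the channel and its low-passed version."""
    return np.abs(luminance - binomial_blur(luminance))
```

A pixel that matches its neighbourhood average gets a weight near zero, while a pixel that is much brighter or darker than its surroundings gets a large weight.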

C. Saliency weight (\(W_{sal,k}\))

\(W_{sal,k}\) mainly enhances the contrast between light and dark areas, enhances the overall contrast of the image, and highlights inconspicuous objects in the water environment:

$$ W_{sal,k}=(L_{k}-\overline{L_{k}})^{2}+(A_{k}-\overline{A_{k}})^{2}+(B_{k}-\overline{B_{k}})^{2}, \tag{2} $$

where \(L_{k}\) is the brightness channel and \(A_{k}\) and \(B_{k}\) are the two color channels of the input in LAB space. The averages of the three channels are denoted \(\overline {L_{k}}\), \(\overline {A_{k}}\) and \(\overline {B_{k}}\), respectively.

\(W_{sal,k}\) is used to highlight the salient areas of the image and enhance the contrast between the salient areas and the adjacent areas, thus improving the global contrast of the image.
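Equation (2) translates directly into a few lines of array code. The sketch below assumes the image has already been converted to LAB and is passed as an array of shape (H, W, 3); the RGB-to-LAB conversion is left out, and the function name is hypothetical.

```python
import numpy as np

def saliency_weight(lab):
    """Eq. (2): squared distance of each LAB pixel from the per-channel image mean."""
    mean = lab.reshape(-1, 3).mean(axis=0)    # per-channel means of L, A, B
    return np.sum((lab - mean) ** 2, axis=2)
```

Pixels whose color and brightness differ most from the image average receive the largest weight, which is why objects that stand out from the water background are emphasized.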

D. Saturation weight (\(W_{sat,k}\))

Saturation is an indicator of the vividness of the colors in an image. \(W_{sat,k}\) is used to adjust the saturated areas in an image, so that the image achieves uniform saturation after blending. Therefore, we can adjust the highly saturated areas of the original image based on \(W_{sat,k}\). We calculate the saturation weight by computing the deviation between the three channels \(R_{k}\), \(G_{k}\), \(B_{k}\) and the brightness \(L_{k}\) as follows:

$$ W_{sat,k}=\sqrt{\frac{1}{3}[(R_{k}-L_{k})^{2}+(G_{k}-L_{k})^{2}+(B_{k}-L_{k})^{2}]}. \tag{3} $$

Turning up the saturation of an image usually produces a more vibrant image. \(W_{sat,k}\) is used to select colorful image areas to be added to the fused image.
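A short sketch of Eq. (3) follows. It assumes an RGB image in [0, 1] and takes the brightness \(L_{k}\) as the mean of the three channels; that definition of brightness is an assumption, since the text does not state how \(L_{k}\) is computed here.

```python
import numpy as np

def saturation_weight(rgb):
    """Eq. (3): root-mean-square deviation of R, G, B from the pixel brightness."""
    lum = rgb.mean(axis=2, keepdims=True)     # assumption: L_k = (R + G + B) / 3
    return np.sqrt(np.mean((rgb - lum) ** 2, axis=2))
```

Gray pixels, where the three channels are equal, get a weight of zero, while strongly colored pixels get the highest weights.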

E. Exposure weight (\(W_{exp,k}\))

\(W_{exp,k}\) is used to increase the proportion of highly visible image areas in the fused image. Well-exposed pixels retain more detailed tonal information, and we define good exposure as follows:

$$ W_{exp,k}=e^{\left[- \frac{(L_{k}-0.5)^{2}}{2\times0.25^{2}}\right]}. \tag{4} $$

The larger the \(W_{exp,k}\) of a region of the image, the more balanced the exposure of that region and the higher the visibility.
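Equation (4) is a Gaussian centred on mid-gray, as the following sketch shows. The brightness is assumed to be normalized to [0, 1]; the function name and the `sigma` parameter name are hypothetical, with the default 0.25 taken from the equation.

```python
import numpy as np

def exposure_weight(lum, sigma=0.25):
    """Eq. (4): Gaussian of the distance between brightness and mid-gray (0.5)."""
    return np.exp(-((lum - 0.5) ** 2) / (2.0 * sigma ** 2))
```

The weight equals 1 for a brightness of 0.5 and falls to exp(-2) at pure black or pure white, so under- and over-exposed regions contribute less to the fused image.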

The Laplace contrast weight, local contrast weight, saliency weight, saturation weight, and exposure weight are summed:

$$ W_{k}=W_{lap,k}+W_{loc,k}+W_{sal,k}+W_{sat,k}+W_{exp,k}. \tag{5} $$

Next, \(W_{k}\) (k ∈ {CB, CL}) is normalized as

$$ \overline{W_{k}}=\frac{W_{k}}{W_{CB}+W_{CL}}, \tag{6} $$

where \(W_{CB}\) is the sum of \(W_{lap,CB}\), \(W_{loc,CB}\), \(W_{sal,CB}\), \(W_{sat,CB}\) and \(W_{exp,CB}\), and \(W_{CL}\) is the sum of the five weights of \(I_{CL}\). The normalization makes the two weights sum to one at each pixel. \(\overline {W_{k}}\) is the normalized weight map of the input image and serves as its weight for image fusion, as shown in Fig. 3.
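The summation and normalization of Eqs. (5) and (6) can be sketched as follows. The normalization is read as a per-pixel division by the sum of the two aggregated maps; the small `eps` term that guards against division by zero and the function names are assumptions added for illustration.

```python
import numpy as np

def aggregate_weights(w_lap, w_loc, w_sal, w_sat, w_exp):
    """Eq. (5): sum the five weight maps of one input."""
    return w_lap + w_loc + w_sal + w_sat + w_exp

def normalize_weights(w_cb, w_cl, eps=1e-12):
    """Eq. (6): per-pixel normalization so the two maps sum to (almost exactly) one."""
    total = w_cb + w_cl + eps                 # eps avoids 0/0 where both maps vanish
    return w_cb / total, w_cl / total
```

After normalization the fused result is a per-pixel convex combination of the two inputs, so the fusion cannot raise or lower the overall intensity scale.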

Multi-granularity fusion

To avoid problems arising from pixel-level fusion methods, such as the halo phenomenon, we use a multi-granularity fusion method based on pyramidal decomposition. The flow of multi-granularity fusion is shown in Fig. 4, and the steps are described as follows.

Step 1 decomposes the two normalized weight maps into Gaussian pyramids. The weight maps \(\overline {W_{CB}}\) and \(\overline {W_{CL}}\) are repeatedly low-pass filtered and downsampled to generate Gaussian pyramids with granularity layers l (l ∈ {1,2,...,9}), denoted \(G_{l,k}\). Different decomposition layers correspond to different granularities.

Step 2 decomposes the two input images \(I_{CB}\) and \(I_{CL}\) into Laplacian pyramids with l granularity layers. (1) Gaussian pyramid layers are generated from \(I_{CB}\) and \(I_{CL}\). (2) A Gaussian pyramid layer is up-sampled and low-pass filtered to match the size of the Gaussian pyramid layer of the previous granularity. (3) The Laplacian pyramid layer is obtained as the difference between the Gaussian pyramid layer of the previous granularity and this up-sampled layer. Steps (2)-(3) are repeated to obtain nine different decomposition layers. Different decomposition layers also correspond to different granularities. The Laplacian pyramid layer at granularity l is denoted \(L_{l,k}\).

Step 3 fuses the results of steps 1 and 2 in each granularity layer l to obtain the multi-granularity fusion layer \(F_{l}\). The Laplacian pyramid layer \(L_{l,k}\) of each granularity is weighted by the Gaussian pyramid layer \(G_{l,k}\) of the same granularity, and the weighted layers are summed over the two inputs:

$$ F_{l}=\sum_{k\in\{CB,CL\}} G_{l,k}\,L_{l,k}, \tag{7} $$

where G is the Gaussian pyramid decomposition of the normalized weight map and L is the Laplacian pyramid decomposition of the input image.

Step 4 obtains the multi-granularity fusion image F by up-sampling and reconstructing the fused Laplacian pyramid \(F_{l}\).
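Steps 1 to 4 can be sketched for single-channel images as below. This is a minimal illustration under stated assumptions rather than the authors' code: the low-pass filter is a 5-tap binomial kernel, up-sampling uses pixel repetition followed by the same filter, the default of four layers is chosen for small test images (the text uses nine), and all function names are hypothetical. Color images would be processed channel by channel.

```python
import numpy as np

K = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0   # assumed low-pass kernel

def blur(a):
    h, w = a.shape
    p = np.pad(a, 2, mode="edge")
    rows = sum(K[i] * p[:, i:i + w] for i in range(5))
    return sum(K[i] * rows[i:i + h, :] for i in range(5))

def down(a):
    return blur(a)[::2, ::2]                      # low-pass, then drop every other pixel

def up(a, shape):
    big = np.repeat(np.repeat(a, 2, axis=0), 2, axis=1)[:shape[0], :shape[1]]
    return blur(big)                              # repeat pixels, then low-pass

def gaussian_pyramid(a, levels):
    pyr = [a]
    for _ in range(levels - 1):
        pyr.append(down(pyr[-1]))
    return pyr

def laplacian_pyramid(a, levels):
    g = gaussian_pyramid(a, levels)
    # Each layer is the detail lost between two consecutive Gaussian layers.
    return [g[i] - up(g[i + 1], g[i].shape) for i in range(levels - 1)] + [g[-1]]

def fuse(images, weights, levels=4):
    fused = None
    for img, w in zip(images, weights):
        gp = gaussian_pyramid(w, levels)          # Step 1: weight pyramid
        lp = laplacian_pyramid(img, levels)       # Step 2: image pyramid
        terms = [g * l for g, l in zip(gp, lp)]   # Step 3: per-layer weighting
        fused = terms if fused is None else [f + t for f, t in zip(fused, terms)]
    out = fused[-1]                               # Step 4: collapse from coarse to fine
    for layer in reversed(fused[:-1]):
        out = layer + up(out, layer.shape)
    return out
```

Because the weights are smoothed at every layer before they multiply the image details, sharp transitions in the weight maps do not produce the halo artifacts that a single-scale, per-pixel blend would.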

Dual-scale image decomposition detail enhancement

The contrast, color naturalness, sharpness, and brightness of the fused image are significantly improved, and the overall visual effect is good. However, the details and edges are not clear enough. To eliminate the effect of light scattering on imaging while recovering detailed texture information, so that the ocean scene is fully represented, we propose a dual-scale image decomposition algorithm applied to the fused image. Dual-scale image decomposition splits an image into two parts: a low-frequency component and a high-frequency component. The low-frequency component corresponds to image regions where the gray value changes slowly, i.e., the large flat areas that describe the main part of the image. The high-frequency component corresponds to the parts of the image that change drastically, i.e., the detailed edges or noise of the image.

The implementation of the image dual-scale decomposition algorithm is shown in Fig. 5. Firstly, F is convolved with the mean filter Z to obtain the low-frequency component \(F_{L_{1}}\), and the remaining high-frequency component is \(F_{H_{1}}\). Secondly, \(F_{L_{1}}\) is decomposed in the same way into \(F_{L_{2}}\) and \(F_{H_{2}}\). Finally, \(F_{H_{1}}\), \(F_{H_{2}}\) and F are fused to obtain the result image, which effectively solves the detail degradation problem.
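The two decomposition passes and the final combination can be sketched as follows. Several points are assumptions made for illustration: the high-frequency component is taken as the residual after mean filtering, the final fusion is implemented as a plain sum of F with the two high-frequency components, and the mean filter size of 7 is a hypothetical value, since the text does not give the size of Z. The input is a single-channel float image.

```python
import numpy as np

def mean_filter(a, size=7):
    """Separable box (mean) filter with replicated borders; `size` must be odd."""
    r = size // 2
    h, w = a.shape
    p = np.pad(a, r, mode="edge")
    rows = sum(p[:, i:i + w] for i in range(size)) / size
    return sum(rows[i:i + h, :] for i in range(size)) / size

def dual_scale_enhance(f, size=7):
    f_l1 = mean_filter(f, size)        # first low-frequency component
    f_h1 = f - f_l1                    # assumed: high frequency = residual
    f_l2 = mean_filter(f_l1, size)     # second pass on the low-frequency part
    f_h2 = f_l1 - f_l2
    enhanced = f + f_h1 + f_h2         # add both detail layers back to F
    return enhanced, (f_l1, f_h1, f_l2, f_h2)
```

With these definitions the result equals 2F minus \(F_{L_{2}}\), so flat regions are left unchanged while edges and textures are amplified. The same amplification applies to noise, and in practice the output would likely need clipping to the valid intensity range.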

Ablation experiment

To demonstrate the validity of each component of our algorithm, we conducted ablation experiments. Histograms of the R, G, and B channels visualize the grayscale distribution of an image and are a common method for analyzing image quality (He et al. 2021). We compare the original image, the color-corrected image, the fused image F, and the resultant image to show the need for color correction, multi-weight and multi-granularity fusion, and dual-scale decomposition. Figure 6 shows the results of the histogram comparison for the R, G, and B channels. As shown in Fig. 6(a), the pixel values of the three channels in the original image histogram differ greatly in magnitude and are distributed only in concentrated areas, so the image suffers from low contrast and color bias. Figure 6(b) shows that color correction removes the color bias and stretches the histogram. As shown in Fig. 6(c), the histogram of the multi-weight and multi-granularity fusion image shows a reduced percentage of pixels at gray level 255, which alleviates the local unnaturalness. As shown in Fig. 6(d), the histogram of the dual-scale fused image shows that the gray values of each channel are stretched and the pixel values of the three channels are balanced in magnitude, with significantly enhanced gray levels and less texture granularity. Figure 6 shows that the color correction is very effective. Multi-weight and multi-granularity fusion retains and accumulates important features from all previous levels and improves local color correction. The dual-scale fusion helps extract edge features of underwater images and allows the algorithm to use the key effective information to generate clearer images. The ablation experiments demonstrate the effectiveness of our algorithm.
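The histogram quantities discussed above can be computed with a few lines of array code. The sketch below is a hypothetical helper, not part of the authors' pipeline; it assumes an 8-bit RGB image and reports the per-channel histograms together with the share of pixels at gray level 255.

```python
import numpy as np

def channel_histograms(img):
    """Return a (3, 256) array of per-channel pixel counts for a uint8 RGB image."""
    return np.stack([np.bincount(img[..., c].ravel(), minlength=256)
                     for c in range(3)])

def saturated_fraction(img):
    """Fraction of pixels at gray level 255 in each channel."""
    hist = channel_histograms(img)
    return hist[:, 255] / hist.sum(axis=1)
```

Comparing `saturated_fraction` before and after fusion gives a numerical counterpart to the visual comparison between Fig. 6(b) and Fig. 6(c).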

Analysis of results

We used publicly available underwater image datasets (Ancuti et al. 2012; Galdran et al. 2015). We chose six typical underwater blurred images to test the feasibility of our algorithm.

Subjective evaluation

Figure 7(b) shows the result of the defogging algorithm based on the dark channel prior, in which the color distortion problem remains unresolved; this atmospheric defogging algorithm is not applicable to underwater images. The multi-scale fusion dehazing algorithm enhances the contrast of the images to some extent (Ancuti and Ancuti 2013). However, it cannot correct the color distortion of the underwater images, as shown in Fig. 7(c). The UDCP algorithm, designed to recover underwater images, cannot accurately correct the color of the image in the case of severe color deviation and may even exacerbate the deviation, as shown in Fig. 7(d) (Drews et al. 2013). The rank-based dehazing shows enhanced contrast (Emberton et al. 2015). However, the overall color of the images is yellowish, and the color recovery is not satisfactory, as in Fig. 7(e). The result of the fusion enhancement algorithm has a high degree of color reproduction (Ancuti et al. 2017). However, the images appear overexposed. For example, the red channel appears overcompensated, and some areas of the images appear reddish, as in Fig. 7(f). In the result of the novel dark channel prior recovery algorithm, local contrast is enhanced (Hou et al. 2020). However, the color distortion increases and the images are overexposed overall, as in Fig. 7(g). In contrast, the proposed algorithm can solve the color bias and overexposure problems for underwater images with green (dark and light green) and blue backgrounds. As a result, Fig. 7(h) has more natural colors and higher contrast and sharpness.

To test the effectiveness of the proposed algorithm in processing detailed texture information, we perform further experiments on local detail images. As in Fig. 8(b)–(e) and 8(g), the problems of color bias and poor detail texture enhancement still exist. Figure 8(f) shows that the color correction works well, but the process loses detailed information. In comparison, our algorithm has a good visual effect. As shown in Fig. 8(h), the proposed algorithm improves contrast and sharpness and enhances the detailed texture structure while correcting the color bias.

Objective evaluation

The subjective visual evaluation shows that the algorithm improves the clarity of underwater images in different environments. To further verify the effectiveness of the proposed algorithm, we also evaluate it objectively using two metrics, namely the underwater color image quality evaluation (UCIQE) (Yang and Sowmya 2015) and the patch-based contrast quality index (PCQI) (Wang et al. 2015).

(1) UCIQE is a linearly weighted combination of chroma, saturation, and contrast in CIELab space. It not only quantitatively describes the uneven color shift, blur, and low contrast of underwater images but also measures the quality of underwater images.

(2) PCQI is a metric used to evaluate the quality of images with local contrast variation by calculating the mean intensity, signal strength, and signal structure in each patch to compare the difference in contrast between two images. PCQI is based on comparing the mean and variance within the patch.
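For reference, the linear combination behind UCIQE can be written as below. This is a sketch of the form given by Yang and Sowmya (2015), in which the three terms are the standard deviation of chroma \(\sigma_{c}\), the contrast of luminance \(con_{l}\), and the mean saturation \(\mu_{s}\). The weighting coefficients \(c_{1}\), \(c_{2}\), \(c_{3}\) are left symbolic here because their values are not restated in this paper.

$$ UCIQE = c_{1}\,\sigma_{c} + c_{2}\,con_{l} + c_{3}\,\mu_{s} $$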

The UCIQE and PCQI of underwater images processed by our algorithm and six different algorithms are shown in Tables 1 and 2, with the optimal values in bold. We can make the following observations: 1) The higher the UCIQE, the better the overall quality, the more natural the color, and the higher the contrast and sharpness. 2) The larger the value of PCQI, the better the quality and fidelity of the images.

Our algorithm presents more effective information while also better eliminating the fogging of underwater images, and the subjective visual effect is significantly improved. In addition, the PCQI and UCIQE values in the objective evaluation are also good.

Stability analysis

We will further analyze the stability of the algorithm. Six underwater images with different scenes and different tones are selected from the publicly available underwater image database. Six different classical algorithms are compared with our algorithm, as shown in Fig. 9.

The comparison of different algorithms in Fig. 9 shows that our algorithm steadily improves the visual quality of underwater images and better reflects the image details. The results of the algorithms corresponding to Fig. 9(b)–(g) still suffer from color distortion. Figure 9(c) shows that the details of the resulting image of this algorithm are not clear enough. Figure 9(e) shows that the color saturation of the resulting image of this algorithm is low.

The resultant image of our algorithm has a high color fidelity and good visual perception compared to the original image. In addition, the resulting image of our algorithm shows objects in the water clearly distinguished from the background. Furthermore, the thematic information, such as divers and carved rocks, is better highlighted. In conclusion, our proposed algorithm shows significant improvements in handling color shifts, low contrast, and low sharpness, while the details of the processed images are much clearer.

To objectively verify the stability of the proposed algorithm, we compare the histograms of the six original images in Fig. 9(a) with those of the resulting images in Fig. 9(h), as shown in Fig. 10.

Figure 10 shows that the overall RGB grayscale in the resultant histograms is enhanced. The red channel is no longer concentrated in the low grayscale region around 0 to 50 but is evenly distributed over the whole interval. The histograms of the six enhanced underwater images have similar distributions, which indicates the stability of our algorithm.

In summary, our algorithm improves the quality of underwater images more than the compared algorithms. In addition, the algorithm achieves higher UCIQE and PCQI values in the objective evaluation, which supports its effectiveness.

Conclusions

We propose an underwater image enhancement algorithm based on the principle of multi-weight and multi-granularity fusion. The algorithm starts directly from a single degraded image and requires no additional information. Firstly, the algorithm color corrects the underwater image by pre-processing the original image before fusion. Secondly, a fusion-decomposition-fusion scheme is adopted to address unnatural appearance and blurred details and to obtain a clear resultant image. Finally, we validate and analyze the proposed algorithm from three perspectives: subjective evaluation, objective evaluation, and stability. The experimental results show that the algorithm can stably improve the contrast of the image, solve the color shift problem, and better reflect the details of each channel without losing the color information of each channel itself. Compared with the original image, the visual effect is significantly improved. However, a shortcoming is that some noise is amplified during the detail texture enhancement process, which will be addressed in future research.

Code Availability

The codes generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Ancuti C O, Ancuti C (2013) Single image dehazing by multi-scale fusion. IEEE Trans Image Process 22:3271–3282

Ancuti C, Bekaert P (2010) Effective single image dehazing by fusion. In: IEEE International conference on image processing, pp 3541–3544

Ancuti C O, Ancuti C, Haber T, Bekaert P (2011) Fusion-based restoration of the underwater images. In: IEEE International conference on image processing, pp 1557–1560

Ancuti C, Ancuti C O, Haber T, Bekaert P (2012) Enhancing underwater images and videos by fusion. In: IEEE conference on computer vision pattern recognition, pp 81–88

Ancuti C O, Ancuti C, Vleeschouwer C D, Bekaert P (2017) Color balance and fusion for underwater image enhancement. IEEE Trans Image Process 27:379–393

Bennett E P, Mason J L, Mcmillan L (2007) Multispectral bilateral video fusion. IEEE Trans Image Process 16:1185–1194

Cheng H D, Shi X J (2004) A simple and effective histogram equalization approach to image enhancement. Digit Signal Process 14:158–170

Drews J P, Nascimento E, Moraes F, Botelho S, Campos M (2013) Transmission estimation in underwater single images. In: IEEE international conference on computer vision workshops, pp 825–830

Emberton S, Chittka L, Cavallaro A (2015) Hierarchical rank-based veiling light estimation for underwater dehazing. In: British machine vision conference, vol 12, pp 1–125

Galdran A, Pardo D, Picon A, Alvarez-Gila A (2015) Automatic red-channel underwater image restoration. J Vis Commun Image Represent 26:132–145

Ghani A, Isa N (2014) Underwater image quality enhancement through composition of dual-intensity images and Rayleigh-stretching. SpringerPlus 3

Grundland M, Vohra R, Williams G P, Dodgson N A (2010) Cross dissolve without cross fade: preserving contrast, color and salience in image compositing. Comput Graph Forum 25:577–586

He K, Sun J, Tang X (2011) Single image haze removal using dark channel prior. IEEE Trans Pattern Anal Mach Intell 33:2341–2353

He S, Chen Z, Wang F (2021) Integrated image defogging network based on improved atmospheric scattering model and attention feature fusion. Earth Sci Inform 14:2037–2048

Hou G, Li J, Wang G, Yang H, Huang B, Pan Z (2020) A novel dark channel prior guided variational framework for underwater image restoration. J Vis Commun Image Represent 66:102732

Iqbal K, Salam R A, Osman A, Talib A Z (2007) Underwater image enhancement using an integrated colour model. IAENG Int J Comput Sci 34:239–244

Li Z (2010) Contrast limited adaptive histogram equalization. Comput Knowl Technol 6:2238–2241

Limare N, Lisani J L, Morel J M, Petro A B, Sbert C (2011) Simplest color balance. Image Process On Line 1:297–315

Mertens T, Kautz J, Reeth F V (2010) Exposure fusion: a simple and practical alternative to high dynamic range photography. Comput Graph Forum 28:161–171

Pan P W, Yuan F, Cheng E (2019) De-scattering and edge-enhancement algorithms for underwater image restoration. Front Inf Technol Electron Eng 20:862–871

Provenzi E, Gatta C, Fierro M, Rizzi A (2008) A spatially variant white-patch and gray-world method for color image enhancement driven by local contrast. IEEE Trans Pattern Anal Mach Intell 30:1757–1770

Qiu Y, Jiang X, Xiong J (2014) A multi-scale retinal vessels enhancement filtering method based on hessian operator. Comput Appl Softw 31:201–205

Wang S, Ma K, Yeganeh H, Wang Z, Lin W (2015) A patch-structure representation method for quality assessment of contrast changed images. IEEE Signal Process Lett 22:2387–2390

Wen H, Tian Y, Huang T, Wen G (2013) Single underwater image enhancement with a new optical model. In: IEEE international symposium on circuits and systems (ISCAS), pp 753–756

Yang M, Sowmya A (2015) An underwater color image quality evaluation metric. IEEE Trans Image Process 24:6062–6071

Zimmerman J, Pizer S, Staab E, Perry J, Mccartney W, Brenton B (1988) An evaluation of the effectiveness of adaptive histogram equalization for contrast enhancement. IEEE Trans Med Imaging 7:304–312

Zumofen G, Mojarad N M, Sandoghdar V, Agio M (2008) Perfect reflection of light by an oscillating dipole. Phys Rev Lett 101:180404

Funding

This work was supported by the Natural Science Foundation of Zhangzhou (no. ZZ2020J33) and the National Natural Science Foundation of China under Grant (no. 62001199). Partial financial support was received from the project of the Fujian Provincial Natural Science Fund (no. 2019J01842).

Author information

Contributions

All authors contributed to the study conception and design. Material preparation, data collection and analysis were performed by Shuqi Wang, Zhixiang Chen and Hui Wang. The first draft of the manuscript was written by Shuqi Wang and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics Approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Conflict of Interest

All authors declare that they have no conflict of interest.

Additional information

Communicated by: H. Babaie

Availability of Data and Material

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Shuqi Wang and Hui Wang contributed equally to this work.

About this article

Cite this article

Wang, S., Chen, Z. & Wang, H. Multi-weight and multi-granularity fusion of underwater image enhancement. Earth Sci Inform 15, 1647–1657 (2022). https://doi.org/10.1007/s12145-022-00804-9