Abstract

The Differential Evolution (DE) algorithm is one of the most popular and studied approaches in Evolutionary Computation (EC). Its simple but efficient design, together with its competitive performance on many real-world optimization problems, has positioned it as the standard comparison scheme for any proposal in the field. Precisely because of its simplicity, a great number of variants and improvements have been published since its inception in 1997. Moreover, several DE variants are recognized in the literature as well-founded and highly competitive algorithms. In addition, the many applications of DE and the modifications proposed in the state of the art have led to numerous review and survey works. However, none of the existing DE compilations has studied the different variants of the DE operators exclusively, which would benefit future DE enhancements and related topics. Therefore, this work presents a survey analysis of the variants of DE operators. The study focuses on the proposed DE operators and their impact on the EC literature over the years. The analysis helps to understand each year's trends, the improvements that marked milestones in DE research, and feasible future directions for the algorithm. Finally, the results show a downward trend for mutation and crossover variants, while readers are increasingly interested in initialization and selection enhancements.


1 Introduction

Differential Evolution (DE) is one of the most popular and widely used algorithms in Evolutionary Computation (EC); it was developed by Rainer Storn and Kenneth V. Price around 1995 [1]. Like many other optimization techniques, DE was created to solve real-world engineering problems such as the Chebyshev polynomial fitting problem and the optimization of digital filter coefficients [2, 3]. Using the Genetic Annealing algorithm [4], Price found the solution to the five-dimensional Chebyshev problem. However, he concluded that this approach did not meet the performance requirements of a competitive optimization scheme because of its slow convergence and because tuning its control parameters effectively was an arduous task. After this initial research, he modified Genetic Annealing by changing its encoding and using arithmetic operations instead of logical ones. Eventually, he discovered the differential mutation operator on which DE is based [5]. After Storn suggested changes to the architecture, including separate parent and child populations, DE was conceived as we know it today.

Due to its simple design and optimization flexibility, DE has been applied to many scientific and engineering optimization problems such as image processing [6, 7], energy systems [8, 9], and healthcare [10, 11], among several others. Thanks to this flexibility, the DE literature has grown to an enormous number of works that would be almost impossible to gather in a single compendium. In fact, since the inception of DE, the rate of related publications has grown exponentially, as shown in Fig. 1. This has led to many works that compile DE publications and group them by specific topics. These are relevant works that contribute considerable value to their respective fields of study [12]. Such publications usually present a brief general review of DE and its history, like the survey of Opara et al. [13], where the main DE variants are discussed through the years. Also, Parouha presented a recent DE overview in which its most important variants are chronologically compared against Particle Swarm Optimization (PSO) variants [14, 15]. Finally, Mashwani discusses the different evolutionary strategies inspired by DE [16], to mention a few related works.

Another common way to organize a DE review is by optimization field. Chakraborty published a valuable recent review of the applications of DE to image processing problems [17], while Qing presented a book explaining in detail the electrical engineering applications of DE [18]. In other engineering fields such as chemistry and communications, important summary works have also been published, like the review of Dragoi et al. [19], which covers the use of DE in chemical optimization problems over the years; similarly, Okagbue developed a detailed analysis of the impact of DE on wireless communications over the years [20], to mention just a few application reviews.

A classical way to review DE is according to specific algorithm features; however, such works are a minority among DE reviews. One of the most recent examples was presented by Piotrowski et al. [21], who summarized the relevance of different population sizes in DE variants. Xin presented the essential hybridizations of DE and PSO algorithms to determine the best design optimizers [22]. A recent and relevant summary work was presented by Tanabe et al. [23], who analyzed the most critical ways to tune the control parameters of DE (mutation and crossover rates). Finally, a classical feature of any optimization approach is its competitiveness on multi-objective optimization problems (MOPs); in that sense, the most recent related work was proposed by Ayaz, who presented the most remarkable advances in the state of the art for improving DE on MOPs [24]. These feature-oriented reviews are just a few of the many related works.

As noted, DE has been summarized in many ways over the years, and such works remain necessary since the published advances in DE keep growing in number and complexity [25]. However, review (or survey) works account for only \(1\%\) of DE publications; the distribution of the remaining publication types is shown in Fig. 2. As can be seen, most of the works are journal articles from different scientific fields. Moreover, after 28 years of DE, some topics, such as the history of DE, its operators, and their variants, have been addressed only vaguely in review works. Discussing the DE operators is important because these operators now number in the dozens and, to the best of the authors' knowledge, no work in the literature summarizes all of them. Moreover, it is well documented that the accuracy and optimization performance of DE and its variants are directly related to the quality of their operators. Thus, knowing the advances and limitations of all DE operators in the literature is crucial to proposing a genuine DE improvement. Regarding the review and survey works reported in Fig. 2, only those directly related to DE itself have been considered in this work. This is noteworthy because DE is among the most analyzed algorithms across research fields, so the literature also reports many compilation works on DE applied to specific fields. Note also that this work only considers compilation works not published in Open Access journals. Table 1 presents all the compilation works used for this article, their publisher, and their publication year. Notably, all the compilation works meeting these criteria were published from 2010 onward. Springer was the main publisher of these works in the first half of the decade, while Elsevier led the rest of the decade and continues to publish this type of work.

To fill this gap, this article presents a survey of the DE operators reported in the literature. The four main stages of the algorithm (initialization, mutation, crossover, and selection) are presented, analyzed, and discussed. Each stage is addressed separately, and its operator variants are analyzed individually, so that readers can easily find the operators most relevant to them instead of searching through long paragraphs where information can be lost. Additionally, a table at the end of each subsection summarizes the advantages and limitations of each operator variant, so readers can judge which techniques seem most attractive for future research. Finally, once all the operators have been presented, the survey closes with a discussion subsection for each DE stage that offers an objective perspective on the schemes described.

These discussions also allow all the operators to be compared simultaneously, either to identify the best ones in terms of optimization or to give a general view of future opportunities for each operator. This survey aims to be a valuable source of information for future DE improvements. To that end, the information gathered from the state of the art covers 113 works published up to the time this work was written, collected through a rigorous scientific gathering process. In summary, this review can be characterized by the following five features:

-

This work compiles a considerable number of the DE operators and variants presented in the literature.

-

The reviewed literature comprises 113 publications, processed systematically regardless of their field of study.

-

The information is presented in an orderly way for easier reading.

-

All the presented operators are analyzed and discussed in depth.

-

This work is a compendium of valuable information for future DE improvements.

The rest of the article is organized as follows: Sect. 2 explains the basics of DE, Sect. 3 presents a study of the DE operator variants in their respective stages, Sect. 4 discusses each stage of DE, and Sect. 5 reports the authors' conclusions.

2 Differential Evolution

Differential Evolution (DE) is a population-based metaheuristic algorithm that uses an iterative process to find solutions to a problem. It searches by generating improved candidate solutions through an evolutionary process consisting of four stages: initialization, mutation, crossover, and selection. Figure 3 shows the flowchart of the general scheme of the DE algorithm. These stages are briefly described in the following subsections.

2.1 Initialization Stage

The initialization stage is the first step of the DE search for the global optimum within the search space. In this stage, an initial population is generated at random. The population consists of Np individuals, where the i-th individual at generation G is a d-dimensional vector \(x_{i,G}=\bigl \{x^{1}_{i,G}, x^{2}_{i,G},\ldots ,x^{d}_{i,G}\bigr \}\). The components of the initial population (G = 0) are generated as follows:

$$\begin{aligned} x^{d}_{i,0}=x_{min} + rand(0,1)\,(x_{max}-x_{min}) \end{aligned}$$(1)

where \(i=1,\ldots ,Np\), rand(0, 1) represents a uniformly distributed random variable within the range [0,1], \(x_{min}\) and \(x_{max}\) are the lower and upper bounds for each dimension of the search space. Once the initial population has been generated, the steps involved in the iterative process are carried out.
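Eq. 1 can be sketched in plain Python as shown below. This is a minimal illustration; the function name `initialize_population` and the use of Python's standard `random` module are assumptions, not part of the original description.

```python
import random


def initialize_population(np_size, x_min, x_max, rng=random):
    """Uniform random initialization (Eq. 1).

    x_min and x_max hold per-dimension lower and upper bounds; every
    component is drawn as x_min + rand(0, 1) * (x_max - x_min).
    """
    d = len(x_min)
    return [
        [x_min[j] + rng.random() * (x_max[j] - x_min[j]) for j in range(d)]
        for _ in range(np_size)
    ]


# Example: Np = 5 individuals in a 3-dimensional box (seeded for reproducibility).
pop = initialize_population(5, [-5.0, -5.0, 0.0], [5.0, 5.0, 1.0], random.Random(1))
```

Passing a seeded `random.Random` instance keeps runs reproducible; by default the module-level generator is used.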

2.2 Mutation Stage

The DE algorithm applies a perturbation to each candidate solution to produce a modified version of each individual, called the mutant vector \(v^{d}_{i,G}\). The most common mutation operator in DE is DE/rand/1, defined in Eq. 2.

$$\begin{aligned} v_{i,G}=x_{r3,G} + F (x_{r1,G}-x_{r2,G}) \end{aligned}$$(2)

where \(x_{r1}, x_{r2}, x_{r3}\) are population vectors selected at random such that \(r1 \ne r2 \ne r3 \ne i\) (the four indices are mutually distinct), and F is the scale factor, a user-defined constant in the range [0, 2].
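A minimal Python sketch of DE/rand/1 is shown below. The function name `rand_1_mutation` is hypothetical; drawing the three indices without replacement from all indices except `i` is one straightforward way to satisfy the distinctness condition.

```python
import random


def rand_1_mutation(pop, i, F, rng=random):
    """DE/rand/1 mutation: v = x_r3 + F * (x_r1 - x_r2).

    r1, r2 and r3 are sampled without replacement from every index except i,
    so the four indices are mutually distinct (requires Np >= 4).
    """
    r1, r2, r3 = rng.sample([k for k in range(len(pop)) if k != i], 3)
    return [pop[r3][j] + F * (pop[r1][j] - pop[r2][j]) for j in range(len(pop[i]))]


pop = [[0.0, 0.0], [1.0, 2.0], [3.0, 1.0], [2.0, 5.0]]
v = rand_1_mutation(pop, 0, 0.5, random.Random(0))  # mutant for target index 0
```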

2.3 Crossover Stage

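In this stage, the target individual is combined with its mutant vector to generate a trial individual. This process is guided by the crossover probability Cr, a constant in the range [0, 1]. To generate the new vector, called the trial vector \(u_{i,G}\), crossover is performed component by component (\(j=1,\ldots ,d\)) between the target vector \(x_{i,G}\) and the mutant vector \(v_{i,G}\) as follows:

$$\begin{aligned} u^{j}_{i,G}={\left\{ \begin{array}{ll} v^{j}_{i,G}, & \text {if } rand(0,1)\le Cr \text { or } j=d_{rand}\\ x^{j}_{i,G}, & \text {otherwise} \end{array}\right. } \end{aligned}$$(3)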

where \(d_{rand}\) is an index chosen at random from \(\{1, 2, \ldots , d\}\), which ensures that \(u_{i,G}\) includes at least one component of the mutant vector \(v_{i,G}\).
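The binomial crossover can be sketched as follows. This is an illustrative function (`binomial_crossover` is a hypothetical name) that uses 0-based indices, so `d_rand` is drawn from `0..d-1` rather than `1..d`.

```python
import random


def binomial_crossover(target, mutant, Cr, rng=random):
    """Binomial crossover between a target and its mutant vector.

    Component j is taken from the mutant when rand(0, 1) <= Cr or j == d_rand;
    otherwise it is copied from the target.
    """
    d = len(target)
    d_rand = rng.randrange(d)  # guarantees at least one mutant component
    return [
        mutant[j] if (rng.random() <= Cr or j == d_rand) else target[j]
        for j in range(d)
    ]


target, mutant = [0.0] * 6, [1.0] * 6
u = binomial_crossover(target, mutant, 0.3, random.Random(7))
```

With `Cr = 0` the trial vector inherits exactly one mutant component (the one at `d_rand`); with `Cr = 1` it equals the mutant.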

2.4 Selection Stage

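The selection stage determines whether the target or the trial solution survives into the next generation by comparing their fitness values; the vector with the better fitness continues in the next generation's population. For a minimization problem, this operation is performed as follows:

$$\begin{aligned} x_{i,G+1}={\left\{ \begin{array}{ll} u_{i,G}, & \text {if } f(u_{i,G})\le f(x_{i,G})\\ x_{i,G}, & \text {otherwise} \end{array}\right. } \end{aligned}$$(4)

where \(f(\cdot )\) is the objective function.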

Once selection has been applied to every individual, the mutation, crossover, and selection steps are repeated until the optimum is located or a termination criterion is satisfied.
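Putting the four stages together, the classic loop can be sketched as below. This is a minimal illustration under stated assumptions: minimization, a fixed generation budget as the termination criterion, DE/rand/1 mutation, binomial crossover, and no bound handling. The names `differential_evolution` and `sphere` are hypothetical.

```python
import random


def sphere(x):
    """Simple test objective with its minimum (0) at the origin."""
    return sum(v * v for v in x)


def differential_evolution(f, x_min, x_max, np_size=20, F=0.5, Cr=0.9,
                           max_gens=300, seed=0):
    """Classic DE/rand/1/bin for minimization; returns (best_x, best_f)."""
    rng = random.Random(seed)
    d = len(x_min)
    # Initialization: uniform random population inside the bounds.
    pop = [[x_min[j] + rng.random() * (x_max[j] - x_min[j]) for j in range(d)]
           for _ in range(np_size)]
    fit = [f(x) for x in pop]
    for _ in range(max_gens):  # termination: fixed number of generations
        new_pop, new_fit = pop[:], fit[:]  # separate parent and child populations
        for i in range(np_size):
            # Mutation: DE/rand/1 with r1, r2, r3, i mutually distinct.
            r1, r2, r3 = rng.sample([k for k in range(np_size) if k != i], 3)
            v = [pop[r3][j] + F * (pop[r1][j] - pop[r2][j]) for j in range(d)]
            # Crossover: binomial, with at least one component from the mutant.
            d_rand = rng.randrange(d)
            u = [v[j] if (rng.random() <= Cr or j == d_rand) else pop[i][j]
                 for j in range(d)]
            # Selection: keep the trial vector if it is not worse than the target.
            fu = f(u)
            if fu <= fit[i]:
                new_pop[i], new_fit[i] = u, fu
        pop, fit = new_pop, new_fit
    best = min(range(np_size), key=fit.__getitem__)
    return pop[best], fit[best]


best_x, best_f = differential_evolution(sphere, [-5.0] * 3, [5.0] * 3)
```

Writing the survivors into `new_pop` rather than overwriting `pop` in place keeps every mutation within a generation based on the parent population, which matches the separate parent and child populations mentioned in the introduction.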

Each stage in the DE algorithm process will be examined and expanded upon in the following sections.

3 A Study of the Differential Evolution Operators and Their Variants

This section analyzes the DE operators proposed in the literature. It describes the variants found for each DE stage (initialization, mutation, crossover, and selection), drawn from previously published articles, and discusses some of their advantages and limitations.

3.1 Initialization in DE

In general terms, population-based algorithms rely on an initialization approach to define their initial parameters and establish the initial population. Several studies have shown that the search quality of an algorithm is directly related to the quality of its initial population [37, 38]. Accordingly, a well-initialized population can be understood as one in which diversity is the most essential feature. For this reason, different initialization processes have been used in DE; however, many approaches simply adopt an initialization already established in the literature. Therefore, this section analyzes only the techniques in which the initialization phase is one of the novelties of the proposal.

-

Micro-opposition-based differential evolution

Rahnamayan [39] proposed an initialization based on micro-opposition (micro-ODE) with a very small population size. The algorithm was applied to sixteen image thresholding tasks, and the results were compared against the well-known Kittler approach; micro-ODE showed remarkable benefits over the Kittler method [40].
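The details of micro-ODE are not given here, so the sketch below shows only the generic opposition-based initialization idea on which ODE-style methods build: the opposite of a point x in [a, b] is a + b − x, and the fittest Np individuals of the random and opposite populations are kept. The function name and the minimization assumption are illustrative, not the authors' exact procedure.

```python
import random


def opposition_based_init(f, np_size, x_min, x_max, rng=random):
    """Generic opposition-based initialization for minimization.

    Draw a uniform random population, build its opposite population
    (x_opp = x_min + x_max - x, per dimension), and keep the np_size
    fittest individuals of the union of both sets.
    """
    d = len(x_min)
    pop = [[x_min[j] + rng.random() * (x_max[j] - x_min[j]) for j in range(d)]
           for _ in range(np_size)]
    opp = [[x_min[j] + x_max[j] - x[j] for j in range(d)] for x in pop]
    return sorted(pop + opp, key=f)[:np_size]


# Example with f = sum (lower is better) in the box [0, 10]^2.
pop = opposition_based_init(sum, 4, [0.0, 0.0], [10.0, 10.0], random.Random(5))
```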

-

Quadratic interpolation differential evolution

Pant [41] presented QIDE, in which quadratic interpolation is applied in DE to improve the algorithm's convergence rate. QIDE was tested on 10 benchmark problems and compared against DE and Opposition-based learning DE (ODE), showing a significant improvement over the classical DE.
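The core of quadratic interpolation is the vertex of the parabola through three points. A minimal sketch of the standard three-point formula, applied dimension by dimension with the fitness values of three whole vectors, is shown below; which three individuals QIDE actually uses is not described in this section, so that choice is left to the caller, and the function name is hypothetical.

```python
def quadratic_interpolation(a, b, c, fa, fb, fc):
    """Component-wise vertex of the parabola through (a_j, fa), (b_j, fb), (c_j, fc).

    a, b and c are vectors with fitness values fa, fb and fc. When the
    parabola opens upward, the vertex is its minimum.
    """
    point = []
    for aj, bj, cj in zip(a, b, c):
        num = (bj**2 - cj**2) * fa + (cj**2 - aj**2) * fb + (aj**2 - bj**2) * fc
        den = (bj - cj) * fa + (cj - aj) * fb + (aj - bj) * fc
        # den == 0 means the three points are collinear (no unique vertex);
        # fall back to the first point in that case.
        point.append(aj if den == 0 else 0.5 * num / den)
    return point


# f(x) = (x - 1)^2 sampled at 0, 2 and 3: the interpolated minimum is x = 1.
print(quadratic_interpolation([0.0], [2.0], [3.0], 1.0, 1.0, 4.0))
```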

-

Nonlinear simplex differential evolution

Ali [42] compared three different initialization schemes in DE to determine the best one in computational terms. The novelty of this work is the use of the Nonlinear Simplex Method (NSM) as an initialization technique [43], resulting in NSDE. A comparison with DE, ODE, and QIDE demonstrated the competitiveness of NSDE.

-

Smart sampling differential evolution

De Melo [44] used machine learning techniques to find promising regions in a continuous search space. Using the Smart Sampling (SS) method, the proposed SSDE was tested on a set of benchmark functions against ODE, QODE, and UQODE.

-

Improved self-adaptive differential evolution with multiple strategies

Deng [45] proposed ISDEMS, a DE variant based on a dynamic population divided according to the fitness of its individuals, together with an adjustment method to tune the F and Cr factors. ISDEMS was compared with DE, ACDE, and SACDE on different optimization tests, showing good performance.

-

Adaptive population topology differential algorithm

Sun [46] designed APTDE, a DE variant for unconstrained optimization problems that updates the population topology to avoid premature convergence. The results showed the algorithm's remarkable efficiency.

-

Adaptive population tuning scheme

Zhu [47] based the adaptive population tuning scheme (APTS) on the dynamic population concept, in which redundant individuals are removed according to their ranking order. APTS improves population diversity and, as the results demonstrated, is effective for various evolutionary strategies.

-

Symbiosis co-evolutionary model based on the population topology differential evolution

Sun [48] proposed SCoPTDE, a DE variant similar to APTDE in which the population is divided to improve diversity. SCoPTDE was compared with other related approaches and obtained remarkable results.

-

Adaptive multi-population differential evolution

Wang [49] applied the dynamic population concept to multi-objective optimization. The resulting hybrid MOEA improves the exploitation of DE, and the results showed important benefits for DE in multi-objective search.

-

Cumulative differential evolution

Aalto [50] presented Cumu-DE, a modified DE in which the population is adapted based on a probability mass function. It was designed to auto-tune the three control parameters of DE, and it proved faster than other related schemes and computationally effective.

-

Ensemble sinusoidal differential evolution with niching reduction

Awad [51] published EsDEr-NR, a variant of LSHADE [52] in which the exploration and exploitation phases are improved through a mixture of two sinusoidal formulas based on the best solutions found so far. The proposal outperformed other related approaches, including CMA-ES variants [53].

-

Reinitialization mid-point check

Du Plessis [54] proposed a novel technique for Dynamic DE (DynDE) [55] that allows populations to compete for function evaluations based on their performance. The results show significant improvements for all DynDE approaches.

-

Self-adaptive strategy differential evolution with symmetric Latin hypercube design

Zhao [56] developed SLADE-SLHD, in which the population is initialized with a symmetric Latin hypercube design, increasing the population's diversity.
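To show the stratification idea behind Latin hypercube initialization, the sketch below implements plain Latin hypercube sampling: each dimension is split into Np equal strata and every stratum is used exactly once. It deliberately omits the symmetry constraint of the symmetric design used in SLADE-SLHD, and the function name is hypothetical.

```python
import random


def latin_hypercube_init(np_size, x_min, x_max, rng=random):
    """Plain (non-symmetric) Latin hypercube initialization.

    For each dimension, the range is divided into np_size equal strata; a random
    permutation assigns one stratum to each individual, and the component is
    drawn uniformly inside its stratum.
    """
    d = len(x_min)
    pop = [[0.0] * d for _ in range(np_size)]
    for j in range(d):
        strata = list(range(np_size))
        rng.shuffle(strata)  # each stratum used exactly once in dimension j
        width = (x_max[j] - x_min[j]) / np_size
        for i, s in enumerate(strata):
            pop[i][j] = x_min[j] + (s + rng.random()) * width
    return pop


pop = latin_hypercube_init(10, [0.0, 0.0], [10.0, 10.0], random.Random(2))
```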

-

Chaotically initialized differential evolution

Ozer [57] presented CIDE, a DE initialized with seven different chaotic maps, and showed that the variants using the sinusoidal and circle chaotic maps significantly outperform the standard DE in diverse scenarios.
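A chaotic initialization replaces uniform random numbers with values from a chaotic sequence. The sketch below uses the logistic map \(z \leftarrow 4z(1-z)\) purely as an illustrative stand-in; it is not one of the maps singled out above (sinusoidal and circle), and the function name, seed range, and burn-in length are assumptions.

```python
import random


def logistic_chaotic_init(np_size, x_min, x_max, burn_in=100, rng=random):
    """Chaotic initialization driven by the logistic map z <- 4 z (1 - z).

    A single chaotic sequence in [0, 1] is generated and mapped onto the
    search bounds, component by component.
    """
    d = len(x_min)
    z = rng.uniform(0.01, 0.99)  # random starting value away from 0 and 1
    for _ in range(burn_in):  # discard the transient part of the orbit
        z = 4.0 * z * (1.0 - z)
    pop = []
    for _ in range(np_size):
        x = []
        for j in range(d):
            z = 4.0 * z * (1.0 - z)
            x.append(x_min[j] + z * (x_max[j] - x_min[j]))
        pop.append(x)
    return pop


pop = logistic_chaotic_init(8, [-1.0, -1.0, -1.0], [1.0, 1.0, 1.0], rng=random.Random(4))
```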

-

Cluster-based population initialization for differential evolution frameworks

Poikolainen [58] developed CBPI, a three-stage initialization in which a randomly generated population is clustered with a K-means approach and grouped according to the Euclidean distances between individuals to improve the quality of the initial population.

-

Differential evolution based on clustering

Bajer [59] developed DEc, a novel initialization technique in which promising regions are found through a clustering process and a simple Cauchy mutation is then used to generate new individuals in those regions [60].

-

Adaptive multi-population differential evolution with dynamic population reduction

Ali [61] presented sTDE-dR, a DE variant that clusters the population into multiple tribes, applies different mutation and crossover schemes to each tribe, and dynamically reduces the population according to the success of the tribes.

-

Heuristic for initialization of cluster centers

Mustafi [62] proposed a new initialization for the k-means algorithm based on evolutionary strategies. Essentially, DE generates the required number of clusters at each iteration; this avoids empty clusters and improves k-means.

-

Chaotic oppositional differential evolution

Ahmad [63] presented a DE variant that combines chaotic maps and Opposition-Based Learning strategies [64] to improve the quality of the algorithm's initial solutions. The scheme was applied to different classical DE variants over several benchmark functions, demonstrating the viability of this new initialization process (Table 2).

3.2 Mutation in DE

DE has proven to be one of the most popular and successful evolutionary algorithms for solving optimization problems. However, due to the problems that continually emerge from modern application areas, its search performance still needs improvement [65]. The performance and effectiveness of DE rely heavily on the selected mutation operator and its associated control parameter values. This has led several researchers to focus on designing new approaches or improving these strategies to make DE more robust. In biology, a mutation can be defined as a sudden change in the gene composition of a chromosome, whereas in evolutionary computation it refers to a random perturbation applied to selected decision variables. In the DE algorithm, an individual is taken as a donor and perturbed with the scaled difference of two other individuals to produce a mutated individual. DE mutation strategies are generally denoted 'DE/\(\alpha\)/\(\beta\)', where DE stands for Differential Evolution, \(\alpha\) specifies the base vector to be perturbed, and \(\beta\) is the number of difference vectors involved in the mutation.

Notably, no single mutation strategy satisfactorily solves all problems [30], because an operator may be effective for specific problems but perform poorly on others, in line with the No Free Lunch theorem [66]. For this reason, different mutation strategies with different control parameter settings may solve a particular problem better than a single mutation strategy with fixed parameter settings, as in the original version of DE.

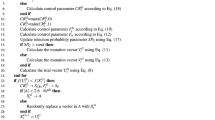

Each individual in the population is a d-dimensional vector representing a candidate solution, so the idea behind the original mutation strategy is to take the difference vector between two individuals, scale it by the mutation factor, and add it to a third individual to create a new candidate solution. Figure 4 illustrates this process in a two-dimensional search space. Two individuals \(x_{r1}\) and \(x_{r2}\) are randomly chosen with \(r1\ne r2\), and the scaled difference between them is added to a randomly selected individual \(x_{r3}\) with \(r3\notin \{r1,r2\}\). The result is a mutant vector \(v_{i,G}\), which, after crossover, may enter the population as part of a new candidate solution depending on its fitness value. At every generation G, this operator creates a mutant vector \(v_{i,G}\) for each population member, also known as the target vector \(x_{i,G}\). Listed below are some of the mutation strategies proposed in the last two decades.

-

DE/rand/1

In this strategy (see Eq. 5), three vectors are selected from the population: one serves as the base vector and is perturbed by the difference between the other two. The indices r1, r2, and r3 of these vectors must be mutually exclusive integers randomly chosen from the range [1, Np] and different from the index i of the target vector. This randomness in selecting the individuals that create a mutant vector allows the algorithm to maintain population diversity and global search capability with no bias toward any specific search direction. Nevertheless, it may slow down convergence [67].

$$\begin{aligned} v_{i,G}=x_{r3,G} + F (x_{r1,G}-x_{r2,G}) \end{aligned}$$(5)

Brest et al. [68] used this mutation operator in the jDE algorithm, which adapts the control parameters F and Cr automatically and thus avoids trial-and-error tuning of these values.

-

DE/rand/2

Eq. 6 can be considered an extension of DE/rand/1, since two additional vectors (indices r4 and r5) are included, forming a second difference vector that may yield a better perturbation than strategies with only one difference vector. Some researchers [69] have stated that strategies with two difference vectors are better than DE/rand/1 because they improve diversity by producing more diverse trial vectors while increasing the exploration of the search space.

$$\begin{aligned} v_{i,G}=x_{r5,G} + F (x_{r1,G}-x_{r2,G})+ F (x_{r3,G}-x_{r4,G}) \end{aligned}$$(6)

The self-adaptive DE algorithm with improved mutation strategy (IMSaDE) [70] combines this operator with an elite archive strategy to generate mutant vectors. In this way, the authors seek to retain the good exploration capacity of this operator while improving the convergence rate through the inclusion of individuals with good fitness.
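A minimal sketch of Eq. 6 follows; the function name is hypothetical, and the five indices are drawn without replacement from all indices except `i`, so Np must be at least 6.

```python
import random


def rand_2_mutation(pop, i, F, rng=random):
    """DE/rand/2: v = x_r5 + F (x_r1 - x_r2) + F (x_r3 - x_r4).

    r1..r5 are mutually distinct and different from i (requires Np >= 6).
    """
    r1, r2, r3, r4, r5 = rng.sample([k for k in range(len(pop)) if k != i], 5)
    return [
        pop[r5][j] + F * (pop[r1][j] - pop[r2][j]) + F * (pop[r3][j] - pop[r4][j])
        for j in range(len(pop[i]))
    ]


pop = [[float(k), float(-k)] for k in range(6)]
v = rand_2_mutation(pop, 0, 0.5, random.Random(11))
```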

-

DE/best/1

The difference between this strategy (Eq. 7) and DE/rand/1 is that, instead of randomly selecting the base vector, it uses the individual with the best fitness found so far (\(x_{best,G}\)), attracting all the individuals toward the same best position. According to Mezura et al. [71], this scheme is one of the most competitive for unimodal, separable, and non-separable problems, but its exploitative tendency may cause premature convergence, loss of diversity, and a higher chance of falling into a local optimum on multimodal problems [72, 73].

$$\begin{aligned} v_{i,G}=x_{best,G} + F (x_{r1,G}-x_{r2,G}) \end{aligned}$$(7)

It is difficult to find an algorithm that employs only this mutation strategy; in most cases, it is used to compensate for the weak exploitation of other strategies, such as DE/rand/1 in the original DE algorithm. For example, the Modified Mutation Strategy DE (MMDE) algorithm [74] uses this operator in conjunction with DE/rand/1, and a random number compared with a threshold determines which one is applied.
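The sketch below implements DE/best/1 for a minimization problem and a hypothetical switch between DE/rand/1 and DE/best/1 in the spirit of the combination described above. The rule `rand(0, 1) < threshold` and the default threshold of 0.5 are illustrative assumptions, not MMDE's exact criterion.

```python
import random


def best_1_mutation(pop, fitness, i, F, rng=random):
    """DE/best/1 (minimization): v = x_best + F (x_r1 - x_r2)."""
    best = min(range(len(pop)), key=fitness.__getitem__)
    r1, r2 = rng.sample([k for k in range(len(pop)) if k not in (i, best)], 2)
    return [pop[best][j] + F * (pop[r1][j] - pop[r2][j]) for j in range(len(pop[i]))]


def rand1_or_best1(pop, fitness, i, F, threshold=0.5, rng=random):
    """Hypothetical switch: DE/rand/1 when rand(0, 1) < threshold, else DE/best/1.

    The threshold rule is an assumption for illustration, not MMDE's rule.
    """
    if rng.random() < threshold:
        r1, r2, r3 = rng.sample([k for k in range(len(pop)) if k != i], 3)
        return [pop[r3][j] + F * (pop[r1][j] - pop[r2][j]) for j in range(len(pop[i]))]
    return best_1_mutation(pop, fitness, i, F, rng)


pop = [[3.0], [1.0], [2.0], [5.0]]
fitness = [x[0] ** 2 for x in pop]  # the best individual is index 1
```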

-

DE/best/2

In this strategy, similarly to DE/rand/2, an additional pair of vectors is included, which adds an extra component of random variation in each mutation, also seen as an enhancement of the exploration capability [75]. The strategy is defined as follows:

$$\begin{aligned} v_{i,G}=x_{best,G} + F (x_{r1,G}-x_{r2,G})+ F (x_{r3,G}-x_{r4,G}) \end{aligned}$$(8)

As in the previous schemes (and in most mutation operators, unless otherwise stated), \(r1 \ne r2 \ne r3 \ne r4 \ne i \ne best\). Ho-Huu et al. [76] included this operator in their proposal alongside DE/rand/1, DE/rand/2, and DE/best/1; at each generation, two of them are selected by an adaptive scheme based on the absolute deviation between the best and the mean objective function values of the previous generation.
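A minimal sketch of Eq. 8, assuming minimization, is shown below. The four random indices are drawn so that they differ from each other, from `i`, and from the best index, following the distinctness condition stated above; the function name is hypothetical.

```python
import random


def best_2_mutation(pop, fitness, i, F, rng=random):
    """DE/best/2 (minimization): v = x_best + F (x_r1 - x_r2) + F (x_r3 - x_r4).

    r1..r4 are mutually distinct and also differ from i and from the best index.
    """
    best = min(range(len(pop)), key=fitness.__getitem__)
    r1, r2, r3, r4 = rng.sample([k for k in range(len(pop)) if k not in (i, best)], 4)
    return [
        pop[best][j] + F * (pop[r1][j] - pop[r2][j]) + F * (pop[r3][j] - pop[r4][j])
        for j in range(len(pop[i]))
    ]


pop = [[4.0, 4.0], [0.5, -0.5], [2.0, 1.0], [3.0, 0.0], [1.0, 3.0], [2.5, 2.5]]
fitness = [x[0] ** 2 + x[1] ** 2 for x in pop]  # the best individual is index 1
```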

-

DE/current-to-rand/1

In this mutation operator (Eq. 9), the current vector is the base vector, which is perturbed by the differences between two pairs of individuals. The effect of this method, presented in [77], can be seen as a local search, because the current vector is attracted to a surrounding point determined by the differences of four randomly selected vectors.

$$\begin{aligned} v_{i,G}=x_{i,G} + F (x_{r1,G}-x_{r2,G})+ F (x_{r3,G}-x_{r4,G}) \end{aligned}$$(9)

Its efficacy on multi-objective optimization problems has been demonstrated in [78]. In the Fitness Landscape DE (FLDE) algorithm [79], Z. Tan et al. used this operator along with DE/rand/1 and DE/current-to-best/1, training a random forest model on CEC2014 and CEC2015 functions to predict which mutation strategy to apply when solving new problems.
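The sketch below follows Eq. 9 exactly as written in this survey: the current vector is the base, perturbed by two scaled difference vectors of four distinct random individuals. The function name is hypothetical, and Np must be at least 5.

```python
import random


def current_to_rand_mutation(pop, i, F, rng=random):
    """Eq. 9: v = x_i + F (x_r1 - x_r2) + F (x_r3 - x_r4).

    r1..r4 are mutually distinct and different from i (requires Np >= 5).
    """
    r1, r2, r3, r4 = rng.sample([k for k in range(len(pop)) if k != i], 4)
    return [
        pop[i][j] + F * (pop[r1][j] - pop[r2][j]) + F * (pop[r3][j] - pop[r4][j])
        for j in range(len(pop[i]))
    ]


pop = [[1.0, 1.0], [2.0, 0.0], [0.0, 3.0], [5.0, 5.0], [4.0, 2.0]]
v = current_to_rand_mutation(pop, 2, 0.5, random.Random(0))
```

Because the base vector is always the current individual, the mutant stays in a neighborhood of `x_i` whose size is controlled by F, which is the local-search interpretation mentioned above.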

-

DE/current-to-best/1

The DE/current-to-best/1 strategy (Eq. 10), also known as DE/target-to-best/1 [2, p. 140], uses the information of the best solution found so far in the population to generate the mutant vector, which can be interpreted as an attraction of the current vector toward the best vector.

$$\begin{aligned} v_{i,G}=x_{i,G} + F (x_{best,G}-x_{i,G})+ F (x_{r1,G}-x_{r2,G}) \end{aligned}$$(10)

In an attempt to balance the effects of this mutation operator, S. Das et al. proposed two neighborhood models in the local and global neighborhood-based mutations DE (DEGL) algorithm [80]. The first one is known as the local neighborhood model, where each individual is mutated using the best position of a small zone. The second one, referred to as the global mutation model, employs the best position of the entire population, just like DE/current-to-best/1 does.
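The sketch below implements Eq. 10 with the guiding vector passed in as an index, so the same function covers the global model (index of the population's best vector) and a local model (index of a neighborhood best). The ring-shaped neighborhood in `ring_neighborhood_best` is an illustrative assumption; DEGL's full formulation, including how it combines both models, is not reproduced here.

```python
import random


def current_to_best_mutation(pop, i, guide, F, rng=random):
    """Eq. 10 with a configurable guide: v = x_i + F (x_guide - x_i) + F (x_r1 - x_r2).

    guide = index of the population's best vector gives DE/current-to-best/1.
    """
    r1, r2 = rng.sample([k for k in range(len(pop)) if k not in (i, guide)], 2)
    return [pop[i][j] + F * (pop[guide][j] - pop[i][j]) + F * (pop[r1][j] - pop[r2][j])
            for j in range(len(pop[i]))]


def ring_neighborhood_best(fitness, i, radius):
    """Index of the fittest individual (minimization) among i-radius..i+radius on a ring."""
    n = len(fitness)
    neighbors = [(i + k) % n for k in range(-radius, radius + 1)]
    return min(neighbors, key=fitness.__getitem__)


fitness = [5.0, 3.0, 9.0, 1.0, 7.0, 4.0]
local_guide = ring_neighborhood_best(fitness, 0, 1)  # neighbors 5, 0, 1
```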

-

DE/rand-to-best/1

Qin et al. [72] developed this operator, which combines the current best individual with two difference vectors. The mutant vector is generated as follows:

$$\begin{aligned} v_{i,G}=x_{r3,G} + F (x_{best,G}-x_{r3,G})+ F (x_{r1,G}-x_{r2,G}) \end{aligned}$$(11)

This operator gradually guides the population toward the best solution. However, unlike DE/current-to-best/1, the attraction is not applied uniformly across the population at each iteration, since the same individual can be selected as the base vector and perturbed several times in one iteration.
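A minimal sketch of DE/rand-to-best/1 for minimization follows, with the attraction term taken as \((x_{best,G}-x_{r3,G})\), consistent with the base vector \(x_{r3,G}\). Excluding the best index from the random draws is an assumption based on the general distinctness condition stated earlier, and the function name is hypothetical.

```python
import random


def rand_to_best_mutation(pop, fitness, i, F, rng=random):
    """DE/rand-to-best/1 (minimization): v = x_r3 + F (x_best - x_r3) + F (x_r1 - x_r2)."""
    best = min(range(len(pop)), key=fitness.__getitem__)
    r1, r2, r3 = rng.sample([k for k in range(len(pop)) if k not in (i, best)], 3)
    return [pop[r3][j] + F * (pop[best][j] - pop[r3][j]) + F * (pop[r1][j] - pop[r2][j])
            for j in range(len(pop[i]))]


pop = [[9.0], [0.0], [4.0], [4.0], [4.0]]
fitness = [x[0] ** 2 for x in pop]  # the best individual is index 1
```

With F = 1 and identical random vectors, the base vector is moved all the way to the best vector, which shows the attraction effect in isolation.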

-

DE/current-to-pbest/1

In the self-adaptive differential evolution with fast and reliable convergence performance (JADE) algorithm [81], the authors introduced Eq. 12 as a generalized version of DE/current-to-best/1 to diversify the population and improve convergence performance. Instead of the single best solution used in DE/current-to-best/1, a vector randomly chosen from the top-ranked \(100p\%\) individuals of the current population is used, so the search is guided toward several good solutions rather than a single point. The recommended size of this set of good individuals (p) is between 5% and 20% of Np.

$$\begin{aligned} v_{i,G}=x_{i,G} + F (x^p_{best,G}-x_{i,G})+ F (x_{r1,G}-x_{r2,G}) \end{aligned}$$(12)

To further improve diversity when choosing the individuals involved in generating a new solution, the authors proposed a variant that includes an external archive of recently explored solutions that were replaced by their offspring in the selection phase. The operator with the optional archive is shown in Eq. 13:

$$\begin{aligned} v_{i,G}=x_{i,G} + F_i (x^p_{best,G}-x_{i,G})+ F_i (x_{r1,G}-\tilde{x}_{r2,G}) \end{aligned}$$(13)

where \(\tilde{x}_{r2,G}\) is an individual randomly picked from the union of the current population and the archive (\(P \cup A\)).

This mutation strategy is also employed in the Success-History based Adaptive DE (SHADE) algorithm [82] where Tanabe and Fukunaga proposed a technique for parameter adaptation using a historical memory of successful control parameter configurations to guide the selection of future control parameter values.

Furthermore, a similar approach was proposed in [83], where the authors presented two mutation schemes named DE/e-rand/2 and DE/e-best/2. The letter e indicates that the individuals involved in generating a mutant vector come from the “elite” part of the top-ranked population (usually between 30% and 50%).
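A minimal sketch of DE/current-to-pbest/1 with the optional archive (Eqs. 12 and 13) is shown below, assuming minimization. With an empty archive it reduces to Eq. 12. The function name, the rounding of \(p \cdot Np\) (with at least one candidate), and the use of a single F instead of per-individual \(F_i\) are illustrative assumptions; archive maintenance and parameter adaptation are not shown.

```python
import random


def current_to_pbest_mutation(pop, fitness, archive, i, F, p=0.1, rng=random):
    """DE/current-to-pbest/1 with optional archive (minimization).

    v = x_i + F (x_pbest - x_i) + F (x_r1 - x~_r2), where x_pbest is drawn from
    the top 100p% of the population, x_r1 from the population (r1 != i), and
    x~_r2 from the union of population and archive (distinct from i and r1).
    """
    n = len(pop)
    ranked = sorted(range(n), key=fitness.__getitem__)
    top = ranked[:max(1, round(p * n))]  # at least one candidate
    pbest = rng.choice(top)
    r1 = rng.choice([k for k in range(n) if k != i])
    union = pop + archive  # P ∪ A; the first n entries are the population
    r2 = rng.choice([k for k in range(len(union)) if k not in (i, r1)])
    return [pop[i][j] + F * (pop[pbest][j] - pop[i][j]) + F * (pop[r1][j] - union[r2][j])
            for j in range(len(pop[i]))]


pop = [[0.0]] + [[4.0] for _ in range(9)]
fitness = [x[0] for x in pop]  # index 0 is the only top-10% individual
archive = [[4.0], [4.0], [4.0]]
v = current_to_pbest_mutation(pop, fitness, archive, 1, 0.5, p=0.1, rng=random.Random(3))
```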

-

DE/current-to-gr_best/1

Another variant of DE/current-to-best/1, less greedy and more explorative, is proposed in [84]. This mutation strategy (Eq. 14) replaces \(x_{best,G}\) in Eq. 10 with \(x_{gr\_best,G}\), the best vector of a dynamic group of q vectors randomly selected from the current population. This feature helps prevent premature convergence at local optima, since the target solutions are not always attracted to the same best position of the entire population.

$$\begin{aligned} v_{i,G}=x_{i,G} + F (x_{gr\_best,G}-x_{i,G})+ F (x_{r1,G}-x_{r2,G}) \end{aligned}$$(14)

The authors found that a group size of 15% of Np provides good results on most tested benchmarks. DE/current-to-pbest/1 and DE/current-to-gr_best/1 may seem identical, but the former draws its guiding vector from the top-ranked individuals of the entire population, whereas the latter takes the best member of a group whose members are randomly chosen. Consequently, DE/current-to-gr_best/1 has a better chance of escaping from local optima, although its convergence rate can be slower.
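To contrast this with DE/current-to-pbest/1, the following Python sketch implements Eq. 14 under the assumption of minimization; `current_to_gr_best_1` and `q_frac` are hypothetical names, and the default group fraction of 0.15 simply mirrors the 15% setting reported above.

```python
import random

def current_to_gr_best_1(pop, fitness, i, F, q_frac=0.15):
    """DE/current-to-gr_best/1 (Eq. 14), assuming minimization and Np >= 3.

    The guiding vector is the best member of a random group of q individuals,
    not the best of the whole population.
    """
    np_ = len(pop)
    q = max(1, round(q_frac * np_))
    group = random.sample(range(np_), q)                 # dynamic random group
    gr_best = min(group, key=lambda k: fitness[k])       # best of the group only
    r1, r2 = random.sample([k for k in range(np_) if k != i], 2)
    return [xi + F * (g - xi) + F * (a - b)
            for xi, g, a, b in zip(pop[i], pop[gr_best], pop[r1], pop[r2])]
```

The only change with respect to the pbest sketch is how the guiding vector is chosen: the group is random, so its best member is often not a globally top-ranked individual.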

-

DE/rand-to-best&current/1

In [85], the authors claimed that including information from both the current individual and the best solution improves the exploration and exploitation capabilities of DE. This information is combined as shown in the following equation:

$$\begin{aligned} v_{i,G}=x_{r3,G} + F_\alpha (x_{best,G}-x_{r2,G})+ F_\beta (x_{i,G}-x_{r1,G}) \end{aligned}$$(15)

In Eq. 15, two different scaling factors, \(F_\alpha\) and \(F_\beta\), control the contributions of the best and the current vectors, respectively, which achieves an effect similar to combining two strategies (DE/rand-to-best and DE/rand-to-current) in a single one.

-

Triangular mutation

A. Mohamed [86] introduced this mutation to enhance the global exploration and local exploitation abilities and to improve the algorithm’s convergence rate. In this scheme, the convex combination vector \(\bar{x}_{c,G}\) replaces the random base vector \(x_{r3,G}\) of Eq. 5, and the two vectors that form the difference vector are replaced by the best and the worst of the three randomly chosen vectors. The triangular mutation is defined in Eq. 16.

$$\begin{aligned} v_{i,G}=\bar{x}_{c,G} + 2F (x_{best,G}-x_{worst,G}) \end{aligned}$$(16)

In Eq. 16, the convex combination vector \(\bar{x}_{c,G}\) of the triangle is a weighted sum of the three randomly selected vectors in which the best vector has the highest contribution; it is defined as follows:

$$\begin{aligned} \bar{x}_{c,G} = w_1 \cdot x_{rbest,G} + w_2 \cdot x_{rbetter,G} + w_3 \cdot x_{rworst,G} \end{aligned}$$(17)

where the real weights are given by \(w_i = p_i /\sum _{k=1}^{3} p_k\), \(i = 1, 2, 3\), with \(p_1=1\), \(p_2=rand(0.75,1)\), and \(p_3=rand(0.5,p_2)\). For each mutated vector, this process exploits the neighborhood of \(\bar{x}_{c,G}\) along the direction \((x_{best,G}-x_{worst,G})\). It therefore focuses on exploiting sub-regions of the search space, which strengthens the local search tendency and accelerates the convergence of the proposed algorithm.
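The following Python sketch follows Eqs. 16 and 17 as written above, including the \(2F\) factor, and assumes minimization; `triangular_mutation` is a hypothetical name. The three vectors are drawn at random, sorted into best, better, and worst, and combined with the normalized weights before the best-minus-worst perturbation is added.

```python
import random

def triangular_mutation(pop, fitness, F):
    """Triangular mutation (Eqs. 16-17), assuming minimization and Np >= 3."""
    idx = random.sample(range(len(pop)), 3)
    idx.sort(key=lambda k: fitness[k])                  # best, better, worst of the three
    x_best, x_better, x_worst = (pop[k] for k in idx)
    p1, p2 = 1.0, random.uniform(0.75, 1.0)
    p3 = random.uniform(0.5, p2)
    total = p1 + p2 + p3
    w1, w2, w3 = p1 / total, p2 / total, p3 / total     # w1 >= w2 >= w3
    x_c = [w1 * a + w2 * b + w3 * c for a, b, c in zip(x_best, x_better, x_worst)]
    return [c + 2 * F * (b - w) for c, b, w in zip(x_c, x_best, x_worst)]
```

Because \(p_1 \ge p_2 \ge p_3\) by construction, the convex combination always leans toward the best of the three sampled vectors.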

-

Trigonometric mutation

In this approach [87], the base vector is neither the best vector, the current one, nor a randomly chosen vector, but the center point of the hypergeometric triangle formed by three randomly chosen vectors. The perturbation imposed on this base vector is a sum of three weighted vector differentials, as shown in Eq. 18.

$$\begin{aligned} v_{i,G}=&\,(x_{r1,G} + x_{r2,G}+ x_{r3,G})/3 + (p_2-p_1)(x_{r1,G} - x_{r2,G})\\ &+ (p_3-p_2)(x_{r2,G} - x_{r3,G})+(p_1-p_3)(x_{r3,G} - x_{r1,G}) \end{aligned}$$(18)

where:

$$\begin{aligned} \begin{array}{l} p_1=\frac{|f(x_{r1,G})|}{|f(x_{r1,G})|+ |f(x_{r2,G})|+|f(x_{r3,G})|}\\ \\ p_2=\frac{|f(x_{r2,G})|}{|f(x_{r1,G})|+|f(x_{r2,G})|+|f(x_{r3,G})|}\\ \\ p_3=\frac{|f(x_{r3,G})|}{|f(x_{r1,G})|+|f(x_{r2,G})|+|f(x_{r3,G})|} \end{array} \end{aligned}$$(19)

These weights scale the contribution of each differential vector to the perturbation applied to the base vector. Expanding Eq. 18 shows that \(x_{rk,G}\) enters the mutant vector with coefficient \(4/3-3p_k\) (the three coefficients sum to 1), so, for positive objective values under minimization, the individual with the smallest \(|f|\) receives the largest weight. The mutant vector is therefore strongly biased toward the best of the three individuals, and this mutation can be considered a greedy local search operator.
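A minimal Python sketch of Eqs. 18 and 19 can be used to check this bias numerically. The function name is hypothetical, vectors are plain float lists, and the sum of \(|f|\) over the three vectors is assumed to be non-zero.

```python
def trigonometric_mutation(x1, x2, x3, f1, f2, f3):
    """Trigonometric mutation (Eq. 18) with the weights of Eq. 19.

    x1, x2, x3: the three randomly chosen vectors; f1, f2, f3: their objective values.
    """
    total = abs(f1) + abs(f2) + abs(f3)                 # assumed non-zero
    p1, p2, p3 = abs(f1) / total, abs(f2) / total, abs(f3) / total
    return [
        (a + b + c) / 3.0                                # center of the triangle
        + (p2 - p1) * (a - b)
        + (p3 - p2) * (b - c)
        + (p1 - p3) * (c - a)
        for a, b, c in zip(x1, x2, x3)
    ]

# 1-D example: x_r1 = 0 has the smallest |f|, i.e., it is the best of the three.
mutant = trigonometric_mutation([0.0], [1.0], [2.0], 1.0, 2.0, 3.0)
```

For this example, the weights are \(p = (1/6, 2/6, 3/6)\), and the mutant evaluates to 0 up to floating-point rounding: the perturbation moves the center of the triangle all the way back onto the best vector.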

-

Historical population-based mutation

Meng and Yang [88] developed this strategy (Eq. 20) so that the mutant vector contains information not only from the current population but also from a historical population, which reflects the landscape of the target problem and the knowledge extracted over past generations.

$$\begin{aligned} v_{i,G}=x_{i,G} + F (x^p_{best,G}-x_{i,G})+ F (x_{r1,G}-\widehat{x}_{r2,G}) \end{aligned}$$(20)

Here, \(x^p_{best,G}\) is randomly selected from the top \(p\%\) individuals of the current population, and \(\widehat{x}_{r2,G}\) is a randomly selected vector from the union of the current population and the historical individuals stored in the external archive.

-

Reflection-based mutation

The authors of [89] based their strategy on the Nelder-Mead method, a classical direct-search algorithm for unconstrained nonlinear optimization that constructs a polyhedron (known as a simplex) with \(n+1\) vertices for an n-dimensional problem and approaches an approximate optimal solution through reflection, expansion, contraction, and shrink operations on it. First, four individuals are randomly selected from the current population and sorted from best to worst according to their objective function values, \(f(x_{r1,G})< f(x_{r2,G})< f(x_{r3,G})< f(x_{r4,G})\). Then, the mutant vector is generated according to Eq. 21:

$$\begin{aligned} v_{i,G}=x_{o,G} + F (x_{r1,G}-x_{r4,G}) \end{aligned}$$(21)

where:

$$\begin{aligned} x_{o,G} = w_1 \cdot x_{r1,G} + w_2 \cdot x_{r2,G} + w_3 \cdot x_{r3,G} \end{aligned}$$(22)

and the respective weights of \(x_{r1,G}\), \(x_{r2,G}\), and \(x_{r3,G}\) are defined as follows:

$$\begin{aligned} \begin{array}{l} w_1=\frac{f(x_{r1,G})}{f(x_{r1,G})+f(x_{r2,G})+f(x_{r3,G})}\\ w_2=\frac{f(x_{r2,G})}{f(x_{r1,G})+f(x_{r2,G})+f(x_{r3,G})}\\ w_3=\frac{f(x_{r3,G})}{f(x_{r1,G})+f(x_{r2,G})+f(x_{r3,G})} \end{array} \end{aligned}$$(23)

Although this mutation balances exploration and exploitation better than other basic mutation strategies, its tendency toward the best individuals still makes it susceptible to premature convergence on complex multimodal optimization problems. Thus, the authors combined it with DE/rand/1 and DE/current-to-rand/1.
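The three steps of the reflection-based mutation (sort four random individuals, form \(x_{o,G}\) with the weights of Eq. 23, add the best-minus-worst difference) can be sketched in Python as follows. This assumes minimization and strictly positive objective values so that the weights are well defined; the function name is hypothetical.

```python
import random

def reflection_mutation(pop, fitness, F):
    """Reflection-based mutation (Eqs. 21-23), assuming Np >= 4 and positive fitness."""
    idx = sorted(random.sample(range(len(pop)), 4), key=lambda k: fitness[k])
    r1, r2, r3, r4 = idx                                  # f(x_r1) <= ... <= f(x_r4)
    f1, f2, f3 = fitness[r1], fitness[r2], fitness[r3]
    total = f1 + f2 + f3
    w1, w2, w3 = f1 / total, f2 / total, f3 / total       # Eq. 23
    x_o = [w1 * a + w2 * b + w3 * c                       # Eq. 22
           for a, b, c in zip(pop[r1], pop[r2], pop[r3])]
    return [o + F * (a - d) for o, a, d in zip(x_o, pop[r1], pop[r4])]  # Eq. 21
```

For example, with the population `[[0.0], [3.0], [6.0], [9.0]]`, objective values `[1, 2, 3, 4]`, and `F = 0.5`, all four individuals are drawn, \(x_{o,G} = 4\), and the mutant is approximately `[-0.5]`.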

-

Hemostasis-based mutation

Prabha and Yadav [90] took inspiration from hemostasis, the biological process that regulates blood flow and stops bleeding when a vessel is injured. Analogously, the operator in Eq. 24 is designed to keep the search from getting stuck in a local optimum. To this end, the procedure introduces the hemostasis vectors (\(x_{HeV1}\) and \(x_{HeV2}\)), drawn from one pair of “good” vectors selected randomly from the best half of the sorted population and one pair of “bad” vectors selected randomly from the worst half. Two trial vectors are then generated and compared with the current vector of their respective population.

$$\begin{aligned} v_{i,G}=x_{best,G} + F (x_{HeV1,G}-x_{HeV2,G}) \end{aligned}$$(24)

-

Union differential evolution mutation

Sharifi-Noghabi et al. [91] presented a mutation strategy that can be seen as a modification of DE/rand/2, in which the vectors involved in generating a mutant vector are selected by combining design-space and fitness-space criteria. The mutant vector is generated as follows:

$$\begin{aligned} v_{i,G}=x_{FS1,G} + F (x_{FS2,G}-x_{r1,G})+ F (x_{DS,G}-x_{r2,G}) \end{aligned}$$(25)

where \(x_{r1,G}\) and \(x_{r2,G}\) are randomly chosen from the population, and \(x_{FS1,G}\) and \(x_{FS2,G}\) are the parent vectors selected by the fitness-space criterion, which are obtained as follows:

-

Sort the population from best to worst fitness

-

Calculate the selection probability of each individual according to its rank i in the sorted population:

$$\begin{aligned} P_i=\frac{Np-i}{Np} \end{aligned}$$(26)

-

Select the two individuals using the roulette wheel.

\(x_{DS,G}\) is the vector selected by the design-space criterion; it is obtained through the following steps:

-

Construct the distance matrix (DM) based on the Euclidean distance between all the individuals in the population

$$\begin{aligned} DM=\left( \begin{array}{ccc} \left\| x_1-x_1\right\| & \cdots & \left\| x_1-x_{NP}\right\| \\ \vdots & \ddots & \vdots \\ \left\| x_{NP}-x_1\right\| & \cdots & \left\| x_{NP}-x_{NP}\right\| \end{array}\right) \end{aligned}$$(27)

-

Calculate the probability matrix PM based on DM

$$\begin{aligned} PM(i,j)=1-\frac{DM(i, j)}{\sum _{\forall k} DM(i, k)} \end{aligned}$$(28)

-

Finally, roulette wheel selection without replacement is carried out on every row of PM matrix (for each member of the population).

Given how the \(x_{FSi}\) vectors are obtained, this mutation resembles DE/best/2, since vectors with better fitness are more likely to be chosen. However, because of Eq. 28, it can also be considered a local search operator: it exploits the region around each member by assigning a higher selection probability to closer individuals.
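The two selection criteria and Eq. 25 can be sketched in Python as below, assuming minimization and at least three individuals. Two details are assumptions not stated in the description above and are labeled in the code: the two fitness-space parents are drawn without replacement, and individual i is excluded from its own row of PM (otherwise \(PM(i,i)=1\) would make it the most likely pick). All function names are hypothetical.

```python
import math
import random

def fitness_space_pair(pop, fitness):
    """Pick x_FS1, x_FS2 by roulette wheel over ranks (Eq. 26)."""
    np_ = len(pop)  # assumes np_ >= 3
    order = sorted(range(np_), key=lambda k: fitness[k])   # best first (minimization)
    # Rank i = 1..Np gets weight (Np - i) / Np; the worst individual gets weight 0.
    weights = [(np_ - rank) / np_ for rank in range(1, np_ + 1)]
    first = random.choices(range(np_), weights=weights, k=1)[0]
    rest = [r for r in range(np_) if r != first]           # assumption: no replacement
    second = random.choices(rest, weights=[weights[r] for r in rest], k=1)[0]
    return pop[order[first]], pop[order[second]]

def design_space_vector(pop, i):
    """Pick x_DS from row i of PM (Eqs. 27-28); assumption: i itself is excluded."""
    row = [math.dist(pop[i], x) for x in pop]              # row i of DM
    total = sum(row)
    others = [j for j in range(len(pop)) if j != i]
    if total == 0.0:                                       # all individuals coincide
        return pop[random.choice(others)]
    weights = [1.0 - row[j] / total for j in others]       # PM(i, j)
    return pop[random.choices(others, weights=weights, k=1)[0]]

def union_mutation(pop, fitness, i, F):
    """Eq. 25: v = x_FS1 + F (x_FS2 - x_r1) + F (x_DS - x_r2)."""
    x_fs1, x_fs2 = fitness_space_pair(pop, fitness)
    x_ds = design_space_vector(pop, i)
    r1, r2 = random.sample([k for k in range(len(pop)) if k != i], 2)
    return [a + F * (b - c) + F * (d - e)
            for a, b, c, d, e in zip(x_fs1, x_fs2, pop[r1], x_ds, pop[r2])]
```

`random.choices` performs the roulette wheel directly from the (unnormalized) weights, and `math.dist` (Python 3.8+) computes the Euclidean distances of Eq. 27 one row at a time instead of building the full matrix.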

-

Gaussian-based mutation

H. Wang et al. [92] presented an almost parameter-free DE algorithm that avoids the need to determine optimal control parameter values. Its mutation strategy (Eq. 29) generates a new vector by sampling a Gaussian distribution built from the current position and the best solution.

$$\begin{aligned} v_{i,G}=N (\mu ,\sigma ) \end{aligned}$$(29)

where \(\mu = (x_{best,G}+x_{i,G})/2\) and \(\sigma = |x_{best,G}-x_{i,G}|\), applied component-wise. In the early generations, the search focuses on exploration because the standard deviation is large (individuals are initially far apart). As the generations progress and the population converges, the deviation becomes smaller, and the search shifts toward exploitation.
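Eq. 29 is short enough to express in a few lines of Python. The sketch below applies \(\mu\) and \(\sigma\) component-wise using the standard library's `random.gauss(mu, sigma)`; the function name is hypothetical.

```python
import random

def gaussian_mutation(x_i, x_best):
    """Gaussian-based mutation (Eq. 29), applied component-wise."""
    return [random.gauss((b + a) / 2.0, abs(b - a))   # mu = midpoint, sigma = |x_best - x_i|
            for a, b in zip(x_i, x_best)]
```

The step size is set entirely by the distance to the best vector, which is why no scale factor is needed: when \(x_{i,G}\) coincides with \(x_{best,G}\) in a component, \(\sigma = 0\) and that component is returned unchanged.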

-

Cauchy-based mutation

Although this operator was originally introduced by A. Stacey et al. [93] to improve the Particle Swarm Optimization (PSO) algorithm, M. Ali and M. Pant [94] implemented it (with some variations) in DE as a mechanism that helps individuals escape from a local basin by allowing them to jump to a new region. Each element of the vector is perturbed with a probability of 90% by a random number drawn from a Cauchy distribution, which resembles the Gaussian distribution but has heavier tails, so large values are generated more often. This operator is shown in Eq. 30:

$$\begin{aligned} u_{j,i,G+1}=\left\{ \begin{array}{cl} x_{j,best,G}+\textrm{C}(\gamma , 0) & \text{if } rand(0,1) \le 0.9 \\ x_{j,i,G} & \text{otherwise} \end{array}\right. \end{aligned}$$(30)

where j indexes the components of the vector (\(j=1,2,\ldots,D\)) at generation G, and \(\textrm{C}(\gamma , 0)\) denotes a random number drawn from a Cauchy distribution with scale parameter \(\gamma\), centered at the origin.
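Python's standard `random` module has no Cauchy sampler, so the sketch of Eq. 30 below uses inverse-transform sampling, \(\gamma \tan(\pi(U-1/2))\) with \(U\) uniform. The function names and the defaults `gamma=1.0` and `prob=0.9` are illustrative assumptions; the strict `<` comparison differs from the \(\le\) of Eq. 30 only on a probability-zero event.

```python
import math
import random

def cauchy_sample(gamma):
    """Cauchy(0, gamma) variate via the inverse CDF."""
    return gamma * math.tan(math.pi * (random.random() - 0.5))

def cauchy_mutation(x_i, x_best, gamma=1.0, prob=0.9):
    """Cauchy-based mutation (Eq. 30): perturb the best vector component-wise."""
    return [xb + cauchy_sample(gamma) if random.random() < prob else xi
            for xi, xb in zip(x_i, x_best)]
```

The heavy tails mean that occasional very large jumps occur, which is exactly what lets an individual leave its current basin.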

Table 3 presents an overview of all the aforementioned mutation strategies, their classification and the type of search they perform.

3.3 Crossover in DE

The basic algorithmic framework of the DE algorithm consists of four phases: initialization, mutation, crossover, and selection. This section centers on the crossover phase. Since its introduction in 1995, the crossover operator has been used to enhance the potential diversity of the population: the mutant vector and the current vector exchange components probabilistically to produce a trial vector (also called offspring), so that the current solution inherits features from the donor (mutant) vector. This combination is controlled by a parameter called the Crossover Rate (CR), which takes values in [0, 1]. The trial vector then competes with its corresponding vector of the current population on the objective function, and the better of the two is transferred to the next generation. Two commonly used crossover operators are binomial (uniform) crossover and exponential (modular two-point) crossover [2, 26, 30]. The principal difference between them is that in binomial crossover the components inherited from the mutant vector are selected at random, whereas in exponential crossover they form one or two compact subsequences. Comparative studies of crossover in DE are presented in [95, 96] and [97]. Other crossover variants are analyzed in this section. A crossover rate repair technique for adaptive DE algorithms was proposed in [98]. The Parent-Centric Crossover approach, in which multiple parents recombine to produce a child, was proposed in [99] and shown to work better regarding convergence rate and robustness. The epistatic arithmetic crossover was proposed in [100]. The preferential crossover rule [101] was proposed to reduce the drawbacks of scaling parameters. A Self-Adaptive Differential Evolution with adaptive crossover strategies was proposed in [102]. An orthogonal crossover scheme applicable to different DE variants was introduced in [103]. A locality-based crossover scheme for dynamic optimization problems was introduced in [104]. Eigenvector-based crossover was proposed in [105], which demonstrated that the scheme can be applied to any crossover strategy. In [106], the crossover rate is modified with a linear increasing strategy. In Self-Adaptive Differential Evolution (SaDE) [107], both the trial vector generation strategies and their control parameter values are self-adapted based on previous experience. This subsection analyzes and compares the impact of crossover operators and their variants on the DE algorithm.

-

Exponential crossover

The exponential crossover was proposed in the original work of Storn and Price [1], where it was introduced to increase the diversity of the perturbed parameter vectors described in Sect. 3.2. It constructs a new trial vector from the current and mutant vectors, and it is similar to the one- or two-point crossover used in GAs (hence the name modular two-point crossover). For the exponential crossover, the trial vector is generated as follows:

$$\begin{aligned} u^{j}_{i,G}= {\left\{ \begin{array}{ll} v^{j}_{i,G} & \text{for } j=\langle n\rangle _{D},\ \langle n+1\rangle _{D},\ldots , \langle n+L-1\rangle _{D} \\ x^{j}_{i,G} & \text{for all other } j \in [1,D] \end{array}\right. } \end{aligned}$$(31)

where the angular brackets \(\langle \cdot \rangle _{D}\) denote the modulo function with modulus D, n is a randomly chosen starting index, and the integer L, drawn from [1, D], is the number of consecutive components taken from the mutant vector; in [1], L is obtained so that \(\Pr(L \ge \nu) = CR^{\nu-1}\). Fig. 5 shows an example of this mechanism for seven-dimensional vectors.
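A minimal Python sketch of Eq. 31 with 0-based indices follows; the function name is hypothetical. The first component at the random start index is always copied from the mutant vector, and each further component is copied with probability CR, which gives \(\Pr(L \ge \nu) = CR^{\nu-1}\).

```python
import random

def exponential_crossover(x, v, CR):
    """Exponential crossover (Eq. 31) on float lists, 0-based indices."""
    D = len(x)
    u = list(x)
    n = random.randrange(D)                 # random starting index
    L = 0
    while True:                             # copy a circular block of L components from v
        u[(n + L) % D] = v[(n + L) % D]
        L += 1
        # continue with probability CR, so Pr(L >= k) = CR**(k - 1)
        if L >= D or random.random() >= CR:
            break
    return u
```

Because the index wraps modulo D, the copied block can straddle the end of the vector, which is why the inherited components form one or two compact subsequences.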

-

Binomial crossover

DE is a simple real-coded evolutionary algorithm that, like GA, uses the concept of fitness in the same sense as genetic algorithms. The most significant difference between them is that in DE, the donor (mutant) vectors are generated through mutation before crossover is performed, whereas a GA usually selects parents from the current population, performs crossover, and then mutates the offspring. In DE, the role of the crossover operator is to enhance the diversity of the population, and it is executed after the donor vector has been generated through mutation. Most research on DE uses the binomial crossover (also called uniform crossover), which is applied to each of the D variables: a component is taken from the mutant vector whenever a uniform random number in [0, 1] is less than or equal to CR (the crossover rate). The trial vector is thus created as follows:

$$\begin{aligned} u^{j}_{i,G}= {\left\{ \begin{array}{ll} v^{j}_{i,G} & \text{if } j=j_{rand} \text{ or } rand(0,1) \le CR \\ x^{j}_{i,G} & \text{otherwise} \end{array}\right. } \end{aligned}$$(32)

where rand(0, 1) is a uniformly distributed random number drawn anew for each j, and \(j_{rand} \in \{1,2,\ldots ,D\}\) is a randomly chosen index that guarantees that at least one component of the trial vector comes from the mutant vector.
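For comparison with the exponential variant, Eq. 32 can be sketched in Python as a single list comprehension (0-based indices, hypothetical function name):

```python
import random

def binomial_crossover(x, v, CR):
    """Binomial crossover (Eq. 32) on float lists, 0-based indices."""
    D = len(x)
    j_rand = random.randrange(D)            # forces at least one mutant component
    return [v[j] if (j == j_rand or random.random() <= CR) else x[j]
            for j in range(D)]
```

Each component is decided independently, so the inherited components are scattered over the vector, and the expected number taken from the mutant vector is roughly \(1 + CR(D-1)\).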

-

Crossover rate repair technique

In this scheme [98], the crossover rate of an adaptive DE is repaired using the binary string of each trial vector, that is, the actual fraction of components taken from the mutant vector: the mean value of the binary string replaces the original crossover rate. The rationale is that the trial vector is directly determined by its binary string, not by the crossover rate itself. This work mainly focuses on improving adaptive DE algorithms through this repair technique. The method is as follows. Let \(b_{i}\) be a binary string generated for each target vector \(x_{i}\):

$$\begin{aligned} b_{i,j}={\left\{ \begin{array}{ll} 1, & \text{if } rand(0,1) \le CR \text{ or } j=j_{rand} \\ 0, & \text{otherwise} \end{array}\right. } \end{aligned}$$(33)

Consequently, the binomial crossover of DE can be reformulated as:

$$\begin{aligned} u_{i,j} = b_{i,j} \cdot v_{i,j} + (1 - b_{i,j}) \cdot x_{i,j} \end{aligned}$$(34)

where \(i=1,\ldots ,NP\) and \(j=1,\ldots ,D\). The binary string \(b_{i}\) is only stochastically related to CR, whereas the trial vector \(u_{i}\) is directly determined by \(b_{i}\) and not by CR. Therefore, the authors propose updating CR with the repaired value \(CR^{'}\) of the successful individuals, calculated as:

$$\begin{aligned} CR^{'}_{i} = \frac{\sum _{j=1}^{D} b_{i,j}}{D} \end{aligned}$$(35)

-

Parent-centric crossover

The DE modification proposed in [99], called DEPCX, uses a parent-centric approach to balance the convergence rate and the robustness of the algorithm. Parent-Centric Crossover (PCX) was first introduced in [108, 109] as a conventional crossover operator, whereas in DEPCX it is treated as a mutation operator and applied to each candidate solution vector, which yields better results in terms of convergence rate and robustness.

-

Epistatic arithmetic crossover

Unlike the ordinary arithmetic crossover, the epistatic arithmetic crossover operator [100] considers the impact of epistatic genes in the context of evolutionary computation by expressing epistasis as the graph product of two linear graphs represented by the candidate solutions involved in the crossover. This operator was embedded into a DE variant called eXEDE.

-

Preferential crossover

A crossover rule called the preferential crossover rule is proposed in [101], and the resulting DE algorithm is called DEPC. This algorithm uses two population sets, \(S_{1}\) and \(S_{2}\), each containing N individuals (the population size). \(S_{2}\), called the auxiliary population set, records the trial points that the original DE process would discard.

-

Crossover strategies adaptation

A self-adaptive Differential Evolution algorithm with crossover strategies adaptation (CSA-SADE) is proposed in [102]. In this proposal, each individual has its own mutation strategy, crossover strategy, and control parameters, all of which are adaptively adjusted throughout the search process. The candidate crossover strategies are binomial and exponential crossover. For a detailed explanation of the methodology, please refer to [102].

-

Orthogonal crossover

Orthogonal crossover (OX) operators are based on orthogonal design [110]. These operators can perform a systematic and rational search in the region defined by the parent solutions. The authors of [111] incorporated a quantization technique into OX, obtaining a version called QOX for numerical optimization. In [103], a framework called OXDE is suggested that combines DE/rand/1/bin with this QOX operator.

-

Locality-based crossover

The authors of [104] propose an algorithm called Adaptive Differential Evolution with Locality-based Crossover (ADE-LbX). Its mutation operation is guided by a locality-based scheme that preserves the features of the nearest individuals, measured by Euclidean distance, around a potential solution. One of the most prominent features of this implementation is the L-best crossover technique. Instead of the crossover probability parameter Cr of conventional crossover techniques, L-best crossover uses a blending rate, which determines the rate at which traits interbreed to produce successful offspring.

-

Eigenvector-based crossover

Eigenvector-based crossover, a rotationally invariant operator, was proposed in [105] to handle optimization problems with rotated fitness landscapes more effectively. First, a rotated coordinate system is constructed from the eigenvectors of the population’s covariance matrix. Offspring are then generated from the selected parents in either the standard or the rotated coordinate system, chosen at random, which prevents a rapid loss of population diversity. Since the eigenvectors form an orthonormal basis, the crossover operator can exchange information between the target and donor vectors in this eigenvector basis rather than in the natural basis. The eigenvector-based crossover operator is fully explained in [105].
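The idea of crossing over in the eigenvector basis can be sketched with NumPy as follows. This is an illustrative sketch, not the exact operator of [105]: it uses a binomial mask, and `p_rotate`, the hypothetical probability of using the rotated basis, stands in for the random choice between coordinate systems described above. `np.linalg.eigh` returns orthonormal eigenvectors of the symmetric covariance matrix, so projecting with \(Q^\top\) and mapping back with \(Q\) is lossless.

```python
import numpy as np

def eigenvector_crossover(pop, x, v, CR, p_rotate=0.5, rng=None):
    """Binomial crossover in the standard basis or in the population's eigenbasis.

    pop: (Np, D) array of the current population (D >= 2); x: target; v: donor.
    p_rotate: hypothetical probability of crossing over in the rotated basis.
    """
    rng = np.random.default_rng() if rng is None else rng
    D = x.shape[0]
    mask = rng.random(D) <= CR                  # binomial mask
    mask[rng.integers(D)] = True                # j_rand: at least one donor component
    if rng.random() < p_rotate:
        cov = np.cov(pop, rowvar=False)         # D x D covariance matrix
        _, Q = np.linalg.eigh(cov)              # columns of Q: orthonormal eigenvectors
        x_e, v_e = Q.T @ x, Q.T @ v             # coordinates in the eigenvector basis
        return Q @ np.where(mask, v_e, x_e)     # cross over, then rotate back
    return np.where(mask, v, x)                 # ordinary binomial crossover
```

When the fitness landscape is rotated, exchanging coordinates along the principal axes of the population tends to preserve correlated variable combinations that a coordinate-wise exchange would break.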

-

Hybrid linkage crossover

The hybrid linkage crossover (HLX) was proposed in [112] to exploit problem-specific linkage information between pairs of variables and guide the search process more effectively. An improved differential grouping technique is first incorporated into HLX to adaptively extract groups of tightly interacting variables, known as building blocks (BBs). Two group-wise crossover operators, GbinX and GorthX, are then used to guide the crossover process without disrupting the tight linkage structures of the BBs. The HLX scheme can be easily adapted to existing DE variants to achieve more promising optimization performance.

-

Superior-inferior and superior-superior crossover

Superior-inferior (SI) crossover and superior-superior (SS) crossover strategies were proposed in [113] to improve the diversity of the population in DE. The SI scheme is triggered to enhance the exploration strength of the algorithm when the population diversity is too low, whereas the SS scheme promotes exploitation when the population is diversified. Both schemes are compatible with the typical binomial and exponential crossover operators and can be incorporated into various DE frameworks. Which of the two schemes is applied is decided by the population diversity measure given in [113].

-

Multiple exponential crossover

Multiple exponential crossover [114] forms a new solution by combining multiple segments of the target and mutant vectors. This semi-consecutive crossover operator was shown not only to retain the strengths of the conventional binomial and exponential crossover operators but also to handle subsets of tightly interacting variables better.

-

Rotating crossover operator

A DE variant with a rotating crossover operator (RCO) and a multi-angle searching strategy was proposed in [115] to reduce the likelihood of generating inferior offspring solutions by tactically expanding the search space. Unlike the conventional binomial crossover, RCO can generate trial vectors diversely within circular regions around the donor and target vectors, using a self-adaptive crossover parameter and rotation control vectors that follow a Lévy distribution. Specifically, a rotation control vector and a binary parameter determine whether a trial vector is generated by rotating around the target vector or the donor (mutant) vector, and at which angle and distance, so the direction and angle of rotation can steer the search toward flexible regions and diverse offspring.

-

Optional blending crossover

A switched parameter DE with success-based mutation and modified BLX crossover (SWDE_Success_mBLX) is proposed in [116] to solve scalable optimization problems without sacrificing the simplicity of the algorithmic framework. First, a simple control parameter selection strategy randomly and uniformly switches the mutation scale factor and the crossover rate within their feasible ranges. A success-based switching strategy then determines the mutation scheme of each solution based on its search performance history. The crossover of each target-donor pair is performed with either binomial crossover or a modified BLX (mBLX) crossover, chosen via a probability-based selection scheme. The mBLX crossover searches the region between and beyond the dimensional bounds established by each target-donor pair to balance exploration and exploitation. It follows a philosophy similar to that of BLX-\(\alpha\)-\(\beta\) [117, 118] but avoids the extra fitness evaluation.
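The mBLX operator is not specified in enough detail here to reproduce, so the Python sketch below shows the classic BLX-\(\alpha\) crossover instead, a simpler relative of the BLX-\(\alpha\)-\(\beta\) operator mentioned above. Each component is sampled uniformly from the interval spanned by the two parents, extended by \(\alpha\) times its width on both sides, which is what "between and beyond the dimensional bounds" means in practice. The default `alpha=0.5` is a common textbook choice, not a value from [116].

```python
import random

def blx_alpha(x, v, alpha=0.5):
    """Classic BLX-alpha crossover between a target x and a donor v (float lists)."""
    u = []
    for a, b in zip(x, v):
        lo, hi = min(a, b), max(a, b)
        span = hi - lo
        # alpha = 0 searches only between the parents; alpha > 0 also searches beyond them
        u.append(random.uniform(lo - alpha * span, hi + alpha * span))
    return u
```

Unlike binomial crossover, which only copies existing coordinate values, this operator creates new values, so it can reach points that neither parent contains.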

-

Linear increasing crossover strategy

Zuo Dexuan and Gao Liqun designed an Efficient Improved Differential Evolution (EIDE) that modifies the scale factor and the crossover rate [106]. EIDE differs from DE in two principal aspects: (1) the scale factor F is a random number drawn from a uniform distribution on [0, 0.6], that is, \(F\sim U(0, 0.6)\); and (2) the crossover rate CR follows a linear increasing strategy:

$$\begin{aligned} CR = CR_{min} + (CR_{max} - CR_{min}) \times k/K \end{aligned}$$(36)

where \(CR_{min}\) and \(CR_{max}\) are the minimal and maximal crossover rates, respectively, k is the current iteration number, and K is the maximal number of iterations, so that CR grows linearly from \(CR_{min}\) at \(k=0\) to \(CR_{max}\) at \(k=K\).
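The two EIDE parameter rules can be sketched in Python as below. The schedule is written with the difference \((CR_{max} - CR_{min})\), so that CR increases linearly from \(CR_{min}\) at \(k = 0\) to \(CR_{max}\) at \(k = K\); the function name and the defaults `cr_min=0.1` and `cr_max=0.9` are placeholders, not values taken from [106].

```python
import random

def eide_parameters(k, K, cr_min=0.1, cr_max=0.9):
    """Return (F, CR) for iteration k of K following the EIDE description.

    cr_min and cr_max are placeholder defaults, not values from [106].
    """
    F = random.uniform(0.0, 0.6)                     # F ~ U(0, 0.6)
    CR = cr_min + (cr_max - cr_min) * k / K          # linear increase (Eq. 36)
    return F, CR
```

A low CR early in the run changes few components per trial vector, which favors a cautious, coordinate-wise search; the growing CR later lets trial vectors inherit more of the mutant vector.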

As in the previous subsections, this subsection ends with a summary of the crossover approaches discussed above. Table 4 presents this information with the same structure as the table in the initialization subsection, reporting each scheme with its reference, advantages, and limitations in three columns.

3.4 Selection in DE

As mentioned before, the selection stage is usually overlooked when a new DE modification is proposed, because it is considered less relevant to the DE process than it is in a GA. However, over the years, several studies have shown that DE can improve significantly when an enhanced selection approach is added [27, 120]. Next, the selection approaches presented in the literature are explained in detail, together with their reported results and the essential parts of their methodologies. It is worth mentioning that DE selection-stage modifications for multi-objective optimization are not considered in this work, because that process is often analyzed as a different field of optimization research and lies outside the scope of this article.

-

Roulette wheel selection-elitist-differential evolution

Ho-Huu et al. [121] presented an elitist selection technique to replace the usual greedy selection. It is based on the elitist selection technique developed in [122], which merges the children and parent populations and selects certain individuals from this combined population to form the next generation. Among other benefits, this allows the algorithm to accelerate its convergence rate.
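To illustrate how merging parents and children differs from DE's one-to-one greedy selection, the following Python sketch performs a plain truncation-style elitist replacement, keeping the Np best of the 2Np merged individuals. This is a generic illustration assuming minimization; the exact rule of [121, 122], which the heading associates with a roulette wheel, may differ, and the function name is hypothetical.

```python
def elitist_selection(parents, parent_fit, offspring, offspring_fit):
    """Keep the len(parents) best of parents + offspring (minimization)."""
    merged = list(zip(parents + offspring, parent_fit + offspring_fit))
    merged.sort(key=lambda pair: pair[1])            # best first; stable for ties
    survivors = merged[:len(parents)]
    return [x for x, _ in survivors], [f for _, f in survivors]

# Parents with fitness [1, 2] and offspring with fitness [0, 3]:
pop, fit = elitist_selection([[10.0], [20.0]], [1.0, 2.0], [[30.0], [40.0]], [0.0, 3.0])
```

Here the survivors are `[[30.0], [10.0]]` with fitness `[0.0, 1.0]`. Greedy selection would instead compare each parent only with its own offspring and keep `[[30.0], [20.0]]`, so the elitist variant retains the two best solutions overall, at some cost in diversity.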

-

Landscape-based adaptive operator selection mechanism for differential evolution

Sallam et al. [123] presented a DE approach with several modifications, including the selection stage. The new algorithm considers both the landscape information of the problem and the performance history of the operators. For the selection stage, a landscape-based adaptive operator selection mechanism was proposed; essentially, it is based on the difference between the information landscape vector of the problem and that of a spherical function used as a reference landscape [124], defined as follows:

$$\begin{aligned} f_{ref}\left( \overrightarrow{x}\right) =\sum _{j=1}^{D}\left( x_{j}-x^{b}_{j}\right) ^{2} \end{aligned}$$(37)

where \(\overrightarrow{x}^b\) is the best individual in the iteration or sample.

-

Improved differential evolution with individual-based selection

Tian et al. [125] presented an Improved Differential Evolution with Individual-based parameter setting and selection strategy (IDEI) [126]. The authors developed a diversity-based selection stage that combines the well-known greedy selection with a new weighted fitness value defined from the fitness values and positions of the target vectors.

-

Subset-to-subset

Guo et al. [127] presented a DE modification to alleviate premature convergence. The authors developed a novel Subset-To-Subset (STS) selection operator, which divides the target and trial populations into subsets and then ranks them. Fig. 6 gives an example with a population of 15 individuals divided into 3 subsets: the left and right panels show the target and trial populations, respectively, and the black circles indicate the first pair of corresponding subsets.

-

Noisy-objective-optimization-problem DE

Rakshit [128] presented an improved DE algorithm for single-objective noisy optimization problems (NOPs). The selection stage is modified to allow competition among individuals in the population by adding a probabilistic crowding-based niching method [129] for locating local optima.

-

\(pbest_{rr}\)-JADE

Yi et al. [130] developed a DE variant based on the well-known JADE algorithm [81]. In this case, the selection stage was modified with a pbest roulette wheel operator that works on the function values of the top vectors chosen in the mutation stage for each individual. Eq. 38 summarizes the model:

$$\begin{aligned} pbest_{i}={\left\{ \begin{array}{ll} pbest_{i} & \text{if } f\left( u_{i,G+1}\right) \le f\left( X_{i,G}\right) \\ elite_{j} & \text{if } f\left( u_{i,G+1}\right) >f\left( X_{i,G}\right) \text{ and } cpro_{j-1}\le r < cpro_{j} \end{array}\right. } \end{aligned}$$(38)

where \(i=1,2,\ldots,NP\) indexes the individuals, \(j=1,2,\ldots,N\) indexes the elite vectors, \(u_{i,G+1}\) and \(X_{i,G}\) are the trial and target vectors, respectively, \(cpro_j\) is the cumulative selection probability of \(elite_j\), and r is a uniform random number in [0, 1].

-

New selection operator

Zeng et al. [131] proposed one of the most recent selection-stage modifications in the literature. In their approach, when DE is in a stage of stagnation, three candidate vectors survive and are chosen for the next generation. These candidate vectors cannot be chosen randomly, since that would not guarantee that the population improves; instead, discarded trial vectors are considered to replace the parent vector.

-

Ensemble differential evolution for a distributed blocking flowshop scheduling problem

Zhao et al. [132] proposed modifications to an Ensemble Differential Evolution (EDE) for the Distributed Blocking Flowshop Scheduling Problem (DBFSP), a scheduling problem from the operations research field [133]. In the selection stage, a biased selection operator replaces the original greedy selection operator, and the new target individual \(X_{i,g+1}\) is defined as follows:

$$\begin{aligned} X_{i,g+1}= {\left\{ \begin{array}{ll} U & \text{if } \omega<0 \text{ or } rand<\max\left\{ \eta -\omega ,0\right\} \\ X & \text{otherwise} \end{array}\right. } \end{aligned}$$(39)

$$\begin{aligned} \omega= \dfrac{f\left( U\right) -f\left( X\right) }{f\left( X\right) } \end{aligned}$$(40)

where \(\omega\) is the relative error of U with respect to X, and \(\eta \in [0,1]\) is the selection scale factor. Thus, a trial individual U that is worse than X by a relative amount \(\omega < \eta\) can still be accepted with probability \(\eta - \omega\).
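Eqs. 39 and 40 translate directly into a short Python function. The sketch assumes minimization and a strictly positive objective value for X (as with a makespan), since Eq. 40 divides by \(f(X)\); the function name is hypothetical, and the solutions themselves can be of any representation, such as permutations.

```python
import random

def biased_selection(x, f_x, u, f_u, eta):
    """Biased selection (Eqs. 39-40); returns the survivor and its objective value."""
    omega = (f_u - f_x) / f_x                        # relative error of U w.r.t. X
    if omega < 0 or random.random() < max(eta - omega, 0.0):
        return u, f_u                                # better trial, or slightly worse one accepted
    return x, f_x
```

With \(\eta = 0\), the operator reduces to the greedy selection that keeps U only when it is strictly better; larger values of \(\eta\) let slightly worse schedules survive, which helps the search leave plateaus.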


DE orthogonal array-based initialization and new selection strategy

Kumar et al. [134] presented a conservative scheme for selecting, from the current and trial populations, the solutions that are processed in the next iteration. Basically, a randomly created neighborhood \(N_{s,i}\) of size ns is defined for each trial solution. This neighborhood is built from trial solutions under the following three conditions: 1. The created trial solution must be better than the current population solution. 2. Each trial solution must comprise 25% of its neighborhood's solutions. 3. This entire selection process is applied only during the first 60% of the optimization process; the classical greedy selection is used for the remaining 40%.


Multi-objective differential evolution with ensemble selection methods

Qu et al. [135] used a Multi-Objective Differential Evolution (MODE) to solve the extended Dynamic Economic Emission Dispatch (DEED) problem [136]. To solve this model, the authors proposed an ensemble of selection methods in the MODE algorithm [137]. This approach merges the newly generated offspring with their parents and sorts the combined population according to one condition: if a uniformly generated random number between 0 and 1 is \(\ge 0.5\), the classical non-dominated sorting technique is used; otherwise, the summation-based sorting method is used for the same purpose.
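The coin-flip ensemble can be sketched in Python as below. This is only an illustration under stated assumptions: all objectives are minimized, the non-dominated sorting is a basic version without the crowding-distance tie-breaking commonly used with it, and the summation-based method is replaced by a hypothetical stand-in that ranks individuals by the sum of their min-max normalized objectives. All function names are illustrative.

```python
import numpy as np


def nondominated_ranks(F):
    """Pareto front index of each row of F (0 = first front), minimization."""
    remaining, ranks, front = set(range(len(F))), np.zeros(len(F), dtype=int), 0
    while remaining:
        # Individuals in `remaining` that no other remaining individual dominates.
        current = {i for i in remaining
                   if not any(np.all(F[j] <= F[i]) and np.any(F[j] < F[i])
                              for j in remaining if j != i)}
        for i in current:
            ranks[i] = front
        remaining -= current
        front += 1
    return ranks


def summation_scores(F):
    """Sum of min-max normalized objectives (assumed form of summation-based sorting)."""
    fmin, fmax = F.min(axis=0), F.max(axis=0)
    span = np.where(fmax > fmin, fmax - fmin, 1.0)  # avoid division by zero
    return ((F - fmin) / span).sum(axis=1)


def ensemble_selection(parent_F, offspring_F, NP, rng):
    """Merge parents and offspring, sort with one of two methods, keep the best NP."""
    F = np.vstack([parent_F, offspring_F])
    if rng.random() >= 0.5:
        order, method = np.argsort(nondominated_ranks(F), kind="stable"), "non-dominated"
    else:
        order, method = np.argsort(summation_scores(F), kind="stable"), "summation"
    return order[:NP], method


rng = np.random.default_rng(1)
parents = np.array([[1.0, 1.0], [3.0, 3.0]])
offspring = np.array([[2.0, 2.0], [4.0, 4.0]])
# Either method keeps rows 0 and 2 of the merged population here.
print(ensemble_selection(parents, offspring, NP=2, rng=rng))
```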

STS selection process described by Guo et al. [127]

Finally, Table 5 summarizes the modified selection methods for Differential Evolution. As for the other operators, Table 5 presents the information in a structured way, with the results, metrics, advantages, and limitations of each proposed algorithm.

4 Discussion

In this section, the four stages of DE are analyzed and discussed to expose the advantages and disadvantages of each method in comparison with the other approaches for the same stage. Likewise, as far as possible, the best and worst variants are described in terms of computational cost, applied experimentation, improvement rates, and development complexity.

4.1 Initialization

As explained in Sect. 3.1, only works whose main proposal is a novel initialization technique were presented: 19 published works on this topic. According to Bilal et al. [27], initialization variants account for \(15\%\) of published DE works and, to the best of the authors' knowledge, this proportion has not changed for this work. In that sense, these initialization approaches are discussed in the following paragraphs, highlighting their similarities and differences. Moreover, the best and worst proposed initialization schemes are identified according to specific effectiveness metrics.

Based on the above, the efficiency of the methods can be discussed according to the work of Beyer et al. [139], which defines the efficiency of an EA through three metrics: 1. its convergence rate, 2. its ability to reach the global optimum in different optimization problems (OPs), and 3. its computational cost. In that sense, the best operator must give the algorithm the best balance of these three aspects. Table 2 shows that most initialization approaches enhance their respective algorithms with a faster convergence rate, APTS and RCM being the only techniques that do not reach this goal. This behavior makes sense, since it is well known that the quality of the initial population directly affects the exploration phase; therefore, an improvement in this stage allows the exploitation phase to begin earlier. Table 2 also shows that an improved initial population reduces the computational resources the algorithm needs. However, SSDE, ISDEMS, APTDE, CIDE, CBPI, DEc, sTDE-dR, and k-means DE do not benefit the algorithm in this metric. These eight works correspond to \(42\%\) of the related techniques (8 of 19), which means that the computational cost is improved in the remaining \(58\%\) of the published techniques. Finally, the remaining metric, the diversity of OPs tested for each scheme, is also reported in Table 2. The algorithms tested on two or more OPs are a minority: ISDEMS, Cumu-DE, CIDE, CBPI, sTDE-dR, k-means DE, and CODE are the only approaches with this feature. These algorithms have therefore demonstrated their capabilities across a wider variety of experiments.

Fig. 7 visualizes the Beyer metrics reported in Table 2. In this figure, each of the three Beyer metrics is shown as a bar with a different texture for better comprehension. The bar size also varies per algorithm: an algorithm that reaches only one metric is represented by the corresponding bar at the smallest size; an algorithm that reaches two metrics is represented by its two corresponding bars at a medium size; and an algorithm that reaches all three metrics is shown with three bars at the largest size. As can be seen, only two algorithms, Cumu-DE and CODE, reach all three Beyer metrics, while SSDE, APTDE, APTS, RCM, and DEc reach only one. Based on Fig. 7, Cumu-DE and CODE can be regarded as the best initialization approaches proposed for DE in the state of the art. Thus, these algorithms are the most promising schemes for future research, without detracting from the rest of the techniques.
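The counts behind Fig. 7 and the share of techniques without a computational-cost gain can be checked directly from the sets listed in this section. The Python sketch below encodes only the 12 initialization schemes named in the discussion of Table 2 (the remaining seven of the 19 works are not named here); the variable names are illustrative.

```python
# Data taken from the discussion of Table 2; only 12 of the 19 works are named there.
TOTAL_WORKS = 19
named = ["SSDE", "ISDEMS", "APTDE", "APTS", "RCM", "CIDE", "CBPI", "DEc",
         "sTDE-dR", "k-means DE", "Cumu-DE", "CODE"]
no_faster_convergence = {"APTS", "RCM"}
no_cost_improvement = {"SSDE", "ISDEMS", "APTDE", "CIDE", "CBPI", "DEc",
                       "sTDE-dR", "k-means DE"}
several_problems = {"ISDEMS", "Cumu-DE", "CIDE", "CBPI", "sTDE-dR",
                    "k-means DE", "CODE"}


def beyer_metrics(name):
    """Number of Beyer metrics (convergence, several OPs, cost) a scheme reaches."""
    return (int(name not in no_faster_convergence)
            + int(name in several_problems)
            + int(name not in no_cost_improvement))


counts = {name: beyer_metrics(name) for name in named}
print([n for n, c in counts.items() if c == 3])  # ['Cumu-DE', 'CODE']
print([n for n, c in counts.items() if c == 1])  # ['SSDE', 'APTDE', 'APTS', 'RCM', 'DEc']
share = len(no_cost_improvement) / TOTAL_WORKS
print(f"{share:.0%} without / {1 - share:.0%} with cost improvement")  # 42% without / 58% with cost improvement
```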

4.2 Mutation

One of the main reasons for the good performance of any optimization algorithm is its ability to balance two opposing aspects, global exploration and local exploitation, or, seen from another perspective, to find the appropriate trade-off between population diversity and a high convergence rate. To achieve this, over the past two decades, many researchers have designed different strategies to improve the selection of the vectors involved in generating a mutant vector. Some consider the individual's fitness or ranking in the population, whereas others define the selection criterion in the design space. One of the first in-depth studies of intelligent selection was carried out by Kaelo and Ali [140], who used tournament selection to obtain the base vector from three randomly chosen vectors.
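A minimal Python sketch of this idea, assuming minimization: three distinct individuals other than the target are drawn, the best of them becomes the base vector and, as an assumption of this sketch, the other two form the difference vector of a DE/rand/1-style mutation. The function name is hypothetical.

```python
import numpy as np


def tournament_rand_1(pop, fitness, i, F, rng):
    """DE/rand/1-style mutant whose base vector wins a 3-way tournament.

    Minimization is assumed. Using the two losers as the difference pair is
    an assumption of this sketch.
    """
    candidates = [j for j in range(len(pop)) if j != i]
    r = rng.choice(candidates, size=3, replace=False)
    r = r[np.argsort(fitness[r])]  # r[0] has the best (lowest) fitness
    return pop[r[0]] + F * (pop[r[1]] - pop[r[2]])


pop = np.array([[0.0], [10.0], [20.0], [30.0]])
fitness = np.array([0.0, 3.0, 1.0, 2.0])
rng = np.random.default_rng(0)
print(tournament_rand_1(pop, fitness, 0, 0.5, rng))  # [30.]: base 20 + 0.5 * (30 - 10)
```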

Even though many recently proposed mutation operators have been designed to balance these aspects through intelligent selection, they are usually accompanied by complementary modifications and techniques, such as parameter adaptation mechanisms, an external archive, or a different algorithm topology [30, 141]. Therefore, the performance of a mutation strategy may vary depending on the approach in which it is embedded. One example was presented by Bujok and Tvrdík [142], who found that including the DE/current-to-pbest/1 mutation in their proposal did not sufficiently enhance performance on the CEC2013 benchmark. However, in the LSHADE algorithm [143], the same strategy produced better results on the CEC2014 problems than the JADE algorithm, in which it was originally introduced.
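For reference, a minimal Python sketch of DE/current-to-pbest/1, \(v_i = x_i + F(x_{pbest} - x_i) + F(x_{r1} - x_{r2})\), assuming minimization. It omits the external archive that JADE uses when drawing \(x_{r2}\), and the rounding of \(p \cdot NP\) is a choice of this sketch.

```python
import numpy as np


def current_to_pbest_1(pop, fitness, i, F, p, rng):
    """DE/current-to-pbest/1 without an external archive (minimization)."""
    NP = len(pop)
    top = np.argsort(fitness)[:max(1, round(p * NP))]  # indices of the p*100% best
    pbest = rng.choice(top)
    r1, r2 = rng.choice([j for j in range(NP) if j != i], size=2, replace=False)
    return pop[i] + F * (pop[pbest] - pop[i]) + F * (pop[r1] - pop[r2])


rng = np.random.default_rng(42)
pop = rng.uniform(-5, 5, size=(10, 3))
fitness = (pop ** 2).sum(axis=1)  # sphere function as a stand-in objective
v = current_to_pbest_1(pop, fitness, i=0, F=0.5, p=0.1, rng=rng)
print(v.shape)  # (3,)
```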

The mutation strategies described above can be classified into two main categories: random and guided. The first group contains all operators in which the individuals of the population have no defined search pattern and move freely in the search space. Its best representatives are DE/rand/i and DE/current-to-rand/i. Nonetheless, they should be used according to the needs of the search process, since DE/rand/i perturbs any individual in any direction, which is beneficial in early generations to discover regions that may not have been reached at initialization, or to help escape from local optima in multi-modal problems.

With DE/rand/i, some individuals may be perturbed more times than others in the same population. In contrast, with DE/current-to-rand/1, the contribution of each individual to the search process is more equitable, since each individual is perturbed once per generation, giving every individual the same opportunity to explore its neighborhood.
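The two random strategies can be contrasted with a short Python sketch; no fitness values are involved. In `current_to_rand_1` the coefficient K is drawn uniformly from [0, 1), which is an assumption here, and the hypothetical helper `rand_1_base_counts` simulates one DE/rand/1 generation to show that base vectors are not used evenly, whereas DE/current-to-rand/1 always starts from each \(x_i\) exactly once.

```python
import numpy as np


def distinct(NP, i, k, rng):
    """k distinct random indices, all different from i."""
    return rng.choice([j for j in range(NP) if j != i], size=k, replace=False)


def rand_1(pop, i, F, rng):
    r1, r2, r3 = distinct(len(pop), i, 3, rng)
    return pop[r1] + F * (pop[r2] - pop[r3])  # base vector is a random individual


def current_to_rand_1(pop, i, F, rng):
    r1, r2, r3 = distinct(len(pop), i, 3, rng)
    K = rng.random()  # combination coefficient, uniform in [0, 1) in this sketch
    return pop[i] + K * (pop[r1] - pop[i]) + F * (pop[r2] - pop[r3])


def rand_1_base_counts(NP, rng):
    """How often each individual serves as base vector in one DE/rand/1 generation."""
    counts = np.zeros(NP, dtype=int)
    for i in range(NP):
        counts[distinct(NP, i, 3, rng)[0]] += 1
    return counts


rng = np.random.default_rng(3)
print(rand_1_base_counts(10, rng))  # typically uneven, unlike current-to-rand/1
```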

The second category contains all operators that steer the search toward certain positions, usually the best individual of the population or of a subgroup. This guided search prevents the algorithm from wandering in the search space and wasting computational resources and time, which matters because, in some applications, a single function evaluation may involve much more than evaluating a simple equation. Nonetheless, the quality of the guide vectors is a determining factor in the performance of these strategies: in multi-modal problems, individuals that seem promising at the beginning may attract other individuals of the population to regions far from the global optimum of the problem, causing the algorithm to converge prematurely or even get stuck in a local optimum. Examples in this category include DE/best/i, DE/current-to-best/i, DE/rand-to-best/i, Historical population-based mutation, and Hemostasis-based mutation.
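Two classical guided operators, DE/best/1 and DE/current-to-best/1, can be sketched in Python as follows, assuming minimization. For brevity, the random indices are only required to differ from i and from each other; implementations often also exclude the best individual. Names are illustrative.

```python
import numpy as np


def _two_random(NP, i, rng):
    """Two distinct random indices different from i."""
    return rng.choice([j for j in range(NP) if j != i], size=2, replace=False)


def best_1(pop, fitness, i, F, rng):
    """DE/best/1: every mutant is built around the current best individual."""
    r1, r2 = _two_random(len(pop), i, rng)
    return pop[np.argmin(fitness)] + F * (pop[r1] - pop[r2])


def current_to_best_1(pop, fitness, i, F, rng):
    """DE/current-to-best/1: x_i is pulled toward the best and then perturbed."""
    r1, r2 = _two_random(len(pop), i, rng)
    best = pop[np.argmin(fitness)]
    return pop[i] + F * (best - pop[i]) + F * (pop[r1] - pop[r2])


rng = np.random.default_rng(0)
pop = rng.uniform(-5, 5, size=(8, 2))
fitness = (pop ** 2).sum(axis=1)  # sphere function as a stand-in objective
print(best_1(pop, fitness, 0, 0.5, rng), current_to_best_1(pop, fitness, 0, 0.5, rng))
```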

All of these are easy to classify because the vector \(X_{best}\) or \(X_{pbest}\) appears in their respective equations. But what about strategies with no evident best vector, in which all the vectors are even randomly selected, as is the case of Reflection-based mutation and Trigonometric mutation? We might consider them random strategies, but since the contribution of each vector is weighted by its fitness, the resulting mutant vector has a certain tendency toward the best of the selected vectors. Therefore, these are partially guided mutations.
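To make the fitness weighting concrete, the following Python sketch implements the trigonometric mutation in its commonly cited form, assumed here for a minimization problem whose objective magnitude shrinks toward the optimum. With equal fitness values the mutant is the centroid of the three vectors; when one vector is better, the weighted differences shift the mutant toward it.

```python
import numpy as np


def trigonometric_mutation(x1, x2, x3, f1, f2, f3):
    """Trigonometric mutation of three randomly chosen vectors (minimization).

    A lower |f| gives a smaller weight p_k, and the weighted differences then
    pull the mutant from the centroid toward the better vector.
    """
    a = np.abs([f1, f2, f3])
    total = a.sum()
    centroid = (x1 + x2 + x3) / 3.0
    if total == 0:  # all three already at f = 0; fall back to the centroid
        return centroid
    p1, p2, p3 = a / total
    return (centroid
            + (p2 - p1) * (x1 - x2)
            + (p3 - p2) * (x2 - x3)
            + (p1 - p3) * (x3 - x1))


x1, x2, x3 = np.array([0.0]), np.array([1.0]), np.array([2.0])
print(trigonometric_mutation(x1, x2, x3, 5.0, 5.0, 5.0))  # [1.]: equal fitness gives the centroid
print(trigonometric_mutation(x1, x2, x3, 0.0, 1.0, 1.0))  # [-0.5]: shifted toward x1
```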

Before including any mutation strategy in a new DE-based algorithm, it is recommended to analyze the needs of the proposal to identify the most suitable one. A common approach in the literature, considered a research hotspot [83], is to combine several mutation operators to take advantage of the capabilities of each one. Strategies can be combined in different ways; two common ones are the following:

The first one employs a candidate pool of mutation schemes from which operators are chosen according to some selection criterion. For example, in the SaDE algorithm [144], a mutation strategy is picked from the candidate pool according to a probability learned from its success rate in generating improved solutions in previous generations. The selected strategy is then applied to the corresponding base vector to generate a mutant vector. More specifically, at each generation, the probabilities of choosing the strategies in the candidate pool sum to 1. Another example is the EPSDE algorithm [145], where, in the initialization phase, each member of the initial population is randomly assigned a mutation strategy and associated parameter values taken from the respective pools. If the resulting trial vector is better than the target vector, the mutation strategy and parameter values are kept for the next generation. Otherwise, the individual is randomly reinitialized with a new mutation strategy and associated parameter values from the respective pools.
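A minimal Python sketch of a SaDE-style probability update, under the assumption that the success rate of strategy k is the number of its trial vectors that survived selection divided by all trial vectors it produced during a learning period, plus a small constant \(\epsilon\) so that no strategy's probability drops to zero. Names and the value of `eps` are illustrative.

```python
import numpy as np


def strategy_probabilities(successes, failures, eps=0.01):
    """Selection probabilities of the strategies in the candidate pool.

    successes[k] / failures[k]: trial vectors generated by strategy k that did /
    did not survive selection during the learning period.
    """
    ns = np.asarray(successes, dtype=float)
    nf = np.asarray(failures, dtype=float)
    total = ns + nf
    rate = np.divide(ns, total, out=np.zeros_like(ns), where=total > 0)
    S = rate + eps  # eps keeps every strategy selectable
    return S / S.sum()  # probabilities sum to 1


rng = np.random.default_rng(0)
probs = strategy_probabilities(successes=[30, 10, 5], failures=[20, 40, 45])
k = rng.choice(len(probs), p=probs)  # index of the strategy applied next
print(np.round(probs, 3))  # approximately 0.656, 0.226, 0.118
```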

The second common method in the literature divides the population into subgroups that evolve with different mutation operators. Chatterjee and Zhou [146] generate three subpopulations based on the fitness values of the individuals: DE/best/1 is applied to the subpopulation with the highest fitness values, DE/rand/1 to the subgroup with the lowest, and DE/current-to-best/1 to the subset with average fitness values. Likewise, Zhan et al. [147] employ three subpopulations (each with a different mutation strategy), called the exploration population, the exploitation population, and the balance population, which co-evolve under a master–slave multi-population framework.
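As an illustration of Chatterjee and Zhou's scheme, the Python sketch below ranks the population by fitness (larger is better, following the wording above), splits it into three groups of roughly equal size, and tags each individual with its group's mutation operator. Equal group sizes are an assumption of this sketch, not necessarily the partition used in [146].

```python
import numpy as np


def assign_strategies(fitness):
    """Tag each individual with the mutation operator of its fitness group.

    Larger fitness is treated as better; the three groups have roughly equal
    size (an assumption of this sketch).
    """
    order = np.argsort(fitness)[::-1]  # best individuals first
    high, average, low = np.array_split(order, 3)
    strategy = np.empty(len(fitness), dtype=object)
    strategy[high] = "DE/best/1"
    strategy[average] = "DE/current-to-best/1"
    strategy[low] = "DE/rand/1"
    return strategy


print(assign_strategies(np.array([0.2, 0.9, 0.5, 0.1, 0.7, 0.4])))
```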

4.3 Crossover