Abstract

We consider the family of Lorentz ideals \({{\mathcal {C}}}_p^+\), \(1 \le p < \infty \). Let \({{\mathcal {C}}}_p^{+(0)}\) be the \(\Vert \cdot \Vert _p^+\)-closure of the collection of finite-rank operators in \({\mathcal C}_p^+\). It is well known that \({{\mathcal {C}}}_p^{+(0)} \ne {\mathcal C}_p^+\). We show that \({{\mathcal {C}}}_p^{+(0)}\) is proximinal in \({{\mathcal {C}}}_p^+\). We further show that a classic approximation for Hankel operators (Axler et al. in Ann Math (2) 109, 601–612, 1979) does not generalize to this new context.

1 Introduction

Let X be a Banach space and let M be a closed linear subspace of X. An element \(x \in X\) is said to have a best approximation in M if there is an \(m \in M\) such that \(\Vert x - m\Vert \le \Vert x - a\Vert \) for every \(a \in M\). The subspace M is said to be proximinal in X if every \(x \in X\) has a best approximation in M.

One of the most familiar and significant examples of such a pair is the case of \(X = {{\mathcal {B}}}({{\mathcal {H}}})\) and \(M = {{\mathcal {K}}}({{\mathcal {H}}})\), where \({{\mathcal {H}}}\) is a Hilbert space, \({{\mathcal {B}}}({{\mathcal {H}}})\) is the collection of bounded operators on \({{\mathcal {H}}}\), and \({\mathcal K}({{\mathcal {H}}})\) is the collection of compact operators on \({{\mathcal {H}}}\). It is well known that \({{\mathcal {K}}}({{\mathcal {H}}})\) is proximinal in \({\mathcal B}({{\mathcal {H}}})\), which is a result in the influential book [11] by Gohberg and Krein. Given any \(A \in {\mathcal B}({{\mathcal {H}}})\), to find its best approximation in \({{\mathcal {K}}}({\mathcal H})\), one takes the polar decomposition \(A = U|A|\), where \(|A| = (A^*A)^{1/2}\) and U is a partial isometry. Then from the spectral decomposition of |A| one easily finds the best compact approximation to A. The moral of this example is that when looking for best approximations for operators on a Hilbert space, one should take advantage of spectral decomposition, which is not available on other Banach spaces.

The relation between \({{\mathcal {B}}}({{\mathcal {H}}})\) and \({{\mathcal {K}}}({\mathcal H})\) is that the latter is the closure of the collection of finite-rank operators in the former. On a Hilbert space \({\mathcal H}\), there are many pairs that fit this description, but with different norms. In particular, the norm ideals of Robert Schatten [16] are a good source for interesting examples of X and M.

Before getting to these examples, it is necessary to give a general introduction for norm ideals. For this we follow the approach in [11, 19], because it offers the level of generality that is suitable for this paper.

As in [11], we write \({\hat{c}}\) for the linear space of sequences \(\{a_j\}_{j\in {\mathbf{N}}}\), where \(a_j \in \mathbf{R}\) and for every sequence the set \(\{j \in \mathbf{N} : a_j \ne 0\}\) is finite. A symmetric gauge function is a map

\[\Phi : {\hat{c}} \rightarrow [0,\infty )\]

that has the following properties:

(a) \(\Phi \) is a norm on \({\hat{c}}\).

(b) \(\Phi (\{1, 0, \dots , 0, \dots \}) = 1\).

(c) \(\Phi (\{a_j\}_{j\in {\mathbf{N}}}) = \Phi (\{|a_{\pi (j)}|\}_{j\in {\mathbf{N}}})\) for every bijection \(\pi : {\mathbf{N}} \rightarrow {\mathbf{N}}\).

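See [11, p. 71]. Each symmetric gauge function \(\Phi \) gives rise to the symmetric norm

\[\Vert A\Vert _\Phi = \sup _{j \ge 1}\Phi (\{s_1(A), \dots , s_j(A), 0, \dots , 0, \dots \})\]

for bounded operators, where \(s_1(A), \dots , s_j(A), \dots \) are the singular numbers of A. On any separable Hilbert space \({{\mathcal {H}}}\), the set of operators

\[{{\mathcal {C}}}_\Phi = \{A \in {{\mathcal {B}}}({{\mathcal {H}}}) : \Vert A\Vert _\Phi < \infty \} \tag{1.1}\]

is a norm ideal [11, p. 68]. That is, \({\mathcal C}_\Phi \) has the following properties: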

\(\bullet \) For any B, \(C \in {{\mathcal {B}}}({{\mathcal {H}}})\) and \(A \in {{\mathcal {C}}}_\Phi \), \(BAC \in {{\mathcal {C}}}_\Phi \) and \(\Vert BAC\Vert _\Phi \le \Vert B\Vert \Vert A\Vert _\Phi \Vert C\Vert \).

\(\bullet \) If \(A \in {{\mathcal {C}}}_\Phi \), then \(A^*\in {{\mathcal {C}}}_\Phi \) and \(\Vert A^*\Vert _\Phi = \Vert A\Vert _\Phi \).

\(\bullet \) For any \(A \in {{\mathcal {C}}}_\Phi \), \(\Vert A\Vert \le \Vert A\Vert _\Phi \), and the equality holds when rank\((A) = 1\).

\(\bullet \) \({{\mathcal {C}}}_\Phi \) is complete with respect to \(\Vert \cdot \Vert _\Phi \).

Given a symmetric gauge function \(\Phi \), we define \({\mathcal C}_\Phi ^{(0)}\) to be the closure with respect to the norm \(\Vert \cdot \Vert _\Phi \) of the collection of finite-rank operators in \({{\mathcal {C}}}_\Phi \).

Both ideals \({{\mathcal {C}}}_\Phi \) and \({{\mathcal {C}}}_\Phi ^{(0)}\) are important in operator theory and operator algebras. For example, if one considers the problem of diagonalization under perturbation for single self-adjoint operators [13, 14] or for commuting tuples of self-adjoint operators [2, 18, 19, 20, 21], then the natural perturbing operators come from ideals of the form \({{\mathcal {C}}}_\Phi ^{(0)}\). If one studies Toeplitz operators or Hankel operators on various reproducing-kernel Hilbert spaces, then a natural question is the membership of these operators in ideals of the form \({\mathcal C}_\Phi \) [10, 12, 22].

For many symmetric gauge functions, we simply have \({{\mathcal {C}}}_\Phi ^{(0)} = {{\mathcal {C}}}_\Phi \). For example, if we take any \(1 \le p < \infty \) and consider the symmetric gauge function

\[\Phi _p(\{a_j\}_{j\in \mathbf{N}}) = \Big (\sum _{j=1}^\infty |a_j|^p\Big )^{1/p},\]

then the norm ideal \({{\mathcal {C}}}_{\Phi _p}\) defined according to (1.1) is simply the familiar Schatten p-class. It is well known and obvious that \({{\mathcal {C}}}_{\Phi _p} ^{(0)} = {\mathcal C}_{\Phi _p}\).

From [11] we know that there also are many symmetric gauge functions for which \({{\mathcal {C}}}_\Phi ^{(0)} \ne {{\mathcal {C}}}_\Phi \). The most notable such example is the symmetric gauge function

\[\Phi _\infty (\{a_j\}_{j\in \mathbf{N}}) = \sup _{j \in \mathbf{N}}|a_j|.\]

Obviously, the norm \(\Vert \cdot \Vert _{\Phi _\infty }\) is none other than the ordinary operator norm. Therefore (1.1) gives us \({{\mathcal {C}}} _{\Phi _\infty } = {{\mathcal {B}}}({{\mathcal {H}}})\). It is also obvious that \({{\mathcal {C}}} _{\Phi _\infty }^{(0)} = {{\mathcal {K}}}({\mathcal H})\). Thus the classic result that \({{\mathcal {K}}}({{\mathcal {H}}})\) is proximinal in \({{\mathcal {B}}}({{\mathcal {H}}})\) can be rephrased as the statement that \({{\mathcal {C}}} _{\Phi _\infty }^{(0)}\) is proximinal in \({{\mathcal {C}}} _{\Phi _\infty }\). Once one realizes that, it does not take too much imagination to propose the following problem.

Problem 1.1

For a general symmetric gauge function \(\Phi \) with the property \({{\mathcal {C}}}_\Phi ^{(0)} \ne {{\mathcal {C}}}_\Phi \), is \({{\mathcal {C}}}_\Phi ^{(0)}\) proximinal in \({{\mathcal {C}}}_\Phi \)?

In such generality, Problem 1.1 does not appear to be easy, for it simply covers too many ideals of diverse properties. It is not too hard to convince oneself that to determine whether or not \({\mathcal C}_\Phi ^{(0)}\) is proximinal in \({{\mathcal {C}}}_\Phi \), one needs to know the specifics of \(\Phi \). At this point, we do not see how to get a general answer using only properties (a)-(c) listed above plus the condition \({{\mathcal {C}}}_\Phi ^{(0)} \ne {{\mathcal {C}}}_\Phi \).

But we are pleased to report that there is a family of symmetric gauge functions of common interest for which we are able to solve Problem 1.1 in the affirmative. Let us introduce these symmetric gauge functions and the corresponding ideals.

For each \(1 \le p < \infty \), let \(\Phi _p^+\) be the symmetric gauge function defined by the formula

\[\Phi _p^+(\{a_j\}_{j\in \mathbf{N}}) = \sup _{j \ge 1}\frac{|a_{\pi (1)}| + |a_{\pi (2)}| + \cdots + |a_{\pi (j)}|}{1^{-1/p} + 2^{-1/p} + \cdots + j^{-1/p}},\]

where \(\pi : \mathbf{N} \rightarrow \mathbf{N}\) is any bijection such that \(|a_{\pi (1)}| \ge |a_{\pi (2)}| \ge \cdots \ge |a_{\pi (j)}| \ge \cdots \), which exists because each \(\{a_j\}_{j\in \mathbf{N}} \in {\hat{c}}\) only has a finite number of nonzero terms. The ideal \({{\mathcal {C}}}_{\Phi _p^+}\), which is defined by (1.1) using \(\Phi _p^+\), is often called a Lorentz ideal. It is well known that \({{\mathcal {C}}}_{\Phi _p^+} \ne {\mathcal C}_{\Phi _p^+}^{(0)}\) [11]. The ideal \({{\mathcal {C}}}_{\Phi _1^+}\) deserves special mention, because it is the domain of the Dixmier trace [4, 6], which has wide-ranging connections [3, 7, 8, 12, 17].
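For a finitely supported sequence, the gauge \(\Phi _p^+\) can be evaluated directly. The following is a minimal sketch, assuming the standard Lorentz formula: the supremum over j of the sum of the j largest absolute values divided by \(1^{-1/p} + \cdots + j^{-1/p}\). The function name `lorentz_gauge` is hypothetical and is used only for illustration.

```python
def lorentz_gauge(seq, p):
    """Phi_p^+ of a finitely supported sequence (assumed formula, see above)."""
    if p < 1:
        raise ValueError("p must satisfy 1 <= p < infinity")
    # Decreasing rearrangement of the absolute values.
    values = sorted((abs(a) for a in seq), reverse=True)
    best = 0.0
    partial_sum = 0.0  # |a_pi(1)| + ... + |a_pi(j)|
    weight_sum = 0.0   # 1^(-1/p) + ... + j^(-1/p)
    for j, value in enumerate(values, start=1):
        partial_sum += value
        weight_sum += j ** (-1.0 / p)
        best = max(best, partial_sum / weight_sum)
    return best
```

Under this assumption, `lorentz_gauge([1, 0, 0], p)` returns 1 for every admissible p, in line with property (b), and the value does not change when the entries are permuted or their signs are flipped, in line with property (c).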

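The ideals \({{\mathcal {C}}}_{\Phi _p^+}\) and \({{\mathcal {C}}}_{\Phi _p^+}^{(0)}\), \(1 \le p < \infty \), are the main interest of this paper. Since they will appear so frequently in the sequel, let us introduce a simplified notation. From now on we will write

\[{{\mathcal {C}}}_p^+ = {{\mathcal {C}}}_{\Phi _p^+}, \quad {{\mathcal {C}}}_p^{+(0)} = {{\mathcal {C}}}_{\Phi _p^+}^{(0)} \quad \text {and} \quad \Vert \cdot \Vert _p^+ = \Vert \cdot \Vert _{\Phi _p^+} \tag{1.2}\]

for \(1 \le p < \infty \). Here is our main result: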

Theorem 1.2

For every \(1 \le p < \infty \), \({{\mathcal {C}}}_p^{+(0)}\) is proximinal in \({{\mathcal {C}}}_p^+\).

The result that \({{\mathcal {K}}}({{\mathcal {H}}})\) is proximinal in \({\mathcal B}({{\mathcal {H}}})\) has refinements within specific classes of operators [1]. One such class consists of the Hankel operators \(H_f : H^2 \rightarrow L^2\), where \(H^2\) is the Hardy space on the unit circle \(\mathbf{T} \subset \mathbf{C}\). We recall the following:

Theorem 1.3

[1, Theorem 3] For each \(f \in L^\infty \), the best compact approximation to the Hankel operator \(H_f\) can be realized in the form of a Hankel operator \(H_g\).

In other words, Theorem 1.3 says that \(H_f\) has a best compact approximation that is of the same kind, a Hankel operator. Using the method in [1], Theorem 1.3 can be easily generalized to Hankel operators on the Hardy space on the unit sphere in \(\mathbf{C}^n\).

Since Theorem 1.2 tells us that each \({{\mathcal {C}}}_p^{+(0)}\) is proximinal in \({{\mathcal {C}}}_p^+\), we can ask a more refined question along the lines of Theorem 1.3: if we have an operator A in a natural class \({{\mathcal {N}}}\) and we know that \(A \in {{\mathcal {C}}}_p^+\), can we find a best \({\mathcal C}_p^{+(0)}\)-approximation to A in the same class \({{\mathcal {N}}}\)? In particular, what if \({{\mathcal {N}}}\) consists of Hankel operators? As we will see, the answer to this last question turns out to be negative.

The rest of the paper is organized as follows. We prove Theorem 1.2 in Sect. 2. Then in Sect. 3, we present the above-mentioned negative answer. Namely, we give an example of a Hankel operator on the unit sphere in \(\mathbf{C}^2\) which is in the ideal \({{\mathcal {C}}}_4^+\) and which does not have any Hankel operator as its best \({{\mathcal {C}}}_4^{+(0)}\)-approximation. This example requires some explicit calculation, which may be of independent interest.

2 Existence of Best Approximation

Recall that the starting domain for every symmetric gauge function \(\Phi \) is the space \({\hat{c}}\), which consists of real sequences whose nonzero terms are finite in number. Our first order of business is to follow the standard practice and extend the domain of \(\Phi \) to include every sequence. That is, for any sequence \(\xi = \{\xi _j\}\) of complex numbers, we define

\[\Phi (\xi ) = \sup _{j \ge 1}\Phi (\{|\xi _1|, \dots , |\xi _j|, 0, \dots , 0, \dots \}).\]

It is well known that the properties of \(\Phi \) imply that if \(|a_j| \le |b_j|\) for every j, then

\[\Phi (\{a_j\}_{j\in \mathbf{N}}) \le \Phi (\{b_j\}_{j\in \mathbf{N}}).\]

This fact will be used in many of our estimates below.

We will focus exclusively on the symmetric gauge functions \(\Phi _p^+\), \(1 \le p < \infty \). For the rest of the paper, p will always denote a positive number in \([1,\infty )\).

Definition 2.1

(1) Write \(c_p^+\) for the collection of sequences \(\xi \) satisfying the condition \(\Phi _p^+(\xi ) < \infty \).

(2) Let \(c_p^+(0)\) denote the \(\Phi _p^+\)-closure of \(\{a+ib : a, b \in {\hat{c}}\}\) in \(c_p^+\).

(3) For each \(\xi \in c_p^+\), denote \(\Phi _{p,\text {ess}}^+(\xi ) = \inf \{\Phi _p^+(\xi - \eta ) : \eta \in c_p^+(0)\}\).

(4) Write \(d_p^+\) for the collection of sequences \(x = \{x_j\}\) in \(c_p^+\) satisfying the conditions that \(x_j \ge 0\) and that \(x_j \ge x_{j+1}\) for every \(j \in \mathbf{N}\).

In other words, \(d_p^+\) consists of the non-negative, non-increasing sequences in \(c_p^+\).

Proposition 2.2

For every \(\xi = \{\xi _j\} \in c_p^+\), we have

In particular, \(\xi = \{\xi _j\} \in c_p^+(0)\) if and only if

Proof

From the above definitions it is obvious that

On the other hand, for any \(a, b \in {\hat{c}}\), there exist a \(\nu \in \mathbf{N}\) and \(\zeta _1,\dots ,\zeta _\nu \in \mathbf{C}\) such that

Thus for every \(m \ge \nu \) we have

Since \(c_p^+(0)\) is the \(\Phi _p^+\)-closure of \(\{a+ib : a, b \in {\hat{c}}\}\), it follows that

This completes the proof. \(\square \)

Proposition 2.3

For every \(x = \{x_j\} \in d_p^+\) we have

Proof

For \(x = \{x_j\} \in d_p^+\), (2.1) trivially holds if \(\sum _{j=1}^\infty x_j < \infty \). Suppose that \(\sum _{j=1}^\infty x_j = \infty \). Then for every \(m \in \mathbf{N}\) we have

Therefore

for every \(m \in \mathbf{N}\). By Proposition 2.2, this means

To prove the reverse inequality, note that for each \(m \in \mathbf{N}\), there is a \(k(m) \in \mathbf{N}\) such that

If there is a sequence \(m_1< m_2< \cdots< m_i < \cdots \) in \(\mathbf{N}\) such that \(k(m_i) \rightarrow \infty \) as \(i \rightarrow \infty \), then from Proposition 2.2 and (2.2) we obtain

The only other possibility is that there is an \(N \in \mathbf{N}\) such that \(k(m) \le N\) for every \(m \in \mathbf{N}\). Obviously, the membership \(x \in d_p^+\) implies \(\lim _{j\rightarrow \infty }x_j = 0\). Thus in the case \(k(m) \le N\) for every \(m \in \mathbf{N}\), from Proposition 2.2 and (2.2) we obtain

This completes the proof. \(\square \)

Proposition 2.4

Let a \(\xi = \{\xi _j\} \in c_p^+\) be given and denote

for every \(i \in \mathbf{N}\). If

then \(\xi \in c_p^+(0)\).

Proof

By (2.3), for every \(k \in \mathbf{N}\), there is a natural number \(i(k) > k+3\) such that

For every \(i \ge i(k)\), we also have

That is,

when \(i \ge i(k)\). Combining this with (2.4), we see that

Since this holds for every \(k \in \mathbf{N}\), we conclude that \(\Phi _{p,\text {ess}}^+(\xi ) = 0\), i.e., \(\xi \in c_p^+(0)\). \(\square \)

Proposition 2.5

For each \(x \in d_p^+\), there is a decomposition \(x = y + z\) such that \(y \in d_p^+\) with

\[\Phi _p^+(y) = \Phi _{p,\text {ess}}^+(x)\]

and \(z = \{z_j\} \in c_p^+(0)\), where \(z_j \ge 0\) for every \(j \in \mathbf{N}\).

Proof

Obviously, it suffices to consider \(x \in d_p^+\) with \(\Phi _{p,\text {ess}}^+(x) = 1\). Write \(x = \{x_j\}\). By the definition of \(d_p^+\), we have \(x_j \ge 0\) and \(x_j \ge x_{j+1}\) for every \(j \in \mathbf{N}\).

We define the desired sequences \(y = \{y_j\}\) and \(z = \{z_j\}\) inductively, starting with \(j = 1\). If \(x_1 \le 1\), we define \(y_1 = x_1\) and \(z_1 = x_1 - y_1 = 0\). If \(x_1 > 1\), we define \(y_1 = 1\) and \(z_1 = x_1 - y_1 = x_1 - 1 > 0\).

Let \(\nu \ge 1\) and suppose that we have defined \(0 \le y_j \le x_j\) and \(z_j = x_j - y_j\) for every \(1 \le j \le \nu \) such that the following hold true: for every \(1 \le j \le \nu \) we have

and for each \(j \in \{1,\dots ,\nu \}\) with the property \(y_j < x_j\) we have

Then we define \(y_{\nu + 1}\) and \(z_{\nu +1}\) as follows. Suppose that

In this case, we define \(y_{\nu +1} = x_{\nu +1}\) and \(z_{\nu +1} = x_{\nu +1} - y_{\nu +1} = 0\). Suppose that

Since we know that

these two inequalities imply that there is a \(y_{\nu +1} \in (0,x_{\nu +1})\) such that

This defines \(y_{\nu +1}\). We then define \(z_{\nu +1} = x_{\nu +1} - y_{\nu +1}\), which is greater than 0 in this case. Thus we have inductively defined the sequences \(y = \{y_j\}\) and \(z = \{z_j\}\) with the properties that \(y_j \ge 0\), \(z_j \ge 0\) and \(y_j + z_j = x_j\) for every \(j \in \mathbf{N}\). That is, \(x = y + z\). The construction above ensures

for every \(j \in \mathbf{N}\). Moreover, the construction ensures that the equality

holds for each \(j \in \mathbf{N}\) with the property \(y_j < x_j\).

Next we show that \(y = \{y_j\}\) is a non-increasing sequence, i.e., \(y_j \ge y_{j+1}\) for every \(j \in \mathbf{N}\). First, consider the case \(j = 1\). If \(x_1 \le 1\), then by definition \(y_1 = x_1 \ge x_2 \ge y_2\), since \(x _1 \ge x_2\). If \(x_1 > 1\), then \(y_1 = 1\) by definition, and (2.5) gives us \(1 + y_2 \le 1^{-1/p} + 2^{-1/p}\). Thus in the case \(x_1 > 1\) we have \(y_2 \le 2^{-1/p} < 1 = y_1\).

Now consider any \(j \ge 2\). If \(y_j = x_j\), then we again have \(y_j = x_j \ge x_{j+1} \ge y_{j+1}\), since x is a non-increasing sequence. If \(y_j < x_j\), then (2.5) and (2.6) imply

from which we deduce

Thus \(y = \{y_j\}\) is indeed a non-increasing sequence. Combining this fact with (2.5), we conclude that \(y \in d_p^+\) with \(\Phi _p^+(y) \le 1\). What remains is to show that \(z \in c_p^+(0)\).
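The inductive construction of y and z can be illustrated on a finite truncation. The sketch below assumes that the two cases of the induction are distinguished by whether \(y_1 + \cdots + y_\nu + x_{\nu +1}\) exceeds \(1^{-1/p} + \cdots + (\nu +1)^{-1/p}\), so that each \(y_j\) is the largest value not exceeding \(x_j\) that keeps the partial sums of y below the partial sums of \(j^{-1/p}\). The name `greedy_split` is hypothetical, and a finite list cannot encode the normalization \(\Phi _{p,\text {ess}}^+(x) = 1\), so this only mirrors the mechanics of the construction.

```python
def greedy_split(x, p):
    """Split a finite non-negative, non-increasing list x as y + z.

    y_j is chosen as large as possible subject to y_j <= x_j and
    y_1 + ... + y_j <= 1^(-1/p) + ... + j^(-1/p); z_j = x_j - y_j.
    """
    y, z = [], []
    y_sum = 0.0       # y_1 + ... + y_(j-1)
    weight_sum = 0.0  # 1^(-1/p) + ... + j^(-1/p)
    for j, x_j in enumerate(x, start=1):
        weight_sum += j ** (-1.0 / p)
        room = weight_sum - y_sum  # at least j^(-1/p), hence positive
        y_j = min(x_j, room)
        y.append(y_j)
        z.append(x_j - y_j)
        y_sum += y_j
    return y, z
```

For instance, with p = 1 and the constant input `[3, 3, 3, 3]`, the cap is active at every step and y is approximately `[1, 1/2, 1/3, 1/4]`, which is non-increasing as the argument above predicts.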

To prove that \(z \in c_p^+(0)\), we first consider the case where \(1< p < \infty \). Define

for each \(i \in \mathbf{N}\). By Proposition 2.4, to prove that \(z \in c_p^+(0)\), it suffices to show that

Suppose that this failed. Then there would be a \(\delta > 0\) and an increasing sequence

of natural numbers such that

We will show that this leads to a contradiction.

Write \(a = \Phi _p^+(x)\). Let \(\nu \) and j be any pair of natural numbers such that \(z_j \ge 2^{-i_\nu /p}\). Since \(z_j = x_j - y_j\) and \(y_j \ge 0\), we have \(x_j \ge 2^{-i_\nu /p}\). On the other hand, since x is a non-increasing sequence, we have

Writing \(C_p = p/(p-1)\), the above facts lead to the inequality

That is, if \(z_j \ge 2^{-i_\nu /p}\), then \(j \le (C_pa)^p2^{i_\nu }\). For each \(\nu \in \mathbf{N}\), let \(j_\nu = \max \{j \in \mathbf{N} : z_j \ge 2^{-i_\nu /p}\}\). By (2.6), the fact \(z_{j_\nu } > 0\) forces

Thus

where the last \(\ge \) follows from (2.8). Since the above inequality supposedly holds for every \(\nu \in \mathbf{N}\), by Proposition 2.3, it contradicts the condition \(\Phi _{p,\text {ess}}^+(x) = 1\). This proves (2.7). Applying Proposition 2.4, in the case \(1< p < \infty \) we have \(z \in c_p^+(0)\).

Now consider the case \(p = 1\), which is much more complicated. To prove \(z \in c_1^+(0)\) in this case, pick an \(\epsilon > 0\). Define the sequences \(u = \{u_j\}\) and \(v = \{v_j\}\) by the formulas

\(j \in \mathbf{N}\). We have \(z = u + v\) by design. Then note that \(\Phi _1^+(v) \le \epsilon \Phi _1^+(x)\). Since \(\epsilon > 0\) is arbitrary, it suffices to show that \(u \in c_1^+(0)\).

To prove that \(u \in c_1^+(0)\), consider the set \(N = \{j \in \mathbf{N} : u_j > 0\}\). If \(\text {card}(N) < \infty \), then we certainly have the membership \(u \in c_1^+(0)\). Suppose that \(\text {card}(N) = \infty \). Then we enumerate the elements in N as a sequence

Keep in mind that \(z_{j(k)} > \epsilon x_{j(k)}\) for every \(k \in \mathbf{N}\).

Claim 1 If \(k_1< k_2< \cdots< k_\nu < \cdots \) are natural numbers such that

for every \(\nu \in \mathbf{N}\), then

Indeed for each \(\nu \in \mathbf{N}\), since \(z_{j(k_\nu )} > 0\), i.e., \(y_{j(k_\nu )} < x_{j(k_\nu )}\), we have

Combining this with (2.9), we find that for \(\nu \ge 3\),

It follows from the condition \(\Phi _{1,\text {ess}}^+(x) = 1\) and Proposition 2.3 that

Obviously, (2.10) follows from (2.11) and (2.12). This proves Claim 1.

Claim 2 Let \(E_1\), \(\dots \), \(E_s\), \(\dots \) be finite subsets of \(\mathbf{N}\) such that

Suppose that

Then

To prove this, pick an \(m \in \mathbf{N}\) and define \(F_s = \{k \in E_s : mk \le j(k)\}\) for each \(s \in \mathbf{N}\). Note that (2.14) implies \(j(k) > k^2\) for every \(k \in \cup _{s=1}^\infty E_s\). Therefore \(\text {card}(E_s\backslash F_s) \le m\) for every s. Thus it follows from (2.13) that

Since \(m \in \mathbf{N}\) is arbitrary, (2.15) will follow if we can show that

For each \(s \in \mathbf{N}\), since \(j(k) \ge mk\) for every \(k \in F_s\) and since the sequence x is non-increasing, we have

That is,

for every \(s \in \mathbf{N}\). Since \(\text {card}(F_s) \rightarrow \infty \) as \(s \rightarrow \infty \), (2.17) implies (2.16). This completes the proof of Claim 2.

Having proved Claims 1 and 2, we are now ready to prove the membership \(u \in c_1^+(0)\). Recall that for every j for which \(u_j \ne 0\), we have \(u_j = z_j \le x_j\), and that the elements in \(N = \{j \in \mathbf{N} : u_j > 0\}\) are listed as

Since x is non-increasing, by Proposition 2.3, the membership \(u \in c_1^+(0)\) will follow if we can show that

Suppose that this limit did not hold. Then there would be a sequence

of natural numbers such that

for some \(b > 0\). Again, we will show that this leads to a contradiction.

For each \(s \in \mathbf{N}\), define \(A_s = \{k \in \{1,2,\dots ,n_s\} : \log k \ge (1/2)\log j(k)\}\). If s is such that \(A_s = \emptyset \), we define

If s is such that \(A_s \ne \emptyset \), we let \(k_s\) be the largest element in \(A_s\) and we define

Note that for each s, the definition of \(G_s\) guarantees that \( \log k < (1/2)\log j(k)\) if \(k \in G_s\). Denote \(\Sigma = \{s \in \mathbf{N} : A_s \ne \emptyset \}\). For each \(s \in \Sigma \), define

where \(\beta _s\) is understood to be 0 in the case \(G_s = \emptyset \). For \(s \in \Sigma \) with \(G_s \ne \emptyset \), we have

Suppose that \(\Sigma \ne \emptyset \). Then \(\Sigma = \{s \in \mathbf{N} : s \ge \ell \}\) for some \(\ell \in \mathbf{N}\). Thus there is a sequence

contained in \(\Sigma \) such that both limits

exist. By definition, \(\log k_{s} \ge (1/2)\log j(k_{s})\). By Claim 1, we have

Recall that if \(k \in G_s\), then \( \log k < (1/2)\log j(k)\). By Claim 2, we have

Combining these facts with (2.19), we find that

which contradicts (2.18) in the case \(\Sigma \ne \emptyset \). Suppose that \(\Sigma = \emptyset \). Then by definition we have \(G_s = \{1,2,\dots ,n_s\}\) for every \(s \in \mathbf{N}\). Thus we can apply Claim 2 to conclude that

Thus (2.18) is also contradicted in the case \(\Sigma = \emptyset \). This completes the proof of the proposition. \(\square \)

Having only dealt with sequences so far, we now apply the above results to operators, which are the main interest of the paper. Let \({{\mathcal {H}}}\) be a Hilbert space. For any \(u, v \in {{\mathcal {H}}}\), the notation \(u\otimes v\) denotes the operator on \({{\mathcal {H}}}\) defined by the formula

\[(u\otimes v)f = \langle f,v\rangle u, \quad f \in {{\mathcal {H}}}.\]

It is well known that if A is a compact operator on an infinite-dimensional Hilbert space \({{\mathcal {H}}}\), then it admits the representation

\[A = \sum _{j=1}^\infty s_j(A)u_j\otimes v_j,\]

where \(\{u_j : j \in \mathbf{N}\}\) and \(\{v_j : j \in \mathbf{N}\}\) are orthonormal sets in \({{\mathcal {H}}}\). See, e.g., [5, 11].

We remind the reader of our notation (1.2). For each \(A \in {{\mathcal {C}}}_p^+\), we define

\[\Vert A\Vert _{p,\text {ess}}^+ = \inf \{\Vert A - K\Vert _p^+ : K \in {{\mathcal {C}}}_p^{+(0)}\}.\]

We think of \(\Vert A\Vert _{p,\text {ess}}^+\) as the essential \(\Vert \cdot \Vert _p^+\)-norm of A, hence the notation.

Proposition 2.6

For every operator \(A \in {{\mathcal {C}}}_p^+\), we have

Proof

For an \(A \in {{\mathcal {C}}}_p^+\), there are orthonormal sets \(\{u_j : j \in \mathbf{N}\}\) and \(\{v_j : j \in \mathbf{N}\}\) such that

Therefore it is obvious that \(\Vert A\Vert _{p,\text {ess}}^+ \le \Phi _{p,\text {ess}}^+(\{s_j(A)\})\). To prove the reverse inequality, for every \(k \in \mathbf{N}\) we define the orthogonal projection

If F is a finite-rank operator, then \(\Vert E_kF\Vert _p^+ \rightarrow 0\) as \(k \rightarrow \infty \). Therefore

where the last \(=\) follows from Proposition 2.2. Since this inequality holds for every finite-rank operator F, we conclude that \(\Vert A\Vert _{p,\text {ess}}^+ \ge \Phi _{p,\text {ess}}^+(\{s_j(A)\})\). Recalling Proposition 2.3, the proof is complete. \(\square \)

With the above preparation, we now prove Theorem 1.2 in a more explicit form:

Theorem 2.7

For each \(A \in {{\mathcal {C}}}_p^+\), there is a \(K \in {{\mathcal {C}}}_p^{+(0)}\) such that \(\Vert A - K\Vert _p^+ = \Vert A\Vert _{p,\text {ess}}^+\).

Proof

Given an \(A \in {{\mathcal {C}}}_p^+\), we again represent it in the form

\[A = \sum _{j=1}^\infty s_j(A)u_j\otimes v_j,\]

where \(\{u_j : j \in \mathbf{N}\}\) and \(\{v_j : j \in \mathbf{N}\}\) are orthonormal sets. Applying Proposition 2.5 to the sequence \(\{x_j\} = \{s_j(A)\}\), we obtain \(y = \{y_j\} \in d_p^+\) and \(z = \{z_j\} \in c_p^+(0)\) such that

\[s_j(A) = y_j + z_j \tag{2.20}\]

for every \(j \in \mathbf{N}\) and \(\Phi _p^+(y) = \Phi _{p,\text {ess}}^+(\{s_j(A)\})\). Define

\[K = \sum _{j=1}^\infty z_ju_j\otimes v_j.\]

The condition \(z \in c_p^+(0)\) obviously implies \(K \in {{\mathcal {C}}}_p^{+(0)}\). From (2.20) we obtain

\[A - K = \sum _{j=1}^\infty y_ju_j\otimes v_j.\]

Therefore

\[\Vert A - K\Vert _p^+ = \Phi _p^+(y) = \Phi _{p,\text {ess}}^+(\{s_j(A)\}).\]

Now an application of Proposition 2.6 completes the proof. \(\square \)

3 A Contrast to the Classic Case

As we mentioned in the Introduction, the result that \({\mathcal K}({{\mathcal {H}}})\) is proximinal in \({{\mathcal {B}}}({{\mathcal {H}}})\) has refinements within specific classes of operators. One such class consists of the Hankel operators \(H_f : H^2 \rightarrow L^2\), where \(H^2\) is the Hardy space on the unit circle \(\mathbf{T} \subset \mathbf{C}\). Specifically, [1, Theorem 3] tells us that for \(f \in L^\infty \), the best compact approximation to the Hankel operator \(H_f : H^2 \rightarrow L^2\) can be realized in the form of a Hankel operator \(H_g\).

In other words, [1, Theorem 3] says that \(H_f\) has a best compact approximation that is of the same kind, a Hankel operator. Using the method in [1], this result of best compact approximation can be easily generalized to Hankel operators on the Hardy space \(H^2(S)\) on the unit sphere \(S \subset \mathbf{C}^n\).

The fact that each \({{\mathcal {C}}}_p^{+(0)}\) is proximinal in \({\mathcal C}_p^+\) raises an obvious question: if we have an operator A in a natural class \({{\mathcal {N}}}\) and we know that \(A \in {{\mathcal {C}}}_p^+\), can we find a best \({\mathcal C}_p^{+(0)}\)-approximation to A in the same class \({{\mathcal {N}}}\)? In particular, what if \({{\mathcal {N}}}\) is the class of Hankel operators on \(H^2(S)\)?

In this section we show that the answer to the last question is negative. This negative answer provides a sharp contrast to the classic result [1, Theorem 3].

For the rest of the paper we assume \(n \ge 2\). Let S denote the unit sphere \(\{z \in \mathbf{C}^n : |z| = 1\}\) in \(\mathbf{C}^n\). Write \(d\sigma \) for the standard spherical measure on S with the normalization \(\sigma (S) = 1\). Recall that the Hardy space \(H^2(S)\) is the norm closure of the analytic polynomials \(\mathbf{C}[z_1,\dots ,z_n]\) in \(L^2(S,d\sigma )\) [15]. Let \(P : L^2(S,d\sigma ) \rightarrow H^2(S)\) be the orthogonal projection. Then the Hankel operator \(H_f : H^2(S) \rightarrow L^2(S,d\sigma )\) is defined by the formula

\[H_fh = (1 - P)(fh), \quad h \in H^2(S).\]

For these Hankel operators, let us recall the following results:

Proposition 3.1

[9, Proposition 7.2] If f is a Lipschitz function on S, then \(H_f \in {{\mathcal {C}}}_{2n}^+\).

Proposition 3.2

When the complex dimension n is at least 2, for any \(f \in L^2(S,d\sigma )\), if \(H_f\) is bounded and if \(H_f \ne 0\), then \(H_f \notin {\mathcal C}_{2n}^{+(0)}\).

Proof

We apply [9, Theorem 1.6] which tells us that for \(f \in L^2(S,d\sigma )\), if \(H_f\) is bounded and if \(H_f \ne 0\), then there is an \(\epsilon > 0\) such that

for every \(k \in \mathbf{N}\). Thus it follows from Proposition 2.6 that \(\Vert H_f\Vert _{2n,\text {ess}}^+ > 0\), if \(\Vert H_f\Vert _{2n}^+\) is finite to begin with. In any case, we have \(H_f \notin {{\mathcal {C}}}_{2n}^{+(0)}\). \(\square \)

As usual, we write \(z_1,\dots ,z_n\) for the complex coordinate functions. Here is the main technical result of the section:

Theorem 3.3

When the complex dimension n equals 2, we have \(\Vert H_{{\bar{z}}_1}\Vert _4^+ > \Vert H_{{\bar{z}}_1}\Vert _{4,\text {ess}}^+\).

This leads to the negative answer promised above:

Example 3.4

Let the complex dimension n be equal to 2. By Theorem 2.7, \(H_{{\bar{z}}_1}\) has a best approximation in \({\mathcal C}_4^{+(0)}\). On the other hand, it follows from the inequality \(\Vert H_{{\bar{z}}_1}\Vert _4^+ > \Vert H_{{\bar{z}}_1}\Vert _{4,\text {ess}}^+\) that if \(K \in {{\mathcal {C}}}_4^{+(0)}\) is a best approximation of \(H_{{\bar{z}}_1}\), then \(K \ne 0\). The membership \(K \in {\mathcal C}_4^{+(0)}\) implies that K is not a Hankel operator, for Proposition 3.2 tells us that \({{\mathcal {C}}}_4^{+(0)}\) does not contain any nonzero Hankel operators on \(H^2(S)\) in the case \(S \subset \mathbf{C}^2\). Thus for the class of Hankel operators on the Hardy space \(H^2(S)\), \(S \subset \mathbf{C}^2\), the analogue of Theorem 1.3 does not hold for the pair \({{\mathcal {C}}}_4^+\) and \({\mathcal C}_4^{+(0)}\), even though \({{\mathcal {C}}}_4^{+(0)}\) is proximinal in \({{\mathcal {C}}}_4^+\).

Having presented the principal conclusion of the section, we now turn to the proof of Theorem 3.3, which requires some calculation. We begin with the generality \(n \ge 2\), and then specialize to the complex dimension \(n = 2\).

We need to make one use of Toeplitz operators, whose definition we now recall. Given an \(f \in L^\infty (S,d\sigma )\), the Toeplitz operator \(T_f\) is defined by the formula

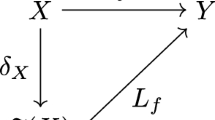

We need the following relation between Hankel operators and Toeplitz operators: We have

for every \(f \in L^\infty (S,d\sigma )\).

We follow the usual multi-index convention [15, p. 3]. Then the standard orthonormal basis \(\{e_\alpha : \alpha \in \mathbf{Z}_+^n\}\) for \(H^2(S)\) is given by the formula

Consider the symbol function \({\bar{z}}_1\). Straightforward calculation using (3.1) shows that

and that

where \(\alpha _1\) denotes the first component of \(\alpha \). Thus \(H_{{\bar{z}}_1}^*H_{{\bar{z}}_1}\) is a diagonal operator with respect to the standard orthonormal basis \(\{e_\alpha : \alpha \in \mathbf{Z}_+^n\}\), and the above are the s-numbers of \(H_{{\bar{z}}_1}^*H_{{\bar{z}}_1}\). Consequently, the s-numbers of \(H_{{\bar{z}}_1}\) are a descending arrangement of

Lemma 3.5

In the case where the complex dimension n equals 2, we have \(\Vert H_{{\bar{z}}_1}\Vert _{4,\text {ess}}^+ = 6^{-1/4}\).

Proof

For \(\alpha = (\alpha _1,\alpha _2) \in \mathbf{Z}_+^2\), note that \(|\alpha | - \alpha _1 = \alpha _2\). Thus from (3.2) we obtain

It is also easy to see that \((H_{{\bar{z}}_1}^*H_{{\bar{z}}_1})^{1/2} = Y + Z\), where \(Z \in {{\mathcal {C}}}_4^{+(0)}\) and

Hence \(\Vert H_{{\bar{z}}_1}\Vert _{4,\text {ess}}^+ = \Vert (H_{{\bar{z}}_1}^*H_{{\bar{z}}_1})^{1/2}\Vert _{4,\text {ess}}^+ = \Vert Y\Vert _{4,\text {ess}}^+\), and we need to figure out the latter.

To find \(\Vert Y\Vert _{4,\text {ess}}^+\), consider \(Q = \{(x,y) \in \mathbf{R}^2 : x \ge 0 \ \text {and} \ y \ge 0\}\), the first quadrant in the xy-plane. For each \(a > 0\), define

Solving the inequality \(ay \ge (x + y)^2\) in Q, we find that

Let \(m_2\) denote the natural 2-dimensional Lebesgue measure on Q. Then

For each \(r > 1\) we define

To each \(\alpha \in \mathbf{Z}_+^2\) we associate the square \(\alpha + I^2\), where \(I^2 = [{0,1}]\times [{0,1}]\). From this association we see that

We have

Denote \(A_r = \{\alpha \in \mathbf{Z}_+^2\backslash \{0\} : \sqrt{\alpha _2}/|\alpha | > 1/r\}\). Then

On the other hand, from (3.3) we obtain

Combining these two identities, we find that

Thus the proof of the lemma will be complete if we can show that

To prove (3.4), first note that by Proposition 2.6, the left-hand side is greater than or equal to the right-hand side. Thus we only need to prove the reverse inequality. But for the reverse inequality, note that (3.3) gives us

\(\nu \in \mathbf{N}\). Hence

For a large \(k \in \mathbf{N}\), there is a \(\nu (k) \in \mathbf{N}\) such that \(N(\nu (k)) \le k < N(\nu (k)+1)\). Thus

Using (3.3) again, we find that

Thus, by Proposition 2.6, the left-hand side of (3.4) is less than or equal to the right-hand side as promised. This completes the proof of the lemma. \(\square \)
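The lattice-point count behind this proof can be sanity-checked numerically. The sketch below counts the elements of the set \(A_r = \{\alpha \in \mathbf{Z}_+^2\backslash \{0\} : \sqrt{\alpha _2}/|\alpha | > 1/r\}\) and compares the count with \(r^4/6\). The value \(r^4/6\) is an assumption of this sketch: it is the area of the region \(\{(x,y) \in Q : ay \ge (x+y)^2\}\) with \(a = r^2\), obtained by direct integration, and it is consistent with the constant \(6^{-1/4}\) in the statement of the lemma. The function name `count_lattice_points` is hypothetical.

```python
import math


def count_lattice_points(r):
    """Number of (a1, a2) in Z_+^2 minus {0} with sqrt(a2)/(a1 + a2) > 1/r."""
    count = 0
    a2 = 1                 # a2 = 0 never satisfies the strict inequality
    while a2 < r * r:      # already for a1 = 0 the condition forces a2 < r^2
        # The condition is equivalent to a1 < r*sqrt(a2) - a2 with a1 >= 0.
        bound = r * math.sqrt(a2) - a2
        if bound > 0:
            # Non-negative integers strictly below a positive bound.
            count += math.ceil(bound)
        a2 += 1
    return count


ratio = count_lattice_points(20) / 20 ** 4  # expected to be close to 1/6
```

The count differs from the area only by lower-order boundary terms, so the ratio approaches 1/6 as r grows, which is the asymptotic behaviour the proof exploits when passing from lattice points to Lebesgue measure.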

Proof of Theorem 3.3

Under the assumption \(n = 2\), (3.2) gives us \(\Vert H_{{\bar{z}}_1}1\Vert ^2 = 1/2\). Thus \(\Vert H_{{\bar{z}}_1}\Vert _4^+ \ge \Vert H_{{\bar{z}}_1}\Vert \ge 2^{-1/2}\). On the other hand, Lemma 3.5 tells us that \(\Vert H_{{\bar{z}}_1}\Vert _{4,\text {ess}}^+ = 6^{-1/4}\). Since \(2^{-1/2} > 6^{-1/4}\), it follows that \(\Vert H_{{\bar{z}}_1}\Vert _4^+ > \Vert H_{{\bar{z}}_1}\Vert _{4,\text {ess}}^+\). \(\square \)
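The final numerical comparison in this proof, \(2^{-1/2} > 6^{-1/4}\), can be verified exactly by comparing fourth powers, since both constants are positive. The following short check uses exact rational arithmetic; the variable names are illustrative only.

```python
from fractions import Fraction

# Fourth powers of the two constants in the proof of Theorem 3.3.
lower_bound_4th = Fraction(1, 2) ** 2  # (2^(-1/2))^4 = 1/4
essential_4th = Fraction(1, 6)         # (6^(-1/4))^4 = 1/6

# Both constants are positive, so comparing fourth powers preserves order.
strict_gap = lower_bound_4th > essential_4th

print(lower_bound_4th, essential_4th, strict_gap)  # prints: 1/4 1/6 True
```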

We choose to present Lemma 3.5 separately because its proof is more elementary than the general case. But the calculation in Lemma 3.5 can be generalized to all complex dimensions \(n \ge 2\), which may be of independent interest:

Proposition 3.6

In each complex dimension \(n \ge 2\), we have

\[\Vert H_{{\bar{z}}_1}\Vert _{2n,\text {ess}}^+ = \{n(2n-1)(n-2)!\}^{-1/(2n)}.\]

Proof

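We begin with a general volume calculation. For \(j \ge 1\), let \(v_j\) denote the (real) j-dimensional volume measure. Let \(k \ge 2\) and define

\[\Delta _k(t) = \{(x_1,\dots ,x_k) \in \mathbf{R}^k : x_1 + \cdots + x_k = t \ \text {and} \ x_j \ge 0 \ \text {for} \ j = 1,\dots ,k\}\]

for \(t \ge 0\). Elementary calculation shows that \(v_{k-1}(\Delta _k(1)) = \{(k-1)!\}^{-1}k^{1/2}\). Hence

\[v_{k-1}(\Delta _k(t)) = \{(k-1)!\}^{-1}k^{1/2}t^{k-1} \tag{3.5}\]

for all \(t > 0\).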

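Consider the “first quadrant”

\[Q_n = \{(x_1,\dots ,x_n) \in \mathbf{R}^n : x_j \ge 0 \ \text {for} \ j = 1,\dots ,n\}\]

in \(\mathbf{R}^n\). We write the elements in \(Q_n\) in the form \((x,y)\), where \(x \ge 0\) and \(y = (y_1,\dots ,y_{n-1})\) with \(y_j \ge 0\) for \(1 \le j \le n-1\). For such a y, we denote

\[|y| = y_1 + \cdots + y_{n-1}\]

in this proof. Adapting the proof of Lemma 3.5 to general \(n \ge 2\), we now define

\[E_a = \{(x,y) \in Q_n : a|y| \ge (x + |y|)^2\}\]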

for \(a > 0\). We claim that

\[v_n(E_a) = \frac{a^n}{n(2n-1)(n-2)!}. \tag{3.6}\]

To prove this, note that the condition \(a|y| \ge (x + |y|)^2\) implies \(a \ge x + |y|\) and, consequently, \(a \ge x\) and \(a \ge |y|\). For each \(0 \le t \le a\), define

Obviously,

For any \(\lambda , \mu \in [t^2/a,t]\), the distance between the slices

is easily seen to be

Combining this fact with (3.5), when \(n \ge 3\) we have

When \(n = 2\), we can omit the first two steps above and the last \(=\) trivially holds. Let u be the unit vector \((n^{-1/2},\dots ,n^{-1/2})\) in \(\mathbf{R}^n\). For \(s, t \in [0,\infty )\), if \(su \in \Delta _n(t)\), then \(n^{1/2}s = t\). Since \(x + |y| \le a\) for \((x,y) \in E_a\), integrating along the “u-axis” in \(\mathbf{R}^n\), we have

Then an obvious simplification of the right-hand side proves (3.6).
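The volume in question can also be probed numerically. The following is a rough Monte Carlo sketch, not the authors' method, for the set of points \((x,y)\) with \(x \ge 0\), \(y_j \ge 0\) and \(a|y| \ge (x + |y|)^2\) in the case \(a = 1\). It assumes that \(|y|\) stands for \(y_1 + \cdots + y_{n-1}\), which is consistent with the identity \(|\alpha | - \alpha _1 = |\alpha _2|\) used later in the proof. The function name `mc_volume_E1`, the sample size and the seed are all our own choices. Since the defining condition is homogeneous, the volume for general \(a\) is \(a^n\) times the value for \(a = 1\).

```python
import random


def mc_volume_E1(n: int, samples: int = 200_000, seed: int = 0) -> float:
    """Monte Carlo estimate of the n-dimensional volume of
    E_1 = {(x, y) : x >= 0, y_j >= 0, |y| >= (x + |y|)^2}.

    Assumption: |y| denotes y_1 + ... + y_{n-1}. Every point of E_1 has
    x <= 1 and |y| <= 1, so E_1 sits inside the unit cube [0, 1]^n and its
    volume equals the fraction of uniform samples that land in E_1.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(samples):
        x = rng.random()
        s = sum(rng.random() for _ in range(n - 1))  # s plays the role of |y|
        if s >= (x + s) ** 2:
            hits += 1
    return hits / samples


if __name__ == "__main__":
    for n in (2, 3):
        print(n, mc_volume_E1(n))
```

For \(n = 2\) and \(n = 3\) the estimates should land near \(1/6\) and \(1/15\) respectively, numbers whose \(2n\)-th roots are the constants \(6^{-1/4}\) and \(15^{-1/6}\) appearing in this section.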

Let us again write each \(\alpha \in \mathbf{Z}_+^n\) in the form \(\alpha = (\alpha _1,\alpha _2)\), but keep in mind that this time we have \(\alpha _2 \in \mathbf{Z}_+^{n-1}\). Accordingly, \(|\alpha | - \alpha _1 = |\alpha _2|\). Thus from (3.2) we obtain

Again, \((H_{{\bar{z}}_1}^*H_{{\bar{z}}_1})^{1/2} = Y + Z\), where \(Z \in {{\mathcal {C}}}_{2n}^{+(0)}\) and

Hence \(\Vert H_{{\bar{z}}_1}\Vert _{2n,\text {ess}}^+ = \Vert (H_{{\bar{z}}_1}^*H_{{\bar{z}}_1})^{1/2}\Vert _{2n,\text {ess}}^+ = \Vert Y\Vert _{2n,\text {ess}}^+\), and we need to compute \(\Vert Y\Vert _{2n,\text {ess}}^+\).

For each large \(r > 1\) we define the set

To each \(\alpha \in \mathbf{Z}_+^n\) we associate the cube \(\alpha + I^n\), where \(I^n = \{(x_1,\dots ,x_n) \in \mathbf{R}^n : 0 \le x_j \le 1 \ \text {for} \ j = 1,\dots ,n\}\). Obviously, there is a constant \(0< C < \infty \) such that for any \(\alpha \in \mathbf{Z}_+^n\backslash \{0\}\) and any \((x,y) \in \alpha + I^n\), we have

Write \(N(r) = \text {card}(A_r)\). From (3.7) it is easy to deduce that \(N(r) = v_n(E_{r^2}) + o(r^{2n})\). Combining this fact with (3.6), we obtain

where we denote

For convenience let us write \(dy = dy_1\cdots dy_{n-1}\) on \(\mathbf{R}^{n-1}\). We have

where the third \(=\) follows from (3.6) and (3.9). Thus

On the other hand, from (3.8) we obtain

Combining these two identities, we find that

Recalling (3.9), the proof of the proposition will be complete if we can show that

As in the proof of Lemma 3.5, we first note that by Proposition 2.6, the left-hand side of (3.10) is greater than or equal to the right-hand side. Thus we only need to prove the reverse inequality. But for the reverse inequality, note that (3.8) gives us

\(\nu \in \mathbf{N}\). Hence

Once we have this, by the argument at the end of the proof of Lemma 3.5, the right-hand side of (3.10) is greater than or equal to the left-hand side. This completes the proof. \(\square \)

The point that we try to make with Proposition 3.6 is that it is not easy to come up with functions f on \(S \subset \mathbf{C}^n\) such that \(\Vert H_f\Vert _{2n}^+ > \Vert H_f\Vert _{2n,\text {ess}}^+\). Theorem 3.3 says that the function \({\bar{z}}_1\) on \(S \subset \mathbf{C}^2\) has this property. So what about the function \({\bar{z}}_1\) on \(S \subset \mathbf{C}^3\)? In the case \(n = 3\), Proposition 3.6 gives us

\[\Vert H_{{\bar{z}}_1}\Vert _{6,\text {ess}}^+ = 15^{-1/6}.\]

On the other hand, the obvious lower bound that we obtain from (3.2) in the case \(n = 3\) is \(\Vert H_{{\bar{z}}_1}\Vert _6^+ \ge 3^{-1/2}\). Since \(3^{-1/2} < 15^{-1/6}\), this is of no use to us. The difficulty here is to obtain an estimate of \(\Vert H_{{\bar{z}}_1}\Vert _{2n}^+\) that is close to its true value. In view of this, it is somewhat surprising that we can actually calculate the essential norm \(\Vert H_{{\bar{z}}_1}\Vert _{2n,\text {ess}}^+\).
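The two numerical comparisons used in this section, \(2^{-1/2} > 6^{-1/4}\) for \(n = 2\) and \(3^{-1/2} < 15^{-1/6}\) for \(n = 3\), can be confirmed in exact rational arithmetic by raising both sides to the power \(2n\). The short sketch below does only that. The table `CASES` and the function name are ours, and the values in the table are simply the constants quoted in the text.

```python
from fractions import Fraction

# n -> (square of the lower bound, 2n-th power of the essential norm),
# taken from the constants quoted in the text:
#   n = 2: lower bound 2^(-1/2), essential norm 6^(-1/4)
#   n = 3: lower bound 3^(-1/2), essential norm 15^(-1/6)
CASES = {
    2: (Fraction(1, 2), Fraction(1, 6)),
    3: (Fraction(1, 3), Fraction(1, 15)),
}


def lower_bound_beats_essential_norm(n: int) -> bool:
    """Compare the two positive numbers after raising both to the power 2n."""
    lower_sq, ess_pow = CASES[n]
    # (lower bound)^(2n) = (lower bound^2)^n, an exact rational number.
    return lower_sq**n > ess_pow


if __name__ == "__main__":
    for n in sorted(CASES):
        print(n, lower_bound_beats_essential_norm(n))
```

In other words, the comparison reduces to \(1/4 > 1/6\) when \(n = 2\) and to \(1/27 < 1/15\) when \(n = 3\), which is why the crude lower bound settles the first case but not the second.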

In the case \(n = 2\), we do not know how close the lower bound \(\Vert H_{{\bar{z}}_1}\Vert _4^+ \ge 2^{-1/2}\) is to the true value of \(\Vert H_{{\bar{z}}_1}\Vert _4^+\). So it is really a matter of luck that the apparently crude lower bound \(\Vert H_{{\bar{z}}_1}\Vert _4^+ \ge 2^{-1/2}\) in the case \(n = 2\) is good enough to give us Example 3.4, which is the main purpose of the section. As of this writing, Example 3.4 is the only example of its kind that we are able to produce.

Data availability

Data sharing is not applicable to this article as no data sets were generated or analyzed during the current study.

References

Axler, S., Berg, I., Jewell, N., Shields, A.: Approximation by compact operators and the space \(H^\infty + C\). Ann. Math. (2) 109, 601–612 (1979)

Berg, I.: An extension of the Weyl–von Neumann theorem to normal operators. Trans. Am. Math. Soc. 160, 365–371 (1971)

Connes, A.: The action functional in noncommutative geometry. Commun. Math. Phys. 117, 673–683 (1988)

Connes, A.: Noncommutative Geometry. Academic Press, San Diego (1994)

Conway, J.: A Course in Functional Analysis. Graduate Texts in Mathematics, vol. 96, 2nd edn. Springer, New York (1990)

Dixmier, J.: Existence de traces non normales. C. R. Acad. Sci. Paris Sér. A-B 262, A1107–A1108 (1966)

Engliš, M., Guo, K., Zhang, G.: Toeplitz and Hankel operators and Dixmier traces on the unit ball of \(\mathbf{C}^n\). Proc. Am. Math. Soc. 137, 3669–3678 (2009)

Engliš, M., Zhang, G.: Hankel operators and the Dixmier trace on the Hardy space. J. Lond. Math. Soc. (2) 94, 337–356 (2016)

Fang, Q., Xia, J.: Schatten class membership of Hankel operators on the unit sphere. J. Funct. Anal. 257, 3082–3134 (2009)

Fang, Q., Xia, J.: Hankel operators on weighted Bergman spaces and norm ideals. Complex Anal. Oper. Theory 12, 629–668 (2018)

Gohberg, I., Krein, M.: Introduction to the Theory of Linear Nonselfadjoint Operators, American Mathematical Society Translations of Mathematical Monographs, vol. 18. Providence (1969)

Jiang, L., Wang, Y., Xia, J.: Toeplitz operators associated with measures and the Dixmier trace on the Hardy space. Complex Anal. Oper. Theory 14(2), Paper No. 30 (2020)

Kato, T.: Perturbation Theory for Linear Operators. Springer, New York (1976)

Kuroda, Sh.: On a theorem of Weyl–von Neumann. Proc. Jpn. Acad. 34, 11–15 (1958)

Rudin, W.: Function Theory in the Unit Ball of \(\mathbf{C}^n\). Springer, New York (1980)

Schatten, R.: Norm Ideals of Completely Continuous Operators. Springer, Berlin (1970)

Upmeier, H., Wang, K.: Dixmier trace for Toeplitz operators on symmetric domains. J. Funct. Anal. 271, 532–565 (2016)

Voiculescu, D.: Some results on norm-ideal perturbations of Hilbert space operators. J. Oper. Theory 2, 3–37 (1979)

Voiculescu, D.: On the existence of quasicentral approximate units relative to normed ideals, I. J. Funct. Anal. 91, 1–36 (1990)

Xia, J.: Diagonalization modulo norm ideals with Lipschitz estimates. J. Funct. Anal. 145, 491–526 (1997)

Xia, J.: A condition for diagonalization modulo arbitrary norm ideals. J. Funct. Anal. 255, 1039–1056 (2008)

Xia, J.: Bergman commutators and norm ideals. J. Funct. Anal. 263, 988–1039 (2012)

Additional information

Communicated by Mihai Putinar.

Dedicated to the memory of Jörg Eschmeier.

This article is part of the topical collection “Multivariable Operator Theory. The Jörg Eschmeier Memorial” edited by Raul Curto, Michael Hartz and Mihai Putinar.

Cite this article

Fang, Q., Xia, J. Best Approximations in a Class of Lorentz Ideals. Complex Anal. Oper. Theory 16, 51 (2022). https://doi.org/10.1007/s11785-022-01220-z
