Abstract

In telemedicine-based healthcare systems, such as cardiac health monitoring systems, large amounts of data need to be stored and transferred. This requires substantial bandwidth and reduces channel efficiency. The main objective of this work is to develop an efficient compression technique that addresses these problems in healthcare systems. A combination of empirical mode decomposition (EMD) and the tunable-Q wavelet transform (TQWT) is proposed for ECG signal compression with a suitable decomposition level, so that most of the signal energy is packed into a small number of coefficients that contribute significantly to the original signal. Dynamic thresholding and dead-zone quantization are applied to discard wavelet coefficients with small values near zero. Subsequently, run-length encoding (RLE), a lossless compression scheme, is employed to encode the wavelet coefficients. The presented technique was evaluated on the Massachusetts Institute of Technology-Beth Israel Hospital (MITDB) arrhythmia database, which contains regular and irregular heart rhythms. On 48 ECG records of 30-min duration, a compression ratio (CR) of 33.11, percentage root-mean-square difference (PRD) of 4.35, normalized PRD (NPRD) of 8.21, quality score (QS) of 7.59, and signal-to-noise ratio (SNR) of 51.09 dB were achieved. For validation, the method was also applied to normal and abnormal heartbeat classification, using the random forest algorithm (RFA) to classify cardiac rhythm. The results show minimal distortion and improved signal reconstruction after compression, and better performance than state-of-the-art techniques.


1 Introduction

The ECG is the most common method of recording the electrical activity of the heart. In recent years, cardiovascular disease has become the leading cause of death worldwide [1], and biomedical signals are widely used in medicine to examine cardiac disorders [2]. The main components of an ECG signal are the P-wave, QRS complex, T-wave, U-wave, segments, and intervals. Telehealth systems must be capable of transmitting ECG signals rapidly and storing them efficiently. When a patient with cardiac problems is admitted, a long-term ECG recording is normally made [3]; sometimes 24–48 h of ECG are recorded continuously. Hence, a vast amount of data is collected during continuous ECG monitoring [4], which places a significant load on data transfer and storage [5]. Signal compression has therefore gained popularity as a way to reduce the amount of data. Minimum loss and a high compression ratio are the two main requirements of an effective compression method. Many compression techniques have been developed for efficient storage and transmission of ECG signals. Typically, these techniques are categorized as lossless or lossy. Lossless compression loses no information but achieves a low compression ratio. Lossy compression achieves a high compression ratio with a small loss of data and is often used. Lossy compression is further divided into three categories: direct, parameter extraction, and transform-based techniques [6].

Direct methods remove the redundancy of the ECG signal in the time domain [7]. Turning point [8], amplitude zone time epoch coding (AZTEC) [9], and the coordinate reduction time encoding system (CORTES) [10] are direct compression techniques. Muller et al. [8] proposed a turning-point method in which the sampling points are analysed and the raw ECG sample points are replaced by slopes, achieving an improved compression ratio. In the parameter extraction method, specific features of the signal are extracted, and these features are encoded and compressed [10]. Artificial neural networks (ANN), vector quantization, and peak picking [11, 12] are some of the techniques in this category. Cohen et al. [12] proposed a vector quantization method that uses structural features to compress the ECG signal. Akazawa et al. [13] compressed the ECG signal using Huffman coding and a multi-template method. Deepu et al. [14] developed a technique that uses an adaptive linear prediction scheme for QRS detection and compression of ECG signals.

Transform-based methods offer high-quality compression. In these methods, the signal is converted from the time domain into the frequency domain. Transform-based methods compact the signal energy, and the signal is decomposed into coefficients at various resolution levels. Sub-band coding [15], the discrete cosine transform [16], the discrete Fourier transform (DFT) [17], the discrete wavelet transform [18, 19], and singular value decomposition [11] have been widely used for ECG compression. Peng et al. [20] used the lifting wavelet transform. Cetin et al. [21] proposed a wavelet transform extrema scheme for the compression of ECG signals.

In [22] and [23], ECG compression based on the empirical mode decomposition (EMD) method was proposed. Spline fitting was used to reconstruct the ECG signal, which degrades the quality of the signal and yields a higher error rate. Another limitation is the mode mixing problem, which results in more distortion of the signal. In the past few decades, the wavelet transform has gained interest among researchers. The signal is decomposed into multi-level sub-bands with high energy compaction, and thus higher compression is achieved. The wavelet transform has many advantages but still has some limitations: the quality factor cannot be adjusted to improve efficiency, and the selection of the mother wavelet is a challenging task. The author of [24] proposed the tunable-Q wavelet transform (TQWT), which offers the flexibility of tuning the input parameters. The Q-factor in the TQWT is chosen according to the presumed oscillatory behaviour of the signal: a lower Q-factor is used for signals with little or no oscillatory behaviour, and a higher Q-factor for oscillatory signals. In the TQWT, two-channel analysis filter banks (AFB) with scaling factors are employed, and the signal can be reconstructed from their outputs. In [25], the tunable-Q wavelet transform is used for the detection of sleep apnoea in ECG signals. In [26], the TQWT is optimized using a genetic algorithm. The authors of [19] proposed an ECG compression method based on the tunable-Q wavelet transform that determines the R-peak components of the ECG signal and has high computational complexity. The vast majority of compression methods fall short of providing perfect reconstruction of a compressed signal. To overcome these limitations, a novel technique is proposed that gives improved results over existing techniques.

The main goal of this study is to attain a higher compression ratio while minimizing the percentage root-mean-square difference, without compromising the fidelity of the reconstructed ECG signal. The method also supports convenient and secure transmission of biomedical signals for various healthcare applications by preserving the clinical characteristics of the signal. Thus, the algorithm allows for more efficient storage and transmission of ECG data while maintaining the accuracy and reliability of the information conveyed. The main contributions of the presented work are as follows:

- A novel method for the compression of ECG signals based on EMD and the tunable-Q wavelet transform (TQWT) is proposed.

- The ECG signal is decomposed into intrinsic mode functions (IMFs), and the TQWT is then applied so that the signal is represented by fewer transform coefficients with higher energy. The signal is divided into upper and lower sub-band coefficients up to six levels.

- Adaptive thresholding is applied at an appropriate signal level so that the information relevant to the cardiac rhythm is retained.

- Dead-zone quantization and run-length encoding are then employed to encode the wavelet coefficients.

- The inverse TQWT is applied to reconstruct the original ECG signal.

- The method is evaluated on 30-min and 2-min recordings from the MITDB arrhythmia database and shows better results than state-of-the-art techniques.

The rest of the paper is organized as follows: Sect. 2 describes the tunable-Q wavelet transform method; Sect. 3 presents the materials and methods; Sect. 4 details the methodology; Sect. 5 reports the results and discussion; and Sect. 6 concludes the paper.

2 Tunable-Q wavelet transform method (TQWT)

The TQWT has recently seen considerable advancement in the detection of cardiac arrhythmias and the analysis of heart-related problems [27,28,29]. In this study, the tunable-Q wavelet transform is combined with empirical mode decomposition for the compression of ECG signals. Because the ECG signal is non-stationary, the TQWT decomposes the time-series signal into various frequency coefficients using wavelets with different Q-factors. In the TQWT, two scaling factors α and β are used for low-pass and high-pass filtering, respectively. Figure 1 depicts the TQWT single-stage filter bank.

The lower-pass sub-band signal \({L}_{{\text{l}}}(n)\) and higher-pass sub-band signal \({G}_{{\text{h}}}(n)\) are obtained using a lower-pass filter \({M}_{{\text{l}}}({\omega }_{{\text{f}}})\) and a higher-pass filter \({C}_{{\text{h}}}({\omega }_{{\text{f}}})\) with scaling factors α and β. The reconstructed signal Z(n) is obtained in the TQWT using the relation [24]:

where \(X\left({\omega }_{{\text{f}}}\right)\) is the Fourier transform of the input signal x(n). The intervals \(\theta \), \(\vartheta \), and \(\mu \) are defined as follows:

Reconstruction of the signal Z(n) requires the condition \(Z\left({\omega }_{{\text{f}}}\right)=X({\omega }_{{\text{f}}})\). Therefore, the lower-pass filter \({M}_{{\text{l}}}\left({\omega }_{{\text{f}}}\right)\) and higher-pass filter \({C}_{{\text{h}}}\left({\omega }_{{\text{f}}}\right)\) should satisfy the following constraint [26]:

The pass band of \({M}_{{\text{l}}}\left({\omega }_{{\text{f}}}\right)\) and the stop band of \({C}_{{\text{h}}}\left({\omega }_{{\text{f}}}\right)\) are constituted by the interval \(\theta \), while the stop band of \({M}_{{\text{l}}}\left({\omega }_{{\text{f}}}\right)\) and the pass band of \({C}_{{\text{h}}}\left({\omega }_{{\text{f}}}\right)\) are constituted by the interval \(\mu \) [26].

where \(\varnothing = \frac{1}{2}(1+{\text{cos}}{\omega }_{{\text{f}}})\sqrt{2-{\text{cos}}{\omega }_{{\text{f}}}}\). In Eqs. (6) and (7), \(\varnothing \) represents the frequency response of the Daubechies filter with two vanishing moments. For an input signal of length N, the maximum number of decomposition levels is given by [24]:

The relation between the TQWT input parameters and scaling factor can be determined as follows:

Further, the scaling factors must satisfy 0 < α < 1, 0 < β \(\le \) 1, and α + β > 1 so that the transform is over-sampled and achieves perfect reconstruction.
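The parameter relations discussed in this section can be illustrated with a short Python sketch. It assumes the standard TQWT definitions, in which β = 2/(Q + 1) and α = 1 − β/r, and the commonly quoted bound on the number of levels, ⌊log(βN/8)/log(1/α)⌋; the function names `tqwt_scaling` and `max_levels` are hypothetical and are not taken from the paper.

```python
import math

def tqwt_scaling(q, r):
    """Return (alpha, beta) for Q-factor q and redundancy r.

    Assumes the standard TQWT relations beta = 2/(Q+1), alpha = 1 - beta/r.
    """
    if q < 1 or r <= 1:
        raise ValueError("expected Q >= 1 and r > 1")
    beta = 2.0 / (q + 1.0)   # high-pass scaling factor
    alpha = 1.0 - beta / r   # low-pass scaling factor
    return alpha, beta

def max_levels(n, alpha, beta):
    """Upper bound on the decomposition depth for a length-n signal (assumed form)."""
    return math.floor(math.log(beta * n / 8.0) / math.log(1.0 / alpha))

# Parameters reported later in the paper: Q = 5.6, r = 2.
alpha, beta = tqwt_scaling(5.6, 2.0)
# The constraints 0 < alpha < 1, 0 < beta <= 1 and alpha + beta > 1 all hold.
print(alpha, beta, alpha + beta > 1)
```

With these relations, any choice of Q ≥ 1 and r > 1 satisfies the three constraints automatically, which is why the transform is usually parameterized by Q and r rather than by α and β directly.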

3 Material and methods

In the proposed method, the MITDB arrhythmia database is used to validate the results. The database contains forty-eight half-hour ECG recordings from subjects aged 22 to 89, including 22 females aged 22–89 and 25 males aged 32–89 [30]. The first 23 records contain various cardiac disorders, and the other 25 records contain different heart abnormalities. The ECG signals in the database are digitized at 360 samples per second with 11-bit resolution over a 10 mV range.

4 Methodology

In this work, high-frequency noise is first removed in a pre-processing stage. The ECG signal contains various kinds of noise that corrupt the original signal. To remove this noise, a median filter and a Savitzky–Golay (SG) filter are applied.

Then, the EMD method is applied to obtain the first intrinsic mode function through the sifting process. After that, the ECG signal is divided into sub-band coefficients up to six levels using the TQWT, so that most of the signal energy is contained in a few transform coefficients. Dead-zone quantization (DZQ) and thresholding are then employed to quantize the wavelet coefficients. Finally, run-length encoding is applied to encode the coefficients, and the compressed ECG signal is obtained. Figure 2 shows the steps of the presented compression technique.

4.1 Pre-processing of ECG signal

The ECG signal is taken from the MITDB arrhythmia database, and Gaussian noise is added to remove the redundancy from the signal. A median filter is used to remove baseline drift from the signal. A two-stage median filter with 200-ms and 600-ms window sizes is employed to free the signal from baseline drift. The median filter improves the cross-correlation with minimal loss of the information present in the signal. First, the original ECG signal \(x^{\prime}\left[n\right]\) is filtered with a window size of fs/2, and the output of the first stage is \({s}_{{\text{med}}1}\left[n\right].\) Next, \({s}_{{\text{med}}1}\left[n\right]\) is taken as the input to the second stage, whose output is \({s}_{{\text{med}}2}\left[n\right]\). Finally, the second-stage output \({s}_{{\text{med}}2}\left[n\right]\) is subtracted from the original ECG signal \(x^{\prime}\left[n\right]\) to obtain the baseline-free signal O[n].

Then, a Savitzky–Golay filter is applied to smooth the signal and enhance its quality. Figure 3 shows the smoothed, baseline-free ECG signal.
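The two-stage median filtering described above can be sketched in plain Python as follows. This is only an illustration under stated assumptions: the 200-ms and 600-ms windows are used for the two stages, windows are forced to an odd number of samples, and the window is truncated at the signal edges; the helper names are hypothetical, and the Savitzky–Golay smoothing step is not included.

```python
from statistics import median

def odd_window(seconds, fs):
    """Window length in samples for a duration in seconds, forced to be odd."""
    n = int(round(seconds * fs))
    return n if n % 2 == 1 else n + 1

def median_filter(x, width):
    """Sliding median; the window is truncated at the edges (an assumption)."""
    half = width // 2
    return [median(x[max(0, i - half): i + half + 1]) for i in range(len(x))]

def remove_baseline(x, fs=360, first=0.2, second=0.6):
    """Estimate the baseline with two cascaded median filters and subtract it."""
    stage1 = median_filter(x, odd_window(first, fs))        # s_med1[n]
    stage2 = median_filter(stage1, odd_window(second, fs))  # s_med2[n]
    return [xi - bi for xi, bi in zip(x, stage2)]           # O[n]

# A constant offset is pure baseline, so it is removed entirely.
flat = remove_baseline([5.0] * 40, fs=10)
print(max(abs(v) for v in flat))
```

This direct implementation recomputes each window from scratch and is therefore slow on 30-min records; it is meant to show the data flow, not to be used as is.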

4.2 Proposed EMD + TQWT algorithm

4.2.1 Empirical mode decomposition implementation

Empirical mode decomposition (EMD) is applied after filtering. EMD is adaptive and intuitive, with basis functions derived entirely from the data. It identifies the intrinsic oscillatory modes of the signal at its characteristic time scales, and the intrinsic mode functions (IMFs) are obtained by decomposing the signal [23]. For a function to be considered an IMF, it must satisfy two requirements: first, the number of local extrema and the number of zero crossings over the entire dataset must be equal or differ by at most one; second, the envelopes defined by the local maxima and local minima must have a mean value of zero at every point. The sifting process is applied iteratively to obtain the first IMF of the ECG signal. In this study, the mean \(a\left[n\right]\) of the upper and lower envelopes is computed in the first sifting step and then subtracted from the filtered signal. The first IMF (\({H}_{1}\)) is given as follows:

where \(O\left[n\right]\) is the filtered signal, and \(a\left[n\right]\) is the mean of the envelope.

The relation between the first IMF (\({H}_{1})\) and the sifting function is expressed as follows [19]:

Here \({c}_{1,l}(n)\) is the sifting function, constructed by cubic spline fitting of the maxima [19]. To obtain the first intrinsic mode function, the normalized standard deviation (\({S}_{{\text{d}}}\)) is calculated from successive sifting functions [30].

The sifting process is terminated when \({S}_{{\text{d}}}\) falls within the range of 0.2 to 0.3 [19], a range selected by trial and error. EMD suffers from a mode mixing problem that degrades the quality of an ECG signal. Thus, in the proposed technique, the ECG signal is decomposed into its first IMF, and the sifting functions with higher amplitude values are retained for compression while the others are discarded. Figure 4 depicts the flow diagram of the proposed method. The output of the empirical mode decomposition stage is denoted \(x\left[n\right].\)
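A rough Python sketch of the sifting loop is given here to make the stopping rule concrete. Two simplifications are assumed and should be kept in mind: the envelopes are built by piecewise-linear interpolation instead of the cubic spline fitting used in the paper, and \({S}_{{\text{d}}}\) is computed as the squared difference between successive sifting results normalized by the energy of the earlier one. All function names are hypothetical.

```python
def local_extrema(x):
    """Indices of interior local maxima and minima."""
    maxima, minima = [], []
    for i in range(1, len(x) - 1):
        if x[i - 1] < x[i] >= x[i + 1]:
            maxima.append(i)
        elif x[i - 1] > x[i] <= x[i + 1]:
            minima.append(i)
    return maxima, minima

def envelope(x, knots):
    """Piecewise-linear envelope through x at the knots, pinned to both end
    samples (stand-in for cubic splines; needs at least two samples)."""
    pts = [0] + knots + [len(x) - 1]
    env = [0.0] * len(x)
    for a, b in zip(pts, pts[1:]):
        for n in range(a, b + 1):
            env[n] = x[a] + (x[b] - x[a]) * (n - a) / (b - a)
    return env

def sift_once(x):
    """Subtract the mean a[n] of the upper and lower envelopes from x."""
    maxima, minima = local_extrema(x)
    upper, lower = envelope(x, maxima), envelope(x, minima)
    return [xi - 0.5 * (u + l) for xi, u, l in zip(x, upper, lower)]

def normalized_sd(prev, curr):
    """Assumed form of S_d: energy of the change relative to the previous result."""
    den = sum(p * p for p in prev)
    return sum((p - c) ** 2 for p, c in zip(prev, curr)) / den if den else 0.0

def first_imf(x, sd_stop=0.25, max_iter=50):
    """Sift until S_d drops below sd_stop (0.25 lies in the 0.2 to 0.3 range)."""
    h = list(x)
    for _ in range(max_iter):
        nxt = sift_once(h)
        if normalized_sd(h, nxt) < sd_stop:
            return nxt
        h = nxt
    return h
```

A signal with no interior extrema, such as a straight line, is removed completely by a single sifting step in this sketch, because both envelopes coincide with the signal itself.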

4.2.2 Execution of TQWT method

After the empirical mode decomposition, the TQWT is applied to the signal \(x\left[n\right]\) of length N in the following steps:

I. From the given parameters \(Q\) and \(r\), the scaling factors α and β are calculated, subject to the conditions \(Q\ge 1, r>1.\)

II. The parameters used for compression of an ECG signal are \(Q\), \(r\), and \(J\). The performance of the presented method varies with these parameters. The values \(Q\) = 5.6, \(r\) = 2, and \(J\) = 6 were selected by trial and error on the entire ECG dataset for durations of up to 2 min, and the signals are reconstructed with few errors.

III. The discrete Fourier transform (DFT) and normalized DFT of the signal are computed as follows:

$${X}^{\prime}\left(k\right)= \sum_{n=0}^{N-1}x\left(n\right){\text{exp}}\left(-j\frac{2\pi }{N}nk\right), \quad 0\le k\le N-1 \tag{14}$$

$${X}_{u}^{\prime}\left(k\right)= \frac{1}{\sqrt{N}}\sum_{n=0}^{N-1}x\left(n\right){\text{exp}}\left(-j\frac{2\pi }{N}nk\right), \quad 0\le k\le N-1 \tag{15}$$

Here \({X}^{\prime}\left(k\right)\) and \({X}_{u}^{\prime}\left(k\right)\) denote the DFT and the unitary DFT, respectively. The unitary DFT \({X}_{u}^{\prime}\left(k\right)\) is then decomposed by the analysis filter bank (AFB) up to \(J\) levels of decomposition. Therefore, each filtered output signal must be sampled.

IV. At the \({j}\text{th}\) level of decomposition, three lengths are considered:

$${N}_{\text{l}}^{j}=2 \times \text{round}\left(0.5\times N {\alpha }^{j}\right), \quad 1\le j\le J$$

$${N}_{\text{h}}^{j}=2 \times \text{round}\left(0.5\times N\beta {\alpha }^{j-1}\right), \quad 1\le j\le J$$

$${N}^{1}=N, \quad {N}^{j}= {N}_{\text{l}}^{j-1}, \quad 2\le j\le J$$

where \({N}_{\text{l}}^{j}\), \({N}_{\text{h}}^{j}\), and \({N}^{j}\) denote the lengths of the lower-frequency sub-band, the higher-frequency sub-band, and the input signal at level \(j\), respectively.

V. The low-pass sub-band is used as the input to the next level of decomposition. Over the \(J\) levels of decomposition, the energy distribution within each sub-band is controlled by \(r\), so that the retained sub-bands carry high energy.

VI. After that, the inverse TQWT is applied for the reconstruction of the signal, with the lower-pass and higher-pass sub-band outputs \( p_{\text{l}}^{\prime } \left( j \right) \) and \( d_{\text{h}}^{\prime } \left( j \right) \) serving as the transform coefficients.

VII. Subsequently, thresholding and DZQ are applied, and the transform coefficients are encoded with the RLE scheme.

VIII. The compression ratio and PRD are calculated, with the aim of maximizing the CR and minimizing the PRD. The algorithm in Sect. 4.3 shows the implementation of the EMD + TQWT method for the compression of an ECG signal.
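To make the sub-band length formulas of step IV concrete, the following Python sketch tabulates \({N}_{\text{l}}^{j}\) and \({N}_{\text{h}}^{j}\) for each level. It assumes the standard TQWT relations β = 2/(Q + 1) and α = 1 − β/r for the scaling factors; the function name `subband_lengths` and the example length of 1024 samples are illustrative choices only.

```python
def subband_lengths(n, q, r, levels):
    """Return [(j, N_l^j, N_h^j)] for j = 1..levels.

    Assumes beta = 2/(Q+1) and alpha = 1 - beta/r (standard TQWT relations).
    Python's round() rounds exact .5 ties to the nearest even integer.
    """
    beta = 2.0 / (q + 1.0)
    alpha = 1.0 - beta / r
    lengths = []
    for j in range(1, levels + 1):
        n_low = 2 * round(0.5 * n * alpha ** j)                # low-pass length
        n_high = 2 * round(0.5 * n * beta * alpha ** (j - 1))  # high-pass length
        lengths.append((j, n_low, n_high))
    return lengths

# Q = 5.6, r = 2, J = 6 as reported in step II; N = 1024 is an arbitrary example.
for j, n_low, n_high in subband_lengths(1024, 5.6, 2.0, 6):
    print(j, n_low, n_high)
```

Because every length is twice a rounded value, all sub-band lengths are even, and the low-pass length shrinks by roughly a factor of α at each level.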

4.3 Algorithm for proposed method

1. Function proposed EMD_TQWT algorithm:

2. Define the input: \(x\left(n\right), Q, r,J\)

3. Output: \({p}_{l}^{\prime}(j)\) and \({d}_{h}^{\prime}(j)\) for \(Q\ge 1\), \(r>1\), and \(J\in \mathbb{N}\)

4. Scaling factors α and β: \(\beta = \frac{2}{Q+1}, \alpha = 1-\frac{\beta }{r}\)

5. \({F}_{s}=\) Sampling frequency

6. \(N=\) Length of input signal x(n)

7. \({X}^{\prime}\left(k\right)=\) DFT of input signal

8. \({X}_{u}^{\prime}\left(k\right)=\) unitary DFT of \(x(n)\)

9. for \(j=1\) to \(J\) do

10. \({N}_{l}^{j}=2 \times \text{round}\left(0.5\times N {\alpha }^{j}\right)\)

11. \({N}_{h}^{j}=2 \times \text{round}\left(0.5\times N\beta {\alpha }^{j-1}\right)\)

12. \(\left[X\left(n\right), W\left(n\right)\right]=\text{AFB}({X}_{u}^{\prime}\left(k\right), {N}_{l}^{j}, {N}_{h}^{j})\)

13. \({p}_{l}^{\prime}\left(j\right)=\text{UIDFT}(W\left(n\right))\)

14. \({d}_{h}^{\prime}\left(j\right)=\text{UIDFT}(X\left(n\right))\)

15. Calculate the CR and PRD from the reconstructed signal

16. If (PRD == min) and (CR == max), then

17. Selected values of \(Q, r,J\) are optimum values

18. else

19. Go to step 2

20. end

21. END

4.4 Dead-zone quantization (DZQ) and thresholding

The main goal of ECG compression is to increase the compression ratio with minimal loss of information. This can be achieved through thresholding and quantization [31]. In the TQWT, the signal is decomposed into transform coefficients. Thresholding and quantization are then performed on the coefficients using dead-zone quantization [32]. In the DZQ method, a small interval [\({-T}_{{\text{hd}}}, {T}_{{\text{hd}}}\)] is chosen around zero, where \({T}_{{\text{hd}}}\) is the threshold value [19]. Sub-band coefficients whose magnitude exceeds \({T}_{{\text{hd}}}\) are quantized to the nearest level, and coefficients that fall inside the interval are set to zero [32]. Thus, by selecting a suitable threshold value, compression efficiency can be improved without significant loss. The dead-zone quantization is defined as follows:

where \(B_{n}^{\prime }\) represents the \(n\text{th}\) decision interval of the tunable-Q wavelet coefficients, and \( V_{n}^{\prime } \) denotes the quantized output value. The quantization step size is denoted by \(\Delta \), and half of the step size by \(\delta .\) During quantization, the transform coefficients are compared against the threshold value (\({T}_{{\text{hd}}})\). In the present study, the threshold is computed using the relation \({T}_{{\text{hd}}}= \frac{M-N}{40}\) [19], where \(M\) and \(N\) are the maximum and minimum values of the sub-band, and 40 is the threshold constant, selected by trial and error by varying \({T}_{{\text{hd}}}\). The step size (\(\Delta )\) satisfies the condition \(\Delta <2{T}_{{\text{hd}}}\) and is determined by the relation \(\Delta =\gamma {T}_{{\text{hd}}}\), where \(\gamma \) is chosen from the range 1.20 to 1.80, as discussed in the literature [32]. In the proposed method, \(\gamma =1.50\) gives the best compression performance on all records of the MIT-BIH arrhythmia database.

After quantization, the wavelet coefficients are converted into integers, which improves the encoding performance. This step reduces the number of bits required to store and encode the transform coefficients.
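The thresholding and quantization step can be sketched in Python as shown here. The threshold \({T}_{{\text{hd}}} = (M - N)/40\) and the step size \(\Delta = \gamma {T}_{{\text{hd}}}\) with \(\gamma = 1.5\) follow the text; the exact placement of decision intervals and reconstruction levels is an assumption (uniform bins of width \(\Delta\) starting at the threshold, reconstructed at their midpoints), and the function names are hypothetical.

```python
import math

def dead_zone_quantize(coeffs, divisor=40.0, gamma=1.5):
    """Map coefficients to integer indices; values inside [-T, T] become 0."""
    t_hd = (max(coeffs) - min(coeffs)) / divisor  # threshold T_hd = (M - N) / 40
    if t_hd == 0:
        raise ValueError("sub-band has zero dynamic range")
    delta = gamma * t_hd                          # step size, delta < 2 * T_hd
    indices = []
    for c in coeffs:
        mag = abs(c)
        if mag <= t_hd:
            indices.append(0)                     # dead zone
        else:
            level = math.floor((mag - t_hd) / delta) + 1
            indices.append(level if c > 0 else -level)
    return indices, t_hd, delta

def dead_zone_dequantize(indices, t_hd, delta):
    """Reconstruct each non-zero index at the midpoint of its bin (assumed)."""
    out = []
    for q in indices:
        if q == 0:
            out.append(0.0)
        else:
            mag = t_hd + (abs(q) - 0.5) * delta
            out.append(mag if q > 0 else -mag)
    return out

idx, t_hd, delta = dead_zone_quantize([-10.0, -0.1, 0.0, 0.1, 10.0])
print(idx, dead_zone_dequantize(idx, t_hd, delta))
```

In this sketch the reconstruction error of any coefficient outside the dead zone is at most \(\Delta/2\), while coefficients inside the dead zone are lost, which is the intended source of compression.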

4.5 Run-length encoding

Run-length encoding (RLE) is a simple lossless encoding method. RLE replaces redundant runs of repeated values with compact codes and thereby reduces the data size without loss of information [33]. The quantized detail coefficients contain runs of repeated values, and each run is coded as a codeword based on its length. The resulting compressed data are stored and transmitted in the telemedicine system. Inverse run-length encoding is applied at the receiver side to reconstruct the signal.
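A minimal Python sketch of run-length encoding and its inverse is given here for illustration. The (value, run length) pair representation is an assumption; the paper does not specify its codeword format.

```python
def rle_encode(values):
    """Collapse consecutive repeats into (value, run_length) pairs."""
    runs = []
    for v in values:
        if runs and runs[-1][0] == v:
            runs[-1][1] += 1          # extend the current run
        else:
            runs.append([v, 1])       # start a new run
    return [(v, n) for v, n in runs]

def rle_decode(runs):
    """Expand (value, run_length) pairs back into the original sequence."""
    out = []
    for v, n in runs:
        out.extend([v] * n)
    return out

quantized = [0, 0, 0, 5, 5, 1, 0, 0]
encoded = rle_encode(quantized)
assert rle_decode(encoded) == quantized   # lossless round trip
print(encoded)
```

The scheme pays off when long runs are common, as is the case for the zeros produced by the dead zone; for sequences with few repeats, the pair representation can be larger than the input.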

5 Results and discussion

To validate the efficacy of the proposed technique, all 48 ECG recordings from the MITDB arrhythmia database were used in the experiments [34]. The signals are sampled at 360 Hz with 11 bits/sample. Several quality measures can be used to determine how well a signal is reproduced relative to a reference signal, such as CR, PRD, NPRD, SNR, and QS.

I. Compression ratio

The compression ratio is evaluated to quantify the degree of compression achieved. A higher compression ratio indicates better compression, with less bandwidth required to transmit the signal.

II. Percentage root difference (PRD)

The PRD measures the distortion between the original signal and the reconstructed signal.

III. Quality score (QS)

The QS demonstrates the effectiveness of the compression method.

IV. Signal-to-noise ratio (SNR)

The SNR measures the amount of noise present in the signal before and after reconstruction, in decibels (dB). It is described as follows:

Here, \(x\left(i\right)\) and \({x}_{avg}\) denote the original ECG signal and its average, respectively.

V. Normalized percentage root-mean-square difference (NPRD)

The normalized PRD measures the distortion between the original and reconstructed signals after the average of the original signal has been removed.
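Since the defining equations of these measures are not reproduced in this text, the sketch here uses the definitions most commonly found in the ECG compression literature, which is an assumption: CR as the ratio of original to compressed size in bits, PRD and NPRD as percentage root-mean-square differences (NPRD with the mean of the original removed), QS = CR/PRD, and SNR as the mean-removed signal energy over the error energy in dB. Function names are hypothetical.

```python
import math

def compression_ratio(original_bits, compressed_bits):
    return original_bits / compressed_bits

def prd(x, y):
    """Percentage root-mean-square difference between x and its reconstruction y."""
    err = sum((a - b) ** 2 for a, b in zip(x, y))
    return 100.0 * math.sqrt(err / sum(a * a for a in x))

def nprd(x, y):
    """PRD normalized by the mean-removed energy of x."""
    avg = sum(x) / len(x)
    err = sum((a - b) ** 2 for a, b in zip(x, y))
    return 100.0 * math.sqrt(err / sum((a - avg) ** 2 for a in x))

def quality_score(cr, prd_value):
    return cr / prd_value

def snr_db(x, y):
    """SNR in dB; undefined (division by zero) for a perfect reconstruction."""
    avg = sum(x) / len(x)
    err = sum((a - b) ** 2 for a, b in zip(x, y))
    return 10.0 * math.log10(sum((a - avg) ** 2 for a in x) / err)

x = [1.0, 2.0, 3.0, 4.0]   # toy "original" samples
y = [1.0, 2.0, 3.0, 5.0]   # toy "reconstruction"
print(prd(x, y), nprd(x, y), snr_db(x, y))
```

Under these definitions, the quality score of the 30-min average reported in the paper is 33.11 / 4.35 ≈ 7.6, consistent with the stated QS of 7.59 up to averaging over records.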

The presented methodology is implemented on all 48 ECG records of the MITDB database available on PhysioNet [33]. Two separate ECG recordings are taken from lead II, one with a duration of 30 min and another with a duration of 2 min. The dataset contains different cardiac arrhythmias and irregular heartbeats. The results are summarized in Table 1. For the 30-min records, the average CR, PRD, NPRD, QS, and SNR are 33.11, 4.35, 8.21, 7.59, and 51.09 dB, respectively. Table 2 shows the experimental results for the 2-min recordings, with average CR, PRD, NPRD, QS, and SNR of 35.57, 4.38, 5.81, 8.10, and 57.58 dB on the MITDB database. Figure 5 shows the original and compressed ECG signals for record no. 100, with a compression ratio of 40.32, PRD of 6.56, and quality score of 6.14. Figure 6 depicts the original and reconstructed signals for record no. 117, which contains irregular ectopic beats. Visual inspection shows that the reconstructed signals closely match the original signals, more closely than those reported in [7, 33]. This similarity, depicted in Figs. 5 and 6, supports the efficacy of the proposed method as a signal reconstruction technique.

Table 3 compares the performance of the presented algorithm in terms of CR, PRD, QS, and SNR, which have been widely used in the literature, with [7, 33], and [25]. In [25], an optimization technique is used for the compression of an ECG signal and gives an average CR of 22.27, PRD of 4.77, and QS of 6.54. In [33], a method based on the discrete wavelet transform and empirical mode decomposition is proposed, with average CR, PRD, and QS of 21.56, 4.65, and 5.38, respectively. In this study, an average CR of 33.11, PRD of 4.35, and QS of 7.59 are achieved. The results show a better CR with a lower PRD, and the reconstructed signal resembles the original signal, as shown in Fig. 7. Thus, the proposed algorithm achieves a higher compression ratio and better quality of the compressed ECG signal than state-of-the-art techniques. For record no. 117, a CR, PRD, and QS of 30.34, 1.56, and 19.44 are obtained, which is better than [7, 33]. For record no. 200, a CR of 31.56, PRD of 4.34, QS of 7.27, and SNR of 64.23 are obtained, which is better than recent techniques based on the wavelet transform, discrete cosine transform, and optimization. For record no. 201, the PRD and SNR are lower than in [33], but the CR is improved with minimal reconstruction error. For record no. 207, the CR, PRD, and QS are worse than in [7]. The CR of the proposed scheme therefore varies across ECG records, with some records exhibiting a higher CR than [25, 33] and others a lower CR than [7]. This indicates that the effectiveness of the proposed scheme depends on the specific ECG record being compressed and may be influenced by the characteristics and complexity of the underlying signal. On average, the proposed scheme outperforms the other techniques in terms of compression ratio, PRD, and quality score, achieving generally higher CR values with lower reconstruction error than [7] and [33]. Figure 8 presents the CR versus PRD curves for multiple ECG records from the MITDB arrhythmia database. As the CR increases, the PRD also increases, indicating a consistent relationship between the two metrics across different ECG signals. Overall, the results provide evidence for the efficacy and generalizability of the proposed ECG compression method.

In this work, the results are also validated on regular and irregular heartbeat signals from the MITDB database, using ECG records no. 100, 101, 102, 107, 108, 117, and 119. Table 4 shows the compression ratio, PRD, and quality score for irregular cardiac rhythms; the results improve on those of [19, 33]. Figure 6 depicts the performance of the proposed technique on record no. 117 and shows a better reconstruction of the signal with less redundancy of data. Figure 9 shows the compression ratio for different ECG records, with improved results compared to [24, 33].

The performance of the presented approach in terms of CR, PRD, and QS is compared with existing techniques in Table 5. In [35], amplitude zone time epoch coding was applied to the MITDB database and achieved a CR, PRD, and QS of 10, 28, and 0.36, respectively. A method based on sub-band coding [15] obtained a CR of 5.3, PRD of 2.6, and QS of 5.4. In [21], the wavelet transform was used for compression and achieved a CR of 4.03 and PRD of 5.26. In [36], a discrete cosine transform method was proposed for the compression of an ECG signal and achieved a CR of 11.49, PRD of 3.43, and QS of 3.82. The authors of [19] used a wavelet transform method with DZQ and RLE for ECG compression and achieved a CR, PRD, and QS of 20.61, 4.43, and 5.88, respectively. The optimization technique of [25] gives an improved CR of 22.27, PRD of 4.77, and QS of 6.54. The proposed method achieves a CR of 33.11, PRD of 4.35, and QS of 7.59, with a CR and QS higher than those of the state-of-the-art techniques. Figure 10 plots the CR against the PRD for different ECG records and validates the performance of the proposed scheme. Thus, from Table 5, it can be concluded that the presented methodology offers a higher compression ratio with low PRD and an improved quality score compared with the other methods.

In Table 6, the compression ratio, PRD, and QS are compared with previously published ECG compression techniques. The proposed scheme gives a higher compression ratio than [19, 32], and [33], with improved PRD and QS. In [32], the PRD is smaller, but the compression ratio of the presented scheme is much better. Figure 9 shows the CR and PRD values for different ECG records; the PRD varies with the compression ratio, which validates the proposed method. Table 6 shows that for ECG records no. 117 and 119, the compression ratio is higher, the PRD is lower, and the QS is better than in [7, 19, 32], and [33]. Figure 11 compares the performance of the presented method with existing techniques. The method gives a high compression ratio with minimal loss of information when reconstructing the original ECG signal. Hence, the presented technique performs better than the state-of-the-art techniques.

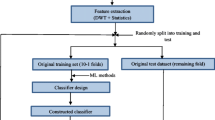

5.1 Detection of normal and abnormal heartbeat classification for validation of the proposed method

To validate the performance of the EMD+TQWT technique, heartbeat classification for cardiac arrhythmias is evaluated. Features are extracted with the EMD+TQWT method, and a random forest algorithm (RFA) classifier is used to classify the signal. The dataset is taken from Kaggle and contains five categories: normal beat (NB), supraventricular beat (SB), ventricular beat (VB), fusion beat (FB), and unknown beat (QB). The dataset consists of two-lead ECG signals from the MITDB database. The QRS complex is detected using the Pan-Tompkins method [41]. Each heartbeat is 272 samples long, with 98 samples taken before the R-peak and 174 samples after it. About 70% of the data is used for training, and the remaining 30% is used for testing the model. The classification is repeated after compression to check the quality of the signal.
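The beat segmentation described above can be illustrated with a short Python sketch. It assumes that the R-peak sample itself is counted among the 174 samples "after" the peak, so that each beat has exactly 272 samples, and that beats too close to either end of the record are skipped; the function name `segment_beats` is hypothetical, and R-peak detection (for example by the Pan-Tompkins method) is taken as given.

```python
def segment_beats(signal, r_peaks, pre=98, post=174):
    """Cut a fixed-length window of pre + post samples around each R-peak.

    The window is signal[r - pre : r + post], so the R-peak sits at index
    `pre` of each beat. Beats that would run past the record are dropped.
    """
    beats = []
    for r in r_peaks:
        start, stop = r - pre, r + post
        if start >= 0 and stop <= len(signal):
            beats.append(signal[start:stop])
    return beats

# Toy record: sample values equal their indices, with three nominal R-peaks.
record = list(range(1000))
beats = segment_beats(record, [50, 500, 900])
print(len(beats), len(beats[0]), beats[0][98])
```

Only the peak at index 500 yields a complete window in this toy example; the other two are discarded because they lie too close to the start and end of the record.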

Table 7 shows the classification of normal and abnormal cardiac rhythms before and after compression. Figures 12 and 13 depict the original and reconstructed signals for abnormal heartbeats in records no. 106 and 123 of the MITDB database. The results show that the signal is reconstructed with minimal loss of data. Hence, the proposed ECG compression method is successfully validated.

Before compression, the average accuracy, sensitivity, and specificity are 99.90%, 97.42%, and 99.92% for normal beats and 98.81%, 96.12%, and 99.57% for abnormal beats, respectively. After compression with the proposed technique, the average accuracy, sensitivity, and specificity are 99.87%, 96.34%, and 99.23% for normal beats and 98.62%, 95.21%, and 99.34% for abnormal beats. The classification performance changes only slightly after compression, which validates the presented technique.
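For reference, accuracy, sensitivity, and specificity of the kind reported above can be computed from true and predicted labels as in the following Python sketch. It uses the standard confusion-matrix definitions. The function name `binary_metrics`, the label strings, and the one-versus-rest treatment of the chosen class are assumptions for illustration, and the paper's own evaluation code is not shown in the text.

```python
def binary_metrics(y_true, y_pred, positive):
    """Return (accuracy, sensitivity, specificity) in percent.

    The class `positive` is scored against all other labels.
    """
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if t == positive and p == positive:
            tp += 1          # positive beat correctly detected
        elif t == positive:
            fn += 1          # positive beat missed
        elif p == positive:
            fp += 1          # other beat wrongly flagged as positive
        else:
            tn += 1          # other beat correctly rejected
    total = tp + tn + fp + fn
    accuracy = 100.0 * (tp + tn) / total
    sensitivity = 100.0 * tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = 100.0 * tn / (tn + fp) if (tn + fp) else float("nan")
    return accuracy, sensitivity, specificity
```

Running the same function on predictions obtained before and after compression gives a direct comparison of the two cases.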

5.2 Discussion

In this study, a novel technique based on the EMD and TQWT methods is proposed for efficient ECG compression. The main advantage of this work is that it provides a high compression ratio with a minimum error rate and an improved quality score while preserving the clinical information. First, the signal is decomposed into a set of intrinsic mode functions (IMFs), each of which represents a specific frequency component of the signal. Second, the TQWT transforms each IMF into a series of coefficients that represent the energy in different frequency bands, so that only the most significant, high-energy coefficients are retained. Table 1 shows an average CR of 33.11, PRD of 4.35, NPRD of 8.21, QS of 7.59, and SNR of 51.09 dB for the 30-min records, and Table 2 gives an average CR, PRD, NPRD, QS, and SNR of 35.57, 4.38, 5.81, 8.10, and 57.58 dB for the 2-min recordings of the MITDB database. Hence, the proposed approach is an effective compression technique that provides a high compression ratio while maintaining the quality of the reconstructed signal.
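The following Python sketch shows how CR, PRD, NPRD, and QS are commonly computed in the ECG compression literature. It is an illustration under stated assumptions: the paper's own formulas are given in an earlier section and may differ in detail, the function name `compression_metrics` and its arguments are hypothetical, and SNR is omitted because its exact definition is not restated here.

```python
import math


def compression_metrics(original, reconstructed, original_bits, compressed_bits):
    """Return a dict with CR, PRD (%), NPRD (%), and QS for one record."""
    n = len(original)
    mean = sum(original) / n
    # Squared reconstruction error, summed over all samples.
    err = sum((x - y) ** 2 for x, y in zip(original, reconstructed))
    energy = sum(x ** 2 for x in original)
    centered_energy = sum((x - mean) ** 2 for x in original)

    cr = original_bits / compressed_bits              # compression ratio
    prd = 100.0 * math.sqrt(err / energy)             # percent RMS difference
    nprd = 100.0 * math.sqrt(err / centered_energy)   # mean-removed PRD
    qs = cr / prd if prd > 0 else float("inf")        # quality score
    return {"CR": cr, "PRD": prd, "NPRD": nprd, "QS": qs}
```

Because NPRD removes the signal mean from the denominator, it is never smaller than PRD for the same record.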

The second advantage of this method is that it gives a high compression ratio with a minimum PRD. Tables 5 and 6 present a comparative study of the presented approach with recently published ECG compression methods based on the wavelet transform, DCT, DWT, and beta-transform. From Table 5, it is found that the proposed scheme gives better CR, PRD, and QS than [7, 19, 33, 35, 36, 37, 38] and [40]. Some researchers focus on records containing arrhythmias or other cardiac abnormalities when evaluating an algorithm, as these cases can be more challenging to diagnose accurately. In [7] and [33], records no. 100, 117, and 119 are selected for comparison with previously proposed algorithms. Table 6 therefore compares results on records no. 117 and 119, where the proposed scheme offers better results than [7, 19, 32] and [33]. Further, the compression ratio and PRD values obtained in this study are compared with those of recently developed algorithms in terms of signal reconstruction performance. Specifically, the PRD values of the proposed approach are below 6% for most of the ECG records, indicating that signal fidelity is preserved with relatively low distortion. Table 7 shows the classification of normal and abnormal heartbeats before and after compression. Figures 12 and 13 depict the original and reconstructed signals for abnormal heartbeats using the presented technique. Thus, the proposed method is successfully validated. Therefore, it can be concluded that the proposed scheme outperforms the state-of-the-art techniques, with a higher compression ratio and minimum reconstruction error while maintaining the quality score (QS) of the signal.

The proposed method has significant implications for fields that require efficient data storage and transmission, such as telemedicine, Holter monitoring, and image processing. In the future, the presented technique can also be applied to other biomedical signals such as arterial blood pressure (BP), photoplethysmography (PPG), and electroencephalogram (EEG). A limitation of this study is that the TQWT parameters for ECG compression are selected by trial and error. Optimization techniques and other methods could be used for a better selection of these parameters. In addition, only a limited database is used for the validation of results. Further investigation may be required to identify the factors contributing to the variability in CR observed across ECG records.

5.3 Computational time

The presented methodology is executed in MATLAB R2017b on a PC with a 10th-generation Intel Core i5 processor and 4 GB of RAM. The average time to process the 48 MITDB arrhythmia records is 0.30 s, as compared to [7]. The computation time can be improved further with a parallel processing computer system for real-time applications and with an ASIC processor in the medical system.

6 Conclusion

In this work, a novel ECG data compression technique based on empirical mode decomposition and the tunable-Q wavelet transform has been proposed. The ECG signal is decomposed into multiple resolution levels to concentrate the maximum energy in fewer coefficients. Dead-zone quantization and thresholding are used to retain the relevant wavelet coefficients. Run-length encoding is then applied to the transform coefficients to compress the ECG signal. The presented technique is evaluated on the MITDB dataset, which contains 48 ECG records, using 2-min and 30-min durations. For the 30-min duration, an average CR, PRD, NPRD, QS, and SNR of 33.11, 4.35, 8.21, 7.59, and 51.09 dB are achieved. For the 2-min duration, an average CR of 35.57, PRD of 4.38, NPRD of 5.81, QS of 8.10, and SNR of 57.58 dB are achieved. The results show better performance than the existing techniques. The presented technique is also applied to normal and abnormal heartbeat classification, with the random forest algorithm employed for the classification of cardiac rhythm. The MITDB dataset used for this purpose contains regular and irregular cardiac beats, and the data are divided into 70% for training and 30% for testing. The results show minimal distortion and improved reconstruction of the signal. The proposed method can be further improved by using different resolution levels and filtering techniques. The present methodology can be employed in telemedicine and other healthcare monitoring systems.
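As an illustration of the quantization and encoding stages summarized above, the following Python sketch applies a uniform dead-zone quantizer to a list of coefficients and run-length encodes the result. It is a generic textbook version, not the authors' implementation: the step size, the midpoint reconstruction rule, the plain (value, run length) encoding, and all function names are assumptions, and the paper's dynamic thresholding is not reproduced.

```python
import math


def dead_zone_quantize(coeffs, step):
    """Map each coefficient to an integer level; |c| < step becomes 0."""
    levels = []
    for c in coeffs:
        level = int(math.floor(abs(c) / step))
        levels.append(level if c >= 0 else -level)
    return levels


def dequantize(levels, step):
    """Reconstruct each nonzero level at the midpoint of its interval."""
    return [0.0 if q == 0 else math.copysign((abs(q) + 0.5) * step, q)
            for q in levels]


def rle_encode(symbols):
    """Collapse consecutive repeats into (value, run_length) pairs."""
    runs = []
    for s in symbols:
        if runs and runs[-1][0] == s:
            runs[-1][1] += 1
        else:
            runs.append([s, 1])
    return [tuple(r) for r in runs]


def rle_decode(runs):
    """Expand (value, run_length) pairs back into the symbol sequence."""
    out = []
    for value, count in runs:
        out.extend([value] * count)
    return out
```

The dead zone sends small coefficients to zero, which produces the long zero runs that make run-length encoding effective. The encoding step itself is lossless, so decoding returns the quantized levels exactly.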

Availability of data and materials

We have used a publicly available standard database, which is available at https://archive.physionet.org/cgi-bin/atm/ATM.

References

World Health Statistics 2013, World Health Org., Geneva, Switzerland (2013)

Tsai, T.-H., Kuo, W.-T.: An efficient ECG lossless compression system for embedded platforms with telemedicine applications. IEEE Access 6, 42207–42215 (2018)

Sugano, H., et al.: Continuous ECG data gathering by a wireless vital sensor—evaluation of its sensing and transmission capabilities. In: Proc. IEEE ISSSRA, Taichung, Taiwan, pp. 98–102 (2010)

Singh, B., Kaur, A., Singh, J.: A review of ECG data compression techniques. Int. J. Comput. Appl. 116(11), 39–44 (2015)

Wang, F., Ma, Q., Liu, W., Chang, S., Wang, H., He, J., Huang, Q.: A novel ECG signal compression method using spindle convolutional auto-encoder. Comput. Methods Programs Biomed. 175, 139–150 (2019)

Singhai, P., Kumar, A., Ateek, A., Ansari, I.A., Singh, G.K., Lee, H.N.: ECG signal compression based on optimization of wavelet parameters and threshold levels using evolutionary techniques. Circuits Syst. Signal Process. 1–29 (2023)

Kolekar, M.H., Jha, C.K., Kumar, P.: ECG data compression using modified run length encoding of wavelet coefficients for holter monitoring. IRBM 43(5), 325–332 (2022)

Mueller, W.C.: Arrhythmia detection program for an ambulatory ECG monitor. Biomed. Sci. Instrum. 14, 81–85 (1978)

Furht, B., Perez, A.: An adaptive real-time ECG compression algorithm with variable threshold. IEEE Trans. Biomed. Eng. 35(6), 489–494 (1988)

Abenstein, J.P.: Algorithms for real-time ambulatory ECG monitoring. Biomed. Sci. Instrum. 14, 73–79 (1978)

Fira, C.M.: An ECG signals compression method and its validation using NNs. IEEE Trans. Biomed. Eng. 55, 1319–1326 (2008)

Cohen, A., Poluta, M., Scott-Millar, R.: Compression of ECG signals using vector quantization. In: South African sympos on comm and signal processing, pp. 49–54 (1990)

Akazawa, K.: Adaptive data compression of ambulatory ECG using multi templates. In: IEEE Proc. of Comput. in Cardiol Conference, pp. 495–498 (1993)

Deepu, C.J., Lian, Y.: A joint QRS detection and data compression scheme for wearable sensors. IEEE Trans. Biomed. Eng. 62, 165–175 (2015). https://doi.org/10.1109/TBME.2014.2342879

Aydin, M.C., Enis Çetin, A., Köymen, H.: ECG data compression by sub-band coding. Electron. Lett. 27(4), 359–360 (1991)

Chandra, S., Sharma, A., Singh, G.: Computationally efficient cosine modulated filter bank design for ECG signal compression. IRBM 41(1), 2–17 (2020)

Ma, J., Zhang, T., Dong, M.: A novel ECG data compression method using adaptive Fourier decomposition with security guarantee in e-health applications. IEEE J. Biomed. Health Inform. 19(3), 986–994 (2015)

Cetin, A.E., Koymen, H., Cegiz Aydin, M.: Multichannel ECG data compression by multirate signal processing and transform domain coding techniques. IEEE Trans. Biomed. Eng. 40(5), 495–499 (1993)

Jha, C.K., Kolekar, M.H.: Tunable Q-wavelet based ECG data compression with validation using cardiac arrhythmia patterns. Biomed. Signal Process. Control 66, 102464 (2021)

Ziran, P.: Research and improvement of ECG compression algorithm based on EZW. Comput. Meth. Prog. Bio. 145, 157–166 (2017)

Cetin, A.E., Tewfik, A.H., Yardimci, Y.: Coding of ECG signals by wavelet transform extrema. In: Proceedings of IEEE-SP International Symposium on Time-Frequency and Time-Scale Analysis, pp. 544–547. IEEE (1994)

Wang, X., Chen, Z., Luo, J., Meng, J., Xu, Y.: ECG compression based on combining of EMD and wavelet transform. Electron. Lett. 52(19), 1588–1590 (2016)

Zhao, C., Chen, Z., Meng, J., Xiang, X.: Electrocardiograph compression based on sifting process of empirical mode decomposition. Electron. Lett. 52(9), 688–690 (2016)

Selesnick, I.W.: Wavelet transform with tunable Q-factor. IEEE Trans. Signal Process. 59(8), 3560–3575 (2011)

Pal, H.S., Kumar, A., Vishwakarma, A., Ahirwal, M.K.: Electrocardiogram signal compression using tunable-Q wavelet transform and meta-heuristic optimization techniques. Biomed. Signal Process. Control 78, 103932 (2022)

Patidar, S., Pachori, R.B.: Tunable-q wavelet transform based optimal compression of cardiac sound signals. In: 2016 IEEE Region 10 Conference, TENCON, pp. 2193–2197. IEEE (2016)

Patidar, S., Pachori, R.B.: Classification of cardiac sound signals using constrained tunable-q wavelet transform. Expert Syst. Appl. 41(16), 7161–7170 (2014)

Patidar, S., Pachori, R.B.: Segmentation of cardiac sound signals by removing murmurs using constrained tunable-q wavelet transform. Biomed. Signal Process. Control 8(6), 559–567 (2013)

Patidar, S., Pachori, R.B., Garg, N.: Automatic diagnosis of septal defects based on tunable-q wavelet transform of cardiac sound signals. Expert Syst. Appl. 42(7), 3315–3326 (2015)

Sharma, N., Sunkaria, R.K., Sharma, L.D.: QRS complex detection using stationary wavelet transform and adaptive thresholding. Biomed. Phys. Eng. Express 8(6), 065011 (2022)

Cetin, A.E., Köymen, H.: Compression of digital biomedical signals. In: The Biomedical Engineering Handbook: Medical Devices and Systems, pp. 3–1. CRC Press (2006)

Chen, J., Wang, F., Zhang, Y., Shi, X.: ECG compression using uniform scalar dead-zone quantization and conditional entropy coding. Med. Eng. Phys. 30(4), 523–530 (2008)

Jha, C.K., Kolekar, M.H.: Empirical mode decomposition and wavelet transform based ECG data compression scheme. IRBM 42(1), 65–72 (2021)

Abdalla, F.Y., Wu, L., Ullah, H., Ren, G., Noor, A., Zhao, Y.: ECG arrhythmia classification using artificial intelligence and nonlinear and nonstationary decomposition. Signal Image Video Process 13, 1283–1291 (2019)

Cox, J.R., Nolle, F.M., Fozzard, H.A., Oliver, G.C.: AZTEC, a preprocessing program for real-time ECG rhythm analysis. IEEE Trans. Biomed. Eng. 2, 128–129 (1968)

Jha, C.K., Kolekar, M.K.H.: ECG data compression algorithm for telemonitoring of cardiac patients. Int. J. Telemed. Clin. Pract. 2(1), 31–41 (2017)

Ahmed, S.M., Al-Ajlouni, A.F., Abo-Zahhad, M., Harb, B.: ECG signal compression using combined modified discrete cosine and discrete wavelet transform. J. Med. Eng. Technol. 33(1), 1–8 (2009)

Kumar, R., Kumar, A., Pandey, R.K.: Beta wavelet-based ECG signal compression using lossless encoding with modified thresholding. Comput. Electr. Eng. 39(1), 130–140 (2013)

Jha, C.K., Kolekar, M.H.: Diagnostic quality assured ECG signal compression with a selection of appropriate mother wavelet for minimal distortion. IET Sci. Meas. Technol. 13(4), 500–508 (2019)

Mohebbian, M.R., Wahid, K.A.: ECG compression using optimized B-spline. Multimed. Tools Appl. 1–13 (2023)

Pan, J., Tompkins, W.J.: A real-time QRS detection algorithm. IEEE Trans. Biomed. Eng. 32(3), 230–236 (1985)

Acknowledgements

The authors are thankful to the Biomedical Signal and Image Processing Group of Dr. B R Ambedkar National Institute of Technology, Jalandhar, for their interest in this work and their useful comments on the final draft of this paper. The authors gratefully acknowledge the support of SERB-DST, Government of India, through the sponsored Research Project sanctioned vide File No. EEQ/2018/000925, dated 22 March 2019, to carry out the present work. We would like to thank Dr. B R Ambedkar National Institute of Technology, Jalandhar, for the laboratory facilities and research environment provided to carry out this work.

Funding

This work has been supported by: SERB-DST, Government of India, and Ministry of Education, Government of India, at National Institute of Technology, Jalandhar.

Author information

Contributions

NS and RKS contributed to the design and implementation of the research, to the analysis of the results, and to the writing of the manuscript.

Ethics declarations

Conflict of interests

The authors declare that they have no conflict of interest.

Ethical approval

We have used publicly available standard databases in our experiment. Therefore, we do not need any ethical consent for this study.

About this article

Cite this article

Sharma, N., Sunkaria, R.K. ECG compression based on empirical mode decomposition and tunable-Q wavelet transform with validation using heartbeat classification. SIViP 18, 3079–3095 (2024). https://doi.org/10.1007/s11760-023-02972-7

DOI: https://doi.org/10.1007/s11760-023-02972-7