Abstract

In this paper, a new robust mean shift tracker is proposed that uses a joint color and texture histogram. The contribution of our work is to exploit the local phase quantization (LPQ) operator for texture feature representation and to combine it with the color histogram mean shift tracking algorithm. The LPQ technique is applied to obtain the texture features that represent the object. In texture classification, the LPQ operator is much more robust to blur than the well-known local binary pattern (LBP) operator. Compared with the traditional color histogram mean shift algorithm, which considers only the color statistics of the object, the joint color-LPQ texture histogram is more robust and overcomes some of its difficulties. Comparative experimental results on numerous challenging image sequences show that the proposed algorithm performs considerably better than several state-of-the-art methods, in particular the traditional mean shift tracker. The algorithm is evaluated with two numerical measures, the center location error and the average overlap, and the results demonstrate its robustness under motion blur and when the target and the background have similar appearance.

1 Introduction

Object tracking is one of the most significant tasks in computer vision [1,2,3]. It is widely applied in military and civil fields such as surveillance, visual navigation [3] and human–computer interaction [1]. The purpose of object tracking is to detect, extract, recognize, and track a moving object, obtain its state parameters, and understand its behavior. Although many tracking algorithms [2] have been developed in recent years, tracking remains a very challenging problem due to factors such as illumination changes, occlusion, background clutter, scale and appearance changes, and fast motion.

In the literature, several surveys [1, 4, 5] of visual object tracking have investigated the state-of-the-art tracking algorithms and their potential applications. Tracking algorithms are generally divided into two main categories: generative tracking [2, 6,7,8,9] and discriminative tracking [10,11,12,13,14,15].

Visual tracking using multiple types of visual features (intensity, color, spatio-temporal information, gradient, texture, etc.) has proved to be a robust approach because the features can complement each other [16]. Many tracking algorithms [4, 17,18,19,20,21,22] combine multiple features to make the representation more accurate under appearance variations and to enhance the discriminability between the target and its background.

In this paper, we propose a new hybrid color-texture feature for tracking based on the mean shift algorithm, which is a generative approach. Mean shift offers good real-time performance and simple computation, and it is insensitive to edge occlusion, rotation, deformation and background motion because it adopts a kernel histogram to represent the object model [23].

The mean shift algorithm [24] is a fast adaptive tracking procedure that finds the maximum of the Bhattacharyya coefficient given a target model and a starting region. The algorithm uses a color histogram to describe the target region [25]. The color histogram estimates the distribution of the sample points and so represents the object appearance robustly [17]. However, when only color histograms are used to model the target, the spatial information is lost, and the mean shift algorithm cannot distinguish the target from the background when the two have similar appearance [17, 26].

To overcome these disadvantages, multiple features have been used in combination with the color histogram [4, 27]. Recently, many researchers have proposed improved methods [17, 20, 26, 28] that use a joint color-texture histogram, which is more reliable than a color histogram alone for tracking in complex video scenes. Texture features introduce information that the color histogram does not convey and reflect a stable spatial structure of the object that is usually not affected by lighting or background color. The local binary pattern (LBP) method introduced by Ojala et al. [29] is a very effective technique for describing image texture. In [17], Ning et al. combined the LBP technique with the color histogram mean shift tracking algorithm to construct a joint color-texture histogram algorithm, and the results outperformed the traditional color-based method.

The LPQ operator presented in [30] uses a binary encoding scheme similar to that of the LBP method, where the descriptor is formed from a histogram of codewords computed for some image region. Published results show that the LPQ technique is insensitive to centrally symmetric blur, which includes linear motion, out-of-focus, and atmospheric turbulence blur [31], and that it gives slightly better results than the LBP method for sharp texture images [30]. Therefore, combining the LPQ technique with the color histogram mean shift algorithm is a natural choice for object tracking.

In this paper, we use LPQ to represent the target texture and propose a joint color-texture histogram method for a more distinctive and effective target representation in the mean shift tracker. The LPQ operator is first applied to obtain the target texture features, which are then combined with the HSV color features to form the joint color-LPQ histogram.

This paper is organized as follows. Section 2 gives a short description of the mean shift tracking algorithm. The proposed joint color-LPQ texture histogram based mean shift tracker is described in Sect. 3. Section 4 gives comparative experimental results of the proposed tracker against the traditional mean shift tracker and several other tracking methods. Finally, Sect. 5 concludes this work.

2 Mean shift algorithm

Mean shift is a nonparametric estimator of the density gradient. The algorithm locates the local maxima of a probability distribution [2, 3].

According to [2], in the first frame, we select a rectangular region that contains the object. Let \(\left\{ {x_i^*} \right\} _{i=1,\ldots ,n} \) be the normalized pixel locations of the target model, and let \(b(x_i^*)\) be the bin of \(x_i^*\) in the quantized feature space. The probability of each feature \(u=1, 2,\ldots , m\) in the target model can be expressed as:
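In the kernel-based formulation of [2], which this section follows, the model is a kernel-weighted histogram. The isotropic kernel profile \(k\) is notation assumed from that formulation; it gives smaller weights to pixels farther from the target center:

\[
{\hat{q}}_{u} = C \sum _{i=1}^{n} k\left( \left\| x_i^* \right\| ^{2} \right) \delta \left[ b(x_i^*) - u \right] \qquad (1)
\]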

where \(\delta \) is the Kronecker delta and C is a normalization constant so that \(\sum \nolimits _{u=1}^{m} {\hat{q}}_{u} =1\) .
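As an illustration of such a kernel-weighted histogram, the following is a minimal Python sketch, not the authors' Matlab implementation. It assumes an Epanechnikov profile (the paper does not name the kernel), a rectangular patch whose pixels have already been mapped to bin indices, and the hypothetical helper names `epanechnikov_profile` and `target_model`.

```python
def epanechnikov_profile(r2):
    """Assumed kernel profile k(r^2): decreasing on [0, 1), zero outside."""
    return 1.0 - r2 if r2 < 1.0 else 0.0


def target_model(bin_image, m):
    """Kernel-weighted, normalized histogram over a rectangular patch.

    bin_image: 2-D list of integer bin indices b(x_i), each in range(m).
    Returns a list q of length m whose entries sum to 1.
    """
    rows, cols = len(bin_image), len(bin_image[0])
    cy, cx = (rows - 1) / 2.0, (cols - 1) / 2.0
    # Half-sizes used to normalize pixel locations relative to the center.
    hy, hx = max(rows / 2.0, 1.0), max(cols / 2.0, 1.0)
    q = [0.0] * m
    for i in range(rows):
        for j in range(cols):
            r2 = ((i - cy) / hy) ** 2 + ((j - cx) / hx) ** 2
            # The Kronecker delta selects the bin of this pixel.
            q[bin_image[i][j]] += epanechnikov_profile(r2)
    total = sum(q)  # plays the role of 1 / C
    return [value / total for value in q]
```

The central pixel receives the largest weight, so peripheral pixels, which are more likely to belong to the background, contribute less to the model.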

Let \(\left\{ {x_i^*} \right\} _{i=1,\ldots ,n_{h}}\) be the normalized pixel locations of the target candidate, centered at location y in the current frame. The probability of each feature \(u=1, 2,\ldots , m\) in the target candidate can be expressed as:
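In the formulation of [2], the candidate histogram uses the same kernel profile \(k\), scaled by a bandwidth \(h\); both symbols, and the normalization constant \(C_h\), are notation assumed from that formulation:

\[
{\hat{p}}_{u}(y) = C_h \sum _{i=1}^{n_h} k\left( \left\| \frac{y - x_i^*}{h} \right\| ^{2} \right) \delta \left[ b(x_i^*) - u \right] \qquad (2)
\]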

To measure the similarity between the target model and the target candidate, mean shift uses the Bhattacharyya coefficient as the similarity function, defined as:
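For two discrete distributions with \(m\) bins, the Bhattacharyya coefficient has the standard form below, written here with the symbols \({\hat{p}}_{u}(y)\) and \({\hat{q}}_{u}\) for the candidate and model histograms:

\[
\rho \left( y \right) \equiv \rho \left[ {\hat{p}}\left( y \right) , {\hat{q}} \right] = \sum _{u=1}^{m} \sqrt{{\hat{p}}_{u}\left( y \right) \, {\hat{q}}_{u}}
\]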

The most probable location y of the target in the current frame is obtained by maximizing the Bhattacharyya coefficient \(\rho \left( y \right) \). In the iterative process, the estimated target moves from \(y_0\) to a new position \(y_1\), which is defined as:
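In the formulation of [2], the new position is a weighted mean of the candidate pixel locations. The function \(g(x) = -k'(x)\), the negative derivative of the kernel profile, and the bandwidth \(h\) are notation assumed from that formulation:

\[
y_1 = \frac{\sum _{i=1}^{n_h} x_i^* \, w_i \, g\left( \left\| \frac{y_0 - x_i^*}{h} \right\| ^{2} \right) }{\sum _{i=1}^{n_h} w_i \, g\left( \left\| \frac{y_0 - x_i^*}{h} \right\| ^{2} \right) }
\]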

where
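the weights \(w_i\) are, in the formulation of [2] assumed throughout this section, obtained by comparing the model and candidate histograms at the bin of each pixel:

\[
w_i = \sum _{u=1}^{m} \sqrt{\frac{{\hat{q}}_{u}}{{\hat{p}}_{u}\left( y_0 \right) }} \; \delta \left[ b(x_i^*) - u \right]
\]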

3 Proposed joint color-texture histogram for tracking based mean shift algorithm

In this work, we propose a novel target representation that uses joint HSV color and LPQ texture features in the mean shift framework. The mean shift algorithm has been shown to be robust to partial occlusion, scale, rotation and non-rigid deformation of the target [2]. However, using only a color histogram in mean shift tracking has some problems [17]. First, the spatial information of the target is lost. Second, tracking becomes inaccurate or fails easily when the object color is close to the background color, or when the target or the camera moves fast (motion blur). To overcome these limitations of the color histogram mean shift tracker, we use the HSV color space instead of the RGB color space and combine the color histogram with LPQ texture features, which contribute blur invariance and spatial information. The details of the proposed method are explained in the following subsections.

3.1 LPQ texture features

The LPQ operator was proposed by Ojansivu et al. for texture description [30]. It was shown that the operator is robust to blur and outperforms the local binary pattern operator [29] in texture classification. LPQ utilizes the blur invariance property of the Fourier phase spectrum [30]. It is based on the quantized phase of the discrete Fourier transform computed locally for small image patches [31].

The spatial blurring is represented by a convolution between the image intensity and a point spread function (PSF). In the Fourier domain, this corresponds to
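Because convolution in the spatial domain becomes multiplication in the frequency domain, the relation takes the product form:

\[
G\left( u \right) = F\left( u \right) \cdot H\left( u \right)
\]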

where G(u), F(u), and H(u) are the discrete Fourier transforms (DFT) of the blurred image, the original image, and the PSF, respectively, and u is a vector of coordinates \(\left[ {u,v} \right] ^{T}\) in the frequency domain. The magnitude and phase parts can be separated as:
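For a product of complex numbers, magnitudes multiply and phases add, which gives the usual separation:

\[
\left| G\left( u \right) \right| = \left| F\left( u \right) \right| \cdot \left| H\left( u \right) \right| \quad \hbox {and} \quad \angle G\left( u \right) = \angle F\left( u \right) + \angle H\left( u \right)
\]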

If the blur PSF is centrally symmetric, its Fourier transform H is always real-valued, and as a consequence its phase is only a two-valued function, given by:
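A real-valued \(H\left( u \right) \) has phase 0 where it is non-negative and \(\pi \) where it is negative, so the two-valued function can be written as:

\[
\angle H\left( u \right) = \left\{ \begin{array}{ll} 0 &amp; \hbox {if } H\left( u \right) \ge 0 \\ \pi &amp; \hbox {if } H\left( u \right) < 0 \end{array} \right.
\]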

In the LPQ method, it is assumed that in the very low frequency band the value of \(H\left( u \right) \) is positive, so that \(\angle H\left( u \right) =0\). The phase of \(G\left( u\right) \) and \(F\left( u\right) \) is then the same, and a blur-invariant representation can be obtained from the phase.

In LPQ, the phase is examined in local M-by-M neighborhoods \(N_x\) (\(N_x \) is a window of \(M \times M\) pixels associated with position x) at each pixel position x of the image f(x). These local spectra are computed using a short-term Fourier transform (STFT) defined by:
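In the form used for LPQ in [30], with a rectangular window over \(N_x\), the STFT is written as follows; the summation variable \(y\) is notation assumed from that reference:

\[
F\left( u, x \right) = \sum _{y \in N_x} f\left( x - y \right) e^{-j 2 \pi u^{T} y}
\]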

where \(x \in \left\{ {x_1 ,x_2 ,\ldots ,x_N } \right\} \) ranges over the pixel positions. Because the basis functions are separable, the transform can be computed with simple 1-D convolutions applied to the rows and columns successively. The local Fourier coefficients are computed at four frequency points \(u_1 =[{a,0}]^{T},u_2 =[{0,a}]^{T},u_3 =\left[ {a,a} \right] ^{T} \,\hbox {and}\, u_4 =\left[ {a,-a} \right] ^{T}\), where a is a sufficiently small scalar to satisfy \(H\left( {u_i } \right) >0\). For each pixel position, this results in a vector:
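Stacking the four coefficients in the order of the frequency points listed above gives:

\[
F_x = \left[ F\left( u_1 , x \right) , F\left( u_2 , x \right) , F\left( u_3 , x \right) , F\left( u_4 , x \right) \right]
\]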

The phase information in the Fourier coefficients is recorded by observing the signs of the real and imaginary parts of each component in \(F_x \). This is done by using a simple scalar quantizer:

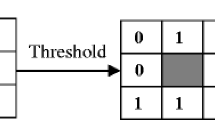
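Written out, a sign-based scalar quantizer of this kind is usually given in the following form, as in [30]:

\[
q_j \left( x \right) = \left\{ \begin{array}{ll} 1 &amp; \hbox {if } g_j \left( x \right) \ge 0 \\ 0 &amp; \hbox {otherwise} \end{array} \right.
\]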

where \(g_j \left( x \right) \) is the jth component of the vector \(G_x =\left[ {Re\left\{ {F_x } \right\} ,Im\left\{ {F_x } \right\} } \right] \). The resulting eight binary coefficients \(q_j \left( x \right) \) are represented as integer values between 0 and 255 using binary coding, given by:
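With eight binary coefficients, the binary coding that yields values between 0 and 255 is, following [30]:

\[
f_\mathrm{LPQ} \left( x \right) = \sum _{j=1}^{8} q_j \left( x \right) 2^{j-1}
\]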

As a result, we get the label image \(f_\mathrm{LPQ} \) whose values are the blur invariant LPQ labels.
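The steps of this subsection can be sketched in plain Python as follows. This is a minimal, unoptimized illustration rather than the authors' implementation: the function name `lpq_labels` is hypothetical, the frequency scalar is assumed to be \(a = 1/M\), the window is rectangular, and no decorrelation of the coefficients is applied.

```python
import cmath
import math


def lpq_labels(gray, M=3):
    """Return LPQ codes (0..255) for the interior pixels of `gray`.

    gray: 2-D list of intensities. Border pixels whose M-by-M window
    would leave the image are skipped, so the output is smaller.
    """
    r = M // 2
    a = 1.0 / M  # assumed choice of the "sufficiently small" scalar a
    freqs = [(a, 0.0), (0.0, a), (a, a), (a, -a)]  # u1, u2, u3, u4
    rows, cols = len(gray), len(gray[0])
    labels = []
    for x1 in range(r, rows - r):
        row_codes = []
        for x2 in range(r, cols - r):
            coeffs = []
            for u1, u2 in freqs:
                acc = 0j
                # STFT over the neighborhood: sum of f(x - y) exp(-j 2 pi u^T y)
                for y1 in range(-r, r + 1):
                    for y2 in range(-r, r + 1):
                        phase = -2.0 * math.pi * (u1 * y1 + u2 * y2)
                        acc += gray[x1 - y1][x2 - y2] * cmath.exp(1j * phase)
                coeffs.append(acc)
            # G_x = [Re{F_x}, Im{F_x}]: four real parts, then four imaginary parts
            g = [c.real for c in coeffs] + [c.imag for c in coeffs]
            # Sign quantization, then binary coding with weights 2^(j-1)
            code = sum((1 if gj >= 0 else 0) << j for j, gj in enumerate(g))
            row_codes.append(code)
        labels.append(row_codes)
    return labels
```

Only the signs of the coefficients are kept, so multiplying the image by a positive constant leaves the labels unchanged.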

3.2 HSV color features

In the traditional mean shift tracking algorithm, the RGB color histogram is used for target representation. However, RGB is not a perceptually uniform color space; that is, differences between colors in the RGB space do not correspond to the color differences perceived by humans. Additionally, the RGB dimensions are highly correlated [1]. To improve the performance of the mean shift tracking algorithm, we use the HSV color histogram, which makes tracking more robust to lighting conditions because HSV (hue, saturation and value) is an approximately uniform color space.

3.3 Tracking by mean shift with joint HSV color-LPQ texture histogram

The process of the proposed joint color-LPQ texture histogram based mean shift tracker is described in Fig. 1. First, we calculate the LPQ feature of each pixel in the target region to obtain the LPQ target region, whose values lie between 0 and 255. Then, we use the HSV channels and the LPQ patterns to jointly represent the target by its color and texture features, as shown in Fig. 2. To obtain the color and texture distribution of the target model q, we use (Eq. 1), in which \(m=N_{\mathrm{H}} \times N_{\mathrm{S}} \times N_{\mathrm{V}} \times N_{\mathrm{LPQ}}\) is the number of bins in the joint feature space. The first three dimensions (i.e., \(16 \times 16 \times 16\)) are the quantized bins of the HSV color channels, and the fourth dimension (i.e., 16) holds the bins of the LPQ texture feature. The color and texture distribution of the target model q therefore has four dimensions (i.e., \(16 \times 16 \times 16\times 16\)). Similarly, the target candidate model \({\hat{p}}\left( y \right) \) is calculated with (Eq. 2). Figure 3 illustrates an example of the joint HSV color and LPQ texture distribution of the target model q for the features \(u_{\mathrm{H}}=3, u_{\mathrm{S}}=2, u_{\mathrm{V}}=4, u_{\mathrm{LPQ}}=1\).
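One way to map a pixel to a single bin of the \(16 \times 16 \times 16\times 16\) joint space is sketched below. The uniform quantization of each channel, the reduction of the 256 LPQ codes to 16 bins by integer division, and the helper names `joint_bin` and `pixel_bin` are assumptions made for illustration; the paper does not specify these details.

```python
import colorsys


def joint_bin(h, s, v, lpq, n_h=16, n_s=16, n_v=16, n_lpq=16):
    """Map H, S, V in [0, 1] and an LPQ code in 0..255 to one bin index u."""
    u_h = min(int(h * n_h), n_h - 1)  # clamp so that 1.0 falls in the last bin
    u_s = min(int(s * n_s), n_s - 1)
    u_v = min(int(v * n_v), n_v - 1)
    u_lpq = lpq * n_lpq // 256        # assumed uniform grouping of LPQ codes
    # Row-major flattening of the 4-D index (u_h, u_s, u_v, u_lpq)
    return ((u_h * n_s + u_s) * n_v + u_v) * n_lpq + u_lpq


def pixel_bin(r, g, b, lpq):
    """Joint bin of a pixel given RGB components in [0, 1] and its LPQ code."""
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    return joint_bin(h, s, v, lpq)
```

The resulting index ranges over \(m = 16^4 = 65536\) bins and can be used directly as the bin function \(b(\cdot )\) when accumulating the model and candidate histograms.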

4 Experimental results

In this section, we give the implementation details of the proposed joint color-LPQ texture based mean shift tracking algorithm (MSLPQ) and of the mean shift tracking algorithm (MS), and then report experimental results from an extensive evaluation of both trackers on numerous video sequences, including a comprehensive tracking benchmark [32]. Both MSLPQ and MS use the HSV color histogram; for MSLPQ, the texture feature is extracted from the grayscale image with radius \(R = 8\) to obtain the LPQ image. The number of mean shift iterations is set to 20 and the position error threshold \(\zeta \) to 0.5. Both algorithms are implemented in Matlab. We validate the effectiveness of the proposed trackers on several sequences from the OTB [32] and VOT 2015 datasets. These sequences include motion blur (MB), occlusion (OCC), background clutter (BC), deformation (DEF), fast motion (FM), in-plane and out-of-plane rotation (IPR, OPR), illumination variation (IV) and scale variation (SV). The sequences Boy, BlurFace, David3, BlurBody, Human8, Lemming and BlurCar4 are from OTB, and Bolt2 is from VOT 2015. In the first frame, the target of interest is selected manually. These sequences are publicly available at http://www.visual-tracking.net and http://www.votchallenge.net
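The role of the two stopping parameters can be illustrated with the simplified loop below. It is a sketch under stated assumptions, not the Matlab implementation: the per-pixel weights are taken as a fixed, precomputed map (in the full tracker they are recomputed from the candidate histogram at every step), an Epanechnikov kernel is assumed so that the update reduces to a weighted centroid, 20 is treated as an upper bound on the iterations, and the name `mean_shift` is hypothetical.

```python
import math


def mean_shift(weights, y0, half, max_iter=20, zeta=0.5):
    """Move a window to the weighted centroid until the shift is below zeta.

    weights: 2-D list of per-pixel weights w_i (assumed precomputed).
    y0: (row, col) start position; half: (half_height, half_width).
    """
    rows, cols = len(weights), len(weights[0])
    y = (float(y0[0]), float(y0[1]))
    for _ in range(max_iter):
        r0 = max(0, round(y[0]) - half[0])
        r1 = min(rows, round(y[0]) + half[0] + 1)
        c0 = max(0, round(y[1]) - half[1])
        c1 = min(cols, round(y[1]) + half[1] + 1)
        total = sum_r = sum_c = 0.0
        for i in range(r0, r1):
            for j in range(c0, c1):
                w = weights[i][j]
                total += w
                sum_r += w * i
                sum_c += w * j
        if total == 0.0:
            break  # no support inside the window; keep the current position
        y1 = (sum_r / total, sum_c / total)
        moved = math.hypot(y1[0] - y[0], y1[1] - y[1])
        y = y1
        if moved < zeta:
            break  # position error below the threshold: converged
    return y
```

With a threshold of 0.5 pixels, the loop stops as soon as the estimated center moves by less than half a pixel between two iterations.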

4.1 Quantitative comparison

In this section, we quantitatively compare MSLPQ and MS with four other popular algorithms: (1) the traditional mean shift tracking (KMS) [2]; (2) multiple instance learning (MIL) [13]; (3) visual tracking decomposition (VTD) [9]; and (4) structured output tracking with kernels (Struck) [10]. We evaluate the performance of each tracker using the overlap rate and the center location error [32]. The center location error is the Euclidean distance between the center of the bounding box around the tracked object and that of the ground truth. We use precision to measure the overall tracking performance, defined as the percentage of frames in which the estimated location is within a given threshold distance of the ground truth. This threshold is usually set to 20 pixels [32, 33].
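These two measures can be computed as in the short sketch below. The `(x, y, width, height)` box layout and the function names `center_error` and `precision` are assumptions for illustration; the result is returned as a fraction rather than a percentage.

```python
import math


def center_error(box_t, box_g):
    """Euclidean distance between the centers of two (x, y, w, h) boxes."""
    cx_t, cy_t = box_t[0] + box_t[2] / 2.0, box_t[1] + box_t[3] / 2.0
    cx_g, cy_g = box_g[0] + box_g[2] / 2.0, box_g[1] + box_g[3] / 2.0
    return math.hypot(cx_t - cx_g, cy_t - cy_g)


def precision(tracked, truth, threshold=20.0):
    """Fraction of frames whose center error is within `threshold` pixels."""
    errors = [center_error(t, g) for t, g in zip(tracked, truth)]
    return sum(e <= threshold for e in errors) / len(errors)
```

For example, a tracker whose center error is 5 pixels in one frame and 50 pixels in another has a precision of 0.5 at the 20-pixel threshold.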

Another evaluation computes the bounding box overlap S of \(r_\mathrm{t} \) and \(r_\mathrm{g} \) in each frame, where \(r_\mathrm{t} \) is the bounding box output by a tracker and \(r_\mathrm{g} \) is the ground truth bounding box. The bounding box overlap is defined as \(S=\left| {r_\mathrm{t} \cap r_\mathrm{g} } \right| /\left| {r_\mathrm{t} \cup r_\mathrm{g} } \right| ,\) where \(\cap \) and \(\cup \) denote the intersection and union of two regions, respectively. To measure the overall performance on a given image sequence, we count the number of successful frames, i.e., those whose overlap is larger than the given threshold of 0.5. The success plot shows the ratio of successful frames as the threshold varies from 0 to 1. We use the area under the curve (AUC) of each success plot to rank the compared trackers.
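The overlap score and an approximate AUC can be sketched as follows. The `(x, y, width, height)` box layout, the 101 evenly spaced thresholds, and the approximation of the AUC by the mean success rate are assumptions for illustration, as are the names `overlap` and `success_auc`.

```python
def overlap(box_t, box_g):
    """Intersection over union S of two (x, y, w, h) boxes."""
    ix = min(box_t[0] + box_t[2], box_g[0] + box_g[2]) - max(box_t[0], box_g[0])
    iy = min(box_t[1] + box_t[3], box_g[1] + box_g[3]) - max(box_t[1], box_g[1])
    inter = max(0.0, ix) * max(0.0, iy)
    union = box_t[2] * box_t[3] + box_g[2] * box_g[3] - inter
    return inter / union if union > 0 else 0.0


def success_auc(overlaps, steps=101):
    """Mean success rate over thresholds evenly spaced in [0, 1]."""
    thresholds = [k / (steps - 1) for k in range(steps)]
    rates = [sum(s > t for s in overlaps) / len(overlaps) for t in thresholds]
    return sum(rates) / len(rates)
```

Two boxes of equal size shifted by half their width overlap with \(S = 1/3\), which would count as a failure at the 0.5 threshold.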

The average overlap and the center location error of the compared trackers on the eight sequences are summarized in Table 1. Overall, the center location errors and overlaps over all tested sequences show that the proposed MSLPQ has the best average performance (0.6947) among the compared methods. Moreover, MS is more robust than the original mean shift tracker KMS and performs better on this dataset, especially on the Human8 sequence.

The overall performance of our tracker and the four other compared trackers is shown in Fig. 4. We use one-pass evaluation (OPE) for the overall performance, with success rate and precision as the evaluation criteria, as shown in Fig. 4a, b, respectively. For comparison, we use 20 color images with different challenges. Overall, our tracker outperforms the other four state-of-the-art trackers.

4.2 Qualitative comparison

To make the qualitative comparison clearer, Fig. 5 shows the tracking results of the different trackers on several challenging sequences. The Boy and BlurFace sequences track human faces under MB, FM, IPR, SV and OPR. The experimental results show that MSLPQ obtains the best performance, as shown in Fig. 5a, b. On the Boy sequence, MIL fails to track the target from frame 577, where the frames include both fast motion and severe motion blur. In contrast, MSLPQ accurately follows the target at frames 206, 492 and 577, which are subject to motion blur, fast motion and pose changes, when all the other trackers have failed. In the BlurFace sequence, most trackers, including MIL, KMS, VTD and Struck, fail to track the target from the early frames, especially when severe motion blur occurs.

In Fig. 5c–f, we show example frames from the David3, BlurBody, Human8 and Bolt2 sequences, where the task is to track human bodies in different settings. In the David3 and Bolt2 sequences, a moving body is tracked, which presents challenges such as partial occlusion and a background near the target with color or texture similar to the target. In the David3 sequence, VTD, Struck and MIL fail to track the target when a partial occlusion occurs at frame 130. In contrast, the proposed method is more robust when the background has a color similar to the target, as a result of the rich color-LPQ texture feature, while MS and KMS are less successful. In the Bolt2 sequence, the pose of the target changes rapidly and its appearance deforms frequently. MSLPQ, MS and MIL succeed in tracking over the whole sequence, while VTD tracks only part of the target without losing it, and KMS loses the target after frame 185, when the background has a color similar to the target. Struck fails over the whole sequence. The BlurBody sequence includes motion blur and DEF. MIL loses the target because of the motion blur, while VTD tracks the target at the beginning but fails after frame 99. In contrast, MSLPQ and Struck obtain the best performance (frames 147, 273 and 321). Compared with MS and KMS, MSLPQ successfully tracks the target under the different challenges owing to the robustness of the additional LPQ texture features, as in frame 273. On the Human8 sequence, most trackers (MIL, VTD, Struck and KMS) lose the target from the early frames onwards because the target appearance is similar to the background. In contrast, the proposed methods MSLPQ and MS accurately follow the target, as in frames 88 and 122, despite challenges such as background clutter and scale and illumination changes.

In the last group of tested sequences, the tasks vary from tracking a car on the road in the BlurCar4 sequence to tracking a moving doll in the Lemming sequence. Figure 5g–h shows some frames from these sequences. The Lemming sequence is much harder because of significant appearance changes, OCC and out-of-plane rotations (OPR). In this sequence, all trackers succeed in tracking the target at the beginning, even at frame 373. VTD fails, as in frame 739, because of heavy occlusion, while Struck gradually drifts from the target after frame 948 and totally loses it from frame 963 (e.g., frame 1023) because of the pose changes. MSLPQ, MS, KMS and MIL track only part of the target but do not lose it (frame 1108). MSLPQ and MS perform better than KMS and MIL as a result of the color-LPQ texture feature used by MSLPQ and the 3D color histogram used by MS. In the BlurCar4 sequence, VTD and MIL lose the target from the start. Struck, MSLPQ, KMS and MS are able to track the moving car despite the severe motion blur and fast motion. However, these methods are prone to drift during tracking because the target appearance is somewhat similar to the background and another car with a similar color is present. Struck and MSLPQ perform better than KMS and MS, as shown in frame 331.

5 Conclusion

In this paper, we proposed an effective and robust object tracking algorithm that uses color-LPQ texture histogram features within the mean shift tracking framework. The LPQ operator is used to represent the structural information of the target, which is more discriminative and insensitive to blurring. The proposed target representation, joint color and LPQ texture, is then used to distinguish the foreground target from its background effectively. The experimental results demonstrate that the proposed method takes advantage of both color and texture features, compared with the original mean shift tracking algorithm. Comparative evaluations on challenging benchmark image sequences against several state-of-the-art trackers, such as KMS [2] and Struck [10], show that the proposed method obtains superior performance with respect to background clutter, motion blur, partial occlusions, and various appearance changes.

References

1. Yilmaz, A., Javed, O., Shah, M.: Object tracking: a survey. ACM Comput. Surv. 38(4), 1–45 (2006)

2. Comaniciu, D., Ramesh, V., Meer, P.: Kernel-based object tracking. IEEE Trans. Pattern Anal. Mach. Intell. 25(5), 564–577 (2003)

3. Zhao, Q., Yao, W., Xie, J.: Research on object tracking with occlusion. WIT Trans. Inf. Commun. Technol. 51, 537–545 (2014)

4. Yang, H., Shao, L., Zheng, F., Wang, L., Song, Z.: Recent advances and trends in visual tracking: a review. Neurocomputing 74, 3823–3831 (2011)

5. Li, X., Hu, W., Shen, C., Zhang, Z., Dick, A., Hengel, A.V.D.: A survey of appearance models in visual object tracking. ACM Trans. Intell. Syst. Technol. 4(4), 1–58 (2013)

6. Ross, D.A., Lim, J., Lin, R.S., Yang, M.H.: Incremental learning for robust visual tracking. Int. J. Comput. Vis. 77(1–3), 125–141 (2008)

7. Zhang, S., Yao, H., Zhou, H., Sun, X., Liu, S.: Robust visual tracking based on online learning sparse representation. Neurocomputing 100, 31–40 (2013)

8. Mei, X., Ling, H.: Robust visual tracking using l1 minimization. In: IEEE 12th International Conference on Computer Vision, pp. 1436–1443 (2009)

9. Kwon, J., Lee, K.M.: Visual tracking decomposition. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 1269–1276 (2010)

10. Hare, S., Saffari, A., Torr, P.H.: Struck: structured output tracking with kernels. In: IEEE International Conference on Computer Vision (ICCV), pp. 263–270 (2011)

11. Henriques, J.F., Caseiro, R., Martins, P., Batista, J.: High-speed tracking with kernelized correlation filters. IEEE Trans. Pattern Anal. Mach. Intell. 37(3), 583–596 (2015)

12. Kalal, Z., Mikolajczyk, K., Matas, J.: Tracking-learning-detection. IEEE Trans. Pattern Anal. Mach. Intell. 34(7), 1409–1422 (2012)

13. Babenko, B., Yang, M.H., Belongie, S.: Robust object tracking with online multiple instance learning. IEEE Trans. Pattern Anal. Mach. Intell. 33(8), 1619–1632 (2011)

14. Ma, C., Yang, X., Zhang, C., Yang, M.H.: Long-term correlation tracking. In: CVPR (2015)

15. Lan, X.Y., Yuen, P.C., Chellappa, R.: Robust MIL-based feature template learning for object tracking. In: The Thirty-First AAAI Conference on Artificial Intelligence (AAAI), pp. 4118–4125 (2017)

16. Lan, X.Y., Ma, A.J., Yuen, P.C., Chellappa, R.: Joint sparse representation and robust feature-level fusion for multi-cue visual tracking. IEEE Trans. Image Process. 24(12), 5826–5841 (2015)

17. Ning, J., Zhang, L., Zhang, D., Wu, C.: Robust object tracking using joint color-texture histogram. Int. J. Pattern Recognit. Artif. Intell. 23(07), 1245–1263 (2009)

18. Wang, Q., Fang, J., Yuan, Y.: Multi-cue based tracking. Neurocomputing 131, 227–236 (2014)

19. Lan, X.Y., Ma, A.J., Yuen, P.C.: Multi-cue visual tracking using robust feature-level fusion based on joint sparse representation. In: CVPR, pp. 1194–1201 (2014)

20. Dou, J., Li, J.: Robust visual tracking based on joint multi-feature histogram by integrating particle filter and mean shift. Optik Int. J. Light Electron Opt. 126, 1449–1456 (2015)

21. Lan, X.Y., Zhang, S., Yuen, P.C.: Robust joint discriminative feature learning for visual tracking. In: IJCAI, pp. 3403–3410 (2016)

22. Yuan, Y., Xiong, Z., Wang, Q.: An incremental framework for video-based traffic sign detection, tracking, and recognition. IEEE Trans. Intell. Transp. Syst. 18(7), 1918–1929 (2017)

23. Chen, X., Zhang, M., Ruan, K., Xu, G., Sun, S., Gong, C., Min, J., Lei, B.: Improved mean shift target tracking based on self-organizing maps. SIViP 8, S103–S112 (2014)

24. Wen, Z., Cai, Z.: A robust object tracking approach using mean shift. In: Proceedings of the Third International Conference on Natural Computation (ICNC), vol. 2, pp. 170–174 (2007)

25. An, K.H., Chung, M.J.: Mean shift based object tracking with new feature representation. In: Proceedings of the Third International Conference on Ubiquitous Robots and Ambient Intelligence, pp. 365–370 (2006)

26. Xiaorong, P., Zhihu, Z.: A more robust mean shift tracker on joint color-CLTP histogram. Int. J. Image Gr. Signal Process. 4(12), 34–42 (2012)

27. Phadke, G., Velmurugan, R.: Mean LBP and modified fuzzy C-means weighted hybrid feature for illumination invariant mean-shift tracking. SIViP 11(4), 665–672 (2017)

28. Tavakoli, R.H., Moin, M.S., Heikkila, J.: Local similarity number and its application to object tracking. Int. J. Adv. Robot. Syst. 10, 184 (2013)

29. Ojala, T., Pietikäinen, M., Mäenpää, T.: Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 24(7), 971–987 (2002)

30. Ojansivu, V., Heikkila, J.: Blur insensitive texture classification using local phase quantization. In: Proceedings of Image and Signal Processing ICISP 2008, Cherbourg-Octeville, pp. 236–243 (2008)

31. Heikkila, J., Ojansivu, V.: Methods for local phase quantization in blur-insensitive image analysis. In: Proceedings of International Workshop Local and Non-Local Approximation in Image Processing, pp. 104–111 (2009)

32. Wu, Y., Lim, J., Yang, M.H.: Online object tracking: a benchmark. In: Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), pp. 2411–2418 (2013)

33. Qiao, L., Xiao, M., Weihua, O., Quan, Z.: Visual object tracking with online sample selection via lasso regularization. SIViP 11(5), 881–888 (2017)

Cite this article

Medouakh, S., Boumehraz, M. & Terki, N. Improved object tracking via joint color-LPQ texture histogram based mean shift algorithm. SIViP 12, 583–590 (2018). https://doi.org/10.1007/s11760-017-1196-2

DOI: https://doi.org/10.1007/s11760-017-1196-2