Abstract

Depth functions offer an array of tools that enable the introduction of quantile- and ranking-like approaches to multivariate and non-Euclidean datasets. We investigate the potential of using depths in the problem of nonparametric supervised classification of directional data, that is classification of data that naturally live on the unit sphere of a Euclidean space. In this paper, we address the problem mainly from a theoretical side, with the final goal of offering guidelines on which angular depth function should be adopted in classifying directional data. A set of desirable properties of an angular depth is put forward. With respect to these properties, we compare and contrast the most widely used angular depth functions. Simulated and real data are eventually exploited to showcase the main implications of the discussed theoretical results, with an emphasis on potentials and limits of the often disregarded angular halfspace depth.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction: classification of directional data

In many research areas, data living in nonlinear subdomains of \({\mathbb {R}}^d\) are common. That data can be described by random variables whose support is a nonlinear manifold. The complicated nature of such datasets calls for methods going beyond the standard statistical techniques, and the need for specific tools applicable to nonlinear datasets arises.

Our work considers directional (or spherical) data whose support is the surface of the unit hyper-sphere \(\mathbb {S}^{d-1}\) of \({\mathbb {R}}^d\), or a subset of it. Directional data describe values typically recorded as a set of angles (for \(d=2\), also known as circular data) or as unit vectors in \({\mathbb {R}}^d\). A large amount of literature exists on the analysis of this kind of data, from the seminal book of Mardia (1972) to many recent works on clustering (Salah and Nadif 2019; Bry and Cucala 2022), and on nonparametric data analysis (Arnone et al. 2022; Saavedra-Nieves and Crujeiras 2022), to cite a few.

We focus on the use of angular depth functions in supervised classification. That is, on which angular depth should be adopted when classifying newly observed directional values into groups, given a preliminary knowledge of directional data whose group labels are available. Also known as supervised learning of directional objects, this issue has become quite an active area of research in recent years (see, e.g., Figueiredo (2009); Tsagris and Alenazi (2019); Jana and Dey (2021)). We pursue a nonparametric approach, and within the many, we consider angular depth functions and the associated DD-classifiers (depth-depth classifiers). Statistical depth functions (Liu et al. 1999; Zuo and Serfling 2000a) present a set of tools applicable to multivariate and non-Euclidean data that generalize notions of ranks, orderings, and quantiles to more complex datasets. Exploiting the nonparametric nature of statistical depth functions and their robustness properties, the depths have already been successfully applied also in the context of directional data analysis (Liu and Singh 1992; Agostinelli and Romanazzi 2013; Ley et al. 2014; Demni et al. 2021).

DD-classifiers were first introduced for multivariate data living in \({\mathbb {R}}^d\) (Li et al. 2012). Because of their simplicity, efficacy, and optimality properties, they have been soon extended to data defined over different domains, such as functional data (Cuesta-Albertos et al. 2017; Mosler and Mozharovskyi 2017) and networks (Tian and Gel 2019).

Depth functions evaluate how much “inner” a point is with respect to a probability distribution. DD-classifiers assign to any point in the training set its depth value with respect to each of the competing distributions/groups. Next, these values are mapped into a Euclidean space spanned by the depths themselves (DD-space), and a discriminating function within such a DD-space is constructed. In the second step, new data points are classified by evaluating their depth with respect to each labeled training set, and assigned to a class by means of the discriminating function previously constructed in the DD-space. The depths thus serve as a tool offering elaborate nonlinear dimension reduction methods applicable to classification.

In principle, any depth function can be used to construct a DD-classifier. However, its performance is related to the depth which is adopted. A crucial issue is therefore the choice of a depth among the many available. In \({\mathbb {R}}^d\), related problems have been addressed from a different perspective, even recently (Mosler and Mozharovskyi 2022). For directional data and angular depths, though, apart from a few remarks in particular simulation studies, no discussion on that pressing issue is found in the literature.

The aim of this work is twofold. First, we propose a set of desired conditions that angular depths should satisfy. The focus will be on the properties exploitable in a classification setting. Second, we compare the available angular depths in view of those conditions, and we offer a series of theoretical results regarding the general behavior and the shapes of the depth contours for the most established angular depths.

The paper is organized as follows. After introducing notations (Sect. 1.1), Sect. 2 lists a set of desirable properties for an angular depth function. Several observations regarding these conditions are given; in particular, the conditions are contrasted to those available for depths in linear spaces (Liu 1990; Zuo and Serfling 2000a; Serfling 2006) and the special traits of directional data are stressed out. Section 3 introduces several prominent angular depth functions and discusses which of them enjoy the properties laid out in Sect. 2. Our theoretical analysis pinpoints the often disregarded angular halfspace depth (Small 1987) as a candidate suitable to be used for classification purposes. Section 4 uses simulated and real datasets to outline the main implications of the obtained theoretical results, focusing on the power of the angular halfspace depth and its limitations within a supervised classification setting. Final remarks are offered in Sect. 5. Technical details and proofs of our theoretical results are postponed to the Appendix.

1.1 Notations

We write \({\mathbb {R}}^d\) for the Euclidean space of points \(x \in {\mathbb {R}}^d\) equipped with the Euclidean norm \(\left\| x \right\|\) and a scalar product \(\left\langle \cdot , \cdot \right\rangle\). A vector \(x = \left( x_1, \dots , x_d\right) ^{\textsf{T}}\in {\mathbb {R}}^d\) is meant to be a column vector; when not necessary, the transposition in \(\left( x_1, \dots , x_d\right) ^{\textsf{T}}\) will be dropped to ease the notations. The unit sphere in \({\mathbb {R}}^d\) is \(\mathbb {S}^{d-1}= \left\{ x \in {\mathbb {R}}^d :\left\| x \right\| = 1 \right\}\); elements of \(\mathbb {S}^{d-1}\) are frequently called directions. For a topological space \({\mathcal {S}}\), \({\mathcal {P}}\left( {\mathcal {S}}\right)\) stands for the collection of all Borel probability measures on \({\mathcal {S}}\).

2 Desirable properties of angular depth functions

We begin by discussing the main properties that an angular depth function should enjoy in a classification framework. A depth D on a topological space \({\mathcal {S}}\) is, formally speaking, a bounded mappingFootnote 1

that to a point \(x \in {\mathcal {S}}\) and a probability measure \(P \in {\mathcal {P}}\left( {\mathcal {S}}\right)\) assigns D(x; P), the depth of x with respect to (w.r.t.) P. The depth is intended to quantify how much “inner”, or “centrally located” the point x is w.r.t. the distribution P. The set of points maximizing the depth function \(x \mapsto D(x; P)\) is called the set of depth medians. As one moves away from the median set, the depth w.r.t. P is supposed to decrease until it reaches values close to zero when x is taken far from the main bulk of the mass of P. Any depth D is naturally characterized by the collection of all its central regions

The shape of the regions \(D_\alpha (P)\) should reflect the geometry of the distribution P. We are naturally concerned by describing the shapes and the structural properties of these central regions, since they play a crucial role in depth-based classification.

2.1 Depth functions in linear spaces \({\mathbb {R}}^d\)

The methodology of statistical depth functions in linear spaces \({\mathbb {R}}^d\) has been studied thoroughly by Zuo and Serfling (2000a). According to Zuo and Serfling (2000a), a (statistical) depth D in the linear space \({\mathcal {S}} = {\mathbb {R}}^d\) is a function (1) that fulfills the following properties for all \(P \in {\mathcal {P}}\left( {{\mathbb {R}}^d}\right)\):

- (\(\textrm{L}_1\)):

-

Affine invariance: \(D(x;P) = D(Ax+b; P_{AX+b})\) for all \(x \in {\mathbb {R}}^d\), \(A \in {\mathbb {R}}^{d\times d}\) non-singular, and \(b \in {\mathbb {R}}^d\), where \(P_{AX+b} \in {\mathcal {P}}\left( {{\mathbb {R}}^d}\right)\) stands for the distribution of the transformed random vector \(AX + b\) with \(X \sim P\);

- (\(\textrm{L}_2\)):

-

Maximality at center: If \(\mu \in {\mathbb {R}}^d\) is a “center” of P then

$$\begin{aligned} D(\mu ;P)=\sup _{x \in {\mathbb {R}}^d} D(x;P); \end{aligned}$$(3) - (\(\textrm{L}_3\)):

-

Monotonicity along rays: \(D(x;P) \le D(\mu + \alpha (x - \mu );P)\) holds for all \(x \in {\mathbb {R}}^d\) and \(\alpha \in [0,1]\), where \(\mu \in {\mathbb {R}}^d\) is any point that satisfies (3);

- (\(\textrm{L}_4\)):

-

Vanishing at infinity: \(\lim _{\left\| x\right\| \rightarrow \infty } D(x;P) = 0\).

The notion of a “center” \(\mu\) in condition (\(\textrm{L}_2\)) is ambiguous. It is meant to be applied to a specified notion of symmetry of P, with \(\mu\) being the unique center of that symmetry. Often, P is considered to be symmetric around \(\mu\) if each closed halfspace that contains \(\mu\) carries P-mass at least 1/2, which corresponds to the so-called halfspace symmetry of P, the arguably weakest notion of symmetry considered in \({\mathcal {P}}\left( {{\mathbb {R}}^d}\right)\). Indeed, as shown in Zuo and Serfling (2000b), many other familiar notions of multivariate symmetry (spherical, elliptical, central, or angular) imply halfspace symmetry of a measure in \({\mathbb {R}}^d\).

In addition to conditions (\(\textrm{L}_1\))–(\(\textrm{L}_4\)), several other desiderata of depths have been proposed. For classification, interesting are those concerning the shapes of the central regions (2). In Serfling (2006), the following conditions can be found.

- (\(\textrm{L}_5\)):

-

Upper semi-continuity: \(D(\cdot ;P) :{\mathbb {R}}^d \rightarrow [0,\infty ) :x \mapsto D(x;P)\) is upper semi-continuous;

- (\(\textrm{L}_6\)):

-

Quasi-concavity: The central regions (2) are convex for all \(\alpha \ge 0\).

For the central regions \(D_\alpha (P)\), condition (\(\textrm{L}_5\)) guarantees that each set \(D_\alpha (P)\) is closed in \({\mathbb {R}}^d\), which together with the boundedness condition from (\(\textrm{L}_4\)) implies that the central regions (2) must be compact sets for all \(\alpha > 0\). The additional condition (\(\textrm{L}_6\)) refines (\(\textrm{L}_3\)). In (\(\textrm{L}_3\)), we require that each straight line segment between a maximizer \(\mu \in {\mathbb {R}}^d\) of \(D(\cdot ; P)\) and \(x \in {\mathbb {R}}^d\) lies inside the central region \(D_\alpha (P)\) with \(\alpha = D(x; P)\). That property is called the star convexity of the central regions around \(\mu\). The stronger convexity condition (\(\textrm{L}_6\)) refines this and requires that for any two points \(x, y \in D_\alpha (P)\) the whole line segment between x and y is contained in \(D_\alpha (P)\), for any \(\alpha \ge 0\).

A prototypical depth function in \({\mathbb {R}}^d\) that satisfies all conditions (\(\textrm{L}_1\))–(\(\textrm{L}_6\)) is the Tukey’s halfspace depth (Tukey 1975; Donoho and Gasko 1992), defined for \(x \in {\mathbb {R}}^d\) and \(P \in {\mathcal {P}}\left( {{\mathbb {R}}^d}\right)\) by

where \(H_{x,u} = \left\{ y \in {\mathbb {R}}^d :\left\langle y, u \right\rangle \ge \left\langle x, u \right\rangle \right\}\) is a closed halfspace whose boundary hyperplane passes through x with inner normal u. An angular version of \(hD\) will be presented and studied in Sect. 3.4 below.

2.2 Angular depth functions in \(\mathbb {S}^{d-1}\)

In the setup of directional data, we design depths on the unit sphere \(\mathbb {S}^{d-1}\) w.r.t. a distribution \(P \in {\mathcal {P}}\left( {\mathbb {S}^{d-1}}\right)\). An angular (or directional) (statistical) depth function \(aD\) should thus naturally be a bounded function

that fulfills the following properties for all \(P \in {\mathcal {P}}\left( {\mathbb {S}^{d-1}}\right)\):

- (\(\textrm{D}_{1}\)):

-

Rotational invariance: \(aD(x;P)=aD(O x; P_{OX})\) for all \(x \in \mathbb {S}^{d-1}\) and any orthogonal matrix \(O \in {\mathbb {R}}^{d \times d}\), where \(P_{OX} \in {\mathcal {P}}\left( {\mathbb {S}^{d-1}}\right)\) stands for the distribution of the transformed random vector OX with \(X \sim P\);

- (\(\textrm{D}_{2}\)):

-

Maximality at center:

$$\begin{aligned} aD(\mu ;P) = \sup _{x \in \mathbb {S}^{d-1}} aD(x;P), \end{aligned}$$(6)for any P with “center” at \(\mu \in \mathbb {S}^{d-1}\);

- (\(\textrm{D}_{3}\)):

-

Monotonicity along great circles:

$$\begin{aligned} aD(x; P) \le aD(\left( \mu + \alpha (x - \mu )\right) /\left\| \mu + \alpha (x-\mu )\right\| ;P) \end{aligned}$$(7)holds for all \(x \in \mathbb {S}^{d-1}\setminus \left\{ -\mu \right\}\) and \(\alpha \in [0,1]\), where \(\mu \in \mathbb {S}^{d-1}\) is any point that satisfies (6);

- (\(\textrm{D}_{4}\)):

-

Minimality at the anti-median: \(aD(-\mu ;P)=\inf _{x \in \mathbb {S}^{d-1}} aD(x;P)\), for any \(\mu \in \mathbb {S}^{d-1}\) that satisfies (6).

Conditions (\(\textrm{D}_1\))–(\(\textrm{D}_4\)) are direct translations of the classical requirements (\(\textrm{L}_1\))–(\(\textrm{L}_4\)) from \({\mathbb {R}}^d\) to \(\mathbb {S}^{d-1}\). Indeed, since no sensible notion of an affine transform exists in \(\mathbb {S}^{d-1}\), a natural family replacing the set of affine maps in (\(\textrm{L}_1\)) is the set of orthogonal transforms in the ambient space \({\mathbb {R}}^d\), that leave the unit sphere intact. Condition (\(\textrm{D}_3\)) interprets straight lines in \({\mathbb {R}}^d\) as geodesics, which are the shortest curves joining any couple of points. The geodesics in \(\mathbb {S}^{d-1}\) are the great circles passing through pairs of directions; naturally, the expression \(\left( \mu + \alpha (x - \mu )\right) /\left\| \mu + \alpha (x-\mu )\right\|\) on the right hand side of (7) encodes the points of the shortest arc between \(\mu\) and \(x \ne -\mu\) parameterized by \(\alpha \in [0,1]\). The case \(x = -\mu\) is excluded in (\(\textrm{D}_3\)), because there are infinitely many great circles joining \(\mu\) and \(-\mu\). The latter situation is therefore specifically treated in (\(\textrm{D}_4\)), which can be seen as an analog of the vanishing property (\(\textrm{L}_4\)) from \({\mathbb {R}}^d\). Indeed, interpreting the meridians of great circles joining \(\mu\) with \(-\mu\) as “straight lines centered at \(\mu\)” in \(\mathbb {S}^{d-1}\), the antipodal point \(-\mu\) plays the role of all “points at infinity” in \(\mathbb {S}^{d-1}\).

The vague notion of a “center” from (\(\textrm{D}_2\)) is the most difficult to translate to \(\mathbb {S}^{d-1}\). A simple solution is to consider only rotationally symmetric distributionsFootnote 2 in \(\mathbb {S}^{d-1}\) (Ley and Verdebout 2017), and to declare (\(\textrm{D}_2\)) to be desired only for \(\mu\) being (one of the two antipodally symmetric) directions of rotational symmetry of \(P \in {\mathcal {P}}\left( {\mathbb {S}^{d-1}}\right)\). There are two issues to be addressed when applying (\(\textrm{D}_2\)) with this definition of a center:

-

The center of rotational symmetry is never unique. For example, whenever O fixes \(\mu\), it fixes also its antipodal direction \(-\mu \in \mathbb {S}^{d-1}\).

-

The uniform distribution on \(\mathbb {S}^{d-1}\) is rotationally symmetric around each direction.

The first problem may be resolved by defining the “center” in (\(\textrm{D}_2\)) to be exactly one of the points \(\left\{ \mu , -\mu \right\}\). Its antipodal point is then used in (\(\textrm{D}_4\)). The second problem with the uniformly distributed random variable \(U \sim P_U \in {\mathcal {P}}\left( {\mathbb {S}^{d-1}}\right)\) on \(\mathbb {S}^{d-1}\) is of a more fundamental nature. The rotational invariance (\(\textrm{D}_1\)) immediately gives that for any \(aD\) satisfying (\(\textrm{D}_1\)), \(aD(\cdot ;P_U)\) must be a constant on \(\mathbb {S}^{d-1}\), and thus no ordering of points is possible. This is, however, understandable since the symmetry of \(P_U\) makes it impossible to distinguish points of \(\mathbb {S}^{d-1}\) w.r.t. their location; see also our Theorem 1 below.

We now consider analogues of conditions (\(\textrm{L}_5\)) and (\(\textrm{L}_6\)) in \(\mathbb {S}^{d-1}\). The upper semi-continuity from (\(\textrm{L}_5\)) is defined in any topological space, and in particular, it is simple to state also in \(\mathbb {S}^{d-1}\). We say that a function \(g :\mathbb {S}^{d-1}\rightarrow {\mathbb {R}}\) is upper semi-continuous if

for any sequence \(\left\{ x_n\right\} _{n=1}^\infty \subset \mathbb {S}^{d-1}\) that converges to \(x \in \mathbb {S}^{d-1}\). A set \(A \subseteq \mathbb {S}^{d-1}\) is called spherical convex (see, e.g., Besau and Werner (2016)) if its radial extension defined as

is convex in \({\mathbb {R}}^d\). Observe that if a spherical convex set A is not contained in a closed hemisphere in \(\mathbb {S}^{d-1}\), necessarily \(A = \mathbb {S}^{d-1}\). This particular property of spherical convexity will be important later in our discussion.

We are ready to state the directional versions of conditions (\(\textrm{L}_5\)) and (\(\textrm{L}_6\)):

- (\(\textrm{D}_{5}\)):

-

Upper semi-continuity: \(aD(\cdot ;P) :\mathbb {S}^{d-1}\rightarrow [0,\infty ) :x \mapsto aD(x;P)\) is upper semi-continuous;

- (\(\textrm{D}_{6}\)):

-

Quasi-concavity: The central regions (2) are spherical convex for all \(\alpha \ge 0\).

As in the linear case, condition (\(\textrm{D}_5\)) guarantees that the central regions (2) for depth \(aD\) are closed in \(\mathbb {S}^{d-1}\). Since \(\mathbb {S}^{d-1}\) is a bounded space, it guarantees that a directional median \(\mu \in \mathbb {S}^{d-1}\) of P induced by \(aD\) in (6) always exists, since the supremum must be attained. Condition (\(\textrm{D}_6\)) is stronger than (\(\textrm{D}_3\)) in the same sense as in \({\mathbb {R}}^d\). While (\(\textrm{D}_3\)) can be seen as a spherical version of star convexity of the central regions of \(aD\left( \cdot ; P\right)\), condition (\(\textrm{D}_6\)) imposes spherical convexity of the central regions.

We already argued that for the very special uniform distribution on \(\mathbb {S}^{d-1}\), any sensible angular depth \(aD\) must take a constant value over \(\mathbb {S}^{d-1}\). Our first observation is that, as a consequence of our conditions, a much larger set of directional distributions must possess angular depth that is constant over \(\mathbb {S}^{d-1}\). The proof of the following theorem, as well as the proofs of all our other theoretical results, are deferred to Appendix B.1.

Theorem 1

Suppose that \(aD\) is an angular depth (5) that satisfies (\(\textrm{D}_1\)), (\(\textrm{D}_4\)), and (\(\textrm{D}_5\)). Let \(P \in {\mathcal {P}}\left( {\mathbb {S}^{d-1}}\right)\) be centrally symmetric, meaning that \(X \sim P\) has the same distribution as \(-X\). Then \(aD\left( \cdot ;P\right)\) must be constant over \(\mathbb {S}^{d-1}\).

Observe that the condition of central symmetry in Theorem 1 is a weak one. As proved by Rousseeuw and Struyf (2004, Theorem 2), \(P \in {\mathcal {P}}\left( {\mathbb {S}^{d-1}}\right)\) is centrally symmetric as in Theorem 1 if and only if it is halfspace symmetric around the origin \(0 \in {\mathbb {R}}^d\). Equivalently, P is centrally symmetric if and only if each closed hemisphere of \(\mathbb {S}^{d-1}\) is of P-mass at least 1/2. For an additional discussion on these symmetry considerations see Zuo and Serfling (2000b) and Nagy et al. (2019, Sect. 4.2). Another direct consequence of our conditions follows for rotationally symmetric distributions. Its proof is in Appendix B.2.

Theorem 2

Let \(aD\) be an angular depth (5) that satisfies (\(\textrm{D}_1\)), and let \(P \in {\mathcal {P}}\left( {\mathbb {S}^{d-1}}\right)\) be rotationally symmetric around \(\mu \in \mathbb {S}^{d-1}\). Then \(aD(\cdot ;P)\) is a function of the inner product \(\left\langle \cdot , \mu \right\rangle\) only, i.e. there exists a function \(\xi :[-1,1] \rightarrow [0,\infty )\) such that \(aD(x;P) = \xi \left( \left\langle x, \mu \right\rangle \right)\) for all \(x \in \mathbb {S}^{d-1}\). If, in addition, also (\(\textrm{D}_3\)) is true, then \(\xi\) can be taken monotone on \([-1,1]\); under the additional assumption (\(\textrm{D}_5\)), the function \(\xi\) is upper semi-continuous on \([-1,1]\).

Theorem 2 gives that for P rotationally symmetric, also the angular depth contours of any \(aD\) that verifies (\(\textrm{D}_1\)) must be invariant w.r.t. rotations fixing the center of symmetry \(\mu\) of P. If also (\(\textrm{D}_3\)) is true, all central regions (2) must be spherical caps orthogonal to \(\mu\).Footnote 3 Note, however, that Theorem 2 does not claim that only rotationally symmetric measures can have rotationally symmetric angular depth contours. An example is the angular Mahalanobis depth that will be discussed in Sect. 3.1.

At this point, it is also important to realize that even in the situation when P is rotationally symmetric (and thus all central regions \(aD_\alpha (P)\) are spherical caps by Theorem 2), the spherical convexity (\(\textrm{D}_6\)) does not have to be satisfied for \(aD\). Remarkably, spherical caps of the form \(\left\{ x \in \mathbb {S}^{d-1}:\left\langle x, \mu \right\rangle \ge \beta \right\}\) are spherical convex sets only for \(\beta \ge 0\); the only spherical convex set larger than a closed hemisphere is \(\mathbb {S}^{d-1}\) itself. We formalize our observation in the following result proved in Appendix B.3.

Theorem 3

Suppose that \(aD\) is an angular depth (5) that satisfies (\(\textrm{D}_6\)). Then for any \(P \in {\mathcal {P}}\left( {\mathbb {S}^{d-1}}\right)\) there must exist a direction \(u \in \mathbb {S}^{d-1}\) such that all points in the open hemisphere \(S = \left\{ x \in \mathbb {S}^{d-1}:\left\langle x, u \right\rangle < 0 \right\}\) attain the same depth, i.e. there exist \(u \in \mathbb {S}^{d-1}\) and \(c \ge 0\) such that \(aD(x;P) = c\) for all \(x \in \mathbb {S}^{d-1}\) such that \(\left\langle x, u \right\rangle < 0\). If, in addition, the depth \(aD\) satisfies (\(\textrm{D}_4\)), then \(c = \inf _{x \in \mathbb {S}^{d-1}} aD(x;P)\).

We see that contrary to the linear case where the quasi-concavity assumption (\(\textrm{L}_6\)) is rather standard, for directional data the requirement (\(\textrm{D}_6\)) of convexity of central regions is questionable. As we will see in Sect. 3, the only common angular depth that satisfies condition (\(\textrm{D}_6\)) in full is the angular halfspace depth.

While full-blown quasi-concavity of angular depth functions does not appear to be completely desirable, it is certainly beneficial if the contours of the angular depth reflect the geometric properties of the distribution \(P \in {\mathcal {P}}\left( {\mathbb {S}^{d-1}}\right)\). In the linear space \({\mathbb {R}}^d\), the affine invariance (\(\textrm{L}_1\)) tacitly imposes this requirement. Its directional analogue (\(\textrm{D}_1\)), however, does not allow for transformations other than orthogonal, which would in \({\mathbb {R}}^d\) correspond to mere rotation invariance of a depth — a condition that is known to be too weak for many practical applications. Several procedures have therefore been proposed to make statistical depths in \({\mathbb {R}}^d\) satisfy the stronger affine invariance (\(\textrm{L}_1\)); we refer to Serfling (2010). The desire for an angular depth to reflect the shape properties and the geometry of the distribution \(P \in {\mathcal {P}}\left( {\mathbb {S}^{d-1}}\right)\) brings about our final requirement on the contours of an angular depth function. It can be phrased as a requirement of non-rigidity of the central regions (2) of \(aD\).

- (\(\textrm{D}_{7}\)):

-

Non-rigidity of central regions: There exists a measure \(P \in {\mathcal {P}}\left( {\mathbb {S}^{d-1}}\right)\) such that for some \(\alpha > 0\) the central region \(aD_\alpha (P)\) from (2) is not a spherical cap.

3 Angular depth functions and their properties

We now revise several angular depth functions known from the literatureFootnote 4 (i) the Mahalanobis depth (Ley et al. 2014); (ii) the angular distance-based depths (Pandolfo et al. 2018) including the arc distance depth, the cosine distance depth, and the chord distance depth; (iii) the angular simplicial depth (Liu and Singh 1992); and (iv) the angular halfspace depth (Small 1987). Our main interest is in the study of the desired properties (\(\textrm{D}_1\))–(\(\textrm{D}_7\)) from Sect. 2. A summary of our main results is in Table 1.

3.1 Angular Mahalanobis depth

Arguably one of the simplest depths for directional data is the angular Mahalanobis depth from Ley et al. (2014). It depends crucially on the notion of a median direction, which is typically taken to be the Fréchet median (Fisher 1985) defined as (any) direction \(\mu \in \mathbb {S}^{d-1}\) that minimizes the objective function

The value \(\arccos \left( \left\langle x, y \right\rangle \right)\) is the arc length distance between x and y on \(\mathbb {S}^{d-1}\). Thus, in analogy with the median from \({\mathbb {R}}\), we search for \(\mu\) minimizing the expected distance of x from \(X \sim P\). The objective function (9) does not have to be minimized at a singleton; in case there are multiple points of minima of (9), the whole set of those \(\mu \in \mathbb {S}^{d-1}\) that minimize (9) is called the set of Fréchet medians of P. One situation when the Fréchet median is not unique is the case when the distribution of \(X \sim P \in {\mathcal {P}}\left( {\mathbb {S}^{d-1}}\right)\) is centrally (sometimes also called antipodally) symmetric, i.e. X has the same distribution as \(-X\), in which case the set of Fréchet medians is antipodally symmetric (for each Fréchet median \(\mu \in \mathbb {S}^{d-1}\) of P is also \(-\mu\) a Fréchet median of P).

The angular Mahalanobis depth is defined only for \(P \in {\mathcal {P}}\left( {\mathbb {S}^{d-1}}\right)\) that admit a unique Fréchet median \(\mu \in \mathbb {S}^{d-1}\) (Ley et al. (2014), Assumption A). For such distributions \(P \in {\mathcal {P}}\left( {\mathbb {S}^{d-1}}\right)\), the angular Mahalanobis depth of \(x \in \mathbb {S}^{d-1}\) w.r.t. P is

Here, \(X \sim P\) is a random vector distributed as P that is defined on a probability space \(\left( \Omega , {\mathcal {F}}, {\textsf{P}}\right)\). Clearly, the angular Mahalanobis depth depends only on the angle of x and \(\mu\), or equivalently on the quantity \(\left\langle x, \mu \right\rangle\). Its central regions (2) are thus always of the form

where \(c_\alpha \in [-1,1]\) is the \(\alpha\)-quantile of the univariate distribution of \(\left\langle X, \mu \right\rangle\), with \(X \sim P\).

The Fréchet median of P is certainly rotationally equivariant. Thus, as remarked already in Ley et al. (2014), under the condition that \(P \in {\mathcal {P}}\left( {\mathbb {S}^{d-1}}\right)\) possesses a unique Fréchet median, the angular Mahalanobis depth trivially satisfies all conditions (\(\textrm{D}_1\))–(\(\textrm{D}_5\)). It does not satisfy the directional quasi-concavity condition (\(\textrm{D}_6\)) because of Theorem 3. The angular Mahalanobis depth is well suited especially for rotationally symmetric distributions with unique Fréchet median, particularly due to the very rigid form of its central regions (10). That collection of distributions contains some of the most important families, such as the Fisher-von Mises-Langevin distributions (Ley and Verdebout (2017), Sect. 2.3.1), for which the Fréchet (and thus also \(aMD\)-induced) median coincides with their location parameter. Nevertheless, a downside of \(aMD\) is that it does not satisfy condition (\(\textrm{D}_7\)).

3.2 Angular distance-based depths

We now proceed to elaborate on the properties of the class of distance-based angular depths (Pandolfo et al. 2018), based on the seminal work of Liu and Singh (1992). For \(\delta :[-1,1] \rightarrow [0,\infty )\) bounded and non-increasing, a general depth of this type is, for \(x \in \mathbb {S}^{d-1}\) and \(P \in {\mathcal {P}}\left( {\mathbb {S}^{d-1}}\right)\), given by

where \(X \sim P\) is a random variable distributed as P. Note that because \(\left\langle x, X \right\rangle \in [-1,1]\) and \(\delta\) is bounded, these depths are well defined for any \(P \in {\mathcal {P}}\left( {\mathbb {S}^{d-1}}\right)\). It is also easy to see that for any \(O \in {\mathbb {R}}^{d \times d}\) orthogonal we have

meaning that each distance-based depth trivially satisfies condition (\(\textrm{D}_1\)). Provided that \(\delta\) is continuous on \([-1,1]\), it is easy to see that using the Lebesgue dominated convergence theorem (Dudley (2002), Theorem 4.3.5) for the function

we obtain that as \(x_n \rightarrow x\) in \(\mathbb {S}^{d-1}\), we have that \(\delta (-1) - aD_{\delta }(x_n;P) = \varphi _\delta (x_n) \rightarrow \varphi _\delta (x) = \delta (-1) - aD_{\delta }(x;P)\) as \(n \rightarrow \infty\). Thus, for any \(\delta\) continuous, condition (\(\textrm{D}_5\)) is satisfied, even with continuity in \(x \in \mathbb {S}^{d-1}\) for any \(P \in {\mathcal {P}}\left( {\mathbb {S}^{d-1}}\right)\).

Three different choices of the distance function \(\delta\) are considered in Pandolfo et al. (2018):

The function \(\delta _{cos}\) leads to the so-called cosine distance depth, \(\delta _{arc}\) to the arc distance depth, and \(\delta _{chord}\) to the chord distance depth. Following Pandolfo et al. (2018), we denote the cosine distance depth by \(aD_{cos}\), the arc distance depth by \(aD_{arc}\), and the chord distance depth by \(aD_{chord}\). We elaborate on the properties of these depths case by case.

3.2.1 Cosine distance depth

The cosine distance depth of \(x \in \mathbb {S}^{d-1}\) simplifies to

This depth can be seen as a variant of the angular Mahalanobis depth discussed in Sect. 3.1. In particular, just as \(aMD\), also \(aD_{cos}\) depends only on the angle of \({{\,\textrm{E}\,}}X \in {\mathbb {R}}^d\) and x represented via the inner product \(\left\langle x, {{\,\textrm{E}\,}}X \right\rangle\), and thus all its central regions are spherical caps oriented in the direction of \({{\,\textrm{E}\,}}X\), given that \({{\,\textrm{E}\,}}X \ne 0 \in {\mathbb {R}}^d\). A distinctive feature of this depth is that it may happen that \({{\,\textrm{E}\,}}X = 0 \in {\mathbb {R}}^d\), which is the only case when \(aD_{cos}\) is constant on \(\mathbb {S}^{d-1}\). Thus, strictly speaking, the cosine distance depth satisfies all (\(\textrm{D}_1\))–(\(\textrm{D}_5\)) (with condition (\(\textrm{D}_5\)) satisfied even in the sense of continuity rather than semi-continuity), but the depth itself is based solely on the expectation \({{\,\textrm{E}\,}}X\) and shares all the shortcomings of \(aMD\). Of course, \(aD_{cos}\) fails to obey both (\(\textrm{D}_6\)) and (\(\textrm{D}_7\)).

3.2.2 Arc distance depth

Already for \(d=2\), the arc distance depth

fails to be unimodal, in the sense that the set of (local, or global) maxima of the depth may fail to form a connected subset of the unit circle \(\mathbb {S}^{1}\). In fact, a stronger statement can be shown. In the proof of the following theorem, given in Appendix B.4, we derive an explicit expression for the arc distance depth of an arbitrary distribution \(P \in {\mathcal {P}}\left( {\mathbb {S}^{1}}\right)\).

Theorem 4

There exists a distribution \(P \in {\mathcal {P}}\left( {\mathbb {S}^{1}}\right)\) such that the depth \(aD_{arc}\left( \cdot ;P\right)\) attains infinitely many local extremes on \(\mathbb {S}^{1}\).

Our Theorem 4 immediately shows that conditions (\(\textrm{D}_3\)) and (\(\textrm{D}_6\)) cannot be valid for \(aD_{arc}\). We now illustrate the statement of Theorem 4 in a concrete example.

Example 1

We first define distribution \(Q \in {\mathcal {P}}\left( {{\mathbb {R}}^2}\right)\) that is supported in two parallel straight lines \(L_+ = \left\{ \left( x,1\right) \in {\mathbb {R}}^2 :x \in {\mathbb {R}}\right\}\) and \(L_- = \left\{ \left( x,-1\right) \in {\mathbb {R}}^2 :x \in {\mathbb {R}}\right\}\). We fix two constants: \(K > 0\) and a positive integer \(m \ge 1\). Inside \(L_+\), the measure Q is uniform on the interval \(A = [-K, K] \times \{1\}\), with \(Q(A) = 1/2\). Inside \(L_-\), we take the finite set of m points in the interval \([-K,K] \times \{-1\}\) given by the sequence \(\left\{ y_j \right\} _{j=1}^m\). We take \(y_j = (- K - \ell /2 + j \, \ell ,-1) \in L_-\), where \(\ell = 2\, K/m\) is the distance between two consecutive points of the sequence, putting \(Q(\{y_j\}) = 1/(2\,m)\) for each \(j = 1, \dots , m\). The circular distribution \(P \in {\mathcal {P}}\left( {\mathbb {S}^{1}}\right)\) is defined as the image of Q under the projection \(\xi :{\mathbb {R}}^2{\setminus }\{0\} \rightarrow \mathbb {S}^{1} :x \mapsto x/\left\| x \right\|\). According to the detailed discussion given in Appendix B.5, the arc distance depth of P possesses at least m local extremes in \(\mathbb {S}^{1}\).

To illustrate this result, we took \(K = 5\) and \(m = 10\) in Fig. 1. We sampled \(n = 10~000\) independent random points from \(P \in {\mathcal {P}}\left( {\mathbb {S}^{1}}\right)\) and computed the sample arc distance depth of all the points in a dense grid in \(\mathbb {S}^{1}\). The resulting sample arc distance depth is displayed in the bottom panel of Fig. 1. As we see, the depth exhibits a rather wild behavior. On both the upper and lower half-circle, it possesses \(m = 10\) clearly distinguished local extremes (local maxima in the upper half-circle, and local minima in the lower half-circle). In between these local extremes, the depth changes drastically. Denote by \(F_+\) (\(F_-\)) the (one-dimensional) distribution function of Q restricted to the line \(L_+\) (\(L_-\)). As shown in Appendix B.5, the local extremes correspond exactly to points where the two distribution functions \(F_+\) and \(F_-\) intersect (upper panel of Fig. 1). This corroborates empirically our finding from Theorem 4.

Note that the fact that the measure P has atoms is not important in our example; instead of the m atoms on the lower half-circle, one could equally well take for \(Q_-\) a mixture of m Gaussian distributions with means \(y_j\), \(j=1,\dots ,m\), respectively, and variances that are chosen small enough. In that case, the resulting distribution P can be taken smooth w.r.t. the Lebesgue measure on the unit circle, yet its arc distance depth will be quite similar to that in Fig. 1. For comparison, in Fig. 2 we display also the chord distance depth, and the cosine distance depth of the same dataset. While the chord distance depth does possess several local extremes, the cosine distance depth has a single (both local and global) maximum at the sample version of the directional mean \({{\,\textrm{E}\,}}X/\left\| {{\,\textrm{E}\,}}X \right\|\) of the dataset. That is in accordance with our analysis from Sect. 3.2.1.

Arc distance depth and Example 1. Upper panel: The empirical versions of the distribution functions \(F_+\) (black line) and \(F_-\) (red line) of the components of Q, respectively, with \(K = 5\) and \(m = 10\). Lower panel: The sample arc distance depth of all points \(x(\theta ) = \left( \cos (\theta ), \sin (\theta ) \right)\) of the unit circle \(\mathbb {S}^{1}\) as a function of their angle \(\theta \in [-\pi ,\pi )\). As can be seen, the arc distance depth behaves quite wildly, with several local extremes. The sample points are indicated as the small points at the bottom of the lower panel (color figure online)

The chord distance depth (upper panel) and the cosine distance depth (lower panel) for the dataset from Example 1. For a description see the caption of Fig. 1. For the chord distance depth we observe several local extremes, similarly as in Fig. 3 below. For the cosine distance depth we observe only a single maximum at the angle corresponding to the circular sample mean of the dataset

It is interesting to observe that the set of arc distance depth medians is exactly the set of all Fréchet medians obtained by minimizing (9). Indeed, the objective function in (9) is directly \(\varphi (x) = {{\,\textrm{E}\,}}\arccos \left( \left\langle x, X \right\rangle \right) = \pi - aD_{arc}(x;P)\). Condition (\(\textrm{D}_2\)) therefore translates into a natural question whether any distribution that is rotationally symmetric around \(\mu \in \mathbb {S}^{d-1}\) must contain \(\mu\) in the set of its Fréchet medians. In the following example, we show that already for \(d=2\) this is not necessarily true.

Example 2

Take \(P \in {\mathcal {P}}\left( {\mathbb {S}^{1}}\right)\) supported in four atoms

for some \(p \in (0,1)\) and \(\varepsilon , \eta \in [0,\pi /2]\). Any such distribution is rotationally symmetric around the axis given by \(\mu = \left( 0,1\right) \in \mathbb {S}^{1}\). By Theorem 2, we thus know that \(aD_{cos}\left( \cdot ; P\right)\) is also symmetric around the axis given by \(\mu\). Take \(x(\theta ) = \left( \cos (\theta ), \sin (\theta ) \right) \in \mathbb {S}^{1}\) to be a point given by its angle \(\theta \in [-\pi /2, \pi /2]\). It is easy to evaluate the objective function (9) at \(x(\theta )\) directly. The function takes the form

where \(\rho (t) = \min \left\{ \left| t \right| , 2\,\pi - \left| t \right| \right\}\) is the length of the shorter arc from the point x(t) to \(x(0) = \left( 1,0\right) \in \mathbb {S}^{1}\). Direct computation gives that for, e.g., \(p = 7/10\), \(\eta = \pi /5\) and \(\varepsilon = \pi /4\) we get

In particular, it is directly seen that the set of Fréchet medians of P is exactly the pair of points \(\left\{ x(\eta ), x(\pi /2 - \eta ) \right\}\), and this set contains neither \(\mu = x(\pi /2)\), nor \(-\mu = x(-\pi /2)\). Consequently, condition (\(\textrm{D}_2\)) is not in general satisfied for the arc distance depth.

The arc distance depth verifies (\(\textrm{D}_4\)) thanks to the following more general result. It is proved in Appendix B.6.

Theorem 5

Consider a distance-based depth \(aD_\delta\) from (11) with a function \(\delta\) that satisfies for some constant \(c> 0\) the condition

Then for all \(P \in {\mathcal {P}}\left( {\mathbb {S}^{d-1}}\right)\) and \(x \in \mathbb {S}^{d-1}\) we have that

In particular, condition (\(\textrm{D}_4\)) is satisfied for \(aD_\delta\).

Applying Theorem 5 to the arc distance depth with \(c = \pi\) we obtain

Note, however, that this is a much stronger condition than (\(\textrm{D}_4\)). It is in fact hardly desirable for an angular depth that (12) is true; it says that knowing the angular depth on any hemisphere of \(\mathbb {S}^{d-1}\), we can determine the exact depth for the remaining points of \(\mathbb {S}^{d-1}\). This is a property not unlike the problem with the constancy of the angular depths satisfying condition (\(\textrm{D}_6\)) at a hemisphere, as discussed in Theorem 3.

3.2.3 Chord distance depth

Consider again the measure \(P \in {\mathcal {P}}\left( {\mathbb {S}^{1}}\right)\) from Example 2 and apply \(aD_{chord}\). A computation completely analogous to that performed in Example 2 shows that the chord distance depth median set of P is given by \(\left\{ x(\eta ), x(\pi /2 - \eta ) \right\}\), just as for the arc distance depth. At the same time, the antipodal points of the median set do not minimize the chord distance depth of P; the unique minimizer of \(aD_{chord}(\cdot ;P)\) is, in fact, the direction \(-\mu = \left( 0,-1\right) \in \mathbb {S}^{1}\). Thus, the chord distance depth verifies neither (\(\textrm{D}_2\)) nor (\(\textrm{D}_4\)).

Further, we show that \(aD_{chord}\) and its central regions (2) violate also (\(\textrm{D}_3\)) and (\(\textrm{D}_6\)). Even worse, already for quite simple distributions, the corresponding chord distance depth median set does not have to be a connected set in \(\mathbb {S}^{d-1}\). To see this, consider a dataset of four points

This dataset is contained in the northern hemisphere \(\mathbb {S}^{2}_+\). A direct calculation gives the exact expression for the chord distance depth of each point \(x \in \mathbb {S}^{2}_+\), w.r.t. the atomic measure \(P \in {\mathcal {P}}\left( {\mathbb {S}^{2}}\right)\) which assigns mass 1/4 to each of the four points \(x_i\). This expression is maximized at two distinct points in \(\mathbb {S}^{2}_+\), located at

We visualize the present setup using the projection

of the northern hemisphere of \(\mathbb {S}^{2}\) into its tangent plane \(H = \left\{ (x_1, x_2, 1) \in {\mathbb {R}}^3 :x_1, x_2 \in {\mathbb {R}}\right\}\). The map (13) is a special case of the gnomonic projection \(\Pi\) described in Appendix A. It is not difficult to see that (13) maps great circles in \(\mathbb {S}^{2}\) onto straight lines in H. In particular, \(\Pi\) preserves convexity and connectedness of sets. Several chord distance depth contours and the two different median points are displayed in Fig. 3. From the figure, we see that the central regions of the chord distance depth have to be neither convex nor connected. Also, several distinct median points can occur, even for very simple datasets. Consequently, both conditions (\(\textrm{D}_3\)) and (\(\textrm{D}_6\)) are violated for the chord distance depth.

Several contours of the chord distance depth \(aD_{chord}(\cdot ;P)\) for P the uniform distribution in the four atoms displayed as black points. In the figure, the whole setup was projected using (13) from the open northern hemisphere of \(\mathbb {S}^{2}\) into the plane H tangent to \(\mathbb {S}^{2}\) at the northern pole \((0,0,1) \in \mathbb {S}^{2}\); for details see Appendix A.2. The two median points of the chord distance depth are displayed in orange. As can be seen, for the chord distance depth, the central regions are neither spherical (star) convex nor connected, in general (color figure online)

3.3 Angular simplicial depth

The angular simplicial depth of \(x \in \mathbb {S}^{d-1}\) w.r.t. \(P \in {\mathcal {P}}\left( {\mathbb {S}^{d-1}}\right)\) is defined as

where \(X_1, \dots , X_d\) are independent random variables distributed as P defined on \(\left( \Omega , {\mathcal {F}}, {\textsf{P}}\right)\), and \(\textrm{sconv}\left( A\right)\) is the spherical closed convex hull of the set \(A \subseteq \mathbb {S}^{d-1}\) defined as

for \(\textrm{rad}\left( A\right)\) the radial extension of the set A defined in (8). In words, \(\textrm{sconv}\left( A\right)\) is the intersection of \(\mathbb {S}^{d-1}\) with the closed convex hull of the positive cone of A. In typical situations, e.g. if all the variables \(X_1, \dots , X_d\) are contained in a hemisphere, the set \(\textrm{sconv}\left( \left\{ X_1, \dots , X_{d}\right\} \right)\) constitutes a spherical simplex, e.g. the shorter arc between \(X_1\) and \(X_2\) for \(d=2\), or a spherical triangle determined by \(X_1, X_2, X_3\) for \(d=3\). This also motivates the name angular simplicial depth, in analogy with the simplicial depth in linear spaces (Liu 1990). This parallel can be used to show that just like the simplicial depth, also its angular variant satisfies (\(\textrm{D}_5\)). The proof can be found in Appendix B.7. It uses the fact that (\(\textrm{D}_1\)) is true for \(asD\), which has been observed already by Liu and Singh (1992).

Theorem 6

The function \(\mathbb {S}^{d-1}\rightarrow [0,1] :x \mapsto asD(x; P)\) is upper semi-continuous for any \(P \in {\mathcal {P}}\left( {\mathbb {S}^{d-1}}\right)\).

It is, however, important to realize that in (14) it can happen that all \(X_1, \dots , X_d \in \mathbb {S}^{d-1}\) lie inside a hyperplane passing through the origin in \({\mathbb {R}}^d\), e.g. in a single great circle of \(\mathbb {S}^{d-1}\). That occurs if there are hyperplanes H passing through the origin such that \(H \cap \mathbb {S}^{d-1}\) is of positive P-mass. In those situations, the spherical convex hull of \(X_1, \dots , X_d\) is a subset of \(H \cap \mathbb {S}^{d-1}\), which bears the interpretation of a degenerate spherical simplex. In the extreme case when all \(X_j\), \(j=1,\dots ,d\), lie in a single line in direction \(u \in \mathbb {S}^{d-1}\), that is \(X_j \in \left\{ u, -u\right\}\) for all \(j = 1, \dots , d\) and not all \(X_j\) are the same direction, the set \(\textrm{sconv}\left( \left\{ X_1, \dots , X_{d}\right\} \right)\) reduces to only the two-point set \(\left\{ u, -u\right\}\). We use this simple observation to construct our next example. It demonstrates that even for rotationally symmetric distributions, the angular simplicial depth may exhibit undesirable behavior.

Example 3

Consider the uniform distribution \(P \in {\mathcal {P}}\left( {\mathbb {S}^{d-1}}\right)\) that is supported on the equator of the unit sphere \(\mathbb {S}^{d-1}_0 = \mathbb {S}^{d-1}\cap H_0\), where \(H_0 = \left\{ u \in \mathbb {S}^{d-1}:\left\langle u, \left( 0, \dots , 0, 1 \right) \right\rangle = 0 \right\}\). For \(d = 2\), this amounts to the uniform distribution on the pair of points \(\left( 1,0\right) , \left( -1,0\right) \in \mathbb {S}^{1}\). For \(d=3\) we have the uniform probability measure on the equator of \(\mathbb {S}^{2}\), which is the great circle \(\left\{ \left( u_1, u_2, 0\right) \in \mathbb {S}^{2} :u_1^2 + u_2^2 = 1 \right\}\). Since any simplex formed by independent random points \(X_1, \dots , X_d\) sampled from P lies in the hyperplane \(H_0\), we have that also \(\textrm{sconv}\left( \left\{ X_1, \dots , X_d \right\} \right) \subset H_0\) almost surely. Thus, the only points in \(\mathbb {S}^{d-1}\) that attain positive angular simplicial depth are the points on the equator \(\mathbb {S}^{d-1}_0\). On the other hand, the distribution P is clearly rotationally symmetric around the axis given by \(\mu = \left( 0,\dots ,0,1\right) \in \mathbb {S}^{d-1}\), yet since \(\mu \notin \mathbb {S}^{d-1}_0\), we have \(asD(\mu ;P) = asD(-\mu ;P) = 0\), and condition (\(\textrm{D}_2\)) is not satisfied. In addition, the rotational invariance of the simplicial depth (\(\textrm{D}_1\)) applied to the \((d-2)\)-sphere \(\mathbb {S}^{d-1}_0\) gives that the value of the angular simplicial depth of P on \(\mathbb {S}^{d-1}_0\) is a constant denoted by \(c_d > 0\). But, \(\mathbb {S}^{d-1}_0\) is antipodally symmetric, meaning that neither condition (\(\textrm{D}_3\)) nor (\(\textrm{D}_4\)) can be satisfied for \(asD\) in general. To see that, it is enough to take any geodesic joining a point \(x_0 \in \mathbb {S}^{d-1}_0\) with its antipodal reflection \(x_1 = - x_0 \in \mathbb {S}^{d-1}_0\) that does not lie entirely inside \(\mathbb {S}^{d-1}_0\). At the endpoints \(x_0, x_1 \in \mathbb {S}^{d-1}_0\) we have \(asD(x_0;P) = asD(x_1;P) = c_d > 0\), while \(asD(x;P) = 0\) for each x on the rest of the geodesic.

For more contrived examples of distributions P in \(\mathbb {S}^{d-1}\) that make \(asD\) violate conditions (\(\textrm{D}_2\)), (\(\textrm{D}_3\)), or (\(\textrm{D}_6\)), one could design P to lie in a hemisphere and use the gnomonic projection from Appendix A.2 to transfer \(asD\) from \(\mathbb {S}^{d-1}\) to the usual simplicial depth in \({\mathbb {R}}^{d-1}\). Several examples of distributions in \({\mathbb {R}}^{d-1}\) for which the simplicial depth violates (\(\textrm{L}_2\)), (\(\textrm{L}_3\)), or (\(\textrm{L}_6\)) are given in Nagy (2023). All these can be naturally used also as counterexamples for \(asD\).

3.4 Angular halfspace depth

Expanding the classical Tukey’s halfspace depth \(hD\) (Tukey 1975; Donoho and Gasko 1992) from (4) to directional data, Small (1987) defined the angular halfspace depth of \(x \in \mathbb {S}^{d-1}\) w.r.t. \(P \in {\mathcal {P}}\left( {\mathbb {S}^{d-1}}\right)\) to be

where \({\mathcal {H}}_0 = \left\{ H_{0,u} :u \in \mathbb {S}^{d-1}\right\}\) is the set of those closed halfspaces

in \({\mathbb {R}}^d\) that contain the origin \(0 \in {\mathbb {R}}^d\) on their boundary and possess inner normal vector \(u \in \mathbb {S}^{d-1}\). The angular halfspace depth has been studied in Liu and Singh (1992), where several of its properties have been discussed.

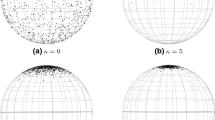

Since that pioneering work, however, the angular halfspace depth has not received much attention in the literature, mainly for two reasons: (i) due to the problem of \(ahD\) being constant on a hemisphere of minimal P-mass (Liu and Singh 1992, Proposition 4.6, see also our Theorem 3), and (ii) because of the perceived high computational cost of \(ahD\) for random samples (Pandolfo et al. 2018). The latter difficulty was recently fully resolved by making substantial progress on the computational front (Dyckerhoff and Nagy 2023). The problem with the constancy of \(ahD\) on a hemisphere is tightly connected with the quasi-concavity of \(ahD\) and our condition (\(\textrm{D}_6\)); directly from the definition (15) it is easy to see that the hemisphere of constant \(ahD\) will be (any) open hemisphere with smallest P-mass. The latter drawback can be an issue in classification studies. As will be illustrated shortly in Sect. 4, in applications where each training set belongs to a hemisphere, the angular halfspace depth can be successfully adopted. If the data does not satisfy this condition, strategies specifically designed to cope with the problem of constancy of the \(ahD\) are required. Possible approaches will be mentioned in Sect. 5 below, and investigated in a follow-up work on the practice of depth-based classification of directional data.

The theoretical properties of the angular halfspace depth have been recently studied in Nagy and Laketa (2023). They can be shown to align closely with the properties of the standard halfspace depth in linear spaces (Donoho and Gasko 1992; Rousseeuw and Ruts 1999). In particular, in Nagy and Laketa (2023) it is proved that \(ahD\) satisfies all conditions (\(\textrm{D}_1\))–(\(\textrm{D}_7\)), including the debatable condition of full quasi-concavity (\(\textrm{D}_6\)). This reinforces the idea that the angular halfspace depth is also interesting in the context of the classification of directional data (at least, provided that the constancy problem is properly addressed).

4 Supervised classification using angular depths

This section aims at illustrating the main implications of the theoretical results derived above when the goal is to classify directional data. More specifically, the focus will be on the desired properties of non-rigidity of central regions (\(\textrm{D}_7\)), and of quasi-concavity (\(\textrm{D}_6\)) entailing spherical convexity of the depth regions. Simulated and real data are exploited to highlight these implications.

As discussed throughout this paper, a large body of research exists on using statistical depth functions in the supervised classification task. The most frequently used depth-based tools are the max-depth and the DD-classifiers (Li et al. 2012). As the latter generalizes the former, we focus on DD-classification, and start by briefly recalling its use within the directional setting in Sect. 4.1. The performance of DD-classifiers depends on the particular depth function used. For that reason, Sects. 4.2–4.4 investigate the classifier performances in terms of misclassification rates when associated with different angular depth functions. Three main scenarios are investigated. First, the importance of the desired property (\(\textrm{D}_7\)) is underlined by considering a case where the optimal discriminating boundary on the sphere is not a great circle (Sect. 4.2). Then, the advantages and disadvantages of property (\(\textrm{D}_6\)) are contrasted in Sects. 4.3 and 4.4. For each scenario, performances of the angular distance-based, the angular halfspace, and the angular simplicial depths are compared. For the angular simplicial depth, however, it must be mentioned that severe computational issues hamper its use in practice (see e.g. the discussion in Pandolfo and D’Ambrosio 2021); its computation is practically feasible only for training sets with sizes less than 400 observations. Hence, the results for the angular simplicial depth are provided only for smaller training set sizes. In addition, the angular Mahalanobis depth is not considered in our simulations because of its similarity with the angular cosine depth, see Sect. 3.2.1.

In a general classification problem in a measurable space \({\mathcal {S}}\), we aim to distinguish between points sampled from one of \(G \ge 2\) distinct probability measures \(\left\{ P_i \right\} _{i=1}^G\subset {\mathcal {P}}\left( {\mathcal {S}}\right)\). A generic classifier in \({\mathcal {S}}\) can be defined as any function \(\textrm{class}:{\mathcal {S}} \rightarrow \{1,2, \dots , G\}\), which associates a point \(x \in {\mathcal {S}}\) with one of the distributions \(P_i \in {\mathcal {P}}\left( {\mathcal {S}}\right)\) for \(i = \textrm{class}(x)\).

Our depth-based classification results will be benchmarked against the empirical Bayes classifier assuming data comes from the family of Kent distributions. Assuming equal priors, the Bayes classifier is generally defined as

where \(f_i\) is the density which, by assumption, corresponds to the distribution \(P_i\). Note that the classifier (16) is optimal as it reaches the minimum achievable average misclassification rate, if the densities \(f_i\) are known.

Kent’s family is a special case of the Fisher-Bingham distribution, whose probability density function on \(\mathbb {S}^{d-1}\) is given by

where \(\mu \in \mathbb {S}^{d-1}\) and \(\kappa > 0\) are the location and concentration parameters, respectively, A is a \(d \times d\) symmetric matrix, and \(a(\kappa ,A) > 0\) is a normalizing constant. To obtain a Kent distribution, the eigenvalues of A are constrained to be \(\lambda _1=0\) and \(\lambda _2=-\lambda _3\), and we fix \(|\lambda _2| < \kappa /2\) to have a unimodal distribution.

Kent’s distribution is a quite general distribution on the sphere. Partially, it mimics the contours of the bivariate normal distribution. Plugging (17) into (16), we obtain the empirical Bayes classifier under Kent. It takes the form

where \(\hat{\mu }_i\), \(\hat{\kappa }_i\), \(\hat{A}_i\) are the maximum likelihood estimates of the parameters \(\mu\), \(\kappa\) and A, respectively, obtained from the i-th training set (the set of observations labeled empirical as belonging to \(P_i\)).

4.1 The DD-classifier

A directional depth-based classifier is a particular classifier that takes the form

where \(x \in \mathbb {S}^{d-1}\) is a new observation to be classified, \(aD(x; P_{i})\) is an angular depth of x w.r.t. the distribution \(P_{i} \in {\mathcal {P}}\left( {\mathbb {S}^{d-1}}\right)\), and \(r :[0, \infty )^G \rightarrow \{1, 2, \dots , G\}\) is a discriminating function in the depth space. In practice, of course, the true distributions \(P_i \in {\mathcal {P}}\left( {\mathbb {S}^{d-1}}\right)\) are not known, and each \(P_i\) is in (18) replaced by the corresponding empirical measure of a random sample of data labeled as coming from \(P_i\). We denote such an empirical measure by \(\widehat{P}_i \in {\mathcal {P}}\left( {\mathbb {S}^{d-1}}\right)\).

In (18), the choice of the depth function \(aD\) and of the discriminating function r matters. As for the latter, three functions have been considered in the literature when the data are directional (Demni et al. 2021; Pandolfo and D’Ambrosio 2021):

-

The linear discriminant (LDA);

-

The quadratic discriminant (QDA); and

-

The k-nearest neighbors discriminant function (KNN).

Each of these methods discriminates between groups directly in the DD-space of depths, which is a subset of \([0,\infty )^G\), irrespective of the dimension \((d-1)\) of the directional data in \(\mathbb {S}^{d-1}\). This gives rise to three groups of DD-classifiers based on angular depths that we explore in what follows.

As for the choice of the angular depth function in (18), in the literature we find only classifiers using the collection of distance-based angular depths (Demni et al. 2021; Pandolfo and D’Ambrosio 2021). The adoption of distance-based angular depths in the situation when the underlying distributions in \(\mathbb {S}^{d-1}\) fail to be rotationally symmetric is, however, questionable (Demni and Porzio 2021). For the sake of illustration, in what follows, directional DD-classifiers are investigated for data in \(\mathbb {S}^{2}\) and in the case of two classes, i.e., \(G = 2\).

4.2 Discriminating boundary is not a great circle: property (\(\textrm{D}_7\)) matters

The non-rigidity of central regions allows depth functions to consider the geometry of the data. In particular, if a depth does not satisfy condition (\(\textrm{D}_7\)), it will perform poorly whenever the optimal discriminating boundary is not a great circle. To illustrate this, data were generated according to two specific settings for which the optimal Bayes discriminating boundary does not simplify to a great circle (Kent and Mardia 2013):

-

Setup 1: \(P_1\) and \(P_2\) from Kent, difference only in location. The two groups come from two different rotations of the same Kent distribution \(P_0\), for which we set \(\mu _{0}=(0,0,1) \in \mathbb {S}^{2}\), \(\kappa _{0}=25\), and \(A_{0}={{\,\textrm{diag}\,}}(0,8,-8)\) is a diagonal matrix in \({\mathbb {R}}^{3 \times 3}\). The distribution \(P_1\) is obtained by rotating \(P_{0}\) by a rotation matrix R such that \(\mu _1 = R \mu _{0}\), with \(\mu _1= (\sin (\pi /6) \cos (\pi /4), \sin (\pi /6)\sin (\pi /4), \cos (\pi /6)) \in \mathbb {S}^{2}\). The distribution \(P_2\) is obtained by rotating the \(P_{0}\) through the rotation matrix \(R^{\textsf{T}}\). Random samples from \(P_1\) and \(P_2\) are depicted in black and in red in Fig. 4 (left panel).

-

Setup 2: \(P_1\) and \(P_2\) from Kent, difference in location and concentration. Data are simulated by adopting the same procedure as in Setup 1, using the same rotation matrix R. However, the second group comes from a rotation (by \(R^{\textsf{T}}\)) of a Kent distribution \(P_0\) as in Setup 1 but with concentration \(\kappa =20\). A training set from these distributions is plotted in Fig. 5 (left panel).

Within each setup, 100 training sets of size \(n_{tr} \in \{100, 200, 400\}\) were generated (with each group size given by \(n_{tr}/2\)). Then, for each of the 100 training sets, 100 testing sets of size \(n_{test} \in \{50, 100, 200\}\) (\(n_{test}/2\) per each group) were generated and classified.

Section 4.2: Discriminating boundary is not a great circle, Setup 1. Kent distributions with a difference in location. A spherical plot of a single training set (left panel) and boxplots of empirical misclassification rates (right panel). Each boxplot reports the performance of a different classifier: the empirical Bayes under Kent (EBk), the cosine DD-classifiers (CDD), the chord DD-classifiers (ChDD), the arc distance DD-classifiers (ADD) and the angular halfspace DD-classifiers (HDD). For each angular depth, three classification rules were applied in the DD-space (LDA, QDA, and KNN, respectively) (color figure online)

Section 4.2: Discriminating boundary is not a great circle, Setup 2. Kent distributions with a difference in location and in concentration. A spherical plot of a single training set (left panel) and boxplots of empirical misclassification rates (right panel). Each boxplot reports the performance of a different classifier: the empirical Bayes under Kent (EBk), the cosine DD-classifiers (CDD), the chord DD-classifiers (ChDD), the arc distance DD-classifiers (ADD) and the angular halfspace DD-classifiers (HDD). For each angular depth, three classification rules were applied in the DD-space (LDA, QDA, and KNN, respectively) (color figure online)

Section 4.3: Ellipse-like density contours, Setup 3. Kent distributions with a difference in location, concentration, and orientation. A spherical plot of a single training set (left panel) and boxplots of empirical misclassification rates (right panel). Each boxplot reports the performance of a different classifier: the empirical Bayes under Kent (EBk), the cosine DD-classifiers (CDD), the chord DD-classifiers (ChDD), the arc distance DD-classifiers (ADD) and the angular halfspace DD-classifiers (HDD). For each angular depth, three classification rules were applied in the DD-space (LDA, QDA, and KNN, respectively) (color figure online)

Section 4.3: Ellipse-like density contours, Setup 4. Scaled von Mises distributions with differences in location and shape. A spherical plot of a single training set (left panel) and boxplots of empirical misclassification rates (right panel). Each boxplot reports the performance of a different classifier: the empirical Bayes under Kent (EBk), the cosine DD-classifiers (CDD), the chord DD-classifiers (ChDD), the arc distance DD-classifiers (ADD), and the angular halfspace DD-classifiers (HDD). For each angular depth, three classification rules were applied in the DD-space (LDA, QDA, and KNN, respectively) (color figure online)

The performance of the classifiers is evaluated in terms of empirical misclassification rates, i.e. the proportion of misclassified observations in each replication. For each simulation setting and each classifier, the average misclassification rates for different training set sizes are given in Tables 2 and 3. The distribution of the misclassification rates for training set size \(n_{tr}=400\) is also summarized through the boxplots in Figs. 4 and 5 (right panels). Given their much higher computational cost, the angular simplicial DD-classifiers were not included in the comparison with \(n_{tr}=400\).

Generally speaking, our results highlight the importance of property (\(\textrm{D}_7\)): the DD-classifier based on the cosine depth performs relatively poorly when compared to the others. The same would have happened if the angular Mahalanobis depth had been considered. We also note that, for \(n_{tr}=400\), the angular halfspace depth DD-classifiers associated with LDA and KNN perform quite well and outperform all distance-based DD-classifiers in both Setups 1 and 2 (Figs. 4 and 5, right panels).

The average misclassification rates recorded for different values of the training set size (Tables 2, 3, 4) confirm our results described for \(n_{tr}=400\). Worth noting is that the angular simplicial depth, which is computationally prohibitive, does not fare better overall than the angular halfspace depth within this scenario.

4.3 Ellipse-like density contours: property (\(\textrm{D}_6\)) matters

Spherical convexity of the angular depth central regions ensures the ability to capture ellipse-like data structure on (hemi)spheres. As a consequence, depth functions fulfilling condition (\(\textrm{D}_6\)) should provide good performances if the underlying density has ellipse-like density contours. In our next setup, data were drawn from the Kent and the scaled von Mises-Fisher distribution. While both of them possess ellipse-like contours, the latter exhibits ellipses with a higher level of eccentricity.

The family of scaled von Mises-Fisher distributions (Scealy and Wood 2019) on \(\mathbb {S}^{d-1}\) is generated by applying a bijective transformation of the sphere \(\mathbb {S}^{d-1}\) to itself. The probability density function of the scaled von Mises-Fisher distribution w.r.t. the spherical Lebesgue measure on \(\mathbb {S}^{d-1}\) takes the form

where \(c_d(\kappa ) > 0\) is a normalizing constant, \(\{\mu ,\gamma _2,\dots ,\gamma _d\} \subset {\mathbb {R}}^d\) are location parameters, \(a_1,a_2,\dots ,a_d > 0\) are shape parameters satisfying \(\prod _{j=2}^{d} a_j = 1\), and \(\kappa > 0\) is a concentration parameter.

The following two simulation settings were considered.

-

Setup 3: \(P_1\) and \(P_2\) from Kent, difference in location, concentration, and orientation. For \({P}_1\) we have \(\mu _1=(1,0,0) \in \mathbb {S}^{2}\), \(\kappa _1=25\) and \(A_1={{\,\textrm{diag}\,}}(0,8,-8)\). Data from \(P_2\) are obtained by transforming data sampled from a Kent distribution with \(\mu =(-1,0,0) \in \mathbb {S}^{2}\), \(\kappa =80\) and \(A={{\,\textrm{diag}\,}}(0,-40,40)\) to polar coordinates, and slightly perturbing the first polar coordinate direction (by adding a constant corresponding to an angle \(\pi /18\)). Random samples from these distributions can be seen in Fig. 6 (left panel).

-

Setup 4: \(P_1\) and \(P_2\) from scaled von Mises-Fisher, difference in location and shape. Distribution \({P}_1\) has mean direction \(\mu _1=(0,0,1) \in \mathbb {S}^{2}\), and \({P}_2\) has \(\mu _2=(0.43,-0.9,0) \in \mathbb {S}^{2}\), respectively, and concentration levels \(\kappa _1=\kappa _2=5\). The shape parameters are set to be \(a_1 = 1\), \(a_2 = 10\), \(a_3 = 0.1\) for \(P_1\) and \(a_1 = a_2 = a_3 = 1\) for \(P_2\). Random samples from the two distributions are given in Fig. 7 (left panel).

Results for both setups, for \(n_{tr} = 400\), are summarized through boxplots (Figs. 6 and 7, right panels). Misclassification rates are obtained over 100 independent runs. Tables 4 and 5 also report the average misclassification rates for \(n_{tr} = 100\), and \(n_{tr} = 200\), with values obtained when also the angular simplicial depth is adopted for these latter cases.

Overall, we found the angular halfspace depth working quite, if not extremely well. Worth noting is the case of the scaled von Mises-Fisher distributions (Setup 4). There, the classifier based on the angular halfspace depth is able to outperform even the empirical Bayes under Kent. Interestingly, the Kent distribution is known to share quite similar properties to the scaled von Mises-Fisher (Scealy and Wood 2019). Adopting the angular halfspace depth thus seems to be quite beneficial if minor departures from the assumed distribution are present (which can be well the case for real data applications).

4.4 Data not confined to a hemisphere: property (\(\textrm{D}_6\)) hampers

Spherical convexity of central regions (\(\textrm{D}_6\)) helps a lot when rotational symmetry cannot be assumed, and when the geometry of P is ellipse-like. On the other hand, this same blessed property brings damnation — by Theorem 3, (\(\textrm{D}_6\)) implies constancy over a hemisphere. That is clearly an undesirable feature in classification studies of directional data.

To illustrate, we present a small real data example. The problem of detecting cardiac arrhythmia from Electrocardiogram (ECG) waves is considered. More specifically, waves are dealt with as angular variables, and we select two of the waves used in Demni (2021), recorded for 430 patients: the QRST-wave and the T-wave. The first describes the global ventricular repolarization while the second corresponds to the rapid repolarization of the contractile cells.

The analysis aims to distinguish normal vs. arrhythmia cases. A visualization of the two angular variables on \(\mathbb {S}^{2}\) for 245 normal patients (depicted in black) and for 185 patients having arrhythmia (depicted in red) is given in Fig. 8 (left panel).

A simulation study was then carried on, and — to evaluate the performance of the different classifiers — a threefold stratified cross-validation method was adopted (the percentage of samples from each class was preserved). The experiment was repeated 100 times. The obtained distribution of the misclassification rates of the empirical Bayes under Kent, the distance-based DD-classifiers, and the angular halfspace DD-classifiers are provided through box-plots in Fig. 8 (right panel). The angular simplicial depth was excluded because of the size of the training set.

The distance-based DD-classifiers achieved the best overall performance, especially the chord DD-classifiers and the arc distance DD-classifiers when associated with the linear discriminant rule (LDA). Those methods performed even better than the empirical Bayes under Kent. The reason for that is that the data do not follow an ellipse-like geometry.

On the other hand, the DD-classifiers based on the angular halfspace depth, which is the only depth satisfying condition (\(\textrm{D}_6\)), provide the worst performance. This happens because the two groups have most of the points quite overlapping, and also the corresponding hemispheres with the smallest empirical probability do overlap substantially. As a result, points lying in the intersection of these hemispheres cannot be well classified.

Section 4.4: Data not confined to a hemisphere, real data example. A spherical plot of the angular variables QRST- and T-waves for healthy patients (in black) and patients with arrhythmia (in red, left panel), and boxplots of empirical misclassification rates (right panel). Each boxplot reports the performance of a different classifier: the empirical Bayes under Kent (EBk), the cosine DD-classifiers (CDD), the chord DD-classifiers (ChDD), the arc distance DD-classifiers (ADD), and the angular halfspace DD-classifiers (HDD). For each angular depth, three classification rules were applied in the DD-space (LDA, QDA, and KNN, respectively) (color figure online)

5 Final remarks

We have discussed the use of angular depth functions within a classification setting. Our viewpoint was mainly theoretical, but with a perspective of potential applications in classification and data analysis. With that aim, desirable properties that angular depth functions should enjoy were first provided. The most commonly used angular depths (angular Mahalanobis, distance-based, simplicial, halfspace) were compared w.r.t. these properties. It turned out that the angular halfspace depth is the only function that enjoys all the listed properties, including the condition of its central regions being spherical convex.

To illustrate our main findings, we used several small simulation exercises and a real dataset. We highlighted that (i) if the optimal discriminating boundary is not a great circle, depths not satisfying the non-rigidity property are not apt to be used for classification purposes; and (ii) if the data structure is ellipse-like, depths satisfying the quasi-concavity property should be adopted; but (iii) for data that lie in the whole sphere, depths satisfying the quasi-concavity property may perform poorly.

From a data analysis point of view, the following guidelines arise. First, avoid using the cosine distance and the Mahalanobis angular depths, unless the data comes from a rotationally symmetric distribution with a unique Fréchet median. Second, choose the angular halfspace depth if the geometric structure of the distribution is ellipse-like. Third, do not use the plain angular halfspace depth if the data are distributed sparsely on the whole surface of the sphere.

Our findings suggest that the angular halfspace depth deserves further attention. Certainly, in classification tasks, the angular halfspace depth is a powerful tool if data from each group lie in a hemisphere. For general directional data, however, the issue of constancy of the angular halfspace depth on hemispheres of minimum probability must be addressed. In analogy with the issues related to the halfspace depth in linear spaces, solutions that borrow ideas from the bagdistance (Hubert et al. 2015, 2017) or the illumination depth (Nagy and Dvořák 2021) can be investigated. We aim to report on those advances elsewhere.

Notes

Some depth functions, e.g., the angular Mahalanobis depth treated in Sect. 3.1 below, are not defined for all \(P \in {\mathcal {P}}\left( {\mathcal {S}}\right)\), but rather only for \(x \in {\mathcal {S}}\) and P in a subset \({\mathcal {D}} \subseteq {\mathcal {P}}\left( {\mathcal {S}}\right)\) of probability measures. We slightly abuse the notation and write (1) for simplicity.

Recall that a distribution \(P \in {\mathcal {P}}\left( {\mathbb {S}^{d-1}}\right)\) is called rotationally symmetric around a direction \(\mu \in \mathbb {S}^{d-1}\) if \(X \sim P\) has the same distribution as OX for any orthogonal matrix \(O \in {\mathbb {R}}^{d \times d}\) that fixes \(\mu\), that is \(O \mu = \mu\).

A spherical cap S is defined as an intersection of \(\mathbb {S}^{d-1}\) with a (closed) halfspace H in \({\mathbb {R}}^d\). The cap S is said to be orthogonal to the direction \(u \in \mathbb {S}^{d-1}\) of the unit normal of H.

References

Agostinelli C, Romanazzi M (2013) Nonparametric analysis of directional data based on data depth. Environ Ecol Stat 20(2):253–270

Arnone E, Ferraccioli F, Pigolotti C, Sangalli LM (2022) A roughness penalty approach to estimate densities over two-dimensional manifolds. Comput Stat Data Anal 174:107527

Berger M (2010) Geometry revealed. Springer, Heidelberg

Besau F, Werner EM (2016) The spherical convex floating body. Adv Math 301:867–901

Bry X, Cucala L (2022) A von Mises-Fisher mixture model for clustering numerical and categorical variables. Adv Data Anal Classif 16(2):429–455

Cuesta-Albertos JA, Febrero-Bande M, Oviedo de la Fuente M (2017) The \({\rm DD}^G\)-classifier in the functional setting. Test 26(1):119–142

Demni H (2021) Directional supervised learning through depth functions: an application to ECG waves analysis. In: Balzano S, Porzio GC, Salvatore R, Vistocco D, Vichi M (eds) Stat Learn Model Data Anal. Springer International Publishing, Cham, pp 79–87

Demni H, Messaoud A, Porzio GC (2021) Distance-based directional depth classifiers: a robustness study. Commun Stat Simul Comput. https://doi.org/10.1080/03610918.2021.1996603

Demni H, Porzio GC (2021) Directional DD-classifiers under non-rotational symmetry. In 2021 IEEE International conference on multisensor fusion and integration for intelligent systems (MFI), pp 1–6. IEEE

Donoho DL, Gasko M (1992) Breakdown properties of location estimates based on halfspace depth and projected outlyingness. Ann Stat 20(4):1803–1827

Dudley RM (2002) Real analysis and probability, volume 74 of Cambridge studies in advanced mathematics. Cambridge University Press, Cambridge. Revised reprint of the 1989 original

Dyckerhoff R, Nagy S (2023) Exact computation of angular halfspace depth. Under review

Figueiredo A (2009) Discriminant analysis for the von Mises-Fisher distribution. Commun Stat Simulation Comput 38(9):1991–2003

Fisher NI (1985) Spherical medians. J Roy Stat Soc Ser B 47(2):342–348

Hubert M, Rousseeuw P, Segaert P (2017) Multivariate and functional classification using depth and distance. Adv Data Anal Classif 11(3):445–466

Hubert M, Rousseeuw PJ, Segaert P (2015) Multivariate functional outlier detection. Stat Methods Appl 24(2):177–202

Jana N, Dey S (2021) Classification of observations into von Mises-Fisher populations with unknown parameters. Commun Stat Simul Comput. https://doi.org/10.1080/03610918.2021.1962347

Kent JT, Mardia KV (2013) Discrimination for spherical data. In: Mardia KV, Gusnanto A, Riley AD, Voss J (eds) LASR 2013—statistical models and methods for non-Euclidean data with current scientific applications, pp 71–74

Konen D (2022) Topics in multivariate spatial quantiles. PhD thesis, ULB

Ley C, Sabbah C, Verdebout T (2014) A new concept of quantiles for directional data and the angular Mahalanobis depth. Electron J Stat 8(1):795–816

Ley C, Verdebout T (2017) Modern directional statistics. CRC Press, Boca Raton, FL, Chapman & Hall/CRC Interdisciplinary statistics series

Li J, Cuesta-Albertos JA, Liu RY (2012) \(DD\)-classifier: nonparametric classification procedure based on \(DD\)-plot. J Am Stat Assoc 107(498):737–753

Liu RY (1990) On a notion of data depth based on random simplices. Ann Stat 18(1):405–414

Liu RY, Parelius JM, Singh K (1999) Multivariate analysis by data depth: descriptive statistics, graphics and inference. Ann Stat 27(3):783–858

Liu RY, Singh K (1992) Ordering directional data: concepts of data depth on circles and spheres. Ann Stat 20(3):1468–1484

Mardia KV (1972) Statistics of directional data. Probability and mathematical statistics, no 13. Academic Press, London, New York

Mosler K, Mozharovskyi P (2017) Fast \(DD\)-classification of functional data. Stat Papers 58(4):1055–1089

Mosler K, Mozharovskyi P (2022) Choosing among notions of multivariate depth statistics. Stat Sci 37(3):348–368

Nagy S (2023) Simplicial depth and its median: selected properties and limitations. Stat Anal Data Min 16(4):374–390

Nagy S, Dvořák J (2021) Illumination depth. J Comput Graph Statist 30(1):78–90

Nagy S, Gijbels I, Omelka M, Hlubinka D (2016) Integrated depth for functional data: statistical properties and consistency. ESAIM Prob Stat 20:95–130

Nagy S, Laketa P (2023) Angular halfspace depth: Theoretical properties. Under review

Nagy S, Schütt C, Werner EM (2019) Halfspace depth and floating body. Stat Surv 13:52–118

Pandolfo G, D’Ambrosio A (2021) Depth-based classification of directional data. Exp Syst Appl 169:1144433

Pandolfo G, Paindaveine D, Porzio GC (2018) Distance-based depths for directional data. Can J Stat 46(4):593–609

Rousseeuw PJ, Ruts I (1999) The depth function of a population distribution. Metrika 49(3):213–244

Rousseeuw PJ, Struyf A (2004) Characterizing angular symmetry and regression symmetry. J Stat Plan Inference 122(1–2):161–173

Saavedra-Nieves P, Crujeiras RM (2022) Nonparametric estimation of directional highest density regions. Adv Data Anal Classif 16(3):761–796

Salah A, Nadif M (2019) Directional co-clustering. Adv Data Anal Classif 13(3):591–620

Scealy JL, Wood ATA (2019) Scaled von Mises-Fisher distributions and regression models for paleomagnetic directional data. J Am Stat Assoc 114(528):1547–1560