Abstract

Symbolic Data Analysis (SDA) is a relatively new field of statistics that extends conventional data analysis by taking into account intrinsic data variability and structure. Unlike conventional data analysis, in SDA the features characterizing the data can be multi-valued, such as intervals or histograms. SDA has been mainly approached from a sampling perspective. In this work, we propose a model that links the micro-data and macro-data of interval-valued symbolic variables, which takes a populational perspective. Using this model, we derive the micro-data assumptions underlying the various definitions of symbolic covariance matrices proposed in the literature, and show that these assumptions can be too restrictive, raising applicability concerns. We analyze the various definitions using worked examples and four datasets. Our results show that the existence/absence of correlations in the macro-data may not be correctly captured by the definitions of symbolic covariance matrices and that, in real data, there can be a strong divergence between these definitions. Thus, in order to select the most appropriate definition, one must have some knowledge about the micro-data structure.

1 Introduction

The volume and complexity of available data in virtually all sectors of society have grown enormously, driven by globalization and the widespread use of the Internet. New statistical methods are required to handle this new reality, and Symbolic Data Analysis (SDA), proposed by Diday (1987), is a promising research area.

When trying to characterize datasets, it may not be convenient to deal with the individual data observations (e.g. because the sample size is too large), or we may not have access to the individual data observations (e.g. because of privacy restrictions). In conventional data analysis, this problem is usually handled by providing single-valued summary statistics of the data characteristics (e.g. mean, variance, quantiles). The analysis can consider multiple characteristics, but these characteristics can only be single-valued. SDA extends conventional data analysis by allowing the description of datasets through multi-valued features, such as intervals, histograms, or even distributions (Billard and Diday 2003, 2006; Brito 2014). These features are called symbolic variables.

Suppose we want to analyze the textile sector by country, e.g. in terms of two characteristics: number of customers and profit. Suppose also that we only have access to summary information for each country, not to the data of each individual company. Conventional data analysis can only deal with single-valued features, such as the profit mean, the profit variance, or the mean number of customers. In SDA, instead, the features (the symbolic variables) can be multi-valued: e.g. one feature can be the interval between the minimum and maximum profits, and another can be a histogram of the number of customers.

One of the main benefits of SDA concerns the way individual data characteristics (e.g. profit or number of customers) are described. In conventional data analysis, since only single-valued features are available, many features may be needed to describe a given characteristic. Moreover, the features are treated in the same way, irrespective of the characteristic they represent. For example, to characterize the profit of the textile sector, one may create as features the mean, the variance, the maximum, the minimum, the median, the first and third quartiles, and so on. This inflates the number of features needed to describe a single data characteristic (here, the profit). SDA instead allows each data characteristic to be described by a single symbolic variable, better tailored to that specific characteristic, with potential gains in terms of dimensionality.

In SDA, the original data is called micro-data and the aggregated data is called macro-data. In the previous example, the micro-data would be the data of the individual companies (labeled with the country they belong to), and the macro-data would be, for the companies of each country, the profit interval (between the minimum and the maximum) or the histogram of the number of customers. Our main interest in this paper is interval-valued data (Noirhomme-Fraiture and Brito 2011; Zhang and Sisson 2020), where the macro-data corresponds to the interval between the minimum and maximum of the micro-data values.

SDA is a relatively new field of statistics and has been mainly approached from a sampling perspective. The works Bertrand and Goupil (2000), Billard and Diday (2006) and Billard (2008) introduced measures of location, dispersion, and association between symbolic random variables, formalized as functions of the observed macro-data values. The sample covariance (correlation) matrices were addressed in the context of symbolic principal component analysis in Chouakria (1998), Le-Rademacher (2008), Le-Rademacher and Billard (2012), Wang et al. (2012), Vilela (2015), Oliveira et al. (2017) and, more recently, in factor analysis (Cheira et al. 2017). In Oliveira et al. (2017) the authors established relationships between several proposed methods of symbolic principal component analysis and available definitions of sample symbolic variance and covariance. SDA has also addressed other areas of statistics, such as clustering (see e.g. de Carvalho and Lechevallier 2009; Sato-Ilic 2011), discriminant analysis (see e.g. Duarte Silva and Brito 2015; Queiroz et al. 2018), regression analysis (see e.g. Lima Neto et al. 2011; Dias and Brito 2017), and time series (see e.g. Maia et al. 2008; Teles and Brito 2015).

Parametric approaches for interval-valued variables have also been considered (Le-Rademacher and Billard 2011; Lima Neto et al. 2011; Brito and Duarte Silva 2012; Duarte Silva and Brito 2015; Dias and Brito 2017; Duarte Silva et al. 2018; Cheira et al. 2017). Le-Rademacher and Billard (2011) derived maximum likelihood estimators for the mean and the variance of three types of symbolic random variables: interval-valued, histogram-valued, and triangular distribution-valued variables. Lima Neto et al. (2011) formulated interval-valued variables as bivariate random vectors in order to introduce a symbolic regression model based on the theory of generalized linear models. The works Brito and Duarte Silva (2012), Duarte Silva and Brito (2015), and Duarte Silva et al. (2018) followed a different approach: the centers and the logarithms of the ranges are collected in a random vector with a multivariate (skew-)normal distribution, which is used to derive methods for the analysis of variance (Brito and Duarte Silva 2012), discriminant analysis (Duarte Silva and Brito 2015), and outlier detection (Duarte Silva et al. 2018) of interval-valued variables. More recently, the need to specify a micro-data model for the underlying macro-data was addressed by Zhang and Sisson (2020) and Beranger et al. (2020), who constructed likelihood functions for symbolic data based on how the macro-data is derived from the micro-data.

Despite previous work, SDA still lacks theoretical support, and our work is a step in this direction. Ideally, the statistical methods of SDA should be grounded in populational formulations, as in the case of conventional methods. A populational formulation allows a clear definition of the underlying statistical model and its properties, and the derivation of effective estimation methods.

In this paper, we use populational formulations of the sample symbolic mean vector, sample symbolic covariance matrices, and sample correlation matrices available in the literature. To provide an interpretation of each definition, we propose a model that links the structure of the micro-data and of the macro-data. The model assumes that the micro-data associated with a certain random interval are not observable (latent) and have a mean equal to the mean of the interval center. Using the model, we derive the assumptions on the micro-data structure underlying each definition of symbolic covariance matrix. We also show that the symbolic correlations proposed in the literature are quantities between −1 and 1, as in the conventional case, regardless of the relationship between the micro- and macro-data. Using worked examples, we discuss the meaning of null covariance and show cases where the existence/absence of correlation in the macro-data is not captured by the definitions of symbolic correlation matrices. Finally, we explore the various definitions using real data examples. When micro-data are available, we select the most appropriate definition for each dataset using goodness-of-fit tests and quantile-quantile plots, and provide an explanation of the micro-data based on the covariance matrices. However, the results show that there can be a large divergence between definitions, meaning that, in general, some information on the micro-data structure is needed to decide on the most appropriate definition.

The paper is structured as follows. In Sect. 2 we introduce the model linking the micro-data with the macro-data of interval-valued symbolic variables and discuss several worked examples. Section 3 introduces the real data examples. Finally, Sect. 4 presents the conclusions of the paper and gives some directions for future work.

2 Symbolic means, variances, and covariances

In this work, we focus on the study of interval-valued variables (Bock and Diday 2000) and interval-valued random vectors, as next defined.

Definition 1

\(X=[L,U]\) is an interval-valued random variable defined on the probability space \((\varOmega , {{\mathcal {F}}},P)\) if and only if L and U are random variables defined on \((\varOmega , {{\mathcal {F}}},P)\) such that \(P(L \le U)=1\). A p-dimensional interval-valued random vector \({\varvec{X}}\) is a vector of p interval-valued random variables, \(X_1\), \(X_2\),\(\dots \),\(X_p\), all defined on the same probability space.

Besides its interval limits L and U, an interval-valued random variable \(X=[L, U]\) can equivalently be represented by the center and range of the interval:

\[ C=\frac{L+U}{2}, \qquad R=U-L. \tag{1} \]

Similarly, a p-dimensional interval-valued random vector \({\varvec{X}}=(X_1,X_2,\ldots ,X_p)^t\), with \(X_i=[L_i,U_i]\), is characterized by the vector \(( {\varvec{C}}^t,{\varvec{R}}^t)^t\), where \({\varvec{C}} = (C_1, \dotsc , C_p)^t\) is the vector of centers and \({\varvec{R}} = (R_1, \dotsc , R_p)^t\) the vector of ranges describing the object, i.e.,

\[ C_i=\frac{L_i+U_i}{2}, \qquad R_i=U_i-L_i, \tag{2} \]

for \(i=1,2,\ldots ,p\).
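As a small illustration of the two equivalent representations in (1) and (2), the following Python sketch (NumPy; the helper names `centers_ranges` and `limits` are hypothetical, not part of any SDA library) maps interval limits to centers and ranges and back.

```python
import numpy as np


def centers_ranges(lower, upper):
    """Return centers C = (L + U)/2 and ranges R = U - L for interval limits."""
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    if np.any(lower > upper):
        raise ValueError("every interval must satisfy L <= U")
    return (lower + upper) / 2.0, upper - lower


def limits(centers, ranges):
    """Inverse map: L = C - R/2 and U = C + R/2."""
    centers = np.asarray(centers, dtype=float)
    ranges = np.asarray(ranges, dtype=float)
    return centers - ranges / 2.0, centers + ranges / 2.0


# Hypothetical 2-dimensional observation: X1 = [1, 3], X2 = [10, 14]
C, R = centers_ranges([1.0, 10.0], [3.0, 14.0])
# C = [2, 12] and R = [2, 4]; limits(C, R) recovers the original bounds
```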

In the next subsection, we start by proposing a model that establishes a natural link between micro-data and macro-data. Afterwards, we derive the means, variances, and covariances of the micro-data; we refer to these quantities as symbolic means, symbolic variances, and symbolic covariances.

2.1 A model linking micro-data with macro-data

In this model, we define a random vector \({\varvec{A}}\) representing micro-data, as a function of the random vectors of centers and ranges, \({\varvec{C}}\) and \({\varvec{R}}\), that characterize the associated macro-data, along with a random vector \({\varvec{U}}\) that characterizes the structure of the micro-data given the associated macro-data.

Note that a realization of \({\varvec{A}}\) is a point in the hyper-rectangle associated with the random interval-valued vector \({\varvec{X}}\), characterized by its center, \({\varvec{C}}\), and range, \({\varvec{R}}\). In detail, we choose \({\varvec{A}}=(A_{1},\ldots ,A_{p})^t\) such that:

\[ A_j=C_j+U_j\,\frac{R_j}{2}, \qquad j=1,2,\ldots ,p, \tag{3} \]

with the weights \(U_{j}\) being random variables with support on the interval \([-1,1]\).

We observe that \(U_{j} R_j/2\), the term added to the center \(C_j\) of the j-th component of the random macro-data to produce the j-th component \(A_j\) of the associated micro-data, is the deviation of \(A_j\) from the center of the j-th interval of the associated macro-data, \(\left[ C_j - R_j/2, C_j + R_j/2\right] \).
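A minimal simulation sketch of model (3), assuming NumPy and arbitrary illustrative distributions for the centers, ranges, and weights (none of them come from the text); the helper name `micro_from_macro` is hypothetical. It also checks that every simulated micro-data point falls inside its hyper-rectangle.

```python
import numpy as np


def micro_from_macro(C, R, U):
    """Model (3): A_j = C_j + U_j * R_j / 2, with weights U_j in [-1, 1].

    C, R and U are arrays of identical shape (n, p): one row per random
    interval-valued object and one column per variable.
    """
    C, R, U = (np.asarray(a, dtype=float) for a in (C, R, U))
    if np.any(np.abs(U) > 1):
        raise ValueError("weights must lie in [-1, 1]")
    return C + U * R / 2.0


rng = np.random.default_rng(0)
n, p = 1000, 2
C = rng.normal(size=(n, p))                # illustrative centers
R = rng.uniform(0.5, 2.0, size=(n, p))     # illustrative (positive) ranges
U = rng.uniform(-1.0, 1.0, size=(n, p))    # weights drawn independently of (C, R)
A = micro_from_macro(C, R, U)

# Every micro-data point lies inside its hyper-rectangle [C - R/2, C + R/2]
inside = bool(np.all((A >= C - R / 2) & (A <= C + R / 2)))
```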

By imposing conditions on \({\varvec{U}}\), we obtain the mean vector and the covariance matrix of \({\varvec{A}}\), denoted by \({\varvec{\mu }}=\mathrm{E}({\varvec{A}})\) and \({\varvec{\varSigma }}=\mathrm{Var}({\varvec{A}})\), respectively, which include, as special cases, the proposals for the symbolic mean vector and symbolic covariance matrix found in the literature. In the remainder of this subsection we consider the assumption stated next (Assumption 1), which leads to Theorem 1.

Assumption 1

The random vector \({\varvec{U}}= (U_{1},U_2,\ldots ,U_{p})^t\) has zero mean and is independent of the random vector \(({\varvec{C}}^t,{\varvec{R}}^t)^t\).

Theorem 1

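If Assumption 1 holds then, for \(j,l\in \{1,2,\ldots ,p\}\) with \(j\ne l\):

\[ \mathrm{E}(A_j)=\mathrm{E}(C_j), \tag{4} \]
\[ \mathrm{Var}(A_j)=\mathrm{Var}(C_j)+\frac{\mathrm{Var}(U_j)\,\mathrm{E}(R_j^2)}{4}, \tag{5} \]
\[ \mathrm{Cov}(A_j,A_l)=\mathrm{Cov}(C_j,C_l)+\frac{\mathrm{Cov}(U_j,U_l)\,\mathrm{E}(R_jR_l)}{4}. \tag{6} \]
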
Proof

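Let Assumption 1 hold. From the fact that \(\mathrm{E}(U_{j})=0\), \(U_j\) is independent of \(R_j\), and the linearity of the expectation operator, it follows that

\[ \mathrm{E}(A_j)=\mathrm{E}(C_j)+\frac{1}{2}\mathrm{E}(U_j)\mathrm{E}(R_j)=\mathrm{E}(C_j). \]

We will now obtain the symbolic variances and covariances. From (3) we can derive:

\[ \mathrm{Var}(A_j)=\mathrm{Var}(C_j)+\frac{1}{4}\mathrm{Var}(U_jR_j)+\mathrm{Cov}(C_j,U_jR_j). \]

Moreover, given that \(U_{j}\) and \(R_j\) are independent random variables and \(\mathrm{E}(U_{j})=0\), we obtain

\[ \mathrm{Var}(U_jR_j)=\mathrm{E}(U_j^2)\mathrm{E}(R_j^2)-\left[ \mathrm{E}(U_j)\mathrm{E}(R_j)\right] ^2=\mathrm{Var}(U_j)\mathrm{E}(R_j^2). \]

Following a similar reasoning, since \(U_j\) is independent of \((C_j,R_j)\), it can be shown that

\[ \mathrm{Cov}(C_j,U_jR_j)=\mathrm{E}(U_j)\mathrm{E}(C_jR_j)-\mathrm{E}(C_j)\mathrm{E}(U_j)\mathrm{E}(R_j)=0 \]

for all values of j. As a result, it follows, as wanted, that:

\[ \mathrm{Var}(A_j)=\mathrm{Var}(C_j)+\frac{\mathrm{Var}(U_j)\,\mathrm{E}(R_j^2)}{4}. \]

Again from (3) it follows that, for \(j,l\in \{1,2,\ldots ,p\}\) with \(j\ne l\),

\[ \mathrm{Cov}(A_j,A_l)=\mathrm{Cov}(C_j,C_l)+\frac{1}{2}\mathrm{Cov}(C_j,U_lR_l)+\frac{1}{2}\mathrm{Cov}(U_jR_j,C_l)+\frac{1}{4}\mathrm{Cov}(U_jR_j,U_lR_l). \]

As \((U_{j},U_{l})\) is independent of \((C_j,R_j,C_l,R_l)\) and \(\mathrm{E}(U_j)=\mathrm{E}(U_l)=0\), it can be shown that

\[ \mathrm{Cov}(C_j,U_lR_l)=\mathrm{Cov}(U_jR_j,C_l)=0 \]

and

\[ \mathrm{Cov}(U_jR_j,U_lR_l)=\mathrm{E}(U_jU_l)\mathrm{E}(R_jR_l)-\mathrm{E}(U_j)\mathrm{E}(R_j)\mathrm{E}(U_l)\mathrm{E}(R_l)=\mathrm{Cov}(U_j,U_l)\mathrm{E}(R_jR_l), \]

thus implying that:

\[ \mathrm{Cov}(A_j,A_l)=\mathrm{Cov}(C_j,C_l)+\frac{\mathrm{Cov}(U_j,U_l)\,\mathrm{E}(R_jR_l)}{4}. \]
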
\(\square \)
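The identities of Theorem 1 can be checked by Monte Carlo. The sketch below assumes NumPy and purely illustrative choices: correlated normal centers, ranges that depend on the centers (which Assumption 1 allows), and zero-mean weights on \([-1,1]\) that are correlated with each other but independent of \(({\varvec{C}},{\varvec{R}})\). The gaps between the two sides of (4)-(6), computed with sample moments, should be small sampling errors.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 200_000

# Illustrative macro-data: correlated centers, ranges depending on the centers
C = rng.multivariate_normal([0.0, 3.0], [[1.0, 0.5], [0.5, 2.0]], size=n)
R = 1.0 + 0.5 * np.abs(C)

# Zero-mean weights on [-1, 1], independent of (C, R) as in Assumption 1
U1 = rng.uniform(-1.0, 1.0, size=n)
U2 = 0.5 * U1 + 0.5 * rng.uniform(-1.0, 1.0, size=n)  # correlated with U1
U = np.column_stack([U1, U2])

A = C + U * R / 2.0  # micro-data from model (3)


def sample_cov(x, y):
    """Sample covariance of two 1-d arrays (divisor n - 1)."""
    return np.cov(x, y)[0, 1]


# Gaps between the simulated left-hand sides and the right-hand sides of (4)-(6)
mean_gap = np.abs(A.mean(axis=0) - C.mean(axis=0)).max()
var_gap = max(
    abs(A[:, j].var(ddof=1)
        - (C[:, j].var(ddof=1) + U[:, j].var(ddof=1) * np.mean(R[:, j] ** 2) / 4.0))
    for j in range(2)
)
cov_gap = abs(
    sample_cov(A[:, 0], A[:, 1])
    - (sample_cov(C[:, 0], C[:, 1]) + sample_cov(U1, U2) * np.mean(R[:, 0] * R[:, 1]) / 4.0)
)
```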

The following result, which provides the symbolic mean vector, \({\varvec{\mu }}=\mathrm{E}({\varvec{A}})\), and the symbolic covariance matrix, \({\varvec{\varSigma }} = \mathrm{Var}({\varvec{A}})\), follows trivially from Theorem 1.

Corollary 1

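If Assumption 1 holds then \({\varvec{\mu }}=\mathrm{E}({\varvec{C}})\) and

\[ {\varvec{\varSigma }}={\varvec{\varSigma }}_{CC}+\frac{1}{4}\,{\varvec{\varSigma }}_{UU}\bullet \mathrm{E}({\varvec{R}}{\varvec{R}}^t), \tag{7} \]

where \( {\varvec{\varSigma }} _{CC}\) and \({\varvec{\varSigma }}_{UU}\) represent the covariance matrices of \({\varvec{C}}\) and \({\varvec{U}}\), respectively, \(\mathrm{E}({\varvec{R}}{\varvec{R}}^t)\) is the matrix of second-order moments of \({\varvec{R}}\), and \(\bullet \) denotes the Schur or entrywise product of matrices.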
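Eq. (7) translates directly into array code. In the sketch below (NumPy; the helper name `symbolic_covariance` and the moment values are hypothetical), the Schur product is NumPy's elementwise `*` on arrays.

```python
import numpy as np


def symbolic_covariance(sigma_cc, sigma_uu, e_rr):
    """Eq. (7): Sigma = Sigma_CC + (1/4) * (Sigma_UU entrywise-times E(R R^t)).

    sigma_cc : covariance matrix of the centers, shape (p, p)
    sigma_uu : covariance matrix of the weights U, shape (p, p)
    e_rr     : second-order moment matrix E(R R^t) of the ranges, shape (p, p)
    """
    sigma_cc, sigma_uu, e_rr = (np.asarray(m, dtype=float) for m in (sigma_cc, sigma_uu, e_rr))
    return sigma_cc + 0.25 * sigma_uu * e_rr  # '*' on NumPy arrays is the Schur product


# Hypothetical population moments for p = 2
sigma_cc = np.array([[1.0, 0.5], [0.5, 2.0]])
e_rr = np.array([[4.0, 3.0], [3.0, 9.0]])
sigma_uu = np.eye(2) / 3.0  # uncorrelated weights, each with variance 1/3
Sigma = symbolic_covariance(sigma_cc, sigma_uu, e_rr)
# Sigma = [[1 + 4/12, 0.5], [0.5, 2 + 9/12]]
```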

2.2 Symbolic covariance and pseudo-correlation matrices under two scenarios

We will now particularise the form of the symbolic covariance matrix for two scenarios more restrictive than Assumption 1 stated in the previous subsection: the first scenario in which the weights \(U_j\) are uncorrelated random variables, and the second scenario in which the weights \(U_j\) are almost surely equal (represented by \(\overset{\mathrm{a.s.}}{=}\)) to a random variable U. Adding these constraints to Assumption 1, namely that the weights \(U_{j}\) are zero mean random variables with support on the interval \([-1,1]\), independent from \(({\varvec{C}}^t,{\varvec{R}}^t)^t\), we have the following assumptions for scenarios 1 and 2:

- Scenario 1: The weights \(U_1,U_2,\ldots ,U_p\) are zero mean uncorrelated random variables with support on \([-1,1]\) and are independent from \(({\varvec{C}}^t,{\varvec{R}}^t)^t\).
- Scenario 2: \(U_1 \overset{\mathrm{a.s.}}{=}U_2\overset{\mathrm{a.s.}}{=}\cdots \overset{\mathrm{a.s.}}{=} U_p\overset{\mathrm{a.s.}}{=} U\), with U being a zero mean random variable with support on \([-1,1]\) and independent from \(({\varvec{C}}^t,{\varvec{R}}^t)^t\).

We note that Scenario 1 corresponds to the least possible linear association between the weights, as they are uncorrelated random variables. In contrast, Scenario 2 corresponds to the highest possible association between the weights, as they are (almost surely) equal. Regarding the hyper-rectangle associated with given macro-data, Scenario 1 allows micro-data to take any value within the hyper-rectangle, while in Scenario 2 micro-data are restricted to the line segment that connects the vertex formed by all lower interval limits with the vertex formed by all upper interval limits.
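The geometric difference between the two scenarios can be seen in a short simulation. This sketch assumes NumPy, a single hypothetical macro-data object, independent continuous uniform weights for Scenario 1, and a common continuous uniform weight for Scenario 2 (illustrative choices only). Under Scenario 2 the normalized deviations \(2(A_j-C_j)/R_j\) coincide across components, which is exactly the statement that the points lie on the segment.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 500
C = np.array([0.0, 5.0])  # centers of one hypothetical macro-data object
R = np.array([2.0, 4.0])  # ranges of the same object

# Scenario 1 (here: independent uniform weights) vs Scenario 2 (one common weight)
U_s1 = rng.uniform(-1.0, 1.0, size=(n, 2))
U_s2 = np.repeat(rng.uniform(-1.0, 1.0, size=(n, 1)), 2, axis=1)

A_s1 = C + U_s1 * R / 2.0
A_s2 = C + U_s2 * R / 2.0

# Normalized deviations 2(A_j - C_j)/R_j recover the weights
dev_s1 = 2.0 * (A_s1 - C) / R
dev_s2 = 2.0 * (A_s2 - C) / R

# Scenario 2: equal deviations, i.e. points on the segment from C - R/2 to C + R/2
on_segment = np.allclose(dev_s2[:, 0], dev_s2[:, 1])
# Scenario 1: points spread over the whole rectangle, off the segment
max_off_segment = np.abs(dev_s1[:, 0] - dev_s1[:, 1]).max()
```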

The result (7), combined with Scenarios 1 and 2, leads to two different families of symbolic covariance matrices, summarised in Corollary 2. In what follows, if \({\varvec{Z}}\) is a matrix then \(\mathrm{Diag} ({\varvec{Z}})\) denotes the diagonal matrix whose main diagonal contains the entries \([{\varvec{Z}}]_{ii}\); if \({\varvec{z}}\) is a vector then \(\mathrm{Diag} ({\varvec{z}})\) denotes the diagonal matrix whose main diagonal contains the entries of \({\varvec{z}}\).

Corollary 2

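Under Scenario 1 the symbolic covariance matrix, denoted by \({\varvec{\varSigma }}^{(1)}\), has the form

\[ {\varvec{\varSigma }}^{(1)}={\varvec{\varSigma }}_{CC}+\frac{1}{4}\,{\varvec{\varSigma }}_{UU}\bullet \mathrm{E}({\varvec{R}}{\varvec{R}}^t)={\varvec{\varSigma }}_{CC}+\frac{1}{4}\,\mathrm{Diag}\left( \mathrm{Var}(U_1)\mathrm{E}(R_1^2),\ldots ,\mathrm{Var}(U_p)\mathrm{E}(R_p^2)\right) , \tag{8} \]

where \({\varvec{\varSigma }}_{UU}=\mathrm{Diag} \left( \mathrm{Var}(U_1),\ldots ,\mathrm{Var}(U_p)\right) \). Moreover, if all \(U_j\) share the same variance (i.e. \(\mathrm{Var}(U_j)=\mathrm{Var}(U_1)\) for \(j\in \{2,3,\ldots ,p\}\)), then \({\varvec{\varSigma }}^{(1)}\) simplifies to:

\[ {\varvec{\varSigma }}^{(1)}={\varvec{\varSigma }}_{CC}+\frac{\mathrm{Var}(U_1)}{4}\,\mathrm{Diag}\left( \mathrm{E}({\varvec{R}}{\varvec{R}}^t)\right) . \tag{9} \]

Under Scenario 2 the symbolic covariance matrix, denoted by \({\varvec{\varSigma }}^{(2)}\), has the form

\[ {\varvec{\varSigma }}^{(2)}={\varvec{\varSigma }}_{CC}+\frac{\mathrm{Var}(U)}{4}\,\mathrm{E}({\varvec{R}}{\varvec{R}}^t). \tag{10} \]
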
Proof

Let us first assume that the conditions of Scenario 1 hold. This implies that the conditions of Assumption 1 hold as well and, in particular, that Eq. (7) holds. As, in addition, \({\varvec{\varSigma }}_{UU}=\mathrm{Diag} \left( \mathrm{Var}(U_1),\ldots ,\mathrm{Var}(U_p)\right) \), since the weights \(U_1\), \(U_2\), ..., \(U_p\) are uncorrelated random variables, we conclude that (8) holds. Moreover, as (9) follows trivially from (8), we conclude that (9) holds as well.

Let us now assume that the conditions of Scenario 2 hold. This implies that the conditions of Assumption 1 hold as well and, in particular, that Eq. (7) holds. As, in addition, \( \mathrm{Cov}(U_{j},U_{l})= \mathrm{E}(U_{j}U_{l})= \mathrm{E}(U^2)= \mathrm{Var}(U)\), for \(j,l\in \{1,2,\ldots ,p\}\) since \(U_1 \overset{\mathrm{a.s.}}{=}U_2\overset{\mathrm{a.s.}}{=}\cdots \overset{\mathrm{a.s.}}{=} U_p\overset{\mathrm{a.s.}}{=} U\), we conclude that (10) holds. \(\square \)
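The two families in Corollary 2 differ only in whether the cross-moments \(\mathrm{E}(R_jR_l)\) enter the off-diagonal entries. The sketch below (NumPy; helper names and moment values are hypothetical) evaluates (8) and (10) for uncorrelated centers and equal ranges.

```python
import numpy as np


def sigma_scenario1(sigma_cc, e_rr, var_u):
    """Eq. (8): uncorrelated weights; var_u lists Var(U_1), ..., Var(U_p)."""
    sigma_cc = np.asarray(sigma_cc, dtype=float)
    e_rr = np.asarray(e_rr, dtype=float)
    return sigma_cc + 0.25 * np.diag(np.asarray(var_u, dtype=float) * np.diag(e_rr))


def sigma_scenario2(sigma_cc, e_rr, var_u):
    """Eq. (10): one common weight U with variance var_u."""
    sigma_cc = np.asarray(sigma_cc, dtype=float)
    e_rr = np.asarray(e_rr, dtype=float)
    return sigma_cc + 0.25 * var_u * e_rr


# Hypothetical moments: uncorrelated centers, ranges R_1 = R_2 = 2 almost surely
sigma_cc = np.eye(2)
e_rr = np.full((2, 2), 4.0)

S1 = sigma_scenario1(sigma_cc, e_rr, [1 / 3, 1 / 3])  # independent uniform weights
S2 = sigma_scenario2(sigma_cc, e_rr, 1 / 3)           # uniform common weight
```

Both matrices have the same diagonal (\(1+1/3\)), but the off-diagonal entry is 0 under Scenario 1 and \(1/3\) under Scenario 2.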

Different particular forms of the symbolic covariance matrices \({\varvec{\varSigma }}^{(1)}\) and \({\varvec{\varSigma }}^{(2)}\) will be derived in the next subsection, along with conditions on the weights \(U_j\) that may lead to them. First, however, we address the pseudo-correlation matrices associated with \({\varvec{\varSigma }}^{(1)}\) and \({\varvec{\varSigma }}^{(2)}\), starting with the definition of the pseudo-correlation matrix associated with a square matrix with positive diagonal entries.

Definition 2

If \({\varvec{\varLambda }}=[\lambda _{jl}]_{j,l\in \{1,2,\ldots ,p\}}\) is a square matrix with positive diagonal entries, then the associated pseudo-correlation matrix, denoted by \(\rho ( {\varvec{\varLambda }})=[[\rho ({\varvec{\varLambda }})]_{jl}]_{j,l\in \{1,2,\ldots ,p\}}\), is given by

\[ [\rho ({\varvec{\varLambda }})]_{jl}=\frac{\lambda _{jl}}{\sqrt{\lambda _{jj}\lambda _{ll}}}, \qquad j,l\in \{1,2,\ldots ,p\}. \]

We should stress that if \({\varvec{\varLambda }}\) is the covariance matrix of a given p-dimensional random vector, then \(\rho ( {\varvec{\varLambda }})\) is the correlation matrix of the same random vector. Note, however, that under the way some interval correlations are defined in the literature, the so-called symbolic covariance matrix may not be a covariance matrix in the classical sense. For example, in some works from the literature, the (in fact, pseudo) covariance matrix of a p-dimensional interval-valued random vector \({\varvec{X}}=(X_1,\ldots ,X_p)^t\), where \(X_j=[C_j-R_j/2,C_j+R_j/2]\), \(j=1,\ldots ,p\), is defined as:

\[ \mathrm{Cov}_\delta (X_j,X_l)=\begin{cases} \mathrm{Var}(C_j)+\delta \mathrm{E}(R_j^2), & j=l,\\ \mathrm{Cov}(C_j,C_l), & j\ne l, \end{cases} \]

for a given positive value of \(\delta \), with \(\mathrm{Var}_\delta (X_j)=\mathrm{Cov}_\delta (X_j,X_j)\). Particular values for \(\delta \) are: \(\delta =\frac{1}{4},\, \frac{1}{8},\, \frac{1}{24},\, \frac{1}{36}-\frac{ \phi (3)}{6(2\varPhi (3)-1)}\) (Cazes et al. 1997), and \(\delta =\frac{1}{12}\) (Wang et al. 2012). For instance, in the case \(p=2\), if \(X_2=X_1\) then \(\mathrm{Cov}_\delta (X_1,X_2)=\mathrm{Cov}(C_1,C_2)=\mathrm{Var}(C_1)\) and \(\mathrm{Var}_\delta (X_2)=\mathrm{Var}_\delta (X_1)=\mathrm{Var}(C_1)+\delta \mathrm{E}(R_1^2)\). Thus, the entry (1,2) of the (pseudo) correlation matrix is

\[ \mathrm{Cor}_\delta (X_1,X_2)=\frac{\mathrm{Var}(C_1)}{\mathrm{Var}(C_1)+\delta \mathrm{E}(R_1^2)}. \]

Thus, if \(X_1\) is not a conventional random variable, i.e. \(P(R_1=0)< 1\), then \(\mathrm{Cor}_\delta (X_1,X_2) \ne 1\) even though \(X_1=X_2\). As a result, the pseudo-correlation matrix of \((X_1,X_2)^t\) is not a correlation matrix in case \(P(R_1=0)< 1\).
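The pseudo-correlation of Definition 2 and the effect just described can be reproduced numerically. The following sketch assumes NumPy; the helper names `pseudo_correlation` and `cov_delta`, and the moments \(\mathrm{Var}(C_1)=1\), \(\mathrm{E}(R_1^2)=4\) with \(\delta =1/4\), are hypothetical choices for illustration.

```python
import numpy as np


def pseudo_correlation(lam):
    """Definition 2: [rho(Lambda)]_jl = lambda_jl / sqrt(lambda_jj * lambda_ll)."""
    lam = np.asarray(lam, dtype=float)
    d = np.diag(lam)
    if np.any(d <= 0):
        raise ValueError("diagonal entries must be positive")
    s = np.sqrt(d)
    return lam / np.outer(s, s)


def cov_delta(sigma_cc, e_rr, delta):
    """Pseudo-covariance: Cov(C_j, C_l) off the diagonal, Var(C_j) + delta * E(R_j^2) on it."""
    sigma_cc = np.asarray(sigma_cc, dtype=float)
    e_rr = np.asarray(e_rr, dtype=float)
    return sigma_cc + delta * np.diag(np.diag(e_rr))


# Hypothetical case X_2 = X_1 with Var(C_1) = 1 and E(R_1^2) = 4
sigma_cc = np.ones((2, 2))   # Cov(C_1, C_2) = Var(C_1) = 1
e_rr = np.full((2, 2), 4.0)  # E(R_1 R_2) = E(R_1^2) = 4
rho = pseudo_correlation(cov_delta(sigma_cc, e_rr, delta=0.25))
# rho[0, 1] = 1 / (1 + 0.25 * 4) = 0.5, although X_2 = X_1
```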

In the next theorem we show that the absolute values of the entries of the pseudo-correlation matrices associated with the matrices \({\varvec{\varSigma }}^{(1)}\) and \({\varvec{\varSigma }}^{(2)}\) [see Eqs. (8) and (10)] are less than or equal to one. In other words, symbolic pseudo-correlations associated with \({\varvec{\varSigma }}^{(1)}\) and \({\varvec{\varSigma }}^{(2)}\) are quantities between − 1 and 1, as in the conventional case [provided \({\varvec{\varSigma }}^{(1)}\) and \({\varvec{\varSigma }}^{(2)}\) have finite entries, which is the case whenever \(C_1,C_2,\ldots ,C_p,R_1,R_2,\ldots ,R_p\) are random variables with finite moments of order two].

Theorem 2

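If \({\varvec{X}}\) is such that \({\varvec{\varSigma }}^{(1)}\) in the form given by Eq. (9) has only finite entries, then

\[ [\rho ({\varvec{\varSigma }}^{(1)})]_{jj}=1 \quad \text{and}\quad \left| [\rho ({\varvec{\varSigma }}^{(1)})]_{jl}\right| \le 1, \qquad j,l\in \{1,2,\ldots ,p\}. \]

Similarly, if \({\varvec{X}}\) is such that \({\varvec{\varSigma }}^{(2)}\) given by Eq. (10) has only finite entries, then

\[ [\rho ({\varvec{\varSigma }}^{(2)})]_{jj}=1 \quad \text{and}\quad \left| [\rho ({\varvec{\varSigma }}^{(2)})]_{jl}\right| \le 1, \qquad j,l\in \{1,2,\ldots ,p\}. \]
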
Proof

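We first suppose that \({\varvec{\varSigma }}^{(1)}\) given by Eq. (9) has only finite entries, in which case \([\rho ({\varvec{\varSigma }}^{(1)})]_{jj}=1\), for all \(j\in \{1,2,\ldots ,p\}\), follows trivially. Secondly, we consider arbitrary indices \(j,l\in \{1,2,\ldots ,p\}\) such that \(j\ne l\) and note that, using the Cauchy-Schwarz inequality applied to the random pair \((C_j,C_l)\) and writing \(\delta =\mathrm{Var}(U_1)/4\ge 0\), we obtain, as wanted,

\[ \left| [\rho ({\varvec{\varSigma }}^{(1)})]_{jl}\right| =\frac{|\mathrm{Cov}(C_j,C_l)|}{\sqrt{\left( \mathrm{Var}(C_j)+\delta \mathrm{E}(R_j^2)\right) \left( \mathrm{Var}(C_l)+\delta \mathrm{E}(R_l^2)\right) }}\le \frac{\sqrt{\mathrm{Var}(C_j)\mathrm{Var}(C_l)}}{\sqrt{\left( \mathrm{Var}(C_j)+\delta \mathrm{E}(R_j^2)\right) \left( \mathrm{Var}(C_l)+\delta \mathrm{E}(R_l^2)\right) }}\le 1. \]
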
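We now assume that \({\varvec{\varSigma }}^{(2)}\) given by Eq. (10) has only finite entries, in which case \([\rho ({\varvec{\varSigma }}^{(2)})]_{jj}=1\), for all \(j\in \{1,2,\ldots ,p\}\), follows trivially. We next consider arbitrary indices \(j,l\in \{1,2,\ldots ,p\}\) such that \(j\ne l\) and note that, using the Cauchy-Schwarz inequality applied to the random pair \((C_j,C_l)\), Hölder’s inequality applied to the random pair \((R_j,R_l)\) (recall that ranges are non-negative, so \(\mathrm{E}(R_jR_l)\ge 0\)), and making \(\delta = \mathrm{Var}(U)/4\), we obtain:

\[ \left| [\rho ({\varvec{\varSigma }}^{(2)})]_{jl}\right| =\frac{|\mathrm{Cov}(C_j,C_l)+\delta \mathrm{E}(R_jR_l)|}{\sqrt{\left( \mathrm{Var}(C_j)+\delta \mathrm{E}(R_j^2)\right) \left( \mathrm{Var}(C_l)+\delta \mathrm{E}(R_l^2)\right) }}\le \frac{\sqrt{\mathrm{Var}(C_j)\mathrm{Var}(C_l)}+\delta \sqrt{\mathrm{E}(R_j^2)\mathrm{E}(R_l^2)}}{\sqrt{\left( \mathrm{Var}(C_j)+\delta \mathrm{E}(R_j^2)\right) \left( \mathrm{Var}(C_l)+\delta \mathrm{E}(R_l^2)\right) }}. \]

Thus, \(\left[ [\rho ({\varvec{\varSigma }}^{(2)})]_{jl}\right] ^2\) is less than or equal to

\[ \frac{\mathrm{Var}(C_j)\mathrm{Var}(C_l)+2\delta \sqrt{\mathrm{Var}(C_j)\mathrm{E}(R_l^2)\,\mathrm{Var}(C_l)\mathrm{E}(R_j^2)}+\delta ^2\mathrm{E}(R_j^2)\mathrm{E}(R_l^2)}{\mathrm{Var}(C_j)\mathrm{Var}(C_l)+\delta \left[ \mathrm{Var}(C_j)\mathrm{E}(R_l^2)+\mathrm{Var}(C_l)\mathrm{E}(R_j^2)\right] +\delta ^2\mathrm{E}(R_j^2)\mathrm{E}(R_l^2)}. \]
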
As is easily verified, \(2\sqrt{xy} \le x+y\) for all non-negative values of x and y. Thus, taking \(x=\mathrm{Var}(C_j)\mathrm{E}(R_l^2)\) and \(y=\mathrm{Var}(C_l)\mathrm{E}(R_j^2)\), we conclude, as wanted, that \(\left[ [\rho ({\varvec{\varSigma }}^{(2)})]_{jl}\right] ^2 \le 1\). \(\square \)
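The bound for \({\varvec{\varSigma }}^{(2)}\) can also be probed numerically. This sketch (NumPy; all values randomly generated and purely illustrative) draws moments that respect exactly the constraints used in the proof, namely the Cauchy-Schwarz bound on \(\mathrm{Cov}(C_j,C_l)\), the Hölder bound on \(\mathrm{E}(R_jR_l)\ge 0\), and \(0\le \delta \le 1/4\), and records the largest pseudo-correlation observed. It is a sanity check, not a proof.

```python
import numpy as np

rng = np.random.default_rng(3)
worst = 0.0
for _ in range(10_000):
    vj, vl = rng.uniform(0.01, 5.0, size=2)            # Var(C_j), Var(C_l)
    cov_c = rng.uniform(-1.0, 1.0) * np.sqrt(vj * vl)  # respects Cauchy-Schwarz
    ej, el = rng.uniform(0.01, 5.0, size=2)            # E(R_j^2), E(R_l^2)
    e_jl = rng.uniform(0.0, 1.0) * np.sqrt(ej * el)    # respects Hoelder, ranges >= 0
    delta = rng.uniform(0.0, 0.25)                     # delta = Var(U)/4 with Var(U) <= 1
    num = cov_c + delta * e_jl
    den = np.sqrt((vj + delta * ej) * (vl + delta * el))
    worst = max(worst, abs(num) / den)
```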

2.3 Eight particular forms of symbolic covariance matrices

In Table 1 we list 8 particular forms for the symbolic covariance matrix, \({\varvec{\varSigma }}\), along with the scenario and additional constraints on the weights \(U_1, U_2,\ldots , U_p\) or U that may give rise to them. These are merely sufficient conditions, not necessary ones. Moreover, in Fig. 1, we provide an illustration of the associated micro-data for the 2-dimensional case; i.e., we display the possible values of \({\varvec{A}}=(A_{1},A_{2})^t\), for \(k=1,2,\ldots ,8\).

The first form (\(k=1\)) is obtained under Scenario 1 with the weights \(U_{j}\) being zero with probability one, or equivalently under Scenario 2 with the weight U being zero with probability one, corresponding to the case where the ranges are not taken into account. In such case \({\varvec{A}} \overset{\mathrm{a.s.}}{=}{\varvec{C}}\), leading to \( \varvec{\varSigma }= \varvec{\varSigma }_1=\mathrm{Cov}({\varvec{C}})\). As illustrated in Fig. 1(1) for the 2-dimensional case, the micro-data associated with this model is always at the center of the interval-valued object.

The second form (\(k=2\)) is obtained under Scenario 2 when the weight U has unit variance; this along with U having zero mean and support on \([-1,1]\) implies that U follows a discrete Uniform distribution on \(\{-1,1\}\). Then, \(\mathrm{Var}(U)=1\) leads to \({\varvec{\varSigma }}= \mathrm{Cov}({\varvec{A}})={\varvec{\varSigma }}_2\), in view of (10). The micro-data associated with this model is at one of the two vertices \((C_1-R_1/2,C_2-R_2/2,\ldots ,C_p-R_p/2)^t\) or \((C_1+R_1/2,C_2+R_2/2,\ldots ,C_p+R_p/2)^t\), chosen with equal probability. The 2-dimensional case is illustrated in Fig. 1(2).

The third form (\(k=3\)) is obtained under Scenario 2 when the weight U has variance 1/3, as it is the case when U follows a continuous Uniform distribution on \([-1,1]\). Then, \(\mathrm{Var}(U)=1/3\) leads to \({\varvec{\varSigma }}=\mathrm{Cov}({\varvec{A}})={\varvec{\varSigma }}_3\), in view of (10). Note that when U follows a continuous Uniform distribution on \([-1,1]\), the micro-data associated with the model is at the line segment with endpoints \((C_1-R_1/2,C_2-R_2/2,\ldots ,C_p-R_p/2)^t\) and \((C_1+R_1/2,C_2+R_2/2,\ldots ,C_p+R_p/2)^t\), which passes through the vector of centers \((C_1,C_2,\ldots ,C_p)^t\). Figure 1(3) illustrates the 2-dimensional case.

With a similar reasoning, we may conclude that the fourth form (\(k=4\)) is obtained under Scenario 1 when the weights \(U_{1},U_2,\ldots ,U_{p}\) are independent random variables with discrete uniform distribution on \(\{-1,1\}\). Since \(\mathrm{Var}(U_j)=1\) for all j, in view of (9), we conclude that \({\varvec{\varSigma }}=\mathrm{Cov}({\varvec{A}})={\varvec{\varSigma }}_4\). Micro-data associated with this model are at one of the \(2^p\) vertices of the hyper-rectangle corresponding to the Cartesian product \([C_1-R_1/2,C_1+R_1/2]\times [C_2-R_2/2,C_2+R_2/2]\times \cdots \times [C_p-R_p/2,C_p+R_p/2]\), each with the same probability, \(1/2^p\), as illustrated in Fig. 1(4) for the 2-dimensional case.

The fifth form (\(k=5\)) is obtained under Scenario 1 when \(U_{1},U_2,\ldots ,U_{p}\) are uncorrelated random variables with common variance 1/3; this is the case, for instance, when the weights \(U_j\) are independent random variables with continuous uniform distribution on \([-1,1]\), independent from \(({\varvec{C}}^t,{\varvec{R}}^t)^t\). Since \(\mathrm{Var}(U_j)=1/3\) for all j, in view of (9), it follows that \({\varvec{\varSigma }}=\mathrm{Cov}({\varvec{A}})={\varvec{\varSigma }}_5\). When the weights \(U_j\) are independent random variables with continuous uniform distribution on \([-1,1]\), independent from \(({\varvec{C}}^t,{\varvec{R}}^t)^t\), the micro-data follow a p-dimensional continuous uniform distribution on the hyper-rectangle corresponding to the Cartesian product \([C_1-R_1/2,C_1+R_1/2]\times [C_2-R_2/2,C_2+R_2/2]\times \cdots \times [C_p-R_p/2,C_p+R_p/2]\). This case is illustrated in Fig. 1(5) for the 2-dimensional case.

The sixth form (\(k=6\)) models micro-data that can take any value inside the same hyper-rectangle but tend to be located near its vertices; the points are spread according to a p-dimensional inverse triangular distribution. Finally, the remaining two forms (\(k=7\) and \(k=8\)) model micro-data located mainly near the center of the hyper-rectangle, with a stronger concentration near the center in the case \(k=8\) (p-dimensional truncated normal distribution) than in the case \(k=7\) (p-dimensional triangular distribution). The covariance matrices \({\varvec{\varSigma }}_6\) and \({\varvec{\varSigma }}_7\) can be obtained from (9) by noting that \(\mathrm{Var}(U_j)=1/2\) for \(k=6\) and \(\mathrm{Var}(U_j)=1/6\) for \(k=7\). In the case \(k=8\), the form of \({\varvec{\varSigma }}_8\) follows from the fact that if Z has a normal distribution with zero mean and \(\mathrm{Var}(Z)=1/9\), \(Z \sim {{\mathcal {N}}}(0,\frac{1}{9})\), then \(\mathrm{Var}(U_j)=\mathrm{Var}(Z|Z\in [-1,1])=\frac{1}{9}-\frac{2 \phi (3)}{3(2\varPhi (3)-1)}\), where \(\frac{2 \phi (3)}{3(2\varPhi (3)-1)}\simeq 2.96\times 10^{-3}\), and \(\phi (\cdot )\) and \(\varPhi (\cdot )\) are, respectively, the probability density function and the cumulative distribution function of the standard normal distribution.
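To make the eight forms concrete, the following Python sketch (standard library only; the dictionary names are hypothetical) records \(\mathrm{Var}(U_j)\) for \(k=1,\ldots ,8\) as stated above and the coefficient \(\delta _k=\mathrm{Var}(U)/4\) that multiplies the range moments in Eqs. (9) and (10). For \(k=8\) it evaluates the truncated-normal expression with `math.erf`. The resulting \(\delta _k\) for \(k=4,5,6,7,8\) coincide with the values of \(\delta \) listed earlier for \(\mathrm{Cov}_\delta \).

```python
import math


def phi(x):
    """Standard normal probability density function."""
    return math.exp(-x * x / 2.0) / math.sqrt(2.0 * math.pi)


def Phi(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


# Var(U_j) (or Var(U)) for the eight forms k = 1, ..., 8
var_u = {
    1: 0.0,        # U = 0 almost surely
    2: 1.0,        # common weight, discrete uniform on {-1, 1}
    3: 1.0 / 3.0,  # common weight, continuous uniform on [-1, 1]
    4: 1.0,        # independent discrete uniform weights
    5: 1.0 / 3.0,  # independent continuous uniform weights
    6: 1.0 / 2.0,  # inverse triangular on [-1, 1]
    7: 1.0 / 6.0,  # triangular on [-1, 1]
    8: 1.0 / 9.0 - 2.0 * phi(3.0) / (3.0 * (2.0 * Phi(3.0) - 1.0)),  # N(0, 1/9) truncated to [-1, 1]
}

# Coefficient multiplying the range moments: delta_k = Var(U)/4
delta = {k: v / 4.0 for k, v in var_u.items()}
```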

Cazes et al. (1997) have also addressed the modelling of micro-data, but under the restriction of fixed macro-data (i.e. macro-data with deterministic interval limits). In their work, they considered micro-data models that fit under Scenario 1, as is the case for forms \(k=1,4,5,6,7,8\). However, they did not relate the structure of the micro-data to the definitions of symbolic covariance matrices for interval-valued random variables. Specifically, they considered \(U_{1},U_2,\ldots ,U_{p}\) to be independent random variables with (i) an inverse triangular distribution with parameters \(\{-1,0,1\}\) (we refer to this case as \(k=6\)), (ii) a triangular distribution with parameters \(\{-1,0,1\}\) (\(k=7\)), and (iii) a normal distribution truncated to \([-1,1]\), with zero mean and standard deviation approximately equal to 1/3 (\(k=8\)). These constraints on the micro-data are worth considering since they bring alternative modelling possibilities to the forms \(k=1,4,5\).

2.4 How to choose a particular form of symbolic covariance matrix?

The formulation (3) allows us to write:

\[ U_j=\frac{2(A_j-C_j)}{R_j}, \qquad \text{provided } R_j>0, \]

for \(j=1,2,\ldots ,p\). Thus, if we have a dataset for which both macro-data and micro-data are available, we can obtain a realization \(u_{j}\) of \(U_j\) using the relation \(u_j=2(a_j-c_j)/r_j\), with \(a_j\), \(c_j\), and \(r_j\) being the realizations of \(A_j\), \(C_j\), and \(R_j\), respectively. Based on the \(u_{j}\)’s, evidence about the distributional form of the random weights \(U_{j}\) may be explored, leading to the choice of the symbolic covariance (correlation) matrix (see Table 1) that best fits the data under study.
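The following sketch (NumPy; hypothetical helper `recover_weights` and simulated data, not one of the paper's datasets) applies the relation \(u_j=2(a_j-c_j)/r_j\) to micro-data generated uniformly inside each interval and then summarizes the recovered weights. Their sample variances should be close to 1/3 (forms \(k=3\) or \(k=5\)) and their sample correlation close to 0, which points toward Scenario 1; in practice such summaries would be complemented by goodness-of-fit tests and quantile-quantile plots.

```python
import numpy as np


def recover_weights(a, c, r):
    """u_j = 2 (a_j - c_j) / r_j, defined only for non-degenerate intervals (r_j > 0)."""
    a, c, r = (np.asarray(x, dtype=float) for x in (a, c, r))
    if np.any(r <= 0):
        raise ValueError("weights are undefined for degenerate intervals (r_j = 0)")
    return 2.0 * (a - c) / r


# Hypothetical micro-data: one point drawn uniformly in each [c - r/2, c + r/2]
rng = np.random.default_rng(4)
n, p = 5000, 2
c = rng.normal(size=(n, p))
r = rng.uniform(0.5, 3.0, size=(n, p))
a = c + rng.uniform(-0.5, 0.5, size=(n, p)) * r

u = recover_weights(a, c, r)
var_hat = u.var(axis=0, ddof=1)                 # compare with the Var(U_j) values of Table 1
corr_hat = np.corrcoef(u[:, 0], u[:, 1])[0, 1]  # near 0 suggests Scenario 1, near 1 Scenario 2
```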

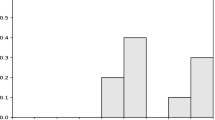

The scatter plots in Fig. 2 represent 300 simulated values of \((U_{1},U_{2})^t\) according to the eight models described in Table 1, illustrating typical patterns of points following each of the distributional models considered in the previous subsection, \(k=1,2,\ldots ,8\).

Note that the procedure described in this section may also be used for models, under Scenario 1 or Scenario 2, other than those considered above. The practitioner may obtain new symbolic covariance matrices (based on new distributional forms for the random weights \(U_{j}\)) better suited to particular datasets.

Fig. 2 Simulated values of \((U_{1},U_{2})^t\) according to the symbolic models listed in Table 1 for \(k=1,\ldots ,8\)

In Sects. 3.1 and 3.4, we illustrate the choice of the distributional model for the \(U_j\)’s based on the available micro-data and macro-data for two specific datasets.

2.5 State of the art

In the literature, there are several alternative definitions of the sample symbolic covariance matrix. In all definitions, the sample symbolic mean vector is \(\bar{{\varvec{x}}}=({{\bar{x}}}_1,\ldots ,{{\bar{x}}}_p)^t\), where \({\overline{x}}_j = \frac{1}{n}\sum _{i=1}^{n} c_{ij},\) \(j=1,\ldots ,p\), and \(c_{ij}\) denotes the center of the j-th interval-valued variable for the i-th observation. Choosing the sample mean of the centers as the sample symbolic mean makes sense, in particular, under the assumption that the micro-data associated with an interval follow a symmetric distribution on that interval.

In Oliveira et al. (2017) the sample counterparts of definitions 1 to 5 of covariance matrices were written in terms of the sample covariance matrix of the centers and the sample second order moment of the ranges. The population versions of the existing proposals are listed in Table 1.
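Since the sample counterparts of forms 1 to 5 depend only on the sample covariance of the centers and the sample second-order moment of the ranges, they are easy to compute. The sketch below is a plug-in analogue of that idea, not a reproduction of the published estimators: it replaces the population moments in forms 1 to 5 by sample moments, and its divisor choices (\(n-1\) for the centers' covariance, \(n\) for the range moments) are assumptions that may differ from the cited definitions. The function name `sample_symbolic` is hypothetical.

```python
import numpy as np


def sample_symbolic(centers, ranges, ddof=1):
    """Plug-in sample analogues of the symbolic mean and of Sigma_1, ..., Sigma_5.

    centers, ranges: arrays of shape (n, p), one row per interval-valued observation.
    The divisor n - ddof for the centers' covariance is an assumption of this sketch.
    """
    centers = np.asarray(centers, dtype=float)
    ranges = np.asarray(ranges, dtype=float)
    n = centers.shape[0]
    x_bar = centers.mean(axis=0)                                 # (1/n) sum_i c_ij
    s_cc = np.atleast_2d(np.cov(centers, rowvar=False, ddof=ddof))
    m_rr = ranges.T @ ranges / n                                 # sample analogue of E(R R^t)
    d_rr = np.diag(np.diag(m_rr))                                # Diag(E(R R^t))
    covs = {
        1: s_cc,
        2: s_cc + m_rr / 4.0,
        3: s_cc + m_rr / 12.0,
        4: s_cc + d_rr / 4.0,
        5: s_cc + d_rr / 12.0,
    }
    return x_bar, covs


# Two hypothetical 2-dimensional interval-valued observations
x_bar, covs = sample_symbolic([[0.0, 0.0], [2.0, 2.0]], [[2.0, 2.0], [2.0, 2.0]])
```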

The sample equivalent of \({\varvec{\varSigma }}_1\) was proposed by Billard and Diday (2003) and is the most straightforward approach, since in essence it follows the definition of the conventional sample variance and covariance of the interval centers. It has the disadvantage of ignoring the contribution of the ranges to the sample symbolic variance and covariance. To overcome this limitation, de Carvalho et al. (2006) proposed a sample variance definition based on the squared distances between the interval limits, and generalized this idea to define the associated sample covariance. These definitions led to the sample equivalent of \({\varvec{\varSigma }}_2\). A third alternative, proposed by Bertrand and Goupil (2000), is obtained from the empirical density function of an interval-valued variable, assuming that the micro-data follow a uniform distribution. The corresponding covariance was introduced by Billard (2008) and is based on the explicit decomposition of the covariance into within and between sums of products. Jointly, they define the sample equivalent of \({\varvec{\varSigma }}_3\).

Other sample covariance matrices were proposed in the context of symbolic principal component analysis (SPCA) for interval data. In these SPCA approaches, a symbolic sample covariance matrix is defined and its eigenvectors are used as the weights of the sample symbolic principal variables. The sample version of \({\varvec{\varSigma }}_4\) is part of the SPCA method called the vertices method, introduced in Cazes et al. (1997). In the same paper, the authors considered other distributions for the micro-data, which led to the sample versions of definitions 6 to 8 of Table 1. The sample counterpart of \({\varvec{\varSigma }}_5\) was proposed as part of a symbolic principal component estimation method called Complete-Information-based Principal Component Analysis (Wang et al. 2012).

2.6 Null symbolic correlation

One of the reasons the correlation coefficient is so popular and widely used is its ability to quantify the strength of linear association between pairs of random variables. Modeling real problems with linear combinations has the appeal of leading to understandable interpretations, and the associated mathematical problems are, in general, easily solved. Linear combinations of input variables are central to classical multivariate methodologies such as principal component analysis, factor analysis, canonical correlation analysis, discriminant analysis, and linear regression models (Johnson and Wichern 2007).

Since the correlation is a measure of linear association, a null value does not imply independence between random variables; it only indicates that there is no linear association between them. In the symbolic framework, the meaning of uncorrelated interval-valued variables is important and is analyzed in this subsection.

As discussed previously, the literature contains two families of definitions of symbolic correlations (covariances). In the first family (Scenario 1), only the association between the centers of the interval-valued random variables is taken into consideration, namely \(\mathrm{Cov}_k(X_j,X_l)=\mathrm{Cov}(C_j,C_l)\), for \(k=1,4,5,\ldots ,8\) (see Table 1); to simplify the discussion, we only consider cases \(k=1,4,5\) of this family. The second family (Scenario 2) takes the contribution of the ranges into consideration by adding the second-order cross-moment of the ranges, weighted according to each definition: \(\mathrm{Cov}_k(X_j,X_l)=\mathrm{Cov}(C_j,C_l)+\delta _k\mathrm{E}(R_jR_l)\), for \(k=2,3\), with \(\delta _2=1/4\) and \(\delta _3=1/12\). A first observation is that no association between centers and ranges can be detected by these symbolic covariances, since the definitions do not include any cross term between centers and ranges. Another potential pitfall is that we can devise cases where existing associations among ranges or among centers are not detected by the symbolic covariance definitions. Table 2 summarizes some of these problems, which are discussed next.
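In sample terms, the two families can be written with the sample covariance matrix of the centers and the sample second-order cross-moments of the ranges. The sketch below is illustrative, not the code of any cited work: the function names are hypothetical, the sample moments use divisor n, and the variances (diagonal) are assumed to receive the weight δ times the sample mean of \(R_j^2\) in both scenarios, so that δ = 0 gives the centers-only matrix of definition 1. Table 1 is not reproduced here, so the diagonal weights of the other Scenario 1 definitions are not asserted.

```python
import numpy as np

def symbolic_cov(centers, ranges, delta, shared_weights):
    """Sample symbolic covariance: Cov(C) plus delta times range second moments.

    shared_weights=True  (Scenario 2): every entry (j, l) gets delta * mean(R_j R_l).
    shared_weights=False (Scenario 1): only the diagonal gets delta * mean(R_j^2);
                                       off-diagonal entries equal Cov(C_j, C_l).
    Sample moments use divisor n (an assumption of this sketch).
    """
    C = np.asarray(centers, dtype=float)
    R = np.asarray(ranges, dtype=float)
    n = C.shape[0]
    Cd = C - C.mean(axis=0)
    cov_c = Cd.T @ Cd / n        # sample covariance matrix of the centers
    m2_r = R.T @ R / n           # sample second-order cross-moments of the ranges
    if shared_weights:
        return cov_c + delta * m2_r
    return cov_c + delta * np.diag(np.diag(m2_r))

def cov_to_cor(S):
    """Convert a covariance matrix into a correlation matrix."""
    sd = np.sqrt(np.diag(S))
    return S / np.outer(sd, sd)

# Hypothetical data: centers chosen so that Cov(C_1, C_2) = 0, positive ranges.
centers = [[0.0, 1.0], [2.0, 3.0], [4.0, 1.0]]
ranges = [[1.0, 2.0], [2.0, 1.0], [3.0, 3.0]]
S2 = symbolic_cov(centers, ranges, delta=1 / 4, shared_weights=True)   # cross term of definition 2
S3 = symbolic_cov(centers, ranges, delta=1 / 12, shared_weights=True)  # cross term of definition 3
S1 = symbolic_cov(centers, ranges, delta=0.0, shared_weights=False)    # centers only (definition 1)
print(cov_to_cor(S2)[0, 1], cov_to_cor(S3)[0, 1], cov_to_cor(S1)[0, 1])
```

With uncorrelated centers, the centers-only matrix gives a null symbolic correlation, while the Scenario 2 weights give positive values (about 0.39 for δ = 1/4 and 0.18 for δ = 1/12), driven entirely by the range term.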

Example 1

Let \(X_1\) and \(X_2\) be two interval-valued random variables, characterized by \((C_i,R_i)\), \(i=1,2\), where \({\varvec{\mu }}\) is the expected value and \({\varvec{\varPsi }}\) the covariance matrix of the random vector \((C_1,C_2,R_1,R_2)^t\). In this example, we consider

which corresponds to Case 1 of Table 2, leading to the following symbolic covariance matrices:

Clearly, no linear associations between centers and ranges exist, but \({\varvec{\varSigma }}_2\) and \({\varvec{\varSigma }}_3\) show a non-null symbolic correlation (0.414 and 0.203 for definitions 2 and 3, respectively), indicating moderate and weak associations. According to these two definitions and the discussion of Sect. 2, \(\mathrm{Cov}_k(X_1,X_2)=\mathrm{Cov}(A_{1},A_{2})=\mathrm{Cov}(C_1+U\frac{R_1}{2},C_2+U\frac{R_2}{2})\), for \(k=2,3\). Thus, since the centers and the ranges are uncorrelated random variables, we conclude that the detected association is due to U, which is shared by the micro-data pair \((A_{1},A_{2})\). Indeed, the root cause of this problem is the assumption that \(U_1 \overset{\mathrm{a.s.}}{=}U_2\), which artificially induces a positive association.
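The step from the shared weight to the range term can be written out. Assuming, for this derivation only, that U is independent of \((C_1,C_2,R_1,R_2)\) with \(\mathrm{E}(U)=0\), the cross terms vanish because \(\mathrm{Cov}(C_1,UR_2)=\mathrm{E}(U)\mathrm{E}(C_1R_2)-\mathrm{E}(C_1)\mathrm{E}(U)\mathrm{E}(R_2)=0\), and similarly for \(\mathrm{Cov}(UR_1,C_2)\):

```latex
\begin{aligned}
\mathrm{Cov}\!\left(C_1+U\tfrac{R_1}{2},\,C_2+U\tfrac{R_2}{2}\right)
 &= \mathrm{Cov}(C_1,C_2)
  + \tfrac{1}{2}\mathrm{Cov}(C_1,UR_2)
  + \tfrac{1}{2}\mathrm{Cov}(UR_1,C_2)
  + \tfrac{1}{4}\mathrm{Cov}(UR_1,UR_2)\\
 &= \mathrm{Cov}(C_1,C_2)
  + \tfrac{1}{4}\left[\mathrm{E}(U^2)\,\mathrm{E}(R_1R_2)-\mathrm{E}(UR_1)\,\mathrm{E}(UR_2)\right]\\
 &= \mathrm{Cov}(C_1,C_2)+\tfrac{\mathrm{E}(U^2)}{4}\,\mathrm{E}(R_1R_2).
\end{aligned}
```

Under this assumption, the weight is \(\delta_k=\mathrm{E}(U^2)/4\): a Uniform\((-1,1)\) weight, as in the Uniform micro-data assumption behind definition 3, gives \(\mathrm{E}(U^2)=1/3\) and \(\delta_3=1/12\), while one weight distribution consistent with \(\delta_2=1/4\) is \(U=\pm 1\) with equal probability. Since \(\mathrm{E}(R_1R_2)>0\) for positive ranges, the shared weight adds a positive term even when \(\mathrm{Cov}(C_1,C_2)=0\).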

To illustrate these findings, we generated a sample of size 30 of centers and ranges from a multivariate normal distribution with the parameters of Eq. (15). The matrix plot and the corresponding symbolic bivariate scatter plot are shown in Fig. 3. Both plots confirm the absence of linear associations, contradicting the symbolic correlations obtained with definitions 2 and 3 [see Eq. (16)]. To investigate this further, we simulated 20 micro-data points per symbolic object, following the reasoning presented in Sect. 2.1, for definitions 3 and 5. The generated \({\varvec{A}}\) values, represented in Fig. 4, show a strong positive association between the micro-data for \(k=3\), and no association for \(k=5\).
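The effect of the shared weight can also be checked by a quick simulation in the spirit of the micro-data experiment for definitions 3 and 5. This is not the authors' simulation: for illustration only, it assumes independent N(0, 1) centers, independent Uniform(1, 3) ranges (so they stay positive), and weights \(U_j\sim\) Uniform\((-1,1)\) independent of the centers and ranges, either shared (\(U_1=U_2\), the Scenario 2 structure of definition 3) or independent (the Scenario 1 structure of definition 5). Under these assumptions, the micro-data correlation in the shared case should be close to \((1/3)/(49/36)=12/49\approx 0.245\), and close to 0 otherwise.

```python
import numpy as np

def simulate_micro(n, shared, seed=0):
    """Draw one micro-data pair (A_1, A_2) per macro-data object, A_j = C_j + U_j R_j / 2."""
    rng = np.random.default_rng(seed)
    C = rng.normal(0.0, 1.0, size=(n, 2))        # uncorrelated centers (illustrative choice)
    R = rng.uniform(1.0, 3.0, size=(n, 2))       # uncorrelated positive ranges (illustrative choice)
    if shared:
        # Scenario 2: one weight per object, copied to both variables (U_1 = U_2).
        U = np.repeat(rng.uniform(-1.0, 1.0, size=(n, 1)), 2, axis=1)
    else:
        # Scenario 1: independent weights for each variable.
        U = rng.uniform(-1.0, 1.0, size=(n, 2))
    return C + U * R / 2.0

n = 200_000
cor_shared = np.corrcoef(simulate_micro(n, shared=True).T)[0, 1]
cor_indep = np.corrcoef(simulate_micro(n, shared=False).T)[0, 1]
print(cor_shared, cor_indep)
```

Although the centers and ranges are independent by construction, the shared weight alone produces a clearly positive micro-data correlation.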

Example 2

This example illustrates the Case 2 of Table 2. Here we consider

a scenario where the centers are uncorrelated but where there is a strong linear association between the two ranges, \(\mathrm{Cor}(R_1,R_2)=0.875\). These choices lead to the following symbolic covariance matrices:

Data generated according to Example 1, where the centers and the ranges are uncorrelated

Sample of 20 micro-data pairs, \({\varvec{A}}=(A_{1},A_{2})^t\), per symbolic object. Data generated according to Example 1. In the left plot, the red (blue) dots correspond to the lower-left (upper-right) vertex of the rectangle that represents each macro-data object (color figure online)

Data generated according to Example 2, where centers are uncorrelated and ranges are highly correlated

Definitions 1, 4, and 5 all lead to null symbolic correlations, disregarding the strong linear association between the two ranges. This association is clearly illustrated in Fig. 5, which corresponds to a sample of size 30 of centers and ranges from a multivariate normal distribution with the parameters of Eq. (17). The high correlation between the two ranges is apparent in the matrix plot of Fig. 5(1). In the symbolic bivariate scatter plot of Fig. 5(2), the dependence between the ranges manifests itself in the relationship between the rectangle side lengths. In fact, since we are working with a multivariate normal distribution, \(E(R_2|R_1=r)=0.0840+0.4375r\), meaning that the average ratio between the rectangle lengths should be approximately 0.4375, which is confirmed by Fig. 5(2).

However, even though definitions 2 and 3 lead to non-null symbolic correlations between \(X_1\) and \(X_2\) (0.377 and 0.170 for \(k=2\) and \(k=3\), respectively), this is mainly due to the micro-data structure assumed by these definitions, and not to the actual association between the two ranges. In fact, if \({\varvec{\mu }}\) and \({\varvec{\varPsi }}\) took the values of Eq. (17) except for \([\varPsi ]_{3,4}=\mathrm{Cov}(R_1,R_2)=0\), the definitions that consider only the centers would give exactly the same (null) values, and definitions 2 and 3 would still give positive values, since the range term \(\delta _k\mathrm{E}(R_1R_2)=\delta _k[\mathrm{Cov}(R_1,R_2)+\mathrm{E}(R_1)\mathrm{E}(R_2)]\) remains positive. As pointed out before, this is an important limitation of all eight symbolic correlation (covariance) definitions presented in Table 1.
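The conditional mean \(E(R_2|R_1=r)\) quoted in this example follows from the standard multivariate normal result: its slope is \(\mathrm{Cov}(R_1,R_2)/\mathrm{Var}(R_1)\) and its intercept is \(\mathrm{E}(R_2)\) minus the slope times \(\mathrm{E}(R_1)\). Eq. (17) is not reproduced here, so the sketch below uses hypothetical parameters for \((C_1,C_2,R_1,R_2)\), chosen only so that \(\mathrm{Cor}(R_1,R_2)=0.875\) and the slope equals 0.4375; its intercept therefore differs from 0.0840. The function name is hypothetical.

```python
import numpy as np

def conditional_mean_line(mu, psi, i, j):
    """Intercept and slope of E(Y_j | Y_i = y) = intercept + slope * y for a
    multivariate normal vector Y with mean mu and covariance psi (0-based i, j)."""
    mu = np.asarray(mu, dtype=float)
    psi = np.asarray(psi, dtype=float)
    slope = psi[i, j] / psi[i, i]            # Cov(Y_i, Y_j) / Var(Y_i)
    intercept = mu[j] - slope * mu[i]
    return intercept, slope

# Hypothetical parameters for (C_1, C_2, R_1, R_2): uncorrelated centers,
# Var(R_1) = 4, Var(R_2) = 1, Cov(R_1, R_2) = 1.75, so Cor(R_1, R_2) = 0.875.
mu = [0.0, 0.0, 2.0, 1.0]
psi = [[1.0, 0.0, 0.0, 0.0],
       [0.0, 1.0, 0.0, 0.0],
       [0.0, 0.0, 4.0, 1.75],
       [0.0, 0.0, 1.75, 1.0]]
intercept, slope = conditional_mean_line(mu, psi, i=2, j=3)
print(intercept, slope)
```

Here the slope is \(\mathrm{Cor}(R_1,R_2)\) times the ratio of standard deviations, \(0.875\times 0.5=0.4375\), which shows how a strong range correlation translates into a proportional relationship between rectangle lengths.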

Example 3

In this example, we illustrate one of the possible scenarios of Case 3 of Table 2. Assuming

leads to the following symbolic covariance matrices:

The example is built such that there is an association between the two centers that remains unnoticed by definitions 2 and 3. In these definitions, the contribution of the ranges to the symbolic covariance, which is always non-negative, can be offset by a negative covariance between the centers. Specifically, in this example, there is a high negative correlation between the centers (\(\mathrm{Cor}(C_1,C_2)=-0.75\), for \(k=2\)), but this correlation is masked by the positive contribution of the ranges. To confirm this, we again generated 30 observations from a multivariate normal distribution, with the parameters of Eq. (19) and \(k=4\) (\(\delta _k=1/4\)). The negative association between the centers is apparent in Fig. 6 but, as mentioned above, it is not captured by definitions 2 and 3.
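The masking mechanism can be stated as a simple balance condition. For a Scenario 2 definition, the symbolic covariance is null exactly when the center covariance offsets the range term:

```latex
\mathrm{Cov}_k(X_1,X_2)=\mathrm{Cov}(C_1,C_2)+\delta_k\,\mathrm{E}(R_1R_2)=0
\quad\Longleftrightarrow\quad
\mathrm{Cov}(C_1,C_2)=-\delta_k\,\mathrm{E}(R_1R_2),\qquad k=2,3.
```

Since ranges are non-negative, \(\mathrm{E}(R_1R_2)\ge 0\), so only a negative center covariance can produce this cancellation. Because the balance depends on \(\delta_k\), parameters that cancel it exactly for one definition leave a residual for the other: if \(\mathrm{Cov}(C_1,C_2)=-\tfrac{1}{4}\mathrm{E}(R_1R_2)\), then \(\mathrm{Cov}_3(X_1,X_2)=-\tfrac{1}{6}\mathrm{E}(R_1R_2)\), a value whose magnitude is much smaller than what the centers alone would suggest. Whether Eq. (19) uses exact cancellation is not reproduced here.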

Data generated according to Example 3 (for \(k=4\)), where centers are correlated and ranges are uncorrelated

3 Analysis of datasets

In this section, we explore four datasets to illustrate the concepts and results derived previously. The first dataset is the Iris data, which corresponds to four different characteristics of flowers from three different species. The observations are artificially grouped by location, which defines, together with the species, the macro-data object to be studied.

The next two datasets are Internet traffic data, which are particularly amenable to SDA for two reasons. First, with the increase in telecommunication link speeds and in the volume of data stored in Internet sites and repositories (the Big Data problem), it becomes impractical (due to excessive computational costs) to store all measured data (e.g. the arrival time and size of each individual packet observed at a given high-speed link). In this case, the analyst may only have access to summary data (e.g. the maximum and minimum packet sizes observed in a given time period), which can be advantageously handled as a symbolic variable. Second, Internet traffic has several levels (views) organized hierarchically (e.g. the packet level, the session level, and the application level), and often, when analyzing one level, there is no interest in having detailed information on the lower levels. For example, when studying TCP connections (session level), we need information on the patterns of the packet arrival times and packet sizes of the connection, but not on the arrival time and size of each individual packet that belongs to the connection (packet level). Rahman et al. (2020) also present an application of SDA to Internet traffic data analysis.

The last dataset corresponds to one year of monthly credit card expenses on five different types of items, for which both the micro-data and the macro-data are available.

3.1 Iris data

The Iris data are probably among the best known and most used datasets in multivariate analysis, and are also a reference in the SDA community. The Iris dataset contains a total of 150 plants, 50 from each of 3 species (setosa, versicolor, and virginica), and each plant is characterized in terms of sepal length, sepal width, petal length, and petal width. Billard and Diday (2006) hypothetically associated sets of five plants of the same species with a location. In this case, each macro-data object corresponds to a location of five plants from the same species and is characterized by four interval-valued variables (sepal length, sepal width, petal length, petal width) (\(p=4\)). Since there is a total of 150 plants and 5 plants per location, the number of macro-data objects is 30 (\(n=30\)), 10 per species.
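The construction of the 30 macro-data objects can be sketched as follows. This sketch assumes, hypothetically, that each location is formed by five consecutive rows of a 150 × 4 array sorted by species (50 rows each, so no group mixes species); the exact grouping used by Billard and Diday (2006) is not specified here. Each interval is the [minimum, maximum] of the five plants, and the function name is hypothetical.

```python
import numpy as np

def group_into_intervals(micro, group_size=5):
    """Turn an (N, p) micro-data array into N // group_size interval-valued objects.

    Consecutive rows are grouped (an assumption of this sketch); the interval of
    each object for variable j is [min, max] over the rows of its group.
    """
    micro = np.asarray(micro, dtype=float)
    N, p = micro.shape
    if N % group_size != 0:
        raise ValueError("number of rows must be a multiple of group_size")
    blocks = micro.reshape(N // group_size, group_size, p)  # (objects, plants, variables)
    return blocks.min(axis=1), blocks.max(axis=1)

# Synthetic stand-in for the 150 x 4 iris measurements.
micro = np.arange(600, dtype=float).reshape(150, 4)
lower, upper = group_into_intervals(micro)
print(lower.shape, upper.shape)
```

With the real data, the same call would produce the 30 × 4 arrays of lower and upper limits that describe the locations.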

In order to visualize the data, we developed an R function (R Core Team 2015) that produces a matrix of all possible combinations of symbolic bivariate scatter plots and the corresponding sample symbolic correlations (available upon request). Figure 7 gives an example. The diagonal panels of the matrix show the names of the variables. The lower panels (below the diagonal) show the scatter plots: in the (i, j) panel, \(i<j\), each symbolic object is represented by a rectangle whose length and height correspond to the interval values of the i-th and j-th variables. The upper panels show the sample symbolic correlations: the (i, j) panel, \(i>j\), shows the sample correlations that correspond to the (j, i) scatter plot. Each sample symbolic correlation panel has eight values, one for each of the definitions introduced in previous sections. Inspired by similar R functions, we call this plot symbolic pairs.

In Fig. 7, the locations are colored according to species: black for setosa, blue for versicolor, and red for virginica. We notice a strong positive association between petal length and width, and a slightly weaker positive association between sepal and petal length, and between sepal length and petal width. In general, locations associated with the setosa species have smaller center values and inner variability in all variables. Furthermore, virginica's locations have the highest center values and slightly higher inner variability when compared with versicolor's locations, in terms of petal width and length. Similar patterns, although less clear, are noticed in terms of sepal and petal length. We also notice that petal length and width give a good separation among species. These patterns are also observed in the micro-data.

3.2 Internet traffic redirection attacks

Traffic redirection attacks divert Internet traffic from its normal routes, allowing attackers interposed between the source and the destination of the communications (man-in-the-middle attackers) to gain access to sensitive information and/or to increase the network delay, among other motivations. This type of attack exploits vulnerabilities in the Border Gateway Protocol (BGP), which is responsible for the Internet-wide routing of information. The attack increases the round-trip time (RTT) of the communications (from the source to the destination and back to the source), and this feature is usually used to detect the attack. However, the attack is difficult to detect when the attacker is located close to the source or the destination.

Salvador and Nogueira (2014) proposed a monitoring infrastructure to detect traffic redirection attacks. This platform comprises a set of geographically dispersed monitoring servers (probes) that periodically measure the RTT to hosts under surveillance (targets). In this case, it may be possible to detect stealthy attacks through suitable statistical processing of the RTTs measured by the various probes.

To evaluate the RTT, each probe makes 10 RTT measurements every 120 s. These RTT measurements form the micro-data, but we only have access to the average, minimum, and maximum RTT values computed over the 10 individual measurements. The RTT interval (minimum and maximum) forms the macro-data that we study in this example.

In our example, there are four probes, located in Amsterdam, Chicago, Los Angeles (LA), and Johannesburg, and the target is in Hong Kong. During the measurement period, two different attacks were performed, one redirecting the traffic through Moscow and the other through Los Angeles (a site different from that of the Los Angeles probe). A total of 2286 RTT interval measurements were collected, of which 1799 correspond to regular traffic, 241 to traffic redirected through Los Angeles, and 246 to traffic redirected through Moscow. For further details on the dataset, see Subtil (2020).

By plotting the average RTT versus the interval center and by looking at their sample correlation (always higher than 0.979), we found evidence that the micro-data are symmetrically distributed within the intervals, supporting one of the assumptions required by the definitions of symbolic covariance matrices. The matrix of scatter plots and the associated symbolic correlations based on the eight definitions under study are summarized in Fig. 8.
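The symmetry check described above only needs the stored summaries (average, minimum, maximum). A minimal sketch with hypothetical names, using synthetic data in place of the RTT measurements: besides the correlation between averages and centers, it reports the mean of 2(average − center)/range, an average-based analogue of \(U_{j}=2(A_{j}-C_j)/R_j\) that should be close to 0 under symmetry. This second index is an addition of the sketch, not a statistic used in the text; note also that a high correlation largely reflects variation of the RTT level across intervals, so it is consistent with, rather than proof of, symmetry.

```python
import numpy as np

def symmetry_diagnostics(avg, lo, hi):
    """Correlation between per-interval averages and centers, and the mean
    standardized offset 2 * (average - center) / range over non-degenerate intervals."""
    avg, lo, hi = (np.asarray(v, dtype=float) for v in (avg, lo, hi))
    center = (lo + hi) / 2.0
    r = np.corrcoef(avg, center)[0, 1]
    width = hi - lo
    ok = width > 0                                  # skip zero-width intervals
    offset = np.mean(2.0 * (avg[ok] - center[ok]) / width[ok])
    return r, offset

# Synthetic stand-in: 2000 intervals, each summarizing 10 symmetric measurements.
rng = np.random.default_rng(0)
level = rng.uniform(50.0, 300.0, size=(2000, 1))          # invented RTT levels (ms)
micro = level + rng.uniform(-5.0, 5.0, size=(2000, 10))  # symmetric spread around each level
r, offset = symmetry_diagnostics(micro.mean(axis=1), micro.min(axis=1), micro.max(axis=1))
print(r, offset)
```

With skewed within-interval micro-data, the offset would move away from 0 even if the correlation stayed high.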

The bivariate plots seem to indicate that the probes located in LA and Chicago separate the regular traffic (black rectangles) from the traffic redirected through Moscow (blue rectangles). Moreover, this redirection induces a significant increase in RTT values. This explains the high correlation detected by all sample symbolic definitions of correlation, ranging from 0.896 for definition 4 to 0.950 for definition 1 (see the second row and third column of the matrix displayed in Fig. 8). This figure also suggests that the Amsterdam and Johannesburg probes cannot detect the two attacks. The RTTs measured by these two probes seem to follow a fairly similar pattern, which explains their medium correlation according to all symbolic definitions.

The most important observation regarding this example is that, for each of the six probe pairs, the sample symbolic correlation is approximately the same for all eight definitions. This is because all definitions give more weight to the interval centers than to the ranges and, in this dataset, the correlation between centers is much stronger than between ranges. Note that in all definitions the center term has weight one, while the maximum weight associated with the range term is 1/4. To confirm the dominance of centers over ranges, we obtained the sample correlations between the centers:

As can be seen, these correlations are close to those of Fig. 8. For example, the symbolic correlations between the Amsterdam and Chicago probes are all close to \([\widehat{{\varvec{P}}}_{CC}]_{2,1}=-0.124\).

3.3 Internet traffic of backbone networks

This dataset corresponds to Internet traffic observed in backbone networks (at the core of the Internet) and includes a mixture of Internet applications and attacks, namely Web browsing, file sharing, streaming, video, port scans, and snapshots. It was analyzed in Oliveira et al. (2017) using Symbolic Principal Component Analysis for interval-valued data. The dataset comprises 917 traffic objects, called datastreams, corresponding to packet flows of specific applications and attacks. Five traffic characteristics were measured for each datastream in intervals of 0.1 s: (i) number of upstream packets, (ii) number of downstream packets, (iii) number of upstream bytes, (iv) number of downstream bytes, and (v) number of active TCP sessions. The values of these characteristics form the micro-data. The macro-data correspond to the interval (minimum and maximum) of each traffic characteristic, computed over each datastream.

As a preprocessing step, we corrected the asymmetry of the micro-data, so that the definitions of symbolic covariance matrices presented in Sect. 2 could be used.

In this example, there is a strong association between the centers and ranges of the first four variables. Thus, the definitions that do not take the ranges into consideration when defining the sample covariance lead to low correlations, and the remaining definitions lead to very high correlations. To illustrate this, we consider definitions 3 and 5, where definition 5 does not account for the ranges in the sample covariance. The corresponding sample correlation matrices are:

As can be seen, the sample correlations obtained with definitions 3 and 5 differ substantially.

3.4 Credit card data

This example considers a well-known symbolic dataset, used in Billard and Diday (2003, 2006), which has the merit of having both the macro- and the micro-data available. The micro-data correspond to the monthly expenses (in dollars) of three persons, recorded over 12 months, on five different items: food, social entertainment, travel, gas, and clothes. There is a total of 1000 records. The credit card issuer is interested in characterizing the monthly expenses of each person, during one year, over the five different expense types. In our case, each macro-data object corresponds to the expenses of one person in one month and is characterized by five interval-valued variables, one for each expense type (\(p=5\)). Since there is a total of 3 persons and 12 months, the number of macro-data objects is 36 (\(n=36\)). The five interval-valued variables are \(X_1\) (food), \(X_2\) (social entertainment), \(X_3\) (travel), \(X_4\) (gas), and \(X_5\) (clothes).

The symbolic bivariate plots and the corresponding sample symbolic correlations are shown in Fig. 9, where each person is represented with a different color. Clothes separate the three persons relatively well. The highest sample symbolic correlations (in absolute value) are those between food and clothes (positive values, ranging from \(\widehat{\mathrm{Cor}}_4(X_1,X_5)=0.342\) to \(\widehat{\mathrm{Cor}}_2(X_1,X_5)=0.519\)) and between gas and clothes (negative values, ranging from \(\widehat{\mathrm{Cor}}_2(X_4,X_5)=-0.335\) to \(\widehat{\mathrm{Cor}}_1(X_4,X_5)=-0.674\)). Thus, subjects with high expenses on clothes tend to have higher food and lower gas expenses.

The results regarding the sample symbolic correlation show that there can be a significant divergence among the eight definitions, which can even lead to different signs. For example, for the sample correlation between food and gas, seven definitions lead to negative values (between −0.401 and −0.074), while definition \(k=2\) leads to a positive value (0.261). This also happens in the Iris data for the sample correlation between sepal length and width, as can be seen in Fig. 7.

In fact, for \(k=2\) and \(k=3\), the symbolic covariance is a balance between the effect of the centers, measured by the term \(\mathrm{Cov}(C_j,C_l)\) (which can take positive or negative values), and the effect of the ranges, measured by the term \(\delta _k\mathrm{E}(R_jR_l)\) (which can only take non-negative values), with \(\delta _2=1/4\) and \(\delta _3=1/12\). In this example, \(\widehat{\mathrm{Cov}}(C_1,C_4)=-8.953\) and \(\hat{\mathrm{E}}(R_1R_4)=81.740\). Thus, for \(\delta _2=1/4\), the contribution of the ranges outweighs the negative covariance between the centers, leading to a positive correlation. In contrast, for \(k=3\), the smaller weight \(\delta _3=1/12\) leads to a negative correlation.
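The sign reversal can be checked with the two sample moments reported above. The symbolic correlation has the same sign as the symbolic covariance, because the symbolic variances are positive, so the sign of \(\widehat{\mathrm{Cov}}(C_1,C_4)+\delta _k\hat{\mathrm{E}}(R_1R_4)\) decides the outcome:

```python
cov_centers = -8.953  # sample covariance of the food and gas centers (from the text)
m2_ranges = 81.740    # sample mean of R_1 * R_4 (from the text)

# Scenario 2 weights: delta_2 = 1/4, delta_3 = 1/12.
cov_k = {k: cov_centers + delta * m2_ranges for k, delta in ((2, 1 / 4), (3, 1 / 12))}
for k, value in cov_k.items():
    print(f"k={k}: {value:+.3f}")
# k=2: +11.482
# k=3: -2.141
```

The range term contributes about 20.44 for \(k=2\) but only about 6.81 for \(k=3\), which is not enough to offset the center covariance of −8.953.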

Another clear example of divergence between definitions is the sample symbolic correlation between money spent on food and social entertainment. In this case, the sample correlation ranges from \(\widehat{\mathrm{Cor}}_4(X_1,X_2)=0.028\) to \(\widehat{\mathrm{Cor}}_2(X_1,X_2)=0.712\).

The divergence between the sample symbolic correlations obtained with the various definitions motivates searching for the most appropriate definition, which is possible here since both the micro- and the macro-data are available. To address this issue, we represent in Fig. 10 the values of the random variables \(U_{j}\), describing the (linearly) transformed micro-data, according to Eq. (3). In this example, the macro-data were not observed directly but result from the aggregation of the micro-data, according to the credit card issuer's criteria. Thus, some of the observed micro-data values define the observed interval limits (and therefore correspond to \(U_j=-1\) or \(U_j=1\)); to avoid distorting the results, we removed them from the analysis.
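The transformation and the removal of the boundary values can be sketched for one macro-data object and one variable. The sketch assumes, hypothetically, that the interval limits are the minimum and maximum of the object's micro-data; if several values tie at a limit, all of them are dropped, which is a choice of this sketch. The function name is hypothetical.

```python
import numpy as np

def transformed_micro(values):
    """U = 2 (A - C) / R for the micro-data A of one interval whose limits are
    the minimum and maximum of the values; values at the limits (U = -1 or
    U = 1) are dropped."""
    a = np.asarray(values, dtype=float)
    lo, hi = a.min(), a.max()
    r = hi - lo
    if r == 0:
        return np.array([])                 # degenerate interval: U is undefined
    u = 2.0 * (a - (lo + hi) / 2.0) / r     # maps [lo, hi] onto [-1, 1]
    return u[(a > lo) & (a < hi)]

print(transformed_micro([1.0, 2.0, 3.0, 5.0]))
```

For the values 1, 2, 3, 5 the interval is [1, 5], with center 3 and range 4, so the retained interior values 2 and 3 map to −0.5 and 0.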

The scatter plots and sample correlations between realizations of \(U_{j}\) and \(U_{l}\) (\(j\ne l\)) in Fig. 10 indicate that these random variables are uncorrelated and that the most promising models among the eight introduced above (see Table 1) are: (i) continuous Uniform, (ii) Triangular, and (iii) truncated Normal distribution, associated with \({\varvec{\varSigma }}_5\), \({\varvec{\varSigma }}_7\), and \({\varvec{\varSigma }}_8\), respectively.

We tested these three hypotheses using Anderson–Darling goodness-of-fit tests (Anderson 2011), but the null hypothesis was always rejected. This can be explained by the large number of observations, which makes even a small departure from the theoretical model statistically significant, although it may not be relevant from a practical point of view.
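To see why a large sample leads to rejection, the Anderson–Darling statistic against the fully specified Triangular\([-1,0,1]\) model can be computed directly. The sketch below implements the standard \(A^2\) formula with NumPy rather than calling a library test, and its function names are hypothetical. For a fully specified null, the asymptotic 5% critical value is about 2.49.

```python
import numpy as np

def triangular_cdf(u):
    """CDF of the symmetric Triangular[-1, 0, 1] distribution."""
    u = np.clip(np.asarray(u, dtype=float), -1.0, 1.0)
    return np.where(u <= 0.0, 0.5 * (u + 1.0) ** 2, 1.0 - 0.5 * (1.0 - u) ** 2)

def anderson_darling(sample, cdf):
    """Anderson-Darling statistic A^2 against a fully specified continuous CDF:
    A^2 = -n - (1/n) * sum_i (2i - 1) * [ln F(x_(i)) + ln(1 - F(x_(n+1-i)))]."""
    x = np.sort(np.asarray(sample, dtype=float))
    n = x.size
    F = np.clip(cdf(x), 1e-12, 1.0 - 1e-12)   # avoid log(0) at the ends of the support
    i = np.arange(1, n + 1)
    return -n - np.mean((2 * i - 1) * (np.log(F) + np.log(1.0 - F[::-1])))

rng = np.random.default_rng(1)
a2_tri = anderson_darling(rng.triangular(-1.0, 0.0, 1.0, size=2000), triangular_cdf)
a2_unif = anderson_darling(rng.uniform(-1.0, 1.0, size=2000), triangular_cdf)
print(a2_tri, a2_unif)
```

Under the null, \(A^2\) stays bounded in distribution as n grows, whereas for a fixed departure from the model it grows roughly in proportion to n. This is why large samples, such as the credit card micro-data, can lead to rejection even when the fit is good in practice.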

To overcome this problem, we made quantile-quantile plots with 95% pointwise envelopes (using the function qqPlot from the R package car; Fox and Weisberg 2011) and obtained the percentage of points outside the envelope as a measure of goodness of fit. The Triangular distribution achieved the best results: the percentage of points outside the 95% envelope ranges from 1.72% (for social entertainment) to 9.61% (for food). Figure 11 shows these two quantile-quantile plots, which support the goodness of fit. From this result, we conclude that definition \(k=7\) is the most appropriate for this dataset. Accordingly, the chosen sample symbolic correlation matrix is

For example, there is a moderate positive symbolic correlation (0.471) between money spent on food and clothes, and a stronger, negative association between gas and clothes (−0.631).

Linearly transformed micro-data quantile-quantile plots, for Triangular\([-1,0,1]\) distribution. Percentage of points outside the 95% envelope: 9.61% for (1) food (\(U_{1}\), worst case) and 1.72% for (2) social entertainment (\(U_{2}\), best case), where \(U_{j}=2(A_{j}-C_j)/R_j\), \(j=1,2,\ldots ,5\)
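The percentage of points outside a 95% pointwise envelope, used above as a measure of fit, can be approximated by simulation. This is a sketch under stated assumptions and may differ from the envelope computed by car's qqPlot: here each order statistic of the data is compared with the central 95% interval of the corresponding order statistic over samples simulated from the hypothesized Triangular\([-1,0,1]\) model. The function and parameter names are hypothetical.

```python
import numpy as np

def pct_outside_envelope(sample, sampler, n_sim=1000, level=0.95, seed=0):
    """Percentage of sorted observations outside simulated pointwise envelopes.

    `sampler(rng, size)` draws from the hypothesized distribution; for each order
    statistic, the envelope is its central `level` interval across `n_sim`
    simulated samples of the same size.
    """
    rng = np.random.default_rng(seed)
    x = np.sort(np.asarray(sample, dtype=float))
    sims = np.sort(sampler(rng, (n_sim, x.size)), axis=1)   # one sorted sample per row
    alpha = 1.0 - level
    lower = np.quantile(sims, alpha / 2.0, axis=0)
    upper = np.quantile(sims, 1.0 - alpha / 2.0, axis=0)
    return 100.0 * np.mean((x < lower) | (x > upper))

def triangular(rng, size):
    """Sampler for the Triangular[-1, 0, 1] model."""
    return rng.triangular(-1.0, 0.0, 1.0, size=size)

data_rng = np.random.default_rng(42)
pct_tri = pct_outside_envelope(data_rng.triangular(-1.0, 0.0, 1.0, size=500), triangular)
pct_unif = pct_outside_envelope(data_rng.uniform(-1.0, 1.0, size=500), triangular)
print(pct_tri, pct_unif)
```

Data drawn from the hypothesized model typically leave only a small percentage of points outside, while Uniform data checked against the Triangular model leave most points outside, so the percentage gives a graded, sample-size-aware view of fit instead of a single reject/accept decision.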

3.5 Discussion

The previous examples show that different definitions may lead to quite different results (symbolic sample correlation matrices), depending on the micro-data. Thus, in order to decide on the most appropriate definition, one must have some information about the micro-data structure of the specific case under analysis. Moreover, different definitions may reveal different, but equally interesting, aspects of the data. For example, in Oliveira et al. (2017), which uses the dataset of Sect. 3.3, definitions 3 and 5 were used to obtain the sample Symbolic Principal Components and associated scores. In this case, definition 3 highlighted a good separation between the various applications, while definition 5 detected the existence of 3 subgroups in the file sharing application (Torrent) [see Fig. 1 of Oliveira et al. (2017)].

We also point out that the existence of different definitions, although it introduces the problem of choosing between them, enriches the set of available statistical tools for exploratory analysis.

4 Conclusions

The low cost of information storage combined with recent advances in search and retrieval technologies has made huge amounts of data available, the so-called big data explosion. New statistical analysis techniques are now required to deal with the volume and complexity of this data, and Symbolic Data Analysis (SDA) is a promising approach.

In this paper, we propose a model linking the micro-data with the macro-data of interval-valued symbolic variables, which takes a populational perspective. In this model, the micro-data are defined as a random vector that is a function of the centers and ranges associated with the macro-data and of random weights characterizing the structure of the micro-data given the associated macro-data. The model defines two scenarios in which the various definitions of symbolic covariance matrices already proposed in the literature arise as particular cases. These scenarios correspond to two extreme situations regarding the random weights: in the first scenario, the weights are independent random variables, and in the second, they are almost surely equal; in both scenarios, the weights are zero-mean latent variables, uncorrelated with the centers and ranges. These conditions on the random weights imply that the current definitions of symbolic covariance matrices rely on micro-data assumptions that may be too stringent, raising applicability concerns. Clearly, more research is required in this area.

We discuss in detail several cases where the existence or absence of correlations in the macro-data is not correctly captured by the definitions. These inconsistencies are explained by the (too restrictive) underlying micro-data assumptions. These cases also highlight that, under the current definitions, a null symbolic covariance cannot be interpreted as absence of association. This reinforces the need for further research on how to measure associations between interval-valued variables.

The analysis of four different datasets further explores the various definitions of symbolic covariance matrices. We show that, with real data, there can be a large divergence between the various definitions, in particular when there is a strong association between the ranges. Thus, in order to select the most appropriate definition, one must have some knowledge about the micro-data structure. For datasets where both the micro- and macro-data are available, we were able to select the definition that best explains the data. We also highlight that different definitions may reveal different aspects of the data, which can be useful in exploratory data analysis.

References

Anderson TW (2011) Anderson–Darling tests of goodness-of-fit. In: Lovric M (ed) International encyclopedia of statistical science. Springer, Berlin, pp 52–54

Beranger B, Lin H, Sisson SA (2020) New models for symbolic data analysis. arXiv:1809.03659

Bertrand P, Goupil F (2000) Descriptive statistics for symbolic data. In: Bock HH, Diday E (eds) Analysis of symbolic data, studies in classification, data analysis, and knowledge organization. Springer, Berlin, pp 106–124

Billard L (2008) Sample covariance functions for complex quantitative data. In: Proceedings of World IASC conference, Yokohama, Japan, pp 157–163

Billard L, Diday E (2003) From the statistics of data to the statistics of knowledge: symbolic data analysis. J Am Stat Assoc 98:470–487

Billard L, Diday E (2006) Symbolic data analysis: conceptual statistics and data mining. Wiley, Hoboken

Bock HH, Diday E (2000) Analysis of symbolic data: exploratory methods for extracting statistical information from complex data. Springer, New York

Brito P (2014) Symbolic data analysis: another look at the interaction of data mining and statistics. Wiley Interdiscip Rev Data Min Knowl Discov 4(4):281–295

Brito P, Duarte Silva AP (2012) Modelling interval data with normal and skew-normal distributions. J Appl Stat 39(1):3–20

Cazes P, Chouakria A, Diday E, Schektman Y (1997) Extension de l’analyse en composantes principales à des données de type intervalle. Revue de Statistique Appliquée 45(3):5–24

Cheira P, Brito P, Duarte Silva AP (2017) Factor analysis of interval data. arXiv:1709.04851

Chouakria A (1998) Extension des méthodes d’analyse factorielle à des données de type intervalle. Ph.D. thesis, Université Paris-Dauphine

de Carvalho FAT, Lechevallier Y (2009) Partitional clustering algorithms for symbolic interval data based on single adaptive distances. Pattern Recogn 42(7):1223–1236

de Carvalho FAT, Brito P, Bock HH (2006) Dynamic clustering for interval data based on L2 distance. Comput Stat 21(2):231–250

Dias S, Brito P (2017) Off the beaten track: a new linear model for interval data. Eur J Oper Res 258(3):1118–1130

Diday E (1987) The symbolic approach in clustering and related methods of Data Analysis. In: Bock H (ed) Proceedings of first conference IFCS, Aachen, Germany. North-Holland

Duarte Silva AP, Brito P (2015) Discriminant analysis of interval data: an assessment of parametric and distance-based approaches. J Classif 32(3):516–541

Duarte Silva AP, Filzmoser P, Brito P (2018) Outlier detection in interval data. Adv Data Anal Classif 12(3):785–822

Filzmoser P, Brito P, Duarte Silva AP (2014) Outlier detection in interval data. In: Gilli M, Gonzalez-Rodriguez G, Nieto-Reyes A (eds) Proceedings of COMPSTAT 2014, p 11

Fox J, Weisberg S (2011) An R companion to applied regression, 2nd edn. Sage, Thousand Oaks

Johnson RA, Wichern DW (2007) Applied multivariate statistical analysis. Prentice-Hall Inc, Upper Saddle River

Le-Rademacher J (2008) Principal component analysis for interval-valued and histogram-valued data and likelihood functions and some maximum likelihood estimators for symbolic data. Ph.D. thesis, University of Georgia, Athens, GA

Le-Rademacher J, Billard L (2011) Likelihood functions and some maximum likelihood estimators for symbolic data. J Stat Plan Inference 141(4):1593–1602

Le-Rademacher J, Billard L (2012) Symbolic covariance principal component analysis and visualization for interval-valued data. J Comput Graph Stat 21(2):413–432

Lima Neto EA, Cordeiro GM, de Carvalho FA (2011) Bivariate symbolic regression models for interval-valued variables. J Stat Comput Simul 81(11):1727–1744

Maia ALS, de Carvalho FAT, Ludermir TB (2008) Forecasting models for interval-valued time series. Neurocomputing 71(16–18):3344–3352

Noirhomme-Fraiture M, Brito P (2011) Far beyond the classical data models: symbolic data analysis. Stat Anal Data Min ASA Data Sci J 4(2):157–170

Oliveira MR, Vilela M, Pacheco A, Valadas R, Salvador P (2017) Extracting information from interval data using symbolic principal component analysis. Austrian J Stat 46:79–87

Queiroz DCF, de Souza RMCR, Cysneiros FJA, Araújo MC (2018) Kernelized inner product-based discriminant analysis for interval data. Pattern Anal Appl 21(3):731–740

R Core Team (2015) R: a language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria

Rahman PA, Beranger B, Roughan M, Sisson SA (2020) Likelihood-based inference for modelling packet transit from thinned flow summaries. arXiv:2008.13424

Salvador P, Nogueira A (2014) Customer-side detection of Internet-scale traffic redirection. In: 16th international telecommunications network strategy and planning symposium (Networks 2014), pp 1–5

Sato-Ilic M (2011) Symbolic clustering with interval-valued data. Procedia Comput Sci 6:358–363

Subtil A (2020) Latent class models in the evaluation of biomedical diagnostic tests and internet traffic anomaly detection. Doctoral thesis, Instituto Superior Técnico, Universidade de Lisboa, Portugal

Teles P, Brito P (2015) Modeling interval time series with space-time processes. Commun Stat Theory Methods 44(17):3599–3627

Vilela M (2015) Classical and robust symbolic principal component analysis for interval data. Master’s thesis, Instituto Superior Técnico, Universidade de Lisboa, Portugal

Wang H, Guan R, Wu J (2012) CIPCA: complete-information-based principal component analysis for interval-valued data. Neurocomputing 86:158–169

Zhang X, Sisson SA (2020) Constructing likelihood functions for interval-valued random variables. Scand J Stat 47:1–35

Acknowledgements

This research has been supported by Fundação para a Ciência e Tecnologia (FCT), Portugal, through the projects UIDB/04621/2020, UIDB/50008/2020, PTDC/EEI-TEL/32454/2017, and PTDC/EGE-ECO/30535/2017. We thank the reviewers for their constructive comments and suggestions, which greatly enriched the paper.


About this article

Cite this article

Oliveira, M.R., Azeitona, M., Pacheco, A. et al. Association measures for interval variables. Adv Data Anal Classif 16, 491–520 (2022). https://doi.org/10.1007/s11634-021-00445-8
