Abstract

Resistivity inversion plays a significant role in geological exploration because it recovers formation information from logging data. In practice, however, it faces several challenges. The inverse problem is nonlinear, non-unique, and ill-posed, and conventional inversion approaches are time-consuming, which can lead to an inaccurate and inefficient description of subsurface structure in terms of resistivity estimation and boundary location. In this paper, a robust inversion approach is proposed to improve the efficiency of resistivity inversion. Specifically, motivated by the remarkable nonlinear mapping ability of deep neural networks (DNNs), the proposed inversion scheme adopts a DNN architecture. Batch normalization is used to mitigate vanishing gradients during training, and k-fold cross-validation is used to suppress overfitting. Several groups of experiments demonstrate the feasibility and efficiency of the proposed inversion scheme. In addition, the robustness of the DNN-based inversion scheme is validated by adding different levels of noise to the synthetic measurements. Experimental results show that the proposed scheme achieves faster convergence and higher resolution than the conventional inversion approach in the same scenario, which is significant for geological exploration in layered formations.

Introduction

With the development of exploration techniques, electromagnetic logging-while-drilling (LWD) has been widely used in geophysical exploration. Because the electromagnetic measurements cannot be interpreted directly during drilling, the quantitative evaluation of formation boundaries and resistivity near the logging tool must rely on inversion (Liu et al. 2014; Yang et al. 2017; Li et al. 2022). Resistivity inversion reconstructs complex subsurface properties through the interpretation of observed measurements (Hao et al. 2022).

However, the inversion of logging measurements faces many challenges in layered formations. The inverse problem is highly nonlinear, and it is ill-posed because its solutions are non-unique. Gradient-based and stochastic algorithms are widely used to tackle this problem. Regularization is used to reduce the ill-posedness of the problem and stabilize the solution. Stochastic algorithms governed by Bayes' theorem can overcome the local optima problem by randomly sampling multiple possible solutions and producing a distribution of parameters, and several techniques have been proposed to speed up the sampling process. Particle swarm optimization overcomes the local optimum problem by globally searching the model space with a large number of particles, at the expense of a large number of forward computations.

Thiel and Omeragic (2017) proposed a pixel-based inversion scheme for deep-sounding electromagnetic logging data. Based on the Gauss–Newton method, the scheme used adaptive L1-norm regularization and a robust error term to determine the 1D formation resistivity. Pardo and Torres-Verdín (2015) developed an efficient inversion method that estimates resistivity from logging measurements and automatically determines the weights, regularization parameter, Jacobian matrix, inversion variable, and stopping criteria. Wang et al. (2017) developed an inversion approach based on a fast forward solver to determine the formation resistivities, anisotropy dip, and azimuth. Heriyanto and Srigutomo (2017) used the Levenberg–Marquardt approach and singular value decomposition (SVD) to obtain a layered resistivity structure from logging data. For stochastic inversion, many scholars have proposed alternative methods to reduce the sampling space without affecting the accuracy of the final solution. Shen et al. (2018) proposed a statistical inversion method based on hybrid Monte Carlo sampling for the LWD azimuthal inversion model, which improved sampling efficiency and searched for global solutions of the parameters. Wang et al. (2019) proposed a Bayesian inversion method for extracting multilayer boundaries from ultra-deep directional LWD resistivity measurements. Veettil and Clark (2020) developed a geo-steering sequential Monte Carlo method with resampling that can estimate the probability of formation depth from gamma-ray measurements, but the reservoir evaluation results did not include formation properties.

However, gradient-based algorithms need an initial model, and their results are prone to falling into local minima. Moreover, both gradient-based and stochastic inversion methods require multiple forward-modeling runs at each logging location, which is time-consuming and makes them impractical for real-time geo-steering.

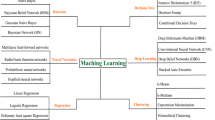

Artificial neural networks, with their remarkable nonlinear mapping ability, provide a new perspective on solving inversion problems in geophysical exploration (Xu et al. 2018; Tembely et al. 2020; Colombo et al. 2021a, b; Shahriari et al. 2021). Zhang (2000) used a modular neural network to interpret well logs: according to the resistivity of adjacent layers in a three-layer formation, the data set was divided into several subsets, and a separate neural network was trained on each subset. Singh et al. (2013) analyzed the sensitivity of artificial neural network parameters, such as the number of hidden neurons, using vertical electrical sounding data. Raj et al. (2014) used single-layer neural networks for resistivity inversion. Shahriari et al. (2020) used an inversion scheme based on a fully connected neural network to invert logging data in anisotropic formations, but the inversion results differed considerably from the actual formation and could serve only as a reference before the inversion. Shahriari et al. (2022) proposed an iterative deep neural network (DNN)-based algorithm to design a measurement acquisition system. Noh et al. (2022) used a ResNet and a one-dimensional convolutional neural network to perform 2.5D inversion of deep-sounding electromagnetic logging data and compared inversion using only short-spacing measurement-while-drilling (MWD) data with inversion that also used deep-sounding measurements; the results show that the method can detect and quantify arbitrarily inclined fault planes in real time. Liu et al. (2020) used a U-Net-based convolutional neural network to achieve high-precision inversion of electrical resistivity survey (ERS) data. Hu et al. (2020) proposed a solution based on the supervised descent method for electromagnetic logging-while-drilling inversion. The training model set is generated in advance according to prior information, and the descent directions used in the iterative process are learned during offline training. The learned descent directions and the data residuals are then used to update the model, which speeds up the inversion iterations. Alyaev et al. (2021a) used a DNN to accurately calculate the response of an ultra-deep electromagnetic logging tool at each logging point. The training data set came from commercial simulation software provided by the logging tool supplier. Forward modeling of a logging point takes only 0.15 ms, so the network can be used in the geo-steering workflow to shorten forward modeling and speed up inversion.

Although plenty of work has been done in geological exploration, research on logging-while-drilling tools in layered formations is lacking. In addition, despite its high accuracy and efficiency, the DNN approach has its own limitations. Because a DNN contains many hidden layers, the distribution of each layer's inputs gradually shifts during training, which affects subsequent layers and leads to vanishing gradients. Moreover, overfitting may occur during training.

In this article, we study an efficient and robust DNN-based inversion scheme for solving LWD inverse problems in layered formations. The proposed scheme reconstructs both thickness and resistivity and combines a DNN architecture with batch normalization and k-fold cross-validation. Batch normalization reduces the internal covariate shift during training, which makes the neural network model more robust and accelerates training. The k-fold cross-validation approach is used to prevent overfitting. The effectiveness of batch normalization is verified by comparing training with and without it. In addition, several groups of experiments demonstrate the feasibility, efficiency, and robustness of the proposed inversion approach. This study provides a strategy for dramatically improving the efficiency of resistivity inversion.

The remainder of this article is organized as follows. Sect. "Forward modeling and data preparation" introduces the LWD tool and its measurements and presents a sensitivity study of the measurements. Sect. "Inversion with DNN-based scheme" details the implementation of the proposed inversion scheme, including the DNN architecture, backpropagation algorithm, batch normalization, and k-fold cross-validation. Sect. "Results and discussion" validates the feasibility and accuracy of the proposed inversion scheme and illustrates its robustness and efficiency. Finally, conclusions are drawn in Sect. "Conclusions".

Forward modeling and data preparation

Forward modeling

The basic architecture of the formation and the electromagnetic logging instrument is shown in Fig. 1. The instrument consists of two symmetrically compensated transmitter coils, T1 and T2, and two symmetrically balanced receiver coils, R1 and R2. The symmetrical compensation coils of the induction logging tool make the measurements more accurate (Xing et al. 2008; Li et al. 2015). The coils can be treated as magnetic dipoles in the calculation (Hardman and Shen 1986). The magnetic fields at the receiver coils are first calculated in the formation coordinate system and then transformed to the instrument coordinate system according to the angles θ, β, and ζ (Zhong et al. 2008; Zhang 2011). Borehole and mandrel effects are ignored. Because the magnetic field formulas contain infinite integrals of Bessel functions, Gaussian quadrature and the continued fraction approach are used to evaluate them. Our previous studies have shown that this method implements forward modeling efficiently (Zhu et al. 2017, 2019). The compensated phase difference is then obtained; the calculation is given by Formulas (1)–(3).

where S is the coil area, V is the voltage on the receiving coil, \(\omega\) is the operating frequency of the instrument, \(\mu_{0}\) is the permeability of vacuum, Δφ1 and Δφ2 are the uncompensated phase differences, and Δφ is the compensated phase difference.
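As an illustration of how a compensated phase difference can be formed from receiver voltages, the following Python sketch assumes a convention that is common for symmetric compensated tools: each transmitter yields the phase difference between the two receiver voltages, and the compensated value is the mean of the two. The function names, the assumed coil order T1-R1-R2-T2, the averaging convention, and the sample voltages are all assumptions for illustration; they are not a reproduction of Formulas (1)–(3).

```python
import cmath
import math


def phase_difference_deg(v_near: complex, v_far: complex) -> float:
    """Phase of v_near relative to v_far in degrees, wrapped to (-180, 180]."""
    return math.degrees(cmath.phase(v_near / v_far))


def compensated_phase_difference(v_t1_r1, v_t1_r2, v_t2_r1, v_t2_r2) -> float:
    """Average the phase differences measured with T1 and T2 firing.

    Assumed geometry T1-R1-R2-T2: R1 is the near receiver for T1,
    and R2 is the near receiver for T2.
    """
    dphi1 = phase_difference_deg(v_t1_r1, v_t1_r2)  # uncompensated, T1 firing
    dphi2 = phase_difference_deg(v_t2_r2, v_t2_r1)  # uncompensated, T2 firing
    return 0.5 * (dphi1 + dphi2)


# Hypothetical receiver voltages given as (amplitude, phase); not tool data.
example = compensated_phase_difference(
    cmath.rect(1.0, math.radians(40.0)),  # T1 -> R1
    cmath.rect(0.5, math.radians(10.0)),  # T1 -> R2
    cmath.rect(0.5, math.radians(8.0)),   # T2 -> R1
    cmath.rect(1.0, math.radians(42.0)),  # T2 -> R2
)
print(round(example, 6))
```

Averaging the two single-transmitter phase differences is what cancels receiver-side phase offsets in this convention, which is one reason symmetric compensation tends to improve measurement accuracy.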

We conducted a sensitivity study in a layered formation, and the result is presented in Fig. 2. The simulation frequency is 400 kHz. In Fig. 2, the x-axis denotes depth and the y-axis denotes phase difference. The phase difference measurements are sensitive to both dip angle and resistivity. The curves have distinct peaks at the layer interfaces, and the larger the dip angle θ, the more pronounced the peaks at the boundaries. Therefore, the phase difference curves carry information on both the resistivity and the thickness of the formation layers.

Training and test data sets

The responses of the induction logging tool and the corresponding formation information for different three-layer formations are collected as the data set. The tool responses are used as the inputs of the neural network, and the formation information is used as the sample labels. The formation information comprises five parameters: the resistivity of each of the three layers and the thickness of the first and second layers. The thickness of the bottom layer is set to infinity. To ensure the randomness of the samples, the resistivity values are integers randomly generated from 1 to 50, and the thickness values are randomly drawn from 1.0, 1.5, 2.0, 2.5, and 3.0. Different combinations of resistivity and thickness form different formations. A total of 34,200 samples are generated. The data set is split into a training set, a validation set, and a testing set.
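The label generation described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: the function names and the seed are hypothetical, the 10% hold-out fraction follows the split reported later in the article, and only the labels are produced here because the network inputs would come from the forward model, which is not reproduced.

```python
import random

RESISTIVITY_RANGE = (1, 50)                    # integer resistivities, per the text
THICKNESS_CHOICES = (1.0, 1.5, 2.0, 2.5, 3.0)  # allowed thickness values, per the text
N_SAMPLES = 34_200


def sample_formation(rng: random.Random):
    """Return the labels (R1, R2, R3, h1, h2) of one three-layer formation."""
    resistivities = [rng.randint(*RESISTIVITY_RANGE) for _ in range(3)]
    thicknesses = [rng.choice(THICKNESS_CHOICES) for _ in range(2)]
    return (*resistivities, *thicknesses)


def build_labels(n_samples=N_SAMPLES, seed=0):
    """Draw n_samples random formations (the seed is an arbitrary choice)."""
    rng = random.Random(seed)
    return [sample_formation(rng) for _ in range(n_samples)]


def split_train_test(samples, test_fraction=0.1, seed=0):
    """Shuffle a copy of the samples and hold out a fraction for testing."""
    shuffled = samples[:]
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


labels = build_labels()
train_val, test = split_train_test(labels)
print(len(train_val), len(test))
```

Each label tuple would be paired with the simulated tool response of the same formation before training.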

Inversion with DNN-based scheme

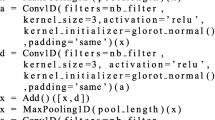

Inversion modeling based on DNN architecture

The interpretation of logging measurements to reconstruct formation properties can be viewed as an inverse problem. The schematic diagram of the DNN-based inversion scheme is shown in Fig. 3. The measurements of the logging tool in the formation are taken as the input of the neural network. The output of the neural network is the formation structure, namely the resistivities R1, R2, and R3 and the thicknesses h1 and h2 of the three-layer formation. The samples in the training set are used to train the neural network iteratively.

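In the DNN architecture, the input signals of each neuron come from the M neurons in the previous layer and are combined through the corresponding weights (w) and bias (b) (Jianchang and Anil 1996). After processing by the activation function (f), the output value (q) of the neuron is:

\[ q = f\left( \sum_{i = 1}^{M} w_{i} x_{i} + b \right) \tag{4} \]

where \(x_{i}\) is the input signal from the ith neuron in the previous layer.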

The sigmoid function is used as the activation function and is calculated as follows:

\[ f\left( z \right) = \frac{1}{1 + e^{ - z} } \tag{5} \]
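To make the neuron computation concrete, here is a small Python sketch of a sigmoid neuron and of a fully connected layer built from such neurons. The function names and the example numbers are illustrative assumptions; the two-branch form of the sigmoid is a standard way to avoid floating-point overflow for large negative arguments.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable sigmoid 1 / (1 + exp(-z))."""
    if z >= 0.0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)  # safe: z < 0, so exp(z) cannot overflow
    return ez / (1.0 + ez)


def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus the bias, passed through the sigmoid."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)


def layer_output(inputs, weight_rows, biases):
    """Outputs of one fully connected layer (one weight row per neuron)."""
    return [neuron_output(inputs, w, b) for w, b in zip(weight_rows, biases)]


# Hypothetical numbers: two inputs feeding a layer of three neurons.
outputs = layer_output(
    [1.0, 2.0],
    [[0.5, -0.25], [0.1, 0.1], [-1.0, 0.3]],
    [0.0, 0.2, 0.1],
)
print(outputs)
```

Stacking several such layers, each taking the previous layer's outputs as its inputs, gives the forward pass of a fully connected network.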

The backpropagation algorithm is used to reduce the error between the network outputs and the sample labels by iteratively updating the weights and biases (Yu et al. 2002). In each iteration, the input signal is propagated forward layer by layer until the output layer produces a result. The error between the output values and the actual values is then calculated and propagated back through the hidden layers to adjust the weights and biases between neurons. The updated weight (\(w_{\text{new}}\)) and bias (\(b_{\text{new}}\)) are calculated as follows:

\[ w_{\text{new}} = w_{\text{old}} + \Delta w \tag{6} \]

\[ b_{\text{new}} = b_{\text{old}} + \Delta b \tag{7} \]

where \(w_{\text{old}}\) and \(b_{\text{old}}\) are the weight and bias before the update, and \(\Delta w\) and \(\Delta b\) are the corresponding update steps.
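The following Python sketch shows one way the update steps can be computed for a single sigmoid neuron. It assumes plain gradient descent on a squared-error loss with a fixed learning rate; the article does not state which optimizer or learning rate was used, so `learning_rate`, the loss definition, and the sample values are assumptions for illustration.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def update_step(weights, bias, inputs, target, learning_rate=0.5):
    """One gradient-descent update for a sigmoid neuron.

    Loss: E = 0.5 * (q - target)^2 (an assumed choice).
    Returns the new weights, the new bias, and the loss before the update.
    """
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    q = sigmoid(z)
    delta = (q - target) * q * (1.0 - q)              # dE/dz by the chain rule
    dw = [-learning_rate * delta * x for x in inputs]  # update step for each weight
    db = -learning_rate * delta                        # update step for the bias
    new_weights = [w + step for w, step in zip(weights, dw)]
    return new_weights, bias + db, 0.5 * (q - target) ** 2


# Hypothetical training sample; repeat the update and record the loss.
weights, bias = [0.1, -0.2], 0.0
inputs, target = [1.0, 0.5], 0.9
losses = []
for _ in range(200):
    weights, bias, loss = update_step(weights, bias, inputs, target)
    losses.append(loss)
print(losses[0], losses[-1])
```

In a multi-layer network the same rule applies to every weight, with `delta` for hidden neurons obtained by propagating the output error backward through the layers.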

To select the optimum DNN architecture, we designed several architectures with different numbers of hidden layers and hidden-layer neurons. The mean squared error (MSE) is used to measure the error between the actual values and the values predicted by the DNN. The comparison results are shown in Fig. 4. The optimal architecture, which is used for the inversion, has 10 hidden layers and 100 hidden neurons. As described above, the measurements of the induction logging tool are the inputs of the neural network, and the formation structure (the resistivity and thickness of each layer) provides the sample labels.
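For orientation, the sketch below computes the layer sizes and the number of trainable parameters of a fully connected network with 10 hidden layers, together with the MSE used as the selection metric. Two points are assumptions rather than facts from the article: "100 hidden neurons" is read as 100 neurons per hidden layer, and the input dimension `N_INPUTS = 64` is a hypothetical placeholder because the number of measurements per sample is not stated.

```python
N_INPUTS = 64   # hypothetical number of measurements per sample
N_OUTPUTS = 5   # R1, R2, R3, h1, h2


def layer_sizes(n_inputs, n_hidden_layers, n_hidden_neurons, n_outputs):
    """Sizes of all layers, from the input layer to the output layer."""
    return [n_inputs] + [n_hidden_neurons] * n_hidden_layers + [n_outputs]


def count_parameters(sizes):
    """Weights plus biases of a fully connected network with these sizes."""
    return sum(fan_in * fan_out + fan_out
               for fan_in, fan_out in zip(sizes, sizes[1:]))


def mean_squared_error(predicted, actual):
    """MSE averaged over every output of every sample."""
    squared = [(p - a) ** 2
               for p_row, a_row in zip(predicted, actual)
               for p, a in zip(p_row, a_row)]
    return sum(squared) / len(squared)


sizes = layer_sizes(N_INPUTS, 10, 100, N_OUTPUTS)
n_params = count_parameters(sizes)
print(sizes, n_params)
```

Comparing candidate architectures then amounts to training each one and ranking them by validation MSE.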

Offline training

To prevent overfitting, we use the k-fold cross-validation method, whose schematic diagram is shown in Fig. 5. The data set is divided into k subsets; each subset serves as the validation set once, while the other k-1 subsets form the training set (Yadav and Shukla 2016). The k models are trained separately, and the error of each model is calculated on its validation set. The cross-validation error (Error) is the average of the errors of the k models (\(\text{Error}_{i}\)), as shown in Formula (8):

\[ \text{Error} = \frac{1}{k}\sum_{i = 1}^{k} \text{Error}_{i} \tag{8} \]

In this study, 90% of the samples (30,780) are used as the training and validation sets, and the remaining 10% (3,420) are used as the testing set to validate the accuracy of the results produced by the neural network.
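The k-fold procedure can be sketched as follows in Python. The value of k is not given in the article, so it is left as a parameter; `train_and_score` is a hypothetical callback that would train a model on the training indices and return its validation error, and the samples are assumed to have been shuffled beforehand.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for each of the k folds.

    Every sample appears in exactly one validation fold.
    """
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)  # spread the remainder evenly
        validation = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, validation
        start += size


def cross_validation_error(n_samples, k, train_and_score):
    """Average the validation errors of the k separately trained models."""
    errors = [train_and_score(train, validation)
              for train, validation in k_fold_indices(n_samples, k)]
    return sum(errors) / k


# Usage with a stand-in scorer that just reports the validation fold size.
folds = list(k_fold_indices(10, 3))
average = cross_validation_error(10, 3, lambda train, validation: len(validation))
print([len(v) for _, v in folds], average)
```

Because every sample is used for validation exactly once, the averaged error is less dependent on one particular train/validation split than a single hold-out estimate.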

Batch normalization

The DNN architecture contains multiple hidden layers, and the internal covariate shift phenomenon leads to slow convergence in training. Batch normalization adjusts and normalizes the input distribution of each layer (Ioffe and Szegedy 2015), so that the input values fall in the sensitive region of the nonlinear activation function and vanishing gradients are avoided. Specifically, the inputs of every neuron in each layer are normalized to zero mean and unit variance and then scaled and shifted, which makes the model more robust. Moreover, batch normalization makes the weight updates of different layers more consistent in scale, so a higher learning rate can be used to accelerate training.

First, for a layer with d-dimensional input \(x = \left( {x_{1} ,x_{2} , \ldots ,x_{k} , \ldots ,x_{d} } \right)\), each dimension is normalized independently. The input \(x_{k}\) is normalized to obtain \(\hat{x}_{k}\):

\[ \hat{x}_{k} = \frac{x_{k} - E\left[ x_{k} \right]}{\sqrt{\text{Var}\left[ x_{k} \right]}} \tag{9} \]

where \(E\left[ {x_{k} } \right]\) is the expectation and \({\text{Var}}\left[ {x_{k} } \right]\) is the variance computed over the training set. However, using only the above normalization formula may change what the layer can represent. Therefore, the parameters \(\gamma_{k}\) and \(\beta_{k}\) are introduced to scale and shift the normalized value; setting \(\gamma_{k} = \sqrt {{\text{Var}}[x_{k} ]}\) and \(\beta_{k} = E[x_{k} ]\) would recover the original feature distribution. The output (\(q_{k}\)) is as follows:

\[ q_{k} = \gamma_{k} \hat{x}_{k} + \beta_{k} \tag{10} \]

The parameters γ and β are updated during training. Consider a mini-batch of N training samples in the kth dimension, \(B = \left\{ {x_{k}^{1} , \ldots ,x_{k}^{n} , \ldots ,x_{k}^{N} } \right\}\). The normalized value of \(x_{k}^{n}\) is \(\hat{x}_{k}^{n}\), and its linear transformation is \(q_{k}^{n}\). The batch normalization algorithm proceeds as follows. The expectation (\(\mu_{B}\)) and variance (\(\sigma_{B}^{2}\)) of the mini-batch are calculated first, as shown in Formulas (11) and (12):

\[ \mu_{B} = \frac{1}{N}\sum_{n = 1}^{N} x_{k}^{n} \tag{11} \]

\[ \sigma_{B}^{2} = \frac{1}{N}\sum_{n = 1}^{N} \left( x_{k}^{n} - \mu_{B} \right)^{2} \tag{12} \]

Then, the inputs are normalized (\(\hat{x}_{k}^{n}\)) as shown in Formula (13), where \(\varepsilon\) is a constant added to the mini-batch variance for numerical stability:

\[ \hat{x}_{k}^{n} = \frac{x_{k}^{n} - \mu_{B}}{\sqrt{\sigma_{B}^{2} + \varepsilon}} \tag{13} \]

Finally, scale and shift are carried out through the parameters γ and β, and the linear transformation (\(q_{k}^{n}\)) is as follows:

\[ q_{k}^{n} = \gamma_{k} \hat{x}_{k}^{n} + \beta_{k} \tag{14} \]
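The three steps just described (mini-batch statistics, normalization, scale and shift) can be sketched for a single feature dimension in plain Python. The function name, the default `eps`, and the sample batch are illustrative assumptions.

```python
import math


def batch_norm_forward(batch, gamma, beta, eps=1e-5):
    """Training-mode batch normalization of one feature over a mini-batch.

    batch: the N values of the k-th input dimension in the mini-batch.
    Returns the outputs q, the normalized values x_hat, and the
    mini-batch mean and (biased) variance.
    """
    n = len(batch)
    mu = sum(batch) / n                               # mini-batch mean
    var = sum((x - mu) ** 2 for x in batch) / n       # mini-batch variance
    x_hat = [(x - mu) / math.sqrt(var + eps) for x in batch]
    q = [gamma * value + beta for value in x_hat]     # scale and shift
    return q, x_hat, mu, var


# Hypothetical mini-batch of four values.
q, x_hat, mu, var = batch_norm_forward([1.0, 2.0, 3.0, 4.0], gamma=2.0, beta=3.0)
print(mu, var, q)
```

After normalization the values have zero mean and close to unit variance, so the mean of the outputs equals `beta` and their spread is set by `gamma`.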

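During testing, the expectation (\(\mu_{B}\)) and variance (\(\sigma_{B}^{2}\)) are calculated over all training samples. In training, the gradient of the loss (\(\ell\)) must be propagated back through the normalization, and the gradients with respect to the parameters γ and β must be calculated. Applying the chain rule (Ioffe and Szegedy 2015) gives:

\[ \frac{\partial \ell }{\partial \hat{x}_{k}^{n} } = \frac{\partial \ell }{\partial q_{k}^{n} } \cdot \gamma_{k} \]

\[ \frac{\partial \ell }{\partial \sigma_{B}^{2} } = \sum_{n = 1}^{N} \frac{\partial \ell }{\partial \hat{x}_{k}^{n} } \cdot \left( x_{k}^{n} - \mu_{B} \right) \cdot \left( - \frac{1}{2} \right)\left( \sigma_{B}^{2} + \varepsilon \right)^{ - 3/2} \]

\[ \frac{\partial \ell }{\partial \mu_{B} } = \sum_{n = 1}^{N} \frac{\partial \ell }{\partial \hat{x}_{k}^{n} } \cdot \frac{ - 1}{\sqrt{\sigma_{B}^{2} + \varepsilon } } + \frac{\partial \ell }{\partial \sigma_{B}^{2} } \cdot \frac{1}{N}\sum_{n = 1}^{N} - 2\left( x_{k}^{n} - \mu_{B} \right) \]

\[ \frac{\partial \ell }{\partial x_{k}^{n} } = \frac{\partial \ell }{\partial \hat{x}_{k}^{n} } \cdot \frac{1}{\sqrt{\sigma_{B}^{2} + \varepsilon } } + \frac{\partial \ell }{\partial \sigma_{B}^{2} } \cdot \frac{2\left( x_{k}^{n} - \mu_{B} \right)}{N} + \frac{\partial \ell }{\partial \mu_{B} } \cdot \frac{1}{N} \]

\[ \frac{\partial \ell }{\partial \gamma_{k} } = \sum_{n = 1}^{N} \frac{\partial \ell }{\partial q_{k}^{n} } \cdot \hat{x}_{k}^{n} \]

\[ \frac{\partial \ell }{\partial \beta_{k} } = \sum_{n = 1}^{N} \frac{\partial \ell }{\partial q_{k}^{n} } \]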
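As a sanity check on the gradients with respect to γ and β, the sketch below compares the analytic expressions with central finite differences for one feature dimension, and also shows the test-time transform that uses statistics computed over the training samples. The squared-error loss, the sample batch, and all function names are assumptions chosen for illustration.

```python
import math


def bn_forward(batch, gamma, beta, eps=1e-5):
    """Training-mode batch normalization; returns outputs and normalized values."""
    n = len(batch)
    mu = sum(batch) / n
    var = sum((x - mu) ** 2 for x in batch) / n
    x_hat = [(x - mu) / math.sqrt(var + eps) for x in batch]
    return [gamma * v + beta for v in x_hat], x_hat


def loss(q, targets):
    """Assumed loss: half the sum of squared errors over the mini-batch."""
    return 0.5 * sum((qi - ti) ** 2 for qi, ti in zip(q, targets))


def param_grads(batch, targets, gamma, beta):
    """Analytic gradients of the loss with respect to gamma and beta."""
    q, x_hat = bn_forward(batch, gamma, beta)
    dl_dq = [qi - ti for qi, ti in zip(q, targets)]        # dl/dq for this loss
    dgamma = sum(g * v for g, v in zip(dl_dq, x_hat))      # sum of dl/dq * x_hat
    dbeta = sum(dl_dq)                                     # sum of dl/dq
    return dgamma, dbeta


def bn_inference(x, gamma, beta, pop_mean, pop_var, eps=1e-5):
    """Test-time transform using statistics of the whole training set."""
    return gamma * (x - pop_mean) / math.sqrt(pop_var + eps) + beta


# Hypothetical mini-batch and targets.
batch, targets = [0.5, 1.5, 2.0, 4.0], [0.0, 0.2, 0.4, 1.0]
gamma, beta, h = 1.3, -0.2, 1e-5
dgamma, dbeta = param_grads(batch, targets, gamma, beta)

# Central finite differences for comparison.
num_dgamma = (loss(bn_forward(batch, gamma + h, beta)[0], targets)
              - loss(bn_forward(batch, gamma - h, beta)[0], targets)) / (2 * h)
num_dbeta = (loss(bn_forward(batch, gamma, beta + h)[0], targets)
             - loss(bn_forward(batch, gamma, beta - h)[0], targets)) / (2 * h)
print(dgamma, num_dgamma, dbeta, num_dbeta)
```

Agreement between the analytic and numerical values is a quick way to catch sign or indexing mistakes before the gradients are used in training.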

Results and discussion

In this section, we carry out a series of experiments to demonstrate the feasibility and efficiency of the DNN-based inversion scheme. First, we test the convergence of the scheme using synthetic data and show the improvement brought by batch normalization. Then, we validate the accuracy of the DNN-based inversion. Next, the robustness of the scheme is validated by adding different levels of random noise to the synthetic measurements. Finally, the efficiency of the DNN-based inversion scheme is compared with that of the Levenberg–Marquardt inversion in the same scenario.

Convergence analysis

The error curves of the DNN model during training provide valuable information, such as whether the error is decreasing and whether overfitting has occurred. To verify the effectiveness of batch normalization, we compared training with and without it; the training error curves are shown in Fig. 6, where the abscissa represents the epochs and the ordinate represents the training error. The red and black lines are the training error curves with and without batch normalization, respectively. The red line drops more rapidly than the black line, stays below it throughout training, and fluctuates less. Thus, with batch normalization the training error is lower for the same number of epochs, which means that fewer epochs are needed to reach a given error and training is accelerated. Batch normalization therefore greatly improves the effectiveness of training.

In addition, the training error curves without noise, with 5% noise, and with 10% noise are recorded, and the results are shown in Fig. 7. The error curve without noise is shown by the black line. As can be seen from Fig. 7, the errors in the training stage converge: the error decreases rapidly at the beginning and then decreases gradually and approaches zero as the number of epochs increases. The results suggest that the training error decreases continuously, without signs of overfitting, during the training process.

Validation of the inversion scheme

During online prediction, we verify the precision of the DNN model on the testing set. One hundred samples are randomly selected from the testing set, and the predicted and actual values of each parameter are compared. Figure 8a–c plots the predicted against the true resistivity, and Fig. 8d, e plots the predicted against the true thickness. The red circles represent the samples, and the straight line is the line fitted to these samples. The abscissa of each sample point is its true value, and the ordinate is its predicted value.

As can be seen from Fig. 8, regardless of the layer thicknesses and resistivity values, the coefficient of determination \(R^{2}\) of each parameter is close to 1. The samples all lie near the straight lines, and the equations of the fitted lines are very close to \(y = x\).
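For readers who want to reproduce this kind of check, the sketch below computes a coefficient of determination and a least-squares fitted line for predicted versus true values. The article does not state which definition of \(R^{2}\) was used, so the common form 1 - SS_res/SS_tot is assumed here, and the sample values are hypothetical rather than taken from Fig. 8.

```python
def r_squared(true_values, predicted_values):
    """Coefficient of determination, 1 - SS_res / SS_tot (assumed definition)."""
    mean_true = sum(true_values) / len(true_values)
    ss_res = sum((t - p) ** 2 for t, p in zip(true_values, predicted_values))
    ss_tot = sum((t - mean_true) ** 2 for t in true_values)
    return 1.0 - ss_res / ss_tot


def fit_line(x, y):
    """Least-squares slope and intercept of y against x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical true and predicted resistivities, for illustration only.
true_r = [5.0, 12.0, 20.0, 33.0, 47.0]
pred_r = [5.4, 11.5, 20.6, 32.2, 47.3]
r2 = r_squared(true_r, pred_r)
slope, intercept = fit_line(true_r, pred_r)
print(r2, slope, intercept)
```

An \(R^{2}\) near 1 together with a slope near 1 and an intercept near 0 is what "close to \(y = x\)" means in practice.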

Next, six different formation models are selected from the testing set, and the true formation information is compared with the predicted formation information, as depicted in Fig. 9. In Fig. 9, the blue line is the phase difference curve of the formation, the black line represents the true formation values, and the red dotted line represents the predicted values. The abscissa indicates depth, the left ordinate indicates the phase difference, and the right ordinate indicates the resistivity.

As shown in Fig. 9, the black line and the red dotted line are very close for all samples, and the predictions follow the variation of the formation parameters. In all cases, the actual and estimated resistivities agree well. The results show that the predicted formation information is consistent with the true formation information and that the resistivity inversion realized by the DNN-based scheme is accurate and reliable.

Robustness test

Synthetic measurements with 5% and 10% noise are used to validate the robustness of the DNN-based inversion scheme. The corresponding training error curves are shown in Fig. 7 as the red line (5% noise) and the blue line (10% noise). The error with added noise is larger than that without noise, but the training error still decreases as the number of training epochs increases.
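One simple way to contaminate synthetic measurements with a given noise level is sketched below. The article does not specify the noise model, so this sketch assumes zero-mean Gaussian noise whose standard deviation is the stated fraction of each measurement's magnitude; the function name, the seed, and the sample values are hypothetical.

```python
import random


def add_relative_noise(measurements, level, rng):
    """Add zero-mean Gaussian noise with std = level * |value| to each value.

    level = 0.05 corresponds to "5% noise" under the assumed noise model.
    """
    return [m + rng.gauss(0.0, level * abs(m)) for m in measurements]


rng = random.Random(42)         # fixed seed so the example is repeatable
clean = [10.0] * 10_000         # hypothetical constant phase-difference values
noisy_5 = add_relative_noise(clean, 0.05, rng)
noisy_10 = add_relative_noise(clean, 0.10, rng)


def sample_std(values):
    """Population standard deviation of a list of numbers."""
    mean = sum(values) / len(values)
    return (sum((v - mean) ** 2 for v in values) / len(values)) ** 0.5


print(sample_std(noisy_5), sample_std(noisy_10))
```

With this model the scatter of the noisy values scales with the noise level, which makes the 5% and 10% cases directly comparable.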

The prediction results are shown in Figs. 10 and 11, respectively. In both figures, the blue line is the noisy phase difference curve of the formation, the true models are drawn as black dotted lines, and the reconstructed subsurface models are drawn as red dotted lines. The reconstructed formation models reflect the structure of the true ones well, although some discrepancies exist. In brief, the experiment demonstrates that the DNN-based inversion scheme remains robust in the presence of noise.

Efficiency comparison with Levenberg–Marquardt inversion

To illustrate the efficiency of the proposed scheme, the DNN-based approach and the Levenberg–Marquardt method are compared in the same scenario. We recorded the time each method required to reach the same inversion precision. The online prediction time of the DNN-based inversion and the inversion time of the Levenberg–Marquardt method are listed in Table 1. The actual values of the formation parameters are on the left of the table, the results of the Levenberg–Marquardt inversion (including the initial values of the algorithm and the number of iterations) are in the middle, and the DNN-based inversion results are in the right column.

For the same scenario, the Levenberg–Marquardt inversion takes more than 400 s, whereas the DNN-based inversion takes about 3 s, a speedup of more than 100 times. The DNN-based inversion scheme therefore spends much less time reconstructing the subsurface structure than the Levenberg–Marquardt inversion. Based on these analyses, the DNN-based inversion scheme offers significantly better performance and efficiency.

Conclusions

A DNN-based inversion scheme for solving resistivity measurement inversion problems is explored in this article. First, the sensitivity of the electromagnetic measurements in layered formations is analyzed. Then, a DNN-based inversion scheme that reconstructs both thickness and resistivity is implemented; it combines a DNN architecture with batch normalization and k-fold cross-validation. Finally, several groups of experiments demonstrate the feasibility, efficiency, and robustness of the proposed inversion scheme. Qualitative analysis and quantitative comparison on numerical examples show that the DNN-based inversion is faster and achieves higher resolution than conventional nonlinear iterative inversion. It is also more straightforward and more stable, and it avoids the local minima and the sensitivity to initial values that affect iterative methods. The DNN-based inversion scheme is thus an efficient approach with great capacity to overcome the inherent disadvantages of traditional inversion methods. In future work, we plan to extend our study to account for more formation parameters, such as dip, azimuth, and geological structure.

Data availability

The data used in this work are available from the corresponding author (gao.muzhi@upc.edu.cn).

References

Alyaev S, Shahriari M, Pardo D, Omella AJ, Larsen DS, Jahani N et al (2021a) Modeling extra-deep electromagnetic logs using a deep neural network. Geophysics 86(3):E269–E281

Colombo D, Turkoglu E, Li W, Sandoval-Curiel E, Rovetta D (2021b) Physics-driven deep-learning inversion with application to transient electromagnetics. Geophysics 86(3):E209–E224

Hao P, Sun X, Nie Z, Yue X, Zhao Y (2022) A robust inversion of induction logging responses in anisotropic formation based on supervised descent method. IEEE Geosci Remote Sens Lett 19:1–5

Hardman RH, Shen LC (1986) Theory of induction sonde in dipping beds. Geophysics 51(3):800

Heriyanto M, Srigutomo W (2017) 1-D DC resistivity inversion using singular value decomposition and Levenberg–Marquardt's inversion schemes. J Phys Conf Ser 877(1):012066

Hu YY, Guo R, Jin YC, Wu XQ, Li MK, Abubakar A et al (2020) A Supervised descent learning technique for solving directional electromagnetic logging-while-drilling inverse problems. IEEE Trans Geosci Remote Sens 58(11):8013–8025

Ioffe S, Szegedy C (2015) Batch normalization: accelerating deep network training by reducing internal covariate shift. In: International conference on machine learning, pp 448–456. arXiv:1502.03167

Jianchang M, Anil KJ (1996) Artificial neural networks: a tutorial. Computer 29:31–44

Li MY, Yue XG, Hong DC, Han W (2015) Simulation and analysis of the symmetrical measurements of a triaxial induction tool. IEEE Geosci Remote Sens Lett 12(1):122–124

Li H, He ZH, Zhang YT, Feng J, Jian ZY, Jiang YB (2022) A study of health management of LWD tool based on data-driven and model-driven. Acta Geophys 70(2):669–676

Liu DJ, Li H, Zhang YY, Zhu GX, Ai QH (2014) A study on directional resistivity logging-while-drilling based on self-adaptive hp-FEM. Acta Geophys 62(6):1328–1351

Liu B, Guo Q, Li S, Liu B, Jiang P (2020) Deep learning inversion of electrical resistivity data. IEEE Trans Geosci Remote Sens 58(8):5715–5728

Noh K, Pardo D, Torres-Verdin C (2022) 2.5-D Deep learning inversion of LWD and deep-sensing EM measurements across formations with dipping faults. IEEE Geosci Remote Sens Lett 19:1–5

Pardo D, Torres-Verdín C (2015) Fast 1D inversion of logging-while-drilling resistivity measurements for improved estimation of formation resistivity in high-angle and horizontal wells. Geophysics 80(2):E111–E124

Shen QY, Wu XQ, Chen JF, Han Z, Huang YQ (2018) Solving geosteering inverse problems by stochastic Hybrid Monte Carlo method. J Petrol Sci Eng 161:9–16

Raj AS, Srinivas Y, Oliver DH, Muthuraj D (2014) A novel and generalized approach in the inversion of geoelectrical resistivity data using artificial neural networks (ANN). J Earth Syst Sci 123(2):395–411

Shahriari M, Pardo D, Picon A, Galdran A, Del Ser J, Torres-Verdin C (2020) A deep learning approach to the inversion of borehole resistivity measurements. Comput Geosci 24(3):971–994

Shahriari M, Pardo D, Rivera JA, Torres-Verdin C, Picon A, Del Ser J et al (2021) Error control and loss functions for the deep learning inversion of borehole resistivity measurements. Int J Numer Meth Eng 122(6):1629–1657

Shahriari M, Hazra A, Pardo D (2022) A deep learning approach to design a borehole instrument for geosteering. Geophysics 87(2):D83–D90

Singh UK, Tiwari RK, Singh SB (2013) Neural network modeling and prediction of resistivity structures using VES Schlumberger data over a geothermal area. Comput Geosci 52:246–257

Tembely M, AlSumaiti AM, Alameri W (2020) A deep learning perspective on predicting permeability in porous media from network modeling to direct simulation. Comput Geosci 24(4):1541–1556

Thiel M, Omeragic D (2017) High-fidelity real-time imaging with electromagnetic logging-while-drilling measurements. IEEE Trans Comput Imaging 3(2):369–378

Veettil DRA, Clark K (2020) Bayesian geosteering using sequential Monte Carlo methods. Petrophysics 61(1):99–111

Wang GL, Barber T, Wu P, Allen D, Abubakar A (2017) Fast inversion of triaxial induction data in dipping crossbedded formations. Geophysics 82(2):D31–D45

Wang L, Li H, Fan Y (2019) Bayesian inversion of logging-while-drilling extra-deep directional resistivity measurements using parallel tempering Markov chain Monte Carlo sampling. IEEE Trans Geosci Remote Sens 57(10):8026–8036

Xing G, Wang H, Ding Z (2008) A new combined measurement method of the electromagnetic propagation resistivity logging. IEEE Geosci Remote Sens Lett 5(3):430–432

Xu Y, Sun K, Xie H, Zhong X, Hong X (2018) Borehole resistivity measurement modeling using machine-learning techniques. Petrophysics 59(6):778–785

Yadav S, Shukla S (2016) Analysis of k-fold cross-validation over hold-out validation on colossal datasets for quality classification. In: IEEE 6th International advance computing conference (IACC), pp 78–83

Yang S, Hong D, Huang WF, Liu QH (2017) A stable analytic model for tilted-coil antennas in a concentrically cylindrical multilayered anisotropic medium. IEEE Geosci Remote Sens Lett 14(4):480–483

Yu X, Efe MO, Kaynak O (2002) A general backpropagation algorithm for feedforward neural networks learning. IEEE Trans Neural Netw 13(1):251–254

Zhang L (2000) Application of neural networks to interpretation of well logs. The University of Arizona, Tucson

Zhang Z (2011) 1-D modeling and inversion of triaxial induction logging tool in layered anisotropic medium. University of Houston, Houston

Zhong L, Jing L, Bhardwaj A, Shen LC, Liu RC (2008) Computation of triaxial induction logging tools in layered anisotropic dipping formations. IEEE Trans Geosci Remote Sens 46(4):1148–1163

Zhu G, Chen X, Kong F, Kang L (2017) A continued fraction method for modeling and inversion of triaxial induction logging tool. In: 2017 IEEE microwaves, radar and remote sensing symposium (MRRS), pp 210–204

Zhu GY, Gao MZ, Kong FM, Li K (2019) Application of Logging while drilling tool in formation boundary detection and geo-steering. Sensors 19(12):2754

Acknowledgments

This work was supported in part by the National Natural Science Foundation of China, Grant/Award Number: 42174141, in part by the Shandong Natural Science Foundation of China under Grant Number: ZR2021QF132, ZR2022QD082, and in part by the Fundamental Research Funds for the Central Universities of China under Grant Number: 22CX06036A.

Author information

Contributions

GZ contributed to conceptualization, methodology, writing. MG contributed to conceptualization, methodology, review and editing. BW contributed to validation, supervision.

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Edited by Dr. Qamar Yasin (ASSOCIATE EDITOR) / Prof. Gabriela Fernández Viejo (CO-EDITOR-IN-CHIEF).

About this article

Cite this article

Zhu, G., Gao, M. & Wang, B. A robust inversion of logging-while-drilling responses based on deep neural network. Acta Geophys. 72, 129–139 (2024). https://doi.org/10.1007/s11600-023-01080-x
