Abstract

We show how to separate a doubly nonnegative matrix, which is not completely positive and has a triangle-free graph, from the completely positive cone. This method can be used to compute cutting planes for semidefinite relaxations of combinatorial problems. We illustrate our approach by numerical tests on the stable set problem.


1 Introduction

Burer showed in [4] that every optimization problem with quadratic objective function, linear constraints, and binary variables can equivalently be written as a linear problem over the completely positive cone. This includes many NP-hard combinatorial problems, for example the maximum clique problem. The complexity of these problems is then shifted entirely into the cone constraint. In fact, even checking whether a given matrix is completely positive is an NP-hard problem [8]. Replacing the completely positive cone by a tractable cone like the cone of doubly nonnegative matrices results in a relaxation of the problem providing a bound on its optimal value. For matrices of order \(n\le 4\), the doubly nonnegative cone equals the completely positive cone (cf. [1, Theorem 2.4]) which means that the relaxation is exact. For order \(n\ge 5\), however, there are doubly nonnegative matrices that are not completely positive. So in general, an optimal solution of the doubly nonnegative relaxation is not completely positive. Therefore, it is desirable to add a cut, i.e., a linear constraint that separates the obtained doubly nonnegative solution from the completely positive cone, in order to get a tighter relaxation yielding a better bound.

The basic idea to use copositive cuts, i.e., cuts that are defined by copositive matrices, was first introduced by Bomze et al. [3]. Since then several approaches to compute such cuts have been presented [2, 5, 6, 10, 19]. In this paper, we concentrate on doubly nonnegative matrices that have a triangle-free graph and show how to separate such a matrix that is not completely positive from the completely positive cone.

After giving characterizations of copositivity and complete positivity of a matrix in Sect. 2, we show in Sect. 3 how to construct cuts to separate triangle-free doubly nonnegative matrices from the completely positive cone. In Sect. 4, we illustrate the presented method by applying it to some stable set problems. The results are discussed and compared to the results of previous approaches.

We are going to use the following notation: The vector of all ones is denoted by e, the matrix of all ones by E, and the identity matrix by I. For \(A\in \mathbb {R}^{n\times n}\), let \({{\mathrm{diag}}}(A)\) denote the vector of diagonal elements of A. The Hadamard product \(A\circ B\) of two matrices \(A,B\in \mathbb {R}^{m\times n}\) is the matrix with entries \((A\circ B)_{ij}=a_{ij}b_{ij}\). Let \(\mathcal {S}_n\) denote the space of symmetric \(n\times n\) matrices. The usual (trace) inner product of two matrices \(X,Y\in \mathcal {S}_n\) is \(\langle X,Y \rangle = {{\mathrm{trace}}}(XY)=\sum _{i,j=1}^nx_{ij}y_{ij}\). Observe that for \(A, B, C \in \mathcal {S}_n\), we have by the definitions of Hadamard product and inner product that
$$\begin{aligned} \langle A\circ B, C \rangle = \sum _{i,j=1}^n a_{ij}b_{ij}c_{ij} = \langle A, B\circ C \rangle . \end{aligned}$$ (1)

If K is an \(n\times n\) matrix, and \(x\in \mathbb {R}^n\), then
$$\begin{aligned} x^TKx = \langle K, xx^T \rangle . \end{aligned}$$ (2)

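Let \(u\in \mathbb {R}^n\). Combining (1) and (2), we have for every \(x\in \mathbb {R}^n\)
$$\begin{aligned} x^T(K\circ uu^T)x = \langle K\circ uu^T, xx^T \rangle = \langle K, uu^T\circ xx^T \rangle = (u\circ x)^TK(u\circ x). \end{aligned}$$ (3)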

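We denote the cone of symmetric entrywise nonnegative \(n\times n\) matrices by \(\mathcal {N}_n\), and the cone of symmetric positive semidefinite \(n\times n\) matrices by \(\mathcal {S}^+_n\). The cone \(\mathcal {D}_n := \mathcal {S}^+_n\cap \mathcal {N}_n\) is called the doubly nonnegative cone. Its dual cone is \(\mathcal {D}_n^* = \mathcal {S}^+_n + \mathcal {N}_n\). The cone \(\mathcal {COP}_{n}\) of copositive \(n\times n\) matrices and its dual cone \(\mathcal {CP}_{n}\) of completely positive \(n\times n\) matrices are defined as
$$\begin{aligned} \mathcal {COP}_{n} := \{A\in \mathcal {S}_n \ \vert \ x^TAx\ge 0 \text { for all } x\in \mathbb {R}^n_+\} \end{aligned}$$
and
$$\begin{aligned} \mathcal {CP}_{n} := \Big \{\sum _{i=1}^p b_ib_i^T \ \Big \vert \ p\in \mathbb {N},\ b_i\in \mathbb {R}^n_+ \text { for } i=1,\ldots ,p\Big \}. \end{aligned}$$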
A copositive matrix is said to be extreme if it generates an extreme ray of the copositive cone. The extreme matrices in \(\mathcal {COP}_{n}\) with \(n> 5\) are not known in general. The extreme \(5\times 5\) copositive matrices, however, are fully characterized [14]. These are all the matrices that may be obtained by simultaneous permutation of rows and columns and diagonal scaling by a positive diagonal matrix from the following matrices: the rank 1 positive semidefinite matrices \(xx^T\) where x has both positive and negative entries, the elementary symmetric matrices, the Horn matrix
$$\begin{aligned} H=\begin{pmatrix} 1 & -1 & 1 & 1 & -1\\ -1 & 1 & -1 & 1 & 1\\ 1 & -1 & 1 & -1 & 1\\ 1 & 1 & -1 & 1 & -1\\ -1 & 1 & 1 & -1 & 1 \end{pmatrix}, \end{aligned}$$

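and the matrices described by Hildebrand in [14], which are of the form
$$\begin{aligned} T(\theta )=\begin{pmatrix} 1 & -\cos \theta _4 & \cos (\theta _4+\theta _5) & \cos (\theta _2+\theta _3) & -\cos \theta _3\\ -\cos \theta _4 & 1 & -\cos \theta _5 & \cos (\theta _5+\theta _1) & \cos (\theta _3+\theta _4)\\ \cos (\theta _4+\theta _5) & -\cos \theta _5 & 1 & -\cos \theta _1 & \cos (\theta _1+\theta _2)\\ \cos (\theta _2+\theta _3) & \cos (\theta _5+\theta _1) & -\cos \theta _1 & 1 & -\cos \theta _2\\ -\cos \theta _3 & \cos (\theta _3+\theta _4) & \cos (\theta _1+\theta _2) & -\cos \theta _2 & 1 \end{pmatrix} \end{aligned}$$ (4)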

with \(\theta \in \left\{ \theta \in \mathbb {R}_{++}^{5} \ \vert \ e^T\theta < \pi \right\} \). We will refer to these matrices as Hildebrand matrices. For general n, the copositive and extreme copositive \(\{-1,1\}\)-matrices were fully characterized by Haynsworth and Hoffman [13], and the copositive and extreme copositive \(\{-1,0,1\}\)-matrices were fully characterized by Hoffman and Pereira [15].
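Both 5×5 families above are easy to reproduce numerically. The following Python/NumPy sketch builds the Horn matrix and the Hildebrand matrix (4); the names `HORN` and `hildebrand` and the 0-based vertex labels are our own choices, and θ is assumed to be a length-5 array with positive entries summing to less than π. Evaluating (4) at θ = 0, a boundary point outside the admissible parameter set, reproduces the Horn matrix, which gives a convenient consistency check.

```python
import numpy as np

# Horn matrix, with the vertices 1..5 of the text relabelled 0..4.
HORN = np.array([
    [ 1, -1,  1,  1, -1],
    [-1,  1, -1,  1,  1],
    [ 1, -1,  1, -1,  1],
    [ 1,  1, -1,  1, -1],
    [-1,  1,  1, -1,  1],
], dtype=float)


def hildebrand(theta):
    """Hildebrand matrix T(theta) of the form (4).

    theta: five angles, assumed positive with sum < pi.
    """
    t1, t2, t3, t4, t5 = theta
    c = np.cos
    return np.array([
        [1.0, -c(t4), c(t4 + t5), c(t2 + t3), -c(t3)],
        [-c(t4), 1.0, -c(t5), c(t5 + t1), c(t3 + t4)],
        [c(t4 + t5), -c(t5), 1.0, -c(t1), c(t1 + t2)],
        [c(t2 + t3), c(t5 + t1), -c(t1), 1.0, -c(t2)],
        [-c(t3), c(t3 + t4), c(t1 + t2), -c(t2), 1.0],
    ])


if __name__ == "__main__":
    print(np.allclose(hildebrand(np.zeros(5)), HORN))   # True
    print(np.linalg.eigvalsh(HORN).min() < 0)           # True: H is not PSD
```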

For every \(X\in \mathcal {S}_n\), the graph of X, denoted by G(X), is the graph with vertices \(\{1, \ldots , n\}\), where \(\{i,j\}\) is an edge if and only if \(i\ne j\) and \(X_{ij}\ne 0\).

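The comparison matrix M(A) of a square matrix A is defined by
$$\begin{aligned} M(A)_{ij} = {\left\{ \begin{array}{ll} |a_{ii}| & \text {if } i=j,\\ -|a_{ij}| & \text {if } i\ne j. \end{array}\right. } \end{aligned}$$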

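Finally, for \(x\in \mathbb {R}^n\) we denote by \(x^\dashv \) the vector such that
$$\begin{aligned} (x^\dashv )_i = {\left\{ \begin{array}{ll} 1/x_i & \text {if } x_i\ne 0,\\ 0 & \text {if } x_i=0, \end{array}\right. } \end{aligned}$$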

and if \(x\ge 0\), then \(\sqrt{x}\) denotes the vector with \((\sqrt{x})_i=\sqrt{ x_i }\).
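The notation above translates directly into code. The following NumPy sketch implements the comparison matrix M(A) and the vector \(x^\dashv \), and checks identity (3) numerically on random data; the function names are hypothetical and only serve as illustration.

```python
import numpy as np


def comparison_matrix(a):
    """M(A): |a_ii| on the diagonal and -|a_ij| off the diagonal."""
    a = np.asarray(a, dtype=float)
    m = -np.abs(a)
    np.fill_diagonal(m, np.abs(np.diag(a)))
    return m


def pseudo_reciprocal(x):
    """x^dashv: 1/x_i where x_i != 0 and 0 where x_i == 0."""
    x = np.asarray(x, dtype=float)
    out = np.zeros_like(x)
    nonzero = x != 0
    out[nonzero] = 1.0 / x[nonzero]
    return out


def identity_3_holds(k, u, x):
    """Compare both sides of (3): x^T (K o uu^T) x = (u o x)^T K (u o x)."""
    lhs = x @ (k * np.outer(u, u)) @ x
    rhs = (u * x) @ k @ (u * x)
    return bool(np.isclose(lhs, rhs))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    k = rng.standard_normal((4, 4))
    k = k + k.T                      # symmetric test matrix
    print(identity_3_holds(k, rng.random(4), rng.random(4)))  # True
```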

2 Useful observations

In this section we characterize completely positive matrices in terms of Hadamard products with copositive matrices. We start with some basic facts on copositivity:

Proposition 2.1

Let \(K\in \mathcal {S}_n\). Then the following are equivalent:

(a) \(K \in \mathcal {COP}_{n}\),

(b) \(K\circ uu^T \in \mathcal {COP}_{n}\) for every nonnegative \(u\in \mathbb {R}^n\),

(c) \(K\circ uu^T \in \mathcal {COP}_{n}\) for every positive \(u\in \mathbb {R}^n\),

(d) There exists a positive \(u\in \mathbb {R}^n\) such that \(K\circ uu^T \in \mathcal {COP}_{n}\).

Proof

Clearly, we only have to prove (a) \(\Rightarrow \) (b) and (d) \(\Rightarrow \) (a).

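(a) \(\Rightarrow \) (b): If \(K \in \mathcal {COP}_{n}\) and u is a nonnegative vector, then (3) implies that
$$\begin{aligned} x^T(K\circ uu^T)x = (u\circ x)^TK(u\circ x) \ge 0 \end{aligned}$$
for every nonnegative \(x\in \mathbb {R}^n\), since \(u\circ x\) is nonnegative. That is, \(K\circ uu^T \in \mathcal {COP}_{n}\).

(d) \(\Rightarrow \) (a): If \(K\circ uu^T \in \mathcal {COP}_{n}\) for a positive \(u\in \mathbb {R}^n\), then
$$\begin{aligned} K = (K\circ uu^T)\circ u^{\dashv }(u^{\dashv })^T \in \mathcal {COP}_{n}, \end{aligned}$$
since \(u^{\dashv }\) is positive and (a) implies (b). \(\square \)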

The next proposition gives similar statements for extreme copositive matrices.

Proposition 2.2

Let \(K\in \mathcal {S}_n\). Then the following are equivalent:

(a) K is an extreme copositive matrix,

(b) \(K\circ uu^T\) is an extreme copositive matrix for every positive \(u\in \mathbb {R}^n\),

(c) There exists a positive \(u\in \mathbb {R}^n\) such that \(K\circ uu^T\) is an extreme copositive matrix.

Proof

If \(u>0\) and D is the diagonal matrix with diagonal u, then \(K\circ uu^T=DKD\). So the equivalence of (a), (b) and (c) is the well-known fact that extremality in \(\mathcal {COP}_{n}\) is preserved under scaling by a positive diagonal matrix. \(\square \)

Note that if \(u\ge 0\) is not strictly positive and K is an extreme copositive matrix, then \(K\circ uu^T\) need not be extreme. To see this, consider the Horn matrix H, which is an extreme matrix in \(\mathcal {COP}_{5}\) and has \(2\times 2\) principal submatrices of all ones, e.g., the principal submatrix on rows and columns 1 and 3; such a submatrix is not extreme in \(\mathcal {COP}_{2}\). So if entries 1 and 3 of u are equal to one and all other entries of u are zero, then \(H\circ uu^T\) is not extreme.

We now characterize complete positivity of a matrix X in terms of copositivity of the Hadamard product of X with copositive matrices.

Proposition 2.3

Let \(X\in \mathcal {S}_n\). Then the following are equivalent:

(a) \(X \in \mathcal {CP}_{n}\),

(b) \(K\circ X \in \mathcal {COP}_{n}\) for every \(K \in \mathcal {COP}_{n}\),

(c) \(K\circ X \in \mathcal {COP}_{n}\) for every extreme copositive matrix \(K \in \mathcal {COP}_{n}\),

(d) \(K\circ X \in \mathcal {COP}_{n}\) for every extreme copositive matrix \(K \in \mathcal {COP}_{n}\) whose diagonal entries are either zero or one.

Proof

It suffices to prove (a) \(\Rightarrow \) (b) and (d) \(\Rightarrow \) (a).

(a) \(\Rightarrow \) (b): If \(X \in \mathcal {CP}_{n}\), then \(X=\sum _{i=1}^p u_iu_i^T\), where \(u_i\in \mathbb {R}^n\) is nonnegative for every i. Then for every \(K \in \mathcal {COP}_{n}\), the matrix \(K\circ X=\sum _{i=1}^p K\circ u_iu_i^T\) is copositive as a sum of copositive matrices by Proposition 2.1.

(d) \(\Rightarrow \) (a): Let K be an arbitrary extreme copositive matrix. Define \(d={{\mathrm{diag}}}(K)\) and \(u=\sqrt{d^\dashv }\). Then \(K\circ uu^T \in \mathcal {COP}_{n}\) is also extreme, and all its diagonal entries are either zero or one. Since by assumption \((K\circ uu^T)\circ X \in \mathcal {COP}_{n}\) we get from Proposition 2.1 that \(K\circ X=(K\circ uu^T)\circ X\circ \sqrt{d} \sqrt{d}^T \in \mathcal {COP}_{n}\). Consequently, \(0 \le e^T(K\circ X)e = \langle X, K\rangle \). Since K was an arbitrary extreme copositive matrix, this means that X is in the cone dual to \(\mathcal {COP}_{n}\), that is, \(X \in \mathcal {CP}_{n}\). \(\square \)
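The diagonal normalization used in this proof can be sketched in a few lines: with \(d={{\mathrm{diag}}}(K)\) and \(u=\sqrt{d^\dashv }\), every positive diagonal entry of \(K\circ uu^T\) becomes one and every zero diagonal entry stays zero. The helper name `normalize_diagonal` is hypothetical; the check below uses a diagonally scaled Horn matrix, whose normalization must give back the Horn matrix itself.

```python
import numpy as np


def normalize_diagonal(k):
    """Return (K o uu^T, u) with u = sqrt(d^dashv) and d = diag(K).

    For copositive K we have d >= 0; positive diagonal entries are
    rescaled to 1 and zero diagonal entries stay 0 (u_i = 0 there).
    """
    k = np.asarray(k, dtype=float)
    d = np.diag(k)
    u = np.zeros_like(d)
    pos = d > 0
    u[pos] = 1.0 / np.sqrt(d[pos])
    return k * np.outer(u, u), u
```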

Corollary 2.4

Let \(X \in \mathcal {D}_n\). Then the following are equivalent:

(a) \(X \in \mathcal {CP}_{n}\),

(b) \(K\circ X \in \mathcal {COP}_{n}\) for every \(K \in \mathcal {COP}_{n} {\setminus } (\mathcal {S}^+_n + \mathcal {N}_n)\),

(c) \(K\circ X \in \mathcal {COP}_{n}\) for every extreme \(K \in \mathcal {COP}_{n} {\setminus } (\mathcal {S}^+_n + \mathcal {N}_n)\),

(d) \(K\circ X \in \mathcal {COP}_{n}\) for every extreme \(K \in \mathcal {COP}_{n} {\setminus } (\mathcal {S}^+_n + \mathcal {N}_n)\) whose diagonal entries are either zero or one.

Proof

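If \(K \in \mathcal {S}^+_n + \mathcal {N}_n\), then \(K\circ X \in \mathcal {S}^+_n + \mathcal {N}_n \subseteq \mathcal {COP}_{n}\), since the Hadamard product of two positive semidefinite matrices is positive semidefinite (Schur product theorem) and X is entrywise nonnegative. Hence for such K the condition in Proposition 2.3 holds automatically, and it suffices to consider matrices \(K \in \mathcal {COP}_{n} {\setminus } (\mathcal {S}^+_n + \mathcal {N}_n)\). \(\square \)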

3 Cutting planes

In this section, we construct cutting planes to separate doubly nonnegative matrices which are not completely positive from the completely positive cone. In other words, given \(X \in \mathcal {D}_n {\setminus } \mathcal {CP}_{n}\), we aim to find a \(K \in \mathcal {COP}_{n}\) such that \(\langle K,X\rangle <0\).

One might argue that, in view of the NP-hardness of checking complete positivity, it is difficult to verify that the given X is not completely positive. This is true in general, but certain structures in the graph G(X) can provide precisely this information. More specifically, we will see below that if X has a principal submatrix with a triangle-free graph such that, after diagonal scaling to unit diagonal, the off-diagonal part of this submatrix has spectral radius \(\rho > 1\), then \(X\notin \mathcal {CP}_{n}\), and from this structure we will construct a copositive matrix which cuts off X from \(\mathcal {CP}_{n}\).

As mentioned in the introduction, the basic idea to use copositive cuts was first introduced by Bomze et al. [3] and further applied to the maximum clique problem by Bomze et al. [2]. We will consider the cuts from [2] in more detail when comparing them in Sect. 4.2 to the cuts that we introduce in Sect. 3.3.

Burer et al. [5] characterize \(5\times 5\) extreme doubly nonnegative matrices which are not completely positive and show how to separate such a matrix from the completely positive cone. Dong and Anstreicher [10] generalize this procedure to doubly nonnegative matrices that are not completely positive and have at least one off-diagonal zero, and to larger matrices having block structure. Extending the results of [5, 10], Burer and Dong [6] establish the first full separation algorithm for \(5\times 5\) completely positive matrices. In [19], Sponsel and Dür present the first algorithm to separate an arbitrary matrix \(X\notin \mathcal {CP}_{n}\) from the completely positive cone. Their approach is based on (approximate) projections onto the copositive cone.

3.1 Generating copositive cuts

The basic idea of our approach is stated in the following theorem.

Theorem 3.1

Let \(X \in \mathcal {D}_n {\setminus } \mathcal {CP}_{n}\), and let \(K \in \mathcal {COP}_{n}\) be such that \(K\circ X \notin \mathcal {COP}_{n}\). Then for every nonnegative \(u\in \mathbb {R}^n\) such that \(u^T(K\circ X)u<0\), the copositive matrix \(K\circ uu^T\) is a cut separating X from \(\mathcal {CP}_{n}\).

Proof

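If \(K\circ X \notin \mathcal {COP}_{n}\), as assumed in the theorem, then by Kaplan’s copositivity characterization [16, Theorem 2], \(K\circ X\) has a principal submatrix having a positive eigenvector corresponding to a negative eigenvalue. Padding this eigenvector with zeros gives a nonnegative \(u\in \mathbb {R}^n\) with \(u^T(K\circ X)u<0\), so such vectors u always exist. For any such u, the matrix \(K\circ uu^T\) is copositive by Proposition 2.1, and by (1) and (2), \(\langle K\circ uu^T, X\rangle = \langle K\circ X, uu^T\rangle = u^T(K\circ X)u < 0\). \(\square \)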
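For small matrices, the vector u of Theorem 3.1 can be found by a direct (exponential-time) search over principal submatrices, following Kaplan's criterion. The following NumPy sketch is one possible implementation, not the procedure used in the paper; the name `kaplan_certificate` and the tolerance are assumptions. Any vector it returns is a genuine witness, but with repeated eigenvalues it may miss a positive eigenvector inside a multi-dimensional eigenspace.

```python
import itertools

import numpy as np


def kaplan_certificate(b, tol=1e-12):
    """Look for a nonnegative u with u^T B u < 0 (a witness that B is not copositive).

    Brute-force use of Kaplan's criterion: search the principal submatrices
    of B for an eigenvector v > 0 belonging to an eigenvalue < 0, and return
    v padded with zeros to length n. Returns None if no witness is found.
    Caveats: the search is exponential in n (small matrices only), and for
    repeated eigenvalues eigh returns one particular eigenbasis, so a positive
    eigenvector inside a multi-dimensional eigenspace can be missed.
    """
    b = np.asarray(b, dtype=float)
    n = b.shape[0]
    for size in range(1, n + 1):
        for idx in itertools.combinations(range(n), size):
            vals, vecs = np.linalg.eigh(b[np.ix_(idx, idx)])
            for lam, v in zip(vals, vecs.T):
                if lam >= -tol:
                    continue
                if np.all(v < 0):
                    v = -v               # eigenvectors are only defined up to sign
                if np.all(v > tol):
                    u = np.zeros(n)
                    u[list(idx)] = v
                    return u
    return None
```

Given such a u for \(B=K\circ X\), the cut of Theorem 3.1 is `K * np.outer(u, u)`, and its inner product with X equals \(u^TBu<0\).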

The following property is obvious but useful, since it allows us to construct cutting planes based on submatrices instead of the entire matrix.

Proposition 3.2

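Assume that \(K \in \mathcal {COP}_{n}\) is a copositive matrix that separates a matrix X from \(\mathcal {CP}_{n}\). If \(A \in \mathbb {R}^{n\times p}\) and \(B \in \mathcal {S}_{p}\) are arbitrary matrices, then the copositive matrix
$$\begin{aligned} \begin{pmatrix} K & 0\\ 0 & 0 \end{pmatrix} \in \mathcal {COP}_{n+p} \quad \text {separates} \quad \begin{pmatrix} X & A\\ A^T & B \end{pmatrix} \text { from } \mathcal {CP}_{n+p}. \end{aligned}$$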

Note that for a cut it is desirable to have an exposed extreme copositive matrix K rather than just any copositive K, since an exposed matrix K will provide a supporting hyperplane which generates a maximal face of \(\mathcal {CP}_{n}\) (see [9, Theorem 2.20]) and therefore a better (deeper) cut. Since finding an exposed copositive matrix is even harder than finding an extreme copositive matrix, and since the set of exposed matrices is dense in the set of extreme matrices, we will aim for an extreme copositive matrix to construct our cut.

A matrix \(X\in \mathcal {S}_n\) is reducible if there is a permutation matrix P such that
$$\begin{aligned} P^TXP = \begin{pmatrix} X_1 & 0\\ 0 & X_2 \end{pmatrix} \end{aligned}$$

with symmetric matrices \(X_1,X_2\). A matrix that is not reducible is said to be irreducible. We will assume that the matrices that we want to separate from the completely positive cone are irreducible, since any reducible symmetric matrix can be written as a block diagonal matrix and then the problem can be split into subproblems of smaller dimension where each of the diagonal blocks is considered separately.
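A symmetric matrix is irreducible exactly when its graph G(X) is connected, so the splitting into diagonal blocks amounts to finding connected components. A minimal sketch, assuming entries with absolute value at most `tol` count as zero (the function name is hypothetical):

```python
import numpy as np


def irreducible_blocks(x, tol=0.0):
    """Index sets of the irreducible diagonal blocks of a symmetric matrix x.

    These are the connected components of G(x), found by depth-first search.
    """
    x = np.asarray(x)
    n = x.shape[0]
    seen = [False] * n
    blocks = []
    for start in range(n):
        if seen[start]:
            continue
        stack, comp = [start], []
        seen[start] = True
        while stack:
            i = stack.pop()
            comp.append(i)
            for j in range(n):
                if j != i and not seen[j] and abs(x[i, j]) > tol:
                    seen[j] = True
                    stack.append(j)
        blocks.append(sorted(comp))
    return blocks
```

Each returned index set gives one diagonal block `x[np.ix_(block, block)]` that can be treated as a separate subproblem.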

3.2 The \(5\times 5\) case

We first consider \(5\times 5\) matrices, since this is the smallest dimension where \(\mathcal {D}_n {\setminus } \mathcal {CP}_{n}\) is nonempty. We will use the following terminology and result from Väliaho [20] (that can be traced back to [7, 12]):

Definition 3.3

A matrix A is called copositive of order k, if every principal submatrix of A of order k is copositive. A is called copositive of exact order k if it is copositive of order k but not of order \(k+1\).

By [20, Theorem 3.8], we have the following.

Lemma 3.4

Let \(A\in \mathcal {S}_n\). If A is copositive of exact order \(n-1\), then \(A^{-1}\le 0\).

Using this lemma we can prove our next result.

Theorem 3.5

Let \(X\in \mathcal {D}_5{\setminus } \mathcal {CP}_{5}\). Then

(a) There exists an extreme matrix \(H\in \mathcal {COP}_{5}{\setminus }(\mathcal {S}^+_5+\mathcal {N}_5)\) with no zero entries and with \({{\mathrm{diag}}}H=e\) such that
$$\begin{aligned} B:=X\circ H \end{aligned}$$ is not copositive, and \(B^{-1}\) is irreducible and nonpositive.

(b) Let u be a Perron vector of \(-B^{-1}\). Then \(u>0\) and
$$\begin{aligned} K:=H\circ uu^T \end{aligned}$$ is an extreme copositive matrix separating X from \(\mathcal {CP}_{5}\).

Proof

(a) The existence of an extreme \(H\in \mathcal {COP}_{5}{\setminus }(\mathcal {S}^+_5+\mathcal {N}_5)\) whose diagonal has only zero and one entries such that \(B=X\circ H\) is not copositive follows from Corollary 2.4. We actually have \({{\mathrm{diag}}}H=e\), since every extreme matrix in \(\mathcal {COP}_{5}\) which is not in \(\mathcal {S}^+_5+\mathcal {N}_5\) has a positive diagonal. If H is a permuted Horn matrix, it has no zero entry. Otherwise, H is a permuted Hildebrand matrix of the form (4). In that case, H may have some (at most two) zeros above the diagonal, but a slight change of the parameters \(\theta \) in H will yield another permuted Hildebrand matrix \(H'\) with no zeros such that \(X\circ H'\notin \mathcal {COP}_{5}\) (since the complement of \(\mathcal {COP}_{5}\) is open). Since \(X\in \mathcal {D}_5\) and \(\mathcal {D}_4=\mathcal {CP}_{4}\), all \(4\times 4\) principal submatrices of X are completely positive. By Proposition 2.3, this implies that all \(4\times 4\) principal submatrices of B are copositive. So B is copositive of exact order 4, and by Lemma 3.4 we have \(B^{-1}\le 0\). Since \(X\in \mathcal {D}_5{\setminus } \mathcal {CP}_{5}\), the graph G(X) contains a 5-cycle [1, Corollary 2.6]. In particular, X is irreducible. Since H has no zero entries, \(G(B)=G(X)\), so B, and therefore \(B^{-1}\), are also irreducible.

(b) Let \(\rho >0\) be the Perron eigenvalue of \(-B^{-1}\), and let u be a Perron vector. Then u is positive by the Perron–Frobenius Theorem [1, Theorem 1.13]. It is also an eigenvector of B corresponding to the negative eigenvalue \(\lambda =-\frac{1}{\rho }\) of B, since \(-B^{-1}u=\rho u\) gives \(Bu=-\frac{1}{\rho }u\). Define the matrix \(K:= H \circ uu^T\), which is extreme copositive according to Proposition 2.2. Then
$$\begin{aligned} \langle K, X \rangle = \langle H \circ uu^T, X \rangle = \langle uu^T, X \circ H \rangle = u^T (X \circ H) u = u^T B u = \lambda u^T u < 0, \end{aligned}$$ which shows that K provides the desired cut. \(\square \)
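Once a suitable H is known, part (b) is a direct computation. The sketch below assumes H is supplied by the caller (the theorem does not say how to find it) and takes the Perron vector of \(-B^{-1}\) as the eigenvector of its largest eigenvalue. As a hypothetical test case we use \(X = I + 0.6\,A_{C_5}\), where \(A_{C_5}\) is the adjacency matrix of the 5-cycle: this X is doubly nonnegative but, by Lemma 3.6, not completely positive, and the Horn matrix works as H.

```python
import numpy as np


def horn_type_cut(x, h):
    """Cut K = H o uu^T of Theorem 3.5(b).

    Assumes H was already chosen as in part (a), so that B = X o H is
    invertible with -B^{-1} nonnegative and irreducible. Then the Perron
    vector of -B^{-1} is the eigenvector of its largest eigenvalue.
    """
    b = x * h
    neg_inv = -np.linalg.inv(b)
    neg_inv = (neg_inv + neg_inv.T) / 2      # symmetrize against round-off
    vals, vecs = np.linalg.eigh(neg_inv)     # eigenvalues in ascending order
    u = vecs[:, -1]
    if u.sum() < 0:                          # eigh fixes u only up to sign
        u = -u
    return h * np.outer(u, u), u
```

For the test matrix above, u is the normalized all-ones vector and \(\langle K,X\rangle = -1/\rho = -0.2\).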

Although the approach is different, the basic structure of this cut is the same as for the cuts in [5] which separate an extreme doubly nonnegative matrix which is not completely positive from the completely positive cone. The cuts in [5] are of the form \(H\circ uu^T\), with H denoting the Horn matrix. However, the vector u there is different from the one we use here. Also note that the construction in [5] works for extreme doubly nonnegative matrices only, whereas the procedure outlined above works for arbitrary \(5 \times 5\) doubly nonnegative matrices.

Theorem 3.5 is an existence result. While it guarantees that a cut of this form exists, it does not provide information on how to choose the right H such that \(X\circ H\) is not copositive. In the next section we show that if G(X) is the 5-cycle, H can be chosen to be the Horn matrix. More generally, we consider doubly nonnegative matrices with a triangle-free graph.

3.3 Separating a triangle-free doubly nonnegative matrix

We now return to matrices of general order n. We may assume that our matrix \(X \in \mathcal {D}_n\) has \(X_{ii} \ne 0\) for all i; otherwise, since X is positive semidefinite, the corresponding row and column would be zero, and we can base our cut on a submatrix with no zero diagonal elements. Furthermore, by applying a suitable diagonal scaling if necessary, we can assume that \({{\mathrm{diag}}}(X) = e\).

Now suppose that an irreducible \(X \in \mathcal {D}_n\) with \({{\mathrm{diag}}}(X) = e\) has a triangle-free graph G(X). Then we have
$$\begin{aligned} X = I + C. \end{aligned}$$ (5)

The nonnegative matrix C has zero diagonal and \(G(C)=G(X)\).

We now characterize complete positivity of X in terms of the spectral radius of C.

Lemma 3.6

A matrix \(X \in \mathcal {D}_n\) of the form (5) is completely positive if and only if the spectral radius \(\rho \) of C fulfills \(\rho \le 1\).

Proof

Since G(X) is triangle-free, by [11, Theorem 5] \(X \in \mathcal {CP}_{n}\) if and only if its comparison matrix M(X) is positive semidefinite. In our case, we have that \(M(X) = I-C\). Since \(\rho \) is the maximal eigenvalue of C, we have that \(1-\rho \) is the minimum eigenvalue of M(X), whence M(X) is positive semidefinite if and only if \(1-\rho \ge 0\), as claimed. \(\square \)
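Lemma 3.6 turns complete positivity into a spectral-radius test that is easy to evaluate. A minimal sketch, assuming X is already known to be doubly nonnegative with unit diagonal (the function names and the tolerance are our own choices):

```python
import itertools

import numpy as np


def is_triangle_free(x, tol=0.0):
    """True if the graph G(x) contains no triangle (brute force, O(n^3))."""
    adj = np.abs(np.asarray(x, dtype=float)) > tol
    np.fill_diagonal(adj, False)
    n = adj.shape[0]
    return not any(adj[i, j] and adj[j, k] and adj[i, k]
                   for i, j, k in itertools.combinations(range(n), 3))


def is_cp_triangle_free(x, tol=1e-10):
    """Lemma 3.6: X = I + C in D_n with triangle-free graph is completely
    positive iff the spectral radius of C is at most 1 (X in D_n assumed)."""
    x = np.asarray(x, dtype=float)
    if not (np.allclose(np.diag(x), 1.0) and is_triangle_free(x)):
        raise ValueError("expected unit diagonal and a triangle-free graph")
    c = x - np.eye(x.shape[0])
    rho = np.abs(np.linalg.eigvalsh(c)).max()   # C is symmetric
    return bool(rho <= 1.0 + tol)
```

For example, with the 5-cycle adjacency matrix \(A_{C_5}\), the matrix \(I+0.4\,A_{C_5}\) (spectral radius 0.8) passes the test, while \(I+0.6\,A_{C_5}\) (spectral radius 1.2) is doubly nonnegative but not completely positive.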

For the separation of a doubly nonnegative matrix in the form (5) which is not completely positive from \(\mathcal {CP}_{n}\), we will use a \(\{-1,0,1\}\)-matrix: Given a triangle-free graph G, let \(A\in \mathcal {S}_n\) be defined by

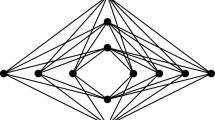

We call this matrix the Hoffman–Pereira matrix corresponding to G. By [15, Theorem 3.2], the matrix A is copositive whenever G is triangle-free. If the diameter of G is 2, then the Hoffman–Pereira matrix has no zero entries and is extreme [13]. This is the case, e.g., for the 5-cycle, whose Hoffman–Pereira matrix is a permutation of the Horn matrix.
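Definition (6) only needs the adjacency matrix and the common-neighbour counts, which are the entries of its square. A minimal NumPy sketch, assuming the input graph is triangle-free and given as a symmetric 0/1 adjacency matrix (the function name is hypothetical). For the 5-cycle with vertices 0, 1, 2, 3, 4 in cyclic order it returns exactly the Horn matrix H.

```python
import numpy as np


def hoffman_pereira(adj):
    """Hoffman-Pereira matrix (6) of a triangle-free graph.

    Entries: 1 on the diagonal, -1 for edges, 1 for non-adjacent pairs with a
    common neighbour (distance 2), and 0 otherwise.
    """
    adj = np.asarray(adj, dtype=int)
    n = adj.shape[0]
    common = adj @ adj                 # (i, j) entry = number of common neighbours
    a = np.zeros((n, n))
    a[(common > 0) & (adj == 0)] = 1.0
    a[adj == 1] = -1.0
    np.fill_diagonal(a, 1.0)
    return a
```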

Theorem 3.7

Let \(X\in \mathcal {D}_n{\setminus } \mathcal {CP}_{n}\) be of the form (5), let u be the Perron vector of C, and let A be the Hoffman–Pereira matrix corresponding to G(X). Then

(a) \(u>0\) and \(u^TM(X)u<0\),

(b) \(M(X)=X\circ A\) and
$$\begin{aligned} K:=A\circ uu^T \end{aligned}$$ is a copositive matrix separating X from \(\mathcal {CP}_{n}\).

Proof

(a) Since X is irreducible, G(X) is connected, and since \(G(C)=G(X)\), the matrix C is irreducible as well, which implies that \(u>0\) by the Perron–Frobenius Theorem. As before, we have \(M(X) = I-C\), and since \(X \notin \mathcal {CP}_{n}\), we have from Lemma 3.6 that the spectral radius \(\rho \) of C fulfills \(\rho > 1\). Consequently, we get
$$\begin{aligned} u^T M(X) u = u^Tu - u^T Cu = u^Tu (1-\rho ) < 0. \end{aligned}$$

(b) Since \(X_{ij}=0\) whenever \(i\ne j\) are nonadjacent in G(X), and \(A_{ij}=-1\) whenever \(\{i,j\}\) is an edge, we have \(M(X)=X\circ A\), and
$$\begin{aligned} \langle X, A\circ uu^T\rangle =\langle X\circ A, uu^T\rangle =u^T(X\circ A)u=u^TM(X)u<0. \end{aligned}$$ (7)
Since \(u>0\) and A is copositive, the matrix \(K:= A \circ uu^T\) is copositive by Proposition 2.1, and by (7) it is a cut that separates X from \(\mathcal {CP}_{n}\). \(\square \)

Note that by Proposition 2.2, since \(u>0\), the cut matrix K is extreme if and only if the Hoffman–Pereira matrix A is extreme. This happens, e.g., when the graph G(X) is an odd cycle.
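The construction of Theorem 3.7 reduces to one symmetric eigenvalue computation. A minimal sketch, assuming X is of the form (5), irreducible with triangle-free graph, and that `a` is its Hoffman–Pereira matrix in the same vertex order (the function name is hypothetical). With u of unit length, (7) gives \(\langle K,X\rangle = 1-\rho \), which is negative exactly when \(\rho >1\).

```python
import numpy as np


def triangle_free_cut(x, a):
    """Cut K = A o uu^T from Theorem 3.7.

    x: matrix of the form (5), X = I + C, irreducible with triangle-free graph.
    a: Hoffman-Pereira matrix (6) of G(X), in the same vertex order.
    Returns (K, <K, X>); with u of unit length, <K, X> = u^T M(X) u = 1 - rho(C).
    """
    x = np.asarray(x, dtype=float)
    c = x - np.eye(x.shape[0])
    vals, vecs = np.linalg.eigh(c)      # largest eigenvalue of C is rho(C)
    u = vecs[:, -1]
    if u.sum() < 0:                     # choose the positive Perron vector
        u = -u
    k = a * np.outer(u, u)
    return k, float(np.sum(k * x))      # trace inner product <K, X>
```

For \(X=I+0.6\,A_{C_5}\) and A the Horn matrix, this yields \(\langle K,X\rangle = 1-1.2 = -0.2\).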

3.3.1 Cycle graphs

Cycles of length at least four are special triangle-free graphs. When the graph of a matrix \(X \in \mathcal {D}_n\) of the form (5) is a cycle, more can be said. First note that if G(X) is an even cycle, then \(X \in \mathcal {CP}_{n}\) (cf. [1, Corollary 2.6]).

If G(X) is an odd cycle, then by applying [15, Theorem 4.1] it can be easily seen that the Hoffman–Pereira matrix corresponding to G(X) is extreme. Thus the matrix \(K := A \circ uu^T\) that provides the cut separating X from the completely positive cone in Theorem 3.7 is extreme as well, and therefore the cut produced in this case would be deeper than the general cuts generated by Hoffman–Pereira matrices. When the graph is the 5-cycle, the Hoffman–Pereira matrix is a permutation of the Horn matrix.

4 Application to the stable set problem

We next illustrate the separation procedure from Sect. 3.3 by applying it to some instances of the stable set problem. The results are discussed in Sect. 4.1 and compared to the results of other separation procedures in Sects. 4.2 and 4.3.

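As shown in [17], the problem of computing the stability number \(\alpha \) of a graph G on n vertices can be stated as a completely positive optimization problem:
$$\begin{aligned} \alpha (G) = \max \{\langle E, X\rangle \ \vert \ \langle A_G+I, X\rangle = 1,\ X\in \mathcal {CP}_{n}\}, \end{aligned}$$ (8)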

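where \(A_G\) denotes the adjacency matrix of G. Replacing \(\mathcal {CP}_{n}\) by \(\mathcal {D}_n\) results in a relaxation of the problem providing an upper bound on \(\alpha \). This bound \(\vartheta '\) is called the Lovász–Schrijver bound:
$$\begin{aligned} \vartheta ' = \max \{\langle E, X\rangle \ \vert \ \langle A_G+I, X\rangle = 1,\ X\in \mathcal {D}_n\}. \end{aligned}$$ (9)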

We consider some instances for which \(\vartheta '\ne \alpha \) and aim to get better bounds by adding cuts to the doubly nonnegative relaxation. The cuts are computed based on Sect. 3.3.

Let \(\bar{X}\) denote an optimal solution of (9). If \(\vartheta '\ne \alpha \), then \(\bar{X} \in \mathcal {D}_n {\setminus } \mathcal {CP}_{n}\), since otherwise \(\bar{X}\) would be feasible for (8) with objective value \(\vartheta ' > \alpha \). We want to find cuts that separate \(\bar{X}\) from the feasible set of (8). If \(G(\bar{X})\) is triangle-free, we can use Theorem 3.7 to separate \(\bar{X}\) from \(\mathcal {CP}_{n}\). Otherwise, we look for a principal submatrix whose graph is triangle-free and whose comparison matrix is not positive semidefinite, construct a cut for this submatrix, and then use Proposition 3.2.

Let Y denote such a submatrix. In general, \({{\mathrm{diag}}}(Y) \ne e\), so Y is not of the form (5). Therefore, we consider the scaled matrix DYD, where D is the diagonal matrix with \(D_{ii}=\frac{1}{\sqrt{Y_{ii}}}\). Since Y is a doubly nonnegative matrix having a triangle-free graph, the same holds for DYD. Furthermore, DYD can be written as \(DYD = I+C\), where C is a nonnegative matrix with zero diagonal and G(C) is triangle-free. Let \(\rho \) denote the spectral radius of C, let u be a Perron vector of C (an eigenvector for the eigenvalue \(\rho \)), and let A be the Hoffman–Pereira matrix (6) corresponding to G(C). If \(\rho >1\), then according to (7), we have
$$\begin{aligned} \langle Y, D(A\circ uu^T)D\rangle = \langle DYD, A\circ uu^T\rangle = u^TM(DYD)u < 0. \end{aligned}$$

Therefore, \(D(A\circ uu^T)D\) defines a cut that separates Y from the completely positive cone.
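The scaling step and the embedding from Proposition 3.2 can be combined into one routine. A minimal sketch, assuming `idx` lists the indices of the chosen principal submatrix Y of \(\bar{X}\) and `a` is the Hoffman–Pereira matrix of G(C) in the local order of `idx`; the function name is hypothetical, and the search for suitable submatrices is not shown.

```python
import numpy as np


def scaled_submatrix_cut(x_bar, idx, a):
    """n x n cut from the principal submatrix Y = x_bar[idx, idx].

    Scales Y to DYD = I + C with D = diag(1/sqrt(Y_ii)), takes the Perron
    vector u of C, forms D (A o uu^T) D and pads it with zeros (Prop. 3.2).
    """
    idx = list(idx)
    y = x_bar[np.ix_(idx, idx)]
    d = 1.0 / np.sqrt(np.diag(y))
    c = d[:, None] * y * d[None, :] - np.eye(len(idx))   # DYD - I
    vals, vecs = np.linalg.eigh(c)
    u = vecs[:, -1] * np.sign(vecs[:, -1].sum())         # positive Perron vector
    k_small = d[:, None] * (a * np.outer(u, u)) * d[None, :]   # D (A o uu^T) D
    k = np.zeros_like(x_bar, dtype=float)
    k[np.ix_(idx, idx)] = k_small                        # zeros outside idx
    return k
```

Because the cut vanishes outside the rows and columns in `idx`, its inner product with \(\bar{X}\) equals \(\langle Y, D(A\circ uu^T)D\rangle \), whatever the remaining entries of \(\bar{X}\) are.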

4.1 Numerical results for some stable set problems

As test instances, we consider the 5-cycle \(C_5\) and the graphs \(G_{8}\), \(G_{11}\), \(G_{14}\) and \(G_{17}\) from [18]. In each case we determine all submatrices as described above. It turns out that for these instances the largest order of such a submatrix is 5. The matrix A we use is therefore a permutation of the Horn matrix. We then solve the doubly nonnegative relaxation after adding each of these cuts individually and after adding all computed cuts. The results are shown in Table 1. We denote by \(\vartheta ^K_{\text {min}}\) and \(\vartheta ^K_{\text {max}}\) the minimal and maximal bound, respectively, that we get by adding a single cut to the doubly nonnegative relaxation (9), and \(\vartheta ^K_{\text {all}}\) denotes the bound we get after adding all computed cuts. The last column indicates the reduction of the optimality gap \(\vartheta '-\alpha \) when all cuts are added.

4.2 Comparison with cuts based on subgraphs with known clique number

In this section, we compare our cuts to the ones introduced by Bomze et al. [2], since the basic structure is quite similar. In [2], the maximum clique problem is considered and cuts are computed to improve the bound resulting from solving the doubly nonnegative relaxation of that problem. These cuts are based on subgraphs with known clique number.

To make it easier to compare the results, we assume that we want to compute the clique number \(\omega \) of the complement of a graph G, i.e., that we want to determine \(\omega (\bar{G})\), which is equivalent to the problem of computing the stability number \(\alpha \) of G. As we have seen above (see (8)), the problem can be stated as
$$\begin{aligned} \omega (\bar{G}) = \alpha (G) = \max \{\langle E, X\rangle \ \vert \ \langle A_G+I, X\rangle = 1,\ X\in \mathcal {CP}_{n}\}. \end{aligned}$$ (10)

Let \(\vartheta '\) denote the bound on \(\alpha \) that we get by solving the doubly nonnegative relaxation of (10).

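The construction of cuts in [2] is based on the observation that for any optimal solution \(\bar{X}\) of the doubly nonnegative relaxation of (10), we have \(\bar{X}_{ij}=0\) for all \(\{i,j\}\in E_G\) with \(E_G\) denoting the edge set of G. This means that \(\bar{X}_{ij}>0\) implies \(i=j\) or \(\{i,j\}\in E_{\bar{G}}\). The cuts introduced in [2] are based on subgraphs F of \(\bar{G}\) with known clique number \(\omega (F)\). According to [17, Corollary 2.4], the matrix
$$\begin{aligned} C'_F := \Big (1-\frac{1}{\omega (F)}\Big )E - A_F, \end{aligned}$$
where E and the adjacency matrix \(A_F\) of F are indexed by the vertices of F, is copositive, and consequently also the \(n\times n\) matrix \(C_F\), obtained from \(C'_F\) by setting all rows and columns belonging to vertices outside F to zero, is copositive.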

In [2], a heuristic approach is used to find subgraphs F of \(\bar{G}\) with known clique number \(\omega (F)\) such that the matrix \(C_F\) is a cut that separates \(\bar{X}\) from the feasible set of (10). For the computational results, subgraphs with clique number \(\omega (F)=2\) or 3 are considered. We will concentrate on the case that F is a subgraph with \(\omega (F)=2\), i.e., F is a triangle-free subgraph of \(\bar{G}\).
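For comparison with the cuts K, the matrices \(C'_F\) and \(C_F\) can be written down directly. The sketch below assumes, as in the reconstruction above, that \(C_F\) is \(C'_F\) padded with zeros outside the vertices of F; the function name and argument order are hypothetical. For the 5-cycle test matrix \(X=I+0.6\,A_{C_5}\) with \(F=C_5\) and \(\omega (F)=2\), one gets \(\langle C_F, X\rangle = 2.5-3 = -0.5\), so \(C_F\) cuts off X as well; the cuts are scaled differently, so only the sign of such values is comparable.

```python
import numpy as np


def clique_cut(n, vertices, adj_f, omega):
    """C_F for a subgraph F with clique number omega, padded to n x n.

    vertices: indices (in 0..n-1) of the vertices of F, in the order used
    by adj_f, the adjacency matrix of F. C'_F = (1 - 1/omega) E - A_F.
    """
    vertices = list(vertices)
    m = len(vertices)
    c_small = (1.0 - 1.0 / omega) * np.ones((m, m)) - np.asarray(adj_f, dtype=float)
    c = np.zeros((n, n))
    c[np.ix_(vertices, vertices)] = c_small      # zeros outside F's vertices
    return c
```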

In the approach described in Sect. 3.3, we consider (scaled) submatrices \(Y=I+C\) of \(\bar{X}\) that have a triangle-free graph G(C). Since \(\bar{X}_{ij}>0\) implies \(i=j\) or \(\{i,j\}\in E_{\bar{G}}\), we can conclude that G(C) is a subgraph of \(\bar{G}\). From the definition of A in (6) it becomes clear that the negative entries of the cut correspond to the edges of G(C). The same holds for the cuts \(C_F\), i.e., the negative entries of \(C_F\) correspond to the edges of F, which is a subgraph of \(\bar{G}\). Therefore, the basic structure of the cuts is the same in the sense that the negative entries of the constructed matrices correspond to edges of a subgraph of \(\bar{G}\). In Sect. 3.3, we consider triangle-free graphs, which corresponds to the special case \(\omega (F)=2\) of the cuts in [2]. The main difference is how the cuts are constructed. Whereas the entries of \(C'_F\) are either \((1-\frac{1}{\omega (F)})\) or \(-\frac{1}{\omega (F)}\), the cut K in Sect. 3.3 combines the basic matrix A with the eigenvector u.

Next, we compare both constructions numerically. For that we consider the instances from Sect. 4.1 and compute cuts of the form \(C_F\) for all \(5\times 5\) principal submatrices of the optimal solution \(\bar{X}\) of the doubly nonnegative relaxation of (10) that have a triangle-free graph F. As for the cuts K in Sect. 4.1, we then add these cuts to the doubly nonnegative relaxation and compute new bounds on \(\omega (\bar{G})=\alpha (G)\). We denote by \(\vartheta ^F_{\text {min}}\) and \(\vartheta ^F_{\text {max}}\) the minimal and maximal bounds (respectively) we get by adding a single cut, and by \(\vartheta ^F_{\text {all}}\) the bound resulting from adding all computed cuts. The results are shown in Table 2.

The results show that the bounds based on the cuts K from Sect. 3.3 are better than the bounds based on the cuts \(C_F\) from [2]. This comes from the fact that the construction of the cuts K is more specific. The cuts K do not only reflect which entries of \(\bar{X}\) are positive but also the entries of \(\bar{X}\) themselves play a role, in form of the eigenvector u. On the other hand, the cuts in [2] are more general since they also apply to subgraphs with higher clique number. Moreover, if those cuts are not restricted to triangle-free subgraphs, then better bounds are obtained as the results in [2] show.

4.3 Comparison with other cutting planes

Finally, we compare our results to the results presented in [10] and [19]. For the sake of completeness, we also include the results reported in [2] although these cuts have already been discussed in Sect. 4.2. The different bounds are stated in Table 3. The bound from [2] is denoted by \(\vartheta ^{\text {BFL}}\). The bound developed in [10] is based on an iterative procedure, whence we cite in the table the bounds \(\vartheta ^{\text {DA}}_{\text {mean,1}}\) (their mean bound in the first round) and \(\vartheta ^{\text {DA}}_{\text {mean,4}}\) (their mean bound after four rounds). Finally, \(\vartheta ^{\text {SD}}\) denotes the bound given in [19].

As can be seen from the table, the bounds \(\vartheta ^{\text {BFL}}\) and \(\vartheta ^{\text {DA}}\) are for all instances slightly better than our bound \(\vartheta ^K_{\text {all}}\). For \(\vartheta ^{\text {BFL}}\), this comes from the fact that the cuts in [2] are more general in the sense that they are not only based on triangle-free subgraphs but also use \(K_4\)-free subgraphs. Nevertheless, we have seen in Sect. 4.2 that our construction gives better results when the underlying subgraph is the same.

The procedure giving \(\vartheta ^{\text {DA}}\) from [10] is an iterative one that is conceptually quite different from ours. It requires detecting suitable submatrices as well as solving a number of semidefinite optimization problems in each iteration. Therefore, the computational effort to compute \(\vartheta ^{\text {DA}}\) is much higher than the effort for \(\vartheta ^K_{\text {all}}\), which makes it plausible that the numerical results for that procedure are slightly better than ours.

Finally, our bound \(\vartheta ^K_{\text {all}}\) is comparable to \(\vartheta ^{\text {SD}}\) and beats it in two out of the four considered graphs. Also the procedure to compute \(\vartheta ^{\text {SD}}\) is computationally much more expensive than the one presented here, so \(\vartheta ^K_{\text {all}}\) is superior in the sense that it gives comparable or better results with a much lower computational effort.

References

[1] Berman, A., Shaked-Monderer, N.: Completely Positive Matrices. World Scientific Publishing, Cleveland (2003)

[2] Bomze, I.M., Frommlet, F., Locatelli, M.: Copositivity cuts for improving SDP bounds on the clique number. Math. Program. 124, 13–32 (2010)

[3] Bomze, I.M., Locatelli, M., Tardella, F.: New and old bounds for standard quadratic optimization: dominance, equivalence and incomparability. Math. Program. 115, 31–64 (2008)

[4] Burer, S.: On the copositive representation of binary and continuous nonconvex quadratic programs. Math. Program. 120, 479–495 (2009)

[5] Burer, S., Anstreicher, K., Dür, M.: The difference between \(5\times 5\) doubly nonnegative and completely positive matrices. Linear Algebra Appl. 431, 1539–1552 (2009)

[6] Burer, S., Dong, H.: Separation and relaxation for cones of quadratic forms. Math. Program. 137, 343–370 (2013)

[7] Cottle, R.W., Habetler, G.J., Lemke, C.E.: On classes of copositive matrices. Linear Algebra Appl. 3, 295–310 (1970)

[8] Dickinson, P.J.C., Gijben, L.: On the computational complexity of membership problems for the completely positive cone and its dual. Comput. Optim. Appl. 57, 403–415 (2014)

[9] Dickinson, P.J.C.: Geometry of the copositive and completely positive cones. J. Math. Anal. Appl. 380, 377–395 (2011)

[10] Dong, H., Anstreicher, K.: Separating doubly nonnegative and completely positive matrices. Math. Program. 137, 131–153 (2013)

[11] Drew, J.H., Johnson, C.R., Loewy, R.: Completely positive matrices associated with M-matrices. Linear Multilinear Algebra 37, 303–310 (1994)

[12] Hadeler, K.-P.: On copositive matrices. Linear Algebra Appl. 49, 79–89 (1983)

[13] Haynsworth, E., Hoffman, A.J.: Two remarks on copositive matrices. Linear Algebra Appl. 2, 387–392 (1969)

[14] Hildebrand, R.: The extreme rays of the \(5\times 5\) copositive cone. Linear Algebra Appl. 437, 1538–1547 (2012)

[15] Hoffman, A.J., Pereira, F.: On copositive matrices with \(-1, 0, 1\) entries. J. Comb. Theory (A) 14, 302–309 (1973)

[16] Kaplan, W.: A test for copositive matrices. Linear Algebra Appl. 313, 203–206 (2000)

[17] de Klerk, E., Pasechnik, D.V.: Approximation of the stability number of a graph via copositive programming. SIAM J. Optim. 12, 875–892 (2002)

[18] Peña, J., Vera, J., Zuluaga, L.F.: Computing the stability number of a graph via linear and semidefinite programming. SIAM J. Optim. 18, 87–105 (2007)

[19] Sponsel, J., Dür, M.: Factorization and cutting planes for completely positive matrices by copositive projection. Math. Program. 143, 211–229 (2014)

[20] Väliaho, H.: Criteria for copositive matrices. Linear Algebra Appl. 81, 19–34 (1986)

Acknowledgments

We are grateful to the referees for their careful reading and helpful comments.


Additional information

This work was supported by Grant no. G-18-304.2/2011 by the German-Israeli Foundation for Scientific Research and Development (GIF).


About this article

Cite this article

Berman, A., Dür, M., Shaked-Monderer, N. et al. Cutting planes for semidefinite relaxations based on triangle-free subgraphs. Optim Lett 10, 433–446 (2016). https://doi.org/10.1007/s11590-015-0922-3
