Abstract

Neurons in the brain receive thousands of synaptic inputs from other neurons. Neurons process this afferent information through synaptic integration, an important information processing mechanism in biological neural networks. Synaptic currents integrated from presynaptic spike trains have complex nonlinear dynamics that endow neurons with significant computational abilities. However, many computational studies of neural networks simply model the external input as a static direct current. In this paper, we investigate in detail how synaptic and noise external currents influence the dynamics of a spiking neural network and its computational capability. Our results show that, owing to nonlinear synaptic integration, both fast and slow excitatory synaptic currents exhibit much more complex and oscillatory fluctuations than a noise current with the same average intensity. Consequently, a network driven by a synaptic external current exhibits remarkably more complex dynamics than one driven by a noise external current. Interestingly, this enhanced network activity is beneficial for information transmission, as further supported by two computational tasks conducted on the liquid state machine (LSM) network. An LSM with synaptic external current performs considerably better in both nonlinear fitting and pattern classification than one with noise external current. Synaptic integration can significantly enhance the entropy of activity patterns and the computational performance of the LSM. Our results demonstrate that the complex dynamics of nonlinear synaptic integration play a critical role in the computational abilities of neural networks and should be considered more broadly in modelling studies of spiking neural networks.

Introduction

In the cerebral cortex, synaptic integration is the complex process by which a neuron combines the inputs it receives from thousands of presynaptic neurons before generating a nerve impulse (action potential) (Williams and Stuart 2002). This process endows neurons with significant computational abilities, so synaptic integration plays an important physiological role in information processing in central neurons. Experimental evidence has demonstrated that considerable computation occurs within dendrites themselves, and that local interactions between synaptic events in dendrites, together with active dendritic conductances, influence the rate and precise timing of axonal action potential output (Gulledge et al. 2005). Temporally synchronous and spatially clustered synaptic inputs can make a single dendrite of a layer 5 pyramidal neuron perform highly nonlinear synaptic integration (Li 2014). Neftci et al. (2016) proposed the Synaptic Sampling Machine, a class of spiking neural network models that uses synaptic stochasticity to perform Monte Carlo sampling and unsupervised learning. Their results show that synaptic unreliability can provide the necessary stochasticity and vastly improve the performance of spiking neural networks in practical machine learning tasks over existing solutions. Synaptic integration has attracted extensive attention in neurophysiological research in recent years (Spruston 2008; Kumamoto et al. 2012; Sultan et al. 2015; Howard and Baraban 2016; Justus et al. 2017).

With the rapid development of computational neuroscience, modelling studies of spiking neural networks (SNNs) have become increasingly popular. Spiking neurons are modelled by differential equations with nonlinear dynamical characteristics, such as the Hodgkin–Huxley, FitzHugh–Nagumo, Izhikevich, and Morris–Lecar models (Hodgkin and Huxley 1990; Liu et al. 2013; FitzHugh 1961; Zhou et al. 2009; Wang et al. 2017; Izhikevich 2003; Li et al. 2016, 2017a, b; Chen et al. 2017). Spiking activity has recently been studied in neuronal populations with autapses (Guo et al. 2016a, b; Yilmaz et al. 2015, 2016). Several recent reviews cover advances on chimera states in neuronal networks (Majhi et al. 2019), the functional importance of noise in neuronal information processing (Guo et al. 2018), and the network science of biological systems at different scales (Gosak et al. 2018). However, most of these studies consider synaptic currents only in the recurrent connections within the network. The external currents that act as background stimulation are usually simplified to a static direct current, unlike the synaptic currents in biological systems. As a result, the influence of synaptic integration in the external background on network dynamics is often ignored, and the computational performance of SNNs driven by biologically realistic external signals has rarely been investigated.

Inspired by Li et al. (2013), in which a biophysically detailed layer 5 pyramidal neuron model was used to study the experimentally observed phenomenon of frequency preference, we adopt a similar strategy in this paper and generate a dynamic external current by integrating the presynaptic inputs from a Poisson spike train. Both fast and slow excitatory synapses, i.e., AMPA and NMDA currents, are modelled by tuning the rise and decay time constants of the synapse model. Our results show that the dynamical complexity of an SNN driven by this biologically plausible synaptic current is remarkably higher than that of an SNN driven by a noisy direct current with the same average intensity. We further investigate computational applications of SNNs driven by different kinds of external currents, asking which kind of external current drives the system to higher computational capability. Our study shows that a liquid state machine (LSM) with synaptic external current performs much better than an LSM with noisy direct external current in the tasks of nonlinear fitting (approximating functions of several independent real-time inputs) and pattern classification.

Methods

Neuron model

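The Izhikevich neuron model (Izhikevich 2003), which has been shown to be biologically plausible and computationally efficient, is adopted. This model satisfies the following equations:

\[ \dot{v}_i = 0.04v_i^2 + 5v_i + 140 - u_i + I + I_i^{syn}, \qquad \dot{u}_i = a(bv_i - u_i), \qquad \text{if } v_i \ge 30\ \text{mV, then } v_i \leftarrow c,\ u_i \leftarrow u_i + d \tag{1} \]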

where \(i=1,2,\ldots ,N\), \(v_{i}\) represents the membrane potential, and \(u_{i}\) is a membrane recovery variable. The parameters a, b, c, and d are dimensionless and take the values \(a=0.02\), \(b=0.2\), \(c=-65\), and \(d=6\) (Izhikevich 2003). I stands for the externally applied current, and \(I_{i}^{syn}\) is the recurrent synaptic current into neuron i, which is governed by the dynamics of the synaptic variable \(s_j\) as follows (Wang and Rinzel 1992; van Vreeswijk et al. 1994):

\[ I_i^{syn} = -\sum_{j} g_{ij}\, s_j\, (v_i - v_{syn}), \qquad \dot{s}_j = \alpha(v_j)(1 - s_j) - \frac{s_j}{\tau}, \qquad \alpha(v_j) = \frac{\alpha_0}{1 + \exp(-v_j/v_{shp})} \tag{2} \]

The parameter \(g_{ij}\) is the synaptic conductance from the jth neuron to the ith neuron; the conductance values are randomly distributed in [0, 0.015]. The synaptic recovery function \(\alpha (v_{j})\) can be approximated by a Heaviside function: when the presynaptic cell is silent (\(v_{j}<0\)), \(s_j\) simply decays as \(\dot{s_{j}}=-s_{j}/\tau\); otherwise, \(s_j\) quickly jumps to 1 and acts on the postsynaptic cells. The excitatory synaptic reversal potential \(v_{syn}\) is set to 0, and the initial value of \(s_j\) is 0. The other parameters used in this paper are \(\alpha _0 = 3\), \(\tau =2\), and \(v_{shp}=5\). The initial values of v and u are set to \(-\,65\) mV and 0, respectively.
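To make the model concrete, the following is a minimal Python sketch (standard library only) of how the neuron and recurrent-synapse dynamics could be integrated. It assumes the standard Izhikevich form \(\dot v_i = 0.04v_i^2 + 5v_i + 140 - u_i + I + I_i^{syn}\), \(\dot u_i = a(bv_i - u_i)\) with reset at 30 mV, and the Wang–Rinzel synapse \(\dot s_j = \alpha(v_j)(1-s_j) - s_j/\tau\) with \(\alpha(v) = \alpha_0/(1+\exp(-v/v_{shp}))\) and \(I_i^{syn} = -\sum_j g_{ij} s_j (v_i - v_{syn})\). The forward Euler scheme, the step `dt`, the omission of self-connections, and the helper names `alpha` and `simulate` are illustrative assumptions rather than the authors' implementation.

```python
import math
import random

# Parameters quoted in the text; dt and the forward Euler scheme are assumptions.
A, B, C, D_RESET = 0.02, 0.2, -65.0, 6.0
ALPHA0, TAU, V_SHP, V_SYN = 3.0, 2.0, 5.0, 0.0
V_PEAK = 30.0  # spike cutoff of the standard Izhikevich model (mV)


def alpha(v):
    """Sigmoidal rate function; close to a Heaviside step at v = 0."""
    return ALPHA0 / (1.0 + math.exp(-v / V_SHP))


def simulate(n, i_ext, t_max=1000.0, dt=0.05, g_max=0.015, seed=0):
    """Forward-Euler integration of n all-to-all coupled Izhikevich neurons.

    i_ext(t, i) gives the external current of neuron i at time t (ms).
    Returns the spike events as a list of (time, neuron index) pairs.
    """
    rng = random.Random(seed)
    # random conductances g_ij in [0, g_max]; self-connections omitted (assumption)
    g = [[0.0 if i == j else rng.uniform(0.0, g_max) for j in range(n)] for i in range(n)]
    v, u, s = [-65.0] * n, [0.0] * n, [0.0] * n
    spikes = []
    for k in range(int(t_max / dt)):
        t = k * dt
        # I_i^syn = -sum_j g_ij s_j (v_i - v_syn), evaluated from the current state
        i_syn = [-sum(g[i][j] * s[j] for j in range(n)) * (v[i] - V_SYN) for i in range(n)]
        ds = [alpha(v[j]) * (1.0 - s[j]) - s[j] / TAU for j in range(n)]
        for i in range(n):
            dv = 0.04 * v[i] ** 2 + 5.0 * v[i] + 140.0 - u[i] + i_ext(t, i) + i_syn[i]
            du = A * (B * v[i] - u[i])
            v[i] += dt * dv
            u[i] += dt * du
            s[i] += dt * ds[i]
            if v[i] >= V_PEAK:  # spike: record it, then apply the reset rule
                spikes.append((t, i))
                v[i] = C
                u[i] += D_RESET
    return spikes


# Example: a 10-neuron network under a constant drive equal to the noise current's mean
events = simulate(10, lambda t, i: 7.3, t_max=200.0)
```

Pure Python loops cost \(O(N^2)\) per step, so for the 200-neuron network a vectorized implementation would be preferable; the small example only illustrates the update order (synaptic current from the current state, then the Euler step, then the reset).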

Note that the external current I in Eq. (1) strongly influences the firing activity of the neural network (Li et al. 2017b). In this study, inhibitory synapses would have an effect similar to that of the external current: adding inhibition is equivalent to adjusting the intensity of the excitatory external current so that the network fires at a balanced rate. For simplicity, inhibitory synapses are therefore not included in this study. Two types of external current are applied to the neurons: synaptic currents integrated from Poisson spike trains, and a direct current with white noise. Both are introduced in detail in the following subsection.

External current

Neurons in biological neural networks are connected by synapses. When a presynaptic neuron emits a spike, neurotransmitters are released from the synapse and bind to receptors on the postsynaptic cell, exciting or inhibiting it; this process transmits information between neurons. The two most common neurotransmitters in the brain are glutamate and GABA (gamma-aminobutyric acid), which act on excitatory and inhibitory receptors, respectively. The most prominent excitatory receptors for glutamate are the AMPA (\(\alpha\)-amino-3-hydroxy-5-methyl-4-isoxazole-propionic acid) and NMDA (N-methyl-D-aspartic acid) receptors. Ion channels controlled by AMPA receptors mediate fast excitatory synaptic transmission in the central nervous system, whereas NMDA-controlled channels are significantly slower (Li 2011). The time course of the current through these receptors (the synaptic current) consists of rise and decay phases (Destexhe et al. 1998). NMDA receptors mediate the prototypical slow excitatory synaptic currents in the brain, with slower rise and decay time courses. This transmission is described using the postsynaptic potential (PSP) kernel (Gütig and Sompolinsky 2006), as shown in Fig. 1a.

External currents applied to neurons in the spiking neural network. a PSP kernel: solid line represents NMDA kernel, while dotted line represents AMPA kernel. b AMPA synaptic external current generated through a Poisson spike train (upper colored spikes) which is converted into dynamic synaptic inputs by AMPA kernel and then integrated by synaptic integration: dotted line represents the average value of current. c NMDA synaptic external current. d Noise external current (Gaussian noise is added to the static direct current). (Color figure online)

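AMPA receptors mediate synaptic currents that are substantially faster than those mediated by NMDA receptors. Here, we assume that the rise of AMPA-mediated transmission is instantaneous and that synaptic inputs only decay exponentially (see the dotted red line in Fig. 1a). Following Li et al. (2013), Destexhe et al. (1998), and Vargas-Caballero and Robinson (2004), two simplified PSP kernels, \(K_{ampa}\) and \(K_{nmda}\), are used to describe AMPA- and NMDA-mediated synaptic transmission, respectively:

\[ K_{ampa}(t - t_i) = \exp\left(-\frac{t - t_i}{\tau_d}\right), \qquad K_{nmda}(t - t_i) = V_0\left[\exp\left(-\frac{t - t_i}{\tau_1}\right) - \exp\left(-\frac{t - t_i}{\tau_2}\right)\right], \qquad t \ge t_i \tag{3} \]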

where \(t_i\) denotes the spike times of the ith afferent, and the factor \(V_0=2.12\) normalizes the peak of the PSP kernel to 1. The parameters \(\tau _d\) and \(\tau _1\) denote the decay time constants of membrane integration, and \(\tau _2\) denotes the decay time constant of the synaptic currents. Here \(\tau _d=30\) ms, \(\tau _1=32\) ms, and \(\tau _2=8\) ms.
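Assuming the kernels take the forms \(K_{ampa}(t) = \exp(-t/\tau_d)\) and \(K_{nmda}(t) = V_0[\exp(-t/\tau_1) - \exp(-t/\tau_2)]\) for \(t \ge 0\), the normalization factor can be derived in closed form: the difference of exponentials peaks at \(t^* = \frac{\tau_1\tau_2}{\tau_1-\tau_2}\ln(\tau_1/\tau_2) \approx 14.8\) ms, and \(V_0 = 1/[\exp(-t^*/\tau_1) - \exp(-t^*/\tau_2)]\). The Python sketch below (function names hypothetical) reproduces \(V_0 \approx 2.12\) from \(\tau_1 = 32\) ms and \(\tau_2 = 8\) ms, which is consistent with the value quoted above.

```python
import math

TAU_D, TAU_1, TAU_2 = 30.0, 32.0, 8.0  # ms, values quoted in the text


def k_ampa(dt):
    """Assumed AMPA kernel: instantaneous rise, single-exponential decay."""
    return math.exp(-dt / TAU_D) if dt >= 0 else 0.0


def nmda_peak_time(tau1=TAU_1, tau2=TAU_2):
    """Time at which exp(-t/tau1) - exp(-t/tau2) reaches its maximum."""
    return tau1 * tau2 / (tau1 - tau2) * math.log(tau1 / tau2)


def nmda_normalization(tau1=TAU_1, tau2=TAU_2):
    """Factor V0 that scales the peak of the difference of exponentials to 1."""
    t_peak = nmda_peak_time(tau1, tau2)
    return 1.0 / (math.exp(-t_peak / tau1) - math.exp(-t_peak / tau2))


V0 = nmda_normalization()


def k_nmda(dt):
    """Assumed NMDA kernel: difference of exponentials, peak normalized to 1."""
    if dt < 0:
        return 0.0
    return V0 * (math.exp(-dt / TAU_1) - math.exp(-dt / TAU_2))


print(round(V0, 2))  # -> 2.12
```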

The synaptic external currents are generated as follows. First, a Poisson spike train with a rate of 50 Hz is converted into AMPA or NMDA synaptic inputs by the kernel \(K_{ampa}\) or \(K_{nmda}\), respectively. These synaptic inputs are then summed by synaptic integration into the AMPA synaptic external current \(I_{ampa}\) (Fig. 1b) or the NMDA synaptic external current \(I_{nmda}\) (Fig. 1c):

\[ I_{ampa}(t) = A_{ampa}\sum_{t_k \le t} K_{ampa}(t - t_k), \qquad I_{nmda}(t) = A_{nmda}\sum_{t_k \le t} K_{nmda}(t - t_k) \tag{4} \]

where \(t_k\) denotes the kth spike time in the spike train. The constants \(A_{ampa}\) and \(A_{nmda}\) are the weighting coefficients of the AMPA and NMDA kernels, respectively, and directly set the intensity of the synaptic external currents. We select \(A_{ampa}=5\) and \(A_{nmda}=3\) so that \(I_{ampa}\) and \(I_{nmda}\) have the same average intensity (see the red dotted lines in Fig. 1b, c).
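A minimal sketch of this generation step, assuming the kernel forms \(K_{ampa}(t)=\exp(-t/\tau_d)\) and \(K_{nmda}(t)=V_0[\exp(-t/\tau_1)-\exp(-t/\tau_2)]\). Rather than summing over all past spikes at every time point, each exponential is tracked as a running trace that decays by \(\exp(-\Delta t/\tau)\) per step, which gives the same sum at the sample times. By Campbell's theorem, the mean of such shot noise is \(A \cdot r \cdot \int K\,dt\); with a 50 Hz train this gives 7.5 for AMPA (\(\int K_{ampa} = \tau_d\)) and about 7.63 for NMDA (\(\int K_{nmda} = V_0(\tau_1-\tau_2)\)), i.e. approximately equal average intensities. The function names and the sampling step are illustrative assumptions.

```python
import math
import random

# Values quoted in the text; the kernel shapes are the assumed forms above.
TAU_D, TAU_1, TAU_2 = 30.0, 32.0, 8.0  # ms
V0 = 2.12
A_AMPA, A_NMDA = 5.0, 3.0


def poisson_spike_times(rate_hz, t_max_ms, rng):
    """Homogeneous Poisson spike train built from exponential inter-spike intervals."""
    times, t = [], 0.0
    while True:
        t += rng.expovariate(rate_hz / 1000.0)  # rate converted to 1/ms
        if t >= t_max_ms:
            return times
        times.append(t)


def synaptic_currents(spikes, t_max_ms, dt=1.0):
    """Sample I_ampa and I_nmda at t = dt, 2*dt, ... as A * sum_k K(t - t_k).

    Each exponential is a running trace decayed by exp(-dt/tau) per step, so the
    sum over all past spikes is exact at every sample time.
    """
    decay = {tau: math.exp(-dt / tau) for tau in (TAU_D, TAU_1, TAU_2)}
    trace = {tau: 0.0 for tau in decay}
    i_ampa, i_nmda, idx = [], [], 0
    for k in range(int(t_max_ms / dt)):
        t_now = (k + 1) * dt
        for tau in trace:
            trace[tau] *= decay[tau]
        # add spikes that arrived during the last step, decayed from their own time
        while idx < len(spikes) and spikes[idx] <= t_now:
            for tau in trace:
                trace[tau] += math.exp(-(t_now - spikes[idx]) / tau)
            idx += 1
        i_ampa.append(A_AMPA * trace[TAU_D])
        i_nmda.append(A_NMDA * V0 * (trace[TAU_1] - trace[TAU_2]))
    return i_ampa, i_nmda


def campbell_mean(weight, rate_hz, kernel_integral_ms):
    """Expected value of shot noise: weight * rate * integral of the kernel."""
    return weight * (rate_hz / 1000.0) * kernel_integral_ms


# Example: 100 s of a 50 Hz train, sampled every 1 ms
rng = random.Random(1)
spikes = poisson_spike_times(50.0, 100000.0, rng)
i_ampa, i_nmda = synaptic_currents(spikes, 100000.0, dt=1.0)
```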

The noise external current is composed of a constant direct current and white noise (see Fig. 1d):

\[ I_{noise}(t) = \mu + \xi(t) \tag{5} \]

where \(\mu\) is the mean value of the noise external current, and \(\xi\) is Gaussian white noise with zero mean and intensity D. We select \(\mu =7.3\) and \(D=1\) so that it has the same average intensity as the synaptic external currents.
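The noise current can be sampled as below. The paper does not state the noise convention or the integration step, so this sketch assumes \(\langle\xi(t)\xi(t')\rangle = 2D\delta(t-t')\) and an Euler–Maruyama discretization, under which each sample is Gaussian with variance \(2D/\Delta t\). One consequence worth keeping in mind: the per-sample standard deviation of a white-noise current grows as \(\Delta t\) shrinks, so comparing its SD with that of the smooth synaptic currents is only meaningful for a stated step size.

```python
import math
import random
import statistics


def noise_current(mu, d, t_max_ms, dt, rng):
    """Samples of I(t) = mu + xi(t) on a grid with step dt (ms).

    Assumes <xi(t) xi(t')> = 2 D delta(t - t'); the Euler-Maruyama discretization
    then gives independent Gaussian samples with variance 2D/dt.
    """
    sigma = math.sqrt(2.0 * d / dt)
    return [mu + rng.gauss(0.0, sigma) for _ in range(int(t_max_ms / dt))]


# Example with the values from the text (mu = 7.3, D = 1) and an assumed dt = 0.5 ms
samples = noise_current(7.3, 1.0, 50000.0, 0.5, random.Random(0))
print(round(statistics.fmean(samples), 1), round(statistics.pstdev(samples), 1))
```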

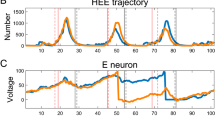

Dynamic analysis

In this paper, the spiking neural network is composed of 200 neurons that are connected all-to-all, with recurrent synaptic weights randomly distributed between 0 and 0.015 (Fig. 2). The dynamics of the SNN under different external currents (noise or synaptic) are shown in Fig. 3. Figure 3a–c plots the membrane potential over time of a randomly selected neuron in the network driven by AMPA synaptic, NMDA synaptic, or noise external current, respectively. Although the average intensities of these external currents are identical, the resulting firing patterns are quite different. When driven by the noise external current, the neuron fires nearly periodically, a pattern much simpler than the irregular firing triggered by the synaptic external currents (AMPA or NMDA). The spatiotemporal responses of the whole network are shown in Fig. 3d–f. The firing of the network with noise external current is highly synchronized, whereas networks with synaptic external currents (AMPA or NMDA) fire more uniformly and dispersedly. The responses to AMPA and NMDA synaptic external currents differ little.

Dynamic responses of a single neuron and of neural networks with different external currents. Membrane potentials (V) of neurons with a AMPA or b NMDA synaptic external current or c noise external current. Firing activities of the network in response to d AMPA synaptic current, e NMDA synaptic current, or f noise external current. Although the average intensities of these external currents are identical, the resulting firing patterns are quite different. When driven by the noise external current, the neuron fires nearly periodically, a pattern much simpler than the irregular firing triggered by the synaptic external currents (AMPA or NMDA)

Clearly, the dynamics of networks with synaptic external current are more complex than those of the network with noise external current. To further illustrate this, the inter-spike interval (ISI) distributions of the SNN are shown in Fig. 4a–c for the AMPA synaptic, NMDA synaptic, and noise external currents, respectively. The ISI distributions for both synaptic external currents are broad and diverse, while the distribution for the noise external current is narrow and concentrated. These results indicate that the firing patterns of an SNN driven by synaptic external currents are more diverse and complex than those of an SNN with noise external current. The complexity of the network activity induced by these external currents is further compared using the information entropy (H), defined as follows:

\[ H = -\sum_{i=1}^{n} p_i \log_2 p_i \tag{6} \]

where n is the number of unique binary patterns, and \(p_i\) is the probability that pattern i occurs (Shew et al. 2011). For computational convenience, the neuronal activities are measured in pattern units (unit = 10). Table 1 compares, for the different external currents, the parameters of the input current, i.e., average intensity (\(D_{avg}\)) and standard deviation (SD), and of the output response, i.e., mean firing rate \(f_{net}\) and activity entropy. At the same average intensity, the standard deviations of the synaptic external currents are much larger than that of the noise external current, which leads to higher entropy of the network activity in response to synaptic external currents. Because the input intensities are identical, the mean firing rates of the networks differ only slightly. This result indicates that synaptic integration helps generate oscillatory external currents, which can trigger complex and diverse network responses with high activity entropy. Entropy characterizes the information capacity of the population, and it can also be tuned by changing the decay time constant \(\tau _d\) of the synaptic input, as shown in Fig. 5. As \(\tau _d\) increases, the average intensity of the synaptic current also increases owing to synaptic integration. However, when the network is driven by an excessively strong external current, neurons fire more synchronously, resulting in lower activity entropy.
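The entropy measure can be sketched as follows. How the activity is cut into patterns is an assumption here: each time bin of a binary spike raster is split into blocks of `unit = 10` neurons, and every block is one binary pattern. With 10-neuron patterns the entropy is bounded by \(\log_2 2^{10} = 10\) bits, reached only when all 1024 patterns are equally likely.

```python
import math
from collections import Counter


def raster_to_patterns(raster, unit=10):
    """Cut each time bin of a binary raster into patterns of `unit` neurons.

    raster is a list of time bins, each a sequence of 0/1 values (one per neuron).
    An incomplete trailing block of neurons is ignored.
    """
    patterns = []
    for time_bin in raster:
        for start in range(0, len(time_bin) - unit + 1, unit):
            patterns.append(tuple(time_bin[start:start + unit]))
    return patterns


def pattern_entropy(patterns):
    """H = -sum_i p_i log2 p_i over the distinct patterns that occur."""
    counts = Counter(patterns)
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


# Example: two time bins of four neurons, grouped into 2-neuron patterns
example = raster_to_patterns([[1, 0, 1, 0], [0, 0, 0, 0]], unit=2)
print(pattern_entropy(example))  # -> 1.0
```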

Activity entropy of networks (a) and average intensity of synaptic external current (b) with increasing decay time constant \(\tau _d\). Note that as \(\tau _d\) increases, the integrated synaptic inputs become smoother and trigger regular firing with low activity entropy (see the subplot shown in a)

It has been argued that a network with low entropy limits information transmission from input to output in the cortex (Shew et al. 2011). Significant evidence suggests that entropy maximization is an organizing principle of neural information processing systems (Laughlin 1981; Dan et al. 1996; Garrigan et al. 2010). Our results demonstrate that synaptic integration can significantly increase the entropy of a neural network, which suggests that the network's information transmission could be improved. To test this, we conduct several computational tasks based on the SNN model in the following sections. Since the complexities (or entropies) of network activity in response to AMPA and NMDA synaptic currents with the same average intensity are almost identical (see Table 1), we select the AMPA synaptic current as the synaptic external current in the subsequent simulations. In the next two subsections, all SNN parameter settings are the same as in Sect. 3.

Nonlinear fitting

The liquid state machine (LSM) model proposed by Natschläger et al. (2002) is one of the few biologically plausible computational neural network models for real-time computing on time-varying inputs. This model uses a recurrent SNN to hold the information from the preceding input stream and nonlinearly transform it into a high-dimensional transient state of the network (Li et al. 2017b). In this section, a biologically relevant real-time computational task is designed to compare the computational capability of LSMs with synaptic or noise external current. Figure 6 shows the architecture of the network for the nonlinear fitting task, in which the output is expected to approximate linear or nonlinear functions of the input signals. All recurrent neurons are driven by a common background synaptic or noise external current. In addition, the neurons in the recurrent SNN are divided into four equal groups, each of which receives input from only one of the four input streams. Only the readout weights (\(W_{out}\)) are trained, using simple linear regression.
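The readout training can be illustrated with ordinary least squares. In this sketch, which is an assumption rather than the authors' code, `states` holds one feature vector per readout time (for example, low-pass filtered spike trains of the liquid neurons), a bias column is appended, and a very small ridge term keeps the normal equations well conditioned; the solver is plain Gaussian elimination so that the example needs only the standard library.

```python
def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]  # augmented copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def fit_readout(states, targets, ridge=1e-8):
    """Least-squares weights W_out (last entry = bias) via (X^T X + ridge*I) w = X^T y."""
    x = [list(row) + [1.0] for row in states]
    k = len(x[0])
    xtx = [[sum(r[i] * r[j] for r in x) + (ridge if i == j else 0.0) for j in range(k)]
           for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(x, targets)) for i in range(k)]
    return solve(xtx, xty)


def readout(states, w):
    """Linear readout y = W_out . [state, 1]."""
    return [sum(wi * xi for wi, xi in zip(w, list(row) + [1.0])) for row in states]


# Example: recover a known linear map from three synthetic "liquid state" features
states = [[i / 10.0, ((i * i) % 7) / 7.0, ((3 * i) % 5) / 5.0] for i in range(20)]
targets = [2.0 * s[0] - s[1] + 0.5 * s[2] + 1.0 for s in states]
w_out = fit_readout(states, targets)
```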

Architecture of the LSM network for the nonlinear fitting task. All recurrent neurons are driven by a common background synaptic or noise external current. In addition, the neurons in the SNN are divided into four equal groups, each of which receives input from only one of the four input streams. Neurons receiving different inputs are marked with different colors. The output synaptic weights \(W_{out}\) are trained by linear regression so that the output response approximates the teacher signal as closely as possible. (Color figure online)

Nonlinear fitting task on the liquid state machine. a Four independent input streams (lines), each synaptically integrated from eight Poisson spike trains (dots) with randomly varying rates \(r_i(t),i=1,\ldots , 4\). b Teacher signal \(r_1+r_3\). c, d Firing activities of the LSM with synaptic or noise external current, respectively. e, f Readouts of the trained LSMs with different external currents (red lines), compared with the target output (blue lines). Comparison of the readout responses of LSMs with the two kinds of background current demonstrates that non-synchronous and complex network activity benefits the nonlinear fitting of the LSM with synaptic external current. (Color figure online)

As in previous studies (Maass et al. 2007; Li et al. 2017b), the inputs are four independent signal streams generated by Poisson processes with randomly varying rates \(r_i(t)\), \(i=1,\ldots , 4\), where each input stream consists of eight spike trains (see Fig. 7a). The spike trains are then converted into input streams by synaptic integration as described in Eq. 4. Notably, the input streams are driven by action potentials (spikes) rather than by firing rates, which increases the dynamic complexity of the circuits. The synaptic weight from the input to the recurrent network is 0.1. The baseline firing rate for streams 1 and 2 is 5 Hz, with randomly distributed 50 ms bursts at 120 Hz. The rates of the Poisson processes that generate the spike trains for streams 3 and 4 are periodically updated by randomly drawing from two options, 30 Hz and 90 Hz. The readout component is trained by linear regression on the teacher signal, and the output of the trained LSM should approximate the teacher signal \(r_1+r_3\) as closely as possible (Fig. 7b). The firing activities of the LSM with synaptic (AMPA) or noise external current are shown in Fig. 7c, d, respectively. Comparison of the readout responses of the LSMs with the two kinds of background current (Fig. 7e, f) demonstrates that non-synchronous and complex network activity benefits the nonlinear fitting of the LSM with synaptic external current. The LSM driven by synaptic external current shows higher dynamical entropy, which contributes to much lower mean relative errors (MREs) than the LSM driven by noise external current (Fig. 8). The key to fitting the target curve well is whether the high-dimensional transient states of the liquid neural network are sufficiently diverse and complex. When the liquid is driven by the noisy constant background current, its firing is more synchronous and regular, which results in poor performance. This result indicates that oscillatory synaptic integration can significantly enhance the computational performance of an SNN.
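The input rate profiles described above can be generated as in the following sketch. The burst-onset probability `p_burst` and the redraw period `period_ms` are not given in the text and are assumptions, as is drawing spikes with one Bernoulli trial per step (valid while \(r\,\Delta t \ll 1\)); the conversion of the spike trains into input currents by synaptic integration is not repeated here.

```python
import random

# Hypothetical generator for the four input rate profiles r_i(t); p_burst and
# period_ms are assumptions, the rates (5/120 Hz, 30/90 Hz) come from the text.


def burst_rates(t_max_ms, dt, rng, base=5.0, burst=120.0, burst_ms=50.0, p_burst=0.002):
    """Streams 1 and 2: 5 Hz baseline with randomly placed 50 ms bursts at 120 Hz."""
    rates, remaining = [], 0.0
    for _ in range(int(t_max_ms / dt)):
        if remaining <= 0.0 and rng.random() < p_burst:
            remaining = burst_ms  # start a new burst
        if remaining > 0.0:
            rates.append(burst)
            remaining -= dt
        else:
            rates.append(base)
    return rates


def switching_rates(t_max_ms, dt, rng, options=(30.0, 90.0), period_ms=200.0):
    """Streams 3 and 4: rate redrawn from {30, 90} Hz every period_ms."""
    steps_per_period = max(1, int(round(period_ms / dt)))
    current = rng.choice(options)
    rates = []
    for k in range(int(t_max_ms / dt)):
        if k > 0 and k % steps_per_period == 0:
            current = rng.choice(options)
        rates.append(current)
    return rates


def poisson_trains(rates_hz, dt, n_trains, rng):
    """n_trains independent inhomogeneous Poisson trains (one Bernoulli draw per step)."""
    return [[k * dt for k, r in enumerate(rates_hz) if rng.random() < r * dt / 1000.0]
            for _ in range(n_trains)]


rng = random.Random(0)
T, DT = 2000.0, 1.0
r = [burst_rates(T, DT, rng), burst_rates(T, DT, rng),
     switching_rates(T, DT, rng), switching_rates(T, DT, rng)]
inputs = [poisson_trains(r_i, DT, 8, rng) for r_i in r]  # eight trains per stream
teacher = [a + b for a, b in zip(r[0], r[2])]  # target signal r_1 + r_3
```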

Temporal pattern classification

In this section, a temporal pattern classification task (Häusler et al. 2003; Li et al. 2017a) is used to study the effects of synaptic or noise external current on the performance of the LSM in pattern classification. The architecture of the LSM for spike pattern classification is shown in Fig. 9. As in the previous section, the external current is added to the recurrent network as background stimulation. In addition, spike trains generated from four templates are used as the input signals for the classification task. Each template (denoted as a class) consists of one channel of a 25 Hz Poisson spike train with a duration of 500 ms. The input stimuli are produced by adding Gaussian jitter with zero mean and a standard deviation (SD) of 6 ms to the spike times of the templates (the SD is referred to as the jitter). We generate 10 training samples for each template, that is, a total of 40 jittered versions of the templates as training samples for the four-pattern classification task. The number of readout neurons equals the number of templates, and the readout component is trained by linear regression on the teacher signal. Classification accuracy is measured by evaluating the correlation coefficient (CC) as in Li et al. (2017a): the readout neuron with the maximum CC is taken to recognize the template from which the input spike train was generated. CC is defined as follows:

\[ CC = \frac{\sum (Y - \overline{Y})(Y^* - \overline{Y^*})}{\sqrt{\sum (Y - \overline{Y})^2 \sum (Y^* - \overline{Y^*})^2}} \tag{7} \]

where Y is the actual output, \(Y^*\) is the target output (teacher signal) of LSM, \(\overline{Y}\) and \(\overline{Y^*}\) are the average values of Y and \(Y^*\), respectively.
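The jittering and the CC-based decision rule can be sketched as below. The assumptions are that jitter displaces each spike time independently, that spikes pushed outside the 500 ms window are dropped, and that the winning class is the readout whose output has the largest Pearson correlation with the target; the function names are hypothetical. Note that CC is undefined when either trace is constant.

```python
import math
import random


def poisson_template(rng, rate_hz=25.0, duration_ms=500.0):
    """One template: a single-channel Poisson spike train (25 Hz, 500 ms)."""
    times, t = [], 0.0
    while True:
        t += rng.expovariate(rate_hz / 1000.0)
        if t >= duration_ms:
            return times
        times.append(t)


def jitter(template, rng, sd_ms=6.0, duration_ms=500.0):
    """Move every spike by N(0, sd_ms); spikes leaving [0, duration) are dropped."""
    moved = (t + rng.gauss(0.0, sd_ms) for t in template)
    return sorted(t for t in moved if 0.0 <= t < duration_ms)


def correlation_coefficient(y, y_star):
    """Pearson correlation between the actual output Y and the target Y*."""
    n = len(y)
    mean_y, mean_s = sum(y) / n, sum(y_star) / n
    cov = sum((a - mean_y) * (b - mean_s) for a, b in zip(y, y_star))
    var_y = sum((a - mean_y) ** 2 for a in y)
    var_s = sum((b - mean_s) ** 2 for b in y_star)
    return cov / math.sqrt(var_y * var_s)


def classify(readout_outputs, target):
    """Index of the readout neuron whose output correlates best with the target."""
    scores = [correlation_coefficient(out, target) for out in readout_outputs]
    return max(range(len(scores)), key=scores.__getitem__)


rng = random.Random(0)
templates = [poisson_template(rng) for _ in range(4)]
# ten jittered samples per template -> 40 training samples of (class, spike times)
training = [(c, jitter(tpl, rng)) for c, tpl in enumerate(templates) for _ in range(10)]
```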

Architecture of the LSM for the pattern classification task. The external current (synaptic or noise) is added to the recurrent network as background stimulation. In addition, spike trains generated from four templates are used as the input signals for the classification task. Each template (denoted as a class) consists of one channel of a 25 Hz Poisson spike train with a duration of 500 ms. The input stimuli are produced by adding Gaussian jitter with zero mean and a standard deviation (SD) of 6 ms to the templates. The number of readout neurons equals the number of templates. The readout component is trained by linear regression on the teacher signal

Pattern classification task of the LSM. a Classification accuracy of the LSM with synaptic (blue line) or noise (red line) external current as the number of templates increases (jitter = 6 ms). b Recognition accuracy of the LSM with different external currents as the jitter increases (number of templates = 4). The LSM with synaptic external current always has much higher accuracy and is much more robust to increasing jitter (i.e., increasing noisy disturbance in the inputs) than the LSM with noise external current. (Color figure online)

As can be seen in Fig. 10a, as the number of templates increases, which makes recognition harder, the (testing) classification accuracy of the LSM drops quickly, especially for the LSM driven by noise external current. Moreover, the accuracy of the LSM with synaptic external current is always much higher than that of the LSM with noise external current. Figure 10b shows that the LSM with synaptic external current is also much more robust to increasing jitter in the templates (i.e., increasing noisy disturbance in the inputs). This result illustrates that synaptic external current can also enhance the performance of the LSM in pattern classification.

Conclusions

In this paper, the effects of synaptic integration on the dynamics and computational performance of a spiking neural network are investigated. Compared with the traditional direct current signal with white noise, synaptic current integrated from Poisson spike trains can significantly increase the dynamical complexity and diversity of the network's firing patterns. Moreover, this dynamical synaptic current also clearly enhances the computational performance of the network on nonlinear fitting and pattern classification tasks. As shown in Fig. 3, the background synaptic current can drive the network population into diverse and complex firing patterns, whereas the noise background current only makes the network fire in a synchronous pattern. If the default state of the network dynamics is complex and has high information entropy, the network is better prepared to respond to any kind of input and to conduct computational tasks. This result is consistent with our previous studies showing that neural networks with a default chaotic or critical state perform better on computational tasks than networks in a resting state or with synchronous firing (Li et al. 2017b). These results imply that nonlinear synaptic integration deserves more attention in the modelling of computational neural networks.

Although the difference between synaptic current integrated from spike trains and direct current with white noise is very obvious, neural network studies aimed at intelligent computing applications are mostly based on artificial neural networks, in which information is transferred as continuous signals between linear static units. Even when the units are spiking neuron models, external stimuli are still commonly simplified to direct currents with white noise (Liu et al. 2013; Zhou et al. 2009; Wang et al. 2017; Izhikevich 2003; Li et al. 2016, 2017a, b; Chen et al. 2017). In fact, in most modelling studies in computational neuroscience, input streams from both local and global connections are based on synaptic integration of spike trains, since all external information enters the system in the form of action potentials. There is thus a large gap between computational neuroscience and application-oriented neural network models. The main purpose of this work is to emphasize the importance of synaptic integration, a basic synaptic property broadly investigated in neuroscience that deserves more attention in computational modelling. Our work may provide insights for optimizing the computational performance of spiking neural networks with high information processing ability.

References

Chen MJ, Wang YF, Wang HT, Ren W, Wang XG (2017) Evoking complex neuronal networks by stimulating a single neuron. Nonlinear Dyn 88(4):2491–2501

Dan Y, Atick JJ, Reid RC (1996) Efficient coding of natural scenes in the lateral geniculate nucleus: experimental test of a computational theory. J Neurosci 16(10):3351–3362

Destexhe A, Mainen ZF, Sejnowski TJ (1998) Kinetic models of synaptic transmission. Methods Neuronal Model Ions Netw 2:1–25

FitzHugh R (1961) Impulses and physiological states in theoretical models of nerve membrane. Biophys J 1(6):445–466

Garrigan P, Ratliff CP, Klein JM, Sterling P, Brainard DH, Balasubramanian V (2010) Design of a trichromatic cone array. PLoS Comput Biol 6(2):e1000677

Gosak M, Markovič R, Dolenšek J, Slak Rupnik M, Marhl M et al. (2018) Network science of biological systems at different scales: a review. Phys Life Rev S1571064517301501

Gulledge AT, Kampa BM, Stuart GJ (2005) Synaptic integration in dendritic trees. J Neurobiol 64(1):75–90

Guo D, Chen M, Perc M, Wu S, Xia C, Zhang Y et al (2016a) Firing regulation of fast-spiking interneurons by autaptic inhibition. Europhys Lett 114(3):30001

Guo D, Perc M, Liu T (2018) Functional importance of noise in neuronal information processing. Europhys Lett 124:50001

Guo D, Wu S, Chen M, Perc M, Zhang Y, Ma J et al (2016b) Regulation of irregular neuronal firing by autaptic transmission. Sci Rep 6(1):26096

Gütig R, Sompolinsky H (2006) The tempotron: a neuron that learns spike timing-based decisions. Nat Neurosci 9(3):420–428

Hodgkin AL, Huxley AF (1990) A quantitative description of membrane current and its application to conduction and excitation in nerve. Bull Math Biol 52(1–2):25–71

Howard MA, Baraban SC (2016) Synaptic integration of transplanted interneuron progenitor cells into native cortical networks. J Neurophysiol 116(2):472–478

Häusler S, Markram H, Maass W (2003) Perspectives of the high-dimensional dynamics of neural microcircuits from the point of view of low-dimensional readouts. Complexity 8(4):39–50

Izhikevich EM (2003) Simple model of spiking neurons. IEEE Trans Neural Netw 14(6):1569–1572

Justus D, Dalügge D, Bothe S, Fuhrmann F, Hannes C, Kaneko H, Friedrichs D, Sosulina L, Schwarz I, Elliott DA (2017) Glutamatergic synaptic integration of locomotion speed via septoentorhinal projections. Nat Neurosci 20(1):16–19

Kumamoto N, Gu Y, Wang J, Jenoschka S, Takemaru K, Levine J, Ge S (2012) A role for primary cilia in glutamatergic synaptic integration of adult-born neurons. Nat Neurosci 15(3):399–405

Laughlin S (1981) A simple coding procedure enhances a neuron’s information capacity. Zeitschrift fur Naturforschung Sect C Biosci 36(9–10):910–912

Li XM (2011) Cortical oscillations and synaptic plasticity: from a single neuron to neural networks. Hong Kong Polytechnic University, Hung Hom

Li XM (2014) Signal integration on the dendrites of a pyramidal neuron model. Cogn Neurodyn 8(1):81–85

Li XM, Chen Q, Xue FZ (2016) Bursting dynamics remarkably improve the performance of neural networks on liquid computing. Cogn Neurodyn 10(5):415–421

Li XM, Chen Q, Xue FZ (2017b) Biological modelling of a computational spiking neural network with neuronal avalanches. Philos Trans R Soc A 375(2096):20160286

Li XM, Liu H, Xue FZ, Zhou HJ, Song YD (2017a) Liquid computing of spiking neural network with multi-clustered and active-neuron-dominant structure. Neurocomputing 243:155–165

Li XM, Morita K, Robinson HP, Small M (2013) Control of layer 5 pyramidal cell spiking by oscillatory inhibition in the distal apical dendrites: a computational modeling study. J Neurophysiol 109(11):2739–2756

Liu SB, Wu Y, Li JJ, Xie Y, Tan N (2013) The dynamic behavior of spiral waves in stochastic Hodgkin–Huxley neuronal networks with ion channel blocks. Nonlinear Dyn 73(1–2):1055–1063

Maass W, Joshi P, Sontag ED (2007) Computational aspects of feedback in neural circuits. PLoS Comput Biol 3(1):e165

Majhi S, Bera BK, Ghosh D, Perc M (2019) Chimera states in neuronal networks: a review. Phys Life Rev 28:100–121

Natschläger T, Maass W, Markram H (2002) The “liquid computer”: a novel strategy for real-time computing on time series. Special Issue Found Inf Process Telematik 8(1):39–43

Neftci EO, Pedroni BU, Joshi S, Al-Shedivat M, Cauwenberghs G (2016) Stochastic synapses enable efficient brain-inspired learning machines. Front Neurosci 10:241

Shew WL, Yang HD, Yu S, Roy R, Plenz D (2011) Information capacity and transmission are maximized in balanced cortical networks with neuronal avalanches. J Neurosci 31(1):55–63

Spruston N (2008) Pyramidal neurons: dendritic structure and synaptic integration. Nat Rev Neurosci 9(3):206–221

Sultan S, Li L, Moss J, Petrelli F, Cassá F, Gebara E, Lopatar J, Pfrieger FW, Bezzi P, Bischofberger J (2015) Synaptic integration of adult-born hippocampal neurons is locally controlled by astrocytes. Neuron 88(5):957–972

van Vreeswijk C, Abbott LF, Ermentrout GB (1994) When inhibition not excitation synchronizes neural firing. J Comput Neurosci 1(4):313–321

Vargas-Caballero M, Robinson HP (2004) Fast and slow voltage-dependent dynamics of magnesium block in the NMDA receptor: the asymmetric trapping block model. J Neurosci 24(27):6171–6180

Wang XJ, Rinzel J (1992) Alternating and synchronous rhythms in reciprocally inhibitory model neurons. Neural Comput 4(1):84–97

Wang R, Wu Y, Wang L, Du M, Li JJ (2017) Structure and dynamics of self-organized neuronal network with an improved STDP rule. Nonlinear Dyn 88(3):1855–1868

Williams SR, Stuart GJ (2002) Synaptic integration. Wiley, New York

Yilmaz E, Ozer M, Baysal V, Perc M (2016) Autapse-induced multiple coherence resonance in single neurons and neuronal networks. Sci Rep 6:30914

Yilmaz E, Baysal V, Ozer M, Perc M (2015) Autaptic pacemaker mediated propagation of weak rhythmic activity across small-world neuronal networks. Phys A Stat Mech Appl S0378437115009139

Zhou J, Yu W, Li XM, Small M, Lu JA (2009) Identifying the topology of a coupled FitzHugh–Nagumo neurobiological network via a pinning mechanism. IEEE Trans Neural Net 20(10):1679–1684

Acknowledgements

This work is supported by Natural Science Foundation of Chongqing (No. cstc2019jcyj-msxmX0154).

Ethics declarations

Human participants and/or animals

The authors declare that they have no financial or non-financial competing interests, and all of the authors agree to the publication of this paper. This research does not involve any human participants and/or animals.

Cite this article

Li, X., Luo, S. & Xue, F. Effects of synaptic integration on the dynamics and computational performance of spiking neural network. Cogn Neurodyn 14, 347–357 (2020). https://doi.org/10.1007/s11571-020-09572-y

DOI: https://doi.org/10.1007/s11571-020-09572-y