Abstract

This paper is concerned with a class of nonlinear uncertain switched networks with discrete time-varying delays. Based on the strictly complete property of a system of matrices and the delay-decomposing approach, a new Lyapunov–Krasovskii functional that decomposes the delays in its integral terms is constructed, and a switching rule depending on the state of the network is designed. Moreover, by a piecewise delay method that examines the Lyapunov functional on each subinterval, some new delay-dependent robust stability criteria are derived in terms of linear matrix inequalities, which are less conservative than those in the existing references. Finally, an illustrative example is given to demonstrate the validity of the theoretical results.

Introduction

Recently, hybrid systems (Ye et al. 1998) have attracted considerable attention because they can model practical control problems that integrate supervisory logic-based control schemes with feedback control algorithms. As a special class of hybrid systems, switched networks (Brown 1989; Liberzon 2003), which consist of a set of individual subsystems and a switching rule, play an important role in research, since they have been applied successfully in many fields such as electrical and telecommunication systems, computer communities, control of mechanical systems, artificial intelligence, and gene selection in DNA microarray analysis. Therefore, the stability of switched networks has been investigated (Huang et al. 2005; Li and Cao 2007; Lian and Zhang 2011; Zhang and Yu 2009; Niamsup and Phat 2010). Using the common Lyapunov function method and the linear matrix inequality (LMI) approach, Li and Cao (2007) considered the global stability of switched recurrent neural networks with time-varying delay under an arbitrary switching rule. However, the common Lyapunov function method requires all subsystems of the switched system (Liu et al. 2009) to share a positive definite, radially unbounded common Lyapunov function, and this requirement is generally difficult to meet. The average dwell time (ADT) method has been proposed for the stability analysis of switched networks; it is regarded as an important and attractive way to find a switching signal that guarantees stability or improves other performance, and it has been widely applied to switched systems with or without time delay. In (Lian and Zhang 2011), the ADT approach and novel multiple Lyapunov functions were employed to investigate the stability of switched neural networks under a time-dependent switching rule.
Generally speaking, a switching rule is a piecewise constant function dependent on the state or on time, and most existing works focus on the stability of switched networks with time-dependent switching rules. Perhaps because of the limitations of existing methods and techniques, to the best of our knowledge few scholars have dealt with the robust stability (He and Cao 2008; Xu et al. 2012) of switched uncertain networks under a state-dependent switching rule (Thanha and Phat 2013; Ratchagit and Phat 2011), despite its potential and practical importance.

Due to the finite switching speed of amplifiers, time delay, especially time-varying delay, is inevitably encountered in many engineering applications and hardware implementations of networks, and it is often the main cause of instability and poor performance. Consequently, the stability of networks with time-varying delay is a meaningful research topic (Liu and Chen 2007). Our main concern is how to choose an appropriate Lyapunov–Krasovskii functional and derive stability criteria that are less conservative. To reduce the conservatism of existing results, new analysis methods such as the free weighting matrix method, the matrix inequality method, and the input–output approach have been proposed. However, it is difficult to derive a less conservative result with the common Lyapunov–Krasovskii functional. The delay central-point (DCP) method was first proposed in (Yue 2004) to solve the robust stabilization problem for uncertain systems with unknown input delay. In this approach, the central point of the variation of the delay is introduced, and the variation interval of the delay is divided into two subintervals (Zhang et al. 2009) of equal length. The main advantage of the method is that more information on the variation interval of the delay is employed. The idea of delay decomposition (Zhang et al. 2010; Zeng et al. 2011; Wang et al. 2012; Hu and Wang 2011; Wang et al. 2008) has been successfully applied to \(H_{\infty}\) control and to delay-dependent stability analysis for discrete-time and continuous-time systems with time-varying delay, and it has significantly reduced the conservativeness of the derived stability criteria. In (Zhang et al. 2010), the delay interval \([0, d(t)]\) was divided into variable subintervals by employing weighting delays; the stability results based on the weighting delay method were related to the number of subintervals and to the size of the variable subintervals or the position of the variable points. Wang et al. (2012) considered the exponential stability analysis for a class of cellular neural networks and constructed a more general Lyapunov–Krasovskii functional by utilizing the central point of the lower and upper bounds of the delay; since more information was involved and no useful term was ignored when estimating the upper bound of the derivative of the Lyapunov functional, the developed conditions were expected to be less conservative than previous ones. Up to now, no results based on the delay-decomposing approach have been proposed for switched uncertain systems with discrete time-varying delay. Therefore, it is of great importance to study the robust stability of switched uncertain networks with interval time-varying delay.

Motivated by the above discussion, the purpose of this paper is to deal with the robust asymptotic stability problem for switched interval networks with interval time-varying delays and general activation functions; the activation functions can be unbounded and the lower bound of the time-varying delay need not be zero. Inspired by the DCP method in (Yue 2004), we construct a new Lyapunov–Krasovskii functional that decomposes the delays in its integral terms. Based on the strictly complete property of a system of matrices and the delay-decomposing approach, some new delay-dependent robust stability criteria are derived in terms of LMIs, which can be efficiently solved by the interior point method (Boyd et al. 1994). The main novelty of this paper can be summarized as follows: (1) The switching signal depends on the state of the network. (2) To account for parameter fluctuation, a new mathematical model of switched networks with interval parameters is established, which is closer to the actual model. (3) The delay-decomposing idea and the piecewise delay method are introduced; by analyzing the variation of the Lyapunov functional on each subinterval, some new delay-dependent robust stability criteria are derived. Note that the delay-decomposing approach has proven effective in reducing conservatism.

The rest of this paper is organized as follows. In the “Switched networks model and preliminaries” section, the model formulation and some preliminaries are presented. In the “Main results” section, some delay-dependent robust stability criteria for switched interval networks are obtained. A numerical example is given to demonstrate the validity of the proposed results in the “An illustrative example” section. Some conclusions are drawn in the “Conclusion” section.

Notations Throughout this paper, \(R\) denotes the set of real numbers, \(R^{n}\) denotes the n-dimensional Euclidean space, and \(R^{m\times n}\) denotes the set of all m × n real matrices. For any matrix A, \(A^{T}\) denotes the transpose of A, A > 0 (A < 0) means that A is positive definite (negative definite), and * represents the symmetric block in a symmetric matrix. \(\dot{x}(t)\) denotes the derivative of x(t). Matrices, if their dimensions are not explicitly stated, are assumed to have compatible dimensions for algebraic operations.

Switched networks model and preliminaries

Consider the interval network model with discrete time-varying delay described by the following differential equation in the form:

where \(y(t)=\left(y_{1}(t),\ldots,y_{n}(t)\right)^{T}\in R^{n}\) denotes the state vector associated with n neurons; \(g(y)=\left(g_{1}(y_{1}),\ldots,g_{n}(y_{n})\right)^{T}{:}R^{n}\rightarrow R^{n}\) is a vector-valued neuron activation function; \(u=\left(u_{1},\ldots,u_{n}\right)^{T}\) is a constant external input vector; τ(t) denotes the discrete time-varying delay. \(A=\hbox{diag}(a_{1},\ldots,a_{n})>0\) is an n × n constant diagonal matrix, where \(a_{i}\) denotes the rate with which cell i resets its potential to the resting state when isolated from other cells and inputs; \(B_{k}=(b_{ij}^{(k)})\in R^{n\times n}, k=1,2\), represent the connection weight matrices, and \(A_{l}=[\underline{A},\overline{A}]= \{A=\hbox{diag}(a_{i}){:}\,0<\underline{a}_{i}\leq a_{i}\leq \overline{a}_{i},i=1,2,\ldots,n\},\ B_{l}^{(k)}=\left[\underline{B}_{k},\overline{B}_{k}\right] =\{B_{k}=(b_{ij}^{(k)}){:} \underline{b}_{ij}^{(k)}\leq b_{ij}^{(k)}\leq \overline{b}_{ij}^{(k)},\,i, j=1,2,\ldots,n\}\) with \(\underline{A} =\hbox{diag}(\underline{a}_{1},\underline{a}_{2},\ldots,\underline{a}_{n}), \overline{A}=\hbox{diag}(\overline{a}_{1},\overline{a}_{2}, \ldots,\overline{a}_{n}), \underline{B}_{k}=(\underline{b}_{ij}^{(k)})_{n\times n}, \overline{B}_{k}=(\overline{b}_{ij}^{(k)})_{n\times n}\).
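
The display for model (1) does not appear in this copy. A form consistent with the symbols defined in the paragraph above, offered as an assumption rather than as a quotation of the original display, would be:

$$ \dot{y}(t)=-Ay(t)+B_{1}g(y(t))+B_{2}g(y(t-\tau(t)))+u,\quad A\in A_{l},\quad B_{k}\in B_{l}^{(k)},\quad k=1,2. $$

Under this reading, \(B_{1}\) weights the instantaneous activation and \(B_{2}\) the delayed one, which agrees with the way \(B_{10}^{i}\) and \(B_{20}^{i}\) enter the blocks \(\Uppi_{71}^{i}\) and \(\Uppi_{81}^{i}\) in Theorem 3.1.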

Throughout this paper, the following assumptions are made on the activation functions \(g_{j}(\bullet),j=1,\,2,\ldots,\,n\) and discrete time-varying delay τ(t):

\((\mathcal{H}_{1})\): There exist known constant scalars \(\check{l}_{i}\) and \(\hat{l}_{i}\) such that the activation functions \(g_{j}(\bullet)\) are continuous on \(R\) and satisfy:

\((\mathcal{H}_{2})\): The time-varying delay τ(t) is differentiable and bounded, with a bounded derivative: \(\tau_n\leq \tau(t)\leq \tau_N, \,\dot{\tau}(t)\leq \mu<1\), where \(\tau_{n}\), \(\tau_{N}\), μ are positive constants.

\((\mathcal{H}_{3})\): The time-varying delay τ(t) satisfies \(\tau_{n}\leq \tau(t)\leq \tau_{N}\), where \(\tau_{n}\), \(\tau_{N}\) are positive constants.

Remark 1

In assumption \((\mathcal{H}_{2})\), the time-varying delay τ(t) is differentiable with derivative less than 1, and it is called a 'slow delay'; when the differentiability requirement is removed, as in \((\mathcal{H}_{3})\), τ(t) may change at a large rate, and we call it a 'fast delay'. In this paper, we discuss the interval network model with slow delay and with fast delay, respectively.
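
The distinction in Remark 1 can be illustrated numerically. The following is a minimal sketch with two hypothetical delay functions (neither is taken from the paper): both stay in the interval [1, 2], but only the first has a rate of change below 1, as \((\mathcal{H}_{2})\) requires. The helper `max_forward_rate` is an illustrative name, and a forward-difference slope on a grid is only an estimate of the true derivative bound.

```python
import math

def max_forward_rate(tau, t_start, t_end, steps):
    """Largest forward-difference slope of tau on a uniform grid over [t_start, t_end]."""
    h = (t_end - t_start) / steps
    return max((tau(t_start + (k + 1) * h) - tau(t_start + k * h)) / h
               for k in range(steps))

def slow_delay(t):
    # Hypothetical example: values in [1, 2], derivative 0.5*cos(t) <= 0.5 < 1.
    return 1.5 + 0.5 * math.sin(t)

def fast_delay(t):
    # Hypothetical example: same range [1, 2], but the slope reaches about 5.
    return 1.5 + 0.5 * math.sin(10.0 * t)

mu_slow = max_forward_rate(slow_delay, 0.0, 10.0, 10000)
mu_fast = max_forward_rate(fast_delay, 0.0, 10.0, 10000)
```

Here `mu_slow` stays below 1 while `mu_fast` exceeds it, so the second delay is admissible only under a bound of the \((\mathcal{H}_{3})\) type, which places no restriction on the rate of change.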

The initial value associated with (1) is assumed to be y(s) = ψ(s), where ψ(s) is a continuous function on \([-\tau_{N},0]\). Similarly to the proof of Theorem 3.3 in (Balasubramaniam et al. 2011), we can show that system (1) has one equilibrium point \(y^{\ast}\) under the above assumptions. The equilibrium \(y^{\ast}\) is shifted to the origin by letting \(x(t)=y(t)-y^{\ast}\), and the network system (1) can be represented as follows:

where \(f_{j}(x_{j}(t))=g_{j}(x_{j}(t)+y_{j}^{\ast})-g_{j}(y_{j}^{\ast})\), and \(f_{j}(0)=0,\,j=1,2,\ldots,n\).

The initial condition associated with (2) is given in the form \(x(s)=y(s)-y^{\ast}=\varphi(s)=\psi(s)-y^*,\,s\in[-\tau_{N},0]\). It is easy to see that f(x(t)) satisfies the assumption \((\mathcal{H}_1)\).
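
The reason f inherits \((\mathcal{H}_1)\) can be spelled out under one assumption: that \((\mathcal{H}_1)\) is the usual sector condition \(\check{l}_{j}\leq \frac{g_{j}(a)-g_{j}(b)}{a-b}\leq \hat{l}_{j}\) for all \(a\neq b\) (its display is not reproduced in this copy, so this form is assumed). Since \(f_{j}(a)-f_{j}(b)=g_{j}(a+y_{j}^{\ast})-g_{j}(b+y_{j}^{\ast})\), one would then get:

$$ \check{l}_{j}\leq \frac{f_{j}(a)-f_{j}(b)}{a-b}=\frac{g_{j}(a+y_{j}^{\ast})-g_{j}(b+y_{j}^{\ast})}{(a+y_{j}^{\ast})-(b+y_{j}^{\ast})}\leq \hat{l}_{j},\quad a\neq b, $$

and, taking \(b=0\) with \(f_{j}(0)=0\), the bound \(\check{l}_{j}\leq f_{j}(x_{j})/x_{j}\leq \hat{l}_{j}\) for \(x_{j}\neq 0\).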

Based on some transformations, the system (2) can be written as an equivalent form:

where \(\Upsigma_{A}\in \Upsigma,\,\Upsigma_{k}\in \Upsigma,\,k=1,2\).

where \(e_{i}\in R^{n}\) denotes the column vector whose ith element is 1 and whose other elements are 0.
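
Interval models of this kind are commonly rewritten around an entrywise midpoint and half-width. The sketch below illustrates that idea under the assumption that the nominal matrix is the midpoint of the interval; the displays defining the paper's own decomposition are not reproduced here, and the function names are hypothetical.

```python
def midpoint_radius(lower, upper):
    """Return (b0, h): entrywise midpoint and half-width of the interval [lower, upper]."""
    n = len(lower)
    b0 = [[(lower[i][j] + upper[i][j]) / 2.0 for j in range(n)] for i in range(n)]
    h = [[(upper[i][j] - lower[i][j]) / 2.0 for j in range(n)] for i in range(n)]
    return b0, h

def uncertainty_coefficients(b, b0, h):
    """Return delta with b[i][j] = b0[i][j] + delta[i][j] * h[i][j].

    Entries with zero half-width carry no uncertainty and get delta = 0.
    """
    n = len(b)
    return [[(b[i][j] - b0[i][j]) / h[i][j] if h[i][j] > 0 else 0.0
             for j in range(n)] for i in range(n)]

# Hypothetical 2 x 2 interval and one matrix inside it.
lower = [[-1.0, 0.25], [0.0, -2.0]]
upper = [[1.0, 0.75], [0.0, -1.0]]
b = [[0.5, 0.375], [0.0, -1.25]]

b0, h = midpoint_radius(lower, upper)
delta = uncertainty_coefficients(b, b0, h)
```

Every matrix inside the interval yields coefficients with \(|\delta_{ij}|\leq 1\), which appears to be the role played by the bounded uncertainty matrices \(\Upsigma_{A},\Upsigma_{k}\in\Upsigma\) in the text.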

System (3) can be rewritten as

where \(E=[E_{A},E_{1},E_{2}]\),

and \( \Updelta(t)\) satisfies the following matrix quadratic inequality:

In this paper, our main purpose is to study switched interval networks, which consist of a set of interval networks with discrete time-varying delays and a switching rule. Each interval network is regarded as an individual subsystem, and the operation mode of the switched network is determined by the switching rule. According to (2), the switched interval network with discrete interval delay can be described as follows:

where \(A_{l_{\sigma}}=[\underline{A}^{\sigma},\overline{A}^{\sigma}]= \{A^{\sigma}=\hbox{diag}(a_{i_{\sigma}}){:}\,0<\underline{a}_{i_{\sigma}}\leq a_{i_{\sigma}}\leq \overline{a}_{i_{\sigma}},i=1,2,\ldots,n\},\ B_{l_{\sigma}}^{(k)}=[\underline{B}_{k}^{\sigma}, \overline{B}_{k}^{\sigma}]=\{B_{k}^{\sigma}=[b_{ij_{\sigma}}^{(k)}]{:} \,0<\underline{b}_{ij_{\sigma}}^{(k)}\leq b_{ij_{\sigma}}^{(k)}\leq \overline{b}_{ij_{\sigma}}^{(k)},\,i, j=1,2,\ldots,n\}\) with \(\underline{A}^{\sigma}=\hbox{diag}(\underline{a}_{1_{\sigma}}, \underline{a}_{2_{\sigma}},\ldots,\underline{a}_{n_{\sigma}}),\ \overline{A}^{\sigma}=\hbox{diag}(\overline{a}_{1_{\sigma}}, \overline{a}_{2_{\sigma}},\ldots,\overline{a}_{n_{\sigma}}),\ \underline{B}_{k}^{\sigma}=[\underline{b}_{ij_{\sigma}}^{(k)}]_{n\times n},\,\overline{B}_{k}^{\sigma}=[\overline{b}_{ij_{\sigma}}^{(k)}]_{n\times n}\).

\(\sigma{:}R^{n}\rightarrow \Upgamma=\{1, 2, \ldots,N\}\) is the switching signal, which is a piecewise constant function dependent on the state x(t). For any \(i\in \{1, 2, \ldots,N\}\), \(A^{i}=A_{0}^{i}+E_{A}^{i}\Upsigma_{A}^{i}F_{A}^{i},\ B_{k}^{i}=B_{k0}^{i}+E_{k}^{i}\Upsigma_{k}^{i}F_{k}^{i}\), and \(\Upsigma_{A}^i\in \Upsigma,\,\Upsigma_{k}^i\in \Upsigma,\,k=1,2\). This means that the matrices \((A^{\sigma},B_{1}^{\sigma},B_{2}^{\sigma})\) are allowed to take values, at an arbitrary time, in the finite set \(\{(A^{1},B_{1}^{1},B_{2}^{1}), (A^{2},B_{1}^{2},B_{2}^{2}), \ldots, (A^{N},B_{1}^{N},B_{2}^{N})\}\).

By (4), the system (5) can be written as

where \(E^{\sigma}=[E_{A}^{\sigma},E_{1}^{\sigma},E_{2}^{\sigma}]\) and \(\Updelta^{\sigma}(t)\) satisfies the following quadratic inequality:

To derive the main results in the next section, the following definitions and lemmas are introduced.

Definition 2.1

The switched interval neural network model (5) is said to be globally robustly asymptotically stable if there exists a switching function \(\sigma(\cdot)\) such that the neural network model (5) is globally asymptotically stable for any \(A^{\sigma}\in{A_{l_{\sigma}}},\,B_{k}^{\sigma}\in{B_{l_{\sigma}}^{(k)}},\,k=1,2\).

Definition 2.2

The system of matrices \(\{G_{i}\},\ i=1,2,\ldots,N\), is said to be strictly complete if for every \(x\in R^{n} \backslash \{0\}\) there is \(i\in\{1,2,\ldots,N\}\) such that \(x^{T}G_{i}x<0\).
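
Definition 2.2 can be probed numerically. The sketch below evaluates the quadratic forms and returns the smallest index whose form is negative, which is one common way to turn a strictly complete family into a state-dependent switching signal; whether it coincides with the paper's regions is an assumption, since their display is not reproduced here. The matrices `G1`, `G2` and all function names are hypothetical, and the sampled test in two dimensions is a plausibility check, not a proof.

```python
import math

def quad_form(g, x):
    """Return x^T G x for a square matrix g (list of rows) and a vector x."""
    n = len(x)
    return sum(x[i] * g[i][j] * x[j] for i in range(n) for j in range(n))

def first_negative_index(gs, x):
    """Return the smallest 1-based index i with x^T G_i x < 0, or None if there is none."""
    for i, g in enumerate(gs, start=1):
        if quad_form(g, x) < 0:
            return i
    return None

def looks_strictly_complete_2d(gs, samples=360):
    """Sampled (not exhaustive) check of Definition 2.2 on unit-circle directions in R^2."""
    for k in range(samples):
        angle = 2.0 * math.pi * k / samples
        x = [math.cos(angle), math.sin(angle)]
        if first_negative_index(gs, x) is None:
            return False
    return True

# Hypothetical pair: neither matrix is negative definite on its own.
G1 = [[-2.0, 0.0], [0.0, 1.0]]
G2 = [[1.0, 0.0], [0.0, -2.0]]

complete = looks_strictly_complete_2d([G1, G2])
mode_on_x_axis = first_negative_index([G1, G2], [1.0, 0.0])
mode_on_y_axis = first_negative_index([G1, G2], [0.0, 1.0])
```

Because the quadratic forms are homogeneous of degree two, checking unit directions is enough in principle; the sampling only approximates that check.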

Let us define N regions

where \(\Upomega_{i}\) are open conic regions. Obviously, the system \(\{G_{i}\}\) is strictly complete if and only if these open conic regions overlap and together cover \(R^{n}\backslash\{0\}\), that is

Proposition 2.1

(Uhlig 1979) The system \(\{G_{i}\},\,i=1,\,2, \ldots,\,N\), is strictly complete if there exist \(\lambda_{i} \geq 0,\,i=1,\,2,\ldots,\,N,\,\sum\limits_{i=1}^{N}\lambda_{i}=1\), such that

Lemma 2.1

(Han and Yue 2007) Given any real matrix \(M=M^{T}>0\), for any t > 0, function τ(t) satisfies \(\tau_{n}\leq\tau(t)\leq\tau_{N}\), and \(\dot{x}(t){:}[-\tau_{N},-\tau_{n}]\longrightarrow R^{n}\), the following integration is well defined:

Lemma 2.2

(Zhang et al. 2009) For any constant matrices \(\psi_{1}\), \(\psi_{2}\) and \(\Upomega\) of appropriate dimensions, if the function τ(t) satisfies \(\tau_{n}\leq\tau(t)\leq\tau_{N}\), then

holds, if and only if

In the following section, we use the generalized DCP method and partition the delay interval into m subintervals of equal length. Let \(\tau_{j}\) be some scalars satisfying

Obviously, \([\tau_{n},\tau_{N}]=\bigcup\limits_{j=1}^{m}[\tau_{j-1},\tau_{j}]\). For convenience, we denote the length of each subinterval by \(\delta=\tau_{j}-\tau_{j-1}\). Therefore, for any t > 0, there exists an integer k such that \(\tau(t)\in[\tau_{k-1},\tau_{k}]\).

Remark 2

In this paper, we consider the case m = 3, in which the delay interval is decomposed into three subintervals: \([\tau_{n},\tau_{1}]\), \([\tau_{1},\tau_{2}]\), and \([\tau_{2},\tau_{N}]\). Let \(\mathcal{S}_{1}=\{t|t>0,\,\tau(t)\in[\tau_{n},\tau_{1}]\},\ \mathcal{S}_{2}=\{t|t>0,\,\tau(t)\in(\tau_{1},\tau_{2}]\},\ \mathcal{S}_{3}=\{t|t>0,\,\tau(t)\in(\tau_{2},\tau_{N}]\}\). In the proof of our main results, a piecewise analysis method (Zhang et al. 2009) is applied to check the variation of the derivative of the Lyapunov functional on \(\mathcal{S}_{1}\), \(\mathcal{S}_{2}\) and \(\mathcal{S}_{3}\), respectively.
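
The equal-length partition and the case selection of Remark 2 can be sketched as follows. The function names and the numerical bounds are hypothetical; the half-open convention follows the sets \(\mathcal{S}_{1}\), \(\mathcal{S}_{2}\), \(\mathcal{S}_{3}\) above, so a delay equal to an interior point \(\tau_{k}\) is assigned to the lower subinterval.

```python
def partition_points(tau_n, tau_N, m):
    """Return [tau_0, ..., tau_m] splitting [tau_n, tau_N] into m equal subintervals."""
    delta = (tau_N - tau_n) / m
    points = [tau_n + j * delta for j in range(m)]
    points.append(tau_N)  # pin the last point to avoid round-off drift
    return points

def subinterval_index(tau, points):
    """Return k with tau in [tau_0, tau_1] (k = 1) or in (tau_{k-1}, tau_k] (k > 1)."""
    if tau < points[0] or tau > points[-1]:
        raise ValueError("delay outside [tau_n, tau_N]")
    for k in range(1, len(points)):
        if tau <= points[k]:
            return k
    raise ValueError("delay is not a comparable number")

# Hypothetical bounds tau_n = 1, tau_N = 4 with m = 3, so delta = 1.
points = partition_points(1.0, 4.0, 3)
cases = [subinterval_index(tau, points) for tau in (1.0, 2.0, 2.5, 3.0, 4.0)]
```

For a given time t, the returned index selects which of the three cases of the piecewise analysis applies to τ(t).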

Main results

In this section, the global robust asymptotic stability of the proposed model (5) is discussed. By the delay-fractioning approach, designing an effective switching rule and constructing a suitable Lyapunov functional, a new robust delay-dependent criterion for the global asymptotic stability of the switched network system (5) is derived in terms of LMIs.

Theorem 3.1

Under the assumptions \((\mathcal{H}_1)\) and \((\mathcal{H}_2)\), if there exist matrices \(P>0\), \(T_{1}>0\), \(T_{2}>0\), \(Q_{j}>0\), \(R_{j}>0\) (j = 1, 2, 3, 4), diagonal matrices \(\gamma_{k}=diag\{\gamma_{k,1},\,\gamma_{k,2}, \ldots,\,\gamma_{k,n}\}>0\,(k=1,\,2,\,3)\), and matrices \(N_{l},\,M_{l},\,Z_{l},\,S_{l}(l=1,\,2,\,\ldots,\,9)\) with appropriate dimensions such that for all m and n, the following conditions hold:

(i) \(\exists\,\xi^{i}\geq 0, \quad i=1,\,2,\,\ldots, N, \quad\sum_{i=1}^{N}\xi^{i}=1: \sum_{i=1}^{N}\xi^{i}G_{i}(A_{0}^{i},Q_{1},Q_{2},Q_{3})<0\).

(ii)

$$ \left[\begin{array}{cc} \Uppi^{i}+\Uptheta_{m}^{i} &\ast \\ \Upupsilon_{mn}^{i} & -R_{m}^{i} \end{array} \right]<0,\quad m=1,\,2,\,3,\quad n=1,\,2 \qquad (8) $$

where

$$ \Uppi^{i}=\left[\begin{array}{ccccccccc} \Uppi_{11}^{i} & \ast & \ast & \ast & \ast & \ast & \ast & \ast & \ast\\ R_{4} & -Q_{1}-R_{4} & \ast & \ast & \ast & \ast & \ast & \ast & \ast\\ 0 & 0 & -Q_{2} & \ast & \ast & \ast & \ast & \ast & \ast \\ 0 & 0 & 0 & -Q_{3} & \ast & \ast & \ast & \ast & \ast \\ 0 & 0 & 0 & 0 & -Q_{4} & \ast & \ast & \ast & \ast \\ 0 & 0 & 0 & 0 & 0 & \Uppi_{66}^{i} & \ast & \ast & \ast \\ \Uppi_{71}^{i} & 0 & 0 & 0 & 0 & 0 & \Uppi_{77}^{i} & \ast & \ast \\ \Uppi_{81}^{i}& 0 & 0 & 0 & 0 & \gamma_{3}L_{2} & \Uppi_{87}^{i} & \Uppi_{88}^{i} & \ast \\ (E^{i})^{T}P-(E^{i})^{T}\phi A_{0}^{i} & 0 & 0 & 0 & 0 & 0 &\Uppi_{97}^{i} &\Uppi_{98}^{i} &\Uppi_{99}^{i} \end{array} \right]<0, $$

where

$$ \begin{aligned} \Uppi_{11}^{i}&=T_{1}+(A_{0}^{i})^{T}\phi A_{0}^{i}+Q_{4}-R_{4}-\gamma_{2}L_{1}-L_{1} \gamma_{2}+(F_{A}^{i})^{T}F_{A}^{i} \\ \Uppi_{66}^{i}&=-(1-\mu)T_{1}-\gamma_{3}L_{1}-L_{1}\gamma_{3} \\ \Uppi_{71}^{i}&=L_{2}\gamma_{2}+(B_{10}^{i})^{T} P-\gamma_{1}A_{0}^{i}-(B_{10}^{i})^{T}\phi A_{0}^{i}+(F_{A}^{i})^{T}F_{1}^{i} \\ \Uppi_{77}^{i}&=\gamma_{1}B_{10}^{i}+(B_{10}^{i})^{T}\gamma_{1} +T_{2}-\gamma_{2}-\gamma_{2}^{T}+(B_{10}^{i})^{T}\phi B_{10}^{i}+(F_{1}^{i})^{T}F_{1}^{i} \\ \Uppi_{81}^{i}&=(B_{20}^{i})^{T}P-(B_{20}^{i})^{T}\phi A_{0}^{i}+(F_{A}^{i})^{T}F_{2}^{i} \\ \Uppi_{87}^{i}&=(B_{20}^{i})^{T}\gamma_{1}+(B_{20}^{i})^{T}\phi B_{10}^{i}+(F_{1}^{i})^{T}F_{2}^{i} \\ \Uppi_{88}^{i}&=-(1-\mu)T_{2}-\gamma_{3}-\gamma_{3}^{T}+(B_{20}^{i})^{T}\phi B_{20}^{i}+(F_{2}^{i})^{T}F_{2}^{i} \\ \Uppi_{97}^{i}&=(E^{i})^{T}\gamma_{1}+(E^{i})^{T}\phi^{T}(B_{10}^{i})^{T} \\ \Uppi_{98}^{i}&=(E^{i})^{T}\phi^{T}(B_{20}^{i})^{T} \\ \Uppi_{99}^{i}&=(E^{i})^{T}\phi E^{i}-I \\ \end{aligned} $$$$ \Uptheta_{1}^{i}=\left[\begin{array}{ccccccccc} 0 &\ast & \ast & \ast & \ast & \ast & \ast & \ast & \ast\\ \delta N_{1}^{T} & \delta N_{2}^{T}+\delta N_{2}^{T} & \ast & \ast & \ast & \ast & \ast & \ast & \ast\\ -\delta M_{1}^{T} & \delta N_{3}^{T}-\delta M_{2}^{T} & \Uptheta_{1_{33}}^{i} & \ast & \ast & \ast & \ast & \ast & \ast \\ 0 & \delta N_{4}^{T} & R_{2}-\delta M_{4}^{T} & -R_{2}-R_{3} & \ast & \ast & \ast & \ast & \ast\\ 0 & \delta N_{5}^{T} & -\delta M_{5}^{T} & R_{3} & -R_{3} & \ast & \ast & \ast & \ast \\ \delta M_{1}^{T}-\delta N_{1}^{T} & \Uptheta_{1_{62}}^{i} & \Uptheta_{1_{63}}^{i} & -\delta N_{4}^{T}+\delta M_{4}^{T} & \Uptheta_{1_{65}}^{i} & \Uptheta_{1_{66}}^{i}& \ast & \ast & \ast \\ 0 & \delta N_{7}^{T} & -\delta M_{7}^{T} & 0 & 0 & -\delta N_{7}^{T}+\delta M_{7}^{T} & 0 & \ast & \ast \\ 0 & \delta N_{8}^{T} & -\delta M_{8}^{T} & 0 & 0 & -\delta N_{8}^{T}+\delta M_{8}^{T} & 0 & 0 & \ast \\ 0 & \delta N_{9}^{T} & -\delta M_{9}^{T} & 0 & 0 & -\delta N_{9}^{T}+\delta 
M_{9}^{T} & 0 & 0 & 0\\ \end{array} \right]<0, \\ $$$$ \begin{aligned} \Uptheta_{1_{33}}^{i}&=-R_{2}-\delta M_{3}^{T}-\delta M_{3} \\ \Uptheta_{1_{62}}^{i}&=\delta N_{6}^{T}-\delta N_{2}^{T}+\delta M_{2}^{T} \\ \Uptheta_{1_{63}}^{i}&=-\delta N_{3}^{T}+\delta M_{3}^{T}-\delta M_{6}^{T} \\ \Uptheta_{1_{65}}^{i}&=-\delta N_{5}^{T}+\delta M_{5}^{T} \\ \end{aligned} $$$$ \Uptheta_{2}^{i}=\left[\begin{array}{ccccccccc} 0 &\ast & \ast & \ast & \ast & \ast & \ast & \ast & \ast\\ 0 & -R_{1}& \ast & \ast & \ast & \ast & \ast & \ast & \ast\\ \delta Z_{1}^{T} & R_{1}+\delta Z_{2}^{T} & \Uptheta_{2_{33}}^{i} & \ast & \ast & \ast & \ast & \ast & \ast\\ -\delta S_{1}^{T} & -\delta S_{2}^{T} & \delta Z_{4}^{T}-\delta S_{3}^{T} & \Uptheta_{2_{44}}^{i} & \ast & \ast & \ast & \ast & \ast \\ 0 & 0 & \delta Z_{5}^{T} & R_{3}-\delta S_{5}^{T} & -R_{3} & \ast & \ast & \ast & \ast\\ -\delta Z_{1}^{T}+\delta S_{1}^{T} & -\delta Z_{2}^{T}+\delta S_{2}^{T} & \Uptheta_{2_{63}}^{i} & \Uptheta_{2_{64}}^{i} & \Uptheta_{2_{65}}^{i} & \Uptheta_{2_{66}}^{i} & \ast & \ast & \ast \\ 0 & 0 & \delta Z_{7}^{T} & -\delta S_{7}^{T} & 0 & -\delta Z_{7}^{T}+\delta S_{7}^{T} & 0 & \ast & \ast \\ 0 & 0 & \delta Z_{8}^{T} & -\delta S_{8}^{T} & 0 & -\delta Z_{8}^{T}+\delta S_{8}^{T} & 0 & 0 & \ast\\ 0 & 0 & \delta Z_{9}^{T} & -\delta S_{9}^{T} & 0 & -\delta Z_{9}^{T}+\delta S_{9}^{T} & 0 & 0 & 0 \end{array} \right]<0, \\ $$$$ \begin{aligned} \Uptheta_{2_{33}}^{i}&=-R_{1}+\delta Z_{3}+\delta Z_{3}^{T} \\ \Uptheta_{2_{44}}^{i}&=-R_{3}-\delta S_{4}^{T}-\delta S_{4} \\ \Uptheta_{2_{63}}^{i}&=\delta Z_{6}^{T}-\delta Z_{3}^{T}+\delta S_{3}^{T} \\ \Uptheta_{2_{64}}^{i}&=-\delta Z_{4}^{T}+\delta S_{4}^{T}-\delta S_{6}^{T} \\ \Uptheta_{2_{65}}^{i}&=-\delta Z_{5}+\delta S_{5} \\ \Uptheta_{2_{66}}^{i}&=-\delta Z_{6}-\delta Z_{6}^{T}+\delta S_{6}+\delta S_{6}^{T} \\ \end{aligned} $$$$ \Uptheta_{3}^{i}=\left[\begin{array}{ccccccccc} 0 &\ast & \ast & \ast & \ast & \ast & \ast & \ast & \ast\\ 0 & -R_{1}& \ast & \ast & \ast & 
\ast & \ast & \ast & \ast\\ 0 & R_{1} & -R_{1}-R_{2} & \ast & \ast & \ast & \ast & \ast & \ast\\ \delta X_{1}^{T} & \delta X_{2}^{T} & \delta X_{3}^{T}+R_{2} & \Uptheta_{3_{44}}^{i} & \ast & \ast & \ast & \ast & \ast \\ -\delta Y_{1}^{T} & -\delta Y_{2}^{T} & -\delta Y_{3}^{T} & \delta X_{5}^{T}-\delta Y_{4}^{T} & -\delta Y_{5}^{T}-\delta Y_{5} & \ast & \ast & \ast & \ast\\ -\delta X_{1}^{T}+\delta Y_{1}^{T} & -\delta X_{2}^{T}+\delta Y_{2}^{T} & \Uptheta_{3_{63}}^{i} & \Uptheta_{3_{64}}^{i} & \Uptheta_{3_{65}}^{i} &\Uptheta_{3_{66}}^{i} & \ast & \ast & \ast \\ 0 & 0 & 0 & \delta X_{7}^{T} & -\delta X_{7}^{T} & -\delta X_{7}^{T}+\delta Y_{7}^{T} & 0 & \ast & \ast \\ 0 & 0 & 0 & \delta X_{8}^{T} & -\delta Y_{8}^{T} & -\delta X_{8}^{T}+\delta Y_{8}^{T} & 0 & 0 & \ast\\ 0 & 0 & 0 & \delta X_{9}^{T} & -\delta Y_{9}^{T} & -\delta X_{9}^{T}+\delta Y_{9}^{T} & 0 & 0 & 0 \end{array} \right]<0, \\ $$
$$ \begin{aligned} \Uptheta_{3_{44}}^{i}&=-R_{2}+\delta X_{4}^{T}+\delta X_{4} \\ \Uptheta_{3_{63}}^{i}&=-\delta X_{3}^{T}+\delta Y_{3}^{T} \\ \Uptheta_{3_{64}}^{i}&=\delta X_{6}^{T}-\delta X_{4}^{T}+\delta Y_{4}^{T} \\ \Uptheta_{3_{65}}^{i}&=-\delta X_{5}^{T}+\delta Y_{5}^{T}-\delta Y_{6}^{T} \\ \Uptheta_{3_{66}}^{i}&=-\delta X_{6}-\delta X_{6}^{T}+\delta Y_{6}^{T}+\delta Y_{6} \\ \phi&=\delta^{2}R_{1}+\delta^{2}R_{2}+\delta^{2}R_{3}+\tau_{n}^{2}R_{4} \\ \Upupsilon_{11}^{i}&=\delta N,\ \Upupsilon_{12}^{i}=\delta M,\ \Upupsilon_{21}^{i}=\delta S,\ \Upupsilon_{22}^{i}=\delta Z,\ \Upupsilon_{31}^{i}=\delta X,\ \Upupsilon_{32}^{i}=\delta Y \end{aligned} $$

then the switched interval network (5) is globally robustly asymptotically stable, where the switching rule is chosen as \(\sigma(x(t))=i\) whenever \(x(t)\in \bar{\Upomega_{i}}\).

Proof

Consider the following Lyapunov–Krasovskii functional

where

Calculating the time derivative of \(V(t,x_{t})\) along the trajectory of (6), it follows that

By applying Lemma 2.1, we have

Based on (10)–(14), we can get

By the assumption \((\mathcal{H}_1)\), one has

It follows from (16) and (17) that

where \(e_{i}\) denotes the unit column vector with a “1” in its ith row and zeros elsewhere.

Substituting (7), (18) and (19) into (15) yields

where

In the following, we will consider three cases: that is \(t \in \mathcal{S}_{1},\,t \in \mathcal{S}_{2},\,t \in \mathcal{S}_{3}\).

Case 1: when \(t \in \mathcal{S}_{1}\), i.e. \(\tau(t)\in[\tau_{n},\tau_{1}]\).

By using Lemma 2.1, we have

Combining (20)–(22), applying the Newton–Leibniz formula and adding the free weighting matrices N and M, we obtain

It is easy to deduce the following inequality:

Substituting (24)–(25) into (23), it follows that

When m = n = 1, using the Schur complement, (8) is equivalent to

Similarly, when m = 1 and n = 2, (8) is equivalent to

From (27) and (28), by using Lemma 2.2, we can obtain

Therefore, we finally obtain from (26) and (29) that

Case 2: when \(t \in \mathcal{S}_{2}\), i.e. \(\tau(t)\in(\tau_{1},\tau_{2}]\).

Similar to Case 1, we have

Combining (20), (31), (32), applying the Newton–Leibniz formula and adding the free weighting matrices S and Z, we obtain

Then, according to a similar method in Case 1, we have

When m = 2 and n = 1, using the Schur complement, (8) is equivalent to

Similarly, when m = 2 and n = 2, (8) is equivalent to

From (35) and (36), by using Lemma 2.2, it yields

Therefore, we finally obtain from (34) and (37) that

Case 3: when \(t \in \mathcal{S}_{3}\), i.e. \(\tau(t)\in(\tau_{2},\tau_{N}]\).

From the above (21) and (31), we can get

Similar to the analysis in Case 1 and Case 2, it can be obtained that

From the above discussion, for all t > 0, if (8) holds with m = 1, 2, 3 and n = 1, 2, we obtain the following inequality:

By condition (i) and Proposition 2.1, the system of matrices \(G_{i}(A_{0}^{i},Q_{1},Q_{2},Q_{3})\) is strictly complete. Then we have

Hence, for any \(x(t)\in R ^{n}\), there exists \(i\in\{1,\,2,\,\ldots,\,N\}\) such that \(x(t)\in \bar{\Upomega_{i}}\). By choosing the switching rule as σ(x) = i whenever \(x(t)\in \bar{\Upomega_{i}}\), from (41), we can derive

According to Definition 2.1, the switched interval network (5) is globally robustly asymptotically stable. The proof is completed. □
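
The Schur complement step invoked in each case of the proof can be stated in general form. The symbols \(\Xi\), \(\Upsilon\) and \(R\) below are generic placeholders rather than the paper's specific blocks. For a symmetric \(\Xi\) and \(R>0\):

$$ \left[\begin{array}{cc} \Xi & \ast \\ \Upsilon & -R \end{array}\right]<0 \quad\Longleftrightarrow\quad \Xi+\Upsilon^{T}R^{-1}\Upsilon<0. $$

Read with \(\Xi=\Uppi^{i}+\Uptheta_{m}^{i}\), \(\Upsilon=\Upupsilon_{mn}^{i}\) and \(R=R_{m}^{i}\), condition (8) would amount to \(\Uppi^{i}+\Uptheta_{m}^{i}+(\Upupsilon_{mn}^{i})^{T}(R_{m}^{i})^{-1}\Upupsilon_{mn}^{i}<0\). This is a general-form restatement and is not claimed to reproduce the displays (27), (28), (35) or (36) exactly.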

Next, we consider the situation in which the time-varying delay τ(t) is a fast delay. By constructing a different Lyapunov–Krasovskii functional, it is easy to obtain the following corollary:

Corollary 3.1

Under the assumptions \((\mathcal{H}_1)\) and \((\mathcal{H}_3)\), if there exist matrices \(P>0\), \(Q_{j}>0\), \(R_{j}>0\) (j = 1, 2, 3, 4), diagonal matrices \(\gamma_{k}=diag\{\gamma_{k,1},\,\gamma_{k,2},\,\ldots,\,\gamma_{k,n}\}>0 (k=1,\,2,\,3)\), and matrices \(N_{l},\,M_{l},\,Z_{l},\,S_{l}\,(l=1,\,2,\,\ldots,\,9)\) with appropriate dimensions such that for all m and n, the following LMIs hold:

(i) \(\exists\,\xi^{i}\geq 0, i=1,\,2,\,\ldots,\,N, \sum_{i=1}^{N}\xi^{i}>0:\quad \sum_{i=1}^{N}\xi^{i}G_{i}(A_{0}^{i},Q_{1},Q_{2},Q_{3})<0\).

(ii)

$$ \left[\begin{array}{cc} \Uppi^{i}+\Uptheta_{m}^{i} &\ast \\ \Upupsilon_{mn}^{i} & -R_{m}^{i} \end{array} \right]<0,\quad \,m=1,\,2,\,3,\,n=1,\,2 \qquad (44) $$

where

$$ \Uppi^{i}=\left[\begin{array}{ccccccccc} \Uppi_{11}^{i} &\ast & \ast & \ast & \ast & \ast & \ast & \ast & \ast\\ R_{4} & -Q_{1}-R_{4} & \ast & \ast & \ast & \ast & \ast & \ast & \ast\\ 0 & 0 & -Q_{2} & \ast & \ast & \ast & \ast & \ast & \ast \\ 0 & 0 & 0 & -Q_{3} & \ast & \ast & \ast & \ast & \ast \\ 0 & 0 & 0 & 0 & -Q_{4} & \ast & \ast & \ast & \ast \\ 0 & 0 & 0 & 0 & 0 & \Uppi_{66}^{i} & \ast & \ast & \ast \\ \Uppi_{71}^{i} & 0 & 0 & 0 & 0 & 0 & \Uppi_{77}^{i} & \ast & \ast \\ \Uppi_{81}^{i}& 0 & 0 & 0 & 0 & \gamma_{3}L_{2} & \Uppi_{87}^{i} & \Uppi_{88}^{i} & \ast \\ (E^{i})^{T}P-(E^{i})^{T}\phi A_{0}^{i} & 0 & 0 & 0 & 0 & 0 &\Uppi_{97}^{i} &\Uppi_{98}^{i} &\Uppi_{99}^{i} \end{array} \right]<0, $$

where

$$ \begin{aligned} \Uppi_{11}^{i}&=(A_{0}^{i})^{T}\phi A_{0}^{i}+Q_{4}-R_{4}-\gamma_{2}L_{1}-L_{1}\gamma_{2}+(F_{A}^{i})^{T}F_{A}^{i} \\ \Uppi_{66}^{i}&=-\gamma_{3}L_{1}-L_{1}\gamma_{3} \\ \Uppi_{71}^{i}&=L_{2}\gamma_{2}+(B_{10}^{i})^{T}P -\gamma_{1}A_{0}^{i}-(B_{10}^{i})^{T}\phi A_{0}^{i}+(F_{A}^{i})^{T}F_{1}^{i} \\ \Uppi_{77}^{i}&=\gamma_{1}B_{10}^{i}+(B_{10}^{i})^{T}\gamma_{1} -\gamma_{2}-\gamma_{2}^{T}+(B_{10}^{i})^{T}\phi B_{10}^{i}+(F_{1}^{i})^{T}F_{1}^{i} \\ \Uppi_{81}^{i}&=(B_{20}^{i})^{T}P-(B_{20}^{i})^{T}\phi A_{0}^{i}+(F_{A}^{i})^{T}F_{2}^{i} \\ \Uppi_{87}^{i}&=(B_{20}^{i})^{T}\gamma_{1}+(B_{20}^{i})^{T}\phi B_{10}^{i}+(F_{1}^{i})^{T}F_{2}^{i} \\ \Uppi_{88}^{i}&=-\gamma_{3}-\gamma_{3}^{T}+(B_{20}^{i})^{T}\phi B_{20}^{i}+(F_{2}^{i})^{T}F_{2}^{i} \\ \Uppi_{97}^{i}&=(E^{i})^{T}\gamma_{1}+(E^{i})^{T}\phi^{T}(B_{10}^{i})^{T} \\ \Uppi_{98}^{i}&=(E^{i})^{T}\phi^{T}(B_{20}^{i})^{T} \\ \Uppi_{99}^{i}&=(E^{i})^{T}\phi E^{i}-I \\ \end{aligned} $$$$ \Uptheta_{1}^{i}=\left[\begin{array}{ccccccccc} 0 &\ast & \ast & \ast & \ast & \ast & \ast & \ast & \ast\\ \delta N_{1}^{T} & \delta N_{2}^{T}+\delta N_{2}^{T} & \ast & \ast & \ast & \ast & \ast & \ast & \ast\\ -\delta M_{1}^{T} & \delta N_{3}^{T}-\delta M_{2}^{T} & \Uptheta_{1_{33}}^{i} & \ast & \ast & \ast & \ast & \ast & \ast \\ 0 & \delta N_{4}^{T} & R_{2}-\delta M_{4}^{T} & -R_{2}-R_{3} & \ast & \ast & \ast & \ast & \ast\\ 0 & \delta N_{5}^{T} & -\delta M_{5}^{T} & R_{3} & -R_{3} & \ast & \ast & \ast & \ast \\ \delta M_{1}^{T}-\delta N_{1}^{T} & \Uptheta_{1_{62}}^{i} & \Uptheta_{1_{63}}^{i} & -\delta N_{4}^{T}+\delta M_{4}^{T} & \Uptheta_{1_{65}}^{i} & \Uptheta_{1_{66}}^{i}& \ast & \ast & \ast \\ 0 & \delta N_{7}^{T} & -\delta M_{7}^{T} & 0 & 0 & -\delta N_{7}^{T}+\delta M_{7}^{T} & 0 & \ast & \ast \\ 0 & \delta N_{8}^{T} & -\delta M_{8}^{T} & 0 & 0 & -\delta N_{8}^{T}+\delta M_{8}^{T} & 0 & 0 & \ast \\ 0 & \delta N_{9}^{T} & -\delta M_{9}^{T} & 0 & 0 & -\delta N_{9}^{T}+\delta M_{9}^{T} & 0 & 0 & 0\\ \end{array} 
\right]<0, \\ $$$$ \begin{aligned} \Uptheta_{1_{33}}^{i}&=-R_{2}-\delta M_{3}^{T}-\delta M_{3} \\ \Uptheta_{1_{62}}^{i}&=\delta N_{6}^{T}-\delta N_{2}^{T}+\delta M_{2}^{T} \\ \Uptheta_{1_{63}}^{i}&=-\delta N_{3}^{T}+\delta M_{3}^{T}-\delta M_{6}^{T} \\ \Uptheta_{1_{65}}^{i}&=-\delta N_{5}^{T}+\delta M_{5}^{T} \\ \end{aligned} $$$$ \Uptheta_{2}^{i}=\left[\begin{array}{ccccccccc} 0 & \ast & \ast & \ast & \ast & \ast & \ast & \ast & \ast\\ 0 & -R_{1}& \ast & \ast & \ast & \ast & \ast & \ast & \ast\\ \delta Z_{1}^{T} & R_{1}+\delta Z_{2}^{T} & \Uptheta_{2_{33}}^{i} & \ast & \ast & \ast & \ast & \ast & \ast\\ -\delta S_{1}^{T} & -\delta S_{2}^{T} & \delta Z_{4}^{T}-\delta S_{3}^{T} & \Uptheta_{2_{44}}^{i} & \ast & \ast & \ast & \ast & \ast \\ 0 & 0 & \delta Z_{5}^{T} & R_{3}-\delta S_{5}^{T} & -R_{3} & \ast & \ast & \ast & \ast\\ -\delta Z_{1}^{T}+\delta S_{1}^{T} & -\delta Z_{2}^{T}+\delta S_{2}^{T} & \Uptheta_{2_{63}}^{i} & \Uptheta_{2_{64}}^{i} & \Uptheta_{2_{65}}^{i} & \Uptheta_{2_{66}}^{i} & \ast & \ast & \ast \\ 0 & 0 & \delta Z_{7}^{T} & -\delta S_{7}^{T} & 0 & -\delta Z_{7}^{T}+\delta S_{7}^{T} & 0 & \ast & \ast \\ 0 & 0 & \delta Z_{8}^{T} & -\delta S_{8}^{T} & 0 & -\delta Z_{8}^{T}+\delta S_{8}^{T} & 0 & 0 & \ast\\ 0 & 0 & \delta Z_{9}^{T} & -\delta S_{9}^{T} & 0 & -\delta Z_{9}^{T}+\delta S_{9}^{T} & 0 & 0 & 0 \end{array} \right]<0, \\ $$$$ \begin{aligned} \Uptheta_{2_{33}}^{i}&=-R_{1}+\delta Z_{3}+\delta Z_{3}^{T} \\ \Uptheta_{2_{44}}^{i}&=-R_{3}-\delta S_{4}^{T}-\delta S_{4} \\ \Uptheta_{2_{63}}^{i}&=\delta Z_{6}^{T}-\delta Z_{3}^{T}+\delta S_{3}^{T} \\ \Uptheta_{2_{64}}^{i}&=-\delta Z_{4}^{T}+\delta S_{4}^{T}-\delta S_{6}^{T} \\ \Uptheta_{2_{65}}^{i}&=-\delta Z_{5}+\delta S_{5} \\ \Uptheta_{2_{66}}^{i}&=-\delta Z_{6}-\delta Z_{6}^{T}+\delta S_{6}+\delta S_{6}^{T} \\ \end{aligned} $$$$ \Uptheta_{3}^{i}=\left[\begin{array}{ccccccccc} 0 &\ast & \ast & \ast & \ast & \ast & \ast & \ast & \ast\\ 0 & -R_{1}& \ast & \ast & \ast & \ast & \ast & \ast & \ast\\ 0 & 
R_{1} & -R_{1}-R_{2} & \ast & \ast & \ast & \ast & \ast & \ast & \ast\\ \delta X_{1}^{T} & \delta X_{2}^{T} & \delta X_{3}^{T}+R_{2} & \Uptheta_{3_{44}}^{i} & \ast & \ast & \ast & \ast & \ast \\ -\delta Y_{1}^{T} & -\delta Y_{2}^{T} & -\delta Y_{3}^{T} & \delta X_{5}^{T}-\delta Y_{4}^{T} & -\delta Y_{5}^{T}-\delta Y_{5} & \ast & \ast & \ast & \ast\\ -\delta X_{1}^{T}+\delta Y_{1}^{T} & -\delta X_{2}^{T}+\delta Y_{2}^{T} & \Uptheta_{3_{63}}^{i} & \Uptheta_{3_{64}}^{i} & \Uptheta_{3_{65}}^{i} &\Uptheta_{3_{66}}^{i} & \ast & \ast & \ast \\ 0 & 0 & 0 & \delta X_{7}^{T} & -\delta Y_{7}^{T} & -\delta X_{7}^{T}+\delta Y_{7}^{T} & 0 & \ast & \ast \\ 0 & 0 & 0 & \delta X_{8}^{T} & -\delta Y_{8}^{T} & -\delta X_{8}^{T}+\delta Y_{8}^{T} & 0 & 0 & \ast\\ 0 & 0 & 0 & \delta X_{9}^{T} & -\delta Y_{9}^{T} & -\delta X_{9}^{T}+\delta Y_{9}^{T} & 0 & 0 & 0 \end{array} \right]<0, \\ $$$$ \begin{aligned} \Uptheta_{3_{44}}^{i}&=-R_{2}+\delta X_{4}^{T}+\delta X_{4} \\ \Uptheta_{3_{63}}^{i}&=-\delta X_{3}^{T}+\delta Y_{3}^{T} \\ \Uptheta_{3_{64}}^{i}&=\delta X_{6}^{T}-\delta X_{4}^{T}+\delta Y_{4}^{T} \\ \Uptheta_{3_{65}}^{i}&=-\delta X_{5}^{T}+\delta Y_{5}^{T}-\delta Y_{6}^{T} \\ \Uptheta_{3_{66}}^{i}&=-\delta X_{6}-\delta X_{6}^{T}+\delta Y_{6}^{T}+\delta Y_{6} \\ \phi&=\delta^{2}R_{1}+\delta^{2}R_{2}+\delta^{2}R_{3}+\tau_{n}^{2}R_{4} \\ \Upupsilon_{11}^{i}&=\delta N,\quad \Upupsilon_{12}^{i}=\delta M,\quad \Upupsilon_{21}^{i}=\delta S,\quad \Upupsilon_{22}^{i}=\delta Z,\quad \Upupsilon_{31}^{i}=\delta X,\quad \Upupsilon_{32}^{i}=\delta Y \end{aligned} $$
then the switched interval network (5) is globally robustly asymptotically stable, and the switching rule is chosen as σ(x(t)) = i whenever \(x(t)\in \bar{\Upomega_{i}}\).

Proof

By choosing the following Lyapunov–Krasovskii functional:

where

the derivation of Corollary 3.1 is similar to that of Theorem 3.1.

Remark 3

In (Zhang et al. 2009), the authors investigate the global asymptotic stability of a class of recurrent neural networks with interval time-varying delays via a delay-decomposing approach: the variation interval of the time delay is divided into two subintervals of equal length by introducing its central point, and several new stability criteria are derived in terms of LMIs. In this paper, by contrast, we divide the delay interval into three subintervals. It is well known that increasing the number of subintervals can reduce the conservatism of the corresponding criteria; hence, the proposed criteria extend and improve the results in the existing literature. Moreover, when N = 1 and robustness is not considered in (5), the model in our paper reduces to the nonlinear functional differential equation (1) in (Zhang et al. 2009), so the models studied in (Zhang et al. 2009; Shen and Cao 2011; Liu and Cao 2011; Phat and Trinh 2010) can be seen as special cases of model (5).
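The three-way decomposition described in this remark can be illustrated with a minimal sketch. It assumes the subintervals have equal length \(\delta=(\tau_{N}-\tau_{n})/3\), which is consistent with the values \(\tau_{n}=0.5\), \(\tau_{N}=2\), \(\delta=0.5\) used in the example of this paper; the function names are hypothetical and are not taken from the paper.

```python
def decompose_delay_interval(tau_n, tau_N, parts=3):
    """Split [tau_n, tau_N] into `parts` subintervals of equal length.

    Assumption: equal-length subintervals, i.e. delta = (tau_N - tau_n) / parts.
    Returns (delta, list of (lower, upper) bounds).
    """
    if tau_N <= tau_n:
        raise ValueError("tau_N must be larger than tau_n")
    delta = (tau_N - tau_n) / parts
    edges = [tau_n + k * delta for k in range(parts + 1)]
    return delta, list(zip(edges[:-1], edges[1:]))


def subinterval_index(tau, tau_n, tau_N, parts=3):
    """Return the 0-based index of the subinterval that contains the delay value tau."""
    if not tau_n <= tau <= tau_N:
        raise ValueError("delay value lies outside [tau_n, tau_N]")
    delta = (tau_N - tau_n) / parts
    k = int((tau - tau_n) // delta)
    # The right end point tau_N is assigned to the last subinterval.
    return min(k, parts - 1)


# Delay bounds taken from the example section of the paper.
delta, subintervals = decompose_delay_interval(0.5, 2.0)
print(delta)         # length of each subinterval
print(subintervals)  # bounds of the three subintervals
print(subinterval_index(1.2, 0.5, 2.0))
```

In the stability analysis, the Lyapunov functional is then examined separately for the case in which the delay lies in each of these subintervals.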

An illustrative example

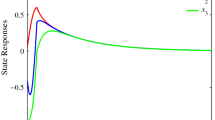

In this section, an illustrative example is given to check the validity and effectiveness of the stability criterion obtained in Theorem 3.1.

Example

Consider the following second-order switched interval network with interval time-varying delay described by

where \(\sigma(x(t)): R^{n}\rightarrow \{1,2\}\), and \(\check{l}_{1}=0.1, \,\check{l}_{2}=0.2, \,\hat{l}_{1}=0.3, \,\hat{l}_{2}=0.6, \,\tau_{n}=0.5, \,\tau_{N}=2, \,\mu=\delta=0.5\). The network parameters are defined as

Solving the LMI in condition (ii) with an appropriate LMI solver in Matlab, feasible positive definite matrices \(P, Q_{1}, Q_{2}, Q_{3}\) and diagonal matrices are obtained as

Let \(\xi_{1}=0.1\) and \(\xi_{2}=0.9\); it can be shown that

Moreover, the sum

The sets \(\Upomega_{1}\) and \(\Upomega_{2}\) are given as

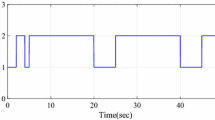

Then, the switching regions (Figs. 1, 2) are defined as

The switching rule σ(x(t)) can be given by

By Theorem 3.1, the switched interval network (45) is globally robustly asymptotically stable.
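The steps of this example (choosing weights \(\xi_{1}, \xi_{2}\), checking a weighted sum, and assigning σ(x(t)) by region) can be sketched as follows. This is only an illustration under stated assumptions: the regions are assumed to be defined by the sign of quadratic forms \(x^{T}J_{i}x\), the rule picks the smallest index whose form is negative, and the matrices `J1`, `J2` are hypothetical placeholders, not the matrices computed in the paper. A sufficient condition for such a system of matrices to be strictly complete is that some nonnegative combination \(\xi_{1}J_{1}+\xi_{2}J_{2}\) is negative definite.

```python
def quad_form(J, x):
    """Return x^T J x for a square matrix J (list of rows) and a vector x."""
    n = len(x)
    return sum(x[r] * J[r][c] * x[c] for r in range(n) for c in range(n))


def weighted_sum(mats, weights):
    """Return sum_i weights[i] * mats[i] for equally sized square matrices."""
    n = len(mats[0])
    return [[sum(w * J[r][c] for w, J in zip(weights, mats)) for c in range(n)]
            for r in range(n)]


def is_negative_definite_2x2(J):
    """A symmetric 2x2 matrix is negative definite iff J11 < 0 and det(J) > 0."""
    det = J[0][0] * J[1][1] - J[0][1] * J[1][0]
    return J[0][0] < 0 and det > 0


def switching_rule(x, mats):
    """Return the smallest 1-based index i with x^T J_i x < 0 (assumed rule)."""
    for i, J in enumerate(mats, start=1):
        if quad_form(J, x) < 0:
            return i
    raise ValueError("no region contains x; the matrices are not strictly complete")


# Hypothetical matrices (NOT the paper's data); neither is negative definite alone.
J1 = [[-5.0, 0.0], [0.0, 8.0]]
J2 = [[0.5, 0.0], [0.0, -1.0]]
xi = (0.1, 0.9)  # weights as in the example: xi_1 = 0.1, xi_2 = 0.9

combo = weighted_sum([J1, J2], xi)
print(is_negative_definite_2x2(combo))       # sufficient condition for strict completeness
print(switching_rule((1.0, 0.0), [J1, J2]))  # index of the active subsystem at this state
print(switching_rule((0.0, 1.0), [J1, J2]))
```

Taking the smallest admissible index makes the regions disjoint, which mirrors the usual construction in which each region excludes the points already covered by the preceding ones.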

Conclusion

In this paper, we have proposed a new scheme of switched interval networks with interval time-varying delay and general activation functions. Using the delay fractioning approach, the variation interval of the time delay is divided into three subintervals. By checking the variation of the Lyapunov functional when the time delay lies in each subinterval, a switching rule that depends on the state of the network is designed, and new delay-dependent robust stability criteria are derived in terms of LMIs. An illustrative example has also been provided to demonstrate the validity of the proposed robust asymptotic stability criteria for switched interval networks.

References

Balasubramaniam P, Vembarasan V, Rakkiyappan R (2011) Leakage delays in T–S fuzzy cellular neural networks. Neural Process Lett 33:111–136

Boyd S, Ghaoui LE, Feron E, Balakrishnan V (1994) Linear matrix inequalities in system and control theory. SIAM, Philadelphia

Brown TX (1989) Neural networks for switching. IEEE Commun Mag 27(11):72–81

Han QL, Yue D (2007) Absolute stability of Lur’e systems with time-varying delay. IET Control Theory Appl 1(3):854–859

He W, Cao J (2008) Robust stability of genetic regulatory networks with distributed delay. Cogn Neurodyn 2(4):355–361

Hu J, Wang Z (2011) A delay fractioning approach to robust sliding mode control for discrete-time stochastic systems with randomly occurring non-linearities. IMA J Math Control Inf 28:345–363

Huang H, Qu Y, Li H (2005) Robust stability analysis of switched Hopfield neural networks with time-varying delay under uncertainty. Phys Lett A 345(4–6):345–354

Li P, Cao J (2007) Global stability in switched recurrent neural networks with time-varying delay via nonlinear measure. Nonlinear Dyn 49(1–2):295–305

Lian J, Zhang K (2011) Exponential stability for switched Cohen–Grossberg neural networks with average dwell time. Nonlinear Dyn 63:331–343

Liberzon D (2003) Switching in systems and control. Springer, Berlin

Liu X, Cao J (2011) Local synchronization of one-to-one coupled neural networks with discontinuous activations. Cogn Neurodyn 5(1):13–20

Liu H, Chen G (2007) Delay-dependent stability for neural networks with time-varying delay. Chaos Solitons Fractals 33(1):171–177

Liu L, Han Z, Li W (2009) Global stability analysis of interval neural networks with discrete and distributed delays of neutral-type. Expert Syst Appl Part 2 36(3):7328–7331

Niamsup P, Phat VN (2010) A novel exponential stability condition of hybrid neural networks with time-varying delay. Vietnam J Math 38(3):341–351

Phat VN, Trinh H (2010) Exponential stabilization of neural networks with various activation functions and mixed time-varying delays. IEEE Trans Neural Netw 21(7):1180–1184

Ratchagit K, Phat VN (2011) Stability and stabilization of switched linear discrete-time systems with interval time-varying delay. Nonlinear Anal Hybrid Syst 5:605–612

Shen J, Cao J (2011) Finite-time synchronization of coupled neural networks via discontinuous controllers. Cogn Neurodyn 5(4):373–385

Thanha N, Phat V (2013) Decentralized stability for switched nonlinear large-scale systems with interval time-varying delays in interconnections. Nonlinear Anal Hybrid Syst 11:22–36

Uhlig F (1979) A recurring theorem about pairs of quadratic forms and extensions. Linear Algebra Appl 25:219–237

Wang Y, Wang Z, Liang J (2008) Delay fractioning approach to global synchronization of delayed complex networks with stochastic disturbances. Phys Lett A 372(39):6066–6073

Wang Y, Yang C, Zuo Z (2012) On exponential stability analysis for neural networks with time-varying delays and general activation functions. Commun Nonlinear Sci Numer Simulat 17:1447–1459

Xu H, Wu H, Li N (2012) Switched exponential state estimation and robust stability for interval neural networks with discrete and distributed time delays. Abstr Appl Anal 2012:103542. doi:10.1155/2012/103542

Ye H, Michel AN, Hou L (1998) Stability theory for hybrid dynamical systems. IEEE Trans Autom Control 43(4):461–474

Yue D (2004) Robust stabilization of uncertain systems with unknown input delay. Automatica 40:331–336

Zeng H, He Y, Wu M, Zhang C (2011) Complete delay-decomposing approach to asymptotic stability for neural networks with time-varying delays. IEEE Trans Neural Netw 22(5):806–811

Zhang W, Yu L (2009) Stability analysis for discrete-time switched time-delay systems. Automatica 45(10):2265–2271

Zhang Y, Yue D, Tian E (2009) New stability criteria of neural networks with interval time-varying delay: a piecewise delay method. Appl Math Comput 208:249–259

Zhang H, Liu Z, Huang GB, Wang Z (2010) Novel weighting-delay-based stability criteria for recurrent neural networks with time-varying delay. IEEE Trans Neural Netw 21(1):91–106

Acknowledgments

The work was funded by the National Natural Science Foundation of China under Grant 61272530, the Natural Science Foundation of Jiangsu Province of China under Grant BK2012741, the Specialized Research Fund for the Doctoral Program of Higher Education under Grants 20110092110017 and 20130092110017, the Fundamental Research Funds for the Central Universities, and the JSPS Innovation Program under Grant CXLX13_075.

Cite this article

Li, N., Cao, J. & Hayat, T. Delay-decomposing approach to robust stability for switched interval networks with state-dependent switching. Cogn Neurodyn 8, 313–326 (2014). https://doi.org/10.1007/s11571-014-9279-z

DOI: https://doi.org/10.1007/s11571-014-9279-z