Abstract

This paper establishes explicit criteria, in the form of inequalities, for all solutions of a class of second order nonlinear differential equations (with and without delay) to be bounded, ultimately bounded and globally asymptotically stable, using Lyapunov's second method. The results obtained are new and complement existing results in the literature. Some examples are given to illustrate the main results.

1 Introduction

It is well known that stability and boundedness properties of solutions are fundamental in the theory and application of differential equations. The study of qualitative properties of solutions to second order nonlinear differential equations has attracted the interest of many researchers. As a tool of investigation, Lyapunov's second method has been one of the most effective, and it still plays a central role in studying the qualitative behaviour of solutions of both linear and nonlinear differential equations.

Consider the second order nonlinear differential equations of the form

where a, f, g and p are continuous functions that depend (at most) only on the arguments displayed explicitly and \(\tau \in [0, h]\) (\(\tau >0\)). Here and elsewhere, all the solutions considered and all the functions which appear are supposed real. The dots indicate differentiation with respect to the independent variable t. The continuity of these functions is sufficient for the existence of solutions of Eqs. (1.1) and (1.2). Furthermore, it is assumed that the functions f, g and p satisfy a Lipschitz condition in their respective arguments, which guarantees the uniqueness of solutions of Eqs. (1.1) and (1.2). The derivative of g also exists and is continuous.
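For orientation, Eqs. (1.1) and (1.2) can be read as follows. This is an assumed form, inferred from the functions a, f, g and p named above, from the delayed term \(g(x(t-\tau ))\) appearing in Corollary 2.1, and from the term N(t) of Sect. 2; it is not a quotation of the displayed equations:

$$\begin{aligned} \ddot{x}+a(t)f(x,\dot{x})+g(x)&=p(t,x,\dot{x}), \\ \ddot{x}+a(t)f(x,\dot{x})+g(x(t-\tau ))&=p(t,x,\dot{x}). \end{aligned}$$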

Equations (1.1) and (1.2) are, respectively, ordinary and delay differential equations with nonlinear terms. Let us observe that when \(f(x,\dot{x}) = \dot{x},~~g(x)= x\) and \(\tau = 0\), Eqs. (1.1) and (1.2) reduce to

which has remained one of the oldest problems in differential equations for more than six decades. This equation appears in a number of physical models and is important in describing fluid mechanical and nonlinear elastic mechanical phenomena [2]. Notable contributions on the dynamical behaviour of this class of equations include, but are not limited to, Bihari [7], Hatvani [12], Karsai [13, 14], Napoles [17], Napoles and Repilado [18], and Pucci and Serrin [21, 22]. An immense body of literature has been devoted to stability, boundedness, convergence and periodic solutions of equations of the form (1.1); we mention in this regard the work of Tunc [25], Tunc and Ayhan [26], Tunc and Tunc [27], as well as the expositions in [5–24, 28] and the references cited therein. With the aid of Lyapunov’s second method, Burton [8], Napoles [17] and Napoles and Repilado [18] discussed the boundedness of solutions. Burton and Hatvani [9], Murakami [16] and Thurston and Wong [24] are further examples that employed the second method of Lyapunov to discuss (asymptotic) stability of solutions of Eq. (1.1). In Napoles [17], ultimate boundedness of solutions of Eq. (1.1) was considered for the autonomous case, while Ogundare and Okecha [20] discussed the boundedness, periodicity and stability of solutions of a certain class of Eq. (1.1). Recently, Ogundare and Afuwape [19] discussed the boundedness and stability properties of solutions of

via the Lyapunov second method. Also Ademola [1] as well as Alaba and Ogundare [3, 4] discussed boundedness and stability of solutions of non-autonomous equation of second order from where (1.4) was easily treated.

In [9], the authors considered (1.1) and (1.2) and developed a transformation which allowed the treatment of the two equations in a unified way using the Lyapunov second method.

The motivation for the current paper comes from the work of Burton and Hatvani [9] where the Lyapunov functions employed mainly were incomplete ones. In this work, a suitable complete Lyapunov function is used to discuss the stability and boundedness of (1.2). Conditions on the nonlinear terms that guarantee global asymptotic stability and boundedness of solutions of the Eq. (1.2) are obtained. Our results complement existing results on qualitative behaviour of solutions of second order nonlinear differential equations with and without delay.

The paper is organised as follows: basic assumptions are presented in Sect. 2 along with the main results. Section 3 is devoted to some preliminary results; in Sect. 4 the proofs of the main theorems are given, and examples illustrating the main results are given in Sect. 5.

2 Formulation of results

An associated system to the Eq. (1.2) of interest to us is

where \(N(t)=\int ^{0}_{-\tau }{g'(x(t+\theta ))y(t+\theta )}d\theta + p(t,x,y)\).
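The integral in N(t) arises from rewriting the delayed term by the fundamental theorem of calculus. As a sketch, assuming that the delay enters Eq. (1.2) through \(g(x(t-\tau ))\) (as Corollary 2.1 suggests) and writing \(y=\dot{x}\):

$$\begin{aligned} g(x(t))-g(x(t-\tau ))&=\int ^{t}_{t-\tau }\frac{d}{ds}g(x(s))\,ds=\int ^{0}_{-\tau }g'(x(t+\theta ))y(t+\theta )\,d\theta , \\ -g(x(t-\tau ))+p(t,x,y)&=-g(x)+N(t). \end{aligned}$$

Under the further assumption that the damping term is \(a(t)f(x,y)\), the delay equation then takes the form \(\dot{x}=y,~\dot{y}=-a(t)f(x,y)-g(x)+N(t)\), and setting \(\tau =0\) removes the integral so that \(N(t)=p(t,x,y)\).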

Let a(t) be continuous and non-decreasing with \( 0 < a_{0}\le a(t)\le a_{1}\) and \(a' \le \displaystyle \frac{1}{2}\); also let the functions f, g and p be continuous and satisfy the following conditions:

(i) $$\begin{aligned} \alpha _{0}\le \displaystyle \frac{f(x,y)-f(x,0)}{y}=\alpha \le \alpha _{1}, \quad y\ne 0; \end{aligned}$$

(ii) $$\begin{aligned} \beta _{0}\le \displaystyle \frac{f(x,y)-f(0,y)}{x}=\beta \le \beta _{1}, \quad x\ne 0; \end{aligned}$$

(iii) $$\begin{aligned} \gamma _{0}\le \displaystyle \frac{g(x)-g(0)}{x}=\gamma \le \gamma _{1}, \quad x\ne 0; \end{aligned}$$

(iv) $$\begin{aligned} f(x,0) = f(0,y) =g(0) = 0, \end{aligned}$$

where \(\alpha ,~\alpha _{0},~\alpha _{1},~\beta ,~\beta _{0},~\beta _{1},~\gamma ,~ \gamma _{0}~~\text{ and }~~\gamma _{1}\) are all positive constants belonging to a closed sub-interval of the Routh-Hurwitz interval \(I_{0} = [0, \kappa ], \kappa =\max \{\alpha , \beta , \gamma \}\).
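Conditions (i)–(iii) bound difference quotients of f and g, so for a concrete nonlinearity the constants can be estimated by sampling. The following is a minimal sketch, not part of the analysis: the helper `quotient_bounds`, the sampling grid, and the choice of test function (the g of Example 5.2) are illustrative assumptions, and a sampled range only suggests, rather than proves, the bounds.

```python
def quotient_bounds(q, points):
    """Return (min, max) of the quotient q over a list of argument tuples."""
    values = [q(*pt) for pt in points]
    return min(values), max(values)


def g(x):
    # Illustrative choice: the function g used in Example 5.2.
    return x + x / (1.0 + x * x)


def gamma(x):
    # Difference quotient from condition (iii); only defined for x != 0.
    return (g(x) - g(0.0)) / x


# Sample x in [-50, 50] with step 0.01, skipping x = 0.
xs = [(k / 100.0,) for k in range(-5000, 5001) if k != 0]
gamma_lo, gamma_hi = quotient_bounds(gamma, xs)
print(f"sampled range of gamma: [{gamma_lo:.4f}, {gamma_hi:.4f}]")
```

The same helper accepts two-argument quotients such as those in conditions (i) and (ii) when the sample points are pairs (x, y).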

Next we state our main results.

Theorem 2.1

Suppose that conditions (i)–(iv) are satisfied with \(p(t,x,y) \equiv 0\). Then the trivial solution of Eq. (1.2) is globally asymptotically stable.

Corollary 2.1

If \(g(x(t-\tau ))=g(x)\), then the trivial solution of the Eq. (1.1) is globally asymptotically stable.

Theorem 2.2

In addition to conditions (i)–(iv), suppose that

(v) \(|p(t,x,y)|\le M\)

for all \(t\ge 0\). Then there exists a constant \(\sigma \) \((0< \sigma < \infty )\) depending only on the constants \(\alpha ,~\beta \) and \(\gamma \) such that every solution of Eq. (1.2) satisfies

for all t \(\ge t_{0}\), where the constant \(A_{1}>0\), depends on \(\alpha ,~\beta \) and \(\gamma \) as well as on \(t_{0}, x(t_{0}), \dot{x}(t_{0});\) and the constant \(A_{2}>0\) depends on \(\alpha ,~\beta \) and \(\gamma \).

Corollary 2.2

If \(g(x(t-\tau ))=g(x)\), then the inequality (2.2) holds for Eq. (1.1).

Theorem 2.3

Suppose the conditions of Theorem 2.2 are satisfied with condition (v) replaced by

(vi) \(|p(t, x, y)|\le (|x|+|y|)\phi (t),\)

where \(\phi (t)\) is a non-negative and continuous function of t such that \(\int ^{t}_{0}{\phi (s)ds}\le M < \infty \) for some positive constant M. Then there exists a constant \(K_{0}\), which depends on \(M, K_{1}, K_{2}\) and \(t_{0}\), such that every solution x(t) of Eqs. (1.1) and (1.2) satisfies

for sufficiently large t.

Corollary 2.3

If \(g(x(t-\tau ))=g(x)\), then every solution of the Eq. (1.1) satisfies

for sufficiently large t.

Remark

Theorem 2.1 concerns the global asymptotic stability of the trivial solution, while Theorems 2.2 and 2.3 deal with the boundedness and ultimate boundedness of solutions, respectively.

Notations

Throughout this paper \(K, K_{0}, K_{1},\ldots , K_{12}\) denote finite positive constants whose magnitudes depend only on the functions f, g and p as well as the constants \(\alpha ,~\beta ,~\gamma \) and \(\delta \), but are independent of solutions of Eqs. (1.1) and (1.2). The unsubscripted K is not necessarily the same each time it occurs, but each \(K_{i}, i=1,2,\ldots \) retains its identity throughout. \(V, V_{1}\) and \(V_{2}\) denote Lyapunov functionals, and \(\dot{V}|_{(*)}=\frac{d}{dt}V|_{(*)}\) stands for the derivative of V with respect to t along the solution path of a system \((*)\) (say).

3 Preliminary results

We shall use as a tool to prove our main results a Lyapunov functional V(t; x, y) defined by

with

where \(\alpha , \beta \) and \(\delta \) are positive real numbers, a is a continuous non-decreasing function with \(a'\le 2\), and

The following lemmas are needed to prove the Theorems 2.1, 2.2 and 2.3.

Lemma 3.1

Subject to the assumptions of Theorem 2.1 there exist positive constants \(K_{i}= K_{i}(a~,\delta ), i = 1,2 \) such that

Proof

First, it is clear from the Eq. (3.1) that \(V(0; 0, 0)\equiv 0.\)

We can re-arrange \(V_{1}\) in Eq. (3.1) to have

from which the estimate

is obtained.

It follows from the above that there exists a constant \(K_1\) such that

where

On one hand, it is not difficult to establish that

since \(V_{2}\) is always positive, while on the other hand, on using the inequality \(|xy|\le \displaystyle \frac{1}{2}(x^{2}+y^{2})\) in Eq. (3.1),

Consequently, from the inequality (3.7),

where

Furthermore,

Finally, combining inequalities (3.6) and (3.9) gives

which is equivalent to

Lemma 3.1 is established. \(\square \)

Lemma 3.2

In addition to the assumptions of the Theorem 2.1, let condition (v) of the Theorem 2.2 be satisfied. Then there are positive constants \(K_{j}=K_{j}(a,~\alpha ,~\delta ,~\gamma ) (j=4,5)\) such that for any solution (x, y) of the system (2.1),

where V is as given in Eq. (3.1).

Proof

Differentiating the Eq. (3.1) along the solution path of the system (2.1), we have

where

and

Next is to obtain an upper bound on \(\dot{V_{1}}\). This shall be done using the hypotheses on the functions f and g. Thus we have

Further simplification yields

On choosing \(\beta = \frac{1}{2}(a^{2}(t)+2)\), Eq. (3.14) becomes

It is obvious that

From the inequality (3.15), it follows that

where \(K_{3} = \frac{\delta }{a^{2}_{1}}\times \max \left\{ a^{3}_{1}(2\gamma _{1}+a^{2}_{1}+2)+ a^{2}_{1} + 2 - 2a^{2}_{1}a'_{1}, 2[2a^{2}_{1}\alpha _{1} + a'_{1}]\right\} \)

and \(K_{4} = \frac{\delta }{a^{2}_{1}}\times \max \left\{ 2a_{1}^{3}, 4a_{1}\right\} \).

Similarly, on simplifying \(\dot{V_{2}}\), we have

Combining estimates (3.16) and (3.18) yields

where \(K_{5} = K_{3}+2\mu \).

For the case when \(p(t)\equiv 0\),

Hence the proof of Lemma 3.2. \(\square \)

4 Proof of main results

We shall now give the proofs of the main results.

Proof of Theorem 2.1

The proof of Theorem 2.1 follows from Lemmas 3.1 and 3.2, which together establish that the trivial solution of Eq. (1.2) is globally asymptotically stable, i.e., every solution \((x(t),\dot{x}(t))\) of the system (2.1) satisfies \(x^{2}(t)+\dot{x}^{2}(t)\longrightarrow 0 ~\text{ as }~ t\longrightarrow \infty \). \(\square \)

Proof of Theorem 2.2

Indeed, by using the inequality (3.19), it follows that

Again, it also follows from the inequality (3.8) that

Thus, the inequality (3.19) becomes

It is noted that \(K_{3}(x^{2}+y^{2})= K_{3}\cdot \frac{V}{K_{1}}\) and

where \(K_{6}=\frac{K_{5}}{K_{1}}\) and \(K_{7}=\frac{K_{4}}{K_{2}^\frac{1}{2}}.\)

Furthermore, from the above inequality we have

where \(K_{8}=\frac{1}{2}K_{6}.\)

Therefore

Choosing the constant \(K_{9}= \frac{K_{8}}{K_{7}}\) gives

Thus the inequality (4.2) becomes

where

When \(\left| p\right| \le K_{9}V^{\frac{1}{2}}\), then

and when \(\left| p\right| \ge K_{9}V^{\frac{1}{2}}\), we have

On substituting the inequality (4.3) into the inequality (4.2), we have

where

This implies that

Multiplying both sides of the inequality (4.4) by \(e^{\frac{1}{2}K_{8}t}\), gives

i.e.,

Integrating both sides of inequality (4.5) from \(t_{0}\) to t gives

Further simplifications give

or

On utilizing inequalities (3.8) and (3.11), we have

for all \(t \ge t_{0}.\)

Thus,

where \(A_{1}\) and \( A_{2}\) are constants depending on \(K_{1},K_{2}, \ldots , K_{10}\) and \((x^{2}(t_{0})+\dot{x}^{2}(t_{0}))\).

On substituting \(K_{8}=\sigma \) in the inequality (4.7), we have

This completes the proof. \(\square \)
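The passage from a differential inequality to an exponential bound used above follows the usual integrating-factor pattern. As a generic sketch, with hypothetical constants \(c>0\) and \(d\ge 0\) standing in for combinations of the \(K_{i}\) (their exact roles are an assumption here), suppose a non-negative function W satisfies \(\dot{W}\le -cW+d\) for \(t\ge t_{0}\). Then

$$\begin{aligned} \frac{d}{dt}\left( e^{ct}W(t)\right)&=e^{ct}\left( \dot{W}(t)+cW(t)\right) \le d\,e^{ct}, \\ W(t)&\le W(t_{0})e^{-c(t-t_{0})}+\frac{d}{c}\left( 1-e^{-c(t-t_{0})}\right) , \end{aligned}$$

so that \(W(t)\le \max \{W(t_{0}), d/c\}\) for all \(t\ge t_{0}\), and the contribution of the initial value decays exponentially.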

Proof of Theorem 2.3

From the definition of function V and the conditions of the Theorem 2.3, the conclusion of Lemma 3.1 can be obtained as

and since \(p\ne 0 \) we can revise the conclusion of Lemma 3.2, i.e.,

to obtain

by using condition (vi) as stated in Theorem 2.3. On applying the inequality \(|xy|\le \displaystyle \frac{1}{2}(x^{2}+y^{2})\) to inequality (4.9), we have

where \(K_{11}=2K_{4}.\)

From inequalities (4.8) and (4.10) we have,

Integrating inequality (4.11) from 0 to t gives

where \(K_{12}=\frac{K_{11}}{K_{1}}=\frac{2K_{4}}{K_{1}}\). Thus,

Finally, applying the Gronwall-Reid-Bellman theorem to the inequality (4.13), we have

Hence the proof of Theorem 2.3. \(\square \)
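For reference, the Gronwall-type inequality typically invoked at this step can be stated as follows; the symbols u, k, C and T are generic and are not the paper's notation. If u and k are continuous and non-negative on \([0,T]\) and \(C\ge 0\), then

$$\begin{aligned} u(t)\le C+\int ^{t}_{0}k(s)u(s)\,ds \quad \Longrightarrow \quad u(t)\le C\exp \left( \int ^{t}_{0}k(s)\,ds\right) ,\quad 0\le t\le T. \end{aligned}$$

With \(k=K\phi \) for some constant K (hypothetical here) and \(\int ^{t}_{0}\phi (s)ds\le M\), the right-hand side is at most \(Ce^{KM}\), a bound that does not depend on t.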

Remark

The proofs of Corollaries 2.1, 2.2 and 2.3 follow respectively from the proofs of Theorems 2.1, 2.2 and 2.3 with appropriate modifications.

5 Examples

Example 5.1

We shall consider a second order delay differential equation given as

and its equivalent system of first order delay differential equations

Comparing the system (2.1) with system (5.2) when \(p(t,x,y)=0,\) we note that

-

(i)

\(a(t):=\dfrac{\sin t}{2(1+t^{2})}.\) Furthermore,

$$\begin{aligned} -\frac{3}{10}< \frac{\sin t}{2(1+t^{2})}<\frac{3}{10},\quad \forall ~t\ge 0. \end{aligned}$$It then follows that

$$\begin{aligned} 0=a_0\le a(t)\le a_1=\frac{3}{10},\quad \forall ~t\ge 0, \end{aligned}$$and

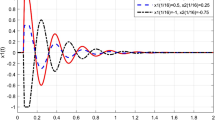

$$\begin{aligned} a'(t):=\frac{2(1+t^{2})\cos t-4t\sin t}{4(1+t^{2})^{2}}\le \frac{1}{2},\quad \forall ~t\ge 0. \end{aligned}$$The behaviour of a(t) and \(a'(t)\) are shown in Fig. 1.

(ii) \(f(x,y):=\frac{y^{2}\sin x}{1+x^{2}}\). Clearly \(f(x,0)=0.\) Let

$$\begin{aligned} F_{11}(x,y):=\frac{f(x,y)-f(x,0)}{y}=\frac{y\sin x}{1+x^{2}},\quad \forall x,y\ne 0. \end{aligned}$$

It follows that

$$\begin{aligned} 0=\alpha _{0}\le F_{11}(x,y)=\alpha \le \alpha _{1}=\frac{4}{4+\pi ^{2}},\quad \forall ~x,y\ne 0. \end{aligned}$$

(iii) Similarly

$$\begin{aligned} 0=\beta _{0}\le F_{12}(x,y)\!:=\!\frac{f(x,y)-f(0,y)}{x}\!=\!\beta \le \beta _{1}=\frac{8}{\pi (\pi +4)^{2}},\quad \forall ~y,x\ne 0. \end{aligned}$$

The behaviour of the functions \(F_{11}(x,y)\) and \(F_{12}(x,y)\) is shown in Fig. 2.

(iv) The function \(g(x)=2x+\frac{2x}{x^{2}+5},\) from which \(g(0)=0\). Let

$$\begin{aligned} G_{1}(x):=\frac{g(x)-g(0)}{x}=2+\frac{2}{x^{2}+5},\quad \forall ~x\ne 0. \end{aligned}$$

Then

$$\begin{aligned} 0\le \frac{2}{x^{2}+5}\le 1\quad \forall ~x. \end{aligned}$$

It follows that

$$\begin{aligned} 2=\gamma _{0}\le G_{1}(x)=\gamma \le \gamma _{1}=3\quad \forall ~x\ne 0, \end{aligned}$$

as depicted in Fig. 3.

(v) From (ii), (iii) and (iv) we have that

$$\begin{aligned} f(x,0)=f(0,y)=g(0)=0,\quad \forall ~x,y. \end{aligned}$$

Furthermore, since \(\alpha ,\beta \) and \(\gamma \) are non-negative constants, it follows that \(I_{0}=[0,2]\). We can see that hypotheses (i)–(v) of Theorem 2.1 hold. Hence, by Theorem 2.1, the trivial solution of Eq. (5.1) [or the system (5.2)] is globally asymptotically stable.
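The closed-form expressions quoted in item (i) of Example 5.1 can be checked numerically. The sketch below samples a(t) and the quoted \(a'(t)\) on a grid and compares the quoted derivative with a central finite difference; the grid range, the step sizes and the helper name `central_difference` are arbitrary illustrative choices, and a grid check is only a sanity test, not a proof.

```python
import math


def a(t):
    return math.sin(t) / (2.0 * (1.0 + t * t))


def a_prime(t):
    # Closed form of a'(t) as quoted in item (i) of Example 5.1.
    numerator = 2.0 * (1.0 + t * t) * math.cos(t) - 4.0 * t * math.sin(t)
    return numerator / (4.0 * (1.0 + t * t) ** 2)


def central_difference(func, t, h=1e-6):
    # Second-order finite-difference estimate of func'(t).
    return (func(t + h) - func(t - h)) / (2.0 * h)


# Uniform grid on [0, 50] with step 0.001.
ts = [k / 1000.0 for k in range(0, 50001)]
a_min = min(a(t) for t in ts)
a_max = max(a(t) for t in ts)
a_prime_max = max(a_prime(t) for t in ts)

# Largest gap between the quoted derivative and the finite-difference estimate,
# evaluated on every 100th grid point.
derivative_gap = max(abs(a_prime(t) - central_difference(a, t)) for t in ts[::100])

print(f"a(t) sampled range: [{a_min:.4f}, {a_max:.4f}]")
print(f"max of a'(t) on the grid: {a_prime_max:.4f}")
print(f"derivative gap: {derivative_gap:.2e}")
```

The printed minimum and maximum of a(t) can be compared directly with the constants \(a_{0}\) and \(a_{1}\) stated in item (i).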

Example 5.2

Consider the second order delay differential equation

where

Eq. (5.3) is equivalent to the system of first order delay differential equations

It is easy to see, from the system of Eqs. (2.1) and (5.4), that

(i) \(a(t):=1+\frac{t+2}{(1+e^{t})^{2}}\), from which we obtain

$$\begin{aligned} 0<1=a_{0}\le a(t)\le a_{1}=\frac{3}{2}, \end{aligned}$$

for all \(t\ge 0\), and

$$\begin{aligned} a'(t)=\frac{1-(2t+3)e^{t}}{(1+e^{t})^{3}}\le \frac{3}{10}<\frac{1}{2}, \end{aligned}$$

for all \(t\ge 0.\) The bounds on the functions a(t) and \(a'(t)\) are shown in Fig. 4.

(ii) \(f(x,y):=\sin (xy),\) with \(f(x,0)=0.\) Define

$$\begin{aligned} F_{21}(x,y):=\frac{f(x,y)-f(x,0)}{y}=\frac{\sin (xy)}{y},\quad \forall ~x,y\ne 0. \end{aligned}$$

It is not difficult to show that

$$\begin{aligned} 0=\alpha _{0}\le F_{21}(x,y)=\alpha \le \alpha _{1}=1, \end{aligned}$$

for all \(x,y\ne 0.\)

(iii) \(f(x,y):=\sin (xy),\) with \(f(0,y)=0\) for all y. Define

$$\begin{aligned} F_{22}(x,y):=\frac{f(x,y)-f(0,y)}{x}=\frac{\sin (xy)}{x},\quad \forall ~y,x\ne 0. \end{aligned}$$

We see that

$$\begin{aligned} 0=\beta _{0}\le F_{22}(x,y)=\beta \le \beta _{1}=1, \end{aligned}$$

for all \(y,x\ne 0\). Figure 5 shows the behaviour of the functions \(F_{21}(x,y)\) and \(F_{22}(x,y).\)

(iv) Let \(g(x):=x+\displaystyle \frac{x}{1+x^{2}},\) so that \(g(0)=0\), and define

$$\begin{aligned} G_{2}(x):=\frac{g(x)-g(0)}{x}=1+\frac{1}{1+x^{2}},\quad \forall ~x\ne 0. \end{aligned}$$

It follows that

$$\begin{aligned} 1=\gamma _{0}\le G_{2}(x)=\gamma \le \gamma _{1}=2, \end{aligned}$$

for all \(x\ne 0.\) The upper and lower bounds of the function \(G_{2}\) are shown in Fig. 6.

(v) From (ii), (iii) and (iv) we note that

$$\begin{aligned} f(x,0)=f(0,y)=g(0)=0,\quad \forall ~x, y. \end{aligned}$$

Also \(I_{0}=[0,2]\). For the case \(p(t,x,y)=0,\) all assumptions of Theorem 2.1 hold. By Theorem 2.1, the trivial solution of the system (5.4) [or Eq. (5.3)] is globally asymptotically stable.

(vi) If p(t, x, y) in the system (2.1) is replaced by

$$\begin{aligned} p(t):=\frac{2t}{3+5t^{2}},\quad \forall ~t\ge 0, \end{aligned}$$

then, since

$$\begin{aligned} 0\le \frac{2t}{3+5t^{2}}< \frac{3}{10}, \end{aligned}$$

for all \(t\ge 0,\) it follows that

$$\begin{aligned} |p(t)|< 1=M<\infty . \end{aligned}$$

The behaviour of p(t) is described by Fig. 7.

At this point, assumptions (i)–(vi) of Theorem 2.2 hold and the conclusion is immediate.

(vii) Finally, we shall consider the forcing term defined as

$$\begin{aligned} p(t,x,y):=\frac{(2t\cos t+(t^{2}+1)\sin t-2t)(\sin x+\cos \dot{x})}{(1+t^{2})^{2}}. \end{aligned}$$

This implies that

$$\begin{aligned} |p(t,x,y)|\le (|\sin x|+|\cos y|)\phi (t) \end{aligned}$$

for all x, y and \(t\ge 0,\) where

$$\begin{aligned} \phi (t):=\frac{2t\cos t+(1+t^{2})\sin t-2t}{(t^{2}+1)^{2}}. \end{aligned}$$

Let

$$\begin{aligned} \Phi (t)=\int ^{t}_{0}\phi (\mu )d\mu =\frac{1-\cos t}{t^{2}+1}<\frac{3}{10}<M,\quad \forall ~t\ge 0. \end{aligned}$$

The bounds on \(\Phi (t)\) are shown in Fig. 8.

All hypotheses of Theorem 2.3 are satisfied and the conclusion follows.
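The bounds on the forcing terms in items (vi) and (vii) of Example 5.2 can be sanity-checked numerically. The sketch below samples p(t) and \(\Phi (t)\) on a grid and compares the quoted closed form of \(\Phi \) with a trapezoidal integral of \(\phi \); the grid, the end points T and the helper `trapezoid` are illustrative assumptions rather than part of the example.

```python
import math


def p(t):
    # Forcing term of item (vi).
    return 2.0 * t / (3.0 + 5.0 * t * t)


def phi(t):
    # The function phi(t) of item (vii).
    numerator = 2.0 * t * math.cos(t) + (1.0 + t * t) * math.sin(t) - 2.0 * t
    return numerator / (1.0 + t * t) ** 2


def Phi(t):
    # Closed form quoted for the integral of phi from 0 to t.
    return (1.0 - math.cos(t)) / (1.0 + t * t)


def trapezoid(func, lo, hi, n):
    """Composite trapezoidal rule with n equal subintervals."""
    h = (hi - lo) / n
    total = 0.5 * (func(lo) + func(hi))
    for k in range(1, n):
        total += func(lo + k * h)
    return total * h


# Uniform grid on [0, 100] with step 0.001.
ts = [k / 1000.0 for k in range(0, 100001)]
p_max = max(p(t) for t in ts)
Phi_max = max(Phi(t) for t in ts)

# Gap between the numerical integral of phi and the quoted closed form.
integral_gap = max(
    abs(trapezoid(phi, 0.0, T, 4000) - Phi(T)) for T in (1.0, 5.0, 20.0)
)

print(f"max of p(t) on the grid: {p_max:.4f}")
print(f"max of Phi(t) on the grid: {Phi_max:.4f}")
print(f"integral gap: {integral_gap:.2e}")
```

Both sampled maxima should sit below the value 3/10 quoted in the example, and a small integral gap supports the stated closed form of \(\Phi (t)\).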

References

Ademola, T.A.: Boundedness and stability of solutions to certain second order differential equations. Differ. Equ. Control Processes. 3, 38–50 (2015)

Ahmad, S., Rama, M., Rao, M.: Theory of ordinary differential equations with applications in biology and engineering. Affiliated East-West Press (Pvt), New Delhi (1999)

Alaba, J.G., Ogundare, B.S.: Asymptotic behaviour of solutions of certain second order non-autonomous nonlinear ordinary differential equations. Int. J. Pure Appl. Math. 90(4), 469–484 (2014)

Alaba, J.G., Ogundare, B.S.: On stability and boundedness properties of solutions of certain second order non-autonomous nonlinear ordinary differential equation. Krag. J. Math. 39(2), 255–266 (2015)

Atkinson, F.A.: On second order non-linear oscillations. Pac. J. Math. 5, 643–647 (1955)

Ballieu, R.J., Peiffer, K.: Attractivity of the origin for the equation \(x^{\prime \prime }+f(t, x, x^{\prime })|x^{\prime }|^\alpha x^{\prime }+g(x)=0\). J. Math. Anal. Appl. 65, 321–332 (1978)

Bihari, I.: On Periodic solutions of certain second order ordinary differential equations with periodic coefficients. Acta Math. Acad. Sci. Hung. 11, 11–16 (1960)

Burton, T.A.: Liapunov functions and boundedness. J. Math. Anal. Appl. 58, 88–97 (1977)

Burton, T.A., Hatvani, L.: Asymptotic stability of second order ordinary, functional and partial differential equations. J. Math. Anal. Appl. 176, 261–281 (1993)

Haddock, J.R.: On Liapunov functions for non autonomous systems. J. Math. Anal. Appl. 47, 599–603 (1974)

Hahn, W.: Theory and application of Liapunov’s direct method. Prentice-Hall Inc., New Jersey (1963)

Hatvani, L.: On stability properties of solutions of second order differential equations. EJQDE Proc. 6th Coll. QTDE. 11, 1–6 (2000)

Karsai, J.: On the global asymptotic stability of the zero solution of the equation \(x^{\prime \prime }+g(t, x, x^{\prime })x^{\prime }+f(x)=0\). Stud. Sci. Math. Hungar. 19, 385–393 (1984)

Karsai, J.: On the asymptotic stability of the zero solution of certain nonlinear second order differential equation. Colloq. Math. Soc. Janos Bolyai. 47, 495–503 (1987)

Morosanu, G., Vladimirescu, C.: Stability for a nonlinear second order ODE. Funkcialaj Ekvacioj. 48, 49–56 (2005)

Murakami, S.: Asymptotic behaviour of solutions of ordinary differential equations. Tohoku Math. J. 34, 559–574 (1982)

Napoles, J.E.: On the ultimate boundedness of solutions of systems of differential equations. Rev. Integr. 13, 41–47 (1995)

Napoles, J.E., Repilado, J.A.: On the boundedness and asymptotic stability in the whole of the solutions of a system of differential equations. Rev. Ciencias Matematicas. 16, 83–86 (1995)

Ogundare, B.S., Afuwape, A.U.: Boundedness and stability properties of solutions of generalized Lienard equations. Kochi J. Math. 9, 97–108 (2014)

Ogundare, B.S., Okecha, G.E.: Boundedness, periodicity and stability of solutions to \(\ddot{x}+a(t)g(\dot{x})+b(t)h(x)=p(t; x, \dot{x})\). Math. Sci. Res. J. 11(5), 432–443 (2007)

Pucci, P., Serrin, J.: Precise damping conditions for global asymptotic stability for nonlinear second order systems. Acta Math. 170, 275–307 (1993)

Pucci, P., Serrin, J.: Precise damping conditions for global asymptotic stability for nonlinear second order systems II. J. Differ. Equ. 113, 815–835 (1994)

Smith, R.: Asymptotic stability of \(x^{\prime \prime }+a(t)x^{\prime }+x=0\). Q. J. Math. Oxford Ser. 12, 123–126 (1961)

Thurston, L.H., Wong, J.S.: On global stability of certain second order differential equations with integrable forcing term. SIAM J. Appl. Math. 24, 50–61 (1973)

Tunc, C.: A note on the stability and boundedness of non-autonomous differential equations of second order with a variable deviating argument. Afr. Math. 25(2), 417–425 (2014)

Tunc, C., Ayhan, T.: Global existence and boundedness of solutions of a certain nonlinear integro-differential equations of second order with multiple deviating arguments. J. Inequal. Appl. 46, 7 (2016). doi:10.1186/s13660-016-0987-2

Tunc, C., Tunc, O.: A note on certain qualitative properties of a second order linear differential system. Appl. Math. Inf. Sci. 9(2), 953–956 (2015)

Vladimirescu, C.: Stability for damped oscillators. An. Univ. Craiova Ser. Mat. Inform. 32, 227–232 (2005)

Cite this article

Ogundare, B.S., Ademola, A.T., Ogundiran, M.O. et al. On the qualitative behaviour of solutions to certain second order nonlinear differential equation with delay. Ann Univ Ferrara 63, 333–351 (2017). https://doi.org/10.1007/s11565-016-0262-y
