Abstract

As the mainstream chip packaging technology, plastic-encapsulated chips (PEC) suffer from process defects such as delamination and voids, which seriously impact the chip's reliability. Therefore, it is urgent to detect defects promptly and accurately. However, the current manual detection methods cannot meet the application's requirements, as they are both inaccurate and inefficient. This study utilized the deep convolutional neural network (DCNN) technique to analyze PEC's scanning acoustic microscope (SAM) images and identify their internal defects. First, the SAM technology was used to collect and set up datasets of seven typical PEC defects. Then, according to the characteristics of densely packed PEC and an incredibly tiny size ratio in SAM, a PECNet network was established to detect PEC based on the traditional RetinaNet network, combining the CoTNet50 backbone network and the feature pyramid network structure. Furthermore, a PEDNet was designed to classify PEC defects based on the MobileNetV2 network, integrating cross-local connections and progressive classifiers. The experimental results demonstrated that the PECNet network's chip recognition accuracy reaches 98.6%, and its speed of a single image requires only nine milliseconds. Meanwhile, the PEDNet network's average defect classification accuracy is 97.8%, and the recognition speed of a single image is only 0.0021 s. This method provides a precise and efficient technique for defect detection in PEC.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Plastic packaging is one of the most critical manufacturing processes for providing electrical interconnection and physical protection for chips because of its advantages of miniaturization, lightweight, and high reliability compared to ceramic and metallic packaging technologies [1,2,3]. However, since plastic-encapsulated chips (PEC) are a non-hermetic packaging technology, it is easy to produce some defects inside the chip that are difficult to detect visually, such as delamination, voids, dislocation, and incomplete filling. These defects will cause severe chip reliability issues, so detecting internal defects is essential for PEC [4,5,6]. Due to the non-destructive testing characteristics of scanning acoustic microscope (SAM) technology, SAM is typically used to obtain defective images of PEC [7,8,9].

PEC defects and their detection methods have drawn considerable research interest. Cai et al. analyzed the failure mechanisms and potential reliability risks of common defects that are likely to occur in the PEC packaging process but cannot be identified solely through visual inspection or electrical parameter testing. This investigation established a technical foundation for detecting PEC defects [1]. Hullinger et al. [10] attempted to construct a stretchable thermomechanical test chip using sensors such as crack, conductivity, short circuit, and corrosion to evaluate the reliability of PEC. Liang et al. [11] used an ultrasonic scanning microscope to analyze defects such as cracks, impurities, and delamination of PEC using different scanning modes. They demonstrated that SAM can accurately characterize the internal defects of PEC. Liao et al. [7] proposed a defect judgment method based on waveform amplitude and phase that can autonomously generate defect images with color marks, thereby reducing the workload of ultrasonic inspection. It significantly increases the efficiency of manual defect detection. Chen et al. [12] investigated the application of deep convolutional neural network (DCNN) technology to the automatic visual detection of fastener defects in rail vehicles, which led to the realization that the possibility of applying artificial intelligence (AI) technology to detect the internal defect of PEC. Overall, internal defect detection of PEC relies primarily on the manual visual inspection method aided by imaging auxiliary tools. Nevertheless, there are still some issues:

-

(1)

Procedure: difficult and complex Due to the small size and dense arrangement of chips in ultrasonic images, it is difficult to accurately identify and classify tiny and randomly distributed internal defects only by manual inspection methods.

-

(2)

Result: poor stability and low efficiency Very dependent on the state and experience of the inspector. Furthermore, human eyes are less effective at identifying minor defects, and long-term observation can readily result in visual fatigue, decreasing the stability and efficiency of inspection.

-

(3)

Cost: very high The inspector must be a mature technician with rich experience, which induces high time and energy costs.

Therefore, there is an urgent need to introduce AI technology to realize the automatic detection of internal defects of PEC [13,14,15]. However, in the SAM image of PEC, the chips are arranged densely, and each size ratio is tiny. Therefore, the automatic detection of internal defects in PEC takes time to implement. It is urgent to resolve two technical challenges immediately: the precise identification and marking of a single chip and the accurate identification and classification of randomly distributed small-size defects.

CNN is a typically used deep learning model used mainly on data characterized by a grid structure, such as photos, videos, and audio. The fundamental concept of the method is gathering input data features using convolution and pooling operations, followed by performing classification or regression tasks via fully connected layers. CNN may build up an understanding of the characteristics of the input data through these procedures, resulting in effective data processing and analysis. CNN is extensively employed in various computer vision applications, including but not limited to picture classification, target recognition, and image segmentation. One notable advantage of this technique is its ability to automatically acquire and extract characteristics from input data, avoiding manual feature extraction. Furthermore, CNN possesses unique translation invariance and local perception abilities, enabling them to manage variations in position and local deformations inside images effectively. Additionally, it exhibits a certain level of robustness and tolerance against noise and shifts in the input data. CNN achieved excellent results in practical applications by learning previous information from massive data and continuously upgrading the model's structure and parameters. Consequently, CNN has become an essential field of research and practical application within deep learning.

DCNN has achieved current success in the recognition and positioning of small targets. Wen et al. [16] proposed a TCNN (ResNet50) network for small-volume sample fault diagnosis, combined transfer learning to train feature extractors, and increased the accuracy to more than 98.95%. Zhou et al. proposed a method for small target detection in high-resolution remote sensing images. Using depthwise separable deconvolution to restore the lost feature information effectively and combining dilated convolution and CoTNet to extract local context features in the HRSC2016 dataset [17, 18], the recognition and detection rate of the rotation-invariant target increased to 96% [19].

Moreover, DCNN has also obtained excellent results in defect detection and classification [20, 21]. Sandler et al. [22] described a new architecture, MobileNetV2, which incorporates an inverted residual structure to drastically reduce the number of operations and memory required while maintaining the accuracy of feature classification. Liu et al. [23] introduced an enhanced receptive field block (RFB) structure, which improved the feature extraction capability of the network, enhanced the accuracy of multi-scale target detection, and made the model lighter in quantity. Ma et al. [24] introduced the convolutional block attention module (CBAM) into MobileNetV2 and proposed the I_CBAM_MobileNetV2 model, automatically achieving a 98.21% recognition accuracy rate for corn seeds.

This work introduces DCNNs to solve the defect detection problem in PEC. A dataset of typical PEC defects is first collected and prepared using SAM technology. Then, considering PEC's small size and dense arrangement, the backbone network ResNet in the RetinaNet is replaced by CoTNet50. The feature pyramid networks (FPN) are used to improve the algorithm's feature fusion and extraction capabilities. The PECNet (net for plastic-encapsulated chips) network is constructed to position and identify a single chip. Furthermore, based on the internal defect characteristics of PEC and the requirements for an accurate and quick network, a cross-local connection network backbone and a progressive classifier are added to the MobileNetV2 network. The PEDNet (net for plastic-encapsulated defects) network is proposed for fast and accurate identification and classification of various PEC defects.

2 Methodology

2.1 System framework and process

As shown in Fig. 1, when using SAM technology to collect defect images of PEC, the ultrasonic transceiver sends signal pulses to the ultrasonic transducer to generate ultrasonic waves of a specific frequency. While scanning internal defects of the PEC, each layer of material in the device has a different density and atomic weight, so the ultrasonic wave will be reflected when it reaches the various detection surfaces of the sample. Then, the receiver receives the ultrasonic wave reflected from the sample surface. After data processing, a high-resolution ultrasonic scanning image is acquired, representing defect information at various depths. Due to its high sensitivity and lack of sample damage, SAM is widely utilized in screening PEC.

As depicted in Fig. 2a, the frame is typically used as a unit for batch packaging in the chip plastic packaging procedure, and each frame contains approximately 10–20 individual chips. As depicted in Fig. 2b, three frames are typically scanned simultaneously to efficiently produce an ultrasound image comprising 30–60 chips. The single chip size is small, and the arrangement is dense. Furthermore, the internal defects are randomly distributed in the SAM. Consequently, it is difficult for the manual to identify the defects due to low accuracy and poor real-time performance.

As depicted in Fig. 3, if there are defects such as voids or delaminations within the PEC, it will appear red around the chip in SAM. Figure 3a reveals that the ultrasonic image contains images of multiple chips that are arranged in an orderly manner and have a transparent background. However, the defects on each chip have a variety of shapes and are distributed randomly. According to the different shapes of the internal defects of the PEC, as depicted in Fig. 3b–h, the defect types can be divided into seven categories: no defect (NF), strip delamination (SD), top void (TV), pin delamination (PD), semi-cycle delamination (SCD), cycle delamination (CD), and fully delamination (FD). The ratio of defects to chip area varies across different types of defects. TV, SD, and PD have a lower rate, below 5.0%, whereas SCD, CD, and FD have more severe defects, with higher defect rates of about 9.5–20%.

Ultrasonic detection of defects in PEC, a a portion of the original SAM and various categories of defects on each single chip, b no defect (ND), c strip delamination (SD), d top void (TV), e pin delamination (PD), f semi-cycle delamination (SCD), g cycle delamination (CD), and h fully delamination (FD)

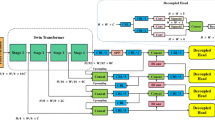

The procedure to detect PEC defects is illustrated in Fig. 4. First, the plastic chip packaging process experiment is designed, multi-chip SAM images are collected, and a dataset for training and testing models is constructed. Afterward, the detection approach was divided into two steps: chip location and defect classification, considering the features of the densely packed plastic chips and the small size of each chip in the SAM images. The PECNet and PEDNet models are trained by employing the improved RetinaNet and MobileNetV2 models, respectively. The defect detection system has been constructed with well-trained PECNet and PEDNet networks. When the SAM images of plastic packaging chips were input into the detection system, the PECNet model was used to identify and locate a single chip. In contrast, the PEDNet model was used to identify and classify chip defects. Finally, the PEC defect identification results were then quantitatively analyzed and compared horizontally.

2.2 Defect dataset setup

The dataset provides an accurate way of guaranteeing precision defect detection. To obtain chip images containing various defects, we modify essential process parameters in the plastic packaging process, including wake-up time, injection time, curing time, and post-curing time. This modification is performed based on the variable sensitivity of these process parameters to the quality of the packaging. A comprehensive set of nine different process conditions was established, and 60 chips were fabricated under each condition. Subsequently, a SAM device (YTS500, SHSIWI, Shanghai) was utilized to collect ultrasound images of PEC, as shown in Fig. 5. To address issues such as noise in ultrasonic photos, the image receives filtration and histogram equalization. Furthermore, this dataset also includes defect images obtained from a packaging company (MC-Power Semiconductor Co., Ltd.) to confirm the dataset's sufficiency.

In addition, the collected defect images undergo data expansion using cropping, horizontal mirroring, rotation, and brightness changes. Eventually, the increased dataset size prevents model overfitting due to insufficient data.

The chip region in the ultrasonic image can be manually labeled using marking software. Subsequently, the ultrasonic image can be divided into various defect datasets in Table 1, according to the defect types depicted in Fig. 3. The number of ultrasonic images obtained differs due to the various probabilities of different defects happening under different plastic packaging process conditions. Furthermore, all images are normalized to a resolution of 224 × 224 pixels per inch (PPI) to improve the efficiency of following network training while also enhancing the generalization to different images. Moreover, we have confirmed that the normalized resolution procedure never negatively affects detection accuracy. Subsequently, every defect image is divided into training and test sets in an 8:2 ratio.

2.3 Chip detection network by PECNet

Before detecting various defects on PEC, it is necessary to identify individual chips precisely from original ultrasound images. However, a single chip occupies a small proportion of the original ultrasound image, making it challenging to detect in the densely packed original ultrasound image. In general, the size of the original ultrasonic test image is about 1676 × 1628 pixels. In contrast, the size of a single chip is only 65 × 65 pixels, and its area ratio in the original image is only 0.15%. Therefore, such a small ratio imposes stringent requirements on chip inspection networks' feature extraction efficacy.

To efficiently improve the feature extraction capability of PEC, the backbone network is RetinaNet, a single-stage network known for its quickness and precise detection. This network uses a residual module defined as a "shortcut connection" to connect the input and output. This connection method has effectively addressed the gradient explosion-induced issues of network degradation and feature extraction capability within deep networks [16, 25]. Then, as shown in Table 2, The CoTNet50 core network, which incorporates the self-attention mechanism, is used to replace the 3 × 3 convolution in RetinaNet to further improve the feature extraction capability [26]. Furthermore, the FPN is also used to enhance the feature extraction capability under a minimal ratio [27,28,29]. Finally, the proposed PECNet enables the accurate extraction of chip features and possesses the benefits of thin layers and high speed.

Utilizing CoTNet50 to replace the RetinaNet backbone network can enhance the chip's ability to extract features because it introduces a CoTBlock module. As depicted in Fig. 6, CotBlock is a transformer-style self-attention mechanism module that is superior at capturing the internal correlation of data or features. First, the input keys are context-encoded using 3 × 3 convolutions, yielding a static contextual representation of the input. Next, the encoded static keys are concatenated with the input query x to discover a dynamic multi-head attention matrix using two 1 × 1 convolutions. The input values are then multiplied by the learned attention matrix to produce a dynamic contextual representation of the input data. Finally, the merged static and dynamic contextual representations are taken as the final output. Using the core module with CotBlock can integrate context learning and self-attention learning in PEC ultrasound images, effectively enhancing the detection backbone network's feature extraction and expression capabilities.

After using the CoTNet50 backbone network with the CotBlock module as the core to obtain feature-enhanced images, an FPN network for feature fusion detection is employed to identify PEC accurately. As depicted in Fig. 7, a low-resolution feature map with a size of 1/32 of the original image is up-sampled to a high-resolution feature map with a size of 1/16. Then, a 1/16-sized feature map is generated using 3 × 3 convolutional linear superposition, and the two feature maps are initially fused. Continue using the same method to develop a 1/8-scale feature map. Finally, the 1/8 large-scale feature map obtained by progressively merging these three-level feature maps is then utilized for classification and regression. The shallow feature map in the FPN contains more position and detail information, but its semantics are weaker. In contrast, the high-level feature map has more robust semantics but poor resolution and detail perception. Adopting this method of step-by-step fusion of three-level features can ensure that the network for detecting PEC has a sufficiently large receptive field and robust high-dimensional semantics and is more conducive to accurately identifying chips with a tiny area ratio.

The PECNet constructed with CoTNet50 feature extraction and FPN feature fusion can obtain a feature map that is 1/8 the size of the original image, as well as output the location of the target chip. The regression sub-network consists of four convolutional layers, each with a step size of 1, a convolution kernel size of 3 × 3, and a padding value of 1. The network processes the feature map, whose size remains unchanged and whose number of channels is 4 × num_Anchor. The classification sub-network is comparable to the regression sub-network, with the addition of 2 × num_Anchor channels. RetinaNet places nine Anchors at each feature point, with an aspect ratio of (0.5, 1, 2) and scaling ratios of (20, 22/3, 21/3). To obtain all Anchor, the generated Anchor is translated according to a fixed step size [19].

ECNet follows RetinaNet's classification loss function FocalLoss and regression loss function SmoothL1. By assigning various weights to samples with varying degrees of difficulty, FocalLoss can balance the contribution of positive and negative examples to the loss and prevent the number of negative samples from influencing the direction of model optimization. SmoothL1 computes the loss of the coordinate prediction value of the regression frame separately before adding them linearly, thereby reducing the correlation between the coordinates and achieving the correction of the anchor frame.

2.4 Defect recognition network by PEDNet

The MobileNetV2 is chosen as a primary network to identify plastic chip defects due to its advantages of relative lightness and high efficiency [22, 30, 31]. MobileNetV2 is a lightweight neural network that employs an inverse residual structure. It increases the dimension of the input vector through point-wise convolution and then extracts features from each vector channel through depth convolution. Ultimately, features' dimensions are compressed and reduced via point-by-point convolution. Not only can the reverse residual enhance the number of features and improve precision, but it may also reduce the network's overall computation load. Due to the random distribution of defects in PEC and the recognition requirement for high accuracy and speed, the chip defect recognition network faces significant feature extraction and classification performance challenges. Therefore, the network must be enhanced based on MobileNetV2 to improve the accuracy and efficiency of defect detection.

The original MobileNetV2 backbone network was redesigned to derive the fundamental characteristics of chip defects, as shown in Table 3, where n is the number of times the bottleneck block is used. First, replace the fourth bottleneck structure in the original MobileNetV2 backbone network with pooling and convolution operations, and then merge the derived features from the two replaced branches into the following bottleneck structure. This enhancement can increase the detection network's receptive field and extend the number and types of defect fundamental characteristics. Then, a cross-local connection structure is added concurrently at the sixth bottleneck structure of the original MobileNetV2 backbone network. This enhancement can increase the scale-specific information of output features, making the backbone network more familiar with various defect shapes and sizes to identify defects in PEC precisely.

The primary function of the classifier is to transform the target features extracted by the backbone network into specific classification results. When classifying PEC defects, it is necessary to retain the characteristics as much as possible and classify them rapidly according to the seven types of defects that have been defined. As demonstrated in Table 4, the original MobileNetV2 classifier directly classifies the output of the final bottleneck and generates a 320-channel feature map. However, the PEC only needs to output seven categories of defects. Suppose the number of neurons in the original network classifier is only modified stiffly to match the defect types. In that case, it will not be conducive to fully utilizing the feature extraction ability of the model during transfer learning, negatively impacting the accuracy and efficiency of defect classification. Therefore, this work enhances the original classifier with an additional progressive convolution layer to better preserve features and refine the classification of the PEC defects [12, 32].

As shown in Fig. 8, the enhanced PEDNet consists of the defect recognition backbone network and the classifier network. Initially, the defect recognition backbone network improves the problems in the original network by strengthening the original backbone network's fourth and fifth bottleneck structures: the fundamental characteristics loss problem caused by the linear superposition of the bottleneck's deep network layers. Moreover, the receptive field of the PEDNet is significantly increased, and the number and types of defect features such as color, texture, and edge are expanded. By adding a progressive convolution layer, the defect classifier effectively overcomes the feature loss caused by directly altering the number of neurons in the original classifier. PEDNet effectively retains defect features and refines the classification according to actual demands, thereby improving defect identification and classification accuracy and efficiency.

3 Results and discussion

3.1 Training details

A server was constructed for the training and testing of the model, with the Ubuntu 18.04 operating system being configured. The central processing unit utilized in the system was the Intel Core i7-7700K, operating at 4.20 GHz. The graphics processing unit employed was the GeForce GTX 1660 Ti. The system's memory capacity was 6 GB, and the DCNN utilized was Pytorch with CUDA version 10.0.

Before the training and testing processes, the original image was scaled to dimensions of 320 × 320 pixels using linear interpolation and fed into the neural network for further analysis. Then, before training the chip detection network PECNet on the given dataset, it underwent training on the ImageNet dataset to acquire pre-trained weights. The Adam optimization algorithm, a kind of stochastic gradient descent, was employed to optimize the model [33]. The optimization parameters encompass the initial learning rate of 0.0001, the weight decay value of 0.0001, the batch size of 8, and the maximum number of iterations of 10,000 to achieve complete network convergence. Furthermore, the PEDNet model, designed for defect identification, was utilized for training and testing. The optimization parameters for the model consist of an initial learning rate of 0.001, a weight decay value of 0.0001, a batch size of 16, and a total of 300 epochs. Similarly, once the network model has undergone training, it will undergo testing using the internal defect test set specific to the chip.

To quantitatively assess the impact of the chip detection model, the evaluation criteria employed include the recall rate, accuracy, precision, and comprehensive evaluation indicators, all measured under a 0.5IoU threshold.

The formula incorporates several variables, including R, which represents the recall rate, and P, which denotes the accuracy rate. AP signifies precision, and the F1 measure serves as the total assessment index. TP signifies the accurate identification of the chip, while TN denotes the proper background identification. FP refers to the misidentification of the background as the chip, and FN represents the wrong identification of the chip as the backdrop.

The training loss function of the PECNet chip detection network model exhibits a notable decline as the number of iterations grows. After around 4400 iterations, the curve reaches a state of stability, suggesting that the network model tends to converge following a training period.

3.2 Experimental results and discussion

3.2.1 Chip detection by PECNet

Figure 9 demonstrates the precision and effectiveness of the chip detection network. It illustrates the successful identification of a single chip using the PECNet network. Additionally, the network can partition the chip's position area and mark the chip's potential value simultaneously.

The efficacy of PECNet is further verified through a horizontal quantitative comparison, as shown in Table 5. Multiple representative target detection networks were employed to evaluate and compare their performance on the identical self-constructed dataset. Among these target detection networks, ResNet50 and single-shot multi-Box detector (SSD) represent two-stage and one-stage networks, respectively. The comparison results show that the original ResNet50 has the highest detection accuracy rate of 99.8%. However, it was also discovered that this network's detection time was far too long before any improvements were made. The PECNet model demonstrates a detection accuracy of 98.5% and a recall rate of 100%. Additionally, it exhibits notable enhancements in detection speed, with a single-chip detection time of only 9 ms. This detection time is 96.4% and 66.7% lower than the ResNet50 and SSD models. Hence, the designed PECNet is capable of efficiently and precisely detecting PEC.

3.2.2 Defect detection by PEDNet

To evaluate the defect detection capabilities of the PEDNet network model, several kinds of defects were tested and compared to the testing performance of other typical network models. As shown in Fig. 10a, the PEDNet model demonstrates that the detection accuracy of all types of defects exceeds 96%. Notably, the detection performance is powerful for top void, complete delamination, and no damage, with accuracy rates of 98.50%, 99.30%, and 99.60%, respectively. According to the data presented in Fig. 10b, when comparing the four well-known classification networks, namely MobileNetV2, ResNet50, DenseNet121, and InceptionV3, it is observed that PEDNet achieves an average accuracy of 97.8%. This accuracy is comparable to InceptionV3, which is 98.1% and surpasses the performance of alternative models. Furthermore, the PEDNet model has superior detection speed, with a mere 0.0021 s per image recognition time, which is 53.3% faster than the InceptionV3 model.

Figure 10 presents the performance characterization of the PEDNet defect identification network. Subfigure (a) displays the detection accuracy for various defect types, while subfigure (b) compares the detection accuracy among different detection networks and shows the average recognition time for a single image.

As shown in Table 6, the enhancement of the MobileNetV2 classifier has significantly improved the defect detection performance of PEDNet. Specifically, there was a 1.2% rise in the average accuracy rate of all defect types, a 20.8% drop in the number of network parameters, a 20.5% decrease in the model size, and an 8.7% increase in the average recognition rate of a single image. The outcomes demonstrate that the enhancements to PEDNet's backbone network significantly affect the model's capability to extract features. Additionally, the newly designed classifier can learn these characteristics effectively, resulting in a more accurate classification of defects on time. Furthermore, it is essential to recognize that PEDNet exhibits slightly lower accuracy than InceptionV3, with a tiny difference of 0.3%. Fortunately, all other performance parameters show better results for PEDNet. In particular, there is a significant decrease in the parameter amount and the model size compared to ResNet50, with reductions of 92.4% and 92.2%, respectively. Consequently, the PEDNet created through this investigation demonstrates precise identification and classification of defects in PECs and illustrates the benefits of being lightweight and fast. Therefore, it meets the demands of PEC reliability testing.

4 Conclusion

We proposed an original method for applying DCNNs to defect detection inside PECs. Initially, a dataset containing seven types of internal defects was collected and produced using SAM techniques. Furthermore, the PECNet chip detecting network was developed to address the issue of densely packed chips and the tiny ratio of single chip area. The network architecture is constructed based on the RetinaNet framework, which integrates the CotNet50 model and the FPN network structure. The test result shows that the chip recognition accuracy rate reaches 98.6%, and its inference speed for processing a single image is only nine milliseconds. Additionally, PEDNet, a network for PEC defect detection, has been established to address the demands of high-precision, rapid, and lightweight industrial applications in microelectronics reliability testing. PEDNet was founded on the base of the MobileNetV2 architecture, combining both cross-local connections and progressive classifiers. Moreover, the network achieved an average recognition and classification accuracy of 97.8%, taking only 0.0021 s for each image recognition. The proposed method offers an accurate and effective solution for detecting defects in PEC. This method serves as a technological assurance to improve the plastic encapsulation process and enhance the reliability of the chips.

Data Availability

No datasets were generated or analysed during the current study.

References

Cai, J.B., Li, W., Chen, X.L., et al.: Investigation on failures of plastic package devices with unidentifiable defects related to deficient molding process. In: 2020 21st International Conference on Electronic Packaging Technology (ICEPT), Guangzhou, China, pp. 1–5 (2020)

Wang, Z.J., Yi, Z.X., Qin, M., et al.: Low-drift MEMS thermal wind sensor with symmetric packaging using plastic injection molding process. IEEE Trans. Instrum. Meas. 70, 1–8 (2021)

Li, S.: MicroSystem Based on SiP Technology. Springer Nature Singapore, Singapore (2023)

Angelov, G., Rusev, R., Nikolov, D., et al.: Identifying of delamination in integrated circuits using surface acoustic microscopy. In: 2021 XXX International Scientific Conference Electronics (ET), Sozopol, Bulgaria, pp. 1–5. IEEE (2021)

Kravchenko, G., Bohm, C.: A study of chip top delamination in plastic encapsulated packages under temperature loading. In: 2007 9th Electronics Packaging Technology Conference, Singapore, pp. 675–679. IEEE (2007)

Cai, J.B., Chen, X.L., Wu, H.W., et al.: Typical failure mechanisms of plastic encapsulated devices’ internal connection. In: 2016 17th International Conference on Electronic Packaging Technology (ICEPT), Wuhan, China, pp. 1323–1326. IEEE (2016)

Liao, X.Y., Ye, L.Z., Zhang, Y.L.Z.: Study on chip defect detection algorithm based on ultrasonic scanning. In: 2022 23rd International Conference on Electronic Packaging Technology (ICEPT), Dalian, China, pp. 1–6. IEEE (2022)

Zhao, H.L., Zhang, K., Zhou, Z.H., et al.: Effect of environmental factors on ultrasound detection of plastic encapsulated microcircuits. J. Phys. Conf. Ser. 1885(4), 042064 (2021)

Zhao, H.L., Zhang, K., Zhou, Z.H., et al.: Analysis of scanning acoustic microscopy problems for plastic encapsulated microcircuits with complex structure. J. Phys. Conf. Ser. 1885(5), 052054 (2021)

Hullinger, A.K., Duffalo, J.M., Niederkorn, A.J., et al.: Evaluation of a plastic encapsulated package using a scalable thermal mechanical test chip. In: 33rd IEEE International Reliability Physics Symposium, pp. 112‒115. IEEE, Las Vegas, NV (1995)

Liang, Y.F., Zhang, S.J.: A case study of the delamination analysis of plastic encapsulated microcircuits based on scanning acoustic microscope inspection. In: 2014 Prognostics and System Health Management Conference (PHM-2014 Hunan), Zhangiiaijie City, China, pp. 190‒193. IEEE (2014)

Chen, J.W., Liu, Z.G., Wang, H.R., et al.: Automatic defect detection of fasteners on the catenary support device using deep convolutional neural network. IEEE Trans. Instrum. Meas. 67(2), 257–269 (2018)

Huang, S.H., Pan, Y.C.: Automated visual inspection in the semiconductor industry: a survey. Comput. Ind. 66, 1–10 (2015)

Pang, S.L., Chen, M.Y., Ta, S.W., et al.: Void and solder joint detection for chip resistors based on X-ray images and deep neural networks. Microelectron. Reliab. 135, 114587 (2022)

Su, T.J., Chen, Y.F., Cheng, J.C., et al.: An artificial neural network approach for wafer dicing saw quality prediction. Microelectron. Reliab. 91, 257–261 (2018)

Wen, L., Li, X.Y., Gao, L.: A transfer convolutional neural network for fault diagnosis based on ResNet-50. Neural Comput. Appl. 32(10), 6111–6124 (2019)

Xie, X.X., Cheng, G., Wang, J.B., et al.: Oriented R-CNN for object detection. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 3520–3529 (2021)

Zhou, L.L., Rao, X.H., Li, Y.H., et al.: A lightweight object detection method in aerial images based on dense feature fusion path aggregation network. ISPRS Int. J. Geo-Inf. 11(3), 189 (2022)

Zhou, H.P., Guo, W., Zhao, Q.: An anchor-free network for increasing attention to small objects in high resolution remote sensing images. Appl. Sci. 13(4), 2073 (2023)

Wang, T., Chen, Y., Qiao, M.N., et al.: A fast and robust convolutional neural network-based defect detection model in product quality control. Int. J. Adv. Manuf. Technol. 94(9–12), 3465–3471 (2018)

Bhatt, P.M., Malhan, R., Rajendran, P., et al.: Image-based surface defect detection using deep learning: a review. J. Comput. Inf. Sci. Eng. 21(4), 040801 (2021)

Sandler, M., Howard, A., Zhu, M.L., et al.: MobileNetV2: inverted residuals and linear bottlenecks. In: 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 4510‒4520. IEEE, Salt Lake City, UT (2018)

Liu S.W., Kong W.M., Chen X.F., et al.: Multi-scale ship detection algorithm based on a lightweight neural network for spaceborne SAR images. Remote Sens. 14, 5, p.1149 (2022)

Ma, R., Wang, J., Zhao, W., et al.: Identification of maize seed varieties using mobileNetV2 with improved attention mechanism CBAM. Agric. BASEL 13(1), 11 (2022)

Vecvanags, A., Aktas, K., Pavlovs, I., et al.: Ungulate detection and species classification from camera trap images using RetinaNet and faster R-CNN. Entropy 24(3), 353 (2022)

Li, Y.H., Yao, T., Pan, Y.W., et al.: Contextual transformer networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 45(2), 1489–1500 (2022)

Wang, C.Y., Zhong, C.M.: Adaptive feature pyramid networks for object detection. IEEE Access. 9, 107024–107032 (2021)

Chen, Q., Wang, Y.M., Yang, T., et al.: You only look one-level feature. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 13039–13048 (2021)

Lin, T.Y., Dollar, P., Girshick, R., et al.: Feature pyramid networks for object detection. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2117–2125 (2017)

Qiu, Z., Zhu, X., Liao, C., Shi, D., Qu, W.: Detection of transmission line insulator defects based on an improved lightweight YOLOv4 model. Appl. Sci. BASEL 12(3), 1207 (2022)

Liu, J., Wang, X.W.: Early recognition of tomato gray leaf spot disease based on MobileNetv2-YOLOv3 model. Plant Methods 16(1), 83 (2020)

Koklu, M., Unlersen, M.F., Ozkan, I.A., et al.: A CNN-SVM study based on selected deep features for grapevine leaves classification. Measurement 188, 110425 (2022)

Arouri, Y., Sayyafzadeh, M.: An adaptive moment estimation framework for well placement optimization. Comput. Geosci. 26(4), 957–973 (2022)

Funding

Funding was provided by Science and Technology on Electronic Information Control Laboratory (Grant No. 6142105200203).

Author information

Authors and Affiliations

Contributions

Wanchun Ren: Writing-original draft preparation, Methodology, Pengcheng Zhu: Visualization, Shaofeng Cai: Supervision, Yi Huang: Investigation, Haoran Zhao: Software, Youji Hama: Data curation, Zhu Yan: Writing-reviewing, Tao Zhou: Editing, Junde Pu: Experiment, Hongwei Yang: Dataset.

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Ren, W., Zhu, P., Cai, S. et al. Automatic detection of defects in electronic plastic packaging using deep convolutional neural networks. J Real-Time Image Proc 21, 152 (2024). https://doi.org/10.1007/s11554-024-01534-5

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s11554-024-01534-5