Abstract

Purpose

Precise needle placement is an important task during several medical procedures. Ultrasound imaging is often used to guide the needle toward the target region in soft tissue. This task remains challenging due to the user’s dependence on image quality, limited field of view, moving target, and moving needle. In this paper, we present a novel dual-robot framework for robotic needle insertions under robotic ultrasound guidance.

Method

We integrated force-controlled ultrasound image acquisition, registration of preoperative and intraoperative images, vision-based robot control, and target localization, in combination with a novel needle tracking algorithm. The framework allows robotic needle insertion to target a preoperatively defined region of interest while enabling real-time visualization and adaptive trajectory planning to provide safe and quick interactions. We assessed the framework by considering both static and moving targets embedded in water and tissue-mimicking gelatin.

Results

The presented dual-robot tracking algorithms allow for accurate needle placement, targeting the region of interest with an error of around 1 mm.

Conclusion

To the best of our knowledge, we show the first use of two independent robots, one for imaging, the other for needle insertion, that are simultaneously controlled using image processing algorithms. Experimental results show the feasibility and demonstrate the accuracy and robustness of the process.


Introduction

Several medical procedures require the targeted insertion of a needle for diagnostic or therapeutic purposes. These include a wide range of biopsies of cysts, lymph nodes, or lesions as well as brachytherapy or tissue ablation [1]. The latter requires the insertion of a needle to locally treat cancerous lesions, as an alternative or complement to traditional approaches such as surgery, radiotherapy, or chemotherapy. In radiofrequency (RF) ablation, unresectable malignant lesions are destroyed by heating them with an electrical current produced by a radio wave [2]. Cryoablation uses hollow needles (cryoprobes) through which a cooled, thermally conductive fluid is circulated to freeze and destroy neighboring tissue. Beyond prostate cancer, cryoablation is a potential treatment for lung, liver, kidney, bone, and soft tissue tumors [3]. These procedures are mostly performed under fluoroscopic or ultrasound (US) guidance, in which the RF needle or cryoprobe is placed in the vicinity of the target region. Although it is strongly operator dependent, US is preferred due to its noninvasive nature, low cost, high frame rate, and lack of ionizing radiation. It further allows real-time monitoring of patient motion and treatment progression. The outcome of the treatment depends not only on the analytic skills and dexterity of the medical expert handling the needle, but also on their experience with the US device, including the pressure applied to the soft tissue and the orientation of the transducer relative to needle and target. Indeed, accurate needle placement remains challenging when target visibility is poor, when the tumor is in a location that is difficult to access, when multifocal disease has to be treated, or when the US imaging plane has to be placed out of the axis of the needle.

The development of an ultrasound-guided, robotically assisted percutaneous needle placement framework, possibly combined with computed tomography (CT) or magnetic resonance (MR) to ultrasound image fusion, could not only improve the clinical outcome but could also reduce the radiation dose compared with fluoroscopic or CT-guided needle insertions, or improve simplicity compared with MR-guided insertions [4]. The increasing complexity of these approaches drives the development of robotic solutions that support physicians in successfully performing targeted ablation and that extend the approach to other pathologies. Robotic systems equipped with an RF needle or cryoprobe and an ultrasound probe, applying constant pressure from a desired angle and maintaining visibility of the needle in the ultrasound frame throughout the intervention, could allow for accurate needle placement.

Some aspects of such a robotic framework, consisting of imaging and action components, have already been studied. On the one hand, robotic manipulators can be employed to acquire real-time imaging in the interventional setting. While nuclear detectors such as gamma cameras do not require skin contact [5], special control strategies are required for robotic ultrasound imaging [6, 7].

Research on ultrasound-guided robotic needle insertion has attracted great interest in the scientific community over the past decade. Using a fixed ultrasound transducer, Hong et al. [8] presented a robotic needle insertion technique. That early work required the needle to be aligned with the ultrasound transducer, and the robotic component was designed to insert the needle along the ultrasound B-mode plane to target a region of interest in the image. Similar robotic needle insertion and steering concepts were presented for brachytherapy [9], soft tissue insertion [10], and obstacle avoidance [11]. While strongly focusing on the needle trajectory, the cited publications did not incorporate a moving (robotic) ultrasound acquisition. Therefore, they are limited by the field of view of the ultrasound transducer and may require manual repositioning.

To enable 3D needle steering, movement of the transducer may be required, as demonstrated in [12]. That proposed framework is valuable and worked well for the performed experiments, but it may be difficult to apply the methodology in a clinical scenario due to the robot kinematics (linear stages might not be sufficient to cover patient motions realistically) and lack of force sensing.

In this paper, we present a system to combine planning, imaging, and action, aiming to provide a proof of concept for developments toward dedicated clinical applications. The proposed solution incorporates two lightweight robots (i) to autonomously acquire ultrasound images with one arm and place the transducer so that (ii) a needle can be inserted accurately with the other arm based on a preoperative definition of the region of interest and an intended needle target position.

The transformations flange-to-camera \(^{\mathrm{C}\{1,2\}}\mathbf{T}_{\mathrm{F}\{1,2\}}\), flange-to-needle tip \(^{\mathrm{NT}}\mathbf{T}_{\mathrm{F2}}\), and base-to-base \(^{\mathrm{B2}}\mathbf{T}_{\mathrm{B1}}\) need to be calibrated, while the transformations base-to-flange \(^{\mathrm{F}\{1,2\}}\mathbf{T}_{\mathrm{B}\{1,2\}}\) are provided by the robot.

Materials and methods

System overview and workflow

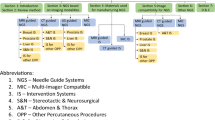

To achieve robotic ultrasound-guided needle insertion based on preoperative imaging, several tasks need to be performed, which can be organized into three phases: initialization, ultrasound volume acquisition and processing, and ultrasound-guided needle insertion. The following paragraph refers to the workflow depicted in Fig. 1.

During the initialization phase (red boxes), medical experts review preoperative images and define the region of interest (A). The robot–robot calibration (B) is performed once the robots are positioned in the intervention room, before the patient arrives. In the second phase, an autonomous ultrasound volume acquisition is performed using the first robot (blue boxes). This phase includes a 3D surface scan and planning of the ultrasound acquisition (1), ultrasound acquisition and volume compounding (2), and the registration of the preoperative images with the ultrasound volume (3). At this point, the region of interest is transferred to the robot coordinate system. In the third phase, the ultrasound-guided needle insertion is performed using the second robot (green boxes). Based on the ultrasound volume and preoperative imaging, the needle trajectory is planned. Then, the ultrasound transducer mounted on the first robot is automatically positioned to enable the simultaneous observation of the needle and the region of interest (4). We define the ideal transducer position so that the needle moves within the imaging plane, as it is desired to observe the needle during the entire injection process. Following an automatic initialization of the needle position close to the point of entry to allow a final safety check, the second robot slowly inserts the rigid needle (5). Visual servoing allows the update of the needle trajectory based on the live ultrasound images, the tracked target anatomy, and the needle tip detected therein (6). The required steps for each of the three phases are explained in detail in the following sections.

Initialization: robot and camera calibrations

In this section, we explain how to obtain the unknown transformations. Figure 2 illustrates the chain of transformations in our dual-robot framework, where each robot is equipped with an RGB-D camera, robot 1 holds the ultrasound transducer, and the needle is mounted on robot 2.

We use classical pivot calibration to compute the flange-to-needle tip transformation \(^{\mathrm{NT}}\mathbf{T}_{\mathrm{F2}}\) (see, e.g., [13]). The transformations \(^{\mathrm{C}\{1,2\}}\mathbf{T}_{\mathrm{F}\{1,2\}}\) are obtained through hand-eye calibration [14], where {1, 2} refers to robots 1 and 2. For one robot at two poses \(h\) and \(h+1\), the chain of transformations can be defined as:
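Assuming the checkerboard stays fixed relative to the base of robot 1, one standard way to write this chain is sketched below in the \(\mathbf{A}\mathbf{X} = \mathbf{X}\mathbf{B}\) form solved in [14]; the superscript \((h)\) marks quantities measured at pose \(h\), and the exact arrangement of Eq. (1) may differ:

```latex
\begin{aligned}
{}^{\mathrm{CB}}\mathbf{T}_{\mathrm{C1}}^{(h)}\,{}^{\mathrm{C1}}\mathbf{T}_{\mathrm{F1}}\,{}^{\mathrm{F1}}\mathbf{T}_{\mathrm{B1}}^{(h)}
  &= {}^{\mathrm{CB}}\mathbf{T}_{\mathrm{B1}}
   = {}^{\mathrm{CB}}\mathbf{T}_{\mathrm{C1}}^{(h+1)}\,{}^{\mathrm{C1}}\mathbf{T}_{\mathrm{F1}}\,{}^{\mathrm{F1}}\mathbf{T}_{\mathrm{B1}}^{(h+1)} \\
\Rightarrow\quad \mathbf{A}\mathbf{X} &= \mathbf{X}\mathbf{B}, \qquad
\mathbf{A} = \bigl({}^{\mathrm{CB}}\mathbf{T}_{\mathrm{C1}}^{(h+1)}\bigr)^{-1}\,{}^{\mathrm{CB}}\mathbf{T}_{\mathrm{C1}}^{(h)}, \quad
\mathbf{X} = {}^{\mathrm{C1}}\mathbf{T}_{\mathrm{F1}}, \quad
\mathbf{B} = {}^{\mathrm{F1}}\mathbf{T}_{\mathrm{B1}}^{(h+1)}\,\bigl({}^{\mathrm{F1}}\mathbf{T}_{\mathrm{B1}}^{(h)}\bigr)^{-1}
\end{aligned}
```

The second line follows by left-multiplying the first by \(\bigl({}^{\mathrm{CB}}\mathbf{T}_{\mathrm{C1}}^{(h+1)}\bigr)^{-1}\) and right-multiplying by \(\bigl({}^{\mathrm{F1}}\mathbf{T}_{\mathrm{B1}}^{(h)}\bigr)^{-1}\); several pose pairs are needed to determine \(\mathbf{X}\) uniquely.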

where the camera centers for poses \(h\) and \(h+1\) are obtained by tracking a checkerboard [15]. Finally, Eq. (1) needs to be solved for \(^{\mathrm{C1}}\mathbf{T}_{\mathrm{F1}}\). The calibration for the second robot is performed analogously. The known design of the transducer mount is used to initialize the standard ultrasound-to-tracking calibration [16]. The Tool Center Points (TCP) are defined to be the ultrasound transducer apex and the needle tip. After the flange-to-camera calibrations have been performed for both robots, the base-to-base transformation \(^{\mathrm{B2}}\mathbf{T}_{\mathrm{B1}}\) is obtained by observing one common target, such as a checkerboard (CB):

where \(^{\mathrm{CB}}\mathbf{T}_{\mathrm{C}\{1,2\}}\) are the transformations from camera center to checkerboard (CB) obtained as presented in [15].

The final base-to-base transformation \(^{\mathrm{B2}}\mathbf{T}_{\mathrm{B1}}\) is calculated by averaging the base-to-base transformations computed at different robot poses.
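Written out, both camera chains end at the same checkerboard, so \(^{\mathrm{B2}}\mathbf{T}_{\mathrm{B1}} = \bigl({}^{\mathrm{CB}}\mathbf{T}_{\mathrm{C2}}\,{}^{\mathrm{C2}}\mathbf{T}_{\mathrm{F2}}\,{}^{\mathrm{F2}}\mathbf{T}_{\mathrm{B2}}\bigr)^{-1}\,{}^{\mathrm{CB}}\mathbf{T}_{\mathrm{C1}}\,{}^{\mathrm{C1}}\mathbf{T}_{\mathrm{F1}}\,{}^{\mathrm{F1}}\mathbf{T}_{\mathrm{B1}}\). The Python sketch below composes this chain with plain 4 × 4 nested lists and averages several estimates. The averaging scheme (arithmetic mean of translations plus a sign-aligned, renormalized quaternion mean) is an assumption, since the text does not state how the average is taken; it is only a good approximation when the individual estimates are close to each other.

```python
import math


def matmul(a, b):
    """Product of two 4x4 homogeneous transforms stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def inv_rigid(t):
    """Inverse of a rigid transform [R p; 0 1], i.e. [R^T  -R^T p; 0 1]."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [-sum(r_t[i][k] * t[k][3] for k in range(3)) for i in range(3)]
    return [r_t[0] + [p[0]], r_t[1] + [p[1]], r_t[2] + [p[2]], [0.0, 0.0, 0.0, 1.0]]


def base_to_base(cb_T_c1, c1_T_f1, f1_T_b1, cb_T_c2, c2_T_f2, f2_T_b2):
    """B2_T_B1 from one simultaneous checkerboard observation by both cameras."""
    cb_T_b1 = matmul(cb_T_c1, matmul(c1_T_f1, f1_T_b1))
    cb_T_b2 = matmul(cb_T_c2, matmul(c2_T_f2, f2_T_b2))
    return matmul(inv_rigid(cb_T_b2), cb_T_b1)


def rot_to_quat(r):
    """Rotation part of r -> unit quaternion (w, x, y, z), branch on the largest term."""
    tr = r[0][0] + r[1][1] + r[2][2]
    if tr > 0:
        s = math.sqrt(tr + 1.0) * 2  # s = 4w
        return (0.25 * s, (r[2][1] - r[1][2]) / s, (r[0][2] - r[2][0]) / s, (r[1][0] - r[0][1]) / s)
    if r[0][0] > r[1][1] and r[0][0] > r[2][2]:
        s = math.sqrt(1.0 + r[0][0] - r[1][1] - r[2][2]) * 2  # s = 4x
        return ((r[2][1] - r[1][2]) / s, 0.25 * s, (r[0][1] + r[1][0]) / s, (r[0][2] + r[2][0]) / s)
    if r[1][1] > r[2][2]:
        s = math.sqrt(1.0 + r[1][1] - r[0][0] - r[2][2]) * 2  # s = 4y
        return ((r[0][2] - r[2][0]) / s, (r[0][1] + r[1][0]) / s, 0.25 * s, (r[1][2] + r[2][1]) / s)
    s = math.sqrt(1.0 + r[2][2] - r[0][0] - r[1][1]) * 2  # s = 4z
    return ((r[1][0] - r[0][1]) / s, (r[0][2] + r[2][0]) / s, (r[1][2] + r[2][1]) / s, 0.25 * s)


def quat_to_rot(w, x, y, z):
    """Unit quaternion -> 3x3 rotation matrix."""
    return [[1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w)],
            [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w)],
            [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y)]]


def average_transforms(transforms):
    """Mean translation plus sign-aligned, renormalized mean quaternion."""
    n = len(transforms)
    p = [sum(t[i][3] for t in transforms) / n for i in range(3)]
    ref = rot_to_quat(transforms[0])
    acc = [0.0, 0.0, 0.0, 0.0]
    for t in transforms:
        q = rot_to_quat(t)
        # q and -q encode the same rotation; align signs before summing.
        sign = 1.0 if sum(a * b for a, b in zip(q, ref)) >= 0 else -1.0
        acc = [a + sign * b for a, b in zip(acc, q)]
    norm = math.sqrt(sum(a * a for a in acc))
    r = quat_to_rot(*[a / norm for a in acc])
    return [r[0] + [p[0]], r[1] + [p[1]], r[2] + [p[2]], [0.0, 0.0, 0.0, 1.0]]
```

In use, `base_to_base` would be evaluated once per robot configuration in which both cameras see the checkerboard, and `average_transforms` applied to the resulting list.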

It is assumed that \(^{\mathrm{B2}}\mathbf{T}_{\mathrm{B1}}\) does not change during the procedure; therefore, only one depth camera is used in the experimental setup. Due to the rigid mounting of the camera, US transducer, and needle, all calibration steps are performed only once and do not need to be carried out by the clinical staff for each intervention.

Ultrasound volume acquisition and processing

3D surface scan To obtain a 3D scan of the patient’s surface, one robot is manually positioned in an observation pose. The real-time RGB-D data from the camera mounted on the end-effector are visualized and presented to the operator, who interactively selects the region of interest (ROI) by drawing a rectangle around the relevant part of the patient’s surface. To acquire optimal ultrasound images of the selected ROI, the transducer needs to be placed perpendicular to the surface. Therefore, surface normal vectors are computed using a kNN-based approach [17].
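As a minimal sketch of kNN-based normal estimation, assuming the common PCA formulation (the normal is the eigenvector of the neighborhood covariance with the smallest eigenvalue), the Python code below uses a brute-force neighbor search and power iteration instead of a k-d tree and a library eigen-solver. The function names, the default `k`, and flipping normals toward the camera are illustrative assumptions, not necessarily the implementation used here.

```python
import math


def knn(points, query, k):
    """Brute-force k nearest neighbours of `query` (a k-d tree would scale better)."""
    return sorted(points, key=lambda p: sum((a - b) ** 2 for a, b in zip(p, query)))[:k]


def covariance(pts):
    """3x3 covariance matrix of a list of 3D points."""
    n = len(pts)
    mean = [sum(p[i] for p in pts) / n for i in range(3)]
    return [[sum((p[i] - mean[i]) * (p[j] - mean[j]) for p in pts) / n
             for j in range(3)] for i in range(3)]


def smallest_eigenvector(c, iters=200):
    """Power iteration on trace(C) * I - C, whose dominant eigenvector is the
    eigenvector of the (positive semi-definite) C with the smallest eigenvalue."""
    tr = c[0][0] + c[1][1] + c[2][2]
    m = [[(tr if i == j else 0.0) - c[i][j] for j in range(3)] for i in range(3)]
    v = [1.0, 1.0, 1.0]
    for _ in range(iters):
        w = [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return v


def surface_normal(points, query, k=10, camera=(0.0, 0.0, 0.0)):
    """Unit surface normal at `query`, flipped to point toward the camera."""
    n = smallest_eigenvector(covariance(knn(points, query, k)))
    to_camera = [c - q for c, q in zip(camera, query)]
    if sum(a * b for a, b in zip(n, to_camera)) < 0:
        n = [-x for x in n]
    return n
```

The transducer orientation for a surface point would then be chosen antiparallel to this normal, so that the transducer is perpendicular to the skin.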

Trajectory planning and execution The trajectory is planned by projecting the ROI onto the patient’s surface and optimizing for coverage of the target volume [6]. This includes a principal component analysis of the ROI, which allows a trajectory to be computed along its main axis to optimize ROI coverage. Before the ultrasound is acquired, the operator applies sufficient ultrasound gel to achieve acoustic coupling and is prompted to verify the planned trajectory. Two-dimensional ultrasound images are then recorded simultaneously with the mechanical tracking data provided by the robotic system. After the data are recorded, the ultrasound volume is compounded using quadrilateral interpolation, which offers a good trade-off between computational performance and image quality [18].
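The principal-axis step can be sketched as follows, assuming the ROI is available as a list of 3D surface points: the dominant covariance eigenvector (found here by power iteration) gives the main axis, and waypoints are spaced along it over the ROI extent. The step size and function names are hypothetical; the waypoints lie on the axis line through the centroid and would still have to be projected onto the surface and combined with the surface normals, which this sketch omits.

```python
import math


def principal_axis(points, iters=200):
    """Centroid and dominant covariance eigenvector (power iteration)."""
    n = len(points)
    mean = [sum(p[i] for p in points) / n for i in range(3)]
    cov = [[sum((p[i] - mean[i]) * (p[j] - mean[j]) for p in points) / n
            for j in range(3)] for i in range(3)]
    v = [1.0, 0.5, 0.25]  # arbitrary start vector
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(3)) for i in range(3)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return mean, v


def sweep_waypoints(points, step=5.0):
    """Equally spaced waypoints along the main ROI axis, covering its extent."""
    mean, axis = principal_axis(points)
    proj = [sum((p[i] - mean[i]) * axis[i] for i in range(3)) for p in points]
    lo, hi = min(proj), max(proj)
    count = int((hi - lo) / step + 1e-9) + 1  # small tolerance against rounding
    return [[mean[i] + (lo + k * step) * axis[i] for i in range(3)] for k in range(count)]
```

For a 100 mm × 20 mm rectangular ROI and a 5 mm step, this yields 21 waypoints along the long side.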

During the entire procedure, the impedance control mode is used to achieve compliant robot behavior. The motion model is based on virtual springs and dampers, whose forces depend on the deviation between the measured and the specified position of the TCP. The robot exerts a constant force onto the patient’s surface, maintaining continuous contact between the ultrasound transducer and the patient’s surface. In combination with the previously applied ultrasound gel, this ensures sufficient acoustic coupling. The impedance controller is described in detail in [19].
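To illustrate the virtual spring-damper idea, here is a toy one-dimensional sketch, not the controller of [19]: the commanded force is \(F = k(x_d - x) - d\,\dot{x}\), and tissue is modeled as a linear spring. All stiffness, damping, and mass values are arbitrary assumptions chosen only to make the example stable.

```python
def impedance_force(x_d, x, v, k, d):
    """Virtual spring-damper along one axis: F = k (x_d - x) - d v."""
    return k * (x_d - x) - d * v


def simulate_contact(x_d, k=500.0, d=60.0, tissue_k=2000.0, mass=1.0, dt=1e-3, steps=5000):
    """Toy 1D model: a probe of given mass, driven by the impedance law toward the
    setpoint x_d (m), presses into tissue modelled as a linear spring for x > 0.
    Returns the final position and the tissue reaction (contact) force."""
    x, v = 0.0, 0.0
    for _ in range(steps):
        contact = tissue_k * x if x > 0 else 0.0
        a = (impedance_force(x_d, x, v, k, d) - contact) / mass
        v += a * dt  # semi-implicit Euler: update velocity first
        x += v * dt
    return x, tissue_k * max(x, 0.0)
```

For \(x_d = 0.01\) m, the analytic equilibrium force \(k\,k_t\,x_d/(k + k_t)\) is 4 N, which the simulation reproduces. Because this value depends on the tissue stiffness \(k_t\), a fixed setpoint offset alone does not guarantee a constant force on tissue of unknown stiffness.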

Registration of preoperative and intraoperative imaging During the initialization phase, the physician selects the ROI in preoperative images, such as ultrasound, CT, or MRI volumes. To obtain the position of this target in the robot coordinate system, the ultrasound volume and preoperative images are registered. Using the LC\(^{2}\) similarity measure [20, 21] and a nonlinear optimizer, such as BOBYQA [22], the volumes can be aligned. In the current scenario, we expect the tissue deformation to primarily be caused by the pressure applied by the transducer. Therefore, we perform the registration by estimating an affine transformation to find the target within the ultrasound volume.
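The core of the LC\(^{2}\) measure can be sketched on a single patch, assuming the formulation in which ultrasound intensities are approximated by a linear combination \(\alpha\,p + \beta\,g + \gamma\) of the preoperative intensity \(p\) and its gradient magnitude \(g\), with a score of one minus the residual variance over the ultrasound variance. The full measure of [20, 21] evaluates this on local neighborhoods and combines them with variance weights, and the optimizer (e.g., BOBYQA) would search over the affine parameters; neither is reproduced in this sketch.

```python
def solve3(a, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        piv = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, 3):
            f = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= f * m[col][c]
    x = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):
        x[r] = (m[r][3] - sum(m[r][c] * x[c] for c in range(r + 1, 3))) / m[r][r]
    return x


def lc2_patch(us, intensity, gradient):
    """LC^2 on one patch: least-squares fit us ~ a*intensity + b*gradient + c,
    returning 1 - residual variance / ultrasound variance (in [0, 1])."""
    n = len(us)
    feats = [(p, g, 1.0) for p, g in zip(intensity, gradient)]
    ata = [[sum(f[i] * f[j] for f in feats) for j in range(3)] for i in range(3)]
    atb = [sum(f[i] * u for f, u in zip(feats, us)) for i in range(3)]
    coef = solve3(ata, atb)  # normal equations of the linear fit
    mean_u = sum(us) / n
    var_u = sum((u - mean_u) ** 2 for u in us) / n
    res = sum((u - sum(c * f_i for c, f_i in zip(coef, f))) ** 2 for u, f in zip(us, feats)) / n
    return 1.0 - res / var_u if var_u > 0 else 0.0
```

A patch whose ultrasound values are exactly such a linear combination scores 1; unexplained variation lowers the score toward 0.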

Ultrasound-guided needle insertion

Robot positioning and planning The needle insertion point and trajectory are computed under the constraint that the ultrasound transducer is positioned perpendicular to the patient’s surface, and the needle and target appear on the same image plane. For safety reasons, the needle is only allowed to traverse tissue that has already been imaged during the ultrasound volume acquisition, allowing the avoidance of critical anatomical structures.

While one robot holds the ultrasound transducer, the second robot injects the needle. The point of injection is computed by intersecting the image plane with the patient’s surface. Additional constraints arise from collision avoidance and needle length. Needle tracking (yellow line) within the needle neighborhood (diagonal gray rectangle), as well as target tracking (red circle) under consideration of the target neighborhood (gray square), is explained in Sect. 2.4. The adaptively planned needle trajectory is visualized in red.

The imaging plane is defined by the patient’s surface, the target, and the first principal component of the ultrasound volume. This reduces the free configuration of the second robot, as the needle injection point now needs to lie along a curve defined by the intersection of the image plane and the patient’s surface (see Fig. 3). We aim at a needle path inside the patient that is as short as possible, which limits the damage inflicted on the tissue and minimizes the possibility of needle bending. By solving the kinematics of the robots under consideration of collision avoidance and minimal safety distances, the needle injection point is determined and visualized within the previously acquired ultrasound volume.
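The selection of the injection point can be sketched as a search over sampled points of the plane-surface intersection curve, keeping the shortest straight path to the target that the needle can still reach. The predicate `is_feasible` is a hypothetical stand-in for the kinematic, collision-avoidance, and safety-distance checks, which are not reproduced here.

```python
import math


def choose_entry_point(candidates, target, needle_length, is_feasible=lambda p: True):
    """Return the candidate entry point with the shortest straight path to the
    target that fits within the needle length and passes `is_feasible`."""
    best, best_len = None, math.inf
    for p in candidates:
        path = math.dist(p, target)
        if path <= needle_length and path < best_len and is_feasible(p):
            best, best_len = p, path
    return best, best_len
```

If no candidate satisfies the constraints, the function returns `(None, inf)`, which a planner would report as "target not reachable from this imaging plane".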

Target tracking for visual servoing The visual error is directly determined by performing intensity-based registration of ultrasound images [7]. First, an initial target neighborhood \(\varOmega_0\) is defined based on the region of interest, which was previously transferred from diagnostic imaging to the ultrasound image (see the “Ultrasound volume acquisition and processing” section). To guarantee sufficient overlap for intensity-based registration while minimizing the computational effort, we suggest a target neighborhood size of approximately \(10\,\%\) of the image width. The initial target neighborhood \(\varOmega_0\) is confirmed by the user.

Then, the movement of the target neighborhood is determined frame-to-frame by registering the neighborhood \(\varOmega_{t-1}\) to the current ultrasound image \(I_t\), using normalized cross-correlation (NCC) as similarity measure and BOBYQA as optimizer. Because the deformation between two frames is assumed to be very small, a rigid transformation can be used. For target tracking, we are interested in the position of the target relative to the ultrasound apex, which corresponds to the TCP. Knowing the original pose of the target at \(t=0\), the result can be described as the transformation \(^{\mathrm{US}}\mathbf{T}_{\mathrm{Target}}\), which represents the position of the target in the current image \(I_t\) relative to the ultrasound origin (US).
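The frame-to-frame step can be sketched as follows. To keep the example self-contained, it swaps BOBYQA for an exhaustive search over integer pixel translations and ignores rotation, so it is a simplified stand-in for the rigid registration described above; the search radius and function names are assumptions.

```python
import math


def ncc(a, b):
    """Normalized cross-correlation of two equally long flat intensity lists."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    da = [x - ma for x in a]
    db = [y - mb for y in b]
    den = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return sum(x * y for x, y in zip(da, db)) / den if den > 0 else 0.0


def crop(img, top, left, h, w):
    """Flatten the h x w window of a 2D list starting at (top, left)."""
    return [img[r][c] for r in range(top, top + h) for c in range(left, left + w)]


def track_patch(prev_img, cur_img, top, left, h, w, radius=3):
    """Integer shift (dy, dx) of the neighbourhood maximizing NCC, plus its score."""
    template = crop(prev_img, top, left, h, w)
    best_score, best_dy, best_dx = -2.0, 0, 0
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            r, c = top + dy, left + dx
            if r < 0 or c < 0 or r + h > len(cur_img) or c + w > len(cur_img[0]):
                continue  # window would leave the image
            score = ncc(template, crop(cur_img, r, c, h, w))
            if score > best_score:
                best_score, best_dy, best_dx = score, dy, dx
    return best_dy, best_dx, best_score
```

Accumulating the per-frame shifts from \(t=0\) and converting pixels to millimeters with the image spacing gives the translational part of \(^{\mathrm{US}}\mathbf{T}_{\mathrm{Target}}\).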

Needle tracking for visual servoing The needle appears in the image as a thin, high-intensity line. The point of needle injection and the planned trajectory are known. Furthermore, the speed and approximate trajectory of the needle are known, which allows the search space to be reduced to a region that we refer to as the needle neighborhood \(\varTheta\). Using a RANSAC-based approach with Kalman filtering [23], the needle can be detected and tracked in real time. At each time \(t\), the changes in \(\varTheta\) are determined by simple subtraction, \(\varDelta\varTheta_t = |\varTheta_t - \varTheta_{t-1}|\).
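The change detection in \(\varTheta\) and the Otsu thresholding used to select candidate pixels can be sketched as below, assuming 8-bit integer intensities stored as 2D lists. The median filtering step that removes speckle is omitted, and the function names are illustrative.

```python
def frame_difference(cur, prev):
    """Absolute per-pixel change |Theta_t - Theta_{t-1}| in the needle neighbourhood."""
    return [[abs(a - b) for a, b in zip(row_t, row_p)] for row_t, row_p in zip(cur, prev)]


def otsu_threshold(values, levels=256):
    """Otsu's method for integer values in [0, levels): return the threshold t
    maximizing the between-class variance of {v <= t} and {v > t}."""
    hist = [0] * levels
    for v in values:
        hist[v] += 1
    total = len(values)
    sum_all = sum(i * h for i, h in enumerate(hist))
    w0, sum0 = 0, 0
    best_t, best_score = 0, -1.0
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0:
            continue
        if w1 == 0:
            break
        m0, m1 = sum0 / w0, (sum_all - sum0) / w1
        score = w0 * w1 * (m0 - m1) ** 2  # proportional to between-class variance
        if score > best_score:
            best_t, best_score = t, score
    return best_t


def candidate_pixels(cur, prev):
    """Pixels whose frame-to-frame change lies above the Otsu threshold."""
    diff = frame_difference(cur, prev)
    t = otsu_threshold([v for row in diff for v in row])
    return [(r, c) for r, row in enumerate(diff) for c, v in enumerate(row) if v > t]
```

Small speckle-like changes fall into the lower Otsu class, while the strong change caused by the advancing needle ends up in the candidate set.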

A set of candidate pixels is detected by thresholding with Otsu’s method [24]: \(W_t = \{w_{i,t} \in \varTheta_t \mid \varDelta\varTheta_t(w_{i,t}) \ge T_{\text{Otsu},t}\}\). Subsequently, artifacts from ultrasound speckle are removed using a median filter, resulting in a reduced candidate set \(\hat{W}_t\). At each time \(t\), the needle is modeled by a polynomial curve \(C_t\) of degree \(n\) with \(n\) control points \(P_t = \{p_{m,t} \mid p_{m,t} = [x_{m,t}, y_{m,t}, z_{m,t}]\}_{m=1}^{n}\). The \(k\) candidate polynomial curves are fit to the reduced candidate set \(\hat{W}_t\) using the RANSAC algorithm [25], and the best fit is determined by computing the distance between the points in the candidate set and the polynomial curve.
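A minimal RANSAC sketch for a second-degree curve, assuming 2D image points and the explicit form \(y = a x^2 + b x + c\) (a needle that is close to vertical in the image would need the parameterization \(x(y)\) instead): each hypothesis is the exact parabola through three random points, scored by its number of inliers. For simplicity, the vertical residual stands in for the point-to-curve distance; the iteration count, tolerance, and seed are arbitrary assumptions.

```python
import random


def fit_quadratic(p1, p2, p3):
    """Exact parabola through three points via divided differences."""
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    if len({x1, x2, x3}) < 3:
        return None  # degenerate sample: x values must be distinct
    s12 = (y2 - y1) / (x2 - x1)
    s13 = (y3 - y1) / (x3 - x1)
    a = (s13 - s12) / (x3 - x2)
    b = s12 - a * (x1 + x2)
    c = y1 - a * x1 * x1 - b * x1
    return a, b, c


def ransac_quadratic(points, iterations=200, tol=1.5, seed=0):
    """Return ((a, b, c), inliers) for the hypothesis with the most inliers,
    an inlier being a point with |y - (a x^2 + b x + c)| <= tol."""
    rng = random.Random(seed)
    best_model, best_inliers = None, []
    for _ in range(iterations):
        model = fit_quadratic(*rng.sample(points, 3))
        if model is None:
            continue
        a, b, c = model
        inliers = [(x, y) for x, y in points if abs(y - (a * x * x + b * x + c)) <= tol]
        if len(inliers) > len(best_inliers):
            best_model, best_inliers = model, inliers
    return best_model, best_inliers
```

A least-squares refit on the returned inliers is a common final step; it is left out here.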

However, in real tissue or realistic phantoms, this approach is prone to failure because tissue structures occlude the needle or appear too similar to it. We therefore introduce knowledge based on the previous needle path and current shape, so as to consider new candidate points only in a ROI around the estimated needle tip. For each candidate pixel \(p(x, y)\) in the current image frame \(k\), we calculate the minimum Euclidean distance \(d\) to the second-degree polynomial curve \(C_t\); setting the derivative of the squared distance to zero yields a cubic equation. After finding the solutions of this cubic equation, its first solution \(W_{Rk} = \arg\min_W d(p, C_t(W))\) is used to update the weight map at that pixel location by \(W_k(p) = W_{k-1}(p) + W_{Rk}\). Only the pixels above a weight threshold are considered as candidate points in the RANSAC polynomial fitting.
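The cubic arises from differentiating the squared point-to-curve distance. A minimal sketch, assuming the explicit parameterization \(y = a x^2 + b x + c\) in image coordinates (an assumption; the text does not fix the parameterization), finds the real roots numerically by sampling for sign changes and bisecting, then keeps the closest one. The weight-map update itself is not reproduced.

```python
import math


def distance_to_parabola(px, py, a, b, c, samples=400):
    """Minimum Euclidean distance from (px, py) to the curve y = a x^2 + b x + c.

    Setting the derivative of the squared distance to zero gives the cubic
    2a^2 x^3 + 3ab x^2 + (b^2 + 2a(c - py) + 1) x + b(c - py) - px = 0.
    """
    def f(x):
        return a * x * x + b * x + c

    def g(x):  # half the derivative of the squared distance
        e = c - py
        return 2 * a * a * x ** 3 + 3 * a * b * x ** 2 + (b * b + 2 * a * e + 1) * x + b * e - px

    def dist2(x):
        return (x - px) ** 2 + (f(x) - py) ** 2

    r = abs(f(px) - py)  # (px, f(px)) lies on the curve, so the nearest point is within r
    if r == 0:
        return 0.0
    lo, hi = px - r, px + r
    xs = [lo + (hi - lo) * i / samples for i in range(samples + 1)]
    candidates = [lo, hi]
    for x0, x1 in zip(xs, xs[1:]):
        if g(x0) == 0:
            candidates.append(x0)
        elif g(x0) * g(x1) < 0:
            for _ in range(60):  # bisection on the sign change
                mid = 0.5 * (x0 + x1)
                if g(x0) * g(mid) <= 0:
                    x1 = mid
                else:
                    x0 = mid
            candidates.append(0.5 * (x0 + x1))
    return math.sqrt(min(dist2(x) for x in candidates))
```

The search interval is bounded because the vertically projected point already lies on the curve, so the nearest curve point cannot be farther away than that.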

Using an extended Kalman filter [26], the update of the control points is performed based on the tracking information provided by the robot performing the needle injection and the needle tracking algorithm. This filtering step significantly improves the localization stability and results in a transformation from the observed needle tip (oNT) to the ultrasound origin, \(^{\mathrm{US}}\mathbf{T}_{\mathrm{oNT}}\). Finally, the visual error between the expected (based on mechanical tracking) and observed (based on RANSAC and Kalman filter) needle tip positions can be computed:
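Assuming that \(^{\mathrm{A}}\mathbf{T}_{\mathrm{B}}\) maps frame B to frame A, as in Fig. 2, one way to express this error is as a relative transform between the expected tip, obtained from the calibrated kinematic chain, and the observed tip. This is a sketch consistent with the \(^{\mathrm{oNT}}\mathbf{T}_{\mathrm{NT}}\) term used in the control law, not necessarily the published equation:

```latex
\begin{aligned}
{}^{\mathrm{US}}\mathbf{T}_{\mathrm{NT}} &= {}^{\mathrm{US}}\mathbf{T}_{\mathrm{F1}}\,{}^{\mathrm{F1}}\mathbf{T}_{\mathrm{B1}}\,
  \bigl({}^{\mathrm{B2}}\mathbf{T}_{\mathrm{B1}}\bigr)^{-1}\bigl({}^{\mathrm{F2}}\mathbf{T}_{\mathrm{B2}}\bigr)^{-1}\bigl({}^{\mathrm{NT}}\mathbf{T}_{\mathrm{F2}}\bigr)^{-1}, \\
{}^{\mathrm{oNT}}\mathbf{T}_{\mathrm{NT}} &= \bigl({}^{\mathrm{US}}\mathbf{T}_{\mathrm{oNT}}\bigr)^{-1}\,{}^{\mathrm{US}}\mathbf{T}_{\mathrm{NT}}.
\end{aligned}
```

A perfect calibration with exact tip detection would give \(^{\mathrm{oNT}}\mathbf{T}_{\mathrm{NT}} = \mathbf{I}\); any deviation from the identity is the visual error.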

Visual control law and needle trajectory update The visual control law determines the new needle trajectory under consideration of the transformations provided by the robot holding the ultrasound transducer, \(^{\mathrm{F1}}\mathbf{T}_{\mathrm{B1}}\) (constantly updated, as the transducer may move while the robot remains in impedance control mode), the target tracking algorithm, \(^{\mathrm{US}}\mathbf{T}_{\mathrm{Target}}\), and the needle tracking algorithm, \(^{\mathrm{oNT}}\mathbf{T}_{\mathrm{NT}}\). The TCP pose (needle tip position and orientation) can now be updated according to:
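A minimal Python sketch of one plausible form of this update, under stated assumptions: it expresses the tracked target in the base frame of robot 2 as \(^{\mathrm{B2}}\mathbf{T}_{\mathrm{Target}} = {}^{\mathrm{B2}}\mathbf{T}_{\mathrm{B1}}\,\bigl({}^{\mathrm{F1}}\mathbf{T}_{\mathrm{B1}}\bigr)^{-1}\bigl({}^{\mathrm{US}}\mathbf{T}_{\mathrm{F1}}\bigr)^{-1}\,{}^{\mathrm{US}}\mathbf{T}_{\mathrm{Target}}\) and shifts the commanded tip position by the difference between the expected and observed tip positions (both assumed to be already expressed in the robot-2 base frame). The function `corrected_tip_command` and its translation-only correction are hypothetical; the needle orientation is left unchanged.

```python
def matmul(a, b):
    """Product of two 4x4 homogeneous transforms stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def inv_rigid(t):
    """Inverse of a rigid transform [R p; 0 1], i.e. [R^T  -R^T p; 0 1]."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [-sum(r_t[i][k] * t[k][3] for k in range(3)) for i in range(3)]
    return [r_t[0] + [p[0]], r_t[1] + [p[1]], r_t[2] + [p[2]], [0.0, 0.0, 0.0, 1.0]]


def corrected_tip_command(b2_T_b1, f1_T_b1, us_T_f1, us_T_target, expected_tip_b2, observed_tip_b2):
    """Hypothetical update: target position in the robot-2 base frame, shifted by
    the expected-minus-observed needle tip offset (translation only)."""
    b2_T_target = matmul(b2_T_b1, matmul(inv_rigid(f1_T_b1), matmul(inv_rigid(us_T_f1), us_T_target)))
    target = [b2_T_target[i][3] for i in range(3)]
    # If the real tip lags the expected tip by some offset, command that much further.
    offset = [e - o for e, o in zip(expected_tip_b2, observed_tip_b2)]
    return [t + d for t, d in zip(target, offset)]
```

Because \(^{\mathrm{F1}}\mathbf{T}_{\mathrm{B1}}\) is re-read at every cycle, motion of the compliant imaging robot is automatically reflected in the commanded tip position.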

Target tracking and needle detection are executed continuously, allowing the visual servoing to be performed in near real time. All trajectories are generated using cubic polynomials with via-points. The maximum applied force is set to 5 N.
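A simple instance of cubic trajectories through via-points can be sketched as one cubic per segment with zero velocity at both ends, \(q(t) = q_0 + (q_1 - q_0)(3s^2 - 2s^3)\) with \(s = t/T\). This variant stops briefly at every via-point; smoother schemes assign nonzero via-point velocities, and which variant the framework uses is not stated, so this is an assumption. The sampling period of 0.02 s is chosen to match a 50 Hz command rate.

```python
def cubic_segment(q0, q1, duration, t):
    """Cubic blend with zero start/end velocity: q0 + (q1 - q0)(3s^2 - 2s^3), s = t/T."""
    s = min(max(t / duration, 0.0), 1.0)
    blend = 3 * s * s - 2 * s ** 3
    return [a + (b - a) * blend for a, b in zip(q0, q1)]


def sample_via_points(via, durations, dt):
    """Sample a path through the via-points, one cubic per segment, at period dt."""
    samples = []
    for (q0, q1), seg_t in zip(zip(via, via[1:]), durations):
        steps = int(round(seg_t / dt))
        samples.extend(cubic_segment(q0, q1, seg_t, k * dt) for k in range(steps))
    samples.append(list(via[-1]))  # end exactly on the last via-point
    return samples
```

With three via-points and segment durations of 1 s and 2 s, this produces 151 Cartesian set-points, each of which would be sent as one command.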

Experiments and results

Experimental setup

For both intraoperative and preoperative imaging, we use an Ultrasonix® SonixTable\(^\mathrm{TM}\) system together with a curvilinear transducer C5-2/60 to obtain the ultrasound images (Ultrasonix Medical Corp., Richmond, BC, Canada). The acquisition rate, frequency, depth, and gain are set to 32 Hz, 5.0 MHz, 90 mm, and 32 %, respectively. Using the Ultrasonix® API, the images are transferred via Ethernet to the image processing computer. The needle is a standard 18 gauge needle for seed placement. The RGB-D camera is an Intel® RealSense\(^\mathrm{TM}\) F200 3D camera, which provides RGB data at \(1920 \times 1080\) pixels at 30 frames per second and depth images at a resolution of \(640 \times 480\) pixels at 60 frames per second. The observable depth range is 0.2–1.2 m. To mount the ultrasound transducer, needle, and RGB-D cameras to the robots, custom adapters were designed and 3D printed. For the experiments, we use two identical KUKA LBR Intelligent Industrial Work Assistant (iiwa) 7 R800 robots (KUKA Roboter GmbH, Augsburg, Germany). Each robotic system consists of a 7-joint arm with corresponding control units and thus provides one redundant degree of freedom (7 in total). As a result of this design, the robot provides dynamic movement and flexible adaptation of trajectories to the working environment. With respect to robotic ultrasound, the high-accuracy torque sensors in each of the seven joints are equally important, as a robotic ultrasound framework has to be fully compliant to both patient and staff. Detailed specifications can be found in [27]. The experimental setup is shown in Fig. 4.

During needle placement, the target ROI is continuously co-registered between two sequential images to compensate for its motion. (a) The red overlay displays the target in its initial position and the blue overlay after a translational movement of \(\pm\)20 mm. (b) The final registration result.

Implementation details

The image processing computer runs the ImFusion Suite (ImFusion GmbH, Munich, Germany) for which we have designed and implemented additional modules to acquire the RGB-D point clouds, allow user interaction, trajectory planning, and real-time ultrasound visualization. The implementation uses parallelization on Graphics Processing Units (GPUs) for fast and efficient processing.

The robots are controlled by driver software using KUKA SmartServo. Command messages are sent from the image processing computer via an OpenIGTLink connection. KUKA SmartServo can currently process commands at a rate of 50 Hz. The Euclidean positions of the robot are transferred via the Fast Research Interface (FRI) [28] from the robot controller to the image processing computer at a rate of 250 Hz, which is higher than the update rate of commonly used clinical tracking systems.

Target tracking accuracy validation

The target tracking experiments involve both robots: one operates the ultrasound transducer, and the other moves the phantom through a series of translations (\(\pm\)20 mm). Over a period of 180 s, the transducer was robotically moved to follow the motion of the target. As shown in Fig. 5, the registration results indicate reliable tracking, even when drift over time is taken into account. The overall translational accuracy for N = 9 experiments is 0.6 \(\pm\) 0.1 mm. The registration to the initial US image took around 500 ms.

The spatial transformation \(^{\mathrm{US}}\mathbf{T}_{\mathrm{F1}}\) from the origin of the ultrasound image to the transducer apex is determined using the PLUS library [29] and a calibration phantom. The mean calibration error is 0.56 \(\pm\) 0.20 mm. The mean calibration error of the needle tip to robot flange transformation (\(^{\mathrm{NT}}\mathbf{T}_{\mathrm{F2}}\)) is 0.55 \(\pm\) 0.11 mm. We then performed two sets of experiments using two different types of phantoms. The first phantom is a 260 \(\times\) 190 \(\times\) 135 mm box filled with water. The target is a 7 mm sphere submerged around 80 mm below the surface. A water bath allows easy ultrasound imaging, but the impedance controller cannot be used. The other phantom is made by filling a 240 \(\times\) 150 \(\times\) 120 mm box with 7 weight percent (wt%) 300 bloom pork gelatin, as suggested in [30, 31], to achieve acoustic tissue-mimicking properties. Different organic spherical objects with diameters between 7 and 17 mm were then submerged at different depths below the surface. For our tracking accuracy experiments, we again used a 7 mm sphere around 80 mm below the surface. The user defines the desired target position, which is illustrated as a red circle in Fig. 6. In all our experiments, the target positions are set to be on the surface of the sphere. During the gelatin phantom tests, all movements are executed using the impedance controller. Similar to a common clinical scenario, we used a needle of 100 mm length and observed the target area with a 90 \(\times\) 70 \(\times\) 90 mm US volume, which is not stationary during insertion but follows a patient motion of \(\pm\)20 mm. For each phantom, we performed needle insertions at two different angles, 45\(^\circ\) and 30\(^\circ\) with respect to the base plane of the box, and at three different needle insertion velocities: 5, 3, and 1 mm/s.
The needle insertion was performed five times in each test case, resulting in a total of 60 evaluated needle insertions. We evaluated the robustness of the needle tracking by placing a finger in the vicinity of, or even touching, the needle shaft (as shown in Fig. 3), so as to create additional signal and movement in the image. We evaluated the needle tracking algorithm by recording the detected needle tip at different instances of time, as shown in Fig. 6. When the robot reached the planned end point of the trajectory, we calculated the distance between the detected needle tip and the desired target position in the ultrasound image and, using the known calibration, the metric error. The computational time for the needle tracking is around 32 ms. The results are summarized in Table 1.

Discussion and conclusion

In this work, we presented a framework for robotic needle placement under robotic ultrasound image guidance. In particular, we demonstrated the first use of a dual-robot system that takes advantage of impedance control for ultrasound imaging and allows for a consistent representation of the workspace. In comparison with systems with a needle guide or device attached to the ultrasound transducer [9], using a second robotic arm for the needle insertion retains the full versatility with respect to meaningful acoustic windows for imaging and anatomically possible needle insertion paths. In our experiments, we have demonstrated that our framework is able to successfully and robustly hit targets of 7 mm in diameter, as required in a wide range of clinical scenarios [3, 32].

Our framework encompasses state-of-the-art ultrasound image compounding techniques for representing the targeted domain, as well as needle tracking techniques suitable for real-time operation. This work combines the advantages of previous approaches, namely the preoperative planning, the real-time tracking of the needle, and the trajectory update. All our techniques are efficiently implemented using GPU parallelization, allowing for the use of the full image resolutions at high frame rates.

We demonstrated needle placement in a simplified setting using two different types of phantoms (water and gel). We obtained a targeting accuracy on the order of 1 mm for a target point submerged in these phantoms, irrespective of the needle orientation and speed. As shown in Table 1, the water bath experiments had slightly higher needle tracking inaccuracies than the tissue-mimicking gel phantom. This is due to the mechanical vibration of the robot, which causes slight vibration of the needle itself in mechanically unconstrained environments.

The obtained tracking errors suggest that the proposed approach may be suitable for a range of more realistic operating conditions. However, a more extensive evaluation considering physically representative tissue (e.g., soft biological tissues) will be necessary. In a more realistic scenario, flexible needle deformation in soft tissue should also be considered. Moreover, as an intermediate step toward improving the platform, we also plan to develop an automatic robot-to-robot calibration scheme, which will further simplify the preclinical setup. Indeed, most of the technical details related to the current developmental stage (which must therefore be documented in this first study on the dual-robot framework) will be hidden in a later version of the user control interface, so as to foster usability by the clinical staff.

By identifying the technical requirements and the necessary methodology using currently available hardware, we establish the imaging and control software as the basis for a clinically deployable system in the future.

Nonetheless, to adapt the size and optimize the robot setup, we need to further analyze the clinical environment and the workflow of the intervention, and to adapt the system to the patient and to the culture of the department. Therefore, this proof of concept is an important initial step toward clinical applicability. With the development of dedicated, domain-specific robotic systems, the purchase and maintenance of such manipulators come into a clinically and economically expedient range, in particular in light of the current cost-effectiveness of relevant clinical applications such as RF ablation and cryoablation [33].

While we presented a fully automatic framework for needle placement, cooperative robots as in [5] might be employed for a particularly dedicated clinical application, instead supporting the physicians in positioning and inserting the needle themselves. Our work, focused on determining the reachable targeting accuracy and validating the whole dual-robot system, paves the way for more specific usage of the proposed interventional framework. We will address its potential integration into the workflow of additional applications (e.g., RF ablation or cryoablation) by considering the corresponding clinical constraints.

References

Abolhassani N, Patel R, Moallem M (2007) Needle insertion into soft tissue: a survey. Med Eng Phys 29(4):413–431

Curley SA, Izzo F, Delrio P, Ellis LM, Granchi J, Vallone P, Fiore F, Pignata S, Daniele B, Cremona F (1999) Radiofrequency ablation of unresectable primary and metastatic hepatic malignancies: results in 123 patients. Ann Surg 230(1):1

Yılmaz S, Özdoğan M, Cevener M, Ozluk A, Kargi A, Kendiroglu F, Ogretmen I, Yildiz A (2016) Use of cryoablation beyond the prostate. Insights Imaging, pp 1–10

Taylor RH (2006) A perspective on medical robotics. Proc IEEE 94(9):1652–1664

Esposito M, Busam B, Hennersperger C, Rackerseder J, Lu A, Navab N, Frisch B (2015) Cooperative robotic gamma imaging: enhancing US-guided needle biopsy. In: Medical image computing and computer-assisted intervention (MICCAI). Springer, Berlin

Graumann C, Fuerst B, Hennersperger C, Bork F, Navab N (2016) Robotic ultrasound trajectory planning for volume of interest coverage. In: IEEE international conference on robotics and automation (ICRA)

Zettinig O, Fuerst B, Kojcev R, Esposito M, Salehi M, Wein W, Rackerseder J, Sinibaldi E, Frisch B, Navab N (2016) Toward real-time 3D ultrasound registration-based visual servoing for interventional navigation. In: IEEE international conference on robotics and automation (ICRA)

Hong J, Dohi T, Hashizume M, Konishi K, Hata N (2004) An ultrasound-driven needle-insertion robot for percutaneous cholecystostomy. Phys Med Biol 49(3):441

Wei Z, Ding M, Downey D, Fenster A (2005) 3D TRUS guided robot assisted prostate brachytherapy. In: Medical image computing and computer-assisted intervention–MICCAI. Springer, Berlin, pp 17–24

DiMaio SP, Salcudean S (2005) Needle steering and motion planning in soft tissues. IEEE Trans Biomed Eng 52(6):965–974

Alterovitz R, Goldberg K, Okamura A (2005) Planning for steerable bevel-tip needle insertion through 2D soft tissue with obstacles. In: Proceedings of the IEEE international conference on robotics and automation (ICRA), pp 1640–1645

Abayazid M, Vrooijink GJ, Patil S, Alterovitz R, Misra S (2014) Experimental evaluation of ultrasound-guided 3D needle steering in biological tissue. Int J Comput Assist Radiol Surg 9(6):931–939

Niccolini M, Castelli V, Diversi C, Kang B, Mussa F, Sinibaldi E (2016) Development and preliminary assessment of a robotic platform for neuroendoscopy based on a lightweight robot. Int J Med Robot Comput Assist Surg 12(1):4–17

Shiu YC, Ahmad S (1989) Calibration of wrist-mounted robotic sensors by solving homogeneous transform equations of the form AX=XB. IEEE Trans Robot Autom 5(1):16–29

Zhang Z (2000) A flexible new technique for camera calibration. IEEE Trans Pattern Anal Mach Intell 22(11):1330–1334

Mercier L, Langø T, Lindseth F, Collins LD (2005) A review of calibration techniques for freehand 3-d ultrasound systems. Ultrasound Med Biol 31(2):143–165

Rusu RB, Marton ZC, Blodow N, Dolha M, Beetz M (2008) Towards 3D point cloud based object maps for household environments. Rob Auton Syst 56(11):927–941

Karamalis A, Wein W, Kutter O, Navab N (2009) Fast hybrid freehand ultrasound volume reconstruction. In: SPIE medical imaging. International Society for Optics and Photonics, pp 726114

Albu-Schäffer A, Ott C, Frese U, Hirzinger G (2003) Cartesian impedance control of redundant robots: recent results with the DLR-Light-Weight-arms. In: Proceedings of the IEEE international conference on robotics and automation (ICRA), vol 3, pp 3704–3709

Wein W, Ladikos A, Fuerst B, Shah A, Sharma K, Navab N (2013) Global registration of ultrasound to MRI using the LC2 metric for enabling neurosurgical guidance. In: Medical image computing and computer-assisted intervention–MICCAI. Springer, Berlin, pp 34–41

Fuerst B, Wein W, Müller M, Navab N (2014) Automatic ultrasound-MRI registration for neurosurgery using the 2D and 3D LC2 metric. Med Image Anal 18(8):1312–1319

Powell MJ (2009) The BOBYQA algorithm for bound constrained optimization without derivatives

Chatelain P, Krupa A, Marchal M (2013) Real-time needle detection and tracking using a visually servoed 3D ultrasound probe. In: IEEE international conference on robotics and automation (ICRA), pp 1676–1681

Otsu N (1979) A threshold selection method from gray-level histograms. IEEE Trans Syst Man Cybern 9(1):62–66

Uherčík M, Liebgott H, Kybic J, Cachard C (2009) Needle localization methods in 3D ultrasound data. In: International congress on ultrasonics, pp 11–17

Julier S, Uhlmann J (2004) Unscented filtering and nonlinear estimation. Proc IEEE 92(3):401–422

Bischoff R, Kurth J, Schreiber G, Koeppe R, Albu-Schaeffer A, Beyer A, Eiberger O, Haddadin S, Stemmer A, Grunwald G, Hirzinger G (2010) The KUKA-DLR lightweight robot arm: a new reference platform for robotics research and manufacturing. In: 41st international symposium on robotics (ISR) and 6th German conference on robotics (ROBOTIK), pp 1–8

Schreiber G, Stemmer A, Bischoff R (2010) The fast research interface for the KUKA lightweight robot. In: IEEE workshop on innovative robot control architectures for demanding applications how to modify and enhance commercial controllers

Lasso A, Heffter T, Rankin A, Pinter C, Ungi T, Fichtinger G (2014) PLUS: open-source toolkit for ultrasound-guided intervention systems. IEEE Trans Biomed Eng 61(10):2527–2537

Cook JR, Bouchard RR, Emelianov SY (2011) Tissue-mimicking phantoms for photoacoustic and ultrasonic imaging. Biomed Opt Express 2(11):3193–3206

Hall TJ, Bilgen M, Insana MF, Krouskop TA (1997) Phantom materials for elastography. IEEE Trans Ultrason Ferroelectr Freq Control 44(6):1355–1365

Künzli BM, Abitabile P, Maurer CA (2011) Radiofrequency ablation of liver tumors: actual limitations and potential solutions in the future. World J Hepatol 3(1):8–14

Bhan SN, Pautler SE, Shayegan B, Voss MD, Goeree RA, You JJ (2013) Active surveillance, radiofrequency ablation, or cryoablation for the nonsurgical management of a small renal mass: a cost-utility analysis. Ann Surg Oncol 20(11):3675–3684

Acknowledgments

The authors wish to thank Wolfgang Wein and his team (ImFusion GmbH, Munich, Germany) for the great support and opportunity to use the ImFusion framework. This work was partially funded by the Bayerische Forschungsstiftung award number AZ-1072-13 (project RoBildOR).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Human and animal rights

This article does not contain any studies with human participants or animals performed by any of the authors. This article does not contain patient data.

Electronic supplementary material

Supplementary material 1 (mp4 12286 KB)

About this article

Cite this article

Kojcev, R., Fuerst, B., Zettinig, O. et al. Dual-robot ultrasound-guided needle placement: closing the planning-imaging-action loop. Int J CARS 11, 1173–1181 (2016). https://doi.org/10.1007/s11548-016-1408-1

DOI: https://doi.org/10.1007/s11548-016-1408-1