Abstract

When latent variables are used as outcomes in regression analysis, a common way to address the otherwise ignored measurement error is to take a multilevel perspective on item response theory (IRT) modeling. Although recent computational advances allow efficient and accurate estimation of multilevel IRT models, we argue that a two-stage divide-and-conquer strategy still has unique advantages. Within the two-stage framework, we introduce three methods that account for the heteroscedastic measurement error of the dependent variable in the stage II analysis: the closed-form marginal MLE, the expectation–maximization (EM) algorithm, and the moment estimation method. They are compared with naïve two-stage estimation and one-stage MCMC estimation. A simulation study compares the five methods in terms of model parameter recovery and standard error estimation. The pros and cons of each method are also discussed to provide guidelines for practitioners. Finally, a real data example illustrates the application of the various methods using data from the National Education Longitudinal Study of 1988 (NELS:88).


It is not uncommon to have latent variables as dependent variables in regression analysis. For instance, the item response theory (IRT) scaled \(\theta \) score is often used as an outcome measure in high-stakes decisions such as evaluating the performance of individual teachers or schools. However, the latent \(\theta \) scores (or, from a factor analysis perspective, any other latent variables) are estimated with error, and ignoring this measurement error can bias subsequent statistical inferences (Fox & Glas, 2001, 2003). In particular, measurement error can diminish the statistical power of impact studies, yield inconsistent or biased estimates of model parameters (Lu, Thomas, & Zumbo, 2005), and weaken researchers' ability to identify relationships among variables affecting outcomes (Rabe-Hesketh & Skrondal, 2004). The consequences can be especially severe when the sample size is small, when the hierarchical structure is sparsely populated, or when the number of items is small (e.g., Zwinderman, 1991).

When measurement error follows a normal distribution with constant variance, it can be corrected easily via a reliability adjustment (e.g., Bollen, 1989; Hsiao, Kwok, & Lai, 2018). The main challenge of using the IRT \(\theta \) score as a dependent variable is that the measurement error in \(\hat{\theta }\) is heteroscedastic: its variance depends on the true \(\theta \). Given the computational power now available, a recommended way to address this challenge is to fit an integrated multilevel IRT model (Adams et al., 1997; Fox & Glas, 2001, 2003; Kamata, 2001; Pastor & Beretvas, 2006; Wang, Kohli, & Henn, 2016) in which all model parameters are estimated simultaneously. This unified one-stage approach incorporates the standard errors of the latent trait estimates into the total variance of the model, avoiding the bias that can arise when the estimated \(\theta \) is used as the dependent variable in a subsequent analysis.

Despite the statistical appeal of the one-stage approach, we argue that a “divide-and-conquer” two-stage approach has practical advantages. In the two-stage approach, an appropriate measurement model is first fitted to the data, and the resulting \(\hat{\theta }\) scores are used in subsequent analyses. This idea is in the same spirit as “factor score regression,” an approach proposed decades ago (see Skrondal & Laake, 2001). The benefits of this approach include a clearer definition of factors, convenience for secondary data analysis, convenience for model calibration and fit evaluation, and avoidance of improper solutions. Indeed, unless an adequate number of good indicators is available for each latent factor, improper solutions (a.k.a. Heywood cases, i.e., negative variance estimates) can occur: in the simulation of Anderson and Gerbing (1984), 24.9% of replications yielded improper solutions even though the models were correctly specified. With improper solutions, test statistics no longer follow their assumed distributions, and statistical inference and model evaluation consequently become difficult (e.g., Stoel, Garre, Dolan, & van den Wittenboer, 2006).

Moreover, it is known that in structural equation modeling (SEM), misspecification in one part of a model can cause large bias in the estimates of other free parameters. In the presence of misspecification, a one-stage approach suffers from interpretational confounding (Burt, 1973, 1976), that is, an inconsistency between the empirical meaning assigned to an unobserved construct and its a priori meaning. The potential for interpretational confounding is minimized when a two-step estimation approach is employed (Anderson & Gerbing, 1988). Furthermore, separate estimation isolates specification errors to particular parts of an integrated model.

Another compelling argument for two-stage estimation is its convenience for secondary data analysis. Large-scale surveys such as NAEP or NELS:88 usually include hundreds of test items and educational, demographic, and attitudinal variables, so that numerous descriptive statistics, multiple regression analyses, and SEM models might be entertained. In this case, it is infeasible for the data provider either to carry out all of these analyses or to supply sufficient statistics for each of them. Instead, such survey data often provide either item parameters or estimated \(\theta \)’s along with their standard errors. The methods introduced in this paper are therefore well suited to secondary data analysis with such limited information.

In this paper, we investigate different methods of addressing the measurement error challenge within a two-stage framework. In a simulation study, these methods are compared with the naïve two-stage method and an integrative one-stage Markov chain Monte Carlo (MCMC) method (Fox & Glas, 2001, 2003; Wang & Nydick, 2015). We intend to show that the proposed two-stage methods outperform the naïve method and produce results comparable to those of the MCMC method.

1 Literature Review

With the advent and popularity of item response theory (IRT), IRT-based scaled scores (i.e., \(\theta \)) have been widely used as indicators of latent traits, such as academic achievement in education. Hence, \(\theta \) is treated as a dependent variable in various statistical analyses, including simple descriptive statistics (Fan, Chen, & Matsumoto, 1997), the two-sample t test (Jeynes, 1999), multiple regression (Goldhaber & Brewer, 1997; Nussbaum, Hamilton, & Snow, 1997), analysis of variance (ANOVA; Cohen, Bottge, & Wells, 2001), linear mixed models (Hill, Rowan, & Ball, 2005), hierarchical linear modeling (Bacharach, Baumeister, & Furr, 2003), and latent growth curve modeling (Fraine, Damme, & Onghena, 2007). In all these studies, \(\theta \) scores were first obtained from a separate IRT model fit and then used as variables in other statistical models as if they were “true” values without measurement error. Complications arise, however, when the latent \(\theta \) scores are estimated with non-ignorable measurement error.

When a linear test with a fixed number of items is given to students, the resulting measurement error (or standard error, SE) typically follows a bowl shape across the \(\theta \) scale, with the SE smallest when the true latent trait lies in the middle of the scale (e.g., Kolen, Hanson, & Brennan, 1992; Wang, 2015). When an adaptive test is given, the SE is closer to uniform across the scale (e.g., Thompson & Weiss, 2011; van der Linden & Glas, 2010). Because the SE depends on both the true \(\theta \) level and the test mode, the treatment of measurement error in subsequent statistical analyses is complicated.

Quite a few studies have accounted for the measurement error in \(\hat{\theta }\) by assuming a constant measurement error variance. Such simple measurement error models permit corrections that estimate “true” variances and correlations from their “observed” counterparts. For instance, Hong and Yu (2007) analyzed the Early Childhood Longitudinal Study Kindergarten Cohort (ECLS-K) data using a multivariate hierarchical model to study the relationship between early-grade retention and children’s reading and math learning. Let \(Y_{\textit{tij}} \) denote child i’s T-score (Footnote 1) in school j in Year t; the level-1 model in their analysis is then generically expressed as

The test reliability was then used to compute the error variance \(\sigma _{t}^{2} \) in each year. Although accounting for measurement error in this way improves estimation precision, this treatment overlooks the fact that the measurement error of IRT \(\theta \) scores is not constant across the \(\theta \) scale. A statistically sound approach consistent with IRT is to let \(e_{\textit{tij}} \sim N(0,\sigma _{\textit{tij}}^{2})\); however, relaxing the common variance assumption in Eq. (1) adds computational complexity to the model. The objective of this paper, therefore, is to investigate methods for addressing this measurement error challenge in the two-stage approach. Note that this paper focuses only on measurement error in the dependent variable, whereas there is an extensive literature on measurement error in covariates (i.e., independent variables). Methods for the latter scenario include the method of moments (Carroll et al., 2006; Fuller, 2006), simulation extrapolation (Carroll et al., 2006; Devanarayan & Stefanski, 2002), and latent regression (Bianconcini & Cagnone, 2012; Bollen, 1989; Skrondal & Rabe-Hesketh, 2004). For a comparison of these methods, see Lockwood and McCaffrey (2014).

The rest of the paper is organized as follows. First, we introduce the multilevel model considered throughout the study. In other applications, both the measurement model and the structural model can take other forms, and all methods introduced in the paper still apply as long as the structural model is a linear mixed effects model. Second, four methods are introduced within the two-stage framework, including a naïve method. Then, a simulation study is designed to evaluate and compare the performance of the different methods, followed by a real data example. A discussion at the end summarizes the pros and cons of each method.

2 Models

The model comprises two levels: the measurement model and the structural model. In this paper, we focus on the linear mixed effects (LME) model as the structural model in stage II inference. In particular, we base the discussion on longitudinal assessment, i.e., modeling individual- and group-level growth trajectories of student latent abilities over time via the latent growth curve (LGC) model. Because the LGC model belongs to the family of LME models, the methods introduced in this paper apply readily to other LME models for different nested structures.

At the measurement model level, the three-parameter logistic (3PL) model (Baker & Kim, 2004) is considered. The probability of a correct response \(y_{\textit{ijt}}\) at time \(t\) (\(t = 1,\ldots , T\)) for item \(j\) (\(j = 1,\ldots , J\)) and person \(i\) (\(i = 1,\ldots , N\)) can be written as

where D is a scaling constant usually set to 1.7; \(a_{jt}\), \(b_{jt}\), and \(c_{jt}\) are the discrimination, intercept, and pseudo-guessing parameters of item j at time t; and \(\theta _{it}\) is the ability of person i at time t. In longitudinal assessment, although the item parameters can differ across time (hence the subscript t on the item parameters in Eq. (2)), anchor items need to be in place to link the scale across years (e.g., Wang, Kohli, & Henn, 2016).
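As a concrete reference point, the Python sketch below evaluates a 3PL item response function. It assumes the conventional form \(P = c + (1-c)/\{1+\exp[-Da(\theta -b)]\}\); the exact parameterization of Eq. (2) is an assumption here, and the item values are hypothetical.

```python
import math


def logistic(x):
    """Numerically stable logistic function 1 / (1 + exp(-x))."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def p_3pl(theta, a, b, c, D=1.7):
    """P(correct) under a 3PL model, assuming c + (1 - c) * logistic(D a (theta - b))."""
    return c + (1.0 - c) * logistic(D * a * (theta - b))


# Hypothetical item: discrimination 1.2, location 0.0, pseudo-guessing 0.2
print(round(p_3pl(0.0, 1.2, 0.0, 0.2), 3))   # at theta == b: c + (1 - c) / 2 = 0.6
print(round(p_3pl(-3.0, 1.2, 0.0, 0.2), 3))  # low ability: close to the guessing floor c
```

The stable form of the logistic avoids overflow in `math.exp` for extreme \(\theta \) values, which matters once many draws of \(\theta \) are evaluated.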

At the structural level, we have an LME model with \(\varvec{\theta }_{i}\) as the dependent variable:

\[\varvec{\theta }_{i}={\varvec{X}}_{i}{\varvec{\beta }}+{\varvec{Z}}{\varvec{u}}_{i}+{\varvec{e}}_{i}. \qquad (3)\]

Considering the LGC model as a special case of (3), if a unidimensional \(\theta \) is measured at each time point, then both \(\varvec{\theta }_{i}\) and \({\varvec{e}}_{i}\) are T-by-1 vectors, where T is the total number of time points. \({\varvec{X}}_{i}\) and \({\varvec{Z}}\) are the T-by-p and T-by-q design matrices, and \({\varvec{\beta }} \) and \({\varvec{u}}_{i}\) are p-by-1 and q-by-1 vectors of fixed and random effects, respectively. More generally, \({\varvec{Z}}\) can also differ across individuals (\({\varvec{Z}}_{i}\)).

For the rest of the paper, we consider the simplest linear growth pattern, i.e., \({{\varvec{X}}} \equiv {{\varvec{Z}}}= \left[ {{\begin{array}{c@{\quad }c} 1 &{} 0 \\ 1 &{} 1 \\ \vdots &{} \vdots \\ 1 &{} {T-1} \\ \end{array} }} \right] \). The methods discussed, however, generalize easily to cases in which \({{\varvec{X}}}\) and \({\varvec{Z}}\) differ. For instance, suppose one is interested in a treatment effect, and let \(g_{i}\) denote the observed treatment covariate, with \(g_{i}=1\) if person i belongs to the treatment group and 0 otherwise. Then \({\varvec{X}}_{i}\) becomes \({\varvec{X}}_{i}=({\varvec{Z}},g_{i}\times \mathbf{1}_{T\times 1})\), whereas \({\varvec{Z}}\) stays the same. Similarly, if the treatment-by-time interaction is of interest, then \({\varvec{X}}_{i}=({\varvec{Z}}, g_{i}\times {[0,1, \ldots ,T-1]}^{\mathrm {t}})\), where the superscript “t” denotes the transpose throughout the paper.
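The following Python sketch builds these design matrices for one person. The helper names are hypothetical; the treatment column is \(g_{i}\) times a column of ones (main effect) or \(g_{i}\) times \([0,1,\ldots ,T-1]^{\mathrm t}\) (interaction), as described above.

```python
def linear_growth_Z(T):
    """T-by-2 linear growth design: row t is [1, t] for t = 0, ..., T-1."""
    return [[1, t] for t in range(T)]


def design_with_treatment(T, g, interaction=False):
    """Hypothetical helper building X_i = (Z, extra column) for person i.

    g is the 0/1 treatment indicator g_i. With interaction=False the extra
    column is g * 1_T (treatment main effect); with interaction=True it is
    g * [0, 1, ..., T-1]^t (treatment-by-time interaction).
    """
    Z = linear_growth_Z(T)
    return [row + [g * (t if interaction else 1)] for t, row in enumerate(Z)]


for row in design_with_treatment(4, g=1):
    print(row)
```

For a control-group member (\(g_{i}=0\)) the extra column is all zeros, so \({\varvec{X}}_{i}\) reduces to \({\varvec{Z}}\) plus a zero column.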

The random effects, \({\varvec{u}}_{i}\), are typically assumed to follow a multivariate normal distribution,

\[{\varvec{u}}_{i} \sim N({\varvec{0}},{\varvec{\Sigma }}_{u}),\]

and for simplicity, we assume an independent error structure, i.e., \(e_{it} \sim N(0,\sigma ^{2} )\).

If a multivariate latent trait (i.e., D dimensions) is measured at each time point, let \(\varvec{\theta }_{i}={[\theta _{i11},\ldots ,\theta _{i1T},\ldots ,\theta _{iD1},\ldots ,\theta _{iDT}]}^{t}\), whose first T elements refer to the latent trait on dimension 1 across the T time points; Eq. (3) still holds. But \({{\varvec{X}}}\) becomes a (\(D\times T\))-by-(\(D\times 2\)) matrix of the form \({\varvec{I}}_{D} \otimes \left[ {{\begin{array}{c@{\quad }c} 1 &{} 0 \\ 1 &{} 1 \\ \vdots &{} \vdots \\ 1 &{} {T-1} \\ \end{array} }} \right] \), where \({\varvec{I}}_{D}\) is the D-by-D identity matrix and \(\otimes \) is the Kronecker product. Accordingly, \({\varvec{\beta }} =(\beta _{01},\beta _{11},\ldots ,\beta _{0D},\beta _{1D})^{t}\) and \({\varvec{u}}_{i}={(u_{i01},u_{i11},\ldots ,u_{i0D},u_{i1D})}^{t}\) both become (\(D\times 2\))-by-1 vectors of fixed and random effects, respectively, with the intercept and slope of each dimension adjacent so that they match the block-diagonal columns of the Kronecker product.
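A short Python sketch of the Kronecker construction may help in checking the ordering of \({\varvec{\beta }}\) and \({\varvec{u}}_{i}\). It assumes \(\varvec{\theta }_{i}\) is stacked dimension by dimension, as above; the function names are hypothetical. Each block of columns of \({\varvec{I}}_{D} \otimes {\varvec{Z}}\) is an (intercept, slope) pair for one dimension.

```python
def identity(n):
    """n-by-n identity matrix as a list of rows."""
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]


def kron(A, B):
    """Kronecker product A (x) B for matrices stored as lists of rows."""
    return [[a * b for a in row_a for b in row_b] for row_a in A for row_b in B]


def multivariate_growth_X(D, T):
    """(D*T)-by-(2*D) design I_D (x) Z for D latent dimensions with linear growth."""
    Z = [[1, t] for t in range(T)]
    return kron(identity(D), Z)


for row in multivariate_growth_X(D=2, T=3):
    print(row)
```

Rows 1 to 3 touch only columns 1 and 2 (dimension 1), and rows 4 to 6 only columns 3 and 4 (dimension 2).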

3 Model Estimation

3.1 Unified One-Stage Estimation

To estimate the multilevel IRT model simultaneously, currently available estimation methods include, but are not limited to, the generalized linear and nonlinear methodologies described in De Boeck and Wilson (2004), the generalized linear latent and mixed model framework of Skrondal and Rabe-Hesketh (2004), and the Bayesian methodology of Lee and Song (2003), including the Gibbs sampler and other Markov chain Monte Carlo (MCMC) methods (Fox & Glas, 2001, 2003; Fox, 2010). These methods are suitable for a general family of models that allow linear or nonlinear relations among normal latent variables and a variety of indicator types (e.g., ordinal, binary).

Among them, the first two approaches require numerical integration to evaluate the likelihood, which becomes computationally prohibitive or even impossible when the model is complex or the number of variables is large. Rabe-Hesketh and Skrondal (2008) acknowledged that “estimation can be quite slow, especially if there are several random effects.” The Bayesian approach requires careful selection of prior distributions for each parameter, which might not come naturally to researchers unfamiliar with Bayesian methods. Other methods proposed to alleviate the high-dimensional challenge (von Davier & Sinharay, 2007) include adaptive Gaussian quadrature (Pinheiro & Bates, 1995), limited-information weighted least squares (WLS), and the graphical models approach (Rijmen, Vansteelandt, & De Boeck, 2008). All of these methods have been shown to work well in their respective studies. Even so, a divide-and-conquer two-stage estimation approach still has its own advantages (see the reasons given in the introduction), and it is the main focus of this paper. Given the flexibility MCMC offers in handling the 3PL model, we use it as a comparison for the two-stage estimation methods.

3.2 Two-Stage Estimation

Let \({\varvec{\Psi }} = ({\varvec{\upbeta }},\Sigma _{u},\sigma ^{2})\) denote the set of structural parameters of interest, and let \(\varvec{\vartheta }_{M} =({\varvec{a}},{\varvec{b}},{\varvec{c}})\) denote the set of item parameters pertaining only to the measurement part of the integrated model (Skrondal & Kuha, 2012). Throughout this paper, we assume the item parameters \(\varvec{\vartheta }_{M}\) are known, to avoid propagating errors (such as sampling error) from item parameter calibration. Readers concerned about item calibration error may refer to the bootstrap-calibrated interval estimation approach proposed by Liu and Yang (2018).

Within the divide-and-conquer two-stage estimation scheme, the latent outcome \(\varvec{\theta }_{i}\) (for person i) is measured with error: instead of \(\varvec{\theta }_{i}\), one observes only \(\hat{{\varvec{\theta }}}_{i}\) from the stage I IRT calibration, with

\[\hat{{\varvec{\theta }}}_{i}=\varvec{\theta }_{i}+\varvec{\varepsilon }_{i}, \qquad (4)\]

where \(\varvec{\varepsilon }_{i}\) is the vector of measurement errors with mean 0 and covariance matrix \({\varvec{\Sigma }}_{\theta _{i}}\). The magnitude of this error covariance matrix depends on many factors, including (1) the test information at \(\varvec{\theta }_{i}\), which in turn depends on whether the test is delivered in linear or adaptive mode, and (2) IRT model-data fit. In the first stage, either the maximum a posteriori (MAP) or the expected a posteriori (EAP) estimator is used to obtain the point estimate \(\hat{{\varvec{\theta }} }_{i}\), along with the error covariance matrix estimate \(\hat{{\varvec{\Sigma }} }_{\theta _{i}}\), for each person separately. Chang and Stout (1993) showed that when the test is sufficiently long and MLE is used, \(\varepsilon _{i}\) approximately follows a normal distribution with mean 0 and variance given by the inverse of the Fisher information evaluated at the true \(\theta _{i}\), i.e., \(\varvec{\Sigma }_{\theta _{i}} \approx I^{-1}(\theta _{i} )\). Their results generalize to multidimensional \({\varvec{\theta }}\)’s and to MAP (e.g., Wang, 2015). Even though the true \(\theta _{i}\) is unknown in practice, plugging in \(\hat{{\varvec{\theta }} }_{i}\) for \(\varvec{\theta }_{i}\) gives \(\hat{\Sigma }_{\theta _{i}}\approx {\varvec{I}}^{-1}(\hat{{\varvec{\theta }}}_{i})\). That is, using \({\varvec{I}}^{-1}(\hat{{\varvec{\theta }} }_{i})\) as a proxy for the error variance of \(\hat{{\varvec{\theta }}}_{i}\) remains viable as long as \(\hat{{\varvec{\theta }}}_{i}\) is close to the true value (e.g., Koedel, Leatherman, & Parsons, 2012; Shang, 2012).
Although Lockwood and McCaffrey (2014) argued that \(E[{\varvec{I}}^{-1}(\hat{{\varvec{\theta }}}_{i})]\) is likely an overestimate of \(E[{\varvec{I}}^{-1}(\varvec{\theta }_{i})]\), and that such positive bias can lead to systematic errors in measurement error corrections based on test reliability, this bias is not problematic for our methods: we treat each \(\hat{{\varvec{\theta }}}_{i}\) and \(\hat{{\varvec{\Sigma }}}_{\theta _{i}}\) individually and do not need a reliability estimate based on \(E[{\varvec{I}}^{-1}(\hat{{\varvec{\theta }}}_{i})]\) to correct for measurement error.
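To illustrate the plug-in error variance \(\hat{\Sigma }_{\theta _{i}}\approx I^{-1}(\hat{\theta }_{i})\) and the bowl-shaped SE of a linear test, the Python sketch below sums 3PL item information and reports \(I(\theta )^{-1/2}\) on a grid. The item bank is hypothetical, and the 3PL form \(c+(1-c)/\{1+\exp[-Da(\theta -b)]\}\) is assumed; the information formula \((Da)^{2}\,[(1-P)/P]\,[(P-c)/(1-c)]^{2}\) is the standard one for that form.

```python
import math


def p_3pl(theta, a, b, c, D=1.7):
    """3PL probability, assuming c + (1 - c) / (1 + exp(-D a (theta - b)))."""
    x = D * a * (theta - b)
    s = 1.0 / (1.0 + math.exp(-x)) if x >= 0 else math.exp(x) / (1.0 + math.exp(x))
    return c + (1.0 - c) * s


def item_info_3pl(theta, a, b, c, D=1.7):
    """Fisher information of one 3PL item at theta."""
    p = p_3pl(theta, a, b, c, D)
    return (D * a) ** 2 * ((1.0 - p) / p) * ((p - c) / (1.0 - c)) ** 2


def se_from_information(theta, items, D=1.7):
    """Approximate SE of theta-hat as I(theta)^(-1/2), summing item information."""
    info = sum(item_info_3pl(theta, a, b, c, D) for a, b, c in items)
    return 1.0 / math.sqrt(info)


# Hypothetical 20-item linear test with difficulties evenly spaced on [-2, 2]
items = [(1.2, -2.0 + 4.0 * j / 19, 0.2) for j in range(20)]
for theta in (-3.0, -1.5, 0.0, 1.5, 3.0):
    # In practice theta-hat is plugged in here in place of the unknown true theta
    print(theta, round(se_from_information(theta, items), 3))
```

The SE is smallest near the center of the item difficulties and grows toward the extremes, which is the heteroscedasticity that the stage II methods below must respect.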

Given the linear mixed effects model defined in Eq. (3), the likelihood of both random and fixed effects is therefore

where N denotes the sample size and \(\phi (.)\) denotes the multivariate normal density. The likelihood in Eq. (5) assumes that the random effects follow a multivariate normal distribution with covariance matrix \(\Sigma _{u}\); a non-normal random-effects distribution is also allowed if needed. Maximum likelihood estimation proceeds by first integrating out the random effects, leading to the marginal likelihood

which is maximized to obtain \({\hat{\varvec{\beta }}},{\hat{\sigma }}^{2},{\hat{\varvec{\Sigma }}}_{u}\). The individual coefficients \({\varvec{u}}_{i}\) are then predicted via the best linear unbiased predictor (BLUP).
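The BLUP step can be sketched in pure Python (standard library only). The sketch computes the empirical BLUP \(\hat{{\varvec{u}}}_{i}={\varvec{\Sigma }}_{u}{\varvec{Z}}^{\mathrm t}{\varvec{V}}^{-1}(\varvec{\theta }_{i}-{\varvec{X}}_{i}\hat{{\varvec{\beta }}})\) with \({\varvec{V}}={\varvec{Z}}{\varvec{\Sigma }}_{u}{\varvec{Z}}^{\mathrm t}+\sigma ^{2}{\varvec{I}}_{T}\), treating \(\varvec{\theta }_{i}\) as observed, as in Eq. (6). The helper names and all numerical values are hypothetical.

```python
def solve(A, b):
    """Solve A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [[float(v) for v in A[i]] + [float(b[i])] for i in range(n)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(n):
            if r != col:
                f = M[r][col] / M[col][col]
                for k in range(col, n + 1):
                    M[r][k] -= f * M[col][k]
    return [M[i][n] / M[i][i] for i in range(n)]


def blup(theta_i, X_i, Z, beta, Sigma_u, sigma2):
    """u_hat = Sigma_u Z^t V^{-1} (theta_i - X_i beta), with V = Z Sigma_u Z^t + sigma2 I."""
    T, q = len(Z), len(Z[0])
    V = [[sum(Z[t][k] * Sigma_u[k][l] * Z[s][l] for k in range(q) for l in range(q))
          + (sigma2 if t == s else 0.0) for s in range(T)] for t in range(T)]
    resid = [theta_i[t] - sum(X_i[t][k] * beta[k] for k in range(len(beta))) for t in range(T)]
    w = solve(V, resid)                                               # V^{-1} (theta_i - X_i beta)
    Ztw = [sum(Z[t][k] * w[t] for t in range(T)) for k in range(q)]   # Z^t w
    return [sum(Sigma_u[k][l] * Ztw[l] for l in range(q)) for k in range(q)]


Z = [[1, 0], [1, 1], [1, 2], [1, 3]]     # linear growth, T = 4
beta = [0.5, 0.2]                         # hypothetical estimates
Sigma_u = [[0.8, 0.1], [0.1, 0.2]]
theta_i = [0.9, 1.0, 1.4, 1.5]            # hypothetical person-level scores
print(blup(theta_i, Z, Z, beta, Sigma_u, sigma2=0.3))
```

As \(\sigma ^{2}\) grows, the predictions shrink toward zero, and as \(\sigma ^{2}\to 0\) (with an invertible \({\varvec{Z}}\)) they recover \({\varvec{u}}_{i}\) exactly.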

Combining the linear mixed effects model in Eq. (3) with the measurement error model in (4), the likelihood in Eq. (5) is updated as

in which case both the random coefficients \({\varvec{u}}_{i}\) and the latent factors \(\varvec{\theta }_{i}\) need to be integrated out to obtain the marginal likelihood. In Eq. (7), \(\varphi (.)\) denotes the density of the measurement error distribution. Therefore, the joint log-likelihood of \({\varvec{\Psi }} = ({\varvec{\upbeta }},\Sigma _{u}, \sigma ^{2})\) based on (7) is written as

This equation will be used throughout the following explication.

We emphasize that the discussion hereafter assumes that the measurement error follows a normal or multivariate normal distribution with error covariance matrix \(\hat{{\varvec{\Sigma }}}_{\theta _{i}}\). In this regard, Diakow (2013) suggested using Warm’s (1989) weighted maximum likelihood in stage I along with a more precise version of the asymptotic standard error (Magis & Raiche, 2012). As shown below, a non-normal measurement error distribution is also allowed in the method described in Sect. 3.2.3. In fact, both methods in Sects. 3.2.2 and 3.2.3 are suitable for a level-1 variance-known problem (Raudenbush & Bryk, 2002, Chapter 7), and our goal is to provide an accurate method for secondary data analysis that is convenient and understandable for applied researchers (Diakow, 2013).

3.2.1 Method I: Marginalized MLE (MMLE)

When both \(\phi (.)\) and \(\varphi (.)\) in Eq. (8) are normal (or multivariate normal), or can be well approximated as such, the marginal likelihood of the combined model, obtained by integrating both the random coefficients \({\varvec{u}}_{i}\) and the latent factors \(\varvec{\theta }_{i}\) out of (8), has a closed form up to a constant (see Appendix A for the detailed derivation). Specifically, given the joint likelihood in Eq. (7), the marginal log-likelihood of the target model parameters can be shown to be

where

In the above equations, \(|\cdot |\) denotes the determinant of a matrix, and \(\Vert \cdot \Vert ^{2}\) denotes the inner product of a vector with itself. The closed-form marginal likelihood for the longitudinal MIRT model is also presented in Appendix A.
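Under these normality assumptions, and assuming \({\varvec{u}}_{i}\), \({\varvec{e}}_{i}\), and \(\varvec{\varepsilon }_{i}\) are mutually independent, \(\hat{{\varvec{\theta }}}_{i}={\varvec{X}}_{i}{\varvec{\beta }}+{\varvec{Z}}{\varvec{u}}_{i}+{\varvec{e}}_{i}+\varvec{\varepsilon }_{i}\) is marginally normal with mean \({\varvec{X}}_{i}{\varvec{\beta }}\) and covariance \({\varvec{V}}_{i}={\varvec{Z}}{\varvec{\Sigma }}_{u}{\varvec{Z}}^{\mathrm t}+\sigma ^{2}{\varvec{I}}_{T}+\hat{{\varvec{\Sigma }}}_{\theta _{i}}\). The pure-Python sketch below evaluates that marginal log-likelihood. It is derived from Eqs. (3) and (4) rather than copied from Appendix A; we expect it to agree with Eq. (9) up to the constant, but that equivalence is our assumption. Inputs in the usage lines are hypothetical.

```python
import math


def cholesky(A):
    """Lower-triangular L with A = L L^t; raises if A is not positive definite."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = A[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:
                    raise ValueError("matrix is not positive definite")
                L[i][i] = math.sqrt(s)
            else:
                L[i][j] = s / L[j][j]
    return L


def forward_sub(L, r):
    """Solve L z = r for lower-triangular L."""
    z = []
    for i in range(len(L)):
        z.append((r[i] - sum(L[i][k] * z[k] for k in range(i))) / L[i][i])
    return z


def marginal_loglik(theta_hats, Sigma_thetas, X_list, Z, beta, Sigma_u, sigma2):
    """Sum over persons of log N(theta_hat_i; X_i beta, Z Sigma_u Z^t + sigma2 I + Sigma_theta_i)."""
    T, q = len(Z), len(Z[0])
    ZSZ = [[sum(Z[t][k] * Sigma_u[k][l] * Z[s][l] for k in range(q) for l in range(q))
            for s in range(T)] for t in range(T)]
    total = 0.0
    for th, S_i, X_i in zip(theta_hats, Sigma_thetas, X_list):
        V = [[ZSZ[t][s] + S_i[t][s] + (sigma2 if t == s else 0.0) for s in range(T)]
             for t in range(T)]
        r = [th[t] - sum(X_i[t][k] * beta[k] for k in range(len(beta))) for t in range(T)]
        L = cholesky(V)
        z = forward_sub(L, r)                                  # r^t V^{-1} r = ||z||^2
        logdet = 2.0 * sum(math.log(L[i][i]) for i in range(T))
        total -= 0.5 * (T * math.log(2.0 * math.pi) + logdet + sum(v * v for v in z))
    return total


Z = [[1, 0], [1, 1], [1, 2]]
theta_hats = [[0.2, 0.6, 1.1], [-0.4, 0.1, 0.3]]
Sigma_thetas = [[[0.09, 0, 0], [0, 0.08, 0], [0, 0, 0.12]]] * 2   # per-person error covariances
print(marginal_loglik(theta_hats, Sigma_thetas, [Z, Z], Z, [0.0, 0.4],
                      [[0.8, 0.1], [0.1, 0.2]], 0.3))
```

Because each person contributes a separately sized \(\hat{{\varvec{\Sigma }}}_{\theta _{i}}\), the heteroscedastic errors enter the likelihood directly rather than through a single reliability coefficient.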

The MMLE proceeds by maximizing the closed-form marginal log-likelihood in Eq. (9). We use the “optim” function in the “stats” package of R to solve the maximization problem. This function provides general-purpose optimization based on Nelder–Mead, quasi-Newton, and conjugate gradient algorithms, and it allows user-specified box constraints on parameters. Instead of the default Nelder–Mead method (Nelder & Mead, 1965), which tends to be slow, we use the “L-BFGS-B” method because our objective function in Eq. (9) is differentiable. BFGS is the quasi-Newton method proposed by Broyden (1970), Fletcher (1970), Goldfarb (1970), and Shanno (1970), which uses both function values and gradients to build up a picture of the surface to be optimized. L-BFGS-B is a limited-memory extension of BFGS (Byrd et al., 1995) that allows box constraints, in which each variable is given a lower and/or upper bound, as long as the initial values satisfy the constraints. In our application, the constraints are \(-\,1000< \beta _{0},\beta _{1}< 1000\), \(.001< \sigma _{u_{0}},\sigma _{u_{1}}< 5\), \(-\,.99< \rho < .99\), and \(.001< \sigma ^{2}< 1000\) (see Footnote 2). The initial values for all parameters are set at .1. The point estimates are the parameter values at convergence, and their standard errors are obtained from the Hessian matrix returned by the function. In the rare cases in which the Hessian matrix is not available, we use the numerical differentiation provided by the “numDeriv” package instead.
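The box-constrained setup can be made concrete with a small Python sketch. It assumes the six parameters are ordered as \((\beta _{0},\beta _{1},\sigma _{u_{0}},\sigma _{u_{1}},\rho ,\sigma ^{2})\) and that \({\varvec{\Sigma }}_{u}\) is built from the two standard deviations and the correlation; the helper names are hypothetical. In R, such a vector would be passed to `optim(par, fn, method = "L-BFGS-B", lower = ..., upper = ..., hessian = TRUE)`; because `optim` minimizes by default, `fn` would return the negative log-likelihood, and the standard errors are the square roots of the diagonal of the inverted Hessian.

```python
import math

# Bounds quoted in the text, for the assumed ordering
# (beta0, beta1, sigma_u0, sigma_u1, rho, sigma2).
LOWER = [-1000.0, -1000.0, 0.001, 0.001, -0.99, 0.001]
UPPER = [1000.0, 1000.0, 5.0, 5.0, 0.99, 1000.0]


def unpack(par):
    """Map the parameter vector to (beta, Sigma_u, sigma2)."""
    beta0, beta1, s0, s1, rho, sigma2 = par
    cov = rho * s0 * s1
    return [beta0, beta1], [[s0 * s0, cov], [cov, s1 * s1]], sigma2


def inside_box(par):
    """True if every parameter lies strictly inside its bounds."""
    return all(lo < p < hi for p, lo, hi in zip(par, LOWER, UPPER))


def standard_errors(hessian):
    """SEs as sqrt(diag(H^{-1})), where H is the Hessian of the NEGATIVE log-likelihood."""
    n = len(hessian)
    M = [[float(v) for v in hessian[i]] + [1.0 if i == j else 0.0 for j in range(n)]
         for i in range(n)]
    for col in range(n):                      # Gauss-Jordan inversion with partial pivoting
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        pv = M[col][col]
        M[col] = [v / pv for v in M[col]]
        for r in range(n):
            if r != col:
                f = M[r][col]
                M[r] = [x - f * y for x, y in zip(M[r], M[col])]
    return [math.sqrt(M[i][n + i]) for i in range(n)]


start = [0.1] * 6                             # the starting values used in the text
beta, Sigma_u, sigma2 = unpack(start)
det = Sigma_u[0][0] * Sigma_u[1][1] - Sigma_u[0][1] ** 2   # = s0^2 s1^2 (1 - rho^2)
print(inside_box(start), det > 0)
```

Parameterizing \({\varvec{\Sigma }}_{u}\) through positive standard deviations and \(|\rho |<1\) keeps its determinant \(\sigma _{u_{0}}^{2}\sigma _{u_{1}}^{2}(1-\rho ^{2})\) positive everywhere inside the box, so the optimizer never visits an invalid covariance matrix.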

3.2.2 Method II: Expectation–Maximization (EM)

In this section, we describe an alternative way to resolve the high-dimensional integration involved in the marginal likelihood. It complements Method I when the closed-form marginal likelihood is not available or when the numerical optimization fails to converge properly.

Treating the random effects and latent variables, \({\varvec{u}}_{i}\) and \(\varvec{\theta }_{i}\), as missing data, this method iterates between the expectation (E) and maximization (M) steps until convergence. At the (\(m+1\))th iteration, the E-step takes the expectation of the log-likelihood with respect to the posterior distribution of \({\varvec{u}}_{i}\) and \(\varvec{\theta }_{i}\) as

where \(f(\varvec{\theta }_{i},{\varvec{u}}_{i} \vert {\hat{\varvec{\theta }}}_{i},{\hat{\varvec{\Sigma }}}_{\theta _{i} },{\hat{\varvec{\psi }}}^{(m)})\) denotes the posterior distribution, and \(l(\varvec{\psi },\varvec{\theta }_{i},{\varvec{u}}_{i})\) takes the form in Eq. (8). The integration in (12) can be obtained easily when one samples directly from the posterior distribution, such that

where \((\varvec{\theta }_{i}^{q},{\varvec{u}}_{i}^{q} )\) is the qth draw from the posterior distribution, and Q is the total number of Monte Carlo draws. This Monte Carlo-based integration is appropriate even if the measurement error or random effects do not follow normal distributions, and hence, we consider this approach more general than the MMLE method.

If both the measurement error and random effects are indeed normal, then the conditional expectation in (12) has a closed form which can be directly computed without resorting to numeric integration. That is, given \(\hat{{\varvec{\theta }}}_{i}\) and \(\hat{\Sigma }_{\theta _{i}}^{-1}\) from stage I estimation and \({\hat{\varvec{\psi }}}^{(m)}\) from the mth EM cycle, the joint posterior distribution of (\(\varvec{\theta }_{i},{\varvec{u}}_{i}\)) follows a multivariate normal, with a variance of

and a mean of

The M-step maximizes the conditional expectation in (12) with respect to \(\varvec{\psi }\). Given the form of \(l(\varvec{\psi },\varvec{\theta }_{i},{\varvec{u}}_{i} )\) in Eq. (8), \({\varvec{\beta }}\), \(\varvec{\Sigma }_{u}\), and \(\sigma ^{2}\) all have closed-form updates, which greatly simplifies the maximization step:

The notation of \(E^{(m)}\) indicates that, at the (\(m+1\))th EM cycle, the expected values in (16)–(18) are obtained from the first and second moments of the posterior multivariate normal distribution \(f(\varvec{\theta }_{i},{\varvec{u}}_{i} \vert {\hat{\varvec{\theta }}}_{i},{\hat{\varvec{\Sigma }}}_{\theta _{i} },{\hat{\varvec{\psi }}}^{(m)})\) with mean and variance specified in (14) and (15). Equation (17) adopts the expectation conditional maximization (ECM) idea in Meng and Rubin (1993) in that the closed-form solution for residual variance only exists conditioning on the updated parameter \({\hat{\varvec{\beta }}}^{(m+1)}\). The ECM algorithm shares all the appealing convergence properties of EM.
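For readers who want to check the closed-form E- and M-steps, the display below gives one standard way to write them. We derived it from the normal model in Eqs. (3) and (4) with \(e_{it}\sim N(0,\sigma ^{2})\); the notation is ours and may differ in form from Eqs. (14)–(18). Stacking \((\varvec{\theta }_{i},{\varvec{u}}_{i})\) and evaluating all parameters on the right-hand side of the first two lines at \({\hat{\varvec{\psi }}}^{(m)}\), the posterior precision \(\mathbf{P}_i\), posterior moments, and updates are

```latex
\begin{aligned}
\mathbf{P}_i &= \begin{pmatrix}
\hat{\boldsymbol\Sigma}_{\theta_i}^{-1}+\sigma^{-2}\mathbf{I}_T & -\sigma^{-2}\mathbf{Z}\\
-\sigma^{-2}\mathbf{Z}^{\mathrm t} & \sigma^{-2}\mathbf{Z}^{\mathrm t}\mathbf{Z}+\boldsymbol\Sigma_u^{-1}
\end{pmatrix},\qquad
\operatorname{Var}\begin{pmatrix}\boldsymbol\theta_i\\ \mathbf{u}_i\end{pmatrix}=\mathbf{P}_i^{-1},\\
E\begin{pmatrix}\boldsymbol\theta_i\\ \mathbf{u}_i\end{pmatrix} &= \mathbf{P}_i^{-1}
\begin{pmatrix}\hat{\boldsymbol\Sigma}_{\theta_i}^{-1}\hat{\boldsymbol\theta}_i+\sigma^{-2}\mathbf{X}_i\boldsymbol\beta\\
-\sigma^{-2}\mathbf{Z}^{\mathrm t}\mathbf{X}_i\boldsymbol\beta\end{pmatrix},\\
\boldsymbol\beta^{(m+1)} &= \Big(\sum_{i=1}^N \mathbf{X}_i^{\mathrm t}\mathbf{X}_i\Big)^{-1}
\sum_{i=1}^N \mathbf{X}_i^{\mathrm t}\big(E^{(m)}[\boldsymbol\theta_i]-\mathbf{Z}\,E^{(m)}[\mathbf{u}_i]\big),\\
\sigma^{2(m+1)} &= \frac{1}{NT}\sum_{i=1}^N E^{(m)}\big\Vert\boldsymbol\theta_i-\mathbf{X}_i\boldsymbol\beta^{(m+1)}-\mathbf{Z}\mathbf{u}_i\big\Vert^2,\\
\boldsymbol\Sigma_u^{(m+1)} &= \frac{1}{N}\sum_{i=1}^N E^{(m)}\big[\mathbf{u}_i\mathbf{u}_i^{\mathrm t}\big].
\end{aligned}
```

Each expectation in the last three lines follows from the posterior mean and covariance; for instance, \(E[{\varvec{u}}_{i}{\varvec{u}}_{i}^{\mathrm t}]=E[{\varvec{u}}_{i}]E[{\varvec{u}}_{i}]^{\mathrm t}+\operatorname{Var}({\varvec{u}}_{i})\). Note that the \(\sigma ^{2}\) update uses \({\varvec{\beta }}^{(m+1)}\), which is the conditional-maximization step described above.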

If the measurement model is a multidimensional IRT model with D dimensions and the residual error covariance matrix is still assumed to be diagonal, the EM algorithm above requires only minimal modification. In the E-step, one simply replaces \({\varvec{I}}_{T}\) with \({\varvec{I}}_{DT}\), whereas \({\varvec{\Sigma }}_{u}\) and \({\varvec{X}}_{i}\) take their updated forms. In the M-step, at the (\(m+1\))th iteration, the closed-form update for \(\hat{{\varvec{\beta }}}^{(m+1)}\) stays exactly the same as in (16), and the update for \(\hat{{\sigma }}^{2(m+1)}\) is modified as

The standard errors of the parameter estimates are obtained using the supplemented EM algorithm (Dempster, Laird, & Rubin, 1977; Cai, 2008). The principal idea is summarized briefly as follows. The large-sample error covariance matrix of the MLE is known to be

where \({\varvec{I}}({\hat{\varvec{\psi }}}| {{\varvec{Y}}})\) is the Fisher information matrix based on the observed response data Y. The complete-data information \({\varvec{I}}_{c} ({\hat{\varvec{\psi }}})\) is a natural by-product of the E-step, as it is simply the negative second derivative of Eq. (12) with respect to all elements of \({\varvec{\varPsi }}\). \(\varvec{\Delta }({\hat{\varvec{\psi }}})\) is the fraction of missing information, which can be obtained via numerical differentiation as

where \(M(\varvec{\psi })\) defines the vector-valued EM map, \(\varvec{\psi }^{(m+1)}=M(\varvec{\psi }^{(m)})\); upon convergence, \({\hat{\varvec{\psi }}}=M({\hat{\varvec{\psi }}})\). For details on calculating \(\varvec{\Delta }({\hat{\varvec{\psi }}})\) in general, see Cai (2008) or Tian et al. (2013). We use a direct forward difference method (i.e., Eqs. 8 and 9 in Tian et al., 2013) with a perturbation tuning parameter \(\eta =1\). For the specific form of \({\varvec{I}}_{c} ({\hat{\varvec{\psi }}})\), see Appendix B.
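The supplemented EM idea can be sketched in Python with a generic forward-difference Jacobian of the EM map and the covariance \({\varvec{V}}={\varvec{I}}_{c}^{-1}({\varvec{I}}-\varvec{\Delta })^{-1}\), the form used in Meng and Rubin's SEM algorithm; we assume the missing large-sample covariance display takes this form. The sketch uses a small fixed step h rather than reproducing the Tian et al. (2013) perturbation scheme with \(\eta \). It is checked on a hypothetical toy EM (a normal mean with known variance and missing cases) whose answer is known in closed form.

```python
def em_jacobian(em_map, psi_hat, h=1e-4):
    """Forward-difference estimate of the EM-map Jacobian at the fixed point psi_hat.

    Entry [i][j] approximates dM_j / dpsi_i (Meng and Rubin's DM matrix).
    """
    base = em_map(psi_hat)
    J = []
    for i in range(len(psi_hat)):
        psi = list(psi_hat)
        psi[i] += h
        moved = em_map(psi)
        J.append([(moved[j] - base[j]) / h for j in range(len(psi_hat))])
    return J


def inverse(A):
    """Matrix inverse by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [[float(v) for v in A[i]] + [1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        pv = M[col][col]
        M[col] = [v / pv for v in M[col]]
        for r in range(n):
            if r != col:
                f = M[r][col]
                M[r] = [x - f * y for x, y in zip(M[r], M[col])]
    return [row[n:] for row in M]


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def sem_covariance(I_c, Delta):
    """V = I_c^{-1} (I - Delta)^{-1}: the SEM large-sample covariance of the MLE."""
    n = len(I_c)
    I_minus = [[(1.0 if i == j else 0.0) - Delta[i][j] for j in range(n)] for i in range(n)]
    return matmul(inverse(I_c), inverse(I_minus))


# Toy check with hypothetical data: EM for a normal mean (variance 1) with
# n = 10 cases, 4 of them missing. M(mu) = (sum of observed + 4 mu) / 10, so
# Delta = 0.4, I_c = 10, and V should equal 1 / n_observed = 1/6.
observed = [0.3, -0.2, 1.1, 0.4, 0.0, 0.6]
n_total = 10
n_missing = n_total - len(observed)


def em_map(psi):
    return [(sum(observed) + n_missing * psi[0]) / n_total]


mu_hat = [sum(observed) / len(observed)]     # fixed point of em_map
Delta = em_jacobian(em_map, mu_hat)
V = sem_covariance([[float(n_total)]], Delta)
print(Delta, V)
```

In the toy case the Jacobian equals the fraction of missing cases, and the SEM covariance reproduces the observed-data variance \(1/6\) without ever differentiating the observed-data likelihood directly.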

3.2.3 Method III: Moment Estimation Method

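Viewed from a slightly different perspective, the linear mixed effects model in Eq. (3) implies the following mean and covariance structure (with a common design matrix \({\varvec{X}}\)):

\[\varvec{\mu }_{\theta }={\varvec{X}}{\varvec{\beta }},\qquad {\varvec{\Sigma }}_{\theta }={\varvec{Z}}{\varvec{\Sigma }}_{u}{\varvec{Z}}^{\mathrm{t}}+\sigma ^{2}{\varvec{I}}_{T}.\]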

It implies that to recover the structural parameters \({\varvec{\Psi }} =({\varvec{\upbeta }}, \varvec{\Sigma }_{u}, \sigma ^{2})\), only \({\hat{\varvec{\mu }}}_{\theta }\) and \({{\hat{{{\varvec{\Sigma }}}}}}_{\theta } \) (i.e., the estimated population mean and covariance of \({\varvec{\theta }}\)) need to be obtained in stage I, rather than the individual point estimates \(\hat{\varvec{\theta }}_{i}\) and \(\hat{\Sigma }_{\theta _{i}}\). This is consistent with conventional practice in structural equation modeling (SEM), in which the input can be the mean vector and covariance matrix rather than the raw data. In our application, we assume that the \(\theta _{it}\)’s follow a multivariate normal distribution in the population. When this assumption holds, the mean and covariance are sufficient statistics; when it is violated, this method may still provide robust, consistent parameter estimates.
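The Python sketch below computes the model-implied moments \(\varvec{\mu }_{\theta }={\varvec{X}}{\varvec{\beta }}\) and \({\varvec{\Sigma }}_{\theta }={\varvec{Z}}{\varvec{\Sigma }}_{u}{\varvec{Z}}^{\mathrm t}+\sigma ^{2}{\varvec{I}}_{T}\) for linear growth with T = 4. The parameter values are hypothetical; the point is that stage II only has to match these moments to their stage I estimates.

```python
def implied_moments(X, Z, beta, Sigma_u, sigma2):
    """Model-implied mean X beta and covariance Z Sigma_u Z^t + sigma2 I_T."""
    T, q = len(Z), len(Z[0])
    mu = [sum(X[t][k] * beta[k] for k in range(len(beta))) for t in range(T)]
    Sigma = [[sum(Z[t][k] * Sigma_u[k][l] * Z[s][l] for k in range(q) for l in range(q))
              + (sigma2 if t == s else 0.0) for s in range(T)] for t in range(T)]
    return mu, Sigma


Z = [[1, t] for t in range(4)]               # linear growth, T = 4
mu, Sigma = implied_moments(Z, Z, beta=[0.0, 0.5],
                            Sigma_u=[[1.0, 0.1], [0.1, 0.2]], sigma2=0.3)
print(mu)
for row in Sigma:
    print([round(v, 3) for v in row])

# T means plus T(T+1)/2 covariances, versus 2 + 3 + 1 structural parameters
T = len(Z)
print(T + T * (T + 1) // 2, 2 + 3 + 1)
```

With T = 4, the 14 sample moments comfortably over-identify the 6 structural parameters, so the covariance structure is testable as well as estimable.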

In stage I, \({\varvec{\upmu }}_{{\varvec{\theta }}}\) and \({\varvec{\Sigma }}_{{\varvec{\theta }}}\) are estimated from the raw response data via the EM algorithm (Mislevy, Beaton, Kaplan, & Sheehan, 1992). In particular, without imposing any growth pattern on the latent traits over time, the full joint likelihood is

where \(\phi (\cdot )\) again denotes the multivariate normal density. Then, in the E-step of the (\(m+1\))th cycle, the conditional expectation of the complete-data log-likelihood, as a function of (\(\upmu _{\varvec{\theta }}, \Sigma _{\varvec{\theta }})\), is

where the integral can be approximated via Monte Carlo integration by drawing Q samples \(\varvec{\theta }^{q}\) from the multivariate normal distribution with mean \({\hat{\varvec{\mu }}}_{\theta }^{(m)} \) and covariance \({{\hat{\varvec{\Sigma }}}}_{\theta }^{(m)} \).

The M-step then maximizes the conditional expectation in (24) with respect to \((\varvec{\mu }_{\theta } ,{{\varvec{\Sigma }}}_{\theta } )\), which yields the following closed-form expressions:

The estimators in (25) and (26) are the maximum likelihood estimates of (\(\upmu _{\varvec{\theta }}, \Sigma _{\varvec{\theta }}\)) and are consistent as the sample size N increases, regardless of test length (Mislevy et al., 1992).
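The stage I EM can be sketched in Python as a minimal Monte Carlo EM. The sketch makes several assumptions: the 3PL item parameters are known (D = 1.7), each of the T occasions has its own item set, and at every cycle Q nodes are redrawn from the current \(N({\hat{\varvec{\mu }}}_{\theta }^{(m)}, {{\hat{\varvec{\Sigma }}}}_{\theta }^{(m)})\) and weighted by each examinee's response likelihood. The M-step uses the standard closed-form updates: the average posterior mean, and the average posterior second moment minus the outer product of the new mean. We take these to correspond to Eqs. (25) and (26), but that correspondence is an assumption. The function names, starting values, and simulated data are hypothetical.

```python
import numpy as np

D = 1.7  # logistic scaling constant used in the simulation design

def prob_3pl(theta, a, b, c):
    """3PL success probabilities: theta (Q, T); a, b, c (T, J) -> (Q, T, J)."""
    z = D * a[None] * (theta[:, :, None] - b[None])
    return c[None] + (1.0 - c[None]) / (1.0 + np.exp(-z))

def mc_em_population(Y, a, b, c, n_iter=40, Q=2000, seed=0):
    """Monte Carlo EM for the population mean and covariance of theta.

    Y: (N, T, J) array of 0/1 responses; a, b, c: (T, J) known item parameters.
    """
    rng = np.random.default_rng(seed)
    N, T, J = Y.shape
    Yf = Y.reshape(N, T * J)
    mu, Sigma = np.zeros(T), np.eye(T)                                        # starting values
    for _ in range(n_iter):
        # E-step: Q nodes from the current N(mu, Sigma), weighted by each response likelihood
        nodes = rng.multivariate_normal(mu, Sigma, size=Q)                    # (Q, T)
        P = np.clip(prob_3pl(nodes, a, b, c), 1e-12, 1 - 1e-12).reshape(Q, T * J)
        loglik = Yf @ np.log(P).T + (1.0 - Yf) @ np.log1p(-P).T              # (N, Q)
        w = np.exp(loglik - loglik.max(axis=1, keepdims=True))
        w /= w.sum(axis=1, keepdims=True)                                     # posterior weights per person
        # M-step: closed-form updates from the averaged posterior moments
        w_bar = w.mean(axis=0)                                                # (Q,)
        mu = w_bar @ nodes                                                    # mean of posterior means
        second = (nodes * w_bar[:, None]).T @ nodes                          # mean posterior E[theta theta^T]
        Sigma = second - np.outer(mu, mu)
        Sigma = (Sigma + Sigma.T) / 2                                         # guard against round-off asymmetry
    return mu, Sigma

def simulate_responses(theta, a, b, c, rng):
    """Draw 0/1 responses for true abilities theta (N, T)."""
    P = prob_3pl(theta, a, b, c)
    return (rng.random(P.shape) < P).astype(float)

# Usage with hypothetical values: T = 3 occasions, J = 25 items each, N = 1000 examinees.
rng = np.random.default_rng(2)
T, J, N = 3, 25, 1000
a, b, c = rng.uniform(1.5, 2.5, (T, J)), rng.normal(0, 1, (T, J)), rng.uniform(.1, .2, (T, J))
mu_true = np.array([0.0, 0.3, 0.6])
Sigma_true = np.array([[1.0, .6, .5], [.6, 1.0, .6], [.5, .6, 1.0]])
theta_true = rng.multivariate_normal(mu_true, Sigma_true, size=N)
Y = simulate_responses(theta_true, a, b, c, rng)
mu_hat, Sigma_hat = mc_em_population(Y, a, b, c)
print(np.round(mu_hat, 2))
print(np.round(Sigma_hat, 2))
```

Drawing the nodes from the current population distribution makes the importance weights proportional to the response likelihood alone. The updated covariance is a weighted covariance of the nodes, so it stays positive semidefinite at every cycle.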

In stage II, \(\hat{{\varvec{\varPsi }}}\) can be estimated with any off-the-shelf SEM package, using \({\hat{\varvec{\mu }}}_{\theta } \) and \({{\hat{\varvec{\Sigma }}}}_{\theta } \) as input. One example is the R package “lavaan” (Rosseel, 2012), which provides the ML estimates \(\hat{{\varvec{\varPsi }}}\). In essence, the generalized least squares solution for \({\varvec{\beta }}\) is \({\hat{\varvec{\beta }}}=({\varvec{X}}^{\mathrm{T}}{\varvec{V}}^{-1}{\varvec{X}})^{-1}{\varvec{X}}^{\mathrm{T}}{\varvec{V}}^{-1}{\hat{\varvec{\mu }}}_{\theta }\), and the MLEs \(\hat{\Sigma }_{u}\) and \(\hat{\sigma }^{2}\) can be found from the likelihood function

where “tr” denotes the trace of a matrix. Because (27) has no closed-form solution, the Newton–Raphson method is usually used (e.g., Lindstrom & Bates, 1988). The only input to (27) is \({{\hat{\varvec{\Sigma }}}}_{\theta } \) from stage I, and the method is computationally very fast. Because the individual latent scores \(\hat{\theta }_{i}\) are not needed in stage II estimation, the problem of their measurement error does not arise.
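The stage II fit can also be sketched without an SEM package. The sketch assumes the linear growth case \({\varvec{X}}={\varvec{Z}}=[{\varvec{1}}, {\varvec{t}}]\) with \(t=0,1,2,3\). It parameterizes \(\varvec{\Sigma }_{u}\) through a Cholesky factor with log-diagonal and \(\sigma ^{2}\) on the log scale, and it minimizes the standard normal-theory ML discrepancy with SciPy's BFGS. \({\varvec{\beta }}\) is profiled out with the GLS formula above, so the discrepancy includes a mean-structure term in addition to the covariance-only part of (27). All names and parameter values are hypothetical; in practice lavaan would be the natural tool.

```python
import numpy as np
from scipy.optimize import minimize

t = np.arange(4.0)                              # assumed time codes 0, 1, 2, 3
X = Z = np.column_stack([np.ones_like(t), t])   # intercept + linear slope (X = Z assumed)

def unpack(p):
    """p -> (Sigma_u, sigma2); Cholesky/log parameterization keeps both valid."""
    L = np.array([[np.exp(p[0]), 0.0], [p[1], np.exp(p[2])]])
    return L @ L.T, np.exp(p[3])

def implied_cov(Sigma_u, sigma2):
    return Z @ Sigma_u @ Z.T + sigma2 * np.eye(len(t))

def gls_beta(V, m):
    """GLS estimate (X' V^-1 X)^-1 X' V^-1 m."""
    Vinv_X = np.linalg.solve(V, X)
    return np.linalg.solve(X.T @ Vinv_X, Vinv_X.T @ m)

def ml_discrepancy(p, m, S):
    """Normal-theory ML discrepancy with beta profiled out by GLS (constants dropped)."""
    V = implied_cov(*unpack(p))
    r = m - X @ gls_beta(V, m)
    _, logdet = np.linalg.slogdet(V)
    return logdet + np.trace(np.linalg.solve(V, S)) + r @ np.linalg.solve(V, r)

def fit_moments(m, S):
    res = minimize(ml_discrepancy, x0=np.zeros(4), args=(m, S), method="BFGS")
    Sigma_u, sigma2 = unpack(res.x)
    return gls_beta(implied_cov(Sigma_u, sigma2), m), Sigma_u, sigma2

# Usage: feed exact population moments built from hypothetical parameter values.
beta_true = np.array([0.0, 0.15])
Sigma_u_true = np.array([[0.2, 0.02], [0.02, 0.1]])
sigma2_true = 0.15
m = X @ beta_true                         # plays the role of the stage I mean estimate
S = implied_cov(Sigma_u_true, sigma2_true)  # plays the role of the stage I covariance estimate
beta_hat, Sigma_u_hat, sigma2_hat = fit_moments(m, S)
print(beta_hat, Sigma_u_hat, sigma2_hat)
```

With exact moments, the discrepancy is minimized at the generating values, which makes the sketch easy to check. With stage I estimates as input, the same code returns the moment-based estimates of \({\varvec{\Psi }}\).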

Note that this moment estimation method could also be applied when \({\varvec{X}}_{i}\) differs across individuals, e.g., when a treatment effect is of interest. In this case, the sample mean of \({\varvec{X}}_{i}\), along with \(\hat{\upmu }_{\theta }\) estimated in stage I, is used as the mean structure input, whereas an expanded covariance matrix including \({{\hat{\varvec{\Sigma }}}}_{\theta } \) as well as the covariance between \({\varvec{X}}_{i}\) and \({\varvec{\theta }}\) is supplied to “lavaan.” Stage II estimation thus needs only minimal changes, whereas stage I estimation (i.e., Eqs. 25, 26) needs to be updated accordingly.

In sum, the two-stage methods introduced in Sects. 3.2.1 and 3.2.2 rely on the assumption that \(\hat{{\varvec{\theta }}}_{i}\) and \(\hat{\Sigma }_{\theta _{i}}\) are asymptotically unbiased. Whereas those methods might suffer under the divide-and-conquer strategy because of finite-sample bias in \(\hat{{\varvec{\theta }}}_{i}\) and \(\hat{\Sigma }_{\theta _{i}}\), the moment estimation method (Method III) should, in theory, be unaffected. One limitation of this method, however, is that the sample size must be large enough to allow accurate (and consistent) recovery of \({\hat{\varvec{\mu }}}_{\theta } \) and \({{\hat{\varvec{\Sigma }}}}_{\theta } \) in stage I; in particular, \({{\hat{\varvec{\Sigma }}}}_{\theta } \) has to be positive definite. The MMLE and EM methods, on the other hand, do not seem to be much affected by small sample sizes.

4 Simulation Study

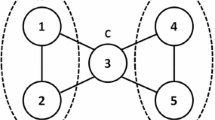

Two simulation studies were conducted to evaluate the performance of five methods: (1) direct maximization of the closed-form marginal likelihood (MMLE), (2) the EM algorithm, (3) the moment estimation method, (4) the naïve two-stage estimation, and (5) the one-stage MCMC estimation. The first simulation study used the unidimensional 3PL model as the measurement model and the latent growth curve model as the structural model, whereas the second used the two-dimensional compensatory IRT model together with the associative latent growth curve model. Throughout the simulation studies, all item parameters were fixed at their known values to prevent item parameter estimation error from contaminating the other target parameters. In addition, only dichotomous items were considered, although the 3PL and M3PL models could easily be replaced by polytomous response models if needed.

4.1 Study I

4.1.1 Design

The fixed and manipulated factors in the study were drawn from previous literature. Two factors were manipulated: examinee sample size (200 vs. 2000) and the covariance matrix of the random effects (Raudenbush & Liu, 2000; Ye, 2016). A sample size of 200 is typical of psychology research, whereas a sample size of 2000 is common in education research. The medium and small covariance matrices \(\varvec{\Sigma }_{u}\) were set as follows (Raudenbush & Liu, 2000; Ye, 2016),

The number of measurement waves was fixed at 4 (Khoo, West, Wu, & Kwok, 2006; Ye, 2016), and test length was fixed at 25 items, which is similar to the length of the science test in NELS (National Education Longitudinal Study).

In terms of fixed effects, the mean intercept was set at 0 (i.e., \(\beta _{0}=0\)), and mean slope was set at .15 (i.e., \(\beta _{1}=.15\)). Given the medium slope variance of .1 specified above, the mean slope of .15 leads to a medium standardized effect size of .5 (see Raudenbush & Liu, 2000). Regarding the 3PL item parameters, a-parameters were drawn from Uniform (1.5, 2.5), b-parameters were drawn from Normal (0, 1), and c-parameters were drawn from Uniform (.1, .2). The scaling factor D was set at 1.7. Residual variance was \(\sigma _{e}^{2}=\sigma ^{2}=.15\) (Kohli et al., 2015).
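The stated effect size follows from a one-line calculation. It assumes, as the citation of Raudenbush and Liu (2000) suggests, that the standardized effect is the mean slope divided by the standard deviation of the random slope, with \(\tau _{11}=.1\) the medium slope variance:

```latex
d = \frac{\beta_{1}}{\sqrt{\tau_{11}}} = \frac{.15}{\sqrt{.1}} \approx \frac{.15}{.316} \approx .47 \approx .5
```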

Details of the MCMC method, including the priors, are presented in the “Appendix C” section. As shown there, conjugate priors are used whenever possible to enable direct Gibbs sampling. However, because the logistic model is used throughout the paper, the Metropolis–Hastings algorithm is used to construct the Markov chains of certain parameters (i.e., \({\varvec{\theta }}\)). If the normal ogive model were used instead, the efficiency of MCMC would be further improved.

In stage I estimation, a combination of the maximum likelihood estimator (MLE) and the maximum a posteriori (MAP) estimator was used. That is, MLE was applied first, and if the absolute value of the estimate was larger than 3, the estimation switched to MAP with a normal prior N(0, 5). The focus of this report is the recovery of the structural model parameters, including the mean intercept (\(\beta _{0}\)), mean slope (\(\beta _{1}\)), residual variance (\(\sigma ^{2}\)), and covariance matrix of the random effects (\({\Sigma }_{u}\)). For these parameters, the average bias was computed as the mean of the bias estimates across all replications. Taking the mean slope parameter as an example (relative bias is undefined for \(\beta _{0}\), whose true value is 0), the relative bias and RMSE were computed as \(\frac{1}{R}\sum \nolimits _{r=1}^R {\frac{(\hat{{\beta }}_{1}^{r} -\beta _{1} )}{\beta _{1} }}\) and \(\sqrt{\frac{1}{R}\sum \nolimits _{r=1}^R {(\hat{{\beta }}_{1}^{r} -\beta _{1})^{2}}}\), where R denotes the total number of replications and \(\hat{{\beta }}_{1}^{r} \) denotes the estimate from the rth replication. Fifty replications were conducted per condition. In addition, the estimated standard error of every parameter was recorded in each replication, and the mean across replications was reported.
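The stage I MLE/MAP rule can be sketched for a single person and time point. Several choices in the sketch are assumptions and not the study's code: it uses the 3PL model with D = 1.7, maximizes over a bounded interval (-10, 10) with SciPy's `minimize_scalar`, and treats the 5 in N(0, 5) as the prior variance (it could also denote a standard deviation). It computes the MLE standard error from test information and the MAP standard error from test information plus prior precision. The function names and item values are hypothetical as well.

```python
import numpy as np
from scipy.optimize import minimize_scalar

D = 1.7  # logistic scaling constant

def p3pl(theta, a, b, c):
    """3PL probability of a correct response for each item at ability theta."""
    return c + (1 - c) / (1 + np.exp(-D * a * (theta - b)))

def neg_log_post(theta, y, a, b, c, prior_var=None):
    """Negative log-likelihood; adds a N(0, prior_var) log-prior term for MAP."""
    p = np.clip(p3pl(theta, a, b, c), 1e-12, 1 - 1e-12)
    value = -np.sum(y * np.log(p) + (1 - y) * np.log1p(-p))
    if prior_var is not None:
        value += theta ** 2 / (2 * prior_var)
    return value

def information_3pl(theta, a, b, c):
    """3PL test information at theta."""
    p = p3pl(theta, a, b, c)
    return np.sum((D * a) ** 2 * ((1 - p) / p) * ((p - c) / (1 - c)) ** 2)

def estimate_theta(y, a, b, c, cutoff=3.0, prior_var=5.0, search=(-10.0, 10.0)):
    """MLE first; if |MLE| > cutoff, switch to MAP under a N(0, prior_var) prior."""
    fit = minimize_scalar(neg_log_post, bounds=search, args=(y, a, b, c), method="bounded")
    if abs(fit.x) <= cutoff:
        return fit.x, 1 / np.sqrt(information_3pl(fit.x, a, b, c)), "MLE"
    fit = minimize_scalar(neg_log_post, bounds=search, args=(y, a, b, c, prior_var),
                          method="bounded")
    se = 1 / np.sqrt(information_3pl(fit.x, a, b, c) + 1 / prior_var)  # assumed posterior-based SE
    return fit.x, se, "MAP"

# Usage with hypothetical items: 25 items, a = 2, c = .15, difficulties from -2 to 2.
a, b, c = np.full(25, 2.0), np.linspace(-2, 2, 25), np.full(25, 0.15)
y_mixed = (b < 0.3).astype(float)          # easier items right, harder items wrong
y_perfect = np.ones(25)                    # all correct: the likelihood has no interior maximum
print(estimate_theta(y_mixed, a, b, c))    # handled by the MLE branch
print(estimate_theta(y_perfect, a, b, c))  # falls back to MAP
```

The all-correct pattern shows why the switch is needed: the likelihood keeps increasing in \(\theta \), so the bounded MLE runs to the edge of the search interval, and the prior is what pulls the estimate back to a finite value.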

4.1.2 Results

Table 1 presents the bias and relative bias (in parentheses) for the structural model parameters. Several trends can be seen. First, consistent with our expectation, the naïve two-stage method generated the largest bias for the residual variance \(\sigma ^{2}\) and, in most cases, the largest bias for the elements of the random effects covariance matrix \({\Sigma }_{u}\) (e.g., \(\tau _{00}\)). However, not all elements of \({\Sigma }_{u}\) suffered from high bias, which might be due to the unsystematic pattern of measurement errors across time (i.e., the measurement error does not consistently increase or decrease over time). Second, both the MMLE and EM methods performed well in most conditions, reducing the bias of \(\sigma ^{2}\) and of the elements of \({\Sigma }_{u}\). There is no appreciable difference between these two methods. Although the MMLE works with the closed-form marginal likelihood and therefore avoids numerical integration that is subject to Monte Carlo error, the optimization in the six-dimensional parameter space can still introduce numerical error. The EM, on the other hand, uses either Monte Carlo or closed-form integration in the E-step, but its closed-form M-step avoids numerical optimization. Numerical approximations therefore enter at different steps of the two methods, resulting in slight to no differences between them. Third, the moment estimation method generally yielded the most accurate parameter recovery, as this method does not depend on the assumption of normal measurement error. Hence, when the population distribution is assumed normal, this method is recommended. Unsurprisingly, the MCMC method also produced accurate parameter estimates and, when the sample size was large, the best parameter estimates among all methods. Only when the sample size was small and the covariance matrix of random effects was small did MCMC yield slightly higher bias in \(\hat{\Sigma }_{u}\). This could be explained by the known “regression toward the mean” (shrinkage) of Bayesian estimates, an effect that diminishes as the sample size increases.

In terms of the manipulated factors, the true value of the random effects covariance matrix did not seem to affect the results much, and neither did the sample size. The parameters (especially \(\beta _{0}\), \(\beta _{1}\), and \(\sigma ^{2}\)) from the moment estimation method improved slightly with a larger sample size, simply because the mean and covariance matrix of \(\theta \) were recovered better in stage I with a larger sample. The other three methods treated each individual \(\hat{\theta }_{i}\) from stage I as a fallible estimate from its own measurement error model (i.e., Eq. 5), so increasing the sample size does not reduce the measurement error. Overall, our results are similar to the conclusions of Diakow (2013), who used the gllamm command (Rabe-Hesketh, Skrondal, & Pickles, 2004) in Stata (StataCorp, 2011) with adaptive Gauss–Hermite quadrature.

On a separate note, because accurate estimation of \(\hat{\theta }_{i}\) and \(\hat{\Sigma }_{\theta _{i}}\) is pivotal to the success of the proposed MMLE and EM methods, Tables 2 and 3 present the recovery of \(\hat{\theta }_{i}\) and \(\hat{\Sigma }_{\theta _{i}}\). For Table 3, the bias of the measurement error estimate for person i is computed as \(\sqrt{I^{-1}(\hat{{\varvec{\theta }}}_{i})} -\sqrt{I^{-1}(\varvec{\theta }_{i})} \), where \(\varvec{\theta }_{i}\) is the true value for person i. This bias is averaged across all individuals, and the median of these averages is then taken across replications. The median is used instead of the mean because a few outliers could severely inflate the bias. As shown in Table 2, MCMC produced the smallest absolute bias and RMSE, simply because it uses information from all time points. The estimation precision of MLE/MAP is also acceptable. The RMSE is clearly larger at later time points; this is due to the way the item parameters were simulated, which resulted in a lack of “difficult” items. Regarding the recovery of the measurement error, \(\hat{\Sigma }_{\theta _{i}}\), Table 3 shows an average bias of about 10%. We therefore expect that if Warm’s WLE and a bias-corrected measurement error computation were used (Diakow, 2013; Wang, 2015), the improvement of MMLE and EM over the naïve method would be more salient.

Table 4 presents the average standard error (SE) of all structural model parameters for the different methods under different conditions. Consistent with our expectation, the naïve method generated higher SEs for all parameters than the MMLE and EM methods under all conditions. The SEs from MCMC were also slightly high because, by nature, they contain Monte Carlo sampling error. Again, the level of covariance (i.e., \(\Sigma _{u}\)) did not affect the magnitude of the SEs much, and EM yielded slightly lower SEs than MMLE, although the difference is marginal. The moment estimation method generated slightly higher SEs because it did not use all individual information from stage I, relying only on the mean and covariance estimates (i.e., “limited” information). For all methods, the SEs decreased as the sample size increased.

4.2 Study II

4.2.1 Design

In this second simulation study, the two-dimensional simple-structure IRT model was used. The test length was fixed at 40 at each measurement wave, with 20 items loading on each dimension. The item parameters for each dimension were simulated as in Study I, with one difference: the mean of the difficulty parameters increased over time and, at each time point, was set to the average of the mean \(\theta \) values of the two dimensions. In this way, the items aligned better with \(\theta \) at the respective time points. The number of measurement waves was again fixed at 4, and the fixed effects were set at \(\beta =[0,0, .15,.15]\), where the first two elements are the mean intercepts and the last two are the mean slopes. Residual variance was fixed at \(\sigma ^{2}=.15\) for simplicity. Because the size of the random effects covariance matrix had little effect on the results in Study I, we fixed the covariance matrix as

As shown above, the intercepts and slopes were uncorrelated, whereas the two intercepts were correlated, as were the two slopes. This simplification reduced the total number of parameters, which, to some extent, benefited the MMLE method, because in MMLE a larger number of parameters means searching a higher-dimensional space. The EM method was not affected, however, because of the closed-form solutions in both the E-step and the M-step. Nevertheless, the same constraints were imposed in the EM estimation to make the comparison fair.

4.2.2 Results

Table 5 presents the bias and relative bias (in parentheses) of the structural model parameters. First, as expected, the MCMC method produced the most accurate estimates of all parameters under both conditions. Second, consistent with the findings from the first simulation study, all methods produced acceptable fixed-effect estimates, and the moment estimation method yielded the second-smallest bias for \(\beta _{01}\) and \(\beta _{02}\). This may be because, with a slightly shorter test (20 items per dimension vs. 25 in Study I), the individual \(\hat{\theta }\) and its SE are prone to larger error, whereas the population mean and covariance estimates are less affected. However, the difference is not salient. The naïve method again yielded the largest positive bias for the residual variance (\(\sigma ^{2}\)) and the intercept variances (\(\sigma _{u_{01}}^{2}\) and \(\sigma _{u_{02}}^{2}\)). The moment estimation method, on the other hand, resulted in a slightly larger negative bias for the residual variance, but it generated accurate slope variance estimates. In contrast, the other three methods resulted in a slightly negative bias for the slope variances, and the naïve method even outperformed the other two by a small margin. These results agree with both Diakow (2013) and Verhelst (2010), who found that in hierarchical linear modeling, “within-cluster variance is overestimated by the naïve method while between-cluster variance is recovered.”

Tables 6 and 7 present the recovery of \(\varvec{\theta }_{i}\) and \({\varvec{\Sigma }}_{\varvec{\theta }_{i}}\), respectively. Unsurprisingly, MCMC generally produced more accurate \(\hat{{\varvec{\theta }} }_{i}\) estimates than the MLE/MAP method. The RMSE increases slightly at later time points, again because of a lack of suitable items for certain ranges of \(\theta \). As to the recovery of the measurement error, the relative bias is around 10% for the first three time points, similar to the results in Table 3, but at the last time point the relative bias drops considerably while the bias itself increases dramatically. This is again due to the mismatch between the item difficulties and \(\theta \) at time 4. In the LGC model from which the true \(\theta \)’s were simulated, the ranges of \(\theta \) are (\(-2.5\), 2.5), (\(-2.5\), 3), (\(-3\), 4), and (\(-4\), 6) for the four time points, respectively. However, the variance of item difficulty was fixed at 1 across all time points, so there were not enough items with extreme difficulty levels for extreme \(\theta \)’s at time 4. We anticipate that both the RMSE in Table 6 and the measurement error bias would decrease if items with a wider range of difficulty were added.

Table 8 presents the estimated standard errors of the structural parameters. Overall, the results are consistent with the previous finding that the naïve method generates somewhat larger standard errors, because “the biased estimates of the variance components might affect the estimated standard errors of the regression coefficients” (Diakow, 2013).

5 A Real Data Illustration

In this section, we briefly compare the performance of the five methods using a real data example from the National Education Longitudinal Study of 1988 (NELS 88). A nationally representative sample of approximately 24,500 students was tracked with multiple cognitive batteries from 8th to 12th grade (the first three waves) in 1988, 1990, and 1992. The science data were used in this section. The sample size was 7282 after initial data cleaning, and we used list-wise deletion to eliminate the effect of missing data (see Note 3). The data contain binary responses to 25 items in each year. The item parameters, treated as known, were obtained from the NELS 88 psychometric report (https://nces.ed.gov/pubs91/91468.pdf). The mean discrimination parameters were .85, .95, and .95 for the three measurement occasions, with standard deviations of .29, .30, and .30, respectively. The means and standard deviations of the difficulty parameters were (−.28, .10, .22) and (.90, .71, .96), respectively, and those of the guessing parameters were (.20, .19, .18) and (.14, .13, .12), respectively. In the stage I analysis, the unidimensional 3PL model was used, and we obtained both the MLE of individual ability (\(\hat{\theta }^{\mathrm{MLE}}\)) and the EM estimates of the population mean and covariance. The estimated population mean and covariance were \(\hat{\upmu } = (-\,.43, .08, .28)\) and \(\hat{\Sigma }=\left[ {\begin{array}{c@{\quad }c@{\quad }c} .92 &{} .77 &{} .77\\ .77 &{} .96 &{} .84\\ .77 &{} .84 &{} .96\\ \end{array} } \right] \), whereas the sample mean and covariance computed from \(\hat{\theta }^{\mathrm{MLE}}\) were \(\hat{\upmu }=(-\,.43, .09, .28)\) and \(\hat{\Sigma }=\left[ {\begin{array}{c@{\quad }c@{\quad }c} 1.25 &{} .89 &{} .86\\ .89 &{} 1.31 &{} .98\\ .86 &{} .98 &{} 1.26\\ \end{array} } \right] \). The two mean vectors are close, whereas the variances (and covariances) computed from \(\hat{\theta }^{\mathrm{MLE}}\) are larger.
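Here is a rough check on the diagonal elements. It assumes classical measurement error, \(\hat{\theta }_{t}=\theta _{t}+\varepsilon _{t}\) with \(\varepsilon _{t}\) uncorrelated with \(\theta _{t}\). Under that assumption, the variance of \(\hat{\theta }^{\mathrm{MLE}}\) exceeds the population variance by the average error variance:

```latex
\begin{aligned}
\operatorname{Var}(\hat{\theta}_{t}) &\approx \operatorname{Var}(\theta_{t}) + \mathrm{E}\!\left[\mathrm{SE}_{t}^{2}\right],\\
\text{wave 1: } 1.25 - .92 &= .33, \qquad
\text{wave 2: } 1.31 - .96 = .35, \qquad
\text{wave 3: } 1.26 - .96 = .30.
\end{aligned}
```

The implied average error variance is therefore roughly .3 per wave, i.e., an average SE of about .55 to .6. The off-diagonal elements are also larger in the MLE-based matrix (e.g., .89 vs. .77), which this simple decomposition does not explain.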

Table 9 presents the parameter estimates and their standard errors (in parentheses) for the five methods. As Table 9 shows, the fixed effects estimates from the different methods were close. The naïve method, as expected, yielded the largest residual variance and intercept variance estimates. Both MMLE and EM tended to yield smaller variance estimates, which is consistent with the findings of Diakow (2013). This is because the total variance in \(\hat{\theta }\) can be decomposed into measurement error, variation across individuals (random effects), and variation within individuals (residual error). By explicitly incorporating the measurement error term in the model, the other two variance components were reduced.

Also of note is that the measurement error estimated in stage I for extreme \(\hat{\theta }^{\mathrm{MLE}}\) values (i.e., close to \(-3\) or 3) could exceed 1 (in particular for measurement waves 2 and 3) because the tests provide little information for students with extreme abilities. In this case, the imprecision of the estimated measurement error could adversely affect the parameter estimates of the MMLE and EM methods (Diakow, 2013).

6 Discussion

In this paper, we considered three model estimation methods for (secondary) data analysis when the outcome variable in a linear mixed effects model is latent and therefore measured with error. All of them fall within the two-stage estimation scheme and thus share the advantages of the “divide-and-conquer” strategy. These advantages include convenience of model calibration and fit evaluation, avoidance of improper solutions, and convenience for secondary data analysis. The last aspect is especially appealing from a practical perspective because the raw response data are often restricted-use and not publicly available, whereas \(\hat{\theta }\) (or a linear transformation of it) and its SE often are.

The three methods explored in this study overcome the limitation of the naïve two-stage estimation, which ignores the measurement error in the latent trait estimates (\(\hat{\theta }\)) when treating them as dependent variables. It is known that ignoring the measurement error in \(\hat{\theta }\) when it is used as a dependent variable still yields consistent and unbiased estimates of the fixed effects (i.e., \({\varvec{\beta }}\)), but the standard errors of \({\varvec{\beta }}\) will be inflated, and the random effects estimates (i.e., \(\hat{{\varvec{\varSigma }}}_{u}\)) as well as the residual variance will be distorted. For the MMLE and EM methods, the point estimate \(\hat{\theta }_{i}\) and its corresponding measurement error for each student at each time point are obtained in the stage I measurement model calibration, and these two pieces of information are the key inputs to stage II estimation. The moment estimation method, on the other hand, needs only the population estimates of the mean and covariance matrix from stage I as input.

To elaborate, the MMLE method builds on the assumption of (multivariate) normal measurement errors, so that the marginal joint likelihood of the model parameters can be written in closed form. This closed-form marginal likelihood is then maximized directly to obtain the parameter estimates. Neither the curse of dimensionality (i.e., numerical approximation of a high-dimensional integral) nor lengthy sampling iterations is an issue. Compared with MMLE, the EM method is more flexible because it does not require (multivariate) normal measurement error, an assumption that may not hold in practice, especially when there are few items per dimension. Although in this paper, including the simulation studies, we still assumed that the measurement error of \(\hat{\theta }\) is (multivariate) normal in order to check the feasibility of the algorithm, the EM method can be modified to accommodate non-normal measurement error.
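A minimal sketch of the MMLE idea follows. It assumes a linear growth model with \({\varvec{X}}={\varvec{Z}}=[{\varvec{1}}, {\varvec{t}}]\), \(t=0,1,2,3\), and normal measurement error with known person-specific variances on the diagonal, so that \(\hat{\varvec{\theta }}_{i}\sim N({\varvec{X}}{\varvec{\beta }}, {\varvec{Z}}\varvec{\Sigma }_{u}{\varvec{Z}}^{\mathrm{T}}+\sigma ^{2}{\varvec{I}}+\mathrm{diag}(\mathrm{SE}_{i}^{2}))\). The six parameters (\(\beta _{0}\), \(\beta _{1}\), three Cholesky elements of \(\varvec{\Sigma }_{u}\), and \(\sigma ^{2}\)) are optimized with SciPy's BFGS. The data, parameter values, and function names are hypothetical, and setting the error variances to zero gives a naïve fit for comparison.

```python
import numpy as np
from scipy.optimize import minimize

t = np.arange(4.0)                            # assumed time codes 0, 1, 2, 3
Z = np.column_stack([np.ones_like(t), t])     # intercept + linear slope; also used as X

def unpack(p):
    """p = (beta0, beta1, log l00, l10, log l11, log sigma2) -> beta, Sigma_u, sigma2."""
    L = np.array([[np.exp(p[2]), 0.0], [p[3], np.exp(p[4])]])
    return p[:2], L @ L.T, np.exp(p[5])

def neg_marginal_loglik(p, theta_hat, se2):
    """theta_hat_i ~ N(X beta, Z Sigma_u Z' + sigma2 I + diag(se2_i)); constants dropped."""
    beta, Sigma_u, sigma2 = unpack(p)
    V = Z @ Sigma_u @ Z.T + sigma2 * np.eye(len(t))
    V_i = V[None] + se2[:, :, None] * np.eye(len(t))  # person-specific heteroscedastic error
    r = theta_hat - Z @ beta                           # (N, T) residuals
    _, logdet = np.linalg.slogdet(V_i)
    quad = np.sum(r * np.linalg.solve(V_i, r[..., None])[..., 0], axis=1)
    return 0.5 * np.sum(logdet + quad)

def fit(theta_hat, se2):
    res = minimize(neg_marginal_loglik, np.zeros(6), args=(theta_hat, se2), method="BFGS")
    return unpack(res.x)

# Usage with simulated data (all values hypothetical).
rng = np.random.default_rng(0)
N = 2000
beta_true = np.array([0.0, 0.15])
Sigma_u_true = np.array([[0.2, 0.02], [0.02, 0.1]])
sigma2_true = 0.15
u = rng.multivariate_normal(np.zeros(2), Sigma_u_true, size=N)
theta = Z @ beta_true + u @ Z.T + rng.normal(0.0, np.sqrt(sigma2_true), (N, 4))
se2 = rng.uniform(0.05, 0.3, (N, 4))                   # squared stage I SEs (hypothetical)
theta_hat = theta + rng.normal(0.0, np.sqrt(se2))
beta_hat, Sigma_u_hat, sigma2_hat = fit(theta_hat, se2)
_, _, sigma2_naive = fit(theta_hat, np.zeros_like(se2))  # naive fit: ignore measurement error
print(beta_hat, Sigma_u_hat, sigma2_hat, sigma2_naive)
```

In this setup, the naive fit pushes the average measurement error variance into \(\hat{\sigma }^{2}\), whereas the MMLE fit separates the two. This mirrors the residual-variance pattern reported for the naïve method in the simulations.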

The modification can be based on importance sampling. The critical piece of the importance sampling machinery is the change-of-measure sampling distribution, \(H(\varvec{\theta }_{i},{\varvec{u}}_{i})\). Regardless of whether the multivariate normality assumption is satisfied, \(H(\varvec{\theta }_{i},{\varvec{u}}_{i})\) can take the form of a joint multivariate normal distribution, because it serves as a close approximation to the actual (and sometimes complicated) joint distribution of \((\varvec{\theta }_{i},{\varvec{u}}_{i})\). Moreover, the random values drawn from \(H(\varvec{\theta }_{i},{\varvec{u}}_{i})\) are all independent, as opposed to the correlated draws produced by the Gibbs or Metropolis–Hastings samplers in MCMC. The form of \(H(\varvec{\theta }_{i},{\varvec{u}}_{i})\) can be derived from the stage I results, and drawing samples from a multivariate normal distribution is very easy; hence, the numerical approximation to the expectation in the EM becomes quite straightforward.
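The importance sampling idea can be illustrated for a single person. To keep the sketch short, it integrates \({\varvec{u}}_{i}\) out and samples \(\varvec{\theta }_{i}\) alone, whereas the text's \(H\) is joint in \((\varvec{\theta }_{i},{\varvec{u}}_{i})\). The proposal \(H=N(\hat{\varvec{\theta }}_{i}, 2\hat{\Sigma }_{\theta _{i}})\), the inflation factor 2, and all numeric values are illustrative assumptions. Because the normal case has a closed-form posterior mean, the self-normalized importance sampling estimate can be checked against it. With non-normal measurement error, only the measurement-error log-density term would change.

```python
import numpy as np
from scipy.stats import multivariate_normal as mvn

def is_posterior_mean(theta_hat_i, S_i, mean_i, V, Q=50000, inflate=2.0, seed=0):
    """Self-normalized importance sampling for E[theta_i | theta_hat_i] (u_i integrated out)."""
    rng = np.random.default_rng(seed)
    H_cov = inflate * S_i                                   # proposal H: N(theta_hat_i, inflate * S_i)
    draws = rng.multivariate_normal(theta_hat_i, H_cov, size=Q)
    zero = np.zeros(len(theta_hat_i))
    log_target = (mvn.logpdf(draws - theta_hat_i, mean=zero, cov=S_i)   # measurement error model
                  + mvn.logpdf(draws, mean=mean_i, cov=V))              # structural (growth) model
    log_w = log_target - mvn.logpdf(draws, mean=theta_hat_i, cov=H_cov)
    w = np.exp(log_w - log_w.max())
    w /= w.sum()
    return w @ draws, 1.0 / np.sum(w ** 2)                 # estimate and effective sample size

def exact_posterior_mean(theta_hat_i, S_i, mean_i, V):
    """Closed-form Gaussian posterior mean, used only to check the sketch."""
    P = np.linalg.inv(S_i) + np.linalg.inv(V)
    return np.linalg.solve(P, np.linalg.solve(S_i, theta_hat_i) + np.linalg.solve(V, mean_i))

# Usage with hypothetical values: four waves, linear growth, one person's stage I output.
t = np.arange(4.0)
Z = np.column_stack([np.ones_like(t), t])
V = Z @ np.array([[0.2, 0.02], [0.02, 0.1]]) @ Z.T + 0.15 * np.eye(4)
mean_i = Z @ np.array([0.0, 0.15])
theta_hat_i = np.array([0.4, -0.1, 0.6, 0.9])
S_i = np.diag([0.1, 0.2, 0.15, 0.3])
est, ess = is_posterior_mean(theta_hat_i, S_i, mean_i, V)
exact = exact_posterior_mean(theta_hat_i, S_i, mean_i, V)
print(np.round(est, 3), np.round(exact, 3), round(ess))
```

The draws are independent, so the effective sample size \(1/\sum w^{2}\) is a direct diagnostic of how well \(H\) approximates the target. A very small value would suggest recentering or widening the proposal.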

The proposed MMLE and EM methods are based on a measurement error model that is essentially a random effects meta-regression (Raudenbush & Bryk, 1985; Raudenbush & Bryk, 2002, chapter 7), and they belong to the broader framework of second-stage estimation in the presence of heteroscedasticity (Buonaccorsi, 1996). In particular, Buonaccorsi (1996) derived unbiased estimates of the structural model parameters (i.e., \(\Sigma _{u}\)) under several specific forms of heteroscedasticity. Because the conditional standard error of measurement of the 3PL model is a nonlinear function of both the item parameters and \(\uptheta \), Buonaccorsi’s (1996) results may not apply directly. However, the key message is that the analytic results hold under the assumption that the stage I estimators and their standard errors are conditionally unbiased. It is therefore of paramount importance to obtain reliable \(\hat{\theta }\) estimates in stage I. Diakow (2013) suggested using weighted likelihood estimation (WLE; Warm, 1989), which is a promising direction to examine in future work for both unidimensional and multidimensional models (Wang, 2015).

The plausible value multiple-imputation method is another way of addressing measurement error in large-scale educational statistical inference. The statistical rationale of this method is that, as long as the plausible values are constructed from the results of a comprehensive marginal analysis, population characteristics can be estimated accurately without attempting to produce accurate point estimates for individual students (Sirotnik & Wellington, 1977; Mislevy et al., 1992). Because most imputation procedures available in standard statistical software packages (e.g., SAS, Stata, and SPSS) assume that observations are independent, research on imputation strategies in the context of linear mixed effects models (or multilevel models) is still limited. From a theoretical perspective, using a multilevel model at the imputation stage is recommended to ensure congeniality between the imputation model and the analyst’s model (Meng, 1994; Drechsler, 2015). Several studies have demonstrated that plausible values drawn from a simplified model that ignores higher-level dependency yield substantial bias for random effects and negligible bias for fixed effects in secondary analysis (Monseur & Adams, 2009; Diakow, 2010; Drechsler, 2015). Future research could compare the proposed methods with the plausible value approach.

The two methods considered in the paper (MMLE and EM) account for the potentially non-constant error variance of the dependent variable by including a measurement error model with heteroscedastic variance at the lowest level of the multilevel model. We consider these two methods convenient and useful alternatives to the well-studied multiple-imputation method. One important advantage of the proposed methods is that they do not require a correct conditioning model, which the multiple-imputation method does. This matters because it is almost infeasible to find, and to sample from, a correct conditioning model that covers all possible nesting structures, given that secondary analyses are impossible to predict. However, these two methods do rely on the precision of \(\hat{\theta }\) and its SE estimates.

In this paper, we provide technical details of the three two-stage methods so that interested readers can replicate and extend our study to other types of linear or nonlinear mixed effects models. The source code for all methods will also be made available to readers upon request. Alternatively, the combined model (e.g., Eq. 7) could potentially be fitted with off-the-shelf specialized software that can handle heteroscedastic variance at the lowest level, such as the gllamm command (Rabe-Hesketh et al., 2004) in Stata (StataCorp, 2011) and HLM (Raudenbush, Bryk, & Congdon, 2004).

Two limitations of the study are worth mentioning. First, the IRT item parameters are assumed known throughout the study. If the calibration sample is so small that sampling error in the item parameters can no longer be ignored, bootstrap-calibrated interval estimates for \(\theta \) (Liu & Yang, 2018) could be applied in stage I of the proposed two-stage framework. Second, while we focused only on the point estimates and standard errors of the model parameters, future studies could go one step further and evaluate the power to detect significant covariates (Ye, 2016). For that purpose, the simulation design would manipulate the effect size of the covariate (treatment effect) and the amount of measurement error (which could be manipulated through test length).

Notes

The T-score is a standardized score that is, in fact, a transformation of an IRT \(\theta \) score.

In principle, \({\varvec{\Sigma }}_{u}\) needs to be constrained to be nonnegative definite. However, this is not a box constraint that the “optim” function can handle. We therefore impose constraints on the variance and correlation terms.

We used list-wise deletion because we wanted to create a complete data set for illustration. Our intention was to evaluate the performance of the different methods without possible interference from missing data. Because we used the NELS-provided item parameters and because our structural model is simple, the possible bias introduced by list-wise deletion can likely be ignored.

References

Adams, R. J., Wilson, M., & Wu, M. (1997). Multilevel item response models: An approach to errors in variables regression. Journal of Educational and Behavioral Statistics, 22(1), 47–76.

Anderson, J. C., & Gerbing, D. W. (1984). The effect of sampling error on convergence, improper solutions, and goodness-of-fit indices for maximum likelihood confirmatory factor analysis. Psychometrika, 49(2), 155–173.

Anderson, J. C., & Gerbing, D. W. (1988). Structural equation modeling in practice: A review and recommended two-step approach. Psychological Bulletin, 103, 411–423.

Bacharach, V. R., Baumeister, A. A., & Furr, R. M. (2003). Racial and gender science achievement gaps in secondary education. The Journal of Genetic Psychology, 164(1), 115–126.

Baker, F. B., & Kim, S.-H. (2004). Item response theory: Parameter estimation techniques. New York: Dekker.

Bianconcini, S., & Cagnone, S. (2012). A general multivariate latent growth model with applications to student achievement. Journal of Educational and Behavioral Statistics, 37, 339–364.

Bollen, K. A. (1989). Structural equations with latent variables. New York: Wiley.

Broyden, C. G. (1970). The convergence of a class of double-rank minimization algorithms 1. General considerations. IMA Journal of Applied Mathematics, 6(1), 76–90.

Buonaccorsi, J. P. (1996). Measurement error in the response in the general linear model. Journal of the American Statistical Association, 91(434), 633–642.

Burt, R. S. (1973). Confirmatory factor-analytic structures and the theory construction process. Sociological Methods and Research, 2(2), 131–190.

Burt, R. S. (1976). Interpretational confounding of unobserved variables in structural equation models. Sociological Methods and Research, 5(1), 3–52.

Byrd, R. H., Lu, P., Nocedal, J., & Zhu, C. (1995). A limited memory algorithm for bound constrained optimization. SIAM Journal on Scientific Computing, 16, 1190–1208.

Cai, L. (2008). SEM of another flavour: Two new applications of the supplemented EM algorithm. British Journal of Mathematical and Statistical Psychology, 61(2), 309–329.

Carroll, R., Ruppert, D., Stefanski, L., & Crainiceanu, C. (2006). Measurement error in nonlinear models: A modern perspective (2nd ed.). London: Chapman and Hall.

Chang, H., & Stout, W. (1993). The asymptotic posterior normality of the latent trait in an IRT model. Psychometrika, 58, 37–52.

Cohen, A. S., Bottge, B. A., & Wells, C. S. (2001). Using item response theory to assess effects of mathematics instruction in special populations. Exceptional Children, 68(1), 23–44. https://doi.org/10.1177/001440290106800102.

Congdon, P. (2001). Bayesian statistical modeling. Chichester: Wiley.

De Boeck, P., & Wilson, M. (2004). A framework for item response models. New York: Springer.

De Fraine, B., Van Damme, J., & Onghena, P. (2007). A longitudinal analysis of gender differences in academic self-concept and language achievement: A multivariate multilevel latent growth approach. Contemporary Educational Psychology, 32(1), 132–150.

Dempster, A. P., Laird, N. M., & Rubin, D. B. (1977). Maximum likelihood from incomplete data via the EM algorithm. Journal of the Royal Statistical Society Series B (Methodological), 39, 1–38.

Devanarayan, V., & Stefanski, L. (2002). Empirical simulation extrapolation for measurement error models with replicate measurements. Statistics and Probability Letters, 59, 219–225.

Diakow, R. (2010). The use of plausible values in multilevel modeling. Unpublished master's thesis. Berkeley: University of California.

Diakow, R. P. (2013). Improving explanatory inferences from assessments. Unpublished doctoral dissertation. University of California, Berkeley.

Drechsler, J. (2015). Multiple imputation of multilevel missing data—Rigor versus simplicity. Journal of Educational and Behavioral Statistics, 40(1), 69–95.

Fan, X., Chen, M., & Matsumoto, A. R. (1997). Gender differences in mathematics achievement: Findings from the National Education Longitudinal Study of 1988. Journal of Experimental Education, 65(3), 229–242.

Fletcher, R. (1970). A new approach to variable metric algorithms. The Computer Journal, 13, 317.

Fox, J.-P. (2010). Bayesian item response theory modeling: Theory and applications. New York: Springer.

Fox, J.-P., & Glas, C. A. (2001). Bayesian estimation of a multilevel IRT model using Gibbs sampling. Psychometrika, 66(2), 271–288.

Fox, J.-P., & Glas, C. A. (2003). Bayesian modeling of measurement error in predictor variables using item response theory. Psychometrika, 68(2), 169–191.

Fuller, W. (2006). Measurement error models (2nd ed.). New York, NY: Wiley.

Goldfarb, D. (1970). A family of variable metric updates derived by variational means. Mathematics of Computation, 24, 23–26.

Goldhaber, D. D., & Brewer, D. J. (1997). Why don’t schools and teachers seem to matter? Assessing the impact of unobservables on educational productivity. The Journal of Human Resources, 32(3), 505–523.

Hill, H. C., Rowan, B., & Ball, D. L. (2005). Effects of teachers’ mathematical knowledge for teaching on student achievement. American Educational Research Journal, 42(2), 371–406.

Hong, G., & Yu, B. (2007). Early-grade retention and children’s reading and math learning in elementary years. Educational Evaluation and Policy Analysis, 29, 239–261.

Hsiao, Y., Kwok, O., & Lai, M. (2018). Evaluation of two methods for modeling measurement errors when testing interaction effects with observed composite scores. Educational and Psychological Measurement, 78, 181–202.

Jeynes, W. H. (1999). Effects of remarriage following divorce on the academic achievement of children. Journal of Youth and Adolescence, 28(3), 385–393. https://doi.org/10.1023/A:1021641112640.

Kamata, A. (2001). Item analysis by the hierarchical generalized linear model. Journal of Educational Measurement, 38, 79–93.

Khoo, S., West, S., Wu, W., & Kwok, O. (2006). Longitudinal methods. In M. Eid & E. Diener (Eds.), Handbook of psychological measurement: A multimethod perspective (pp. 301–317). Washington, DC: APA.

Koedel, C., Leatherman, R., & Parsons, E. (2012). Test measurement error and inference from value-added models. The B. E. Journal of Economic Analysis and Policy, 12, 1–37.

Kohli, N., Hughes, J., Wang, C., Zopluoglu, C., & Davison, M. L. (2015). Fitting a linear–linear piecewise growth mixture model with unknown knots: A comparison of two common approaches to inference. Psychological Methods, 20(2), 259.

Kolen, M. J., Hanson, B. A., & Brennan, R. L. (1992). Conditional standard errors of measurement for scale scores. Journal of Educational Measurement, 29, 285–307.

Lee, S., & Song, X. (2003). Bayesian analysis of structural equation models with dichotomous variables. Statistics in Medicine, 22, 3073–3088.

Lindstrom, M. J., & Bates, D. (1988). Newton–Raphson and EM algorithms for linear mixed-effects models for repeated measure data. Journal of the American Statistical Association, 83, 1014–1022.

Liu, Y., & Yang, J. (2018). Bootstrap-calibrated interval estimates for latent variable scores in item response theory. Psychometrika, 83, 333–354.

Lockwood, J. R., & McCaffrey, D. F. (2014). Correcting for test score measurement error in ANCOVA models for estimating treatment effects. Journal of Educational and Behavioral Statistics, 39, 22–52.

Lu, I. R., Thomas, D. R., & Zumbo, B. D. (2005). Embedding IRT in structural equation models: A comparison with regression based on IRT scores. Structural Equation Modeling, 12(2), 263–277.

Magis, D., & Raîche, G. (2012). On the relationships between Jeffreys modal and weighted likelihood estimation of ability under logistic IRT models. Psychometrika, 77, 163–169.

Meng, X. (1994). Multiple-imputation inferences with uncongenial sources of input. Statistical Science, 10, 538–573.

Meng, X., & Rubin, D. (1993). Maximum likelihood estimation via the ECM algorithm: A general framework. Biometrika, 80, 267–278.

Mislevy, R. J., Beaton, A. E., Kaplan, B., & Sheehan, K. M. (1992). Estimating population characteristics from sparse matrix samples of item responses. Journal of Educational Measurement, 29(2), 133–161.

Monseur, C., & Adams, R. J. (2009). Plausible values: How to deal with their limitations. Journal of Applied Measurement, 10(3), 320–334.

Murphy, K. (2007). Conjugate Bayesian analysis of the Gaussian distribution. Online file at https://www.cs.ubc.ca/~murphyk/Papers/bayesGauss.pdf

Nelder, J. A., & Mead, R. (1965). A simplex algorithm for function minimization. Computer Journal, 7, 308–313.

Nussbaum, E., Hamilton, L., & Snow, R. (1997). Enhancing the validity and usefulness of large-scale educational assessment: IV. NELS:88 science achievement to 12th grade. American Educational Research Journal, 34, 151–173.

Pastor, D. A., & Beretvas, N. S. (2006). Longitudinal Rasch modeling in the context of psychotherapy outcomes assessment. Applied Psychological Measurement, 30, 100–120.

Pinheiro, J. C., & Bates, D. M. (1995). Approximations to the log-likelihood function in the nonlinear mixed-effects model. Journal of Computational and Graphical Statistics, 4(1), 12–35.

Rabe-Hesketh, S., & Skrondal, A. (2008). Multilevel and longitudinal modeling using Stata. College Station, TX: Stata Press.

Rabe-Hesketh, S., Skrondal, A., & Pickles, A. (2004). GLLAMM manual. Oakland/Berkeley: University of California/Berkeley Electronic Press.

Raudenbush, S. W., & Bryk, A. S. (1985). Empirical Bayes meta-analysis. Journal of Educational and Behavioral Statistics, 10, 75–98.

Raudenbush, S. W., & Bryk, A. S. (2002). Hierarchical linear models: Applications and data analysis methods. Thousand Oaks, CA: Sage.

Raudenbush, S. W., Bryk, A. S., & Congdon, R. (2004). HLM 6 for Windows (computer software). Lincolnwood, IL: Scientific Software International.

Raudenbush, S. W., & Liu, X. (2000). Statistical power and optimal design for multisite randomized trials. Psychological Methods, 5(2), 199.

Rijmen, F., Vansteelandt, K., & De Boeck, P. (2008). Latent class models for diary method data: Parameter estimation by local computations. Psychometrika, 73(2), 167–182.

Rosseel, Y. (2012). lavaan: An R package for structural equation modeling. Journal of Statistical Software, 48(2), 1–36. https://doi.org/10.18637/jss.v048.i02.

Shang, Y. (2012). Measurement error adjustment using the SIMEX method: An application to student growth percentiles. Journal of Educational Measurement, 49, 446–465.

Shanno, D. F. (1970). Conditioning of quasi-Newton methods for function minimization. Mathematics of Computation, 24, 647–656.

Sirotnik, K., & Wellington, R. (1977). Incidence sampling: an integrated theory for “matrix sampling”. Journal of Educational Measurement, 14, 343–399.

Skrondal, A., & Kuha, J. (2012). Improved regression calibration. Psychometrika, 77, 649–669.

Skrondal, A., & Laake, P. (2001). Regression among factor scores. Psychometrika, 66(4), 563–575.

Skrondal, A., & Rabe-Hesketh, S. (2004). Generalized latent variable modeling: Multilevel, longitudinal, and structural equation models. Boca Raton: CRC Press.

StataCorp. (2011). Stata statistical software: Release 12. College Station, TX: StataCorp LP.

Stoel, R. D., Garre, F. G., Dolan, C., & Van Den Wittenboer, G. (2006). On the likelihood ratio test in structural equation modeling when parameters are subject to boundary constraints. Psychological Methods, 11(4), 439.

Thompson, N., & Weiss, D. (2011). A framework for the development of computerized adaptive tests. Practical Assessment, Research and Evaluation, 16(1). http://pareonline.net/getvn.asp?v=16&n=1.

Tian, W., Cai, L., Thissen, D., & Xin, T. (2013). Numerical differentiation methods for computing error covariance matrices in item response theory modeling: An evaluation and a new proposal. Educational and Psychological Measurement, 73(3), 412–439.

van der Linden, W. J., & Glas, C. A. W. (Eds.). (2010). Elements of adaptive testing (Statistics for social and behavioral sciences series). New York: Springer.

Verhelst, N. (2010). IRT models: Parameter estimation, statistical testing and application in EER. In B. P. Creemers, L. Kyriakides, & P. Sammons (Eds.), Methodological advances in educational effectiveness research (pp. 183–218). New York: Routledge.

von Davier, M., & Sinharay, S. (2007). An importance sampling EM algorithm for latent regression models. Journal of Educational and Behavioral Statistics, 32(3), 233–251.

Wang, C. (2015). On latent trait estimation in multidimensional compensatory item response models. Psychometrika, 80, 428–449.

Wang, C., Kohli, N., & Henn, L. (2016). A second-order longitudinal model for binary outcomes: Item response theory versus structural equation modeling. Structural Equation Modeling: A Multidisciplinary Journal, 23, 455–465.

Wang, C., & Nydick, S. (2015). Comparing two algorithms for calibrating the restricted non-compensatory multidimensional IRT model. Applied Psychological Measurement, 39, 119–134.

Warm, T. A. (1989). Weighted likelihood estimation of ability in item response theory. Psychometrika, 54, 427–450.

Ye, F. (2016). Latent growth curve analysis with dichotomous items: Comparing four approaches. British Journal of Mathematical and Statistical Psychology, 69, 43–61.

Zwinderman, A. H. (1991). A generalized Rasch model for manifest predictors. Psychometrika, 56(4), 589–600.

Acknowledgements

This project is supported by IES R305D160010 and NSF SES-1659328.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Closed-form Marginal Likelihood

In this appendix, we provide detailed derivations of the closed-form marginal likelihood for a general model in which the design matrices for the fixed and random effects in the latent growth curve model differ, i.e., Eq. (3) in the paper is updated as

\[\varvec{\theta }_i = \varvec{X}_i\varvec{\beta } + \varvec{Z}_i\varvec{u}_i + \varvec{e}_i. \tag{1}\]

The subscript i in \(\varvec{X}_i\) and \(\varvec{Z}_i\) indicates that the model allows for an unbalanced design.

Given (1) and the measurement error model, the marginal likelihood of the structural parameters, \(L(\beta , \varvec{\Sigma }_u, \sigma ^2)\), is proportional to

where \(|\cdot |\) denotes the determinant of a matrix and \(||\theta ||^2 = \theta ^t\theta \).
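As a numerical cross-check on the derivation, here is a minimal sketch under explicitly stated assumptions, not a reproduction of the authors' expression. We assume the stage-I measurement error model \(\hat{\varvec{\theta }}_i = \varvec{\theta }_i + \varvec{\varepsilon }_i\) with \(\varvec{\varepsilon }_i \sim N(\varvec{0}, \varvec{\Lambda }_i)\), where \(\varvec{\Lambda }_i\) (our notation) is the diagonal matrix of squared stage-I standard errors treated as known, together with \(\varvec{u}_i \sim N(\varvec{0}, \varvec{\Sigma }_u)\) and \(\varvec{e}_i \sim N(\varvec{0}, \sigma ^2\varvec{I})\). Integrating out \(\varvec{\theta }_i\) and \(\varvec{u}_i\) under these Gaussian assumptions gives \(\hat{\varvec{\theta }}_i \sim N(\varvec{X}_i\varvec{\beta }, \varvec{Z}_i\varvec{\Sigma }_u\varvec{Z}_i^t + \sigma ^2\varvec{I} + \varvec{\Lambda }_i)\), which is what completing the square in \(\varvec{u}_i\) should reduce to. The function name `marginal_loglik` and its argument names are hypothetical.

```python
import numpy as np


def marginal_loglik(theta_hat, se2, X, Z, beta, Sigma_u, sigma2):
    """Closed-form marginal log-likelihood for one person (illustrative sketch).

    Assumed model (hypothetical notation):
        theta_hat = theta + eps,        eps ~ N(0, diag(se2)), se2 known
        theta     = X @ beta + Z @ u + e,
        u ~ N(0, Sigma_u),              e ~ N(0, sigma2 * I)
    """
    theta_hat = np.asarray(theta_hat, dtype=float)
    n = theta_hat.shape[0]
    # Marginal mean after integrating out u and theta.
    mean = X @ beta
    # Marginal covariance: random effects + model residual + measurement error.
    V = Z @ Sigma_u @ Z.T + sigma2 * np.eye(n) + np.diag(se2)
    resid = theta_hat - mean
    sign, logdet = np.linalg.slogdet(V)
    if sign <= 0:
        raise ValueError("marginal covariance is not positive definite")
    # Quadratic form resid' V^{-1} resid, computed without forming V^{-1}.
    quad = resid @ np.linalg.solve(V, resid)
    return -0.5 * (n * np.log(2.0 * np.pi) + logdet + quad)
```

The sample log-likelihood is the sum of these per-person terms; it can then be maximized numerically over \(\varvec{\beta }\), \(\varvec{\Sigma }_u\), and \(\sigma ^2\), for example with a quasi-Newton optimizer.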

Observe that the coefficient of the quadratic term in \(\varvec{u}_i\) in the exponent is

and the coefficient of the linear term in \(\varvec{u}_i\) in the exponent is

Thus,

where

For multivariate models, let \(\varvec{\theta }_i=(\theta _{i11},\ldots ,\theta _{i1T},\ldots ,\theta _{iD1},\ldots ,\theta _{iDT})^t\), an \(n_iD \times 1\) vector, where \(n_i\) denotes the number of measurement waves for person i. The associative latent growth curve model then takes the same general form as in (1), but \(\varvec{X}_i\) becomes an \(n_iD \times Dp\) design matrix and \(\varvec{\beta } = (\beta _{01}, \beta _{02},\ldots , \beta _{0D}, \beta _{11}, \beta _{12},\ldots , \beta _{1D},\ldots , \beta _{(p-1)1},\ldots , \beta _{(p-1)D})^t\) is a \(Dp \times 1\) vector. \(\varvec{Z}_i\) is an \(n_iD \times Dk\) design matrix, assuming there are k random effects per dimension. In our simulation setting, \(n_i=4\) and \(p=k\); hence, \(\varvec{Z}_i\) takes the same form as \(\varvec{X}_i\).
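The row and column orderings above determine the sparsity pattern of \(\varvec{X}_i\). The sketch below builds it for a polynomial growth model, assuming (for illustration only) that the columns of the univariate design are \(1, t, \ldots , t^{p-1}\) evaluated at hypothetical time scores. Rows follow the ordering of \(\varvec{\theta }_i\) (all waves of dimension 1, then dimension 2, and so on) and columns follow the ordering of \(\varvec{\beta }\) (growth order varies slowest, dimension fastest). The function name `multivariate_design` is hypothetical.

```python
import numpy as np


def multivariate_design(times, D, p=2):
    """Build an (n_i*D) x (D*p) fixed-effects design matrix (illustrative sketch).

    times : time scores for person i's n_i waves (hypothetical coding)
    D     : number of latent dimensions
    p     : number of growth coefficients per dimension (2 = linear growth)
    """
    times = np.asarray(times, dtype=float)
    # Univariate design with columns 1, t, ..., t^(p-1).
    X_uni = np.vander(times, N=p, increasing=True)
    blocks = []
    for j in range(p):
        # For growth order j, dimension d's coefficient only touches dimension d's rows.
        blocks.append(np.kron(np.eye(D), X_uni[:, [j]]))
    # Stacking blocks by j gives column order (beta_01..beta_0D, beta_11..beta_1D, ...).
    return np.hstack(blocks)
```

With four waves and \(D=2\), `multivariate_design([0, 1, 2, 3], D=2)` returns an \(8 \times 4\) matrix; because \(p=k\) in the simulation setting, the same matrix would also serve as \(\varvec{Z}_i\).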

\(\varvec{u}_i\) is a \(Dk \times 1\) vector with covariance matrix \(\varvec{\Sigma }_u\), and \(\varvec{e}_i = (e_{i11},\ldots ,e_{i1T},\ldots ,e_{iD1},\ldots ,e_{iDT})^t\) is an \(n_iD \times 1\) vector of residuals. The covariance matrix of \(\varvec{e}_i\) is block diagonal, \(\begin{pmatrix} \varvec{\Sigma } & \varvec{0} & \cdots & \varvec{0} \\ \varvec{0} & \varvec{\Sigma } & \cdots & \varvec{0} \\ \vdots & \vdots & \ddots & \vdots \\ \varvec{0} & \varvec{0} & \cdots & \varvec{\Sigma } \end{pmatrix}_{n_iD \times n_iD}\), with \(n_i\) diagonal blocks, each equal to the \(D \times D\) matrix \(\varvec{\Sigma } = \mathrm{diag}(\sigma _1^2, \sigma _2^2,\ldots , \sigma _D^2)\). Then the marginal likelihood of the model parameters is

where

Appendix B: Computational Details of the EM Standard Error (MIRT)

A key component in computing the standard errors is the complete-data Fisher information matrix \({\varvec{I}}_{c} ({\hat{\varvec{\psi }}})\). Below, we present the specific forms of its components for the MIRT models; the results for the UIRT models follow as a special case. Assuming the Monte Carlo sampling version of the EM algorithm is used, i.e., Eq. (19), we have

The second derivatives with respect to the elements of the covariance matrix are

where \(x_{p}\) and \(x_{q}\) are any two elements of the covariance matrix \(\hat{\Sigma }_{u}\). For example, in the UIRT setup, to take the second derivative of the log-likelihood with respect to \(\tau _{00}\), we set \(x_{p}=x_{q}=\tau _{00}\) in (B4), so that \(\frac{\partial \hat{\Sigma }_{u}}{\partial \tau _{00}}=\begin{bmatrix} 1 & 0\\ 0 & 0 \end{bmatrix}\). All parameters in (B1)–(B4) are evaluated at their final estimates upon convergence. Hence, the complete-data Fisher information matrix takes the form

The information matrix can be obtained in the same way when the conditional expectation is available in closed form.
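The covariance-element derivatives discussed above can be illustrated with a small sketch. For illustration only, we assume that the part of the complete-data log-likelihood involving \(\hat{\varvec{\Sigma }}_u\) is \(\log N(\varvec{u}_i \mid \varvec{0}, \hat{\varvec{\Sigma }}_u)\) and that each element enters \(\hat{\varvec{\Sigma }}_u\) linearly, so second derivatives of \(\hat{\varvec{\Sigma }}_u\) itself vanish. Writing \(\varvec{D}_p = \partial \hat{\varvec{\Sigma }}_u/\partial x_p\) (our notation), the negative second derivative is \(-\tfrac{1}{2}\mathrm{tr}(\hat{\varvec{\Sigma }}_u^{-1}\varvec{D}_q\hat{\varvec{\Sigma }}_u^{-1}\varvec{D}_p) + \varvec{u}_i^t\hat{\varvec{\Sigma }}_u^{-1}\varvec{D}_q\hat{\varvec{\Sigma }}_u^{-1}\varvec{D}_p\hat{\varvec{\Sigma }}_u^{-1}\varvec{u}_i\), and a Monte Carlo version averages it over E-step draws of \(\varvec{u}_i\). This is the standard Gaussian result, not a transcription of (B1)–(B4); the function `cov_information` is hypothetical.

```python
import numpy as np


def cov_information(u_draws, Sigma_u, dSigma):
    """Monte Carlo complete-data information for covariance elements (sketch).

    u_draws : (M, k) array of draws of one person's random effects
              (assumed to come from a Monte Carlo E-step)
    Sigma_u : (k, k) covariance matrix at the converged estimate
    dSigma  : list of (k, k) matrices dSigma_u/dx_p, one per element x_p
    Returns the (P, P) matrix of minus the second derivatives of
    log N(u | 0, Sigma_u), averaged over the draws.
    """
    Sigma_u = np.asarray(Sigma_u, dtype=float)
    k = Sigma_u.shape[0]
    u = np.asarray(u_draws, dtype=float).reshape(-1, k)
    S_inv = np.linalg.inv(Sigma_u)
    P = len(dSigma)
    info = np.zeros((P, P))
    for p in range(P):
        Dp = np.asarray(dSigma[p], dtype=float)
        for q in range(P):
            Dq = np.asarray(dSigma[q], dtype=float)
            # From differentiating -0.5 * log|Sigma_u| twice.
            trace_term = 0.5 * np.trace(S_inv @ Dq @ S_inv @ Dp)
            # From differentiating -0.5 * u' Sigma_u^{-1} u twice.
            B = S_inv @ Dq @ S_inv @ Dp @ S_inv
            quad = np.mean(np.einsum("mi,ij,mj->m", u, B, u))
            info[p, q] = quad - trace_term
    return info
```

For the UIRT setup, `dSigma` would contain \(\begin{bmatrix} 1 & 0\\ 0 & 0 \end{bmatrix}\) for \(\tau _{00}\) and analogous matrices for the remaining elements, e.g., \(\begin{bmatrix} 0 & 1\\ 1 & 0 \end{bmatrix}\) for the off-diagonal covariance; summing the per-person results gives the covariance block of the complete-data information.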