Abstract

Diffusion tensor magnetic resonance imaging (DT-MRI) is a non-invasive imaging technique that allows the molecular self-diffusion tensors of water in tissue to be estimated. Due to the low signal-to-noise ratio of magnetic resonance images, reconstructed tensor images usually require some form of regularization in a post-processing step. Previous approaches are suboptimal with respect to either the reconstruction or the regularization step. This paper presents a Bayesian approach for the simultaneous reconstruction and regularization of DT-MR images that resolves these shortcomings. To this end, estimation-theoretic concepts are generalized to tensor-valued images, which are treated as Riemannian manifolds. This allows us to derive a maximum a posteriori estimator of the tensor image that accounts both for the statistical characteristics of the Rician noise in MR images and for the nonlinear structure of tensor-valued images. Experiments on synthetic as well as real DT-MRI data confirm the advantage of considering both the statistical and the geometric characteristics of DT-MRI.

1 Introduction

Diffusion tensor magnetic resonance imaging (DT-MRI) allows the estimation of diffusion tensor images. Each voxel contains a second-order tensor represented by a \(3 \times 3\) symmetric positive definite matrix. The technique is based on a physical model of water self-diffusion, the Stejskal–Tanner equation (Stejskal and Tanner 1965), which relates the observed DT-MRI data to the diffusion tensor image. In this paper we generalize estimation-theoretic concepts from Euclidean space to non-Euclidean spaces, namely the Riemannian manifold of diffusion tensor images. This allows us to estimate diffusion tensor images from DT-MRI data in a way that respects the specific geometry of diffusion tensor images and accounts for the statistical properties of DT-MRI data.

To this end, a likelihood function based on the statistical properties of the DT-MRI data and the Stejskal–Tanner equation is derived. We examine prior distributions and relate them to their deterministic counterparts. In particular, we generalize the concept of anisotropic diffusion filtering from gray-scale images to diffusion tensor images within the Riemannian framework. This paper closes with experimental evaluations of our framework.

1.1 Related Work

Our approach combines the reconstruction with the regularization of diffusion tensor images. Consequently, it is related to both lines of research, each of which has been actively pursued since the seminal work of Bihan et al. (1986, 2001).

Perhaps the most common way to reconstruct diffusion tensors is to combine a linearized version of the Stejskal–Tanner equation (1) with a least squares estimator (cf. Bihan et al. 2001). For a least squares approach to yield a consistent and efficient estimator, the image noise must be independent and identically distributed (i.i.d.) Gaussian. For high signal-to-noise ratios, the Gaussian assumption on the image noise has been shown to hold (Gudbjartsson and Patz 1995). However, the i.i.d. assumption does not hold for the linearized Stejskal–Tanner equation, since taking the logarithm of the signals makes the noise variance depend on the signal intensity. Moreover, the linear estimator of Bihan et al. (2001) does not account for the nonlinear structure of the space of diffusion tensors and may therefore yield physically meaningless, non-positive definite diffusion tensors.

Other reconstruction methods account for Rician noise and/or positive definiteness. Andersson (2008) proposed a Bayesian estimator for diffusion tensor images that includes a Rician noise model. Landman et al. (2007a) proposed a maximum likelihood estimator based on the Rician noise model, and a robust variant that copes with observations deviating from the Rician model was proposed in Landman et al. (2007b). Cox and Glen (2006) enforce positive definiteness but do not consider Rician noise. None of these methods (Cox and Glen 2006; Landman et al. 2007a; Andersson 2008) accounts for the nonlinear structure of diffusion tensor images. A Riemannian approach respecting this nonlinear structure was proposed by Lenglet et al. (2006); however, it does not model Rician noise. Neither Landman et al. (2007a) nor Lenglet et al. (2006) incorporates any denoising or regularization strategy.

Besides the reconstruction of diffusion tensor images from DT-MRI data, numerous methods for denoising and regularizing diffusion tensor images have been proposed. These methods can be classified into those based on the Euclidean metric (Tschumperlé and Deriche 2001; Martin-Fernandez et al. 2003; Weickert and Brox 2002; Coulon et al. 2001; Feddern et al. 2006; Burgeth et al. 2007, 2009) and those based on the Riemannian metric (Gur and Sochen 2005; Moakher 2005; Fletcher and Joshi 2004, 2007; Lenglet et al. 2005, 2006; Batchelor et al. 2005; Fillard et al. 2005; Pennec et al. 2006; Castano-Moraga et al. 2007; Zéraï and Moakher 2007a; Gur et al. 2007, 2009, 2012). Methods using the Euclidean metric consider the diffusion tensor image to be embedded in the space of symmetric matrix-valued images, which constitutes a vector space. Distances between tensors are computed with respect to the Euclidean metric of the space of symmetric matrices. To keep tensors positive definite, they are projected back onto the manifold of positive definite tensors (Tschumperlé and Deriche 2001), only positive definite tensors are accepted within stochastic sampling steps (Martin-Fernandez et al. 2003), additional constraints are incorporated (Tschumperlé and Deriche 2002), or image processing is restricted to operations that preserve positive definiteness, e.g. convex filters (Weickert and Brox 2002; Westin and Knutsson 2003; Krajsek and Mester 2006; Burgeth et al. 2007). Although such approaches keep the tensors positive definite, the Euclidean metric turns out to be less appropriate for regularizing diffusion tensor images because averaging inflates the tensors. This is known as the eigenvalue swelling effect and can be avoided by using the Riemannian metric (Chefd’hotel et al. 2004; Pennec et al. 2006; Castano-Moraga et al. 2007).

Riemannian approaches consider diffusion tensor images as a Riemannian manifold equipped with a metric on the tangent bundle that is invariant under affine transformations. For instance, Fillard et al. (2005) and Pennec et al. (2006) proposed a ‘Riemannian framework for tensor computing’ which generalizes several well-established image processing techniques originally developed for gray-scale images, including interpolation, restoration and nonlinear isotropic diffusion filtering, to diffusion tensor images. The framework is based on the matrix representation of diffusion tensors and relies heavily on computationally costly matrix operations such as the exponential and logarithmic maps. A computationally more efficient alternative to the Riemannian framework of Pennec et al. (2006) is based on the so-called log-Euclidean metric (Arsigny et al. 2005, 2006; Fillard et al. 2007). The log-Euclidean metric is not invariant under affine coordinate transformations and consequently depends on the position of the origin of the coordinate system. Zéraï and Moakher (2007a) and Gur et al. (2007, 2009, 2012) propose Riemannian regularization approaches that are based on local coordinates and are therefore less computationally demanding than the matrix representation of Fillard et al. (2005) and Pennec et al. (2006).

In principle, Euclidean denoising and regularization approaches are compatible with the reconstruction approach of Landman et al. (2007a), i.e. they can be combined with the Rician noise model to obtain a simultaneous reconstruction and denoising method, but they generally suffer from the eigenvalue swelling effect. Riemannian denoising and regularization approaches, on the other hand, do not exhibit the eigenvalue swelling effect and are compatible with the Riemannian reconstruction approach proposed by Lenglet et al. (2006). However, as we will show in this paper, such a reconstruction scheme is biased towards smaller diffusion tensors because it does not account for the Rician distributed noise in the DT-MRI data.

In summary, the literature on diffusion tensor image reconstruction contains several approaches that consider either the Rician noise in DT-MRI data or the Riemannian geometry of diffusion tensor images. However, no method published so far simultaneously

- (a) assures positive definiteness,
- (b) considers the Riemannian geometry of diffusion tensor images,
- (c) considers Rician noise, and
- (d) provides a Bayesian estimator with error bounds.

All previous approaches fail to provide at least two of the points (a)–(d). For instance, the method proposed in Andersson (2008) covers (a) and (c) but not (b) and (d). The approach proposed in Lenglet et al. (2006) does not provide (c), which leads to biased estimates, and does not cover (d).

1.2 Own Contribution

We present a Riemannian approach to Bayesian estimation of diffusion tensor images from DT-MRI data that covers (a) to (d). To this end, we derive a Bayesian estimation framework that considers both the statistical characteristics of the measured DT-MRI data and the specific Riemannian geometry of diffusion tensor images. To the best of our knowledge, only classical statistical (frequentist) methods or deterministic regularization approaches have so far been generalized to diffusion tensor images within the Riemannian framework; this Bayesian framework is therefore new.

In a first step, we reformulate estimation-theoretic concepts, e.g. the Bayesian risk, from Euclidean spaces to the Riemannian space of diffusion tensor images in Sect. 5. In Sect. 6 we derive a likelihood model for diffusion tensor images that accounts for the Rice distribution. In Sect. 7.2 we generalize the regularization taxonomy for gray-scale images to diffusion tensor images, which is needed for the covariance estimator in Sect. 9. To this end, we relate known regularization schemes to their Markov random field (MRF) counterparts and also derive new ones that have not been considered in the literature so far, namely linear and nonlinear anisotropic regularization schemes. In Sect. 7.1.1 we derive the mixed second-order derivatives needed for anisotropic regularization. In Sect. 7.1.3 we derive discrete approximations of the continuous regularization schemes and demonstrate their stability in numerical experiments in Sect. 10.1.1. Our approach is based on the matrix representation introduced by Fletcher and Joshi (2004, 2007), Fillard et al. (2005) and Pennec et al. (2006). A major drawback of the matrix representation is its high computational cost due to the heavy use of matrix operations. In Sect. 7.1.4 we introduce an analytical computation of these matrix functions that yields a considerable speedup compared to the commonly used numerical computations. In Sect. 7.1.2 we relate the matrix representation of the diffusion tensor used here to the local coordinate representation used in e.g. Zéraï and Moakher (2007a) and Gur et al. (2007), and show that numerically cumbersome Christoffel symbols can be avoided within our approach. In addition to the maximum a posteriori (MAP) estimator, we derive in Sect. 9 an estimator for the covariance matrix of the posterior probability distribution.

Parts of this paper have already been presented at two conferences (Krajsek et al. 2008, 2009). Beyond that work, the current paper introduces (1) a speedup of the matrix operations via analytic matrix functions, (2) new discretization schemes that are more stable than those presented in Krajsek et al. (2008), (3) a robust likelihood function to cope with noise statistics beyond the Rice distribution, (4) a regularization taxonomy generalizing Euclidean approaches to Riemannian ones, and (5) new experiments evaluating the new framework in detail.

In addition to the above-mentioned contributions, Sects. 2–4 introduce diffusion tensor imaging, Riemannian manifolds, and the treatment of diffusion tensors as Riemannian manifolds, in order to fix the notation and make the paper more self-contained.

2 Diffusion Tensor Imaging

In this section we give a brief overview of the physical diffusion process of water molecules in biological tissue and of how it can be measured with an NMR scanner. A detailed introduction to diffusion tensor imaging (DTI) is beyond the scope of this contribution; we refer the interested reader to the review by Bihan et al. (2001).

DTI is a variant of magnetic resonance imaging (MRI) that allows the tensor of water self-diffusion to be measured. Basic characteristics of diffusion tensors, such as their trace or fractional anisotropy (FA), have been shown to be valuable indicators in medical diagnosis and therapy (Müller et al. 2007; Alexander et al. 2007); for example, they are used to delineate infarcted tissue from healthy brain tissue (Edlow et al. 2016). A precise estimate of the diffusion tensors is therefore crucial for reliable diagnostics in these cases. However, the clinical application of our method is beyond the scope of this paper.

Self-diffusion of water originates from thermally induced Brownian motion and takes place irrespective of the concentration gradient of the water molecules. The diffusion can be described by the diffusion tensor, a symmetric positive definite \(3 \times 3\) matrix. The eigenvalues of the diffusion tensor encode the amount of diffusion along the principal directions given by the corresponding eigenvectors. The most common model for estimating the diffusion tensor \(\varSigma _k\) at spatial position \(x_k\) is the Stejskal–Tanner equation (Stejskal and Tanner 1965)

\[ A_{j k} = A_{0 k} \exp \left( -b_j\, g_j^T \varSigma _k\, g_j \right) , \qquad j=1,\ldots ,L, \quad k=1,\ldots ,N, \qquad (1) \]

where N denotes the number of pixels. It relates the diffusion tensor \(\varSigma _k\) to the diffusion weighted (DW) image values \(A_{j k}\), the reference signal \(A_{0 k}\), the so-called ‘b-value’ \(b_j\), a scalar that combines a few material constants and experimental parameters, and the L unit vectors \(g_j \in {\mathbb {R}}^3, \Vert g_j\Vert =1\) indicating the directions of diffusion encoding. The ‘b-values’ and the diffusion encoding directions \(g_j\) are usually fixed by the experimental design. Measuring the DW image values for different ‘b-values’ and different diffusion encoding directions thus allows the diffusion tensor components to be estimated by means of the Stejskal–Tanner equation (1). Since a symmetric \(3 \times 3\) tensor has six independent components, six or more signals per image pixel measured with non-collinear \(g_j\) vectors can be used to estimate the diffusion tensor by minimizing a cost functional of the residuals of the corresponding Stejskal–Tanner equations.
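The forward model (1) and the log-linear least squares baseline discussed in Sect. 1.1 can be sketched in NumPy. This is a minimal illustration, not the estimator developed in this paper: the function names `stejskal_tanner`, `add_rician_noise` and `linear_ls_tensor` are hypothetical, the tensor is parameterized by its six unique entries, and the encoding directions, b-value and tensor values are illustrative assumptions. Rician noise is modeled, as usual, as the magnitude of the signal plus complex Gaussian noise.

```python
import numpy as np


def stejskal_tanner(A0, D, bvals, gdirs):
    """Noise-free DW signals A_j = A0 * exp(-b_j g_j^T D g_j) for one voxel, Eq. (1)."""
    quad = np.einsum("ji,ik,jk->j", gdirs, D, gdirs)  # g_j^T D g_j for every j
    return A0 * np.exp(-bvals * quad)


def add_rician_noise(A, sigma, rng):
    """Magnitude of the signal corrupted by complex Gaussian noise (Rician model)."""
    real = A + sigma * rng.standard_normal(A.shape)
    imag = sigma * rng.standard_normal(A.shape)
    return np.hypot(real, imag)


def linear_ls_tensor(A, A0, bvals, gdirs):
    """Log-linear least squares: log(A_j / A0) = -b_j g_j^T D g_j is linear in D."""
    gx, gy, gz = gdirs.T
    # Row j holds -b_j * (gx^2, gy^2, gz^2, 2 gx gy, 2 gx gz, 2 gy gz)
    X = -bvals[:, None] * np.stack(
        [gx * gx, gy * gy, gz * gz, 2 * gx * gy, 2 * gx * gz, 2 * gy * gz], axis=1
    )
    d, *_ = np.linalg.lstsq(X, np.log(A / A0), rcond=None)
    dxx, dyy, dzz, dxy, dxz, dyz = d
    return np.array([[dxx, dxy, dxz], [dxy, dyy, dyz], [dxz, dyz, dzz]])


# Six non-collinear encoding directions and a single b-value (illustrative values)
s = 1 / np.sqrt(2)
gdirs = np.array([[1, 0, 0], [0, 1, 0], [0, 0, 1], [s, s, 0], [s, 0, s], [0, s, s]])
bvals = np.full(6, 1000.0)
D_true = np.array([[1.7e-3, 1e-4, 0.0], [1e-4, 4e-4, 0.0], [0.0, 0.0, 3e-4]])

A = stejskal_tanner(1.0, D_true, bvals, gdirs)
D_hat = linear_ls_tensor(A, 1.0, bvals, gdirs)  # exact for noise-free data
A_noisy = add_rician_noise(A, 0.05, np.random.default_rng(0))
D_noisy = linear_ls_tensor(A_noisy, 1.0, bvals, gdirs)  # not guaranteed positive definite
```

For noise-free data the six equations are solved exactly. With noise, nothing in `linear_ls_tensor` keeps the estimate positive definite, and the logarithm alters the noise statistics; these are the shortcomings noted in Sect. 1.1.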

3 Riemannian Manifolds

This section gives a short introduction to Riemannian manifolds (cf. Helgason 1978). A manifold \({\mathcal {M}}\) is an abstract mathematical space that locally looks like Euclidean space. A typical example of a 2D manifold is the sphere \({\mathcal {S}}^2\) embedded in 3D Euclidean space. According to Whitney’s embedding theorem (Whitney 1944; Cohen 1985), any smooth n-dimensional manifold can be embedded into the 2n-dimensional Euclidean space. Thus, each manifold can be represented by a surface in a higher-dimensional Euclidean space, which is referred to as the extrinsic view. Apart from the extrinsic view, we can describe an n-dimensional manifold locally by the n-dimensional real space \({\mathbb {R}}^n\), which is referred to as the intrinsic view. A local chart \((\vartheta ,U)\) is an open subset \(U \subseteq {\mathcal {M}}\) of the manifold together with a one-to-one map \(\vartheta :U\rightarrow {\mathbb {R}}^n\) from this open subset to an open set of the Euclidean space. The image \(\vartheta (p) \in {\mathbb {R}}^n\) of a point \(p \in {\mathcal {M}}\) is called its local coordinates. These local one-to-one mappings to the Euclidean space allow concepts developed for Euclidean space to be generalized to manifolds. For instance, a function \(f: {\mathcal {M}}\rightarrow {\mathbb {R}}\) defined on the manifold is called differentiable at a point \(p\in {\mathcal {M}}\) if the function \(f_{\vartheta }:=f \circ \vartheta ^{-1}\) is differentiable at \(\vartheta (p)\). At each point \(p \in {\mathcal {M}}\) we can attach a tangent space \(T_p{\mathcal {M}}\) that contains all directions in which one can pass through p.
More precisely, let \(\gamma (t): {\mathbb {R}}\rightarrow {\mathcal {M}}\) denote a continuously differentiable curve on the manifold passing through \(\gamma (0)=p \in {\mathcal {M}}\), and let \(\gamma _\vartheta (t)=\vartheta \circ \gamma (t)\) be its representation in a local chart \((\vartheta ,U)\). A tangent vector is then represented by the instantaneous velocity \(\overrightarrow{pv}_\vartheta :=\left. \partial _{t}\gamma _\vartheta (t)\right| _{t=0}\) of the curve, and the velocity vectors of all possible curves constitute the tangent space at p. The tangent vector itself does not depend on the chosen local chart and is denoted by \(\overrightarrow{p v} \in T_p{\mathcal {M}}\). The set of all tangent spaces of the manifold is called the tangent bundle. A Riemannian manifold has additional structure that allows distances between points on the manifold to be defined: each tangent space is equipped with an inner product

\[ \langle \overrightarrow{px}, \overrightarrow{py} \rangle _p = \overrightarrow{px}_\vartheta ^T\, G_p^\vartheta \, \overrightarrow{py}_\vartheta \qquad (2) \]

defined by the Riemannian metric \(G_p: T_p{\mathcal {M}}\times T_p{\mathcal {M}} \rightarrow {\mathbb {R}}\) (with matrix representation \(G_p^\vartheta \) in the local chart), which varies smoothly from point to point on the manifold. The inner product induces the norm \(||\overrightarrow{px}||_p=\sqrt{\langle \overrightarrow{px}, \overrightarrow{px} \rangle _p }\) on the tangent space \(T_p{\mathcal {M}}\). The length \(\mathcal {L}_{q_1}^{q_2}(\gamma )\) of the curve \(\gamma (t)\) between two points \(q_1=\gamma (a)\) and \(q_2=\gamma (b)\) is then naturally given by integrating the norm of the instantaneous velocity \(\dot{\gamma _\vartheta }(t):=\partial _t \gamma _\vartheta (t)\) along the curve

\[ \mathcal {L}_{q_1}^{q_2}(\gamma ) = \int _a^b \Vert \dot{\gamma _\vartheta }(t) \Vert _{\gamma (t)}\, dt = \int _a^b \sqrt{\dot{\gamma _\vartheta }(t)^T\, G_{\gamma (t)}^\vartheta \, \dot{\gamma _\vartheta }(t)}\, dt . \]

The distance \(\text{ dist }(q_1,q_2)\) between two points \(q_1,q_2 \in {\mathcal {M}}\) is defined as the infimum of the lengths of all curves connecting them. A locally shortest path between two points is called a geodesic \(\gamma ^g\). The Riemannian metric is intrinsic: it does not make use of any space in which the manifold might be embedded and allows distances on the manifold to be computed without the extrinsic view. Important tools for working on manifolds are the Riemannian exponential and logarithmic maps. The exponential map \(\exp _p: T_p{\mathcal {M}} \rightarrow {\mathcal {M}}\) maps the tangent space \(T_p{\mathcal {M}}\) to the manifold \({\mathcal {M}}\). It maps the tangent vector \(\overrightarrow{px}\) to the point \(\exp _p(\overrightarrow{px})=x\) of the manifold that is reached at time one by the geodesic starting at p with initial velocity \(\overrightarrow{px}\), i.e. \(x=\gamma ^g(1)\) with \(p=\gamma ^g(0)\). The manifold of positive definite tensors considered in this paper carries additional structure: it is a so-called homogeneous space with non-positive curvature, from which it follows that the exponential map is one-to-one (Helgason 1978). In particular, its inverse, the logarithmic map \(\log _p(x)=\overrightarrow{px}\), exists.

Minimizing a function f defined on the manifold may require the computation of its gradient \(\nabla f\). Let \(\gamma (t)\) denote a curve in \({\mathcal {M}}\) passing through p at time \(t=0\) with corresponding tangent vector \(\overrightarrow{px}\). Furthermore, assume that the directional derivative

\[ \partial _{\overrightarrow{px}} f := \left. \partial _t f(\gamma (t)) \right| _{t=0} \]

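exists for all tangent vectors \(\overrightarrow{px} \in T_p{\mathcal {M}}\). The gradient \(\nabla f\) at point p and its representation \(\nabla f_{\vartheta }\) in the chart \((\vartheta ,U)\) are then uniquely defined by the relation

\[ \langle \nabla f, \overrightarrow{px} \rangle _p = \partial _{\overrightarrow{px}} f \quad \text{ for all } \overrightarrow{px} \in T_p{\mathcal {M}}. \qquad (5) \]
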
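Applying the chain rule, we can rewrite the directional derivative as

\[ \partial _{\overrightarrow{px}} f = \sum _{j=1}^{n} \partial _j f_{\vartheta }\, \left. \partial _t \gamma _{\vartheta _j}(t)\right| _{t=0} = \left( \nabla \!_{\perp }f_{\vartheta }\right) ^T \overrightarrow{px}_\vartheta , \qquad (6) \]
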
where \(\gamma _{\vartheta _j}(t)=\vartheta _j \circ \gamma (t)\) denotes the j-th coordinate in the chart \(\vartheta \) and we introduce the abbreviation \(\nabla \!_{\perp }f_{\vartheta }:=(\partial _1 f_{\vartheta } ,\ldots ,\partial _n f_{\vartheta })^T\). Since (5) holds for every tangent vector, comparing (5), written in the chart by means of (2), with (6) allows us to express the gradient in a local chart by means of the partial derivatives and the inverse metric tensor

\[ \nabla f_{\vartheta } = \left( G_p^\vartheta \right) ^{-1} \nabla \!_{\perp }f_{\vartheta }. \qquad (7) \]

Since the gradient points in the direction of steepest ascent of the function, it can be used to design a gradient descent scheme, which is discussed in Sect. 4.3.
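As a one-dimensional illustration of the curve length and of the gradient formula (7), consider the positive reals \({\mathcal {P}}(1)=(0,\infty )\) with the metric \(G_\sigma =\sigma ^{-2}\), which is the \(n=1\) case of the affine invariant metric of Sect. 4.2. For a curve \(\gamma \) from \(\sigma _1=\gamma (a)\) to \(\sigma _2=\gamma (b)\) and a differentiable function f one obtains

\[ \mathcal {L}_{\sigma _1}^{\sigma _2}(\gamma ) = \int _a^b \frac{|\dot{\gamma }(t)|}{\gamma (t)}\, dt \;\ge \; \left| \int _a^b \frac{\dot{\gamma }(t)}{\gamma (t)}\, dt \right| = \left| \log \sigma _2 - \log \sigma _1 \right| , \qquad \nabla f(\sigma ) = G_\sigma ^{-1} f'(\sigma ) = \sigma ^2 f'(\sigma ). \]

Equality holds for monotone curves, so \(\text{ dist }(\sigma _1,\sigma _2)=|\log (\sigma _2/\sigma _1)|\), and the boundary \(\sigma \rightarrow 0\) lies at infinite distance from every point.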

4 Diffusion Tensor Riemannian Manifolds

A diffusion tensor image contains at each pixel (or voxel, in the case of a three-dimensional image domain) position x a symmetric positive definite \(n \times n\) matrix (also called a tensor in the following). Mathematically, such an image can be described by a tensor-valued function \(f: \Omega \rightarrow {\mathcal {P}}(n)\) from the image domain \(\Omega \subset {\mathbb {R}}^m\) (usually \(m=2\) or \(m=3\)) into the space of \(n \times n\) positive definite tensors \({\mathcal {P}}(n):=Sym^{+}(n,{\mathbb {R}})=\{A \in {\mathbb {R}}^{n \times n}|A^T=A, A \succ 0 \}\), where the symbol \(\succ \) denotes positive definiteness. For a discrete image domain we consider one tensor at each spatial position. Such an image can be described by a point in the N-fold Cartesian product \({\mathcal {P}}^N(n):={\mathcal {P}}_1(n) \times {\mathcal {P}}_2(n)\times \cdots \times {\mathcal {P}}_N(n)\) of the individual tensor manifolds at the N grid points. Independently of whether the image is modeled as continuous or discrete, image processing techniques such as denoising or interpolation need some mechanism to compare image values at different spatial positions, which can be provided by a metric on the space of tensors.

4.1 The Euclidean Metric of \({\mathcal {P}}(n)\)

The space of positive definite tensors can be considered as a manifold embedded in the vector space of symmetric matrices \(Sym(n,{\mathbb {R}})=\{A \in {\mathbb {R}}^{n \times n}|A^T=A \}\). Equipped with the Frobenius norm \(||A||_F=\sqrt{\text{ trace }\left( A^T A \right) }\) (Golub and Loan 1996), the space \(Sym(n,{\mathbb {R}})\) can be isometrically embedded into the \(n^2\)-dimensional Euclidean space with the usual Euclidean metric (\(L_2\) norm): the distance-preserving linear map \(\text{ Vec }: Sym(n,{\mathbb {R}})\rightarrow {\mathbb {R}}^{n^2}\), commonly called vectorization, stacks the columns (or alternatively the rows) of a matrix \(A \in Sym(n,{\mathbb {R}})\) on top of one another, yielding the \(n^2\)-dimensional column vector \(\text{ Vec }(A)=(A_{11},\ldots ,A_{n 1},A_{12},\ldots ,A_{n n})^T\), where \(A_{i j}\) denote the elements of A. Due to the redundancy of symmetric tensors, \(Sym(n,{\mathbb {R}})\) is even isometric to the \(\frac{n(n+1)}{2}\)-dimensional Euclidean space, which can be obtained by a projection \(v:{\mathbb {R}}^{n^2}\rightarrow {\mathbb {R}}^{\frac{n(n+1)}{2}}\) with a \(\frac{n(n+1)}{2} \times n^2 \) projection matrix P

\[ v(A) := P\, \text{ Vec }(A) = \left( A_{11},\ldots ,A_{n n},\sqrt{2}A_{12},\ldots ,\sqrt{2}A_{(n-1) n}\right) ^T . \qquad (8) \]

The factor \(\sqrt{2}\) accounts for the redundancy of the off-diagonal elements, such that the Frobenius norm in matrix notation corresponds to the canonical inner product in the vector representation, i.e. \(||A||_{F}^2=(P \text{ Vec }(A))^T P \text{ Vec }(A)\).

The inverse mapping \(v^{-1}:{\mathbb {R}}^{\frac{n(n+1)}{2}}~\rightarrow ~{\mathbb {R}}^{n^2}\) from the reduced Euclidean representation v(A) to the vectorized matrix representation is given by the pseudo inverse \(P^{\dagger }\) of the projection matrix.
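The map v, the projection matrix P and the pseudo-inverse can be checked numerically. The sketch below assumes one particular ordering of the components (diagonal entries first, then the upper off-diagonal entries row by row), which the text leaves open; the function names are hypothetical. Each off-diagonal row of P averages \(A_{ij}\) and \(A_{ji}\) with weight \(1/\sqrt{2}\); with this choice the rows of P are orthonormal, so the pseudo-inverse \(P^{\dagger }\) is simply \(P^T\).

```python
import numpy as np


def sym_to_vec(A):
    """v(A): diagonal entries, then sqrt(2)-scaled upper off-diagonal entries."""
    iu = np.triu_indices(A.shape[0], k=1)
    return np.concatenate([np.diag(A), np.sqrt(2.0) * A[iu]])


def projection_matrix(n):
    """P with v(A) = P @ Vec(A), where Vec stacks the columns (order='F')."""
    pairs = [(i, i) for i in range(n)] + list(zip(*np.triu_indices(n, k=1)))
    P = np.zeros((len(pairs), n * n))
    for r, (i, j) in enumerate(pairs):
        if i == j:
            P[r, i + n * j] = 1.0
        else:
            # (A_ij + A_ji) / sqrt(2) = sqrt(2) * A_ij for symmetric A
            P[r, i + n * j] = P[r, j + n * i] = 1.0 / np.sqrt(2.0)
    return P


rng = np.random.default_rng(1)
B = rng.standard_normal((3, 3))
A = B + B.T                    # a symmetric test matrix
P = projection_matrix(3)
vec_A = A.flatten(order="F")   # Vec(A): columns stacked
v_A = P @ vec_A

# The Frobenius norm equals the Euclidean norm of v(A)
print(np.isclose(v_A @ v_A, np.linalg.norm(A, "fro") ** 2))  # True
# The pseudo-inverse maps v(A) back to Vec(A)
print(np.allclose(np.linalg.pinv(P) @ v_A, vec_A))  # True
```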

The space of positive definite tensors \({\mathcal {P}}(n)\) lies within the vector space of symmetric tensors, i.e. \(Sym^{+}(n,{\mathbb {R}}) \subset Sym(n,{\mathbb {R}})\). However, \(Sym^{+}(n,{\mathbb {R}})\) does not form a vector space; under the map v it becomes an open cone in \({\mathbb {R}}^{\frac{n(n+1)}{2}}\), which will be denoted by \({\mathcal {D}}\). Restricting the Euclidean metric of \(Sym(n,{\mathbb {R}})\) to \({\mathcal {D}}\) corresponds to an extrinsic view, i.e. distances are measured in the Euclidean space \(Sym(n,{\mathbb {R}})\) in which the manifold is embedded.

Fig. 1 Illustration of \({\mathcal {P}}(2)\) embedded in 3D Euclidean space. The factor \(\sqrt{2}\) of the off-diagonal term is neglected for visualization purposes. The space \({\mathcal {P}}(2)\) is the interior of a cone. The plot shows surfaces in \({\mathcal {P}}(2)\) on which tensors have the same determinant. The solid line shows the shortest connection between two points of \({\mathcal {P}}(2)\) with respect to the flat Euclidean metric. The dotted curve illustrates the corresponding geodesic with respect to the affine invariant metric

An argument against applying the extrinsic view to diffusion tensors is illustrated in Fig. 1, which shows the space \({\mathcal {P}}(2)\) as the interior of a cone embedded in 3D Euclidean space. The factor \(\sqrt{2}\) of the off-diagonal term has been neglected for visualization purposes, i.e. each axis shows the value of the corresponding matrix entry. The iso-surfaces of different colors depict surfaces of constant tensor determinant. The determinant is directly related to the size of the tensor: it is the product of the eigenvalues, and each eigenvalue determines the length of the corresponding principal axis. In DTI, diffusion tensors encode the average thermally induced Brownian motion of water molecules within some local area. This encoded average amount of motion should not be changed by image processing operations applied to the diffusion tensor image, but the Euclidean metric does not fulfill this requirement. The straight line in Fig. 1 connects two tensors having the same determinant. Since the average of two points in a metric space lies on the geodesic between them (Helgason 1978), which for the Euclidean metric is this straight line, the Euclidean average of both tensors lies on the line above the iso-surface of the two original tensors. Consequently, the determinant increases through averaging. This phenomenon is known as the eigenvalue swelling effect (Tschumperlé and Deriche 2001; Chefd’hotel et al. 2004; Pennec et al. 2006; Castano-Moraga et al. 2007).
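The eigenvalue swelling effect can be reproduced with two \(2 \times 2\) tensors of equal determinant. The sketch below (hypothetical helper names, illustrative tensors) compares the Euclidean mean with the midpoint of the affine invariant geodesic, \(\varSigma _1^{1/2}(\varSigma _1^{-1/2}\varSigma _2\varSigma _1^{-1/2})^{1/2}\varSigma _1^{1/2}\); this closed form is standard in the affine invariant literature but is not derived in the text, so treat it as an assumption here.

```python
import numpy as np


def spd_power(S, p):
    """S^p for a symmetric positive definite S via its eigendecomposition."""
    w, U = np.linalg.eigh(S)
    return (U * w**p) @ U.T


def euclidean_mean(S1, S2):
    return 0.5 * (S1 + S2)


def affine_invariant_midpoint(S1, S2):
    """Midpoint of the affine invariant geodesic between S1 and S2."""
    R, Ri = spd_power(S1, 0.5), spd_power(S1, -0.5)
    M = Ri @ S2 @ Ri
    M = 0.5 * (M + M.T)  # remove round-off asymmetry before eigh
    return R @ spd_power(M, 0.5) @ R


S1 = np.diag([4.0, 0.25])  # elongated along x, det = 1
S2 = np.diag([0.25, 4.0])  # elongated along y, det = 1

print(np.linalg.det(euclidean_mean(S1, S2)))            # ≈ 4.515625: swollen
print(np.linalg.det(affine_invariant_midpoint(S1, S2)))  # ≈ 1.0: preserved
```

In general the geodesic midpoint has determinant \(\sqrt{\det \varSigma _1 \det \varSigma _2}\), so averaging two tensors of equal size leaves the size unchanged.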

4.2 The Affine Invariant Metric of \({\mathcal {P}}(n)\)

As an alternative to the extrinsic view, we can consider the space of positive definite tensors \({\mathcal {P}}(n)\) as a Riemannian manifold on which distances are defined by its intrinsic metric. At each position \(\varSigma \in {\mathcal {P}}(n)\), a tangent space \(T_{\varSigma }{\mathcal {P}}(n)\) is attached and equipped with an inner product

\[ \langle \cdot ,\cdot \rangle _{\varSigma }: T_{\varSigma }{\mathcal {P}}(n) \times T_{\varSigma }{\mathcal {P}}(n) \rightarrow {\mathbb {R}} \]

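that varies smoothly from point to point, inducing a metric on \({\mathcal {P}}(n)\). A desirable property of such a metric is affine invariance, i.e. distances remain unchanged when all tensors are transformed as \(\varSigma \mapsto W \varSigma W^T\) by an invertible matrix W. On \({\mathcal {P}}(n)\), such a metric has been shown to be induced by the inner product

\[ \langle \varLambda _1, \varLambda _2 \rangle _{\varSigma } = \text{ trace }\left( \varSigma ^{-\frac{1}{2}} \varLambda _1 \varSigma ^{-1} \varLambda _2 \varSigma ^{-\frac{1}{2}} \right) , \qquad (11) \]
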
where the matrix square root of a symmetric positive definite matrix is obtained by taking the square roots of its eigenvalues while keeping its eigenvectors. This affine invariant inner product and the corresponding metric have been motivated by information-geometric arguments (Rao 1945), which treat the space of probability distributions as a Riemannian manifold with the Fisher information matrix as the appropriate metric. For multivariate normal distributions with fixed mean, the Fisher information metric reduces to the affine invariant metric (Atkinson and Mitchell 1981; Skovgaard 1981; Lenglet et al. 2006). The metric properties of (11) have been examined in Förstner and Moonen (1999). Originally developed as a distance measure between normal distributions with fixed mean, the affine invariant metric has been used extensively for tensor-valued data (Fletcher and Joshi 2004; Lenglet et al. 2005, 2006; Fillard et al. 2005; Pennec et al. 2006; Zéraï and Moakher 2007a).
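The affine invariance of (11) can be checked numerically. The sketch below uses hypothetical helper names; `act(W, X)` implements the action \(W * X = W X W^T\) used in Sect. 4.3, and the base point, tangent vectors and transformation W are arbitrary random test data (W is assumed invertible, which holds almost surely for a random matrix).

```python
import numpy as np


def spd_power(S, p):
    """S^p for a symmetric positive definite S via its eigendecomposition."""
    w, U = np.linalg.eigh(S)
    return (U * w**p) @ U.T


def ai_inner(Sigma, L1, L2):
    """Affine invariant inner product (11) of tangent vectors L1, L2 at Sigma."""
    Ri = spd_power(Sigma, -0.5)
    return np.trace(Ri @ L1 @ spd_power(Sigma, -1.0) @ L2 @ Ri)


def act(W, X):
    """Affine action W * X := W X W^T on tensors and tangent vectors."""
    return W @ X @ W.T


def random_sym(rng, n):
    B = rng.standard_normal((n, n))
    return B + B.T


rng = np.random.default_rng(2)
B = rng.standard_normal((3, 3))
Sigma = B @ B.T + np.eye(3)                        # positive definite base point
L1, L2 = random_sym(rng, 3), random_sym(rng, 3)    # symmetric tangent vectors
W = np.eye(3) + 0.5 * rng.standard_normal((3, 3))  # assumed invertible

lhs = ai_inner(act(W, Sigma), act(W, L1), act(W, L2))
rhs = ai_inner(Sigma, L1, L2)
print(np.isclose(lhs, rhs))
```

Replacing `ai_inner` by the Frobenius inner product `np.trace(L1 @ L2)` generically breaks this equality, which is the practical difference between the two metrics.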

4.3 The Geodesic Marching Scheme

Since our diffusion tensor image reconstruction approach is formulated as an energy minimization problem, an optimization method is required. The geodesic marching scheme (GMS) (Pennec 1999, 2006) generalizes the classical gradient descent approach to Riemannian manifolds. Its main components are the exponential and logarithmic maps. Besides its theoretical justification, e.g. independence of the chosen coordinate system, the affine invariant metric admits closed-form expressions for the Riemannian exponential map

\[ \exp _{\varSigma }(\varLambda ) = \varSigma ^{\frac{1}{2}} \exp \left( \varSigma ^{-\frac{1}{2}} \varLambda \varSigma ^{-\frac{1}{2}} \right) \varSigma ^{\frac{1}{2}} \qquad (12) \]

with \( \varLambda \in T_{\varSigma }{\mathcal {P}}(n)\), and the logarithmic map

\[ \log _{\varSigma }(\Xi ) = \varSigma ^{\frac{1}{2}} \log \left( \varSigma ^{-\frac{1}{2}} \Xi \varSigma ^{-\frac{1}{2}} \right) \varSigma ^{\frac{1}{2}} \]

for any \(\Xi \in {\mathcal {P}}(n)\) (Fletcher and Joshi 2004), where the matrix exponential and matrix logarithm of a symmetric matrix are obtained by applying the exponential and the logarithm, respectively, to its eigenvalues. According to Eq. (7), the gradient of a function \(f: {\mathcal {P}}(n)\rightarrow {\mathbb {R}}\) is the inverse metric tensor times the vector of partial derivatives, i.e. \(\nabla f = G^{-1} \nabla _{\perp }f\). Before describing the GMS, we derive a general expression for the gradient in the matrix notation needed for diffusion tensor images.

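Using the definition of the inner product (2) and the vectorization map (8), the inner product can be expressed as a matrix-vector product

\[ \langle \varLambda _1, \varLambda _2 \rangle _{\varSigma } = v\left( \varLambda _1\right) ^T G\, v\left( \varLambda _2\right) , \]
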
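where G denotes the matrix representation of the affine invariant metric. On the other hand, we can transform the affine invariant metric (11) using the (cyclic) permutation invariance property of the trace, i.e. \(\text{ trace }\left( \varSigma \varLambda \right) =\text{ trace }\left( \varLambda \varSigma \right) \), as

\[ \langle \varLambda _1, \varLambda _2 \rangle _{\varSigma } = \text{ trace }\left( \varLambda _1 \varSigma ^{-1} \varLambda _2 \varSigma ^{-1} \right) = v\left( \varLambda _1\right) ^T v\left( \varSigma ^{-1} \varLambda _2 \varSigma ^{-1}\right) . \]
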
Comparing \(v\left( \varLambda _1\right) ^T v\left( \varSigma ^{-1} \varLambda _2 \varSigma ^{-1}\right) \) with \(v\left( \varLambda _1\right) ^T G v\left( \varLambda _2\right) \) for arbitrary \(\varLambda _1\) reveals that left multiplication of a vectorized tangent vector at \(\varSigma \) by the matrix form G of the metric tensor yields the vectorized tangent vector transformed by \(\varSigma ^{-1}\), i.e. \(G v\left( \varLambda _2\right) =v\left( \varSigma ^{-1} \varLambda _2 \varSigma ^{-1}\right) \). Consequently, left multiplication by the inverse metric tensor yields the vectorized tangent vector transformed by \(\varSigma \), i.e. \(G^{-1} v\left( \varLambda _2\right) =v\left( \varSigma \varLambda _2 \varSigma \right) \). Finally, the matrix-valued gradient \(\nabla f\) at \(\varSigma \)

\[ \nabla f = \varSigma \left( \nabla _{\perp } f\right) \varSigma \]

can be computed conveniently by means of the partial derivative \((\nabla _{\perp } f)_{i j}=\partial _{\varSigma _{i j}}f\) with respect to the i, j-th matrix element followed by a transformation with \(\varSigma \).
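The gradient expression \(\nabla f = \varSigma (\nabla _{\perp } f)\varSigma \) can be sanity-checked against a finite difference along the geodesic \(t \mapsto \exp _{\varSigma }(t\varLambda )\), whose derivative at \(t=0\) equals the directional derivative \(\langle \nabla f, \varLambda \rangle _{\varSigma }\). The objective \(f(\varSigma )=\text{ trace }(A\varSigma )+\log \det \varSigma \), the matrices and the function names are hypothetical; the partial derivatives are taken entrywise as in the text, which here gives \(A+\varSigma ^{-1}\) for symmetric A.

```python
import numpy as np


def sym_fun(S, fun):
    """Apply a scalar function to the eigenvalues of a symmetric matrix."""
    w, U = np.linalg.eigh(0.5 * (S + S.T))
    return (U * fun(w)) @ U.T


def exp_map(Sigma, Lam):
    """Riemannian exponential map at Sigma (affine invariant metric)."""
    R = sym_fun(Sigma, np.sqrt)
    Ri = sym_fun(Sigma, lambda w: 1.0 / np.sqrt(w))
    return R @ sym_fun(Ri @ Lam @ Ri, np.exp) @ R


def ai_inner(Sigma, L1, L2):
    """Cyclic form of the affine invariant inner product: trace(S^-1 L1 S^-1 L2)."""
    Si = np.linalg.inv(Sigma)
    return np.trace(Si @ L1 @ Si @ L2)


A = np.array([[1.0, 0.5, 0.0], [0.5, -2.0, 0.3], [0.0, 0.3, 0.7]])


def f(Sigma):
    """Example objective: trace(A Sigma) + log det(Sigma)."""
    return np.trace(A @ Sigma) + np.linalg.slogdet(Sigma)[1]


def riemannian_grad(Sigma):
    partials = A + np.linalg.inv(Sigma)  # entrywise partial derivatives of f
    return Sigma @ partials @ Sigma      # transformation with Sigma


Sigma = np.array([[2.0, 0.3, 0.0], [0.3, 1.0, 0.1], [0.0, 0.1, 0.5]])
Lam = np.array([[0.2, -0.1, 0.05], [-0.1, 0.4, 0.0], [0.05, 0.0, -0.3]])

t = 1e-5  # central difference step along the geodesic
fd = (f(exp_map(Sigma, t * Lam)) - f(exp_map(Sigma, -t * Lam))) / (2 * t)
print(np.isclose(fd, ai_inner(Sigma, riemannian_grad(Sigma), Lam), rtol=1e-6))
```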

So far we have considered only a single tensor manifold \({\mathcal {P}}(n)\). In a tensor-valued image, we are confronted with a tensor at each position of the image domain. As we only minimize functions on a discrete image domain, we restrict our discussion to this case. Such a tensor-valued image is described by the N-tuple \(\varSigma =(\varSigma _1,\varSigma _2,\ldots ,\varSigma _N)^T\) with corresponding tangent vector \(\varLambda =(\varLambda _1,\varLambda _2,\ldots ,\varLambda _N)^T \in T_{\varSigma }{\mathcal {M}}\). Affine transformations of the tensors of such an image are described by an N-tuple of invertible matrices \(W=(W_1,W_2,\ldots ,W_N)^T\), where the transformation is applied element-wise, i.e. \(W *\varSigma =(W_1 *\varSigma _1,W_2 *\varSigma _2,\ldots ,W_N *\varSigma _N)^T\) and \(W *\varLambda =(W_1 *\varLambda _1,W_2 *\varLambda _2,\ldots ,W_N *\varLambda _N)^T\) with \(W_i *\varSigma _i:=W_i \varSigma _i W_{i}^{T}\) and \(W_i *\varLambda _i:=W_i \varLambda _i W_{i}^{T}\). The affine invariant inner product generalizes to

\[ \langle \varLambda , \varLambda ' \rangle _{\varSigma } = \sum _{i=1}^{N} \text{ trace }\left( \varSigma _i^{-\frac{1}{2}} \varLambda _i \varSigma _i^{-1} \varLambda '_i \varSigma _i^{-\frac{1}{2}} \right) \]

and the gradient of a function \(f:{\mathcal {P}}^N(n) \rightarrow {\mathbb {R}}\) generalizes to

with components

The geodesic marching scheme is based on the fact that the gradient \(\nabla f\) is an element of the tangent space, \(\nabla f \in T{\mathcal {P}}^N(n)\), and points in the direction of steepest ascent. Thus we can find an argument with a lower value of f by taking a sufficiently small step in the direction of the negative gradient, \(\varLambda =- dt \nabla f\), and mapping this point back onto the manifold using the exponential map (12). This procedure is iterated until convergence (cf. Sect. 8).
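A minimal sketch of geodesic marching on a tensor valued image with N voxels follows. It assumes the standard affine-invariant maps \(\exp _{\varSigma }(\varLambda )=\varSigma ^{1/2}\exp (\varSigma ^{-1/2}\varLambda \varSigma ^{-1/2})\varSigma ^{1/2}\) and \(\log _{\varSigma }(A)=\varSigma ^{1/2}\log (\varSigma ^{-1/2}A\varSigma ^{-1/2})\varSigma ^{1/2}\), which we take to coincide with (12) and (13) (not reproduced here). The objective \(f(\varSigma )=\frac{1}{2}\sum _k \text{ dist }(\varSigma _k,A_k)^2\), whose Riemannian gradient has components \(-\log _{\varSigma _k}(A_k)\) (Pennec 2006), as well as the step size dt = 0.5 and the iteration count, are hypothetical choices for illustration.

```python
import numpy as np

def sym_fun(a, fun):
    """Apply a scalar function to a stack of symmetric matrices (shape (..., n, n)) via eigh."""
    w, v = np.linalg.eigh(a)
    return (v * fun(w)[..., None, :]) @ np.swapaxes(v, -1, -2)

def exp_map(sigma, lam):
    """Affine-invariant exponential map, applied voxel by voxel."""
    s_half = sym_fun(sigma, np.sqrt)
    s_ihalf = sym_fun(sigma, lambda w: 1.0 / np.sqrt(w))
    return s_half @ sym_fun(s_ihalf @ lam @ s_ihalf, np.exp) @ s_half

def log_map(sigma, target):
    """Affine-invariant logarithmic map, applied voxel by voxel."""
    s_half = sym_fun(sigma, np.sqrt)
    s_ihalf = sym_fun(sigma, lambda w: 1.0 / np.sqrt(w))
    return s_half @ sym_fun(s_ihalf @ target @ s_ihalf, np.log) @ s_half

def random_spd(rng, n_voxels, n=3):
    b = rng.standard_normal((n_voxels, n, n))
    return b @ np.swapaxes(b, -1, -2) + n * np.eye(n)

rng = np.random.default_rng(1)
targets = random_spd(rng, 5)                  # A_k, one tensor per voxel
sigma = random_spd(rng, 5)                    # initial tensor image
dt = 0.5
for _ in range(60):
    grad = -log_map(sigma, targets)           # Riemannian gradient of f, voxel by voxel
    sigma = exp_map(sigma, -dt * grad)        # step along -grad, map back onto P^N(3)
print(np.abs(sigma - targets).max())
```

With this particular f each step lands on the geodesic midpoint between the current tensor and its target, so the distance halves per iteration; a general energy would of course not converge this cleanly.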

5 Bayesian Estimation

5.1 Probabilities on Tensor Fields

Following Pennec (2006), we introduce basic concepts of probability theory on manifolds and extend them towards the Bayesian interpretation of probability and estimation theory.

Let \(\left( \varTheta ,{\mathcal {F}},P \right) \) denote the probability space consisting of the sample space \(\varTheta \), the \(\sigma \)-algebra \({\mathcal {F}}\) of events and a probability measure P, and let \({\mathcal {M}}\) denote the state space with corresponding \(\sigma \)-algebra \({\mathcal {E}}\). An \({\mathcal {M}}\)-valued random variable \(\varSigma :\varTheta \rightarrow {\mathcal {M}}\) is an \(({\mathcal {F}},{\mathcal {E}})\)-measurable function from the sample space \(\varTheta \) to the state space \({\mathcal {M}}\). The state space is the Cartesian product \({\mathcal {M}}={\mathcal {P}}^N(n)\) of N copies of the tensor space \({\mathcal {P}}(n)\).

To each subset \({\mathcal {A}}\in {\mathcal {E}}\) a probability \(P(\varSigma \in {\mathcal {A}}):=P(\left\{ \omega \in \varTheta : \varSigma (\omega ) \in {\mathcal {A}}\right\} )\) can then be assigned, describing the chance of finding a realization of the random variable \(\varSigma \) within \({\mathcal {A}}\). Generalizing the concept of probability density functions to Riemannian manifolds requires a reference measure, which is induced in a natural way by the volume form \(d{\mathcal {M}}\) on \({\mathcal {M}}\). In a local coordinate system \(z=(v(\varSigma _1);v(\varSigma _2);\ldots ;v(\varSigma _N)) \in {\mathcal {D}} \subset {\mathbb {R}}^{\frac{nN(n +1)}{2}}\) the volume form reads

The metric tensor G can be inferred from the definition of the affine invariant inner product (20) and the isometry (8) between the space of \(n \times n\) symmetric tensors and the \(\frac{n(n +1)}{2}\)-dimensional Euclidean space (cf. Zéraï and Moakher 2007b)

where \(\otimes \) denotes the tensor product and \(P^{\dagger }\) the pseudoinverse of P. The determinant of the metric tensor at position \(\varSigma _j\) can then be calculated from (24) using the relation \(\det {\left( G^{-1}\right) }=\left( \det {G}\right) ^{-1}\).
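As a numerical sanity check (a sketch, assuming that the isometry (8) scales off-diagonal entries by \(\sqrt{2}\), so that the basis below is orthonormal), the metric tensor at a single tensor can be assembled entry by entry from the affine-invariant inner product. Since \(\varLambda \mapsto \varSigma ^{-1}\varLambda \varSigma ^{-1}\) acts on symmetric matrices with determinant \(\det (\varSigma )^{-(n+1)}\), one expects \(\det G=\det (\varSigma )^{-(n+1)}\), i.e. a volume element proportional to \(\det (\varSigma )^{-(n+1)/2}\) per tensor. Function names are hypothetical.

```python
import numpy as np

def sym_basis(n):
    """Frobenius-orthonormal basis of symmetric n x n matrices (off-diagonals scaled by 1/sqrt(2))."""
    basis = []
    for i in range(n):
        e = np.zeros((n, n))
        e[i, i] = 1.0
        basis.append(e)
    for i in range(n):
        for j in range(i + 1, n):
            e = np.zeros((n, n))
            e[i, j] = e[j, i] = 1.0 / np.sqrt(2.0)
            basis.append(e)
    return basis

def metric_matrix(sigma):
    """G_ab = <E_a, E_b>_sigma = tr(sigma^-1 E_a sigma^-1 E_b) in the basis above."""
    s_inv = np.linalg.inv(sigma)
    basis = sym_basis(sigma.shape[0])
    return np.array([[np.trace(s_inv @ ea @ s_inv @ eb) for eb in basis] for ea in basis])

n = 3
rng = np.random.default_rng(2)
b = rng.standard_normal((n, n))
sigma = b @ b.T + np.eye(n)
G = metric_matrix(sigma)
_, logdet_g = np.linalg.slogdet(G)
_, logdet_s = np.linalg.slogdet(sigma)
print(logdet_g, -(n + 1) * logdet_s)        # should agree up to rounding
```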

A function \(p:{\mathcal {M}}\rightarrow {\mathbb {R}}^{+}_0\) is then called a probability density function (pdf) with respect to the volume form \(d{\mathcal {M}}\) if the probability of any event \({\mathcal {A}} \in {\mathcal {E}}\) can be expressed in the form

To stress the particular choice of the reference measure \(d{\mathcal {M}}\), such a pdf is also called a volumetric probability in the literature (Tarantola and Valette 1982; Tarantola 2005).

The expectation value \({\mathbb {E}}_{\varSigma }\left[ \cdot \right] \) of a function \(f:{\mathcal {M}}~\rightarrow ~{\mathbb {R}}\) with respect to the pdf \(p(\varSigma )\) is defined by

Important expectation values are the moments of a distribution, in particular the mean and the variance. The variance can be defined as the expectation value of the squared distance \(\sigma ^2_{\bar{z}}\left( z \right) ={\mathbb {E}}_{z}\left[ \text{ dist }(z,\bar{z})^2 \right] \) from the mean value \(\bar{z}\). However, on manifolds the mean is not necessarily unique. Furthermore, a mean value \(\bar{z}\) cannot be defined by an integral or sum over a random variable, as addition and integration are not defined for elements of a manifold. The Fréchet mean is defined as the set of points (if it exists) minimizing the variance (Pennec 2006)

As an alternative to the Fréchet mean, Karcher means (Karcher 1977) are defined as all local minima of (28). The covariance matrix (Pennec 2006) is defined as the expectation value of the outer product of the tangent vectors \(u \in T_{\bar{z}}{\mathcal {D}}\) attached at a mean value

with \(p_{\bar{z}}(u):=p(\exp _{\bar{z}}(u))\).
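A Karcher mean of a finite sample can be sketched as a fixed-point iteration that moves along the average tangent vector until it vanishes, which is the first-order condition for a local minimum of the empirical variance. This is a minimal illustration, assuming the affine-invariant exponential and logarithmic maps and a unit step; for two tensors the result should match the closed-form geodesic midpoint \(A^{1/2}(A^{-1/2}BA^{-1/2})^{1/2}A^{1/2}\). Names and data are hypothetical.

```python
import numpy as np

def sym_fun(a, fun):
    """Apply a scalar function to a symmetric matrix through its eigendecomposition."""
    w, v = np.linalg.eigh(a)
    return (v * fun(w)) @ v.T

def exp_map(sigma, lam):
    s_half, s_ihalf = sym_fun(sigma, np.sqrt), sym_fun(sigma, lambda w: 1.0 / np.sqrt(w))
    return s_half @ sym_fun(s_ihalf @ lam @ s_ihalf, np.exp) @ s_half

def log_map(sigma, target):
    s_half, s_ihalf = sym_fun(sigma, np.sqrt), sym_fun(sigma, lambda w: 1.0 / np.sqrt(w))
    return s_half @ sym_fun(s_ihalf @ target @ s_ihalf, np.log) @ s_half

def karcher_mean(samples, n_iter=100):
    """Move along the mean tangent vector; it is zero at a Karcher mean."""
    mean = samples[0].copy()
    for _ in range(n_iter):
        u = np.mean([log_map(mean, s) for s in samples], axis=0)
        mean = exp_map(mean, u)
    return mean

def geometric_mean(a, b):
    """Closed-form affine-invariant midpoint of two SPD matrices."""
    a_half, a_ihalf = sym_fun(a, np.sqrt), sym_fun(a, lambda w: 1.0 / np.sqrt(w))
    return a_half @ sym_fun(a_ihalf @ b @ a_ihalf, np.sqrt) @ a_half

rng = np.random.default_rng(7)
mats = [m @ m.T + 3.0 * np.eye(3) for m in rng.standard_normal((5, 3, 3))]
mean_two = karcher_mean(mats[:2])
mean_five = karcher_mean(mats)
residual = np.mean([log_map(mean_five, s) for s in mats], axis=0)
print(np.allclose(mean_two, geometric_mean(mats[0], mats[1])), np.abs(residual).max())
```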

5.2 Estimation Theory

Bayesian decision theory in Euclidean space \({\mathbb {R}}^n\) (cf. Kay 1993) defines a Bayesian estimator of a random vector \(z \in {\mathbb {R}}^n\) given some observation \(g \in {\mathbb {R}}^m\) as the minimizer of the Bayesian risk \(\mathcal {R}(\varepsilon )={\mathbb {E}}_{z|g}[L(\varepsilon )]\). The Bayesian risk depends on a loss function \(L(\varepsilon )\) weighting the error \(\varepsilon =z-{\hat{z}}\) between the estimate \({\hat{z}} \in {\mathbb {R}}^n\) and the current realization z. A commonly used loss function is the quadratic loss \(L_2(\varepsilon )=||\varepsilon ||^2\), which penalizes the squared norm of the error and leads to the minimum mean squared error (MMSE) estimator \({\hat{z}}={\mathbb {E}}_{z|g}[z]\). The hit and miss loss function assigns the same penalty to all errors whose norm exceeds a small threshold \(\rho \) and zero penalty otherwise; in the limit \(\rho \rightarrow 0\) it leads to the maximum a posteriori (MAP) estimator \({\hat{z}}=\arg \max _{z}\{p(z|g)\}\).

When we generalize the concept of Bayesian decision theory to the manifold of diffusion tensorsFootnote 5 we have to ensure that the estimators are invariant with respect to the chosen chart (Jermyn 2005). We denote with \(g \in {\mathcal {X}}\) an observation, which is an element of the observation manifold \({\mathcal {X}}\), and with \(z \in {\mathcal {D}}\) the quantity we would like to estimate based on the posterior pdf p(z|g). We define the quadratic loss function as \(L_2(z,{\hat{z}})= \text{ dist }(z,{\hat{z}})^2\). The condition for the minimum of the corresponding Bayesian risk is obtained by setting its gradient with respect to the estimate \({\hat{z}}\) equal to zero

where \(u:=\overrightarrow{{}{\hat{z}} z}\) denotes the element of the tangent space attached at \({\hat{z}}\) that is mapped to z by the exponential map. From (30) to (31) we exchange differentiation and integration, and from (31) to (32) we apply a coordinate transformation by means of the logarithmic map \(\log _{{\hat{z}}}\), where \(G_{{\hat{z}}}(u)\) denotes the metric tensor in the transformed coordinate system. From (32) to (33) we use \( \nabla _{{\hat{z}}} \Vert u \Vert _{{\hat{z}}}^2 = -2 u\), which follows directly from Theorem 2 in Pennec (2006). The Riemannian MMSE estimator is invariant with respect to the chosen coordinate system, as it is fully defined by the integral equations (33) and (34).

A loss function sharing the idea of the hit and miss loss function, i.e. giving the same weight to all errors, is given by the negative delta distribution \(L_{\delta }(z,{{\hat{z}}}):=-\delta _{{\hat{z}}}(z)\). It assigns zero weight to all points \(z\ne {\hat{z}}\) and a negative ‘infinite’ weight to \(z = {{\hat{z}}}\). The corresponding Bayesian risk yields

which is obviously minimized by the value \({\hat{z}}\) maximizing the volumetric probability function p(z|g).

Thus, we can take over the concepts of Euclidean estimation theory by considering volumetric probability functions, i.e. pdfs defined with respect to the volume form. In particular, Bayes' rule holds for volumetric probability functions, so that we can derive the posterior pdf from a corresponding likelihood function and prior distribution as in the Euclidean case (Jermyn 2005; Tarantola 2005).

6 Likelihood Models

6.1 Noise in NMR Images

Studies of NMR noise characteristics date back to Hoult and Richards (1976), Libove and Singer (1980), Edelstein et al. (1983, 1984), Ortendahl et al. (1983, 1984) and Henkelman (1986). The Rician distribution, also called the Rice distribution (Rice 1944), was first examined by Bernstein et al. (1989) as a theoretical model for the noise in the NMR signal. Since then, a large number of analysis and denoising methods based on the Rician noise model have been proposed, e.g. McGibney and Smith (1993), Gudbjartsson and Patz (1995), Macovski (1996), Andersen (1996), Sijbers et al. (1998, 2007), Nowak (1999), Wood and Johnson (1999), Sijbers and Dekker (2004), Koay and Basser (2006). NMR imaging systems provide at each spatial position \(x_k\) and direction \(g_j\) a quadrature pair \(I_{j k}, R_{j k}\) of signal values that can be interpreted as a complex image value \(R_{j k}+ \mathrm{i}\, I_{j k}\), where \(\mathrm{i}\) denotes the imaginary unit. The magnitude image \(S_{j k}:=S_j(x_k)\) is related to the complex NMR image via

The noise in the real part \(R_{j k}\) as well as in the imaginary part \(I_{j k}\) is well described by additive Gaussian noise components with the same standard deviation \(\sigma \) for both channels (Wang and Lei 1994). Furthermore, the noise at different spatial positions \(x_k\) as well as in different channels can be assumed to be statistically independent (Wang and Lei 1994). Due to the nonlinear relation (36) between the complex valued NMR signal and the real valued magnitude signal, the latter is no longer Gaussian distributed but follows a Rician distribution (Rice 1944)

where \(A_{0 k}\) denotes the noise free reference image, \(A_{j k}\), \(j>0\) the noise free DW images at position \(x_k\), and \(I_0\) the zero order modified Bessel function of the first kind.Footnote 6 In accordance with the literature on DTI reconstruction, e.g. (Chen and Hsu 2005), we define the signal-to-noise ratio (SNR) as the quotient \(\text{ SNR }=\mu /\sigma _{b}\) of the mean \(\mu \) of the image magnitude in the region of interest and the background standard deviation \(\sigma _{b}\). The background standard deviation can be estimated from regions containing no tissue. For high signal-to-noise ratios (approx. \(\text{ SNR }\ge 3\)) the Rician distribution is well approximated by a Gaussian distribution (cf. Fig. 2, lower right) with standard deviation \(\sigma \) and mean \(\sqrt{\mu ^2+\sigma ^2}\) (Gudbjartsson and Patz 1995). However, due to the nonlinear relationship between signal and noise, the observed signal \(S_{j k}\) is not related to the true underlying noise free signal \(\mu \) by an additive noise term, i.e. the mean of the Gaussian approximation does not coincide with the true underlying signal value \(\mu \).
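For numerical work the Rician pdf (37) is best evaluated with the exponentially scaled Bessel function, since \(I_0(x)\) overflows for large arguments. The sketch below is our illustration (function name hypothetical); it uses SciPy's `scipy.special.i0e`, which returns \(e^{-x}I_0(x)\), checks the normalization on a grid, and compares the pdf mean with the mean magnitude of simulated complex Gaussian noise, as described by the noise model above.

```python
import numpy as np
from scipy.special import i0e

def rician_pdf(s, a, sigma):
    """Rician pdf p(s | a, sigma) of a magnitude s >= 0 with noise-free amplitude a >= 0.

    i0e(x) = exp(-x) I0(x); folding exp(x) into the Gaussian factor gives
    exp(-(s - a)^2 / (2 sigma^2)) * i0e(s a / sigma^2), which does not overflow.
    """
    s = np.asarray(s, dtype=float)
    return s / sigma**2 * np.exp(-(s - a) ** 2 / (2.0 * sigma**2)) * i0e(s * a / sigma**2)

a, sigma = 1.0, 0.9
grid = np.linspace(0.0, 20.0, 200001)
ds = grid[1] - grid[0]
pdf = rician_pdf(grid, a, sigma)
total_mass = np.sum(pdf) * ds                 # should be close to 1
pdf_mean = np.sum(grid * pdf) * ds

# Monte Carlo check: magnitude of a complex value with i.i.d. Gaussian real/imaginary noise.
rng = np.random.default_rng(8)
z = a + sigma * (rng.standard_normal(200000) + 1j * rng.standard_normal(200000))
sample_mean = np.abs(z).mean()
print(total_mass, pdf_mean, sample_mean)
```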

Upper left: Rician probability density function \(p(x|\mu ,\sigma )\) with \(\mu =1\) and different standard deviations: red curve \(\sigma =0.3\), \(\text{ SNR } \approx 5.1\); green curve \(\sigma =0.9\), \(\text{ SNR } \approx 1.7\); blue curve \(\sigma =1.4\), \(\text{ SNR } \approx 1.1\). Upper right image and lower row: the Rician pdfs and the corresponding Gaussian approximations (dotted curves) with the same standard deviation as the Rician pdfs. In case of high SNR (lower right image), the Gaussian distribution (dash-dotted curve) of the additive Gaussian noise model with mean \(\mu =1\) and standard deviation \(\sigma =0.3\) is also shown (Color figure online)
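The SNR values quoted in the caption can, we believe, be reproduced by taking \(\mu \) to be the noise-free amplitude \(\mu =1\) and \(\sigma _b\) to be the standard deviation of the magnitude in a tissue-free region, which for the Rayleigh-distributed background (the Rician case with zero amplitude) equals \(\sigma \sqrt{2-\pi /2}\). This interpretation is our assumption; the sketch only checks the arithmetic and, by simulation, the background standard deviation.

```python
import numpy as np

def background_std(sigma):
    """Standard deviation of the Rayleigh-distributed magnitude in tissue-free regions."""
    return sigma * np.sqrt(2.0 - np.pi / 2.0)

mu = 1.0                                      # noise-free amplitude used in the figure
snr = {sigma: round(float(mu / background_std(sigma)), 1) for sigma in (0.3, 0.9, 1.4)}
print(snr)  # {0.3: 5.1, 0.9: 1.7, 1.4: 1.1}

# Monte Carlo check of the Rayleigh standard deviation for sigma = 0.9.
rng = np.random.default_rng(13)
bg = np.abs(0.9 * (rng.standard_normal(200000) + 1j * rng.standard_normal(200000)))
print(bg.std(), background_std(0.9))
```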

For low SNR (Fig. 2, upper right) and medium SNR (Fig. 2, lower left), the distribution is skewed with a longer right tail and clearly differs from the corresponding Gaussian approximation with standard deviation \(\sigma \) and mean \(\sqrt{\mu ^2+\sigma ^2}\). In particular, for all SNRs, the observed DW image is not related to the true underlying noise free signal \(\mu \) by an additive noise term. Our real data set (cf. Sect. 10.2.2) has an SNR of 5.2. We conclude that an additive Gaussian model for the NMR signal is not always appropriate, even at high signal-to-noise ratios.

6.2 The Likelihood Model

Although the Rician noise model has long been applied for restoring NMR magnitude images (McGibney and Smith 1993; Gudbjartsson and Patz 1995; Macovski 1996; Andersen 1996; Sijbers et al. 1998, 2007; Nowak 1999; Wood and Johnson 1999; Sijbers and Dekker 2004; Koay and Basser 2006), it has been proposed as a noise model for the likelihood function of diffusion tensor images only quite recently (Landman et al. 2007a; Andersson 2008; Jeong and Anderson 2008). In contrast to (Landman et al. 2007a; Andersson 2008; Jeong and Anderson 2008), where the likelihood function has been formulated within a Euclidean setting, we formulate the likelihood function within the Riemannian framework. In order to relate the observations \(S_{j k}\) to the diffusion tensor image \(\varSigma _k:=\varSigma (x_k)\), we insert the Stejskal–Tanner equation (1) of the jth measurement into the Rician noise model (37)

where \(A_{0 k}\) denotes the noise free reference signal at position \(x_k\). The noise in the DW signals \(S_{j k}\) acquired for different ‘b-values’ j is mutually independent, as the DW images are acquired in independent measurement steps.

As we can assume statistical independence (Gudbjartsson and Patz 1995) of signal magnitudes at different spatial positions \(x_k\), we can express the overall sampling distribution as the product of the sampling distributions at the different spatial positions and ‘b-values’

where we introduced the abbreviations \(\underline{S}=\{S_{j k}\}\), \(\underline{A}_0=\{A_{0 k}\}\) and \(\underline{\varSigma }=\{\varSigma _k\}\). After inserting the measurements \(\underline{S}\), the sampling distribution (39) serves as the likelihood function of the tensor image \(\varSigma \), the noise free reference image \(\underline{A}_0\) and the noise level \(\sigma \). In a next step, we decouple the estimation of the nuisance parameters \((\underline{A}_0,\sigma )\) from the estimation of the tensor valued image \(\varSigma \). We first estimate the noise level directly from water-free regions of the NMR data volume, where all nonzero signal values are due to noise. Using a non-informative prior for the noise level, i.e. \(p(\sigma )\propto 1/\sigma \), and the Rician pdf \(p(S_{j k}|0,\sigma )\) with mean valueFootnote 7 equal to 0, we estimate the noise variance by the MAP estimator

The noise-free reference image \(\underline{A}_0\) can be estimated from the observed reference image \(\underline{S}_{0}\) using one of the denoising approaches for Rician distributed data proposed by Sijbers et al. (1998), yielding the estimate \(\hat{\underline{A}}_0\).
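For the noise-level step described above, a closed form follows under the stated model; the derivation is ours, and we cannot check it against the paper's estimator in this excerpt. With zero amplitude the Rician pdf reduces to the Rayleigh pdf \(p(S_k|0,\sigma )=\frac{S_k}{\sigma ^2}\exp (-S_k^2/(2\sigma ^2))\), so for K background magnitudes and the prior \(p(\sigma )\propto 1/\sigma \) the posterior is proportional to \(\sigma ^{-(2K+1)}\exp \left( -\sum _k S_k^2/(2\sigma ^2)\right) \), which is maximal at \(\hat{\sigma }^2=\sum _k S_k^2/(2K+1)\). (Maximizing over \(\sigma ^2\) instead of \(\sigma \) changes the denominator to \(2(K+1)\).) The sketch compares this closed form with a bounded numerical maximization on simulated background data.

```python
import numpy as np
from scipy.optimize import minimize_scalar

def sigma_map_background(s):
    """MAP estimate of sigma from background magnitudes s (Rayleigh model, prior 1/sigma)."""
    s = np.asarray(s, dtype=float)
    return np.sqrt(np.sum(s**2) / (2 * s.size + 1))

def neg_log_posterior(sigma, s):
    # -log[ sigma^-1 * prod_k s_k/sigma^2 exp(-s_k^2 / (2 sigma^2)) ], constants dropped
    return (2 * s.size + 1) * np.log(sigma) + np.sum(s**2) / (2 * sigma**2)

rng = np.random.default_rng(3)
true_sigma = 0.7
noise = true_sigma * (rng.standard_normal(5000) + 1j * rng.standard_normal(5000))
s_background = np.abs(noise)                  # magnitudes of pure complex Gaussian noise

sigma_hat = sigma_map_background(s_background)
res = minimize_scalar(neg_log_posterior, bounds=(1e-3, 10.0),
                      args=(s_background,), method="bounded")
print(sigma_hat, res.x)
```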

6.2.1 Maximum Likelihood

Applying the geodesic marching scheme to the posterior pdf

requires the calculation of the gradient of the likelihood function or of its negative logarithm. According to (19), the gradient of the negative log likelihood \(E_L:=-\log (p_L)\), with \(p_L\) given by (38), with respect to the tensor at spatial position m yields

with

We omit the arguments of the modified Bessel functions of zero order \(I_0(x)\) and first order \(I_1(x)\) for notational clarity. Let us compare this gradient with the gradient of the log likelihood we would obtain for the additive Gaussian noise model proposed by Lenglet et al. (2006), i.e. \(S_{j k} =A_{j k}+\varepsilon _{j k}\) with \(\varepsilon _{j k}\sim \mathcal {N}(0,\sigma )\) and \(p(S_{j k}|A_{j k})= \mathcal {N}(A_{j k},\sigma )\). The likelihood function for the tensor image is then obtained by inserting the Stejskal–Tanner equation into the noise model

The gradient of the negative log likelihood of (45) equals the gradient of the Rician noise model (43) except for the correction term \(\frac{I_1(x)}{I_0(x)}\), which equals one for the additive Gaussian noise model. The correction term \(S_j\mapsto S_j \frac{I_1}{I_0}\) accounts for the skewed Rician probability density function with its heavy right tail. For the Rician noise model, the correction term approaches one for large arguments, e.g. \(\frac{I_1(x)}{I_0(x)}>0.99\) for \(x=\frac{\hat{A}_{0 k} S_{j k}}{\sigma ^2}>51\). However, one should be careful in applying the Gaussian approximation, as the DW magnitude depends on the diffusion process. Even a high SNR in the reference image \(A_{0 m}\) might correspond to low SNRs in the DW images (cf. Eq. (1)), making the correction term indispensable. In order to better understand the influence of the correction term on the estimated diffusion tensor image, we examine the maximum of the likelihood function (39). For the moment we neglect the influence of the prior distribution, which is justified for instance for flat priors or for a sufficiently large number of observations. At the maximum of the likelihood function the term (44) needs to be zeroFootnote 8 for each observation j and each spatial position m, i.e.

The term \(g_{j}^{T} \varSigma _m g_j\) describes the diffusion component in direction \(g_j\). As \(\frac{I_1(x)}{I_0(x)}<1\) for finite arguments x, the right hand side of (46) is smaller than for the additive Gaussian noise model. In return, the left hand side must also be smaller at the likelihood maximum, which is accomplished by a larger diffusion component \(g_{j}^{T} \varSigma _m g_j\). Consequently, the correction term leads to estimates \({\hat{\varSigma }}_m\) encoding larger diffusion than the additive Gaussian noise model; conversely, the Gaussian noise model is biased towards too small diffusion.
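Both observations, the threshold for the correction term and the bias of the Gaussian model, can be checked with a one-dimensional toy problem. In this sketch (our illustration, with hypothetical parameter values) a single gradient direction and a scalar diffusivity d replace the tensor, so that \(A=A_0\exp (-b\,d)\). The correction term is computed as `i1e(x) / i0e(x)`, where the exponential scalings cancel; the Gaussian ML amplitude is the sample mean, while the Rician ML diffusivity is obtained by bounded minimization of the negative log likelihood. Replacing the Bessel ratio by one in `rician_nll_grad` yields the Gaussian gradient.

```python
import numpy as np
from scipy.special import i0e, i1e
from scipy.optimize import minimize_scalar

def bessel_ratio(x):
    """Correction term I1(x)/I0(x); the exponential scalings of i1e and i0e cancel."""
    return i1e(x) / i0e(x)

def rician_nll(a, s, sigma):
    """Summed negative Rician log-likelihood of magnitudes s given amplitude a."""
    x = s * a / sigma**2
    log_i0 = np.log(i0e(x)) + x                # log I0(x) without overflow
    return np.sum(-np.log(s / sigma**2) + (s**2 + a**2) / (2 * sigma**2) - log_i0)

def rician_nll_grad(a, s, sigma):
    """d/da of rician_nll; replacing bessel_ratio by 1 gives the Gaussian gradient."""
    return np.sum(a - s * bessel_ratio(s * a / sigma**2)) / sigma**2

# Hypothetical 1-D setting: one gradient direction, scalar diffusivity d, A = A0 exp(-b d).
rng = np.random.default_rng(9)
a0, b, d_true, sigma, n = 1.0, 1.0, 1.5, 0.2, 20000
a_true = a0 * np.exp(-b * d_true)
s = np.abs(a_true + sigma * (rng.standard_normal(n) + 1j * rng.standard_normal(n)))

d_gauss = -np.log(np.mean(s) / a0) / b         # Gaussian ML: amplitude = sample mean
res = minimize_scalar(lambda d: rician_nll(a0 * np.exp(-b * d), s, sigma),
                      bounds=(0.0, 5.0), method="bounded")
d_rice = res.x
print(bessel_ratio(51.0), d_true, d_gauss, d_rice)
```

At this low DW SNR the Gaussian model underestimates the diffusivity noticeably, in line with the argument above.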

6.2.2 Robust Likelihood Functions

So far, we assumed the MRI images, i.e. their real and imaginary parts, to be corrupted by additive Gaussian noise. However, outliers that do not follow the assumed statistical model might lead to serious estimation errors. If the statistical distribution of other error sources is available, the estimators can be made robust by modifying the potential function of the error model, as illustrated by the following example.

Example

Figure 3 (left) shows a Gaussian distribution (red curve) with mean \(\mu =1\) and standard deviation \(\sigma =1\), and Fig. 3 (right) the corresponding Rician distribution \(p(x|\mu ,\sigma )\) (red curve). If we model outliers by Gaussian distributions with a larger standard deviation (\(\sigma =4\)), we end up with a mixture of Gaussian distributions (Fig. 3, left, blue bar plot) with heavier tails than the single Gaussian distribution. These tails of the Gaussian mixture distribution lead to a heavier right tail of the distribution of the signal magnitude \(S=\sqrt{x^2+y^2}\), where x, y follow the Gaussian mixture distribution (cf. Fig. 3, right, blue bar plot).

The modified distribution explains both types of random variables, such that outliers with respect to the Rician noise model become part of the new probability model. However, such an outlier model might not be available in closed form.

Left: The normal distribution (red curve) with mean \(\mu =1\) and standard deviation \(\sigma =1\) as well as the (normalized) histogram of \(10^6\) samples of a Gaussian mixture model, i.e. \(p_m(x)=0.7 \, {\mathcal {N}}(1,1)+ 0.3 \, {\mathcal {N}}(1,4)\). Right: The Rician distribution (red curve) and the (normalized) histogram (blue bars) of the magnitude distribution \(S=\sqrt{x^2+y^2}\) where x, y follow the Gaussian mixture distribution shown on the left side (Color figure online)
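The tail effect described in the example can be reproduced by simulation. This sketch draws \(10^6\) samples of the mixture \(p_m(x)\) from the caption for both channels (interpreting the second argument of \({\mathcal {N}}\) as the standard deviation, as stated in the text, and giving both channels mean 1 as in the caption) and compares the probability of a large magnitude with the purely Gaussian case, whose magnitude is Rician. The threshold 6 is an arbitrary choice.

```python
import numpy as np

rng = np.random.default_rng(5)
n = 10**6

def mixture_samples(size):
    """Draw from p_m(x) = 0.7 N(1, 1) + 0.3 N(1, 4), second argument = standard deviation."""
    is_outlier = rng.random(size) < 0.3
    scale = np.where(is_outlier, 4.0, 1.0)
    return 1.0 + scale * rng.standard_normal(size)

# Magnitude of two mixture-distributed channels versus two purely Gaussian channels.
s_mix = np.hypot(mixture_samples(n), mixture_samples(n))
s_gauss = np.hypot(1.0 + rng.standard_normal(n), 1.0 + rng.standard_normal(n))

threshold = 6.0
tail_mix = np.mean(s_mix > threshold)
tail_gauss = np.mean(s_gauss > threshold)
print(tail_gauss, tail_mix)
```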

Instead of explicitly modeling outlier distributions, we use the idea of robust statistics: outliers are detected and excluded from the estimation process (or at least their influence is reduced) without modeling them explicitly (Huber 1981; Hampel et al. 1986). Additive Gaussian noise models can be made robust against outliers by introducing potential functions of the residuals of the constraint equations. For residuals that are unlikely to occur, these potential functions have a lower slope than the quadratic potential function of the Gaussian noise model. As a consequence, the influence function, i.e. the derivative of the potential function, reduces the influence of the corresponding terms in the gradient or constraint equation. A corresponding modification of the Rician noise model is less obvious, as the residuals of the constraint equation cannot be isolated from the true signal due to the nonlinear relation between magnitude image and noise. Thus, instead of modifying the likelihood function, we modify the negative log likelihood gradient by introducing influence functions \(\psi (\zeta _{j m})\) that depend on the likelihood function

Multiplying each summand in (43) by the influence function

allows outliers to be suppressed or reduced by choosing a low value \(\psi (\zeta _{j m})\) for unlikely arguments.
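The influence function itself is not given in this excerpt, so the following is only a hypothetical illustration for a single voxel and a scalar amplitude a. We take \(\zeta \) to be the Rician likelihood of each observation and use \(\psi (\zeta )=\zeta /(\zeta +c)\) with an arbitrary constant \(c=10^{-3}\). Setting the weighted gradient \(\sum _j \psi _j (a-S_j I_1/I_0)/\sigma ^2\) to zero, with the weights and the Bessel ratio frozen at the current estimate, gives an iteratively reweighted fixed-point update; with all weights equal to one it reduces to the plain Rician ML condition.

```python
import numpy as np
from scipy.special import i0e, i1e

def rician_pdf(s, a, sigma):
    """Rician likelihood of magnitudes s for amplitude a (overflow-safe via i0e)."""
    return s / sigma**2 * np.exp(-(s - a) ** 2 / (2.0 * sigma**2)) * i0e(s * a / sigma**2)

def psi(zeta, c=1e-3):
    """Hypothetical influence function: near 1 for likely, near 0 for unlikely observations."""
    return zeta / (zeta + c)

def estimate_amplitude(s, sigma, robust, n_iter=100):
    """Iterate a = sum(w s r) / sum(w), the zero of the (weighted) Rician gradient in a."""
    a = np.median(s)
    for _ in range(n_iter):
        x = s * a / sigma**2
        r = i1e(x) / i0e(x)                                  # correction term I1/I0
        w = psi(rician_pdf(s, a, sigma)) if robust else np.ones_like(s)
        a = np.sum(w * s * r) / np.sum(w)
    return a

rng = np.random.default_rng(6)
a_true, sigma = 3.0, 1.0
s = np.abs(a_true + sigma * (rng.standard_normal(50) + 1j * rng.standard_normal(50)))
s = np.append(s, 30.0)                                       # one gross outlier
a_plain = estimate_amplitude(s, sigma, robust=False)
a_robust = estimate_amplitude(s, sigma, robust=True)
print(a_plain, a_robust)
```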

7 Prior Models

7.1 Mathematical Issues

In this subsection we discuss several mathematical concepts that will be useful for the subsequent analysis.

7.1.1 Energy Functionals

An intrinsic linear isotropic regularization scheme for tensor valued images has been derived in Pennec et al. (2006) and has been used to define nonlinear anisotropic regularization schemes by giving different weights to different directions of the linear regularizer. In Fillard et al. (2005) an intrinsic nonlinear isotropic regularization has been derived from a corresponding energy functional. A semi-intrinsic nonlinear isotropic diffusion scheme has been derived in Gur et al. (2007, 2009), i.e. the data term was handled extrinsically whereas the regularization term was handled intrinsically. In this section we extend the intrinsic approach of Pennec et al. (2006) to the linear anisotropic case, which serves as a basis for a further generalization to nonlinear isotropic and nonlinear anisotropic regularization/diffusion schemes. Let us denote with \(\partial _i\varSigma (x)\) the partial derivatives of the diffusion tensor image in direction \(i=1,\ldots ,n\) and with \(d^{i j}\) the components of the diffusion tensorFootnote 9, which locally controls the amount and direction of the regularization and does not depend on the tensor valued image \(\varSigma \). We define the energy functional for linear anisotropic regularization by

A stationary image \(\varSigma \) is an image for which the energy attains a (local) extremum. A variation of the image is parameterized with the test vector field \(W:\Omega \rightarrow T{\mathcal {P}}(n)\) and defined by \(\varSigma _{\varepsilon }=\exp _{\varSigma }(\varepsilon W)\). According to the fundamental lemma of calculus of variations (Courant and Hilbert 1953), the functional derivative \(\delta E\) can then be deduced by the relation

leading to

For \(d^{i j}=\delta ^{i j}\), i.e. \(d^{i i}=1\) and \(d^{i j}=0\) for \(i\ne j\), (51) reduces to the operator \(\Delta =\sum _i \Delta _{i i}\) derived in Pennec et al. (2006) with the components

In addition to Pennec et al. (2006), we also derive the mixed components

needed for anisotropic regularization. The nonlinear anisotropic regularizer can then be deduced from (51) by making the diffusion tensor \(d^{i j}\) dependent on the tensor field \(\varSigma \).

7.1.2 Local Coordinate Representation

In this section, we discuss the relation of our energy formulation (49) to the energy formulation proposed by Gur et al. (2007, 2009). Firstly, the energies in both approaches differ. The authors of Gur et al. (2007, 2009) motivate their energy from a differential geometric point of view, i.e. they consider the tensor valued image as a section of the fibre bundle given by the trivial product space of image and tensor domain, and derive their energy from high energy physics (Polyakov action). We motivate our energy functional as a generalization of the linear anisotropic diffusion scheme for gray-scale images. Explaining the whole idea of Gur et al. (2007, 2009) goes beyond the scope of this paper, and we refer the interested reader to the original work. The point we would like to make here concerns the different representations used in the two approaches. In our energy formulation we use the metric in the form (11), which we call the matrix representation in the following. The approach of Gur et al. uses a matrix form of the metric with vectorized tangent vectors, which will be denoted as the local coordinate representation in the following.

These are two equivalent ways of writing down the same mathematical entity. However, the matrix representation leads to considerable practical simplifications of the variational problem. To see this, we write our energy functional in the local coordinate representation with Einstein summation conventionFootnote 10

where \(g_{\alpha \beta }\) denote the \(\alpha , \beta \)th component of the metric tensor. The variation yields

with the Christoffel symbols \(\Gamma ^{\eta }_{\alpha \beta }\) defined by

From (55) we can infer the \(\alpha \)th component of the functional derivative as

Although of outstanding importance in theory, Christoffel symbols become a nuisance if they have to be computed explicitly. In the case of DTI, Gur et al. (2007, 2009) were faced with 78 nontrivial Christoffel symbols when using the standard matrix chartFootnote 11, resulting in numerical difficulties when computing the functional derivative. As a solution, they proposed a particular chart, given by the so called Iwasawa decomposition of the tensors, which allows them to reduce the number of nonzero Christoffel symbols to 26. If we compare (57) with the \(\alpha \)th component of the functional derivative in matrix form in (51), we see that the term containing the Christoffel symbols is given implicitly in the matrix representation

Consequently, the matrix representation allows us to compute the functional derivative without explicitly computing any Christoffel symbols at all.

7.1.3 Discretization of Differential Operators

So far we assumed the tensor valued image \(\varSigma (x)\) to be defined on a continuous image domain \(\Omega \). In an experimental setting we are confronted with diffusion tensor images defined on a discrete grid \(\mathcal {G}_h\), where h denotes the spacing between the nodes of the regular grid. Consequently, we require discrete approximations of \(\partial _i \varSigma \) and \(\Delta _{i j} \varSigma \). In principle, we could apply finite difference approximations as proposed in Moakher (2005), Gur et al. (2007, 2009) and Zéraï and Moakher (2007a), but such a treatment might lead to unstable regularization schemes (cf. Sect. 10.1.1).

Alternatively, we can rely on intrinsic approximation schemes that make use of the tangent space in order to approximate partial derivatives. In the following we denote with \(T\varSigma _x^{\pm e_j}:=\overrightarrow{\varSigma (x)\varSigma (x \pm h e_j)}\) the tangent vector defined by the logarithmic map (13)

and we denote with \(e_j\) the unit vector pointing in jth direction.

Proposition 1

The second order discrete approximation of the partial derivative in direction j reads

Proposition 2

The second order discrete approximation of \(\Delta _{i i} \varSigma \) reads

where \(e_i\) denotes a unit vector in direction i.
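The stencils of Propositions 1 and 2 only need logarithmic maps at the center tensor. The sketch below assumes the affine-invariant logarithmic map for (13) and tests the stencils on a geodesic \(\varSigma (x)=\exp _{\varSigma _0}(xV)\): there the tangent vectors to the neighbors are exactly \(\pm hV\), so the first-derivative stencil must return V and the second-derivative stencil the zero matrix (a geodesic has no intrinsic acceleration). Grid spacing, data and function names are hypothetical.

```python
import numpy as np

def sym_fun(a, fun):
    """Apply a scalar function to a symmetric matrix through its eigendecomposition."""
    w, v = np.linalg.eigh(a)
    return (v * fun(w)) @ v.T

def exp_map(sigma, lam):
    s_half, s_ihalf = sym_fun(sigma, np.sqrt), sym_fun(sigma, lambda w: 1.0 / np.sqrt(w))
    return s_half @ sym_fun(s_ihalf @ lam @ s_ihalf, np.exp) @ s_half

def log_map(sigma, target):
    s_half, s_ihalf = sym_fun(sigma, np.sqrt), sym_fun(sigma, lambda w: 1.0 / np.sqrt(w))
    return s_half @ sym_fun(s_ihalf @ target @ s_ihalf, np.log) @ s_half

def partial_derivative(s_minus, s_center, s_plus, h):
    """Proposition 1: (T^{+e_j} - T^{-e_j}) / (2h), tangent vectors taken at s_center."""
    return (log_map(s_center, s_plus) - log_map(s_center, s_minus)) / (2.0 * h)

def second_derivative(s_minus, s_center, s_plus, h):
    """Proposition 2: (T^{+e_i} + T^{-e_i}) / h^2."""
    return (log_map(s_center, s_plus) + log_map(s_center, s_minus)) / h**2

rng = np.random.default_rng(10)
m = rng.standard_normal((3, 3))
sigma0 = m @ m.T + 3.0 * np.eye(3)
c = rng.standard_normal((3, 3))
v = 0.5 * (c + c.T)                            # tangent vector defining the geodesic
h = 0.1
s_minus, s_plus = exp_map(sigma0, -h * v), exp_map(sigma0, h * v)
d1 = partial_derivative(s_minus, sigma0, s_plus, h)
d2 = second_derivative(s_minus, sigma0, s_plus, h)
print(np.abs(d1 - v).max(), np.abs(d2).max())
```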

The proofs of Propositions 1 and 2 can be found in (Fillard et al. 2005; Pennec et al. 2006). For the anisotropic regularization schemes we also need the mixed derivative operator \(\Delta _{i j} \varSigma \), provided by Proposition 3.

Proposition 3

The second order discrete approximation of the mixed derivative operator \(\Delta ^{[i j]}:=\Delta _{i j} \varSigma + \Delta _{j i} \varSigma \) in direction \(e_i\) and \(e_j\) is given by

with the unit vectors \(e_n=\frac{e_i+e_j}{\sqrt{2}}\) and \(e_p=\frac{e_i-e_j}{\sqrt{2}}\) pointing towards the diagonally adjacent grid points \(x_n:=\sqrt{2}e_n\) and \(x_p:=\sqrt{2}e_p\).

Proof

We start with expanding the tangent vector in a Taylor series

In a second step we express the derivative in direction n by derivatives along the coordinate axes in i and j direction, \(\partial _{n}=\frac{1}{\sqrt{2}}\partial _i+\frac{1}{\sqrt{2}}\partial _j\), yielding

The sum \(T\varSigma _x^{\Delta x_{n}}:=T\varSigma _x^{x_{n}}+T\varSigma _x^{-x_{n}}\) is a fourth order approximation, as all terms of odd order in h cancel out

Expanding \(T\varSigma _x^{\Delta x_p}:=T\varSigma _{x}^{x_p}+T\varSigma _{x}^{-x_p}\) in the same way yields

By subtracting Eq. (66) from Eq. (65) and dividing by \(2 h^2\), we obtain the second order approximation of the mixed derivative \(\Delta _{i j}\varSigma +\Delta _{j i} \varSigma \), which concludes the proof. \(\square \)
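Proposition 3 can be sketched on a two-dimensional grid as follows, assuming the diagonal neighbors sit at offsets \(\pm h(e_i+e_j)\) and \(\pm h(e_i-e_j)\) and again using the affine-invariant logarithmic map. As a check we use a field of commuting (diagonal) tensors \(\varSigma =\text{ diag }(\exp (\kappa _k xy))\): for such tensors each tangent vector reduces to \(\varSigma \) times the difference of the exponents, so a short calculation shows that the stencil returns \(2\varSigma \,\text{ diag }(\kappa )\), exactly for this bilinear exponent. The test field and names are hypothetical.

```python
import numpy as np

def sym_fun(a, fun):
    """Apply a scalar function to a symmetric matrix through its eigendecomposition."""
    w, v = np.linalg.eigh(a)
    return (v * fun(w)) @ v.T

def log_map(sigma, target):
    s_half, s_ihalf = sym_fun(sigma, np.sqrt), sym_fun(sigma, lambda w: 1.0 / np.sqrt(w))
    return s_half @ sym_fun(s_ihalf @ target @ s_ihalf, np.log) @ s_half

def mixed_derivative(field, x, y, h):
    """Proposition 3: (T^{dx_n} - T^{dx_p}) / (2 h^2) approximates Delta_ij + Delta_ji."""
    c = field(x, y)
    t_n = log_map(c, field(x + h, y + h)) + log_map(c, field(x - h, y - h))
    t_p = log_map(c, field(x + h, y - h)) + log_map(c, field(x - h, y + h))
    return (t_n - t_p) / (2.0 * h**2)

kappa = np.array([1.0, 2.0, -1.0])

def diag_field(x, y):
    """Hypothetical test field of commuting tensors diag(exp(kappa_k * x * y))."""
    return np.diag(np.exp(kappa * x * y))

x0, y0, h = 0.3, -0.2, 0.1
approx = mixed_derivative(diag_field, x0, y0, h)
expected = 2.0 * diag_field(x0, y0) @ np.diag(kappa)
print(np.abs(approx - expected).max())
```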

7.1.4 Analytic Matrix Functions

In addition to its theoretical justification, the affine invariant metric has outperformed the flat Euclidean metric in numerous applications (Gur and Sochen 2005; Moakher 2005; Fletcher and Joshi 2004; Lenglet et al. 2005, 2006; Fillard et al. 2005; Pennec et al. 2006; Zéraï and Moakher 2007a). In order to become an established method in DTI image processing, the involved matrix functions need to be computed efficiently. In this section we present an analytical method for matrix functions \(f:{\mathcal {P}}(3)\rightarrow {\mathcal {P}}(3)\) which allows us to evaluate them much faster than their numerical counterparts. This analytical scheme has been extensively examined in the context of finite element computations (Morman 1986; Hartmann 2003) but has not been applied in the context of DTI estimation so far. An analytical scheme for eigenvector and eigenvalue computation has been proposed in Hasan et al. (2001). However, this scheme can only deal with pairwise different eigenvalues, which are not guaranteed in DTI. For instance, at convergence of the geodesic marching scheme the energy gradient converges to the zero matrix, so we also need to handle the case of three identical eigenvalues (all equal to zero in this case).

In the following we discuss the direct analytical computation of matrix functions by means of eigendyades (Morman 1986). The starting points are the three principal matrix invariants

from which eigenvalues \(\lambda _k\), \(k=1,2,3\) can then analytically be computed using Cardano’s formula (Dunham 1990)

In case of \({\mathcal {I}}_1^2=3 {\mathcal {I}}_2\) all three eigenvalues are identical and equal to \(\lambda _k = {\mathcal {I}}_1/3 \). The case \({\mathcal {I}}_1^2<3 {\mathcal {I}}_2\) does not occur, as can be seen by expressing the matrix invariants \({\mathcal {I}}_1\) and \({\mathcal {I}}_2\) in terms of the eigenvalues: \({\mathcal {I}}_1^2-3 {\mathcal {I}}_2=\frac{1}{2}\left[ (\lambda _1-\lambda _2)^2+(\lambda _2-\lambda _3)^2+(\lambda _1-\lambda _3)^2\right] \ge 0\). For computing the matrix functions f(A) we have to distinguish the cases of (a) three pairwise different eigenvalues, (b) exactly two identical eigenvalues and (c) three identical eigenvalues. In the first case (a) the matrix function in analytical form is given by Morman (1986)

where the eigendyades read

with

Besides the matrix invariants, the analytical matrix function requires only the computation of the squared matrix \(A^2\) and basic algebraic operations. In the second case (b), i.e. \(\lambda _i=\lambda _{j}\ne \lambda _k\), the analytical tensor function reads

Finally, for three identical eigenvalues (c), i.e. \(\lambda =\lambda _i\), \(i=1,2,3\) the analytical matrix function is given by

We evaluate the run-time of our analytical Matlab implementation both on the CPU and on the GPU. As a reference method we implemented a matrix function in C based on the Lapack library.Footnote 12 Table 1 shows the run-times (averaged over \(\varepsilon \)) for different numbers of matrices and confirms a significant speedup of the analytical approach by a factor of 2.8–7.2.

It is well known that the analytical computation of eigenvalues is sensitive to numerical inaccuracies,Footnote 13 especially in the case of similar eigenvalues \(\lambda _i\simeq \lambda _j\) (Hartmann 2003), when the denominator in (69) becomes nearly zero. We examine these numerical inaccuracies by computing the matrix logarithm, followed by the matrix exponential, of the matrices

using the built-in Matlab functions expm and logm as well as our own analytical implementation. As an error measure we compute the Frobenius norm of the difference between the original and the transformed matrix, i.e. error\(=\Vert A_{\varepsilon }-\exp \left( \log \left( A_{\varepsilon }\right) \right) \Vert _{F}\).

Table 2 shows the numerical error for the function \(\exp \left( \log (A_{\varepsilon })\right) \). As expected, we observe a significantly smaller error for the numerical approach. The error of the analytical implementation depends on the transformed matrix; its largest value is of the order of \(10^{-14}\).

One has to keep these differences in mind and check whether the precision of the analytical schemes is sufficient for the problem at hand. For our purpose, i.e. reconstruction and diffusion/regularization of tensor valued images, we observed no increase of the estimation error when applying the analytical matrix valued functions. In order to prevent the denominator in (69) from becoming zero, we add \(\varepsilon =10^{-10}\) to, or subtract it from, one eigenvalue if two of them are closer than \(10^{-10}\).
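The error measure and the eigenvalue safeguard can be illustrated with the sketch below. The test matrices \(A_{\varepsilon }\) are not reproduced in this section, so `A_eps` is a hypothetical stand-in with eigenvalues 1, 1+ε and 2, and `numeric_funm` is an eigendecomposition-based substitute for Matlab's expm/logm. `separate_eigenvalues` is a slight variant of the rule stated above: it lowers the smaller of two close eigenvalues so that neighboring eigenvalues differ by at least ε, which also covers three coincident eigenvalues.

```python
import numpy as np

def numeric_funm(A, f):
    """Reference f(A) for symmetric A via a numerical eigendecomposition."""
    w, V = np.linalg.eigh(A)
    return (V * f(w)) @ V.T

def roundtrip_error(A, funm):
    """Frobenius norm of A - exp(log(A)) for a given matrix-function routine."""
    return np.linalg.norm(A - funm(funm(A, np.log), np.exp), ord="fro")

def separate_eigenvalues(lam, eps=1e-10):
    """Enforce a gap of at least eps between neighboring eigenvalues (sorted descending)."""
    lam = np.array(lam, dtype=float)
    for k in range(len(lam) - 1):
        if lam[k] - lam[k + 1] < eps:
            lam[k + 1] = lam[k] - eps      # shift the smaller eigenvalue down
    return lam

def A_eps(eps):
    """Hypothetical test matrix with eigenvalues 1, 1 + eps and 2 (illustration only)."""
    return np.diag([1.0, 1.0 + eps, 2.0])

for e in (1e-4, 1e-8, 1e-12):
    print(e, roundtrip_error(A_eps(e), numeric_funm))
```

Passing an analytical routine (such as one implementing (69)) as `funm` instead of `numeric_funm` allows the same comparison as in Table 2.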

7.2 Regularization Taxonomy

In this section, we present the regularization taxonomy for tensor valued images. We discuss the energy functionals and derive the functional derivatives as well as their discrete approximations. Where possible, we also derive for each energy functional the corresponding MRF energy function, which is needed later for estimating the posterior covariance (cf. Sect. 9).

7.2.1 Isotropic Regularization

In order to define an edge-preserving regularizer, we need a proper definition of edges within tensor valued images. In the case of gray-scale images, region boundaries have been characterized by significant changes in the intensity, indicated by a sufficiently large gradient norm \(|\nabla f(x)|\) (e.g. Canny 1986). In analogy to gray-scale images, we characterize region boundaries of tensor valued images by means of the norm of the spatial gradient \(||\nabla \varSigma (x)||_{\varSigma (x)}=\sqrt{\sum _{j=1}^m \left\langle \partial _j \varSigma (x), \partial _j \varSigma (x) \right\rangle _{\varSigma (x)}}\) of the image manifold. Here, the feature space \({\mathcal {P}}(n)\) is a multidimensional manifold, so region boundaries may originate from changes in different degrees of freedom of the image features. Nevertheless, a change in any particular degree of freedom in \({\mathcal {P}}(n)\) yields a change in the gradient magnitude.

Example

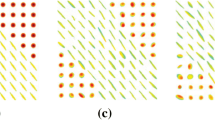

Different types of image boundaries occurring in tensor valued images are illustrated with a synthetically generated tensor valued image \(\varSigma (x): {\mathbb {R}}^2\rightarrow {\mathcal {P}}(2)\) defined on a 2D image domain (see Fig. 4). The feature space, i.e. the space of image values of the diffusion tensor image, has three degrees of freedom, as a symmetric \(2 \times 2\) matrix is uniquely determined by its two eigenvalues \(\lambda _j\) and the orientation \(\alpha \) of its eigenvectors. Figure 4 illustrates some possible boundaries due to changes in either one of the eigenvalues or the eigenvector orientation. In general, boundaries originate from a combination of changes in all degrees of freedom.
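A small field in the spirit of Fig. 4 can be generated by composing each tensor from its eigenvalues and orientation, \(\varSigma = R(\alpha )\,\text{diag}(\lambda _1,\lambda _2)\,R(\alpha )^T\). The sketch also evaluates the gradient norm \(||\nabla \varSigma (x)||_{\varSigma (x)}\) with forward differences, assuming the affine-invariant metric \(\langle A,B\rangle _{\varSigma }=\text{tr}(\varSigma ^{-1}A\varSigma ^{-1}B)\). The image size, the boundary positions and all function names are hypothetical.

```python
import numpy as np

def tensor_from_eig(l1, l2, alpha):
    """Symmetric 2x2 tensor with eigenvalues l1, l2; first eigenvector at angle alpha."""
    c, s = np.cos(alpha), np.sin(alpha)
    R = np.array([[c, -s], [s, c]])
    return R @ np.diag([l1, l2]) @ R.T

def synthetic_field(n=16):
    """Hypothetical n x n field: eigenvalue boundary at x = n/2 and an
    orientation boundary at y = n/2 inside the right half."""
    field = np.empty((n, n, 2, 2))
    for y in range(n):
        for x in range(n):
            l1 = 1.0 if x < n // 2 else 3.0                                # eigenvalue change
            alpha = np.pi / 4 if (x >= n // 2 and y >= n // 2) else 0.0    # orientation change
            field[y, x] = tensor_from_eig(l1, 1.0, alpha)
    return field

def gradient_norm(field):
    """||grad Sigma||_Sigma per pixel (forward differences, affine-invariant metric)."""
    Sinv = np.linalg.inv(field)
    sq = np.zeros(field.shape[:2])
    for axis in (0, 1):
        last = np.take(field, [-1], axis=axis)
        d = np.diff(field, axis=axis, append=last)     # zero difference at the border
        M = Sinv @ d                                   # Sigma^{-1} d_j Sigma
        sq += np.einsum("...ij,...ji->...", M, M)      # tr((Sigma^{-1} d_j Sigma)^2)
    return np.sqrt(sq)

g = gradient_norm(synthetic_field())
```

The gradient norm vanishes inside homogeneous regions and is positive at both the eigenvalue boundary and the pure orientation boundary, which is the behavior the edge characterization relies on.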

Variational formulation In Fillard et al. (2005) an energy functional for nonlinear isotropic regularization has been defined by

with the corresponding functional derivative

They discretize (77) by approximating \(\Delta _{i i}\varSigma \) and \(\partial _i \varSigma \) using Propositions I (60) and II (61), respectively, and approximate the partial derivatives \(\partial _i \phi '\) by standard finite differences. As we will show in Sect. 10.1.1, such a scheme leads to an unstable diffusion process. Furthermore, according to Pennec et al. (2006), it is considered ineffective.

We do not have a stability proof for numerical regularization schemes for diffusion tensor images. However, we require a numerical scheme to be consistent with a stable scheme in a special Riemannian space, the flat Euclidean space, i.e. to converge to its stable Euclidean counterpart in the Euclidean limit.

Such a numerical scheme can be derived from (77) via basic algebraic manipulations and by approximating \(\delta E_p(x_s)=\nabla \!_{\varSigma _{s}}\!E_p(x_s) + O(h)\) with

In the Euclidean limit, this scheme converges to the vector-valued version of a well-known scheme (cf. Weickert (1999b), p. 436), which can easily be proven by setting \(T\varSigma _{s}^{\pm e_{i}}\approx \pm \left( \varSigma _{x_s \pm h e_i} - \varSigma _{s} \right) \).
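Because the discrete scheme (78) is not reproduced in this section, the following is only one possible scheme with the Euclidean limit described above, not necessarily identical to the authors' scheme. It replaces the forward and backward differences of the Euclidean scheme by logarithmic maps \(\log _{\varSigma _s}(\varSigma _{x_s\pm he_i})\) and averages \(\phi '\) to half-grid positions. The affine-invariant metric, periodic boundaries (via `np.roll`) and the hypothetical Perona-Malik-type choice \(\phi '(s^2)=1/(1+s^2/\kappa ^2)\) are assumptions made for illustration.

```python
import numpy as np

def _sym_apply(S, f):
    """Apply a scalar function to the eigenvalues of (stacked) symmetric matrices."""
    w, V = np.linalg.eigh(S)
    return (V * f(w)[..., None, :]) @ np.swapaxes(V, -1, -2)

def log_map(S, T):
    """Affine-invariant logarithmic map log_S(T) (assumed metric)."""
    Sh = _sym_apply(S, np.sqrt)
    Sih = _sym_apply(S, lambda w: 1.0 / np.sqrt(w))
    return Sh @ _sym_apply(Sih @ T @ Sih, np.log) @ Sh

def isotropic_gradient(field, kappa=None, h=1.0):
    """Discrete energy gradient for a 2D field (H, W, n, n) of SPD tensors."""
    # log maps to the four neighbors x_s +/- h e_i (periodic boundaries)
    logs = {(axis, step): log_map(field, np.roll(field, -step, axis=axis))
            for axis in (0, 1) for step in (+1, -1)}
    if kappa is None:                      # linear case: phi' = 1
        dphi = np.ones(field.shape[:2])
    else:                                  # hypothetical phi'(s^2) = 1 / (1 + s^2 / kappa^2)
        Sinv = np.linalg.inv(field)
        sq = np.zeros(field.shape[:2])
        for axis in (0, 1):
            M = Sinv @ (logs[(axis, +1)] - logs[(axis, -1)]) / (2.0 * h)
            sq += np.einsum("...ij,...ji->...", M, M)   # squared norm at x_s
        dphi = 1.0 / (1.0 + sq / kappa ** 2)
    grad = np.zeros_like(field)
    for (axis, step), L in logs.items():
        g = 0.5 * (dphi + np.roll(dphi, -step, axis=axis))   # phi' at x_s +/- h e_i / 2
        grad -= g[..., None, None] * L / h ** 2
    return grad
```

Replacing each `log_map(S, T)` by the plain difference `T - S` turns this into the familiar Euclidean scheme, which is the consistency property required above.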

MRF formulation The gradient of the MRF energy

with respect to the tensor at position \(x_s\) leads directly to (78).

7.2.2 Anisotropic Regularization

Variational formulation Similarly to the case of gray-scale images (cf. Weickert 1996), we design the diffusion tensor \(d^{i j}\) at position x by analyzing the structure of the image \(\varSigma \) in a local neighborhood V around x. Let us denote by \(\nabla _{\perp } \varSigma (x)=(\partial _1 \varSigma (x), \ldots ,\partial _m \varSigma (x))^T\) the vector containing the partial derivatives along all m spatial coordinate directions, and let a denote a unit vector in \({\mathbb {R}}^m\), such that directional derivatives in direction a can be written as \(\partial _{a}\varSigma (x) =a^{T} \nabla _{\perp } \varSigma (x)\). The direction \(a_{\min }\) of least variation of the image values around position \(x_m\) can then be inferred by minimizing the local energy, i.e. \(a_{\min }= \arg \min _{a} E(a)\) with

where \(w_m(x)\) denotes a weighting mask around the position \(x_m\). In the last term of (81), we defined the structure tensor J by its components

The directions of least and largest variation correspond to the minimum and maximum of the energy E(a) and can be deduced from a spectral decomposition of the structure tensor: since E(a) is a quadratic form in the unit vector a, they are given by the eigenvectors of J belonging to its smallest and largest eigenvalue, respectively. The diffusion tensor \(D=\underline{\psi }(J)\) is finally obtained by applying a (matrix valued) influence function \(\underline{\psi }\) to the structure tensor J, i.e. a scalar valued influence function \(\psi \) is applied to the eigenvalues of J.
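Since the component definition (82) is not reproduced here, the sketch below assumes the natural choice \(J^{kl}=w\ast \langle \partial _k\varSigma ,\partial _l\varSigma \rangle _{\varSigma }\), for which \(E(a)=a^TJa\). Central differences, the affine-invariant inner product, a box window for w, periodic smoothing boundaries and the influence function \(\psi (s)=1/(1+s/\kappa ^2)\) are all illustrative assumptions; D is then obtained by applying \(\psi \) to the eigenvalues of J.

```python
import numpy as np

def structure_tensor(field, radius=1):
    """J^{kl} = w * <d_k Sigma, d_l Sigma>_Sigma for a 2D field (H, W, n, n)."""
    Sinv = np.linalg.inv(field)
    d = [np.gradient(field, axis=a) for a in (0, 1)]   # derivatives along array axes 0, 1
    H, W = field.shape[:2]
    J = np.zeros((H, W, 2, 2))
    for k in range(2):
        for l in range(2):
            # <A, B>_Sigma = tr(Sigma^{-1} A Sigma^{-1} B)
            J[..., k, l] = np.einsum("...ij,...ji->...", Sinv @ d[k], Sinv @ d[l])
    # weighting mask w: box filter of the given radius (periodic boundaries for brevity)
    Js = np.zeros_like(J)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            Js += np.roll(J, (dy, dx), axis=(0, 1))
    return Js / (2 * radius + 1) ** 2

def diffusion_tensor(J, kappa=1.0):
    """D = psi(J): apply a scalar influence function to the eigenvalues of J."""
    w, V = np.linalg.eigh(J)
    psi = 1.0 / (1.0 + w / kappa ** 2)                  # hypothetical psi
    return (V * psi[..., None, :]) @ np.swapaxes(V, -1, -2)
```

For an image that varies along only one spatial axis, J has a single nonzero eigenvalue, so D keeps full diffusivity along the homogeneous direction and reduces it across the variation.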

As for the gray-scale counterpart (Weickert 1996), nonlinear anisotropic diffusion filtering schemes with the diffusion tensor D defined above cannot be derived from a corresponding energy formulation (cf. Scharr et al. 2003). However, we can define such a nonlinear diffusion scheme as a generalization of the corresponding linear scheme by making the components \(d^{i j}\) of the diffusion tensor dependent on the evolving diffusion tensor image.

In order to approximate (51) on a discrete grid, we split the functional derivative \(\delta E_{p}(x)=\delta E^1_{p}(x)+\delta E^2_{p}(x)\) into a part \(\delta E^1_{p}(x):=\delta E_{p;i=j}(x)\) containing the diagonal terms of the diffusion tensor and a part \(\delta E^2_{p}(x):=\delta E_{p;i j \in \chi }(x)\) with \(\chi :=\{i,j: i \ne j \text{ and } \, i,j \in \{1,\ldots ,m\} \}\), containing the off-diagonal terms of the diffusion tensor. We observe that the terms with \(i=j\) can directly be deduced from the corresponding derivation for the isotropic regularizer (cf. Eq. (78)) by exchanging \(\phi '\) with \(d^{i i}\). In addition, we have to approximate the mixed derivative terms

An approximation schemeFootnote 14 that is consistent with its Euclidean limit is given by \(\delta E^2_{p}(x_s)=\nabla \!_{\varSigma _{s}}\!E_{p}^2(x_s) + O(h)\) with

MRF formulation In order to find the MRF energy whose gradient leads to the discretely approximated linearFootnote 15 anisotropic diffusion scheme, we first use the fact that the condition equation is the sum of terms involving second derivatives (\(i=j\)) and terms involving mixed derivatives (\(i \ne j\)). Owing to the linearity of the gradient, we can model the energies of these terms separately, denoted by \(E_{iso}\) for the terms with \(i=j\) and \(E_{an}\) for the terms with \(i \ne j\), with \(E( \underline{\varSigma }) = E_{iso}( \underline{\varSigma })+E_{an}( \underline{\varSigma })\). The energy \(E_{iso}( \underline{\varSigma })\) is obtained from the MRF energy of the linear isotropic regularizer

by exchanging \(\phi '(x_k)\) with \(d^{i i}(x_k)\). For the anisotropic part it is not difficult to see that the gradient of the energy

leads to (84).

8 Point Estimation

We consider two point estimates: diffusion filtering of given diffusion tensor images and reconstruction of diffusion tensor images from MRI data.

8.1 Diffusion Filtering

Diffusion filtering can be applied for denoising or interpolating observed diffusion tensor images. To this end, the observed image \(\underline{\varSigma }^0\) is evolved by means of the geodesic marching scheme (cf. Table 3): In a first step, the gradient

is computed by one of the discrete schemes presented in Sect. 7.2. In a second step, we map the negative gradient (scaled by some time step dt) onto the manifold by means of the exponential map. This process is repeated until a maximum number of iteration steps has been reached. Table 3 summarizes the diffusion filtering algorithm in pseudo-code.

8.2 Image Reconstruction

In addition to isotropic and anisotropic diffusion filtering, we consider the MAP estimation of diffusion tensor images from MRI data, i.e. their estimation by minimizing the posterior energy

Due to page limitations, we restrict the MAP estimate to isotropic regularization terms. The posterior energy \(E(\underline{\varSigma })\) is proportional to a linear combination of the likelihood energy \(E_L(\underline{\varSigma })\) and the prior energy \(E_p(\underline{\varSigma })\), where the regularization parameter \(\lambda \) balances both terms. In a first step, the diffusion tensor image \(\underline{\varSigma }^{\tau =0}\) is initialized, e.g. by isotropic diffusion tensors. Next, the energy gradient \(\left. \nabla \!_{\underline{\varSigma }}\!E \right| _{\underline{\varSigma }=\underline{\varSigma }^{\tau =0}}\) is evaluated at the initial diffusion tensor image \(\underline{\varSigma }^{\tau =0}\). The posterior energy gradient is the sum of the likelihood (sampling) energy gradient \(\nabla \!_{\underline{\varSigma }}\!E_L\), which is based on either the Gaussian noise model (45) or the Rician noise model (43), and the prior energy gradient \(\nabla \!_{\underline{\varSigma }}\!E_p\) (87). As the energy gradient points in the direction of steepest ascent, we can minimize the energy by moving in the opposite direction. To this end, we map the negative gradient, scaled by some small ‘time’ step dt, back onto the manifold by means of the exponential map. This process is repeated until convergence, i.e. until the change in the evolved diffusion tensor image falls below some threshold. Table 4 summarizes the geodesic marching based MAP estimator in pseudo-code.
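The MAP loop of Table 4 can be sketched generically, with the likelihood and prior gradients passed in as functions. The Gaussian (45) and Rician (43) likelihood gradients are not reproduced in this section, so the usage example relies on a hypothetical stand-in likelihood \(E_L=\frac{1}{2}\sum _s d^2(\varSigma _s,\varSigma ^{obs}_s)\), whose Riemannian gradient under the assumed affine-invariant metric is \(-\log _{\varSigma _s}(\varSigma ^{obs}_s)\). The threshold `tol` on the maximal entry-wise change implements the convergence criterion; all names are illustrative.

```python
import numpy as np

def _sym_apply(S, f):
    """Apply a scalar function to the eigenvalues of (stacked) symmetric matrices."""
    w, V = np.linalg.eigh(S)
    return (V * f(w)[..., None, :]) @ np.swapaxes(V, -1, -2)

def _sqrt_isqrt(S):
    return _sym_apply(S, np.sqrt), _sym_apply(S, lambda w: 1.0 / np.sqrt(w))

def log_map(S, T):
    Sh, Sih = _sqrt_isqrt(S)
    return Sh @ _sym_apply(Sih @ T @ Sih, np.log) @ Sh

def exp_map(S, V):
    Sh, Sih = _sqrt_isqrt(S)
    return Sh @ _sym_apply(Sih @ V @ Sih, np.exp) @ Sh

def prior_gradient(field):
    """Linear isotropic prior gradient with periodic boundaries (example prior)."""
    return -sum(log_map(field, np.roll(field, -s, axis=a)) for a in (0, 1) for s in (1, -1))

def map_estimate(grad_likelihood, grad_prior, sigma_init, lam=0.1, dt=0.2,
                 tol=1e-8, max_iter=1000):
    """Geodesic marching MAP estimate; stops when the maximal change drops below tol."""
    field = sigma_init.copy()
    for it in range(1, max_iter + 1):
        grad = grad_likelihood(field) + lam * grad_prior(field)
        new = exp_map(field, -dt * grad)               # step along the negative gradient
        new = 0.5 * (new + np.swapaxes(new, -1, -2))
        change = np.max(np.abs(new - field))
        field = new
        if change < tol:
            break
    return field, it

obs = np.broadcast_to(np.diag([1.0, 2.0, 3.0]), (4, 4, 3, 3)).copy()
init = np.broadcast_to(np.eye(3), (4, 4, 3, 3)).copy()     # isotropic initialization
est, n_it = map_estimate(lambda F: -log_map(F, obs), prior_gradient, init)
```

With a spatially constant observation the prior gradient vanishes, so the estimate converges to the observed tensors.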

9 Covariance Estimation

In the case of image reconstruction (cf. Sect. 8.2), we can use not only the maximum of the posterior pdf but also its width as a reliability measure of the point estimate. We apply the Laplace approximation to the posterior pdf to approximate the posterior covariance. To this end, we expand the negative log posterior pdf \(E(\underline{\varSigma })=-\log (p(\underline{\varSigma }|\underline{S},\hat{\underline{A}}_0,{\hat{\sigma }}))\) in a second order Taylor series around its minimum \(\underline{\varSigma }_{\min }\)
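In its textbook form, and written in tangent-space coordinates a of \(\underline{\varSigma }\) at the minimum (logarithmic map followed by the projection v of Sect. 4.1), the Laplace approximation reads as follows; this is a generic sketch rather than the paper's own expansion. Because the gradient vanishes at \(\underline{\varSigma }_{\min }\), only the quadratic term remains, and the posterior becomes a Gaussian in a whose covariance is the inverse Hessian (up to the Jacobian factor discussed at the end of this section).

```latex
E(\underline{\varSigma }) \approx E(\underline{\varSigma }_{\min })
  + \tfrac{1}{2}\, a^{T} H\, a ,
\qquad a := v\!\left( \log _{\underline{\varSigma }_{\min }}\!\left( \underline{\varSigma } \right) \right),
\\[4pt]
p(\underline{\varSigma }|\underline{S},\hat{\underline{A}}_0,{\hat{\sigma }})
  \;\propto\; \exp\!\left( -\tfrac{1}{2}\, a^{T} H\, a \right)
\quad\Longrightarrow\quad C \approx H^{-1} .
```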

which requires the computation of the Hessian. On general manifolds, the Hessian H of a function \(f:{\mathcal {M}}\rightarrow {\mathbb {R}}\) in local coordinates reads (cf. Pennec 2006)

which requires the cumbersome evaluation of the Christoffel symbols \(\Gamma _{i j}^k\). For our Riemannian manifold \({\mathcal {P}}^{N}\!(n)\), the exponential/logarithmic map allows us to define an exponential chart \(\varphi := v \circ \log _{\underline{\varSigma }_a}\!\left( \underline{\varSigma } \right) \), which maps each point \(\underline{\varSigma }\) of the manifold first to the tangent space at \(\underline{\varSigma }_a\) and subsequently onto an element of the Euclidean space \({\mathbb {R}}^{\frac{n(n+1)}{2}}\) by means of the projection map \(v: Sym(n,{\mathbb {R}}) \rightarrow {\mathbb {R}}^{\frac{n(n+1)}{2}}\), \(A \mapsto a\), defined in Sect. 4.1.

In the exponential chart \(\varphi \), all Christoffel symbols become zero, i.e. \(\Gamma _{i j}^k=0\). To see this, recall (cf. Sect. 3) that geodesics \(\gamma (t)\) between \(\underline{\varSigma }_a\) and \(\underline{\varSigma }_b\) are mapped onto straight lines by the exponential chart, i.e. \(\varphi \circ \gamma (t)=a t\) with \(a := v \circ \overrightarrow{\underline{\varSigma }_a\!\underline{\varSigma }_b} \in {\mathbb {R}}^{\frac{n(n+1)}{2}}\). Each geodesic is thus represented as a curve \(\underline{\gamma }(t):=\varphi \circ \gamma (t)=a t\) in the Euclidean space \({\mathbb {R}}^{\frac{n(n+1)}{2}}\). On the other hand, a geodesic in local coordinates needs to fulfill the geodesic equations (Helgason 1978)

which reduce to \(\Gamma _{i j}^k a_i a_j =0\) in our exponential chart, since \(\ddot{\underline{\gamma }}(t)=0\). As this must hold for every direction a and the Christoffel symbols are symmetric in i and j, they vanish at the base point \(\underline{\varSigma }_a\) of the chart; choosing \(\underline{\varSigma }_a=\underline{\varSigma }_{\min }\), the Hessian components at the minimum thus reduce to \(H_{i j}(f)=\partial _i \partial _j f\) evaluated in the exponential chart. For an uncluttered notation, we define \(P_j:=\varSigma _{\min }(x_j)\), \(A_j:= \overrightarrow{P_j \varSigma (x_j)}\), \(A_{s p q}:=(A_s)_{p q}\), \(J^{p q}_s:=\frac{\partial A_s}{\partial A_{s p q}}\). A rather lengthy but straightforward computation of the second derivative of the isotropic regularization energy yields

The Hessian of the nonlinear isotropic energy can directly be deduced from (78) as

where we introduce the abbreviations \(a_{s t}:= a_t(x_s)\) as well as \(x_{s}^{+j}:=x_{s}+he_j\) and \(x_{s}^{-j}:=x_{s}-he_j\). Finally, we estimate the covariance matrix by means of a Gauss Markov random sampling algorithm. To this end, we consider (29) as the expectation

of the outer product of the tangent vectors, weighted by the Jacobian factor \(\sqrt{|G_x(a)|}\), with respect to the pdf in the tangent space at x. The inverse of H is estimated via Gauss Markov Monte Carlo sampling. Let \(p_k \sim \mathcal {N}(0,I)\), \(k\in \{1,\ldots ,K\}\), be K samples from the zero mean Gaussian distribution with identity covariance matrix I, and let \(H=LL^T\) be the Cholesky decomposition of H. The solutions \(q_k\) of the linear equation systems \(L^{T} q_k=p_k\) then have the desired covariance matrix \(C(q)=C(L^{-T} p)=L^{-T}L^{-1}=\left( L L^T \right) ^{-1}=H^{-1} \), and thus \(C \approx \sum _k \sqrt{|G_x(a_k)|} q_k q_{k}^{T}/K \). In contrast to the case of gray-scale images, here the empirical mean has to be weighted by the Jacobian factor \(\sqrt{|G_x(a_k)|}\).
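The sampling procedure can be sketched with NumPy as below. The callable `jacobian`, standing for \(a\mapsto \sqrt{|G_x(a)|}\), is a hypothetical placeholder because \(G_x\) is not spelled out in this section; with the default weight 1, the estimate reduces to the Euclidean case. A general linear solver is used for brevity, although a triangular solver would exploit the structure of \(L^T\).

```python
import numpy as np

def sample_covariance(H, K=10000, jacobian=None, rng=None):
    """Estimate C = H^{-1} from K samples q_k = L^{-T} p_k, where H = L L^T.

    jacobian: optional callable a -> sqrt(|G_x(a)|) weighting each sample (default 1).
    """
    rng = np.random.default_rng() if rng is None else rng
    L = np.linalg.cholesky(H)                   # lower triangular, H = L L^T
    P = rng.standard_normal((H.shape[0], K))    # columns p_k ~ N(0, I)
    Q = np.linalg.solve(L.T, P)                 # solves L^T q_k = p_k for all k at once
    if jacobian is None:
        w = np.ones(K)
    else:
        w = np.array([jacobian(Q[:, k]) for k in range(K)])
    return (Q * w) @ Q.T / K                    # sum_k w_k q_k q_k^T / K
```

For a well-conditioned H and large K, the estimate approaches \(H^{-1}\) with a Monte Carlo error of order \(1/\sqrt{K}\).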

10 Experiments