Abstract

Online reading for academic purposes is a complex and challenging activity that involves analysing task requirements, assessing information needs, accessing relevant contents, and evaluating the relevance and reliability of information given the task at hand. The present study implemented and tested an analytical approach to strategy training that combined a detailed, step-by-step presentation of each strategy with the integration of various strategies across modules and practice tasks. One hundred sixty-seven university students were assigned to either a treatment or a control condition. The training program was implemented as part of a digital literacy course. Instructors received background information and instructional materials prior to the beginning of the term. The intervention improved students’ performance on a set of search and evaluation tasks representative of the target skills, although to varying extents. The impact was higher for evaluation than for search skills, in terms of accuracy and quality of students’ justifications. The data provide initial evidence that an analytical approach may foster university students’ use of advanced reading strategies in the context of online reading. Implications for instruction and future research are discussed.

Introduction

From their first semester on, university students receive assignments that require them to read multiple documents for a broad range of purposes, from understanding basic concepts and techniques to getting to know reference studies to revisiting classical works. Online reading for academic purposes involves sophisticated strategies, well beyond decoding printed words and comprehending the meaning of a text. For instance, students need to identify relevant texts in relation to their study purpose, access relevant information within those texts and assess the necessity, sufficiency, and quality of the information (Brand-Gruwel et al., 2005; Britt et al., 2018; Hartman et al., 2018). Those strategies are often assumed to be familiar to students and they are seldom taught as part of undergraduate programs (Drake & Reid, 2018; Ziv & Bene, 2022). Although reading strategies instruction has a long history of research, most of the strategy instruction research was carried out with populations younger than university students and involved strategies that are not directly applicable to online reading (cf. Davis, 2013; National Institute of Child Health and Human Development [NICHD], 2000). Research on the pedagogy of online reading strategies started in the mid-2000s (Brand-Gruwel & Van Strien, 2018; Kammerer & Brand-Gruwel, 2020). Several studies indicate that implementing instruction on online reading strategies at the university level remains a difficult task for a number of reasons. First, university curricula typically do not include teaching units for those strategies (Argelagós et al., 2022; Ziv & Bene, 2022). The study by Ziv and Bene (2022) found that strategy instruction was limited to checklists and general guidelines on the websites of several leading colleges and universities in the United States.
Second, instructors in the different disciplines are not prepared to deliver strategy instruction, because they themselves were not trained in these strategies (Esteve-Mon et al., 2020; Voogt et al., 2013). Both Esteve-Mon et al. (2020) and Voogt et al. (2013) point out that opportunities for teacher training are more common at pre-university levels. Wineburg and McGrew (2019) showed that university teachers do not necessarily possess the professional skills of fact-checkers. Moreover, most of the strategy training interventions conducted in the context of research studies have been led by researchers, which limits the generalization of results to regular class settings (Brante & Strømsø, 2018; Capin et al., 2022; Kammerer & Brand-Gruwel, 2020). Third, online reading strategies instruction often focuses on a single type of strategy (e.g., sourcing; Kammerer & Brand-Gruwel, 2020). As McCrudden et al. (2022) point out, “current instructional programs do not fully consider the interrelated nature of Internet reading competencies” (p. 26). The present study addresses these challenges by implementing and testing a teacher-led intervention that combines several reading strategies in the context of an interdisciplinary course of the university curriculum.

Students’ challenges with online reading strategies

Online reading for academic purposes is a complex, document-based activity that involves several steps and strategies. According to the Multiple-Document Task-based Relevance Assessment and Content Extraction (MD-TRACE) theoretical model, document-based activities include five core steps (Rouet & Britt, 2011). In step 1 (Creating and updating a task model), readers construct a mental model of the task requirements. In step 2 (Assessing information needs) readers assess and reassess their information needs by comparing their prior knowledge, or initial information, with the task model. Step 3 is sub-divided into three steps: step 3a (Assessing item relevance) consists in assessing the relevance and reliability of items (documents or information within them); step 3b (Process text contents) involves the extraction and integration of information from a single document; step 3c (Create/update a documents model) consists of combining information acquired from one document with information from other documents. In step 4 (Creating/updating a task product), readers use documents’ information to construct an answer to the task. Finally, in step 5 (Assessing whether the product meets the task goals) readers assess the extent to which their product satisfies the task goals. All of these steps unfold in a highly interactive manner during task completion, with readers going back-and-forth between steps to update the task or product model as needed. At each step, readers need to make decisions on which strategies are most appropriate to achieve task goals. Strategies include actions (e.g., type a query in a search engine), cognitive processes (e.g., scan a document to locate information) and metacognitive processes (e.g., monitor contradictions between documents). They can be broadly defined as “means for achieving the goal(s) of a task” (Britt et al., 2022, p. 368). 
The online context requires strategies common to the reading of printed documents, but also specific strategies linked to the medium (e.g., searching by browsing; Zhang & Salvendi, 2001) and the production-distribution of information on the Internet (e.g., making predictive judgements before visiting unknown websites; Rieh, 2002), which pose significant challenges for undergraduate students (Britt et al., 2018). Moreover, online reading for academic purposes differs from leisure time reading in that it requires the selection of specialized sources; understanding text on unfamiliar topics; and maintaining a high level of attention during reading so as not to miss important information (List et al., 2016a).

One recurrent observation is that students do not analyze task demands deeply enough before searching for academic information online (Brand-Gruwel et al., 2005; Salmerón et al., 2018). For instance, they spend little time reading and trying to understand research questions (Llorens & Cerdán, 2012; Schoor et al., 2021). Analyzing question demands before searching is helpful to monitor inconsistencies within questions (Vidal-Abarca et al., 2010), discard distracting information (Cerdán et al., 2011) and set standards of coherence for reading (Van Den Broek et al., 1995). One strategy that students can use to analyze question demands is to identify the interrogative and thematic words in the question (Barsky & Bar-Ilan, 2012). Interrogative words (e.g., who, when, why…) inform readers about the type of information requested (e.g., a name, a date, a cause…), whereas thematic words (nouns) allow for the identification of the topic and provide keywords for searching (Potocki et al., 2023). Another useful strategy is to paraphrase questions in order to highlight the cognitive processes required to correctly answer the question (e.g., through the use of action verbs) and key information to search for (e.g., keywords). Cerdán et al. (2019) observed that providing paraphrases helps less-skilled readers (eighth graders) find answers to questions in texts. These strategies seemingly help readers build a “task model” before reading and searching for information, thus supporting step 1 of the MD-TRACE model (Rouet & Britt, 2011). Inadequate task models have consequences for the subsequent processes involved in online document use. Students who do not understand the task may have difficulties defining appropriate keywords, formulating search queries, and selecting relevant items in a search results page (Barsky & Bar-Ilan, 2012; Walraven et al., 2008).
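
The question-analysis strategy described above can be illustrated with a minimal sketch. It is not part of the studies cited. Only the pairs who/name, when/date and why/cause come from the text; the other mappings, the stop list and the function name `analyze_question` are hypothetical, and the stop list is only a crude stand-in for identifying nouns.

```python
import re

# Mapping from interrogative words to the type of information requested.
# "who", "when" and "why" follow the examples in the text; the remaining
# entries are illustrative assumptions.
INTERROGATIVE_TYPES = {
    "who": "a name",
    "when": "a date",
    "why": "a cause",
    "where": "a place",                  # assumption
    "what": "a definition or concept",   # assumption
    "how": "a process or manner",        # assumption
}

# Hypothetical stop list: a rough proxy for filtering out non-thematic words.
STOP_WORDS = {"is", "are", "does", "do", "did", "the", "a", "an",
              "of", "and", "it", "in", "to"}


def analyze_question(question):
    """Return (types of information requested, candidate thematic words)."""
    tokens = re.findall(r"[a-z]+", question.lower())
    info_types = []
    for token in tokens:
        info_type = INTERROGATIVE_TYPES.get(token)
        if info_type is not None and info_type not in info_types:
            info_types.append(info_type)
    thematic = [t for t in tokens
                if t not in INTERROGATIVE_TYPES and t not in STOP_WORDS]
    return info_types, thematic
```

For a made-up question such as "Why did the Roman Empire decline?", this sketch returns `["a cause"]` as the type of information requested and `["roman", "empire", "decline"]` as candidate keywords for a search query.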

Yet another challenge for students is to apply efficient strategies to locate information within digital documents. In a review of the literature, Walraven et al. (2008) found that teenagers and young adults scan online documents superficially, judging information relevance on the basis of expected, not actual information. Latini et al. (2021) observed that undergraduate students with low prior knowledge on the topic failed to combine surface and deep strategies to find relevant information in a digital text. Naumann et al. (2007) found that undergraduate students with low reading skills could only find relevant information in a hypertext with a high amount of signaling cues. One strategy that students can apply to find relevant information, quickly and efficiently, in digital documents is to use metatextual cues to search. Metatextual cues are textual and graphical devices that inform readers about the text structure and content of a document (Rouet, 2006). There is ample evidence that metatextual cues help readers find relevant information in online documents (Lemarié et al., 2012; Salmerón et al., 2017, 2018). For instance, León et al. (2019) found that instructing students to pay attention to metatextual cues (i.e., a “why” question that preceded relevant information in a text) increases the number of fixations and regressions to relevant portions of the text, as well as the amount of relevant information included in an oral summary after reading. Using metatextual cues to search helps readers with the assessment and processing of information, thus supporting steps 2 and 3a of the MD-TRACE model.

Finally, the evaluation of online information is a major challenge for students in academic tasks. Research findings suggest that undergraduate students do not spontaneously evaluate information quality and source reliability when searching for information for academic purposes (Brand-Gruwel et al., 2017; McGrew et al., 2018; Saux et al., 2021). When they evaluate information in web sites, many students fail to apply relevant criteria (Brand-Gruwel et al., 2017; List et al., 2016a). Information quality can be defined as the usefulness, goodness, accuracy and importance of a piece of information (Rieh, 2002). One of the most traditional indicators of information quality is the presence of an editorial process of information validation prior to publication (Rieh & Danielson, 2007). Many websites and services completely skip such a process; others, such as Wikipedia, have developed alternative mechanisms to ensure, or at least encourage, validation post-publication (Yaari et al., 2011). Post-publication validation is better than “no validation”, but it does not prevent readers from encountering unreliable information that has not yet been reviewed by editors or other users. Moreover, information validation can be done internally (by the editorial team) and/or externally (by reviewers outside the medium). Combining internal and external validation offers a higher level of information quality control. Therefore, one strategy students can use to evaluate information quality is to assess the presence and type of editorial filters in the websites they encounter when searching for information for an academic assignment. Another useful strategy is to corroborate online information with reliable documents in order to evaluate the evidence presented for claims in online documents. Such strategies support steps 3a (assessing item relevance) and 3c (creating/updating a documents model) in the MD-TRACE model.

Source reliability refers to a number of parameters on the origin of information (Rieh, 2002). Two major cues for source reliability are authors’ competence and authors’ benevolence. Author competence can be defined as the extent to which an author has professional expertise and training on the topic at hand (Pérez et al., 2018). Author benevolence can be defined as an author’s intention to provide the best possible information (Stadtler & Bromme, 2014). If an author has a bias, supporting or opposing ideas in an unfair way, or a conflict of interest, being caught between moral or professional obligations and personal interests, contrary to these obligations, her/his benevolence must be questioned. Research shows that students do not pay enough attention to the characteristics of sources and source reliability when reading online (Gasser et al., 2012; Kammerer & Brand-Gruwel, 2020; McGrew et al., 2018). With misinformation rapidly spreading online, the evaluation of source reliability has become a central issue for online reading education (McCrudden et al., 2022). One strategy that students can use to evaluate source reliability is to read “laterally” by opening tabs in their browser to search for information about authors outside the original websites (Wineburg & McGrew, 2019). Another useful strategy is to apply relevant evaluation criteria to assess authors’ competence and benevolence, drawing on authors’ professional experience and ties to specific interests (Londra & Saux, 2023; Pérez et al., 2018). These strategies support steps 3a and 3c in the MD-TRACE model, but sourcing can also be applied in other phases of the reading process (Hämäläinen et al., 2023; Kiili et al., 2021).

All of these observed difficulties justify the need to provide explicit instruction on online reading strategies to undergraduate students.

Previous instructional interventions

Several instructional interventions aimed at improving undergraduate students’ online reading skills have been conceived and tested by researchers since the mid-2000s. The first intervention studies implemented stand-alone trainings, delivered by researchers, usually in the context of short-term experiments (Graesser et al., 2007; Hsieh & Dwyer, 2009; Stadtler & Bromme, 2008; Wiley et al., 2009) or courses for small groups of students (Brand-Gruwel & Wopereis, 2006; Wopereis et al., 2008). All of these studies showed positive effects of instruction on students’ skills, although not on all strategies and not to the same extent. They provided initial evidence that explicit teaching of online reading strategies is effective in improving students’ search and evaluation skills. However, the results of stand-alone, researcher-led interventions cannot be generalized to more ecological settings (i.e., the university classroom or digital learning environment), because they depend on the fidelity of implementation (Capin et al., 2022; Tincani & Travers, 2019).

More recently, researchers have started to implement teacher-led interventions embedded in the university curriculum. As compared to stand-alone interventions delivered by researchers, teacher-led interventions are more ecologically valid, but they also involve more sources of variability and require additional steps, such as teacher professional development training.

The Stanford History Education Group carried out a series of teacher-led intervention studies on “lateral reading” to develop high-school and college students’ evaluation skills (Breakstone et al., 2021; McGrew, 2020; Wineburg et al., 2022). Lateral reading is an evaluation strategy borrowed from professional fact-checkers that consists of checking the reliability of a website by opening tabs and searching for information on sources in other websites (Wineburg & McGrew, 2019). McGrew (2023) conceptualizes lateral reading as “a complex strategy that requires layers of knowledge, skill, and motivation” (p. 2). This strategy is related to one step in the MD-TRACE model (i.e., step 3a - Assess item relevance). Based on these conceptualizations, we considered lateral reading interventions as single-strategy trainings. The studies conducted so far show that lateral reading instruction improves students’ accuracy in the evaluation of websites. However, such improvement is not systematically associated with the actual use of lateral reading strategies (Brodsky et al., 2021a, b). Moreover, when students do read laterally after instruction, “they may still not arrive at the correct conclusion [about the reliability of a website]” (Breakstone et al., 2021, p. 6). Such difficulty may be due to the fact that some students do not know what to search for when reading laterally and miss content-related cues that can help them be more skeptical of a website’s purpose (Kohnen et al., 2020). Overall, these studies suggest that teacher-led instruction on a single strategy (i.e., lateral reading) may not be sufficient to respond to the challenges of academic tasks, which involve the combined use of several heuristics and tactics.

Other researchers propose a whole-task approach to online reading instruction (Frerejean et al., 2018, 2019; Argelagós et al., 2022). The whole-task approach exposes learners to an entire set of skills from beginning to end, as opposed to a part-task approach in which the skills are decomposed into a series of smaller tasks (Lim et al., 2009). For instance, Frerejean et al. (2019) implemented a teacher-led intervention in which students completed whole tasks of information problem solving on the Internet (IPS-I), from defining the problem to presenting information, during instruction and testing. The intervention was effective in improving students’ systematic searching and selection of sources, but had no effect on students’ ability to define the problem and formulate search queries. Similar outcomes were found by Argelagós et al. (2022) and Engelen and Bundke (2022). In the first study, the whole-task approach had positive effects on two skills (planning search strategies, and searching and locating sources) and in the second study on one skill (the ability to include different perspectives in written products after searching for information). In sum, the use of whole tasks during instruction led to encouraging, but mixed results. Some strategies improved after instruction, but not all of them, and the strategies that improved differed across studies. One possible explanation for the mixed results is that whole tasks do not provide enough opportunities for students to identify and reflect on the specific steps or aspects of the tasks that challenge them. A better approach could be to combine several strategies analytically, examining steps in detail and training each strategy one-by-one but also showing how they fit in the whole process of online reading for academic tasks.

In sum, research into strategy intervention has transitioned from researcher- to teacher-led interventions. Studies either focused on a single skill (e.g., evaluation) or adopted a whole-task approach with mixed results. An analytical approach based on general descriptions of the cognitive processes involved in purposeful reading (Brand-Gruwel et al., 2005; Britt et al., 2018) may help overcome those limitations.

Rationale

The purpose of the present study was to investigate the effectiveness of a teacher-led intervention aimed at teaching first-year university students a series of interdependent reading strategies for multiple-document reading. We designed an intervention based on the MD-TRACE model of purposeful reading (Rouet & Britt, 2011), and we compared a cohort of students participating in the training with an active control group. We tested two sets of predictions:

Effects on search skills: The intervention will improve participants’ accuracy and efficiency in identifying the type of information required to answer an academic task (H1) and in using metatextual cues when assessing information relevance (H2).

Effects on evaluation skills: Trained participants will more accurately identify the most reliable document among a set of documents on the same topic (H3) and more accurately assess authors’ competence and authors’ bias or conflict of interest (H4). They will also justify their evaluations using more relevant and elaborated criteria (H5).

Method

Participants

The participants were 167 students (see Footnote 1), with a mean age of 19.79 years (SD = 2.33), from a public university located in the metropolitan area of Paris (France). Students were enrolled in a mandatory “Digital Skills” course as part of their curriculum in one of three majors (Social Sciences, Arts and Humanities, Mathematics and Economics). Females represented 71% of the sample, which is roughly the same proportion of females enrolled in such majors nationwide (MESRI [Ministry of higher education in France], 2022). Twenty-five students were non-native speakers of French. Among them, 20 had been enrolled in the French educational system for at least five years. The remaining five students had been enrolled in this system for less than five years, but they were nevertheless included in the sample because teachers (and our own review of their written answers) confirmed they were proficient in French. Data collection took place in the Fall of 2021, during the Covid-19 pandemic but without lockdown. Students met face-to-face at the university according to official sanitary rules. While all enrolled students completed the experimental protocol, only the responses of those who provided informed consent were used in this study.

Research design

We used a quasi-experimental pre-post design with a non-equivalent control group (Miller et al., 2020). The participating instructors (N = 6; 1 female) had taught the “Digital Skills” course for at least three years. For the purpose of this study, some sessions of the course were adapted to include the explicit training of strategy components (see “Design and implementation of the intervention” below). The course was delivered in hybrid mode, alternating onsite face-to-face meetings once every two weeks and homework assignments in-between, except for two classes that had weekly meetings for practical reasons (e.g., access to computers). Each instructor was in charge of either two or four classes of students. Half of each instructor’s classes were assigned to the intervention condition, the other half to the control condition. The intervention classes (total of 95 students, 74.7% females) had onsite meetings in even weeks, whereas the control classes (72 students, 66.7% females) met in odd weeks. Students were not permitted to switch classes during the term.
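
As a quick consistency check on the reported sample composition, the group sizes and percentages above can be combined as in the following sketch. The head counts of female students are recovered by rounding and are therefore derived values, not figures reported in the study. The variable names are illustrative.

```python
# Group sizes and percentages of female students as reported in the text.
groups = {
    "intervention": {"n": 95, "pct_female": 74.7},
    "control": {"n": 72, "pct_female": 66.7},
}

total_n = sum(g["n"] for g in groups.values())

# Approximate head counts obtained by rounding (derived, not reported).
female_counts = {
    name: round(g["n"] * g["pct_female"] / 100)
    for name, g in groups.items()
}

total_female = sum(female_counts.values())
overall_pct_female = 100 * total_female / total_n

print(total_n, female_counts, round(overall_pct_female, 1))
```

Under this rounding assumption, the two groups add up to the 167 participants, and the combined proportion of female students is about 71%, in line with the overall figure given in the Participants section.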

The pre- and post-tests each consisted of two scenario-based assignments measuring Search and Evaluation skills. There were two topics per test: Memory/Migrations, Prejudice/Energy. We counterbalanced topic order in both conditions. Between pre- and post-test, the intervention group participated in a teacher-led intervention on online reading strategies (4 × 30 min lessons + 4 × 30 min homework assignments), embedded in the “Digital Skills” course (total of 12 × 180 min lessons). The control group followed the regular course syllabus whose instructional goals partly overlapped (e.g., using a search engine) but did not include any explicit teaching of strategies. This was an active control group because students completed digital literacy modules and tasks (e.g., formatting presentation slides), while the intervention group completed strategy training modules and tasks. Control students were granted access to the intervention materials after post-test completion.
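
To put the reported durations in perspective, the short calculation below adds up the intervention time and compares the in-class lessons with the total course time. It assumes that the four 30-minute lessons took place within the twelve 180-minute sessions and that homework was done outside class time. The text suggests this reading but does not state it in these terms.

```python
# Durations in minutes, as reported in the text.
lessons_min = 4 * 30          # in-class strategy lessons
homework_min = 4 * 30         # homework assignments
course_class_min = 12 * 180   # total class time of the "Digital Skills" course

# Total time devoted to the intervention (lessons plus homework).
intervention_total_min = lessons_min + homework_min

# Share of class time taken by the strategy lessons, assuming the lessons
# were held within the regular course sessions.
in_class_share = lessons_min / course_class_min

print(intervention_total_min, course_class_min, round(100 * in_class_share, 1))
```

On these assumptions, the intervention amounts to 240 minutes in total, and the in-class lessons take up roughly 5.6% of the 2,160 minutes of class time.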

Design and implementation of the intervention

We designed a teacher-led intervention that was guided by several instructional principles. First, we implemented fully guided instruction, including explicit teaching, worked-out examples, guided practice, and feedback on online reading strategies to maximize the chances that students learn the target content (Clark et al., 2012; Brante & Strømsø, 2018). Second, we used scenario-based assessments, combining several interdependent reading strategies, to encourage the coordination of different competencies required in Internet reading (Kammerer & Brand-Gruwel, 2020; McCrudden et al., 2022). Third, we followed evidence-based guidelines in the development of multimedia materials to manage resources and allow for self-paced task completion (Clark & Mayer, 2016). The use of multimedia materials was also important to ensure hybrid teaching and learning (Linder, 2017).

The content of the intervention was informed by the MD-TRACE theoretical model (Rouet & Britt, 2011) and prior intervention studies (e.g., Cerdán et al., 2019; Macedo-Rouet et al., 2019; Martínez et al., 2024; Pérez et al., 2018). Search strategies were operationalized as students’ ability to analyze task demands before searching (Cerdán et al., 2019) and to use metatextual cues to search for information (Ayroles et al., 2021; León et al., 2019), both of which are related to three steps in the MD-TRACE model (i.e., steps 1, 2 and 3b). Evaluation strategies were operationalized as readers’ ability to evaluate information quality and source reliability, in relation to steps 3a (Assessing item relevance) and 3c (Creating/updating a documents model) in the MD-TRACE model. Information quality and source reliability are complex concepts that can be defined through multiple criteria (Rieh & Danielson, 2007). We define information quality as the presence of editorial filters in a website and a validation process of information prior to publication (Hämäläinen et al., 2023; Macedo-Rouet et al., 2019). Source reliability is defined as the extent to which the author of a document is competent, exempt of biases or conflicts of interest, and the trustworthiness of the document (Bråten et al., 2019; McGrew, 2020; Pérez et al., 2018).

To increase the chances of sustainable delivery and integration in the curriculum, the intervention was embedded in a “Digital Skills” course that is mandatory for all first-year undergraduates at the university. The aim of the “Digital Skills” course is to prepare students for a national certification of digital competencies (Bancal & Dobaire, 2022; Ministère de l’éducation nationale et de la Jeunesse, 2022). The regular course syllabus includes three main topics: exploring digital data files, learning to use office automation software, and coding with HTML. In addition, students must complete a series of online tasks on a national platform for the assessment of digital competencies (Groupement d’intérêt public Pix, 2022). Some of these tasks imply information search and evaluation (e.g., “find the name of the author”). However, the platform does not provide any explicit teaching on reading strategies and its tasks are not necessarily academic. Therefore, the reading strategies intervention was complementary to the course and relevant to develop students’ digital skills.

Materials

We developed four interactive modules and eight online practice tasks to promote students’ understanding and use of Search and Evaluation strategies (Table 1). These modules and practice tasks were implemented in two online environments to enable distant as well as face-to-face delivery of the instruction. Additional materials were also developed to support instructors’ presentation of the modules in class and discussion with students. All of the materials are available (in French) upon request to the first author.

Interactive modules

The interactive modules presented the reading strategies in the context of academic task scenarios (e.g., “Imagine that for an introductory ‘Social Sciences and the Environment’ class you have to answer the following question: What is ‘gentrification’ and how does it change the life of a neighborhood?”). Each module included four phases of explicit instruction: direct explanation, modeling, guided practice, and corrective feedback (Archer & Hughes, 2011). It started with a presentation of the learning goals, followed by a presentation of the scenario. Then, students were prompted to reflect on what they would do if they had to search for information on the Internet to accomplish the task. Progressively, the slide show presented worked-out examples of strategy use in the context of online reading, accompanied by interactive questions with automatic feedback. Finally, a summary of the strategies was presented in the last slide.

In Module 1, students were taught to analyze task demands before searching for information. The slides demonstrated the strategy of identifying interrogative and thematic words in questions, as well as paraphrasing complex questions, in order to determine the type of information needed (e.g., a name, a concept, a reason; Cerdán et al., 2021; Potocki et al., 2023). In Module 2, students were taught to inspect different metatextual cues, such as the table of contents, titles and subtitles, to quickly find thematic keywords or phrases in a web page (e.g., the French Wikipedia article on gentrification) in relation to the research question. The choice of Wikipedia articles was based on evidence from previous studies that Wikipedia is a useful source to start academic searches, even though its information must be verified (List et al., 2016b; Wineburg & McGrew, 2019). Students had to determine as fast as possible, without reading the entire document, whether relevant information was present in the page. Different parts of the webpage (e.g., navigation menus) were analyzed during this demonstration phase. The main goal of the lesson was to promote goal-focusing attention and efficient information search in a document (León et al., 2019).

Module 3 taught students to evaluate information quality by questioning information validation processes in websites. The History section of the Wikipedia article on gentrification (Fig. 1) was used as a starting point to discuss different types of validation (prior/post publication, internal/external reviews). Then, students were prompted to categorize different types of websites with the help of a typology of information validation processes (Pérez et al., 2018). Students received metacognitive feedback encouraging self-regulation through strategic evaluation of information (Abendroth & Richter, 2021). Module 4 dealt with source reliability evaluation, focusing on author competence, author bias or conflict of interest, and trustworthiness of a web page as influenced by these criteria. Two web pages (a research article, a social media post) with conflicting views on the topic of gentrification were presented. Then, students saw a demonstration of the “lateral reading” strategy (McGrew, 2020; Wineburg & McGrew, 2019). Different criteria for evaluating author competence, bias and conflict of interest were explained (Bråten et al., 2019; Pérez et al., 2018).

To support students’ integration of the strategies taught, every module built on the previous one by providing an overview and introducing the new strategy within the same scenario of academic online reading as the previous ones. We held the topic (gentrification) constant to make the integration of different strategies even more explicit to students. In the pre- and post-tests, these strategies were assessed with separate tasks in order to control for dependency, which may have occurred for instance if students had spent more time looking for information on one item, inspecting source and content features more thoroughly. Also, we could not ask students to search and evaluate more than three or four pages, because of time constraints in the course setting. In sum, the strategies were taught one-by-one and analytically (i.e., examining steps in detail), but they were integrated across modules.

Online practice tasks

Each module was accompanied by two practice tasks on the strategies taught. Half of the tasks were meant to be completed (or at least started) in class, the other half as a homework assignment. All of the tasks were based on an academic assignment scenario and incorporated questions from the different modules to facilitate the integration of reading strategies. Students received corrective and explanatory feedback for every task, including multiple-choice questions and open-ended justifications. A detailed description of the tasks is presented in Appendix 1.

The first and second tasks (related to Module 1) asked students to analyze a series of questions in order to determine the type of information requested, using the typology from Module 1. The third and fourth tasks (Module 2) asked students to determine, as quickly as possible, whether a document contained relevant information to answer question(s) from an academic assignment. Students had to use metatextual cues to find information in authentic webpages and one e-book from the university library. Questions were worded in a way that using the Find function was not relevant to the task (non-factual questions). The fifth and sixth tasks (Module 3) asked students to evaluate information quality in two to three web pages per task. The pages came from websites with strong editorial filters (e.g., a journal article), no editorial filters (e.g., a post from an individual’s social network account) or few editorial filters (e.g., an article from a group of committed people). Students had to identify editorial filters and classify different types of websites. The seventh and eighth tasks (Module 4) asked students to evaluate source reliability. For each page, students were asked to: (1) identify the name of the author, (2) state whether the author was competent on the topic, (3) state whether the author had a bias or conflict of interest regarding the topic, (4) choose the most trustworthy document for the academic assignment. Students could look for information about the authors on the Internet. However, because of the limited time available for the tasks and to ensure all students would have access to source information, we also included information about the authors and the website at the bottom of the page.

Online environments

The modules, practice tasks, and other materials were implemented in two complementary online environments. The modules, consent form, and socio-demographic and metatextual questionnaires were implemented in a Moodle course page that was set to display the resources as a function of students’ class and group (intervention or control). Students were familiar with Moodle since they used this learning management system for the “Digital Skills” course as well. The practice tasks were implemented in a purpose-built web-based platform identified by the acronym “SELEN”. This platform enables the creation of exercises, the provision of corrective and explanatory feedback, and the export of students’ scores and time spent on documents/questions. Its design is clean to avoid distractions, and the experimenter can choose between two test modes (evaluation or training), which makes it possible to run pre- and post-tests as well as practice tasks during instruction (Fig. 2).

Additional materials for instructors

To support instructors’ integration of the lessons in the regular course program, we developed an Instructor Guide containing full lesson plans, a description of the online platform, and the timeline for the experiment. Moreover, instructors received Introductory Slides for the intervention group, containing lesson goals, a glossary of key concepts presented in the modules, the unfolding of tasks, and debriefing questions to be used in the last 2–5 min of the intervention session. The structure of an intervention lesson is presented in Table 2. We also provided each instructor with a schedule for lessons and tasks for each group (intervention and control) as well as the list of anonymous logins/passwords to the SELEN platform, which the instructors attributed to their students in class. These materials were introduced and discussed with instructors during professional development meetings (see below).

The study was registered in the French National Commission for Information Technology and Civil Liberties database (CNIL, 2021), following the directives from the European General Data Protection Regulation (European Union, 2018). Students received an information letter describing the study and participants’ rights regarding data protection, then signed an informed consent form.

Measures

Sociodemographic data and internet use

Socio-demographic data and students’ Internet use were collected through a questionnaire embedded in the Moodle course page. Students were asked to report their age (month and year of birth), gender, major field of study (Social Sciences, Arts and Humanities, Math and Economics), and frequency of use of three of the most popular social networking sites in France (Facebook, Snapchat, WhatsApp) (Perronet & Coville, 2020), as well as of two scientific portals (CAIRN, Science Direct). The scale for frequency of use ranged from 0 (‘Never’) to 4 (‘Almost constantly’). The average of social networking scores was used as an index of “Recreational Use” and the average of scientific portals’ scores was used as an index of “Scientific Use” of the Internet. Based on Macedo-Rouet et al. (2020), we expected Recreational use to be negatively associated with information evaluation skills and vice versa for Scientific use. Therefore, these indexes were used to control for group equivalence prior to the intervention.
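
As a minimal sketch of how the two indexes described above could be computed, the following Python snippet averages the ratings for the social networking sites and for the scientific portals. The item keys and the example responses are hypothetical and are not taken from the study materials.

```python
from statistics import mean

# Hypothetical item keys; the study's actual variable names are not reported.
SOCIAL_ITEMS = ("facebook", "snapchat", "whatsapp")
PORTAL_ITEMS = ("cairn", "science_direct")


def internet_use_indexes(responses):
    """Return (recreational_use, scientific_use) for one student.

    `responses` maps each item to a frequency rating from 0 ('Never')
    to 4 ('Almost constantly').
    """
    for item in SOCIAL_ITEMS + PORTAL_ITEMS:
        if not 0 <= responses[item] <= 4:
            raise ValueError(f"rating for {item!r} is outside the 0-4 scale")
    recreational = mean(responses[item] for item in SOCIAL_ITEMS)
    scientific = mean(responses[item] for item in PORTAL_ITEMS)
    return recreational, scientific


# Made-up responses, for illustration only.
student = {"facebook": 1, "snapchat": 4, "whatsapp": 4,
           "cairn": 2, "science_direct": 1}
recreational_use, scientific_use = internet_use_indexes(student)
```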

Reading abilities

We measured two reading abilities related to information search and evaluation skills: Lexical quality (Auphan et al., 2019) and Metatextual knowledge (Ayroles et al., 2021; Rouet & Eme, 2002). Lexical quality refers to individuals’ ability to quickly and accurately retrieve lexical representations of words stored in memory (Perfetti & Stafura, 2014). Lexical quality significantly correlates with readers’ (13–14 year olds) ability to find relevant information for answering questions in a multiple-document reading situation (Potocki et al., 2023). To measure participants’ lexical quality we used the Word Identification Task (Auphan et al., 2019) which comprises three sub-tasks: orthographic discrimination, phonological decoding, semantic categorization. For all of these tasks, participants had to make word selection decisions as quickly and accurately as possible. An index of rapidity and precision was calculated by taking into account the ratio between the response time and the correct answers.

Metatextual knowledge refers to individuals’ knowledge of text organizers and reading strategies (Rouet & Eme, 2002). Metatextual knowledge predicts the use of selective reading strategies (e.g. using headers to search for information in a text) among children (Potocki et al., 2017). Other studies suggest that this might be the case for undergraduate students as well (León et al., 2019). To measure students’ metatextual knowledge we used an adapted version of the Metatextual questionnaire developed by Ayroles et al. (2021). The questionnaire comprises two multiple-choice questions on the role of metatextual cues (e.g., table of contents) and eight multiple-choice questions on reading strategies (e.g., “You have to answer a question by looking for information in a text, but you are in a hurry and you can only read a few sentences, which ones will you read first?”). Each correct answer is assigned 1 point (max = 10).

Pre-test / post-test of search and evaluation skills

As pre- and post-tests, students completed two online tests (Search and Evaluation) that were similar to the practice tasks of the intervention phase. The topics (Memory or Prejudice, in the Search test; Migrations or Energy dependence, in the Evaluation test) were counterbalanced across subjects between pre- and post-tests. Students had approximately 45 min to complete both tests. The assessments included multiple-choice and open-ended (short answers, justifications) questions. Each correct answer was granted 1 point, except for the justifications, which were scored on a 4-point scale, from inadequate (0 points) to relevant and elaborated (3 points), following the coding scheme by Kiili et al. (2022). Two researchers independently coded 15% of the justifications for each question (Kappa values were respectively: 0.87, 0.71, 0.95, 0.92). Next, one of the researchers who participated in double-coding coded all of the justifications. Time on task was assessed, in seconds, based on the log files from the SELEN platform.
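
To illustrate the inter-rater agreement check, the sketch below computes an unweighted Cohen's kappa for two coders who scored the same justifications on the 0 to 3 scale. The text does not specify which kappa variant was used, so the unweighted form is an assumption, and the example codes are invented.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two equally long lists of category codes."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must code the same non-empty set of items")
    n = len(rater_a)
    # Proportion of items on which the two raters agree.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's marginal frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / n ** 2
    if expected == 1:
        return 1.0  # both raters used a single identical category
    return (observed - expected) / (1 - expected)


# Invented justification scores (0 = inadequate ... 3 = relevant and elaborated).
coder_1 = [0, 1, 2, 3, 3, 1]
coder_2 = [0, 1, 2, 3, 2, 1]
kappa = cohens_kappa(coder_1, coder_2)
```

Because the justification scale is ordinal, a weighted kappa would be a reasonable alternative; the unweighted version is shown only because it is the simplest to read.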

The Search test asked students to analyze the demands of a given academic task and determine, as quickly and efficiently as possible, whether a web page (Wikipedia article) contained relevant information to answer the task. Five questions assessed students’ ability to determine the type of information needed (i.e., a name, a concept, a place, a date or period, a number or quantity, a reason or explanation) (Tasks 1 to 5). For the Prejudice topic, the questions were: “How many types of prejudice are there?” (number), “What is social influence?” (concept), “What’s the point of social categorization?” (explanation), “Who developed the theory of real conflict?” (name), “When did we first define prejudice as an unconscious defensive mechanism?” (date). For the Memory topic, the questions were: “How many memory systems are there?” (number), “What is short-term memory?” (concept), “Show how short-term memory differs from long-term memory” (explanation), “Who first described short-term memory in 1968?” (name), “When did Atkinson and Schiffrin propose the modal model of memory?” (date). Three questions assessed students’ use of metatextual cues for searching: (1) “Where on the page do you look to find the answer to [the assigned question]?” (Page); (2) “Without reading the article, can you tell quickly in which part(s) [assigned topics] are described? Copy the number(s) and title(s) of the section(s)” (Menu); (3) “Now imagine that you are interested in [acronym or technical term from the text]. Find out as quickly as possible what this [acronym/technical term] stands for” (Find).

The Evaluation test asked students to evaluate information quality and source reliability of three given web pages per topic. Two questions assessed information quality. The first question (Editorial filters) asked students to identify the document(s) that had been editorially reviewed before publication and to justify their answer. The second question (Sites) asked students to identify websites (in a list) that typically allow users to post a message or text without information validation prior to publication. Three multiple-choice questions followed by justification questions assessed the evaluation of source reliability. Students were asked to indicate: (1) the least competent author on the topic (Competence), (2) the author displaying a bias or conflict of interest regarding the topic (Bias/conflict), (3) the most trustworthy document for the assignment (Trustworthiness). For reasons of time, we selected extracts from these web pages and provided source information at the bottom of each page to ensure that all students would have the chance to consider source information in the allotted time, but students could also search for information on the Internet. Source information included authors’ profession and position (e.g. a political scientist and president of the Institute for Strategic Defense of National Interests), the name and stance of the website in which the article appeared (e.g. the site of the Institute for Strategic Defense of National Interests, a lobby group for the government to defend the closing of the borders to foreigners).

Professional development

Prior to the experiment, participating instructors (N = 6) attended two 3 h meetings with members of the research team to familiarize themselves with the intervention goals, test the materials, and organize the implementation of the intervention. The first meeting took place before the summer break (end of June) and the second meeting occurred in September. The meetings were held face-to-face at the university facilities. Instructors received financial compensation for their participation in the whole experiment. All but one participating instructor had a master’s degree and all had at least 5 years of experience teaching the course.

In the first meeting, instructors were introduced to the theory and rationale of the experiment. Building on authentic examples of academic tasks at the undergraduate level, the concept of “expert reading strategies” (i.e. the sum of decisions that allow readers to adapt their activity to the demands of the task at hand; Britt et al., 2018) was developed and discussed with the instructors. Then, the intervention design principles, the structure of the lessons and practice tasks, the online platform, and the timetable were presented. The instructors were invited to log into the platform and to test the practice tasks between meetings. Instructors had the opportunity to express their thoughts and opinions about all aspects of the intervention. Based on their feedback, we modified some of the guidelines for the tasks and other organizational issues. Shortly after the meeting, the instructors received an Instructor Guide with a detailed description of the intervention, the materials, and the lesson plans. Introductory slides for the lessons were also provided. These slides allowed the instructors to introduce the lesson topic and goals, the vocabulary, and the guidelines for practice tasks in the intervention group.

The second meeting was dedicated to the distribution of student groups, planning sessions, and discussing guidelines for students and organizational issues. It was decided that the intervention lessons would take place in even weeks and the control lessons would take place in odd weeks. This way, instructors could easily remember the condition (intervention/control) attributed to each class of students. Moreover, instructors were briefed to take care not to share intervention materials with the control group. In the last hour of the meeting, instructors received the guidelines for the pre- and post-test sessions, and simulated (by reading aloud and recalling the main steps) the pre-test, as well as the first intervention session. Minor changes were made to the organization according to their feedback. At the end of the meeting, the instructors received a checklist with things to do before (e.g., review the lesson plan and verify Moodle settings) and after (e.g. verify that all students completed the practice tasks) each intervention session.

Fidelity of the intervention

Instructors were contacted by email after each intervention session and asked to provide a short debriefing of the session and to report any incidents. One-off incidents occurred during the semester (e.g., temporary Internet connection failure) and were resolved by the research team and/or university technical support during the sessions. A weekly follow-up of students’ connections to the Moodle intervention page and the SELEN platform allowed us to verify that participants had completed the interactive modules and practice tasks. Overall, the sessions unfolded as expected thanks to the availability of online materials.

Statistical analyses

Data analysis was carried out using IBM SPSS for Windows, v25. First, we tested the initial (pre-intervention) equivalence between participants by contrasting their sociodemographic data (age, gender, major field of study, internet use), and reading abilities (lexical quality, metatextual knowledge), as a function of the Group they were assigned to (intervention, control). This was done to define any additional fixed predictor for the models in the next step of analyses. The difference between groups was examined via independent samples’ t-tests or Pearson’s chi-square test, depending on the continuous or categorical measurement level of the variable.
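
The analyses were run in SPSS; purely as an illustration of the categorical comparison, the Python sketch below computes Pearson's chi-square statistic for a Group × Major contingency table. The cell counts are invented, and the closed-form p-value helper is valid only for two degrees of freedom (as in a 2 × 3 table).

```python
import math


def chi_square_independence(table):
    """Return (statistic, degrees_of_freedom) for a table of observed counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            statistic += (observed - expected) ** 2 / expected
    dof = (len(row_totals) - 1) * (len(col_totals) - 1)
    return statistic, dof


def p_value_two_dof(statistic):
    """Upper-tail p-value of a chi-square statistic with exactly 2 df."""
    return math.exp(-statistic / 2)


# Invented counts: rows = intervention, control;
# columns = Social Sciences, Arts and Humanities, Math and Economics.
counts = [[40, 25, 10],
          [20, 45, 12]]
statistic, dof = chi_square_independence(counts)
p_value = p_value_two_dof(statistic)
```

For tables with other degrees of freedom, a statistics library would be needed to obtain the p-value.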

Then, we examined the efficacy of the intervention by analyzing the performance within each test (Search and Evaluation) via generalized linear mixed models (GLMM). GLMMs extend linear models to non-normal data by allowing different distributions and link functions (the link between the expected outcome values and the predictor/s). They are also well-suited for repeated or nested data, as they allow for the specification of fixed and random effects (Winter, 2019).

The fixed structure for all planned models specified Group (intervention, control), Phase (pre, post), Major Field (social sciences, arts and humanities, math and economics) and the interaction between Group and Phase as factors (for details on the inclusion of Major Field in the fixed factors’ structure, see the Results section). The impact of the intervention was examined by the interaction term. Data collection took place in the participants’ usual courses, taught by their usual instructors, with each instructor in charge of one experimental and one control group. Yet, the specification of instructors and courses as separate random blocks resulted in multicollinearity for some estimations. In these cases, the random structure was simplified to two random intercepts (by-participants and by-instructors). To test for the fit of the planned models (i.e., intercepts plus fixed factors), these were compared with baseline models (i.e., only intercepts) using the Akaike Information Criterion (AIC). Compared to baseline, planned models showed better (i.e., lower) fit indices. AIC summary fit indices can be consulted in Appendix 2.
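
The logic of the AIC comparison can be sketched as follows, using the textbook definition AIC = 2k − 2 ln(L), where k is the number of estimated parameters and L the maximized likelihood. The log-likelihoods and parameter counts below are made up, and the exact information criterion reported by SPSS may be computed differently.

```python
def aic(log_likelihood, n_params):
    """Akaike Information Criterion (textbook form): lower values mean better fit."""
    return 2 * n_params - 2 * log_likelihood


def better_model(candidates):
    """Return the name of the candidate model with the lowest AIC.

    `candidates` maps a model name to a (log_likelihood, n_params) pair.
    """
    return min(candidates, key=lambda name: aic(*candidates[name]))


# Made-up values: a baseline (intercepts only) and a planned model
# (intercepts plus fixed factors).
models = {
    "baseline": (-1250.0, 3),
    "planned": (-1228.0, 9),
}
preferred = better_model(models)
```

In this invented case the planned model has the lower AIC (2474 versus 2506) despite its additional parameters, which is the pattern the text describes.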

In the Search test, in which the instructions asked students to complete the tasks as quickly as possible, the dependent variables used to establish the intervention’s efficacy were participants’ accuracy (percentage of correct responses) and efficiency (response times in seconds for correct responses). In the Evaluation test, in which the instructions asked students to justify each closed-ended response (with justifications scored from 0 to 3), the dependent variables were accuracy (correct responses) and the justifications’ quality (scores on the justification items). The analysis of accuracy and justifications’ quality was based on the aggregated scores for each module and, for clarity, was conducted on values transformed into percentages, that is, the actual score divided by the maximum possible score. In contrast, efficiency analyses (response times) were conducted on each task composing a module from the Search test. This was done because efficiency analyses were contingent on accuracy, and the average response time for each task within the same module was not equivalent (p > .05).
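
As a small worked example of the percentage transformation, the sketch below aggregates item scores within a module and divides by the maximum possible score. The function name and the example scores are illustrative only.

```python
def module_percentage(item_scores, item_maxima):
    """Aggregate item scores for one module and express them as a percentage."""
    if len(item_scores) != len(item_maxima) or not item_scores:
        raise ValueError("scores and maxima must be non-empty and the same length")
    if any(not 0 <= s <= m for s, m in zip(item_scores, item_maxima)):
        raise ValueError("each score must lie between 0 and its maximum")
    return 100 * sum(item_scores) / sum(item_maxima)


# Accuracy: five items worth 1 point each, four answered correctly.
accuracy_pct = module_percentage([1, 0, 1, 1, 1], [1, 1, 1, 1, 1])

# Justification quality: three justifications scored on the 0-3 scale.
justification_pct = module_percentage([3, 1, 2], [3, 3, 3])
```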

Regarding accuracy and justification quality, GLMMs were set to normal distributions with an identity link. Regarding efficiency, response times presented skewed distributions deviating towards larger values, particularly in the pretest data (see Table 3). Two actions were taken in this regard. First, outliers beyond two standard deviations were trimmed (Berger & Kiefer, 2021). This resulted in excluding 2.73% of the observations from the Search test. Second, the planned models for Efficiency were set to a Gamma distribution (in which values are positive and the variance increases proportionally with the mean) with an identity link (in which no transformation is applied to relate the predictor with the outcome). This combination has been proposed to provide a suitable strategy without the need for transforming the skewed data (Lo & Andrews, 2015). To test this strategy, we compared in a preliminary analysis the fit of our planned models with a Gamma distribution against a Gaussian distribution, both with identity links, by using the Akaike Information Criterion indexes. In all cases, Gamma models showed better (i.e., lower) fit indices than models assuming normality. AIC summary fit indices can be consulted in Appendix 2.
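
The trimming step can be illustrated with the sketch below, which drops response times lying more than two standard deviations from the mean. The text does not state within which cells (e.g., per task, group, or phase) the mean and standard deviation were computed, so applying the rule to a single list of response times is an assumption, as are the example values.

```python
from statistics import mean, stdev


def trim_outliers(response_times, n_sd=2.0):
    """Keep only values within `n_sd` sample standard deviations of the mean."""
    if len(response_times) < 2:
        return list(response_times)
    centre = mean(response_times)
    spread = stdev(response_times)  # sample standard deviation
    return [rt for rt in response_times if abs(rt - centre) <= n_sd * spread]


# Invented response times in seconds, with one very slow response.
times = [10, 11, 12, 13, 14, 12, 11, 13, 100]
trimmed = trim_outliers(times)
share_removed = 100 * (len(times) - len(trimmed)) / len(times)
```

Because a single extreme value inflates both the mean and the standard deviation, this rule is conservative in small samples; in this example only the 100 s response is removed.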

Fixed factor effects are reported via the unstandardized estimated coefficients, standard errors, and 95% confidence intervals. The unstandardized coefficients and confidence intervals are used as indicators of the magnitude of an effect and the precision of the estimate of that magnitude, respectively. Coefficients should be interpreted as values that depend on the units of the dependent variable (e.g., a coefficient of 5 in accuracy should be interpreted as a change of 5% in the dependent variable, whereas a coefficient of 5 in efficiency should be interpreted as a change of 5 s). The following reference categories were used for the coefficients’ calculation: intervention/posttest (factor: Group*Phase), intervention (factor: Group), posttest (factor: Phase), and Social Sciences (factor: Major). Additional information on the fixed factors tests can be found in Appendix 3.

Results

Equivalence of groups

Table 3 presents the sample’s pretest descriptives and comparability tests. No initial differences between the intervention and control groups were found, except for their major field of study, χ2 (2, N = 156) = 16.27, p < .001. The observation of the cells’ adjusted standardized residuals indicated that students with a major in Social Sciences were more frequent in the intervention group, whereas Humanities and Arts’ students were more frequent in the control group. Therefore, as a control, the major study field was specified as an additional predictor when conducting the efficacy analyses.

Efficacy of the intervention

Search test

Descriptive and inferential statistics for the Search test data are shown in Table 4 (accuracy) and Tables 5 and 6 (efficiency). Additional information on the fixed effect tests can be consulted in Appendix 3. An initial inspection of the descriptive analysis shows that, in the posttest, the intervention group students were more accurate in the Task Demand Analysis module and the most efficient (faster RTs) in both modules, as compared to the rest of the conditions.

Regarding accuracy (Table 4) in the Task Demand Analysis module, the intervention group outperformed the control group, as evidenced by the significant interaction. The estimated coefficients show that students who received the intervention significantly improved their accuracy by 5% compared with their pretest performance, almost reaching the scoring ceiling, whereas the control group remained at its pretest performance level, Coeff. = 0.56, p = .708 (see Footnote 2). Yet, the two groups did not differ significantly in the posttest. The intervention did not predict accuracy in the Metatextual Cues module of the Search test.

Regarding efficiency, Table 5 presents the descriptive and inferential statistics for the Task Demand Analysis module and Table 6 for the Metatextual Cues module. Overall, participants in the intervention group responded faster in the posttest compared to both conditions in the pretest. However, this reduction in response times was only associated with an intervention effect (i.e., a Group*Phase interaction) in Task 1 (Number) from the Task Demand Analysis module and in Task 1 (Page) from the Metatextual Cues module (please refer to the tables for the estimated coefficients).

Evaluation test

Descriptive and inferential statistics for the Evaluation test data are shown in Table 7 (accuracy) and Table 8 (justifications’ quality). Additional information on the fixed effect tests can be consulted in Appendix 3. An initial inspection of the descriptive analysis shows that the intervention group presented the highest accuracy and justification scores in the posttest, as compared to the rest of the conditions.

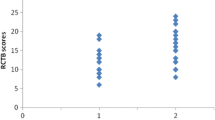

Regarding accuracy (Table 7), the intervention tended to improve performance in the Source Reliability module, as seen by the marginally significant interaction. In addition, it significantly affected the Information Quality module. With an overall mean pretest baseline of 50% (SD = 24%), the intervention group gained 19% in accuracy in the posttest compared to the control group, as evidenced by the observation of the coefficients (see Table 7).

Regarding justifications (Table 8), participants in the intervention group did not improve quality in the Source Reliability module, but they did so in the Information Quality module. The observation of the coefficients revealed that participants who received the intervention improved the quality of their justifications by 14% in the posttest, as compared to the control group (see Table 8).

In sum, the effects of the intervention varied markedly across the two skills (Search and Evaluation). These results are further discussed in the next section.

Discussion and conclusions

Despite the evidence that many undergraduate university students experience difficulties when reading online for their study purposes (Brand-Gruwel et al., 2017; Latini et al., 2021; List et al., 2016; McGrew et al., 2018; Naumann et al., 2007; Salmerón et al., 2018; Schoor et al., 2021), there is no established curriculum to support their skill development. Prior intervention studies found mixed results on the benefits of training students’ skills based on whole-tasks (Argelagós et al., 2022; Frerejean et al., 2018, 2019) or on a single strategy (Breakstone et al., 2021; Brodsky et al., 2021a, b). To address this gap, we designed an intervention study that implemented teacher-led instruction on interdependent reading strategies based on a theory of purposeful reading (Rouet & Britt, 2011), as part of an undergraduate digital literacy course. Following McCrudden et al.’s (2022) call for considering “the interrelated nature of Internet reading competencies” (p. 26), our intervention combined search and evaluation strategies analytically, training each strategy one by one while clarifying how they fit in the whole process of online reading. We expected that the intervention would improve students’ search skills (i.e., the ability to analyse task demands and use metatextual cues to find relevant information) and evaluation skills (i.e., accurate evaluation of website information quality and source reliability), both in terms of accuracy and efficiency. Based on previous studies with younger learners (Kiili et al., 2022), we also expected a positive effect of the intervention on the quality of justifications, with students using more elaborate justifications (using source criteria) after instruction.

Effects on search skills

In the Search test, the effects of the intervention on students’ search skills were rather small. In terms of response accuracy, the intervention improved students’ ability to analyze task demands by 5% from pre to posttest, whereas the control group did not significantly improve its performance. However, both groups achieved high scores in both the pre and posttest, resulting in a near-ceiling effect in the posttest (and a non-significant difference between groups). Regarding the use of metatextual cues, the scores were already high overall in the pretest (above 80%) and no significant effects of the intervention were found.

Despite modest benefits on response accuracy, the intervention significantly reduced the time needed to solve some of the search tasks, including the longest one, thus increasing efficiency. Moreover, the intervention improved students’ identification of relevant sections of a web page for finding answers to academic task scenarios (Page task).

These results are partly consistent with previous findings that faster search times are associated with correctly locating information in online documents (Cromley & Azevedo, 2009; Kumps et al., 2022). In our study, the intervention improved students’ efficiency in locating information, but their accuracy was already high in the pretest. Therefore, the margin for progress in accuracy was very small. Moreover, the documents used for the search task were relatively short and well-structured (e.g., Wikipedia page). When students have to search in more complex academic documents, the search and locating task is more challenging and the effects of instruction can be more important (see Argelagós et al., 2022). Finally, the absence of measures of participants’ individual visualization skills in our study might have hidden positive effects of the metatextual cues search module for low-visualization users (Zhang & Salvendy, 2001). In other words, locating information is not an easy task per se. Its complexity depends on the complexity of the document(s) at hand, the demands of the task, and students’ searching abilities. Due to organizational constraints, we could not conduct a pilot of the search test prior to the intervention.

Future studies should therefore build finer screening instruments for students’ search skills, following the example of standardized assessments of evaluation skills (e.g. Hahnel et al., 2020; Potocki et al., 2020). Exploratory qualitative studies could also be useful in early phases of a research project to help determine students’ development potential on search skills and adjust the demands of the tasks to students’ knowledge base (Park & Kim, 2017). Still, the fact that the intervention improved students’ efficiency in task analysis and metatextual tasks is encouraging, since the ability to make fast and accurate decisions regarding online information is a characteristic of expert readers (Brand-Gruwel et al., 2017; Wineburg & McGrew, 2019).

Effects on evaluation skills

The effects of the intervention on students’ evaluation skills differed across modules. Regarding the evaluation of information quality, these effects were quite strong. After training, students improved their accuracy on evaluation questions by 19% compared to the control group, going from 47 to 64% of correct answers (see Table 7). Students in the intervention group were better able to recognize web pages and websites that apply editorial filters to information, using validation processes to verify information prior to publication. Also, trained students provided more relevant and elaborate justifications for information quality questions (14% improvement, see Table 8). Therefore, students in the intervention group were not only able to more accurately assess information quality after instruction, but also to explain the reasons for their assessment of the quality of a web page. These results extend prior findings from two intervention studies that also taught editorial filters as a criterion for document evaluation (Martínez et al., 2024; Pérez et al., 2018). In line with these studies, our intervention increased the accuracy with which students selected the documents with stronger editorial filters (i.e., information validation prior to publication, and independent external reviews; Rieh & Danielson, 2007). Moreover, unlike prior studies, our intervention was effective in improving students’ justifications for document selection based on editorial filters. The concept of editorial filters is intrinsically complex and unfamiliar to students, even at the higher education level (see also Hämäläinen et al., 2023), and a single training session is not enough to improve evaluation skills in this matter. At least two sessions of intensive practice with more than three documents are necessary to improve students’ performance.
Participants in our study presumably learned “foundational knowledge” (Kohnen et al., 2020) about website types and information validation processes, which in turn helped them determine what to evaluate when assessing website information quality.

The intervention had only marginal effects on the accuracy of source reliability evaluation. Trained students tended to more accurately recognize the most competent author, the authors displaying a bias or conflict of interest regarding the topic, and the most trustworthy document for an academic task. Accuracy performance in these tasks was already quite high in the pre-test (70% in the control group, 68% in the intervention group; see Table 7); therefore, the intervention probably tapped a small zone of students’ development potential (Park & Kim, 2017). Unexpectedly, trained students did not provide better justifications for source reliability tasks after instruction. In contrast to their accuracy, students’ ability to justify their reliability evaluation was quite low at the pre-test (35% in the control group, 34% in the intervention group; see Table 8), and it remained below 50% in the post-test. These results are unexpected and differ from the findings by Brodsky et al. (2021) and Wineburg et al. (2022), who adopted a single-strategy approach to teaching source evaluation. Their interventions included six sessions or more of 50 min each of lateral reading instruction and significantly improved students’ evaluation justifications. Wineburg et al. (2022) noted that trained students “earned less than half of the available points on the assessment” (p. 13). These findings suggest that justifying source reliability is much more difficult than simply identifying the most competent author or the presence of authors’ biases in online documents. Learning to produce elaborate justifications might take more time and training for undergraduate students, as suggested by studies with younger adolescents (Kiili et al., 2022; Pérez et al., 2018).
A balance between a single-strategy approach and an analytical approach combining several strategies must be found, to provide deep enough knowledge to students on source evaluation while making visible the interrelated nature of online reading strategies (McCrudden et al., 2022).

Overall, the present study shows encouraging though modest results of a teacher-led intervention that adopted an analytical approach to teaching online reading strategies. Trained students improved their search and evaluation performance, in terms of accuracy, efficiency and quality of justifications, for some but not all strategies. Evaluation strategies displayed the greatest gains, with a specific positive impact on students’ ability to recognize and explain the role of editorial filters in improving information quality. Given the relatively short time for training, these results constitute initial evidence that an analytical approach might pay off for developing undergraduate students’ online reading skills. In addition, compared with past studies that focused on the development of a single strategy or skill (e.g., lateral reading for source evaluation; Breakstone et al., 2021; Brodsky et al., 2021a, 2021b) or adopted a whole-task approach (e.g., Argelagós et al., 2022; Frerejean et al., 2019), our analytical approach provided a more precise diagnosis of those aspects of the information tasks for which an intervention at the undergraduate level is most needed.

Limitations

The study has a number of limitations that are worth bearing in mind. First, we have already mentioned several issues with the tasks used to assess students’ skills. Among other concerns, some of the selected measures did not yield enough variance, which does not mean that students mastered all aspects of the search strategies taught. More fine-grained measures, better adjusted to university students’ potential for skill acquisition, need to be developed in the future. Second, although instructors participated in professional development sessions prior to the experiment, the amount of time (2 sessions of 3 h) may not have been sufficient to ensure quality implementation of the program in the classroom (for instance, when instructors discussed the modules in the last minutes of the session). Third, as compared to previous teacher-led intervention studies, the present program was rather short. Only 4 sessions of 30 min were used to explain the reading strategies, which might have been insufficient to show an impact on all the aspects of these strategies. Fourth, the control variable Major field displayed unforeseen effects on some tasks within the Search and the Evaluation tests. Because the number of participants in one of the majors was low, no definitive conclusions should be drawn from these effects. However, these results suggest that the Major (or academic discipline) might play a differentiated role in shaping students’ ability to search and evaluate online information, which should be investigated in the future.

In spite of these limitations, the present study confirms that university students face challenges when asked to search for and evaluate online information as part of their academic curricula. The data provides encouraging evidence that a targeted intervention using direct instruction and guided practice may foster their online reading strategies. Future studies should explore ways to improve the effectiveness of these interventions.

Instructional implications and perspectives

The present study also has implications for instructional practice and future interventions. First, teacher training and implementation quality should receive more attention, since teachers are on the front line for guiding and fostering students’ skills development. Future intervention studies should include instructors as a target group, providing more intensive training tailored to their needs and promoting adherence and teaching quality, which are fundamental aspects of implementation (Capin et al., 2022). For instance, teachers should be granted the opportunity to test the materials and practice the target strategies well ahead of the intervention, so that they become highly familiar with the principles of the intervention and make it their own. Second, instruction at the undergraduate level should support students’ ability to justify their evaluation decisions, beyond identifying relevant and reliable information. Being able to solve academic informational problems online requires higher-order judgments that are part of informational problem solving (Britt et al., 2018). For instance, contrasting cases of high- and low-quality justifications can be used to teach students the expected standards for deep and evaluative justifications (Martínez et al., 2024). Scaffolds for visual representation of inter-documentary relationships are also a promising approach (Barzilai et al., 2020). Finally, it is also important to differentiate the intervention according to students’ development potential, and to explore different pedagogies for online reading skills, alternating lectures and practice in different ways (Schwartz & Bransford, 1998).

Change history

23 September 2024

A Correction to this paper has been published: https://doi.org/10.1007/s11251-024-09684-6

Notes

Initially, 260 students were enrolled in the experiment. However, 93 students were removed from the sample because they were absent from the post-test (N = 75), did not consent to the inclusion of their data in the analyses (N = 6), took the wrong version of the post-test because of a technical error (N = 6), completed less than 50% of the practice exercises (N = 3), or did not read the documents (never clicked) in the pre- or post-test (N = 3).

References

Abendroth, J., & Richter, T. (2021). How to understand what you don’t believe: Metacognitive training prevents belief-biases in multiple text comprehension. Learning and Instruction, 71, 101394. https://doi.org/10.1016/j.learninstruc.2020.101394.

Archer, A. L., & Hughes, C. A. (2011). Explicit instruction: Effective and efficient teaching. The Guilford Press.

Argelagós, E., Garcia, C., Privado, J., & Wopereis, I. (2022). Fostering information problem solving skills through online task-centred instruction in higher education. Computers & Education, 180, 104433. https://doi.org/10.1016/j.compedu.2022.104433.

Auphan, P., Ecalle, J., & Magnan, A. (2019). Computer-based assessment of reading ability and subtypes of readers with reading comprehension difficulties: A study in French children from G2 to G9. European Journal of Psychology of Education, 34(3), 641–663. https://doi.org/10.1007/s10212-018-0396-7.

Ayroles, J., Potocki, A., Ros, C., Cerdán, R., Britt, M. A., & Rouet, J. F. (2021). Do you know what you are reading for? Exploring the effects of a task model enhancement on fifth graders’ purposeful reading. Journal of Research in Reading, 44(4), 837–858. https://doi.org/10.1111/1467-9817.12374.

Bancal, M., & Dobaire, D. (2022). Évaluer ses compétences numériques avec pix pour construire son parcours d’inclusion numérique. Informations Sociales, 1, 99–102. https://doi.org/10.3917/inso.205.099.

Barsky, E., & Bar-Ilan, J. (2012). The impact of task phrasing on the choice of search keywords and on the search process and success. Journal of the American Society for Information Science and Technology, 63(10), 1987–2005. https://doi.org/10.1002/asi.22654.

Barzilai, S., Mor-Hagani, S., Zohar, A. R., Shlomi-Elooz, T., & Ben-Yishai, R. (2020). Making sources visible: Promoting multiple document literacy with digital epistemic scaffolds. Computers & Education, 157, 103980. https://doi.org/10.1016/j.compedu.2020.103980.

Berger, A., & Kiefer, M. (2021). Comparison of different response time outlier exclusion methods: A simulation study. Frontiers in Psychology, 12, 675558. https://doi.org/10.3389/fpsyg.2021.675558.

Brand-Gruwel, S., & van Strien, J. L. (2018). Instruction to promote information problem solving on the Internet in primary and secondary education: A systematic literature review. Handbook of multiple source use, 401–422. https://doi.org/10.4324/9781315627496-23.

Brand-Gruwel, S., & Wopereis, I. (2006). Integration of the information problem-solving skill in an educational programme: The effects of learning with authentic tasks. Avoiding simplicity, confronting complexity: Advances in studying and designing (computer-based) powerful learning environments (4 ed., Vol. 3, pp. 243–263). Brill Academic. https://doi.org/10.1163/9789087901189_009.

Brand-Gruwel, S., Wopereis, I., & Vermetten, Y. (2005). Information problem solving by experts and novices: Analysis of a complex cognitive skill. Computers in Human Behavior, 21(3), 487–508. https://doi.org/10.1016/j.chb.2004.10.005.

Brand-Gruwel, S., Wopereis, I., & Walraven, A. (2009). A descriptive model of information problem solving while using internet. Computers & Education, 53(4), 1207–1217. https://doi.org/10.1016/j.compedu.2009.06.004.

Brand-Gruwel, S., Kammerer, Y., Van Meeuwen, L., & Van Gog, T. (2017). Source evaluation of domain experts and novices during web search. Journal of Computer Assisted Learning, 33(3), 234–251. https://doi.org/10.1111/jcal.12162.

Brante, E. W., & Strømsø, H. I. (2018). Sourcing in text comprehension: A review of interventions targeting sourcing skills. Educational Psychology Review, 30(3), 773–799. https://doi.org/10.1007/s10648-017-9421-7.

Bråten, I., Brante, E. W., & Strømsø, H. I. (2019). Teaching sourcing in upper secondary school: A comprehensive sourcing intervention with follow-up data. Reading Research Quarterly, 54(4), 481–505. https://doi.org/10.1002/rrq.253.

Breakstone, J., Smith, M., Connors, P., Ortega, T., Kerr, D., & Wineburg, S. (2021). Lateral reading: College students learn to critically evaluate internet sources in an online course. Harvard Kennedy School Misinformation Review, 2(1), 1–17. https://doi.org/10.37016/mr-2020-56.

Britt, M. A., Rouet, J. F., & Durik, A. M. (2018). Literacy beyond text comprehension: A theory of purposeful reading. Taylor & Francis. https://doi.org/10.4324/9781315682860.

Britt, M. A., Durik, A., & Rouet, J. F. (2022). Reading contexts, goals, and decisions: Text comprehension as a situated activity. Discourse Processes, 59(5–6), 361–378. https://doi.org/10.1080/0163853x.2022.2068345.

Brodsky, J. E., Brooks, P. J., Scimeca, D., Galati, P., Todorova, R., & Caulfield, M. (2021a). Associations between online instruction in lateral reading strategies and fact-checking COVID-19 news among college students. AERA Open, 7. https://doi.org/10.1177/23328584211038937.

Brodsky, J. E., Brooks, P. J., Scimeca, D., Todorova, R., Galati, P., Batson, M., & Caulfield, M. (2021b). Improving college students’ fact-checking strategies through lateral reading instruction in a general education civics course. Cognitive Research: Principles and Implications, 6, 1–18. https://doi.org/10.1186/s41235-021-00291-4.

Capin, P., Roberts, G., Clemens, N. H., & Vaughn, S. (2022). When treatment adherence matters: Interactions among treatment adherence, instructional quality, and student characteristics on reading outcomes. Reading Research Quarterly, 57(2), 753–774. https://doi.org/10.1002/rrq.442.

Cerdán, R., Gilabert, R., & Vidal-Abarca, E. (2011). Selecting information to answer questions: Strategic individual differences when searching texts. Learning and Individual Differences, 21(2), 201–205. https://doi.org/10.1016/j.lindif.2010.11.007.

Cerdán, R., Pérez, A., Vidal-Abarca, E., & Rouet, J. F. (2019). To answer questions from text, one has to understand what the question is asking: Differential effects of question aids as a function of comprehension skill. Reading and Writing, 32, 2111–2124. https://doi.org/10.1007/s11145-019-09943-w.

Clark, R. C., & Mayer, R. E. (2016). E-learning and the science of instruction: Proven guidelines for consumers and designers of multimedia learning. Wiley.

Clark, R., Kirschner, P. A., & Sweller, J. (2012). Putting students on the path to learning: The case for fully guided instruction. American Educator, 36(1), 5–11.

CNIL (2021). Le registre des activités de traitement. https://www.cnil.fr/fr/RGDP-le-registre-des-activites-de-traitement.

Cromley, J. G., & Azevedo, R. (2009). Locating information within extended hypermedia. Educational Technology Research and Development, 57, 287–313.

Davis, D. S. (2013). Multiple comprehension strategies instruction in the intermediate grades: Three remarks about content and pedagogy in the intervention literature. Review of Education, 1(2), 194–224. https://doi.org/10.1002/rev3.3005.

Drake, S. M., & Reid, J. L. (2018). Integrated curriculum as an effective way to teach 21st century capabilities. Asia Pacific Journal of Educational Research, 1(1), 31–50. https://doi.org/10.30777/apjer.2018.1.1.03.

Engelen, E., & Budke, A. (2022). Promoting geographic internet searches and subsequent argumentation using an Open Educational Resource. Computers and Education Open, 3. https://doi.org/10.1016/j.caeo.2022.100090.

Esteve-Mon, F. M., Llopis-Nebot, M. Á., & Adell-Segura, J. (2020). Digital teaching competence of university teachers: A systematic review of the literature. IEEE Revista Iberoamericana De Tecnologías Del Aprendizaje, 15(4), 399–406. https://doi.org/10.1109/rita.2020.3033225.