Abstract

Neural systems process information. This platitude contains an interesting ambiguity between multiple senses of the term “information.” According to a popular thought, the ambiguity is best resolved by reserving semantic concepts of information for the explication of neural activity at a high level of organization, and quantitative concepts of information for the explication of neural activity at a low level of organization. This article articulates the justification behind this view, and concludes that it is an oversimplification. An analysis of the meaning of claims about Shannon information rates in the spiking activity of neurons is then developed. On the basis of that analysis, it is shown that quantitative conceptions of information are more intertwined with semantic concepts than they seem to be, and, partially for that reason, are also more philosophically interesting.


1 Introduction

If anything deserves to be called an information processing device, the brain does. Sophisticated behavior requires a device that can track and process an enormous amount of data. How does the brain manage it? The neuron doctrine, first established on the basis of anatomical evidence in 1905 by Ramón y Cajal, says that individual neurons are the brain’s fundamental functional units, and that they play this role by sending chemical and electrical signals to one another. Neurons, it seems reasonable to infer, must themselves be simple information processing devices. This idea is old, and has played a fundamental role in the development of neuroscience. McCulloch and Pitts began referring to it as a law of neural science back in 1943 (McCulloch and Pitts 1943).

Despite this, it is not easy to find a straight answer to the question: what kind of information do neurons process? Philosophers have suggested that, at the most coarse-grained level of analysis, there are just two kinds: Shannon information, and semantic information (Godfrey-Smith and Sterelny 2008; Piccinini and Scarantino 2011). A physical signal conveys semantic information if it conveys an instruction or reports a fact. Human language provides the most obvious examples of semantic information transmission, but semantic information can also be transmitted without the use of language. For example, a stop sign and a red traffic light convey the same instruction, but only one of them uses language to get the message across. Shannon information, which derives from an area of applied mathematics called information theory, has less to do with the meanings of signs, and more to do with the frequency with which different signal types appear.

When cognitive psychologists talk about information processing operations in the brain, they are typically talking about a version of semantic information. Cognitive psychologists are typically interested in understanding how purposeful behavior gets generated by the mental representations of the world that are stored in our heads. Mental representations can transmit both varieties of semantic information mentioned above. Perceptual representations function like reports about the nature of the perceived environment, while motor representations function like instructions to behave this way or that. However, when we descend to the level of a single neuron, and attempt to describe the spiking behavior observed at that level, semantic concepts no longer have any clear application. Neuroscientists who study the properties of individual spike trains tend to talk readily about Shannon information, but not about particular instructions or commands that action potentials are meant to convey.

So, at least at first glance, the best answer to the question “what kind of information is brain information?” seems to have two parts. At a high level of neural organization, brain information is semantic, but down at the level of single neurons, semantic properties are irrelevant, and the only information to speak of is Shannon information. My aim in this article is to flesh out this bifurcated view of how informational concepts apply to the brain and ask whether it is justified. The upshot of the discussion is that the bifurcation is somewhat less clear than our first glance suggests, and that claims about Shannon information at the single neuron level are not entirely independent from concerns about semantic properties.

2 Why Action Potentials Do Not Transmit Semantic Information

In order to assess the bifurcation view of brain information, we need to understand the philosophical rationale behind the application of semantic terms. Ordinary language philosophers, inspired by Ryle and Wittgenstein, were the first to make the articulation of this rationale a core feature of philosophical theory. On their view, semantic properties emerge only in contexts in which human agency is at work. They argued that terms like “perceive,” “think,” and “process information” can only be sensibly applied to rational agents. For them, to apply these terms to small neural structures within the brain is to make a kind of category mistake called the “mereological fallacy” (Bennett and Hacker 2003). Their reasoning can be summarized with the following argument: (i) agential language can only be applied to persons; (ii) to say that a thing processes information is to describe it in agential language; (iii) neurons are not persons; (iv) neurons, therefore, cannot be described as information processing devices.

Today, most philosophers of mind and language are usually happy to reject premise (i), and are, accordingly, willing to countenance semantic phenomena in systems far simpler than fully rational human persons. Nevertheless, at least within naturalistic philosophy, a kernel of the ordinary language view has been retained. It can be expressed as a necessary condition on the realization of semantic properties. A physical signal has semantic properties only where there is an interest-driven justification for the response it engenders. This principle is one of the core ideas behind recent work on the evolution of meaning and communication.Footnote 1 Since human persons clearly have interests, and since they typically respond to perceptual information by behaving in ways that further their interests, their perceptual and cognitive states can, according to this principle, justifiably be described as signals that transmit semantic information. More interestingly, this principle also justifies the use of semantic description in very simple organisms. Consider quorum sensing. Some bacteria will emit a signaling molecule once they detect that the density of conspecifics has surpassed some threshold. If the signal is successfully received by neighboring bacteria, it can trigger interesting collective behaviors such as the formation of a biofilm (Rutherford and Bassler 2012). In this example, the relevant sense of “interest” is evolutionary rather than rational. The formation of the biofilm is in the interest of the initial bacterium because it is adaptive. It might, for example, allow the bacterial colony to remain attached to a surface where it is likely to get continued access to nutrients.

We now have an initial understanding of a naturalistic philosophical rationale behind the description of behavior in semantic terms. Given this rationale, we can ask: do spike trains carry semantic information? Surprisingly, and despite the fact that neurons clearly participate in the generation of semantic phenomena, there are at least two good reasons to think that they do not themselves exhibit semantic properties. The first reason is that, unlike bacteria, neurons do not have interests in the standard evolutionary sense. Most neurons do not undergo mitosis, and therefore cannot form anything like cell lineages within the lifetime of an individual organism. Because they do not form lineages, they are not subject to natural selection. As a result, the notion of “evolutionary interests” does not apply to neurons in the relatively clear way that it does apply to bacteria. If neurons do have interests, it is only in an extremely attenuated sense, the usefulness of which is controversial.Footnote 2

There is, in any case, a more fundamental reason to think that spike trains do not carry semantic information: they do not have the right kind of causal connection to the environment. To see this, consider the strategy for attributing semantic content that Daniel Dennett calls “the intentional stance” (Dennett 1989). In order to predict the behavior of an organism, you treat it as a rational agent. Given your knowledge of the organism’s goals and the environment in which it is embedded, you formulate a hypothesis about which mental content it would make sense for it to have. The attribution of content is justified to the extent that it allows you to make more efficient and/or more accurate predictions about the behavior of the organism.Footnote 3

The intentional stance will typically provide little justification for the ascription of semantic content to a spike train because, when we observe the spike train of a single neuron, it is typically far from clear exactly which behavioral goal it is meant to realize. This is because, as Rosa Cao has eloquently demonstrated (Cao 2012), whole-organism behaviors are typically generated by a symphony of neural activity to which any given neuron makes only a small contribution. Most individual spike trains do not reliably cause whole-organism behaviors that can be interpreted as furthering the interests of the organism, and therefore cannot be reasonably viewed as sending signals with a particular meaning that is derived from their association with that behavior.

In his new book, which includes a lengthy discussion of the distinction between Shannon information and semantic information, Dennett seems to share Cao’s view. Although the intentional stance might be applicable to some extent at the coarse-grained level of functional neuroanatomy, such as in the discovery that the fusiform face area has the function of processing perceptual information about faces, it seems inapplicable, given the current state of knowledge, to “the incredibly convoluted details of individual neuron connectivity and activity” (Dennett 2017, p. 111).

So we seem to have a clear rationale for attributing semantic properties to whole organisms, but no corresponding rationale for attributing semantic properties to the behaviors of individual neurons. When viewed as an isolated fact, this is not surprising. After all, we attribute all sorts of interesting properties to whole organisms that we do not attribute to their parts. But the situation does become puzzling when we reflect on the widespread use of informational terminology at the single neuron level. Open any introductory neuroscience textbook and you are bound to find some version of the claim that neurons send information to one another. Moreover, in the first paragraph of this essay, I gave a casual argument in support of the claim that neurons are information processing devices. But if the reasoning in the past few paragraphs has been sound, and we therefore have no rationale for the ascription of semantic properties to trains of action potentials, then either that casual argument is flawed, or it appeals to a sense of the word “information” that is distinct from the more common, semantic sense.

One of the reasons that the concept of Shannon information seems useful is that it supplies this distinct sense of the term “information.” Shannon information is austere and mathematical. It depends on nothing other than the probabilities associated with spike trains, and those probabilities can be estimated by means of direct empirical measurement. And direct empirical measurement, one hopes, does not require the application of controversial principles from the philosophy of mind! As Shannon and Weaver claimed in the opening of their landmark book on information theory, “These semantic aspects of communication are irrelevant to the engineering problem” (Shannon and Weaver 1949, p. 2). But if Shannon information has nothing at all to do with semantic information - if it is just a scientifically neutral mathematical concept - why bother describing spike trains as informational in the first place? Is it just a linguistic accident that we use the term “information” to describe these two sets of properties? I think the relationship between semantic information and Shannon information is more subtle than that. To see why, I will now introduce some basic ideas from information theory, and then briefly describe how they are used in the study of spike trains.

3 Information Theory and Its Use in Neuroscience

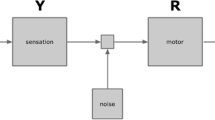

The central quantity in information theory is called entropy. Entropy is a measure of how much information is associated with a single message (or a series of messages in a channel). How to capture that idea quantitatively? Intuitively, the amount of information in a communication system is related to its capacity to reduce uncertainty. If a message is highly probable, then one can be fairly certain that it will be expressed. If improbable, one has very little certainty that it will be expressed. This suggests that the entropy of a message should be inversely proportional to its probability. Another natural requirement is that the amount of information in a sequence of two messages should be the sum of the information provided by each individual message. Probabilities combine multiplicatively (the probability of two heads in a row is (1 / 2)(1 / 2)). Additivity is imposed by taking the logarithm. So, the expression for the entropy of an individual message x is the logarithm of the inverse of its probability, or log(1 / p(x)). This shows that, on any given occasion, the production of a low probability message provides a large amount of information. However, since low probability messages occur infrequently, they contribute less to the average entropy of an information source than do higher probability messages. To compute the average entropy of an informational source, we therefore weight the entropies of individual messages by their probabilities. Summing over those weighted entropies yields the average entropy of an informational source.
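The construction just described can be written compactly. As a sketch, with notation that is assumed here rather than taken from the text (p(x) for the probability of message x, and base-2 logarithms so that the unit is the bit), the information in a single message and the average entropy of a source X are:

$$
h(x) = \log_2 \frac{1}{p(x)}, \qquad H(X) = \sum_{x} p(x) \log_2 \frac{1}{p(x)} = -\sum_{x} p(x) \log_2 p(x)
$$

For a fair coin, each outcome has p(x) = 1/2, so h(x) = 1 bit and H(X) = 1 bit. Two independent tosses have four equally likely outcomes, giving log2(4) = 2 bits, which is the sum of the two individual contributions, as the additivity requirement demands.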

One consequence of this expression is that the more variation there is in a set of signals, the more entropy there is in the source from which they are derived. Imagine I regularly report to you the results of the football games that occur at my home stadium. Then, we can think of my home stadium as an information source. There is a probability distribution over the possible game outcomes. There is a distinct distribution over the possible things I might say about those outcomes. The term “messages” refers to the outcomes themselves. The term “signals” refers to the things I say in order to relay the messages.

Message and signal entropies are properties of individual components within a communication system. In order for the communication system to function well, the signals and the messages must be systematically related. The measure of that relationship is called the mutual information. If my reports to you on the football games deserve to be called informative, there must be a correlation between the reports and the outcomes themselves. From a mathematical perspective, mutual information is similar to statistical measures of correlation between random variables, except that it scales with the entropy (variability) in the source variable. If we assume that my reports about the football games are always accurate, so that the correlation between signals and messages is 1, the amount of mutual information in the system is equivalent to the initial entropy (variability) in the distribution of game outcomes. So, if our arrangement is that I report to you the final score of each game, the amount of mutual information expressed by our communication system will be substantially higher than it would have been, had we arranged for me to report only the name of the winning team.

We can think about the entropy of the distribution of game outcomes as equivalent to your average degree of uncertainty about game outcomes. Call that quantity H(X), where X is the game outcome. To compute the mutual information, we subtract from it the uncertainty that remains about the value of X once the value of Y, my report, is known. This term, the conditional entropy, can be expressed as H(X|Y). The mutual information, therefore, can be written as:

$$I(X;Y) = H(X) - H(X \mid Y)$$

Mutual information is measured in bits. But since the mutual information between two variables can change over time, the quantity of interest in theoretical neuroscience is more often the bit rate; that is, the number of bits one variable carries about another per unit of time.
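The football example can be checked numerically. The following is a minimal sketch, not a method drawn from the literature discussed here: the score distribution is invented, my reports are assumed to be perfectly accurate, and all function and variable names are hypothetical. It computes mutual information from the joint distribution of messages and signals, which is equivalent to subtracting H(X|Y) from H(X).

```python
import math
from collections import Counter


def entropy(dist):
    """Shannon entropy in bits of a dict mapping outcomes to probabilities."""
    return sum(p * math.log2(1.0 / p) for p in dist.values() if p > 0)


def mutual_information(joint):
    """I(X;Y) in bits from a dict mapping (x, y) pairs to joint probabilities."""
    px, py = Counter(), Counter()
    for (x, y), p in joint.items():
        px[x] += p  # marginal probability of message x
        py[y] += p  # marginal probability of signal y
    return sum(
        p * math.log2(p / (px[x] * py[y]))
        for (x, y), p in joint.items()
        if p > 0
    )


# Hypothetical distribution over final scores (home, away); invented numbers.
scores = {(1, 0): 0.25, (2, 1): 0.25, (0, 1): 0.25, (1, 2): 0.25}


def winner(score):
    """Collapse a final score to the name of the winning side."""
    home, away = score
    return "home" if home > away else "away"


# Perfectly accurate reports: each signal is a deterministic function of the message.
joint_score_report = {(s, s): p for s, p in scores.items()}
joint_winner_report = {(s, winner(s)): p for s, p in scores.items()}

mi_score = mutual_information(joint_score_report)    # report the full score
mi_winner = mutual_information(joint_winner_report)  # report only the winner
```

Under these assumptions, reporting the full score conveys 2 bits per game, which equals the entropy of the outcome distribution, while reporting only the winner conveys 1 bit. A bit rate follows by multiplying the per-report figure by the number of reports per unit of time.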

Most of the experimental data on bit rates for individual neurons comes from experiments on perceptual neurons. The organism is fixed in place, presented with a particular class of stimuli, and recordings are made from the neuron of interest.

The estimates in Table 1, which are taken from a classic review paper, constitute canonical examples. The quantities in the first column represent the bits per second transmitted by a perceptual neuron under natural stimulus conditions. Those in the second represent the average coding efficiency of the spike train of that same neuron. The coding efficiency is the ratio of the rate in the first column to the default entropy of the neuron’s spiking behavior - that is, its behavior in the absence of a characteristic stimulus. The ratio is so called because it describes how much of the variance in a neuron’s spike train is exploited to carry information about changes in a time-dependent stimulus. As the ratio approaches one, the neuron is said to approach the physical limits on the transmission of information (Rieke et al. 1993; Koch et al. 2004).
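The ratio described in this paragraph can be sketched in a few lines of Python. The function name, the argument names, and the example numbers are hypothetical; in particular, the numbers are not taken from Table 1. The check that the information rate does not exceed the entropy measure is an assumption of the sketch, reflecting the statement that the ratio approaches one at the physical limit.

```python
def coding_efficiency(info_rate_bits_per_s, entropy_rate_bits_per_s):
    """Ratio of a spike train's information rate to its entropy measure.

    Both arguments are in bits per second. The sketch assumes the
    information rate cannot exceed the entropy measure, so the result
    lies between 0 and 1.
    """
    if entropy_rate_bits_per_s <= 0:
        raise ValueError("entropy rate must be positive")
    if not 0 <= info_rate_bits_per_s <= entropy_rate_bits_per_s:
        raise ValueError("information rate must lie between 0 and the entropy rate")
    return info_rate_bits_per_s / entropy_rate_bits_per_s


# Made-up numbers, for illustration only.
example_efficiency = coding_efficiency(60.0, 120.0)
```

With these invented inputs, half of the available variability in the spike train would be carrying information about the stimulus.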

4 The Ontological View

So far, I’ve described very briefly how information theoretic ideas are used in neuroscience. Now I want to shift focus to a related question: what exactly does it mean to say that a neuron transmits Shannon information? In other words, what is the empirical content of this claim? Answering this question will help us to evaluate the justification for the bifurcation view of brain information discussed at the outset.

According to one prominent tradition, to say that a physical thing carries or expresses Shannon information simply means that there is an empirical correlation between it and some other physical thing. The empirical correlation view is expressed, for example, in the Stanford Encyclopedia of Philosophy entry on information in biology. There, Godfrey-Smith and Sterelny say that information is present wherever there is contingency and correlation.

For Shannon, anything is a source of information if it has a number of alternative states that might be realized on a particular occasion. And any other variable carries information about the source if its state is correlated with the state of the source (Godfrey-Smith and Sterelny 2008).

What is the motivation for this extremely permissive view of information? Philosophers interested in the mind and brain who have discussed Shannon information have usually done so in the context of either endorsing or denying a proposal to provide a reductive explanation of semantic phenomena in terms of raw empirical probabilities. This is a long-standing project undertaken first by Fred Dretske (whose ultimate goal was a naturalistic account of knowledge), and continued today by Brian Skyrms and his followers. In order for that kind of reductive project to make sense, the notion of Shannon information cannot presuppose the existence of semantic properties. Philosophers interested in making progress on this reductive project therefore have a reason to conceptualize Shannon information in such a way as to ensure that ascribing it to a physical system is thoroughly uncontroversial. Given this understanding of the concept, Shannon information will be instantiated in the relation between a stimulus and a perceptual neuron, but it will also be instantiated in the relation between any two arbitrarily chosen neurons, provided that those neurons are not perfectly stochastically independent.

This permissive ontological view is not adequate for interpreting the scientific content of the claim that a neuron carries or transmits Shannon information. Common causes and spurious correlations are everywhere. Covariation among empirical variables is therefore cheap. Without some independently motivated theoretical framework, bare correlation is no aid to understanding how a system works. This is especially true in a complex networked system like the brain. In such a system, correlation is practically ubiquitous. So, if Shannon information is just a fancy term for empirical correlation, as the ontological view suggests, Shannon information is ubiquitous. If Shannon information is ubiquitous, having particularly high rates of Shannon information flowing through a system cannot be viewed as a functional capacity of the system.

As the appeal to efficiency in Table 1 illustrates, however, information rates do describe performance capacities. To reinforce this idea, consider the design of experiments used to evoke the appropriate data. Above, I said that the coding efficiency of a neuron is the ratio of its information rate, in the presence of a stimulus, to an information theoretic measure of the default variability in the neuron’s behavior. Designing an experiment that evokes the relevant data requires that we understand both the neuron’s default behavior when it is not engaged in a task, as well as its behavior when it is optimally active, helping to process the kind of stimulus to which it is best attuned. So, if we are to correctly estimate the information rate of a perceptual neuron, our choice of stimulus matters crucially. In a discussion of information theory as applied to vision in particular, Dayan and Abbott say:

The basic assumption is that these receptive fields serve to maximize the amount of information that the associated neural responses convey about natural scenes in the presence of noise. Information theoretic analyses are sensitive to the statistical properties of the stimuli being represented, so the statistics of natural scenes play an important role in these studies (Dayan and Abbott 2001, p. 135).

So, if we want an accurate estimate of the neuron’s information rate, we need to design an experiment in which the kind of stimulus we employ corresponds to the biological function of the neuron from which we record. The relevant notion of function here is the kind that is applicable in evolutionary explanations of biological traits, sometimes known as etiological functions. A trait has a function in this sense if it has played the right sort of fitness-enhancing causal role in the organism’s ancestral lineage.

This idea is bound to provoke skepticism. If neural information rates can only be estimated accurately if the researchers know what role the neural signal played in the evolutionary history of the organism, then we might as well pack it in and go study something more tractable. The situation is more hopeful than it looks, however. As is the case elsewhere in biology, functional ascriptions are not typically made on the basis of detailed knowledge of the historical record. Instead, they are grounded in adaptationist reasoning (Dennett 1996). In perceptual neuroscience, adaptationist reasoning yields a simple principle: the stimulus that best reflects the etiological function of the neuron is the one that maximizes the mutual information between stimulus and spike train. This optimality assumption allows neuroscientists not only to fine-tune their understanding of neural function but, in some cases, to discover functionally appropriate stimuli in the first place. For example, one can generate artificial stimuli with a range of statistical parameters, and then use principal components analysis or other bottom-up search techniques to identify which parameter settings lead to maximal informational performance (Sharpee et al. 2004).

The lesson here is that estimates of neural information transmission are about the performance capacity of the neuron; and to measure the performance capacity of a neuron, you have to create the right experimental conditions. Creating those conditions forces you to draw on an understanding of what task the neuron is trying to perform. Because the ontological view is blind to the function of a neuron, it is not the conception of information we need to interpret the scientific content of neural information rates.

5 The Reification View

Because estimates of neural information rates are highly sensitive to experimental design and choice of stimulus, the scientific proponents of the information-theoretic approach are often anxious to demonstrate that their methods are objective and empirically sound. They want to show that the amount of information flowing through a neural circuit is not just a matter of the scientist’s perspective on the situation. However, the desire to show that informational quantities are genuinely empirical sometimes leads to an awkward sort of reification. In the context of a paper on information-theoretic approaches to retinal physiology, Meister and Berry, well-established practitioners in the field, make the following remark:

It has long been recognized that the essential substance transmitted by neurons is not electric charge or neuro-chemicals, but information. In analyzing a neural system, it is essential to measure and track the flow of this substance, just as in studies of the vascular system one might want to measure blood flow.

This remark (Meister and Berry 1999) is such an obvious exaggeration that one is forced to wonder whether the authors really meant it. Nevertheless, the claim warrants closer attention. Although it may be obvious that it is misleading, it is not entirely obvious what makes it so. In my view, the analogy between information and blood is flawed primarily because it suggests that information is material stuff, which it is not. Blood can be removed from the body and nevertheless continue to deserve its status as blood (blood banks would be pointless were this not so). Action potentials are not like this. They play the role of an informational signal only when they are embedded in an organism that moves about in the world. In vitro, an action potential is just a burst of electrochemical activity. There’s nothing particularly informational about it.

This is not merely an intuitive judgment. The quantity of information carried by a signal depends essentially on that signal being incorporated into a functional system capable of reading it, as well as on the manner in which it is read. To see this, consider an example from communications technology. Last year, the National Security Agency in the United States discovered that terrorists were communicating with one another via codes embedded in JPEG files. A picture of a puppy would be sent to the attacker, but deep in the file was a pattern that could be decoded into natural language. How much information did the file contain? From the perspective of the modem used to download the file, it might have been exactly 2Mb. But from the perspective of the would-be terrorist, it could have been just 1 bit. It might, for example, have resolved the uncertainty between just two options: “attack” and “wait.”
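The arithmetic behind these two figures can be made explicit. This is a sketch under two assumptions that are not part of the example itself: the receiver treats the two options as equally likely, and the modem treats each of the file's N binary digits as an independent, equally likely symbol.

$$
H_{\text{receiver}} = \log_2 2 = 1 \text{ bit}, \qquad H_{\text{modem}} = \log_2 2^{N} = N \text{ bits}
$$

With N on the order of two million, the same physical file is assigned quantities that differ by about six orders of magnitude, depending only on the set of alternatives that the receiver distinguishes.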

The lesson implicit in this example is that the quantity of Shannon information attached to a signal is not determined entirely by its intrinsic material properties. It depends also on the capacity of a receiver to recognize the signal, and the manner in which it is recognized. This makes informational quantities inherently functional. Blood has a function, of course; but, unlike information, its quantity does not depend on whether it is measured in a functional context.

How do neuroscientists take this receiver relativity into account when estimating neural information rates? Once again, an adaptationist perspective is called for. Adaptationism gives us reason to think that a spike train which is finely calibrated to a perceptual stimulus is not just a wasted burst of energy. We assume, and in some cases have neuroanatomical evidence to believe, that downstream receiver mechanisms are standing by; ready, at least on some occasions, to make use of the signal in the service of the organism’s behavioral goals.

6 A Functional Analysis of Shannon Information

We’ve seen that the claim that a neural spike train transmits Shannon information cannot be interpreted as the rather bland claim that the time course of the spike train just happens to be correlated with some other empirical property. Nor can it be interpreted as the rather mysterious claim that spike trains constitute a special sort of material substance that is the hidden target of neuroscientific investigation. So how should we interpret it? What positive account can we provide, given the discussion thus far?

One lesson that emerged from the discussion of the ontological and reification views of Shannon information was that neural information rate claims rely on adaptationist reasoning. Consequently, their scientific content includes an ineliminable functional commitment: the spiking properties of neurons came to be the way they are for a reason. Another problem with the two analyses discussed above was that they lacked any clear conceptual relationship to the definitions introduced in Sect. 3. My positive analysis is designed to remedy these shortcomings. On my view, the claim that a neuron transmits Shannon information should be interpreted to mean (i) that the neuron functions as a component in a semantic system, (ii) that the functional capacities of the semantic system depend on the degree to which it can exploit variations in the physical states of its component parts, and (iii) that the efficiency of that exploitation can, at least in principle, be measured.

This analysis shows how the relatively abstract idea behind information theory, entropy, can have functional significance in a biological system. Recall from Sect. 3 that the entropy of an informational source increases with the amount of variation in the physical states it can realize. From an adaptationist perspective, the constant variation in neurons’ output signals must contribute to the brain’s ability to process semantic information about what is going on in the environment and what to do about it. The more entropy the average neuron can express, therefore, the more semantic processing the brain can achieve with its relatively fixed stock of physical resources.

From this perspective, the Shannon properties of individual neural spike trains can help explain how a brain manages to process so much semantic information. It also helps us to think more clearly about the relationship between information and evolution. If neurons only carry Shannon information, and if Shannon information has nothing at all to do with semantic information, one might reasonably wonder why neurons would have evolved such impressive rates of coding efficiency. As Peter Godfrey-Smith has pointed out (Godfrey-Smith 2011), there is no reason for an informational system to evolve unless the information it carries is worth getting across.

It is true that much of information theory can proceed without paying attention to the specific messages being sent over an information channel, but there is no point in maintaining and using the channel unless the messages sent do bear on something in the world, and can guide actions or inferences of some kind.

Although this passage is drawn from a discussion that is not particularly concerned with neuroscience, Godfrey-Smith’s subtle formulation is exactly right for our purposes. We can say with considerable confidence that spike trains “bear on something in the world” and also that they “guide actions or inferences,” without committing ourselves to the view that there is some particular chunk of semantic content that a spike train has been selected to convey. The informational properties of individual neurons were selected, rather, in order to increase the efficiency of the semantic processing that becomes visible only at a higher level of neural organization.

If this interpretation is correct, the bifurcation view discussed in the introduction cannot be quite right. The bifurcation view says that semantic properties are irrelevant to understanding the spiking behavior of single neurons. But according to the functional analysis just suggested, claims about the rate of Shannon information only make sense in a context in which semantic information is being transmitted. This claim is not meant to suggest that all applications of information theory will involve semantic systems. Information theory is a branch of applied mathematics, and has a staggering range of interesting applications. For example, it is used to determine how hugely complex genomic data sets can be represented most efficiently, in order to simplify computation (Vinga 2013). I set cases like this one aside. The claim I am making is that if an informational rate is intended as a description of a functional capacity within a system that uses information, then semantic properties must also be involved.

7 On the Conceptual Fecundity of Shannon Information

To this author, the suggestion that information in biological systems can be measured is as inspiring as it is bold. The neuroscientists working in this area are saying, at least by implication, that we can bring quantitative rigor to the study of meaning and representation in animals. How could philosophers fail to take interest in a claim like that? It is surprising, therefore, that the philosophical literature includes precious little discussion of the topic (Footnote 4). One reason for the lack of interest in working out the philosophical implications of this area of science may have to do with the fact that information theory has its roots in computer science and communications technology, rather than biology. In computer science, there is no significant danger that we will be mistaken about what counts as an elementary symbol. There is no need to worry about whether you have correctly understood the functional decomposition of the information processor before thinking through coding strategies. What counts as a symbol is underwritten by engineering conventions that we created, and which are baked into the way we learn to handle questions about information-theoretic properties. If you consult a book on image compression algorithms, for example, there will be plenty of discussion of the properties Shannon introduced, but little or nothing on the manner in which the image is stored in hardware. One can restrict one’s attention to mathematical transformations of bit strings since there is, at least quite often, no need to know anything about the physical characteristics of the machine that will execute the algorithm.

This is very much unlike the world of biology, where basic questions about how semantic signals are encoded remain open. If it turns out that, as some neuroscientists believe, neurobiological information transmission occurs in large part at the dendritic level rather than at the level of spiking neurons (Ovsepian and Dolly 2011), then the mainstream understanding of functional decomposition of information processing in the brain will be wrong. If that is the case, our current estimates of the rate of information transmission will also be wrong. Moreover, the adaptationist principles that neuroscientists use to reason about the functional decomposition of neural signaling systems are themselves open to challenge and revision. For example, the optimality assumption suggested in Sect. 3 stated that the stimulus that best reflects the etiological function of a neuron is the one that maximizes the mutual information between stimulus and spike train. Other principles are possible, however. For example, Levy and Baxter (2002) suggest that the quantity nature actually tries to maximize is the ratio of mutual information to metabolic cost. The lesson here is that if we accept that semantic information and Shannon information are not entirely independent from one another in the domain of biological signaling, the study of information theoretic properties in the brain becomes both more error-prone, and also far more philosophically interesting than we might otherwise have thought.
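The difference between the two optimality principles mentioned above can be illustrated with a toy calculation. The sketch below is not Levy and Baxter's model. It assumes, hypothetically, a noiseless neuron that either fires or stays silent in each time bin, so that the information it transmits equals the entropy of its output, and a made-up metabolic cost with a fixed resting term plus a per-spike term. All names and cost values are assumptions chosen for illustration.

```python
import math


def binary_entropy_bits(p):
    """Entropy in bits of a time bin that contains a spike with probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))


def metabolic_cost(p, resting_cost=1.0, spike_cost=10.0):
    """Hypothetical cost per time bin, in arbitrary units (assumed values)."""
    return resting_cost + spike_cost * p


def best_firing_probability(objective, steps=999):
    """Grid search over firing probabilities strictly between 0 and 1."""
    grid = [i / (steps + 1) for i in range(1, steps + 1)]
    return max(grid, key=objective)


# Principle 1: maximize information per time bin.
p_info = best_firing_probability(binary_entropy_bits)

# Principle 2: maximize information per unit of metabolic cost.
p_ratio = best_firing_probability(
    lambda p: binary_entropy_bits(p) / metabolic_cost(p)
)

print("information-maximizing firing probability:", p_info)
print("information-per-cost-maximizing firing probability:", p_ratio)
```

Under these assumptions the two principles recommend different firing probabilities: once spikes are costly, the ratio is maximized by a sparser code than the one that maximizes raw information. Which principle is taken to describe the neuron's function therefore changes which rate counts as optimal.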

Notes

For a discussion of this issue, see Chapter 8 of Dennett (2017).

Notice that this strategy entails, but is not entailed by, the necessary condition for the attribution of semantic properties mentioned above. This places Dennett’s approach to semantic information within the naturalistic tradition.

But see Rathkopf (2017) for a recent exception.

References

Bennett MR, Hacker PMS (2003) Philosophical foundations of neuroscience, 1st edn. Wiley, Malden

Borst A, Theunissen FE (1999) Information theory and neural coding. Nat Neurosci 2(11):947–957

Calcott B, Griffiths PE, Pocheville A (2017) Signals that make a difference. Br J Philos Sci

Cao R (2012) A teleosemantic approach to information in the brain. Biol Philos 27(1):49–71

Dayan P, Abbott LF (2001) Theoretical neuroscience, vol 10. MIT Press, Cambridge, MA

Dennett DC (1989) The intentional stance. MIT Press, Cambridge

Dennett DC (1996) Darwin’s dangerous idea: evolution and the meanings of life. Simon & Schuster, New York

Dennett DC (2017) From bacteria to Bach and back: the evolution of minds. Penguin, London

Godfrey-Smith P (2011) Signals: evolution, learning, and information, by Brian Skyrms. Mind 120(480):1288–1297

Godfrey-Smith P, Martínez M (2013) Communication and common interest. PLoS Comput Biol 9(11):e1003282

Godfrey-Smith P, Sterelny K (2008) Biological information. In: Zalta EN (ed) The Stanford Encyclopedia of Philosophy, Fall 2008 edn. Metaphysics Research Lab, Stanford University, Stanford

Harms WF (2006) What is information? Three concepts. Biol Theory 1(3):230–242

Koch K, McLean J, Berry M, Sterling P, Balasubramanian V, Freed MA (2004) Efficiency of information transmission by retinal ganglion cells. Curr Biol 14(17):1523–1530

Levy W, Baxter RA (2002) Energy-efficient neuronal computation via quantal synaptic failures. J Neurosci 22(11):4746–4755

McCulloch WS, Pitts W (1943) A logical calculus of the ideas immanent in nervous activity. Bull Math Biophys 5(4):115–133

Meister M, Berry MJ (1999) The neural code of the retina. Neuron 22(3):435–450

Ovsepian SV, Dolly JO (2011) Dendritic snares add a new twist to the old neuron theory. Proc Natl Acad Sci 108(48):19113–19120

Piccinini G, Scarantino A (2011) Information processing, computation, and cognition. J Biol Phys 37(1):1–38

Rathkopf C (2017) Neural information and the problem of objectivity. Biol Philos 32(3):321–336

Rieke F, Warland D, Bialek W (1993) Coding efficiency and information rates in sensory neurons. EPL (Europhys Lett) 22(2):151

Rutherford ST, Bassler BL (2012) Bacterial quorum sensing: its role in virulence and possibilities for its control. Cold Spring Harbor Perspect Med 2(11):a012427

Shannon CE, Weaver W (1949) The mathematical theory of communication. University of Illinois Press, Champaign

Sharpee T, Rust NC, Bialek W (2004) Analyzing neural responses to natural signals: maximally informative dimensions. Neural Comput 16(2):223–250

Skyrms B (2010) Signals: evolution, learning, and information. Oxford University Press, New York

Vinga S (2013) Information theory applications for biological sequence analysis. Brief Bioinform 15(3):376–389

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Cite this article

Rathkopf, C. What Kind of Information is Brain Information?. Topoi 39, 95–102 (2020). https://doi.org/10.1007/s11245-017-9512-6
