Abstract

The Jeffreys–Lindley paradox displays how the use of a \(p\) value (or number of standard deviations \(z\)) in a frequentist hypothesis test can lead to an inference that is radically different from that of a Bayesian hypothesis test in the form advocated by Harold Jeffreys in the 1930s and common today. The setting is the test of a well-specified null hypothesis (such as the Standard Model of elementary particle physics, possibly with “nuisance parameters”) versus a composite alternative (such as the Standard Model plus a new force of nature of unknown strength). The \(p\) value, as well as the ratio of the likelihood under the null hypothesis to the maximized likelihood under the alternative, can strongly disfavor the null hypothesis, while the Bayesian posterior probability for the null hypothesis can be arbitrarily large. The academic statistics literature contains many impassioned comments on this paradox, yet there is no consensus either on its relevance to scientific communication or on its correct resolution. The paradox is quite relevant to frontier research in high energy physics. This paper is an attempt to explain the situation to both physicists and statisticians, in the hope that further progress can be made.

1 Introduction

On July 4, 2012, the leaders of two huge collaborations (CMS and ATLAS) presented their results at a joint seminar at the CERN laboratory, located on the French–Swiss border outside Geneva. Each described the observation of a “new boson” (a type of particle), suspected to be the long-sought Higgs boson (Incandela and Gianotti 2012). The statistical significances of the results were expressed in terms of “\(\sigma \)”: carefully calculated \(p\) values (not assuming normality) were mapped onto the equivalent number of standard deviations in a one-tailed test of the mean of a normal (i.e., Gaussian) distribution. ATLAS observed 5\(\sigma \) significance by combining the two most powerful detection modes (different kinds of particles into which the boson decayed) in 2012 data with full results from earlier data. With independent data from a different apparatus, and only partially correlated analysis assumptions, CMS observed 5\(\sigma \) significance in a similar combination, and when combining with some other modes as CMS had planned for that data set, 4.9\(\sigma \).
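The mapping between a \(p\) value and the equivalent number of standard deviations in a one-tailed normal test can be sketched in a few lines of Python. This is only an illustration of the conversion convention described above, not the procedure used by ATLAS or CMS; the function names `p_from_z` and `z_from_p` are hypothetical.

```python
import math
from statistics import NormalDist

def p_from_z(z: float) -> float:
    """One-tailed p value: upper-tail area of the standard normal beyond z."""
    # erfc keeps precision for very small tail areas, unlike 1 - cdf(z).
    return 0.5 * math.erfc(z / math.sqrt(2.0))

def z_from_p(p: float) -> float:
    """Number of standard deviations whose one-tailed area equals p."""
    # By symmetry, the upper-tail quantile is minus the lower-tail quantile.
    return -NormalDist().inv_cdf(p)

for z in (3.0, 4.9, 5.0):
    print(f"z = {z:.1f}  ->  one-tailed p = {p_from_z(z):.3g}")
```

For example, `z_from_p(p_from_z(5.0))` recovers 5 to within floating-point accuracy, which is the sense in which a carefully calculated \(p\) value is quoted as “5\(\sigma \)”.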

With ATLAS and CMS also measuring similar values for the rates of production of the detected particles, the new boson was immediately interpreted as the most anticipated and publicized discovery in high energy physics (HEP) since the Web was born (also at CERN). Journalists went scurrying for explanations of the meaning of “\(\sigma \)”, and why “high energy physicists require 5\(\sigma \) for a discovery”. Meanwhile, some who knew about Bayesian hypothesis testing asked why high energy physicists were using frequentist \(p\) values rather than calculating the posterior belief in the hypotheses.

In this paper, I describe some of the traditions for claiming discovery in HEP, which have a decidedly frequentist flavor, drawing in a pragmatic way on both Fisher’s ideas and the Neyman–Pearson (NP) approach, despite their disagreements over foundations of statistical inference. Of course, some HEP practitioners have been aware of the criticisms of this approach, having enjoyed interactions with some of the influential Bayesian statisticians (both subjective and objective in flavor) who attended HEP workshops on statistics. These issues lead directly to a famous “paradox”, as Lindley (1957) called it, when testing the hypothesis of a specific value \(\theta _0\) of a parameter against a continuous set of alternatives \(\theta \). The different scaling of \(p\) values and Bayes factors with sample size, described by Jeffreys and emphasized by Lindley, can lead the frequentist and the Bayesian to inconsistent strengths of inferences that in some cases can even reverse the apparent inferences.

However, as described below, it is an understatement to say that the community of Bayesian statisticians has not reached full agreement on what should replace \(p\) values in scientific communication. For example, two of the most prominent voices of “objective” Bayesianism (J. Berger and J. Bernardo) advocate fundamentally different approaches to hypothesis testing for scientific communication. Furthermore, views in the Bayesian literature regarding the validity of models (in the social sciences for example) are strikingly different than those common in HEP.

This paper describes today’s rather unsatisfactory situation. Progress in HEP meanwhile continues, but it would be potentially quite useful if more statisticians become aware of the special circumstances in HEP, and reflect on what the Jeffreys–Lindley (JL) paradox means to HEP, and vice versa.

In “high energy physics”, also known as “elementary particle physics”, the objects of study are the smallest building blocks of matter and the forces among them. (For one perspective, see Wilczek (2004).) The experimental techniques often make use of the highest-energy accelerated beams attainable. But due to the magic of quantum mechanics, it is possible to probe much higher energy scales through precise measurements of certain particle decays at lower energy; and since the early universe was hotter than our most energetic beams, and still has powerful cosmic accelerators and extreme conditions, astronomical observations are another crucial source of information on “high energy physics”. Historically, many discoveries in HEP have been in the category known to statisticians as “the interocular traumatic test; you know what the data mean when the conclusion hits you between the eyes.” (Edwards et al. 1963, p. 217, citing J. Berkson). In other cases, evidence accumulated slowly, and it was considered essential to quantify evidence in a fashion that relates directly to the subject of this review.

A wide range of views on the JL paradox can be found in reviews with commentary by many distinguished statisticians, in particular those of Shafer (1982), Berger and Sellke (1987), Berger and Delampady (1987a), and Robert et al. (2009). The review of Bayes factors by Kass and Raftery (1995) and the earlier book by economist Leamer (1978) also offer interesting insights. Some of these authors view statistical issues in their typical data analyses rather differently than do physicists in HEP; perhaps the greatest contrast is that physicists do often have non-negligible belief that their null hypotheses are valid to a precision much greater than our measurement capability. Regarding the search by ATLAS and CMS that led to the discovery of “a Higgs boson”, statistician van Dyk (2014) has prepared an informative summary of the statistical procedures that were used.

In Sects. 2–4, I review the paradox, discuss the concept of the point null hypothesis, and observe that the paradox arises if there are three different scales in \(\theta \) having a hierarchy that is common in HEP. In Sect. 5, I address the notions common among statisticians that “all models are wrong”, and that scientists tend to be biased against the null hypothesis, so that the paradox is irrelevant. I also describe the likelihood-ratio commonly used in HEP as the test statistic. In Sect. 6, I discuss the difficult issue of choosing the prior for \(\theta \), and in particular the scale \(\tau \) of those values of \(\theta \) for which there is non-negligible prior belief. Section 7 briefly describes the completely different approach to hypothesis testing advocated by Bernardo, which stands apart from the bulk of the Bayesian literature. In Sect. 8, I discuss how measured values and confidence intervals, for quantities such as production and decay rates, augment the quoted \(p\) value, and how small but precisely measured effects can provide a window into very high energy physics. Section 9 discusses the choice of Type I error \(\alpha \) (probability of rejecting \(H_0\) when it is true) when adopting the approach of NP hypothesis testing, with some comments on the “5\(\sigma \) myth” of HEP. Finally, in Sect. 10, I discuss the seemingly universal agreement that a single \(p\) value is (at best) a woefully incomplete summary of the data, and how confidence intervals at various confidence levels help readers assess the experimental results. I summarize and conclude in Sect. 11.

As it is useful to use precisely defined terms, we must be aware that statisticians and physicists (and psychologists, etc.) have different naming conventions. For example, a physicist says “measured value”, while a statistician says “point estimate” (and while a psychologist says “effect size in original units”). This paper uses primarily the language of statisticians, unless otherwise stated. Thus “estimation” does not mean “guessing”, but rather the calculation of “point estimates” and “interval estimates”. The latter refers to frequentist confidence intervals or their analogs in other paradigms, known to physicists as “uncertainties on the measured values”. In this paper, “error” is generally used in the precisely defined sense of Type I and Type II errors of Neyman–Pearson theory (Sect. 9), unless obvious from context. Other terms are defined in context below. Citations are provided for the benefit of readers who may not be aware that certain terms (such as “loss”) have specific technical meanings in the statistics literature. “Effect size” is commonly used in the psychology literature, with at least two meanings. The first meaning, described by the field’s publication manual (APA 2010, p. 34) as “most often easily understood”, is simply the measured value of a quantity in the original (often dimensionful) units. Alternatively, a “standardized” dimensionless effect size is obtained by dividing by a scale such as a standard deviation. In this paper, the term always refers to the former definition (original units), corresponding to the physicist’s usual measured value of a parameter or physical quantity. Finally, the word “model” in statistics literature usually refers to a probabilistic equation that describes the assumed data-generating mechanisms (Poisson, binomial, etc.), often with adjustable parameters. The use of “model” for a “law of nature” is discussed below.

2 The original “paradox” of Lindley, as corrected by Bartlett

Lindley (1957), with a crucial correction by Bartlett (1957), lays out the paradox in a form that is useful as our starting point. This exposition also draws on Sect. 5.0 of Jeffreys (1961) and on Berger and Delampady (1987a). It mostly follows the notation of the latter, with the convention of upper case for the random variable and lower case for observed values. Figure 1 serves to illustrate various quantities defined below.

Fig. 1 Illustration of quantities used to define the JL paradox. The unknown parameter is \(\theta \), with likelihood function \({\mathcal {L}}(\theta )\) resulting from a measurement with uncertainty \({\sigma _{\mathrm{tot}}} \). The point MLE is \(\hat{\theta }\), which in the sketch is about \(5{\sigma _{\mathrm{tot}}} \) away from the null hypothesis, the “point null” \(\theta _0\). The point null hypothesis has prior probability \(\pi _0\), which can be spread out over a small interval of width \(\epsilon _0\) without materially affecting the paradox. The width of the prior pdf \(g(\theta )\) under \(H_1\) has scale \(\tau \). The scales have the hierarchy \(\epsilon _0 \ll {\sigma _{\mathrm{tot}}} \ll \tau \).

Suppose \(X\) having density \(f(x|\theta )\) is sampled, where \(\theta \) is an unknown element of the parameter space \(\Theta \). It is desired to test \(H_0\): \(\theta =\theta _0\) versus \(H_1\): \(\theta \ne \theta _0\). Following the Bayesian approach to hypothesis testing pioneered by Jeffreys (also referred to as Bayesian model selection), we assign prior probabilities \(\pi _0\) and \(\pi _1 = 1 - \pi _0\) to the respective hypotheses. Conditional on \(H_1\) being true, one also has a continuous prior probability density \(g(\theta )\) for the unknown parameter.

As discussed in the following sections, formulating the problem in this manner leads to a conceptual issue, since in the continuous parameter space \(\Theta \), a single point \(\theta _0\) (set of measure zero) has non-zero probability associated with it. This is impossible with a usual probability density, for which the probability assigned to an interval tends to zero as the width of the interval tends to zero. Assignment of non-zero probability \(\pi _0\) to a single point \(\theta _0\) is familiar to physicists by using the Dirac \(\delta \)-function (times \(\pi _0\)) at \(\theta _0\), while statisticians often refer to placing “probability mass” at \(\theta _0\), or to using “counting measure” for \(\theta _0\) (in distinction to “Lebesgue measure” for the usual density \(g\) for \(\theta \ne \theta _0\)). The null hypothesis corresponding to the single point \(\theta _0\) is also commonly referred to as a “point null” hypothesis, or as a “sharp hypothesis”. As discussed below, just as a \(\delta \)-function can be viewed as useful approximation to a highly peaked function, for hypotheses in HEP it is often the case that the point null hypothesis is a useful approximation to a prior that is sufficiently concentrated around \(\theta _0\).

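If the density \(f(x|\theta )\) under \(H_1\) is normal with mean \(\theta \) and known variance \(\sigma ^2\), then for a random sample \(\{x_1, x_2, \ldots , x_n\}\), the sample mean is normal with variance \(\sigma ^2/n\), i.e., \(\overline{X}\) has density \(N(\theta , \sigma ^2/n)\). For conciseness (and eventually to make the point that “\(n\)” can be obscure), let

\[ {\sigma _{\mathrm{tot}}} \equiv \sigma /\sqrt{n}. \tag{1} \]

The likelihood is then

\[ {\mathcal {L}}(\theta ) = \frac{1}{\sqrt{2\pi }\,{\sigma _{\mathrm{tot}}}} \exp \left\{ -\frac{(\hat{\theta }-\theta )^2}{2\sigma _{\mathrm{tot}}^2} \right\} , \tag{2} \]

with maximum likelihood estimate (MLE) \(\hat{\theta } = {\overline{x}}\). By Bayes’s Theorem, the posterior probabilities of the hypotheses, given \(\hat{\theta }\), are:

\[ P(H_0|\hat{\theta }) = \frac{1}{A}\,\pi _0\,{\mathcal {L}}(\theta _0) \tag{3} \]

and

\[ P(H_1|\hat{\theta }) = \frac{1}{A}\,\pi _1 \int g(\theta )\,{\mathcal {L}}(\theta )\,d\theta . \tag{4} \]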

Here \(A\) is a normalization constant to make the sum of the two probabilities equal unity, and the integral is over the support of the prior \(g(\theta )\).

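There will typically be a scale \(\tau \) that indicates the range of values of \(\theta \) over which \(g(\theta )\) is relatively large. One considers the case

\[ {\sigma _{\mathrm{tot}}} \ll \tau , \tag{5} \]

so that \(g(\theta )\) varies slowly where the rest of the integrand is non-negligible, and therefore the integral approximately equals \(g(\hat{\theta })\), so that

\[ P(H_1|\hat{\theta }) \approx \frac{1}{A}\,\pi _1\,g(\hat{\theta }). \tag{6} \]

Then the ratio of posterior odds to prior odds for \(H_0\), i.e., the Bayes factor (\(\mathrm{BF}\)), is independent of \(A\) and \(\pi _0\), and given by

\[ \mathrm{BF} \equiv \frac{P(H_0|\hat{\theta })}{P(H_1|\hat{\theta })} \bigg / \frac{\pi _0}{\pi _1} \approx \frac{{\mathcal {L}}(\theta _0)}{g(\hat{\theta })} = \frac{1}{\sqrt{2\pi }\,{\sigma _{\mathrm{tot}}}\,g(\hat{\theta })} \exp (-z^2/2), \tag{7} \]

where

\[ z = \frac{\hat{\theta }-\theta _0}{{\sigma _{\mathrm{tot}}}} \tag{8} \]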

is the usual statistic providing the departure from the null hypothesis in units of \({\sigma _{\mathrm{tot}}} \). Some authors (e.g., Kass and Raftery (1995)) use the notation \(B_{01}\) for this Bayes factor, to make clear which hypotheses are used in the ratio; as this paper always uses the same ratio, the subscripts are suppressed. Then the \(p\) value for the two-tailed test is \(p=2(1 - \Phi (z))\), where \(\Phi \) is the standard normal cumulative distribution function. (As discussed in Sect. 5.2, in HEP often \(\theta \) is physically non-negative, and hence a one-tailed test is used, i.e., \(p=1 - \Phi (z)\).)
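As a numerical illustration of the quantities just defined, the following Python sketch evaluates \(z\), the \(p\) value, and the approximate Bayes factor \({\mathcal {L}}(\theta _0)/g(\hat{\theta })\) that holds when \(g\) varies slowly across the likelihood. The function names, the choice of a normal prior of width \(\tau \) centered on \(\theta _0\), and all numerical inputs are assumptions made only for illustration.

```python
import math

def normal_pdf(x: float, mean: float, sd: float) -> float:
    """Density of N(mean, sd^2) evaluated at x."""
    return math.exp(-0.5 * ((x - mean) / sd) ** 2) / (math.sqrt(2.0 * math.pi) * sd)

def z_score(theta_hat, theta0, sigma, n):
    """Departure of the MLE from theta0 in units of sigma_tot = sigma / sqrt(n)."""
    return (theta_hat - theta0) / (sigma / math.sqrt(n))

def p_value(z, two_tailed=True):
    """Normal tail probability: 2(1 - Phi(|z|)), or 1 - Phi(z) if one-tailed."""
    if two_tailed:
        return math.erfc(abs(z) / math.sqrt(2.0))
    return 0.5 * math.erfc(z / math.sqrt(2.0))

def bayes_factor_approx(theta_hat, theta0, sigma, n, g_at_theta_hat):
    """Approximate BF for H0 over H1: L(theta0) / g(theta_hat)."""
    sigma_tot = sigma / math.sqrt(n)
    return normal_pdf(theta_hat, theta0, sigma_tot) / g_at_theta_hat

# Assumed inputs: sigma_tot = 0.1 and tau = 10, so that sigma_tot << tau.
theta0, sigma, n, theta_hat, tau = 0.0, 1.0, 100, 0.3, 10.0
z = z_score(theta_hat, theta0, sigma, n)
p = p_value(z)
bf = bayes_factor_approx(theta_hat, theta0, sigma, n,
                         normal_pdf(theta_hat, theta0, tau))
print(f"z = {z:.2f}, two-tailed p = {p:.4f}, BF = {bf:.2f}")
```

With these assumed inputs the departure is about three standard deviations and the two-tailed \(p\) value is below 0.003, yet the approximate BF is slightly above 1, i.e., it does not favor \(H_1\).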

Jeffreys (1961, p. 248) notes that \(g(\hat{\theta })\) is independent of \(n\) and \({\sigma _{\mathrm{tot}}} \) goes as \(1/\sqrt{n}\), and therefore a given cutoff value of \(\mathrm{BF}\) does not correspond to a fixed value of \(z\). This discrepancy in the sample-size scaling of \(z\) and \(p\) values compared to that of Bayes factors (already noted for a constant \(g\) on p. 194 in his first edition of 1939) is at the core of the JL paradox, even if one does not take values of \(n\) so extreme as to make \(P(H_0|\hat{\theta })>P(H_1|\hat{\theta })\).

Jeffreys (1961, Appendix B, p. 435) curiously downplays the discrepancy at the end of a sentence that summarizes his objections to testing based on \(p\) values (almost verbatim with p. 360 of his 1939 edition): “In spite of the difference in principle between my tests and those based on [\(p\) values], and the omission of the latter to give the increase in the critical values for large \(n\), dictated essentially by the fact that in testing a small departure found from a large number of observations we are selecting a value out of a long range and should allow for selection, it appears that there is not much difference in the practical recommendations.” He does say, “At large numbers of observations there is a difference”, but he suggests that this will be rare and that the test might not be properly formulated: “internal correlation should be suspected and tested”.

In contrast, Lindley (1957) emphasized how large the discrepancy could be, using the example where \(g(\theta )\) is taken to be constant over an interval that contains both \(\hat{\theta }\) and the range of \(\theta \) in which the integrand is non-negligible. For any arbitrarily small \(p\) value (arbitrarily large \(z\)) that is traditionally interpreted as evidence against the null hypothesis, there will always exist \(n\) for which the \(\mathrm{BF}\) can be arbitrarily large in favor of the null hypothesis.
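The sample-size scaling that Lindley emphasized can be made concrete with a short Python sketch. It assumes a prior \(g\) that is constant over an interval of hypothetical length `width` (so that \(g = 1/\)`width`) and uses the approximation \(\mathrm{BF} \approx {\mathcal {L}}(\theta _0)/g(\hat{\theta })\); the function names and default values are illustrative assumptions.

```python
import math

def bf_uniform_prior(z: float, n: int, sigma: float = 1.0, width: float = 1.0) -> float:
    """Approximate BF for H0 at fixed z, for g constant over an interval of length width.

    Valid only while sigma / sqrt(n) is much smaller than width.
    """
    sigma_tot = sigma / math.sqrt(n)
    return width * math.exp(-0.5 * z * z) / (math.sqrt(2.0 * math.pi) * sigma_tot)

def n_where_bf_reaches(z: float, target_bf: float, sigma: float = 1.0, width: float = 1.0) -> int:
    """Smallest n (up to rounding) at which the approximate BF reaches target_bf."""
    root_n = target_bf * math.sqrt(2.0 * math.pi) * sigma * math.exp(0.5 * z * z) / width
    return math.ceil(root_n ** 2)

# At fixed z the BF grows like sqrt(n), while the p value does not change.
for n in (10**2, 10**4, 10**6, 10**8):
    print(f"n = {n:>9d}   BF(z=3) = {bf_uniform_prior(3.0, n):.3g}")
```

Under these assumptions, quadrupling \(n\) at fixed \(z\) doubles the BF, so for any chosen \(z\) one can call `n_where_bf_reaches` to find a sample size at which the BF favors \(H_0\) by any desired factor.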

Bartlett (1957) quickly noted that Lindley had neglected the length of the interval over which \(g(\theta )\) is constant, which should appear in the numerator of the \(\mathrm{BF}\), and which makes the posterior probability of \(H_0\) “much more arbitrary”. More generally, the normalization of \(g\) always has a scale \(\tau \) that characterizes the extent in \(\theta \) for which \(g\) is non-negligible, which implies that \(g(\hat{\theta }) \propto 1/\tau \). Thus, there is a factor of \(\tau \) in the numerator of \(\mathrm{BF}\). For example, Berger and Delampady (1987a) and others consider \(g(\theta )\) having density \(N(\theta _0, \tau ^2)\), which, in the limit of Eq. 5, leads to

\[ \mathrm{BF} = \frac{\tau }{{\sigma _{\mathrm{tot}}}} \exp (-z^2/2). \tag{9} \]

There is the same proportionality in the Lindley/Bartlett example if the length of their interval is \(\tau \). The crucial point is the generic scaling,

\[ \mathrm{BF} \propto \frac{\tau }{{\sigma _{\mathrm{tot}}}} \exp (-z^2/2). \tag{10} \]

Of course, the value of the proportionality constant depends on the form of \(g\) and specifically on \(g(\hat{\theta })\).

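Meanwhile, from Eq. 2, the ratio \(\lambda \) of the likelihood of \(\theta _0\) under \(H_0\) and the maximum likelihood under \(H_1\) is

\[ \lambda = \frac{{\mathcal {L}}(\theta _0)}{{\mathcal {L}}(\hat{\theta })} = \exp (-z^2/2). \tag{11} \]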

Thus, unlike the case of simple-vs-simple hypotheses discussed below in Sect. 2.2, this maximum likelihood ratio takes the side of the \(p\) value in disfavoring the null hypothesis for large \(z\), independent of \({\sigma _{\mathrm{tot}}}/\tau \), and thus independent of sample size \(n\). This difference between maximizing \({\mathcal {L}}(\theta )\) under \(H_1\), and averaging it under \(H_1\) weighted by the prior \(g(\theta )\), can be dramatic.
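The contrast between maximizing and averaging the likelihood under \(H_1\) can be checked numerically. The sketch below assumes a normal prior \(g = N(\theta _0, \tau ^2)\) and hypothetical values of \({\sigma _{\mathrm{tot}}} \), \(\tau \), and \(\hat{\theta }\); it integrates \(g(\theta ){\mathcal {L}}(\theta )\) with a simple trapezoid rule and compares the result with the maximum of \({\mathcal {L}}\). The closed form used as a cross-check is the standard result that, for this prior, \(\hat{\theta }\) is marginally normal with variance \(\sigma _{\mathrm{tot}}^2 + \tau ^2\).

```python
import math

def normal_pdf(x: float, mean: float, sd: float) -> float:
    """Density of N(mean, sd^2) evaluated at x."""
    return math.exp(-0.5 * ((x - mean) / sd) ** 2) / (math.sqrt(2.0 * math.pi) * sd)

def averaged_likelihood(theta_hat, theta0, sigma_tot, tau, steps=20001):
    """Trapezoid-rule estimate of the integral of g(theta) * L(theta) d(theta)."""
    # The likelihood is negligible beyond 10 sigma_tot from theta_hat.
    lo, hi = theta_hat - 10.0 * sigma_tot, theta_hat + 10.0 * sigma_tot
    h = (hi - lo) / (steps - 1)
    total = 0.0
    for i in range(steps):
        theta = lo + i * h
        weight = 0.5 if i in (0, steps - 1) else 1.0
        total += weight * normal_pdf(theta, theta0, tau) * normal_pdf(theta_hat, theta, sigma_tot)
    return total * h

# Assumed illustrative values with sigma_tot << tau.
theta0, sigma_tot, tau, theta_hat = 0.0, 0.1, 10.0, 0.3
avg = averaged_likelihood(theta_hat, theta0, sigma_tot, tau)
closed = normal_pdf(theta_hat, theta0, math.hypot(sigma_tot, tau))
max_l = normal_pdf(theta_hat, theta_hat, sigma_tot)  # likelihood at the MLE
print(f"averaged = {avg:.5g}, closed form = {closed:.5g}, maximized = {max_l:.5g}")
print(f"averaged / maximized = {avg / max_l:.4g}  (sigma_tot / tau = {sigma_tot / tau:.4g})")
```

For these assumed values the averaged likelihood is smaller than the maximized one by a factor close to \({\sigma _{\mathrm{tot}}}/\tau \), which is the origin of the difference between the BF and \(\lambda \).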

The factor \({\sigma _{\mathrm{tot}}}/\tau \) (arising from the average of \(\mathcal L\) weighted by \(g\) in Eq. 4) is often called the “Ockham factor” that provides a desirable “Ockham’s razor” effect (Jaynes 2003, Chap. 20) by penalizing \(H_1\) for imprecise specification of \(\theta \). But the fact that (even asymptotically) BF depends directly on the scale \(\tau \) of the prior \(g(\theta )\) (and more precisely on \(g(\hat{\theta })\)) can come as a surprise to those deeply steeped in Bayesian point and interval estimation, where typically the dependence on all priors diminishes asymptotically. The surprise is perhaps enhanced since the BF is often introduced as the factor by which prior odds (even if subjective) are modified in light of the observed data, giving the initial impression that the subjective part is factorized out from the \(\mathrm{BF}\).

The likelihood ratio \(\lambda = \exp (-z^2/2)\) takes on the numerical values 0.61, 0.14, 0.011, 0.00034, and \(3.7\times 10^{-6}\), as \(z\) is equal to 1, 2, 3, 4, and 5, respectively. Thus, in order for the Ockham factor to reverse the preferences of the hypotheses in the BF compared to the maximum likelihood ratio \(\lambda \), the Ockham factor must be smaller than these numbers in the respective cases. Some examples of \({\sigma _{\mathrm{tot}}} \) and \(\tau \) in HEP that can do this (at least up to \(z=4\)) are in Sect. 5.1. As discussed below, even when not in the extreme case where the Ockham factor reverses the preference of the hypotheses, its effect deserves scrutiny.
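The numerical values of \(\lambda \) quoted above, and the condition on the Ockham factor, can be reproduced with a few lines of Python. The sketch takes the proportionality constant in the generic scaling to be unity (as for a normal prior in the limit \({\sigma _{\mathrm{tot}}} \ll \tau \)), which is an assumption; the function names are hypothetical.

```python
import math

def likelihood_ratio(z: float) -> float:
    """lambda = L(theta0) / L(theta_hat) = exp(-z^2 / 2)."""
    return math.exp(-0.5 * z * z)

def bf_from_ockham(z: float, ockham_factor: float) -> float:
    """BF = lambda / (sigma_tot / tau), taking the proportionality constant as 1."""
    return likelihood_ratio(z) / ockham_factor

for z in range(1, 6):
    lam = likelihood_ratio(z)
    print(f"z = {z}: lambda = {lam:.2g}; BF > 1 requires sigma_tot/tau < {lam:.2g}")
```

For instance, with an assumed Ockham factor of \(10^{-4}\) the BF at \(z=4\) exceeds 1, whereas with \(10^{-3}\) it does not.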

From the derivation, the origin of the Ockham factor (and hence sample-size dependence) does not depend on the chosen value of \(\pi _0\), and thus not on the commonly suggested choice of \(\pi _0 = 1/2\). The scaling in Eq. 10 follows from assigning any non-zero probability to the single point \(\theta =\theta _0\), as described above using the Dirac \(\delta \)-function, or “probability mass”.

The situation clearly invited further studies, and various authors, beginning with Edwards et al. (1963), have explored the impact of changing \(g(\theta )\), making numerical comparisons of \(p\) values to Bayes factors in contexts such as testing a point null hypothesis for a binomial parameter. Generally they have given examples in which the \(p\) value is always numerically smaller than the BF, even when the prior for \(\theta \) “gives the utmost generosity to the alternative hypothesis”.

2.1 Is there really a “paradox”?

A trivial “resolution” of the JL paradox is to point out that there is no reason to expect the numerical results of frequentist and Bayesian hypothesis testing to agree, as they calculate different quantities. Still, it is unnerving to many that “hypothesis tests” that are both communicating scientific results for the same data can have such a large discrepancy. So is it a paradox?

I prefer to use the word “paradox” with the meaning I recall from school, “a statement that is seemingly contradictory or opposed to common sense and yet is perhaps true” (Webster 1969, definition 2a). This is the meaning of the word, for example, in the celebrated “paradoxes” of Special Relativity, such as the Twin Paradox and the Pole-in-Barn Paradox. The “resolution” of a paradox is then a careful explanation of why it is not a contradiction. I therefore do not use the word paradox as a synonym for contradiction—that takes a word with (I think) a very useful meaning and wastes it on a redundant meaning of another word. It can however be confusing that what is deemed paradoxical depends on the personal perspective of what is “seemingly” contradictory. If someone says, “What Lindley called a paradox is not a paradox”, then typically they either define paradox as a synonym for contradiction, or it was always so obvious to them that the paradox is not a contradiction that they think it is not paradoxical. (It could also be that there is a contradiction that cannot be resolved, but I have not seen that used as an argument for why it is not a paradox.) Although it may still be questionable as to whether there is a resolution satisfactory to everyone, for now I think that the word paradox is quite apt. As the deep issue is the scaling of the BF with sample size (for fixed \(p\) value) as pointed out by Jeffreys already in 1939, I follow some others in calling it the Jeffreys–Lindley (JL) paradox.

Other ambiguities in discussions regarding the JL paradox include whether the focus is on the posterior odds of \(H_0\) (which includes the prior odds) or on the BF (which does not). In addition, while one often introduces the paradox by noting the extreme cases where the \(p\) value and the BF seem to imply opposite inferences, one should also emphasize the less dramatic (but still disturbing) cases where the Ockham factor plays a large (and potentially arbitrary) role, even if the BF favors \(H_1\). In the latter cases, it can be claimed that the \(p\) value overstates the evidence against \(H_0\). In this paper I focus on the BF, following some others, e.g., Edwards et al. (1963, p. 218, who somewhat confusingly denote it by \(L\)) and Bernardo (1999, p. 102). I also take a rather inclusive view of the paradox, as the issue of differences in sample size scaling is always present, even if not taken to the extreme limit where the Ockham factor overwhelms the BF, and even reverses arbitrarily small prior probability for \(H_0\).

2.2 The JL paradox is not about testing simple \(H_0\) vs simple \(H_1\)

Testing simple \(H_0\): \(\theta =\theta _0\) vs simple \(H_1\): \(\theta = \theta _1\) provides another interesting contrast between Bayesian and frequentist hypothesis testing, but this is not an example of the JL paradox. The Bayes factor and the likelihood ratio are the same (in the absence of nuisance parameters), and therefore in agreement as to which hypothesis the data favor. This is in contrast to the high-\(n\) limit of the JL paradox,

In the situation of the JL paradox, there is a value of \(\theta \) under \(H_1\) that is equal to the MLE \(\hat{\theta }\), and which consequently has a likelihood no lower than that of \(\theta _0\). The extent to which \(\hat{\theta }\) is not favored by the prior is encoded in the Ockham factor of Eq. 14, which means that the BF and the likelihood ratio \(\lambda \) can disagree on both the magnitude and even the direction of the evidence.

Simple-vs-simple hypothesis tests are far less common in HEP than simple-vs-composite tests, but have arisen as the CERN experiments have been attempting to infer properties of the new boson, such as the quantum numbers that characterize its spin and parity. Again supposing \(X\) having density \(f(x|\theta )\) is sampled, now one can form two well-defined \(p\) values, namely \(p_0\) indicating departures from \(H_0\) in the direction of \(H_1\), and \(p_1\) indicating departures from \(H_1\) in the direction of \(H_0\). A physicist will examine both \(p\) values in making an inference.

Thompson (2007, p. 108) argues that the set of the two \(p\) values is “the evidence”, and many in HEP may agree. Certainly neglecting one of the \(p\) values can be dangerous. For example, if \(\theta _0 < \hat{\theta } < \theta _1\), and \({\sigma _{\mathrm{tot}}} \ll \theta _1 - \theta _0\), then it is conceivable that \(H_0\) is rejected at 5\(\sigma \), while if \(H_1\) were the null hypothesis, it would be rejected at 7\(\sigma \). A physicist would be well aware of this circumstance and hardly fall into the straw-man trap of implicitly accepting \(H_1\) by focusing only on \(p_0\) and “rejecting” (only) \(H_0\). The natural reaction would be to question both hypotheses; i.e., the two-simple-hypothesis model would be questioned. (In this context, Senn (2001, pp. 200–201) has further criticism and references regarding the issue of sample-size dependence of \(p\) values.)
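The situation in which both simple hypotheses are disfavored can be illustrated with a short Python sketch that computes the two \(p\) values for a Gaussian measurement. The numerical values of \(\theta _0\), \(\theta _1\), \(\hat{\theta }\), and \({\sigma _{\mathrm{tot}}} \) are hypothetical, chosen only to reproduce the 5\(\sigma \) and 7\(\sigma \) example; the function name is likewise an assumption.

```python
import math

def upper_tail(z: float) -> float:
    """One-tailed normal p value for a departure of z standard deviations."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))

# Assumed values with theta0 < theta_hat < theta1 and sigma_tot << theta1 - theta0.
theta0, theta1, theta_hat, sigma_tot = 0.0, 12.0, 5.0, 1.0

z0 = (theta_hat - theta0) / sigma_tot   # departure from H0 toward H1
z1 = (theta1 - theta_hat) / sigma_tot   # departure from H1 toward H0
p0, p1 = upper_tail(z0), upper_tail(z1)

print(f"H0: z = {z0:.0f}, p0 = {p0:.3g}")
print(f"H1: z = {z1:.0f}, p1 = {p1:.3g}")
```

Reporting both numbers makes it evident that rejecting \(H_0\) here offers no support for \(H_1\), since \(p_1\) is even smaller than \(p_0\).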

3 Do point null hypotheses make sense in principle, or in practice?

In the Bayesian literature, there are notably differing attitudes expressed regarding the relevance of a point null hypothesis \(\theta =\theta _0\). Starting with Jeffreys, the fact that Bayesian hypothesis testing can treat a point null hypothesis in a special way is considered by many proponents to be an advantage. (As discussed in Sect. 9, frequentist testing of a point null vs a composite alternative is tied to interval estimation, a completely different approach.) The hypothesis test is often phrased in the language of model selection: the “smaller” model \(H_0\) is nested in the “larger” model \(H_1\). From this point of view, it seems natural to have one’s prior probabilities \(\pi _0\) and \(\pi _1\) for the two models. However, as mentioned above, from the point of view of putting a prior on the entire space \(\Theta \) in the larger model, this corresponds to a non-regular prior that has counting measure (\(\delta \)-function to physicists) on \(\theta _0\) and Lebesgue measure (usual probability density to physicists) on \(\theta \ne \theta _0\).

As discussed by Casella and Berger (1987a), some of the more disturbing aspects of the JL paradox are ameliorated (or even “reconciled”) if there is no point null, and the test is the so-called “one-sided test”, namely \(H_0\): \(\theta \le \theta _0\) versus \(H_1\): \(\theta > \theta _0\). Given the importance of the issue of probability assigned to the point null, some of the opinions expressed in the statistics literature are highlighted below, to contrast with the attitude in HEP described in Sect. 5.

Lindley (2009) lauds the “triumph” of Jeffreys’s “general method of significance tests, putting a concentration of prior probability on the null—no ignorance here—and evaluating the posterior probability using what we now call Bayes factors.” As a strong advocate of the use of subjective priors that represent personal belief, Lindley views the probability mass on the point null as subjective. (In the same comment, Lindley criticizes Jeffreys’s “error” of integrating over the sample space of unobserved data in formulating his eponymous priors for use in point and interval estimation.)

At the other end of the spectrum of Bayesian theorists, Bernardo (2009) comments on Robert et al. (2009): “Jeffreys intends to obtain a posterior probability for a precise null hypothesis, and, to do this, he is forced to use a mixed prior which puts a lump of probability \(p=Pr(H_0)\) on the null, say \(H_0 \equiv \{\theta =\theta _0\}\) and distributes the rest with a proper prior \(p(\theta )\) (he mostly chooses \(p=1/2\)). This has a very upsetting consequence, usually known as Lindley’s paradox: for any fixed prior probability \(p\) independent of the sample size \(n\), the procedure will wrongly accept \(H_0\) whenever the likelihood is concentrated around a true parameter value which lies \(O(n^{-1/2})\) from \(H_0\). I find it difficult to accept a procedure which is known to produce the wrong answer under specific, but not controllable, circumstances.” When pressed by commenters, Bernardo (2011b) says that “I am sure that there are situations where the scientist is willing to use a prior distribution highly concentrated at a particular region and explore the consequences of this assumption\(\ldots \) What I claim is that, even in precise hypothesis testing situations, the scientist is often interested in an analysis which does not assume this type of sharp prior knowledge....” Bernardo goes on to advocate a different approach (Sect. 7), which “has the nontrivial merit of being able to use for both estimation and hypothesis testing problems a single, unified theory for the derivation of objective ‘reference’ priors.”

Some statisticians find point null hypotheses irrelevant to their own work. In the context of an unenthusiastic comment on the Bayesian information criterion (BIC), Gelman and Rubin (1995) say “More generally, realistic prior distributions in social science do not have a mass of probability at zero....” Raftery (1995b) disagrees, saying that “social scientists are prepared to act as if they had prior distributions with point masses at zero\(\dots \) social scientists often entertain the possibility that an effect is small”.

In the commentary of Bernardo (2011b), C. Robert and J. Rousseau say, “Down with point masses! The requirement that one uses a point mass as a prior when testing for point null hypotheses is always an embarrassment and often a cause of misunderstanding in our classrooms. Rephrasing the decision to pick the simpler model as the result of a larger advantage is thus much more likely to convince our students. What matters in pointwise hypothesis testing is not whether or not \(\theta =\theta _0\) holds but what the consequences of a wrong decision are.”

Some comments on the point null hypothesis are related to another claim, that all models and all point nulls are at best approximations that are wrong at some level. I discuss this point in more detail in Sect. 5, but include a few quotes here. Edwards et al. (1963) say, “\(\dots \) in typical applications, one of the hypotheses—the null hypothesis—is known by all concerned to be false from the outset,” citing others including Berkson (1938). Vardeman (1987) claims, “Competent scientists do not believe their own models or theories, but rather treat them as convenient fictions. A small (or even 0) prior probability that the current theory is true is not just a device to make posterior probabilities as small as \(p\) values, it is the way good scientists think!”

Casella and Berger (1987b) object specifically to Jeffreys’s use of \(\pi _0=\pi _1=1/2\), used in modern papers as well: “Most researchers would not put 50 % prior probability on \(H_0\). The purpose of an experiment is often to disprove \(H_0\) and researchers are not performing experiments that they believe, a priori, will fail half the time!” Kadane (1987) expresses a similar sentiment: “For the last 15 years or so I have been looking seriously for special cases in which I might have some serious belief in a null hypothesis. I have found only one [testing astrologer]...I do not expect to test a precise hypothesis as a serious statistical calculation.”

As discussed below, such statisticians have evidently not been socializing with many HEP physicists. In fact, in the literature I consulted, I encountered very few statisticians who granted, as did Zellner (2009), that physical laws such as \(E=mc^2\) are point hypotheses, and “Many other examples of sharp or precise hypotheses can be given and it is incorrect to exclude such hypotheses a priori or term them ‘unrealistic’....”

Condensed matter physicist and Nobel Laureate Philip Anderson (1992) argued for Jeffreys-style hypothesis testing with respect to a claim for evidence for a fifth force of nature. “Let us take the ‘fifth force’. If we assume from the outset that there is a fifth force, and we need only measure its magnitude, we are assigning the bin with zero range and zero magnitude an infinitesimal probability to begin with. Actually, we should be assigning this bin, which is the null hypothesis we want to test, some finite a priori probability—like 1/2—and sharing out the remaining 1/2 among all the other strengths and ranges.”

Already in Edwards et al. (1963, p. 235) there was a key point related to the situation in HEP: “Bayesians\(\dots \) must remember that the null hypothesis is a hazily defined small region rather than a point.” They also emphasized the subjective nature of singling out a point null hypothesis: “At least for Bayesian statisticians, however, no procedure for testing a sharp null hypothesis is likely to be appropriate unless the null hypothesis deserves special initial credence.”

That the “point” null can really be a “hazily defined small region” is clear from the derivation in Sect. 2. The general scaling conclusion of Eq. 10 remains valid if “hazily defined small region” means that the region of \(\theta \) included in \(H_0\) has a scale \(\epsilon _0\) such that \(\epsilon _0 \ll {\sigma _{\mathrm{tot}}} \). To a physicist, this just means that computing integrals using a \(\delta \)-function is a good approximation to integrating over a finite region in \(\theta \). (Some authors, such as Berger and Delampady (1987a), have explored quantitatively the approximation induced in the \(\mathrm{BF}\) by non-zero \(\epsilon _0\).)
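As a minimal numerical sketch of the \(\delta \)-function approximation (not taken from the cited references), one can compare the likelihood at a point null with the likelihood averaged over a small region of full width \(\epsilon _0\) around \(\theta _0\), under the \(N(\theta ,{\sigma _{\mathrm{tot}}} ^2)\) model. The uniform shape assumed for the region and the function names are illustrative assumptions.

```python
from statistics import NormalDist


def point_null_likelihood(x, theta0, sigma_tot):
    """Density of x under N(theta0, sigma_tot^2), i.e. the delta-function null."""
    return NormalDist(theta0, sigma_tot).pdf(x)


def hazy_null_likelihood(x, theta0, sigma_tot, eps0):
    """Likelihood of x averaged over theta uniform on
    [theta0 - eps0/2, theta0 + eps0/2].

    The uniform shape of the 'hazy' null region is an assumption made
    only for this illustration.
    """
    std = NormalDist(0.0, 1.0)
    upper = (x - theta0 + eps0 / 2.0) / sigma_tot
    lower = (x - theta0 - eps0 / 2.0) / sigma_tot
    return (std.cdf(upper) - std.cdf(lower)) / eps0


if __name__ == "__main__":
    x, theta0, sigma_tot = 3.0, 0.0, 1.0  # an observation at z = 3
    for eps0 in (1.0, 0.1, 0.01):
        ratio = (hazy_null_likelihood(x, theta0, sigma_tot, eps0)
                 / point_null_likelihood(x, theta0, sigma_tot))
        print(f"eps0/sigma_tot = {eps0:5.2f}   hazy/point = {ratio:.6f}")
```

In this sketch the ratio approaches 1 as \(\epsilon _0/{\sigma _{\mathrm{tot}}} \) shrinks, which is the sense in which the point null stands in for a narrow region.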

4 Three scales for \(\theta \) yield a paradox

From the preceding sections, we can conclude that for the JL paradox to arise, it is sufficient that there exist three scales in the parameter space \(\Theta \), namely:

1. \(\epsilon _0\), the scale under \(H_0\);

2. \({\sigma _{\mathrm{tot}}} \), the scale for the total measurement uncertainty; and

3. \(\tau \), the scale under \(H_1\);

and that they have the hierarchy

\[\epsilon _0 \ll {\sigma _{\mathrm{tot}}} \ll \tau . \tag{15}\]

This situation is common in frontier experiments in HEP, where, as discussed in Sect. 5.1, the three scales are often largely independent. We even have cases where \(\epsilon _0=0\), i.e., most of the subjective prior probability is on \(\theta =0\). This is the case if \(\theta \) is the mass of the photon.

As noted for example by Shafer (1982), the source of the precision of \({\sigma _{\mathrm{tot}}} \) does not matter as long as the condition in Eq. 15 is satisfied. The statistics literature tends to focus on the case where \({\sigma _{\mathrm{tot}}} \) arises from a sample size \(n\) via Eq. 1. This invites the question as to whether \(n\) can really be arbitrarily large in order to make \({\sigma _{\mathrm{tot}}} \) arbitrarily small. In my view the existence of a regime where the \(\mathrm{BF}\) goes as \(\tau /{\sigma _{\mathrm{tot}}} \) for fixed \(z\) (as in Eq. 10) is the fundamental characteristic that can lead to the JL paradox, even if this regime does not extend to \({\sigma _{\mathrm{tot}}} \rightarrow 0\). As I discuss in Sect. 5.1, such regimes are present in HEP analyses, and there is not always a well-defined \(n\) underlying \({\sigma _{\mathrm{tot}}} \), a point I return to in Sects. 5.2 and 6 below in discussing \(\tau \). But we first consider the model itself.
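The scaling of the \(\mathrm{BF}\) with \(\tau /{\sigma _{\mathrm{tot}}} \) at fixed \(z\) can be illustrated with a short sketch. It assumes, purely for illustration, a point null at \(\theta _0\) and a normal prior \(g(\theta )\) centered on \(\theta _0\) with standard deviation \(\tau \); the \(\mathrm{BF}\) is taken as the ratio of the marginal likelihood under \(H_0\) to that under \(H_1\). With these assumptions the marginal density of the observation under \(H_1\) is \(N(\theta _0,{\sigma _{\mathrm{tot}}} ^2+\tau ^2)\), and the ratio has a closed form. The function name is hypothetical.

```python
import math


def bayes_factor_normal_prior(z, sigma_tot, tau):
    """BF = p(x | H0) / p(x | H1) for x ~ N(theta, sigma_tot^2).

    H0: theta = theta0 (point null).
    H1: theta ~ N(theta0, tau^2)  -- an assumed prior shape, chosen only
        because it gives a closed form.
    z is (x - theta0) / sigma_tot.
    """
    ratio_sq = (tau / sigma_tot) ** 2
    return math.sqrt(1.0 + ratio_sq) * math.exp(
        -0.5 * z * z * ratio_sq / (1.0 + ratio_sq)
    )


if __name__ == "__main__":
    z = 3.0
    for tau_over_sigma in (1.0, 10.0, 100.0, 1000.0):
        bf = bayes_factor_normal_prior(z, 1.0, tau_over_sigma)
        print(f"tau/sigma_tot = {tau_over_sigma:7.1f}   BF = {bf:.4g}")
```

For \(\tau \gg {\sigma _{\mathrm{tot}}} \) the expression reduces to \((\tau /{\sigma _{\mathrm{tot}}} )\exp (-z^2/2)\), so at fixed \(z\) a tenfold increase in \(\tau /{\sigma _{\mathrm{tot}}} \) raises the \(\mathrm{BF}\) by about a factor of ten.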

5 HEP and belief in the null hypothesis

At the heart of the measurement models in HEP are well-established equations that are commonly known as “laws of nature”. By some historical quirks, the current “laws” of elementary particle physics, which have survived several decades of intense scrutiny with only a few well-specified modifications, are collectively called a “model”, namely the Standard Model (SM). In this review, I refer to the equations of such “laws”, or alternatives considered as potential replacements for them, as “core physics models”. The currently accepted core physics models have parameters, such as masses of the quarks and leptons, which with few exceptions have all been measured reasonably precisely (even if requiring care to define).

Multiple complications arise in going from the core physics model to the full measurement model that describes the probability densities for observations such as the momentum spectra of particles emerging from proton-proton collisions. Theoretical calculations based on the core physics model can be quite complex, requiring, for example, approximations due to truncation of power series, incomplete understanding of the internal structure of colliding protons, and insufficient understanding of the manner in which quarks emerging from the collision recombine into sprays of particles (“jets”) that can be detected. The results of such calculations, with their attendant uncertainties, must then be propagated through simulations of the response of detectors that are parametrized using many calibration constants, adjustments for inefficient detection, misidentification of particles, etc. Much of the work in data analysis in HEP involves subsidiary analyses to measure and calibrate detector responses, to check the validity of theoretical predictions to describe data (especially where no departures are expected), and to confirm the accuracy of many aspects of the simulations.

The aphorism “all models are wrong” (Box 1976) can certainly apply to the detector simulation, where common assumptions of normal or log-normal parameterizations are, at best, only good approximations. But the pure core physics models still exist as testable hypotheses that may be regarded as point null hypotheses. Alternatives to the SM are more generalized models in which the SM is nested. It is certainly worth trying to understand if some physical parameter in the alternative core physics model is zero (corresponding to the SM), even if it is necessary to do so through the smoke of imperfect detector descriptions with many uninteresting and imperfectly known nuisance parameters. Indeed much of what distinguishes the capabilities of experimenters is how well they can do precisely that by determining the detector response through careful calibration and cross-checks. This distinction is overlooked in the contention (Berger and Delampady 1987a, p. 320) that a point null hypothesis in a core physics model cannot be precisely tested if the rest of the measurement model is not specified perfectly.

There is a deeper point to be made about core physics models concerning the difference between a model being a good “approximation” in the ordinary sense of the word, and the concept of a mathematical limit. The equations of Newtonian physics have been superseded by those of special and general relativity, but the earlier equations are not just approximations that did a good job in predicting (most) planetary orbits; they are the correct mathematical limits in a precise sense. The kinematic expressions for momentum, kinetic energy, etc., are the limits of the special relativity equations in the limit as the speed goes to zero. That is, if you specify a maximum tolerance for error due to the approximation of Newtonian mechanics, then there exists a speed below which it will always be correct within that tolerance. Similarly, Newton’s universal law of gravity is the correct mathematical limit of General Relativity in the limit of small gravitational fields and low speeds (conditions that were famously not satisfied to observational precision for the orbit of the planet Mercury).

This limiting behavior can often be viewed through an appropriate power series. For example, we can expand the expression for kinetic energy \(T\) from special relativity, \(T = \sqrt{p^2+m^2} - m\), in powers of \(p^2/m^2\) in the non-relativistic limit where momentum \(p\) is much smaller than the mass \(m\). The Newtonian expression, \(T=p^2/2m\), is the first term in the series, followed by the lowest order relativistic correction term of \(-p^4/8m^3\). (I use the usual HEP units in which the speed of light \(c\) is 1 and dimensionless; to use other units, substitute \(pc\) for \(p\), and \(mc^2\) for \(m\).)
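A short numerical check of this expansion, in units with \(c=1\), is sketched below. The function names are illustrative; the \(p^4/8m^3\) term enters the series with a negative sign, so the Newtonian value slightly overestimates \(T\).

```python
import math


def kinetic_energy_relativistic(p, m):
    """Exact special-relativistic kinetic energy, T = sqrt(p^2 + m^2) - m (c = 1)."""
    return math.sqrt(p * p + m * m) - m


def kinetic_energy_newtonian(p, m):
    """First term of the series in p^2/m^2: T = p^2 / (2m)."""
    return p * p / (2.0 * m)


def leading_relativistic_correction(p, m):
    """Second term of the series: -p^4 / (8 m^3)."""
    return -(p ** 4) / (8.0 * m ** 3)


if __name__ == "__main__":
    m = 1.0
    for p in (0.5, 0.1, 0.01):
        exact = kinetic_energy_relativistic(p, m)
        first = kinetic_energy_newtonian(p, m)
        second = first + leading_relativistic_correction(p, m)
        print(f"p/m = {p:5.2f}  exact = {exact:.10e}  "
              f"Newtonian error = {first - exact:.3e}  "
              f"two-term error = {second - exact:.3e}")
```

As \(p/m\) decreases, the Newtonian error shrinks as \(p^4\) and the two-term error shrinks faster still, which is the limiting behavior described above.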

An analogous, deeper concept arises in the context of effective field theories. An effective field theory in a sense consists of the correct first term(s) in a power series of inverse powers of some scale that is much higher than the applicable scale of the effective theory (Georgi 1993). When a theory is expressed as an infinite series, a key issue is whether there is a finite number of coefficients to be determined experimentally, from which all other coefficients can be (at least in principle) calculated, with no unphysical answers (in particular infinity) appearing for measurable quantities. Theories having this property are called renormalizable, and are naturally greatly favored over theories that give infinities for measurable quantities or that require in effect an infinite number of adjustable parameters. It was a major milestone in HEP theory when it was shown that the SM (including its Higgs boson) is in a class of renormalizable theories (’t Hooft 1999); removing the Higgs boson destroys this property.

In the last three or four decades, thousands of measurements have tested the consistency of the predictions of the SM, many with remarkable precision, including of course measurements at the LHC. Nonetheless, the SM is widely believed to be incomplete, as it leaves unanswered some obvious questions (such as why there are three generations of quarks and leptons, and why their masses have the values they do). If the goal of a unified theory of forces is to succeed, the current mathematical formulation will become embedded into a larger mathematical structure, such that more forces and quanta will have to be added. Indeed much of the current theoretical and experimental research program is aimed at uncovering these extensions, while a significant effort is also spent on understanding further the consequences of the known relationships. Nevertheless, whatever new physics is added, we also expect that the SM will remain a correct mathematical limit, or a correct effective field theory, within a more inclusive theory. It is in this sense of being the correct limit or correct effective field theory that physicists believe that the SM is “true”, both in its parts and in the collective whole. (I am aware that there are deep philosophical questions about reality, and that this point of view can be considered “naive”, but this is a point of view that is common among high energy physicists.)

It may be that on deeper inspection the distinction between an ordinary “approximation” and a mathematical limit will not be so great, as even crude approximations might be considered as types of limits. Also, the usefulness of power series breaks down in certain important “non-perturbative” regimes. Nonetheless, the concepts of renormalizability, limits, and effective field theories are helpful in clarifying what is meant by belief in core physics models. Comparing the approach of many physicists to that of statisticians working in other fields, an important distinction appears to be the absence of core “laws” in their models. Under such circumstances, one would naturally be averse to obsession about exact values of model parameters when the uncertainty in the model itself is already dominant.

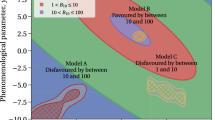

5.1 Examples of three scales for \(\theta \) in HEP experiments

Many searches at the frontier of HEP have three scales with the hierarchy in Eq. 15. An example is an experiment in the 1980s that searched for a particular decay of a particle called the long-lived neutral kaon, the \(\mathrm{K}^0_\mathrm{L}\). This decay, to a muon and electron, had been previously credibly ruled out for a branching fraction (probability per kaon decay) of \(10^{-8}\) or higher. With newer technology and better beams, the proposal was to search down to a level of \(10^{-12}\). This decay was forbidden at this level in the SM, but there was a possibility that the decay occurred at the \(10^{-17}\) level (Barroso et al. 1984) or lower via a process where neutrinos change type within an expanded version of the SM; since this latter process was out of reach, it was included in the “point null” hypothesis. This search was therefore a “fishing expedition” for “physics beyond the Standard Model” (BSM physics), in this case a new force of nature with \({\sigma _{\mathrm{tot}}} \approx 10^{-12}\) and \(\epsilon _0 \approx 10^{-17}\). Both the scale \(\tau \) of prior belief and \(g(\theta )\) would be hard to define, as the motivation for performing the experiment was the capability to explore the unknown with the potential for a major discovery of a new force. For me personally, \(\pi _1\) was small (say 1%), and the scale \(\tau \) was probably close to that of the range being explored, \(10^{-8}\). (The first incarnation of the experiment reached \({\sigma _{\mathrm{tot}}} \approx 10^{-11}\), without evidence for a new force (Arisaka et al. 1993)). As discussed in Sect. 8.2, searches for such rare decays are typically interpreted in terms of the influence of possible new particles with very high masses, higher than can be directly produced.

As another example, perhaps the most extreme, it is of great interest to determine whether or not protons decay, i.e., whether or not the decay rate is exactly zero, as so far seems to be the case experimentally. Experiments have already probed values of the average decay rate per proton of 1 decay per \(10^{31}\)–\(10^{33}\) years. This is part of the range of values predicted by certain unified field theories that extend the SM (Wilczek 2004). As the age of the universe is of order \(10^{10}\) years, these are indeed very small rates. Thanks to the exponential nature of such decays in quantum mechanics, the search for such tiny decay rates is possible by observing nearly \(10^{34}\) protons (many kilotons of water) for several years, rather than by observing several protons for \(10^{34}\) years! Assigning the three scales is rather arbitrary, but I would say that \({\sigma _{\mathrm{tot}}} \approx 10^{-32}\) and \(\tau \) initially was perhaps \(10^{-28}\). Historically the null hypothesis under the SM was considered to be a point exactly at zero decay rate, until 1976 when ’t Hooft (1976) pointed out an exotic non-perturbative mechanism for proton decay. But his formula for the SM rate has a factor of about \(\exp (-137\pi ) \approx 10^{-187}\) that makes it negligible even compared to the BSM rates being explored experimentally. (See Babu et al. (2013) for a recent review.)
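The arithmetic behind “nearly \(10^{34}\) protons (many kilotons of water)” can be sketched as follows. The sketch counts all ten protons in each water molecule (two in hydrogen, eight bound in oxygen) and assumes metric kilotons; treating bound and free protons alike, ignoring detection efficiency, and the function names are all simplifying assumptions for illustration.

```python
import math

AVOGADRO = 6.02214076e23          # molecules per mole
WATER_MOLAR_MASS_G = 18.015       # grams per mole of H2O
PROTONS_PER_WATER_MOLECULE = 10   # 2 from hydrogen + 8 from oxygen


def protons_in_water(kilotons):
    """Approximate number of protons in a given mass of water (metric kilotons)."""
    grams = kilotons * 1.0e9
    return grams / WATER_MOLAR_MASS_G * AVOGADRO * PROTONS_PER_WATER_MOLECULE


def expected_decays(n_protons, rate_per_year, years):
    """Expected decays for exponential decay: N * (1 - exp(-rate * t)).

    expm1 keeps precision when rate * t is tiny, as it is here.
    """
    return n_protons * -math.expm1(-rate_per_year * years)


if __name__ == "__main__":
    n = protons_in_water(30.0)
    print(f"protons in 30 kilotons of water: {n:.3e}")
    for rate in (1e-31, 1e-32, 1e-33):
        print(f"rate = {rate:.0e} per year, 5 years: "
              f"{expected_decays(n, rate, 5.0):.1f} expected decays")
```

Because the decay probability per proton is linear in time for such small rates, the expected count depends only on the product of the number of protons and the observation time.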

Finally, among the multitude of current searches for BSM physics at the LHC to which Eq. 15 applies, I mention the example of the search for production of a heavy version of the \(\mathrm{Z^0}\) boson (Sect. 8), a so-called \(\mathrm{Z}^\prime \) (pronounced “Z-prime”). The \(\mathrm{Z}^\prime \) would be the quantum of a new force that appears generically in many speculative BSM models, but without any reliable prediction as to whether the mass or production rate is accessible at the LHC. For these searches, \(\epsilon _0=0\) in the SM; \({\sigma _{\mathrm{tot}}} \) is determined by the LHC beam energies, intensities, and the general-purpose detector’s measuring capabilities; the scale \(\tau \) is again rather arbitrary (as are \(\pi _0\) and \(g\)), but much larger than \({\sigma _{\mathrm{tot}}} \).

In all three of these examples, the conditions of Eq. 15 are met. Furthermore, the three scales are largely independent. There can be a loose connection in that an experiment may be designed with a particular subjective value of \(\tau \) in mind, which then influences how resources are allocated, if feasible, to obtain a value of \({\sigma _{\mathrm{tot}}} \) that may settle a particular scientific issue. But this kind of connection can be tenuous in HEP, especially when an existing general-purpose apparatus such as CMS or ATLAS is applied to a new measurement. Therefore there is no generally applicable rule of thumb relating \(\tau \) to \({\sigma _{\mathrm{tot}}} \).

Even if some sense of the scale \(\tau \) can be specified, there still exists the arbitrariness in choosing the form of \(g\). Many experimenters in HEP think in terms of “orders of magnitude”, with an implicit metric that is uniform in the log of the decay rate. For example, some might say that “the experiment is worth doing if it extends the reach by a factor of 10”, or that “it is worth taking data for another year if the number of interactions observed is doubled”. But it is not at all clear that such phrasing really corresponds to a belief that is uniform in the implicit logarithmic metric.

5.2 Test statistics for computing \(p\) values in HEP

There is a long tradition in HEP of using likelihood ratios for both hypothesis testing and estimation, following established frequentist theory (Stuart et al. 1999, Chap. 22) such as the NP Lemma and Wilks’s Theorem. This is sometimes described in the jargon of HEP (James 1980), and other times with more extensive sourcing (Eadie et al. 1971; Baker and Cousins 1984; James 2006; Cowan et al. 2011). When merited, quite detailed likelihood functions (both binned and unbinned) are constructed. In many cases, \(\theta \) is a physically non-negative quantity (such as a mass or a Poisson mean) that vanishes under the null hypothesis (\(\theta _0=0\)), and the alternative is \(H_1\): \(\theta >0\). The likelihood-ratio test statistic, denoted by \(\lambda \), and its distribution under the null hypothesis (see below) are used in a one-tailed test to obtain a \(p\) value, which is then converted to \(z\), the equivalent number of standard deviations (\(\sigma \)) in a one-tailed test of the mean of a normal distribution. With \(\Phi \) denoting the cumulative distribution function of the standard normal distribution,

\[z = \Phi ^{-1}(1-p) = \sqrt{2}\,\mathrm{erf}^{-1}(1-2p).\]

For example, \(z=3\) corresponds to a \(p\) value of \(1.35 \times 10^{-3}\), and \(z=5\) to a \(p\) value of \(2.9 \times 10^{-7}\). (For upper confidence limits on \(\theta \), \(p\) values are commonly modified to mitigate some issues caused by downward fluctuations, but this does not affect the procedure for testing \(H_0\)).
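The one-tailed conversion between \(p\) and \(z\), with \(z=\Phi ^{-1}(1-p)\) and \(\Phi \) the standard normal cumulative distribution function, can be sketched with the Python standard library. The function names are illustrative; the tail probability is computed with the complementary error function to avoid loss of precision at small \(p\).

```python
import math
from statistics import NormalDist


def p_value_from_z(z):
    """One-tailed upper-tail probability of a standard normal: p = 1 - Phi(z)."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


def z_from_p_value(p):
    """Inverse of p_value_from_z: z = Phi^{-1}(1 - p) = -Phi^{-1}(p).

    Using -inv_cdf(p) rather than inv_cdf(1 - p) avoids rounding 1 - p
    when p is very small. Requires 0 < p < 1.
    """
    return -NormalDist().inv_cdf(p)


if __name__ == "__main__":
    for z in (1.0, 2.0, 3.0, 4.0, 5.0):
        p = p_value_from_z(z)
        print(f"z = {z:.1f}  ->  p = {p:.3e}  ->  z = {z_from_p_value(p):.6f}")
```

Note that this is the one-tailed convention used in HEP; a two-tailed convention would give \(p\) values twice as large for the same \(z\).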

Nuisance parameters arising from detector calibration, estimates of background rates, etc., are abundant in these analyses. A large part of the analysis effort is devoted to understanding and validating the (often complicated) descriptions of the response of the experimental apparatus that is included in \(\lambda \). For nuisance parameters, the uncertainties are typically listed as “systematic” in nature, the name that elementary statistics books use for uncertainties that are not reduced with more sampling. Nevertheless, some systematic uncertainties can be reduced as more data is taken and used in the subsidiary analyses for calibrations.

A typical example is the calibration of the response of the detector to a high-energy photon (\(\gamma \)), crucial for detecting the decay of the Higgs boson to two photons. The basic detector response (an optical flash converted to an analog electrical pulse that is digitized) must be converted to units of energy. The resulting energy “measurement” suffers from a smearing due to resolution as well as errors in offset and scale. Special calibration data and computer simulations are used to measure both the width and shape of the smearing function, as well as to determine offsets and scales that still have residual uncertainty. In terms of the simple \(N(\theta ,{\sigma _{\mathrm{tot}}} ^2)\) model discussed throughout this paper, we have complications: the response function may not be normal but can be measured; the bias on \(\theta \) may not be zero but can be measured; and \({\sigma _{\mathrm{tot}}} \) is also measured. All of the calibrations may change with temperature, position in the detector, radiation damage, etc. Many resources are put into tracking the time-evolution of calibration parameters, and therefore minimizing, but of course never eliminating, the uncertainties.

Such calibration takes place for all the subdetectors used in a HEP experiment, for all the basic types of detected particles (electrons, muons, pions, etc.). Ultimately, with enough data, certain systematic uncertainties approach constant values that limit the usefulness of adding more data. (Examples of limiting systematics would include finite resolution on the time dependence of detector response; control of the lasers used for calibration; magnetic field inhomogeneities not perfectly mapped; imperfect material description in the detector simulation; and various theoretical uncertainties.)

Once models for the nuisance parameters are selected, various approaches can be used to “eliminate” them from the likelihood ratio \(\lambda \) (Cousins 2005). “Profiling” the nuisance parameters (i.e., re-optimizing the MLEs of the nuisance parameters for each trial value of the parameter of interest) has been part of the basic HEP software tools (though not called profiling) for decades (James 1980). The results on the Higgs boson at the LHC have been based on profiling, partly because asymptotic formulas for profile likelihoods were generalized (Cowan et al. 2011) and found to be useful. It is also common to integrate out (marginalize) nuisance parameters in \(\lambda \) in a Bayesian fashion (typically using evidence-based priors), usually through Monte Carlo integration (while treating the parameter of interest in a frequentist manner).
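To make the idea of profiling concrete, here is a toy sketch far simpler than any real HEP analysis. It assumes a single counting experiment with \(n\) events observed in a signal region with Poisson mean \(s+b\), and \(m\) events in a background-only control region with Poisson mean \(kb\); the background \(b\) is the lone nuisance parameter. The model, the function names, and the use of the asymptotic approximation \(z\approx \sqrt{q_0}\) for the profile likelihood ratio statistic are all assumptions made for illustration.

```python
import math


def _xlogy(x, y):
    """x * ln(y) with the convention 0 * ln(0) = 0."""
    return 0.0 if x == 0 else x * math.log(y)


def log_likelihood(s, b, n, m, k):
    """Poisson log-likelihood, dropping terms that do not depend on s or b.

    n ~ Poisson(s + b) in the signal region,
    m ~ Poisson(k * b) in the control region.
    """
    return _xlogy(n, s + b) - (s + b) + _xlogy(m, k * b) - k * b


def profiled_background(s, n, m, k):
    """Conditional MLE of b for fixed s (the 'profiling' step).

    Setting d(lnL)/db = 0 gives (1+k) b^2 + [(1+k) s - n - m] b - m s = 0;
    the non-negative root is returned.
    """
    a = 1.0 + k
    bb = a * s - n - m
    return (-bb + math.sqrt(bb * bb + 4.0 * a * m * s)) / (2.0 * a)


def discovery_z(n, m, k):
    """Asymptotic significance for testing s = 0 against s > 0."""
    s_hat = n - m / k          # unconditional MLEs
    b_hat = m / k
    if s_hat <= 0.0:
        return 0.0             # one-sided test: no excess, no significance
    lnl_free = log_likelihood(s_hat, b_hat, n, m, k)
    lnl_null = log_likelihood(0.0, profiled_background(0.0, n, m, k), n, m, k)
    q0 = 2.0 * (lnl_free - lnl_null)
    return math.sqrt(max(q0, 0.0))


if __name__ == "__main__":
    print(discovery_z(n=25, m=10, k=1.0))
```

Marginalizing \(b\) instead would replace the re-optimization in `profiled_background` with an integral over a prior for \(b\); in this toy model the two treatments typically give similar answers when the control region is well populated.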

In many analyses, the result is fairly robust to the treatment of nuisance parameters in the definition of \(\lambda \). For the separate step of obtaining the distribution of \(\lambda \) under the null hypothesis, asymptotic theory (Cowan et al. 2011) can be applicable, but when feasible the experimenters also perform Monte Carlo simulations of pseudo-experiments. These simulations treat the nuisance parameters in some frequentist and Bayesian-inspired ways, and are typically (though not always) rather insensitive to the choice of method.

To the extent that integrations are performed over the nuisance parameters, or that profiling yields similar results, the use of \(\lambda \) as a test statistic for a frequentist \(p\) value is reminiscent of Bayesian-frequentist hybrids in the statistics literature (Good 1992, Sect. 1), including the prior-predictive \(p\) value of Box (1980). Within HEP, this mix of paradigms has been advocated (Cousins and Highland 1992) as a pragmatic approach, and found in general to yield reasonable results under a variety of circumstances.

The complexity of such analyses is worth keeping in mind in Sect. 6, when the concept of the “unit measurement” with \(\sigma = \sqrt{n}{\sigma _{\mathrm{tot}}} \) is introduced as a basis for some “objective” methods of setting the scale \(\tau \). The overall \({\sigma _{\mathrm{tot}}} \) is a synthesis of many samplings of events of interest as well as events in the numerous calibration data sets (some disjoint from the final analysis, some not). It is unclear what could be identified as the number of events \(n\), since the analysis does not fit neatly into the concept of \(n\) identical samplings.

5.3 Are HEP experimenters biased against their null hypotheses?

Practitioners in disciplines outside of HEP are sometimes accused of being biased against accepting null hypotheses, to the point that experiments are set up with the sole purpose of rejecting the null hypothesis (Bayarri 1987). Strong bias against publishing null results (i.e., results that do not reject the null hypothesis) has been described, for example, in psychology (Ferguson and Heene 2012). Researchers might feel the need to reject the null hypothesis in order to publish their results, etc. It is unclear to what extent these characterizations might be valid in different fields, but in HEP there is often significant prior belief in both the model and the point null hypothesis (within \(\epsilon _0\)). In many searches in HEP, there is a hope to reject the SM and make a major discovery of BSM physics in which the SM is nested. But there is nonetheless high (or certainly non-negligible) prior belief in the null hypothesis. There have been hundreds of experimental searches for BSM physics that have not rejected the SM.

In HEP, it is normal to publish results that advance exploration of the frontiers even if they do not reject the null hypothesis. The literature, including the most prestigious journals, has many papers beginning with “Search for...” that report no significant evidence for the sought-for BSM physics. Often these publications provide useful constraints on theoretical speculation, and offer guidance for future searches.

For physical quantities \(\theta \) that cannot have negative values, the unbiased estimates will be in the unphysical negative region about half of the time if the true value of \(\theta \) is small compared to \({\sigma _{\mathrm{tot}}} \). It might appear that the measurement model is wrong if half the results are unphysical. But the explanation in retrospect is that the null hypotheses in HEP have tended to be true, or almost so. As no BSM physics has been observed thus far at the LHC, the choices of experiments might be questioned, but they are largely constrained by resources and by what nature has to offer for discovery. Huge detector systems such as CMS and ATLAS are multipurpose experiments that may not have the desired sensitivity to some specific processes of interest. Within constraints of available resources and loosely prioritized as to speculation about where the BSM physics may be observed, the collaborations try to look wherever there is some capability for observing new phenomena.
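The “about half of the time” statement follows directly from the \(N(\theta ,{\sigma _{\mathrm{tot}}} ^2)\) model: the probability that an unbiased estimate falls below zero is \(\Phi (-\theta /{\sigma _{\mathrm{tot}}} )\). A minimal sketch, with an illustrative function name and an assumed normal sampling distribution:

```python
from statistics import NormalDist


def prob_negative_estimate(theta, sigma_tot):
    """P(estimate < 0) when the estimate is distributed as N(theta, sigma_tot^2)."""
    return NormalDist(theta, sigma_tot).cdf(0.0)


if __name__ == "__main__":
    for theta_over_sigma in (0.0, 0.1, 0.5, 1.0, 2.0):
        prob = prob_negative_estimate(theta_over_sigma, 1.0)
        print(f"theta/sigma_tot = {theta_over_sigma:.1f}   "
              f"P(estimate < 0) = {prob:.3f}")
```

The fraction stays close to one half as long as the true \(\theta \) is small compared with \({\sigma _{\mathrm{tot}}} \), and falls off only once \(\theta \) becomes comparable to the measurement uncertainty.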

5.4 Cases of an artificial null that carries little or no belief

As noted above, the “core physics models” used in our searches typically include the SM as well as larger models in which the SM is embedded. In a typical search for BSM physics, the SM is the null hypothesis and carries a non-negligible belief. However, there does exist a class of searches for which physicists place little prior belief on the null hypothesis, namely when the null hypothesis is the SM with a missing piece! This occurs when experimenters are looking for the “first observation” of a phenomenon that is predicted by the SM to have non-zero strength \(\theta =\theta _1\), but which is yet to be confirmed in data. The null hypothesis is then typically defined to be the simple hypothesis \(\theta =\theta _0=0\), i.e., everything in the SM except the as-yet-unconfirmed phenomenon. While the alternative hypothesis could be taken to be the simple hypothesis \(\theta =\theta _1\), it is more common to take the alternative to be \(\theta >0\). Results are then reported in two pieces: (i) a simple-vs-composite hypothesis test that reports the \(p\) value for the null hypothesis, and (ii) confidence interval(s) for \(\theta \) at one or more confidence levels, which can then be compared to \(\theta _1\). This gives more flexibility in interpretation, including rejection of \(\theta _0=0\), but with a surprising value of \(\hat{\theta }\) that points to an alternative other than the SM value \(\theta _1\). Furthermore, as in all searches, collaborations typically present plots showing the distribution of \(z\) values obtained from Monte Carlo simulation of pseudo-experiments under each of the hypotheses. From these plots one can read off the “expected \(z\)” (usually defined as the median) for each hypothesis, and also get a sense of how likely a statistical fluctuation to the observed \(z\) is.

An example from Fermilab is the search for production of single top quarks via the weak force in proton-antiproton collisions (Abazov et al. 2009; Aaltonen et al. 2009; Fermilab 2009). This search was performed after the weak force was clearly characterized, and after top quarks were observed via their production in top-antitop quark pairs by the strong force. The search for single top-quark production was experimentally challenging, and the yields could have differed from expectations of the SM due to the possibility of BSM physics. But there was not much credence in the null hypothesis that production of single top quarks did not exist at all. Eventually that null was rejected at more than 5\(\sigma \). The interest remains on measured values and particularly confidence intervals for the production rates (via more than one mechanism), which thus far are consistent with SM expectations.

Another example is the search for a specific decay mode of the \({\mathrm{B}_\mathrm{s}}\) particle that contains a bottom quark (b) and anti-strange-quark (\(\overline{\mathrm{s}}\)). The SM predicts that a few out of \(10^9\) \({\mathrm{B}_\mathrm{s}}\) decays yield two muons (heavy versions of electrons) as decay products. This measurement has significant potential for discovering BSM physics that might enhance (or even reduce) the SM probability for this decay. The search used the null hypothesis that the \({\mathrm{B}_\mathrm{s}}\) decay to two muons had zero probability, a null that was recently rejected at the 5\(\sigma \) level. As with single top-quark production, the true physics interest was in the measured confidence interval(s), as there was negligible prior belief in the artificial null hypothesis of exactly zero probability for this decay mode. Of course, a prerequisite for measuring the small decay probability was high confidence in the presence of this process in the analyzed data. Thus the clear observation (rejection of the null) at high significance by each of two experiments was one of the highlights of results from the LHC in 2013 (Chatrchyan et al. 2013a; Aaij et al. 2013; CERN 2013).

As the Higgs boson is an integral part of the SM (required for the renormalizability of the SM), the operational null hypothesis used in searching for it was similarly taken to be an artificial model that included all of the SM except the Higgs boson, and which had no BSM physics to replace the Higgs boson with a “Higgs-like” boson. However, the attitude toward the hypotheses was not as simple as in the two previous examples. The null hypothesis of having “no Higgs boson” carried some prior belief, in the sense that it was perhaps plausible that BSM physics might mean that no SM Higgs boson (or Higgs-like boson) was observable in the manner in which we were searching. Furthermore, the search for the Higgs boson had such a long history, and had become so well-known in the press, that there would have been a notable cost to a false discovery claim. In my opinion, this was an important part of the justification for the high threshold that the experimenters used for declaring an observation. (Sect. 9 discusses factors affecting the threshold).

Analogous to the two previous examples, the implementation of the alternative hypothesis was as the complete SM with a composite \(\theta \) for the strength of the Higgs boson signal. (This generalized alternative allowed for a “Higgs-like” boson that perhaps could not be easily distinguished with data in hand.) However, the mass of the Higgs boson is a free parameter in the SM, and had been only partially constrained by previous measurements and theoretical arguments. Compared to the two previous examples, this complicated the search significantly, as the probabilities of different decay modes of the Higgs boson change dramatically as a function of its mass.

This null hypothesis of no Higgs (or Higgs-like) boson was definitively rejected upon the announcement of the observation of a new boson by both ATLAS and CMS on July 4, 2012. The confidence intervals for signal strength \(\theta \) in various decay sub-classes, though not yet precise, were in reasonable agreement with the predictions for the SM Higgs boson. Subsequently, much of the focus shifted to measurements of parameters describing different production and decay mechanisms. For measurements of continuous parameters, the null hypothesis has reverted to the complete SM with its Higgs boson, and the tests (e.g., Chatrchyan et al. (2013b, Fig. 22) and Aad et al. (2013, Figs. 10–13)) use the frequentist duality (Sect. 9 below) between interval estimation and hypothesis testing. One constructs (approximate) confidence intervals and regions for parameters controlling various distributions, and checks whether the predicted values for the SM Higgs boson are within the confidence regions. For an important simple-vs-simple hypothesis test of the quantum mechanical property called parity, \(p\) values for both hypotheses were reported (Chatrchyan et al. 2013c), as described in Sect. 2.2.

6 What sets the scale \(\tau \)?

As discussed by Jeffreys (1961, p. 251) and re-emphasized by Bartlett (1957), defining the scale \(\tau \) (the range of values of \(\theta \) over which the prior \(g(\theta )\) is relatively large) is a significant issue. Fundamentally, the scale appears to be personal and subjective, as is the more detailed specification of \(g(\theta )\). Berger and Delampady (1987a, b) state that “the precise null testing situation is a prime example in which objective procedures do not exist,” and “Testing a precise hypothesis is a situation in which there is clearly no objective Bayesian analysis and, by implication, no sensible objective analysis whatsoever.” Nonetheless, as discussed in this section, Berger and others have attempted to formulate principles for specifying default values of \(\tau \) for communicating scientific results.

Bartlett (1957) suggests that \(\tau \) might scale as \(1/\sqrt{n}\), canceling the sample-size scaling in \({\sigma _{\mathrm{tot}}} \) and making the Bayes factor independent of \(n\). Cox (2006, p. 106) suggests this as well, on the grounds that “\(\ldots \) in most, if not all, specific applications in which a test of such a hypothesis [\(\theta =\theta _0\)] is thought worth doing, the only serious possibilities needing consideration are that either the null hypothesis is (very nearly) true or that some alternative within a range fairly close to \(\theta _0\) is true.” This avoids the situation that he finds unrealistic, in which “the corresponding answer depends explicitly on \(n\) because, typically unrealistically, large portions of prior probability are in regions remote from the null hypothesis relative to the information in the data.” Part of Cox’s argument was already given by Jeffreys (1961, p. 251), “\(\dots \) the mere fact that it has been suggested that [\(\theta \)] is zero corresponds to some presumption that [\(\theta \)] is small.” Leamer (1978, p. 114) makes a similar point, “\(\dots \) a prior that allocates positive probability to subspaces of the parameter space but is otherwise diffuse represents a peculiar and unlikely blend of knowledge and ignorance”. (As Sect. 5.1 discusses, this “peculiar and unlikely blend” is common in HEP.) Andrews (1994) also explores the consequences of \(\tau \) shrinking with sample size, but these ideas seem not to have led to a standard. As another possible reconciliation, Robert (1993) considers \(\pi _1\) that increases with \(\tau \), but this seems not to have been pursued further.

Many attempts in the Bayesian literature to specify a default \(\tau \) arrive at a suggestion that does not depend on \(n\), and hence does not remove the dependence of the Ockham factor on \(n\). In the search for any non-subjective \(n\)-independent scale, the only option seemingly at hand is \({\sigma _{\mathrm{tot}}} \) when \(n=1\), i.e., the original \(\sigma \) (Eq. 1) that expresses the uncertainty of a single measurement. This was in fact suggested by Jeffreys (1961, p. 268), on the grounds that there is nothing else in the problem that can set the scale, and was followed, for example, in generalizations by Zellner and Siow (1980).
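To make the contrast concrete, the following minimal Python sketch compares the Bayes factor at fixed \(z\) under two of the choices discussed above. It assumes, purely for convenience, a Gaussian \(g(\theta )\) centered on \(\theta _0\) with standard deviation \(\tau \) (Jeffreys's own preference was a Cauchy form), a Gaussian measurement with \({\sigma _{\mathrm{tot}}} =\sigma /\sqrt{n}\), and an arbitrary proportionality constant of 3 in the \(1/\sqrt{n}\) scaling. The function and variable names are illustrative only.

```python
import math

def bayes_factor_01(z, sigma_tot, tau):
    """Bayes factor (null over alternative) for a Gaussian measurement.

    Assumes the estimate lies z standard deviations (sigma_tot) from theta_0
    and that g(theta) is Gaussian, centered on theta_0, with sd tau.
    """
    r = (tau / sigma_tot) ** 2
    # Ratio of N(theta_hat; theta_0, sigma_tot^2) to the marginal
    # N(theta_hat; theta_0, sigma_tot^2 + tau^2) under the alternative.
    return math.sqrt(1.0 + r) * math.exp(-0.5 * z * z * r / (1.0 + r))

def sigma_tot(sigma, n):
    """Uncertainty after n measurements, each with sd sigma."""
    return sigma / math.sqrt(n)

SIGMA = 1.0   # single-measurement uncertainty (arbitrary units)
Z = 5.0       # observed significance, held fixed as n grows
NS = [100, 10_000, 1_000_000]

# Choice 1: tau equal to the single-measurement sigma (n-independent scale).
unit_info = [bayes_factor_01(Z, sigma_tot(SIGMA, n), tau=SIGMA) for n in NS]

# Choice 2: tau shrinking as 1/sqrt(n); the constant 3.0 is arbitrary.
bartlett = [
    bayes_factor_01(Z, sigma_tot(SIGMA, n), tau=3.0 * SIGMA / math.sqrt(n))
    for n in NS
]

for n, b_unit, b_bart in zip(NS, unit_info, bartlett):
    print(f"n={n:>9d}  BF01(tau=sigma)={b_unit:.3e}  "
          f"BF01(tau~1/sqrt(n))={b_bart:.3e}")
```

Under these assumptions the first column grows roughly as \(\sqrt{n}\) at fixed \(z\), which is the sample-size dependence of the Ockham factor, while the second column does not change with \(n\).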

Kass and Wasserman (1995) do the same, which “has the interpretation of ‘the amount of information in the prior on [\(\theta \)] is equal to the amount of information about [\(\theta \)] contained in one observation’ ”. They refer to this as a “unit information prior”, citing Smith and Spiegelhalter (1980) as also using this “appealing interpretation of the prior.” It is not clear to me why this “unit information” approach is “appealing”, or how it could lead to useful, universally cross-calibrated Bayes factors in HEP. As discussed in Sect. 5.2, the detector may also have some intrinsic \({\sigma _{\mathrm{tot}}} \) for which no preferred \(n\) is evident. Raftery (1995a, pp. 132, 135) points out the same problem. After defining a prior for which, “roughly speaking, the prior distribution contains the same amount of information as would, on average, one observation”, he notes the obvious problem in practice: the “important ambiguity\(\dots \) the definition of [\(n\)], the sample size.” He gives several examples for which he has a recommendation.

Berger and Pericchi (2001, with commentary) review more general possibilities based on use of the information in a small subset of the data, and for one method claim that “this is the first general approach to the construction of conventional priors in nested models.” Berger (2008, 2011) applied one of these so-called “intrinsic priors” to a pedagogical example and its generalization from HEP. Unfortunately, I am not aware of anyone in HEP who has pursued these suggestions. Meanwhile, recently Bayarri et al. (2012) have reconsidered the issue and formulated principles resulting “\(\dots \) in a new model selection objective prior with a number of compelling properties.” I think that it is fair to conclude that this is still an active area of research.

6.1 Comments on non-subjective priors for estimation and model selection

For point and interval estimation, Jeffreys (1961) suggests two approaches for obtaining a prior for a physically non-negative quantity such as the magnitude of the charge \(q\) of the electron. Both involve invariance concepts. The first approach (pp. 120–123) considers only the parameter being measured. In his example, one person might consider the charge \(q\) to be the fundamental quantity, while another might consider \(q^2\) (or some other power \(q^m\)) to be the fundamental quantity. In spite of this arbitrariness of the power \(m\), everyone will arrive at consistent posterior densities if they each take the prior for \(q^m\) to be \(1/q^m\), since all expressions \(d(q^m)/q^m\) differ only by a proportionality constant. (Equivalently, they can all take the prior as uniform in \(\ln q^m\), i.e., in \(\ln q\).)
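The consistency claimed here follows from a one-line change of variables, written out for a generic power \(m \ne 0\):

\[ \frac{d(q^m)}{q^m} = \frac{m\,q^{m-1}\,dq}{q^m} = m\,\frac{dq}{q} = m\,d(\ln q). \]

The factor \(m\) is a constant that is absorbed when the posterior is normalized, so every choice of \(m\) corresponds to the same prior, uniform in \(\ln q\).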

Jeffreys’s more famous second approach, leading to his eponymous rule and priors, is based on the likelihood function and some averages over the sample space (i.e., over possible observations). The likelihood function is based on what statisticians call the measurement “model”. This means that “Jeffreys’s prior” is derived not by considering only the parameter being measured, but rather by examining the measuring apparatus. For example, Jeffreys’s prior for a Gaussian (normal) measurement apparatus is uniform in the measured value. If the measuring apparatus has Gaussian response in \(q\), the prior is uniform in \(q\). If the measuring apparatus has Gaussian response in \(q^2\), then the prior is uniform in \(q^2\). If the physical parameter is measured with Gaussian resolution and is physically non-negative, as for the charge magnitude \(q\), then the functional form of the prior remains the same (uniform) and is set to zero in the unphysical region (Berger 1985, p. 89).

Berger and Bernardo refer to “non-subjective” priors such as Jeffreys’s prior as “objective” priors. This strikes me as rather like referring to “non-cubical” volumes as “spherical” volumes; one is changing the usual meaning of the word. Bernardo (2011b) defends the use of “objective” as follows. “No statistical analysis is really objective, since both the experimental design and the model assumed have very strong subjective inputs. However, frequentist procedures are often branded as ‘objective’ just because their conclusions are only conditional on the model assumed and the data obtained. Bayesian methods where the prior function is directly derived from the assumed model are objective in this limited, but precise sense.”

Whether or not this defense is accepted, so-called “objective” priors can be deemed useful for point and interval estimation, even to frequentists, as there is a deep (frequentist) reason for their potential appeal. Because the priors are derived by using knowledge of the properties of the measuring apparatus, it is at least conceivable that Bayesian credible intervals based on them might have better-than-typical frequentist coverage properties when interpreted as approximate frequentist confidence intervals. As Welch and Peers (1963) showed, for Jeffreys’s priors this is indeed the case for one-parameter problems. Under suitable regularity conditions, the approximate coverage of the resulting Bayesian credible intervals is uniquely good to order \(1/n\), compared to the slower convergence for other priors, which is good to order \(1/\sqrt{n}\). Hence, except at very small \(n\), by using “objective” priors, one can (at least approximately) obey the Likelihood Principle and obtain decent frequentist coverage, which for some is a preferred “compromise”. Reasonable coverage can also be the experience for Reference Priors with more than one parameter (Philippe and Robert 1998, and references therein). This can happen even though objective priors are improper (i.e., not normalizable) for many prototype problems; the ill-defined normalization constant cancels out in the calculation of the posterior. (Equivalently, if a cutoff parameter is introduced to make the prior proper, the dependence on the cutoff vanishes as it increases without bound.)
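As a toy illustration of this coverage property (not an example taken from the cited literature), consider \(n\) exponentially distributed observations with unknown rate \(\lambda \) and sum \(S\). The symbols \(\lambda \) and \(S\), the choice of model, and all function names below are assumptions introduced only for this sketch. For this model Jeffreys's prior is \(1/\lambda \), the posterior for \(\lambda \) is a Gamma density with integer shape, and the frequentist coverage of a one-sided credible upper limit can be computed in closed form. The sketch compares it with a prior uniform in \(\lambda \).

```python
import math

def gamma_cdf(k, t):
    """CDF at t of a Gamma distribution with integer shape k and unit rate."""
    term = math.exp(-t)          # j = 0 term of the Poisson-type sum
    total = term
    for j in range(1, k):
        term *= t / j            # builds t^j / j! * exp(-t) iteratively
        total += term
    return 1.0 - total

def gamma_quantile(k, alpha):
    """Solve gamma_cdf(k, t) = alpha for t by bisection."""
    lo, hi = 0.0, k + 10.0 * math.sqrt(k) + 10.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if gamma_cdf(k, mid) < alpha:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def coverage(n, alpha, shape_shift):
    """Frequentist coverage of the credible upper limit on the rate.

    The posterior for the rate is Gamma(n + shape_shift, rate=S):
    shape_shift = 0 for the 1/lambda prior, 1 for a prior flat in lambda.
    The upper limit is t / S, and lambda * S follows Gamma(n, 1) under
    repeated sampling, so the coverage is gamma_cdf(n, t).
    """
    t = gamma_quantile(n + shape_shift, alpha)
    return gamma_cdf(n, t)

ALPHA = 0.9  # nominal credibility of the upper limit
for n in (1, 10, 100):
    print(f"n={n:>3d}  coverage(1/lambda prior)={coverage(n, ALPHA, 0):.4f}  "
          f"coverage(flat prior)={coverage(n, ALPHA, 1):.4f}")
```

In this particular model the matching for the \(1/\lambda \) prior happens to be exact rather than merely good to order \(1/n\), which is a special feature of a pure scale parameter. The flat-prior limit over-covers, and its discrepancy shrinks only slowly as \(n\) grows.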

For model selection, Jeffreys proposed a third approach to priors. As discussed in Sects. 2 and 3, from the point of view of the larger model, the prior is irregular, as it is described by a probability mass (a Dirac \(\delta \)-function) on the null value \(\theta _0\) that has measure zero. The prior \(g(\theta )\) on the rest of \(\Theta \) must be normalizable (eliminating improper priors used for estimation) in order for the posterior probability to be well-defined. For Gaussian measurements, Jeffreys argued that \(g\) should be a Cauchy density (“Breit–Wigner” in HEP).
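The different roles of the prior normalization in estimation and in model selection can be seen in a small numerical sketch. It assumes a single Gaussian measurement \(x\) with known \(\sigma \), a null value \(\theta _0 = 0\), and a uniform prior on \([-L, L]\), where the cutoff \(L\) (called `cutoff` below) is a hypothetical device for making the prior proper. The uniform form is used only for simplicity and is not the Cauchy form that Jeffreys advocated.

```python
import math

def phi(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def normal_pdf(x, mu, sigma):
    """Gaussian probability density."""
    u = (x - mu) / sigma
    return math.exp(-0.5 * u * u) / (sigma * math.sqrt(2.0 * math.pi))

def credible_prob(x, sigma, cutoff):
    """Posterior probability that theta lies in [x - sigma, x + sigma],
    for a prior uniform on [-cutoff, cutoff]."""
    lo = max(x - sigma, -cutoff)
    hi = min(x + sigma, cutoff)
    num = phi((hi - x) / sigma) - phi((lo - x) / sigma)
    den = phi((cutoff - x) / sigma) - phi((-cutoff - x) / sigma)
    return num / den

def bayes_factor_01(x, sigma, cutoff, theta0=0.0):
    """Bayes factor (null over alternative) with g uniform on
    [-cutoff, cutoff], i.e. g(theta) = 1 / (2 * cutoff)."""
    like_null = normal_pdf(x, theta0, sigma)
    marginal_alt = (
        phi((cutoff - x) / sigma) - phi((-cutoff - x) / sigma)
    ) / (2.0 * cutoff)
    return like_null / marginal_alt

X, SIGMA = 3.0, 1.0            # a hypothetical 3-sigma observation
CUTOFFS = [10.0, 100.0, 1000.0]

probs = [credible_prob(X, SIGMA, c) for c in CUTOFFS]
bfs = [bayes_factor_01(X, SIGMA, c) for c in CUTOFFS]

for c, p, b in zip(CUTOFFS, probs, bfs):
    print(f"cutoff={c:>7.1f}  P(interval)={p:.4f}  BF01={b:.4f}")
```

Under these assumptions the credible-interval probability is insensitive to the cutoff once it is well beyond the data, whereas the Bayes factor grows in proportion to the cutoff. This is why a prior that is adequate for estimation cannot simply be carried over to model selection.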

Apart from the subtleties that led Jeffreys to choose the Cauchy form for \(g\), there is the major issue of the scale \(\tau \) of \(g\), as discussed in Sect. 6. The typical assumption of “objective Bayesians” is that, basically by definition, an objective \(\tau \) is one that is derived from the measuring apparatus. And then, assuming that \({\sigma _{\mathrm{tot}}} ^2\) reflects \(n\) measurements using an apparatus that provides a variance of \(\sigma ^2\) for each, as in Eq. 1, they invoke \(\sigma \) as the scale of the prior \(g\).

Lindley (e.g., in commenting on Bernardo (2011b)) argues in cases like this that objective Bayesians can get lost in the Greek letters and lose contact with the actual context. I too find it puzzling that one can first argue that the Ockham factor is a crucial feature of Bayesian logic that is absent from frequentist reasoning, and then resort to choosing this factor based on the measurement apparatus, and on a concept of sample size \(n\) that can be difficult to identify. The textbook by Lee (2004, p. 130) appears to agree that this is without compelling foundation: “Although it seems reasonable that [\(\tau \)] should be chosen proportional to [\(\sigma \)], there does not seem to be any convincing argument for choosing this to have any particular value....”

It seems that, in order to be useful, any “objective” choice of \(\tau \) must provide demonstrable cross-calibration of experiments with different \({\sigma _{\mathrm{tot}}} \) when \(n\) is not well-defined. Another voice emphasizing the practical nature of the problem is that of Kass (2009), saying that Bayes factors for hypothesis testing “remain sensitive—to first order—to the choice of the prior on the parameter being tested.” The results are “contaminated by a constant that does not go away asymptotically.” He says that this approach is “essentially nonexistent” in neuroscience.

7 The reference analysis approach of Bernardo

Bernardo (1999) (with critical discussion) defines Bayesian hypothesis testing in terms very different from calculating the posterior probability of \(H_0\): \(\theta =\theta _0\). He proposes to judge whether \(H_0\) is compatible (his italics) with the data:

“Any Bayesian solution to the problem posed will obviously require a prior distribution \(p(\theta )\) over \(\Theta \), and the result may well be very sensitive to the particular choice of such prior; note that, in principle, there is no reason to assume that the prior should necessarily be concentrated around a particular \(\theta _0\); indeed, for a judgement on the compatibility of a particular parameter value with the observed data to be useful for scientific communication, this should only depend on the assumed model and the observed data, and this requires some form of non-subjective prior specification for \(\theta \) which could be argued to be ‘neutral’; a sharply concentrated prior around a particular \(\theta _0\) would hardly qualify.” He later continues, “\(\dots \) nested hypothesis testing problems are better described as specific decision problems about the choice of a useful model and that, when formulated within the framework of decision theory, they do have a natural, fully Bayesian, coherent solution.”