Abstract

We provide a method for fast and exact simulation of Gaussian random fields on the sphere having isotropic covariance functions. The method is then extended to Gaussian random fields defined over the sphere cross time, with covariance functions that depend on geodesic distance in space and on temporal separation. The crux of the method is the use of block circulant matrices obtained by working on regular grids defined over longitude and latitude.


1 Introduction

Simulation of Gaussian random fields (GRFs) is important for the use of Monte Carlo techniques. Considerable work has been done to simulate GRFs defined over the d-dimensional Euclidean space, \(\mathbb {R}^{d},\) with isotropic covariance functions. The reader is referred to Wood and Chan (1994), Dietrich and Newsam (1997), Gneiting et al. (2006) and Park and Tretyakov (2015), and the references therein. See also Emery et al. (2016) for extensions to the anisotropic and non-stationary cases. In contrast, the literature on GRFs defined over the two-dimensional sphere or the sphere cross time is sparse, and only a few simulation methods for random fields on the sphere are available. Among them are Cholesky decomposition and Karhunen–Loève expansion (Lang and Schwab 2015). More recently, Creasey and Lang (2018) proposed an algorithm that decomposes a random field into one-dimensional GRFs. These 1d processes are simulated together with their derivatives and are then transformed into an isotropic Gaussian random field on the sphere by the Fast Fourier Transform (FFT). Following Wood and Chan (1994), Cholesky decomposition is considered an exact method, that is, the simulated GRF follows exactly the target multivariate Gaussian distribution. Simulation based on Karhunen–Loève expansion or Markov random fields is considered approximate, because the simulated GRF follows only an approximation of the multivariate Gaussian distribution (see Lang and Schwab 2015; Møller et al. 2015). Extensions to spatially isotropic and temporally stationary GRFs on the sphere cross time, using a space-time Karhunen–Loève expansion, were considered in Clarke et al. (2018).

It is well known that the computational cost of simulating a random vector at n space-time locations using the Cholesky decomposition is \({\mathcal {O}}(n^{3}),\) which is prohibitively expensive for large values of n. The Karhunen–Loève expansion requires the Mercer coefficients of the covariance function (Lang and Schwab 2015; Clarke et al. 2018), which are rarely known. Finally, the method proposed by Creasey and Lang (2018) is restricted to a special case of spectral decomposition, which limits its generality.

One way to reduce the computational burden is to exploit the relationship between torus wrapping, block circulant matrices and the FFT. This relationship was introduced by Wood and Chan (1994) and Dietrich and Newsam (1997) when developing circulant embedding methods to simulate isotropic GRFs over regular grids of \(\mathbb {R}^{d}\). On the sphere, regular polyhedra are natural candidates for meshes. However, there exist only five regular polyhedra, the Platonic solids. The one with the most faces is the icosahedron, with 12 vertices, 20 faces and 30 edges (Coxeter 1973), from which we can draw at most 30 regularly spaced points on the sphere, defined as the intersections with the sphere of the lines joining the midpoints of opposite edges.

For a GRF on the sphere, using spherical coordinates, Jun and Stein (2008) make use of circulant matrices to compute the exact likelihood of non-stationary covariance functions when the GRF is observed on a regular grid over \((0,\pi )\times (0,2\pi )\).

In this paper, we revisit the circulant structure obtained by Jun and Stein (2008) and use it to develop a fast and exact simulation method for isotropic GRFs on the sphere. We then extend this method to simulate spatially isotropic and temporally stationary GRFs on the sphere cross time. The proposed method requires an isotropic covariance function on the sphere, or a spatially isotropic and temporally stationary covariance function on the sphere cross time. One of its main advantages is the large reduction of the computational cost, to \({\mathcal {O}}(n\log (n))\).

The paper is organized as follows. Section 2 details how to obtain circulant matrices on the sphere for isotropic covariance functions. In Sect. 3, we extend the simulation method to the case of the sphere cross time for spatially isotropic and temporally stationary covariance functions. The algorithms are detailed in Sect. 4, and in Sect. 5 we provide a simulation study. Finally, Sect. 6 contains some concluding remarks.

2 Circulant matrices over two-dimensional spheres

Let \({\mathbb {S}}^{2} = \{s \in \mathbb {R}^{3}: \Vert s\Vert = 1\} \subset \mathbb {R}^{3}\) be the unit sphere centered at the origin, equipped with the geodesic distance \(\theta (s_1,s_2) := \arccos (\langle s_1,s_2 \rangle )\), for \(s_1,s_2 \in {\mathbb {S}}^{2}\). We propose a new approach to simulate a finite-dimensional realization of a real-valued, zero-mean, stationary, geodesically isotropic GRF \(\mathbf{X } = \{X(s): s \in {\mathbb {S}}^{2}\}\) with a given covariance function

$$\begin{aligned} R(s_1,s_2) = \text{ cov }\{X(s_1),X(s_2)\} = r\{\theta (s_1,s_2)\}, \quad s_1,s_2 \in {\mathbb {S}}^{2}. \end{aligned}$$

Throughout the paper, we refer to either R or r as the covariance function of \(\mathbf{X }\). A list of isotropic covariance functions is provided in Gneiting (2013). In what follows, we write \(\theta \) for \(\theta (s_1,s_2)\) whenever there is no risk of confusion.

For a stationary isotropic random field on \({\mathbb {S}}^{2},\) the covariance functions and variograms are uniquely determined through the relation (Huang et al. 2011)

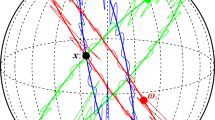

where \(\sigma ^{2} = r(0)\). The basic requirements to simulate a GRF with the proposed method are a grid that is regular in both longitude and latitude, and the evaluation of the covariance function over this grid. For two integers \(M,N \ge 2\), let \(I = \{1,\ldots ,N \}\) and \(J = \{1,\ldots ,M \},\) and define \(\lambda _{i} = 2\pi i/N\) and \(\phi _{j} = \pi j/M\) for \(i \in I\) and \(j \in J \), respectively. In the following, \(s_{ij}=(\lambda _i,\phi _j)\) denotes the longitude–latitude coordinates of the point \(s_{ij} \in {\mathbb {S}}^{2}\), where \(\phi _j\) is measured from the north pole, and the set \(\varOmega _{MN} = \{(\lambda _{i},\phi _{j}): i \in I, j \in J \}\) defines a regular grid over \({\mathbb {S}}^{2}\) (see Fig. 1). The Cartesian coordinates of \(s_{ij}\), expressed in \(\mathbb {R}^{3}\), are

$$\begin{aligned} s_{ij} = \left( \sin \phi _{j}\cos \lambda _{i},\ \sin \phi _{j}\sin \lambda _{i},\ \cos \phi _{j}\right) ^{\top }, \quad i \in I,\ j \in J. \end{aligned}$$(2)

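Let us now define, for each longitude index \(i \in I\), the random vector

$$\begin{aligned} \mathbf{X }_{i} = \left[ X(s_{i1}), X(s_{i2}), \ldots , X(s_{iM})\right] ^{\top }, \end{aligned}$$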

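and the random field restricted to \(\varOmega _{MN}\) by \(\mathbf{X }_\varOmega = [\mathbf{X }_{1}^{\top }, \mathbf{X }_{2}^{\top }, \ldots , \mathbf{X }_{N}^{\top }]^{\top }\). The matrix \(\pmb \varSigma = \text{ Var }[\mathbf{X }_{\varOmega }]\) has the block structure

$$\begin{aligned} \pmb \varSigma = \begin{bmatrix} \pmb \varSigma _{1,1} & \pmb \varSigma _{1,2} & \cdots & \pmb \varSigma _{1,N} \\ \pmb \varSigma _{2,1} & \pmb \varSigma _{2,2} & \cdots & \pmb \varSigma _{2,N} \\ \vdots & \vdots & \ddots & \vdots \\ \pmb \varSigma _{N,1} & \pmb \varSigma _{N,2} & \cdots & \pmb \varSigma _{N,N} \end{bmatrix}, \end{aligned}$$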

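where \(\pmb \varSigma _{i,j} = \text{ cov }(\mathbf{X }_{i},\mathbf{X }_{j}) = \pmb \varSigma _{j,i}\). Notice that the grid \(\varOmega _{MN}\) is defined with respect to the north pole \(e_{N} = (0,0,1)\). However, under the isotropy assumption, the elements of \(\pmb \varSigma \) depend only on geodesic distances, so the choice of the north pole plays no role. Moreover, the geodesic distance can be written as

$$\begin{aligned} \theta (s_{ij},s_{kl}) = \arccos \left\{ \sin \phi _{j}\sin \phi _{l}\cos (\lambda _{i}-\lambda _{k}) + \cos \phi _{j}\cos \phi _{l}\right\} , \quad i,k \in I,\ j,l \in J, \end{aligned}$$(3)

which depends on the longitude indices i and k only through \(\cos (\lambda _{i}-\lambda _{k}) = \cos \{2\pi (i-k)/N\}\), that is, only through \(|i-k|\) modulo N.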

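Equation (3) implies \(\pmb \varSigma _{i,j} = \pmb \varSigma _{1,|j-i|+1} = \pmb \varSigma _{1,N - |i-j| +1}\), with block indices taken modulo N. Thus, writing \(\pmb \varSigma _{i} = \pmb \varSigma _{1,i}\), we get

$$\begin{aligned} \pmb \varSigma = \begin{bmatrix} \pmb \varSigma _{1} & \pmb \varSigma _{2} & \cdots & \pmb \varSigma _{N} \\ \pmb \varSigma _{N} & \pmb \varSigma _{1} & \cdots & \pmb \varSigma _{N-1} \\ \vdots & \vdots & \ddots & \vdots \\ \pmb \varSigma _{2} & \pmb \varSigma _{3} & \cdots & \pmb \varSigma _{1} \end{bmatrix}, \qquad \pmb \varSigma _{i} = \pmb \varSigma _{N-i+2},\ i = 2,\ldots ,N. \end{aligned}$$(4)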
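The block circulant structure in (4) can be checked numerically on a small grid. The NumPy sketch below is our own illustration, not the authors' code. It builds \(\varOmega _{MN}\) with \(\phi _j\) measured from the north pole, evaluates the exponential model \(r_0\) of Sect. 5 (with \(\phi _0 = 0.5243\)) at all pairwise geodesic distances, and checks two properties: each block \(\pmb \varSigma _{i,k}\) depends only on \((k-i)\) mod N, and the first block row satisfies \(\pmb \varSigma _{k} = \pmb \varSigma _{N-k+2}\). The helper names (`sphere_grid`, `cartesian`, `geodesic`, `block`) are hypothetical.

```python
import numpy as np

def sphere_grid(M, N):
    """Longitudes λ_i = 2πi/N and angles φ_j = πj/M measured from the north pole."""
    lam = 2 * np.pi * np.arange(1, N + 1) / N
    phi = np.pi * np.arange(1, M + 1) / M
    return lam, phi

def cartesian(lam, phi):
    """Unit vectors in longitude-major order, matching X_Ω = [X_1, ..., X_N]."""
    L, P = np.meshgrid(lam, phi, indexing="ij")          # both of shape (N, M)
    xyz = np.stack([np.sin(P) * np.cos(L), np.sin(P) * np.sin(L), np.cos(P)], axis=-1)
    return xyz.reshape(-1, 3)

def geodesic(xyz):
    """Pairwise θ = arccos<s1, s2>; clipping guards against rounding beyond ±1."""
    return np.arccos(np.clip(xyz @ xyz.T, -1.0, 1.0))

def block(S, i, k, M):
    """The M × M block Σ_{i,k} of S (0-based block indices)."""
    return S[i * M:(i + 1) * M, k * M:(k + 1) * M]

M, N = 5, 8
lam, phi = sphere_grid(M, N)
Sigma = np.exp(-geodesic(cartesian(lam, phi)) / 0.5243)  # exponential model r_0

# Block circulant: Σ_{i,k} depends only on (k - i) mod N.
circulant = all(np.allclose(block(Sigma, i, k, M), block(Sigma, 0, (k - i) % N, M))
                for i in range(N) for k in range(N))
# Symmetric first block row: Σ_k = Σ_{N-k+2} (1-based), i.e. index k <-> -k mod N (0-based).
symmetric_row = all(np.allclose(block(Sigma, 0, k, M), block(Sigma, 0, (-k) % N, M))
                    for k in range(N))
print(circulant, symmetric_row)  # True True
```

Because of this structure, only the first block row \(\pmb \varSigma _1,\ldots ,\pmb \varSigma _N\) (N blocks of size \(M \times M\)) has to be evaluated and stored.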

Equation (4) shows that \(\pmb \varSigma \) is a symmetric block circulant matrix (Davis 1979), which is related to the discrete Fourier transform as follows. Let \(\varvec{I}_{M}\) be the identity matrix of order M and \(\varvec{F}_{N}\) be the Fourier matrix of order N, that is, \([\varvec{F}_{N}]_{i,k} = w^{(i-1)(k-1)}\) for \(1\le i,k \le N\), where \(w = \hbox {e}^{-2\pi \imath /N}\) and \(\imath = \sqrt{-1}\). Following Zhihao (1990), the matrix \(\pmb \varSigma \) is unitarily block diagonalizable by \(N^{-1/2}(\varvec{F}_{N} \otimes \varvec{I}_{M})\), where \(\otimes \) is the Kronecker product. That is, there exist N matrices \(\pmb \varLambda _{i}\), \(i\in I\), of dimension \(M\times M,\) such that

$$\begin{aligned} \pmb \varSigma = \frac{1}{N}(\varvec{F}_{N} \otimes \varvec{I}_{M})\, \pmb \varLambda \, (\varvec{F}_{N} \otimes \varvec{I}_{M})^{*}, \end{aligned}$$(5)

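with

$$\begin{aligned} \pmb \varLambda = \begin{bmatrix} \pmb \varLambda _{1} & \mathbf 0 & \cdots & \mathbf 0 \\ \mathbf 0 & \pmb \varLambda _{2} & \cdots & \mathbf 0 \\ \vdots & \vdots & \ddots & \vdots \\ \mathbf 0 & \mathbf 0 & \cdots & \pmb \varLambda _{N} \end{bmatrix}, \end{aligned}$$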

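where \(\varvec{B}^{*}\) denotes the conjugate transpose of a matrix \(\varvec{B}\) and \(\mathbf 0\) is a matrix of zeros of adequate size. Decomposition (5) implies that the diagonal blocks of \(\pmb \varLambda \) can be computed through the discrete Fourier transform of the first block row of \(\pmb \varSigma \), that is,

$$\begin{aligned} \begin{bmatrix} \pmb \varLambda _{1} \\ \vdots \\ \pmb \varLambda _{N} \end{bmatrix} = (\varvec{F}_{N} \otimes \varvec{I}_{M}) \begin{bmatrix} \pmb \varSigma _{1} \\ \vdots \\ \pmb \varSigma _{N} \end{bmatrix}. \end{aligned}$$(6)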

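Componentwise, (6) becomes

$$\begin{aligned} [\pmb \varLambda _{i}]_{j,l} = \sum _{k=1}^{N} w^{(i-1)(k-1)} [\pmb \varSigma _{k}]_{j,l}, \quad i \in I, \end{aligned}$$(7)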

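where \(j,l \in J.\) Since \(\pmb \varSigma \) is positive definite (semi-definite), it follows directly from decomposition (5) that \(\pmb \varLambda \) is positive definite (semi-definite), and thus so is each matrix \(\pmb \varLambda _i\), \(i \in I\). Hence, we get

$$\begin{aligned} \pmb \varSigma ^{1/2} = \frac{1}{N}(\varvec{F}_{N} \otimes \varvec{I}_{M})\, \pmb \varLambda ^{1/2} \, (\varvec{F}_{N} \otimes \varvec{I}_{M})^{*}, \qquad \pmb \varLambda ^{1/2} = \text{ diag }\left( \pmb \varLambda _{1}^{1/2},\ldots ,\pmb \varLambda _{N}^{1/2}\right) , \end{aligned}$$(8)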

which is what is needed for simulation. A simulation algorithm based on Eq. (8) is provided in Sect. 4.
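The block Fourier decomposition can be verified numerically. The sketch below is our own and takes the decomposition in the normalized form \(\pmb \varSigma = N^{-1}(\varvec{F}_{N} \otimes \varvec{I}_{M})\pmb \varLambda (\varvec{F}_{N} \otimes \varvec{I}_{M})^{*}\), with \(\pmb \varLambda _i = \sum _k w^{(i-1)(k-1)}\pmb \varSigma _k\). NumPy's `np.fft.fft` uses the kernel \(\hbox {e}^{-2\pi \imath ik/N}\), which matches \(w\). The code checks that the blocks are real, rebuilds \(\pmb \varSigma \), and checks that \(N^{-1}(\varvec{F}_{N} \otimes \varvec{I}_{M})\pmb \varLambda ^{1/2}(\varvec{F}_{N} \otimes \varvec{I}_{M})^{*}\) squares to \(\pmb \varSigma \). On the grid of Sect. 2, \(\phi _M = \pi \) places the whole last ring at the south pole, so \(\pmb \varSigma \) is singular. For that reason the sketch uses a symmetric eigenvalue-based square root that clips rounding-level negative eigenvalues. The helper names `sphere_cov` and `psd_sqrt` are hypothetical.

```python
import numpy as np

def sphere_cov(M, N, cov):
    """Σ on Ω_MN in longitude-major order; φ_j = πj/M is measured from the north pole."""
    lam = 2 * np.pi * np.arange(1, N + 1) / N
    phi = np.pi * np.arange(1, M + 1) / M
    L, P = np.meshgrid(lam, phi, indexing="ij")
    xyz = np.stack([np.sin(P) * np.cos(L), np.sin(P) * np.sin(L), np.cos(P)],
                   axis=-1).reshape(-1, 3)
    return cov(np.arccos(np.clip(xyz @ xyz.T, -1.0, 1.0)))

def psd_sqrt(A):
    """Symmetric square root of a symmetric PSD matrix; tiny negative eigenvalues are clipped."""
    vals, vecs = np.linalg.eigh(A)
    return (vecs * np.sqrt(np.clip(vals, 0.0, None))) @ vecs.T

M, N = 4, 6
Sigma = sphere_cov(M, N, lambda t: np.exp(-t / 0.5243))

# First block row Σ_1, ..., Σ_N stacked as an (N, M, M) array.
first_row = np.stack([Sigma[:M, k * M:(k + 1) * M] for k in range(N)])

# Λ_i = Σ_k w^{(i-1)(k-1)} Σ_k: an FFT over the block index k.
Lam = np.fft.fft(first_row, axis=0)
print(np.allclose(Lam.imag, 0.0))    # True: real because Σ_k = Σ_{N-k+2}
Lam = Lam.real

F = np.fft.fft(np.eye(N))            # [F_N]_{i,k} = w^{(i-1)(k-1)}
U = np.kron(F, np.eye(M))            # F_N ⊗ I_M
D = np.zeros((N * M, N * M))         # block diagonal Λ
D_half = np.zeros((N * M, N * M))    # block diagonal Λ^{1/2}
for i in range(N):
    D[i * M:(i + 1) * M, i * M:(i + 1) * M] = Lam[i]
    D_half[i * M:(i + 1) * M, i * M:(i + 1) * M] = psd_sqrt(Lam[i])

print(np.allclose(U @ D @ U.conj().T / N, Sigma))    # True
S_half = U @ D_half @ U.conj().T / N                  # square root of Σ
print(np.allclose(S_half @ S_half, Sigma))            # True
```

Only N eigendecompositions of size \(M \times M\) are needed, instead of one of size \(NM \times NM\).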

3 Circulant embedding on the sphere cross time

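We now generalize this approach to the sphere cross time for a spatially isotropic and temporally stationary GRF \(\mathbf{X } = \{X(s,t) : (s,t) \in {\mathbb {S}}^{2}\times {\mathbb {R}} \}\) with zero mean and a given covariance function, R, defined as

$$\begin{aligned} R\{(s_1,t_1),(s_2,t_2)\} = \text{ cov }\{X(s_1,t_1),X(s_2,t_2)\} = r\{\theta (s_1,s_2),|t_1-t_2|\}, \end{aligned}$$

for \((s_i,t_i) \in {\mathbb {S}}^2 \times \mathbb {R}, \quad i=1,2\). We analogously define the space-time stationary variogram \(\gamma : [0,\pi ] \times {\mathbb {R}} \mapsto \mathbb {R}\) as

$$\begin{aligned} \gamma (\theta ,u) = \sigma ^{2} - r(\theta ,|u|), \quad \theta \in [0,\pi ],\ u \in \mathbb {R}, \end{aligned}$$(9)

where \(\sigma ^{2} = r(0,0)\).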

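Let \(H>0\) be the time horizon up to which we wish to simulate. In addition, let T be a positive integer and define the regular time grid \(t_{\tau } = \tau H/T\) with \(\tau \in \{0,\ldots ,T\}\). Define the set \(\varOmega _{MNT} = \{(\lambda _{i},\phi _{j},t_{\tau }): i \in I, j\in J,\tau \in \{{0},\dots ,T\} \},\) and the random vectors

$$\begin{aligned} \mathbf{X }_{i}(\tau ) = \left[ X(s_{i1},t_{\tau }), \ldots , X(s_{iM},t_{\tau })\right] ^{\top }, \qquad \mathbf{X }(\tau ) = \left[ \mathbf{X }_{1}(\tau )^{\top }, \ldots , \mathbf{X }_{N}(\tau )^{\top }\right] ^{\top }, \end{aligned}$$

for \(i \in I\) and \(\tau \in \{0,\ldots ,T\}\).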

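The associated covariance matrices are

$$\begin{aligned} \pmb \varPsi _{i,k}(\tau ,\tau ') = \text{ cov }\left( \mathbf{X }_{i}(\tau ),\mathbf{X }_{k}(\tau ')\right) , \qquad \pmb \varPsi (\tau ,\tau ') = \text{ cov }\left( \mathbf{X }(\tau ),\mathbf{X }(\tau ')\right) , \qquad \pmb \varPsi = \left[ \pmb \varPsi (\tau ,\tau ')\right] _{\tau ,\tau '=0}^{T}, \end{aligned}$$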

with \(i,k \in I\) and \({0} \le \tau ,\tau ' \le T\). Temporal stationarity implies that \(\pmb \varPsi _{i,k}(\tau ,\tau ') = \pmb \varPsi _{i,k}(|\tau '-\tau |).\) Therefore, \(\pmb \varPsi (\tau ,\tau ') = \pmb \varPsi (\tau ',\tau ) = \pmb \varPsi (|\tau '- \tau |).\) As a consequence, for fixed \(\tau ,\tau '\), the matrix \(\pmb \varPsi (|\tau '-\tau |)\) is an \(NM\times NM\) block circulant matrix; however, \(\pmb \varPsi \) is not. To tackle this problem, we consider the torus-wrapped extension of the grid \(\varOmega _{MNT}\) over the time variable, detailed as follows. First, let \(\kappa \) be a positive integer and let \(g: \mathbb {R}\mapsto \mathbb {R}\) be defined as

Then, the matrix

is an \((NM(2\kappa T - 1))\times (NM(2\kappa T - 1))\) block circulant matrix. Since each \(\pmb \varPsi (g({t}))\) is itself a block circulant matrix for \(0 \le {t} \le 2\kappa T - 1\), applying decomposition (5) gives

with

where \(\pmb \Upsilon _{i}\) is an \(M\times M\) matrix for all \(i \in I\). When \({\tilde{\pmb \varPsi }}\) is positive definite, this decomposition allows the square root of \({\tilde{\pmb \varPsi }}\) to be computed using the FFT algorithm twice.
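The space-time construction can be sketched with a two-dimensional FFT, over wrapped time and over longitude. Below is a minimal sketch under stated assumptions, not the authors' Algorithm 2. It wraps the \(T+1\) time points onto a cycle of \(L = 2\kappa T\) points with wrapped lag \(\min (m, L-m)H/T\). This is the common minimal embedding; the paper's own wrapping function g and its \((2\kappa T-1)\)-block size may differ in detail. The first block row is transformed with `np.fft.fft2` to obtain \(M \times M\) blocks playing the role of \(\pmb \Upsilon \). Each block gets an eigenvalue-based square root, and the result is mapped back with a second FFT. As in the spatial case, the real and imaginary parts give two independent realizations. The name `space_time_sim` and the separable test covariance are hypothetical.

```python
import numpy as np

def space_time_sim(M, N, T, H, cov, kappa=1, rng=None):
    """Two independent realizations on Ω_MNT, each of shape (T+1, N, M), assuming
    cov(theta, u) is spatially isotropic, temporally stationary and broadcasts."""
    rng = np.random.default_rng(rng)
    lam = 2 * np.pi * np.arange(1, N + 1) / N
    phi = np.pi * np.arange(1, M + 1) / M                   # angles from the north pole
    L = 2 * kappa * T                                        # time cycle length (assumed)
    m = np.arange(L)
    lag = np.minimum(m, L - m) * H / T                       # torus-wrapped time lags
    cos_t = (np.sin(phi)[None, :, None] * np.sin(phi)[None, None, :]
             * np.cos(lam[:, None, None] - lam[0])
             + np.cos(phi)[None, :, None] * np.cos(phi)[None, None, :])
    theta = np.arccos(np.clip(cos_t, -1.0, 1.0))             # θ(s_{1j}, s_{kl}), shape (N, M, M)
    first_row = cov(theta[None], lag[:, None, None, None])   # shape (L, N, M, M)
    ups = np.fft.fft2(first_row, axes=(0, 1)).real           # M × M blocks (role of Υ)
    vals, vecs = np.linalg.eigh(ups)
    if vals.min() < -1e-8 * vals.max():
        raise ValueError("embedding is not nonnegative definite; increase kappa")
    root = (vecs * np.sqrt(np.clip(vals, 0.0, None))[..., None, :]) @ np.swapaxes(vecs, -1, -2)
    W = rng.standard_normal((L, N, M)) + 1j * rng.standard_normal((L, N, M))
    Y = np.einsum("pijk,pik->pij", root, W)                  # block-wise Υ^{1/2} W
    X = np.fft.fft2(Y, axes=(0, 1)) / np.sqrt(L * N)
    return X.real[: T + 1], X.imag[: T + 1]                  # keep the original T+1 times

# Hypothetical separable model, used only to exercise the code.
sep = lambda theta, u: np.exp(-theta / 0.5243) * np.exp(-u / 1.8951)
re, im = space_time_sim(M=6, N=12, T=8, H=4.0, cov=sep, rng=1)
print(re.shape)  # (9, 12, 6)
```

For a separable covariance the blocks factor as products of temporal and spatial eigenvalues, so nonnegativity can be read off each factor. For non-separable models the blocks must be checked directly, as the code does.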

In general, there is no guarantee that \({\tilde{\pmb \varPsi }}\) is positive definite; however, Møller et al. (1998) and Davies et al. (2013) remark that this has rarely been a problem in practice. When \({\tilde{\pmb \varPsi }}\) is not positive definite, we adopt the two strategies proposed by Wood and Chan (1994):

1. Assume that \(\kappa = 2^{\zeta }\) for a nonnegative integer \(\zeta \), and find the smallest \(\zeta \) such that \({\tilde{\pmb \varPsi }}\) is positive definite.

2. If the resulting \(\kappa \) is too large for the computation to be feasible, approximate the square root of \({\tilde{\pmb \varPsi }}\) by computing the generalized square root of \(\pmb \Upsilon \).

In practice, the required \(\kappa \) depends on the covariance model and on the grid (Gneiting et al. 2006). Although a larger \(\kappa \) increases the computational burden, the simulation remains exact. Generalized square roots also solve the problem, but at the expense of exactness (see Wood and Chan 1994).
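Strategy 1 can be illustrated in a purely temporal, one-dimensional setting. The sketch below is our own. It wraps a temporal covariance sampled at \(t_\tau = \tau H/T\) onto a cycle of \(L = 2\kappa T\) points and reads the circulant eigenvalues off the DFT of the first row. It then doubles \(\kappa = 2^{\zeta }\) until no eigenvalue is negative beyond a small tolerance. The cycle length, the tolerance and the function names are assumptions, and the smooth long-range model `gauss` is hypothetical.

```python
import numpy as np

def wrapped_eigenvalues(c, T, H, kappa):
    """Eigenvalues of the circulant matrix obtained by wrapping the temporal
    covariance c, sampled at t_τ = τH/T, onto a cycle of L = 2κT points."""
    L = 2 * kappa * T
    m = np.arange(L)
    lag = np.minimum(m, L - m) * H / T       # torus-wrapped lag of index m
    return np.fft.fft(c(lag)).real           # circulant eigenvalues = DFT of first row

def minimal_kappa(c, T, H, max_zeta=10, tol=1e-10):
    """Smallest κ = 2^ζ (ζ = 0, 1, ..., max_zeta) whose embedding is nonnegative
    definite; None signals that a generalized square root is needed instead."""
    for zeta in range(max_zeta + 1):
        ev = wrapped_eigenvalues(c, T, H, 2 ** zeta)
        if ev.min() >= -tol * ev.max():
            return 2 ** zeta
    return None

expo = lambda u: np.exp(-u / 1.8951)         # g_0 from Sect. 5
gauss = lambda u: np.exp(-(u / 2.0) ** 2)    # smooth long-range model (hypothetical)
print(minimal_kappa(expo, T=4, H=1.0))       # 1
print(minimal_kappa(gauss, T=4, H=1.0))      # a value > 1, or None: κ = 1 fails here
```

For convex, decreasing covariances such as the exponential, the minimal embedding (\(\kappa = 1\)) is already nonnegative definite. Very smooth models with long ranges may need doubling.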

4 Simulation algorithms

Sections 2 and 3 provide the mathematical background for simulating GRFs on a regular (longitude, latitude) grid based on circulant embedding matrices. Algorithms 1 and 2 presented in this section detail the procedures to be implemented for simulation on \({\mathbb {S}}^{2}\) and \({\mathbb {S}}^{2}\times \mathbb {R}\), respectively. The procedure is fast and requires only moderate memory, since only the \(M \times M\) blocks \(\pmb \varSigma _{i}\) (resp. the \(M \times M\) blocks of \(\pmb \varPsi (i)\)) and their square roots need to be computed and stored. The FFT algorithm is used to compute the matrices \(\pmb \varLambda \) and \(\pmb \Upsilon \) through Eqs. (6) and (12), respectively. The last step of both algorithms can also be carried out with the FFT. It generates a complex-valued vector \(\mathbf{Y }\) whose real and imaginary parts are independent realizations. In addition, both algorithms can be parallelized. Finally, the smaller \(\kappa \) is, the less memory Algorithm 2 requires.
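The spatial procedure can be sketched in a few lines of NumPy. The sketch below is written from the equations of Sect. 2 and is not the authors' Algorithm 1. It evaluates only the first block row and obtains the blocks \(\pmb \varLambda _i\) with one FFT over the longitude index. It then takes a symmetric square root of each block, applies the blocks to a complex standard normal vector, and maps back with a second FFT scaled by \(N^{-1/2}\). With complex noise \(\mathbf{W } = \mathbf{Z }_1 + \imath \mathbf{Z }_2\), the real and imaginary parts of the output are independent, each with covariance \(\pmb \varSigma \). The function name `simulate_sphere` is hypothetical.

```python
import numpy as np

def simulate_sphere(M, N, cov, rng=None):
    """Two independent realizations of an isotropic GRF on Ω_MN, each of shape (N, M)
    (longitude index first), assuming cov(theta) is isotropic on the sphere."""
    rng = np.random.default_rng(rng)
    lam = 2 * np.pi * np.arange(1, N + 1) / N
    phi = np.pi * np.arange(1, M + 1) / M                 # angles from the north pole
    # First block row: [Σ_k]_{j,l} = r(θ(s_{1j}, s_{kl})), shape (N, M, M).
    cos_t = (np.sin(phi)[None, :, None] * np.sin(phi)[None, None, :]
             * np.cos(lam[:, None, None] - lam[0])
             + np.cos(phi)[None, :, None] * np.cos(phi)[None, None, :])
    first_row = cov(np.arccos(np.clip(cos_t, -1.0, 1.0)))
    Lam = np.fft.fft(first_row, axis=0).real              # blocks Λ_i
    vals, vecs = np.linalg.eigh(Lam)                       # batched over the N blocks
    root = (vecs * np.sqrt(np.clip(vals, 0.0, None))[..., None, :]) @ np.swapaxes(vecs, -1, -2)
    W = rng.standard_normal((N, M)) + 1j * rng.standard_normal((N, M))
    Y = np.einsum("ijk,ik->ij", root, W)                   # Λ^{1/2} W, block by block
    X = np.fft.fft(Y, axis=0) / np.sqrt(N)                 # N^{-1/2} (F_N ⊗ I_M) Λ^{1/2} W
    return X.real, X.imag

expo = lambda t: np.exp(-t / 0.5243)                       # exponential model r_0 of Sect. 5
re, im = simulate_sphere(M=30, N=60, cov=expo, rng=2)
print(re.shape)  # (60, 30)
```

The eigenvalue-based root is used here because, on this grid, the last ring collapses to the south pole and some \(\pmb \varLambda _i\) are singular. A Cholesky factor can replace it when every block is positive definite.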

A few remarks on the computation of \(\pmb \varLambda ^{1/2}\) and \(\pmb \Upsilon ^{1/2}\) are in order. For a random field over \({\mathbb {S}}^{2}\) or \({\mathbb {S}}^{2} \times \mathbb {R},\) the square roots of \(\pmb \varLambda \) and \(\pmb \Upsilon \) can be obtained through Cholesky decomposition if the underlying covariance function r is strictly positive definite on the appropriate space. If the covariance function is only positive semi-definite, both matrices may be singular or ill-conditioned, and generalized square roots must be used instead.
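The choice between the two kinds of square root can be made per block. The sketch below is our own. It tries a Cholesky factorization first and falls back to an eigenvalue-based generalized square root that zeroes numerically non-positive modes. For simulation, any factor \(\varvec{B}_i\) with \(\varvec{B}_i\varvec{B}_i^{\top } = \pmb \varLambda _i\) serves, so a lower-triangular Cholesky factor and a symmetric root are interchangeable. The name `block_sqrt` and the tolerance are assumptions.

```python
import numpy as np

def block_sqrt(A, tol=1e-12):
    """Factor B with B B^T = A for a symmetric PSD block A: Cholesky when A is
    numerically positive definite, otherwise an eigenvalue-based generalized root."""
    try:
        return np.linalg.cholesky(A)                  # lower-triangular factor
    except np.linalg.LinAlgError:
        vals, vecs = np.linalg.eigh(A)
        vals = np.where(vals > tol * vals.max(), vals, 0.0)  # drop null/negative modes
        return (vecs * np.sqrt(vals)) @ vecs.T        # symmetric generalized root

S = block_sqrt(np.ones((2, 2)))                       # singular PSD: Cholesky fails
print(np.allclose(S @ S.T, np.ones((2, 2))))          # True
```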

5 Simulations

Throughout this section, we assume that \(r(0) = 1\) and \(r(0,0) = 1\) for covariance functions on \({\mathbb {S}}^{2}\) and \({\mathbb {S}}^{2}\times \mathbb {R}\), respectively. For the case \({\mathbb {S}}^{2}\), we use the following covariance function models:

1. The exponential covariance function defined by

$$\begin{aligned} r_{0}(\theta ) = \exp \left( -\frac{\theta }{\phi _{0}}\right) , \quad \theta \in [0,\pi ], \end{aligned}$$(13)

where \(\phi _{0} > 0.\)

2. The generalized Cauchy model defined by

$$\begin{aligned} r_{1}(\theta ) = \left( 1+ \left( \frac{\theta }{\phi _{1}}\right) ^{\alpha }\right) ^{-\frac{\beta }{\alpha }}, \end{aligned}$$

where \(\alpha \in (0,1]\) and \(\phi _{1}, \beta > 0.\)

3. The Matérn model defined as

$$\begin{aligned} r_{2}(\theta ) = \frac{2^{1-\nu }}{\varGamma (\nu )} \left( \frac{\theta }{\phi _{2}}\right) ^{\nu } K_{\nu }\left( \frac{\theta }{\phi _2}\right) , \end{aligned}$$

where \(\nu \in (0,1/2], \phi _{2} > 0 \), \(K_{\nu }(\cdot )\) is the modified Bessel function of the second kind of order \(\nu \) and \(\varGamma (\cdot )\) is the Gamma function.

In this simulation study, we use \(\phi _0 = 0.5243\), \(\alpha = 0.75\), \(\beta = 2.5626\), \(\phi _{1} = 1\), \(\nu = 0.25\) and \(\phi _{2} = 0.7079\). This setting ensures that \(r_{i}(\pi /2) = 0.05\) for \(i=0,1,2\). Note that the regularity parameter is restricted to the interval \((0,1/2]\) to ensure positive definiteness on \({\mathbb {S}}^2\) (Gneiting 2013).
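The stated calibration \(r_i(\pi /2) = 0.05\) can be checked with the standard library alone. In the sketch below, the Matérn model needs \(K_\nu \). To avoid a SciPy dependency, we evaluate the integral representation \(K_\nu (x) = \int _0^\infty \hbox {e}^{-x\cosh t}\cosh (\nu t)\,\hbox {d}t\) by the trapezoidal rule on a truncated interval. This is an assumption adequate for arguments of order one, not a general-purpose Bessel routine. The function names are hypothetical.

```python
import math

def r0(theta, phi0=0.5243):
    """Exponential model, Eq. (13)."""
    return math.exp(-theta / phi0)

def r1(theta, alpha=0.75, beta=2.5626, phi1=1.0):
    """Generalized Cauchy model."""
    return (1 + (theta / phi1) ** alpha) ** (-beta / alpha)

def bessel_k(nu, x, upper=10.0, steps=20000):
    """K_ν(x) = ∫_0^∞ exp(-x cosh t) cosh(νt) dt, trapezoidal rule on [0, upper]."""
    h = upper / steps
    f = [math.exp(-x * math.cosh(k * h)) * math.cosh(nu * k * h) for k in range(steps + 1)]
    return h * (sum(f) - 0.5 * (f[0] + f[-1]))

def r2(theta, nu=0.25, phi2=0.7079):
    """Matérn model; r2(0) = 1 by continuity."""
    if theta == 0:
        return 1.0
    x = theta / phi2
    return 2 ** (1 - nu) / math.gamma(nu) * x ** nu * bessel_k(nu, x)

for r in (r0, r1, r2):
    print(f"{r.__name__}(pi/2) = {r(math.pi / 2):.3f}")  # each prints 0.050
```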

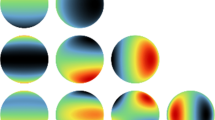

To illustrate the speed and accuracy of Algorithm 1, we compare it with the Cholesky and eigenvalue decompositions of the full covariance matrix, each used to obtain a square root (Davies et al. 2013). Because Algorithm 1 only needs the first block row of the covariance matrix, for a fair comparison we measure computing time only after the covariance matrix has been computed. To reduce timing variability, we repeat 25 simulations for each grid and report the average time. Reported times were obtained on a server with 32 cores (2× Intel Xeon E5-2630 v3, 2.4 GHz) and 32 GB RAM. Tables 1, 2, and 3 show the computational time (in seconds) needed by each method to generate the GRF on each grid for the exponential, generalized Cauchy and Matérn covariance models, respectively. Except for the smallest grid, Algorithm 1 is always faster. For the largest grid, the eigenvalue and Cholesky decomposition methods fail because of memory limitations. An example of a realization of the GRF with an exponential covariance function is shown in Fig. 2.

To study the simulation accuracy, we make use of variograms as defined through (1). Specifically, we estimate the variogram nonparametrically through

where \({\mathbb {I}}_{A}(x)\) is the indicator function of the set A, l is a bandwidth parameter and

We perform our simulations using \(M = 30\) and \(N = 60\) (that is, \(n = 1800\)), which corresponds to a \(6^{\circ }\times 6^{\circ }\) regular longitude–latitude grid on the sphere, under the three covariance models described above.
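The exact form of the estimator (14) is not reproduced here. The sketch below uses a common moving-window estimator with bandwidth l, which we assume is close in spirit to (14): \({\hat{\gamma }}(\theta ) = (2|P(\theta )|)^{-1}\sum _{(i,j) \in P(\theta )}\{X(s_i)-X(s_j)\}^2\), with \(P(\theta ) = \{i<j : |\theta (s_i,s_j)-\theta | \le l\}\). The function name is hypothetical. The inputs are the Cartesian coordinates of the grid points and the simulated values in the same order. Windows that contain no pairs return NaN.

```python
import numpy as np

def empirical_variogram(xyz, values, centers, bandwidth):
    """Moving-window estimate of γ(θ) at each θ in `centers`, using all unordered
    pairs whose geodesic distance lies within `bandwidth` of θ."""
    theta = np.arccos(np.clip(xyz @ xyz.T, -1.0, 1.0))   # pairwise geodesic distances
    iu = np.triu_indices(len(values), k=1)                # each unordered pair once
    d = theta[iu]
    sq = (values[iu[0]] - values[iu[1]]) ** 2
    est = np.full(len(centers), np.nan)                   # NaN marks an empty window
    for b, c in enumerate(centers):
        mask = np.abs(d - c) <= bandwidth
        if mask.any():
            est[b] = 0.5 * sq[mask].mean()
    return est

# Two points a quarter circle apart with values 0 and 2: γ̂(π/2) = 0.5 * 2^2 = 2.
xyz = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
print(empirical_variogram(xyz, np.array([0.0, 2.0]), np.array([np.pi / 2]), 0.1))  # [2.]
```

The sketch stores all \(n^2\) pairwise distances. That is fine for \(n = 1800\) but not for the largest grids, where binning pairs incrementally would be needed.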

Fig. 2 Realization of a GRF with covariance function given by Eq. (13). The simulation parameters are \(M=180\), \(N=360\).

Fig. 3 Variogram estimates for 100 simulations of random fields under the different models using circulant embedding. a–c Each realization, and d–f envelopes for simulations using Cholesky (blue envelope) and circulant embedding (red envelope). The black line is the true variogram function and gray lines are the estimated functions, while dot-dashed and dashed lines are the means of the simulations obtained using Cholesky and circulant embedding, respectively. (Color figure online)

For each simulation, Cholesky decomposition is used to compute \(\pmb \varLambda ^{1/2}\) block by block, and the variogram estimates in Eq. (14) are computed. One hundred simulations have been performed for each covariance model, using Algorithm 1 and, for comparison, the direct Cholesky decomposition. Figure 3a–c shows the estimated variogram for each simulation together with the average variogram. Global rank envelopes (Myllymäki et al. 2017) were also computed for the variogram under each simulation algorithm. The average variogram agrees closely with the theoretical variogram, and the overlap of the two envelopes shows that our approach reproduces the same variability as the Cholesky decomposition.

A similar simulation study is provided for a temporally stationary and spatially isotropic random field on \({\mathbb {S}}^{2}\times {\mathbb {R}}.\) We provide a nonparametric estimate of (9) through

where \(\theta \in [0,\pi ], u \in \mathbb {R}\) and

We simulate using the spherical grid \(M = 30, N = 60\) and the temporal grid \(\tau = \{1, 3/2, \ldots , T-1/2, T \}\) where \(T = 8\), that is, \(n = 28{,}800\) and \(\kappa = 1\). We use the following family of covariance functions (Porcu et al. 2016):

where \(\delta \in (0,1)\), \(\tau > 0\) and \(g_{i}(u)\) is any temporal covariance function. Here we consider \(g_{0}(u) = \exp (-u/c_{0})\) and \(g_{1}(u) = (1+(u/c_{1})^{2})^{-1}\), and we set \(\delta = 0.95, \tau = 1/4\), \(c_{0} = 1.8951\) and \(c_{1} = 1.5250\). This setting ensures that \(0.0470< C_{0}(\theta ,3) = C_{1}(\theta ,3) < 0.0520\) for \(\theta \in [0,\pi ]\). According to Porcu et al. (2016), (15) is a positive semi-definite covariance function, so we use the singular value decomposition (SVD) to compute \(\pmb \Upsilon ^{1/2}\). As Tables 1, 2, and 3 suggest, the number of points used in this experiment is too large for the Cholesky decomposition, so envelopes were not computed this time. Figure 4 shows the empirical variogram for 100 simulations. Some variation between realizations is to be expected, and its magnitude depends on the spatial and temporal scale parameters of the covariance relative to the size of the simulation grid. As a proxy for the amount of variation in the empirical variograms, we use

which is the variance of the prediction of the mean of the simulation at one time step. The values of \(\sigma _0^{2}\) are \(1/1800 \simeq 0.0006\), 0.166 and 0.117 for the independent case, \(g_{0}\) and \(g_{1}\), respectively. Hence, for the spatial margin we expect larger variations for simulations with \(g_0\) than with \(g_1\), as illustrated in Fig. 4a, d. Regarding the temporal margin, there are only 16 time points, considerably fewer than the \(30 \times 60 = 1800\) spatial grid points. Variations could be reduced by increasing the number of temporal lags, but this is not the aim of this simulation experiment.

Finally, we use the method to simulate spatiotemporal processes with \(N = 180\), \(M = 360\) and \(\tau = \{0, 0.1, \ldots , 7.9, 8.0\}\), where \(T = 8\) (that is, \(n = 5{,}248{,}800\)), and \(\kappa = 1\), for \(C_{0}(\theta ,u)\) and \(C_{1}(\theta ,u)\). The corresponding realizations are shown in Movies 1 and 2, respectively (Fig. 5).

Fig. 4 Plots of the spatial margin, temporal margin and residuals with respect to the mean variogram for 100 simulated random fields. a–c and d–f The behavior of the variogram when the true covariance function is given by (15) using \(g_{0}(u) = \exp (-u/c_{0})\) and \(g_{1}(u) = (1+(u/c_{1})^{2})^{-1}\), respectively. The black line is the true variogram function, gray lines are the estimated variogram functions for each simulation, and black dot-dashed points are the mean of the simulations

Fig. 5 Movies 1 and 2 show a realization of a spatiotemporal GRF with covariance function given by (15) using \(g_{0}(u) = \exp (-u/c_{0})\) and \(g_{1}(u) = (1+(u/c_{1})^{2})^{-1}\), respectively

6 Discussion

The circulant embedding technique has been developed on \({\mathbb {S}}^{2}\) and \({\mathbb {S}}^{2} \times {\mathbb {R}}\) for an isotropic covariance function and for a spatially isotropic and temporally stationary covariance function, respectively. All calculations were done using the geodesic distance on the sphere; however, the method can also be used with the chordal distance and with axially symmetric covariance functions (Huang et al. 2012). As shown in our simulation study, the method can seamlessly simulate about \(5 \times 10^6\) points in a spatiotemporal context. At this scale, traditional functional summary statistics such as the variogram are computationally expensive, which motivates the development of alternative functional summary statistics, or of algorithms that can deal with a huge number of points.

Several options are available if we wish to simulate a GRF, say \(\varvec{X}\), at specific locations that are not on the regular grid, for both \({\mathbb {S}}^{2}\) and \({\mathbb {S}}^{2}\times {\mathbb {R}}\). For example, Dietrich and Newsam (1996) consider the extended grid \(\varOmega = \varOmega _{MN} \cup {\tilde{\varOmega }},\) where \(\varOmega _{MN}\) is obtained by (2) and \({\tilde{\varOmega }}\) is the set of additional locations. The covariance matrix of \(\varvec{X}\) over \(\varOmega \), say \(\pmb \varSigma \), can then be partitioned into blocks associated with \(\varOmega _{MN}\) and \({\tilde{\varOmega }}\), so that \(\pmb \varSigma ^{1/2}\) can be computed efficiently. Another way to simulate \(\varvec{X}\) on \({\tilde{\varOmega }}\) is to first simulate on \(\varOmega _{MN}\) using the procedure detailed in Sect. 2, and then perform a local conditional simulation using classical techniques (Chiles and Delfiner 1999).

The procedures detailed in Sects. 2 and 3 could also be investigated as a way to compute powers of the covariance matrix. That is, for an integer k, \(\pmb \varSigma ^{k}\) can be obtained through the same block Fourier decomposition by computing \(\pmb \varLambda ^{k}\) or \(\pmb \Upsilon ^{k}\). In particular, \(k = -1\) yields the inverse of the matrix (Jun and Stein 2008), which is important for the computation of maximum likelihood estimators and kriging predictors (Stein 2012).
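The idea of computing powers blockwise can be sketched as follows. The sketch is our own and assumes the normalized form \(\pmb \varSigma ^{k} = N^{-1}(\varvec{F}_{N}\otimes \varvec{I}_{M})\pmb \varLambda ^{k}(\varvec{F}_{N}\otimes \varvec{I}_{M})^{*}\). It applies \(\pmb \varSigma ^{k}\) to a vector with an inverse FFT, N small \(M \times M\) matrix powers, and a forward FFT. For \(k=-1\) this solves \(\pmb \varSigma \mathbf{x } = \mathbf{b }\), which requires every \(\pmb \varLambda _i\) to be positive definite. On the grid of Sect. 2 the last ring collapses to the south pole and \(\pmb \varSigma \) is singular. The usage therefore shifts the angles to the midpoints \(\pi (j-1/2)/M\), purely for illustration. The function name is hypothetical.

```python
import numpy as np

def block_circulant_power_apply(first_row, b, k):
    """Σ^k b for a symmetric block circulant Σ given by its first block row
    (shape (N, M, M)); k = -1 solves Σ x = b (all Λ_i must be positive definite)."""
    N, M, _ = first_row.shape
    Lam = np.fft.fft(first_row, axis=0).real                 # blocks Λ_i
    vals, vecs = np.linalg.eigh(Lam)
    Lam_k = (vecs * (vals ** k)[..., None, :]) @ np.swapaxes(vecs, -1, -2)
    bh = np.fft.ifft(b.reshape(N, M), axis=0)                # N^{-1} (F_N ⊗ I_M)^* b
    y = np.einsum("ijk,ik->ij", Lam_k, bh)                   # Λ^k, block by block
    return np.fft.fft(y, axis=0).real.ravel()                # (F_N ⊗ I_M) y

# Usage on a pole-free grid (midpoint angles are an illustrative assumption).
M, N = 3, 5
lam = 2 * np.pi * np.arange(1, N + 1) / N
phi = np.pi * (np.arange(1, M + 1) - 0.5) / M
Lg, Pg = np.meshgrid(lam, phi, indexing="ij")
xyz = np.stack([np.sin(Pg) * np.cos(Lg), np.sin(Pg) * np.sin(Lg), np.cos(Pg)],
               axis=-1).reshape(-1, 3)
Sigma = np.exp(-np.arccos(np.clip(xyz @ xyz.T, -1.0, 1.0)) / 0.5243)
first_row = np.stack([Sigma[:M, k * M:(k + 1) * M] for k in range(N)])
b = np.arange(N * M, dtype=float)
x = block_circulant_power_apply(first_row, b, -1)   # x = Σ^{-1} b
print(np.allclose(Sigma @ x, b))                     # True
```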

References

Chiles, J.P., Delfiner, P.: Geostatistics: Modelling Spatial Uncertainty. Wiley, New York (1999)

Clarke, J., Alegría, A., Porcu, E.: Regularity properties and simulations of Gaussian random fields on the sphere cross time. Electron. J. Stat. 12, 399–426 (2018)

Coxeter, H.S.M.: Regular Polytopes. Methuen, London (1973)

Creasey, P.E., Lang, A.: Fast generation of isotropic Gaussian random fields on the sphere. Monte Carlo Methods Appl. 24(1), 1–11 (2018)

Davies, T.M., Bryant, D., et al.: On circulant embedding for Gaussian random fields in R. J. Stat. Softw. 55(9), 1–21 (2013)

Davis, P.J.: Circulant Matrices. Wiley, New York (1979)

Dietrich, C., Newsam, G.: A fast and exact method for multidimensional Gaussian stochastic simulations: extension to realizations conditioned on direct and indirect measurements. Water Resour. Res. 32(6), 1643–1652 (1996)

Dietrich, C., Newsam, G.N.: Fast and exact simulation of stationary Gaussian processes through circulant embedding of the covariance matrix. SIAM J. Sci. Comput. 18, 1088–1107 (1997)

Emery, X., Arroyo, D., Porcu, E.: An improved spectral turning-bands algorithm for simulating stationary vector Gaussian random fields. Stoch. Environ. Res. Risk Assess. 30(7), 1863–1873 (2016)

Gneiting, T.: Strictly and non-strictly positive definite functions on spheres. Bernoulli 19, 1327–1349 (2013)

Gneiting, T., Ševčíková, H., Percival, D.B., Schlather, M., Jiang, Y.: Fast and exact simulation of large Gaussian lattice systems in \({\mathbb{R}}^2\): exploring the limits. J. Comput. Graph. Stat. 15(3), 483–501 (2006)

Huang, C., Zhang, H., Robeson, S.M.: On the validity of commonly used covariance and variogram functions on the sphere. Math. Geosci. 43, 721–733 (2011)

Huang, C., Zhang, H., Robeson, S.M.: A simplified representation of the covariance structure of axially symmetric processes on the sphere. Stat. Probab. Lett. 82(7), 1346–1351 (2012)

Jun, M., Stein, M.L.: Nonstationary covariance models for global data. Ann. Appl. Stat. 2, 1271–1289 (2008)

Lang, A., Schwab, C.: Isotropic Gaussian random fields on the sphere: regularity, fast simulation and stochastic partial differential equations. Ann. Appl. Probab. 25, 3047–3094 (2015)

Møller, J., Syversveen, A.R., Waagepetersen, R.P.: Log Gaussian Cox processes. Scand. J. Stat. 25, 451–482 (1998)

Møller, J., Nielsen, M., Porcu, E., Rubak, E.: Determinantal point process models on the sphere. Bernoulli 24, 1171–1201 (2015)

Myllymäki, M., Mrkvička, T., Grabarnik, P., Seijo, H., Hahn, U.: Global envelope tests for spatial processes. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 79(2), 381–404 (2017)

Park, M.H., Tretyakov, M.: A block circulant embedding method for simulation of stationary Gaussian random fields on block-regular grids. Int. J. Uncertain. Quantif. 5, 527–544 (2015)

Porcu, E., Bevilacqua, M., Genton, M.G.: Spatio-temporal covariance and cross-covariance functions of the great circle distance on a sphere. J. Am. Stat. Assoc. 111(514), 888–898 (2016)

Stein, M.L.: Interpolation of Spatial Data: Some Theory for Kriging. Springer, Berlin (2012)

Wood, A.T., Chan, G.: Simulation of stationary Gaussian processes in [0,1]\(^d\). J. Comput. Graph. Stat. 3, 409–432 (1994)

Zhihao, C.: A note on symmetric block circulant matrix. J. Math. Res. Expo. 10, 469–473 (1990)

Acknowledgements

The first author was supported by The Danish Council for Independent Research—Natural Sciences, Grant DFF 7014-00074 “Statistics for point processes in space and beyond,” and by the “Centre for Stochastic Geometry and Advanced Bioimaging,” funded by Grant 8721 from the Villum Foundation. The third author was supported by FONDECYT Number 1170290.


Cite this article

Cuevas, F., Allard, D. & Porcu, E. Fast and exact simulation of Gaussian random fields defined on the sphere cross time. Stat Comput 30, 187–194 (2020). https://doi.org/10.1007/s11222-019-09873-1


DOI: https://doi.org/10.1007/s11222-019-09873-1