Abstract

Hyperspectral data processing typically demands enormous computational resources in terms of storage, computation, and I/O throughput. In this paper, a compressive sensing framework with a low sampling rate is described for hyperspectral imagery. It is based on the widely used linear spectral mixture model. Abundance fractions are calculated directly from the compressively sensed data, with no need to reconstruct the original hyperspectral imagery. The proposed abundance estimation model exploits the sparsity of abundance fractions, and an algorithm based on the alternating direction method of multipliers is developed to solve it. Experiments show that the proposed scheme has high potential to unmix compressively sensed hyperspectral data at a low sampling rate.

1 Introduction

During the last several decades, hyperspectral image processing has been exploited extensively in remote sensing for applications such as environmental monitoring, mineral exploration and food safety. Hyperspectral imagery uses as many as hundreds of contiguous spectral bands covering the visible, near-infrared, and shortwave infrared regions (in the range 0.3–2.5 μm [1]) to expand the capability of multispectral sensors. Hyperspectral data analysis has become a valuable and powerful tool for extracting the rich information that the spectra provide about the imaged areas.

In hyperspectral imagery, owing to the relatively coarse spatial resolution of imaging spectrometers and to mixing effects at surfaces, a single pixel generally records the scattered energy of several different materials present in the scene [2]. Hyperspectral unmixing refers to any process that separates hyperspectral imagery into a collection of constituent spectra, or spectral signatures (called endmembers), together with a set of fractional abundances of the endmembers for each pixel in the image. The endmembers are generally assumed to represent the pure materials present in the image, and the abundances at each pixel represent the percentage of each endmember present in that pixel [1]. Usually, both the spectra of the pure materials and their abundances in each pixel are considered unknown. Decomposing a mixed pixel into endmember signatures (endmember extraction) and the corresponding abundance fractions (unmixing, or abundance estimation) is a challenging task underlying many hyperspectral imagery applications.

Depending on how materials mix within each pixel, two models have been proposed to describe the mixing. The first is the linear mixing model; the second, called the intimate spectral mixture, assumes a nonlinear mixing of materials [3]. Most spectral unmixing algorithms start from the linear mixing assumption, that is, the assumption that an observed spectrum is a linear combination of a limited number of endmember spectra and that the linear coefficient of each endmember is its abundance. Consequently, only the linear spectral mixture model (LSMM) is considered in this paper.

Hyperspectral unmixing based on the LSMM is referred to as linear spectral unmixing (LSU). LSU requires a priori knowledge of the signatures of the materials present in the image scene, which can be obtained from a spectral library (e.g., ASTER and USGS) or a codebook. Algorithms can also be used to determine the endmembers in a scene, such as N-FINDR [4], VCA (vertex component analysis) [5], SGA (simplex growing algorithm) [6], NMF-MVT (nonnegative matrix factorization minimum volume transform) [7], MVSA (minimum volume simplex analysis) [8] and SISAL (simplex identification via split augmented Lagrangian) [9]. Most of these algorithms are mainly concerned with endmember extraction. Once the endmembers have been found, estimating the abundances requires inverting the linear mixing equation: only the data points and the endmembers are available, and the linear coefficients (the abundances) are to be estimated [10]. The constrained sparse unmixing by variable splitting and augmented Lagrangian (C-SUnSAL) [11] is one of the state-of-the-art algorithms for abundance estimation. C-SUnSAL employs the alternating direction method of multipliers (ADMM) as a variable splitting procedure, followed by an augmented Lagrangian method, to solve the constrained basis pursuit problem and the constrained basis pursuit denoising problem. When estimating abundance fractions by linear inversion, two constraints are often imposed on the abundances of the materials in a pixel: (1) the abundance sum-to-one constraint (ASC) and (2) the abundance nonnegativity constraint (ANC). In this paper, we focus exclusively on estimating the abundance fractions of given endmembers.

A hyperspectral image can be thought of as a 3D array: the first two dimensions correspond to the spatial coordinates, and the third dimension corresponds to wavelength. Because of their enormous volume, hyperspectral data typically demand enormous computational resources in terms of storage, computation, and I/O throughput, especially when real-time processing is desired. Estimating abundances directly from full hyperspectral data cubes may therefore be difficult in real time or near real time. However, hyperspectral data are highly compressible in two ways: (1) the spatial image at each wavelength is compressible, and (2) the entire cube, when treated as a matrix, is of low rank. To exploit this compressibility, image compression and dimensionality reduction methods can be applied to hyperspectral cubes, such as PCA (principal component analysis) [12], ICA (independent component analysis) [13, 14] and CS (compressive sensing) [15,16,17,18]. In this paper, we estimate the abundance fractions of hyperspectral imagery acquired by compressive sensing at a low sampling rate.

During the past few years, compressive sensing (CS) [19] theory has been introduced as an alternative to conventional sampling based on the Shannon sampling theorem. The theory of CS shows that, when a signal is sparse enough, it can be accurately recovered from its compressive measurements. For hyperspectral imagery, the sparse representation of the signal structure differs along each of its dimensions. The reflectivity values at a given spectral band form an image, which is often sparse or compressible in a wavelet basis. Additionally, the spectral signature of a given pixel is usually smooth or piecewise smooth and is often sparse or compressible in the Fourier basis, depending on the spectral range and the materials present in the observed area. Previous works on the CS of hyperspectral data cubes [15,16,17,18] have exploited the correlations across channels to further decrease the number of compressive measurements, and a variety of algorithms have been proposed to reconstruct the original data. Three-dimensional compressive sampling (3DCS) [20] constructs a generic 3D sparsity measure to exploit the 3D piecewise smoothness and spectral low-rank property in hyperspectral CS, and combines a sparsity prior, total variation and the low-rank property to recover the signal. A key enabler of signal recovery in CS is the well-known convex regularizer, the ℓ1 norm. However, the ℓ1 norm of the abundance fractions, that is, the sum of their absolute values, is a constant under the ASC, as described in Sect. 2. Therefore, two remedies are considered here. The first uses the ℓ1/2 norm as the sparsity constraint; the second uses sparsity in the wavelet domain, which is equivalent to the ℓ1 norm of the weighted abundances.

In this paper, we present a scheme that estimates abundance fractions directly from compressively sensed hyperspectral data, with no need to reconstruct the full hyperspectral imagery. We term this scheme compressed abundance estimation (CAE). The proposed scheme is composed of three parts: (1) spatial-spectral compressive sensing, (2) an ℓ1/2 norm sparsity constraint on the abundance fractions in the wavelet domain and (3) an efficient algorithm based on ADMM. The potential of the proposed scheme to unmix compressively sensed hyperspectral data is demonstrated by experiments on synthetic and real hyperspectral data. The contribution of this paper is an abundance estimation scheme for compressed data collected by a compressive sampling camera, to which traditional abundance estimation methods cannot be applied because they require uncompressed data.

This paper is organised as follows: Section 2 formulates our abundance fraction estimation model, Sect. 3 specializes the ADMM algorithm to solve the proposed model, Sect. 4 presents experimental results, and Sect. 5 ends the paper with conclusions.

2 Problem Formulation

In this section, we first briefly review the LSMM, which is widely used in hyperspectral unmixing. Next, we introduce our spatial-spectral compressive sensing (SSCS) of hyperspectral data. Finally, we formulate the abundance fraction estimation model for hyperspectral data cubes acquired by compressive sensing.

2.1 Linear Spectral Mixture Model

Assuming a linear mixing scenario, the observed data in the hyperspectral imagery can be expressed as a linear combination of pure materials (endmembers) weighted by their fractional proportions (abundances) as follows

\[ {\mathbf{X}} = {\mathbf{SE}} + {\mathbf{N}} \tag{1} \]

where \( {\mathbf{X}} \) is an N × L matrix representing the hyperspectral imagery (N is the number of pixels, L is the number of bands). \( {\mathbf{E}} \equiv [{\mathbf{e}}_{1} ,{\mathbf{e}}_{2} , \ldots ,{\mathbf{e}}_{p} ]^{T} \) is a p × L matrix containing p endmember signatures (\( {\mathbf{e}}_{i} \) denotes the ith endmember signature and p is the number of endmembers present in the mixing scenario). The notation \( ( \cdot )^{T} \) denotes the transpose of a vector or matrix. \( {\mathbf{S}} \equiv [{\mathbf{s}}_{1} ,{\mathbf{s}}_{2} , \ldots ,{\mathbf{s}}_{p} ] \) is an N × p matrix containing the fractions of each endmember (\( {\mathbf{s}}_{i} \) denotes the abundance vector of the ith endmember). The N × L error matrix \( {\mathbf{N}} \) models additive system noise. In the LSMM, we take \( {\mathbf{N}} \) to be zero-mean additive Gaussian noise, which is a reasonable and widely used assumption in designing hyperspectral unmixing algorithms.

Owing to physical considerations, the abundances in Eq. (1) must satisfy two constraints: the abundance nonnegativity constraint (ANC), which requires that the fractional abundances of a pixel be nonnegative, and the abundance sum-to-one constraint (ASC), which requires that the fractional abundances of a pixel sum to 1. In short, the hyperspectral data model has the form

\[ {\mathbf{X}} = {\mathbf{SE}} + {\mathbf{N}},\quad {\text{s.t.}}\;\; {\mathbf{S}} \ge 0,\;\; {\mathbf{S1}}_{p} = {\mathbf{1}}_{N} \tag{2} \]

where \( {\mathbf{1}}_{p} \) and \( {\mathbf{1}}_{N} \) denote the all-ones column vectors of length p and N, respectively. Remarks on the negative effects of applying these two constraints can be found in [21]. In the later experiments, we impose these two constraints on synthetic data but ignore them for real hyperspectral imagery.
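The constrained linear mixing model can be simulated as in the following minimal sketch. The function name `synthesize_lsmm`, the toy sizes and the use of Dirichlet draws for the abundances are assumptions made for illustration only; the paper does not prescribe how abundances are drawn.

```python
import numpy as np

def synthesize_lsmm(num_pixels, endmembers, noise_std=0.0, seed=0):
    """Generate X = S E + N with S satisfying the ANC and ASC.

    endmembers: (p, L) array, one signature per row (E in the text).
    Returns (X, S) with X of shape (N, L) and S of shape (N, p).
    """
    rng = np.random.default_rng(seed)
    p, num_bands = endmembers.shape
    # Assumption: Dirichlet draws are one convenient way to obtain rows that
    # are nonnegative (ANC) and sum to one (ASC).
    S = rng.dirichlet(np.ones(p), size=num_pixels)
    # Zero-mean additive Gaussian noise, as assumed for N in the LSMM.
    noise = noise_std * rng.standard_normal((num_pixels, num_bands))
    X = S @ endmembers + noise
    return X, S

# Toy sizes (hypothetical): N = 100 pixels, p = 3 endmembers, L = 20 bands.
E = np.abs(np.random.default_rng(1).standard_normal((3, 20)))
X, S = synthesize_lsmm(100, E, noise_std=0.01)
```

Each row of `S` holds the abundances of one pixel, so the ASC is a statement about row sums.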

2.2 CS of Hyperspectral Data

Since each column of the hyperspectral imagery \( {\mathbf{X}} \) represents a 2D image corresponding to a particular spectral band, we can collect compressed hyperspectral data by randomly sampling all the columns of \( {\mathbf{X}} \) using the same measurement matrix \( {\mathbf{A}} \). The sensor collects n ≪ N linear measurements from each column of \( {\mathbf{X}} \), so that \( {\mathbf{A}} \) can be expressed explicitly as a matrix \( {\mathbf{A}} \in {\mathbb{R}}^{n \times N} \). Mathematically, the data acquisition model can be described as

\[ {\mathbf{Y}} = {\mathbf{AX}} \tag{3} \]

Equation (3) is the standard compressive sensing model used to compress hyperspectral imagery. Several camera designs have so far been proposed for single-channel compressive image acquisition [22, 23]. These designs can easily be extended to hyperspectral compressive imaging by repeating the same acquisition scheme for all spectral bands with an independent random sampling pattern per channel, which is referred to as distributed CS [15]. In this case, the corresponding measurement matrix \( {\mathbf{A}} \) would be a block diagonal matrix of the form

\[ {\mathbf{A}} = \begin{bmatrix} {\mathbf{A}}_{1} & & & \\ & {\mathbf{A}}_{2} & & \\ & & \ddots & \\ & & & {\mathbf{A}}_{J} \end{bmatrix} \tag{4} \]

where \( {\mathbf{A}}_{j} \in {\mathbb{R}}^{n \times N} \) is the random measurement matrix applied to channel j independently of the other spectral bands, and n denotes the number of compressive measurements per channel. In contrast with the single-pixel hyperspectral imager [24], which uses the same random pattern for all spectral bands (i.e., \( {\mathbf{A}}_{1} = {\mathbf{A}}_{2} = \cdots = {\mathbf{A}}_{J} \)), the independent blocks in Eq. (4) benefit from the information diversity across the spectral channels. However, the measurement matrix \( {\mathbf{A}} \) of Eq. (4) is usually very large, and independent blocks are not the most effective way to measure the spectral information of hyperspectral imagery.

Standard compressive sensing does not exploit the spatial and spectral correlations of hyperspectral imagery simultaneously during the compressive sampling stage, and distributed compressed sensing [15, 17, 25] exploits only part of the spectral correlation. In our previous work, spatial-spectral compressive sensing (SSCS) [18] was proposed to sense the spatial and spectral correlations of hyperspectral imagery simultaneously. In this paper, we use SSCS to compressively sample the hyperspectral data cube. Mathematically, SSCS is written as

\[ {\mathbf{Y}} = {\mathbf{A}}_{1} {\mathbf{XA}}_{2} \tag{5} \]

where \( {\mathbf{A}}_{1} \) is an n × N spatial random measurement matrix that senses the spatial information of the hyperspectral imagery, \( {\mathbf{A}}_{2} \) is an L × l spectral random measurement matrix, and \( {\mathbf{Y}} \) is an n × l matrix representing the compressed hyperspectral data. In this paper, the random measurement matrices \( {\mathbf{A}}_{1} \) and \( {\mathbf{A}}_{2} \) are generated by the partial Fourier transform. Under the assumption that the endmember spectral signatures are given, SSCS has an advantage in compressing the hyperspectral data cube: the spectral compressive measurement acts as a dimension reduction, so we can take l = p. Since p ≪ L, the spectral sampling rate (l/L) is very low, typically less than 0.1.
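The sampling step \( {\mathbf{Y}} = {\mathbf{A}}_{1} {\mathbf{XA}}_{2} \) can be illustrated with a small sketch. It assumes that "partial Fourier" means randomly selected rows (for \( {\mathbf{A}}_{1} \)) or columns (for \( {\mathbf{A}}_{2} \)) of the unitary DFT matrix; the helper names `partial_fourier` and `sscs_sample` and the toy sizes are hypothetical.

```python
import numpy as np

def partial_fourier(size, keep, rng):
    """Return `keep` randomly chosen rows of the unitary size x size DFT matrix."""
    dft = np.fft.fft(np.eye(size), norm="ortho")
    rows = np.sort(rng.choice(size, size=keep, replace=False))
    return dft[rows, :]

def sscs_sample(X, n, l, seed=0):
    """Compress X (N x L) into Y = A1 X A2 of shape (n, l)."""
    rng = np.random.default_rng(seed)
    N, L = X.shape
    A1 = partial_fourier(N, n, rng)      # n x N, spatial measurement matrix
    A2 = partial_fourier(L, l, rng).T    # L x l, spectral measurement matrix
    return A1 @ X @ A2, A1, A2

# Toy cube (hypothetical): N = 64 pixels, L = 32 bands, l = p = 3.
X = np.random.default_rng(1).random((64, 32))
Y, A1, A2 = sscs_sample(X, n=32, l=3)
rate = Y.size / X.size                   # total sampling rate nl / (NL)
```

Because the measurement matrices are complex, `Y` is complex as well; a real-valued random matrix could be substituted without changing the structure of the model.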

2.3 Formulation of Abundance Estimation

Combining Eqs. (2) and (5) and neglecting the noise term, the SSCS model can be rewritten as:

\[ {\mathbf{Y}} = {\mathbf{A}}_{1} {\mathbf{SEA}}_{2} ,\quad {\mathbf{S1}}_{p} = {\mathbf{1}}_{N} \tag{6} \]

For now, we assume that the endmember spectral signatures in \( {\mathbf{E}} \) are known. Our goal is to find the abundance fractions in \( {\mathbf{S}} \), given the measurement matrices \( {\mathbf{A}}_{1} \) and \( {\mathbf{A}}_{2} \) and the compressed hyperspectral data \( {\mathbf{Y}} \). Eq. (6) can be expressed in a more convenient form through the following transformation.

Next, let us simplify Eq. (6). First, the second term of Eq. (6) is left-multiplied by \( \alpha {\mathbf{A}}_{1} \):

\[ \alpha {\mathbf{A}}_{1} {\mathbf{S1}}_{p} = \alpha {\mathbf{A}}_{1} {\mathbf{1}}_{N} \tag{7} \]

where α ≥ 0 is a scale parameter that determines the weight of the ASC in Eq. (6). Now, construct a new data matrix \( {\tilde{\mathbf{Y}}} \) and a new measurement matrix \( {\mathbf{A}}_{e} \):

\[ {\tilde{\mathbf{Y}}} = [{\mathbf{Y}},\;\alpha {\mathbf{A}}_{1} {\mathbf{1}}_{N} ],\quad {\mathbf{A}}_{e} = [{\mathbf{EA}}_{2} ,\;\alpha {\mathbf{1}}_{p} ] \tag{8} \]

where \( {\tilde{\mathbf{Y}}} \) is an n × (l + 1) matrix obtained by appending \( \alpha {\mathbf{A}}_{1} {\mathbf{1}}_{N} \) to the right of \( {\mathbf{Y}} \). Similarly, \( {\mathbf{A}}_{e} \) is a p × (l + 1) matrix obtained by appending \( \alpha {\mathbf{1}}_{p} \) to the right of \( {\mathbf{EA}}_{2} \). With these definitions, Eq. (6) can be written as

\[ {\tilde{\mathbf{Y}}} = {\mathbf{A}}_{1} {\mathbf{SA}}_{e} \tag{9} \]

In other words, Eq. (6) can be expressed in the simple form of Eq. (9) by adding one column to the observed compressed hyperspectral data.

To present the above equation in a more general form, we apply the Kronecker product and its properties to Eq. (9), which gives

\[ {\mathbf{As}} = {\mathbf{b}} \tag{10} \]

where \( {\mathbf{A}} = {\mathbf{A}}_{1} \otimes {\mathbf{A}}_{e}^{T} \) is an n(l + 1) × Np measurement matrix, \( {\mathbf{s}} \in {\mathbb{R}}^{Np} \) is the vectorization of \( {\mathbf{S}} \), and \( {\mathbf{b}} \in {\mathbb{R}}^{n(l + 1)} \) is the vectorization of \( {\tilde{\mathbf{Y}}} \). The goal is now to find the abundance vector \( {\mathbf{s}} \) when \( {\mathbf{b}} \) and \( {\mathbf{A}} \) are known. In general, Eq. (10) is an underdetermined system, so prior knowledge about \( {\mathbf{s}} \) must be added in order to solve it.
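The passage from the matrix form to the vector form can be checked numerically. The sketch below makes several assumptions that are not stated in the text: the vectorization is row-major (which is what makes \( {\mathbf{A}}_{1} \otimes {\mathbf{A}}_{e}^{T} \) the right Kronecker ordering), the scale α multiplies the appended column on both sides, and real Gaussian matrices stand in for the partial Fourier matrices. All sizes and the value of `alpha` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
N, L, p, n, l = 12, 10, 3, 6, 3        # toy sizes (hypothetical)
alpha = 2.0                            # ASC weight (hypothetical value)

S = rng.dirichlet(np.ones(p), size=N)  # N x p, rows sum to one (ASC)
E = rng.random((p, L))                 # p x L endmember signatures
A1 = rng.standard_normal((n, N))       # Gaussian stand-in, spatial matrix
A2 = rng.standard_normal((L, l))       # Gaussian stand-in, spectral matrix

Y = A1 @ (S @ E) @ A2                                    # noise-free SSCS data
Y_tilde = np.hstack([Y, alpha * A1 @ np.ones((N, 1))])   # n x (l + 1)
A_e = np.hstack([E @ A2, alpha * np.ones((p, 1))])       # p x (l + 1)

# Matrix form: the appended column encodes the sum-to-one constraint.
matrix_form_ok = np.allclose(Y_tilde, A1 @ S @ A_e)

# Vector form: with row-major vectorization,
# vec(A1 S A_e) = (A1 kron A_e^T) vec(S).
A = np.kron(A1, A_e.T)                 # n(l + 1) x Np
s = S.reshape(-1)                      # row-major vectorization of S
b = Y_tilde.reshape(-1)                # row-major vectorization of Y_tilde
vector_form_ok = np.allclose(A @ s, b)
```

In practice the Kronecker matrix would not be formed explicitly for realistic N; products with it can be evaluated through the matrix form instead.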

Sparsity priors are well-known constraints in compressive sensing reconstruction. In recent years, there has been an explosion of research on the properties of the ℓ1 regularizer. However, for many practical applications, the solutions of the ℓ1 norm regularizer are often less sparse than those of the ℓ0 norm regularizer [26,27,28]. Moreover, the ℓ1 norm regularizer of \( {\mathbf{s}} \) is in conflict with the ASC. It is therefore necessary to find solutions that are sparser than those of the ℓ1 norm regularizer. It is reasonable to assume that the abundance fractions of each endmember, which form an image, are approximately sparse, although the assumption is not exact for highly mixed abundances. Therefore, we propose to recover the abundance vector \( {\mathbf{s}} \) by solving the following unmixing model:

where \( {\mathbf{W}} \in {\mathbb{R}}^{Np \times Np} \) is an orthonormal basis and \( \left\| {\mathbf{s}} \right\|_{1/2} = \left( {\sum\nolimits_{i = 1}^{Np} {\left| {s_{i} } \right|^{1/2} } } \right)^{2} \) denotes the ℓ1/2 norm. In this paper, we choose a wavelet basis as the orthonormal basis.
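The conflict between the ℓ1 norm and the ASC can be seen on a two-pixel example. The sketch below assumes the usual ℓ1/2 quasi-norm \( (\sum_{i} |s_{i}|^{1/2})^{2} \) and uses hypothetical abundance vectors: under the ANC and ASC both vectors have the same ℓ1 norm, while the ℓ1/2 quasi-norm is smaller for the sparser one.

```python
import numpy as np

def l1_norm(s):
    """Sum of absolute values."""
    return np.sum(np.abs(s))

def l_half_quasi_norm(s):
    """(sum_i |s_i|^(1/2))^2, the l_{1/2} quasi-norm."""
    return np.sum(np.sqrt(np.abs(s))) ** 2

pure = np.array([1.0, 0.0, 0.0, 0.0])        # a pixel made of one endmember
mixed = np.array([0.25, 0.25, 0.25, 0.25])   # four endmembers equally mixed

l1_values = (l1_norm(pure), l1_norm(mixed))                        # both equal 1
half_values = (l_half_quasi_norm(pure), l_half_quasi_norm(mixed))  # 1 and 4
```

Minimizing the ℓ1 norm therefore cannot distinguish the two pixels, whereas the ℓ1/2 quasi-norm favours the pure one.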

3 Application of ADMM

In this section, we specialize the ADMM to the optimization problem (11) stated in Sect. 2. A constrained optimization problem can be converted into an unconstrained one, so we start by rewriting Eq. (11) in the equivalent form

where μ ≥ 0 is a parameter controlling the relative weight between the ℓ2 and ℓ1/2 terms. In contrast to Eq. (11), the nonnegativity of \( {\mathbf{s}} \) is omitted. In the later experiments, we impose this condition on synthetic data with low noise by setting the negative entries of \( {\mathbf{s}} \) to zero at each iteration, but skip it for synthetic data with heavy noise and for real hyperspectral data.

We now introduce auxiliary variables and apply alternating minimization to the corresponding augmented Lagrangian functions. First, with an auxiliary variable \( {\mathbf{z}} \in {\mathbb{R}}^{Np} \), Eq. (12) is clearly equivalent to

Equation (13) has an augmented Lagrangian subproblem of the form

where \( {\mathbf{y}} \in {\mathbb{R}}^{Np} \) is a multiplier and ρ > 0 is a penalty parameter. Given \( ({\mathbf{z}}^{k} ,{\mathbf{y}}^{k} ) \), \( ({\mathbf{s}}^{k + 1} ,{\mathbf{z}}^{k + 1} ,{\mathbf{y}}^{k + 1} ) \) can be obtained by applying alternating minimization to Eq. (14). For \( {\mathbf{z}} = {\mathbf{z}}^{k} \) and \( {\mathbf{y}} = {\mathbf{y}}^{k} \) fixed, minimizing Eq. (14) with respect to \( {\mathbf{s}} \) is a least squares problem whose normal equation is

Since \( {\mathbf{W}} \) is an orthonormal basis, \( {\mathbf{W}}^{T} {\mathbf{W}} = I \). If \( {\mathbf{A}}^{T} {\mathbf{A}} = I \), the solution \( {\mathbf{s}}^{k + 1} \) of Eq. (15) is given simply by

Unfortunately, \( {\mathbf{A}}^{T} {\mathbf{A}} \ne I \) in general, and solving the normal equation exactly could be costly. In this case, we take a steepest descent step in the \( {\mathbf{s}} \) direction and obtain the following solution

where \( {\mathbf{r}}^{k} \) and \( \beta_{k} \) are given by

where \( {\langle } \cdot {\rangle } \) denotes inner product.
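For reference, a steepest descent step with exact line search on a generic strictly convex quadratic takes the following standard form. The symbols \( {\mathbf{H}} \) and \( {\mathbf{c}} \) are placeholders introduced here for the Hessian and the linear term of the \( {\mathbf{s}} \)-subproblem; they are not the paper's notation, and the exact expressions of \( {\mathbf{r}}^{k} \) and \( \beta_{k} \) depend on the augmented Lagrangian (14).

\[ f({\mathbf{s}}) = \tfrac{1}{2}{\mathbf{s}}^{T} {\mathbf{Hs}} - {\mathbf{c}}^{T} {\mathbf{s}},\qquad {\mathbf{r}}^{k} = {\mathbf{Hs}}^{k} - {\mathbf{c}},\qquad \beta_{k} = \frac{{\langle {\mathbf{r}}^{k} ,{\mathbf{r}}^{k} \rangle }}{{\langle {\mathbf{r}}^{k} ,{\mathbf{Hr}}^{k} \rangle }},\qquad {\mathbf{s}}^{k + 1} = {\mathbf{s}}^{k} - \beta_{k} {\mathbf{r}}^{k} \]

With this choice, \( \beta_{k} \) minimizes f along the direction \( - {\mathbf{r}}^{k} \), so the step strictly decreases f unless \( {\mathbf{r}}^{k} = 0 \), and it requires only matrix-vector products rather than the solution of a linear system.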

Now, for \( {\mathbf{s}} = {\mathbf{s}}^{k + 1} \) and \( {\mathbf{y}} = {\mathbf{y}}^{k} \) fixed, simple manipulation shows that minimizing Eq. (14) with respect to \( {\mathbf{z}} \) is equivalent to

Eq. (18) is an \( \ell_{ 1 / 2} \)-regularized problem, which can be transformed into a series of convex weighted Lasso problems and solved with existing \( \ell_{ 1} \) regularization algorithms [26,27,28]. Here, the solution of Eq. (18) is given explicitly by the p-shrinkage operator

where all the operations are performed componentwise and t 0 is a lower constant factor to avoid divide by zero (or very small numbers) conditions. Finally, we update the multiplier \( {\mathbf{y}} \) by

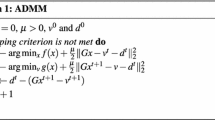

Putting all the components together, our algorithm for abundance estimation from compressed hyperspectral data can be summarized as follows.
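The steps of this section can be sketched in code as follows. This is a minimal sketch under stated assumptions, not the authors' algorithm listing: it takes \( {\mathbf{W}} = I \) instead of a wavelet basis, assumes real-valued \( {\mathbf{A}} \), scales the objective as `lam * phi(z) + 0.5 * ||A s - b||^2` (so `lam` and `rho` need not correspond to the paper's μ and ρ), and uses the commonly cited p-shrinkage form `sign(v) * max(|v| - tau^(2-p) * |v|^(p-1), 0)` with p = 1/2. The function names are hypothetical.

```python
import numpy as np

def p_shrink(v, tau, p=0.5, eps=1e-10):
    """Componentwise p-shrinkage: sign(v) * max(|v| - tau^(2-p) * |v|^(p-1), 0)."""
    mag = np.maximum(np.abs(v), eps)   # eps guards against division by zero
    return np.sign(v) * np.maximum(mag - tau ** (2.0 - p) * mag ** (p - 1.0), 0.0)

def cae_admm_sketch(A, b, lam=1e-3, rho=1.0, iters=200, nonneg=True):
    """ADMM-style loop for min lam*phi(z) + 0.5*||A s - b||^2 s.t. z = s (W = I assumed)."""
    s = np.zeros(A.shape[1])           # s, z and y are initialized to zero
    z = np.zeros_like(s)
    y = np.zeros_like(s)
    for _ in range(iters):
        # s-step: one steepest descent step with exact line search on the
        # augmented Lagrangian, which is quadratic in s.
        g = A.T @ (A @ s - b) + y + rho * (s - z)
        Hg = A.T @ (A @ g) + rho * g
        denom = g @ Hg
        beta = (g @ g) / denom if denom > 0 else 0.0
        s = s - beta * g
        if nonneg:
            s = np.maximum(s, 0.0)     # set negative abundances to zero
        # z-step: p-shrinkage of s + y/rho with threshold lam/rho.
        z = p_shrink(s + y / rho, lam / rho)
        # Multiplier update.
        y = y + rho * (s - z)
    return s, z

# Toy problem (hypothetical sizes): 20 measurements, 40 unknowns, 3 nonzeros.
rng = np.random.default_rng(0)
A = rng.standard_normal((20, 40)) / np.sqrt(20)
s_true = np.zeros(40)
s_true[[3, 17, 29]] = [0.5, 0.3, 0.2]
s_hat, z_hat = cae_admm_sketch(A, A @ s_true)
```

Taking a single descent step per iteration keeps the cost of each iteration at a few matrix-vector products, which is the motivation given in the text for avoiding an exact solve of the normal equation.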

4 Experimental Results

In this section, we use synthetic and real hyperspectral data to demonstrate the performance of the proposed CAE scheme and compare it with C-SUnSAL [11] and 3DCS [20]. Note that the CAE and 3DCS schemes process compressively sampled hyperspectral data, whereas the input to the C-SUnSAL scheme is the full, uncompressed hyperspectral imagery. The 3DCS scheme first recovers the hyperspectral data and then estimates the endmembers and abundances by VCA (vertex component analysis) [5] and a least squares algorithm. The purpose of the comparison is to show how close direct abundance estimation from compressed data (CAE) comes to abundance estimation from uncompressed data (C-SUnSAL).

All numerical experiments reported in this paper were performed on a regular desktop machine running Windows 7 and MATLAB R2008a (64-bit), equipped with a 3.4 GHz Intel Core i7-4770 CPU and 8 GB of DDR3 memory.

For unmixing accuracy assessment, the error between the estimated and the true fractions can be used as the criterion [29]. However, the true abundances are unknown for real hyperspectral imagery, so the reconstruction signal-to-noise ratio (RSNR) is used as the accuracy criterion. For the synthetic hyperspectral data, we use the RSNR of the abundances to evaluate the quality of abundance estimation. For the real hyperspectral imagery, we use the RSNR of the hyperspectral data matrix to evaluate the quality of the unmixing methods. The RSNR is defined as

where \( E\left[ \cdot \right] \) is the expectation operator, and \( {\varvec{\uptheta}} \) and \( {\hat{\mathbf{\theta }}} \) are the true matrix and its estimate, respectively.
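A small helper makes the criterion concrete. It assumes that the RSNR is the ratio of signal energy to error energy expressed in decibels, with the expectation replaced by a sum over all matrix entries; the function name `rsnr_db` and the toy matrix are hypothetical.

```python
import numpy as np

def rsnr_db(theta, theta_hat):
    """10 * log10(||theta||^2 / ||theta - theta_hat||^2), in dB."""
    theta = np.asarray(theta, dtype=float)
    theta_hat = np.asarray(theta_hat, dtype=float)
    signal = np.sum(theta ** 2)
    error = np.sum((theta - theta_hat) ** 2)
    return 10.0 * np.log10(signal / error)

theta = np.arange(1.0, 7.0).reshape(2, 3)
value = rsnr_db(theta, 0.9 * theta)   # a 10% amplitude error gives about 20 dB
```

Under this definition a higher RSNR means a more accurate estimate, and every additional 10 dB corresponds to a tenfold reduction in error energy.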

In all experiments, we use the partial Fourier transform to generate the measurement matrices \( {\mathbf{A}}_{1} \) and \( {\mathbf{A}}_{2} \). The total sampling rate nl/NL equals p/2L when n = N/2 and l = p, i.e., half the ratio between the number of endmembers and the number of bands. Generally, the total sampling rate is very low, typically less than 0.05. The multiplier \( {\mathbf{y}} \) and the variables \( {\mathbf{s}} \) and \( {\mathbf{z}} \) were always initialized to 0.
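The sampling-rate arithmetic can be reproduced directly. The sketch below uses the 256 × 256, 224-band setting of the experiments with n = N/2 and l = p; the function name is hypothetical.

```python
def total_sampling_rate(n, l, N, L):
    """Fraction of measurements kept by SSCS: (n * l) / (N * L)."""
    return (n * l) / (N * L)

N, L = 256 * 256, 224   # 256 x 256 pixels, 224 bands
# With n = N / 2 and l = p the rate reduces to p / (2 L).
rates = {p: total_sampling_rate(N // 2, p, N, L) for p in (3, 4, 5)}
```

For p = 3, 4 and 5 this gives about 0.0067, 0.0089 and 0.0112, matching the rates quoted for the 224-band data in the experiments.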

4.1 Results on Synthetic Hyperspectral Data

In the first experiment, the synthetic data sets are created as linear mixtures of a set of spectra with synthesized abundance maps. The spectral signatures are selected from the mineral spectra in the United States Geological Survey (USGS) digital spectral library, which consists of 420 spectra with 224 bands. Figure 1 shows three of these endmember signatures. An approximate color image of the abundance maps of the 3 endmembers, which have a spatial size of 256 × 256 and are mixed according to the ANC and ASC, is shown in Fig. 2. Figures 1 and 2 give the “true” \( {\mathbf{E}} \) and \( {\mathbf{S}} \), respectively. We then generated the observed data \( {\mathbf{Y = A}}_{1} {\mathbf{XA}}_{2} \) with measurement matrices \( {\mathbf{A}}_{1} \) and \( {\mathbf{A}}_{2} \) from the synthetic hyperspectral data \( {\mathbf{X}} = {\mathbf{SE}} \). Here, the total sampling rate p/2L is about 0.0067.

First, we test the sensitivity of the algorithm to the parameters μ and ρ. When testing μ, we set ρ to \( 10^{-3} \); similarly, when testing ρ, we set μ to \( 10^{-3} \). The test results are shown in Table 1. As can be seen from Table 1, the CAE algorithm is more sensitive to μ than to ρ. The best reconstruction performance is obtained when μ and ρ are both \( 10^{-3} \), so these values are used in the later experiments.

To simulate possible errors and sensor noise, zero-mean Gaussian random noise is added to the synthetic scenes, with the noise level specified by the signal-to-noise ratio (SNR). To test the sensitivity of the schemes to noise, we vary the SNR from 25 to 50 dB.
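Noise at a prescribed SNR can be generated as in the sketch below. It assumes that the SNR is the ratio of mean signal power to noise variance in decibels, which is one common convention; the paper does not spell out its definition, and the function name and toy data are hypothetical.

```python
import numpy as np

def add_gaussian_noise(X, snr_db, seed=0):
    """Add zero-mean white Gaussian noise so that the SNR is about snr_db."""
    rng = np.random.default_rng(seed)
    signal_power = np.mean(X ** 2)
    noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    return X + np.sqrt(noise_power) * rng.standard_normal(X.shape)

X = np.random.default_rng(1).random((500, 200))
X_noisy = add_gaussian_noise(X, snr_db=30.0)
# Empirical SNR of the noisy data, for comparison with the requested value.
measured = 10.0 * np.log10(np.mean(X ** 2) / np.mean((X_noisy - X) ** 2))
```

Sweeping `snr_db` from 25 to 50 reproduces the range of noise levels used in the synthetic experiments.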

Figure 3 shows the RSNR of the abundances for the three schemes at different noise levels. C-SUnSAL gives perfect unmixing results and our scheme gives acceptable results at low noise levels, whereas 3DCS gives poor results. As the SNR decreases, our scheme and the C-SUnSAL algorithm perform worse, while 3DCS stays at a low RSNR because the reconstructed hyperspectral data are seriously distorted and contain no useful signal. Compared with the C-SUnSAL algorithm, the result of our scheme is much worse. However, our scheme uses only 0.67% of the data, whereas the C-SUnSAL algorithm uses 100% of the data. In particular, our scheme is an appropriate choice when the compressed hyperspectral data are collected by a CS imaging device such as a single-pixel camera [24] or a CS camera [30]. In that setting, the C-SUnSAL algorithm cannot accurately estimate the abundance fractions, because the data collected by the CS camera are compressed and are difficult to reconstruct at a low sampling rate.

The second experiment compares the CAE and C-SUnSAL schemes on synthetic data with 5 endmembers; the 3DCS scheme is omitted because it cannot effectively estimate the abundances at low sampling rates. The C-SUnSAL scheme requires collecting 100% of the hyperspectral data, whereas the CAE scheme requires only 1.12% of the data. The 5 endmembers are randomly selected from the USGS library. The simulated image consists of a set of 5 × 5 squares of 10 × 10 pixels each, for a total size of 90 × 90 pixels. The first row of squares contains the endmembers, the second row contains mixtures of two endmembers, the third row contains mixtures of three endmembers, and so on. The true abundance maps of the 5 endmembers in the synthetic hyperspectral data are shown in Fig. 4. Zero-mean Gaussian noise is added to the synthetic scenes, with the SNR varied from 25 to 50 dB.

Figure 5 shows the RSNR of the CAE and C-SUnSAL schemes at different noise levels. The trend of the RSNR curves in Fig. 5 is similar to that in Fig. 3, and the reconstruction performance of the C-SUnSAL scheme is better than that of CAE. Since the synthetic hyperspectral data of experiment 2 are sparser than those of experiment 1, the RSNR of CAE is higher in experiment 2 than in experiment 1 across the noise levels tested. This is because the CAE scheme is based on the assumption that the signal is sparse: the sparser the signal, the better the reconstruction.

The estimated abundance maps of all endmembers for the two synthetic experiments are shown in Fig. 6, where white is high and black is low. Visually, there is no obvious difference between CAE and C-SUnSAL in Fig. 6. For the first synthetic scene, owing to the complexity of the scene, the estimated abundance map of the third endmember for CAE is slightly noisy.

4.2 Results on Real Hyperspectral Imagery

Here, we generated the compressed observed data \( {\mathbf{Y}} \) by applying the data acquisition model (5) to two real hyperspectral data sets to illustrate the performance of the proposed scheme. One is the publicly available Low Altitude hyperspectral data collected by the airborne visible/infrared imaging spectrometer (AVIRIS) [31] sensor, which contains 224 bands in the range from 0.41 to 2.45 μm with a 10-nm bandwidth. The other is the Hengdian hyperspectral data collected by the push-broom hyperspectral imager (PHI) of the Shanghai Institute of Technical Physics of the Chinese Academy of Sciences, which contains 124 bands. The spatial size of both hyperspectral images used in this paper is 256 × 256. The images at band 30 of the two data sets are shown in Fig. 7.

Unlike for the synthetic data sets, the endmember signatures in these scenes are unknown. In our experiment, we assumed the number of endmembers to be p = 4 and employed the VCA [5] method to find the endmember signatures for the two data sets. The total sampling rate p/2L is then about 0.0089 and 0.0179, respectively. Because the true abundance maps are not available, we use only the RSNR of the hyperspectral data to evaluate the unmixing results of the three schemes. Table 2 shows the quantitative results and the running times of the three schemes on the two data sets. The running time of our scheme is of the same order of magnitude as that of the C-SUnSAL algorithm. Although our scheme is not faster than the C-SUnSAL algorithm in reconstruction, the small amount of data collected by CS makes its data transmission time far shorter than that of C-SUnSAL. The running time of the 3DCS algorithm is two orders of magnitude higher than that of our scheme, because our scheme reconstructs the abundance matrix whereas 3DCS reconstructs the whole hyperspectral data matrix, so the amount of data processed by our scheme is greatly reduced. From the RSNR of the hyperspectral data in Table 2, we can see that the 3DCS algorithm fails to achieve high recovery accuracy and that the RSNR of the C-SUnSAL algorithm is 10 dB higher than that of our scheme. However, our scheme uses only about 1% of the data used by the C-SUnSAL algorithm.

The estimated abundance maps of endmember 1 are shown in Figs. 8 and 9, where white is high and black is low. Our unmixing results with 0.89% and 1.79% of the data are shown in Figs. 8b and 9b. In both qualitative and quantitative terms, our scheme is worse than abundance estimation from 100% of the data. However, when the hyperspectral data are collected by CS, our scheme is an appropriate choice, since abundance estimation from a reconstructed data matrix is practically impossible with very few measurements, and scarcely any useful signal is visible in Figs. 8a and 9a.

Figure 10 shows how the number of endmembers of the two hyperspectral data sets influences the reconstruction performance of the CAE and C-SUnSAL schemes. The RSNR of the C-SUnSAL scheme increases with the number of endmembers, while the RSNR curves of the CAE scheme fluctuate. When the number of endmembers is low, the reconstruction performance of CAE is better. This means that our CAE scheme is difficult to apply to scenes in which the ground objects have rich spectral information.

From the synthetic and real hyperspectral imagery experiments, we can see that the computational cost of CAE is almost the same as that of C-SUnSAL and that the estimation accuracy of CAE is lower than that of C-SUnSAL (by 10 dB in RSNR on the real data). The significant advantage of the proposed CAE is that it can be applied to abundance estimation from data acquired by a CS camera, whereas the C-SUnSAL algorithm is only applicable to data from a conventional hyperspectral imaging spectrometer. If the data are collected by a CS camera [30], the C-SUnSAL algorithm cannot estimate the abundances.

5 Conclusion and Future Work

In this paper, a scheme to perform abundance fractions estimation is developed for compressively sampled hyperspectral data. The scheme estimates abundance fractions directly without reconstructing hyperspectral imagery. The proposed scheme consists of two major parts: data acquisition by spatial-spectral compressive sensing and abundance fractions estimating by solving a compressed unmixing model with sparse prior.

In the first-part, we have collected hyperspectral data by spatial-spectral compressive sensing and considered that the spectral signatures of the endmembers are either precisely or approximately known. The experimental results on the synthetic and real hyperspectral imagery showed that our scheme is an appropriate choice when hyperspectral data is collected by compressive sensing.

In the second part, we employ the ℓ1/2 norm of the abundance fractions in the wavelet domain to avoid the contradiction between ℓ1 norm minimization and the sum-to-one constraint on the abundance fractions. In addition, an efficient algorithm based on the alternating direction method of multipliers has been constructed for solving the compressed unmixing model.
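The contradiction mentioned above can be seen in a small numerical sketch. For nonnegative abundances that sum to one, the ℓ1 norm equals one for every feasible vector, so minimizing it cannot favor sparse solutions, whereas the ℓ1/2 penalty (the sum of square roots of the magnitudes) is smallest for the sparsest vector. The example works on the abundances directly rather than in the wavelet domain, and the helper name `lp_penalty` and the three test vectors are hypothetical.

```python
def lp_penalty(x, p):
    """Return sum_i |x_i|**p, the penalty minimized in l_p regularization (0 < p <= 1)."""
    return sum(abs(v) ** p for v in x)


# Three abundance vectors, all nonnegative and summing to one.
sparse = [1.0, 0.0, 0.0, 0.0]
mixed = [0.5, 0.5, 0.0, 0.0]
dense = [0.25, 0.25, 0.25, 0.25]

for name, a in [("sparse", sparse), ("mixed", mixed), ("dense", dense)]:
    l1 = lp_penalty(a, 1.0)
    l_half = lp_penalty(a, 0.5)
    print(f"{name:6s}  l1 = {l1:.3f}  l1/2 penalty = {l_half:.3f}")
```

The ℓ1 column is identical for all three vectors, while the ℓ1/2 column grows as the abundance is spread over more endmembers, which is why a nonconvex penalty of this kind remains informative under the sum-to-one constraint.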

In future research, two aspects are worth investigating. First, the abundance fractions reconstructed from compressively sensed hyperspectral data are far worse than those obtained from the full data. In further work, we will seek a combined regularization that uses more prior knowledge, such as sparsity, total variation, and simplex projection, to improve unmixing accuracy. Second, in more practical situations, knowledge about the endmember spectral signatures is either very rough or totally missing. We will investigate the more difficult task of blind unmixing from compressively sensed hyperspectral data.

References

Bioucas-Dias, J. M., Plaza, A., Dobigeon, N., Parente, M., Qian, D., Gader, P., et al. (2012). Hyperspectral unmixing overview: Geometrical, statistical, and sparse regression-based approaches. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 5(2), 354–379. doi:10.1109/jstars.2012.2194696.

Pu, H., Xia, W., Wang, B., & Jiang, G. M. (2013). A fully constrained linear spectral unmixing algorithm based on distance geometry. IEEE Transactions on Geoscience and Remote Sensing. doi:10.1109/tgrs.2013.2248013.

Heinz, D. C., & Chein, I. C. (2001). Fully constrained least squares linear spectral mixture analysis method for material quantification in hyperspectral imagery. IEEE Transactions on Geoscience and Remote Sensing, 39(3), 529–545. doi:10.1109/36.911111.

Winter, M. E. N-FINDR: an algorithm for fast autonomous spectral end-member determination in hyperspectral data. In Proceedings of the 1999 imaging spectrometry, Denver, CO, USA, 1999 (Vol. 3753, pp. 266-275, Proceedings of SPIE—The International Society for Optical Engineering). Bellingham: SPIE.

Nascimento, J. M. P., & Dias, J. M. B. (2005). Vertex component analysis: A fast algorithm to unmix hyperspectral data. IEEE Transactions on Geoscience and Remote Sensing, 43(4), 898–910. doi:10.1109/tgrs.2005.844293.

Chein, I. C., Chao-Cheng, W., Wei-min, L., & Yen-Chieh, O. (2006). A new growing method for simplex-based endmember extraction algorithm. Geoscience and Remote Sensing, IEEE Transactions on, 44(10), 2804–2819. doi:10.1109/tgrs.2006.881803.

Xuetao, T., Bin, W., Liming, Z., & Jian Qiu, Z. (2007). A new scheme for decomposition of mixed pixels based on nonnegative matrix factorization. In Geoscience and remote sensing symposium, 2007. IGARSS 2007. IEEE International, 23–28 July 2007 (pp. 1759–1762). doi:10.1109/igarss.2007.4423160.

Li, J., & Bioucas-Dias, J. M. (2008). Minimum volume simplex analysis: A fast algorithm to unmix hyperspectral data. In Geoscience and remote sensing symposium, 2008. IGARSS 2008. IEEE international, 7–11 July 2008 (Vol. 3, pp. III-250–III-253). doi:10.1109/igarss.2008.4779330.

Bioucas-Dias, J. M. (2009). A variable splitting augmented Lagrangian approach to linear spectral unmixing. In Hyperspectral image and signal processing: Evolution in remote sensing, 2009. WHISPERS ‘09. First Workshop on, 26–28 Aug. 2009 (pp. 1–4). doi:10.1109/whispers.2009.5289072.

Heylen, R., Burazerovic, D., & Scheunders, P. (2011). Fully constrained least squares spectral unmixing by simplex projection. IEEE Transactions on Geoscience and Remote Sensing, 49(11), 4112–4122. doi:10.1109/tgrs.2011.2155070.

Bioucas-Dias, J. M., & Figueiredo, M. A. T. (2010). Alternating direction algorithms for constrained sparse regression: Application to hyperspectral unmixing. In Hyperspectral image and signal processing: Evolution in remote sensing (WHISPERS), 2010 2nd Workshop on, 14–16 June 2010 (pp. 1–4). doi:10.1109/whispers.2010.5594963.

Kaarna, A., Zemcik, P., Kalviainen, H., et al. (2000). Compression of multispectral remote sensing images using clustering and spectral reduction. Geoscience and Remote Sensing, IEEE Transactions on, 38(2), 1073–1082. doi:10.1109/36.841986.

Jing, W., & Chein, I. C. (2006). Independent component analysis-based dimensionality reduction with applications in hyperspectral image analysis. IEEE Transactions on Geoscience and Remote Sensing, 44(6), 1586–1600. doi:10.1109/tgrs.2005.863297.

Feng, Y., He, M., Song, J., & Wei, J. (2007). ICA-based dimensionality reduction and compression of Hyperspectral imagery. Journal of Electronics & Information Technology, 29(12), 2871–2875.

Duarte, M. F., Sarvotham, S., Baron, D., Wakin, M. B., & Baraniuk, R. G. (2005). Distributed compressed sensing of jointly sparse signals. In The thirty-ninth Asilomar conference on signals, systems and computers, Pacific Grove, October 28–November 1, 2005 (pp. 1537–1541). doi:10.1109/acssc.2005.1600024.

Golbabaee, M. Multichannel compressed sensing via source separation for hyperspectral imagery. In European signal processing conference, Aalborg, Denmark, 2010 (pp. 1326–1329). Poland: EUSIPCO.

Duarte, M. F., & Baraniuk, R. G. (2012). Kronecker compressive sensing. IEEE Transactions on Image Processing, 21(2), 494–504. doi:10.1109/tip.2011.2165289.

Wang, Z., Yan, F., & Jia, Y. (2013). Spatial-spectral compressive sensing of hyperspectral image. In Third IEEE international conference on information science and technology, Yangzhou, Jiangsu, China, 23–25 March 2013 (pp. 1256–1259).

Donoho, D. L. (2006). Compressed sensing. IEEE Transactions on Information Theory, 52(4), 1289–1306. doi:10.1109/tit.2006.871582.

Xianbiao, S., & Ahuja, N. (2011). Imaging via three-dimensional compressive sampling (3DCS). In IEEE international conference on computer vision, Barcelona, 6–13 Nov. 2011 (pp. 439–446). Piscataway: IEEE. doi:10.1109/iccv.2011.6126273.

Liguo, W., & Xiuping, J. (2009). Integration of soft and hard classifications using extended support vector machines. IEEE Geoscience and Remote Sensing Letters, 6(3), 543–547. doi:10.1109/lgrs.2009.2020924.

Duarte, M. F., Davenport, M. A., Takhar, D., Laska, J. N., Ting, S., Kelly, K. F., et al. (2008). Single-pixel imaging via compressive sampling. IEEE Signal Processing Magazine, 25(2), 83–91. doi:10.1109/msp.2007.914730.

Marcia, R. F., & Willett, R. M. (2008). Compressive coded aperture superresolution image reconstruction. In IEEE international conference on acoustics, speech and signal processing, March 31 2008–April 4 2008 (pp. 833–836). Piscataway: IEEE. doi:10.1109/icassp.2008.4517739.

Duarte, M. F., Davenport, M. A., Takhar, D., Laska, J. N., Ting, S., Kelly, K. F., et al. (2008). Single-pixel imaging via compressive sampling. Signal Processing Magazine, IEEE, 25(2), 83–91. doi:10.1109/msp.2007.914730.

Golbabaee, M. Hyperspectral image compressed sensing via low-rank and joint-sparse matrix recovery. In The 37th international conference on acoustics, speech, and signal processing, Kyoto, 2012 (pp. 2741–2744). Piscataway: IEEE.

Candes, E. J., Wakin, M. B., & Boyd, S. P. (2008). Enhancing sparsity by reweighted L1 minimization. Journal of Fourier Analysis and Applications, 14(5–6), 877–905. doi:10.1007/s00041-008-9045-x.

Xu, Z., Zhang, H., Wand, Y., Chang, X., & Yong, L. (2010). L1/2 regularization. Science in China, series F, 53(06), 1159–1169.

Chartrand, R. (2009). Fast algorithms for nonconvex compressive sensing: MRI reconstruction from very few data. In IEEE international symposium on biomedical imaging: From nano to macro, June 28 2009–July 1 2009 (pp. 262–265). Piscataway: IEEE. doi:10.1109/isbi.2009.5193034.

Xiuping, J., & Liguo, W. (2014). Fuzzy assessment of spectral unmixing algorithms. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 7(6), 1947–1955. doi:10.1109/jstars.2013.2264313.

August, Y., Vachman, C., Rivenson, Y., & Stern, A. (2013). Compressive hyperspectral imaging by random separable projections in both the spatial and the spectral domains. Applied Optics, 52(10), D46–D54. doi:10.1364/ao.52.000d46.

Vane, G., Green, R. O., Chrien, T. G., Enmark, H. T., Hansen, E. G., & Porter, W. M. (1993). Airborne visible/infrared imaging spectrometer (AVIRIS). Remote Sensing of Environment, 44(2–3), 127–143. doi:10.1016/0034-4257(93)90012-m.

Acknowledgements

This work was supported by the Key Projects of Natural Science Research of Universities of Anhui Province under Grant KJ2016A884, Quality Engineering Project of Universities of Anhui Province under Grant 2016zy126 and the National Natural Science Foundation of China under Grant 61071171.

Additional information

This article is part of the Topical Collection on Hyperspectral Imaging and Image Processing.

Cite this article

Wang, Z., Feng, Y. Abundance Estimation of Hyperspectral Data with Low Compressive Sampling Rate. Sens Imaging 18, 23 (2017). https://doi.org/10.1007/s11220-017-0168-5

DOI: https://doi.org/10.1007/s11220-017-0168-5