Abstract

An efficient distributed compressive sensing method and system for hyperspectral images is presented, which exploits the low-rank and structure similarity properties of hyperspectral imagery. By integrating the respective characteristics of distributed source coding (DSC) and compressive sensing (CS), we propose a distributed compressive sensing framework that captures and compresses hyperspectral images simultaneously. At the encoder, every band image is measured independently, so almost all of the computational burden is shifted to the decoder. The result is a very low-complexity encoder that is simple to operate and easy to implement in hardware. At the decoder, each band image is reconstructed by total variation (TV) norm minimization. During the reconstruction of each band, the low-rank structure of the band images and the spectral structure similarity are used to construct new regularizers. By combining these new regularizers with the TV regularizer, we fully exploit the spatial correlation, spectral correlation and spectral structural redundancy in hyperspectral imagery. A numerical optimization algorithm based on the augmented Lagrangian multiplier method is also proposed to solve the reconstruction model. Experimental results show that the method effectively improves the reconstruction quality of hyperspectral images.


1 Introduction

Hyperspectral images (HSI) are collections of hundreds of images acquired simultaneously in narrow, adjacent spectral bands, which gives them a high spectral resolution. HSI are widely applied in terrain classification, mineral detection, environmental monitoring and military surveillance. This high spatio-spectral resolution comes at the cost of an extremely large data size, so HSI acquisition faces serious storage and transmission challenges on resource-limited platforms. Low-power, low-complexity HSI compression algorithms are therefore crucial.

Compressive sensing (CS) [1, 2] is a recently emerging area in signal and image processing. It exploits the sparsity of a signal to sample at a rate lower than the Nyquist rate: if the signal is sparse, either directly or in some transform domain, it can be accurately reconstructed from a limited number of measurements with very high probability. The value of CS for hyperspectral imaging is that the computational burden shifts from a resource-constrained sensor device to a reconstruction process implemented on a more powerful receiving device. Applying CS to hyperspectral imaging therefore has the potential to reduce the sampling rate significantly, which in turn reduces memory storage and computational complexity.

In distributed source coding (DSC) [3, 4], every band of a hyperspectral image is encoded independently and the bands are decoded jointly, which in theory can achieve the same low rate as joint encoding. Combining DSC and CS, Baron et al. [5] first proposed distributed compressive sensing (DCS), which defines three joint sparsity models. The bands of an HSI image the same ground scene simultaneously, so they are strongly correlated and naturally form a set of distributed sources. DCS is therefore well suited to hyperspectral imagery.

2 Compressive Sensing Theory

Consider an image signal \( x \in R^{N} \) that has a sparse representation over a fixed dictionary \( \Psi \in R^{N \times P} \) (e.g., a DWT basis); \( \Psi \) is typically assumed to be redundant, i.e., P > N. The image signal x can then be written as \( x = \Psi \alpha , \) where \( \alpha \in R^{P} \) and \( \left\| \alpha \right\|_{0} \ll N. \) The \( l_{0} \)-norm used here simply counts the nonzero elements of \( \alpha \). According to CS theory, the N-dimensional vector x can be represented by an M-dimensional vector y obtained by projecting x onto a measurement matrix \( \Phi \in R^{M \times N} \) with M < N, i.e., \( y = \Phi x. \) Combining the two relations, y can be rewritten as

$$ y = \Phi x = \Phi \Psi \alpha \qquad (1) $$
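The measurement relation \( y = \Phi x = \Phi \Psi \alpha \) described above can be sketched numerically as follows. This is a minimal illustration, not the authors' code: it assumes NumPy, an identity dictionary \( \Psi = I \) (so P = N, a simplification of the redundant dictionary in the text), a Gaussian random \( \Phi \), and illustrative sizes N = 256, M = 64 and sparsity 8.

```python
import numpy as np

rng = np.random.default_rng(0)

N, M, s = 256, 64, 8            # signal length, number of measurements, sparsity (illustrative)
Psi = np.eye(N)                 # simplifying assumption: identity dictionary, so x = alpha

# s-sparse coefficient vector alpha: ||alpha||_0 = s << N
alpha = np.zeros(N)
support = rng.choice(N, size=s, replace=False)
alpha[support] = rng.standard_normal(s)
x = Psi @ alpha                 # image signal x = Psi alpha

# Gaussian random measurement matrix Phi (M x N with M < N)
Phi = rng.standard_normal((M, N)) / np.sqrt(M)
y = Phi @ x                     # M measurements: y = Phi x = Phi Psi alpha

print(y.shape, np.count_nonzero(alpha))   # (64,) 8
```

Only the M-dimensional vector y would be stored or transmitted; recovering the 256 entries of x from it relies on the sparsity of \( \alpha \).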

The original signal x can be reconstructed from the measurement vector y by exploiting its sparse representation, that is, by seeking the sparsest \( \alpha \) among all those that satisfy \( y = \Phi \Psi \alpha . \) This requires solving the well-known underdetermined problem with a sparsity constraint [1]:

$$ \hat{\alpha } = \arg \min_{\alpha } \left\| \alpha \right\|_{0} \quad \text{s.t.} \quad y = D\alpha \qquad (2) $$

where \( \left\| \alpha \right\|_{0} = \left| \operatorname{supp} (\alpha ) \right| = \left| \{ j:\alpha_{j} \ne 0\} \right| \) and \( D = \Phi \Psi \) is called the equivalent dictionary. The reconstruction problem is thus an \( l_{0} \) minimization problem [2]. Unfortunately, \( l_{0} \) minimization is NP-hard and is impractical for real applications. Donoho and Candès showed that if the equivalent dictionary D satisfies the so-called restricted isometry property (RIP) with a sufficiently small constant, \( l_{1} \) minimization can replace \( l_{0} \) minimization. The \( l_{1} \) minimization problem is

$$ \hat{\alpha } = \arg \min_{\alpha } \left\| \alpha \right\|_{1} \quad \text{s.t.} \quad y = D\alpha \qquad (3) $$
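A minimal sketch of sparse recovery, assuming NumPy. Rather than solving the constrained \( l_{1} \) problem directly, it swaps in FISTA (a fast iterative shrinkage-thresholding algorithm) for the closely related unconstrained LASSO form \( \min_{\alpha} \frac{1}{2}\|y - D\alpha\|_{2}^{2} + \tau \|\alpha\|_{1} \), whose solution approaches the constrained one as \( \tau \to 0 \) for noiseless measurements. The problem sizes, \( \tau \) and the iteration count are illustrative assumptions.

```python
import numpy as np

def soft_threshold(v, t):
    """Element-wise shrinkage: the proximal operator of t * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def fista(D, y, tau=1e-2, n_iter=3000):
    """FISTA for the LASSO problem min_a 0.5 * ||y - D a||_2^2 + tau * ||a||_1."""
    step = 1.0 / np.linalg.norm(D, 2) ** 2   # 1 / Lipschitz constant of the gradient
    a = np.zeros(D.shape[1])
    z, t = a.copy(), 1.0
    for _ in range(n_iter):
        grad = D.T @ (D @ z - y)             # gradient of the data-fidelity term at z
        a_next = soft_threshold(z - step * grad, step * tau)
        t_next = (1.0 + np.sqrt(1.0 + 4.0 * t * t)) / 2.0
        z = a_next + ((t - 1.0) / t_next) * (a_next - a)   # momentum extrapolation
        a, t = a_next, t_next
    return a

rng = np.random.default_rng(0)
N, M, s = 256, 80, 8
alpha = np.zeros(N)
alpha[rng.choice(N, size=s, replace=False)] = rng.standard_normal(s)
D = rng.standard_normal((M, N)) / np.sqrt(M)   # equivalent dictionary D = Phi Psi (Psi = I assumed)
y = D @ alpha

alpha_hat = fista(D, y)
rel_err = np.linalg.norm(alpha_hat - alpha) / np.linalg.norm(alpha)
print(f"relative recovery error: {rel_err:.2e}")
```

The small remaining error comes mostly from the shrinkage bias of the \( \tau \) term; a smaller \( \tau \) reduces it at the cost of more iterations.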

3 Distributed Hyperspectral Compressive Sensing

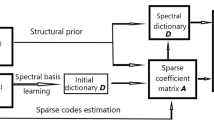

The bands of an HSI image the same ground scene simultaneously, so they are strongly correlated and naturally distributed. Each band can be regarded as one of a set of correlated signal sources, and this correlation can be exploited in the joint reconstruction of the hyperspectral image. We therefore propose a distributed compressive sensing framework for HSI. At the encoder, every band image is measured independently, so almost all of the computational burden is shifted to the decoder, resulting in a very low-complexity encoder that is simple to operate and easy to implement in hardware. At the decoder, each band image is reconstructed by TV norm minimization. During the reconstruction of each band, the low-rank structure of the band images and the spectral structure similarity are used to construct new regularizers that act as side information. By combining these regularizers with the TV regularizer, we fully exploit the spatial correlation, spectral correlation and spectral structural redundancy in hyperspectral imagery. The hyperspectral compressive sensing scheme is shown in Fig. 1.

An HSI can be represented as a three-dimensional cube of size \( m \times n \times K, \) where K is the number of spectral bands and m and n are the numbers of horizontal and vertical pixels, respectively, in one band image. The cube is reshaped into the two-dimensional matrix \( X = \left[ x_{1} , \ldots ,x_{i} , \ldots ,x_{K} \right] \), where \( x_{i} \) is the ith band image reshaped into a vector of length \( N = mn. \) X is thus an \( N \times K \) matrix. In the distributed compressive acquisition process, each sensor collects M (\( M \ll N \)) linear measurements \( y_{i} \in R^{M} \) of the band image \( x_{i} \in R^{N} \) with the random projection matrix \( \Phi \in R^{M \times N} \), i.e., \( y_{i} = \Phi x_{i} \) for the ith band. Stacking all bands, we get

$$ Y = \Phi X \qquad (4) $$

where \( Y = \left[ y_{1} , \ldots ,y_{i} , \ldots ,y_{K} \right] \). The sampling rate is \( M/N \).
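The band-by-band acquisition above can be sketched as follows, assuming NumPy, a single shared Gaussian \( \Phi \) for all bands, and row-major flattening of each band (the paper does not specify the flattening order). Each band encoder only performs one matrix-vector product \( \Phi x_{i} \), which is why the encoder stays lightweight. The function name `distributed_measure` is hypothetical.

```python
import numpy as np

def distributed_measure(cube, M, seed=0):
    """Measure each band of an m x n x K cube independently with one shared random Phi."""
    m, n, K = cube.shape
    N = m * n
    # Column i of X is band i flattened row by row (the flattening order is an assumption).
    X = cube.reshape(N, K)
    rng = np.random.default_rng(seed)
    Phi = rng.standard_normal((M, N)) / np.sqrt(M)   # Gaussian random projection
    Y = Phi @ X          # same as computing y_i = Phi x_i band by band and stacking the columns
    return X, Phi, Y

cube = np.random.default_rng(1).random((16, 16, 10))   # toy 16 x 16 x 10 cube
X, Phi, Y = distributed_measure(cube, M=64)
print(X.shape, Y.shape, "sampling rate M/N =", 64 / X.shape[0])
```

Here N = 256 and M = 64, so the toy example runs at a sampling rate of 0.25.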

In CS, signal reconstruction is an inverse problem that cannot be solved directly, because the system \( Y = \Phi X \) has fewer equations than unknowns (M < N). We use regularization to address this: by adding constraints that encode prior knowledge of the image, we solve the underdetermined system and reconstruct the hyperspectral image from the measurements \( Y \).

3.1 Total Variation Regularization

Rudin et al. [6] proposed the total variation (TV) model based on the piecewise smoothness of images. Because of this, TV regularization makes reconstructed images sharper by preserving edges and boundaries more accurately, and it has attracted considerable research attention in CS-based image processing [7]. Each band of an HSI is piecewise smooth and contains significant structural information, so the hyperspectral image can be reconstructed by minimizing the TV norm of each band:

$$ \min_{X} \sum\limits_{i = 1}^{K} \left( \left\| D_{h} x_{i} \right\|_{1} + \left\| D_{v} x_{i} \right\|_{1} \right) \quad \text{s.t.} \quad Y = \Phi X \qquad (5) $$

where \( D_{h} \) and \( D_{v} \) are the horizontal and vertical finite-difference (gradient) operators in the 2D spatial domain. When they are applied to every column of X, the objective can be written compactly as \( \left\| D_{h} X \right\|_{1} + \left\| D_{v} X \right\|_{1} \).
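A minimal sketch of the band-wise TV term, assuming NumPy and the anisotropic \( l_{1} \) form (the text does not say whether an isotropic or anisotropic TV is used). The forward differences follow the "pixel minus its right (or lower) neighbour" convention, and the last column or row is set to zero; both the boundary rule and the helper names are assumptions.

```python
import numpy as np

def grad_h(X, m, n):
    """Horizontal differences (pixel minus right neighbour) of every band of the N x K matrix X."""
    B = X.reshape(m, n, -1)
    G = np.zeros(B.shape)
    G[:, :-1, :] = B[:, :-1, :] - B[:, 1:, :]   # last column left at zero (assumed boundary rule)
    return G.reshape(m * n, -1)

def grad_v(X, m, n):
    """Vertical differences (pixel minus lower neighbour) of every band."""
    B = X.reshape(m, n, -1)
    G = np.zeros(B.shape)
    G[:-1, :, :] = B[:-1, :, :] - B[1:, :, :]   # last row left at zero
    return G.reshape(m * n, -1)

def tv_anisotropic(X, m, n):
    """Sum over bands of ||D_h x_i||_1 + ||D_v x_i||_1."""
    return np.abs(grad_h(X, m, n)).sum() + np.abs(grad_v(X, m, n)).sum()

# Piecewise-constant 4 x 4 band: left half 0, right half 1, i.e. one vertical edge.
img = np.zeros((4, 4))
img[:, 2:] = 1.0
X = img.reshape(16, 1)
print(tv_anisotropic(X, 4, 4))   # 4.0: one unit jump in each of the 4 rows
```

A piecewise-constant band has a small TV value that is concentrated on its edges, which is the behaviour the TV regularizer rewards.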

3.2 Low Rank Constraint

The TV model considers only the spatial correlation within an image and is widely applied to 2D images. An HSI, however, records different spectral information for the same ground scene and therefore has strong spectral correlation. We therefore bring the spectral low-rank property into hyperspectral image reconstruction to exploit this spectral correlation.

Because the bands are highly correlated, the columns of the hyperspectral data matrix \( X \in R^{N \times K} \) are nearly linearly dependent. X is therefore (approximately) low rank, and the recovery of an HSI can be treated as low-rank matrix recovery. Current theoretical results indicate that, under suitable conditions on the measurement operator, a low-rank matrix can be perfectly recovered from its linear measurements. Within DCS, the hyperspectral low-rank property serves as side information in the reconstruction, which not only increases reconstruction accuracy but also shifts the computational burden from the encoder to the decoder. When \( \Phi \) is chosen at random, X can be robustly recovered from the following convex nuclear norm minimization [8]:

$$ \min_{X} \left\| X \right\|_{ * } \quad \text{s.t.} \quad Y = \Phi X \qquad (6) $$

where \( \left\| X \right\|_{ * } = \sum\nolimits_{k} \sigma_{k} \left( X \right) \) is the nuclear norm, i.e., the sum of the singular values \( \sigma_{k} \left( X \right) \) of X.
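The nuclear norm and its proximal operator, singular value thresholding (SVT), can be sketched as follows, assuming NumPy. SVT is a standard building block for handling nuclear-norm terms inside augmented Lagrangian schemes; the paper does not state how its solver treats this term, so using SVT here is an assumption. The toy matrix is a rank-2 "spectral" matrix plus small noise.

```python
import numpy as np

def nuclear_norm(X):
    """||X||_* = sum of the singular values of X."""
    return np.linalg.svd(X, compute_uv=False).sum()

def svt(V, t):
    """Singular value thresholding: argmin_X t * ||X||_* + 0.5 * ||X - V||_F^2."""
    U, s, Vt = np.linalg.svd(V, full_matrices=False)
    return (U * np.maximum(s - t, 0.0)) @ Vt    # shrink every singular value by t

rng = np.random.default_rng(0)
N, K = 256, 20
low_rank = rng.standard_normal((N, 2)) @ rng.standard_normal((2, K))   # rank-2 N x K matrix
noisy = low_rank + 0.01 * rng.standard_normal((N, K))                  # full rank after noise
print(np.linalg.matrix_rank(noisy), np.linalg.matrix_rank(svt(noisy, 1.0)))   # 20 2
```

Thresholding removes the small singular values contributed by the noise and keeps the dominant spectral components, which is how a nuclear-norm penalty promotes low rank.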

3.3 Structure Similarity Constraint

Although pixel values may differ between spatial neighbours and between neighbouring bands, the relationship between a pixel and its spatial neighbours is often very similar in adjacent spectral bands. Reference [9] proposes a technique that exploits this structure similarity for effective HSI reconstruction. The HSI can be reconstructed by

$$ \min_{X} SS(X) \quad \text{s.t.} \quad Y = \Phi X \qquad (7) $$

where \( SS(X) \) is a regularizer based on structure similarity.

Let \( G_{h}{:}\,R^{N} \to R^{N} \) denote a linear operator that computes the horizontal structure image \( H_{i} \) of the band image \( x_{i} \): \( \left( H_{i} \right)_{j} = (x_{i} )_{j} - (x_{i} )_{jh} \), i.e., \( H_{i} = G_{h} x_{i} \), where \( (x_{i} )_{j} \) and \( (x_{i} )_{jh} \) are a pixel and its right-hand neighbour in the 2D spatial domain. Analogously, a vertical operator \( G_{v} \) gives the vertical structure image \( V_{i} = G_{v} x_{i} \).

The horizontal and vertical structure images of the whole HSI can then be written, respectively, as

$$ H = \left[ H_{1} , \ldots ,H_{K} \right] = G_{h} X,\qquad V = \left[ V_{1} , \ldots ,V_{K} \right] = G_{v} X $$

The structure similarity constraints rely on the continuity of each pixel of the structure images along the spectral direction. In the vertical structure images, the similarity constraint between adjacent bands is \( \left\| \left( V_{i} \right)_{j} - \left( V_{i + 1} \right)_{j} \right\| = 0 \); in the horizontal structure images, it is \( \left\| \left( H_{i} \right)_{j} - \left( H_{i + 1} \right)_{j} \right\| = 0 \). As a result, the structure similarity regularizer can be written as

$$ SS(X) = \left\| DG_{h} X \right\|_{1} + \left\| DG_{v} X \right\|_{1} \qquad (8) $$

where D is the finite-difference (gradient) operator along the spectral direction, which takes the difference between adjacent bands.
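The structure images and the structure similarity regularizer can be sketched as follows, assuming NumPy, an \( l_{1} \) norm for the penalty, and zero boundary values for the last column or row; these choices and all helper names are assumptions for illustration. The example shows the key idea of this section: bands whose pixel values differ by a constant offset have identical structure images, so their regularizer value is zero, whereas bands with different contrast are penalized.

```python
import numpy as np

def structure_images(X, m, n):
    """Return H = G_h X and V = G_v X for the N x K matrix X (one flattened band per column)."""
    B = X.reshape(m, n, -1)
    H = np.zeros(B.shape)
    V = np.zeros(B.shape)
    H[:, :-1, :] = B[:, :-1, :] - B[:, 1:, :]   # pixel minus its right neighbour
    V[:-1, :, :] = B[:-1, :, :] - B[1:, :, :]   # pixel minus its lower neighbour
    return H.reshape(m * n, -1), V.reshape(m * n, -1)

def spectral_diff(Z):
    """D: difference between each band (column) and the next one, giving N x (K - 1)."""
    return Z[:, :-1] - Z[:, 1:]

def ss_regularizer(X, m, n):
    """SS(X) = ||D G_h X||_1 + ||D G_v X||_1 (l1 norm assumed)."""
    H, V = structure_images(X, m, n)
    return np.abs(spectral_diff(H)).sum() + np.abs(spectral_diff(V)).sum()

rng = np.random.default_rng(0)
base = rng.random((8, 8))
# Three bands whose pixel values differ by a constant offset but share the same structure.
offset = np.stack([base + c for c in (0.0, 0.5, 1.0)], axis=-1).reshape(64, 3)
# Three bands with different contrast: their structure images differ from band to band.
scaled = np.stack([base * c for c in (1.0, 2.0, 3.0)], axis=-1).reshape(64, 3)
print(np.isclose(ss_regularizer(offset, 8, 8), 0.0), ss_regularizer(scaled, 8, 8) > 0)   # True True
```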

3.4 Algorithm and Implementation

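Combining the above three constraints, we propose a distributed hyperspectral compressive sensing framework that makes the best use of spatial piecewise smoothness, spectral low rank and structure similarity. Joining Eqs. (5), (6) and (7), we obtain the optimization model

$$ \min_{X} \left\| D_{h} X \right\|_{1} + \left\| D_{v} X \right\|_{1} + \lambda_{1} \left\| X \right\|_{ * } + \lambda_{2} \left( \left\| DG_{h} X \right\|_{1} + \left\| DG_{v} X \right\|_{1} \right) \quad \text{s.t.} \quad Y = \Phi X \qquad (9) $$

where \( \lambda_{1} \) and \( \lambda_{2} \) weight the low-rank and structure similarity terms. By introducing the auxiliary variables \( Z = \left( Z_{1} ,Z_{2} ,Z_{3} ,Z_{4} \right) \), Eq. (9) can be written in the equivalent form

$$ \begin{aligned} \min_{X,Z} \; & \left\| Z_{1} \right\|_{1} + \left\| Z_{2} \right\|_{1} + \lambda_{1} \left\| X \right\|_{ * } + \lambda_{2} \left( \left\| Z_{3} \right\|_{1} + \left\| Z_{4} \right\|_{1} \right) \\ \text{s.t.} \; & Z_{1} = D_{h} X,\; Z_{2} = D_{v} X,\; Z_{3} = DG_{h} X,\; Z_{4} = DG_{v} X,\; Y = \Phi X \end{aligned} \qquad (10) $$

This linearly constrained problem can be solved by the augmented Lagrangian multiplier (ALM) method [10]. Writing \( A_{1} = D_{h} ,\,A_{2} = D_{v} ,\,A_{3} = DG_{h} ,\,A_{4} = DG_{v} \) and keeping \( Y = \Phi X \) as an explicit constraint, the augmented Lagrangian of (10) is

$$ L\left( X,Z,\gamma \right) = \left\| Z_{1} \right\|_{1} + \left\| Z_{2} \right\|_{1} + \lambda_{1} \left\| X \right\|_{ * } + \lambda_{2} \left( \left\| Z_{3} \right\|_{1} + \left\| Z_{4} \right\|_{1} \right) + \sum\limits_{i = 1}^{4} \left( - \left\langle \gamma_{i} ,Z_{i} - A_{i} X \right\rangle + \frac{\beta_{i} }{2}\left\| Z_{i} - A_{i} X \right\|_{F}^{2} \right) \qquad (11) $$

where \( \gamma_{i} \) are the Lagrange multipliers and \( \beta_{i} > 0 \) are penalty parameters. By completing the square, we simplify (11) to obtain, up to terms that do not depend on X or Z,

$$ L\left( X,Z,g \right) = \left\| Z_{1} \right\|_{1} + \left\| Z_{2} \right\|_{1} + \lambda_{1} \left\| X \right\|_{ * } + \lambda_{2} \left( \left\| Z_{3} \right\|_{1} + \left\| Z_{4} \right\|_{1} \right) + \sum\limits_{i = 1}^{4} \frac{\beta_{i} }{2}\left\| Z_{i} - A_{i} X - g_{i} \right\|_{F}^{2} \qquad (12) $$

where \( \beta = \left[ \beta_{1} ,\beta_{2} ,\beta_{3} ,\beta_{4} \right],\,g_{i} = \gamma_{i} /\beta_{i} ,\,g = \left[ g_{1} ,g_{2} ,g_{3} ,g_{4} \right]. \)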
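If each auxiliary variable enters the augmented Lagrangian through a weighted \( l_{1} \) norm \( w_{i}\|Z_{i}\|_{1} \) plus the quadratic term \( \frac{\beta_{i}}{2}\|Z_{i} - A_{i}X - g_{i}\|_{F}^{2} \), where \( A_{i} \) stands for \( D_{h}, D_{v}, DG_{h}, DG_{v} \), then the Z-subproblem separates into four independent problems, each solved in closed form by soft thresholding: \( Z_{i} = \mathrm{shrink}(A_{i}X + g_{i}, w_{i}/\beta_{i}) \). The sketch below assumes NumPy and weights \( w = (1, 1, \lambda_{2}, \lambda_{2}) \); the function names are hypothetical.

```python
import numpy as np

def shrink(V, t):
    """Soft thresholding: argmin_Z t * ||Z||_1 + 0.5 * ||Z - V||_F^2."""
    return np.sign(V) * np.maximum(np.abs(V) - t, 0.0)

def update_Z(AX, g, beta, weights):
    """Z-step: Z_i = shrink(A_i X + g_i, w_i / beta_i) for each of the four blocks.

    AX      : list of the four arrays A_i X (D_h X, D_v X, D G_h X, D G_v X)
    g       : list of the four scaled multipliers g_i
    beta    : the four penalty parameters beta_i
    weights : the four l1 weights w_i, assumed to be (1, 1, lambda_2, lambda_2)
    """
    return [shrink(a + gi, w / b) for a, gi, b, w in zip(AX, g, beta, weights)]

# Toy check with beta_i = 100 and lambda_2 = 2, the values reported in the experiments.
AX = [np.array([0.5, -0.005, 0.03])] * 4
g = [np.zeros(3)] * 4
Z = update_Z(AX, g, beta=[100.0] * 4, weights=[1.0, 1.0, 2.0, 2.0])
print(Z[0], Z[2])
```

With \( \beta_{i} = 100 \), entries smaller in magnitude than \( w_{i}/\beta_{i} \) (0.01 or 0.02 here) are set to zero and larger ones are shrunk toward zero by that amount.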

The iterations of the proposed algorithm are as follows, starting from k = 0:

1) Update Z: \( Z^{k + 1} = \arg \min_{Z} L\left( X^{k} ,Z,g^{k} \right) \);

2) Update X: \( X^{k + 1} = \arg \min_{X} L\left( X,Z^{k + 1} ,g^{k} \right) \) subject to \( Y = \Phi X \);

3) Update the multipliers:

$$ \begin{aligned} g_{1}^{k + 1} & = g_{1}^{k} - \left( Z_{1}^{k + 1} - D_{h} X^{k + 1} \right) \\ g_{2}^{k + 1} & = g_{2}^{k} - \left( Z_{2}^{k + 1} - D_{v} X^{k + 1} \right) \\ g_{3}^{k + 1} & = g_{3}^{k} - \left( Z_{3}^{k + 1} - DG_{h} X^{k + 1} \right) \\ g_{4}^{k + 1} & = g_{4}^{k} - \left( Z_{4}^{k + 1} - DG_{v} X^{k + 1} \right) \end{aligned} $$

4) Set \( k = k + 1 \);

5) If \( \left\| X^{k} - X^{k - 1} \right\|_{F} /\left\| X^{k - 1} \right\|_{F} < \varepsilon \), stop; otherwise, return to step 1.
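Steps 3 and 5 above translate directly into code. The sketch below assumes NumPy, represents the four \( g_{i} \), \( Z_{i} \) and \( A_{i}X \) as lists of arrays, and measures the relative change with the Frobenius norm, which is our reading of the stopping rule. Steps 1 and 2 would need a Z-solver and an X-solver that are not shown; the function names are hypothetical.

```python
import numpy as np

def update_multipliers(g, Z, AX):
    """Step 3: g_i <- g_i - (Z_i - A_i X) for each constraint Z_i = A_i X."""
    return [gi - (zi - ai) for gi, zi, ai in zip(g, Z, AX)]

def converged(X_new, X_old, eps=1e-4):
    """Step 5: stop when ||X_new - X_old||_F / ||X_old||_F < eps."""
    return np.linalg.norm(X_new - X_old) / np.linalg.norm(X_old) < eps

X_old = np.ones((4, 3))
print(converged(X_old * (1 + 1e-6), X_old), converged(2 * X_old, X_old))   # True False
g = update_multipliers([np.zeros(2)], [np.array([1.0, 2.0])], [np.array([0.5, 2.0])])
print(g[0])
```

A multiplier changes only where the constraint \( Z_{i} = A_{i}X \) is violated, so the multipliers stop changing once the splitting is consistent.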

4 Experimental Results and Analysis

To verify the performance of the proposed method, we conducted experiments on the 512 × 512 × 224 Cuprite and Lunar Lake hyperspectral images acquired by AVIRIS (http://aviris.jpl.nasa.gov). A random matrix is used as the measurement matrix. In all experiments, we empirically set \( \lambda_{1} = 3,\,\lambda_{2} = 2,\,\beta_{i} = 100\,(\forall i),\,\varepsilon = 0.0001. \) Although these parameters may not be optimal, they suffice to demonstrate the algorithm. Fig. 2 shows the mean reconstruction SNR over the 224 bands for the different reconstruction methods at different sampling rates.
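The text does not define the reconstruction SNR, so the sketch below assumes the common definition \( \mathrm{SNR} = 10\log_{10}(\|x_{i}\|_{2}^{2} / \|x_{i} - \hat{x}_{i}\|_{2}^{2}) \) in dB for each band, averaged over the K band columns of X (K = 224 for the AVIRIS scenes). It assumes NumPy; the function names are hypothetical.

```python
import numpy as np

def band_snr_db(x, x_hat):
    """SNR of one band in dB: 10 * log10(||x||^2 / ||x - x_hat||^2)."""
    return 10.0 * np.log10(np.sum(x ** 2) / np.sum((x - x_hat) ** 2))

def mean_snr_db(X, X_hat):
    """Mean of the per-band SNRs over the K columns of the N x K matrices X and X_hat."""
    return float(np.mean([band_snr_db(X[:, i], X_hat[:, i]) for i in range(X.shape[1])]))

X = np.ones((100, 2))
X_hat = X + 0.1          # uniform error of 0.1 on every pixel
print(round(mean_snr_db(X, X_hat), 6))   # 20.0
```

Averaging per-band SNRs weights every band equally, regardless of its energy; a single SNR computed over the whole cube would instead be dominated by the brightest bands.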

Fig. 2 shows that the proposed method improves the reconstruction SNR by about 3–4 dB compared with the other methods, and the advantage is most pronounced at medium sampling rates. This suggests that the proposed method is well suited to practical hyperspectral compression.

For a more intuitive comparison of reconstruction performance, Fig. 3 shows the reconstructed images of band 50 of the Cuprite scene at a sampling rate of 0.2. As Fig. 3 shows, the proposed method reconstructs the band visibly better than the other methods.

5 Conclusion

This paper has proposed a distributed hyperspectral compressive sensing framework based on the low-rank and structure similarity properties of HSI. Because it efficiently exploits the spatial correlation, the spectral low-rank property and the structure similarity of HSI, the proposed method provides more accurate reconstruction from random projections, as the experimental results show. Future work will focus on improving the measurement matrix, and the method still needs to be verified on more types of HSI data.

References

[1] Donoho, D. L. (2006). Compressed sensing. IEEE Transactions on Information Theory, 52(4), 1289–1306.

[2] Candès, E. J., Romberg, J., & Tao, T. (2006). Robust uncertainty principles: Exact signal reconstruction from highly incomplete frequency information. IEEE Transactions on Information Theory, 52(2), 489–509.

[3] Slepian, D., & Wolf, J. K. (1973). Noiseless coding of correlated information sources. IEEE Transactions on Information Theory, 19(4), 471–480.

[4] Wyner, A. D., & Ziv, J. (1976). The rate-distortion function for source coding with side information at the decoder. IEEE Transactions on Information Theory, 22(1), 1–10.

[5] Duarte, M. F., Sarvotham, S., Baron, D., et al. (2005). Distributed compressed sensing of jointly sparse signals. In The 39th Asilomar conference on signals, systems and computers (pp. 1537–1541).

[6] Rudin, L., Osher, S., & Fatemi, E. (1992). Nonlinear total variation based noise removal algorithms. Physica D, 60, 259–268.

[7] Eason, D. T., & Andrews, M. (2015). Total variation regularization via continuation to recover compressed hyperspectral images. IEEE Transactions on Image Processing, 24(1), 284–293.

[8] Golbabaee, M., & Vandergheynst, P. (2012). Joint trace/TV norm minimization: A new efficient approach for spectral compressive imaging. In 19th IEEE international conference on image processing, Orlando, USA (pp. 933–936).

[9] Jia, Y., Feng, Y., & Wang, Z. (2015). Reconstructing hyperspectral images from compressive sensors via exploiting multiple priors. Spectroscopy Letters, 48(1), 22–26.

[10] Yang, J., Zhang, Y., & Yin, W. (2010). A fast alternating direction method for TVL1-L2 signal reconstruction from partial Fourier data. IEEE Journal of Selected Topics in Signal Processing, 4(2), 288–297.


This article is part of the Topical Collection on Hyperspectral Imaging and Image Processing.


Cite this article

Huang, B., Xu, K., Wan, J. et al. Distributed Compressive Sensing of Hyperspectral Images Using Low Rank and Structure Similarity Property. Sens Imaging 16, 13 (2015). https://doi.org/10.1007/s11220-015-0115-2


DOI: https://doi.org/10.1007/s11220-015-0115-2