Abstract

This paper deals with the consistency and a rate of convergence for a Nadaraya–Watson estimator of the drift function of a stochastic differential equation driven by an additive fractional noise. The results of this paper are obtained via both long-time behavior properties established by Hairer and properties of the Skorokhod integral with respect to the fractional Brownian motion. These results are illustrated on the fractional Ornstein–Uhlenbeck process.


1 Introduction

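Consider the stochastic differential equation

$$\begin{aligned} X(t) = x_0 +\int _{0}^{t} b(X(s))ds +\sigma B(t);\quad t\in \mathbb R_+, \end{aligned}\tag{1}$$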
where B is a fractional Brownian motion of Hurst index \(H\in ]1/2,1[\), \(b :\mathbb R\rightarrow \mathbb R\) is a continuous map and \(\sigma \in \mathbb R^*\).
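For readers who want to experiment with Eq. (1), here is a minimal simulation sketch that is not taken from the paper. It assumes an Euler scheme on a uniform grid of [0, T] and exact fractional Brownian increments obtained from a Cholesky factorization of the fractional Gaussian noise covariance. The helper names `fgn_increments` and `euler_fractional_sde` are hypothetical, and the Cholesky step costs O(n^3), so it is only practical for moderate grid sizes.

```python
import numpy as np

def fgn_increments(n, H, dt, rng):
    """Exact increments B(t_{k+1}) - B(t_k) of an fBm on a uniform grid of step dt (Cholesky method)."""
    k = np.arange(n)
    # Autocovariance of fractional Gaussian noise with unit step.
    gamma = 0.5 * (np.abs(k + 1) ** (2 * H) - 2 * np.abs(k) ** (2 * H) + np.abs(k - 1) ** (2 * H))
    cov = gamma[np.abs(k[:, None] - k[None, :])]
    chol = np.linalg.cholesky(cov)
    # Self-similarity: increments over a step dt have standard deviation dt**H.
    return dt ** H * (chol @ rng.standard_normal(n))

def euler_fractional_sde(b, sigma, x0, H, T, n, seed=0):
    """Euler scheme X_{k+1} = X_k + b(X_k) dt + sigma * (B(t_{k+1}) - B(t_k)) for Eq. (1)."""
    rng = np.random.default_rng(seed)
    dt = T / n
    dB = fgn_increments(n, H, dt, rng)
    X = np.empty(n + 1)
    X[0] = x0
    for k in range(n):
        X[k + 1] = X[k] + b(X[k]) * dt + sigma * dB[k]
    return np.linspace(0.0, T, n + 1), X

# Fractional Ornstein-Uhlenbeck example: b(x) = -x, sigma = 1, H = 0.7.
t, X = euler_fractional_sde(b=lambda x: -x, sigma=1.0, x0=0.0, H=0.7, T=10.0, n=1000)
```

FFT-based circulant embedding (the Davies–Harte method) is the usual alternative when long grids are needed.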

Over the last two decades, many authors have studied statistical inference from observations drawn from stochastic differential equations driven by fractional Brownian motion.

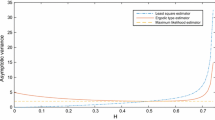

Most references on the estimation of the trend component in Eq. (1) deal with parametric estimators. In Kleptsyna and Le Breton (2001) and Hu and Nualart (2010), estimators of the trend component in Langevin’s equation are studied. Kleptsyna and Le Breton (2001) provide a maximum likelihood estimator, where the stochastic integral with respect to the solution of Eq. (1) reduces to an Itô integral. Tudor and Viens (2007) extend this estimator to equations with a drift function depending linearly on the unknown parameter. Hu and Nualart (2010) provide a least squares estimator, where the stochastic integral with respect to the solution of Eq. (1) is taken in the sense of Skorokhod. Hu et al. (2018) extend this estimator to equations with a drift function depending linearly on the unknown parameter.

In Neuenkirch and Tindel (2014), the authors study a least squares-type estimator defined by an objective function tailor-made with respect to the main result of Tudor and Viens (2009) on the rate of convergence of the quadratic variation of the fractional Brownian motion. Chronopoulou and Tindel (2013) provide a likelihood-based numerical procedure to estimate a parameter involved in both the drift and the volatility functions of a stochastic differential equation with multiplicative fractional noise.

On the nonparametric estimation of the trend component in Eq. (1), there are only a few references. Saussereau (2014) and Mishra and Prakasa Rao (2011) study the consistency of some Nadaraya–Watson-type estimators of the drift function b in Eq. (1). For nonparametric estimation in the Itô calculus framework, the reader is referred to Kutoyants (2004).

Let \(K :\mathbb R\rightarrow \mathbb R_+\) be a kernel, that is, a nonnegative function with integral equal to 1. The paper deals with the consistency and a rate of convergence for the Nadaraya–Watson estimator

$$\begin{aligned} \widehat{b}_{T,h}(x) := \frac{\displaystyle \int _{0}^{T} K\left( \frac{X(s) - x}{h}\right) \delta X(s)}{\displaystyle \int _{0}^{T} K\left( \frac{X(s) - x}{h}\right) ds} \end{aligned}\tag{2}$$

of the drift function b in Eq. (1), where \(h > 0\) is the bandwidth and the stochastic integral with respect to X is taken in the sense of Skorokhod. Since computing the Skorokhod integral is challenging, the following estimator is also studied, where \(X_{x_0}\) denotes the solution of Eq. (1) with initial condition \(x_0\in \mathbb R\):

with \(\varepsilon > 0\) and \(x\in \mathbb R\). In this second estimator, the stochastic integral is taken pathwise. The estimator depends on H, but an estimator of this parameter is provided, for instance, in Kubilius and Skorniakov (2016).

As detailed in Sect. 2.2, the Skorokhod integral is defined via the divergence operator, which is the adjoint of the Malliavin derivative for the fractional Brownian motion. If \(H = 1/2\), then the Skorokhod integral coincides with Itô’s integral on its domain. When \(H\in ]1/2,1[\), the Skorokhod integral is more difficult to compute, but not impossible to compute, as explained at the end of Sect. 2.2. Note that the pathwise stochastic integral defined in Sect. 2.1 would have been a more natural choice but, unfortunately, it does not provide a consistent estimator (see Proposition 3.3).

Clearly, to be computable, the estimator \(\widehat{b}_{T,h,\varepsilon }(x)\) requires observed paths of the solution of Eq. (1) for two close but different values of the initial condition. This is not possible in every context, but we have the following application field in mind: if \(t\mapsto X_{x_0}(\omega ,t)\) denotes the concentration of a drug over time during its elimination by a patient \(\omega \) with initial dose \(x_0 > 0\), then \(t\mapsto X_{x_0 +\varepsilon }(\omega ,t)\) could be approximated by replicating exactly the same protocol on patient \(\omega \), but with initial dose \(x_0 +\varepsilon \), after the complete elimination of the previous dose.

We do not study the additional error which occurs when only discrete-time observations with step \(\Delta \) on [0, T] (\(T = n\Delta \)) are available. Formula (3) then has to be discretized, and a study in the spirit of Saussereau (2014, Sect. 4.3) must be conducted.

Section 2 deals with some preliminary results on stochastic integrals with respect to the fractional Brownian motion and an ergodic theorem for the solution of Eq. (1). The consistency and a rate of convergence of the Nadaraya–Watson estimator studied in this paper are stated in Sect. 3. Almost all the proofs of the paper are provided in Sect. 4.

Notations:

(1) The vector space of Lipschitz continuous maps from \(\mathbb R\) into itself is denoted by \(\text {Lip}(\mathbb R)\) and equipped with the Lipschitz semi-norm \(\Vert .\Vert _{\text {Lip}}\) defined by

$$\begin{aligned} \Vert \varphi \Vert _{\text {Lip}} := \sup \left\{ \frac{|\varphi (y) -\varphi (x)|}{|y - x|} \text { ; } x,y\in \mathbb R \text { and } x\not = y\right\} \end{aligned}$$

for every \(\varphi \in \text {Lip}(\mathbb R)\).

(2) For every \(m\in \mathbb N\),

$$\begin{aligned} C_{b}^{m}(\mathbb R) := \left\{ \varphi \in C^m(\mathbb R) : \max _{k\in \llbracket 0,m\rrbracket } \Vert \varphi ^{(k)}\Vert _{\infty } <\infty \right\} . \end{aligned}$$

(3) For every \(m\in \mathbb N^*\),

$$\begin{aligned} \text {Lip}_{b}^{m}(\mathbb R) := \left\{ \varphi \in C^m(\mathbb R) : \varphi \in \text {Lip}(\mathbb R) \text { and } \max _{k\in \llbracket 1,m\rrbracket } \Vert \varphi ^{(k)}\Vert _{\infty } <\infty \right\} \end{aligned}$$

and for every \(\varphi \in \text {Lip}_{b}^{m}(\mathbb R)\),

$$\begin{aligned} \Vert \varphi \Vert _{\text {Lip}_{b}^{m}} := \Vert \varphi \Vert _{\text {Lip}}\vee \max _{k\in \llbracket 1,m\rrbracket }\Vert \varphi ^{(k)}\Vert _{\infty }. \end{aligned}$$

The map \(\Vert .\Vert _{\text {Lip}_{b}^{m}}\) is a semi-norm on \(\text {Lip}_{b}^{m}(\mathbb R)\). Note that for every \(m\in \mathbb N^*\),

$$\begin{aligned} C_{b}^{m}(\mathbb R) \subset \text {Lip}_{b}^{m}(\mathbb R). \end{aligned}$$

(4) Consider \(n\in \mathbb N^*\). The vector space of infinitely continuously differentiable maps \(f :\mathbb R^n\rightarrow \mathbb R\) such that f and all its partial derivatives have polynomial growth is denoted by \(C_{p}^{\infty }(\mathbb R^n,\mathbb R)\).

2 Stochastic integrals with respect to the fractional Brownian motion and an ergodic theorem for fractional SDE

On the one hand, this section presents two different methods to define a stochastic integral with respect to the fractional Brownian motion. The first one is based on the pathwise properties of the fractional Brownian motion. Although this approach is very natural, Proposition 3.3 shows that the pathwise stochastic integral is not appropriate for building a consistent estimator of the drift function b in Eq. (1). The second stochastic integral with respect to the fractional Brownian motion is defined via the Malliavin divergence operator and is called Skorokhod’s integral with respect to B. If \(H = 1/2\), which means that B is a Brownian motion, the Skorokhod integral coincides with Itô’s integral on its domain. This integral is appropriate for the estimation of the drift function b in Eq. (1). On the other hand, an ergodic theorem for the solution of Eq. (1) is stated in Sect. 2.3.

2.1 The pathwise stochastic integral

This subsection deals with some definitions and basic properties of the pathwise stochastic integral with respect to the fractional Brownian motion of Hurst index greater than 1/2.

Definition 2.1

Let x and w be two continuous functions from [0, T] into \(\mathbb R\). Consider a partition \(D := (t_k)_{k\in \llbracket 0,m\rrbracket }\) of [s, t] with \(m\in \mathbb N^*\) and \(s,t\in [0,T]\) such that \(s < t\). The Riemann sum of x with respect to w on [s, t] for the partition D is

$$\begin{aligned} \sum _{k = 0}^{m - 1} x(t_k)(w(t_{k + 1}) - w(t_k)). \end{aligned}$$

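Notation. With the notations of Definition 2.1, the mesh of the partition D is

$$\begin{aligned} \delta (D) := \max _{k\in \llbracket 0,m - 1\rrbracket }(t_{k + 1} - t_k). \end{aligned}$$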

The following theorem ensures the existence and the uniqueness of Young’s integral (see Friz and Victoir 2010, Theorem 6.8).

Theorem 2.2

Let x (resp. w) be an \(\alpha \)-Hölder (resp. \(\beta \)-Hölder) continuous map from [0, T] into \(\mathbb R\) with \(\alpha ,\beta \in ]0,1]\) such that \(\alpha +\beta > 1\). There exists a unique continuous map \(J_{x,w} : [0,T]\rightarrow \mathbb R\) such that for every \(s,t\in [0,T]\) satisfying \(s < t\) and any sequence \((D_n)_{n\in \mathbb N}\) of partitions of [s, t] such that \(\delta (D_n)\rightarrow 0\) as \(n\rightarrow \infty \),

The map \(J_{x,w}\) is the Young integral of x with respect to w, and \(J_{x,w}(t) - J_{x,w}(s)\) is denoted by

$$\begin{aligned} \int _{s}^{t} x(u)dw(u) \end{aligned}$$

for every \(s,t\in [0,T]\) such that \(s < t\).
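The Riemann sums of Definition 2.1 and the limit in Theorem 2.2 can be checked numerically on a simple case. This is a minimal sketch assuming uniform partitions and smooth maps x and w (Lipschitz maps are 1-Hölder, so \(\alpha +\beta = 2 > 1\)); the helper name `riemann_sum` is hypothetical. For \(x(u) = u\) and \(w(u) = u^2\) on [0, 1], the integral equals 2/3, and the left-point sums on m intervals differ from it by exactly \(1/(2m) + 1/(6m^2)\).

```python
import numpy as np

def riemann_sum(x, w, s, t, m):
    """Left-point Riemann sum of x with respect to w on the uniform partition of [s, t] into m intervals."""
    grid = np.linspace(s, t, m + 1)          # partition D, with mesh (t - s) / m
    return float(np.sum(x(grid[:-1]) * np.diff(w(grid))))

# Lipschitz (hence 1-Hölder) example: int_0^1 u d(u^2) = int_0^1 2 u^2 du = 2/3.
errors = {}
for m in (10, 100, 1000, 10000):
    errors[m] = abs(riemann_sum(lambda u: u, lambda u: u ** 2, 0.0, 1.0, m) - 2.0 / 3.0)
    print(m, errors[m])   # the error shrinks as the mesh goes to 0
```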

The following proposition is a change of variable for Young’s integral.

Proposition 2.3

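Let x be an \(\alpha \)-Hölder continuous map from [0, T] into \(\mathbb R\) with \(\alpha \in ]1/2,1[\). For every \(\varphi \in {\text {Lip}}_{b}^{1}(\mathbb R)\) and \(s,t\in [0,T]\) such that \(s < t\),

$$\begin{aligned} \varphi (x(t)) -\varphi (x(s)) = \int _{s}^{t}\varphi '(x(u))dx(u). \end{aligned}$$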
For any \(\alpha \in ]1/2,H[\), the paths of B are \(\alpha \)-Hölder continuous (see Nualart 2006, Section 5.1). So, for every process \(Y := (Y(t))_{t\in [0,T]}\) with \(\beta \)-Hölder continuous paths from [0, T] into \(\mathbb R\) such that \(\alpha +\beta > 1\), by Theorem 2.2, it is natural to define the pathwise stochastic integral of Y with respect to B by

$$\begin{aligned} \left( \int _{0}^{t} Y(s)dB(s)\right) (\omega ) := \int _{0}^{t} Y(\omega ,s)dB(\omega ,s) \end{aligned}$$

for every \(\omega \in \Omega \) and \(t\in [0,T]\).
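Proposition 2.3 and the pathwise integral can be illustrated on a simulated path. The sketch below assumes an exact fBm sample on a uniform grid (Cholesky factorization of the fractional Gaussian noise covariance), \(\varphi = \tanh \) as an element of \(\text {Lip}_{b}^{1}(\mathbb R)\), and hypothetical helper names. It compares the left-point Riemann sum of \(\varphi '(B)\) with respect to B with \(\varphi (B(T)) -\varphi (B(0))\); by Taylor's formula, the gap is at most \(\Vert \varphi ''\Vert _{\infty }/2\) times the discrete quadratic variation of the path, which vanishes as the mesh goes to 0 because \(H > 1/2\).

```python
import numpy as np

def fbm_path(n, H, T, seed=0):
    """Sample B(t_0), ..., B(t_n) on a uniform grid of [0, T] (Cholesky method, B(0) = 0)."""
    rng = np.random.default_rng(seed)
    k = np.arange(n)
    gamma = 0.5 * (np.abs(k + 1) ** (2 * H) - 2 * np.abs(k) ** (2 * H) + np.abs(k - 1) ** (2 * H))
    chol = np.linalg.cholesky(gamma[np.abs(k[:, None] - k[None, :])])
    increments = (T / n) ** H * (chol @ rng.standard_normal(n))
    return np.concatenate(([0.0], np.cumsum(increments)))

H, T, n = 0.8, 1.0, 1000
B = fbm_path(n, H, T)
phi = np.tanh                                   # phi belongs to Lip_b^1
dphi = lambda y: 1.0 / np.cosh(y) ** 2          # phi' = 1 - tanh^2, bounded by 1

# Left-point Riemann sum approximating the pathwise (Young) integral of phi'(B) dB on [0, T].
pathwise = float(np.sum(dphi(B[:-1]) * np.diff(B)))
# Change of variable (Proposition 2.3): the exact value is phi(B(T)) - phi(B(0)).
exact = float(phi(B[-1]) - phi(B[0]))
# Taylor bound on the discretization gap, using |phi''| <= 4 / (3 sqrt(3)) for tanh.
gap_bound = 0.5 * 4.0 / (3.0 * np.sqrt(3.0)) * float(np.sum(np.diff(B) ** 2))
print(pathwise, exact, gap_bound)
```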

2.2 The Skorokhod integral

This subsection deals with some definitions and results on Malliavin calculus in order to define and to provide a suitable expression of Skorokhod’s integral.

Consider the vector space

Equipped with the scalar product

\(\mathcal H\) is the reproducing kernel Hilbert space of B. Let \(\mathbf B\) be the map defined on \(\mathcal H\) by

which is the Wiener integral of h with respect to B. The family \((\mathbf B(h))_{h\in \mathcal H}\) is an isonormal Gaussian process.
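As a quick consistency check of this construction, assume that the scalar product on \(\mathcal H\) is the usual one for \(H\in ]1/2,1[\), namely \(\langle h,\eta \rangle _{\mathcal H} =\alpha _H\int _{0}^{\infty }\int _{0}^{\infty } h(u)\eta (v)|u - v|^{2H - 2}dudv\) with \(\alpha _H = H(2H - 1)\) (this explicit form is an assumption of the sketch). For \(0\leqslant s\leqslant t\) and \(u\in [0,t]\), \(\int _{0}^{t}|u - v|^{2H - 2}dv = (u^{2H - 1} + (t - u)^{2H - 1})/(2H - 1)\), so that

$$\begin{aligned} \langle \mathbf 1_{[0,s]},\mathbf 1_{[0,t]}\rangle _{\mathcal H} &= \frac{\alpha _H}{2H - 1}\int _{0}^{s}\left( u^{2H - 1} + (t - u)^{2H - 1}\right) du = \frac{\alpha _H}{2H(2H - 1)}\left( s^{2H} + t^{2H} - (t - s)^{2H}\right) \\ &= \frac{1}{2}\left( s^{2H} + t^{2H} - |t - s|^{2H}\right) =\mathbb E(B(s)B(t)). \end{aligned}$$

Hence \(\mathbf B(\mathbf 1_{[0,t]})\) and B(t) have the same covariance structure.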

Definition 2.4

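The Malliavin derivative of a smooth functional

$$\begin{aligned} F = f(\mathbf B(h_1),\dots ,\mathbf B(h_n)), \end{aligned}$$

where \(n\in \mathbb N^*\), \(f\in C_{p}^{\infty }(\mathbb R^n,\mathbb R)\) and \(h_1,\dots ,h_n\in \mathcal H\), is the \(\mathcal H\)-valued random variable

$$\begin{aligned} \mathbf DF := \sum _{k = 1}^{n}\partial _kf(\mathbf B(h_1),\dots ,\mathbf B(h_n))h_k. \end{aligned}$$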

Proposition 2.5

The map \(\mathbf D\) is closable from \(L^2(\Omega ,\mathcal A,\mathbb P)\) into \(L^2(\Omega ;\mathcal H)\). Its domain in \(L^2(\Omega ,\mathcal A,\mathbb P)\) is denoted by \(\mathbb D^{1,2}\) and is the closure of the space of smooth functionals for the norm \(\Vert .\Vert _{1,2}\) defined by

$$\begin{aligned} \Vert F\Vert _{1,2} := \left( \mathbb E(F^2) +\mathbb E(\Vert \mathbf DF\Vert _{\mathcal H}^{2})\right) ^{1/2} \end{aligned}$$

for every smooth functional F.

For a proof, see Nualart (2006, Proposition 1.2.1).

Definition 2.6

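The adjoint \(\delta \) of the Malliavin derivative \(\mathbf D\) is the divergence operator. The domain of \(\delta \) is denoted by \( {\text {dom}}(\delta )\), and \(u\in L^2(\Omega ;\mathcal H)\) belongs to \({\text {dom}}(\delta )\) if and only if there exists a deterministic constant \(c > 0\) such that for every \(F\in \mathbb D^{1,2}\),

$$\begin{aligned} |\mathbb E(\langle \mathbf DF,u\rangle _{\mathcal H})|\leqslant c\,\mathbb E(F^2)^{1/2}. \end{aligned}$$

In that case, \(\delta (u)\) is the unique element of \(L^2(\Omega ,\mathcal A,\mathbb P)\) such that \(\mathbb E(F\delta (u)) =\mathbb E(\langle \mathbf DF,u\rangle _{\mathcal H})\) for every \(F\in \mathbb D^{1,2}\).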

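For every process \(Y := (Y(s))_{s\in \mathbb R_+}\) and every \(t > 0\) such that \(Y\mathbf 1_{[0,t]}\in \text {dom}(\delta )\), the Skorokhod integral of Y with respect to B on [0, t] is defined by

$$\begin{aligned} \int _{0}^{t} Y(s)\delta B(s) :=\delta (Y\mathbf 1_{[0,t]}). \end{aligned}$$

With the same notations, the Skorokhod integral of Y with respect to the solution X of Eq. (1) is

$$\begin{aligned} \int _{0}^{t} Y(s)\delta X(s) := \int _{0}^{t} Y(s)b(X(s))ds +\sigma \int _{0}^{t} Y(s)\delta B(s). \end{aligned}$$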

The following proposition provides the link between the Skorokhod integral and the pathwise stochastic integral of Sect. 2.1.

Proposition 2.7

If \(b\in {\text {Lip}}_{b}^{1}(\mathbb R)\), then Eq. (1) with initial condition \(x\in \mathbb R\) has a unique solution \(X_x\) with \(\alpha \)-Hölder continuous paths for every \(\alpha \in ]0,H[\). Moreover, for every \(\varphi \in {\text {Lip}}_{b}^{1}(\mathbb R)\),

where \(\alpha _H = H(2H - 1)\).
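A classical example shows how the pathwise and Skorokhod integrals differ. Assume the relation between the two integrals given in Nualart (2006, Section 5.2) for suitably regular integrands u, namely \(\int _{0}^{T} u(s)dB(s) = \int _{0}^{T} u(s)\delta B(s) +\alpha _H\int _{0}^{T}\int _{0}^{T}\mathbf D_vu(s)|s - v|^{2H - 2}dvds\), and take \(u = B\), for which \(\mathbf D_vB(s) =\mathbf 1_{[0,s]}(v)\). The pathwise integral follows from Proposition 2.3 applied to \(y\mapsto y^2/2\), after modifying this map outside the range of the path of B on [0, T]:

$$\begin{aligned} \int _{0}^{T} B(s)dB(s) &= \frac{B(T)^2}{2},\\ \alpha _H\int _{0}^{T}\int _{0}^{s}(s - v)^{2H - 2}dvds &= \frac{\alpha _H}{2H - 1}\int _{0}^{T} s^{2H - 1}ds = \frac{T^{2H}}{2},\\ \int _{0}^{T} B(s)\delta B(s) &= \frac{B(T)^2 - T^{2H}}{2}. \end{aligned}$$

Since \(\mathbb E(B(T)^2) = T^{2H}\), the Skorokhod integral is centered, while the pathwise integral is not.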

Moreover, the following corollary can be proved; it provides a computable form of the estimator.

Corollary 2.8

Assume that \(b\in {\text {Lip}}_{b}^{2}(\mathbb R)\) and that there exists a constant \(M > 0\) such that

$$\begin{aligned} b'(x)\leqslant -M;\quad \forall x\in \mathbb R. \end{aligned}$$

For every \(\varphi \in {\text {Lip}}_{b}^{1}(\mathbb R)\), \(x\in \mathbb R\) and \(\varepsilon ,t > 0\),

where

and

As mentioned in the Introduction, the formula for \( S_{\varphi }(x,\varepsilon ,t)\) can be used if two paths of X can be observed with different but close initial conditions.

Lastly, the following theorem, recently proved by Hu et al. (2018, Proposition 4.4), provides a control of Skorokhod’s integral that is suited to the study of its long-time behavior.

Theorem 2.9

Assume that \(b\in {\text {Lip}}_{b}^{2}(\mathbb R)\) and that there exists a constant \(M > 0\) such that

$$\begin{aligned} b'(x)\leqslant -M;\quad \forall x\in \mathbb R. \end{aligned}$$

There exists a deterministic constant \(C > 0\), not depending on T, such that for every \(\varphi \in {\text {Lip}}_{b}^{1}(\mathbb R)\):

2.3 Ergodic theorem for the solution of a fractional SDE

On the ergodicity of fractional SDEs, the reader can refer to Hairer (2005), Hairer and Ohashi (2007) and Hairer and Pillai (2013) (see Sect. 4.3 for details).

In the sequel, the map b fulfills the following condition.

Assumption 2.10

The map b belongs to \( {\text {Lip}}_{b}^{\infty }(\mathbb R)\) and there exists a constant \(M > 0\) such that

$$\begin{aligned} b'(x)\leqslant -M;\quad \forall x\in \mathbb R. \end{aligned}$$

Remark

(1) Since \(b\in \text {Lip}_{b}^{1}(\mathbb R)\), Eq. (1) has a unique solution.

(2) Under Assumption 2.10, the dissipativity conditions of Hairer (2005), Hairer and Ohashi (2007) and Hu et al. (2018) are fulfilled by b:

$$\begin{aligned} (x - y)(b(x) - b(y)) \leqslant -M(x - y)^2;\quad \forall x,y\in \mathbb R \end{aligned}$$

and there exists a constant \(M' > 0\) such that

$$\begin{aligned} xb(x)\leqslant M'(1 - x^2);\quad \forall x\in \mathbb R. \end{aligned}$$

Therefore, Assumption 2.10 is sufficient to apply the results proved in Hairer (2005), Hairer and Ohashi (2007) and Hu et al. (2018) in the sequel.

Proposition 2.11

Consider a measurable map \(\varphi :\mathbb R\rightarrow \mathbb R_+\) such that there exists a nonempty compact subset C of \(\mathbb R\) satisfying \(\varphi (C)\subset ]0,\infty [\). Under Assumption 2.10, there exists a deterministic constant \(l(\varphi ) > 0\) such that

$$\begin{aligned} \frac{1}{T}\int _{0}^{T}\varphi (X(s))ds \xrightarrow [T\rightarrow \infty ]{\text {a.s.}} l(\varphi ). \end{aligned}$$

3 Convergence of the Nadaraya–Watson estimator of the drift function

This section deals with the consistency and rate of convergence of the Nadaraya–Watson estimator of the drift function b in Eq. (1).

In the sequel, the kernel K fulfills the following assumption.

Assumption 3.1

\( {\text {supp}}(K) = [-1,1]\) and \(K\in C_{b}^{1}(\mathbb R,\mathbb R_+)\).
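For concreteness, here is one kernel satisfying Assumption 3.1; it is an illustrative choice, not necessarily the one used by the authors: the biweight kernel \(K(u) = \frac{15}{16}(1 - u^2)^2\) on \([-1,1]\). The popular Epanechnikov kernel \(\frac{3}{4}(1 - u^2)\) does not qualify, because its derivative jumps from \(-3/2\) to 0 at \(u = 1\). The sketch checks numerically that K integrates to 1 and that the closed-form derivative, which vanishes at \(\pm 1\), matches finite differences.

```python
import numpy as np

def biweight(u):
    """K(u) = 15/16 (1 - u^2)^2 on [-1, 1] and 0 elsewhere."""
    u = np.asarray(u, dtype=float)
    return np.where(np.abs(u) <= 1.0, 15.0 / 16.0 * (1.0 - u ** 2) ** 2, 0.0)

def biweight_derivative(u):
    """K'(u) = -15/4 u (1 - u^2) on [-1, 1] and 0 elsewhere (continuous, since it vanishes at +-1)."""
    u = np.asarray(u, dtype=float)
    return np.where(np.abs(u) <= 1.0, -15.0 / 4.0 * u * (1.0 - u ** 2), 0.0)

grid = np.linspace(-1.5, 1.5, 300001)
step = grid[1] - grid[0]
mass = float(np.sum(biweight(grid)) * step)               # Riemann approximation of the integral of K
fd_error = float(np.max(np.abs(np.gradient(biweight(grid), step) - biweight_derivative(grid))))
print(mass, fd_error)   # mass close to 1, small finite-difference mismatch
```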

3.1 Why the pathwise integral is inadequate

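First of all, let us prove that, even if it seems very natural, the pathwise Nadaraya–Watson estimator

$$\begin{aligned} \widetilde{b}_{T,h}(x) := \frac{1}{Th\widehat{f}_{T,h}(x)}\int _{0}^{T} K\left( \frac{X(s) - x}{h}\right) dX(s), \end{aligned}$$

where

$$\begin{aligned} \widehat{f}_{T,h}(x) := \frac{1}{Th}\int _{0}^{T} K\left( \frac{X(s) - x}{h}\right) ds, \end{aligned}\tag{6}$$

is not consistent.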

For this, we need the following lemma providing a convergence result for \(\widehat{f}_{T,h}(x)\). It will also be used to prove Proposition 3.4.

Lemma 3.2

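Under Assumptions 2.10 and 3.1, there exists a deterministic constant \(l_h(x) > 0\) such that

$$\begin{aligned} \widehat{f}_{T,h}(x) \xrightarrow [T\rightarrow \infty ]{\text {a.s.}} l_h(x). \end{aligned}$$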

Proof

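Under Assumption 3.1, the map

$$\begin{aligned} y\in \mathbb R\longmapsto \frac{1}{h}K\left( \frac{y - x}{h}\right) \end{aligned}$$

satisfies the condition on \(\varphi \) of Proposition 2.11, which therefore applies and gives the result. \(\square \)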

Now, we state the result showing that \(\widetilde{b}_{T,h}(x)\) is not a consistent estimator of b(x).

Proposition 3.3

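Under Assumptions 2.10 and 3.1:

$$\begin{aligned} \widetilde{b}_{T,h}(x) \xrightarrow [T\rightarrow \infty ]{\mathbb P} 0. \end{aligned}$$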

Proof

Let \(\mathcal K\) be a primitive function of K. By the change of variable formula for Young’s integral (Proposition 2.3):

Then,

Since \(\mathcal K\) is differentiable with bounded derivative K:

Finally, as we know by Hairer (2005, Proposition 3.12) that

is uniformly bounded, and by Lemma 3.2 that \(\widehat{f}_{T,h}(x)\) converges almost surely to \(l_h(x) > 0\) as \(T\rightarrow \infty \), it follows that \(\widetilde{b}_{T,h}(x)\) converges to 0 in probability, when \(T\rightarrow \infty \). \(\square \)
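The mechanism of this proof can be observed numerically. The sketch below is illustrative only and rests on assumptions that are not from the paper: the normalization \(\widehat{f}_{T,h}(x) = (Th)^{-1}\int _{0}^{T}K((X(s) - x)/h)ds\), the biweight kernel, an Euler discretization of the fractional Langevin equation (11) with \(\lambda =\sigma = 1\), \(H = 0.75\) and \(X(0) = 0\), and hypothetical helper names. By the change of variable formula, the numerator of \(\widetilde{b}_{T,h}(x)\) telescopes into \((\mathcal K(y(T)) -\mathcal K(y(0)))/T\) with \(y = (X - x)/h\), and since the primitive \(\mathcal K\) takes values in [0, 1], it is at most 1/T in absolute value whatever b(x) is. The Riemann sum differs from this telescoped value by at most a Taylor bound involving the discrete quadratic variation.

```python
import numpy as np

def biweight(u):
    """Kernel K(u) = 15/16 (1 - u^2)^2 on [-1, 1], 0 elsewhere (it satisfies Assumption 3.1)."""
    return np.where(np.abs(u) <= 1.0, 15.0 / 16.0 * (1.0 - u ** 2) ** 2, 0.0)

def biweight_primitive(u):
    """Primitive calK of K with calK(-1) = 0; it is nondecreasing with values in [0, 1]."""
    v = np.clip(u, -1.0, 1.0)
    return 15.0 / 16.0 * (v - 2.0 * v ** 3 / 3.0 + v ** 5 / 5.0 + 8.0 / 15.0)

def fou_euler(lam, sigma, H, T, n, seed=0):
    """Euler scheme for dX = -lam X dt + sigma dB with X(0) = 0; fBm increments via Cholesky."""
    rng = np.random.default_rng(seed)
    k = np.arange(n)
    gamma = 0.5 * (np.abs(k + 1) ** (2 * H) - 2 * np.abs(k) ** (2 * H) + np.abs(k - 1) ** (2 * H))
    chol = np.linalg.cholesky(gamma[np.abs(k[:, None] - k[None, :])])
    dt = T / n
    dB = dt ** H * (chol @ rng.standard_normal(n))
    X = np.zeros(n + 1)
    for i in range(n):
        X[i + 1] = X[i] - lam * X[i] * dt + sigma * dB[i]
    return X

lam, sigma, H, T, n = 1.0, 1.0, 0.75, 20.0, 2000
x, h = 0.5, 0.5                      # target point and bandwidth; here b(x) = -lam * x = -0.5
X = fou_euler(lam, sigma, H, T, n)
y = (X - x) / h
dX = np.diff(X)

# Numerator of the pathwise estimator: (1/(T h)) * Riemann sum of K((X - x)/h) dX.
numerator = float(np.sum(biweight(y[:-1]) * dX) / (T * h))
# Change of variable: the pathwise integral telescopes to (1/T) [calK(y(T)) - calK(y(0))].
telescoped = float((biweight_primitive(y[-1]) - biweight_primitive(y[0])) / T)
# Taylor bound on the Riemann-sum gap, using sup |K'| = 5 / (2 sqrt(3)).
gap_bound = float(5.0 / (2.0 * np.sqrt(3.0)) * np.sum(dX ** 2) / (2.0 * T * h ** 2))
# Denominator: Riemann approximation of f_hat_{T,h}(x).
f_hat = float(np.sum(biweight(y[:-1])) * (T / n) / (T * h))
if f_hat > 0:
    print("pathwise estimate:", numerator / f_hat, "vs b(x) =", -lam * x)
```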

This is why the Skorokhod integral replaces the pathwise stochastic integral in \(\widehat{b}_{T,h}(x)\).

3.2 Convergence of the Nadaraya–Watson estimator

This subsection deals with the consistency and rate of convergence of the estimators.

The Nadaraya–Watson estimator \(\widehat{b}_{T,h}(x)\) defined by Eq. (2) can be decomposed as follows:

where \(\widehat{f}_{T,h}(x)\) is defined by (6),

and

By using the Lipschitz condition on b in Assumption 2.10 together with the results of Sect. 2, the estimators \( \widehat{b}_{T,h}(x)\text { and } \widehat{b}_{T,h,\varepsilon }(x) \) can be studied.

Proposition 3.4

Under Assumptions 2.10 and 3.1,

and there exists a positive constant C such that

As a consequence, for fixed \(h>0\), we have

Moreover, for \(\widehat{b}_{T,h, \varepsilon }\) defined by (3), \(\forall \varepsilon >0\),

Heuristically, Proposition 3.4 says that the pointwise quadratic risk of the kernel estimator \(\widehat{b}_{T,h}(x)\) involves a squared bias of order \(h^2\) and a variance term of order \(1/(h^4T^{2(1 - H)})\). Balancing these two terms, that is, taking \(h^6\) of order \(T^{-2(1 - H)}\), leads to a bandwidth of order \(T^{-\frac{1}{3} (1-H)}\) and to the best possible rate \(T^{-\frac{2}{3} (1-H)}\). A more rigorous formulation of this is stated below.
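The balancing argument can be checked with a few lines of code. This sketch sets all constants of the heuristic risk to 1 (an assumption, since Proposition 3.4 only gives orders of magnitude), derives the minimizer in closed form, and verifies the log-log slopes in T as well as the minimizer itself by brute force. With unit constants, the minimizer is \(h^* = 2^{1/6}T^{-\frac{1}{3}(1 - H)}\) and the minimal value is \(\frac{3}{2}(h^*)^2\).

```python
import numpy as np

def risk_proxy(h, T, H):
    """Heuristic risk with all constants set to 1: squared bias h^2 plus variance 1/(h^4 T^{2(1-H)})."""
    return h ** 2 + 1.0 / (h ** 4 * T ** (2.0 * (1.0 - H)))

def optimal_bandwidth(T, H):
    # d/dh risk_proxy = 2h - 4 / (h^5 T^{2(1-H)}) = 0  <=>  h^6 = 2 T^{-2(1-H)}.
    return 2.0 ** (1.0 / 6.0) * T ** (-(1.0 - H) / 3.0)

H = 0.7
Ts = np.array([1e2, 1e4, 1e6, 1e8])
h_opt = optimal_bandwidth(Ts, H)
# Log-log slopes in T: expected -(1 - H)/3 for the bandwidth and -2(1 - H)/3 for the risk.
slope_h = np.polyfit(np.log(Ts), np.log(h_opt), 1)[0]
slope_risk = np.polyfit(np.log(Ts), np.log(risk_proxy(h_opt, Ts, H)), 1)[0]

# Brute-force check of the minimizer for one horizon.
h_grid = np.linspace(0.01, 1.0, 100_000)
h_brute = h_grid[np.argmin(risk_proxy(h_grid, 1e4, H))]
print(slope_h, slope_risk, h_brute, optimal_bandwidth(1e4, H))
```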

Note also that it follows from (9) that \(\widehat{b}_{T,h,\varepsilon }(x)\) preserves the rate of \(\widehat{b}_{T,h}(x)\) for any sufficiently small \(\varepsilon \).

We emphasize that no order condition is imposed on the kernel, and that the bias term is not bounded in the usual way for kernel estimators (see e.g. Tsybakov (2004), Chapter 1). Indeed, the expectation of the numerator cannot be written as a convolution product, because the existence of a stationary density is not ensured. Even if such a density existed, it would be difficult to impose adequate regularity conditions on it.

Now, consider a decreasing function \(h : [t_0,\infty [\rightarrow ]0,1[\) (\(t_0\in \mathbb R_+\)) such that

and assume that \(\widehat{f}_{T,h(T)}(x)\) fulfills the following assumption.

Assumption 3.5

There exists \(l(x)\in ]0,\infty ]\) such that \(\widehat{f}_{T,h(T)}(x)\) converges to l(x) in probability as \(T\rightarrow \infty \).

Section 3.3 deals with the special case of fractional SDEs with Gaussian solutions in order to prove that Assumption 3.5 holds in this setting.

In Proposition 3.6, the result of Proposition 3.4 is extended to the estimator \(\widehat{b}_{T,h(T)}(x)\) under Assumption 3.5.

Proposition 3.6

Under Assumptions 2.10, 3.1 and 3.5:

(1) If there exists \(\beta \in ]0,1 - H[\) such that \(T^{-\beta } =_{T\rightarrow \infty } o(h(T)^2)\), then

$$\begin{aligned} \widehat{b}_{T,h(T)}(x) \xrightarrow [T\rightarrow \infty ]{\mathbb P} b(x). \end{aligned}$$

(2) For every \(\gamma \in ]0,\beta [\) such that

$$\begin{aligned} h(T) =_{T\rightarrow \infty } o(T^{-\gamma }) \text { and } T^{H - 1 +\gamma } =_{T\rightarrow \infty } o(h(T)^2), \end{aligned}$$

then

$$\begin{aligned} T^{\gamma }|\widehat{b}_{T,h(T)}(x) - b(x)| \xrightarrow [T\rightarrow \infty ]{\mathbb P} 0. \end{aligned}$$

Example

Consider \(h(T) := T^{-\frac{1}{3}(1 - H)}\) and \(\beta \in ]\frac{2}{3}(1 - H),1 - H[\). Then:

- \(Th(T) = T^{H +\frac{2}{3}(1 - H)}\xrightarrow [T\rightarrow \infty ]{}\infty \).
- \(T^{-\beta }/h(T)^2 = T^{-\beta +\frac{2}{3}(1 - H)}\xrightarrow [T\rightarrow \infty ]{} 0\).
- For every \(\gamma \in ]0,(1 - H)/3[\), \(h(T)/T^{-\gamma } = T^{\frac{H - 1}{3} +\gamma }\xrightarrow [T\rightarrow \infty ]{} 0\).
- For every \(\gamma \in ]0,(1 - H)/3[\), \(T^{H - 1 +\gamma }/h(T)^2 = T^{\frac{H - 1}{3} +\gamma }\xrightarrow [T\rightarrow \infty ]{} 0\).
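The four exponent computations of this example can be verified in exact rational arithmetic. This sketch assumes the bandwidth \(h(T) = T^{-\frac{1}{3}(1 - H)}\) suggested by the heuristic discussion after Proposition 3.4 and by the first bullet, and picks \(H = 3/4\), \(\beta = 1/5\) and \(\gamma = 1/20\) as sample values; the function name `example_exponents` is hypothetical.

```python
from fractions import Fraction

def example_exponents(H, beta, gamma):
    """Exponents of T in the four quantities of the Example, assuming h(T) = T^a with a = -(1 - H)/3."""
    a = -(1 - H) / 3
    return {
        "T h(T)": 1 + a,                                      # should be > 0
        "T^(-beta) / h(T)^2": -beta - 2 * a,                  # should be < 0
        "h(T) / T^(-gamma)": a + gamma,                       # should be < 0
        "T^(H - 1 + gamma) / h(T)^2": H - 1 + gamma - 2 * a,  # should be < 0
    }

H = Fraction(3, 4)
beta = Fraction(1, 5)      # inside ](2/3)(1 - H), 1 - H[ = ]1/6, 1/4[
gamma = Fraction(1, 20)    # inside ]0, (1 - H)/3[ = ]0, 1/12[
for name, exponent in example_exponents(H, beta, gamma).items():
    print(name, exponent)
```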

In Corollary 3.7, the result of Proposition 3.6 is extended to \(\widehat{b}_{T,h(T),\varepsilon (T)}(x)\) where

Corollary 3.7

Under Assumptions 2.10, 3.1 and 3.5:

(1) If there exists \(\beta \in ]0,1 - H[\) such that

$$\begin{aligned} T^{-\beta } =_{T\rightarrow \infty } o(h(T)^2) \text { and } \varepsilon (T) =_{T\rightarrow \infty } o(h(T)^{-2}T^{2H - 2}), \end{aligned}$$

then

$$\begin{aligned} \widehat{b}_{T,h(T),\varepsilon (T)}(x) \xrightarrow [T\rightarrow \infty ]{\mathbb P} b(x). \end{aligned}$$

(2) For every \(\gamma \in ]0,\beta [\) such that

$$\begin{aligned} \left\{ \begin{array}{l} h(T) =_{T\rightarrow \infty } o(T^{-\gamma }),\\ T^{H - 1 +\gamma } =_{T\rightarrow \infty } o(h(T)^2),\\ \varepsilon (T) =_{T\rightarrow \infty } o(h(T)^{-2}T^{2H - 2 +\gamma }) \end{array}\right. , \end{aligned}$$

then

$$\begin{aligned} T^{\gamma }|\widehat{b}_{T,h(T),\varepsilon (T)}(x) - b(x)| \xrightarrow [T\rightarrow \infty ]{\mathbb P} 0. \end{aligned}$$

Example

One can take \(\varepsilon (T) := h(T)^2\).

3.3 Special case of fractional SDE with Gaussian solution

The purpose of this subsection is to show that Assumption 3.5 holds when the drift function in Eq. (1) is linear with a negative slope. Note also that if \(H = 1/2\), then \(\widehat{f}_{T,h(T)}\) is a consistent estimator of the stationary density for Eq. (1) (see Kutoyants 2004, Section 4.2).

Assume that Eq. (1) has a centered Gaussian stationary solution X and consider the normalized process \(Y := X/\sigma _0\), where \(\sigma _0 :=\sqrt{\text {var}(X(0))}\).

Throughout this subsection, \(\nu \) is the standard normal density and the autocorrelation function \(\rho \) of Y fulfills the following assumption.

Assumption 3.8

\(\displaystyle {\int _{0}^{T}\int _{0}^{T} |\rho (v - u)|dvdu =_{T\rightarrow \infty } O(T^{2H})}\).

The following proposition ensures that under Assumption 3.8, \(\widehat{f}_{T,h(T)}\) fulfills Assumption 3.5 for every \(x\in \mathbb R^*\).

Proposition 3.9

Under Assumptions 2.10 and 3.1, if Eq. (1) has a centered, Gaussian, stationary solution X, the autocorrelation function \(\rho \) of \(Y := X/\sigma _0\) satisfies Assumption 3.8 and \(T^{2H - 2} =_{T\rightarrow \infty } o(h(T))\), then

for every \(x\in \mathbb R^*\).

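Now, consider the fractional Langevin equation

$$\begin{aligned} X(t) = x_0 -\lambda \int _{0}^{t} X(s)ds +\sigma B(t);\quad t\in \mathbb R_+, \end{aligned}\tag{11}$$

where \(\lambda ,\sigma > 0\). Equation (11) has a unique solution, called the fractional Ornstein–Uhlenbeck process.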

On the one hand, the drift function of Eq. (11) fulfills Assumption 2.10. So, under Assumption 3.1, by Proposition 3.4,

where

On the other hand, by Cheridito et al. (2003, Section 2), Eq. (11) has a centered, Gaussian, stationary solution X such that:

Moreover, by Cheridito et al. (2003, Theorem 2.3), the autocorrelation function \(\rho \) of \(Y := X/\sigma _0\) satisfies

So, \(\rho \) fulfills Assumption 3.8.
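This last step can be made explicit under a hedged reading of the cited asymptotics. Assume that there exists a constant \(C > 0\) such that \(|\rho (t)|\leqslant Ct^{2H - 2}\) for every \(t > 0\); such a bound follows from a decay of order \(t^{2H - 2}\) at infinity together with \(|\rho |\leqslant 1\) and the continuity of \(\rho \), since \(t^{2H - 2}\geqslant 1\) for \(t\in ]0,1]\). Since \(\rho \) is even,

$$\begin{aligned} \int _{0}^{T}\int _{0}^{T}|\rho (v - u)|dvdu &\leqslant C\int _{0}^{T}\int _{0}^{T}|v - u|^{2H - 2}dvdu = 2C\int _{0}^{T}\int _{0}^{u}(u - v)^{2H - 2}dvdu\\ &= \frac{2C}{2H - 1}\int _{0}^{T}u^{2H - 1}du = \frac{C}{H(2H - 1)}T^{2H}, \end{aligned}$$

which is the bound required by Assumption 3.8.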

Consider \(\beta \in ]0,2H - 1[\) and \(\gamma \in ]0,2H - 1 -\beta [\) such that

Then,

Therefore, by Propositions 3.6 and 3.9:

4 Proofs

4.1 Proof of Proposition 2.7

On the existence, uniqueness and regularity of the paths of the solution of Eq. (1), see Lejay (2010).

Now, let us prove (4).

Let \(X_x\) be the solution of Eq. (1) with initial condition \(x\in \mathbb R\). Consider also \(\varphi \in \text {Lip}_{b}^{1}(\mathbb R)\) and \(t > 0\). By Nualart (2006, Proposition 5.2.3):

Consider \(u,v\in [0,t]\). On the one hand,

Then,

On the other hand,

Then,

Therefore,

and

4.2 Proof of Corollary 2.8

Consider \(x\in \mathbb R\) and \(\varepsilon ,t > 0\). For every \(s\in [0,t]\),

and, by Taylor’s formula,

So, for every \((u,v)\in [0,t]^2\) such that \(v < u\),

and

For a given \(\varphi \in \text {Lip}_{b}^{1}(\mathbb R)\), by Proposition 2.7,

where

and, for every \((u,v)\in [0,t]^2\) such that \(v < u\),

Since \(b'(\mathbb R)\subset ]-\infty ,0]\) and b is twice continuously differentiable,

Consider \(s\in \mathbb R_+\). By Eq. (1):

By the mean-value theorem, there exists \(x_s\in \mathbb R\) such that

and then,

Therefore,

Finally, using the above bounds and then the integration by parts formula, we get:

4.3 Proof of Proposition 2.11

Consider \(\gamma \in ]1/2,H[\), \(\delta \in ]H -\gamma ,1 -\gamma [\) and \(\Omega :=\Omega _-\times \Omega _+\), where \(\Omega _-\) (resp. \(\Omega _+\)) is the completion of \(C_{0}^{\infty }(\mathbb R_-,\mathbb R)\) (resp. \(C_{0}^{\infty }(\mathbb R_+,\mathbb R)\)) with respect to the norm \(\Vert .\Vert _-\) (resp. \(\Vert .\Vert _+\)) defined by

(resp.

By Hairer (2005, Section 3) or, more explicitly, by Hairer and Ohashi (2007, Lemmas 4.1 and 4.2), there exist a Borel probability measure \(\mathbb P\) on \(\Omega \) and a transition kernel P from \(\Omega _-\) to \(\Omega _+\) such that:

- The process generated by \((\Omega ,\mathbb P)\) is a two-sided fractional Brownian motion \(\widetilde{B}\).
- For every Borel set U (resp. V) of \(\Omega _-\) (resp. \(\Omega _+\)),

$$\begin{aligned} \mathbb P(U\times V) = \int _U P(\omega _-,V)\mathbb P_-(d\omega _-), \end{aligned}$$

where \(\mathbb P_-\) is the probability distribution of \((\widetilde{B}(t))_{t\in \mathbb R_-}\).

Let \(I :\mathbb R\times \Omega _+\rightarrow C^0(\mathbb R_+,\mathbb R)\) be the Itô (solution) map for Eq. (1). In general, I(x, .) with \(x\in \mathbb R\) is not a Markov process. However, the solution of Eq. (1) can be coupled with the past of the driving signal in order to bypass this difficulty. In other words, consider the enhanced Itô map \(\mathfrak I :\mathbb R\times \Omega \rightarrow C^0(\mathbb R_+,\mathbb R\times \Omega _-)\) such that for every \((x,\omega _-,\omega _+)\in \mathbb R\times \Omega \) and \(t\in \mathbb R_+\),

where \(p_{\Omega _-}\) is the projection from \(\Omega \) onto \(\Omega _-\),

and \(\omega _-\sqcup \omega _+\) is the concatenation of \(\omega _-\) and \(\omega _+\). By Hairer (2005, Lemma 2.12), the process \(\mathfrak I(x,.)\) is Markovian and has a Feller transition semigroup \((Q(t))_{t\in \mathbb R_+}\) such that for every \(t\in \mathbb R_+\), \((x,\omega _-)\in \mathbb R\times \Omega _-\) and every Borel set U (resp. V) of \(\mathbb R\) (resp. \(\Omega _-\)),

where \(\delta _y\) is the Dirac measure at \(y\in \mathbb R\) and \(P(t;\omega _-,.)\) is the pushforward measure of \(P(\omega _-,.)\) by \(\theta (\omega _-,.)(t)\).

In order to prove Proposition 2.11, let us first state the following result from Hairer (2005) and Hairer and Ohashi (2007).

Theorem 4.1

Under Assumption 2.10:

(1) (Irreducibility) There exists \(\tau \in ]0,\infty [\) such that for every \((x,\omega _-)\in \mathbb R\times \Omega _-\) and every nonempty open set \(U\subset \mathbb R\),

$$\begin{aligned} Q(\tau ; (x,\omega _-),U\times \Omega _-) > 0. \end{aligned}$$

(2) There exists a unique probability measure \(\mu \) on \(\mathbb R\times \Omega _-\) such that \(\mu (p_{\Omega _-}\in \cdot ) =\mathbb P_-\) and

$$\begin{aligned} Q(t)\mu =\mu ;\quad \forall t\in \mathbb R_+. \end{aligned}$$

For a proof of Theorem 4.1.(1), see Hairer and Ohashi (2007, Proposition 5.8). For a proof of Theorem 4.1.(2), see Hairer (2005, Theorem 6.1) which is a consequence of Proposition 2.18, Lemma 2.20 and Proposition 3.12.

Since, by Theorem 4.1, the Feller transition semigroup Q has exactly one invariant measure \(\mu \), this measure is ergodic. Since, moreover, the first component of the process generated by Q is a solution of Eq. (1), the ergodic theorem for Markov processes yields:

Moreover, \(\mu = Q(\tau )\mu \). So,

Since

by Theorem 4.1.(1), it follows that

Therefore, \(\mu (\varphi \circ p_{\mathbb R}) > 0\).

4.4 Proof of Proposition 3.4

First write that, under Assumption 2.10, for any \(s\in [0,T]\) such that \(X(s)\in [x - h,x + h]\),

So,

Next, the following lemma provides a suitable control of \(\mathbb E(|S_{T,h}(x)|^2)\).

Lemma 4.2

Under Assumptions 2.10 and 3.1, there exists a deterministic constant \(C > 0\), not depending on h and T, such that:

Proof

Since K belongs to \(C_{b}^{1}(\mathbb R,\mathbb R_+)\), the map

belongs to \(\text {Lip}_{b}^{1}(\mathbb R)\). Moreover, since K and \(K'\) are continuous with bounded support \([-1,1]\),

and

Therefore, by Theorem 2.9, there exists a deterministic constant \(C > 0\), not depending on h and T, such that:

\(\square \)

First, by Inequality (12) and Eq. (7),

where \(V_{T,h}(x)\) is defined by (8).

Consider \(\beta \in [0,1 - H[\). By Lemma 4.2:

So,

Moreover, by Lemma 3.2:

Therefore, by Slutsky’s lemma:

Lastly, the bound (9) follows from the following lemma.

Lemma 4.3

Under Assumptions 2.10 and 3.1, there exists a deterministic constant \(C > 0\), not depending on \(\varepsilon \), h and T, such that:

Proof

Since K belongs to \(C_{b}^{1}(\mathbb R,\mathbb R_+)\), the map

belongs to \(\text {Lip}_{b}^{1}(\mathbb R)\). Consider

By Corollary 2.8:

where

Therefore,

\(\square \)

4.5 Proof of Proposition 3.6

On the one hand, assume that there exists \(\beta \in ]0,1 - H[\) such that

in order to show the consistency of the estimator \(\widehat{b}_{T,h(T)}(x)\). First, let us prove that

For \(\varepsilon > 0\) arbitrarily chosen:

By Lemma 4.2:

So, since

the convergence result (13) is true.

Moreover, by Inequality (12):

Therefore, by the convergence results (13) and (14) together with Eq. (7):

On the other hand, let \(\gamma \in ]0,\beta [\) be arbitrarily chosen such that

in order to show that

First, by Inequality (12) and Eq. (7):

By Lemma 4.2:

So, since

by Slutsky’s lemma:

Finally, since \(h(T) =_{T\rightarrow \infty } o(T^{-\gamma })\), by Eq. (16), the convergence result (15) is true.

4.6 Proof of Corollary 3.7

In order to establish a rate of convergence for \(\widehat{b}_{T,h,\varepsilon }(x)\), Lemmas 4.2 and 4.3 provide a suitable control.

Indeed, by Lemma 4.3, there exists a deterministic constant \(C > 0\) such that:

The conclusion then follows from Proposition 3.6.

4.7 Proof of Proposition 3.9

Consider a random variable U with distribution \(\mathcal N(0,1)\) and

which is a subset of \(L^2(\mathbb R,\nu (y)dy)\).

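The Hermite polynomials

$$\begin{aligned} H_q(y) := (-1)^qe^{y^2/2}\frac{d^q}{dy^q}\left( e^{-y^2/2}\right) ;\quad q\in \mathbb N,\ y\in \mathbb R \end{aligned}$$

form a complete orthogonal system of functions of \(L^2(\mathbb R,\nu (y)dy)\) such that

$$\begin{aligned} \int _{\mathbb R}H_q(y)H_p(y)\nu (y)dy = q!\delta _{p,q};\quad \forall p,q\in \mathbb N. \end{aligned}$$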
By Taqqu (1975) (see p. 291) and Puig et al. (2002, Lemma 3.3):

(1) For any \(G\in \mathcal G\) and \(y\in \mathbb R\),

$$\begin{aligned} G(y) =\sum _{q = m(G)}^{\infty }\frac{J(q)}{q!}H_q(y) \end{aligned}\tag{17}$$

in \(L^2(\mathbb R,\nu (y)dy)\), where

$$\begin{aligned} J(q) :=\mathbb E(G(U)H_q(U));\quad \forall q\in \mathbb N \end{aligned}$$

and

$$\begin{aligned} m(G) := \inf \{q\in \mathbb N : J(q)\not = 0\}. \end{aligned}$$

(2) (Mehler’s formula) For any centered, normalized and stationary Gaussian process Z with autocorrelation function R:

$$\begin{aligned} \mathbb E(H_q(Z(u))H_p(Z(v))) = q!R(v - u)^q\delta _{p,q};\quad \forall u,v\in \mathbb R_+ \text {, } \forall p,q\in \mathbb N. \end{aligned}\tag{18}$$
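Both facts can be checked numerically for small orders. The sketch below is an illustration, not part of the proof: it uses NumPy's probabilists' Hermite ("HermiteE") module, whose Gauss quadrature is exact for the polynomial integrands involved, to verify the orthogonality relation \(\mathbb E(H_q(U)H_p(U)) = q!\delta _{p,q}\) and Mehler's formula for a pair of standard Gaussian variables with correlation \(R = 0.3\). The helper names are hypothetical.

```python
import numpy as np
from math import factorial, pi, sqrt
from numpy.polynomial.hermite_e import hermegauss, hermeval

def hermite(q, y):
    """Probabilists' Hermite polynomial H_q(y), i.e. the HermiteE basis polynomial of degree q."""
    return hermeval(y, [0.0] * q + [1.0])

nodes, weights = hermegauss(40)     # exact for polynomials of degree <= 79 against exp(-y^2/2)
weights = weights / sqrt(2.0 * pi)  # sum(weights * f(nodes)) now equals E f(U), U ~ N(0, 1), for such polynomials

def expectation(f):
    return float(np.sum(weights * f(nodes)))

def mehler_lhs(q, p, R):
    """E(H_q(Z_1) H_p(Z_2)) for standard Gaussians with correlation R, by tensor Gauss quadrature."""
    u1, u2 = np.meshgrid(nodes, nodes, indexing="ij")
    w = np.outer(weights, weights)
    z1, z2 = u1, R * u1 + np.sqrt(1.0 - R ** 2) * u2
    return float(np.sum(w * hermite(q, z1) * hermite(p, z2)))

R = 0.3
orth_err = max(abs(expectation(lambda y: hermite(q, y) * hermite(p, y)) - (factorial(q) if p == q else 0.0))
               for q in range(6) for p in range(6))
mehler_err = max(abs(mehler_lhs(q, p, R) - (factorial(q) * R ** q if p == q else 0.0))
                 for q in range(6) for p in range(6))
print(orth_err, mehler_err)   # both at round-off level
```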

Consider the map \(K_T :\mathbb R\rightarrow \mathbb R\) defined by:

In order to use (17) and (18) to prove the convergence result (10), note that \(\widehat{f}_{T,h(T)}(x)\) can be rewritten as

where

and

Lemma 4.4

The map \(G_{T,x}\) belongs to \(\mathcal G\) and there exists \(T_x > 0\) such that

Proof

On the one hand, since \(K_T\) is continuous and its support is compact, \(G_{T,x}\in L^2(\mathbb R,\nu (y)dy)\). Moreover,

So, \(G_{T,x}\in \mathcal G\).

On the other hand, for every \(q\in \mathbb N\), by putting \(J_{T,x}(q) :=\mathbb E(G_{T,x}(U)H_q(U))\),

For any \(x > 0\), there exists \(T_{x}^{+} > 0\) such that for every \(T > T_{x}^{+}\),

For every \(T > T_{x}^{+}\), since \(y\mapsto K_T(\sigma _0y - x)\), \(\nu \) and \(\text {Id}_{\mathbb R}\) are continuous and strictly positive on \(I_{T,x}^{\circ }\), \(J_{T,x}(1) > 0\). Symmetrically, for every \(x < 0\), there exists \(T_{x}^{-} > 0\) such that for every \(T > T_{x}^{-}\), \(J_{T,x}(1) < 0\). This concludes the proof. \(\square \)

Lemma 4.5

For every \(x\in \mathbb R^*\),

Proof

Since \(G_{T,x}\in L^2(\mathbb R,\nu (y)dy)\), by Parseval’s inequality:

On the one hand,

So,

On the other hand,

Therefore,

\(\square \)

In order to prove the convergence result (10), since

let us prove that

By the decomposition (17) and Mehler’s formula (18) applied to \(G_{T,x}\) and Y, for every \(u,v\in [0,T]\),

So, since \(\rho \) is a \([-1,1]\)-valued function,

Then, by Assumption 3.8 and Lemma 4.5:

Therefore, the convergence result (19) holds.
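The last step combines (17) and (18). Assuming, for this illustration only, that Y is a centered, unit-variance stationary Gaussian process with autocorrelation function \(\rho \), expanding both factors with (17) and applying (18) term by term gives \(\mathbb E(G(Y(u))G(Y(v))) = \sum _{q\geqslant m(G)}\frac{J(q)^2}{q!}\rho (v - u)^q\); since \(\rho \) takes values in \([-1,1]\), this is bounded in absolute value by \(|\rho (v - u)|^{m(G)}\sum _{q}J(q)^2/q!\), a bound of the kind the argument above relies on. The Python sketch below, which is not taken from the paper, checks the series against a Monte Carlo estimate and against the closed form \(e^{-1}(\cosh (r) - 1)\) for the hypothetical map \(G(y) = \cos (y) - e^{-1/2}\) (Hermite rank 2), with a correlation r standing in for \(\rho (v - u)\).

```python
import math

import numpy as np
from numpy.polynomial import hermite_e as He


def hermite_coefficients(G, q_max, n_nodes=100):
    """J(q) = E(G(U) H_q(U)), U ~ N(0, 1), q = 0..q_max, via Gauss-HermiteE quadrature."""
    nodes, weights = He.hermegauss(n_nodes)
    weights = weights / math.sqrt(2.0 * math.pi)  # exp(-y^2/2) weights -> N(0, 1) expectation
    g = G(nodes)
    return np.array([np.sum(weights * g * He.hermeval(nodes, [0.0] * q + [1.0]))
                     for q in range(q_max + 1)])


def covariance_series(G, r, q_max=20):
    """Series sum_q J(q)^2 / q! * r^q obtained from (17) and Mehler's formula (18)."""
    J = hermite_coefficients(G, q_max)
    return sum(J[q] ** 2 / math.factorial(q) * r ** q for q in range(q_max + 1))


def covariance_mc(G, r, n=400_000, seed=1):
    """Monte Carlo estimate of E(G(Y(u)) G(Y(v))) when Y(u), Y(v) are N(0, 1) with correlation r."""
    rng = np.random.default_rng(seed)
    y_u = rng.standard_normal(n)
    y_v = r * y_u + math.sqrt(1.0 - r * r) * rng.standard_normal(n)
    return float(np.mean(G(y_u) * G(y_v)))


def G(y):
    """Hypothetical centered map with Hermite rank m(G) = 2: cos(y) - exp(-1/2)."""
    return np.cos(y) - math.exp(-0.5)


r = 0.6  # plays the role of rho(v - u), a value in [-1, 1]
series = covariance_series(G, r)
second_moment = covariance_series(G, 1.0)  # r = 1 gives sum_q J(q)^2 / q! = E(G(U)^2)
print(series, covariance_mc(G, r), math.exp(-1.0) * (math.cosh(r) - 1.0))
# Since |r| <= 1 and J(q) = 0 for q < m(G) = 2, |series| <= |r|^2 * E(G(U)^2).
print(abs(series) <= r ** 2 * second_moment)
```

The closed form follows from \(\cos (a)\cos (b) = (\cos (a - b) + \cos (a + b))/2\) and \(\mathbb E(\cos (V)) = e^{-\text {Var}(V)/2}\) for a centered Gaussian V; the higher the Hermite rank, the faster the covariance decays with \(|\rho |\).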

References

Bajja S, Es-Sebaiy K, Viitasaari L (2017) Least square estimator of fractional Ornstein–Uhlenbeck processes with periodic mean. J Korean Stat Soc 46(4):608–622

Bosq D (1996) Nonparametric statistics for stochastic processes: estimation and prediction. Springer, Berlin

Cheridito P, Kawaguchi H, Maejima M (2003) Fractional Ornstein–Uhlenbeck processes. Electron J Probab 8(3):1–14

Chronopoulou A, Tindel S (2013) On inference for fractional differential equations. Stat Inference Stoch Process 16(1):29–61

Friz P, Hairer M (2014) A course on rough paths. Springer, Berlin

Friz P, Victoir N (2010) Multidimensional stochastic processes as rough paths: theory and applications. Cambridge studies in advanced mathematics, vol 120. Cambridge University Press, Cambridge

Hairer M (2005) Ergodicity of stochastic differential equations driven by fractional Brownian motion. Ann Probab 33(3):703–758

Hairer M, Ohashi A (2007) Ergodic theory for SDEs with extrinsic memory. Ann Probab 35(5):1950–1977

Hairer M, Pillai NS (2013) Ergodicity of hypoelliptic SDEs driven by fractional Brownian motion. Annales de l’IHP 47(4):2544–2598

Hu Y, Nualart D (2010) Parameter estimation for fractional Ornstein–Uhlenbeck processes. Stat Probab Lett 80:1030–1038

Hu Y, Nualart D, Zhou H (2018) Drift parameter estimation for nonlinear stochastic differential equations driven by fractional Brownian motion. arXiv:1803.01032v1

Kleptsyna ML, Le Breton A (2001) Some explicit statistical results about elementary fractional type models. Nonlinear Anal 47:4783–4794

Kubilius K, Skorniakov V (2016) On some estimators of the Hurst index of the solution of SDE driven by a fractional Brownian motion. Stat Probab Lett 109:159–167

Kutoyants Y (2004) Statistical inference for ergodic diffusion processes. Springer, Berlin

Lejay A (2010) Controlled differential equations as Young integrals: a simple approach. J Differ Equ 249:1777–1798

Lindgren G (2006) Lectures on stationary stochastic processes. PhD course, Lund University

Mishra MN, Prakasa Rao BLS (2011) Nonparametric estimation of trend for stochastic differential equations driven by fractional Brownian motion. Stat Inference Stoch Process 14(2):101–109

Mishura Y, Ralchenko K (2014) On drift parameter estimation in models with fractional Brownian motion by discrete observations. Austrian J Stat 43(3–4):217–228

Neuenkirch A, Tindel S (2014) A least square-type procedure for parameter estimation in stochastic differential equations with additive fractional noise. Stat Inference Stoch Process 17(1):99–120

Nualart D (2006) The Malliavin calculus and related topics. Springer, Berlin

Puig B, Poirion F, Soize C (2002) Non-Gaussian simulation using Hermite polynomial expansion: convergences and algorithms. Probab Eng Mech 17:253–264

Revuz D, Yor M (1999) Continuous martingales and Brownian motion. Grundlehren der mathematischen Wissenschaften, vol 293, 3rd edn. Springer, Berlin

Saussereau B (2014) Nonparametric inference for fractional diffusion. Bernoulli 20(2):878–918

Taqqu MS (1975) Weak convergence to fractional Brownian motion and to the Rosenblatt process. Z Wahrscheinlichkeitstheorie verw Gebiete 31:287–302

Tsybakov AB (2009) Introduction to nonparametric estimation. Revised and extended from the 2004 French original. Translated by Vladimir Zaiats. Springer Series in Statistics. Springer, New York

Tudor CA, Viens F (2007) Statistical aspects of the fractional stochastic calculus. Ann Stat 35(3):1183–1212

Tudor CA, Viens F (2009) Variations and estimators for self-similarity parameters via Malliavin calculus. Ann Probab 37(6):2093–2134


Cite this article

Comte, F., Marie, N. Nonparametric estimation in fractional SDE. Stat Inference Stoch Process 22, 359–382 (2019). https://doi.org/10.1007/s11203-019-09196-y
