Abstract

This study investigates the link between basic math skills, remediation, and the educational opportunity and outcomes of community college students. Capitalizing on a unique placement policy in one community college that assigns students to remedial coursework based on multiple math skill cutoffs, I first identify the skills that most commonly inhibit student access to higher-level math courses; these are procedural fluency with fractions and the ability to solve word problems. I then estimate the impact of “just missing” these skill cutoffs using a multiple rating-score regression discontinuity design. Missing just one fractions question on the placement diagnostic, and therefore starting college in a lower-level math course, had negative effects on college persistence and attainment. Missing other skill cutoffs did not have the same impacts. The findings suggest the need to reconsider the specific math expectations that regulate access to college math coursework.

Introduction

There is no question that mathematics plays a central role in shaping college access and opportunities. Not only are math skills and math preparation paramount in the college admissions process (Hacker 2015; Lee 2012), they inform course-taking decisions and can influence students’ academic trajectories in college, for example, away from or towards science, technology, engineering, and mathematics (STEM) fields (Wang 2013). Further, over 90% of postsecondary institutions cite quantitative reasoning as a learning outcome and hold mathematics as a general education requirement for their undergraduates (American Association of Colleges and Universities 2016), cementing mathematics’ status as a gatekeeper in higher education.

Despite the importance of mathematics in higher education, there is surprisingly little evidence on how students’ specific math skills determine college access and opportunity. Skills in advanced algebra and calculus are known to be significant gatekeepers to STEM fields, but how do expectations related to more basic math skills affect college access and outcomes? This inquiry is perhaps most crucial in the context of postsecondary math remediation, which is predicated on the assumption that certain math skills and knowledge, usually up to the intermediate algebra level, are essential for success in college (Burdman 2015). In the community college system, the context for the present study, students entering college without demonstrating proficiency and procedural fluency in basic math topics such as fractions, decimals, and exponents, are typically directed towards developmental courses at the basic math, pre-algebra, or elementary algebra levels (Melguizo et al. 2014). It is estimated that as many as 60% of community college students take remedial math courses in college (Chen 2016). Although these courses are designed to equip students with the skills and knowledge thought to be necessary for success in college, they can also extend time to degree (Ngo and Kosiewicz 2017), divert students from credit-bearing pathways (Martorell and McFarlin 2011; Scott-Clayton and Rodriguez 2015), and significantly decrease the odds of degree completion or transfer to a 4-year university (Bailey et al. 2010; Fong et al. 2015).

The focus of this study is on investigating the link between basic math skills, remediation, and the educational opportunity and outcomes of community college students. I capitalize on skill-specific information gleaned from diagnostic instruments in one large community college in California and a unique policy context in which these data are used to determine how remediation based on basic math skills impacts college outcomes. The data allow me to first identify the skill gaps that most commonly inhibit access to higher-level courses, such as procedural fluency with fractions, solving algebraic equations, and answering word problems. The placement policy in the college, which consists of multiple math skill cutoffs, provides an opportunity to use regression discontinuity (RD) design to examine the impact of remedial placement on student success. I use two forms of multiple rating-score RD—binding-score RD and frontier-score RD—to determine how “just missing” these skill cutoffs and subsequently placing in lower-level courses affected the academic outcomes of community college students.

Although the study is conducted using data from one college and therefore has limited external validity, the findings nevertheless provide unique insight into how institutional expectations related to basic math skills may affect community college students. Several studies of developmental math education have used RD designs and provided evidence on the general impact of postsecondary math remediation on student outcomes [see Valentine et al. (2017) for a review], but none have provided deeper insight into the specific expectations of math proficiency that establish these relationships. This study, by focusing on a distinctive placement policy and diagnostic testing data from one college, contributes new evidence to the literature on the relevance of math for college success. For example, examinations of placement cutoffs and score distributions reveal that proficiency with fractions is the gatekeeper skill for lower-level developmental math (e.g., pre-algebra and elementary algebra), and correctly solving algebra-based word problems is the gatekeeper skill for upper-level developmental math (e.g., intermediate algebra). The corresponding RD analyses show that students who barely missed the fractions cutoff and who were subsequently assigned to lower-level math courses were significantly less likely to attempt and pass required math courses, persist towards degree completion, and earn college credits. This demonstrates that certain math expectations, such as procedural fluency with fractions, keep students from accessing higher-level math and the more efficient pathways to degree completion therein and may therefore need reconsideration.

I next discuss literature examining basic math skills and remediation in community colleges. I then describe the data and methods used in the study, focusing on the respective contributions of the binding-score RD and frontier-score RD analyses I employ. I present the descriptive and quasi-experimental results, and in the discussion, describe ways to re-consider the expectations of math proficiency that affect the college opportunity of community college students.

Basic Math Skills and Postsecondary Remediation

Roughly 30% of students in nonselective 4-year colleges and 60% of students in community colleges take remedial math courses in college (Chen 2016). Although these courses are designed to equip students with the skills thought to be necessary for success in college-level math and math-intensive courses, research that has investigated the impact of remedial/developmental math offerings in community colleges raises concerns about its effectiveness. A meta-analytic review of studies using regression discontinuity designs to examine developmental education revealed mostly null or negative effects of developmental math placement on passing a college-level math course, credit completion, and college attainment (Valentine et al. 2017). Another study of one large community college system found that students assigned to math remediation did not necessarily develop math skills but rather were diverted away from credit-bearing pathways (Scott-Clayton and Rodriguez 2015). Studies that have examined whether these effects vary by level of remediation also report negative effects of initial placement into lower levels of developmental math sequences (Boatman and Long 2018; Xu and Dadgar 2018).

There are a number of possible explanations for these findings, such as the high cost of remediation to students (Melguizo et al. 2008), or the largely traditional and uninspiring nature of curriculum and instruction in developmental math courses (Grubb 1999) being deterrents to persistence. One explanation most relevant to the present study is that students may be incorrectly placed into developmental courses. Research has demonstrated that a significant portion of students—as many as one-quarter in some community college systems—may have been erroneously placed into their math courses following placement testing, most typically into courses that are too easy (Scott-Clayton et al. 2014). Importantly, these misplaced students could have succeeded in higher-level courses and avoided the costs associated with developmental education had they been given the opportunity to do so. Concern about placement testing error has prompted researchers to examine alternatives to placement testing (e.g., Ngo and Melguizo 2016; Scott-Clayton et al. 2014). It has also provoked policy change, with a number of states either now using multiple measures for course placement (e.g., California; Texas) or removing placement testing requirements altogether (e.g., Florida) (Park et al. 2018).

The focus on issues with placement testing raises related concerns about what placement tests in developmental education actually measure. In a study of community college students’ diagnostic test scores and performance on a survey with reasoning tasks in a set of California community colleges, Stigler et al. (2010) found that the vast majority of students in their sample incorrectly answered test questions related to operations with fractions. Their analysis of student answers revealed that poor testing results were largely due to faulty procedural knowledge. However, upon examining student responses to open-ended problems they developed, the researchers also found that, when prompted, students displayed reasoning skills and offered correct conceptual explanations. They concluded that a key limitation of the current placement testing system is that it seldom provides opportunities to reason mathematically or allows students to demonstrate conceptual understanding. Instead, “the goal of much of developmental math education appears to be to get students to try harder to remember the rules, procedures, and notations they’ve repeatedly been taught” (Givvin et al. 2011, p. 13).

Taken together, these findings suggest that expectations of math proficiency, manifested in placement testing criteria, may unfairly and unnecessarily sort students to remedial coursework. As such, the goals of this study are to investigate how specific expectations of math proficiency, particularly those pertaining to procedural fluency with basic math topics, affect the college opportunity and outcomes of students in postsecondary math remediation. This is an important research area because some critics have voiced concern about the math proficiencies expected of students pursuing postsecondary education, questioning whether the skills that are commonly associated with college-readiness are actually necessary for success in college and the job market (e.g., Bryk and Treisman 2010; Hacker 2015). In addition, higher education scholarship has drawn attention to the “institutionalized disciplinary assumptions” and “disciplinary logics” within each field of study that inform admissions, evaluation, and perceptions of quality (Posselt 2015). This provides a framework for understanding how expectations of math skills affect placement testing practices and facilitate the gatekeeping role of mathematics.

Ultimately, examining how expectations of math proficiency and procedural fluency are related to subsequent college outcomes can provide greater insight into how math standards play a role in shaping students’ academic trajectories and opportunities, and in perpetuating inequality in higher education at large. This is particularly important in community colleges, since low-income students and students of color are more likely to attend these institutions and are more likely to be enrolled in math remediation upon matriculation (Attewell et al. 2006; Bailey et al. 2010). Given that community colleges are a primary postsecondary pathway for these students, a key goal of the study is to examine both the short- and long-term impact of basic math remediation on community college students’ outcomes. The research can be summarized by the following questions:

1. What basic math skills function as “gatekeepers” and limit access to higher-level courses?
2. How does remedial assignment based on these basic math skills affect college outcomes?

Setting and Data

I answer these questions using student-level administrative records from a large urban community college in California. “College M” serves roughly 25,000 full-time equivalent students each year. This includes a high fraction of Hispanic and Asian/Pacific Islander students, who comprise 76 and 17% of all students, respectively. For reference, about 44% of all California community college students identify as Hispanic and about 15% identify as Asian/Pacific Islander (CCCCO, n.d.). Thirty-seven percent of College M students indicated they were non-native English speakers, and a sizeable portion, 23%, indicated they were either permanent residents or had other visa status. According to the California Community Colleges Student Success Scorecard, College M’s 6-year degree, certificate, or transfer completion rate was just over 40% for the 2011 cohort.

College M, and California community colleges in general, are particularly relevant contexts for examining placement policies because in addition to serving roughly one in ten community college students in the U.S., they are in a state where community colleges have considerable autonomy over choice of placement test and design of placement policies (Melguizo et al. 2014). Like most other California community colleges, College M’s developmental math sequence progresses from basic math/arithmetic to pre-algebra to elementary algebra. The next course in the sequence, intermediate algebra, is a pre-requisite for courses required for transfer to a 4-year institution.

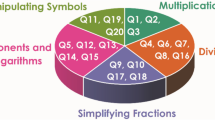

College M was chosen because it used the Mathematics Diagnostic Testing Project (MDTP) to assess and place students into this sequence during the study period 2005–2011. The MDTP is a diagnostic test developed by the California State University and University of California systems to assess mathematics readiness at the secondary and postsecondary levels. The MDTP includes four subtests: Algebra readiness, early/elementary algebra, intermediate algebra, and college-level mathematics/pre-calculus, each of which reports student skill level on several subtopics (MDTP, n.d.). For example, the MDTP Algebra Readiness subtest emphasizes arithmetic operations with integers, decimals, and fractions. The MDTP Elementary Algebra subtest disaggregates scores for linear functions, polynomials, graphing, and other algebra topics. This is inherently different from the commonly-used ACCUPLACER or COMPASS placement tests, which automatically adapt to students’ skill level based on the sequence of correct and incorrect answers a student provides, resulting in a single final score (Mattern and Packman 2009). It is important to note that there is some aspect of choice with the MDTP; either the testing staff or students choose which MDTP subtest students take first, and students can be referred to other subtests depending on their scores and local college policy. Fong and Melguizo (2017) have explored the implications of this test choice, finding in some cases that it limits the course levels to which students have access.

Nevertheless, the focus of this study is on the math diagnostic data available in the MDTP and the fact that College M used this wealth of student math skill information in a unique way—basing placement on multiple cutoff scores. The policy is illustrated in Table 1. To be placed into elementary algebra, a student must not only have a composite test score of at least 24, she must also have at least a score of 7 on the integers subsection, a 6 on the fractions subsection, and a 6 on the decimals subsection. If the student fails to meet any one of these four cut score thresholds, she will be placed into a lower level course, either pre-algebra or arithmetic. For example, Table 1 also shows the hypothetical placements of three students. Student A passed all cutoffs and was assigned to elementary algebra. Student B had the same total score but was short on the integers cutoff and was therefore assigned to pre-algebra. Even though Student C had the highest total score, the low fractions score resulted in a basic math placement. As I will discuss below, this set of multiple cutoffs makes it possible to use a regression discontinuity design to determine the impact of remediation under such a placement system. Importantly, I can estimate the impact of remediation by various math skill cutoffs.
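The multiple-cutoff rule for elementary algebra can be sketched in a few lines of Python. This is a minimal illustration that uses only the four elementary algebra cutoffs stated above; the function names and the two example students are hypothetical and are not the students shown in Table 1.

```python
# Elementary algebra cutoffs as stated in the text (from Table 1).
ELEMENTARY_ALGEBRA_CUTOFFS = {
    "composite": 24,
    "integers": 7,
    "fractions": 6,
    "decimals": 6,
}


def missed_cutoffs(scores, cutoffs=ELEMENTARY_ALGEBRA_CUTOFFS):
    """Return the names of all cutoffs the student falls below."""
    return [name for name, cut in cutoffs.items() if scores[name] < cut]


def qualifies_for_elementary_algebra(scores):
    """A student qualifies only if every one of the four cutoffs is met."""
    return not missed_cutoffs(scores)


# Hypothetical students for illustration only.
student_x = {"composite": 26, "integers": 8, "fractions": 6, "decimals": 7}
student_y = {"composite": 28, "integers": 9, "fractions": 5, "decimals": 8}

print(qualifies_for_elementary_algebra(student_x))
print(missed_cutoffs(student_y))
```

The second hypothetical student has the higher composite score but is held back by a single subscore, which is the pattern the placement policy can produce.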

Table 2 presents summary statistics for the focal sample of students in College M, disaggregated by placement level. The analytical sample includes student cohorts assessed for math for the first time between the spring 2005 and the fall 2011 semesters who were not concurrently enrolled in high school, had not already received an associate’s or bachelor’s degree at the time of testing, and were not over the age of 65. This includes 18,330 students taking the MDTP Algebra Readiness subtest for placement into either basic math, pre-algebra, or elementary algebra, and 5638 students taking the Elementary Algebra subtest for placement into elementary algebra or intermediate algebra.

Enrollment data extend to the spring semester of 2013, so I am able to track persistence and course completion outcomes for 8 years for the spring 2005 cohort, 7 years for the 2006 cohort, and so forth. The outcomes of interest are passing the placed math course with a C or better or a B or better (within 1 year of assessment date), and completing 30 and 60 degree-applicable units, which are indicators of progress towards an associate’s degree (60 credits are required). Each of these is a dummy indicator with success equal to 1 and non-success equal to 0. I also examine students’ total credit accumulation, an indicator of attainment.

Table 2 also shows the mean MDTP test scores (i.e., percent correct) within each skill area for incoming students between 2005 and 2011. Consistently, the lowest means are in the areas of fractions and geometry for MDTP Algebra Readiness test-takers and in quadratics and word problems for MDTP Elementary Algebra test-takers.

Methods

The detailed diagnostic data allow me to first examine the specific math skills of incoming students, as assessed via the MDTP instruments. These data in conjunction with the context of multiple placement cutoffs enable me to determine “gatekeeping” skills, in other words, those skills that most frequently led to lower-level course placements. I uncover these gatekeeping skills by focusing on the subset of “one-off” students who missed cutoffs by just one point.
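A rough sketch of the one-off tabulation follows. It assumes that a “one-off” student is one whose largest shortfall on any cutoff is exactly one point, and that a student who is one point short on two cutoffs counts towards both; these operational details, the function names, and the example scores are assumptions for illustration rather than the study's exact procedure.

```python
from collections import Counter


def one_off_skills(scores, cutoffs):
    """Cutoffs missed by exactly one point, if no cutoff is missed by more."""
    deviations = {name: scores[name] - cutoffs[name] for name in cutoffs}
    if min(deviations.values()) != -1:
        return []  # passed everything, or missed some cutoff by 2+ points
    return [name for name, dev in deviations.items() if dev == -1]


def tabulate_one_offs(students, cutoffs):
    """Share of one-off students attributable to each cutoff."""
    counts = Counter()
    n_one_off = 0
    for scores in students:
        skills = one_off_skills(scores, cutoffs)
        if skills:
            n_one_off += 1
            counts.update(skills)
    return {name: counts[name] / n_one_off for name in counts} if n_one_off else {}


# Hypothetical scores, using the elementary algebra cutoffs stated in the text.
cutoffs = {"composite": 24, "integers": 7, "fractions": 6, "decimals": 6}
students = [
    {"composite": 25, "integers": 7, "fractions": 5, "decimals": 6},
    {"composite": 24, "integers": 7, "fractions": 6, "decimals": 5},
    {"composite": 30, "integers": 8, "fractions": 5, "decimals": 7},
    {"composite": 20, "integers": 7, "fractions": 6, "decimals": 6},
    {"composite": 26, "integers": 7, "fractions": 7, "decimals": 7},
]
shares = tabulate_one_offs(students, cutoffs)
print(shares)
```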

Multiple Rating-Score Regression Discontinuity Design

I then estimate the impact of missing these skill-specific cutoffs on academic outcomes using a regression discontinuity (RD) design. The “treatment” is being placed into the lower-level math course at any given placement cutoff. The comparison control condition is placement in the higher-level math course. Provided that there is local continuity in observed and unobserved characteristics at the cutoff threshold, then differences in outcomes between groups at the margin of the cutoff can be directly attributed to treatment status (Imbens and Lemieux 2008), which in this case is assignment to the lower-level developmental math course.

A requirement of the RD design is that an exogenously determined running variable, such as a placement test score, assigns individuals to the treatment and control conditions (Murnane and Willett 2010). However, the lack of a single continuous placement test score in College M, where a diagnostic and several cutoffs are used, posed a problem for traditional RD analysis. As described in Table 1, the total score is used in conjunction with sub-scores to make the placement determination. Therefore, placement in the treatment condition can be determined by more than one assignment variable. Students taking the MDTP Algebra Readiness test may be placed into a course due to missing the integers cutoff, fractions cutoff, or decimals cutoff, or by not having a high enough composite score.

Assignment based on multiple criteria is fairly prevalent in education. For example, Robinson (2011) described the case of English language learner reclassification, which is typically based on students meeting multiple English proficiency criteria. A number of studies have examined policies where both math and ELA test scores are used to determine participation in various educational interventions, such as summer school and grade retention (Jacob and Lefgren 2004; Mariano and Martorell 2013). Multiple-criteria designs have also been used to study high school exit exams, where several criteria must be met in order to graduate (Papay et al. 2011).

There are several approaches outlined in the literature to estimate treatment effects in a multiple rating-score regression discontinuity design (MRRDD) where several scores are used to determine treatment assignment (Papay et al. 2011; Reardon and Robinson 2012; Porter et al. 2017; Wong et al. 2013). These include using a binding-score approach and a frontier-score approach, which are the recommended estimation strategies for real-world applications of MRRDD (Porter et al. 2017). I employ these two techniques to identify the overall treatment effect as well as cutoff-specific effects.

Binding-Score RD

The binding-score RD converts the multi-dimensional context to a single dimension, estimating the treatment effect based on the scores that resulted in the treatment assignment. To determine the binding score for students in College M, I first calculated the raw deviation from each cutoff, that is, the number of questions by which the student fell short of or surpassed the cutoff. I then selected the minimum of this set of cutoff deviations as the assignment variable. The binding score \(B_{i}\) for MDTP AR test-takers is notated here as:

\[ B_{i} = \min\left( \text{Composite}_{i} - c^{\text{comp}},\; \text{Integers}_{i} - c^{\text{int}},\; \text{Fractions}_{i} - c^{\text{frac}},\; \text{Decimals}_{i} - c^{\text{dec}} \right) \tag{1} \]

where each \(c\) denotes the cutoff score for the corresponding composite score or subscore.

In this context, the binding score describes how “far” the student was from the multidimensional cutoff. Students with any negative deviation should be referred downwards to a lower-level course, while students at zero or with a positive deviation should be referred upward to the higher-level course. Thus, this transformation of multiple scores into a single binding score perfectly predicts treatment assignment (Reardon and Robinson 2012). A single-score RD approach with \(B_{i}\) as the assignment variable, as outlined in Eq. (2), can be used to estimate the causal effects of placement in remedial coursework.

\[ Y_{i} = \beta_{0} + \beta_{1} T_{i} + \beta_{2} B_{i} + \varepsilon_{i} \tag{2} \]

Here \(i\) denotes each student-level observation. \(Y_{i}\) are the outcomes of interest, \(\beta_{1}\) is the estimated treatment effect (\(T_{i}\)) of placement into the lower-level course, and \(\beta_{2}\) describes the relationship between the binding-score and student outcomes. This is commonly referred to as a “sharp” RD, in which a student’s test score is assumed to perfectly predict treatment within an OLS setting (Murnane and Willett 2010). The resulting estimate can be interpreted as the Intent to Treat (ITT) estimate, which in this context is the effect of assignment to the lower-level math course relative to the higher-level math course at the margin of the cutoff.
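The binding-score construction and the sharp RD regression described above can be sketched together in plain Python. This is a minimal illustration under stated assumptions: the function names, the symmetric bandwidth rule, and the noise-free simulated data are all hypothetical, and the sketch omits the standard errors that an actual analysis would report.

```python
def binding_score(scores, cutoffs):
    """Smallest deviation from any cutoff; negative if any cutoff is missed."""
    return min(scores[name] - cutoffs[name] for name in cutoffs)


def ols(rows, y):
    """Least-squares coefficients from the normal equations (Gauss-Jordan)."""
    k = len(rows[0])
    # Augmented matrix [X'X | X'y].
    a = [
        [sum(r[i] * r[j] for r in rows) for j in range(k)]
        + [sum(r[i] * yi for r, yi in zip(rows, y))]
        for i in range(k)
    ]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        p = a[col][col]
        a[col] = [v / p for v in a[col]]
        for r in range(k):
            if r != col:
                f = a[r][col]
                a[r] = [vr - f * vc for vr, vc in zip(a[r], a[col])]
    return [a[i][k] for i in range(k)]


def sharp_rd(binding_scores, outcomes, bandwidth):
    """Fit Y = b0 + b1*T + b2*B on observations with |B| <= bandwidth."""
    rows, ys = [], []
    for b, y in zip(binding_scores, outcomes):
        if abs(b) <= bandwidth:
            treated = 1.0 if b < 0 else 0.0  # below any cutoff -> lower course
            rows.append([1.0, treated, float(b)])
            ys.append(y)
    b0, b1, b2 = ols(rows, ys)
    return {"intercept": b0, "treatment": b1, "slope": b2}


# Binding score for a hypothetical student under the stated cutoffs.
cutoffs = {"composite": 24, "integers": 7, "fractions": 6, "decimals": 6}
example = {"composite": 28, "integers": 9, "fractions": 5, "decimals": 8}
print(binding_score(example, cutoffs))

# Noise-free simulated outcomes with a known treatment effect of -0.1.
sim_b = [-3, -2, -1, 0, 1, 2, 3] * 2
sim_y = [0.5 - 0.1 * (b < 0) + 0.02 * b for b in sim_b]
estimate = sharp_rd(sim_b, sim_y, bandwidth=3)
print(estimate["treatment"])
```

Because the simulated outcomes contain no noise, the fitted treatment coefficient should match the value built into the simulation up to floating-point error.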

I assess sensitivity to functional form by including interactions between the linear, squared, and cubic terms of the binding-score and treatment status in Eq. (3). In Eq. (4) I also include a vector \(X_{i}\) of student-level controls (age, gender, race, language, and resident status) to increase precision of the estimates.
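A sketch of how these two specifications might be written, extending Eq. (2) term by term. The coefficient labels \(\beta_{3}\) through \(\beta_{7}\), the inclusion of the polynomial terms both on their own and interacted with \(T_{i}\), and the coefficient vector \(\gamma\) are assumptions made for illustration:

\[ Y_{i} = \beta_{0} + \beta_{1} T_{i} + \beta_{2} B_{i} + \beta_{3} B_{i}^{2} + \beta_{4} B_{i}^{3} + \beta_{5} \left( T_{i} \times B_{i} \right) + \beta_{6} \left( T_{i} \times B_{i}^{2} \right) + \beta_{7} \left( T_{i} \times B_{i}^{3} \right) + \varepsilon_{i} \tag{3} \]

\[ Y_{i} = \beta_{0} + \beta_{1} T_{i} + \beta_{2} B_{i} + \beta_{3} B_{i}^{2} + \beta_{4} B_{i}^{3} + \beta_{5} \left( T_{i} \times B_{i} \right) + \beta_{6} \left( T_{i} \times B_{i}^{2} \right) + \beta_{7} \left( T_{i} \times B_{i}^{3} \right) + X_{i}^{\prime} \gamma + \varepsilon_{i} \tag{4} \]

In both forms \(\beta_{1}\) remains the coefficient of interest, the estimated discontinuity in the outcome at \(B_{i} = 0\).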

The advantage of the binding-score approach is that all observations around the placement cutoffs are included in the analytical sample. Methodologists have demonstrated that the binding-score RD is the preferred MRRDD estimation strategy since it results in the lowest mean square errors (Porter et al. 2017). However, the resultant estimates are more difficult to interpret since students assigned to the treatment could have been assigned by any one of the cutoffs; the binding-score estimate is merely a weighted average of the effects at each cutoff. This approach may therefore mask important heterogeneous treatment effects for each “frontier” score. Since a key question guiding the study concerns how specific expectations of basic math skills impact college opportunity and outcomes, it is important to examine the heterogeneous treatment effects for each cutoff using a frontier-score RD approach.

Frontier-Score RD

Frontier-score RD provides a means to determine precisely how the set of cutoffs described in Table 1 affects student outcomes. I constructed analytical samples around each sub-score cutoff that included students who scored near the cutoff in question but scored at or above the other cutoffs. For example, the frontier-score RD analysis at a bandwidth of two points around the fractions cutoff includes students who were no more than two points below the fractions cutoff and who did not fall below any of the other cutoffs. These students missed higher-level math placement because of their fractions score. The composite cutoff analysis similarly includes students who surpassed all the subskill cutoffs but were placed in a lower-level course because of their inadequate composite test score.
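The construction of a frontier sample can be sketched as a simple filter. This illustration assumes the comparison group consists of students who met every cutoff and scored within the same bandwidth above the frontier cutoff; the function name, the `id` field, and the example records are hypothetical.

```python
def frontier_sample(students, cutoffs, frontier, bandwidth):
    """Students near the `frontier` cutoff who met all of the other cutoffs."""
    sample = []
    for s in students:
        dev = {name: s[name] - cutoffs[name] for name in cutoffs}
        others_met = all(d >= 0 for name, d in dev.items() if name != frontier)
        if others_met and abs(dev[frontier]) <= bandwidth:
            sample.append({
                "id": s["id"],
                "running": dev[frontier],      # centered frontier score
                "treated": dev[frontier] < 0,  # assigned to the lower course
            })
    return sample


# Hypothetical records, using the elementary algebra cutoffs stated in the text.
cutoffs = {"composite": 24, "integers": 7, "fractions": 6, "decimals": 6}
students = [
    {"id": "s1", "composite": 26, "integers": 8, "fractions": 5, "decimals": 7},
    {"id": "s2", "composite": 26, "integers": 6, "fractions": 5, "decimals": 7},
    {"id": "s3", "composite": 27, "integers": 8, "fractions": 7, "decimals": 7},
    {"id": "s4", "composite": 25, "integers": 8, "fractions": 2, "decimals": 7},
]
fractions_frontier = frontier_sample(students, cutoffs, "fractions", bandwidth=2)
print([row["id"] for row in fractions_frontier])
```

Student `s2` is dropped because the integers cutoff was also missed, and `s4` is dropped for falling outside the bandwidth, so the remaining treated students missed the higher placement solely because of their fractions score.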

I conducted these RD analyses using one of the frontier scores (i.e., composite, integers, fractions, and decimals scores), centered around each cutoff, as the assignment variable. The frontier-score RDs for the pre-algebra and elementary algebra cutoffs are modeled here in Eqs. (5a)–(5d):
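These four specifications can be sketched as follows, assuming each takes the same linear form as the binding-score model in Eq. (2). The cutoff symbols \(c^{\text{comp}}\), \(c^{\text{int}}\), \(c^{\text{frac}}\), and \(c^{\text{dec}}\) are notation introduced here, \(T_{i}\) indicates falling below the cutoff in question, and the coefficients are understood to differ across equations:

\[ Y_{i} = \beta_{0} + \beta_{1} T_{i} + \beta_{2} \left( \text{Composite}_{i} - c^{\text{comp}} \right) + \varepsilon_{i} \tag{5a} \]

\[ Y_{i} = \beta_{0} + \beta_{1} T_{i} + \beta_{2} \left( \text{Integers}_{i} - c^{\text{int}} \right) + \varepsilon_{i} \tag{5b} \]

\[ Y_{i} = \beta_{0} + \beta_{1} T_{i} + \beta_{2} \left( \text{Fractions}_{i} - c^{\text{frac}} \right) + \varepsilon_{i} \tag{5c} \]

\[ Y_{i} = \beta_{0} + \beta_{1} T_{i} + \beta_{2} \left( \text{Decimals}_{i} - c^{\text{dec}} \right) + \varepsilon_{i} \tag{5d} \]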

I also assess sensitivity of these analyses by including a set of linear, quadratic, and cubic interaction terms to each regression. For example, estimations of the fractions frontier are given by Eq. (6):
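One way this specification might be written, with \(F_{i}\) standing for the fractions subscore centered at its cutoff. The symbol \(F_{i}\), the coefficient labels, and the inclusion of the polynomial terms both on their own and interacted with \(T_{i}\) are assumptions made for illustration:

\[ Y_{i} = \beta_{0} + \beta_{1} T_{i} + \beta_{2} F_{i} + \beta_{3} F_{i}^{2} + \beta_{4} F_{i}^{3} + \beta_{5} \left( T_{i} \times F_{i} \right) + \beta_{6} \left( T_{i} \times F_{i}^{2} \right) + \beta_{7} \left( T_{i} \times F_{i}^{3} \right) + \varepsilon_{i} \tag{6} \]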

Finally, per the recommendation of Porter et al. (2017), I also include the other subscores with the goal of improving precision of the estimate of \(\beta_{1}\):
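For the fractions frontier, a sketch of such a specification is given below. It assumes a linear running-variable term and that the “other subscores” are the integers and decimals subscores; \(F_{i}\) denotes the fractions subscore centered at its cutoff, and the coefficient labels \(\beta_{3}\) and \(\beta_{4}\) are illustrative:

\[ Y_{i} = \beta_{0} + \beta_{1} T_{i} + \beta_{2} F_{i} + \beta_{3} \, \text{Integers}_{i} + \beta_{4} \, \text{Decimals}_{i} + \varepsilon_{i} \tag{7} \]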

The estimated treatment effects apply only to the student subpopulations in each analysis, for example, students who “just missed” the cutoff by a fractions question or two. Provided that assumptions about continuity around the cutoff and internal validity (described below) are met, the frontier RD estimate provides an unbiased ITT treatment effect estimate of placement in the lower-level course around each subscore cutoff.

A number of researchers have used RD designs to investigate the impact of math remediation in the community college setting (e.g., Boatman and Long 2018; Martorell and McFarlin 2011; Melguizo et al. 2016; Ngo and Melguizo 2016; Scott-Clayton and Rodriguez 2015; Xu and Dadgar 2018). This study extends the literature by examining a setting where placement is jointly determined by a set of skill-specific placement cutoffs. Applying RD to obtain causal estimates from diagnostic tests in this setting is a particularly interesting RD application because students who have higher total scores may be placed into lower courses because of a very specific skill gap. The college has identified the specific skill gaps that must necessarily be remediated and these RD analyses provide estimates of the impacts of this unique policy.

The approach also provides an understanding of how and whether these specific skills are important for student success. If, for example, missing the fractions cutoff produces a negative RD treatment effect of placement in pre-algebra versus elementary algebra, then this would suggest that proficiency with fractions may not need to be so heavily emphasized as a prerequisite for upper-level courses. A possible implication is that this cutoff could be lowered or removed. Conversely, if the decimals cutoff results in a positive RD estimate, then this suggests that students are benefitting from this cutoff and placement in the lower-level course; the cutoff could potentially be raised so that more students obtain the benefit (Melguizo et al. 2016; Robinson 2011).

Internal Validity

Before proceeding with the results from these estimation strategies, I present checks of the internal validity of the RD design in this setting.

Treatment Assignment and Compliance

First, a critical assumption of RD analysis is that an exogenous policy assigns students to differing treatment statuses. Figures 1 and 2 show that students’ first math courses are directly related to their placement test score. There is also a high compliance rate with assignment to basic math when using the binding-score as the running variable (between 90 and 100% depending on the distance from the cutoff). This is expected since students are blocked from enrolling in courses that are a higher level than the one to which they are assigned. The compliance rate with respect to assignment to pre-algebra versus elementary algebra is lower (between 70 and 80%). Even though students can challenge their placement results and request clearance to enroll in a higher-level course, nearly a quarter of students placed in pre-algebra enrolled in basic math for their first math course. Following the presentation of the results, I describe how I checked for bias in the estimation associated with this lower level of compliance.

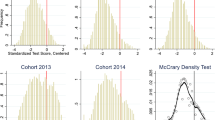

Manipulation Around the Cutoff

A threat to the internal validity of the RD estimates would arise if students were able to manipulate their scores around the cutoff in order to obtain a particular treatment status. This is unlikely since students are not made privy to cutoff scores at any point during the enrollment or testing processes. In accordance with standard practice, I conducted a test of the density of the running variable around each composite score cutoff to check for this potential manipulation of the running variable (McCrary 2008). There is no evidence of differing test score densities around either the basic math/pre-algebra cutoff or the pre-algebra/elementary algebra cutoff (see Appendix). It is therefore likely that variation is due to careless errors in testing and not to manipulation or any “precise” sorting around the cutoff (Lee and Lemieux 2010).

Discontinuities in Covariates

Another threat to the internal validity would arise if there were any observable discontinuities in covariates around the cutoff. A discontinuity in any one covariate would indicate that treatment was correlated with some background variable, thus jeopardizing the internal validity of the ITT estimates. I visually inspected the continuity of covariates at each cutoff using local polynomial smoothing, plotted with 95% confidence intervals (see Figs. 3, 4). There are no discontinuities or “jumps” for any of the covariates at the cutoff, suggesting that any observed discontinuities in outcomes would be attributable to the discontinuity in treatment assignment. As an additional check, I estimated Eq. (2) with the binding score as the running variable and each covariate as the dependent variable. These results are available in the Appendix and corroborate the conclusion of the visual inspection.

Limitations

One limitation of the study is that each skill area has a relatively small number of diagnostic test questions. For example, there are just 10 fractions-based questions on the MDTP Algebra Readiness test. The concern in the context of RD design is that the subscore running variable may correspond to meaningful differences in the student population. That is, a fractions subscore of 5 may be very qualitatively different from a fractions subscore of 6 (the cutoff score), and this may have implications for math outcomes. Relatedly, it is possible that faculty and administrators who set these cutoffs know something about the meaning of the scores and set them accordingly.

These concerns should be minimal since the McCrary tests described above indicate that there is no manipulation or precise sorting around each cutoff. Coupled with the fact that all covariates were also found to be continuous at each cutoff, it is reasonable to assume that only treatment status as a result of placement testing assignment changes at the cutoff. Any differences can therefore be attributed to this change in treatment status rather than to any change in observable covariates.

Finally, although there is high internal validity with RD analyses due to the treatment assignment mechanism, they typically have limited external validity. This analysis is conducted in one California community college that, though large and diverse, is not representative of community colleges in the state or nation. The results should also not be generalized to other diagnostic testing contexts or developmental math contexts. Nevertheless, the methods outlined here provide a robust examination of a unique policy context and enable me to answer the research questions about basic math remediation stated above.

Results

What Basic Math Skills Function as “Gatekeepers” and Limit Access to Higher-Level Courses?

A unique contribution of the study is that the context of multiple cutoffs in College M enables me to determine which skill cutoffs functioned as the most troublesome gatekeepers. To do so, I focus on only those students who missed any cutoff by one point. In other words, these “one-off” students were on the cusp of placement in the next highest-level course. The results of this tabulation, shown in Table 3, are illuminating. At the lower levels of developmental math (basic math and pre-algebra), most students missed placement in the next level because they were one point short on the fractions subsection. Specifically, of the students who missed the next level course by one point, 69% of those assigned to basic math instead of pre-algebra missed a fractions question, as did 45% of those assigned to pre-algebra instead of elementary algebra. Decimals were also a gatekeeping skill, with 44% of pre-algebra students being one-off because of a decimals question. In the upper levels (elementary algebra and intermediate algebra), 32% of the one-offs were due to missing a word problem that tested applications of algebra skills.
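The “one-off” tabulation described above can be sketched as follows. This is a minimal illustration under stated assumptions rather than the procedure actually used to build Table 3: a student is counted as one-off here only if exactly one subscore cutoff was missed, and by exactly one point. The function names and the skill labels are hypothetical; only the fractions cutoff of 6 comes from the text.

```python
from collections import Counter

def one_off_skill(subscores, cutoffs):
    """Return the single skill missed by exactly one point, or None otherwise."""
    shortfalls = {
        skill: cutoffs[skill] - subscores[skill]
        for skill in cutoffs
        if subscores[skill] < cutoffs[skill]
    }
    if len(shortfalls) == 1:
        skill, gap = next(iter(shortfalls.items()))
        if gap == 1:
            return skill
    return None

def tabulate_one_offs(students, cutoffs):
    """Percent of one-off students attributable to each skill cutoff."""
    skills = (one_off_skill(s, cutoffs) for s in students)
    counts = Counter(skill for skill in skills if skill is not None)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {skill: 100.0 * n / total for skill, n in counts.items()}
```

Calling `tabulate_one_offs` on a list of subscore dictionaries returns the share of one-off students for each skill, which is the quantity reported as 69%, 45%, and 44% in the text.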

How Does Remedial Assignment Based on These Math Skills Affect College Outcomes?

The next set of results shows the impact of this placement policy. I first provide a visual inspection of mean outcomes by binding score, with a best-fit line mapping the trends on either side of each placement cutoff. Figure 5 shows the BM/PA cutoff and Fig. 6 shows the PA/EA cutoff. No major jumps are apparent at the BM/PA cutoff, but there does appear to be a sizeable jump at the PA/EA cutoff for passing EA with a C or better. I more precisely estimate this discontinuity using two forms of multiple rating-score regression discontinuity design, binding-score RD and frontier-score RD, and compare the results of the two approaches.

Binding-Score RD

Table 4 shows the results from binding-score RD estimation. The upper panel shows the RD estimates of placement in basic math instead of pre-algebra on the outcomes of attempting pre-algebra, eventually passing pre-algebra with a C or better and with a B or better, completing 60 degree-applicable units, and total credit completion. The lower panel shows the estimates for placement in pre-algebra instead of elementary algebra. For each analysis, the assignment variable is determined by the binding score, the subscore that was closest to one of the given cutoffs. I show a two-point bandwidth around each cutoff (N = 7436 at BM/PA; N = 5025 at PA/EA), along with a one-point bandwidth (N = 3487 at BM/PA; N = 1785 at PA/EA). The narrower bandwidth allows me to assess the sensitivity of the results to bandwidth choice, since two points may be a somewhat large bandwidth given the limited range of possible subscores. Because the validity of RD estimates depends on adequate sample size within a narrow bandwidth around a placement cutoff, the RD analysis for the EA/IA cutoff was not possible. This is largely owing to the fact that significantly fewer students place into intermediate algebra after placement testing via the MDTP Elementary Algebra test.

Nevertheless, the larger sample sizes at the BM/PA cutoff and the PA/EA cutoff allow for a robust analysis using MRRDD. The results show that assignment to the lower-level course significantly reduced the probability of attempting the next course in the sequence. Students placed in basic math instead of pre-algebra were 7 percentage points less likely to attempt pre-algebra than those directly placed in pre-algebra. With respect to passing pre-algebra with a C or better and a B or better, there were null results across all specifications at the BM/PA cutoff. At first glance, one interpretation of these results could be that placement into basic math relative to pre-algebra was not harmful for students since students just below and just above the cutoffs had an equivalent likelihood of eventually passing pre-algebra. However, given the costs of remediation to both the student and the institution, a null effect may also mean students at the margin of the placement cutoff but assigned to basic math could have been just as successful in pre-algebra. The placement cutoff could therefore be slightly lowered so that more students can obtain the benefit of reduced time in remediation (Melguizo et al. 2016). There were also null effects with respect to the longer-term outcomes of completing 60 degree-applicable units and total credit completion.

There was a more pronounced significant negative effect at the PA/EA cutoff, confirming the discontinuity illustrated in Fig. 6. Students missing the multi-dimensional cutoff by one or two points and subsequently assigned to pre-algebra instead of elementary algebra were as much as 23 percentage points less likely to enroll in elementary algebra, and 14 and 11 percentage points less likely, respectively, to eventually pass elementary algebra. This corresponds to reductions of about 35% and 25%, respectively. It is important to note that passing elementary algebra was a minimum requirement for earning an associate’s degree in College M during the period of the study. There is some indication that pre-algebra placement did increase the probability of passing elementary algebra with a B or better by about 2 percentage points, though this was not significant at the 1-point bandwidth. Similar to the BM/PA cutoff, there were null effects with respect to longer-term outcomes for students at the margin of the PA/EA cutoff.
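The mechanics of a binding-score sharp RD can be sketched in a few lines. This is an illustration under assumptions, not the estimation code behind Table 4. The binding score is built here as the minimum of the cutoff-centered subscores, one common construction that may differ from the exact rule used in the study. The cutoff is placed at a centered score of 0 (the lowest passing score), a separate line is fit on each side within the bandwidth, and the estimate is the gap between the two intercepts. All function names are hypothetical, and standard errors and covariates are omitted.

```python
from statistics import mean

def binding_score(subscores, cutoffs):
    """Minimum centered subscore: negative when at least one cutoff is missed."""
    return min(subscores[k] - cutoffs[k] for k in cutoffs)

def fit_line(xs, ys):
    """Least-squares intercept and slope; falls back to the mean if x does not vary."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        return y_bar, 0.0
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sxx
    return y_bar - slope * x_bar, slope

def sharp_rd(scores, outcomes, bandwidth):
    """Effect of lower-level placement: intercept below minus intercept above score 0."""
    pairs = list(zip(scores, outcomes))
    below = [(s, y) for s, y in pairs if -bandwidth <= s < 0]
    above = [(s, y) for s, y in pairs if 0 <= s < bandwidth]
    a_below, _ = fit_line(*zip(*below))
    a_above, _ = fit_line(*zip(*above))
    return a_below - a_above
```

With a one-point bandwidth there is a single score value on each side, so the sketch reduces to a difference in mean outcomes between students just below and just above the cutoff.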

Frontier-Score RD

While the binding-score results suggest that placement cutoffs could be lowered so that fewer students are penalized by basic math placement relative to pre-algebra placement, and by pre-algebra placement relative to elementary algebra placement, it is unclear from this analysis which specific math standards ought to be reevaluated. By design, the binding-score analysis masks heterogeneity by cutoff type. A frontier-score RD analysis is a useful complementary exercise because it enables identification of treatment effects for each type of subskill, and therefore, clearer policy guidance. Tables 5 and 6 provide the results of the frontier-score RD analysis for the composite and subscore cutoffs at the BM/PA and PA/EA thresholds, respectively.
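The sample restriction that distinguishes a frontier-score analysis can be sketched as follows. This is a simplified illustration under assumptions, not the models reported in Tables 5 and 6: to isolate one cutoff, it keeps only students who met every other cutoff, so that the focal subscore alone determines placement, and then compares mean outcomes within the bandwidth instead of fitting a regression. The function names and skill labels are hypothetical.

```python
def frontier_sample(students, cutoffs, focal):
    """Keep students who met every non-focal cutoff.

    Returns (centered focal score, outcome) pairs.
    """
    pairs = []
    for subscores, outcome in students:
        others_met = all(
            subscores[k] >= cutoffs[k] for k in cutoffs if k != focal
        )
        if others_met:
            pairs.append((subscores[focal] - cutoffs[focal], outcome))
    return pairs

def mean_gap_at_frontier(pairs, bandwidth):
    """Mean outcome just below the focal cutoff minus mean outcome at or just above it."""
    below = [y for s, y in pairs if -bandwidth <= s < 0]
    above = [y for s, y in pairs if 0 <= s < bandwidth]
    return sum(below) / len(below) - sum(above) / len(above)
```

Repeating the restriction with a different `focal` skill gives a separate estimate for each cutoff, which is what allows the effects to differ between, say, the fractions and decimals frontiers.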

The results demonstrate that the frontier-score RDs provide more nuanced results compared to those from the binding-score RD. At both cutoffs, it is apparent that the negative effect of basic math and pre-algebra placement picked up in the binding-score RDs is driven mostly by the fractions cutoff. For example, the BM/PA results in Table 5 reveal a significant negative effect at the fractions cutoff, with basic math placement reducing the probability of attempting pre-algebra by 6–7 percentage points. Mirroring the results of the binding-score RD, the effects of basic math placement on passing pre-algebra and on credit completion were mostly negative but not significant.

Table 6 shows that students who missed elementary algebra placement because of their fractions skills by one or two points were 26–29 percentage points less likely to attempt elementary algebra, and 14–17 percentage points less likely to subsequently pass elementary algebra, than students who scored no more than two points above the fractions cutoff. These are relatively large penalties given that the overall elementary algebra attempt rate for students in this specification was just under 70% and the overall pass rate was just under 50%. This corresponds to a nearly 40% decrease in the probability of attempting the next course, and a 35% decrease in passing the next course.
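The relative decreases quoted above come from dividing each percentage-point estimate by the corresponding baseline rate. The short sketch below reproduces that arithmetic under one assumption: the baselines are rounded to 70% and 50%, since the text reports them only as “just under” those values, so the results are approximate. The function name is hypothetical.

```python
def relative_change_pct(effect_pp, baseline_pct):
    """Express a percentage-point effect as a percent of the baseline rate."""
    return 100.0 * effect_pp / baseline_pct

# Point estimates from the text; baselines rounded to 70 and 50 (assumption).
for label, effects, baseline in [
    ("attempt elementary algebra", (-26, -29), 70),
    ("pass elementary algebra", (-14, -17), 50),
]:
    low, high = (relative_change_pct(e, baseline) for e in effects)
    print(f"{label}: {low:.0f}% to {high:.0f}%")
```

With these rounded baselines the ranges are roughly 37% to 41% for attempting and 28% to 34% for passing. Because the true baselines are slightly below 70% and 50%, the actual relative decreases are slightly larger, which is in line with the figures of nearly 40% and 35% given in the text.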

In contrast to the null longer-term effects found in the binding-score RD, the frontier-score RD at the PA/EA cutoff in Table 6 reveals that the penalty to missing the fractions cutoff persisted in the long term. Students missing the fractions cutoff by one point were 12 percentage points less likely to complete 60 units than students who scored no more than one point above the fractions cutoff, a 57% decrease. Ultimately, they completed nearly 8 fewer credits than their higher-scoring peers.

Interestingly, the PA/EA frontier analysis of the decimals subscore shows a benefit in terms of passing elementary algebra with a B or better (see Table 6). Students who missed the decimals cutoff by one or two points and were assigned to pre-algebra were nearly 4 percentage points more likely to subsequently pass elementary algebra with a B or better than their peers who scored just above the decimals cutoff. This suggests that the decimals component of the MDTP Algebra Readiness test may have been relevant for identifying student readiness for elementary algebra and that there was a marginal benefit to taking pre-algebra in terms of earning higher elementary algebra grades.

Given that the percentage of students missing elementary algebra placement because of the fractions cutoff was about the same as those missing elementary algebra due to the decimals cutoff (45 and 44%, respectively), the negative RD estimates at the fractions frontier suggest that the standard associated with this particular skill is perhaps too high. As described above, one interpretation of these negative estimates is that the fractions cutoff could be lowered until the penalty is eliminated or becomes a benefit (Melguizo et al. 2016; Robinson 2011).

Robustness and Sensitivity Analyses

RD designs are sensitive to model specification, so it is important to conduct several checks to assess the robustness of the estimates. The results in Table 7, which only show the outcome of passing a course with a C or better for the PA/EA cutoff, confirm that the estimates are not extremely sensitive to bandwidth choice. To assess sensitivity to functional form, I included linear, quadratic, and cubic interaction terms between the binding-score running variable and treatment status. The treatment effect estimates increase in magnitude but do not change in significance. The results also do not change when I include relevant covariates in the model. The results of these analyses are also consistent for all other outcomes and for the BM/PA cutoff (see Appendix).

I assessed sensitivity of the frontier RD results following the recommendation of Porter et al. (2017). I included polynomial interactions of the running variable and treatment status, and I then also included the other subscores in each regression. In Table 8, I present the results only for the first outcome of interest and only for a two-point bandwidth around the PA/EA cutoff; the one-point bandwidth results are similar and available in the Appendix. These results are robust, showing again that just missing the fractions cutoff significantly decreased the probability of completing the EA course.

Compliance

I conducted two supplemental analyses to assess how students’ choices to enroll in the first course and then enroll in the second course may be biasing the sharp RD estimates. The first considers enrollment compliance with placement assignment. I ran the same binding-score RD as outlined above but only with those students who complied with their assigned course placement. The results of these analyses are shown in the Appendix and can be compared to the binding-score results for the PA/EA cutoff and the outcome of passing the next math course. Like the main results, complier students also experienced a large penalty to PA placement and were about 17 percentage points less likely to pass EA.

Second, since a significant negative effect was observed for attempting the higher-level/second course, it is important to assess whether the negative effects on passing derive mostly from the negative effects on attempting. I therefore conditioned the passing outcome on attempting the second course (e.g., attempting EA). In this specification, the sharp RD compares those students around the placement cutoff who eventually enrolled in the higher-level course rather than all students around the cutoff. The results at the two-point bandwidth suggest that the lower course placement may have been beneficial to students, increasing the probability of passing EA with a B or better by nearly 13 percentage points. However, the results are not significant at the one-point bandwidth. Conditioning the passing outcome on course attempt in this manner introduces the possibility of selection into the model, since students who choose to persist in the math sequence may be different from their peers along unobservable characteristics. Nevertheless, these results suggest that the primary effect of PA placement was to deter students from progressing through the math sequence rather than to leave them unprepared to pass courses.

Summary and Discussion

This study takes advantage of a distinctive placement policy and diagnostic data available in one community college to provide a unique look at how basic math standards affect the educational opportunity of incoming community college students. Although College M moved away from using the diagnostic test in 2011, this examination of multiple math cutoffs remains relevant given that the test is still in use in other colleges, and since placement tests, though declining in use, continue to be consequential in higher education (Rutschow and Hayes 2018). Importantly, I was able to determine the specific math areas that serve as gatekeepers to higher-level math courses. The findings reveal that many students missed the opportunity to start in pre- and elementary algebra because they missed one question regarding fractions. The same pattern emerged for students in upper-level math (intermediate algebra) with respect to solving word problems and application of math skills.

Various forms of multiple rating-score regression discontinuity design provided a way to identify the impact of this skill-based placement in lower-level math remediation. The binding-score RD analysis at the cutoff between basic math and pre-algebra indicated negative effects of placement into the lower-level course on attempting the next course, and null effects on other outcomes. This can generally be interpreted as a modest penalty to remedial placement since students at the margin of the cutoff had equivalent outcomes. Students placed in the lower-level basic math course likely expended more time and resources to attain these same outcomes. At the pre-algebra/elementary algebra cutoff, the binding-score RD analysis showed a larger negative effect of pre-algebra placement on attempting and completing the elementary algebra course, which was required for associate’s degree attainment during the study period.

The dual RD analyses I conducted also demonstrate how binding-score RD can produce overall treatment effect estimates that mask heterogeneity in effects across multiple cutoffs. The consistent negative frontier-score RD results for the fractions cutoff indicate that there was a persistent penalty to missing the cutoff by a fraction or two. These students were 14–16 percentage points less likely to pass elementary algebra after being placed in pre-algebra and significantly less likely to earn college credits. Although 44% of students at this level “just missed” the decimals cutoff, the negative effects were not as severe as missing the fractions cutoff.

This finer-grained analysis using a frontier-score RD approach suggests there are some areas of possible improvement in math placement policy. Specifically, over-emphasis on certain skill areas such as fractions may be unwarranted. In other words, the fractions proficiency cutoff in College M may not have needed to be so high. Given that students just missing the cutoffs had equivalent chances of attaining each outcome, the practical implication for practitioners is to slightly lower each cutoff so that these students do not have to expend the additional resources associated with taking lower-level developmental math courses.

Yet shouldn’t we be concerned that entering college students could not answer test questions about fractions correctly? Shouldn’t these students be in remedial math courses? The results of the study raise important broader questions about what community college students in developmental education know about mathematics, and how expectations of mathematics proficiency, manifested in placement policy, interface with students’ mathematical knowledge and skills.

As described earlier, research in mathematics education provides some insight into why fractions may be a gatekeeping skill. Community college students in developmental math did reveal errors in their procedural knowledge, especially when solving problems related to fractions (Stigler et al. 2010). However, many demonstrated reasoning and conceptual understanding of mathematics when provided the opportunity to do so (Givvin et al. 2011). Since examination of placement test questions revealed a test emphasis on procedural fluency (Stigler et al. 2010), it is expected that students with faulty procedural knowledge will continue to earn low test scores and be assigned to basic math remediation in contexts where these tests are used. Therefore, in addition to examining whether reevaluating expectations of procedural fluency can make a difference for students, one possible future research area is in alternative forms of assessment. It is possible that assessments that emphasize reasoning and conceptual understanding in addition to procedural fluency may better sort students into developmental and college-level mathematics and lessen the negative impacts of basic math remediation.

To this point, there are few studies that have investigated how assessment and placement policies are actually designed and implemented. One rare qualitative study by Melguizo et al. (2014) found that math faculty and staff do not typically feel that they have the technical support or expertise to set and evaluate their policies. As such, common ways of determining placement cutoffs were to pretend to be a student and take the exam or to mimic the policies of neighboring colleges. The researchers also found that evaluation and calibration of placement cutoffs as an institutional practice was more the exception than the rule, and in a few cases placement policy primarily functioned as an enrollment management tool to keep certain students in certain levels of coursework. There may be an incentive, for example, to set cutoffs high so that instructors in upper-level courses can focus on the intermediate and advanced math necessary for transfer. This research suggests that placement criteria may be related more to administrative and organizational behaviors than to a concrete understanding of the skills students have and clarity about how those skills may matter for success in coursework. Future research could investigate how math placement criteria are selected and how the “disciplinary logic” of mathematics influences the evaluative practices inherent in the placement testing system (Posselt 2015).

Aside from reforming placement testing practices, the findings of the study also provide some direction for improving placement testing results. One type of intervention aimed at improving student readiness for college-level math consists of shoring up student skills in a “bootcamp” or summer “bridge” format (Hodara 2013). Since fractions and word problems are the two most troublesome math skill areas identified in the present study, it may behoove these intervention and prep programs to focus on the development of these specific skills. They may want to emphasize fractions for students likely to be placed in the lowest levels of developmental math. They might want to emphasize strategies for solving word problems for those at the higher levels of math. This may translate into improved placement testing results.

Conclusion

There is no doubt that mathematics plays a gatekeeping role in higher education, especially at the point of assessment and placement when students enroll in community colleges. Indeed, the decisions that result from placement testing have significant implications for the nature of students’ math experiences and pathways through college. As shown in the study, students were held back in lower levels of math remediation due to lower scores in specific skill areas, namely fractions, when it appears they could have passed higher-level courses. Developing these skills prior to college placement testing or re-thinking assessment and placement policies may serve to improve math sorting in the transition to college and the outcomes of students starting college in basic math courses.

References

American Association of Colleges and Universities. (2016). Retrieved from http://www.aacu.org/sites/default/files/files/LEAP/2015_Survey_Report2_GEtrends.pdf

Attewell, P., Lavin, D., Domina, T., & Lavey, T. (2006). New evidence on college remediation. Journal of Higher Education, 77, 886–924.

Bailey, T., Jeong, D. W., & Cho, S. W. (2010). Referral, enrollment, and completion in developmental education sequences in community colleges. Economics of Education Review, 29(2), 255–270.

Boatman, A., & Long, B. T. (2018). Does remediation work for all students? How the effects of postsecondary remedial and developmental courses vary by level of academic preparation. Educational Evaluation and Policy Analysis, 40(1), 29–58.

Bryk, A. S., & Treisman, U. (2010). Make math a gateway, not a gatekeeper. Chronicle of Higher Education, 56(32), B19–B20.

Burdman, P. (2015). Degrees of freedom: Diversifying math requirements for college readiness and graduation. Report 1 of a 3-part series. Berkeley, CA: Jobs for the Future. Retrieved from http://edpolicyinca.org/sites/default/files/PACE%201%2008-2015.pdf

California Community Colleges Chancellor’s Office (CCCCO). (n.d.). California community colleges key facts. Retrieved from http://californiacommunitycolleges.cccco.edu/PolicyInAction/KeyFacts.aspx

Chen, X. (2016). Remedial coursetaking at U.S. public 2- and 4-year institutions: Scope, experiences, and outcomes. NCES 2016-405, U.S. Department of Education. Washington, DC: National Center for Education Statistics. Retrieved from http://nces.ed.gov/pubsearch.

Fong, K. E., & Melguizo, T. (2017). Utilizing additional measures of high school academic preparation to support students in their math self-assessment. Community College Journal of Research and Practice, 41, 566–592.

Fong, K., Melguizo, T., & Prather, G. (2015). Increasing success rates in developmental math: The complementary role of individual and institutional characteristics. Research in Higher Education, 56(7), 719–749. https://doi.org/10.1007/s11162-015-9368-9.

Givvin, K. B., Stigler, J. W., & Thompson, B. J. (2011). What community college developmental mathematics students understand about mathematics, Part II: The interviews. The MathAMATYC Educator, 2(3), 4–18.

Grubb, N. (1999). Honored but invisible: An inside look at America’s community colleges. New York: Routledge.

Hacker, A. (2015). The math myth: And other STEM delusions. New York: The New Press.

Hodara, M. (2013). Improving students’ college math readiness: A review of the evidence on postsecondary interventions and reforms. A CAPSEE working paper, Center for Analysis of Postsecondary Education and Employment.

Imbens, G. W., & Lemieux, T. (2008). Regression discontinuity designs: A guide to practice. Journal of Econometrics, 142(2), 615–635.

Jacob, B. A., & Lefgren, L. (2004). Remedial education and student achievement: A regression-discontinuity analysis. The Review of Economics and Statistics, 86(1), 226–244.

Lee, D. S., & Lemieux, T. (2010). Regression discontinuity designs in economics. Journal of Economic Literature, 48(2), 281–355.

Lee, J. (2012). College for all: Gaps between desirable and actual P–12 math achievement trajectories for college readiness. Educational Researcher, 41(2), 43–55.

Mariano, L. T., & Martorell, P. (2013). The academic effects of summer instruction and retention in New York City. Educational Evaluation and Policy Analysis, 35(1), 96–117.

Martorell, P., & McFarlin, I., Jr. (2011). Help or hindrance? The effects of college remediation on academic and labor market outcomes. The Review of Economics and Statistics, 93(2), 436–454.

Mathematics Diagnostic Testing Project (MDTP). (n.d.). Uses of MDTP. Retrieved from http://mdtp.ucsd.edu/about/uses.html

Mattern, K. D., & Packman, S. (2009). Predictive validity of ACCUPLACER scores for course placement: A meta-analysis. Research report no. 2009-2. New York: College Board.

McCrary, J. (2008). Manipulation of the running variable in the regression discontinuity design: A density test. Journal of Econometrics, 142(2), 698–714.

Melguizo, T., Bos, J., Ngo, F., Mills, N., & Prather, G. (2016). Using a regression discontinuity design to estimate the impact of placement decisions in developmental math. Research in Higher Education, 57(2), 123–151.

Melguizo, T., Hagedorn, L. S., & Cypers, S. (2008). Remedial/developmental education and the cost of community college transfer: A Los Angeles County sample. The Review of Higher Education, 31(4), 401–431.

Melguizo, T., Kosiewicz, H., Prather, G., & Bos, J. (2014). How are community college students assessed and placed in developmental math?: Grounding our understanding in reality. The Journal of Higher Education, 85(5), 691–722.

Murnane, R. J., & Willett, J. B. (2010). Methods matter: Improving causal inference in educational and social science research. Oxford: Oxford University Press.

Ngo, F., & Kosiewicz, H. (2017). How extending time in developmental math impacts student persistence and success: Evidence from a regression discontinuity in community colleges. The Review of Higher Education, 40(2), 267–306.

Ngo, F., & Melguizo, T. (2016). How can placement policy improve math remediation outcomes? Evidence from community college experimentation. Educational Evaluation and Policy Analysis, 38(1), 171–196.

Papay, J. P., Willett, J. B., & Murnane, R. J. (2011). Extending the regression-discontinuity approach to multiple assignment variables. Journal of Econometrics, 161(2), 203–207.

Park, T., Woods, C. S., Hu, S., Bertrand Jones, T., & Tandberg, D. (2018). What happens to underprepared first-time-in-college students when developmental education is optional? The case of developmental math and Intermediate Algebra in the first semester. The Journal of Higher Education, 89(3), 318–340.

Porter, K. E., Reardon, S. F., Unlu, F., Bloom, H. S., & Cimpian, J. R. (2017). Estimating causal effects of education interventions using a two-rating regression discontinuity design: Lessons from a simulation study and an application. Journal of Research on Educational Effectiveness, 10(1), 138–167.

Posselt, J. R. (2015). Disciplinary logics in doctoral admissions: Understanding patterns of faculty evaluation. The Journal of Higher Education, 86(6), 807–833.

Reardon, S. F., & Robinson, J. P. (2012). Regression discontinuity designs with multiple rating-score variables. Journal of Research on Educational Effectiveness, 5(1), 83–104.

Robinson, J. P. (2011). Evaluating criteria for English learner reclassification: A causal-effects approach using a binding-score regression discontinuity design with instrumental variables. Educational Evaluation and Policy Analysis, 33(3), 267–292.

Rutschow, E. Z., & Hayes, A. K. (2018). Early findings from a survey of developmental education practices. Center for the Analysis of Postsecondary Readiness. Retrieved from https://postsecondaryreadiness.org/wp-content/uploads/2018/02/early-findings-national-survey-developmental-education.pdf

Scott-Clayton, J., Crosta, P., & Belfield, C. (2014). Improving the targeting of treatment: Evidence from college remediation. Educational Evaluation and Policy Analysis, 36(3), 371–393.

Scott-Clayton, J., & Rodriguez, O. (2015). Development, discouragement, or diversion? New evidence on the effects of college remediation. Education Finance and Policy, 10(1), 4–45.

Stigler, J. W., Givvin, K. B., & Thompson, B. J. (2010). What community college developmental mathematics students understand about mathematics. MathAMATYC Educator, 1(3), 4–16.

Valentine, J. C., Konstantopoulos, S., & Goldrick-Rab, S. (2017). What happens to students placed into developmental education? A meta-analysis of regression discontinuity studies. Review of Educational Research, 87(4), 806–833.

Wang, X. (2013). Why students choose STEM majors: Motivation, high school learning, and postsecondary context of support. American Educational Research Journal, 50(5), 1081–1121.

Wong, V. C., Steiner, P. M., & Cook, T. D. (2013). Analyzing regression-discontinuity designs with multiple assignment variables: A comparative study of four estimation methods. Journal of Educational and Behavioral Statistics, 38(2), 107–141.

Xu, D., & Dadgar, M. (2018). How effective are community college remedial math courses for students with the lowest math skills? Community College Review, 46(1), 62–81.

Appendix

See Fig. 7 and Tables 9, 10, 11, 12, and 13.

Cite this article

Ngo, F. Fractions in College: How Basic Math Remediation Impacts Community College Students. Res High Educ 60, 485–520 (2019). https://doi.org/10.1007/s11162-018-9519-x

DOI: https://doi.org/10.1007/s11162-018-9519-x