Abstract

This study tracks students’ progression through developmental math sequences and defines progression as both attempting and passing each level of the sequence. We built a model of successful progression in developmental education using individual-, institutional-, and developmental math-level factors. Employing stepwise logistic regression models, we found that while each additional step improves model fit, the largest proportion of variance is explained by individual-level characteristics, and more variance is explained in attempting each level than in passing it. We identify specific individual and institutional factors associated with higher attempt rates (e.g., being Latino) and higher passing rates (e.g., small class size) in the different courses of the developmental math trajectory. These findings suggest that colleges should implement programs and policies to increase attempt rates in developmental courses in order to increase passing rates in the math prerequisite courses for specific certificates, associate degrees, or transfer.



Introduction

A substantial proportion of students enter higher education underprepared for college-level work. Data from the National Postsecondary Student Aid Study (NPSAS:04) show that over half of public 2-year college students enroll in at least one developmental education course during their tenure (Horn and Nevill 2006). In California, 85 % of students assessed are referred to developmental math, with the largest proportion referred to two levels below college level (CCCCO 2011). The considerable number of students placed into developmental education courses is associated with significant costs to the students and the states (Melguizo et al. 2008; Strong American Schools 2008).

Students referred to developmental education bear significant costs, which may serve as additional barriers to degree completion. Being in developmental education costs students time, money, and often financial aid eligibility when the developmental coursework is not degree-applicable (Bailey 2009a, 2009b; Bettinger and Long 2007). In California, since the majority of developmental math students are placed two levels below college level, associate degree-seeking students must pass two math courses to meet the math requirement for an associate degree. Students who are seeking to transfer to a 4-year institution and who placed two levels below college level need to pass two courses before they are permitted to enroll in transfer-level math. Both scenarios equate to at least two extra semesters of math, lengthening the student’s time to degree. Not only do these additional semesters consume more school resources and add expenses for the students, but they also reduce students’ potential earnings (Bailey 2009a, 2009b; Bettinger and Long 2007; Breneman and Harlow 1998).

Thus, it is unsurprising that research has found the amount of developmental coursework community college students are required to complete to be associated with student dropout (Bahr 2010; Hawley and Harris 2005–2006; Hoyt 1999). At the same time, however, students placed into developmental education exhibit, even before enrolling in remediation, characteristics associated with a higher likelihood of dropping out (Footnote 1). Studies have suggested that some of the negative impacts of remediation may be attributable to selection bias (e.g., Attewell et al. 2006; Bettinger and Long 2005; Melguizo et al. 2013). For example, developmental math students differ systematically from college-level students in terms of gender, ethnicity, first-generation status, academic preparation and experiences in high school, and delayed college entry (Crisp and Delgado 2014). Further, compared to college-level students, developmental students also tend to enroll part-time given their financial obligations and external commitments to family and work (Hoyt 1999). These factors have been shown to increase the likelihood of dropout (Crisp and Nora 2010; Hoyt 1999; Nakajima et al. 2012; Schmid and Abell 2003).

Developmental education is not only costly to students but also increasingly expensive to operate. Breneman and Harlow (1998) estimated the yearly cost of providing developmental education at around $1 billion; more recent national estimates put the annual cost at about $2 billion (Strong American Schools 2008). Further, this figure may be conservative given that developmental education can act as a barrier to degree completion and predict student dropout (Hawley and Harris 2005–2006; Horn and Nevill 2006; Hoyt 1999). Indeed, Schneider and Yin (2011) calculated that the five-year costs of first-year, full-time community college student dropout are almost $4 billion; in California alone, the costs were about half a billion dollars (Schneider and Yin 2011). Taken together, the cost of student dropout and the annual cost of developmental education make clear that developmental education is a fiscally expensive program. However, despite these costs, proponents argue that underprepared students are better served in developmental courses than left to flounder in college-level courses (Lazarick 1997), and developmental education remains a core function of community colleges (Cohen and Brawer 2008). Given the difficult economic climate in California during the Great Recession, the resulting budget cuts to the state’s community colleges (LAO 2012), and the national push to increase college degree attainment, improving success rates among developmental education students has become a top priority for community colleges.

This descriptive study contributes to the growing literature on developmental education by providing a more comprehensive framework for studying students’ successful progression through their developmental math trajectory. This study has two main objectives: (1) to track community college students’ progression through four levels (i.e., arithmetic, pre-algebra, elementary algebra, and intermediate algebra) of their developmental math sequences; and (2) to build a conceptual model of developmental math education by exploring the extent to which individual-, institutional-, and developmental math-level factors are related to successful progression through the sequence. We define progression as a two-step process: attempting the course and passing the course. This is an important distinction, as other studies have determined the probability of successful progression based on the entire sample of students initially placed into specific developmental levels (e.g., Bailey et al. 2010). Similar to Bahr (2012), this study offers a different view by measuring only those students who are actually progressing (attempting and passing) through each course. The sample is drawn from a Large Urban Community College District (LUCCD) Office of Institutional Research’s computerized database. We analyzed student transcript data, which enabled us to provide more detailed information on initial referral and on subsequent enrollment and progression patterns for developmental math students. The institutional variables examined in this study also came from the LUCCD Office of Institutional Research’s computerized database and from public records obtained from its website (LUCCD Office of Institutional Research 2012).

The following research questions guide this study:

(1) What are the percentages of students progressing through the developmental math sequence? Does this vary by student placement level? Does this vary by course level of the developmental math trajectory?

(2) To what extent do individual-, institutional-, and developmental math-level factors relate to students’ successful progression through their developmental math sequence?

Defining successful progression as attempting and passing each level in the developmental math trajectory, we found that one of the major obstacles behind low pass rates is low attempt rates: once students attempted the courses, they passed them at relatively high rates. In terms of the comprehensive model of progression in developmental math, we found that although individual-level variables explained most of the variance in the models, institutional-level and developmental math-level factors also helped explain progression. Specifically, among developmental math-level factors, we found that, holding all else constant, class size was inversely related, and type of assessment test was positively related, to passing pre-algebra and elementary algebra (the middle of the trajectory, where most LUCCD students are placed). We found that developmental math-level factors, along with individual-level factors such as receiving multiple measure points (a proxy for high school academic preparation), were positively associated with course success rates, which illustrates the important role community colleges can play in increasing success rates in developmental math. These findings have important policy implications: community colleges need to design assessment and placement policies and support systems that help students attempt and successfully complete the math prerequisites for their desired credential or degree.

The structure of this paper is as follows. We first review the current literature addressing the factors associated with student success in developmental education. Next, we describe the methodological design and empirical model using data from the LUCCD. We then present findings from our analysis. We conclude with a discussion of the findings and their implications for research, policy, and practice.

Literature Review

Although research in higher education has demonstrated a relationship between student success and various institutional factors (Calcagno et al. 2008; Hagedorn et al. 2007; Wassmer et al. 2004), the majority of existing quantitative literature describing student success in developmental education focuses on student characteristics (Bettinger and Long 2005; Glenn and Wagner 2006; Hagedorn et al. 1999; Hoyt 1999). Few studies have explored the extent to which developmental education student success is related to varying institutional characteristics and assessment and placement policies. Notable exceptions include the work by Bahr (2010) and Bailey et al. (2010). As research in developmental education continues to refine our understanding of factors related to student success, it is imperative to recognize the relationship between students and the institutions they enroll in, and the complexities of both.

Characteristics of Students Enrolled in Developmental Education

Historically-underserved student populations are overrepresented in developmental education. Students placed into developmental education are more likely to be African American or Latino (Attewell et al. 2006; Bettinger and Long 2005, 2007; Crisp and Delgado 2014; Grimes and David 1999; Hagedorn et al. 1999; Perry et al. 2010), older (Calcagno et al. 2007), female (Bettinger and Long 2005, 2007; Crisp and Delgado 2014; Hagedorn et al. 1999), low-income (Hagedorn et al. 1999), or first-generation students (Chen 2005; Crisp and Delgado 2014).

Research has also demonstrated that students enrolled in college-level math courses enter institutions with many advantages over students enrolled in developmental math (Crisp and Delgado 2014; Hagedorn et al. 1999). Compared to college-level students, students placed into remediation reported lower high school GPAs, earned less college credit during high school, took lower-level math classes in high school, and delayed entry into college (Crisp and Delgado 2014).

Further, Hagedorn et al. (1999) found that student characteristics that predict placement into remediation, such as studying less in high school, also predict relative success in developmental courses. Examining the outcome trajectories of developmental education students, Bremer et al. (2013) found that students from White/non-Latino backgrounds, students who attended tutoring services, and students seeking an occupational (commonly referred to as vocational) certificate were more likely to persist and had higher GPAs. Math ability was also identified as a powerful predictor of student success; however, enrollment in developmental math courses was not a significant predictor of retention and was negatively associated with GPA in college-level courses. This finding suggests that the main factor associated with student success is initial math ability, and that taking additional remedial courses did not translate into better educational outcomes.

One limitation of the Bremer et al. (2013) study is that they define remediation as a binary treatment: either a student is placed in a college-level course or placed into developmental education. This is problematic given that developmental education in community colleges is traditionally delivered as a sequence of courses (Bahr 2008, 2012; Bailey 2009a, 2009b; Melguizo et al. 2014). Typically, students assigned to developmental education must successively pass each assigned course in the sequence before they can enroll in college-level courses in those subjects.

Student Progression Through the Developmental Math Sequence

Recent literature on developmental education has examined success based on multiple levels of the remedial sequence (Bahr 2009, 2012; Boatman and Long 2010; Hagedorn and DuBray 2010; Melguizo et al. 2013). Bahr (2012) explored the junctures in developmental sequences to investigate the extent to which students placed into lower levels experienced differential attrition compared to students placed into higher levels. He investigated three forms of attrition through which developmental students may exit their sequence: nonspecific attrition, skill-specific attrition, and course-specific attrition. Bahr (2012) found evidence of nonspecific and skill-specific attrition for remedial math and writing. Regardless of the point of entry, students experience escalating rates of nonspecific attrition. Therefore, nonspecific attrition partially explains the college-skill attainment gap: low-skill students have more steps in front of them and thus suffer greater total losses. Bahr (2012) also found evidence of course-specific attrition. Students who progressed to beginning algebra from a lower point of entry (e.g., arithmetic or pre-algebra) were less likely to pass the course on their first attempt than students who advanced to other math courses at the same juncture. Beginning algebra is therefore associated with the lowest likelihood of success, which also contributes to the gap in college-level skill attainment between low- and high-skill developmental math students.
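The arithmetic behind nonspecific attrition can be sketched in a few lines of Python. Purely for illustration, the sketch assumes that every level of the sequence is passed by the same fraction of the students who reach it; the rate used below is hypothetical and is not an estimate from Bahr (2012) or from the LUCCD data. Under that assumption, the share of entrants who complete the sequence is the per-level rate raised to the number of levels remaining, so students who enter lower face more multiplicative losses.

```python
# Hypothetical illustration of compounding (nonspecific) attrition.
# The per-level rate is an assumed value, not an estimate from Bahr (2012)
# or from the LUCCD data.
SEQUENCE = ["arithmetic", "pre-algebra", "elementary algebra", "intermediate algebra"]


def share_reaching_college_math(entry_level: str, rate_per_level: float) -> float:
    """Fraction of entrants at `entry_level` who complete every remaining level,
    assuming each level is passed by the same fraction of students who reach it."""
    steps_remaining = len(SEQUENCE) - SEQUENCE.index(entry_level)
    return rate_per_level ** steps_remaining


if __name__ == "__main__":
    for level in SEQUENCE:
        share = share_reaching_college_math(level, rate_per_level=0.6)
        print(f"{level:>22}: {share:.3f}")
```

With an assumed per-level rate of 0.6, about 13 % of arithmetic entrants (0.6 to the fourth power) would complete the sequence, compared with 60 % of intermediate algebra entrants. Real per-level rates differ by level, and the course-specific finding for beginning algebra is one such departure from the equal-rate assumption.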

Existing literature furthers our understanding of the individual characteristics of the developmental student population and how these factors relate to student success (e.g., Bremer et al. 2013). It also provides rich detail on students’ behavior throughout their developmental sequences (Bahr 2012). However, these studies do not include institutional characteristics, which the literature has shown to influence students’ academic outcomes (e.g., Calcagno et al. 2008; Hagedorn et al. 2007; Wassmer et al. 2004). Additionally, as Melguizo (2011) argues, traditional models of college completion should be expanded into conceptual frameworks that incorporate more specific institutional characteristics and treat programs like developmental education as factors influencing college persistence and attainment.

Developmental Student Outcomes by Individual and Institutional Characteristics

A number of descriptive quantitative studies on developmental education have incorporated both individual and institutional characteristics. For example, Bahr (2008) used a two-level hierarchical multinomial logistic regression to examine the long-term academic outcomes of developmental math students compared with college-level students. He compared students who “remediate successfully” (that is, who passed college-level math) to students who were initially referred to college-level math and passed the course, and found the two groups indistinguishable in terms of credential attainment and transfer. Bahr (2008) interprets these findings as indicating that developmental math programs resolve developmental students’ skill deficiencies. Considering that the efficacy of remediation may vary across levels of initial placement, he then categorized students based on the first math course in which they enrolled (a proxy for initial math placement). Results from this model supported the findings from his previous models: controlling for other covariates, Bahr (2008) concluded that remediation is equally efficacious in its impact on student outcomes across levels of initial placement.

Bahr (2008) notes, however, that he compared only developmental and college-level students who completed college-level math, thereby excluding from the analysis the 75 % of developmental math students who did not complete the course sequence. Developmental math was therefore found to be effective, but only for a small percentage of students who are not representative of the developmental student population. Further, although the analytical design nests students within institutions and allows explained variance to be separated into student-level and institutional-level components, Bahr (2008) does not explore this variation, instead controlling for institutional characteristics (institutional size, degree of math competency of entering students, goal orientation of each college) while focusing his discussion on the student-level variables.

Extending the use of institutional predictors, Bahr (2010) investigated whether the major racial/ethnic groups (White, African American, Latino, and Asian; Footnote 2) reap similar benefits from developmental math education. He found that although all students who successfully completed college-level math within 6 years of enrollment experienced favorable long-term academic outcomes at comparable rates, a sizable racial gap remained for African American and Latino students in the likelihood of successful math remediation. Bahr (2010) concluded that rather than reducing existing racial disparities in K-12 math achievement, developmental education amplified them. The overrepresentation of African Americans and Latinos among students who performed poorly in their first math course (which dissuaded students from pursuing college-level math skill) exacerbated these racial gaps. Although college racial concentration played a role in the likelihood of successful remediation, its association varied across racial groups. For example, the likelihood of successful remediation neither increased nor decreased for African American students enrolled in institutions serving a high proportion of African American students, though it declined for White, Latino, and Asian students in those institutions. Latino-majority institutions were likewise not associated with better outcomes: counter to findings in Hagedorn et al. (2007), Bahr (2010) found that Latino students enrolled in Latino-majority institutions were less likely to successfully remediate than their counterparts in colleges serving a smaller Latino population.

Bailey et al. (2010) explored student progression through multiple levels of developmental education, and whether placement, enrollment, and progression varied by student subgroup and by institutional characteristics. They found that fewer than half of developmental students complete the entire math sequence, and that 30 % of students referred to developmental education did not enroll in any developmental course. In terms of student characteristics, they reported that female, younger, full-time, and White students had higher odds of progressing through math than male, older, part-time, and African American students. Moreover, African American students in particular had lower odds of progressing through the math sequence when placed into lower developmental levels. In terms of institutional characteristics, they found that college size, student composition, and certificate orientation are associated with developmental student progression even after controlling for student demographics. The odds of students passing their subsequent math course were higher when they attended small colleges, and lower when they attended colleges that served high proportions of African American and economically disadvantaged students, had higher tuition, and were more certificate-oriented.

Though Bailey et al. (2010) built a comprehensive model to explain student success in developmental math education, their model does not consider specific institutional assessment and placement policies. Further, the model is limited in explaining initial math ability—because the measurement of math ability is solely reliant on placement level, it does not consider that math skill may vary within each placement level. Qualitative investigations into developmental education have demonstrated diversity in what type of assessment and placement policies colleges adopt (Melguizo et al. 2014; Perin 2006; Safran and Visher 2010). Melguizo et al. (2014) investigated the assessment and placement policies at community colleges within a large urban district, and reported substantial variation in the way the nine colleges of the district were assessing and placing students for the same developmental math sequence. Thus, simply measuring placement level across colleges may not be accurately measuring students’ actual incoming math ability. We build on Bailey et al. (2010) by including specific variables representing the assessment and placement process including which test students were assessed with, and the assessment test score used to direct students’ developmental math placement.

This study builds on the previous literature in two ways. First, it expands the conceptual framework proposed by Bailey et al. (2010) using individual-, institutional-, and developmental math-level characteristics. We attempt to capture the complexity of, and differences in, the assessment and placement processes by including factors such as the test used to assess and place students. Second, we follow Bahr’s (2012) definition of successful student progression through the developmental math trajectory. We argue that community colleges cannot be held accountable if their students are not actually enrolling in the courses. For this reason, we examine the association of individual-, institutional-, and developmental math-level factors with progression in two stages: first with attempting the course, and then with successfully passing it. As discussed in Bahr (2013), we propose this definition as a more accurate way of understanding progression through the developmental math sequence, because to increase success rates, community colleges also need to understand the factors associated with attempting these courses.
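The two-stage definition can be made concrete with a minimal sketch, assuming a hypothetical per-student record that marks, for one level, whether the student was eligible for it (placed into it or passed the level below), attempted it, and passed it. The attempt rate uses eligible students as its denominator, while the pass rate is conditional on attempting; the record layout and function name are illustrative, not the LUCCD data structure.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class LevelRecord:
    """One student's status at one level of the sequence (hypothetical layout)."""
    eligible: bool   # placed into this level, or passed the level below it
    attempted: bool  # enrolled in the course at this level
    passed: bool     # earned a passing grade in the course


def two_stage_rates(records):
    """Return (attempt rate among eligible students, pass rate among attempters)."""
    eligible = [r for r in records if r.eligible]
    attempted = [r for r in eligible if r.attempted]
    passed = [r for r in attempted if r.passed]
    attempt_rate = len(attempted) / len(eligible) if eligible else math.nan
    pass_rate = len(passed) / len(attempted) if attempted else math.nan
    return attempt_rate, pass_rate


records = (
    [LevelRecord(True, True, True)] * 5
    + [LevelRecord(True, True, False)]
    + [LevelRecord(True, False, False)] * 4
    + [LevelRecord(False, False, False)] * 3  # not eligible: excluded from both rates
)
attempt_rate, pass_rate = two_stage_rates(records)
print(f"attempt rate = {attempt_rate:.2f}, pass rate = {pass_rate:.2f}")
```

In this toy example, half of the eligible students pass, and that overall share equals the product of the attempt rate (0.60) and the conditional pass rate (about 0.83). Separating the two factors is what allows low attempt rates to be distinguished from low pass rates.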

Methodology

Data

The data employed in this study are from the LUCCD, the largest community college district in California and one of the most economically and racially diverse. Student data come from the LUCCD Office of Institutional Research’s computerized system. Student-level information was gathered for students who were assessed at one of the LUCCD colleges between summer 2005 and spring 2008 and then tracked through spring 2010. Thus, data are based on three cohorts of students in developmental math education: (1) students assessed during the 2005–2006 school year (5 years of data), (2) students assessed in 2006–2007 (4 years), and (3) students assessed in 2007–2008 (3 years).

The LUCCD Office of Institutional Research also provides annual statistics regarding student populations and instructional programs for each of the colleges, and makes them publicly available through the LUCCD website (LUCCD Office of Institutional Research 2012). Through these reports, we collected institutional-level data for enrollment trends, student characteristics, and service area population demographics. We also accessed data on the developmental math program, including average class size and proportion of classes taught by full-time faculty. Data were gathered for summer 2005 to spring 2010 to match the student-level data, and merged with the student dataset based on college attendance.

Sample

The initial sample included 62,082 students from eight colleges (Footnote 3) who were assessed and then placed into developmental math education, and who enrolled in any class at the college where they were assessed. Students may take classes at multiple community colleges; however, to predict students’ progression based on institutional characteristics and assessment and placement policies, we eliminated students who did not enroll at the campus where they were assessed, dropping 1,164 students (2 %) from the analysis (Footnote 4). We also filtered out 886 students (1 %) who did not follow the college’s placement policy and took a math course above or below their referred level. Lastly, we removed 4,006 students (7 %) who were placed directly into college-level math and thus never enrolled in developmental math, as well as 1,200 students (2 %) who were placed into a level below arithmetic, because only one college referred students to this level. The final sample included 54,879 students who were placed into developmental math education. Table 1 shows the breakdown of the final sample by placement level. As illustrated, higher proportions of older and female students are placed in lower developmental math levels, as are larger proportions of African American and Latino students and of students with financial aid.
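The sample restrictions above can be traced as a simple flow table. The counts below are the ones reported in the text; the choice of denominator for each percentage (the sample remaining at that step, rather than the initial 62,082) is an inference, adopted here because it reproduces all four reported percentages. Subtracting the exclusions in sequence yields 54,826, which is 53 fewer than the reported final sample of 54,879; one possible explanation, which the text does not confirm, is that some students met more than one exclusion criterion.

```python
# Exclusion counts as reported in the text. Computing each percentage
# relative to the sample remaining at that step is an inference.
INITIAL = 62_082
EXCLUSIONS = [
    ("enrolled at a campus other than where assessed", 1_164),
    ("took a course above or below the referred level", 886),
    ("placed directly into college-level math", 4_006),
    ("placed below arithmetic (one college only)", 1_200),
]


def sample_flow(initial, exclusions):
    """Apply exclusions in order; return per-step rows and the remaining count."""
    remaining = initial
    rows = []
    for reason, n in exclusions:
        pct = 100 * n / remaining  # share of the sample remaining at this step
        remaining -= n
        rows.append((reason, n, round(pct), remaining))
    return rows, remaining


if __name__ == "__main__":
    rows, final = sample_flow(INITIAL, EXCLUSIONS)
    for reason, n, pct, left in rows:
        print(f"{reason:<50} -{n:>5,} ({pct} %) -> {left:,}")
    print(f"Remaining after sequential subtraction: {final:,}")
```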

Table 2 summarizes the institutional and developmental math characteristics of each college. The institutional characteristics in the tables are averaged across the 2005–06 to 2009–10 school years, while the developmental math factors are averaged across time and math level.
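The averaging and merging steps described above can be sketched as follows, using hypothetical record layouts and variable names (`college`, `ftes`): yearly college statistics are averaged per college and then attached to each student record according to the college the student attended. The developmental math factors could be handled the same way by averaging over both years and math levels; this is an illustration, not the authors' data pipeline.

```python
from collections import defaultdict
from statistics import mean


def average_by_college(yearly_rows):
    """Average each variable across school years, separately for each college.

    yearly_rows: iterable of (college, school_year, variable, value) tuples,
    a hypothetical layout for the district's annual statistics.
    """
    values = defaultdict(lambda: defaultdict(list))
    for college, _year, variable, value in yearly_rows:
        values[college][variable].append(value)
    return {
        college: {variable: mean(vals) for variable, vals in by_var.items()}
        for college, by_var in values.items()
    }


def merge_institutional(students, college_averages):
    """Attach averaged institutional variables to each student record,
    matching on the college the student attended."""
    return [{**student, **college_averages[student["college"]]} for student in students]


yearly = [
    ("College A", "2005-06", "ftes", 100.0),
    ("College A", "2006-07", "ftes", 120.0),
    ("College B", "2005-06", "ftes", 80.0),
]
students = [{"id": 1, "college": "College A"}, {"id": 2, "college": "College B"}]
merged = merge_institutional(students, average_by_college(yearly))
print(merged)
```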

Variables

This study builds an empirical model to explain the probability of students’ successful progression (attempting and passing) through the developmental math sequence (arithmetic, pre-algebra, elementary algebra, and intermediate algebra) as a function of individual-, institutional-, and developmental math-level factors. We include variables based on findings from existing empirical research. The following empirical model was specified for each progression outcome:

$$ y = f(x, v, z) + e $$

where

y = students’ successful progression.

x = vector of individual-level characteristics, including student demographics, enrollment status, and assessment scores and placement.

v = vector of institutional-level variables, including institutional size, goal orientation, and student composition.

z = vector of developmental math-level factors, including assessment process and developmental course information.

e = error term.
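The blockwise comparison of model fit can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' estimation code. It reads "step-wise" as entering the individual (x), institutional (v), and developmental math (z) blocks one at a time, fits each nested logistic regression by Newton-Raphson in NumPy, and uses McFadden's pseudo-R² as the fit measure; the source does not say which measure was used. The data are simulated, and the helper names (`fit_logit`, `mcfadden_r2`, `stepwise_blocks`) are hypothetical.

```python
import numpy as np


def fit_logit(X, y, iters=50, tol=1e-10):
    """Maximum-likelihood logistic regression via Newton-Raphson."""
    beta = np.zeros(X.shape[1])
    for _ in range(iters):
        p = 1.0 / (1.0 + np.exp(-X @ beta))
        grad = X.T @ (y - p)                          # score vector
        hess = X.T @ (X * (p * (1.0 - p))[:, None])   # observed information
        step = np.linalg.solve(hess, grad)
        beta += step
        if np.max(np.abs(step)) < tol:
            break
    p = 1.0 / (1.0 + np.exp(-X @ beta))
    loglik = np.sum(y * np.log(p) + (1.0 - y) * np.log(1.0 - p))
    return beta, loglik


def mcfadden_r2(loglik, y):
    """1 - LL(model) / LL(intercept-only model)."""
    p_bar = y.mean()
    ll_null = len(y) * (p_bar * np.log(p_bar) + (1.0 - p_bar) * np.log(1.0 - p_bar))
    return 1.0 - loglik / ll_null


def stepwise_blocks(blocks, y):
    """Fit nested models, adding one block of predictors at a time."""
    X = np.ones((len(y), 1))  # intercept column
    results = []
    for name, block in blocks:
        X = np.column_stack([X, block])
        _, ll = fit_logit(X, y)
        results.append((name, mcfadden_r2(ll, y)))
    return results


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n = 5000
    x = rng.normal(size=(n, 2))  # simulated individual-level predictors
    v = rng.normal(size=(n, 1))  # simulated institutional-level predictor
    z = rng.normal(size=(n, 1))  # simulated developmental math-level predictor
    index = -0.5 + 1.0 * x[:, 0] + 0.5 * x[:, 1] + 0.3 * v[:, 0] - 0.2 * z[:, 0]
    y = rng.binomial(1, 1.0 / (1.0 + np.exp(-index))).astype(float)
    steps = [("individual (x)", x), ("+ institutional (v)", v), ("+ dev. math (z)", z)]
    for name, r2 in stepwise_blocks(steps, y):
        print(f"{name:<22} McFadden pseudo-R^2 = {r2:.3f}")
```

Because each step nests the previous model, the maximized log-likelihood, and hence McFadden's pseudo-R², cannot decrease as blocks are added. Whether a gain is meaningful is a separate question, typically addressed with a likelihood-ratio test.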

Individual-Level Variables

The individual-level variables fall into three main categories: student demographics, enrollment status, and assessment scores and placement. Student demographics included in the model are age at the time of assessment, gender (female), and race/ethnicity (Asian, African American, Latino, Other/Unknown, and the reference group, White). These variables have consistently been found in the literature to be associated with student academic success (e.g., Bahr 2009; Choy 2002; Crisp and Delgado 2014; Pascarella and Terenzini 2005).

The enrollment status variables are dichotomous and describe whether students are enrolled full-time and their financial aid status, which is used as a proxy for income. Among others, Bailey et al. (2010) found that part-time students were less likely to progress through their developmental math sequences. Full-time status is computed from the average number of units a student attempted in his or her fall and spring semesters while enrolled in community college; students who averaged a minimum of 12 units per semester were identified as full-time. The literature has also shown that family income is associated with student success (Choy 2002; Pascarella and Terenzini 2005). Financial aid status describes whether the student was ever enrolled in financial aid programs. Most students seeking financial aid were granted the Board of Governors Fee Waiver (BOGGW) or the Pell Grant; however, this variable also includes students who received financial aid through the Cal Grant, the Extended Opportunity Programs and Services (EOPS) grant and book grant, the Federal Supplemental Educational Opportunity Grant, and Federal Work-Study. Since each of these programs requires proof of financial need to help cover the cost of enrollment and supplies, this composite financial aid status serves as our proxy for family income.
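A minimal sketch of how the two dichotomous enrollment variables might be coded. The 12-unit threshold and the restriction to fall and spring terms come from the text. The term labels (e.g., `"fall2005"`) and the program codes in `NEED_BASED_AID` are hypothetical stand-ins for however the district records these programs.

```python
from statistics import mean

FULL_TIME_UNITS = 12  # threshold stated in the text

# Hypothetical codes for the need-based programs named in the text.
NEED_BASED_AID = {"BOGGW", "PELL", "CAL_GRANT", "EOPS", "FSEOG", "FWS"}


def full_time_status(term_units):
    """term_units: {term_label: units attempted}; only fall and spring terms count.
    Full-time if the average across those terms is at least 12 units."""
    regular = [u for term, u in term_units.items() if term.startswith(("fall", "spring"))]
    return bool(regular) and mean(regular) >= FULL_TIME_UNITS


def ever_received_aid(aid_records):
    """Composite financial aid indicator: any need-based program, ever."""
    return any(program in NEED_BASED_AID for program in aid_records)


print(full_time_status({"fall2005": 12, "spring2006": 15, "summer2006": 3}))
print(ever_received_aid(["PELL"]))
```

Summer units are ignored in this sketch, so a light summer term does not pull a student below the full-time threshold.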

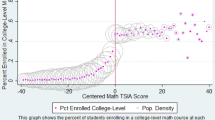

Finally, most prior research has demonstrated that students’ initial ability (e.g., developmental placement) and past academic performance are positively associated with student outcomes (e.g., Bailey et al. 2010; Hagedorn et al. 1999; Hoyt 1999). The student assessment variables identify the level of developmental math in which the student was placed, his or her test score, and whether the student was awarded multiple measure points.

The placement and test score variables describe students’ initial math ability. The placement variable refers to the four levels of developmental math education explored in this study. The test score variable is based on the percentage of questions the student answered correctly on his or her sub-test, transformed so that scores are comparable across sub-tests. We computed it by dividing the student’s score by the maximum points for that sub-test of each assessment test, which gives the percentage of questions answered correctly, and then evenly weighted these sub-test percentages into thirds, creating a continuum of scores across sub-tests (Footnote 5). Because previous studies have relied solely on placement level, a broad category, to distinguish students’ initial ability (e.g., Bahr 2012; Bailey et al. 2010; Hagedorn and DuBray 2010), including this variable provides a finer-grained control for individuals’ initial level of academic preparation.
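One reading of this transformation is sketched below. It is an assumption, because the details are given in Footnote 5, which is not reproduced here. Under this reading, each of three sub-tests occupies one third of a 0–100 scale, ordered from the lowest to the highest sub-test, and the percentage correct positions the student within that third. The function name and the `subtest_rank` ordering are hypothetical.

```python
def continuum_score(raw_score, max_points, subtest_rank, n_subtests=3):
    """Map a sub-test score onto a 0-100 continuum split evenly into thirds.

    subtest_rank: 0 for the lowest sub-test up to n_subtests - 1 for the highest
    (a hypothetical ordering; the source describes the scale only as thirds).
    """
    if not 0 <= raw_score <= max_points:
        raise ValueError("raw_score must lie between 0 and max_points")
    pct_correct = raw_score / max_points  # share of questions answered correctly
    return 100 * (subtest_rank + pct_correct) / n_subtests


print(continuum_score(10, 20, subtest_rank=0))  # half correct on the lowest sub-test
print(continuum_score(30, 30, subtest_rank=2))  # all correct on the highest sub-test
```

The design choice this illustrates is that two students placed at the same level can still differ on the continuous score, which is what makes it a finer control than placement level alone.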

Lastly, the multiple measure point variable captures past academic performance. During the matriculation process in the LUCCD, students provide additional information about their educational background and goals, which is used to determine whether they should receive points (multiple measure points) in addition to their placement test score. Multiple measure point criteria vary across colleges (Melguizo et al. 2014; Ngo and Kwon 2014) and include, among others, the highest level of math completed, high school GPA, importance of college, the time elapsed since the student’s last math course, and the hours per week the student plans to work while attending classes. Multiple measure points can therefore result in a student being placed into the next higher-level course (Melguizo et al. 2014; Ngo and Kwon 2014). In this model, the multiple measure point variable is dichotomous and indicates whether the student earned any multiple measure points in the assessment and placement process.
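The way points can move a student up a level, and how the dichotomous model variable is derived, can be sketched as follows. The cut scores in `CUTOFFS` are hypothetical, since colleges set their own cut scores and point rules. Each cutoff is the minimum adjusted score for the level above arithmetic that it guards.

```python
from bisect import bisect_right

LEVELS = ["arithmetic", "pre-algebra", "elementary algebra",
          "intermediate algebra", "college-level"]
# Hypothetical cut scores: minimum adjusted score for each level above arithmetic.
CUTOFFS = [30, 45, 60, 75]


def place(test_score, mm_points):
    """Return (placement, earned_any_points) for one student."""
    adjusted = test_score + mm_points               # points are added to the test score
    level = LEVELS[bisect_right(CUTOFFS, adjusted)]  # count cutoffs met or exceeded
    return level, mm_points > 0                      # dichotomous model variable


print(place(43, 0))  # ('pre-algebra', False)
print(place(43, 2))  # ('elementary algebra', True)
```

In this example, two multiple measure points lift a student just below a cut score into the next higher course, which is the mechanism the paragraph above describes.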

Institutional-Level Variables

The institutional-level variables also fall into three main categories shown to be associated with student success: institutional size, goal orientation, and student composition. Institutional size has been found to be negatively associated with student success (Bailey et al. 2010; Calcagno et al. 2008). In this study, as in previous literature, institutional size is measured in full-time equivalent students (FTES), a student workload measure representing 525 class hours (CCCCO 1999).

Community colleges may vary in their institutional goal orientation; for example, some colleges emphasize the transfer function while others are more certificate-oriented. Certificate-oriented colleges may focus on vocational credentials, which may not have a math requirement, rather than associate degrees, which do. Research has demonstrated that students have lower odds of successful progression in developmental math when enrolled in certificate-oriented community colleges (Bailey et al. 2010). The model includes a certificate orientation variable, defined as the proportion of certificates granted out of all certificates and associate degrees awarded during the period covered by the data.

A large proportion of each LUCCD college’s students come from the local and neighboring communities, so student populations vary across colleges. The existing literature shows that the composition of the student population is related to student outcomes (e.g., Calcagno et al. 2008; Hagedorn et al. 2007; Melguizo and Kosiewicz 2013; Wassmer et al. 2004). Wassmer et al. (2004) examined the effect of student composition on transfer rates in community colleges and found that colleges serving a larger proportion of students with higher socioeconomic status and better academic preparation have higher transfer rates. We therefore include measures of high school achievement, student academic preparedness, and socioeconomic status: the college’s Academic Performance Index (API) score, the percentage of high school graduates, the percentage of students reporting an educational goal of an associate’s degree and/or transfer to a 4-year institution (percentage of student educational goal), and a college income variable measuring the median income of families residing in each college’s neighboring area. Finally, much research has examined the effect of the racial/ethnic composition of community colleges on student outcomes (Bahr 2010; Calcagno et al. 2008; Hagedorn et al. 2007; Melguizo and Kosiewicz 2013; Wassmer et al. 2004). Findings have been mixed; because LUCCD colleges serve very ethnically diverse student populations whose composition varies from college to college, it is important to account for it. In the model, we include the percentage of African American students and the percentage of Latino students.

Developmental Math Variables

The developmental math factors range from the assessment process to the math classroom. The assessment test variable indicates which of the three assessment tests the student took. At the time the students in this sample were assessed, four colleges were using the ACCUPLACER test, two were using the Mathematics Diagnostic Testing Project (MDTP), and two were using COMPASS. Research from the K-12 literature has demonstrated that smaller class sizes are positively associated with student success (Akerhielm 1995; Krueger 2003; Finn et al. 2003), so we also include a variable measuring average developmental math class size. Finally, because research has demonstrated that students who take the first course in a math sequence with a part-time instructor are less likely to pass the second course when it is taught by a full-time instructor (Burgess and Samuels 1999), we include the average proportion of developmental math classes taught by full-time faculty.

Analyses

We first used simple descriptive analyses to track community college students’ progression through the four levels of their developmental math sequences. We grouped students by initial referral level and then calculated their attempt and pass rates at each level of their developmental math trajectories.

We then used step-wise logistic regressions to build our model of success in developmental math education in three steps. In the first step, we included only student demographic, enrollment, and assessment variables. In the second step, we added institutional-level variables describing each college’s student population as well as its size and certificate orientation. The final step focuses on developmental math factors: which assessment test the student took, the average developmental math class size, and the proportion of these classes taught by full-time faculty. We estimated this three-step sequence for each of the eight outcomes we used to examine students’ progression through the developmental math sequence.
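In notation, the three steps amount to nested logistic models fit separately for each outcome $y_i$. The symbols below are ours, and the likelihood-ratio test is shown only as one standard way to compare nested logistic models; the text does not specify which fit statistic was used. Here $\mathbf{x}_i$ collects student $i$’s demographic, enrollment, and assessment variables, $\mathbf{z}_{c(i)}$ the institutional variables of the student’s college $c(i)$, and $\mathbf{w}_{c(i)}$ its developmental math factors; all coefficients are re-estimated at every step.

```latex
\begin{aligned}
\text{Step 1:}\quad \operatorname{logit}\Pr(y_i = 1) &= \beta_0 + \mathbf{x}_i^{\top}\boldsymbol{\beta}_1\\
\text{Step 2:}\quad \operatorname{logit}\Pr(y_i = 1) &= \beta_0 + \mathbf{x}_i^{\top}\boldsymbol{\beta}_1 + \mathbf{z}_{c(i)}^{\top}\boldsymbol{\beta}_2\\
\text{Step 3:}\quad \operatorname{logit}\Pr(y_i = 1) &= \beta_0 + \mathbf{x}_i^{\top}\boldsymbol{\beta}_1 + \mathbf{z}_{c(i)}^{\top}\boldsymbol{\beta}_2 + \mathbf{w}_{c(i)}^{\top}\boldsymbol{\beta}_3\\[4pt]
\mathrm{LR}_s &= 2\,(\ell_s - \ell_{s-1}) \;\overset{a}{\sim}\; \chi^2_{k_s}, \qquad s = 2, 3\\
\mathrm{OR}_j &= e^{\beta_j}
\end{aligned}
```

Here $\ell_s$ is the maximized log-likelihood at step $s$ and $k_s$ the number of parameters added at that step; odds ratios such as those reported in the Results correspond to $e^{\beta_j}$ for each coefficient.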

The eight outcomes of interest represent successful progression through the developmental math sequence. Because this study explores four levels of developmental math, each level yields two dichotomous outcomes: attempting versus not attempting the level, and passing versus not passing it. Each of the eight step-wise logistic regressions therefore analyzes a different sample of students. Since students typically do not attempt courses below their placement, the sample for each outcome is filtered accordingly: in examining the probability of attempting arithmetic, for instance, students referred to higher levels of developmental math (i.e., pre-algebra, elementary algebra, intermediate algebra) are excluded. A further restriction follows from how we define progression. As students progress through their math trajectory, they may only attempt the subsequent course if they passed the previous one. In estimating the probability of attempting pre-algebra, for example, students initially placed into arithmetic enter the sample only if they passed arithmetic. The same logic applies to the probability that students pass a developmental math course: since students can only pass a course they attempt, students who do not attempt the course are excluded.
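The sample restrictions above can be expressed as a small filter. The Python sketch below is hypothetical: the `Student` record, its fields, and the 0 to 3 level coding (arithmetic through intermediate algebra) are assumptions for illustration, not the LUCCD data structure. A student enters the sample for attempting a level if placed there directly, or if placed lower and having passed the preceding level while progressing; the sample for passing a level additionally requires an attempt.

```python
# Hypothetical sketch of the analytic-sample filter. ASSUMPTIONS: levels
# coded 0-3; each record holds the initial placement and the sets of levels
# attempted and passed during the observation window.
from dataclasses import dataclass, field
from typing import Optional

LEVELS = ("arithmetic", "pre-algebra", "elementary algebra",
          "intermediate algebra")


@dataclass
class Student:
    placement: int                              # index into LEVELS
    attempted: set = field(default_factory=set)
    passed: set = field(default_factory=set)


def in_attempt_sample(s: Student, level: int) -> bool:
    """At risk of attempting `level`: placed there directly, or placed
    lower and passed the preceding level while progressing."""
    if s.placement > level:
        return False                            # no courses below placement
    if s.placement == level:
        return True
    prev = level - 1
    return in_pass_sample(s, prev) and prev in s.passed


def in_pass_sample(s: Student, level: int) -> bool:
    """At risk of passing `level`: in the attempt sample and attempted it."""
    return in_attempt_sample(s, level) and level in s.attempted


def outcome(s: Student, level: int, kind: str) -> Optional[int]:
    """1/0 outcome for the (level, kind) regression, or None if excluded."""
    if kind == "attempt":
        return int(level in s.attempted) if in_attempt_sample(s, level) else None
    if kind == "pass":
        return int(level in s.passed) if in_pass_sample(s, level) else None
    raise ValueError("kind must be 'attempt' or 'pass'")


# Placed into arithmetic; passed it, then attempted but failed pre-algebra.
student = Student(placement=0, attempted={0, 1}, passed={0})
for lvl in range(len(LEVELS)):
    print(LEVELS[lvl], outcome(student, lvl, "attempt"), outcome(student, lvl, "pass"))
```

Fitting one regression per (level, outcome) pair on the students whose outcome is not `None` gives the eight analytic samples; the recursion enforces the progression rule that each step is conditional on passing the previous level.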

Limitations

There are several limitations to this study. First, this is a descriptive study examining which variables are associated with students attempting and passing the levels in their developmental math sequence. Though our model includes a rich set of variables, there is no reason to assume we have controlled for all possible factors associated with progression through the sequence. Because omitted variables may be correlated with the error term, we are unable to make causal inferences from our findings.

Second, the cohorts used in this study were tracked for between 3 and 5 years. This time frame includes at least six semesters’ worth of data, which is enough time for students referred to the lowest developmental math level to progress through their entire sequence. However, because failure rates in developmental education courses are higher than in college-level courses (U. S. Department of Education 1994), students may attempt courses multiple times, which increases the time needed to complete their sequence (Bahr 2012; Perry et al. 2010). Our estimates may therefore be conservative if students continued to retake previously failed courses and progress through the sequence beyond the time frame of our study.

A third limitation is that the LUCCD dataset did not include important factors that research has shown to be associated with student academic success, including student attributes like motivation and perseverance (e.g., Duckworth et al. 2007; Hoyt 1999; Hagedorn et al. 1999), and environmental factors like the number of hours worked and financial obstacles, which decrease the likelihood of persistence (Crisp and Nora 2010; Hoyt 1999; Nakajima et al. 2012; Schmid and Abell 2003). Further, we did not include the concentration of underprepared students, which Bahr (2009) found is positively associated with remedial students’ conditional rate of progress. Lastly, though our model is one of the first to include variables specific to colleges’ developmental math programs, it omits factors such as faculty education level. Research has found that, compared to developmental math faculty without a graduate degree, faculty with a graduate degree are associated with better student outcomes (Fike and Fike 2007).

There are limitations with the variables included in the model as well. For example, financial aid status may be time-varying; however, we only had access to student information at initial assessment. Our student test score variable is also limited. We computed this variable to capture students’ math ability before beginning their sequence rather than relying solely on the broad placement variable. However, it contains inherent biases due to each college’s differing placement criteria. For example, because a substantial majority of students at one college took the lowest sub-test, that college’s scores are driven downward relative to other colleges. Despite these limitations, it is the most accurate measure of students’ initial math ability we could construct that accounts for the varying range of possible scores on each sub-test as well as the different levels of sub-tests.

Results

Because this study offers an alternative way to examine progression through the developmental math trajectory, we report progression under the traditional definition and sample in the Appendix for comparison.Footnote 6 Our approach calculates pass rates based on the number of students who attempted the course, and attempt rates for the subsequent course based on the number who passed the prerequisite; thus, we include only students who were actually persisting through their math trajectories.

Descriptive Statistics of Student Progression

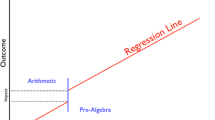

As illustrated in Fig. 1, students are relatively evenly distributed across the developmental math placement levels, though slightly more students are referred to lower levels. Figure 1 shows the progression of students through the developmental math sequence by initial placement level.Footnote 7 Starting at the lowest level, of the 15,106 students initially referred to arithmetic, 61 % (n = 9255) attempted arithmetic, and 64 % (n = 5961) of those attempting the course passed it. Of the students who pass arithmetic, 72 % (n = 4310) attempt pre-algebra, and 79 % of these pass it (n = 3412). Of those, 83 % (n = 2833) attempt elementary algebra and 75 % (n = 2217) pass the course; 65 % (n = 1393) then attempt intermediate algebra, and 72 % (n = 1004) pass. Similar patterns exist for the other placement levels. Across initial placement levels, however, the largest proportions of students stop progressing at the first step, by not attempting their initial math course or by not passing it; this is especially apparent for the lowest levels of math.
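The percentages in this paragraph are conditional on the preceding stage. A short Python sketch, using only the counts reported above for students referred to arithmetic, shows how these conditional rates compound into the share of the original cohort remaining at each stage; the stage labels are ours.

```python
# Counts reported in the text for the 15,106 students initially referred
# to arithmetic; each stage is conditional on the stage before it.
STAGES = [
    ("referred to arithmetic", 15106),
    ("attempted arithmetic", 9255),
    ("passed arithmetic", 5961),
    ("attempted pre-algebra", 4310),
    ("passed pre-algebra", 3412),
    ("attempted elementary algebra", 2833),
    ("passed elementary algebra", 2217),
    ("attempted intermediate algebra", 1393),
    ("passed intermediate algebra", 1004),
]


def cumulative_shares(stages):
    """Share of the initial cohort still progressing at each stage.

    The product of the stage-to-stage (conditional) rates telescopes to
    n_k / n_0, so the cumulative share can be read straight off the counts.
    """
    n0 = stages[0][1]
    return [(label, n / n0) for label, n in stages]


for label, share in cumulative_shares(STAGES):
    print(f"{label:32s} {share:6.1%}")
```

With these counts, about 6.6 % of the students initially referred to arithmetic (1,004 of 15,106) pass intermediate algebra within the observation window, even though no single stage-to-stage rate falls below 61 %.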

The patterns illustrated in Fig. 1 may reflect student observable and unobservable characteristics as well as each college’s assessment and placement policies and other institutional factors. Notably, from summer 2005 to spring 2010, the time frame of the dataset, the math requirement for an associate’s degree was elementary algebra. Students’ attempt rates at the intermediate algebra level, after passing elementary algebra, may therefore reflect their educational goals, and two groups may emerge: students whose educational goal is an associate’s degree and students who intend to transfer to a 4-year institution.

By unpacking the attempt and pass rates for each level of the developmental math sequence, we offer a more detailed illustration of developmental student progression. Figure 1 shows that students who enter at lower developmental levels pass the higher courses at rates comparable to those initially placed in higher levels, if they attempt those levels. However, a large number of students exit their sequence at different points along the trajectory. Consistent with Bailey et al. (2010), Fig. 1 reveals that across levels, most students exit the sequence by not attempting or not passing their initial course. Though only a small number of students make it through to the highest levels, Fig. 1 suggests that when students attempt developmental courses, these courses help them gain the skills necessary to pass the course required for an associate degree and the prerequisite course for transfer-level courses. In the next section, we examine which factors are associated with the probability of successful progression, as measured by these attempt and pass rates.

Model Fit

Although the first step of the step-wise logistic regressions, which included only observable student characteristics, explained the most variance in each outcome, each subsequent step statistically improved model fit. The final model for each outcome demonstrated the best fit; however, the explained variance remained relatively small, ranging from 5 % for attempting arithmetic to 17 % for attempting pre-algebra (see Table 3). Comparing model fit across progression outcomes, we find relatively large differences in the variance explained. With the exception of arithmetic, the model explained more variance in attempting each level (pre-algebra, elementary algebra, intermediate algebra) than in passing it.

Odds Ratio Results from Final Model of Each Progression Outcome Footnote 8

Student Characteristics

Student characteristics account for the most explained variance in each regression analysis. Female students have higher odds of progressing throughout the entire developmental math trajectory. While each additional year of age decreases the odds of attempting the courses, it increases the odds of passing the courses, though the effect sizes are relatively small. African American students are less likely to progress through the sequence compared to White students. Compared to White students, Latino students have higher odds of attempting each math level but lower odds of passing each level (see Table 4).

Students’ enrollment status variables are associated with the probability of successful progression throughout the entire trajectory. Results indicate that students who are enrolled full-time and students with financial aid have higher odds of progression compared to part-time students and those without financial aid. The only exception is that full-time enrolled students have lower odds of passing arithmetic.

Our transformed student test score variable shows that each additional point is associated with higher odds of successful progression; however, the practical significance of test score is greatest for passing arithmetic and weakens at higher levels of math. Overall, having obtained a multiple measure point is associated with significantly higher odds of passing each level, though it is not significantly related to attempting the courses.

The most interesting finding is the relationship between students who are persisting through the sequence (persisting-students) compared to students initially-placed at the higher level. Consistent with Bahr (2012), results reveal that the odds of attempting and passing each subsequent course are higher for persisting-students compared to initially-placed students.Footnote 9 For example, students who were initially placed into arithmetic, and attempted and passed arithmetic, exhibited higher odds of both attempting and passing pre-algebra compared to students who were initially placed into pre-algebra (see Table 4). One exception to these findings is that persisting-students who are initially placed into pre-algebra have lower odds of attempting elementary algebra compared to those initially placed into elementary algebra. There are also a few non-significant results listed in Table 4. Overall, findings suggest that students who are actually persisting are “catching up” and are even more successful than their peers who began their sequences with higher math ability (as measured by the assessment test).

Institutional Characteristics

Most of the institutional variables included in the regression analyses are not statistically significant; those that are significant are concentrated at the elementary algebra level. The significant institutional factors describe the college’s student composition. For example, each additional percentage point of Latino students in an institution’s student population is associated with higher odds of attempting elementary algebra. The same holds for each additional percentage point of high school graduates at an institution. Surprisingly, higher median family income is associated with lower odds of passing elementary algebra, though this may partly reflect support services aimed specifically at low-income students. The concentration of significant institutional variables at the elementary algebra level is most likely due to the fact that elementary algebra was the math requirement for an associate’s degree.

The percentage of certificates awarded by an institution is the only institutional variable associated with passing intermediate algebra: each percentage-point increase in certificate orientation is associated with higher odds of passing intermediate algebra. This finding echoes Calcagno et al.’s (2008) conclusion that well-prepared students will do well regardless of institution. Students who attempt intermediate algebra may thus represent a specific subgroup, distinguished by motivational characteristics or access to certain support systems. Another potential explanation is that this finding reflects instruction within higher-level math courses.

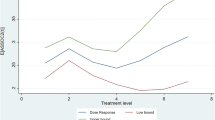

Developmental Math Factors

Similar to the institutional variables, most characteristics of each institution’s developmental math program were not significantly associated with the probability of students’ successful progression through their trajectory. We did find that students assessed and placed with the MDTP test have higher odds of passing elementary algebra than students who took the ACCUPLACER test. However, further analysis reveals that colleges using MDTP have a larger percentage of their student population placing into elementary algebra (56.5 %) than ACCUPLACER colleges (43.5 %). This finding may therefore be more a function of student composition and the institutions’ cut scores than of which assessment test was used. Class size is the only program factor in the model associated with lower odds of successful progression: for every additional student in a pre-algebra class, students’ odds of passing pre-algebra decrease, and similar results are found for elementary algebra.
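Because the class-size result is expressed per additional student, it can help to see how a per-unit odds ratio translates into probabilities. The Python sketch below uses made-up values (a baseline pass probability of 0.75 and an odds ratio of 0.98 per additional student); they are hypothetical and are not the estimates in Table 4. It relies on the standard logistic-model property that, holding other covariates fixed, the odds ratio for a k-unit change equals the per-unit odds ratio raised to the power k.

```python
# Hypothetical illustration of reading a per-unit odds ratio. The numbers
# below are made up for the example; they are NOT estimates from Table 4.


def odds(p: float) -> float:
    """Convert a probability to odds."""
    return p / (1.0 - p)


def prob(o: float) -> float:
    """Convert odds back to a probability."""
    return o / (1.0 + o)


def shifted_probability(p_baseline: float, odds_ratio_per_unit: float,
                        units: float) -> float:
    """Probability after a `units` change in a predictor whose odds ratio per
    one-unit increase is `odds_ratio_per_unit`, other covariates held fixed.

    In a logistic model the per-unit odds ratio compounds multiplicatively,
    so the odds ratio for a k-unit change is odds_ratio_per_unit ** k.
    """
    if not 0.0 < p_baseline < 1.0:
        raise ValueError("p_baseline must be strictly between 0 and 1")
    return prob(odds(p_baseline) * odds_ratio_per_unit ** units)


# Hypothetical: baseline pass probability 0.75, OR = 0.98 per extra student,
# and a class that is ten students larger.
print(round(shifted_probability(0.75, 0.98, 10), 3))
```

Under these hypothetical values, a class ten students larger lowers the predicted pass probability from 0.75 to about 0.71; the same odds ratio would imply a different probability change at a different baseline, which is why odds ratios are not read directly as percentage-point effects.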

Discussion

The final model (including student, institutional, and developmental math program variables) explains only between 5 and 17 % of the variance in the probability of students’ successful progression through their developmental math sequences in the LUCCD. With the exception of arithmetic, the model explains more variance in attempting each level than in passing it. Though each additional step significantly improves model fit, the largest proportion of variance for each progression outcome is explained by student-level characteristics.

Student Background and Assessment Variables are Related to Successful Student Progression Through the Developmental Math Sequence

In the final logistic model, female students are, on average, more likely than their male peers to progress at every stage of the developmental math trajectory. We also found that African American students are less likely to successfully progress than White students. These findings are consistent with the current literature (Bailey et al. 2010). Contrary to existing findings, however, when progression is disaggregated into attempting and passing each level, Latino students have higher odds of attempting but lower odds of passing. One partial explanation is the environmental pull factors experienced by a large proportion of community college students, especially Latino students enrolled in developmental education. Crisp and Nora (2010) found that for these students, environmental pull factors such as the number of hours worked per week decrease the likelihood of success (persisting, transferring, or earning a degree). The competing demands on students’ time may thus result in lower success rates in developmental education. Because these students are trying to progress but still seem to have difficulty passing, it is imperative that future research examine the teaching pedagogy and strategies employed in the classroom.

Results reveal that students who enter at lower developmental levels attempt and pass the higher courses at rates comparable to those initially placed in higher levels, provided they attempt those levels. Similarly, Martorell et al. (2013), employing a regression-discontinuity design, found that placement into developmental education did not discourage enrollment. Though only a small number of students make it through to the highest levels, these progression rates suggest that persisting-students are “catching up” to, and even exceeding, their peers who were initially placed into higher courses.

This finding is similar to Bahr (2008), who compared students initially placed in developmental math who passed college-level math with students initially placed into college-level math who passed the course. He found that the two groups had similar academic outcomes in terms of credential attainment and successful transfer to a baccalaureate-granting institution. One limitation shared by this study and Bahr’s (2008), however, is that both use a subset of students (those who persevered through their sequences) who may be more highly motivated and more college-prepared than the “average” developmental student. Because of this self-selection and the descriptive nature of our analysis, we cannot conclude whether these highly motivated students would have done worse, just as well, or better had they been placed in higher developmental math levels.

However, studies employing quasi-experimental designs have found similar results, suggesting that developmental education has the potential to help students when they are placed in the level that matches their ability. Using a regression-discontinuity design within a discrete-time hazard analysis, Melguizo et al. (2013) estimated the effect of placement into lower levels of developmental math on passing the subsequent course and on accumulating 30 degree-applicable and 30 transferable credits. They conducted the analysis for the four levels of the developmental math sequence (arithmetic, pre-algebra, elementary algebra, and intermediate algebra) and for seven colleges in the LUCCD. The primary finding was that, although initial placement in a lower-level course increases the time until a student completes the higher-level course by about a year on average, after this period the penalty for lower placement was small and not statistically significant. This finding nonetheless depends on the course level in which the student is placed and the college he or she attends. In terms of accumulating 30 degree-applicable and 30 transferable credits, they concluded that there is little short- or long-term cost to a lower placement for students at the margin.

We also find that while student test scores are associated with higher odds of progression throughout the math trajectory, their practical significance decreases at higher levels of math. Like the findings above, this result suggests that students’ initial math ability becomes less important as they progress through their sequence. Having obtained one or more multiple measure points is also associated with higher odds of passing each level, though it has no significant relationship with attempting the courses. Obtaining multiple measure points is thus predictive of student success. Other community college systems, such as Texas, have begun considering multiple measures (e.g., prior academic coursework, non-cognitive factors) in assessing and placing students (THECB 2013). The findings of this study and of Ngo and Kwon (2014) demonstrate the importance of these measures: Ngo and Kwon (2014) concluded that students placed in a higher developmental math level because of multiple measure points for prior math background and high school GPA performed just as well as their peers.

Institutional Characteristics were Neither Strongly Related to Student Progression nor Consistent Across the Sequence

Compared to student-level variables, institutional characteristics and factors specific to the institution’s developmental math program are less strongly and less consistently related to the progression outcomes. Overall, where students enroll in developmental math appears to matter little, since most institutional characteristics have no significant relationship with students’ probabilities of attempting and passing each level. This finding is similar to the Calcagno et al. (2008) study, which concluded that well-prepared students with economic resources will graduate regardless of institution, while students with many challenges may have difficulty even in strong colleges. However, placement is directly affected by each college’s placement criteria (Melguizo et al. 2014); thus, while the observed institutional and developmental math factors may not be directly related to the progression outcomes, they do directly determine student placement.

One exception is that institutional size is associated with lower odds of passing elementary algebra, suggesting that smaller institutions provide a more conducive environment for passing elementary algebra. Another exception is that higher median family income is associated with lower odds of passing elementary algebra. This finding may seem counter-intuitive and is inconsistent with Melguizo and Kosiewicz (2013), who found lower success rates for students attending colleges segregated by either socioeconomic status or race/ethnicity. However, institutions with higher proportions of low-income students may have better-funded special support programs (e.g., Extended Opportunity Programs and Services). These results may thus reflect the point highlighted by Gándara et al. (2012): programs targeting underrepresented and low-income students are important in increasing their academic success.

The percentage of high school graduates enrolled in a college is associated with higher odds of attempting elementary algebra. Having this type of student population may create a college culture more oriented toward a pure collegiate function (Cohen and Brawer 2008), given that elementary algebra was the math requirement for an associate’s degree. The percentage of Latino students enrolled at the college is also associated with higher odds of attempting elementary algebra. This finding is promising given that Latinos represent the largest (and growing) proportion of students within California community colleges. Our results reflect the Hagedorn et al. (2007) study, which found a positive relationship between Latino representation and Latino student outcomes. These minority-majority colleges appear to foster a collegiate function, perhaps through students’ increased sense of belonging and/or specific programs aimed at increasing degree completion for these populations. However, while Latino representation was associated with higher odds of attempting elementary algebra, it had no significant relationship with passing the courses, so further effort is necessary to ensure that students pass courses once they are enrolled.

Finally, one counter-intuitive finding was that certificate orientation is associated with higher odds of passing intermediate algebra. Given the filtering by which the sample for each outcome was selected, this finding may illustrate a dichotomy between types of students within each institution, regardless of the institution’s certificate, degree, or transfer orientation.

Developmental Math Class Size and Assessment Test Variables are Associated with Student Progression

The developmental math variables were significantly related to successful progression only for passing pre-algebra and elementary algebra. Larger average class sizes in pre-algebra and elementary algebra are associated with lower odds of passing each respective course. This finding suggests that smaller class sizes benefit developmental math students, as consistently demonstrated in K-12 educational research (e.g., Akerhielm 1995; Krueger 2003; Finn et al. 2003). Given rising enrollment and developmental math placement, this finding has implications for how community colleges size their developmental math classes.

Students who were assessed and placed using MDTP rather than ACCUPLACER have higher odds of passing elementary algebra. However, since further analysis revealed a larger percentage of MDTP colleges’ student population placing into elementary algebra, this finding may be a function of student composition and the institutions’ placement criteria rather than which assessment test was used. Future research may be able to further our understanding of how these assessment and placement policies play a role in student progression.

Conclusions and Policy Implications

This study contributes to the literature by providing a more detailed and context-based description of the progression of students through their developmental math sequence. Our analysis demonstrates that more variance is explained for attempting each developmental math level than for passing it (with the exception of arithmetic). In addition, this study expands the traditional conceptual framework used to understand student progression by documenting the importance of including additional variables, such as institutional and developmental math program characteristics (e.g., class size) and all the variables colleges use to assess and place students (e.g., multiple measures). Below, we summarize the policy implications of some of the main findings.

-

1.

The largest barrier for students placed into developmental math in the LUCCD appears to be attempting their initial course. Since the assessment and placement process varies by state and college, our findings are only generalizable to the LUCCD colleges. However, this result has been consisted with previous research demonstrating large proportions of students failing to attempt their first remedial math course (Bahr 2012; Bailey et al. 2010). The field would benefit from qualitative research exploring what occurs between the time students are assessed and enrollment in math, as well as observing the classroom environment and teaching pedagogy within developmental math courses (Grubb 2012).

2. Attempt rates are clearly aligned with the courses required to attain a degree, and this result helps explain the relatively lower attempt rates for intermediate algebra. This important finding illustrates the need for a clear understanding of the policy context when conducting studies of what previous literature has referred to as “gateway” courses (note 10). Degree, transfer, and vocational certificate math requirements vary by state and, in a decentralized system like California, may vary by college. Therefore, it is imperative that research align course-taking patterns with students’ educational goals.

3. Pass rates for students progressing through the sequence ranged from 64 to 79 %. Across levels, these percentages suggest that students who are actually progressing through their sequence pass courses at rates comparable to those of their initially higher-placed peers. Though only a small number of students make it through to the highest levels of developmental math, these findings indicate that developmental courses are helping students gain the skills necessary to pass subsequent courses. In fact, after controlling for other factors, students initially placed in lower levels have higher odds of attempting and passing subsequent courses than their higher-placed peers. However, it is important to note that these students persevered through their sequences and thus represent a subset of students who may be more highly motivated.

4. Students receiving a multiple measure point are more likely to pass each level. Though most of the variance is explained by student-level characteristics, one of the strongest predictors increasing the odds of passing each level is whether students received a multiple measure point. As policymakers and practitioners refine their assessment and placement policies, it is important to recognize that a score on a standardized test is only one factor predicting student success.

Understanding student progression in terms of both attempting and passing courses in the developmental math sequence has important implications for policymakers and practitioners. By disaggregating progression into attempting and passing each developmental math level, practitioners can better understand where their students exit the sequence and focus initiatives at these important junctures. For example, our results indicate that the largest proportion of students exit the sequence by not attempting or not passing their initial course. It is thus necessary to better understand both what occurs between students’ placement and their enrollment in the course that leads to lower attempt rates, and what occurs in the classroom that may discourage or inhibit their success.

The results of this study suggest that in order for community colleges to increase their degree attainment and transfer rates, they need to place students correctly, define clear college pathways, and create programs that ensure students successfully progress through their developmental math trajectory. While community colleges cannot be held accountable for the outcomes of students who do not pursue the courses, they should be held accountable for ensuring that as many students as possible attempt and pass the courses needed to attain their desired educational outcomes.

Notes

Developmental education is also commonly referred to as remediation or remedial education. These terms will be used interchangeably throughout the article.

For the purposes of using consistent language throughout the article, we describe the ethnic/racial categories as African American, Latino, White and Asian. When previous studies use other common labels such as Black and Hispanic, we have changed these to African American and Latino, respectively.

One college was dropped from the analysis because the school lacked data on the multiple measure points employed to place students into developmental math education.

This restriction applies only to requiring that the college where the student was assessed and placed match the college where the student took their first math course. This was done to ensure that we did not include students who “gamed” the system: students whose test score would have placed them into one level but who, by taking the class at another college, were able to enroll in a higher level. Students who placed into arithmetic and enrolled in arithmetic at the same college but then enrolled in pre-algebra at another college are still included in the sample. However, only a small percentage of students take their math courses at multiple colleges.

Computation available upon request from authors.

The traditional approach to measuring successful progression through the developmental math trajectory calculates the attempt and pass rates of developmental math students by dividing the number of students who attempt or pass the course by the total number of students initially placed into each level; it thus uses the entire sample of students regardless of their enrollment in developmental math.

Attempt is defined as enrolling in math and remaining past the no-penalty drop date. When computing the rates for all those who enroll whether or not they remain in the course, the attempt percentages decrease though the pass rates remain stable.
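The difference between the traditional calculation described in these notes and a step-wise calculation can be illustrated in a few lines of Python. This is a minimal sketch, not the authors’ code: the function and field names are hypothetical, and it assumes that a step-wise attempt rate uses as its denominator the students eligible for a level (those initially placed there, or those who passed the level below), while a step-wise pass rate uses the students who attempted that level. The counts in the usage example are invented for illustration and are not LUCCD data.

```python
from dataclasses import dataclass


@dataclass
class LevelRates:
    level: str
    traditional_attempt: float  # attempted / initially placed
    traditional_pass: float     # passed / initially placed
    stepwise_attempt: float     # attempted / eligible for this level (assumed definition)
    stepwise_pass: float        # passed / attempted this level (assumed definition)


def progression_rates(placed: int, levels: list[tuple[str, int, int]]) -> list[LevelRates]:
    """Compute rates for one hypothetical placement cohort moving up the sequence.

    placed: students initially placed into the first level in `levels`.
    levels: (level name, number attempted, number passed), lowest level first.
    Assumes only students who passed a level are eligible to attempt the next one.
    """
    if placed <= 0:
        raise ValueError("placed must be positive")
    results = []
    eligible = placed
    for name, attempted, passed in levels:
        # Counts must shrink (or stay equal) at each step of the funnel.
        if not (0 <= passed <= attempted <= eligible):
            raise ValueError(f"inconsistent counts at {name}")
        results.append(LevelRates(
            level=name,
            traditional_attempt=attempted / placed,
            traditional_pass=passed / placed,
            stepwise_attempt=attempted / eligible if eligible else 0.0,
            stepwise_pass=passed / attempted if attempted else 0.0,
        ))
        eligible = passed  # only passers move on to the next level
    return results


if __name__ == "__main__":
    # Invented counts for illustration only (not LUCCD data).
    cohort = progression_rates(1000, [("pre-algebra", 800, 500),
                                      ("elementary algebra", 400, 300)])
    for r in cohort:
        print(r.level, r.traditional_attempt, r.traditional_pass,
              r.stepwise_attempt, r.stepwise_pass)
```

With these invented counts, the traditional rates at the second level fall to 0.4 (attempt) and 0.3 (pass) because every initially placed student stays in the denominator, whereas the step-wise rates are 0.8 and 0.75 because they condition on reaching that level.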

Because our models have high statistical power, we discuss only findings significant at the p < .01 level. All results are provided in Table 4.

In this context, “gateway” courses refer to the highest level of math required prior to enrolling in college-level math, or the math required for an associate’s degree and/or transfer. While the gateway course for the LUCCD at the time of this study was elementary algebra (the requirement changed to intermediate algebra in 2009), this is not always the case.

References

Akerhielm, K. (1995). Does class size matter? Economics of Education Review, 14(3), 229–241.

Attewell, P., Lavin, D., Domina, T., & Levey, T. (2006). New evidence on college remediation. Journal of Higher Education, 77(5), 886–924.

Bahr, P. R. (2008). Does mathematics remediation work?: A comparative analysis of academic attainment among community college students. Research in Higher Education, 49, 420–450.

Bahr, P. R. (2009). Educational attainment as process: Using hierarchical discrete-time event history analysis to model rate of progress. Research in Higher Education, 50(7), 691–714.

Bahr, P. R. (2010). Preparing the underprepared: An analysis of racial disparities in postsecondary mathematics remediation. Journal of Higher Education, 81(2), 209–237.

Bahr, P. R. (2012). Deconstructing remediation in community colleges: Exploring associations between course-taking patterns, course outcomes, and attrition from the remedial math and remedial writing sequences. Research in Higher Education, 53, 661–693.

Bahr, P. R. (2013). The deconstructive approach to understanding college students’ pathways and outcomes. Community College Review, 41(2), 137–153.

Bailey, T. (2009a). Challenge and opportunity: Rethinking the role and function of developmental education in community college. New Directions for Community Colleges, 145, 11–30.

Bailey, T. (2009b). Rethinking remedial education in community college. New York, NY: Community College Research Center, Teachers College, Columbia University.

Bailey, T., Jeong, D. W., & Cho, S. (2010). Referral, enrollment, and completion in developmental education sequences in community colleges. Economics of Education Review, 29, 255–270.

Bettinger, E. P., & Long, B. T. (2005). Remediation at the community college: Student participation and outcomes. New Directions for Community Colleges, 129, 17–26.

Bettinger, E. P., & Long, B. T. (2007). Institutional responses to reduce inequalities in college outcomes: Remedial and developmental courses in higher education. In S. Dickert-Conlin & R. Rubenstein (Eds.), Economic inequality in higher education: Access, persistence, and success. New York, NY: Russell Sage Foundation Press.

Boatman, A., & Long, B. T. (2010). Does remediation work for all students? How the effects of postsecondary remedial and developmental courses vary by level of academic preparation (NCPR Working Paper). New York, NY: National Center for Postsecondary Research.

Bremer, C. D., Center, B. A., Opsal, C. L., Medhanie, A., Jang, Y. J., & Geise, A. C. (2013). Outcome trajectories of developmental students in community colleges. Community College Review, 41(2), 154–175.

Breneman, D., & Harlow, W. (1998). Remedial education: Costs and consequences. Fordham Report, 2(9), 1–22.

Burgess, L. A., & Samuels, C. (1999). Impact of full-time versus part-time instructor status on college student retention and academic performance in sequential courses. Community College Journal of Research and Practice, 23(5), 487–498.

Calcagno, J. C., Bailey, T., Jenkins, D., Kienzl, G., & Leinbach, T. (2008). Community college student success: What institutional characteristics make a difference? Economics of Education Review, 27(6), 632–645.

Calcagno, J. C., Crosta, P., Bailey, T., & Jenkins, D. (2007). Does age of entrance affect community college completion probabilities? Evidence from a discrete-time hazard model. Educational Evaluation and Policy Analysis, 29, 218–235.

California Community Colleges Chancellor’s Office. (1999). Understanding funding, finance and budgeting: A manager’s handbook. Retrieved from http://files.eric.ed.gov/fulltext/ED432331.pdf.

California Community Colleges Chancellor’s Office. (2011). Basic skills accountability: Supplement to the ARCC Report. Retrieved from http://californiacommunitycolleges.cccco.edu/Portals/0/reportsTB/2011_Basic_Skills_Accountability_Report_[Final]_Combined.pdf.

Chen, H. (2005). First generation students in postsecondary education: A look at their college transcripts (NCES 2005-171). Washington, DC: U.S. Department of Education, National Center for Education Statistics, U.S. Government Printing Office.

Choy, S. P. (2002). Access and persistence: Findings from 10 years of longitudinal research on students. Washington, DC: American Council on Education, Center for Policy Analysis.

Cohen, A. M., & Brawer, F. B. (2008). The American community college (5th ed.). San Francisco, CA: Jossey-Bass.

Crisp, G., & Delgado, C. (2014). The impact of developmental education on community college persistence and vertical transfer. Community College Review, 42(2), 99–117.

Crisp, G., & Nora, A. (2010). Hispanic student success: Factors influencing the persistence and transfer decisions of Latino community college students enrolled in developmental education. Research in Higher Education, 51, 175–194.

Duckworth, A. L., Peterson, C., Matthews, M. D., & Kelly, D. R. (2007). Grit: Perseverance and passion for long-term goals. Journal of Personality and Social Psychology, 92(6), 1087–1101.

Fike, D. S., & Fike, R. (2007). Does faculty employment status impact developmental mathematics outcomes? Journal of Developmental Education, 31(1), 2–11.

Finn, J. D., Pannozzo, G. M., & Achilles, C. M. (2003). The “why’s” of class size: Student behavior in small classes. Review of Educational Research, 73(3), 321–368.

Gándara, P., Alvarado, E., Driscoll, A., & Orfield, G. (2012). Building pathways to transfer: Community colleges that break the chain of failure for students of color. Los Angeles, CA: The Civil Rights Project, University of California, Los Angeles.

Glenn, D., & Wagner, W. (2006). Cost and consequences of remedial course enrollment in Ohio public higher education: Six-year outcomes for fall 1998 cohort. Paper presented at the Association of Institutional Research Forum, Chicago. Retrieved from http://regents.ohio.gov/perfrpt/special_reports/Remediation_Consequences_2006.pdf.

Grimes, S. K., & David, K. C. (1999). Underprepared community college students: Implications of attitudinal and experiential differences. Community College Review, 27(2), 73–92.

Grubb, W. N. (2012). Basic skills education in community colleges: Inside and outside of classrooms. New York, NY: Routledge.

Hagedorn, L. S., Chi, W., Cepeda, R. M., & McLain, M. (2007). An investigation of critical mass: The role of Latino representation in the success of urban community college students. Research in Higher Education, 48(1), 73–91.

Hagedorn, L. S., & DuBray, D. (2010). Math and science success and nonsuccess: Journeys within the community college. Journal of Women and Minorities in Science and Engineering, 16(1), 31–50.

Hagedorn, L. S., Siadat, M. V., Fogel, S. F., Nora, A., & Pascarella, E. T. (1999). Success in college mathematics: Comparisons between remedial and nonremedial first-year college students. Research in Higher Education, 40(3), 261–284.

Hawley, T. H., & Harris, T. A. (2005–2006). Student characteristics related to persistence for first-year community college students. Journal of College Student Retention, 7(1–2), 117–142.

Horn, L., & Nevill, S. (2006). Profile of undergraduates in U.S. postsecondary education institutions: 2003–04: With a special analysis of community college students (NCES-184). Washington, DC: U.S. Department of Education, National Center for Education Statistics.

Hoyt, J. E. (1999). Remedial education and student attrition. Community College Review, 27, 51–72.

Krueger, A. B. (2003). Economic considerations and class size. The Economic Journal, 113(485), F34–F63.

Large Urban Community College District, Office of Institutional Research. (2012). Retrieved from http://www.LUCCD.edu/ (pseudonym).

Lazarick, L. (1997). Back to the basics: Remedial education. Community College Journal, 68, 11–15.

Legislative Analyst’s Office. (2012). The 2012-13 budget: Analysis of the Governor’s higher education proposal. Retrieved from http://www.lao.ca.gov/analysis/2012/highered/higher-ed-020812.aspx.

Martorell, P., McFarlin Jr., I., & Xue, Y. (2013). Does failing a placement exam discourage underprepared students from going to college? (NPC Working paper #11-14). University of Michigan: National Poverty Center.

Melguizo, T. (2011). A review of the theories developed to describe the process of college persistence and attainment. In J. C. Smart & M. B. Paulsen (Eds.), Higher education: Handbook of theory and research (pp. 395–424). New York, NY: Springer.

Melguizo, T., Bos, H., & Prather, G. (2013). Using a regression discontinuity design to estimate the impact of placement decisions in developmental math in Los Angeles Community College District (LACCD). (CCCC Working Paper). Rossier School of Education, University of Southern California: California Community College Collaborative. Retrieved from http://www.uscrossier.org/pullias/research/projects/sc-community-college/.

Melguizo, T., Hagedorn, L. S., & Cypers, S. (2008). The need for remedial/developmental education and the cost of community college transfer: Calculations from a sample of California community college transfers. The Review of Higher Education, 31(4), 401–431.